Abstract

Interacting in 3D has considerable and growing importance today in several areas, and most computer systems are equipped with both a mouse or touchscreen and one or more cameras that can be readily used for gesture-based interaction. Literature comparing mouse and free-hand gesture interaction, however, is still somewhat sparse with regard to user satisfaction, learnability and memorability, which can be particularly important attributes for applications in education or entertainment. In this paper, we compare the use of mouse-based interaction with free-hand bimanual gestures to translate, scale and rotate a virtual 3D object. We isolate object manipulation from its selection and focus particularly on those three attributes to test whether the related variables show significant differences when comparing both modalities. To this end, we integrate a gesture recognition system, Microsoft’s Kinect and an educational virtual atlas of anatomy, then design and perform an experiment with 19 volunteers, combining data from self-reported metrics and event logs. Our results show that, in this context, the difference is indeed significant and favors the use of gestures for most experimental tasks and evaluated attributes.

1 Introduction

Interaction in virtual or augmented 3D environments has been researched at length for several years, as well as explored in areas of considerable economic importance, such as Computer Aided Design, medical imaging, rehabilitation, data visualization, training and education, entertainment, etc. [8, 9]. Furthermore, 3D interaction has also begun to reach a much larger and more varied number of users in the last couple of decades: video games, an important element of modern culture, very often feature 3D environments and interaction and are a major example of this wider reach.

Many devices and techniques were proposed and analyzed to facilitate this type of interaction beyond the use of keyboard and mouse. Since Put-That-There [4] combined pointing gestures and speech, and particularly since the first dataglove was proposed [20], hand gestures have been among these options. Image-based gesture recognition using cameras as the only sensors has become more widespread, especially in the last decade, thanks in large part to the greater availability of such image capture devices and the growth in processing power. Microsoft’s Kinect sensor and associated software, easily available and also providing reliable depth sensing and skeleton tracking, have intensified this trend. But the computer mouse is still one of the most used interaction devices for many tasks, including 3D interaction, despite having only two degrees of freedom to move. If, on one hand, it has this limitation, on the other a mouse or an equivalent device is present in most computer systems (with the notable and growing exception of portable devices with touch-based displays) and most users are very familiar with its use. We are now at a point in which both the mouse and some image capture devices are often already present in a large number of computer systems, making both gesture and mouse-based interaction accessible alternatives. Thus it is useful to compare these forms of interaction, particularly for 3D tasks, for which a mouse’s limited degrees of freedom might be a hindrance.

Our goal, then, is to compare the use of the mouse and of free-hand, bimanual gestures in a specific major 3D interaction task: virtual object manipulation, i.e. to translate, scale and rotate a virtual object in 3D. We wish to isolate object manipulation from its selection and to focus particularly on variables that indicate satisfaction, learnability and memorability.

These three attributes often receive less attention than performance measurements, such as time for task completion, or than presence and immersion in Virtual Reality systems [8], but they can be of decisive importance in certain areas of application, such as entertainment and education [16], since these areas have certain usage characteristics in common: in most cases, users engage in either activity, playing or studying, voluntarily, without such a drive for efficiency, and they keep performing it for a period of time which is also voluntarily chosen. They also often spend only a rather limited number of hours on each application, game or subject. This usage is quite different from how users interact, for instance, with applications for work, less voluntarily and for much longer periods of time. Given the significant and growing importance of entertainment and education as areas of application for 3D interaction (as well as our experience in these areas), we believe a greater focus on these attributes beyond only traditional performance metrics can be an important contribution.

To pursue this goal, we perform and analyze data from user experiments with VIDA [12], a virtual 3D atlas of anatomy for educational use with a mouse-and-keyboard-based user interface, and adapt Gestures2Go [3], a bimanual gesture recognition and interaction system, to use depth data from Kinect and allow gesture-based interaction in VIDA.

Following this introduction, this document is organized as follows: Sect. 2 discusses some related work; Sect. 3 briefly describes the main software systems used in our experiment as well as the techniques designed and implemented for both mouse- and gesture-based interaction; Sect. 4 details our experimental procedure; and Sect. 5 presents and discusses its results, which in turn pave the way for our conclusions in the final section.

2 Related Work

As discussed above, comparisons between interaction techniques taking advantage of conventional computer mice or similar devices and image-based gesture recognition are infrequent in literature, despite the availability of these devices. This is especially valid when applied to truly 3D interactions (and in particular object manipulation) and to evaluating learnability, memorability and subjective user satisfaction. Instead, comparisons only between different 3D interaction techniques or devices and with a focus on performance metrics are more frequent. While a systematic review of all such literature is outside the scope of this paper, we briefly discuss examples that are hopefully representative and help position our work within this context.

Poupyrev and Ichikawa [13], for instance, compare virtual hand techniques and the Go-Go technique for selecting and repositioning objects at differing distances from the user in a virtual environment and use task completion time as their primary measure of performance. Ha and Woo [6] present a formal evaluation process based on a 3D extension of Fitts’ law and use it to compare different techniques for tangible interaction, using a cup, a paddle or a cube. Rodrigues et al. [15] compare the use of a wireless dataglove and a 3D mouse to interact with a virtual touch screen for WIMP style interaction in immersive 3D applications.

One example of work that actually includes mouse and keyboard along with gesture-based interaction and compares the two is presented by Reifinger et al. [14], which focuses on the translation and rotation of virtual objects in 3D augmented environments and compares the use of mouse and keyboard, tangible interactions and free-hand gestures, with users seeing their hands inserted in the virtual environment. Fifteen participants were introduced to the environment and had five minutes to explore each technique before being assigned tasks involving translations and rotations, separately or together. Experiments measured task execution times and also presented the participants with a questionnaire evaluating immersion, intuitiveness, and mental and physical workload for each technique. Their results showed that, for all tasks, gesture recognition provided the most immersive and intuitive interaction with the lowest mental workload, but demanded the highest physical workload. On average all tasks, but particularly those combining translation and rotation, were performed with gestures in considerably less time than with their tangible device and also faster than when using mouse and keyboard.

Wang et al. [18] show another such example: a system that tracks the positions of both hands, without any gloves or markers, so the user can easily transition from working with mouse and keyboard to using gestures. Direct hand manipulation and a pinching gesture are used for six degrees of freedom translation and rotation of virtual objects and of the scene camera, but mouse and keyboard are still used for other tasks. Movements with a single hand are mapped to translations while bimanual manipulation is mapped to rotations. The authors focus on performance metrics and report that, for a user who is an expert both in a traditional CAD interface and in their hybrid system, they observed time savings of up to 40% in task resolution using their gesture-based technique, mostly deriving from significantly fewer modal transitions between selecting objects and adjusting the camera.

Benavides et al. [2] discuss another bimanual technique, this time for volumetric selection, which they also show is a challenging task. The technique also involves simple object manipulation and was implemented using a head-mounted stereoscopic display and a Leap Motion sensor. Their experiments indicate this technique has high learnability, with users saying they “had intuition with the movements”, and high usability, but they did not compare it with alternatives, either with mouse and keyboard or with other devices, although the authors plan to do so in future work.

In the context of education, Vrellis et al. [17] compare mouse and keyboard with gesture-based interaction using a Kinect sensor for 3D object selection and manipulation. Their manipulation, however, was limited to object translation, because a pilot study showed that including rotation made the task too difficult for their target users. In their experiments with thirty-two primary school students they found that student engagement and motivation were increased when using the gesture-based interface, perhaps in part due to the novelty factor, even though, for the simple task in their experiment, that interface was found to be less easy to use than mouse and keyboard interaction, which they believe might be caused, in great measure, by sensor limitations.

Moustakas et al. [10] propose a system combining a dataglove with haptic feedback, a virtual hand representation and stereoscopic display for another application in education: a learning environment for geometry teaching. They evaluate it in experiments with secondary school students performing two relatively simple tasks in 3D and show that interaction with their system was considered highly satisfactory by users and capable of providing a more efficient learning approach, but the paper does not mention any comparison with a mouse and keyboard interface, even though it was present in the experimental setup, or aspects of interface learnability and memorability.

Although mouse and keyboard interfaces are more than adequate and most often quite familiar for most 2D tasks, they are sometimes compared to gesture-based interfaces even for these 2D tasks in certain particular contexts. Farhadi-Niaki et al. [5], for instance, explore traditional 2D desktop tasks such as moving a cursor, selecting icons or windows, resizing or closing windows and running programs, both in desktop and in large screen displays. In a study with twenty participants, they show that even though, for desktop displays, gestures are slower, more fatiguing, less easy and no more pleasant to use than a mouse, for large screen displays they were considered superior, more natural and more pleasing than using a mouse (although still more fatiguing as well). Juhnke et al. [7] propose an experiment to compare gestures captured with the Kinect and a traditional mouse and keyboard interface to adjust tissue densities in 3D medical images. While 3D medical image manipulation is indeed a 3D task (which is why we use an educational anatomy atlas in our experiment), this particular aspect of it, adjusting density, involves manipulation in only one dimension, and all other manipulation functions are disabled in their proposed experiment. Still in the medical imaging field, Wipfli et al. [19] investigate 2D image manipulation (panning, zooming and clipping) using mouse, free-hand gestures or voice commands to another person because, in the sterile conditions of an operating room, manipulating interaction devices brings complications. In an experiment with thirty users experienced with this particular 2D image manipulation, mouse interaction, when feasible, was more efficient and preferred by users, but using gestures was preferred to giving commands to another person. Once again, it comes as no surprise that mouse-based techniques were found superior for 2D tasks, particularly with experienced users.

3 VIDA, Gesture Recognition and Interaction Techniques

As discussed before, we chose an educational interactive virtual atlas of anatomy, VIDA [12], to use in our experiment. As an educational tool it fits well with our goal of prioritizing learnability, memorability and satisfaction, which are often decisive for adoption and use in areas such as education and entertainment [16]. Furthermore, it is an application where isolated 3D object manipulation, without even the need for selection, is actually a rather meaningful form of interaction, so students can explore and familiarize themselves with the shown 3D anatomical structures from different angles, positions and levels of detail. VIDA was designed in a collaboration between engineers and teachers of medicine to serve both in classroom and distance learning as an alternative to the two most common ways to learn the subject. Currently, it is either learned from corpses, which are limited and present certain logistic limitations, or from static 2D images which cannot be manipulated or viewed tridimensionally, hindering the comprehension of such complex 3D structures. Another limitation of both approaches is that neither can show structures functioning dynamically, something that can only be observed in vivo during procedures by more experienced professionals, an alternative with even more logistic complications. VIDA, however, combines four elements: augmented reality, allowing the stereoscopic visualization of anatomic structures within the study environment and between the user's hands; animations to show their inner workings; 3D manipulation to further facilitate the comprehension of these 3D structures and dynamic behaviors; and the use of gestures to make this manipulation as natural as possible, theoretically and according to the authors, freeing cognitive resources from the interaction to be employed in the learning process. A previous study with VIDA [12] shows that even for simple memorization tasks in a limited time, students who used VIDA had significantly better performances than those who only used 2D images, approximately 17% better on average. Figure 1 shows a simple visualization in VIDA of a model of the bones in the female pelvic region, without stereoscopy, textures or tags naming the structure’s features.

Using the mouse in VIDA, translations on the viewing plane are performed by dragging the mouse with the right button pressed (in this application, translations are actually less common than rotations, thus the choice of the right mouse button for them). Dragging with the left button pressed causes one of the two most used rotations, around the vertical axis with horizontal mouse movements and around the horizontal axis when moving vertically. Finally, dragging with the middle mouse button pressed zooms in and out when moving vertically and, when moving horizontally, performs the least common rotation, around the axis perpendicular to the viewing plane. With the exception of translations, the system treats diagonal mouse movements only as horizontal or vertical, choosing the component with greater magnitude. VIDA also imposes a limitation on the maximum speed of all these transformations.
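The mouse mapping described above can be sketched in a few lines of Python. This is only an illustrative sketch, not VIDA's actual code: the function and key names, the value of `MAX_STEP`, the sign conventions and the handling of exact ties between horizontal and vertical movement are all assumptions made for the example.

```python
# Hypothetical sketch of the mouse mapping; names and constants are
# assumptions, not taken from VIDA's source code.
MAX_STEP = 10.0  # assumed cap; the text only states that speed is limited


def clamp(value, limit=MAX_STEP):
    """Limit the magnitude of one transformation step."""
    return max(-limit, min(limit, value))


def map_mouse_drag(button, dx, dy):
    """Map one drag event (button, horizontal and vertical deltas)
    to a dict of transformation deltas."""
    if button == "right":
        # Translations keep both components of a diagonal movement.
        return {"translate_x": clamp(dx), "translate_y": clamp(dy)}

    # Every other transformation keeps only the dominant component
    # (ties are treated as horizontal here, an arbitrary choice).
    horizontal = abs(dx) >= abs(dy)
    if button == "left":
        if horizontal:
            return {"rotate_y": clamp(dx)}  # around the vertical axis
        return {"rotate_x": clamp(dy)}      # around the horizontal axis
    if button == "middle":
        if horizontal:
            return {"rotate_z": clamp(dx)}  # around the viewing axis
        return {"zoom": clamp(dy)}
    raise ValueError(f"unknown button: {button!r}")
```

For example, `map_mouse_drag("left", 3, -1)` keeps only the horizontal component and yields a rotation around the vertical axis, which is why a single mouse drag can never combine two rotations.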

While VIDA also offers the option of manipulating objects using gestures (and that is actually an important feature in its design), its implementation at the time of the experiment, using optical flow, was somewhat limited, bidimensional, and not sufficiently robust for the experiments we wished to perform. So instead we decided to take advantage of Kinect’s depth sensing for more robust segmentation and tracking of 3D movements. We did not wish to hardcode the gesture-based interface within VIDA and recompile it, however. To that end, we took advantage of the modular architecture in Gestures2Go [3] and adapted it. Gestures2Go is a system for 3D interaction based on hand gestures which are recognized using computer vision. Its main contribution is a model that defines and recognizes gestures, with one or both hands, based on certain components (initial and final hand poses, initial location relative to the user, hand movements and orientations) so that the recognition of only a few of each of these components yields thousands of recognizable gesture combinations, allowing interaction designers to pick those that make most sense to associate with each action within the user interface. The system has a modular architecture, shown in Fig. 2, which simplified the implementation of the adaptations we made and the integration with VIDA. First we simply replaced the image capture and segmentation modules with new versions for the Kinect, then added depth as an additional gesture parameter extracted during the analysis, and finally extended Desc2Input for integration with VIDA. Desc2Input is a module that receives descriptions and parameters, whether from Gestures2Go or from any other source (allowing the use of other modes of interaction), and translates them as inputs to an application. In this particular case, it was extended to respond to descriptions of gestures with one or both hands by generating the same mouse events used to manipulate objects in VIDA. Using Desc2Input in this way we did not need to modify any code in VIDA to have it respond to the 3D gestures we chose for object manipulation and acquired with Kinect.

When designing our gesture-based interaction techniques for virtual object manipulation, we elected first to use a closed hand pose, as if the users were grabbing the virtual object, to indicate their intent to manipulate it. In this way users can move their hands freely to engage in any number of other actions without interfering with the manipulation. This also includes the ability to open hands, move them to another position, close them again and continue the manipulation, allowing movements with higher amplitude, much in the same way as when keeping a button in a 2D mouse pressed but lifting the mouse from its surface to return to another position and continue the movement. We mapped movements with a single hand as translations. At first we intended this to include translations in depth, but during pilot studies we realized that, for this application, users were apparently more comfortable with restricting translations to the viewing plane and using the concept of zooming in and out instead of translation along the Z axis. We ended up mapping zooming in and out to bimanual gestures, bringing the closed hands together or pulling them apart, respectively, as if “squeezing” or “stretching” the object. Rotation around the Y axis is performed by “grabbing” the object by the sides and pulling one arm towards the body while extending the other away from it. The same movement works around the X axis, but the user must grab the object from above and below. To rotate the object around the Z axis one must also grab it by its sides, and then move one arm up and the other down, as if turning a steering wheel.

Even though the gestural interface is built upon the mouse interface, simply translating manipulation gestures as mouse events, it does not have the same limitation of only allowing one movement at a time. These mouse events are generated at a frequency of at least 15 Hz, which is sufficient to allow rotations around two or all three axes along with changes in scale all at the same time. Translation, however, cannot happen along with the other transformations, since we implemented it using the only gesture with a single hand. Since in VIDA users mostly wish to explore the object from different angles, with translations being less common, this was considered adequate. For other applications such as those discussed by Wang et al. [18] or Reifinger et al. [14], however, it should be interesting to eliminate this limitation, either by adding bimanual translation, which would be rather simple, or by adding versions with a single hand for the other forms of manipulation.
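As a rough illustration of the gesture mapping described in this section, the sketch below interprets two consecutive frames of tracked hand positions. It is not the Gestures2Go or Desc2Input implementation: the `Hand` type, the function name, the output keys and the test used to tell a sideways grab from a top-and-bottom grab are assumptions, and the outputs are raw deltas whose signs and scaling into zoom or rotation amounts are left to the application.

```python
import math
from typing import NamedTuple


class Hand(NamedTuple):
    """Hypothetical tracked hand: 3D position plus open/closed pose."""
    x: float
    y: float
    z: float
    closed: bool


def interpret(prev_l, prev_r, cur_l, cur_r):
    """Turn two consecutive frames into transformation deltas."""
    left = prev_l.closed and cur_l.closed
    right = prev_r.closed and cur_r.closed
    if not (left or right):
        return {}  # open hands never manipulate the object

    if left != right:
        # Exactly one closed hand: translation on the viewing plane.
        p, c = (prev_l, cur_l) if left else (prev_r, cur_r)
        return {"translate_x": c.x - p.x, "translate_y": c.y - p.y}

    # Both hands closed: scaling and rotations may happen together.
    def sep(a, b):
        return math.dist((a.x, a.y), (b.x, b.y))

    out = {"separation_change": sep(cur_l, cur_r) - sep(prev_l, prev_r)}
    # Opposite movements of the two hands in depth (z) or height (y).
    dz = (cur_r.z - prev_r.z) - (cur_l.z - prev_l.z)
    dy = (cur_r.y - prev_r.y) - (cur_l.y - prev_l.y)
    sideways = abs(cur_r.x - cur_l.x) >= abs(cur_r.y - cur_l.y)
    if sideways:
        out["rotate_y"] = dz  # one arm pulled in, the other extended
        out["rotate_z"] = dy  # "steering wheel" movement
    else:
        out["rotate_x"] = dz  # same depth movement, grabbing above/below
    return out
```

Because the bimanual branch fills several keys from the same pair of frames, one gesture can drive scaling and more than one rotation at once, whereas the single-hand branch returns only a translation, mirroring the limitation discussed above.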

4 Comparing the Techniques

After elaborating and comprehensively documenting our experimental protocol and having it approved by our institution’s human research ethics committee, we performed a pilot study with four participants and then the experiment with nineteen participants, all of whom agreed with the Free and Informed Consent (FIC) document that was presented and explained to each one. Participation was completely voluntary, anonymous and non-remunerated.

4.1 Pilot Study

Four participants (2 experienced, 1 female) went through a pilot study to help detect issues with our protocol and to estimate the average amount of time required for each experimental session. This study pointed out several improvements that were implemented in a revised experimental protocol. We already discussed how we replaced translation along the Z axis with zooming in and out and changed the associated gestures accordingly. Another important discovery was that, with the gestures we had designed for the manipulation task, users would naturally open their hands or close them into fists in several different orientations and this presented a challenge that Gestures2Go combined with Kinect was not then able to overcome robustly. Because this change in hand pose was so important for the interaction, and the only one we would use, we disabled its automatic detection and, instead, made use of Wizard of Oz experimentation and implemented a way for a human operator to indicate to the system, using a keyboard, whether one hand, both or none were closed. Hand positions are still tracked in 3D automatically by the system. This avoided the issue of a temporary technological hindrance distorting the results about the interaction techniques. We later implemented a more robust algorithm using the same device to detect whether hands are open or not regardless of their orientation. We also determined in this phase that the stereoscopic 3D visualization offered by VIDA (which is an important part of its design and supports several devices) was not only mostly unnecessary to our experiments but also added considerable complications and additional noise to their results. First, it works best with a previous step of calibration for each user that would demand extra time in each session. Second, participants were distracted from the manipulation either by a well done stereoscopic display or by a poor one. Third, some participants quickly developed visual fatigue and discomfort when we used stereoscopy. We then decided to remove stereoscopic visualization from our protocol and show the virtual object directly on a large computer monitor. Finally, due to the reduced time we determined we would have in our experimental sessions for training each participant, we decided to choose relatively simple tasks for them to perform, attempting to reduce the gap that, without such training, we realized would remain significant between users less or more experienced with 3D object manipulation or in interacting with the Kinect sensor.

4.2 Summarized Experimental Procedure

In each experimental session, a single participant has the opportunity to read the FIC document, have it explained verbally and ask questions about it. Those who agree to participate and sign the document then fill out a quick profile form with information relevant to the experiment. We then briefly explain and demonstrate one manipulation technique (using either mouse or gestures, chosen randomly), tell them which tasks they will have to perform and give them 5 to 10 minutes to engage freely with the system using that technique. We then proceed with the test itself, which consists of five tasks. Before each task begins, the object is reset to its initial position in the center of the screen. The tasks are:

1. Translation on the viewing plane: the user is asked to move the object so it touches the upper left corner of the screen, then the lower right corner and then to bring it back to the center of the screen.
2. Scaling: the user must zoom in until the object has its maximum size inside the screen without escaping its boundaries, zoom out until it has approximately half its initial dimensions and then zoom in again to reach the initial size.
3. Rotation around the X axis: the object must be rotated approximately 90\(^\circ\) counter-clockwise, then 180\(^\circ\) clockwise and finally 90\(^\circ\) counter-clockwise again to return to its initial position.
4. Rotation around the Y axis: same procedure as around the X axis.
5. Rotation around the Z axis: same procedure as around the X axis.

Participants do not need to memorize these tasks and their order. After finishing one, they are reminded of the next one even though they were initially shown all five. Rotations are not described in terms of their axis, either. Instead, they are exemplified by rotating a real object, using only one hand to avoid giving any clues about how to do it with gestures. Once the participant finishes all tasks with one technique (either mouse or gestures), they answer a questionnaire about that experience and the entire process starts again for the remaining technique, from explanation and demonstration to exploration, task execution and answering the questionnaire.

Tasks are identical for both techniques. Even though our gestural interface is capable of performing multiple transformations in response to a single gesture while the mouse interface is not, and even though this is often seen as an advantage of gesture-based interaction [14], we decided to keep the tasks simple and more comparable instead of giving gesture-based interaction this advantage it usually would have.

4.3 Metrics and Questionnaires

Like one of the experiments we discussed in Sect. 2 [15], ours can be classified as a comparison of alternative designs [1] and we also record quantitative and qualitative data, including self-reported metrics, errors or success in execution, any issues that arose during the experiments, and task completion times (despite this not being the focus in this study). Self-reported metrics are very important for us as metrics of satisfaction and ease of learning and remembering. To that end, we elaborated short questionnaires using semantic differential scales to measure these attributes and to determine participant profiles (age, gender, profession, and 3 point scales to measure how frequently they interact with each of the three technologies most significant for this experiment: mouse, gesture and 3D interaction, from never or almost never, to sometimes or almost always). To evaluate satisfaction and ease of learning and remembering, we used 5 point scales, going from very unsatisfied at 1 to very satisfied at 5 and from very hard at 1 to very easy at 5. For each of three tasks (translation, scaling and rotations, which are grouped together in the questionnaire) and for each technique (based on mouse or gestures), users choose values in these scales to answer how satisfactory it was to perform the task and how easy it was to learn and remember all inputs and avoid confusing them with each other. There is also a space reserved in the questionnaire allowing users to write any qualitative observations they wish. Another metric we use to indirectly gauge ease of learning and remembering is what we called retention errors. Given our simple tasks, there were not many errors users could commit, so what we recorded as errors are more related to faulty learning or an inability to recall the inputs required in each technique to perform a task, such as using the wrong command, forgetting to hold down the mouse button or to close a hand, manipulating the object in the opposite way than desired (for instance reducing its dimensions when they should be increased) or being unable to remember which command to use and asking to be reminded of it.

4.4 Participants

In this particular experiment, we did not wish to evaluate VIDA’s intended use as an aid in learning anatomy but only to compare the two interaction techniques described earlier. Since previous knowledge of or interest in anatomy was not necessary, we decided, for convenience, to recruit participants mostly from undergraduate students in our Information Systems program, many of whom already held jobs in the area. Some of their family members and program faculty added a slightly wider spread of age and experience with the relevant technology. No participants from the pilot study were included in the main experiment.

5 Results and Discussion

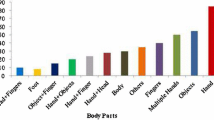

Out of our 19 participants, 6 of them were female, ages ranged from 19 to 56 years old and averaged exactly 24. Figure 3 shows how often participants use mouse, gesture and 3D interfaces. 18 of the 19 participants were very familiar with the mouse, using it frequently or always, and the remaining one uses it sometimes, while only 1 participant used gesture-based interaction frequently and 11 never or almost never used it. 3D interaction had a less skewed spread of participants, with 8 almost never using it, 6 using it sometimes and 5 frequently.

All result distributions obtained for our metrics were checked for normality using Kolmogorov-Smirnov tests. Even with \(\alpha =0.1\) the evidence was insufficient to deny the normality of all distributions of quantitative questionnaire answers. Retention errors were somewhat unusual and certainly were not distributed normally. For many tasks, the normality of the distribution of completion times could not be denied with \(\alpha =0.1\), but some distributions were more skewed to one extreme or the other and could only be taken as normal if \(\alpha =0.05\) (then again this was not our focus). Because of that, and because all distributions have the same number of samples (19), we used paired t-tests without testing for equal variance to calculate the probability of the averages being equal in the population for mouse interaction and for gesture interaction (which were independent, thanks in part to the randomized order in which the techniques were presented). These are the values of p discussed below and we adopted a significance level \(\alpha =0.05\) to test this hypothesis of difference between distributions.
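To make the comparison step concrete, the sketch below computes the paired t statistic for two sets of answers given by the same participants. It is a minimal illustration, not the analysis script used in the study: the function name is hypothetical and the sample scores are invented solely for the example.

```python
import math
from statistics import mean, stdev


def paired_t(sample_a, sample_b):
    """Return (t statistic, degrees of freedom) for paired samples."""
    if len(sample_a) != len(sample_b):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(sample_a, sample_b)]
    n = len(diffs)
    # Standard error of the mean difference (sample standard deviation).
    std_err = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / std_err, n - 1


# Invented 5-point answers from five hypothetical participants.
gesture_scores = [5, 4, 5, 4, 5]
mouse_scores = [3, 4, 3, 3, 4]
t_stat, dof = paired_t(gesture_scores, mouse_scores)
```

With 19 participants the test has 18 degrees of freedom, for which the two-tailed critical value at \(\alpha =0.05\) is approximately 2.101; a statistic of larger magnitude corresponds to p below 0.05. Where SciPy is available, `scipy.stats.ttest_rel` returns the p-value directly.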

Figure 4 shows the average values (as bars) and standard deviations (as error bars) for the answers to the questionnaires regarding subjective satisfaction for the three tasks. Satisfaction with gestures was significantly greater than that with mouse interaction for all tasks. Scaling the object was the preferred task for both mouse and gestures, but the difference was more marked with gestures. Indeed, several participants commented verbally, after the end of their experiment, that they thought “squeezing and stretching” the object with their hands to zoom in and out was the most memorable and fun task of all. Rotations in 3D were the least favorite task, which is no surprise considering that they are often cited as the most difficult aspect of 3D manipulation and one of the motivations to use more natural techniques. The fact that a single question encompassed three tasks might also have some influence in the answer. What is surprising, however, is how little difference there was between satisfaction with rotation and translation when using gestures.

Figure 5 shows the mean values and standard deviations for the answers to the questionnaires regarding ease to learn and remember how to use the interface for our three tasks. Once again, gestures were rated easier to learn and remember for all tasks. Also once again, and for the same reasons discussed above, it is no surprise that rotations were considered the most difficult tasks to learn and remember. For the rotations, however, with our chosen \(\alpha \), we cannot reject the hypothesis that mouse and gestures have the same mean in the population (p was approximately 0.06). We cannot be sure of the reason for this or for the large deviation in the distribution of answers for mouse rotations, but some qualitative user answers and our own observations hint at a hypothesis regarding our rotation gestures. These were the only gestures in which users had to move their arms back and forward along the Z axis and, particularly for the rotation around the X axis with hands grabbing the object from above and below, this was reported by many users as the most difficult gesture to execute. One user also reported in the qualitative comments imagining the virtual object between the hands (which is as we intended) and naturally wanting to move the hands circularly around the object, but noticing that the implementation favored more linear movements. It does indeed, due to the equations we used to map the 3D bimanual movements into linear mouse movements. So we believe that our gesture-based technique for 3D rotations was indeed nearly as difficult to learn as VIDA’s mouse-based technique, albeit for different reasons, physical rather than cognitive. This also appears to agree with the observations about cognitive and physical load of gestures reported in previous work [14].

Figure 6 shows the percentage of retention errors for mouse and gestures. This percentage is calculated as the ratio between the sum of retention errors incurred by all participants and the total number of attempts, successful and failed. Since all participants eventually succeeded in all tasks, this total is simply the number of participants plus the number of failed attempts. This figure shows perhaps the most impressive (and somewhat unexpected) result of our experiments. For all 19 participants completing all 5 tests, we did not witness a single retention error when using gestures. We did notice what we believe were users momentarily forgetting which gesture to use, for instance when, after being told what task they should accomplish (but not reminded about how to go about it), they would hesitate and sometimes even look at their hands or move them slightly while thinking, before closing them to start the manipulation. But eventually, before making any mistakes or asking for assistance, they could always figure out which gesture to use to achieve their desired action, and in the correct orientation, regardless of their previous experience or lack thereof with 3D or gestural interaction. We believe this is a very strong indicator of the cognitive ease to learn and remember this sort of gesture-based 3D manipulation technique (or to figure it out when forgotten) for all tasks we evaluated. With VIDA’s mouse-based technique, however, errors varied between approximately 21% and 24%, indicating that the technique was confusing for users with as little training or experience with it as our participants.
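
The retention error percentage defined above can be written as a short function. This is only a sketch of the stated ratio; the function name `retention_error_rate` and the error counts in the usage lines are hypothetical and are not taken from the experiment logs.

```python
def retention_error_rate(num_participants, num_errors):
    """Fraction of failed attempts among all attempts for one task.

    Every participant eventually succeeds exactly once, so the total number
    of attempts is the number of participants plus the number of failures.
    """
    if num_participants < 0 or num_errors < 0:
        raise ValueError("counts must be non-negative")
    total_attempts = num_participants + num_errors
    if total_attempts == 0:
        raise ValueError("no attempts recorded")
    return num_errors / total_attempts


# Hypothetical counts for a group of 19 participants (illustrative only).
no_errors = retention_error_rate(19, 0)    # no failed attempts at all
some_errors = retention_error_rate(19, 5)  # 5 failures out of 24 attempts
```

Note that, under this definition, the denominator grows with each error, so the rate can approach but never reach 100%.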

Using Pearson’s product-moment correlation coefficient, we found only negligible correlation between participants’ previous experience and most other variables we have discussed up to now, with a few exceptions. We found moderate positive correlations (between 0.4 and 0.5) between self-reported ease to learn rotations using gestures and previous experience with both 3D and gestures, and also between satisfaction with this same task and previous experience with gestures. This becomes more relevant when we recall that rotations were the tasks reported as most difficult to learn and remember and least satisfactory to perform, and it leads us to believe that, as the experience of users with both gesture-based interfaces and 3D interaction in general increases, we may find an even greater advantage to the use of gestures for these difficult tasks. It also goes somewhat against the idea that a large portion of user satisfaction with gestures is due to their novelty and thus should decrease with increasing experience. Then again, these were not strong correlations and we had a single user who was very experienced with gestures, so our evidence for these speculations is not strong. But they do suggest interesting future experiments to try to prove or disprove them. Given that only one of our participants was not very experienced in the use of computer mice, it comes as no surprise that we could not find significant correlations between that variable and any others. But it is curious that, when using VIDA’s mouse-based technique, we could find no correlation between experience with 3D interaction and any other variables either, unlike what happened between gestures and rotations. This might suggest that, for 3D manipulation, even for users experienced with using a mouse, its use within the technique, and not the user’s previous experience with 3D interaction, was the dominant factor in their satisfaction and ease to learn and remember.
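
For reference, Pearson’s product-moment coefficient between two questionnaire variables can be computed as in the sketch below. It assumes two equally long lists of numeric answers, one value per participant; the function name `pearson_r` is hypothetical and this is not the code used in our analysis.

```python
import math


def pearson_r(xs, ys):
    """Pearson's product-moment correlation coefficient of two samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(ss_x * ss_y)
```

The coefficient ranges from -1 to 1; a variable with no spread, such as mouse experience when nearly all participants report the same level, leaves little room for any correlation to be detected.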

Finally, Fig. 7 shows the mean values of completion times, in seconds, for all tasks, not grouping rotations together for once. These times were measured from the moment when users started the manipulation (by clicking or closing their hand), using the correct command to do it, to the moment when the task was completed. Scaling was not only the preferred task but also the fastest to perform. Given that we had calibrated the system to use ample gestures and that we did not explore one of the main advantages of gestures regarding execution times, namely that it is easier to perform several transformations at once using them, we expected gestures to show longer execution times than the mouse. This was indeed true for three tasks, scaling and rotating around X and Y, in which mouse interaction was faster by between 1.1 s and 1.9 s, or 15% and 20%, always with \(p<0.05\) (which also came as somewhat of a surprise). For translation using only one hand, however, gestures were significantly faster than using a mouse (31% faster, \(p<0.05\)). Results for rotation around Z are interesting. With a mouse it took slightly longer on average, but the means and distributions were nearly identical. The other two rotations, around X and Y, were considered by users the most difficult to perform, physically, because they demanded moving the arms back and forward along the Z axis (in the future we will try to avoid this). Without facing the same problem, rotations around Z were completed as quickly with gestures as with a mouse.

We took note of a couple of issues during the experiments but believe they had only a mild influence on our results, if any. The first was that, sometimes (6 times in the entire experiment, in fact), VIDA’s display would lock up for a small but noticeable period of time, which ended up increasing completion time for some tasks. This problem affected both mouse and gestures sporadically. The second is that some users would repeat the same movement several times, either because they enjoyed it or because they wanted to get the object in just the right position, which was rather subjective and difficult to ascertain, since only that single object was shown on the screen. This happened only 4 times, but always with gestures.

Finally, some of the qualitative comments from participants also bring up interesting points. Two of them complained that VIDA’s upper limit for transformation speeds was particularly annoying when using gestures but did not make the same comment regarding mouse interaction. Given the greater physical effort involved in the use of gestures, it is not a great surprise that being forced to act more slowly than one would like causes greater annoyance in this case. We believe this effort is the same reason why two other participants complained that moving the arms was “harder” than moving the mouse (even though one said closing the hand to “grab” was much better than clicking a mouse button) and one reported being hindered in some of the gestural manipulations by a shoulder which had been broken in the past. Another participant criticized our use of ample gestures. It is interesting to notice that, except for the rotation around X, all gestures could have been performed while following advice to create more ergonomic gestural interfaces [11]: keeping elbows down and close to the body. We, however, calibrated the system favoring precision over speed, in a way that, without using ample gestures with arms further away from the body, tasks would require more repeated movements, grabbing and releasing the object more often. We also always demonstrated gestures with arms well extended when explaining the technique to participants, inducing them to do the same. With just these two actions, we induced all 19 users to interact in a less ergonomic way than our gesture-based technique allowed. We also did not take advantage of the possibility of using Kinect at closer distances or while sitting down. Two participants complained about having to stand to use gestures, one because he felt more comfortable sitting down, the other because he said object visualization was worse when standing farther from the computer monitor.
In addition, several users wrote down comments to the effect that using the gestures was of great assistance in memorizing the technique and felt more natural, intuitive or fun, or complained that trying to memorize VIDA’s mouse interaction technique was very difficult.

6 Conclusions

To our knowledge, this is the first report specifically comparing gesture-based and mouse-based interaction for virtual object manipulation in 3D with a focus on satisfaction, learnability and memorability rather than mostly or only on performance metrics such as task completion time. The gesture-based technique we designed and implemented for this task, as well as the design of our experiment, had several points unfavorable to gestures. We neglected to ask participants about their handedness and, although we could have easily implemented functionality to allow the use of their dominant hand for translations, we did not and instead forced all of them to use the right hand (when we later realized that, we were surprised that no participants had complained about it, even though we did observe a few who were left-handed). Our implementation using the Kinect sensor forced participants to use gestures only while standing, although that should not have been a technical necessity, and to stand further away from our display than needed. Our gesture system calibration for precision over speed and our technique demonstrations to participants induced them to use ample gestures which were less ergonomic. VIDA’s upper limit for transformation speeds, which is not at all a necessary component for either technique, was perceived more negatively when using gestures than with a mouse. Users imagined virtual objects between their hands and wanted to naturally move them circularly around the object, but our implementation favored more linear gestures because of the equations we used to map 3D bimanual movements into linear mouse movements. Finally, our experimental tasks did not allow gestures to benefit from one of their greatest advantages in 3D manipulation, the ability to combine several transformations in one gesture with far more ease than with most mouse-based techniques.
Clearly, there are several improvements we can strive for in future work to design and implement a gesture-based interaction technique with much greater usability, not to mention new experiments we could design to play to the strengths of gestural interaction. And yet, despite all these disadvantages imposed on the gesture-based interaction, we believe our greatest contribution with this work is to show unequivocally that there is indeed a statistically significant difference between the use of gestures and of a mouse for 3D manipulation regarding user satisfaction and interface learnability and memorability, and that for all subtasks of manipulation this difference clearly favors the use of gestures, at least with the techniques we evaluated and most likely with similar alternatives. Our log of retention errors constitutes rather impressive evidence for this advantage. With mouse-based interaction, these errors, nearly always derived from failures in learning or remembering a technique correctly, varied between approximately 21% and 24% of all interactions, but for all 19 participants completing all 5 tests, we did not witness a single retention error when using gestures. While we suspect some participants may indeed have forgotten which gesture to use in certain tasks, they could always quickly remember or figure them out again on their own, without asking for assistance or first making any mistakes. Our experiments also pointed out several ways in which we can improve our gesture-based interaction techniques.
Finally, while at first we believed that part of the advantage of using gestures was in their novelty and should fade with time as users became more experienced with them, the correlations between previous participant experience and the rest of the questionnaire give some evidence to the contrary. They show positive correlations between experience with gestures and both satisfaction and ease to learn and remember, indicating that as users become more experienced in the use of gestures, they might come to be more satisfied with them, not less so.

References

1. Albert, W., Tullis, T.: Measuring the User Experience: Collecting, Analyzing, and Presenting Usability Metrics. Newnes, Oxford (2013)

2. Benavides, A., Khadka, R., Banic, A.: Physically-based bimanual volumetric selection for immersive visualizations. In: Chen, J.Y.C., Fragomeni, G. (eds.) HCII 2019. LNCS, vol. 11574, pp. 183–195. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-21607-8_14

3. Bernardes, J., Nakamura, R., Tori, R.: Comprehensive model and image-based recognition of hand gestures for interaction in 3D environments. Int. J. Virtual Reality 10(4), 11–23 (2011). https://doi.org/10.20870/IJVR.2011.10.4.2825. https://ijvr.eu/article/view/2825

4. Bolt, R.A.: “Put-that-there”: voice and gesture at the graphics interface. SIGGRAPH Comput. Graph. 14(3), 262–270 (1980). https://doi.org/10.1145/965105.807503

5. Farhadi-Niaki, F., GhasemAghaei, R., Arya, A.: Empirical study of a vision-based depth-sensitive human-computer interaction system. In: Proceedings of the 10th Asia Pacific Conference on Computer Human Interaction, pp. 101–108 (2012)

6. Ha, T., Woo, W.: An empirical evaluation of virtual hand techniques for 3D object manipulation in a tangible augmented reality environment. In: 2010 IEEE Symposium on 3D User Interfaces (3DUI), pp. 91–98. IEEE (2010)

7. Juhnke, B., Berron, M., Philip, A., Williams, J., Holub, J., Winer, E.: Comparing the Microsoft Kinect to a traditional mouse for adjusting the viewed tissue densities of three-dimensional anatomical structures. In: Medical Imaging 2013: Image Perception, Observer Performance, and Technology Assessment, vol. 8673, p. 86731M. International Society for Optics and Photonics (2013)

8. Kim, Y.M., Rhiu, I., Yun, M.H.: A systematic review of a virtual reality system from the perspective of user experience. Int. J. Hum.-Comput. Interact. 1–18 (2019). https://doi.org/10.1080/10447318.2019.1699746

9. LaViola Jr., J.J., Kruijff, E., McMahan, R.P., Bowman, D., Poupyrev, I.P.: 3D User Interfaces: Theory and Practice. Addison-Wesley Professional, Boston (2017)

10. Moustakas, K., Nikolakis, G., Tzovaras, D., Strintzis, M.G.: A geometry education haptic VR application based on a new virtual hand representation. In: 2005 IEEE Proceedings, VR 2005, Virtual Reality, pp. 249–252. IEEE (2005)

11. Nielsen, M., Störring, M., Moeslund, T.B., Granum, E.: A procedure for developing intuitive and ergonomic gesture interfaces for HCI. In: Camurri, A., Volpe, G. (eds.) GW 2003. LNCS (LNAI), vol. 2915, pp. 409–420. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-24598-8_38

12. dos Santos Nunes, E.P., Nunes, F.L.S., Roque, L.G.: Feasibility analysis of an assessment model of knowledge acquisition in virtual environments: a case study using a three-dimensional atlas of anatomy. In: Proceedings of the 19th Americas Conference on Information Systems - AMCIS 2013, Chicago, USA (2013)

13. Poupyrev, I., Ichikawa, T.: Manipulating objects in virtual worlds: categorization and empirical evaluation of interaction techniques. J. Vis. Lang. Comput. 10(1), 19–35 (1999)

14. Reifinger, S., Laquai, F., Rigoll, G.: Translation and rotation of virtual objects in augmented reality: a comparison of interaction devices. In: 2008 IEEE International Conference on Systems, Man and Cybernetics, pp. 2448–2453. IEEE (2008)

15. Rodrigues, P.G., Raposo, A.B., Soares, L.P.: A virtual touch interaction device for immersive applications. Int. J. Virtual Reality 10(4), 1–10 (2011)

16. Shneiderman, B., et al.: Designing the User Interface: Strategies for Effective Human-Computer Interaction. Pearson, Essex (2016)

17. Vrellis, I., Moutsioulis, A., Mikropoulos, T.A.: Primary school students’ attitude towards gesture based interaction: a comparison between Microsoft Kinect and mouse. In: 2014 IEEE 14th International Conference on Advanced Learning Technologies, pp. 678–682. IEEE (2014)

18. Wang, R., Paris, S., Popović, J.: 6D hands: markerless hand-tracking for computer aided design. In: Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, pp. 549–558 (2011)

19. Wipfli, R., Dubois-Ferriere, V., Budry, S., Hoffmeyer, P., Lovis, C.: Gesture-controlled image management for operating room: a randomized crossover study to compare interaction using gestures, mouse, and third person relaying. PLoS ONE 11(4) (2016)

20. Zimmerman, T.G., Lanier, J., Blanchard, C., Bryson, S., Harvill, Y.: A hand gesture interface device. ACM SIGCHI Bull. 18(4), 189–192 (1986)

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Bernardes, J. (2020). Comparing a Mouse and a Free Hand Gesture Interaction Technique for 3D Object Manipulation. In: Kurosu, M. (ed.) Human-Computer Interaction. Multimodal and Natural Interaction. HCII 2020. Lecture Notes in Computer Science, vol 12182. Springer, Cham. https://doi.org/10.1007/978-3-030-49062-1_2

DOI: https://doi.org/10.1007/978-3-030-49062-1_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-49061-4

Online ISBN: 978-3-030-49062-1

eBook Packages: Computer Science, Computer Science (R0)