Abstract

Research on scalable peer-feedback design, on students’ peer-feedback perceptions and thereby on the use of peer-feedback in Massive Open Online Courses (MOOCs) is scarce. To address this gap, this study explored the use of peer-feedback design to gain insight into student perceptions and to derive design guidelines. The findings of this pilot study indicate that peer-feedback training that focuses on clarity, transparency and the possibility to practice beforehand increases students’ willingness to participate in future peer-feedback activities and training, and improves their perceived usefulness of, preparedness for and general attitude towards peer-feedback. The results of this pilot will be used as a basis for future large-scale experiments that compare different designs.

1 Introduction

Massive Open Online Courses (MOOCs) are a popular way of providing online courses in various domains to the masses. Due to their open and online character, they enable students from different backgrounds and cultures to participate in (higher) education. Studying in a MOOC mostly means freedom in time, location, and engagement; however, MOOCs differ in their educational design and teaching methods. The high heterogeneity of MOOC students regarding, for example, their motivation, knowledge, language (skills), culture, age and time zone entails benefits but also challenges for the course design and the students themselves. On the one hand, a MOOC offers people the chance to interact with each other and exchange information with peers from different backgrounds, perspectives, and cultures [1]. On the other hand, a MOOC cannot serve the learning needs of such a heterogeneous group of students [1, 2, 3]. Additionally, large-scale student participation makes it challenging for teachers, but also for students, to interact with each other. How can student learning be supported in a course with hundreds or even thousands of students? Are MOOCs able to provide elaborated formative feedback to large student numbers? To what extent can complex learning activities in MOOCs be supported and provided with elaborated formative feedback? When designing education for large and heterogeneous numbers of students, teachers opt for scalable learning, assessment and feedback activities such as videos, multiple choice quizzes, simulations and peer-feedback [4]. In theory, all these activities have the potential to be scalable and thus to be used in large-scale courses; however, when applied in practice they lack educational quality. Personal support is limited, feedback is rather summative and/or not elaborated, and (feedback on) complex learning activities is lacking.
Therefore, the main motivation of any educational design should be to strive for high educational scalability, which is the capacity of an educational format to maintain high quality despite increasing or large numbers of learners at a stable level of total costs [4]. It is not only a matter of enabling feedback to the masses but also, and even more, of providing high-quality design and education to the masses. Thus, any educational design should combine a quantitative with a qualitative perspective. When looking at the term feedback and what it means to provide feedback in a course, one can find several definitions. A quite recent one is that of [5]: “Feedback is a process whereby learners obtain information about their work in order to appreciate the similarities and differences between the appropriate standards for any given work” (p. 205). This definition includes several important characteristics of feedback, such as being a process, requiring learner engagement and being linked to task criteria/learning goals. Ideally, students go through the whole circle and receive the needed feedback type at each new step. In recent years feedback has increasingly been seen as a process, a loop, a two-way communication between the feedback provider and the feedback receiver [6, 7]. In MOOCs, feedback is often provided via quizzes in an automated form or in forum discussions. Additionally, some MOOCs give students an active part in the feedback process by providing peer-feedback activities in the course. However, giving students an active part in the feedback process requires that students understand the criteria on which they receive feedback. It also implies that students understand how they can improve their performance based on the received feedback. By engaging students more in the feedback process, they will eventually learn how to assess themselves and provide themselves with feedback.
However, before students achieve such a high level of self-regulation, it is important that they practice providing and receiving feedback. When practicing, students should become familiar with three types of feedback: feed-up (where am I going?), feedback (how am I doing?) and feed-forward (how do I close the gap?) [8]. These types of feedback are usually used in formative assessment, also known as ‘Assessment for Learning’, where students receive feedback throughout the course instead of at the end of a course. Formative feedback, when elaborated, enables students to reflect on their own learning and provides them with information on how to improve their performance [9]. To provide formative feedback, the feedback provider has to evaluate a peer’s work with the aim of supporting the peer and improving his/her work. Therefore, positive as well as critical remarks must be given, supplemented with suggestions for improvement [10]. In the following sections, we will take a closer look at the scalability of peer-feedback and how it is perceived by students, and we will argue that it is not the idea of peer-feedback itself that poses the challenge but rather the way it is designed and implemented in a MOOC.

1.1 Peer-Feedback in Face-to-Face Higher Education

Increased student-staff ratios and more diverse student profiles challenge higher education and influence curriculum design in several ways, such as a decrease in personal teacher feedback and a decrease in creative assignments for which students require personal feedback on their text and/or design [1, 5, 11, 12]. At the same time, however, feedback is seen as a valuable means to ensure interaction and personal student support in large, and therefore often impersonal, classes [13]. Research on student perceptions of peer-feedback in face-to-face education shows that students are not always satisfied with the feedback they receive [14, 15]. The value and usefulness of feedback are not perceived as high, especially if the feedback is provided at the end of the course and is therefore of no use for learning and does not need to be implemented in follow-up learning activities [11]. It is expected that student perception of feedback can be enhanced by providing elaborated formative feedback throughout the course on learning activities that build upon each other. This, however, implies that formative feedback is an embedded component of the curriculum rather than an isolated, self-contained learning activity [5]. Research by [13] found that students value high-quality feedback, meaning timely and comprehensive feedback that clarifies how they perform against the set criteria and which actions are needed in order to improve their performance. These results correspond to the distinction between feedback and feed-forward made by [8]. Among other aspects, feedback was perceived as a guide towards learning success, as a learning tool and as a means of interaction [13]. However, unclear expectations and criteria regarding the feedback and learning activity lead to unclear feedback and thus to disappointing peer-feedback experiences [11, 12].
The literature on design recommendations for peer-feedback activities is extensive and often comes down to the same recommendations, the most important of which are briefly listed in Table 1 [5, 16, 17].

A rubric is a peer-feedback tool often used for complex tasks such as reviewing essays or designs. There are no general guidelines on how to design rubrics for formative assessment and feedback; however, they are often designed as two-dimensional matrices that include the following two elements: performance criteria and descriptions of student performance at various quality levels [18]. Rubrics provide students with transparency about the criteria on which their performance is reviewed and about their level of performance, which makes the feedback more accessible and valuable [7, 16, 17]. However, a rubric alone does not explain the meaning and goals of the chosen performance criteria. Therefore, students need to be informed about the performance and quality criteria before using a rubric in a peer-feedback activity. Although rubrics include an inbuilt feed-forward element in the form of the various performance levels, it is expected that students need more elaboration on how to improve their performance to reach the next/higher performance level. For a rubric to be used effectively, students need to be informed about and trained in its criteria [17].
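The two-dimensional structure of a rubric described above can be illustrated with a small sketch. It is not the rubric used in this study: the criterion names and descriptors are hypothetical placeholders, and the three quality levels are an assumption chosen for illustration. The helper `feed_forward` shows the inbuilt feed-forward element, namely that the descriptor of the next higher level tells a student what to aim for.

```python
from typing import Optional

# Quality levels, ordered from lowest to highest (the columns of the matrix).
LEVELS = ("low", "average", "high")

# Hypothetical rubric: each performance criterion (a row) maps every
# quality level to a description of student performance at that level.
RUBRIC = {
    "problem description": {
        "low": "The problem cannot be identified from the text.",
        "average": "The problem is named but not explained.",
        "high": "The problem is named and explained with evidence.",
    },
    "proposed actions": {
        "low": "No actions are proposed.",
        "average": "Actions are proposed but not linked to the problem.",
        "high": "Actions are proposed and linked to the problem.",
    },
}


def descriptor(criterion: str, level: str) -> str:
    """Look up one cell of the rubric matrix."""
    return RUBRIC[criterion][level]


def feed_forward(criterion: str, level: str) -> Optional[str]:
    """Return the descriptor of the next higher level, or None at the top."""
    position = LEVELS.index(level)
    if position + 1 == len(LEVELS):
        return None
    return RUBRIC[criterion][LEVELS[position + 1]]
```

As the surrounding text notes, such a lookup only names the next level; it does not replace an explanation of how to get there.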

1.2 Peer-Feedback in (Open) Online Education

Large student numbers and high heterogeneity in the student population challenge the educational design of open online and blended education [3, 10]. A powerful aspect of (open) online education compared to face-to-face education is its technological possibilities. However, even with technology, large scale remains a challenge for students who want to interact with teachers [19]. When it comes to providing students with feedback, hints or recommendations, automated feedback can easily be provided to large student numbers. However, the personal value of automated feedback is limited to quizzes and learning activities in which the semantic meaning of student answers is not taken into account [1]. Providing feedback on essays or design activities, even with technological support, is still highly complex [20]. In courses with large-scale student participation, peer-feedback is used for its scalable potential, mainly with a quantitative approach (managing large student numbers) rather than a qualitative one [1].

Research focusing on student perceptions regarding the quality, fairness, and benefits of peer-feedback in MOOCs shows mixed results [21, 22], ranging from low student motivation to provide peer-feedback [10] and students’ mistrust of the quality of peer-feedback [23] to students recommending that peer-feedback be included in future MOOCs [20].

Although reviewing peers’ work, detecting strong and weak aspects, and providing hints and suggestions for improvement train students in evaluating the quality of work, students first need to have the knowledge and skills to do so [3]. This raises the question of whether and how students can learn to provide and value peer-feedback. Although peer-feedback is used in MOOCs, it is not clear how students are prepared and motivated to actually participate in peer-feedback activities. Research by [18] has shown that students prefer clear instructions for learning activities and transparency of the criteria, for example via rubrics or exemplars. These findings are in line with research by [21], who found that especially in MOOCs the quality of the design is of great importance since participation is not mandatory. MOOC students indicated that they prefer clear and student-focused design: “Clear and detailed instructions. A thorough description of the assignment, explaining why a group project is the requirement rather than an individual activity. Access to technical tools that effectively support group collaboration” [21, p. 226]. The design of peer-feedback is influenced by several aspects such as the technical possibilities of the MOOC platform and the topic and learning goals of the MOOC. Nevertheless, some pedagogical aspects of peer-feedback design, such as those listed in Table 1, are rather independent of the technological and course context mentioned above. Similar to research in face-to-face education, literature about peer-feedback in MOOCs shows that clear instructions and review criteria, cues and examples are needed not only to guide students in the review process [1, 3, 24] but also to prepare them for the review activity so that they trust their own abilities [25].

To extend our understanding of students’ peer-feedback perceptions and how they can be improved by scalable peer-feedback design, we focus on the following research question: “How do instructional design elements of peer-feedback (training) influence students’ peer-feedback perception in MOOCs?” The instructional design elements are constructive alignment, clarity of instruction, practice on task and examples from experts (see Table 1). To investigate students’ perceptions, we developed a questionnaire that included four criteria derived from the Reasoned Action approach by [26]: Willingness (intention), Usefulness (subjective norm), Preparedness (perceived behavioral control) and general Attitude. The four criteria are explained in more detail in the method section. By investigating this research question, we aim to provide MOOC teachers and designers with useful recommendations on how to design peer-feedback for courses with large-scale participation.

This study explores whether students perceive peer-feedback as useful for their own learning when the value/usefulness of the peer-feedback activity is explained to them and the activity is embedded in the course. We also expect that their perceived preparedness will increase when students are given examples and the chance to practice beforehand with the peer-feedback tools and criteria. The general attitude regarding peer-feedback should improve when valuable, clearly described learning activities are set up that are aligned with the course.

2 Method

2.1 Background MOOC and Participants

To answer the question of how instructional design elements of peer-feedback training influence students’ learning experience in MOOCs, we set up an explorative study which contained a pre- and post-questionnaire, peer-feedback training, and a peer-feedback activity. The explorative study took place in the last week of a MOOC called Marine Litter (https://www.class-central.com/mooc/4824/massive-open-online-course-mooc-on-marine-litter). The MOOC (in English) was offered by UNEP and the Open University of the Netherlands on the EdCast platform. During the 15-week runtime students could follow two tracks: (1) the Leadership Track, which took place in the first half of the MOOC and in which students were introduced to marine litter problems and taught how to analyse them, and (2) the Expert Track, which took place in the second half of the MOOC and in which more challenging concepts were taught and students learned how to develop an action plan to combat a marine litter problem of choice.

The explorative study took place in the last week of the MOOC from June to August 2017 and was linked to the final assignment, in which students were asked to develop an action plan to reduce and/or prevent a specific marine litter problem. Students could work in groups or individually on the assignment and would receive a certificate of participation by sending in their assignment. Given the complexity of the assignment, it would be useful for the students to get a critical review and feedback on their work so that, if necessary, they could improve it before handing it in. Tutor feedback was not feasible, whereas reviewing others’ assignments would be beneficial to both sender and receiver [19]. Therefore, we added a peer-feedback activity including training. When trying to combat marine litter problems, collaboration is important, since several stakeholders with different needs and goals are often involved. Being able to receive but also to provide feedback therefore added value to the MOOC. Participating in the peer-feedback training and activity was a voluntary, extra activity, which might explain the low participant numbers for our study (N = 18 out of N = 77 active students). Although not our first choice, this decision suited the design of the MOOC best. Of the 2690 students enrolled, 77 finished the MOOC.

2.2 Design

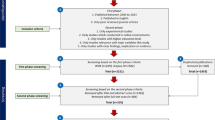

The peer-feedback intervention consisted of five components, shown in simplified form in Fig. 1. Participation in the peer-feedback intervention added a study load of 45 min over a one-week period. Before starting with the peer-feedback training, students were asked to fill in a pre-questionnaire. After the pre-questionnaire, students could get extra instructions and practice with the peer-feedback criteria before participating in the peer-feedback activity. When participating in the peer-feedback activity, students had to send in their task and had to provide feedback on their peers’ work via a rubric. Whether and in which order students participated in the different elements of the training was up to them, but they had access to all elements at any time. After having participated in the peer-feedback activity, students were again asked to fill in a questionnaire.

2.3 Peer-Feedback Training

The design of the peer-feedback training was based on the design recommendations from the literature mentioned previously. All instructions and activities were designed in collaboration with the MOOC content experts. In the instructions, we explained to students what the video, the exercise, and the peer-feedback activity were about. Additionally, we explained the value of participating in these activities (“This training is available for those of you who want some extra practice with the DPSIR framework or are interested in learning how you can review your own or another DPSIR.”). The objectives of the activities were made clear, as well as the link to the final assignment (“...it is a great exercise to prepare you for the final assignment and receive some useful feedback!”). An example video (duration 4:45 min), which was tailored to the content of previous learning activities and the final assignment of the MOOC, was developed to give students insight into the peer-feedback tool (a rubric) they had to use in the peer-feedback activity later on. The rubric was shown, the quality criteria were explained and we showed students how an expert would use the rubric when asked to review a peer’s text. The rubric including the quality criteria was also used in the peer-feedback activity, so the video prepared students for the actual peer-feedback activity later on in the MOOC.

In addition to the video, students could actively practice with the rubric itself. We designed a multiple-choice quiz in which students were asked to review a given text excerpt. To review the quality of the text excerpt, students had to choose one of the three quality scores (low, average or high) and the corresponding feedback and feed-forward. After indicating the most suitable quality score and feedback, students received automated feedback. In the automated feedback, students received an explanation of why their choice was (un)suitable and which option would have been more suitable. By providing elaborated feedback we wanted to make the feedback as meaningful as possible for the students [8, 9, 10, 14]. By providing students with clear instructions, giving them examples and the opportunity to practice with the tool itself, we implemented all of the above-mentioned design recommendations given by [1, 3, 18, 21, 24].
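The logic of such a practice item can be sketched as follows. This is a minimal illustration and not the quiz implementation used in the MOOC: the class `PracticeItem`, the function `automated_feedback`, the excerpt and all explanation texts are hypothetical. The sketch only mirrors the behaviour described above, in which a student picks one of three quality scores and receives an explanation of why the choice was (un)suitable and, if needed, which option would have fitted better.

```python
from dataclasses import dataclass
from typing import Dict

SCORES = ("low", "average", "high")


@dataclass(frozen=True)
class PracticeItem:
    """One quiz item: a text excerpt plus an explanation per quality score."""
    excerpt: str
    best_score: str
    explanations: Dict[str, str]


def automated_feedback(item: PracticeItem, chosen: str) -> str:
    """Explain why the chosen score fits or not, and name the better option."""
    if chosen not in SCORES:
        raise ValueError(f"unknown quality score: {chosen!r}")
    if chosen == item.best_score:
        return f"Your choice '{chosen}' is suitable: {item.explanations[chosen]}"
    return (
        f"Your choice '{chosen}' is unsuitable: {item.explanations[chosen]}\n"
        f"A better option is '{item.best_score}': "
        f"{item.explanations[item.best_score]}"
    )


# Hypothetical example item (invented text, not taken from the course).
ITEM = PracticeItem(
    excerpt="Plastic bags are a driver of marine litter.",
    best_score="average",
    explanations={
        "low": "The excerpt does name a driver, so it is not empty.",
        "average": "A driver is named, but it is not explained.",
        "high": "The driver is only named; an explanation is missing.",
    },
)

if __name__ == "__main__":
    print(automated_feedback(ITEM, "high"))
```

Keeping one explanation per answer option is what makes the feedback elaborated rather than a bare right/wrong signal.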

2.4 Peer-Feedback Activity

After the peer-feedback exercise, students got the chance to participate in the peer-feedback activity. The peer-feedback activity was linked to the first part of the final assignment of the MOOC, in which students had to visualize a marine litter problem by means of a framework called DPSIR, a useful adaptive management tool for analyzing environmental problems and mapping potential responses. To keep the peer-feedback activity focused (and therefore not too time-consuming), students were asked to provide feedback on two aspects of the DPSIR framework. Beforehand, students received instructions and rules about the peer-feedback process. To participate in the peer-feedback activity, students had to send in the first part of their assignment via the peer-feedback tool of the MOOC. They then automatically received the assignment of a peer to review and a rubric in which they had to provide a quality score (low, average or high), feedback and a recommendation on the two selected aspects. There was also space for additional remarks. Within three weeks, students had to complete the first part of the final assignment, send it in and provide feedback, and, if desired, could use the received peer-feedback to improve their own assignment. After these three weeks, it was no longer possible to provide or receive peer-feedback. The peer-feedback activity was tailored to the MOOC set-up, in which students could write the final assignment either individually or in groups. To coordinate the peer-feedback process within groups, the group leader was made responsible for providing peer-feedback as a group, sending the peer-feedback in and sharing the received feedback on their own assignment with the group. Students who participated individually in the final assignment also provided the peer-feedback individually.

2.5 Student Questionnaires

Before the peer-feedback training and after the peer-feedback activity, students were asked to fill out a questionnaire. In the pre-questionnaire, we asked students about their previous experience with peer-feedback in MOOCs and in general. Nineteen items were divided among five variables: seven items were related to students’ prior experience, two to students’ willingness to participate in peer-feedback (training), three to the usefulness of peer-feedback, two to students’ preparedness to provide feedback and five to their general attitude regarding peer-feedback (training) (see Appendix 1). After participating in the peer-feedback activity, students were asked to fill in the post-questionnaire (see Appendix 1). The post-questionnaire informed us about students’ experiences with and opinions of the peer-feedback exercises and activities. It also showed whether and to what extent students’ attitude regarding peer-feedback had changed compared to the pre-questionnaire. The post-questionnaire contained 17 items, which were divided among four variables: two items regarding willingness, five items about perceived usefulness, five items about preparedness and another five items about general attitude. Students had to score the items on a 7-point Likert scale, varying from “totally agree” to “totally disagree”.

3 Results

The aim of this study was to get insight into how instructional design elements of peer-feedback (training) influence students’ peer-feedback perception in MOOCs. To investigate this question, we collected self-reported student data with two questionnaires. The overall participation in the peer-feedback training and activity was low and thus the response to the questionnaires was limited. Therefore, we cannot report statistically significant results but rather preliminary findings, which will be used in future work. Nevertheless, the overall tendency of our preliminary findings is a positive one, since students’ perception increased on all four variables (willingness, usefulness, preparedness and general attitude).

3.1 Pre- and Post-questionnaire Findings

A total of twenty students filled in the pre-questionnaire, of whom two did not give their informed consent to use their data. Of the remaining eighteen students, only nine filled in the post-questionnaire. From these nine students, we only used the post-questionnaire results of six, since the responses of the other three showed that they had not participated in the peer-feedback activities, resulting in ‘not applicable’ answers. Only five of the eighteen students provided and received peer-feedback.

Of the eighteen students who responded to the pre-questionnaire, the majority had never participated in a peer-feedback activity in a MOOC (61.1%) and had never participated in peer-feedback training in a MOOC (77.8%). The majority was also not familiar with using a rubric for peer-feedback purposes (66.7%).

The results of the pre- and post-questionnaire (N = 18) show an increase in agreement for all items. Prior to the peer-feedback training and activity, the students already had a positive attitude towards peer-feedback. They were willing to provide peer-feedback and to participate in peer-feedback training activities. Additionally, they saw great value in reading peers’ comments. Students (N = 18) did not feel highly prepared to provide peer-feedback but found it rather important to receive instructions/training in how to provide peer-feedback. In general, students also agreed that peer-feedback should be trained and provided with some explanations. Comparing the findings of the pre-questionnaire (N = 18) with students’ responses on the post-questionnaire (N = 6), it can be seen that the overall perception regarding peer-feedback (training) improved. Students’ willingness to participate in future peer-feedback training activities increased from M = 2.0 to M = 2.7 (scores could range from −3 to +3). After having participated in our training and peer-feedback activity, students found it more useful to participate in a peer-feedback training and activity in the future (M = 2.2 to M = 2.7). Additionally, students scored the usefulness of our training highly (M = 2.7) because it provided them with guidelines on how to provide peer-feedback themselves. Students felt more prepared to provide feedback after having participated in the training (M = 1.9 to M = 2.3), and they found it more important that peer-feedback is a part of each MOOC after having participated in our training and activity (M = 1.4 to M = 2.7).
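How mean scores on a −3 to +3 range can be obtained from 7-point Likert responses is sketched below. The sketch rests on assumptions: the text gives only the two end labels of the scale and the score range, so the five intermediate labels are hypothetical, and mapping “totally agree” to +3 is inferred from the statement that higher means reflect more agreement. The responses in the example are invented for illustration and are not study data.

```python
from statistics import mean
from typing import Iterable

# Assumed 7-point scale. Only the two end labels come from the text;
# the intermediate labels are hypothetical placeholders.
LABELS = (
    "totally disagree",
    "disagree",
    "somewhat disagree",
    "neutral",
    "somewhat agree",
    "agree",
    "totally agree",
)


def to_score(label: str) -> int:
    """Map a label to -3..+3, assuming 'totally agree' corresponds to +3."""
    return LABELS.index(label) - 3


def item_mean(responses: Iterable[str]) -> float:
    """Mean score of one questionnaire item, rounded to one decimal."""
    return round(mean(to_score(label) for label in responses), 1)


if __name__ == "__main__":
    # Invented responses of six hypothetical students to one item.
    example = [
        "totally agree", "totally agree", "agree",
        "somewhat agree", "neutral", "somewhat disagree",
    ]
    print(item_mean(example))  # scores 3, 3, 2, 1, 0, -1 give 1.3
```

With only six post-questionnaire respondents, a single changed answer shifts such a mean by up to one point, which is one reason the reported differences are treated as preliminary.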

3.2 Provided Peer-Feedback

In addition to the questionnaire findings, we also investigated the provided peer-feedback qualitatively. In total, five students provided feedback via the feedback tool in the MOOC. To get an overview, we clustered the received and provided peer-feedback into two general types: concise general feedback and elaborated specific feedback. Three out of five students provided elaborated feedback with specific recommendations on how to improve their peer’s work. Their recommendations focused on the content of their peer’s work and were supported by examples such as “Although the focus is well-described, the environment education and the joint action plan can be mentioned.” When giving positive remarks, none of the students explained why they found their peer’s work good; however, when giving critical remarks, students supported their recommendations with examples.

4 Conclusions

In this paper, we investigated how instructional design elements of peer-feedback training influence students’ perception of peer-feedback in MOOCs. Although based on a small number of participants, the findings are encouraging: they suggest that peer-feedback training consisting of an instruction video, peer-feedback exercises and examples positively influences students’ attitude regarding peer-feedback. We found that students’ initial attitude towards peer-feedback was positive and that their perceptions improved after having participated in the training and the peer-feedback activity. However, since participation in the peer-feedback training and activity was not a mandatory part of the final assignment, we cannot draw any general conclusions. Our findings indicate that designing a peer-feedback activity according to design principles recommended in the literature, e.g. giving clear instructions and communicating expectations and the value of participating in peer-feedback [5, 13, 16, 17], not only increases students’ willingness to participate in peer-feedback but also increases their perceived usefulness and preparedness. Our findings also seem to support the recommendations by [3, 17], who found that, in order to benefit from peer-feedback, students need to be trained beforehand by providing them with examples and explaining to them how to use the tools and how to interpret the quality criteria in a rubric.

In the peer-feedback training, students were informed about how to provide helpful feedback and recommendations before getting the opportunity to practice with the rubric. The qualitative findings show that the feedback provided by students was helpful in the sense that it was supportive and supplemented with recommendations on how to improve the work [10, 27]. Since we were not able to test students’ peer-feedback skills beforehand, we assume that the peer-feedback training with its clear instructions, examples and practice task supported students in providing valuable feedback [3].

Peer-feedback should be supported by the educational design of a course in such a way that it supports and guides students’ learning. To some extent design principles are context-dependent; however, we compiled a preliminary list of design guidelines to offer MOOC designers and teachers some insight and inspiration:

1. Providing feedback is a skill and thus should be seen as a learning goal students have to acquire. This implies that, if possible, peer-feedback should be repeated within a MOOC, starting early with relatively simple assignments and building up to more complex ones later in the course.

2. Peer-feedback training should not only focus on the course content but also on student perception. This means that training should not only explain and clarify the criteria and requirements but should also explain the real value of participating for students. A perfect design will not be seen as such as long as students are not aware of the personal value it has for them.

3. Providing feedback is a time-consuming activity and therefore should be used in moderation. When is peer-feedback needed and when does it become a burden? Ask students to provide feedback only when it adds value to their learning experience.

Although we were only able to conduct an explorative study, we see potential in the preliminary findings. To increase the value of our findings, our design will be tested in a forthcoming experimental study. In addition to self-reported student data, we will add a qualitative analysis of students’ peer-feedback performance by analyzing the correctness of the feedback and students’ perception of the received feedback. Moreover, learning analytics will provide more insight into student behaviour and the time students invest in the different peer-feedback activities.

References

Kulkarni, C., et al.: Peer and self-assessment in massive online classes. ACM Trans. Comput. Hum. Interact. 20, 33:1–31 (2013). https://doi.org/10.1145/2505057

Falakmasir, M.H., Ashely, K.D., Schunn, C.D.: Using argument diagramming to improve peer grading of writing assignments. In: Proceedings of the 1st workshop on Massive Open Online Courses at the 16th Annual Conference on Artificial Intelligence in Education, USA, pp. 41–48 (2013)

Yousef, A.M.F., Wahid, U., Chatti, M.A., Schroeder, U., Wosnitza, M.: The effect of peer assessment rubrics on learner’s satisfaction and performance within a blended MOOC environment. Paper presented at the 7th International Conference on Computer Supported Education, pp. 148–159 (2015). https://doi.org/10.5220/0005495501480159

Kasch, J., Van Rosmalen, P., Kalz, M.: A framework towards educational scalability of open online courses. J. Univ. Comput. Sci. 23(9), 770–800 (2017)

Boud, D., Molloy, E.: Rethinking models of feedback for learning: the challenge of design. Assess. Eval. High. Educ. 38, 698–712 (2013a). https://doi.org/10.1080/02602938.2012.691462

Boud, D., Molloy, E.: What is the problem with feedback? In: Boud, D., Molloy, E. (eds.) Feedback in Higher an Professional Education, pp. 1–10, Routledge, London (2013b)

Dowden, T., Pittaway, S., Yost, H., McCarthey, R.: Students’ perceptions of written feedback in teacher education: ideally feedback is a continuing two-way communication that encourages progress. Assess. Eval. High. Educ. 38, 349–362 (2013). https://doi.org/10.1080/02602938.2011.632676

Hattie, J., Timperley, H.: The power of feedback. Rev. Educ. Res. 77, 81–112 (2007)

Narciss, S., Huth, K.: How to design informative tutoring feedback for multimedia learning. In: Niegemann, H., Leutner, D., Brünken, R. (eds.) Instructional Design for Multimedia Learning, Münster, pp. 181–195 (2004)

Neubaum, G., Wichmann, A., Eimler, S.C., Krämer, N.C.: Investigating incentives for students to provide peer feedback in a semi-open online course: an experimental study. Comput. Uses Educ. 27–29 (2014). https://doi.org/10.1145/2641580.2641604

Crook, C., Gross, H., Dymott, R.: Assessment relationships in higher education: the tension of process and practice. Br. Edu. Res. J. 32, 95–114 (2006). https://doi.org/10.1080/01411920500402037

Patchan, M.M., Charney, D., Schunn, C.D.: A validation study of students’ end comments: comparing comments by students, a writing instructor and a content instructor. J. Writ. Res. 1, 124–152 (2009)

Rowe, A.: The personal dimension in teaching: why students value feedback. Int. J. Educ. Manag. 25, 343–360 (2011). https://doi.org/10.1108/09513541111136630

Topping, K.: Peer assessment between students in colleges and universities. Rev. Educ. Res. 68, 249–276 (1998). https://doi.org/10.3102/00346543068003249

Carless, D., Bridges, S.M., Chan, C.K.Y., Glofcheski, R.: Scaling up Assessment for Learning in Higher Education. Springer, Singapore (2017). https://doi.org/10.1007/978-981-10-3045-1

Nicol, D.J., Macfarlane-Dick, D.: Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Stud. High. Educ. 31(2), 199–218 (2006). https://doi.org/10.1080/03075070600572090

Jönsson, A., Svingby, G.: The use of scoring rubrics: reliability, validity and educational consequences. Educ. Res. Rev. 2, 130–144 (2007). https://doi.org/10.1016/j.edurev.2007.05.002

Jönsson, A., Panadero, E.: The use and design of rubrics to support assessment for learning. In: Carless, D., Bridges, S.M., Chan, C.K.Y., Glofcheski, R. (eds.) Scaling up Assessment for Learning in Higher Education. TEPA, vol. 5, pp. 99–111. Springer, Singapore (2017). https://doi.org/10.1007/978-981-10-3045-1_7

Hülsmann, T.: The impact of ICT on the cost and economics of distance education: a review of the literature, pp. 1–76. Commonwealth of Learning (2016)

Luo, H., Robinson, A.C., Park, J.Y.: Peer grading in a MOOC: reliability, validity, and perceived effects. Online Learn. 18(2) (2014). https://doi.org/10.24059/olj.v18i2.429

Zutshi, S., O’Hare, S., Rodafinos, A.: Experiences in MOOCs: the perspective of students. Am. J. Distance Educ. 27(4), 218–227 (2013). https://doi.org/10.1080/08923647.2013.838067

Liu, M., et al.: Understanding MOOCs as an emerging online learning tool: perspectives from the students. Am. J. Distance Educ. 28(3), 147–159 (2014). https://doi.org/10.1080/08923647.2014.926145

Suen, H.K., Pursel, B.K.: Scalable formative assessment in massive open online courses (MOOCs). Presentation at the Teaching and Learning with Technology Symposium, University Park, Pennsylvania, USA (2014)

Suen, H.K.: Peer assessment for massive open online courses (MOOCs). Int. Rev. Res. Open Distance Learn. 15(3) (2014)

McGarr, O., Clifford, A.M.: Just enough to make you take it seriously: exploring students’ attitudes towards peer assessment. High. Educ. 65, 677–693 (2013). https://doi.org/10.1007/s10734-012-9570-z

Fishbein, M., Ajzen, I.: Belief, Attitude, Intention, and Behavior: An Introduction to Theory and Research. Addison-Wesley, Reading (1975)

Kaufman, J.H., Schunn, C.D.: Students’ perceptions about peer assessment for writing: their origin and impact on revision work. Instr. Sci. 3, 387–406 (2010). https://doi.org/10.1007/s11251-010-9133-6

Acknowledgements

This work is financed via a grant by the Dutch National Initiative for Education Research (NRO)/The Netherlands Organisation for Scientific Research (NWO) and the Dutch Ministry of Education, Culture and Science under the grant nr. 405-15-705 (SOONER/http://sooner.nu).

Appendix 1

| Item | Pre-questionnaire item | M | SD | Item | Post-questionnaire item | M | SD |
|---|---|---|---|---|---|---|---|
| Willingness | | | | Willingness | | | |
| A1 | I am willing to provide feedback/comments on a peer’s assignment | 2.3 | 1.0 | PA1 | In the future I am willing to provide feedback/comments on a peer’s assignment | 2.3 | 1.2 |
| A2 | I am willing to take part in learning activities that explain the peer-feedback process | 2.0 | 1.2 | PA2 | In the future I am willing to take part in learning activities that explain the peer-feedback process | 2.7 | 0.5 |
| Usefulness | | | | Usefulness | | | |
| B1 | I find it useful to participate in a peer-feedback activity | 2.2 | 0.9 | PB1 | I found it useful to participate in a peer-feedback activity | 2.7 | 0.5 |
| B2 | I find it useful to read the feedback comments from my peers | 2.3 | 1.0 | PB2 | I found it useful to read the feedback/comments from my peer | 2.5 | 0.5 |
| B3 | I find it useful to receive instructions/training on how to provide feedback | 2.1 | 1.0 | PB3 | I found it useful to receive instructions/training on how to provide feedback | 2.7 | 0.8 |
| | | | | PB4 | I found it useful to see in the DPSIR peer-feedback training how an expert would review a DPSIR scheme | 2.5 | 1.2 |
| | | | | PB5 | The examples and exercises of the DPSIR peer-feedback training helped me to provide peer-feedback in the MOOC | 2.7 | 0.5 |
| Preparedness | | | | Preparedness | | | |
| C1 | I feel confident to provide feedback/comments on a peer’s assignment | 1.9 | 1.5 | PC1 | I felt confident to provide feedback/comments on a peer’s assignment | 2.3 | 1.2 |
| C2 | I find it important to be prepared with information and examples/exercises, before providing a peer with feedback comments | 1.9 | 1.5 | PC2 | I found it important to be prepared before providing a peer with feedback/comments | 2.0 | 1.3 |
| | | | | PC3 | I felt prepared to give feedback and recommendations after having participated in the DPSIR peer-feedback training | 2.3 | 1.2 |
| | | | | PC4 | I felt that the DPSIR peer-feedback training provided enough examples and instruction on how to provide feedback | 2.3 | 0.8 |
| | | | | PC5 | The DPSIR peer-feedback training improved my performance in the final assignment | 1.3 | 1.5 |
| General attitude | | | | General attitude | | | |
| D1 | Students should receive instructions and/or training in how to provide peer-feedback | 2.0 | 1.2 | PD1 | Students should receive instructions and/or training in how to provide peer-feedback | 2.3 | 1.2 |
| D2 | Peer-feedback should be a part of each MOOC | 1.7 | 1.3 | PD2 | Peer-feedback should be part of each MOOC | 3.0 | 0.0 |
| D3 | Students should explain their provided feedback | 1.9 | 1.1 | PD3 | Students should explain their provided feedback | 2.3 | 0.8 |
| D4 | Peer-feedback training should be part of each MOOC | 1.4 | 1.6 | PD4 | Peer-feedback training should be part of each MOOC | 2.7 | 0.5 |
| D5 | Peer-feedback gives me insight in my performance as | −0.1 | 1.9 | PD5 | Peer-feedback gave me insight in my performance as | −0.7 | 1.2 |

Pre- and post-questionnaire results, with N = 18 for the pre-questionnaire and N = 6 for the post-questionnaire. Students expressed their agreement on a scale running from 3 (Agree) through 0 (Neither agree nor disagree) to −3 (Disagree). Items D5 and PD5 are the exception: they used a different scale, ranging from −3 (a professional) to 3 (a MOOC student).
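As a reading aid for the M and SD columns, the following minimal sketch shows how such values could be computed from responses on the −3 to 3 scale. The raw responses are not part of this chapter, so both response vectors are assumptions: they presume integer answers, six respondents per post-questionnaire item, and the sample standard deviation (n − 1 denominator). Under the integer-answer assumption, PD2 (M = 3.0, SD = 0.0) can only arise if all six respondents chose 3. The second vector is purely illustrative; that it yields M = 2.7 and SD = 0.5 does not establish that these were the actual answers to any item.

```python
from statistics import fmean, stdev


def describe(responses):
    """Return (mean, sample SD), each rounded to one decimal as in the table."""
    return round(fmean(responses), 1), round(stdev(responses), 1)


# Assumed responses for PD2: with integer answers and a maximum of 3,
# a mean of 3.0 over six respondents requires every answer to be 3.
pd2_responses = [3, 3, 3, 3, 3, 3]
pd2_summary = describe(pd2_responses)

# Hypothetical vector (NOT the study's data): four answers of 3 and two of 2.
illustrative_responses = [3, 3, 3, 3, 2, 2]
illustrative_summary = describe(illustrative_responses)

print(pd2_summary)           # (3.0, 0.0)
print(illustrative_summary)  # (2.7, 0.5)
```

With only six post-questionnaire respondents, a single differing answer shifts both statistics noticeably, which is one reason to read the post-questionnaire values as indicative only.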

Rights and permissions

Open Access. This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2018 The Author(s)

About this paper

Cite this paper

Kasch, J., van Rosmalen, P., Löhr, A., Ragas, A., Kalz, M. (2018). Student Perception of Scalable Peer-Feedback Design in Massive Open Online Courses. In: Ras, E., Guerrero Roldán, A. (eds) Technology Enhanced Assessment. TEA 2017. Communications in Computer and Information Science, vol 829. Springer, Cham. https://doi.org/10.1007/978-3-319-97807-9_5

DOI: https://doi.org/10.1007/978-3-319-97807-9_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-97806-2

Online ISBN: 978-3-319-97807-9

eBook Packages: Computer Science, Computer Science (R0)