Abstract

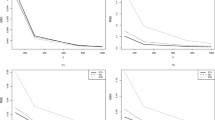

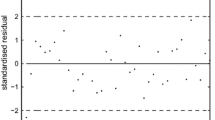

In this paper we present several diagnostic measures for the class of nonparametric regression models with symmetric random errors, whose error distribution may be any continuous symmetric distribution. In particular, we derive several diagnostic measures of global influence, such as residuals, leverage values, Cook’s distance and the influence measure proposed by Peña (Technometrics 47(1):1–12, 2005), which quantifies how much each observation is influenced by the rest of the observations. A simulation study evaluating the effectiveness of these diagnostic measures is presented. In addition, we derive local influence measures to assess the sensitivity of the maximum penalized likelihood estimator of the smooth function. Finally, an example with real data is given for illustration.
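The local influence analysis mentioned above builds on Cook (1986). As a minimal sketch, assuming the Hessian \({\mathbf {L}}_{\mathrm {p}}\) of the penalized log-likelihood at the estimate and the \(p^{*} \times n\) perturbation matrix \(\varvec{\Delta }\) (column \(i\) equal to \(\partial ^{2} L_{\mathrm {p}} / \partial \varvec{\theta }\, \partial \omega _{i}\) at \(\varvec{\omega }_{0}\)) are already available, the code below evaluates the normal curvature in the direction of each unit vector, \(C_{i} = 2 |\varvec{\Delta }_{i}^{T} {\mathbf {L}}_{\mathrm {p}}^{-1} \varvec{\Delta }_{i}|\), often called the total local influence of observation \(i\). This is one common summary, not necessarily the exact measure reported in the paper, and the function names `solve` and `total_local_influence` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy; inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("Hessian is numerically singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def total_local_influence(hessian: Matrix, delta: Matrix) -> List[float]:
    """C_i = 2 |Delta_i^T L^{-1} Delta_i| for every column Delta_i of delta.

    hessian: p* x p* Hessian of the penalized log-likelihood at the estimate.
    delta:   p* x n matrix whose column i is d^2 L_p / (d theta d omega_i) at omega_0.
    """
    p, n = len(delta), len(delta[0])
    c = []
    for i in range(n):
        d_i = [delta[k][i] for k in range(p)]
        x = solve(hessian, d_i)  # x = L^{-1} Delta_i
        c.append(2.0 * abs(sum(u * v for u, v in zip(d_i, x))))
    return c


if __name__ == "__main__":
    # Toy example: diagonal (negative definite) Hessian, three observations.
    print(total_local_influence([[-2.0, 0.0], [0.0, -4.0]],
                                [[1.0, 0.0, 2.0], [0.0, 2.0, 2.0]]))
    # prints [1.0, 2.0, 6.0]
```

A dense elimination routine is used only to keep the sketch dependency-free; for a large \(p^{*}\) a linear-algebra library would be the natural choice.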


References

Akaike H (1973) Information theory and an extension of the maximum likelihood principle. In: Second international symposium on information theory, pp 267–281

de Boor C (1978) A practical guide to splines. Springer-Verlag, Berlin

Buja A, Hastie T, Tibshirani R (1989) Linear smoothers and additive models. Ann Stat 17:453–510

Cook RD (1977) Detection of influential observation in linear regression. Technometrics 19(1):15–18

Cook RD (1986) Assessment of local influence. J Roy Stat Soc: Ser B (Methodol) 48(2):133–155

Cook RD, Weisberg S (1982) Residuals and influence in regression. Chapman and Hall, New York

Craven P, Wahba G (1978) Smoothing noisy data with spline functions. Numer Math 31(4):377–403

Dunn P, Smyth G (1996) Randomized quantile residuals. J Comput Graph Stat 5(3):236–244

Eilers P, Marx B (1996) Flexible smoothing with B-splines and penalties. Stat Sci 11:89–121

Emami H (2018) Local influence for Liu estimators in semiparametric linear models. Stat Pap 59(2):529–544

Eubank R (1984) The hat matrix for smoothing splines. Stat Probab Lett 2(1):9–14

Eubank R (1985) Diagnostics for smoothing splines. J Roy Stat Soc: Ser B (Methodol) 47:332–341

Eubank R, Gunst R (1986) Diagnostics for penalized least-squares estimators. Stat Probab Lett 4(5):265–272

Eubank RL, Thomas W (1993) Detecting heteroscedasticity in nonparametric regression. J Roy Stat Soc: Ser B (Methodol) 55(1):145–155

Fang K, Kotz S, Ng KW (1990) Symmetric multivariate and related distributions. Chapman and Hall, London

Ferreira CS, Paula GA (2017) Estimation and diagnostic for skew-normal partially linear models. J Appl Stat 44(16):3033–3053

Fung WK, Zhu ZY, Wei BC, He X (2002) Influence diagnostics and outlier tests for semiparametric mixed models. J R Stat Soc Ser B (Statistical Methodology) 64(3):565–579

Green PJ, Silverman BW (1993) Nonparametric regression and generalized linear models: a roughness penalty approach. CRC Press, Boca Raton

Hastie T, Tibshirani R (1990) Generalized additive models, vol 43. CRC Press, Boca Raton

Huber PJ (2004) Robust statistics, vol 523. John Wiley & Sons, New Jersey

Ibacache-Pulgar G, Paula GA (2011) Local influence for Student-t partially linear models. Comput Stat Data Anal 55(3):1462–1478

Ibacache-Pulgar G, Paula GA, Galea M (2012) Influence diagnostics for elliptical semiparametric mixed models. Stat Model 12(2):165–193

Ibacache-Pulgar G, Paula GA, Cysneiros FJA (2013) Semiparametric additive models under symmetric distributions. TEST 22(1):103–121

Kim C (1996) Cook’s distance in spline smoothing. Stat Probab Lett 31(2):139–144

Kim C, Park BU, Kim W (2002) Influence diagnostics in semiparametric regression models. Stat Probab Lett 60(1):49–58

Peña D (2005) A new statistic for influence in linear regression. Technometrics 47(1):1–12

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6(2):461–464

Silverman BW (1985) Some aspects of the spline smoothing approach to non-parametric regression curve fitting. J Roy Stat Soc: Ser B (Methodol) 47(1):1–21

Speckman P (1988) Kernel smoothing in partial linear models. J Roy Stat Soc: Ser B (Methodol) 50(3):413–436

Thomas W (1991) Influence diagnostics for the cross-validated smoothing parameter in spline smoothing. J Am Stat Assoc 86(415):693–698

Türkan S, Toktamis Ö (2013) Detection of influential observations in semiparametric regression model. Revista Colombiana de Estadística 36(2):271–284

Vanegas L, Paula G (2016) An extension of log-symmetric regression models: R codes and applications. J Stat Comput Simul 86(9):1709–1735

Wahba G (1983) Bayesian “confidence intervals” for the cross-validated smoothing spline. J Roy Stat Soc: Ser B (Methodol) 45(1):133–150

Wei WH (2004) Derivatives diagnostics and robustness for smoothing splines. Comput Stat Data Anal 46(2):335–356

Wood SN (2003) Thin plate regression splines. J R Stat Soc Ser B (Statistical Methodology) 65(1):95–114

Zhang D, Lin X, Raz J, Sowers M (1998) Semiparametric stochastic mixed models for longitudinal data. J Am Stat Assoc 93(442):710–719

Zhu ZY, He X, Fung WK (2003) Local influence analysis for penalized Gaussian likelihood estimators in partially linear models. Scand J Stat 30(4):767–780

Acknowledgements

This research was funded by FONDECYT grant 11130704 (Chile) and by grant DID S-2017-32 of the Universidad Austral de Chile.


Appendix

1.1 Hessian matrix

Let \({\mathbf {L}}_{\mathrm {p}}\left( p^{*} \times p^{*}\right)\) be the Hessian matrix with \(\left( j^{*}, \ell ^{*}\right)\)-element given by \(\partial ^{2} L_{\mathrm {p}}(\varvec{\theta }, \varvec{\alpha }) / \partial \theta _{j^{*}} \partial \theta _{\ell ^{*}}\), for \(j^{*}, \ell ^{*}=1, \ldots , p^{*}\). After some algebraic manipulation we find

where \({\mathbf {D}}_{a}={\mathrm {diag}}_{1 \le i \le n}\left( a_{i}\right)\), \({\mathbf {D}}_{\zeta ^{\prime }}={\mathrm {diag}}_{1 \le i \le n}\left( \zeta _{i}^{\prime }\right)\), \({\mathbf {b}}=\left( b_{1}, \ldots , b_{n}\right) ^{T}\), \(\varvec{\delta }=\left( \delta _{1}, \ldots , \delta _{n}\right) ^{T}\) and \(\varvec{\epsilon } = \left( \epsilon _{1}, \ldots , \epsilon _{n}\right) ^{T}\), with \(a_{i}=-2\left( \zeta _{i}+2 \zeta _{i}^{\prime } \delta _{i}\right)\), \(b_{i}=\left( \zeta _{i}+\zeta _{i}^{\prime } \delta _{i}\right) \epsilon _{i}\), \(\epsilon _{i} = y_{i} - \mu _{i}\), \(\zeta _{i}^{\prime }=\frac{d \zeta _{i}}{d \delta _{i}}\) and \(\delta _{i}=\phi ^{-1} \epsilon _{i}^{2}\), for \(i=1, \ldots , n\).
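The weights \(\delta _{i}\), \(a_{i}\) and \(b_{i}\) defined above can be computed directly once \(\zeta _{i}\) is specified. Because \(\zeta _{i}\) is not defined in this part of the appendix, the sketch below assumes \(\zeta _{i} = -2\, g^{\prime }(\delta _{i}) / g(\delta _{i})\); under that assumption the normal model gives \(\zeta _{i} = 1\) and the Student-t model with \(\nu\) degrees of freedom gives \(\zeta _{i} = (\nu + 1)/(\nu + \delta _{i})\). The helper names `hessian_weights`, `normal_zeta` and `student_t_zeta` are hypothetical.

```python
from typing import Callable, List, Tuple

Fn = Callable[[float], float]


def hessian_weights(y: List[float], mu: List[float], phi: float,
                    zeta: Fn, dzeta: Fn) -> Tuple[List[float], List[float], List[float]]:
    """Return (delta, a, b) with delta_i = eps_i^2 / phi,
    a_i = -2 (zeta_i + 2 zeta_i' delta_i) and b_i = (zeta_i + zeta_i' delta_i) eps_i."""
    delta, a, b = [], [], []
    for yi, mi in zip(y, mu):
        eps = yi - mi                 # epsilon_i = y_i - mu_i
        d = eps * eps / phi           # delta_i = phi^{-1} epsilon_i^2
        z, dz = zeta(d), dzeta(d)     # zeta_i and zeta_i' = d zeta_i / d delta_i
        delta.append(d)
        a.append(-2.0 * (z + 2.0 * dz * d))
        b.append((z + dz * d) * eps)
    return delta, a, b


# ASSUMPTION: zeta(delta) = -2 g'(delta) / g(delta). The two pairs below are the
# resulting (zeta, d zeta / d delta) for the normal and Student-t members of the class.
def normal_zeta() -> Tuple[Fn, Fn]:
    return (lambda d: 1.0, lambda d: 0.0)


def student_t_zeta(nu: float) -> Tuple[Fn, Fn]:
    return (lambda d: (nu + 1.0) / (nu + d),
            lambda d: -(nu + 1.0) / (nu + d) ** 2)


if __name__ == "__main__":
    z, dz = student_t_zeta(4.0)
    print(hessian_weights([1.0, 3.0], [0.0, 1.0], 1.0, z, dz))
```

In the normal case the sketch reduces to \(a_{i} = -2\) and \(b_{i} = \epsilon _{i}\), which is a quick sanity check on any implementation of these weights.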

1.1.1 Case-weight perturbation

Consider the case-weight perturbation of the observations in the penalized log-likelihood function, given by

where \(\varvec{\omega }=\left( \omega _{1}, \ldots , \omega _{n}\right) ^{T}\) is the vector of weights, with \(0 \le \omega _{i} \le 1\), for \(i=1, \ldots , n\). In this case, the vector of no perturbation is given by \(\varvec{\omega }_{0}=(1, \ldots , 1)^{T}\). Differentiating \(L_{\mathrm {p}}(\varvec{\theta }, \alpha | \varvec{\omega })\) with respect to the elements of \(\varvec{\theta }\) and \(\omega _{i}\), we obtain, after some algebraic manipulation,

where \(\widehat{\epsilon }_{i} = y_{i} - \widehat{\mu }_{i}\), for \(i=1, \ldots , n\).
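As a minimal sketch of the case-weight scheme, assume the perturbed function has the usual form \(\sum _{i} \omega _{i} L_{i}(\varvec{\theta })\) minus an unperturbed penalty, with \(L_{i} = -\tfrac{1}{2}\log \phi + \log g(\delta _{i})\) and \(\zeta _{i} = -2\, g^{\prime }(\delta _{i})/g(\delta _{i})\). Then \(\partial ^{2} L_{\mathrm {p}} / \partial \varvec{\theta }\, \partial \omega _{i}\) at \(\varvec{\omega }_{0}\) reduces to \(\partial L_{i} / \partial \varvec{\theta }\), whose components with respect to \(\mu _{i}\) and \(\phi\) are \(\zeta _{i} \widehat{\epsilon }_{i} / \phi\) and \((\zeta _{i} \widehat{\delta }_{i} - 1)/(2\phi )\). These expressions are derived under the stated assumptions rather than copied from the display above, mapping the \(\mu _{i}\) component onto the smooth-function coefficients needs the chain rule through the model for \(\mu _{i}\) (not shown here), and `case_weight_components` is a hypothetical name.

```python
from typing import List, Tuple


def case_weight_components(eps: List[float], phi: float,
                           zeta: List[float]) -> List[Tuple[float, float]]:
    """For each i, return the (mu_i, phi) components of d^2 L_p / (d theta d omega_i)
    at omega_0 = (1, ..., 1).

    ASSUMPTIONS: perturbed function = sum_i omega_i L_i - penalty (penalty unperturbed),
    L_i = -log(phi)/2 + log g(delta_i), zeta_i = -2 g'(delta_i) / g(delta_i),
    all evaluated at the fitted values (eps_i = y_i - fitted mu_i).
    """
    out = []
    for e, z in zip(eps, zeta):
        d = e * e / phi                       # fitted delta_i
        d_mu = z * e / phi                    # dL_i / dmu_i
        d_phi = (z * d - 1.0) / (2.0 * phi)   # dL_i / dphi
        out.append((d_mu, d_phi))
    return out
```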

1.1.2 Scale perturbation

Under the scale perturbation scheme, it is assumed that \({\mathrm {y}}_{i} \sim S(\mu _{i}, \omega _{i}^{-1} \phi , g )\), where \(\varvec{\omega }=\left( \omega _{1}, \ldots , \omega _{n}\right) ^{T}\) is the vector of perturbations, with \(\omega _{i}>0\), for \(i=1, \ldots , n\). In this case, the vector of no perturbation is given by \(\varvec{\omega }_{0}=(1, \ldots , 1)^{T}\), so that \(L_{\mathrm {p}}(\varvec{\theta }, \alpha | \varvec{\omega }_{0})=L_{\mathrm {p}}(\varvec{\theta }, \alpha )\). Differentiating \(L_{\mathrm {p}}(\varvec{\theta }, \alpha | \varvec{\omega })\) with respect to the elements of \(\varvec{\theta }\) and \(\omega _{i}\), we obtain, after some algebraic manipulation,

where \(\widehat{\epsilon }_{i} = y_{i} - \widehat{\mu }_{i}\), for \(i=1, \ldots , n\).
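Under the scale scheme, replacing \(\phi\) by \(\omega _{i}^{-1}\phi\) gives \(L_{i}(\omega _{i}) = -\tfrac{1}{2}\log \phi + \tfrac{1}{2}\log \omega _{i} + \log g(\omega _{i}\delta _{i})\). Assuming again \(\zeta _{i} = -2\, g^{\prime }(\delta _{i})/g(\delta _{i})\), differentiating with respect to \(\omega _{i}\) and then \(\mu _{i}\) or \(\phi\) at \(\varvec{\omega }_{0}\) yields the components \(b_{i}/\phi\) and \(b_{i}\widehat{\epsilon }_{i}/(2\phi ^{2})\), with \(b_{i}\) as in Section 1.1 evaluated at the fitted values. The sketch below is derived under that assumption, not transcribed from the display above, and `scale_components` is a hypothetical name.

```python
from typing import List, Tuple


def scale_components(eps: List[float], phi: float, zeta: List[float],
                     dzeta: List[float]) -> List[Tuple[float, float]]:
    """For each i, return the (mu_i, phi) components of d^2 L_p / (d theta d omega_i)
    at omega_0 = (1, ..., 1) under y_i ~ S(mu_i, phi / omega_i, g).

    ASSUMPTION: zeta_i = -2 g'(delta_i) / g(delta_i); dzeta_i = d zeta_i / d delta_i.
    """
    out = []
    for e, z, dz in zip(eps, zeta, dzeta):
        d = e * e / phi            # fitted delta_i
        b = (z + dz * d) * e       # b_i as defined in Section 1.1
        out.append((b / phi, b * e / (2.0 * phi * phi)))
    return out
```

For the normal model (\(\zeta _{i}=1\), \(\zeta _{i}^{\prime }=0\)) the components simplify to \(\widehat{\epsilon }_{i}/\phi\) and \(\widehat{\epsilon }_{i}^{2}/(2\phi ^{2})\).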

1.1.3 Response variable perturbation

To perturb the values of the response variable, we consider \(y_{i \omega }=y_{i}+\omega _{i}\), for \(i=1, \ldots , n\), where \(\varvec{\omega }=\left( \omega _{1}, \ldots , \omega _{n}\right) ^{T}\) is the vector of perturbations. Here, the vector of no perturbation is given by \(\varvec{\omega }_{0}=(0, \ldots , 0)^{T}\), and the perturbed penalized log-likelihood function is constructed from (5) with \({\mathrm {y}}_{i}\) replaced by \({\mathrm {y}}_{i \omega }\), that is,

where \(L(\cdot )\) is given by (4) with \(\delta _{i \omega }=\phi ^{-1}\left( {\mathrm {y}}_{i \omega }-\mu _{i}\right) ^{2}\) in place of \(\delta _{i}\). Differentiating \(L_{\mathrm {p}}(\varvec{\theta }, \alpha | \varvec{\omega })\) with respect to the elements of \(\varvec{\theta }\) and \(\omega _{i}\), we obtain, after some algebraic manipulation,

where \(\widehat{\epsilon }_{i} = y_{i} - \widehat{\mu }_{i}\), for \(i=1, \ldots , n\).
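For the response perturbation \(y_{i\omega } = y_{i} + \omega _{i}\), the same assumptions (\(L_{i} = -\tfrac{1}{2}\log \phi + \log g(\delta _{i\omega })\) and \(\zeta _{i} = -2\, g^{\prime }(\delta _{i})/g(\delta _{i})\)) give, at \(\varvec{\omega }_{0} = (0, \ldots , 0)^{T}\), the components \(-a_{i}/(2\phi ) = (\zeta _{i} + 2\zeta _{i}^{\prime }\delta _{i})/\phi\) with respect to \(\mu _{i}\) and \(b_{i}/\phi ^{2}\) with respect to \(\phi\), where \(a_{i}\) and \(b_{i}\) are as in Section 1.1 evaluated at the fitted values. This is a sketch under those assumptions; `response_components` is a hypothetical name.

```python
from typing import List, Tuple


def response_components(eps: List[float], phi: float, zeta: List[float],
                        dzeta: List[float]) -> List[Tuple[float, float]]:
    """For each i, return the (mu_i, phi) components of d^2 L_p / (d theta d omega_i)
    at omega_0 = (0, ..., 0) under y_{i omega} = y_i + omega_i.

    ASSUMPTION: zeta_i = -2 g'(delta_i) / g(delta_i); dzeta_i = d zeta_i / d delta_i.
    """
    out = []
    for e, z, dz in zip(eps, zeta, dzeta):
        d = e * e / phi                  # fitted delta_i
        a = -2.0 * (z + 2.0 * dz * d)    # a_i as defined in Section 1.1
        b = (z + dz * d) * e             # b_i as defined in Section 1.1
        out.append((-a / (2.0 * phi), b / (phi * phi)))
    return out
```

In the normal case the \(\mu _{i}\) component is the constant \(1/\phi\), so under this scheme differences in influence across observations enter only through the model structure and the \(\phi\) component.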


Cite this article

Ibacache-Pulgar, G., Villegas, C., López-Gonzales, J.L. et al. Influence measures in nonparametric regression model with symmetric random errors. Stat Methods Appl 32, 1–25 (2023). https://doi.org/10.1007/s10260-022-00648-z
