Abstract

A family of measures of a journal’s impact is considered that takes account of the dispersion, as well as the mean, of the number of citations in a journal. These measures, called the Mean Dispersion Indices (MDI), generalize the stabilized-JIF recently introduced by Lando and Bertoli-Barsotti (J Informetr 11(3):689–703, 2017). The MDI are weighted geometric averages of the mean number of citations and one minus the Gini coefficient, where the Gini coefficient measures the dispersion of the number of citations. Journal rankings based on these measures are compared with those obtained from the Journal Impact Factor, the Scimago Journal Rank, the h-index, and the Eigenfactor, four of the best-known current impact measures. This comparison suggests that the different rankings may implicitly place different weights on dispersion and on the average number of citations, and that some appear to show little correlation with dispersion.


Introduction

The citation analysis of journals has become increasingly popular in the last two decades. A simple Google Scholar search for the word “bibliometrics” returns almost 60,000 results since 2000 alone. In the current academic atmosphere, citation data is used as a measure of a journal’s value, an institution’s prestige, and even a scholar’s contribution to his or her field. Citation analysis influences journal submissions as well as hiring and funding decisions in many disciplines. Interest in citation analysis has therefore been both interdisciplinary and personally important for many scholars since Garfield and Sher (1963) introduced the Journal Impact Factor (JIF). This was preceded by Garfield’s development of the Genetics Citation Index and the Science Citation Index (Garfield 1955, 2006). In Science, Garfield (1972) described the importance and feasibility of evaluating journals through citation analysis. Many papers, including Garfield (2006), acknowledge drawbacks of the JIF, but Garfield concludes that there is still nothing better. Since then, scholars have introduced many additional impact measures: the h-index (Hirsch 2005), the Eigenfactor (Bergstrom 2007), the Scimago Journal Rank (SJR) (González-Pereira et al. 2010), the Source-Normalized Impact per Paper (Moed 2010), and many variations of the h-index (Bar-Ilan 2008). An important part of bibliometrics is identifying the purpose of a metric. Each of the above metrics is intended to measure the impact of a journal, the impact of the average article in a journal, or the impact of an individual author. As Mike Thelwall writes, “Citation counts do not measure research impact under any reasonable definition of impact. Instead they are indicators of research impact or quality in the sense that… they tend to correlate positively and statistically significantly with human judgements of impact or quality” (Thelwall 2016).
He later concludes that any index with “significant positive correlation with human judgements” could be useful in supporting peer review. With this in mind, we focus on the JIF, SJR, h-index, and Eigenfactor.Footnote 1

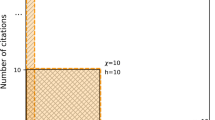

The JIF is the ratio of the number of citations received in a given year by items published in the two preceding years to the number of citable items published in those 2 years. For example, the JIF of the Journal of Finance (JoF) in 2014 is \(\text{JIF}_{2014} = \frac{\#\,\text{citations in 2014 to JoF items published in 2012 and 2013}}{\#\,\text{citable JoF items published in 2012 and 2013}}\). The SJR uses a 3-year window rather than the JIF’s 2-year window. It adjusts for the size of a journal and weights citations by the impact of the citing journal, using connectedness as defined by the graph structure of the academic citation network (González-Pereira et al. 2010). The Eigenfactor uses methods similar to Google’s PageRank: it reflects how often a researcher would read the given journal if he or she moved through the literature by following citations at random. A journal has an h-index of h if h of its N papers have at least h citations each and the other \(N - h\) papers have no more than h citations each.
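As a minimal sketch of the 2-year JIF ratio described above, the Python function below takes per-year totals as plain dictionaries. The function name and all counts are hypothetical and chosen only for illustration; they are not Journal of Finance data, and the sketch ignores the question of which document types count as citable items.

```python
def journal_impact_factor(citations_received, citable_items):
    """Two-year JIF: citations received in the target year by items published
    in the two preceding years, divided by the citable items from those years.

    Both arguments map a publication year to a count (hypothetical inputs).
    """
    if citations_received.keys() != citable_items.keys():
        raise ValueError("both inputs must cover the same publication years")
    total_items = sum(citable_items.values())
    if total_items == 0:
        raise ValueError("no citable items in the window")
    return sum(citations_received.values()) / total_items


# Hypothetical counts for a 2014 JIF (not real journal data).
citations_in_2014 = {2012: 500, 2013: 300}  # citations received during 2014
citable_items = {2012: 100, 2013: 100}      # citable items published each year
print(journal_impact_factor(citations_in_2014, citable_items))  # 4.0
```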

Each of these metrics has attributes that make it attractive for different reasons. Each of the newer measures has been proposed as a potential replacement for the JIF, but each also has drawbacks of its own. The following are limitations of some of the prominent measures (Leydesdorff 2008):

- The JIF’s 2-year time window can be limiting in many fields, especially the social sciences.

- The JIF is useful for comparing journals within a discipline, but cannot be used across disciplines because citation habits differ.

- The JIF is limited to publications in the Web of Science database published by Clarivate Analytics, which excludes many other journals of varying importance in their respective fields.

- The JIF can be skewed by a few highly cited papers in a journal.

- The h-index guarantees only that a journal has at least \(h^{2}\) total citations, and therefore may leave out information that could be valuable (Harzing and van der Wal 2009).

- The h-index and the Eigenfactor are sensitive to journal size. The h-index is non-decreasing as articles are added, and the Eigenfactor will show a higher probability of reading a journal if more articles are associated with it.
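The two h-index limitations above can be checked with a short Python sketch. The citation lists are hypothetical. The example shows that two journals with the same h can have very different citation totals (only \(h^{2}\) citations are guaranteed), and that adding articles can raise h but never lower it.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    h = 0
    for position, count in enumerate(sorted(citations, reverse=True), start=1):
        if count < position:
            break
        h = position
    return h


# Hypothetical journals with the same h-index but very different totals.
even = [3, 3, 3]
skewed = [300, 3, 3]
print(h_index(even), sum(even))      # 3 9: exactly h**2 citations
print(h_index(skewed), sum(skewed))  # 3 306: h ignores the extra citations

# Adding articles can raise h but never lower it (size sensitivity).
papers = [25, 8, 5, 3, 3, 1, 0]
print(h_index(papers))            # 3
print(h_index(papers + [0, 0]))   # 3: uncited additions leave h unchanged
print(h_index(papers + [10]))     # 4
```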

Leydesdorff (2009) compares PageRank-based methods, the h-index, the SJR, the JIF, and other measures based on their correlation with size and “impact.” He found that PageRank methods typically place greater importance on the number of articles in each journal, while the SJR and the JIF account for size and thus correlate better with impact. The h-index, on the other hand, correlates about equally with both. As Thelwall (2016) states, “All citation-based indicators have problems.” One problem Thelwall discusses is the averaging of citations: averaging may discourage journals from publishing many articles, because less-cited articles pull the average down. Many critics of averaging have suggested the median or other criteria instead. Our purpose is therefore to propose another factor that contributes toward measuring a journal’s impact: the dispersion of the number of citations across the articles in a journal. The dispersion of citations helps to show how representative the average article in a journal is. A low level of dispersion suggests that the articles are of similar quality or impact; if, in addition, the mean is high (low), then the journal has many good (questionable) articles. Conversely, a high level of dispersion suggests that the journal has articles of unequal quality or impact. If the distribution of citations is skewed, one might want to consider the median rather than the mean.
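To make the point concrete, the Python sketch below compares two hypothetical journals (the citation counts are invented for illustration) whose articles have the same mean citation count but very different dispersion. The mean cannot tell them apart, whereas the median reflects that most of the second journal’s articles are rarely cited.

```python
from statistics import mean, median

# Hypothetical citation counts for five articles in each journal.
even_journal = [4, 5, 5, 6, 5]     # articles of similar impact
skewed_journal = [0, 0, 1, 2, 22]  # one highly cited article, the rest rarely cited

for name, counts in (("even", even_journal), ("skewed", skewed_journal)):
    print(name, "mean:", mean(counts), "median:", median(counts))
# Both means equal 5; the medians are 5 and 1.
```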

Lando and Bertoli-Barsotti (2017) propose an improvement on the JIF called the stabilized-JIF, for which they provide the theoretical justification using generalized Lorenz curves (GLC). They suggest that it improves the JIF because of “(1) its mathematical simplicity (it is a simple function of two basic indices); (2) its powerful graphical interpretation (namely, the area under the GLC); and (3) its interpretation (namely an average partial JIF).” We support the use of this index, and generalize it into a family of indices in this paper.

The “Data” section reviews the data used in this paper. “The mean dispersion indices (MDI)” section describes the methods used to evaluate and analyze the Mean Dispersion Indices. The “Comparison of current ranking systems” section illustrates the similarities and differences in the ranking structures when compared with the dispersion of citations, and the “Conclusion” section concludes.

Data

Data sources have also been a topic of great interest. In the early stages of Scopus and Google Scholar, Bar-Ilan (2008) found that Google Scholar rankings varied greatly from those using the Scopus and Web of Science databases, whereas rankings based on the Scopus and Web of Science databases appeared to match in most categories, with a few exceptions.Footnote 2 In a more recent study, Harzing (2013) found that Google Scholar rankings had greatly improved in stability and were gaining coverage across all disciplines. Harzing and van der Wal (2008) acknowledge that there are some drawbacks to using Google Scholar as a source, but argue that because it includes non-source citations it better measures the impact of a journal. That being said, both the JIF and the Eigenfactor use the Clarivate AnalyticsFootnote 3 Web of Science database, while the h-index typically uses Google Scholar and the SJR uses the Scopus database. Since two of the four indices typically use Web of Science data, this paper uses Web of Science data as well. Though the h-index is often calculated using Google Scholar data, Clarivate Analytics now includes the h-index in its citation report, so this paper uses that h-index to keep the data as consistent as possible. The methods in this paper, however, could be applied just as easily to any data source; the focus of this paper is not the source of the data but how to use it.

The Web of Science database is provided by Clarivate Analytics and is used as part of the calculations to produce the JIF. According to the Clarivate Analytics website, the “Web of Science Core Collection is optimized for linking cited references to source items, a precise point-to-point connection. It is an article-level product, built on and built by article characteristics and article-to-article relationships. Journal Citation Reports, on the other hand, aggregates citations at the journal level, using any and all variants of the journal’s title that occur in a citation, regardless of whether or not the citation has been linked to a specific source item in the journal.” It is therefore important to recognize that calculations done using the Web of Science database will not match the JIF calculations, because citations to the journal as a whole are not counted. This paper views this as an advantage. When citations are counted only for specific published articles, it is much easier to see the impact a journal’s publications are having at the article level, which may facilitate author or institution analysis. Using citations to articles alone ensures that only the quality of research as perceived by the academic community contributes to the influence of the journal. Still, researchers should know exactly what a given citation metric is analyzing.

We started from the 344 journals included in the Economics section of the 2015 Journal Citation Reports published by Clarivate Analytics. We retrieved all publications labeled “Articles” or “Proceedings Papers” from these 344 journals through the Web of Science database for the years 2009 through 2015, which allows us to compare the JIFs for 2012 through 2015. This yields over 100,000 articles with citation data over those 7 years. We then restricted the sample to the top 100 journals by JIF and, from that list, to the journals for which the SJR, h-index, and Eigenfactor are also reported. This leaves the 56 journals listed in the “Appendix” section.

A major question that arises with impact factors is the time frame. The JIF limits its analysis to the 2 years before the target year, as shown above. This choice causes major variations across disciplines because the social sciences have much longer citation half-lives than other fields. However, citation habits vary by more than just the time frame, so interdisciplinary comparisons are largely unreliable regardless of the window. Within a given field, the selected time frame has very little impact on journal rankings (Garfield 1998). We therefore restrict the summary statistic calculations to the 2 years prior to the stated year and report some summary statistics in Table 1. Using the same time frame, we calculated the mean number of citations over those 2 years and summarize the means in Table 2; the median number of citations is summarized in Table 3 using the same method. We similarly gathered the SJR values from the official Scimago Journal Rank website and the Eigenfactor values from eigenfactor.org, which uses the Web of Science database. The h-index is often calculated using the Google Scholar database, but in order to compare indices using data that are as similar as possible, we use the h-index from the updated Web of Science citation reports.Footnote 4

The mean dispersion indices (MDI)

The MDI takes account of the average number of citations and of the dispersion of the number of citations as measured by the Gini coefficient. The Gini coefficient (G) was developed by Corrado Gini in 1912 to describe the level of income inequality. G is a scalar bounded between 0 and 1: a value of 0 corresponds to everyone having the same income, and a value of 1 is approached if one person receives all of the income. In our application to citations, a value of zero corresponds to every article being cited the same number of times, and if only one of a journal’s n articles is cited, G equals \((n - 1)/n\), which approaches 1 as n grows. The Gini coefficient is calculated using the following equation:

\[ G = \frac{\sum_{i=1}^{n}\sum_{j=1}^{n}\left| x_{i} - x_{j} \right|}{2n\sum_{i=1}^{n} x_{i}} \tag{1} \]

where n is the number of articles and each \(x_{i}\) is an article’s total citations in the given year.Footnote 5 We can then calculate the dispersion of citations within a given journal. The Gini coefficient is a useful tool in dispersion analysis because it is a scalar, is normalized between 0 and 1, and is independent of the mean, and therefore allows for a simple ranking structure. Glötzl and Aigner (2017) use Gini coefficients extensively to analyze concentration across authors, institutions, and disciplines. They observe a high concentration of citations in a small number of institutions and authors; for example, they find that 42% of all citations go to authors at only twenty academic institutions. Their analysis, however, does not focus on journal impact measures. Table 4 summarizes the Gini coefficients for the top 100 journals according to the JIF.
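Equation (1) translates directly into code. The Python sketch below is a straightforward transcription (the function name is ours, not taken from any library); it reproduces the worked example in the notes (citation counts 1, 10, and 100) and the two limiting cases described above.

```python
def gini(citations):
    """Gini coefficient of a journal's article citation counts, equation (1):
    G = sum_i sum_j |x_i - x_j| / (2 * n * sum_i x_i).
    The double sum is O(n^2), which is fine for a few hundred articles."""
    n = len(citations)
    total = sum(citations)
    if n == 0 or total == 0:
        raise ValueError("G is undefined for an empty or entirely uncited journal")
    abs_diffs = sum(abs(x_i - x_j) for x_i in citations for x_j in citations)
    return abs_diffs / (2 * n * total)


print(round(gini([1, 10, 100]), 3))  # 0.595, the worked example in the notes
print(gini([4, 4, 4, 4]))            # 0.0: every article cited equally often
print(gini([0, 0, 0, 9]))            # 0.75 = (n - 1) / n: one cited article, n = 4
```

Because both the numerator and the denominator scale with the citation counts, multiplying every count by a constant leaves G unchanged, which is the sense in which G is independent of the mean.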

Incorporating the Gini coefficient makes it possible to discount the impact of a journal that has a few highly cited articles mixed with many rarely cited items. Lando and Bertoli-Barsotti (2017) present a new index with theoretical motivation, called the stabilized-JIF (s-JIF), which is calculated as the mean number of citations multiplied by one minus the Gini coefficient, \(\mu (1 - G)\). With this in mind, we propose the following family of indices:

\[ \text{MDI}(\lambda) = \mu^{\lambda} (1 - G)^{1 - \lambda} \tag{2} \]

where \(\mu\) is the mean number of citations in the chosen time period, \(G\) is the Gini coefficient, and \(\lambda\) (\(0 \le \lambda \le 1\)) is a parameter that determines the weights placed on the average number of citations and on the dispersion of citations in the journal. The stabilized-JIF is equal to \((\text{MDI}(0.5))^{2}\), so the rankings of journals from the stabilized-JIF and MDI(0.5) are identical. From (2) we see that MDI increases as the mean number of citations increases and as the dispersion decreases. The exponent \(\lambda\) allows the weight placed on the mean or the dispersion to be adjusted as part of the analysis, which is a helpful way to measure how citation metrics correlate with dispersion versus mean citations. Because \(\lambda\) and \(1 - \lambda\) are the elasticities of MDI with respect to \(\mu\) and \((1 - G)\), if \(\mu\) increases by 1%, other things equal, MDI increases by approximately \(\lambda\)%. Similarly, if dispersion decreases so that \((1 - G)\) increases by 1%, MDI increases by approximately \((1 - \lambda)\)%. If \(\mu\) and \((1 - G)\) both increase by 1%, MDI increases by exactly 1%.
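The Python sketch below evaluates equation (2) using the Quarterly Journal of Economics values from the Appendix (mean 7.2346, Gini coefficient 0.5582). It checks that \((\text{MDI}(0.5))^{2}\) equals the stabilized-JIF \(\mu(1 - G)\), that \(\lambda = 1\) and \(\lambda = 0\) give \(\mu\) and \(1 - G\), and that a 1% rise in \(\mu\) raises MDI by roughly \(\lambda\)%. The function name `mdi` is our own choice for illustration.

```python
import math


def mdi(mu, g, lam):
    """Mean Dispersion Index, equation (2): mu**lam * (1 - g)**(1 - lam)."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lambda must lie in [0, 1]")
    return mu ** lam * (1.0 - g) ** (1.0 - lam)


# Quarterly Journal of Economics, 2-year window leading up to 2014 (Appendix).
mu, g = 7.2346, 0.5582

s_jif = mu * (1.0 - g)  # stabilized-JIF of Lando and Bertoli-Barsotti (2017)
print(math.isclose(mdi(mu, g, 0.5) ** 2, s_jif))  # True
print(math.isclose(mdi(mu, g, 1.0), mu))          # True: mean only (JIF-like)
print(math.isclose(mdi(mu, g, 0.0), 1.0 - g))     # True: dispersion only

# A 1% rise in mu raises MDI by about lam percent (here lam = 0.25).
lam = 0.25
pct_change = 100 * (mdi(1.01 * mu, g, lam) / mdi(mu, g, lam) - 1)
print(round(pct_change, 3))  # 0.249
```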

Though developed independently of the s-JIF, the mean dispersion indices support the work of Lando and Bertoli-Barsotti (2017). In addition to expanding and generalizing the s-JIF, the mean dispersion indices add to the current measures because they facilitate a variety of analyses of how dispersion of citations affects the measure. Additionally, the MDI allows, but does not require, a linear relationship between the mean number of citations and the measure itself. It also shows how dispersion can be added as a factor in the impact of a journal. The family of indices can be useful in measuring how dispersion is accounted for in existing or future indices.

The MDI represents the perceived impact of an “average” publication, and is therefore size independent. Size could be accounted for by multiplying by the average number of articles in a journal, but this will not be explored in this paper. Table 5 shows the top 10 ranked economics journals in 2014 using each of the five metrics discussed in this paper. The reported MDI rankings correspond to \(\lambda = 0.5\), the geometric mean of \(\mu\) and \(1 - G\). The MDI was calculated using the 2-year window associated with the JIF.

It is interesting to note the similarities and differences between the rankings reported in Table 5. For example, the Quarterly Journal of Economics, the Journal of Finance, Econometrica, the Journal of Financial Economics, and the American Economic Review are ranked in the top ten by all indices; the Review of Economic Studies and the Journal of Economic Perspectives are in the top ten for four of the five indices; and the Journal of Economic Literature and the Journal of Political Economy are in the top ten for three of the five indices. It is also interesting that the h-index includes two journals (Energy Economics and World Development) that are not included by any of the other measures. Similarly, Economic Systems Research and the Review of Environmental Economics and Policy are selected only by the JIF and the MDI, respectively. Of course, comparing size-independent and size-dependent rankings is arguably unfair; the size-dependent measures are included to demonstrate later in the paper how dispersion can be a helpful factor in determining the impact of publications, journals, or research institutions.

The MDI in Table 5 corresponds to an equal weighting of the mean and the dispersion. One might wonder what effect different weights would have on the rankings. This question is addressed in Table 6, where the top ten rankings are reported for \(\lambda = 0, 0.25, 0.50, 0.75\), and 1.0. The extremes correspond to an MDI that considers only the dispersion (\(\lambda = 0\)) and one that considers only the average number of citations (\(\lambda = 1\), which is almost identical to the JIF), with 0.25, 0.50, and 0.75 giving increasing weight to the average number of citations. We do not suggest that MDI \((\lambda = 0)\) is a viable measure of journal worth; rather, it is a useful tool for comparing journals. For example, it becomes clear that Econometrica, the Journal of Finance, the Journal of Political Economy, and the Review of Economic Studies have consistently high citation counts across the articles they publish, because they appear in the top ten regardless of the weighting scheme. The Quarterly Journal of Economics has the highest rank for every \(\lambda\) of 0.5 or higher and only drops to second for \(\lambda = 0.25\), suggesting that its articles are of relatively high quality. The rankings of some journals, e.g. the Journal of Economic Perspectives and the Review of Economic Studies, tend to rise as \(\lambda\) increases, whereas the rankings of the Journal of Political Economy and the Review of Environmental Economics and Policy decline as \(\lambda\) increases. Other journals, e.g. Emerging Markets Review, Economic Journal, and the Journal of Economic Growth, appear in the top ten for only one value of \(\lambda\).
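The effect of the weight \(\lambda\) on rankings can be explored with a short Python sketch. It uses the Appendix values (2-year window leading up to 2014) for only ten of the 56 journals, a subset we chose for illustration, so the positions are ranks within this subset and do not reproduce Table 6.

```python
def mdi(mu, g, lam):
    """Mean Dispersion Index, equation (2)."""
    return mu ** lam * (1.0 - g) ** (1.0 - lam)


# (mean, Gini) for the 2-year window leading up to 2014, from the Appendix.
journals = {
    "Quarterly Journal of Economics": (7.2346, 0.5582),
    "Journal of Economic Literature": (6.2927, 0.5576),
    "Journal of Economic Perspectives": (5.5517, 0.5461),
    "Journal of Finance": (5.4961, 0.4606),
    "Review of Economic Studies": (4.1827, 0.5376),
    "Journal of Financial Economics": (4.1661, 0.5606),
    "Econometrica": (4.0347, 0.4906),
    "American Economic Review": (3.7618, 0.6002),
    "Journal of Political Economy": (3.6271, 0.4754),
    "Review of Environmental Economics and Policy": (3.5161, 0.4782),
}


def rank_by_mdi(data, lam):
    """Journal names ordered from highest to lowest MDI(lam)."""
    return sorted(data, key=lambda name: mdi(*data[name], lam), reverse=True)


for lam in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"lambda = {lam}: {rank_by_mdi(journals, lam)[:3]}")
```

Within this subset, the Quarterly Journal of Economics leads at \(\lambda = 0.5\) and \(\lambda = 1\) but falls behind the Journal of Finance at \(\lambda = 0.25\), while low-dispersion journals such as the Journal of Political Economy rise toward the top as \(\lambda\) approaches 0.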

Comparison of current ranking systems

There are several possible causes of different rankings of journals: the data source, the time frame, and the index. We will explore each of these causes and discuss their impact on the MDI. Table 7 shows each of the variations of the indices used in this paper. Each of these three columns will be discussed briefly in terms of its effect on the rankings and what was done, or could be done, to account for these variations.

(a) The data source

As discussed in the data section, there are many arguments for and against each of the Web of Science, Scopus, and Google Scholar databases. The MDI is very easy to calculate regardless of the data source, so the focus of this paper is on the method rather than the data. All of the index values used in this paper were obtained from the Web of Science database except for the SJR, which uses Scopus. Since Bar-Ilan (2008) found that h-index rankings computed from the Web of Science and from Scopus are nearly the same for economics journals, comparisons between these rankings should not be heavily affected by the data source, though it remains a possible source of variability among rankings.

(b) Time frame

Table 7 shows each index’s typical time frame. Since the MDI can be calculated using any time frame, every correlation or comparison uses the years that correspond to the time frame of the index in question. For example, any comparison between the MDI and the SJR uses a 3-year time frame for the MDI, and any comparison with the h-index or the Eigenfactor uses a 5-year time frame for the MDI. Table 8 reports the Spearman rank correlations between the various time frames of the MDI. Because every rank correlation is very high, the MDI rankings do not appear to be highly sensitive to the time frame.
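The window-dependent comparison can be sketched in Python as follows. The article records are hypothetical, and the helper names (`window_stats`, `average_ranks`, `spearman`) are ours. The sketch selects each journal’s articles published in the k years before a target year, computes the mean, Gini coefficient, and MDI(0.5) for k = 2 and k = 5, and compares the two rankings with a Spearman rank correlation (the Pearson correlation of the ranks, with tied values given average ranks). With only three made-up journals the correlation is low; the example illustrates the mechanics, not the high correlations reported in Table 8.

```python
import math
from collections import defaultdict


def window_stats(articles, target_year, window):
    """Mean and Gini coefficient per journal, using articles published in the
    `window` years before `target_year`. Each record is a hypothetical
    (journal, publication_year, citations_in_target_year) tuple."""
    counts = defaultdict(list)
    for journal, pub_year, cites in articles:
        if target_year - window <= pub_year <= target_year - 1:
            counts[journal].append(cites)
    stats = {}
    for journal, xs in counts.items():
        n, total = len(xs), sum(xs)
        if total == 0:
            continue  # G is undefined when no article is cited
        g = sum(abs(a - b) for a in xs for b in xs) / (2 * n * total)
        stats[journal] = (total / n, g)
    return stats


def mdi(mu, g, lam=0.5):
    return mu ** lam * (1.0 - g) ** (1.0 - lam)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    center = (len(x) + 1) / 2  # mean rank, unchanged by averaging ties
    cov = sum((a - center) * (b - center) for a, b in zip(rx, ry))
    var_x = sum((a - center) ** 2 for a in rx)
    var_y = sum((b - center) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical article records: (journal, publication year, citations in 2014).
articles = [
    ("J1", 2010, 1), ("J1", 2011, 9), ("J1", 2012, 4), ("J1", 2013, 6),
    ("J2", 2010, 3), ("J2", 2011, 2), ("J2", 2012, 0), ("J2", 2013, 10),
    ("J3", 2010, 5), ("J3", 2011, 5), ("J3", 2012, 3), ("J3", 2013, 2),
]
two_year = window_stats(articles, 2014, 2)
five_year = window_stats(articles, 2014, 5)
journals = sorted(two_year)
rho = spearman([mdi(*two_year[j]) for j in journals],
               [mdi(*five_year[j]) for j in journals])
print(round(rho, 3))  # 0.5
```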

Table 8 Spearman rank correlations for 2-, 3-, and 5-year time frames of the MDI

(c) The methods

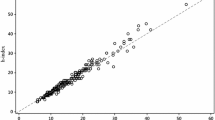

By setting \(\lambda = 0.5\) we have a base case that equally balances average citations and dispersion. Table 9 shows the corresponding Spearman rank correlation matrix. The associated scatter plots for 2014 are included in Fig. 1 and provide a visual representation of the correlation between the rankings of each index and the MDI. We reemphasize that the ranks of the MDI were calculated using the years that correspond to each index’s time frame.

Table 9 Spearman rank correlation matrix of the ranks for 2014

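Using the following equation

\[ \lambda^{*} = \arg\max_{0 \le \lambda \le 1} \; \rho_{S}\left( \text{MDI}(\lambda), \text{Index} \right) \tag{3} \]

where \(\rho_{S}\) denotes the Spearman rank correlation between the journal rankings produced by MDI(\(\lambda\)) and by a given index, we estimated the \(\lambda\) that maximized the Spearman rank correlation for each index. In principle, \(\lambda = 1\) should reproduce the rankings of the JIF; any difference between the MDI with \(\lambda = 1\) and the JIF depends on the way citable and citing documents are counted, as discussed by Stern (2013). The results comparing these indices are summarized in Table 10.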
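Equation (3) can be approximated by a grid search over \(\lambda\). In the Python sketch below, the grid step of 0.01, the helper names, and the choice of ten Appendix journals are our assumptions. Because the index values behind Table 10 are not reproduced here, the hypothetical “index” is simply each journal’s mean citation count, a JIF-like stand-in; the search should then find a perfect rank correlation at a \(\lambda\) close to 1, in line with the remark that \(\lambda = 1\) should reproduce the JIF rankings. The short \(1 - 6\sum d^{2}/(n(n^{2}-1))\) form of the Spearman coefficient used here assumes no tied values.

```python
import math


def mdi(mu, g, lam):
    return mu ** lam * (1.0 - g) ** (1.0 - lam)


def ranks(values):
    """1-based ranks (assumes no ties, which holds for the data below)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0] * len(values)
    for position, i in enumerate(order, start=1):
        r[i] = position
    return r


def spearman(x, y):
    rx, ry = ranks(x), ranks(y)
    n = len(x)
    d_squared = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# (mean, Gini) from the Appendix for ten journals; 2-year window to 2014.
data = [
    (7.2346, 0.5582), (6.2927, 0.5576), (5.5517, 0.5461), (5.4961, 0.4606),
    (4.1827, 0.5376), (4.1661, 0.5606), (4.0347, 0.4906), (3.7618, 0.6002),
    (3.6271, 0.4754), (3.5161, 0.4782),
]
# Hypothetical stand-in for an external index: the mean itself (JIF-like).
index_values = [mu for mu, _ in data]


def best_lambda(data, index_values, steps=100):
    """Grid search for the lambda in [0, 1] that maximizes Spearman's rho."""
    best_lam, best_rho = None, -math.inf
    for k in range(steps + 1):
        lam = k / steps
        rho = spearman([mdi(mu, g, lam) for mu, g in data], index_values)
        if rho > best_rho:  # keeps the smallest lambda among ties
            best_lam, best_rho = lam, rho
    return best_lam, best_rho


lam_star, rho_star = best_lambda(data, index_values)
print(lam_star, rho_star)
```

Real index values for the same journals (for example, SJR or Eigenfactor values) could be substituted for `index_values`. A finer grid would refine \(\lambda^{*}\), but because the Spearman correlation changes only when two journals swap places, it is a step function of \(\lambda\) and a whole interval of values can tie.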

The results summarized in Table 10 suggest that, even when \(\lambda\) is optimized, the MDI matches the rankings of the JIF most closely. The \(\lambda\) values suggest that the other indices do place some weight on the dispersion of citations among articles, but their lower Spearman correlations show that these measures are not highly correlated with either the dispersion or the mean. Simple Spearman correlations between the rankings of the various indices and the Gini rankings are given in Table 11; this comparison uses only \((1 - G)\) rather than including the mean and corresponds to \(\lambda = 0\). Although measuring the value of a journal purely by the dispersion of citations is clearly not a good measure of journal impact, it does show that the SJR appears to account for dispersion better than the other indices, except for the MDI (\(\lambda = 0.5\)).

Conclusion

We introduced a new family of journal impact measures, called the Mean Dispersion Indices, which take account of the mean and the variability of the number of citations in a journal. The equation for the MDI generalizes the stabilized-JIF and also lends itself to comparison with the other indices, and its calculation is straightforward. From the analysis in the “Comparison of current ranking systems” section, we determined that of the four indices considered, the JIF is most heavily correlated with average citation levels, followed by the h-index, while the Eigenfactor and SJR indices place greater weight on the dispersion, as shown in Table 11. However, the MDI had a higher correlation with the dispersion than any of the indices, while maintaining a high correlation with the mean and with each of the other indices. Dispersion could be included in citation analysis to better quantify the impact of journals, institutions, and authors.

As the number of scholars, articles, and journals continues to increase, the ability to measure their relative importance becomes increasingly valuable. In addition to being helpful in evaluating the relative benefits of other impact measures, the MDI is also an effective measure of a journal’s impact on its own. It is correlated with each of the other measures discussed, but it yields different enough rankings to provide an additional approach in citation analysis. Further research could include using the MDI to measure an institution’s average academic impact or a scholar’s average impact.

Notes

The Source Normalized Impact per Paper (SNIP) is a widely used index not included in this paper. It is calculated by dividing the mean number of citations in a 3-year period by a scalar determined by the subject area, which allows journals to be compared across disciplines. This suggests that, within a discipline, SNIP rankings will not vary much from the rankings produced by the JIF when applied to the same citation database. Since this paper focuses on the Economics discipline, SNIP is not included in the comparisons.

These exceptions were mainly found in computer science related fields since many of the prominent publications are conference proceedings. Since this paper uses economics journals, these differences should not affect the analysis.

Formerly Thomson Reuters Web of Science.

The h-index included in this report uses a 5-year time window (see Table 7).

For example, if the articles in a journal received 1, 10, and 100 citations, the Gini coefficient would be \(\frac{|1 - 10| + |1 - 100| + |10 - 1| + |10 - 100| + |100 - 1| + |100 - 10|}{2 \times 3 \times (1 + 10 + 100)} = \frac{396}{666} \approx 0.595\).

References

Bar-Ilan, J. (2008). Which h-index? A comparison of WoS, Scopus and Google Scholar. Scientometrics, 74(2), 257–271.

Bergstrom, C. (2007). Eigenfactor: Measuring the value and prestige of scholarly journals. College & Research Libraries News, 68(5), 314–316.

Garfield, E., & Sher, I. H. (1963). New factors in the evaluation of scientific literature through citation indexing. Journal of the Association for Information Science and Technology, 14(3), 195–201.

Garfield, E. (1955). Citation indexes for science: A new dimension in documentation through association of ideas. Science, 122, 108–111.

Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science, 178, 471–479.

Garfield, E. (1998). Long-term vs. short-term journal impact: Does it matter? The Scientist, 12(3), 11–12.

Garfield, E. (2006). The history and meaning of the journal impact factor. Journal of the American Medical Association, 295(1), 90–93.

Glötzl, F., & Aigner, E. (2017). Six dimensions of concentration in economics: Scientometric evidence from a large-scale data set. Ecological Economics Papers, 15, WU Vienna University of Economics and Business, Vienna. Institute of Ecological Economics.

González-Pereira, B., Guerrero-Bote, V. P., & Moya-Anegón, F. (2010). A new approach to the metric of journals’ scientific prestige: The SJR Indicator. Journal of Informetrics, 4(3), 379–391.

Harzing, A. W. (2013). A preliminary test of Google Scholar as a source for citation data: A longitudinal study of Nobel Prize winners. Scientometrics, 94(3), 1057–1075.

Harzing, A. W., & Van Der Wal, R. (2008). Comparing the Google Scholar h-index with the ISI journal impact factor. Research in International Management Products and Services for Academics.

Harzing, A. W., & Van Der Wal, R. (2009). A Google Scholar h-index for journals: An alternative metric to measure journal impact in economics and business. Journal of the Association for Information Science and Technology, 60(1), 41–46.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. Proceedings of the National Academy of Sciences, 102(46), 16569–16572.

Lando, T., & Bertoli-Barsotti, L. (2017). Measuring the citation impact of journals with generalized Lorenz curves. Journal of Informetrics, 11(3), 689–703.

Leydesdorff, L. (2008). Caveats for the use of citation indicators in research and journal evaluations. Journal of the Association for Information Science and Technology, 59(2), 278–287.

Leydesdorff, L. (2009). How are new citation-based journal indicators adding to the bibliometric toolbox? Journal of the Association for Information Science and Technology, 60(7), 1327–1336.

Moed, H. F. (2010). Measuring contextual citation impact of scientific journals. Journal of Informetrics, 4(3), 265–277.

Stern, D. I. (2013). Uncertainty measures for economics journal impact factors. Journal of Economic Literature, 51, 173–189.

Thelwall, M. (2016). Not dead, just resting: The practical value of per publication citation indicators. arXiv preprint arXiv:1606.00193.

Waltman, L. (2016). A review of the literature on citation impact indicators. Journal of Informetrics, 10(2), 365–391.

Acknowledgements

The authors appreciate the helpful and supportive comments provided by the editor and referees.


Appendix


Table of all 56 journals with the mean number of citations and the Gini coefficient for the 2-year period leading up to 2014.

| # | Journal | Mean | Gini coefficient |
|---|---|---|---|
| 1 | American Economic Review | 3.7618 | 0.6002 |
| 2 | American Journal of Agricultural Economics | 1.2038 | 0.6966 |
| 3 | Annual Review of Economics | 2.9762 | 0.5731 |
| 4 | Brookings Papers On Economic Activity | 3.0833 | 0.6903 |
| 5 | Cambridge Journal of Economics | 1.5037 | 0.6310 |
| 6 | Ecological Economics | 2.7991 | 0.5702 |
| 7 | Econometrica | 4.0347 | 0.4906 |
| 8 | Economic Development and Cultural Change | 1.3774 | 0.5795 |
| 9 | Economic Geography | 2.0645 | 0.5796 |
| 10 | Economic Journal | 2.0863 | 0.5112 |
| 11 | Economic Policy | 2.6364 | 0.5670 |
| 12 | Economic Systems Research | 3.3333 | 0.7279 |
| 13 | Economy and Society | 1.6364 | 0.6521 |
| 14 | Emerging Markets Review | 1.4815 | 0.5259 |
| 15 | Energy Economics | 2.9622 | 0.5717 |
| 16 | Energy Journal | 1.6282 | 0.6640 |
| 17 | European Journal of Political Economy | 1.5229 | 0.5942 |
| 18 | European Review of Agricultural Economics | 1.1143 | 0.6070 |
| 19 | Food Policy | 1.8022 | 0.5845 |
| 20 | Journal of Agricultural Economics | 1.2278 | 0.6454 |
| 21 | Journal of Applied Econometrics | 1.5636 | 0.6738 |
| 22 | Journal of Econometrics | 1.7769 | 0.5571 |
| 23 | Journal of Economic Geography | 2.5227 | 0.5839 |
| 24 | Journal of Economic Growth | 3.5417 | 0.6123 |
| 25 | Journal of Economic History | 1.4355 | 0.6029 |
| 26 | Journal of Economic Literature | 6.2927 | 0.5576 |
| 27 | Journal of Economic Perspectives | 5.5517 | 0.5461 |
| 28 | Journal of Economic Surveys | 2.2105 | 0.5500 |
| 29 | Journal of Environmental Economics and Management | 2.6984 | 0.5965 |
| 30 | Journal of Finance | 5.4961 | 0.4606 |
| 31 | Journal of Financial and Quantitative Analysis | 1.5310 | 0.6177 |
| 32 | Journal of Financial Econometrics | 1.2326 | 0.6222 |
| 33 | Journal of Financial Economics | 4.1661 | 0.5606 |
| 34 | Journal of Human Resources | 1.4928 | 0.5499 |
| 35 | Journal of International Economics | 2.6865 | 0.6027 |
| 36 | Journal of Labor Economics | 2.0357 | 0.5132 |
| 37 | Journal of Monetary Economics | 1.7297 | 0.5811 |
| 38 | Journal of Political Economy | 3.6271 | 0.4754 |
| 39 | Journal of Public Economics | 1.6162 | 0.6108 |
| 40 | Journal of Urban Economics | 1.6848 | 0.5524 |
| 41 | Land Economics | 1.4125 | 0.7006 |
| 42 | Marine Resource Economics | 1.8571 | 0.6398 |
| 43 | Mathematical Finance | 1.1290 | 0.6171 |
| 44 | Oxford Bulletin of Economics and Statistics | 1.3218 | 0.6435 |
| 45 | Quantitative Economics | 1.7667 | 0.4849 |
| 46 | Quarterly Journal of Economics | 7.2346 | 0.5582 |
| 47 | Rand Journal of Economics | 1.3594 | 0.6302 |
| 48 | Review of Economic Dynamics | 1.4110 | 0.6472 |
| 49 | Review of Economic Studies | 4.1827 | 0.5376 |
| 50 | Review of Economics and Statistics | 2.7487 | 0.5600 |
| 51 | Review of Environmental Economics and Policy | 3.5161 | 0.4782 |
| 52 | Review of Financial Studies | 3.0955 | 0.5217 |
| 53 | Review of International Political Economy | 1.7867 | 0.6714 |
| 54 | Small Business Economics | 1.8995 | 0.5950 |
| 55 | World Bank Economic Review | 1.3721 | 0.6985 |
| 56 | World Development | 2.0701 | 0.5883 |


About this article

Cite this article

Cockriel, W.M., McDonald, J.B. The influence of dispersion on journal impact measures. Scientometrics 116, 609–622 (2018). https://doi.org/10.1007/s11192-018-2755-1


DOI: https://doi.org/10.1007/s11192-018-2755-1