Abstract

Approaches from metrology can help Earth observation (EO) practitioners develop quantitative characterisations of uncertainty in EO data. This is necessary for the credibility of statements based on Earth observations in relation to topics of public concern, particularly climate and environmental change. This paper presents the application of metrological uncertainty analysis to historical Earth observations from satellites, and is intended to aid mutual understanding of metrology and EO. The nature of satellite observations is summarised for different EO data processing levels, and key metrological nomenclature and principles for uncertainty characterisation are reviewed. We then address metrological approaches to developing estimates of uncertainty that are traceable from the satellite sensor, through levels of data processing, to products describing the evolution of the geophysical state of the Earth. EO radiances have errors with complex error correlation structures that are significant when performing common higher-level transformations of EO imagery. Principles of measurement-function-centred uncertainty analysis are described that apply sequentially to each EO data processing level. Practical tools for organising and traceably documenting uncertainty analysis are presented. We illustrate these principles and tools with examples, including some specific sources of error seen in EO satellite data and the estimation of sea surface temperature from satellite infra-red imagery. This includes a simulation-based estimate for the error distribution of clear-sky infra-red brightness temperature in which calibration uncertainty and digitisation are found to dominate. The propagation of these errors to sea surface temperature is then presented, illustrating the relevance of the approach to derivation of EO-based climate datasets. 
We conclude with a discussion arguing that there is broad scope and need for improvement in EO practice as a measurement science. EO practitioners and metrologists willing to extend and adapt their disciplinary knowledge to meet this need can make valuable contributions to EO.


Original content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

Glossary

| Acronym | Meaning |
|---|---|
| AVHRR | Advanced very high resolution radiometer |
| BT | Brightness temperature |
| CCI | Climate change initiative |
| CDR | Climate data record |
| CEOS | Committee on Earth observation satellites |
| ECMWF | European centre for medium-range weather forecasts |
| EO | Earth observation |
| ERA-Interim | Interim version of the ECMWF numerical weather prediction re-analysis |
| ESA | European space agency |
| FIDUCEO | Fidelity and uncertainty in climate data records from Earth observation |
| FRM4STS | Fiducial reference measurements for validation of surface temperature from satellites |
| GAC | Global area coverage |
| GAIA-CLIM | Gap analysis for integrated atmospheric essential climate variable climate monitoring |
| GCOS | Global climate observing system |
| GEO | Geosynchronous Earth orbit |
| GOES | Geostationary operational environmental satellite |
| GUM | Guide to the expression of uncertainty in measurement |
| GRUAN | GCOS reference upper air network |
| ICT | Internal calibration target |
| IR | Infrared |
| L0, L1, L2 etc | Data processing levels, see table 1 |
| LPU | Law of propagation of uncertainties |
| MetEOC | Metrology for Earth observation and climate |
| MODIS | Moderate resolution imaging spectroradiometer |
| NCEP/CRTM | National centers for environmental prediction community radiative transfer model |
| NMI | National Metrology Institute |
| NOAA | National oceanic and atmospheric administration |
| OE | Optimal estimation |
| PDF | Probability distribution function |
| PRT | Platinum resistance thermometer |
| QA4EO | Quality assurance framework for Earth observation |
| RTM | Radiative transfer model |
| SI | Système International d'Unités |
| SRF | Spectral response function |
| SST | Sea surface temperature |
| VIM | International vocabulary of metrology |
| WMO | World meteorological organisation |

1. Introduction

The environment and climate of the Earth have been explored from space for over 50 years. The radiation balance instrument placed on Explorer 7, launched in October 1959 (Suomi 1961), initiated an era of satellite-based Earth observation (EO) which to this day continues to expand in scope, diversity and detail. Over time, sustained programmes of EO have been established to support weather forecasting and, via the Copernicus space programme (Clery 2014, Berger et al 2012), routine observation of other aspects of the environment is now undertaken for the benefit of society. The EO data of recent and coming decades will be a legacy of information about environmental and climate change likely to be of immense value to future generations seeking to manage their collective existence within the environments of Earth. EO data inform multi-decadal re-analyses of atmospheric and oceanic states through assimilation into physical models (e.g. Dee et al (2011), Hersbach et al (2015) and Compo et al (2011)). EO data are blended with in situ data to reconstruct historical climate (e.g. Titchner and Rayner (2014) and Curry et al (2004)), and EO datasets are analysed independently for decadal-scale variability and trends (Merchant et al (2012), Parkinson (2014) and Thorne et al (2011)). Exploitation of multi-decadal EO time series to address scientific and societal questions is increasingly widespread.

Table 1. Typical characteristics of level 0 (L0) to level 4 (L4) satellite products. Note that some products in practice mix the properties of more than one level. Furthermore, there are sub-categories (e.g. 'L1b', 'L2P') whose meanings are not fully standardised across agencies and projects.

| Level of product | Institution(s) typically responsible | Indicative content | How produced | Science application |
|---|---|---|---|---|
| L0 | Space agency or meteorological agency | Raw telemetry: timings, counts, instrument data, etc | Downlinked to receiving stations and consolidated | Usually none |
| L1 | Space agency or meteorological agency | Calibrated radiances (and/or counts and gain parameters), with location, time, and viewing geometry | Calculated from L0 using in-flight calibration results, and platform navigation | Basis for retrieval of geophysical variables |
| L2 | Space agency, meteorological agency, research organisation, commercial | Estimates of geophysical variables on the spatio-temporal sampling pattern of the L1 radiances ('swath data') | Retrieved, combining radiances (may also exploit auxiliary datasets) | Full spatial resolution analyses of retrieved variable, e.g. for process study |
| L3 | Meteorological agency, research organisation, commercial | L2 data transformed to a fixed spatio-temporal sampling ('grid') often at reduced spatio-temporal resolution. | Aggregation in space and/or time, e.g. by averaging available L2 data within grid | Model testing, analysis of change and variability |
| L4 | Meteorological agency, research organisation | Spatio-temporally complete fields on a regular grid | Gap filling of L2 and/or L3 data by interpolation in space and/or time, perhaps combining data from more than one sensor | Model testing, prescribed field for simulations, convenient analysis of change and variability |

This paper highlights the particular problems inherent in using historical EO time series to analyse environmental or climatic change, given the complex forms of uncertainty present in such time series. The measured values in such time series have usually been obtained from a series of different sensors that are considered in some sense compatible because of their similar design. Many of the sensors with the longest continuous time series were designed in the 1970s to specifications well below those required for climate research. The instrumental stability of each sensor and their relative calibrations in flight are often poorly known, and thus the measurement stability of the geophysical time series is typically difficult to estimate. Nevertheless, since these records provide a global dataset spanning a period of relatively rapid climatic change, there are strong imperatives to maximise the scientific value of such archives. Ultimately, science and society need to know the degree of certainty that can be ascribed to an environmental or climatic change inferred from these long EO time series. Climate data records (CDRs) of 'essential climate variables' (ECVs; as defined by the Global Climate Observing System (GCOS) 2010, 2016) are an example where demonstrated credibility and transparency are paramount, and where requirements on accuracy and stability are stringent.

These are problems to which the discipline of metrology, the science of measurement, may contribute solutions.

The classic task of metrology is the linking of practical measurements made in science and industry to internationally defined standards, particularly the Système International d'Unités (SI; e.g. http://www.bipm.org/en/publications/si-brochure/). This endeavour is crucial if empirical measurements made at one time and place are to be interpretable with confidence at a different time or elsewhere. A measurement that has been thus linked to standards is referred to as 'traceable', and the key aspect of traceability is that it allows the intrinsic uncertainty of the measurement relative to standards to be stated. Metrology is therefore deeply engaged with the science and mathematics of uncertainty, and with the understanding and calibration of instruments.

EO covers a wide range of sensing techniques, including altimetry (a range-finding technique, relying on measurements of time; Wunsch and Gaposchkin (1980), Smith and Sandwell (1997)), gravimetry (relying on measurements of distance as a function of time, to infer acceleration; Tapley et al (2004), Pail et al (2011)), and magnetometry (Friis-Christensen et al 2006). In this paper, only EO by passive measurement of 'top-of-atmosphere' electromagnetic radiance or flux is explicitly considered, although the general principles will be applicable more widely. The majority of long EO time series are in this category, being measurements of reflected solar radiance in visible and/or near-infrared wavelengths, or measurements of Earth's thermal emission in infra-red and/or microwave wavelengths.

There are ongoing efforts to bring in-flight SI traceability to future EO missions. This paper, however, addresses a different question: what can be done metrologically for historical Earth observations?

This paper is intended to facilitate further collaboration between metrologists and EO practitioners, building on the progress made since the inaugural formal meeting between the two communities, held at WMO in 2010 (http://www.bipm.org/utils/common/pdf/rapportBIPM/2010/08.pdf). It therefore provides a brief introduction to the nature of both EO and key metrology concepts. Section 2 discusses the nature of EO measurements and levels of data processing at a level intended to be useful for metrologists less familiar with current EO practice, and concludes with some perspectives on the role of SI standards in EO.

Section 3 introduces the context of metrology as a discipline, as a primer for EO practitioners. It then presents the key ideas of this paper: a framework for metrological analysis of uncertainty in multi-decadal Earth observations through measurement-function-centred analysis of EO levels of processing. Forms of uncertainty and of error correlation structure found to be common in EO are discussed. Practical aids to organising and traceably documenting uncertainty analysis are presented.

As a concrete illustration, section 4 presents both an analysis of the infrared calibration of a long-lived historic sensor and a somewhat simplified analysis for a case highly relevant to climate change science: derivation of sea surface temperature (SST) from thermal infra-red imagery. This is an example where EO measurements intended for operational meteorology are of potential value to climate science, if measurement errors can be reduced, observational stability increased, and uncertainties rigorously quantified.

The concluding discussion in section 5 argues that there is broad scope and need for improvement in EO practice as a measurement science, through collaboration between metrologists and EO practitioners.

2. The nature of satellite measurements of Earth

2.1. Measurands, sensors, orbits and variability

For the historic EO data sets discussed here, the typical measurand (measured quantity) in space is the spectral radiance of Earth integrated over the pass-band of the measuring instrument ('sensor'). Typically, sensors have several channels, with pass-band characteristics described by a spectral response function (SRF) determined during pre-launch characterisation. The SRF-integrated spectral radiance is simply called 'radiance' in the EO community, and we adopt this usage. The radiance in channels sensitive to reflected sunlight may be expressed as reflectance (with various definitions). For channels sensitive to Earth's thermal emission, the radiance is often expressed as brightness temperature (the temperature of an ideal emitter emitting the measured radiance). For the purpose of this paper, we will use 'radiance' as a general term that encompasses reflectance and brightness temperature, which are transformations of radiance. Several sensors may be put on a given satellite 'platform' (the infrastructure that supports, houses and powers the sensors). These sensors are typically complementary in terms of channels observed, spatial resolution, spectral resolution, radiometric resolution, viewing geometry and/or sampling pattern.
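To make the terminology concrete, the sketch below computes an SRF-weighted 'radiance' for a blackbody scene and expresses it as a brightness temperature. It is a minimal illustration under stated assumptions: the Gaussian SRF (its centre and width) is hypothetical, and the brightness temperature is obtained by inverting Planck's law at a single central wavenumber, a monochromatic approximation. Operational processing often uses measured SRFs and band-correction coefficients instead.

```python
import numpy as np

# Radiation constants for Planck's law in wavenumber form: radiance in
# mW m^-2 sr^-1 (cm^-1)^-1, wavenumber nu in cm^-1, temperature in K.
C1 = 1.191042e-5   # first radiation constant, 2hc^2
C2 = 1.4387769     # second radiation constant, hc/k, in K cm


def planck(nu, temp):
    """Blackbody spectral radiance at wavenumber nu for temperature temp."""
    return C1 * nu**3 / np.expm1(C2 * nu / temp)


def band_radiance(srf, spectral_radiance):
    """SRF-weighted ('channel') radiance on a uniform wavenumber grid.

    With uniform grid spacing the step cancels in the ratio, so sums suffice.
    """
    return np.sum(srf * spectral_radiance) / np.sum(srf)


def brightness_temperature(radiance, nu_c):
    """Invert Planck's law at one central wavenumber (monochromatic approximation)."""
    return C2 * nu_c / np.log1p(C1 * nu_c**3 / radiance)


# Hypothetical Gaussian SRF centred near 10.8 um (about 927 cm^-1).
nu_c, sigma = 927.0, 20.0
nu = np.linspace(nu_c - 5 * sigma, nu_c + 5 * sigma, 2001)
srf = np.exp(-0.5 * ((nu - nu_c) / sigma) ** 2)

# Channel radiance of a 290 K blackbody scene, and its brightness temperature.
L = band_radiance(srf, planck(nu, 290.0))
bt = brightness_temperature(L, nu_c)
print(f"channel radiance = {L:.2f} mW m-2 sr-1 cm, BT = {bt:.3f} K")
```

For a blackbody scene and a narrow, symmetric SRF the recovered brightness temperature is very close to the scene temperature; for real scenes viewed through the atmosphere, the SRF shape matters more.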

Satellite orbits are determined by the Newtonian dynamics of essentially unpropelled motion through the gravitational potential of Earth. Orbits are optimised for viewing Earth within the constraints imposed by Earth's non-uniform gravitational potential. Most environmental satellites fly in either Sun-synchronous (low-Earth) or geo-synchronous (high-altitude) orbits.

Sun-synchronous orbits precess at a rate of one revolution per year so as to maintain the orientation of the orbital plane relative to the Sun. This means the local solar time of observation of low- and mid-latitude locations is roughly constant, and the geometry of solar illumination (of Earth and satellite) is approximately repeated between consecutive orbits. Sun-synchronous platforms generally complete one orbit in ~100 min at an altitude of ~800 km. In a typical Sun-synchronous orbit, the sub-satellite point traces a path on Earth's surface that spans ~82 °S to ~82 °N and crosses the equator twice, once in sunlight and once during local night (e.g. figure 1). This alternation between Earth's shadow and exposure to the Sun changes the radiation environment of the platform, and, in response, the temperatures of sensor components vary. The platforms and sensors are engineered to meet specified requirements on the stability of the instrument state, but nonetheless this is a marked contrast to a controlled laboratory environment.
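The ~100 min period quoted above, and the ~25° westward shift between successive ground tracks, follow approximately from Kepler's third law for a circular orbit. The sketch below is a simplification: it ignores Earth's oblateness (which modifies the period slightly), and it assumes that, for a Sun-synchronous orbit, the orbit plane turns with the Sun so that Earth rotates beneath it once per mean solar day.

```python
import math

# Standard constants (Earth treated as a point mass in this sketch).
MU_EARTH = 3.986004418e14  # Earth's gravitational parameter GM, m^3 s^-2
R_EARTH = 6.378137e6       # Earth's equatorial radius, m
SOLAR_DAY = 86400.0        # mean solar day, s


def circular_orbit_period(altitude_m):
    """Keplerian period (s) of a circular orbit at the given altitude.

    Ignores Earth's oblateness (J2), which changes the period slightly.
    """
    a = R_EARTH + altitude_m  # orbital radius (semi-major axis)
    return 2.0 * math.pi * math.sqrt(a**3 / MU_EARTH)


def westward_shift_deg(period_s):
    """Westward shift of the ground track between successive orbits.

    Assumes a Sun-synchronous orbit: the orbit plane turns with the Sun, so
    Earth rotates beneath it once per mean solar day.
    """
    return 360.0 * period_s / SOLAR_DAY


period_s = circular_orbit_period(800e3)
shift = westward_shift_deg(period_s)
print(f"period ~ {period_s / 60:.1f} min, ground-track shift ~ {shift:.1f} deg per orbit")
```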

Figure 1. Location of observations made by a single sensor on a low-Earth orbiting platform. Left panel: time of observation in seconds since the first data were collected. Right panel: brightness temperature in the 11 µm channel of the sensor. The projection is Mollweide. The first data are collected as the satellite is moving north, and the pass over the UK is southward, referred to as a descending overpass. The swath of data is symmetrical around the sub-satellite point, so it is clear that the satellite orbit plane does not go over the north and south poles (i.e. the angle between the orbit plane and the equatorial plane is less than 90°), but nevertheless, such satellites are referred to as 'polar orbiting'. The satellite is again moving northward at the end of this orbit, but at a longitude shifted westward relative to the start of the orbit. This is because the Earth has rotated eastward by about 25° during one orbit. In this way, during the course of one day, coverage of most of Earth's surface may be achieved with a sensor of sufficient swath width. In the brightness temperature data, the most obvious features are the strong contrasts in temperature between warm clear-sky areas and high, cold clouds. Latitudinal differences and land-sea temperature contrasts are also visible.


Geo-synchronous orbits are those whose orbital period matches the ~23 h 56 min period of Earth's rotation (the sidereal day). 'GEO' orbits are usually also geostationary, i.e. in the equatorial plane such that the longitude of the satellite above the ground is fixed. Since the altitude of such orbits is high (≅35 786 km), shadowing by Earth is infrequent, but the orientation of the sensor view of Earth relative to the Sun undergoes a daily cycle with implications for observational stability.
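Inverting Kepler's third law gives the altitude at which a circular orbit matches Earth's sidereal rotation period. This is a minimal sketch using standard values for Earth's gravitational parameter and equatorial radius, again ignoring perturbations such as oblateness and lunisolar forces.

```python
import math

MU_EARTH = 3.986004418e14   # Earth's gravitational parameter GM, m^3 s^-2
R_EARTH = 6.378137e6        # Earth's equatorial radius, m
SIDEREAL_DAY = 86164.0905   # Earth's rotation period relative to the fixed stars, s


def synchronous_altitude_km(period_s=SIDEREAL_DAY):
    """Altitude (km) of a circular orbit with the given period (Kepler's third law)."""
    radius_m = (MU_EARTH * period_s**2 / (4.0 * math.pi**2)) ** (1.0 / 3.0)
    return (radius_m - R_EARTH) / 1e3


alt = synchronous_altitude_km()
print(f"geosynchronous altitude ~ {alt:.0f} km")
```

The sidereal day, not the 24 h solar day, is the relevant period because the orbit must keep pace with Earth's rotation relative to inertial space.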

These considerations highlight a major difference between laboratory metrology and the measurements of EO. In laboratory metrology, a recognised method for estimating uncertainty is statistical evaluation of repeated measurements. In the case of EO, repeat measurements are generally not available: the sensor state varies continually, viewing geometries repeat only approximately between satellite overpasses, and the geophysical state itself varies naturally. From the visible to the microwave portions of the electromagnetic spectrum, the atmosphere modifies the 'top-of-atmosphere' radiance observable from space (see e.g. Merchant and Embury (2014), and references therein) by processes of scattering (all wavelengths), absorption (all wavelengths) and emission (thermal infra-red and microwave wavelengths). The impact on radiance depends on the vertical profile of radiatively active gases, aerosols and clouds. The turbulent, chaotic nature of atmospheric motions on timescales of minutes and longer ensures that the observed state is never exactly repeated between any two orbits. The surface state also affects the observed radiance, and it too changes in time. There are locations of high surface stability and low atmospheric variability that provide something close to repeated measurements (e.g. the sites, typically deserts, used for vicarious validation of satellite reflectance; Müller (2014)), but these are exceptional.

The total variance of top-of-atmosphere measured radiances combines geophysical variability and the variance of the errors in the measured values. Absence of repeat measurements means the latter cannot be empirically isolated. The geophysical variability of Earth is the signal which EO aims to measure. In the context of EO metrology, it is better to avoid using 'variance' to mean 'error variance', since Earth-system scientists would interpret the 'variance' as a number quantifying geophysical variability.
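A toy simulation illustrates why the two contributions cannot be separated from the data alone. It assumes, purely for illustration, a Gaussian geophysical signal with a 2 K standard deviation and independent Gaussian measurement errors of 0.2 K; in practice only the measured values are available.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
n = 200_000

# Hypothetical values: geophysical signal with 2 K standard deviation,
# independent measurement errors with 0.2 K standard deviation.
truth = 290.0 + rng.normal(0.0, 2.0, n)
error = rng.normal(0.0, 0.2, n)
measured = truth + error

total_var = measured.var()
print(f"variance of measured values: {total_var:.3f} K^2")
print(f"geophysical variance:        {truth.var():.3f} K^2")
print(f"error variance:              {error.var():.3f} K^2")
# In practice only `measured` exists. Its variance is (to sampling precision)
# the sum of the other two, but with no repeat measurements of the same state
# nothing in `measured` alone tells us how to split it.
```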

2.2. Data product levels

EO is a field replete with acronyms and jargon. A key term is the level of processing of an EO product. Processing level reflects both distinct computational stages in handling data streams downlinked from satellites and the different institutional arrangements for creating products at different levels. Typical characteristics of product levels are summarised in table 1. The subsequent sub-sections discuss levels 0 to 4, drawing out points relevant to EO metrology. To assist all readers, a table of all other acronyms used in the paper is provided as an appendix.

2.2.1. Level 0.

Level 0 (L0) is the raw telemetry downlinked by a ground receiving station, comprising a mix of raw scientific observations from sensors together with engineering data from sensors and the platform. Transforming L0 data to scientifically useful products is a complex engineering task, and is a responsibility of the satellite operator.

The raw sensor data in L0 are in the form of binary numbers, referred to as 'counts' or 'digital numbers'. Each count is typically recorded using 10 to 16 bits. Such digitisation is coarse compared to laboratory metrology, and is driven by bandwidth limitations in downlinking data. Binary representation of the raw sensor values places a fundamental lower limit on the uncertainty present in calibrated radiances and derived geophysical estimates. 10-bit digitisation corresponds to a resolution of ~0.1% of the full range.
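The size of the digitisation step, and the standard uncertainty it implies, can be estimated as follows. The sketch assumes that the signal varies over many count levels, so that rounding to the nearest level can be treated as a rectangular error distribution one step wide (standard deviation step/√12, as in the GUM treatment of rectangular distributions); this assumption fails for near-constant signals.

```python
import math


def quantisation_step(full_range, n_bits):
    """Size of one count: 2**n_bits levels span the range in 2**n_bits - 1 steps."""
    return full_range / (2**n_bits - 1)


def quantisation_std_uncertainty(step):
    """Standard uncertainty from rounding to the nearest level, treating the
    rounding error as rectangular over [-step/2, +step/2]: step / sqrt(12)."""
    return step / math.sqrt(12.0)


for bits in (10, 12, 16):
    q = quantisation_step(1.0, bits)
    u = quantisation_std_uncertainty(q)
    print(f"{bits:2d} bits: step = {100 * q:.4f}% of range, u = {100 * u:.4f}% of range")
```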

Part of L0 processing involves estimating the satellite orbit and calculating, from the attitude of the platform in space, the origin of the measured radiances projected onto Earth's surface. This is known as geo-locating the satellite imagery. Orbit estimation can generally be improved retrospectively, so near-real time ('operational') satellite data may be superseded for non-time-critical applications by delayed mode and/or reprocessed data, using improved orbit information.

2.2.2. Level 1.

Level 1 (L1) datasets contain calibrated radiances or counts together with calibration parameters to map the counts into radiance. Auxiliary data locate the radiances in time, latitude and longitude on the Earth's surface. The satellite and solar azimuthal and zenith angles of the measured radiances are often included for convenience. Varying amounts of information from onboard calibration processes and engineering data (e.g. instrument temperatures, scanning rate, power consumption, etc) are provided. L1 data files are generally organised in image layers, one per channel, that have a common set of pixels. L1 data for microwave imagers can be an exception, since the footprint of an observation on the ground generally varies dramatically with microwave frequency for a fixed antenna size.

In scanning sensors, the individual measured radiances comprise roughly non-overlapping pixels that combine to create an image. For sensors in low Earth orbit, across-track scanning may be used, in which the image is obtained by successively scanning the Earth perpendicular to the platform motion. In other cases, the scanning arrangement may be conical, in which case pixels may, for convenience, be re-arranged (e.g. by nearest-neighbour sampling) onto a rectilinear image grid. Pixels in an image may be referred to by their line (l) and element (e) indices, which define the pixel position.

L1 radiances are typically calculated using an equation similar to:

$$L_{c,l,e} = a_{0} + a_{1} C^{\rm E}_{c,l,e} + a_{2} \left( C^{\rm E}_{c,l,e} \right)^{2} + 0 \qquad (2.1)$$

where $L_{c,l,e}$ is the calculated Earth radiance for channel $c$ of the pixel at image co-ordinate $(l, e)$; $C^{\rm E}_{c,l,e}$ is the Earth count for the pixel recorded by the sensor; and $a_0$, $a_1$ and $a_2$ are calibration parameters, with ${\boldsymbol{a}}^{\rm E} = \left[ a_0, a_1, a_2 \right]$. Uncertainty in the calculated radiance arises in part from uncertainties in the values of the quantities on the right hand side. In general, there are also other effects expected to have zero mean that contribute uncertainty in the calculated radiance; the '+ 0' is included as a reminder of this, and will be discussed further in section 3.2.1. (Note that equation (2.1) is not representative of the calculation of radiance spectra from interferometers.)

In general, the calibration parameters are determined by in-flight calibration data plus other information; section 4 gives an example of the calibration parameters for a specific sensor. Sensors are generally reasonably linear, so $a_2$ is often small. $a_1$ is the 'gain' of the sensor. In-flight calibration systems typically have two reference points, such as a dark and a bright target, to estimate changes in gain over time in flight. With two references, characterisation of non-linearity needs external information, such as pre-flight characterisation or in-flight characterisation against another sensor.
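A quadratic calibration of this form, with two in-flight references, can be sketched as below. This is a hypothetical, generic illustration rather than the calibration of any particular sensor: the reference counts and radiances, the externally supplied non-linearity coefficient a2 and the count noise are invented values, and only first-order propagation of count noise (the law of propagation of uncertainty) is shown; the '+ 0' effects are not modelled.

```python
import numpy as np


def two_point_calibration(c_space, l_space, c_ict, l_ict, a2):
    """Solve for offset a0 and gain a1 from two reference views, with the
    non-linearity coefficient a2 supplied externally (e.g. pre-flight)."""
    a1 = (l_ict - l_space - a2 * (c_ict**2 - c_space**2)) / (c_ict - c_space)
    a0 = l_space - a1 * c_space - a2 * c_space**2
    return np.array([a0, a1, a2])


def earth_radiance(counts, a):
    """Quadratic calibration L = a0 + a1*C + a2*C^2 (without the '+ 0' term)."""
    a0, a1, a2 = a
    return a0 + a1 * counts + a2 * counts**2


def radiance_noise_uncertainty(counts, a, u_counts):
    """First-order propagation of count noise: u(L) = |dL/dC| * u(C)."""
    _, a1, a2 = a
    return np.abs(a1 + 2.0 * a2 * counts) * u_counts


# Hypothetical reference views: cold space (near-zero radiance) and an internal
# calibration target whose radiance would, in practice, come from its PRT temperature.
a = two_point_calibration(c_space=990.0, l_space=0.0, c_ict=400.0, l_ict=95.0, a2=2.0e-5)
earth_counts = np.array([450.0, 600.0, 800.0])
radiance = earth_radiance(earth_counts, a)
u_L = radiance_noise_uncertainty(earth_counts, a, u_counts=0.5)
print("radiance:", np.round(radiance, 2))
print("u(radiance) from count noise:", np.round(u_L, 3))
```

The sensitivity coefficient dL/dC depends on the count itself when a2 is non-zero, so even a simple noise term yields a scene-dependent radiance uncertainty.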

Changes in the gain and offset are to be expected as the sensor's space environment changes and the sensor degrades. An important source of change in the space environment is any precession of the orbit plane relative to the Sun (changing local solar time) over the mission lifetime. Material properties of components of the in-flight calibration system may degrade in time. The uncertainties associated with measured radiances will therefore evolve, and the stability of measurement may not be calculable from the satellite data alone.

Typically, 3 to 10 years after launch, the sensor will fail or the platform will be decommissioned. Multi-decadal datasets are built from sensors on a series of missions. Ideally, the sensors in a series would have identical spectral response and missions would overlap by a year or more. In practice, nominally equivalent channels have significant differences in SRFs and the overlaps between missions are not fully controllable.

Metrologists may be surprised that uncertainty estimates are not routinely included in L1 products under current EO practice. A metrological approach to L1 products would include adopting the principle that every measured value in an L1 product should have associated context-specific uncertainty information (provided per datum if necessary). This principle (for Level 1 and higher products) and a set of associated guidance were endorsed by CEOS (Committee on Earth Observation Satellites) in 2010 as part of the Quality Assurance Framework for Earth Observation (QA4EO; http://qa4eo.org/docs/QA4EO_guide.pdf), but they are still in the process of being adopted across space agencies.

2.2.3. Level 2.

Sensor channels are chosen to provide differential sensitivity to aspects of the state of the Earth's surface and atmosphere that are of interest. This differential sensitivity provides the information exploited in 'retrieval', which is inverse estimation of one or more geophysical variables from radiances. Retrieval algorithms used in EO are highly varied: analytic or implicit; empirical or physics-based; simple or complex; using only the observed radiances or employing significant external information.

The defining characteristic of L2 products is that retrieved geophysical variables are presented on the L1 image co-ordinates, i.e. at the highest spatial resolution possible.

The retrieval of L2 variables, $\boldsymbol{z}_{l,e}$, can often be written in a form

$$\boldsymbol{z}_{l,e} = R\left( \boldsymbol{y}, \boldsymbol{S}_{\epsilon}, \boldsymbol{z}_{a}, \boldsymbol{S}_{a}, \boldsymbol{b} \right) + 0 \qquad (2.2)$$

Here, $\boldsymbol{z}$ is the 'retrieved state' for a given pixel at line-element $(l, e)$; this 'state vector' may contain a single variable (at the surface or a particular atmospheric level), a vertical profile of a single variable, or a set of various variables. Along with other lower-case bold variables, it is a column vector. $R$ is the measurement function for the L2 product. This may be a simple, analytic equation, or considerably more complex. The $n_c$ values of radiance used for the retrieval are in the 'observation vector' $\boldsymbol{y} = \left[ L_{1,l,e}, \ldots, L_{n_c,l,e} \right]^{\rm T}$. $\boldsymbol{S}_{\epsilon}$ is an evaluation of the error covariance matrix of the measured radiances. $\boldsymbol{S}_{\epsilon}$ would ideally be based on uncertainty information available in the L1 product, but presently this is rarely, if ever, the case; this is an example of the need for improved metrology of Earth observations. $\boldsymbol{z}_{a}$ is a prior estimate of the state with estimated error covariance $\boldsymbol{S}_{a}$.

$\boldsymbol{S}_{\epsilon}$, $\boldsymbol{z}_{a}$ and $\boldsymbol{S}_{a}$ are explicitly present in some retrieval algorithms such as optimal estimation (Rodgers 2000), in which case they can be explicitly used to evaluate the error covariances of the retrieved state. In other cases, they are implicit and unevaluated. All retrieval algorithms have additional parameters used in retrieval, $\boldsymbol{b}$. This can be as simple as a set of weights for combining radiances, or could be tens of thousands of spectroscopic parameters embedded within a radiative transfer model (or 'forward model') used in the inversion. More generically, one could regard all the terms $\boldsymbol{S}_{\epsilon}$, $\boldsymbol{z}_{a}$, $\boldsymbol{S}_{a}$ and $\boldsymbol{b}$ distinguished above as retrieval parameters, and write the retrieval equation as $\boldsymbol{z}_{l,e} = R\left( \boldsymbol{y}, \boldsymbol{b} \right) + 0$, with $\boldsymbol{b}$ now understood to include $\boldsymbol{S}_{\epsilon}$, $\boldsymbol{z}_{a}$ and $\boldsymbol{S}_{a}$. The '+ 0' indicates that not all aspects of the inverse problem are necessarily captured by terms in the measurement function; some uncertainty is associated with those aspects of the inverse problem, even though their net effect is assumed to be zero mean. The symbols used here are somewhat adapted from widespread EO usage (e.g. Rodgers (2000)) in that we use $\boldsymbol{z}$ for the state vector rather than $\boldsymbol{x}$.
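For optimal estimation with a linear (or linearised) forward model and Gaussian errors, the retrieval has a closed form in which the radiance error covariance, the prior state and the prior error covariance explicitly determine both the retrieved state and its error covariance. The sketch below implements that standard linear solution; the two-channel, single-variable example (sensitivities, offsets and covariances) is entirely hypothetical.

```python
import numpy as np


def oe_linear_retrieval(y, K, c, S_eps, z_a, S_a):
    """Linear optimal estimation for a forward model y = K z + c.

    Returns the retrieved state and its error covariance; the result is only as
    good as S_eps (radiance error covariance) and S_a (prior error covariance).
    """
    S_eps_inv = np.linalg.inv(S_eps)
    S_hat = np.linalg.inv(K.T @ S_eps_inv @ K + np.linalg.inv(S_a))  # retrieval error covariance
    G = S_hat @ K.T @ S_eps_inv                                      # gain matrix dz/dy
    z_hat = z_a + G @ (y - (K @ z_a + c))
    return z_hat, S_hat


# Hypothetical two-channel, one-variable (SST) example; numbers are illustrative only.
K = np.array([[0.9], [0.8]])                      # dBT/dSST for each channel
c = np.array([28.0, 55.0])                        # forward-model offsets, K
S_eps = 0.15**2 * np.array([[1.0, 0.5],
                            [0.5, 1.0]])          # correlated BT errors, K^2
z_a = np.array([290.0])                           # prior SST, K
S_a = np.array([[2.0**2]])                        # prior error variance, K^2

y = K @ np.array([293.0]) + c                     # noise-free simulated observation
z_hat, S_hat = oe_linear_retrieval(y, K, c, S_eps, z_a, S_a)
print(f"retrieved SST = {z_hat[0]:.2f} K, u = {np.sqrt(S_hat[0, 0]):.3f} K")
```

Real retrievals typically use a non-linear radiative transfer model and iterate; note also that the retrieval uncertainty here depends directly on the assumed radiance error covariance, including its off-diagonal (correlation) terms.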

The expression in equation (2.2) does not encompass all possibilities. The retrieval for pixel (l, e) may use radiances in other pixels in the image, to exploit spatial coherence in state. Classification or clustering of pixels may be performed prior to retrieval, for example to distinguish whether a water body or a particular class of land cover is present; different retrievals may then be applied to pixels according to their class or cluster.

Users of L2 products are presently accustomed to many L2 products providing limited or no uncertainty information, and may rely instead on validation results reported in the literature. Users also often interrogate pixel-level quality indicators for indications of which values are more trustworthy than others. Quality indicators have different meanings in different L2 products. They may indicate the likely magnitude of uncertainty, or reflect the degree of confidence in the classification of the pixel, or a contextual judgement about the validity (in some sense) of the retrieval, or a combination of such factors. A metrological approach to L2 products would include adopting the principle that every geophysical estimate should have associated with it context-specific uncertainty information. EO experts working on several ECVs in the European Space Agency Climate Change Initiative (Hollmann et al 2013) have reached consensus with regard to best practice for geophysical products (Merchant et al 2017), and that consensus is consistent with this view.

2.2.4. L3 (gridded data).

The locations of geophysical observations in L2 do not exactly repeat between orbits, which is not always convenient. L2 product data volumes may also be large (many terabytes). Therefore L2 data are used where maximum spatial resolution is needed, but for applications tolerant of lower spatiotemporal sampling, L3 products are generated that are easier to use and have smaller volumes.

L3 products are gridded products, made by aggregating L2 values in space and/or time on a regular space-time grid. Aggregation involves the averaging of the L2 data obtained within the space-time volume of a given cell. The averaging may be weighted: for example, a weight of 0 may be applied to data whose L2 quality indicator is lower than that of the best quality data available within the cell. The formula used to obtain the gridded product value is usually a relatively simple equation, similar to:

$$\bar{z} = \frac{\sum_m w_m z_m}{\sum_m w_m} + 0$$

where the index $m$ runs over all the L2 measured values within the latitude-longitude-time interval of the cell, $w_m$ is the weight of the pixel value $z_m$, and $\bar{z}$ is the cell value in the L3 product, which represents the estimated mean value of the measurand across a spatio-temporal cell.

The uncertainty in the '+ 0' term here includes sampling uncertainty (e.g. Bulgin et al (2016)), also known as representativity. Geophysical variability is continuous in space and time, and the L2 values sample this variability at certain, usually irregular, locations and/or times within the cell. Assuming the sampling is independent of the variability, the resulting sampling error has an expectation of zero, but can be drawn from an error distribution whose width is not negligible compared to the uncertainty in the cell value $\bar{z}$ arising directly from the uncertainties in the $z_m$.
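The zero-mean but non-negligible sampling error can be illustrated with a minimal simulation. This is a sketch under stated assumptions, not a method from this paper: the 'true' field within one cell is a hypothetical smooth function of position and time, the cell mean is approximated on a fine grid, sample locations are drawn uniformly and independently of the field, and no measurement error is added, so the spread of the errors is purely sampling uncertainty.

```python
import numpy as np

rng = np.random.default_rng(42)


def true_field(x, t):
    """Hypothetical 'true' field in one cell (K); x and t scaled to [0, 1)."""
    return 290.0 + 0.5 * np.sin(2 * np.pi * x) + 0.3 * np.cos(2 * np.pi * t)


# Cell-mean measurand, approximated on a fine regular grid over the cell
grid = np.linspace(0.0, 1.0, 200, endpoint=False)
xg, tg = np.meshgrid(grid, grid)
cell_mean = true_field(xg, tg).mean()


def sampling_error(n_obs):
    """Error of an n_obs-sample mean; locations independent of the field,
    and no measurement error added."""
    x = rng.uniform(0.0, 1.0, n_obs)
    t = rng.uniform(0.0, 1.0, n_obs)
    return true_field(x, t).mean() - cell_mean


errors = np.array([sampling_error(5) for _ in range(20000)])
print(f"mean sampling error:  {errors.mean():+.4f} K")
print(f"sampling uncertainty: {errors.std(ddof=1):.4f} K")
```

With five samples per cell the mean error is close to zero, while its standard deviation is a substantial fraction of the field's own variability; increasing `n_obs` narrows the distribution.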

A metrological approach to L3 products would involve the following principles: when creating aggregated products, propagate uncertainty appropriately, accounting for error correlation; clearly define the intended measurand represented by an aggregated observation and estimate the uncertainty from representativity/sampling accordingly. General solutions to these challenges are yet to be established and applied in practice.
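The first of these principles can be sketched for the weighted cell average above, assuming the L2 error covariance is known in the form $\boldsymbol{U}\boldsymbol{R}\boldsymbol{U}$ (standard uncertainties and error correlation matrix). The function `l3_cell_mean` is a hypothetical helper; the sensitivity of $\bar{z}$ to each $z_m$ is $w_m/\sum_m w_m$, so the propagated variance is $\boldsymbol{c}^{\rm T}\boldsymbol{S}\boldsymbol{c}$. The sampling ('+ 0') term is deliberately left out.

```python
import numpy as np


def l3_cell_mean(z, w, u, r):
    """Weighted L3 cell mean of L2 values and its propagated standard uncertainty.

    z: L2 values in the cell; w: weights (0 excludes a value);
    u: standard uncertainties of z; r: error correlation matrix of z.
    The '+ 0' sampling term is not included.
    """
    z, w, u = (np.asarray(a, dtype=float) for a in (z, w, u))
    c = w / w.sum()                                             # d(zbar)/d(z_m)
    zbar = c @ z
    s = np.diag(u) @ np.asarray(r, dtype=float) @ np.diag(u)    # S = U R U
    return zbar, float(np.sqrt(c @ s @ c))                      # u^2 = c^T S c


z = [290.1, 290.3, 289.9, 290.2]          # illustrative L2 values (K)
w = [1.0, 1.0, 1.0, 1.0]
u = [0.3, 0.3, 0.3, 0.3]
zbar, u_indep = l3_cell_mean(z, w, u, np.eye(4))        # independent errors
_, u_common = l3_cell_mean(z, w, u, np.ones((4, 4)))    # fully common errors
print(f"cell mean {zbar:.3f} K; u independent {u_indep:.3f} K; u common {u_common:.3f} K")
```

For four values with 0.3 K uncertainty each, averaging reduces the uncertainty to 0.15 K only if the errors are independent; with fully common errors it stays at 0.3 K.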

2.2.5. Level 4.

Some applications require gridded data that are complete (gap free in space and time). An example would be where a climate or other environmental model is run with one or more variables being prescribed (i.e. imposed rather than calculated within the model). This may be done to explore the response of other variables to the 'real' history of the prescribed variable. A common example is running the atmospheric circulation of a global climate model while prescribing a spatially complete sea surface temperature field as a boundary condition.

L2 and L3 products are not gap free, and therefore L4 production requires additional processing involving spatio-temporal interpolation. L2 and L3 data from many satellite sensors may be used as inputs, and L4 analyses (as they are called) may also ingest values measured in situ. There are many possibilities, and the metrology of this level of processing will not be explored further in this paper.

2.3. Additional remarks: processing chain, SI references

This discussion of the nature of satellite measurements and concepts of processing levels is inevitably simplified. We have focussed on passive remote sensing concepts and generic measurement functions for data at each level. EO practitioners recognise a range of sub-categories within the nomenclature of processing levels, and these are not necessarily standardised between different agencies and communities of practice. Pragmatism and the particularities of different missions or applications can lead to products and practices that do not neatly fit the descriptions in this overview.

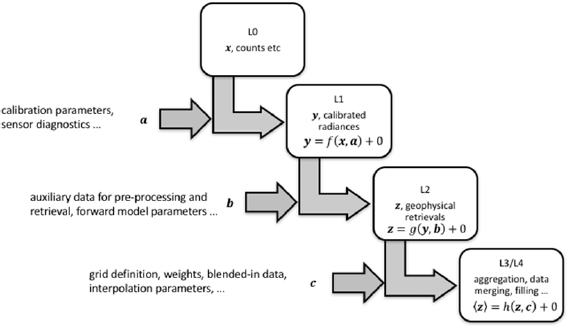

Nonetheless, the overall picture is valid: EO products are obtained by a sequence of data transformations, with higher level products being created from lower level datasets. At each transformation, uncertainty intrinsic to the lower level data propagates to the higher level, and additional factors may also introduce uncertainty. This simplified view is shown in figure 2.

Figure 2. Relationship of satellite data processing levels from level 0 to 3+. In each transformation, data from the lower level (e.g. $\boldsymbol{x}$ at L0, $\boldsymbol{y}$ at L1, $z$ at L2) are transformed to the next level by a function (or process), e.g. $f$ from L0 to L1 and $g$ from L1 to L2. The transformation function or process uses the lower-level data plus auxiliary parameters and data, e.g. the calibration parameters, $\boldsymbol{A}$, and the retrieval parameters, $\boldsymbol{B}$. In each transformation, uncertainty in lower-level data propagates to the higher level. The auxiliary parameters, data and the assumptions implicit in the function/process (represented by '+ 0') introduce new sources of uncertainty. Some aspects of the transformation may reduce uncertainty, e.g. in averaging over values subject to independent errors.

To our knowledge, no complete, traceable analysis and propagation of uncertainty from L0 or L1 to L2, L3 or L4 exists for any current EO processing chain.

Since a classic concern of laboratory-based metrology is to trace measurements to SI standards with a stated uncertainty, we need to address the role of SI standards in satellite measurements. SI-traceable reference standards have not yet been established in orbit, although concepts have been proposed to bring in-orbit SI traceability to future EO missions (e.g. Fox et al (2011) and Topham et al (2015)). Currently, therefore, sensors are characterised and data are validated using sensor calibration systems (e.g. reference sources or reflectors that have been characterised pre-flight) and in situ measurements (e.g. ground measurements of geophysical quantities that can be compared with those derived from satellite EO measurements). Even where sensor calibration systems have been characterised pre-flight in a fully SI-traceable manner, traceability in orbit is compromised by the stresses of launch, the operation of sensors in the harsh environment of space, and the degradation of sensor components over time. On-the-ground validation data for geophysical quantities retrieved from EO measurements also often lack established SI-traceability, although some agencies are now investing in fiducial reference measurements (Thorne et al 2018), in particular to support the Copernicus space programme. SI-traceability for reflectance is being developed within the instrumented calibration network RadCalNet (www.radcalnet.org). For EO-derived sea surface temperature (SST), there are efforts towards SI-traceable at-surface references for radiometric SST using ship-borne radiometers (FRM4STS, http://www.frm4sts.org/). For atmospheric variables, GRUAN (the GCOS Reference Upper-Air Network) provides observations traceable to an SI unit or an internationally accepted standard (Bodeker et al 2016).

Even where SI-traceability is being established via ground truth, uncertainty in that traceability chain may be larger than desirable for applications such as climate research. Other challenges include accounting for differences between what the ground instrument is measuring and what the satellite measures, which can include problems of scale of sampling ('point-to-pixel' effects) and differences in the detailed meaning of the measurand between satellite and in situ systems.

What, then, is the relevance of metrology to historical Earth observations obtained in the absence of SI traceability either in orbit or on the ground? Historical sensors provide critical information about the state of the planet during a period of relatively rapid climatic change. Even without full SI-traceability, metrology has a key role, working with EO practitioners, in rigorously characterising measurement errors, so that a historical EO dataset can be used appropriately for science in the light of well-understood uncertainty and stability estimates. Establishing the stability of an EO time series in relation to a community-agreed reference is a crucial and essentially metrological task in support of defensible interpretation of observed long-term change. This process can also lead to an improved recalibration of historical sensors, as the metrological review provides new insight into the physical processes affecting the sensor.

3. Uncertainty analysis for Earth observation

3.1. Introductory comments on metrology

The metrological community, through the Metre Convention and the International System of Units (SI), has achieved century-long stability and international consistency of measurement through three key principles: traceability, uncertainty analysis and comparison.

Metrological traceability is a property of a measurement that relates the measured value to a reference through an unbroken chain of calibrations or comparisons. Uncertainty analysis is the review of all sources of uncertainty and the propagation of those uncertainties through the traceability chain. Traceability and uncertainty analysis at National Metrology Institutes (NMIs) are rigorously audited through the Mutual Recognition Arrangement (Comité international des poids et mesures 1999), which involves formal international comparisons.

Here we consider selected core principles of metrology and how they apply to EO. These core principles form the basis of the QA4EO framework endorsed by CEOS in 2010. In this paper we expand on that guidance, providing more detailed practical examples of implementation and, in doing so, extending the concepts to address some specific EO issues.

Key Uncertainty Concepts for EO

3.1.1. Error, uncertainty, effect.

The Guide to the Expression of Uncertainty in Measurement (GUM 2008; hereafter 'the GUM'), and its supplements provide guidance on how to express, determine, combine and propagate uncertainty. The GUM and its supplements are maintained by the JCGM (Joint Committee for Guides in Metrology), a joint committee of all the relevant standards organisations and the International Bureau of Weights and Measures, the BIPM.

The GUM defines the uncertainty of measurement as a:

parameter, associated with the result of a measurement, that characterizes the dispersion of the values that could reasonably be attributed to the measurand.

Uncertainty is non-negative. The 'standard uncertainty' is the measurement uncertainty expressed as a standard deviation. Evaluations of uncertainty in this paper refer throughout to standard uncertainty.

The error of measurement is defined in the GUM (section 2.2.19) as the:

result of a measurement minus a true value of the measurand

Since the true value of the measurand is unknown, the measurement error is unknown. However, by analysing the sources of errors in an EO measurement, the uncertainty in the measured value can be evaluated. This is the topic of section 3.2 below.

Estimating the uncertainty involves complex considerations, because any measurement is subject to several different sources of error, or 'effects'. The approach to uncertainty analysis presented below is to evaluate the uncertainty arising from different effects and combine these to determine the uncertainty associated with a measured value.

3.1.2. Independent, structured and common errors, correlation.

The measured value in each pixel of an EO image is the result of a sequence of steps and transformations. In transforming from raw data (L0) to calibrated radiances (L1), many measured values relevant to calibration measurements may be combined. In transforming L1 radiances to L2 geophysical products (figure 2), radiances from different spectral bands may be used. In L2 to L3+ processing, data across different pixels are then combined. Where correlation exists between errors in different measured values (different wavelengths and/or pixels), this error correlation needs to be considered in the uncertainty analysis.

The GUM defines systematic and random errors, concepts that are widely used in science.

Random errors are errors manifesting independence: the error in one instance is in no way predictable from knowledge of the error in another instance. A complication arises in EO imagery when one instance of a parameter in the radiance measurement function is used in the calculation of the Earth radiance across many pixels. That component of the error in the radiance image is then correlated across pixels, even though the originating effect is random. Put another way, the originating random error contributes errors with a particular structure to the image.
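A minimal Monte Carlo sketch of this point, assuming a hypothetical sensor whose gain is estimated once per scan line from noisy calibration views and then applied to every pixel in that line. The gain error is purely random from scan to scan, yet it produces errors that are fully correlated along a scan line and uncorrelated between lines. All numbers are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n_trials, n_scans, n_pix = 20000, 3, 5

true_gain = 0.1                                # hypothetical radiance per count
counts = np.full((n_scans, n_pix), 500.0)      # identical Earth-view counts

# One noisy gain estimate per scan line (a random effect), reused for every
# pixel in that line: shape (trials, scans, 1) broadcasts over pixels.
gain_est = true_gain + rng.normal(0.0, 0.001, size=(n_trials, n_scans, 1))
radiance_err = (gain_est - true_gain) * counts  # shape (trials, scans, pixels)

# Empirical error correlation between all pixels across the trials
corr = np.corrcoef(radiance_err.reshape(n_trials, -1), rowvar=False)
print(np.round(corr[:6, :6], 2))  # pixels 0-4: scan line 0; pixel 5: scan line 1
```

The printed matrix shows correlations of 1 among the five pixels of the first scan line and values near 0 between those pixels and the first pixel of the next line.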

Systematic errors are those that could in principle be corrected for if we had sufficient information to do so: that is, they arise from unknowns that could in principle be estimated rather than from chance processes. All systematic errors in EO are structured in that there is a pattern of influence on multiple data. They include, but are not limited to, effects that are constant for a significant proportion of a satellite mission—i.e. biases, for which the structure is a simple error in common.

When considering EO imagery, it can be useful to categorise effects primarily according to their cross-pixel error correlation properties, as independent, structured or common effects.

3.1.2.1. Independent errors.

Independent errors arise from random effects causing errors that manifest independence between pixels, such that the error in $y_{l,e}$ is in no way predictable from knowledge of the error in $y_{l',e'}$ for $(l',e') \neq (l,e)$, were that knowledge available. Independent errors therefore arise from random effects operating on a pixel level, the classic example being detector noise.

3.1.2.2. Structured errors.

Structured errors arise from effects that influence more than one measured value in the satellite image, but are not in common across the whole image. The originating effect may be random or systematic (and acting on a subset of pixels), but in either case the resulting errors are not independent, and may even be perfectly correlated across the affected pixels. Because different pixels or channels may have different sensitivities to the originating effect, the error (and associated uncertainty) in the measured radiance can differ in magnitude between them, even when the errors are perfectly correlated. Structured errors are therefore complex and, at the same time, important to understand, because their error correlation properties affect how uncertainty propagates to higher-level data.

3.1.2.3. Common errors.

Common errors are constant (or nearly so) across the satellite image, and may be shared across the measured radiances for a significant proportion of a satellite mission. Common errors might typically be referred to as biases in the measured radiances. Effects such as the progressive degradation of a sensor operating in space mean that such biases may slowly change.
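The three categories can be expressed as error correlation matrices across pixels. The sketch below assumes a hypothetical row of eight pixels from two scan lines, each pixel with 0.2 K uncertainty, and compares the uncertainty of their unweighted mean under each category; 'structured' is modelled, as one simple assumption, as full correlation within a scan line and none between lines.

```python
import numpy as np

n_lines, n_per_line = 2, 4                    # hypothetical: 2 scan lines x 4 pixels
n = n_lines * n_per_line
line_id = np.repeat(np.arange(n_lines), n_per_line)

r_matrices = {
    "independent": np.eye(n),
    # structured: fully correlated within a scan line, independent between lines
    "structured": (line_id[:, None] == line_id[None, :]).astype(float),
    "common": np.ones((n, n)),
}

u = np.full(n, 0.2)                           # per-pixel standard uncertainty (K)
c = np.full(n, 1.0 / n)                       # sensitivities of an unweighted mean

u_mean = {}
for name, r in r_matrices.items():
    s = np.outer(u, u) * r                    # elementwise, equivalent to U R U
    u_mean[name] = float(np.sqrt(c @ s @ c))
    print(f"{name:12s} u(mean) = {u_mean[name]:.4f} K")
```

The results are 0.2/√8 ≈ 0.0707 K, 0.2/√2 ≈ 0.1414 K and 0.2 K respectively: the error correlation category matters as much as the per-pixel uncertainty once data are aggregated.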

3.1.2.4. Notes on usage of 'error' and 'uncertainty'.

In this section, we have classified the effects by the correlation structure of the errors they cause. Since uncertainty describes the dispersion of errors, and not the nature of the errors, uncertainty should not be described as random, systematic, independent, etc. Metrologists generally use phrases such as 'the uncertainty associated with random effects' rather than 'random uncertainty'.

Some metrologists avoid the word 'error' altogether, to prevent the confusion arising from incorrect usage of 'error' and 'uncertainty' in much scientific literature. There is often no ambiguity in the case of repeated measurements in a laboratory, where the dispersion in measured values arises solely from the dispersion of measurement errors. But, in EO, it is essential to distinguish the dispersion in measured radiances due to geophysical variability (signals of interest) from the dispersion due to measurement errors. To maintain that distinction, we find it necessary to use terms such as 'error correlation' and 'error covariance' intentionally and consistently.

3.2. Uncertainty analysis

3.2.1. Overview.

Uncertainty analysis in the GUM begins with modelling the measurement, i.e. linking the measurand to the input quantities from which it is derived. A generic measurement model for an L1 radiance would be

$$Y = f\left(X_1, \ldots, X_n, \boldsymbol{A}\right) + \Delta \qquad (3.1)$$

where: $X_1, \ldots, X_n$ are input quantities; $\boldsymbol{A}$ is the vector of calibration parameters (which are also input quantities but are usefully distinguished); and $\Delta$ is an input quantity introduced to represent any inadequacy of the function $f$ to represent all phenomena that affect the measurand.

The equations, such as equation (3.1), used to populate L1 products, evaluate the measurand (radiance) using estimates of the input quantities. In the GUM, the convention is for estimates to be represented with the lower-case characters corresponding to the quantities written in upper case. Equation (2.1) is then seen as a particular case of the expression by which the measurand is estimated:

$$y = f\left(x_1, \ldots, x_n, \boldsymbol{a}\right) + 0$$

where the input estimates include the recorded sensor counts, etc. This clarifies the meaning of the '+ 0' term previously introduced: 0 is our best estimate of the inadequacy term $\Delta$ in equation (3.1), which is the expectation of $\Delta$ (assuming we are using the best measurement model we can formulate).

The uncertainty in the measured value is derived from the evaluation of the uncertainty in each input estimate (or, strictly, from the distribution of possible values of each input quantity $X_i$ given its estimate $x_i$). The uncertainty in all input estimates, including the calibration parameters and the inadequacy term $\Delta$, is relevant. Evaluation of the uncertainty in $y$ means propagation of these uncertainties (or, strictly, distributions) through the measurement model.

The GUM and its supplements describe both the 'Law of Propagation of Uncertainty' (hereafter, LPU) and Monte Carlo methods of uncertainty propagation.

The LPU propagates standard uncertainties for the input quantities through a locally linear, first-order Taylor series expansion of the measurement function to obtain the standard uncertainty associated with the estimate, $y$, of the measurand. Higher order approximations can be applied if necessary.

Monte Carlo methods approximate the input probability distributions by finite sets of random draws from those distributions and propagate the sets of input values through the measurement function to obtain a set of output values regarded as random draws from the probability distribution of the measurand. The output values are then analysed statistically, for example to obtain expectation values, standard deviations and error covariances. The measurement function in this case need not be linear, nor even expressible algebraically. Steps such as inverse retrievals and iterative processes can be addressed in this way. The input probability distributions can be as complex as needed, and can include distributions for digitised quantities, which are very common in EO, where signals are digitised for on-board recording and transmission to ground.

Monte Carlo methods can provide information about the shape of the output probability distribution for the measurand, deal better with highly non-linear measurement functions and with more complex probability distributions, and can be the only option for models that cannot be written algebraically. However, they are computationally more expensive, which is an important consideration with the very high data volumes of EO.
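The two approaches can be compared on a simple case. This sketch assumes a hypothetical linear calibration $L = g\,(C_E - C_S)$ with a normally distributed gain, and Earth- and space-view counts whose digitisation is represented by a rectangular distribution of ±0.5 count (standard uncertainty $1/\sqrt{12}$ count). Monte Carlo draws each input from its own distribution; the LPU uses first-order sensitivities.

```python
import numpy as np

rng = np.random.default_rng(1)
n_draws = 200_000

# Hypothetical two-point calibration model: L = g * (C_E - C_S)
g, u_g = 0.05, 0.0005          # gain (radiance per count) and its standard uncertainty
c_e, c_s = 600.0, 40.0         # recorded Earth-view and space-view counts
u_dig = 1.0 / np.sqrt(12.0)    # digitisation: rectangular +/-0.5 count

# Monte Carlo: draw every input from its own distribution, then propagate
g_draws = rng.normal(g, u_g, n_draws)
ce_draws = c_e + rng.uniform(-0.5, 0.5, n_draws)
cs_draws = c_s + rng.uniform(-0.5, 0.5, n_draws)
l_draws = g_draws * (ce_draws - cs_draws)
u_mc = l_draws.std(ddof=1)

# LPU: first-order sensitivities dL/dg = C_E - C_S, dL/dC_E = g, dL/dC_S = -g
u_lpu = np.sqrt(((c_e - c_s) * u_g) ** 2 + 2.0 * (g * u_dig) ** 2)

print(f"L = {g * (c_e - c_s):.3f}; u_MC = {u_mc:.4f}; u_LPU = {u_lpu:.4f}")
```

Because this function is almost linear in its inputs, the two estimates agree closely; the Monte Carlo draws in `l_draws` additionally describe the shape of the output distribution, which the LPU does not provide.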

Often uncertainty analyses will use a combination of Monte Carlo methods and the LPU, for example by using Monte Carlo methods to determine the uncertainty for a particular quantity, which is then used as an input to LPU in a subsequent uncertainty analysis. In this section, we discuss and illustrate the LPU, while acknowledging the role of Monte Carlo methods.

3.2.2. Propagation of uncertainty: single measurand.

The LPU for a single-variable measurand evaluated from $n_j$ input quantities with values $\boldsymbol{x}^{\rm T} = \left[x_1, \ldots, x_{n_j}\right]$ can be written as

$$u^2(y) = \boldsymbol{c}^{\rm T}\,\boldsymbol{S}(\boldsymbol{x})\,\boldsymbol{c}$$

for a generic measurement function of the form $y = f(\boldsymbol{x})$. Here, $\boldsymbol{c}^{\rm T} = \left[c_1, \ldots, c_{n_j}\right]$ contains the sensitivity coefficients of the measurand with respect to the input quantities. The input error covariance matrix,

$$\boldsymbol{S}(\boldsymbol{x}) = \begin{bmatrix} u^2(x_1) & u(x_1, x_2) & \cdots \\ u(x_2, x_1) & u^2(x_2) & \cdots \\ \vdots & \vdots & \ddots \end{bmatrix},$$

is square. Each row and each column corresponds to one input quantity. The elements represent the error covariance associated with pairs of input quantities, and, down the diagonal, the error variance (uncertainty squared) of each input quantity.
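Written out term by term, and using $u(x_i, x_k) = r_{i,k}\,u(x_i)\,u(x_k)$ for the error correlation coefficient $r_{i,k}$, the matrix form is equivalent to the familiar summation form of the GUM:

```latex
u^2(y) = \boldsymbol{c}^{\rm T}\boldsymbol{S}(\boldsymbol{x})\,\boldsymbol{c}
       = \sum_{i=1}^{n_j} c_i^2\,u^2(x_i)
       + 2\sum_{i=1}^{n_j-1}\sum_{k=i+1}^{n_j} c_i\,c_k\,r_{i,k}\,u(x_i)\,u(x_k)
```

When all $r_{i,k} = 0$, only the first sum remains.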

The sensitivity coefficients are the first-order derivatives of the measurement function evaluated at the estimates of the input quantities, and are denoted by $c_i = \partial f/\partial x_i$. They can be calculated analytically when the measurement function can formally be differentiated, approximated numerically using finite-difference methods, or determined experimentally.
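A minimal sketch of the numerical route, assuming central finite differences with a relative step (the helper names `sensitivity_coefficients` and `lpu_single` are hypothetical), applied to an illustrative product $y = x_1 x_2$ with correlated input errors. For this function the analytical coefficients are $c_1 = x_2$ and $c_2 = x_1$, which provides a check.

```python
import numpy as np


def sensitivity_coefficients(f, x, rel_step=1e-6):
    """Central finite-difference approximation to c_i = df/dx_i at the estimates x."""
    x = np.asarray(x, dtype=float)
    c = np.empty_like(x)
    for i in range(x.size):
        h = rel_step * max(abs(x[i]), 1.0)
        x_plus, x_minus = x.copy(), x.copy()
        x_plus[i] += h
        x_minus[i] -= h
        c[i] = (f(x_plus) - f(x_minus)) / (2.0 * h)
    return c


def lpu_single(f, x, s_x):
    """Law of propagation of uncertainty for one measurand: u(y) = sqrt(c^T S(x) c)."""
    c = sensitivity_coefficients(f, x)
    return float(np.sqrt(c @ s_x @ c))


def product(x):
    """Illustrative measurement function y = x1 * x2."""
    return x[0] * x[1]


x = np.array([2.0, 3.0])
u = np.array([0.1, 0.2])
r12 = 0.5                                   # assumed error correlation of x1 and x2
s_x = np.array([[u[0] ** 2, r12 * u[0] * u[1]],
                [r12 * u[0] * u[1], u[1] ** 2]])
print(f"c = {sensitivity_coefficients(product, x)}, u(y) = {lpu_single(product, x, s_x):.4f}")
```

Here $u^2(y) = 9(0.1)^2 + 4(0.2)^2 + 2 \cdot 3 \cdot 2 \cdot 0.5 \cdot 0.1 \cdot 0.2 = 0.37$, so $u(y) \approx 0.608$; the covariance term accounts for roughly a third of the variance.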

Using the symbol conventions per satellite processing level in figure 2, consider a single-pixel L2 product calculated using a function $z = g\left(y_1, \ldots, y_{n_c}, \boldsymbol{b}\right)$, which combines measured Earth radiances, $y_1, \ldots, y_{n_c}$, in $n_c$ spectral channels and uses estimates, $\boldsymbol{b}$, of the retrieval parameters. Then, the LPU takes the form $u^2(z) = \boldsymbol{c}^{\rm T}\,\boldsymbol{S}(\boldsymbol{y})\,\boldsymbol{c}$, where $\boldsymbol{y}^{\rm T} = \left[y_1, \ldots, y_{n_c}, \boldsymbol{b}^{\rm T}\right]$. The vector of sensitivity coefficients relates the Earth radiance values in different spectral channels and the retrieval parameters to the retrieved L2 variable (see section 2.2.3):

$$\boldsymbol{c}^{\rm T} = \left[\frac{\partial g}{\partial y_1}, \ldots, \frac{\partial g}{\partial y_{n_c}}, \frac{\partial g}{\partial \boldsymbol{b}^{\rm T}}\right]$$

and $\left[\boldsymbol{S}(\boldsymbol{y})\right]_{1,1} = u^2(y_1)$, $\left[\boldsymbol{S}(\boldsymbol{y})\right]_{1,2} = u(y_1, y_2)$, etc. These derivatives are evaluated at the estimates (measured values) of the corresponding input quantities.
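As an illustration, consider a hypothetical linear two-channel retrieval $z = b_0 + b_1 y_1 + b_2 (y_1 - y_2)$, a form loosely resembling split-window algorithms; the coefficients and channel values (treated here as brightness temperatures in kelvin) are invented. The sketch builds $\boldsymbol{c}$ analytically for $\boldsymbol{y}^{\rm T} = [y_1, y_2, \boldsymbol{b}^{\rm T}]$ and evaluates $u(z)$ with and without inter-channel error correlation; the coefficient uncertainties are set to zero for simplicity but would enter $\boldsymbol{S}(\boldsymbol{y})$ in the same way.

```python
import numpy as np


def retrieval(y1, y2, b):
    """Hypothetical linear two-channel retrieval z = b0 + b1*y1 + b2*(y1 - y2)."""
    return b[0] + b[1] * y1 + b[2] * (y1 - y2)


y1, y2 = 290.0, 288.5            # hypothetical channel values (brightness temperatures, K)
b = np.array([0.5, 1.0, 2.0])    # hypothetical retrieval coefficients
u_ch = np.array([0.1, 0.1])      # channel standard uncertainties (K)
u_b = np.zeros(3)                # coefficient uncertainties, set to zero for simplicity

# Analytic sensitivity vector for the input vector y = [y1, y2, b0, b1, b2]
c = np.array([b[1] + b[2], -b[2], 1.0, y1, y1 - y2])


def u_retrieval(r_channels):
    """Propagated u(z) for a given error correlation between the two channels."""
    u_all = np.concatenate([u_ch, u_b])
    r = np.eye(5)
    r[0, 1] = r[1, 0] = r_channels
    s_y = np.outer(u_all, u_all) * r          # S(y) = U R U, written elementwise
    return float(np.sqrt(c @ s_y @ c))


z = retrieval(y1, y2, b)
print(f"z = {z:.2f} K")
print(f"u(z), independent channel errors:    {u_retrieval(0.0):.3f} K")
print(f"u(z), channel error correlation 0.8: {u_retrieval(0.8):.3f} K")
```

Because this retrieval weights the channel difference, positively correlated channel errors partly cancel and the uncertainty falls from about 0.36 K to about 0.18 K; ignoring such correlation would misstate $u(z)$.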

3.2.3. Propagation of uncertainty: multiple measurands.

The LPU can be extended to multiple measurands (output quantities) (GUM-102 2011). Consider the case of retrieval of L2 variables from radiances, $\boldsymbol{Z} = \boldsymbol{g}(\boldsymbol{Y})$, where $\boldsymbol{Z}$ consists of several geophysical quantities of interest obtained jointly from a common set of multi-channel radiances, $\boldsymbol{Y}$. The propagated uncertainties associated with the values $\boldsymbol{z}$ are the contents of the following error covariance matrix:

$$\boldsymbol{S}(\boldsymbol{z}) = \boldsymbol{C}\,\boldsymbol{S}(\boldsymbol{y})\,\boldsymbol{C}^{\rm T} = \boldsymbol{C}\,\boldsymbol{U}\boldsymbol{R}\boldsymbol{U}\,\boldsymbol{C}^{\rm T} \qquad (3.5)$$

Here, $\boldsymbol{S}(\boldsymbol{y}) = \boldsymbol{U}\boldsymbol{R}\boldsymbol{U}$ is the error covariance matrix for the input quantities, expressed in terms of a diagonal matrix of input standard uncertainties, $\boldsymbol{U}$, and a matrix of error correlation coefficients between input quantities,

$$\boldsymbol{R} = \begin{bmatrix} 1 & r_{1,2} & \cdots \\ r_{2,1} & 1 & \cdots \\ \vdots & \vdots & \ddots \end{bmatrix}.$$

The sensitivity coefficient matrix has elements $\left[\boldsymbol{C}\right]_{n,m} = \partial g_n/\partial y_m$, i.e. $\boldsymbol{C} = \partial \boldsymbol{g}/\partial \boldsymbol{y}^{\rm T}$, evaluated at the estimates of the corresponding input quantities. The output error covariance matrix, $\boldsymbol{S}(\boldsymbol{z})$, contains the error covariance of the estimates of the measurands. Even if none of the input terms has correlated errors (and thus $\boldsymbol{R}$ is diagonal), $\boldsymbol{S}(\boldsymbol{z})$ will generally still be non-diagonal if (as is likely) different output quantities depend on the same input quantities. A summation of the impact of all error effects is implicit in the summation over all the input quantities.
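A minimal numerical sketch of this propagation, assuming an invented linearised sensitivity matrix for two geophysical quantities retrieved jointly from three channels whose errors are independent ($\boldsymbol{R} = \boldsymbol{I}$):

```python
import numpy as np

# Hypothetical linearised joint retrieval of two quantities from three channels:
# C[n, m] = dg_n/dy_m, evaluated at the estimates (values are invented).
C = np.array([[1.2, -0.4, 0.1],
              [0.3, 0.9, -0.5]])
U = np.diag([0.1, 0.15, 0.2])    # channel standard uncertainties
R = np.eye(3)                    # no inter-channel error correlation

S_y = U @ R @ U                  # input error covariance
S_z = C @ S_y @ C.T              # output error covariance

u_z = np.sqrt(np.diag(S_z))
r_z = S_z[0, 1] / (u_z[0] * u_z[1])
print("S(z) =\n", S_z)
print(f"u(z1) = {u_z[0]:.4f}, u(z2) = {u_z[1]:.4f}, error correlation = {r_z:.3f}")
```

The off-diagonal element of $\boldsymbol{S}(\boldsymbol{z})$ is -0.0065 rather than zero (an error correlation of about -0.28): the two retrieved quantities have correlated errors because both depend on the same channels.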

A subtly different restatement of the LPU will prove useful in section 3.3, where the contribution to the overall error covariance matrix will be calculated one effect at a time and then summed over the effects explicitly. This approach will be adopted for considering the error covariances between different pixels in an image and between different channels at a given pixel location. This situation differs from the multiple-measurand case above in that now each pixel/channel radiance (output value) is the same function of its own set of input values, i.e. $\boldsymbol{y}^{\rm T} = \left[f(\boldsymbol{x}_1), f(\boldsymbol{x}_2), \ldots\right]$. In this case, $\left[\boldsymbol{C}\right]_{n,m} = 0$ for $n \neq m$, and $\boldsymbol{C}$ is square and diagonal. For a single effect, $k$, applying to measurement function term $X_i$, the contributed error covariance is $\boldsymbol{S}_k(\boldsymbol{y}) = \boldsymbol{C}_i\,\boldsymbol{U}_k\boldsymbol{R}_k\boldsymbol{U}_k\,\boldsymbol{C}_i^{\rm T}$. This looks the same as equation (3.5), but the meanings and structures of the matrices are a particular case. These points will be explained more fully below.
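The one-effect-at-a-time sum can be sketched for a hypothetical four-pixel radiance model $y_p = g\,(C_{E,p} - C_S)$ with two effects: detector noise in the Earth-view counts (independent, $\boldsymbol{R}_k = \boldsymbol{I}$) and gain uncertainty shared by all pixels (common, $\boldsymbol{R}_k$ a matrix of ones). Each effect contributes $\boldsymbol{C}\,\boldsymbol{U}_k\boldsymbol{R}_k\boldsymbol{U}_k\,\boldsymbol{C}^{\rm T}$ with a diagonal $\boldsymbol{C}$ of per-pixel sensitivities; the helper `effect_covariance` and all numbers are illustrative assumptions.

```python
import numpy as np

g, c_s = 0.05, 40.0                            # hypothetical gain and space-view counts
c_e = np.array([500.0, 550.0, 600.0, 650.0])   # Earth-view counts, one per pixel
n = c_e.size


def effect_covariance(sens, u, r):
    """Error covariance from one effect: C U R U C^T with C = diag(sens)."""
    C = np.diag(sens)
    U = np.diag(u)
    return C @ U @ r @ U @ C.T


# Effect 1: detector noise in Earth-view counts (2 counts), independent between pixels
S_noise = effect_covariance(np.full(n, g), np.full(n, 2.0), np.eye(n))
# Effect 2: gain uncertainty, a single value shared by all pixels (common)
S_gain = effect_covariance(c_e - c_s, np.full(n, 0.0005), np.ones((n, n)))

S_total = S_noise + S_gain                     # explicit sum over effects
u_total = np.sqrt(np.diag(S_total))
corr = S_total / np.outer(u_total, u_total)
print("u(y) per pixel:", np.round(u_total, 4))
print("error correlation, pixels 0 and 3:", round(float(corr[0, 3]), 3))
```

The summed matrix gives per-pixel uncertainties that grow with the Earth-view signal, and a strong error correlation between pixels (about 0.87 for pixels 0 and 3) inherited from the common gain effect.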

3.3. Uncertainty analysis at Level 1

3.3.1. Hierarchical structure of error effects.

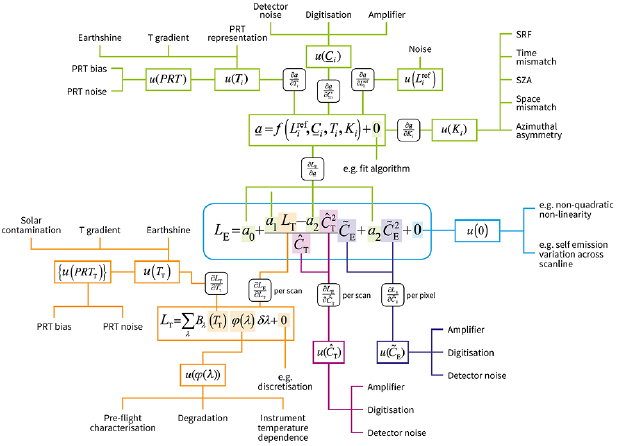

The uncertainty analysis we describe here is centred on the measurement function for calibrated radiances, the data of L1 products. Typically, some input quantities are directly determined (by measurement, parameterisation or data analysis), while others are determined through their own measurement function of other input quantities. Thus, there can be a hierarchical aspect to uncertainty analysis. To help organise a measurement-function centred analysis of uncertainty, it can be useful to prepare a graphical representation in the form of an uncertainty analysis diagram (figure 3).

Figure 3. Uncertainty analysis diagram: hypothetical case for a measurement function $y = f(X_1, X_2, \ldots) + 0$, where the input quantities, the $X_i$, are metrologically independent (have no common error). There are three identified effects that cause uncertainty in the first measurement function term, $X_1$. The contribution to uncertainty and the error correlation properties (from spectral band to spectral band and/or from image pixel to image pixel) of each of these effects have been evaluated and encapsulated in a quantitative model for each effect, these models being indicated symbolically in the diagram. The uncertainty in $X_1$ propagates to the measurand via the sensitivity coefficient $\partial f/\partial X_1$. The second term, $X_2$, is itself the result of an explicitly calculated measurement function, meaning that the analysis is hierarchical. Two effects operating on different terms of that function cause uncertainty in $X_2$. The propagation of the uncertainty in one of these lower-level terms, denoted here $W$, to the measurand is via a sensitivity obtained by the chain rule of sensitivity coefficients along the connecting branches: $(\partial f/\partial X_2)(\partial X_2/\partial W)$. Three identifiable physical effects contribute to the uncertainty in another term, but these cannot be separately quantified. Instead, they are jointly evaluated in terms of their combined uncertainty and error correlation properties, and treated in the calculation of propagated uncertainty as a single effect. Lastly, the '+ 0' term represents the fact that the form of the measurement function itself embodies assumptions and approximations that cause uncertainty in the measurand that should be evaluated.

We find that most radiance measurement function terms are sensitive to one or more effects, and the uncertainty due to each effect needs to be estimated. Quantities not subject to effects include mathematical and physical constants, and agreed reference quantities. In some cases, contributing effects may be estimated separately and then combined, and in other cases, all the effects operative on a single quantity may only be jointly estimated. Many effects are possible in EO: examples at L1 include detector noise, temperature gradients across reference targets, stray light ingress and temperature sensitivity of electronics. The '+ 0' term in a radiance measurement function represents effects relating to the assumptions underlying the form of the measurement function, e.g. that the sensor response is linear or quadratic in underlying radiance. Example effects for a particular sensor are discussed in section 4. Uncertainty analysis starts at the terminations of the uncertainty analysis diagram and propagates uncertainty through each measurement function, in sequence through the hierarchy as necessary.
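The propagation through the hierarchy can be sketched numerically. The minimal Python example below assumes a hypothetical top-level function $L = X_1 X_2 + X_3$ and a hypothetical second-level function $X_2 = X_{2,1}/X_{2,2}$ (neither is taken from a real sensor, and all values are illustrative). It obtains the sensitivity of $L$ to the terminal quantity $X_{2,1}$ by the chain rule and checks it against a central finite difference.

```python
# Hypothetical second-level measurement function, X2 = g(X21, X22)
def g(x21, x22):
    return x21 / x22

# Hypothetical top-level radiance measurement function, L = f(X1, X2, X3)
def f(x1, x2, x3):
    return x1 * x2 + x3

# Hypothetical estimates at the terminations of the diagram
x1, x21, x22, x3 = 2.0, 6.0, 3.0, 0.5
u_x21 = 0.06  # standard uncertainty in X21 from a single (hypothetical) effect

# Analytic sensitivity coefficients, evaluated at the estimates
dL_dX2 = x1            # partial f / partial X2
dX2_dX21 = 1.0 / x22   # partial g / partial X21

# Chain rule along the connecting branches X21 -> X2 -> L
c_chain = dL_dX2 * dX2_dX21
u_L_from_x21 = abs(c_chain) * u_x21

# Independent check of the chain-rule sensitivity by central finite difference
h = 1e-6
c_numeric = (f(x1, g(x21 + h, x22), x3) - f(x1, g(x21 - h, x22), x3)) / (2 * h)

print(f"chain-rule sensitivity {c_chain:.6f}, finite difference {c_numeric:.6f}")
print(f"contribution of this effect to u(L): {u_L_from_x21:.4f}")
```

The analytic chain rule is preferred in practice; the finite difference is only a sanity check that the sensitivities along the branch have been combined correctly.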

Uncertainty analysis presumes that the result of a measurement has been corrected for all recognized significant systematic effects, and that every effort has been made to identify such effects (GUM 2008 section 3.2.4). This means that the measured value will be as accurate as possible given the current state of knowledge. When we analyse uncertainty at the level of individual effects, we need to decide whether each effect can be fully or partially corrected for and, if so, apply the correction. The uncertainty associated with the residual error after correction is then generally smaller than that associated with the uncorrected effect. Performing uncertainty analysis therefore often has the co-benefit of improving EO data.

3.3.2. Correlation of effects.

Each effect causes uncertainty, meaning it gives rise to an unknown error in each measured value of radiance. Errors may be independent, in common, or have intermediate degrees of correlation. Three different forms of correlation need to be understood to characterise L1 uncertainty for users:

- The error correlation between different quantities in the measurement function. Such error correlations arise from effects in common between different quantities in the measurement function, or where quantities are determined from a common fit to data.

- The error correlation between image pixels for a given spectral band, which may arise when different image pixels have used some of the same input data in the calculation.

- The error correlation between spectral bands for a given pixel, which may arise when different spectral bands have used some of the same input data in the calculation.

It is advantageous for uncertainty analysis to formulate the measurement function to avoid the first form of correlation, so that effects are defined by construction to be independent. The LPU can then be expressed as a sum of covariance matrices over effects. Effect independence can be achieved by using hierarchical analysis to pre-combine terms that have a common error component. For example, consider calculation of a reflectance value that depends on the satellite view angle. The satellite view angle may be calculated from longitude and latitude values, both of which are sensitive to a common time error. We can construct the measurement function in terms of the satellite view angle and consider the effect of time error on that angle. A second example arises when there is error covariance between empirical calibration parameters that were obtained in a joint fitting procedure. We can treat these parameters as a single, vector input quantity, and handle the error correlation using their estimated error covariance.
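The second example can be made concrete with a minimal sketch, assuming a hypothetical linear calibration $L = a_0 + a_1 C$ (with $C$ the counts) fitted by ordinary least squares to reference radiances with independent, equal uncertainties. The fitted parameters are treated as one vector input quantity whose error covariance, $\sigma^2(\mathbf{A}^{\mathrm{T}}\mathbf{A})^{-1}$ for design matrix $\mathbf{A}$, is propagated with the full sensitivity vector. The calibration points, counts and uncertainty are illustrative only.

```python
import numpy as np

# Hypothetical calibration points: counts at which reference radiances are known
counts_ref = np.array([100.0, 200.0, 300.0, 400.0, 500.0])
sigma_ref = 0.5  # assumed standard uncertainty of each reference radiance (independent)

# Design matrix for the linear calibration L = a0 + a1 * counts
A = np.column_stack([np.ones_like(counts_ref), counts_ref])

# Error covariance of the jointly fitted (a0, a1) for OLS with independent,
# equal-variance errors: sigma^2 (A^T A)^-1
cov_a = sigma_ref**2 * np.linalg.inv(A.T @ A)

# Sensitivities of an Earth-view radiance to the parameter vector at given counts
c_earth = 450.0
J = np.array([1.0, c_earth])  # [dL/da0, dL/da1]

# Parameters treated as a single vector input quantity: u^2 = J S J^T
u_joint = np.sqrt(J @ cov_a @ J)

# For comparison: (incorrectly) ignoring the covariance between a0 and a1
u_ignoring_cov = np.sqrt(J @ np.diag(np.diag(cov_a)) @ J)

print(f"u with parameter covariance:    {u_joint:.4f}")
print(f"u ignoring parameter covariance: {u_ignoring_cov:.4f}")
```

With these numbers the offset and gain errors are negatively correlated, so the propagated uncertainty is about 0.33 (radiance units) when the covariance is used, against about 0.88 when it is ignored.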

Error correlation between pixels and spectral bands also arises from errors in common. It is important to distinguish 'common effects' from 'common errors'. The same effect might be present in multiple measurements (for example, all measured radiances will be sensitive to noise), but a common error only arises if the same instance (value) of a quantity is used. Earth-view radiances in an image come from different measurements for each channel and pixel, and therefore each radiance has a different error instance. In contrast, a single view of an on-board calibration target may be used to calibrate several lines of pixels, and therefore there is a common error instance for all those pixels. Similarly, any error in characterising the temperature of a black-body calibration target affects all the calibrated thermal channels.
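The distinction between common effects and common errors can be illustrated with a small Monte Carlo sketch, using hypothetical unit-variance errors for four image lines. Detector noise is drawn independently for each line, whereas the calibration error is drawn once per realisation and shared by all four lines, as when a single calibration-target view calibrates several lines.

```python
import numpy as np

rng = np.random.default_rng(42)
n_trials, n_lines = 20000, 4

# Common effect, different instances: independent noise draw for every line
noise_err = rng.normal(0.0, 1.0, size=(n_trials, n_lines))

# Common error: one draw per realisation, reused for all lines
# (hypothetical: all four lines calibrated from the same target view)
common_err = np.repeat(rng.normal(0.0, 1.0, size=(n_trials, 1)), n_lines, axis=1)

# Empirical cross-line error correlation matrices (columns are lines)
R_noise = np.corrcoef(noise_err, rowvar=False)
R_common = np.corrcoef(common_err, rowvar=False)

print(np.round(R_noise, 2))
print(np.round(R_common, 2))
```

Both effects are present in every line, but only the shared instance produces cross-line correlation: the first matrix is close to the identity and the second is the matrix of ones.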

3.3.3. Calculation of error covariance for L1.

Since our uncertainty analysis is based on metrologically-independent effects by construction of the measurement function, we can find the error covariance matrix for a vector of Earth radiance values (e.g. radiance values for different spectral channels and/or for different image pixels) as a sum over effect-specific error covariance matrices:

$$\mathbf{S}(\mathbf{L}) = \sum_{i} \sum_{j(i)} \mathbf{C}_{i}\,\mathbf{U}_{j(i)}\,\mathbf{R}_{j(i)}\,\mathbf{U}_{j(i)}\,\mathbf{C}_{i}^{\mathrm{T}}$$

This is written as a sum over input quantities, $X_i$, in the measurement model, and, for each of these, over the associated effects, $j(i)$, i.e. $j$ given $i$. We write it this way to make explicit that there may be multiple effects for a given input quantity, although, since effects influence only one quantity by construction, we could simply sum over effects. The sensitivity coefficient matrix, $\mathbf{C}_i$, is diagonal, because each radiance is separately determined from its own evaluation of its measurement model and any quantities in common are addressed in the correlation matrix (see below). Each row/column refers to one corresponding radiance in the vector measurand (i.e. per spectral band or per image pixel). The diagonal elements are the sensitivity coefficients $\partial L/\partial X_i$, which have the units of radiance divided by the units of the input quantity; the derivatives are evaluated at the estimate of the quantity.

The uncertainty matrix $\mathbf{U}_{j(i)}$ is a diagonal matrix of the same dimension, with the standard uncertainty associated with the effect down the diagonal. These uncertainties are in the units of the input quantity, $X_i$.

The correlation coefficient matrix $\mathbf{R}_{j(i)}$ shows the error correlation for the effect $j(i)$ between the evaluations of the measurement function for the different radiances. Typically, all the radiances have the same form of measurement function, with the same terms. Some of these terms have an independent value for each evaluation of radiance, as when each radiance is estimated from the corresponding Earth counts. In this case, there is no correlation, and $\mathbf{R}_{j(i)}$ is the identity matrix. Other terms may have the same value for each evaluation of radiance, in which case $\mathbf{R}_{j(i)}$ is the matrix of ones. In more complex cases, terms may have different values whose errors are neither fully correlated nor uncorrelated, in which case $\mathbf{R}_{j(i)}$ will have a complex, non-diagonal form.
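The three forms of correlation matrix can be written down directly. The identity and all-ones cases follow from the text; the intermediate case below is an assumed example, not taken from a specific sensor: if each line is calibrated with the average of `window` consecutive calibration views whose errors are independent, two lines sharing $k$ of those views have error correlation $k/\text{window}$, giving a banded, triangular structure. The function names are hypothetical, and the sketch assumes a full averaging window is available for every line (edge effects are ignored).

```python
import numpy as np

def r_independent(n):
    """Independent error instance per radiance: identity matrix."""
    return np.eye(n)

def r_common(n):
    """Single error instance shared by all radiances: matrix of ones."""
    return np.ones((n, n))

def r_moving_average(n, window):
    """Hypothetical intermediate case: calibration averaged over `window`
    consecutive views with independent errors, so lines `lag` apart share
    (window - lag) views and have correlation (window - lag) / window."""
    lag = np.abs(np.subtract.outer(np.arange(n), np.arange(n)))
    return np.clip(window - lag, 0, None) / window

R_ma = r_moving_average(5, window=3)
print(np.round(R_ma, 3))
```

Because this structure arises from averaging independent errors, it is a valid (positive semi-definite) correlation matrix, which is a useful check for any correlation model adopted for an effect.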

We choose to decompose the error covariance matrix for the term into uncertainty and correlation matrices, $\mathbf{U}_{j(i)}\,\mathbf{R}_{j(i)}\,\mathbf{U}_{j(i)}$, because correlation structures are often fixed even if uncertainty varies. Not all effects lend themselves to this treatment, and in such cases the error covariance matrix, $\mathbf{S}_{j(i)}$, may be better evaluated directly. In this case, the contribution to the error covariance matrix for that effect would be $\mathbf{C}_i\,\mathbf{S}_{j(i)}\,\mathbf{C}_i^{\mathrm{T}}$.
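A minimal sketch of the sum over effects follows, writing $\mathbf{C} = \mathrm{diag}(\mathbf{c})$, $\mathbf{U} = \mathrm{diag}(\mathbf{u})$ and $\mathbf{R}$ for the sensitivity, uncertainty and correlation matrices of each effect, and also allowing an effect's error covariance $\mathbf{S}$ to be supplied directly. The example assumes a hypothetical calibration $L = a_1 \times \text{counts}$ for four pixels of one line, with one common error in the gain $a_1$ and independent noise in the Earth counts; the helper names and all numbers are illustrative.

```python
import numpy as np

def effect_cov(c, u, R):
    """Contribution C U R U C^T of one effect, with C = diag(c), U = diag(u)."""
    C, U = np.diag(c), np.diag(u)
    return C @ U @ R @ U @ C.T

def effect_cov_direct(c, S):
    """Contribution C S C^T of an effect whose error covariance S is evaluated directly."""
    C = np.diag(c)
    return C @ S @ C.T

# Hypothetical calibration L = a1 * counts for four pixels of one line
a1 = 0.05                                        # gain (radiance per count)
counts = np.array([400.0, 420.0, 450.0, 430.0])  # Earth counts
n = counts.size

# Effect on the gain: one common instance for all pixels, R = matrix of ones.
# Sensitivity dL/da1 = counts.
S_gain = effect_cov(c=counts, u=np.full(n, 1e-4), R=np.ones((n, n)))

# Effect on the Earth counts: independent noise instance per pixel, R = identity.
# Sensitivity dL/dcounts = a1; noise of 1 count.
S_noise = effect_cov(c=np.full(n, a1), u=np.full(n, 1.0), R=np.eye(n))

# Effects are independent by construction, so their contributions simply add
S_L = S_gain + S_noise
print(S_L)
```

The diagonal of `S_L` combines both effects, while its off-diagonal elements come only from the shared gain error.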

The description above has considered a general set of radiances constituting a vector measurand. Typically, we are interested in one of three specific cases: radiance values across the elements of a line for a particular channel; radiance values across the lines for a particular element in a particular channel; and radiance values for a given pixel (line and element) across the channels measured by the sensor. In the first case, the result is the cross-element error covariance matrix for the given channel, $\mathbf{S}_e^{l}$. In the second case, it is the cross-line error covariance matrix, $\mathbf{S}_l^{e}$. The third case gives the cross-channel error covariance matrix, $\mathbf{S}_c^{e,l}$. The indices in this notation operate as follows. The subscript indicates the dimension across which the error covariances are calculated: thus the cross-channel matrix has subscript $c$, for example. The superscript indicates a dimension that is not averaged across. Thus, the cross-element error covariance is evaluated for a particular line, $l$. Since it would never make sense to average error covariance matrices across channels, there is no need to have $c$ in the superscript: that is implicit. To calculate a cross-element error covariance matrix representative of many lines in an image, $\mathbf{S}_e$, we would need to evaluate $\mathbf{S}_e^{l}$ for many different lines, and then average these. Denoting by $\langle \mathbf{S}_e^{l} \rangle_l$ the average of $\mathbf{S}_e^{l}$ over an adequate set of lines, $\mathbf{S}_e = \langle \mathbf{S}_e^{l} \rangle_l$. Further, $\mathbf{S}_c^{e,l}$ indicates that a cross-channel error covariance matrix is calculated at a particular pixel, $(e, l)$. Such a notation is required because there are many possible covariance matrices that can be calculated and used, and they need to be uniquely indexed.
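The averaging over lines can be sketched as follows, reusing a hypothetical gain-plus-noise model ($L = a_1 \times \text{counts}$, illustrative uncertainties, randomly drawn counts). A per-line cross-element error covariance matrix, denoted here $\mathbf{S}_e^{l}$, is evaluated for each line, and the matrices are averaged to give a representative $\mathbf{S}_e$. The helper name is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_lines, n_elements = 50, 4
u_gain, gain, u_noise = 1e-4, 0.05, 1.0  # illustrative values

# Hypothetical Earth counts for each (line, element) of one channel
counts = rng.uniform(300.0, 500.0, size=(n_lines, n_elements))

def cross_element_cov(counts_line):
    """S_e^l for one line: common gain error (R = ones) plus
    independent count noise (R = identity)."""
    common = np.outer(counts_line, counts_line) * u_gain**2
    independent = np.eye(counts_line.size) * (gain * u_noise) ** 2
    return common + independent

# Stack of per-line matrices, shape (n_lines, n_elements, n_elements)
S_e_l = np.stack([cross_element_cov(counts[l]) for l in range(n_lines)])

# Representative cross-element matrix: average over the set of lines
S_e = S_e_l.mean(axis=0)
print(np.round(S_e, 5))
```

Because the sensitivities depend on the counts, each $\mathbf{S}_e^{l}$ differs from line to line; how many and which lines form an "adequate set" is a choice the analyst must justify.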

To make this description more concrete, we can consider the cross-channel error covariance matrix calculation for a three-channel sensor subject to two effects. Let the first effect, $j = 1$, be fully correlated, because a common value, $X_1$, is used in the calculation of all three channel radiances.