3.2. Roof Segmentation

The typical roof segmentation methods used in the comparison test are RANSAC [19], 3D Hough transformation [27], PCA + region growth (RG_PCA) [36], iterative PCA + region growth (RG_IPCA) [37], and the global optimization-based algorithm Graphcuts (Global energy) [38]. The algorithms are all implemented in Microsoft Visual C++ under the Microsoft Windows 7 operating system. A personal computer with an Intel Core i5 2.5 GHz CPU and 4 GB of memory is used for the testing. The ground truth of roof segmentation for quality evaluation is obtained through manual editing.

The seven metrics utilized to evaluate CLEG and the compared algorithms are computation time (time), completeness (comp), correctness (corr) [49], reference cross-lap (RCL), detection cross-lap (DCL) [50,51], boundary precision (BP), and boundary recall (BR) [52].

Completeness is defined as the percentage of reference planes that are correctly segmented. This metric is related to the number of misdetected planes.

Correctness denotes the percentage of correctly segmented planes in the segmentation results. It indicates the stability of the methods.

TP in Equations (4) and (5) denotes the number of planes found in both the reference and segmentation results. Only the planes with a minimum overlap of 50% with the reference are true positives. FN denotes the number of reference planes not found in the segmentation results (i.e., false negatives). FP is the number of detected planes not found in the reference (i.e., false positives).
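The counting rule above can be illustrated with a minimal sketch. It assumes that each plane is represented as a set of point indices, that overlap is measured as the shared fraction of the reference plane, and that matching is greedy and one-to-one; none of these details is stated in the text, and the function names are hypothetical. Completeness and correctness are taken in their usual ratio forms, TP/(TP + FN) and TP/(TP + FP).

```python
from typing import List, Set, Tuple


def count_matches(reference: List[Set[int]],
                  detected: List[Set[int]],
                  min_overlap: float = 0.5) -> Tuple[int, int, int]:
    """Return (TP, FN, FP) using a greedy one-to-one matching (an assumption)."""
    matched_ref = set()
    matched_det = set()
    for i, ref in enumerate(reference):
        if not ref:
            continue
        for j, det in enumerate(detected):
            if j in matched_det:
                continue
            # Overlap is the fraction of the reference plane covered by the detection.
            if len(ref & det) / len(ref) >= min_overlap:
                matched_ref.add(i)
                matched_det.add(j)
                break
    tp = len(matched_ref)
    fn = len(reference) - tp          # reference planes with no match
    fp = len(detected) - len(matched_det)  # detected planes with no match
    return tp, fn, fp


def completeness(tp: int, fn: int) -> float:
    return tp / (tp + fn) if tp + fn else 0.0


def correctness(tp: int, fp: int) -> float:
    return tp / (tp + fp) if tp + fp else 0.0
```

With three reference planes of which only one is recovered with at least 50% overlap, and two spurious detections, both ratios evaluate to 1/3.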

Reference cross-lap rate is defined as the percentage of reference planes that overlap multiple detected planes. This metric shows the over-segmentation of the methods.

Nr in Equation (6) denotes the number of reference planes, and the accompanying count is the number of reference roof planes that overlap more than one detected plane.

Detection cross-lap rate denotes the percentage of detected planes that overlap multiple reference roof planes. This metric shows the under-segmentation of the methods.

Nd in Equation (7) denotes the number of detected planes, and the accompanying count is the number of detected planes that overlap more than one reference roof plane.
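The two cross-lap rates are symmetric, which the following sketch makes explicit. It assumes planes are stored as sets of point indices and that two planes "overlap" when they share at least `min_shared` points; both the representation and the `min_shared` parameter are illustrative assumptions rather than the definition used in the cited works.

```python
from typing import List, Set


def cross_lap_rate(planes: List[Set[int]],
                   others: List[Set[int]],
                   min_shared: int = 1) -> float:
    """Fraction of `planes` that overlap more than one plane in `others`."""
    if not planes:
        return 0.0
    crossing = 0
    for plane in planes:
        hits = sum(1 for other in others if len(plane & other) >= min_shared)
        if hits > 1:
            crossing += 1
    return crossing / len(planes)


def reference_cross_lap(reference: List[Set[int]], detected: List[Set[int]]) -> float:
    # High values indicate over-segmentation: one reference plane split into several.
    return cross_lap_rate(reference, detected)


def detection_cross_lap(reference: List[Set[int]], detected: List[Set[int]]) -> float:
    # High values indicate under-segmentation: one detection spanning several references.
    return cross_lap_rate(detected, reference)
```

For a reference plane that is split into two detected planes, the reference cross-lap rate rises while the detection cross-lap rate stays at zero.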

Boundary precision measures the percentage of correct boundary points in the detected boundary points.

Boundary recall measures the percentage of correct boundary points in the reference boundary points.

Br in Equations (8) and (9) denotes the boundary point set in the reference, Bd denotes the boundary point set in the segmentation results, and |·| denotes the number of points in a set. Over-segmentation may result in a high boundary recall ratio, whereas under-segmentation may lead to high boundary precision. A method can therefore be considered precise only when both boundary precision and boundary recall are high.
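A small sketch of the two boundary measures follows. It assumes that a "correct" boundary point is one that appears in both Br and Bd, so that precision is |Br ∩ Bd| / |Bd| and recall is |Br ∩ Bd| / |Br|; the equations themselves are not reproduced in this text, so this form is an assumption based on the verbal definitions, and the function name is hypothetical.

```python
from typing import Set, Tuple


def boundary_precision_recall(ref_boundary: Set[int],
                              det_boundary: Set[int]) -> Tuple[float, float]:
    """Return (BP, BR) for boundary point sets given as point indices."""
    correct = len(ref_boundary & det_boundary)
    bp = correct / len(det_boundary) if det_boundary else 0.0
    br = correct / len(ref_boundary) if ref_boundary else 0.0
    return bp, br
```

Marking every point as a boundary point would drive recall to 1 while precision collapses, which is why the two values have to be read together.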

The same parameters, shown in Table 2, are utilized for all methods in the comparison test to ensure the comparability of the results.

Many gable roofs with large slopes are found in Vaihingen. The roof structure is also complex, as shown in Figure 8a. Some noise points also exist (Figure 8b). A complex roof structure that contains small structures and planes whose slopes differ only slightly from those of their neighboring planes is shown in Figure 8c. Many flat and gable roofs are found in Wuhan. The slope of the gable roofs is not large. A flat roof is close to the gable roofs, as shown in Figure 9a. A complex symmetric roof structure is shown in Figure 9b. A symmetric trapezoid roof is shown in Figure 9c. Many gable roofs with small slopes are found in Guangzhou. The nearly arc-shaped roofs result in weak edges between the planes, as shown in Figure 10a. Figure 10b,c show several complex structures and roofs close to one another.

Segmentation results of roof points in the Vaihingen area are shown in Figure 8, and the evaluation of precision is listed in Table 3.

Segmentation results of roof points in the Wuhan area are shown in Figure 9, and the evaluation of precision is listed in Table 4.

Segmentation results of roof points in the Guangzhou area are shown in Figure 10, and the evaluation of precision is listed in Table 5.

RANSAC runs fast when the number of points is small (Table 4). The time of dataset (a) is less than 1 ms. However, the algorithm runs slowly when the number of points is large, because the voting procedure is undertaken afresh with all the remaining points each time a plane is found. When the roof structure is complex, many errors occur because the spatial relationship of the neighbors is not considered. These errors are marked by the black rectangles in Figure 8a–c, Figure 9a–c and Figure 10a–c.
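The repeated voting described above can be sketched as follows. This is a generic sequential RANSAC plane extraction written for illustration, not the implementation used in the comparison test; the parameter names (`threshold`, `iterations`, `min_points`, `seed`) and their defaults are assumptions. The sketch shows both properties discussed in the paragraph: every extracted plane triggers a fresh round over all remaining points, and inliers are selected by distance to the plane alone, without any neighborhood information.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d) with ax + by + cz + d = 0


def plane_through(p: Point, q: Point, r: Point) -> Optional[Plane]:
    """Unit-normal plane through three points, or None if they are collinear."""
    ux, uy, uz = q[0] - p[0], q[1] - p[1], q[2] - p[2]
    vx, vy, vz = r[0] - p[0], r[1] - p[1], r[2] - p[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    if norm < 1e-12:
        return None
    nx, ny, nz = nx / norm, ny / norm, nz / norm
    return nx, ny, nz, -(nx * p[0] + ny * p[1] + nz * p[2])


def best_plane_inliers(points: Sequence[Point], threshold: float,
                       iterations: int, rng: random.Random) -> List[int]:
    """Indices of the largest inlier set found among random three-point planes."""
    best: List[int] = []
    if len(points) < 3:
        return best
    for _ in range(iterations):
        i, j, k = rng.sample(range(len(points)), 3)
        plane = plane_through(points[i], points[j], points[k])
        if plane is None:
            continue
        a, b, c, d = plane
        # Every remaining point is tested; no neighbor relationship is used.
        inliers = [n for n, (x, y, z) in enumerate(points)
                   if abs(a * x + b * y + c * z + d) <= threshold]
        if len(inliers) > len(best):
            best = inliers
    return best


def extract_planes(points: Sequence[Point], threshold: float = 0.05,
                   iterations: int = 200, min_points: int = 10,
                   seed: int = 0) -> List[List[int]]:
    """Extract planes one by one, restarting the search on the remaining points."""
    rng = random.Random(seed)
    remaining = list(range(len(points)))
    planes: List[List[int]] = []
    while len(remaining) >= min_points:
        subset = [points[i] for i in remaining]
        inliers = best_plane_inliers(subset, threshold, iterations, rng)
        if len(inliers) < min_points:
            break
        planes.append([remaining[i] for i in inliers])
        taken = set(inliers)
        remaining = [idx for n, idx in enumerate(remaining) if n not in taken]
    return planes
```

Each pass costs on the order of `iterations` times the number of remaining points, so the total time grows with both the point count and the number of planes, which is consistent with the slowdown reported for large inputs.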

The voting space in 3D Hough transformation is first computed. The votes are then sorted, and the planes are detected in order. Region growth is finally used to obtain an entire plane from the supporting points. The results of 3D Hough transformation are sometimes worse than those of RANSAC because one point may support many planes, and the remaining planes may not be the most supported ones. Many false planes are detected, as shown by the red rectangles in Figure 8a–c and Figure 9a–c. 3D Hough transformation has the same disadvantage as RANSAC and produces cross-planes because normal vectors are not used. The termination condition is also difficult to decide. It uses the ratio of the smallest plane to the largest plane and the ratio of the number of remaining points to the total number of points, which may lead to missing small planes, as shown by the center red rectangles in Figure 10a–c.

RG_PCA employs K-nearest neighbors (KNN) to obtain the neighbor relationship and computes the normal vectors using PCA. The regions are then grown using the normal vectors. PCA may produce unstable results in estimating the normal vector at the edge regions. Therefore, the method does not perform well in segmenting the points close to the facet boundary, as shown by the green rectangles in Figure 8a–c, Figure 9a and Figure 10c. KNN may produce an unstable neighbor relationship in areas with a highly uneven point density, which results in over-segmentation, as shown by the green rectangles in Figure 9b,c. The difference between the normal vectors at the edge areas is small when the slope is small. This causes under-segmentation, as shown by the green rectangles in Figure 10a,b.
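The effect of a small slope on normal-based growth can be reproduced with a short sketch. It assumes that unit normals and neighbor lists are already available (for example from PCA and KNN) and grows regions by a breadth-first search with an angle threshold; the function names and the 10° default are illustrative assumptions, not the parameters of Table 2.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple

Vector = Tuple[float, float, float]


def grow_regions(normals: Sequence[Vector],
                 neighbors: Sequence[Sequence[int]],
                 max_angle_deg: float = 10.0) -> List[int]:
    """Label points so that adjacent points with similar normals share a region."""
    cos_limit = math.cos(math.radians(max_angle_deg))
    labels = [-1] * len(normals)
    region = 0
    for seed in range(len(normals)):
        if labels[seed] != -1:
            continue
        labels[seed] = region
        queue = deque([seed])
        while queue:
            cur = queue.popleft()
            for nb in neighbors[cur]:
                if labels[nb] != -1:
                    continue
                # abs() makes the test independent of the normal orientation.
                dot = abs(sum(a * b for a, b in zip(normals[cur], normals[nb])))
                if dot >= cos_limit:
                    labels[nb] = region
                    queue.append(nb)
        region += 1
    return labels


def gable_profile(slope_deg: float, per_side: int = 3):
    """Normals and chain neighbors for a cross-section of a symmetric gable roof."""
    s, c = math.sin(math.radians(slope_deg)), math.cos(math.radians(slope_deg))
    normals = [(-s, 0.0, c)] * per_side + [(s, 0.0, c)] * per_side
    n = len(normals)
    neighbors = [[j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)]
    return normals, neighbors
```

For a 30° gable the two facets differ by 60° and are separated, whereas for a 3° gable they differ by only 6°, fall below the threshold, and are merged into a single region, which corresponds to the under-segmentation described above.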

RG_IPCA utilizes a triangulated irregular network (TIN) to obtain the neighbor relationship, computes the initial normal vectors using PCA, and grows the regions. This method can properly estimate the normal vectors at several boundary regions but may also lead to errors in several areas, as shown by the blue rectangles in Figure 8b,c, Figure 9a,b and Figure 10c. RG_IPCA has the same disadvantage as RG_PCA when the slope is small. The method results in under-segmentation, as shown by the blue rectangles in Figure 10a,b. Over-segmentation also exists in RG_IPCA, as denoted by the blue rectangles in Figure 8a and Figure 9c.

The global energy method utilizes Graphcuts to obtain the minimum energy. This method yields quite accurate results but depends on a good initial input. Consequently, planes missed in the initial input will also be missed in the optimization results, as shown by the yellow rectangles in Figure 8c and Figure 10a. The method also causes over-segmentation in noisy areas, as shown by the yellow rectangles in Figure 8b and Figure 9c. An improper neighbor relationship causes under-segmentation, as denoted by the yellow rectangle in Figure 9b. The separated planes are combined because the TIN may connect faraway points and because, owing to symmetry, the two facets lie on the same plane. In other conditions, global energy can perform quite well and obtain complete results with the fewest points left unsegmented. CLEG can properly handle these complex structures with very few missing points.

The proposed CLEG algorithm also has several disadvantages caused by the strict conditions of seed CLE selection. A seed CLE is not detected when the plane is small. Therefore, the plane may be missed, as shown by the red rectangle in Figure 11.

3.3. Region Segmentation

The CLEG algorithm can also process point clouds containing terrain, buildings, trees, etc. Because the proposed method is similar to region growth methods, the comparison methods include only RG_PCA and RG_IPCA. The parameters used are shown in Table 6. The difference is that the minimum number of points required for a valid plane is larger than that in roof segmentation, because a small value may cause many false planes to be detected in tree areas.

Seven datasets are utilized to demonstrate the effectiveness and speed of the proposed method. Their descriptions are listed in Table 7.

Building the neighbor relationship has the highest computation cost in RG_PCA and RG_IPCA during the comparison test. The two algorithms build it differently: KNN is used in RG_PCA, and TIN is used in RG_IPCA. RG_PCA employs PCA to estimate the normal vector of each point. The results may be unstable at boundary points, which often results in over-segmentation, as denoted by the blue rectangles in Figure 12 and Figure 13. RG_IPCA sometimes estimates false normal vectors, which results in some false segmentation, as shown by the yellow rectangles in Figure 12 and Figure 14. Over-segmentation is also found in noisy areas, as shown by the blue rectangle in Figure 13. Although the minimum number of points for a valid plane is 20, some planes are still found in the tree areas, as shown by the green rectangles in Figure 12 and Figure 13. CLEG can handle these cases well and at a faster speed (Table 7).

Segmentation results of the point cloud in the Vaihingen area using a small dataset.

Segmentation results of the point cloud in the Wuhan area using a small dataset.

Segmentation results of the point cloud in the Guangzhou area using a small dataset.

KNN is very slow when large datasets are used for segmentation. Therefore, only RG_IPCA is used for comparison. RG_IPCA may result in false segmentation in the roof areas, as shown by the blue rectangles in Figure 15, Figure 16 and Figure 17. False segmentation is also observed in the ground area, as shown by the green rectangle in Figure 16. Under-segmentation is found when the slope is small. This result is denoted by the red rectangles in Figure 15 and Figure 16. A cross-plane is denoted by the black rectangle in Figure 17. Furthermore, many planes are found in the tree areas, as shown by the yellow rectangles in Figure 15, Figure 16 and Figure 17. CLEG can still handle these areas well with less processing time.

Segmentation results of the point cloud in the Vaihingen area using a large dataset.

Segmentation results of the point cloud in the Wuhan area using a large dataset.

Segmentation results of the point cloud in the Guangzhou area using a large dataset.

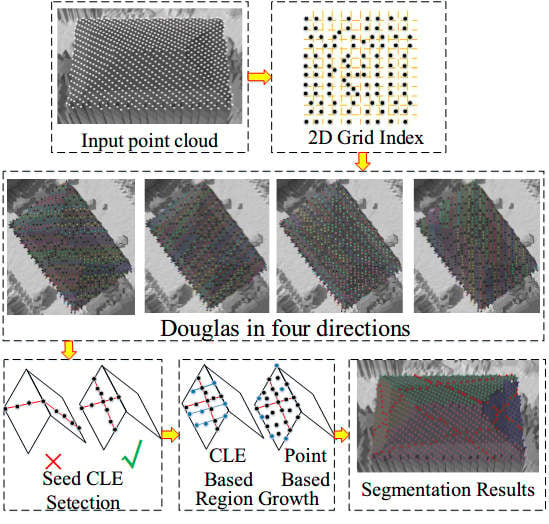

Building the TIN in RG_IPCA causes a memory shortage during the test when a large point cloud with 12 million points is used. The CLEG algorithm can handle this large dataset and completes the segmentation within 1 min (Figure 18). The proposed algorithm uses grid indexing in place of a point-based neighbor relationship, together with CLE growth, to overcome the shortcoming of uneven point cloud density. The process that consumes the most computation time in CLEG is the sorting of the seed points, which can be improved in the future by parallel computing.
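The general idea of grid indexing can be sketched as follows. This is a generic 2D hash grid written under the assumption that points are binned by their x and y coordinates into square cells of a hypothetical size `cell_size`; the actual grid structure and the CLE growth used by CLEG are not described in this section and are not reproduced here.

```python
import math
from collections import defaultdict
from typing import DefaultDict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Cell = Tuple[int, int]


def build_grid(points: Sequence[Point], cell_size: float) -> DefaultDict[Cell, List[int]]:
    """Map each occupied (column, row) cell to the indices of the points inside it."""
    grid: DefaultDict[Cell, List[int]] = defaultdict(list)
    for idx, (x, y, _z) in enumerate(points):
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        grid[cell].append(idx)
    return grid


def points_near_cell(grid: DefaultDict[Cell, List[int]], cell: Cell) -> List[int]:
    """Indices of points in the cell and its eight adjacent cells."""
    cx, cy = cell
    found: List[int] = []
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            # .get() avoids creating empty entries in the defaultdict.
            found.extend(grid.get((cx + dx, cy + dy), []))
    return found
```

Building such an index takes a single pass over the points and stores one integer per point, and the neighborhood of a cell has a fixed spatial extent regardless of how many points fall inside it, in contrast to KNN, whose search radius shrinks or grows with the local point density.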