Abstract

Spatial ventriloquism refers to the phenomenon that a visual stimulus such as a flash can attract the perceived location of a spatially discordant but temporally synchronous sound. An analogous example of mutual attraction between audition and vision has been found in the temporal domain, where temporal aspects of a visual event, such as its onset, frequency, or duration, can be biased by a slightly asynchronous sound. In this review, we examine various manifestations of spatial and temporal attraction between the senses (both direct effects and aftereffects), and we discuss important constraints on the occurrence of these effects. Factors that potentially modulate ventriloquism—such as attention, synesthetic correspondence, and other cognitive factors—are described. We trace theories and models of spatial and temporal ventriloquism, from the traditional unity assumption and modality appropriateness hypothesis to more recent Bayesian and neural network approaches. Finally, we summarize recent evidence probing the underlying neural mechanisms of spatial and temporal ventriloquism.

Similar content being viewed by others

Introduction

At the opening ceremony of the 2008 Beijing Summer Olympic Games, the successful debut performance of a lovely little girl had attracted widespread attention. Accompanying her on-stage performance, indeed, an off-stage voice got “attached” to the lip-syncing girl. Her vivid vocal performance had been finally revealed as a successful implementation of spatial ventriloquism (http://www.youtube.com/watch?v=8BlEiCqQRxQ&feature=related). Obviously, there are many more mundane examples of spatial ventriloquism, such as simply watching a TV. Here, the audio is perceived where the action is seen, rather than at the actual location of the sound (i.e., the position of the loudspeaker). A similar illusion occurs in the temporal domain (i.e., temporal ventriloquism), because the sound and the video of the TV appear to be synchronous despite delays between the two signals. The spatial and temporal ventriloquist effects have also received considerable attention in the scientific literature, because they demonstrate a more general phenomenon—namely, that sensory modalities such as vision, audition, and touch interact and sometimes change the percept of each other (Calvert, Spence & Stein, 2004; Stein, 2012; Stein & Meredith, 1993). The resulting cross-modal illusions have turned out to be extremely useful tools for probing how the brain combines information from different modalities. In essence, it appears to be the case that when information from two different modalities are in slight conflict with each other, cross-modal combinational fusions arise that produce multisensory illusions that can be every bit as compelling as those within a given sense (Stein, 2012).

Here, we review the literature on these intersensory illusions in space and time. The main focus will be on interactions between audition and vision, because these modalities have been examined most often, but touch and motor actions will also be mentioned. We further distinguish whether an effect is an immediate effect or an aftereffect. Immediate effects refer to the direct perceptual consequences of presenting conflicting information in two modalities. Immediate effects are informative in telling whether the information sources were integrated or not. Aftereffects are measured later, and they can tell whether the modalities were readjusted or recalibrated, relative to each other, so that, in the future, they remain aligned in space or time. After describing these basic phenomena, we examine the extent to which intersensory binding in space and time depends on factors like attention, synesthetic congruency, and other more high-level semantic relations. Finally, we mention some recent Bayesian and neural network approaches. The reader should realize that in the vastly expanding literature on these topics, we had to be very selective. Table 1 provides a selection of studies that we considered a representative example of the paradigms currently used, while Table 2 summarizes the basic findings of this literature. We apologize for all those that have not been mentioned.

Spatial ventriloquism: Immediate effect

Probably the best known example of intersensory binding is the visual bias of auditory location, here referred to as spatial ventriloquism. In a typical demonstration of spatial ventriloquism, the performing artist would synchronize the movements of a puppet’s mouth with his own speech while avoiding movements of his/her own head or lips. The source of the sound is then mislocalized toward the position of the puppet’s mouth. Fortunately, experimental psychologists do not need to be as artistic as that, because they can use a stripped-down version of this setup that quite often consists of a single beep from one location delivered with a synchronized flash from another location. The task of the observer might be to point or make a saccade toward the (apparent) location of the sound or to decide whether the sound came from the left or the right of a reference point, while at the same time trying to ignore the visual distractor (see Fig. 1). Alternatively, observers may also be asked to judge whether the flash and beep originated from a single location (whether they “fused”) or not, in which case the visual stimulus cannot be ignored but is task relevant.

The spatial ventriloquist illusion manifests itself when the immediate pointing response toward the sound is shifted toward the visual stimulus despite instructions to ignore the latter (Alais & Burr, 2004b; Bertelson, 1999; Bertelson & Radeau, 1981; Brancazio & Miller, 2005; Howard & Templeton, 1966; Munhall, Gribble, Sacco & Ward, 1996; Radeau & Bertelson, 1987) or when, in the case of a fusion response, despite spatial separation, synchronized audiovisual stimuli fuse and are perceived as coming from a single location (Bertelson & Radeau, 1981; Godfroy, Roumes & Dauchy, 2003). This illusion has been demonstrated not only in human observers, but also in species such as cats, ferrets, and birds (Kacelnik, Walton, Parsons & King, 2002; King, Doubell, & Skaliora, 2004; Knudsen, Knudsen & Esterly, 1982; Knudsen & Knudsen, 1985, 1989; Meredith & Allman, 2009; Wallace & Stein, 2007).

Cross-modal mutual biases in localization responses have also been found in other modalities than the auditory and visual. In the visuomotor domain, there are famous prism adaptation studies that have been known since the late 19th century, when von Helmholtz published his seminal work in optics (von Helmholtz, 1962). During the mid-1960s, Held (1965) demonstrated that prism adaptation depends on the interaction between the motor and the visual systems and that such interaction normally induces a plastic change in the brain. There are also more recent studies reporting spatial attraction between the visual and somatosensory modalities (Blakemore, Bristow, Bird, Frith & Ward, 2005; Dionne, Meehan, Legon & Staines, 2010; Forster & Eimer, 2005; Rock & Victor, 1964; Serino, Farnè, Rinaldesi, Haggard & Làdavas, 2007 Taylor-Clarke, Kennett & Haggard, 2002) and between the auditory and tactile modalities (Bruns & Röder, 2010a, b; Caclin, Soto-Faraco, Kingstone & Spence, 2002; Occelli, Bruns, Zampini & Röder, 2012). Spatial ventriloquism can also be found with dynamic stimuli. In apparent motion, visual motion direction can attract the perceived direction of auditory motion (Kitajima & Yamashita, 1999; Mateeff, Hohnsbein & Noack, 1985; Soto-Faraco, Lyons, Gazzaniga, Spence & Kingstone, 2002; Soto-Faraco, Spence & Kingstone, 2004a, b, 2005; Stekelenburg & Vroomen, 2009), and auditory motion can attract visual motion (Alais & Burr, 2004a; Chen & Zhou, 2011; Meyer & Wuerger, 2001; Wuerger, Hofbauer & Meyer, 2003).

From a theoretical point of view, it is important to realize that in spatial ventriloquism, there is not a complete capture of sound by vision but, rather, a mutual attraction in space. The effect of vision on sound location is usually robust, whereas the reverse effect—sound attracting visual location—is usually quite subtle and has mostly been observed with visual displays that are difficult to localize (Alais & Burr, 2004b; Bertelson & Radeau, 1981, 1987). More recently, though, a particularly clear effect of sound on visual localization has been reported by Hidaka et al. (2009; see also Teramoto, Hidaka, Sugita, Sakamoto, Gyoba, Iwaya & Suzuki, 2012). These authors presented a blinking visual stimulus at a fixed location against a nontextured dark background. This static blinking stimulus is perceived to be moving laterally when the flash onsets are synchronized with an alternating left–right sound source. This illusory visual motion is particularly powerful when retinal eccentricity is increased, and it also works in the vertical dimension when sounds alternate in upper and lower space (for a demo, see www.journal.pone.0008188.s003.mov).

Temporal ventriloquism: Immediate effect

Less well-known is that an analogous phenomenon of intersensory binding occurs in the time dimension, referred to as temporal ventriloquism. Here, temporal aspects of a visual stimulus, such as its onset, interval, or duration, can be shifted by slightly asynchronous auditory stimuli (Alais & Burr, 2004a; Bertelson, 1999; Burr, Banks & Morrone, 2009; Chen, Shi & Müller, 2010; Fendrich & Corballis, 2001; Freeman & Driver, 2008; Getzmann, 2007; Morein-Zamir, Soto-Faraco & Kingstone, 2003; Recanzone, 2009; Scheier, Nijhawan & Shimojo, 1999; Sekuler, Sekuler & Lau, 1997; Vroomen & de Gelder, 2004b; Watanabe & Shimojo, 2001). One particularly clear manifestation of temporal ventriloquism is that an abrupt sound attracts the apparent onset of a slightly asynchronous flash (see Fig. 2). As in the spatial case, temporal ventriloquism can also be evoked by touch, and it can also become manifest in motor–sensory illusions (Bresciani & Ernst, 2007; Keetels & Vroomen, 2008a).

In general, researchers have interpreted temporal ventriloquism in terms of “capture” of auditory time onsets (or time intervals) over corresponding visual time onsets (or time intervals), rather than as a mutual bias between vision and audition, as in the case of spatial ventriloquism (e.g., Recanzone, 2009). An early demonstration of what one might, arguably, refer to as an example of temporal ventriloquism was reported by Gebhard and Mowbray (1959) in a phenomenon called auditory driving. They presented observers with a flickering light (5–40 Hz) and a fluttering sound (varying between ~5 and ~40 Hz). Observers reported that a constant flicker rate altered when the flutter changed, whereas the reverse effect (visual flicker altering auditory flutter) could not be observed. In more recent years, temporal ventriloquism has been demonstrated in a number of other paradigms: Besides auditory driving (Bresciani & Ernst, 2007; Gebhard & Mowbray, 1959; Recanzone, 2003; Shipley, 1964; Welch, DuttonHurt & Warren, 1986), or a variant of this called the double-flash illusion (Shams, Kamitani & Shimojo, 2000), researchers have used the flash-lag effect with accompanying sounds (Vroomen & de Gelder, 2004b), visual temporal order judgment (TOJ) tasks with accompanying sounds (Bertelson & Aschersleben, 2003; Morein-Zamir et al., 2003; Vroomen & Keetels, 2006), sensorimotor synchronization (Aschersleben & Bertelson, 2003; Repp, 2005; Repp & Penel, 2002; Stekelenburg, Sugano & Vroomen, 2011; Sugano, Keetels & Vroomen, 2010, 2012), and other variants of cross-modal temporal capture (Alais & Burr, 2004b; Bruns & Getzmann, 2008; Chen & Zhou, 2011; Freeman & Driver, 2008; Getzmann, 2007; Kafaligonul & Stoner, 2010; Shi, Chen & Müller, 2010; Vroomen & de Gelder, 2000, 2003).

A particularly useful setup that has provided a relative bias-free measure of temporal capture was first described by Scheier et al. (1999). They used a visual TOJ task in which observers judged which of two flashes had appeared first (top or bottom flash first?). Scheier et al. added two sounds that could be presented either before the first and after the second flash (condition “AVVA”) or in between the two flashes (condition “VAAV”; see Fig. 3).

Observers judge which of two flashes (upper or lower) appeared first. Judgment of visual temporal order is difficult because the flashes are presented at a stimulus onset asynchrony (SOA) below the just noticeable difference (JND). Two click sounds, one just before the first flash and the other after the second flash, make this task easier because the clicks shift the apparent onsets of the flashes, thereby increasing the apparent SOA above the JND. Sensitivity for visual temporal order thus improves when flashes are “sandwiched” by clicks (AVVA). If the clicks were presented in between the two flashes (VAAV), sensitivity would become worse, because clicks then decrease the apparent SOA (Scheier et al., 1999)

Participants could ignore the sounds because they were not in any sense informative about visual temporal order (e.g., the first sound does not tell whether the “upper” or “lower” light was presented first). Yet the two sounds effectively pulled the lights further apart in time in the AVVA condition, making the visual TOJ task easier in the sense that the sounds improved the visual just noticeable difference (JND). On the contrary, the two sounds attracted the two lights to be closer together in time in the VAAV condition, rendering the visual TOJ task more difficult. A single sound, either before or after the two flashes did not affect JNDs, thus indicating that the number of sounds had to match the number of flashes. Morein-Zamir et al. (2003) replicated Scheier et al. (1999), but with extended sound–light intervals. They found that abrupt sounds attract the onsets of lights if presented within a time window of ~±200 ms from the flash. Using the same paradigm, temporal ventriloquism has also been demonstrated between the visual and tactile modalities, in which taps capture the onsets of visual stimuli (Keetels & Vroomen, 2008a, 2008b), and between the auditory and tactile modalities (Ley, Haggard & Yarrow, 2009; Wilson, Reed & Braida, 2009).

Temporal ventriloquism is not restricted to the capture of onsets only but has also been observed in temporal interval perception in which visual intervals are perceived to be longer in the presence of concurrently paired auditory stimuli (Burr et al., 2009; Shi et al., 2010). Strong cross-modal temporal capture can also occur for an emergent attribute of dynamic arrays. Several recent studies examining apparent motion have shown that the direction of motion in a target modality (visual or tactile) can be modulated by spatially uninformative but temporally irrelevant grouping stimuli in the distractor (auditory) modality (Chen, Shi & Müller, 2011; Freeman & Driver, 2008; Kafaligonul & Stoner, 2010). Cross-modal temporal capture in motion perception has also been demonstrated in a task of categorizations of visual motion percepts (Getzmann, 2007; Shi et al., 2010). Temporal capture has also been demonstrated in synchronization tasks in which observers are quite capable of tapping a finger in synchrony with a click while ignoring a temporally misaligned flash, but when trying to tap in synchrony with a flash, participants have great difficulty ignoring a temporally misaligned click (Aschersleben & Bertelson, 2003; Repp, 2005).

Spatial ventriloquist aftereffects

Another signature of a “true” merging of the senses is that prolonged exposure to an intersensory conflict leads to compensatory aftereffects. For spatial ventriloquism, it consists of postexposure shifts in auditory localization toward the visual distractor (Bertelson, Frissen, Vroomen, & de Gelder, 2006; Canon, 1970; Frissen, Vroomen, de Gelder & Bertelson, 2003, 2005; Lewald, 2002; Radeau, 1973, 1992; Radeau & Bertelson, 1969, 1974, 1976, 1977, 1978; Recanzone, 1998; Zwiers, Van Opstal & Paige, 2003) and sometimes also in visual localization (e.g., Radeau, 1973; Radeau & Bertelson, 1974, 1976, Experiment 1). A simple procedure for measuring aftereffects is depicted in Fig. 4, where, after exposure to an audiovisual spatial conflict, unimodally presented test sounds are displaced in the direction of the conflicting visual stimulus seen during the exposure phase.

Setup for measuring a spatial ventriloquist aftereffect: Observers are first exposed for a prolonged time to an audiovisual spatial conflict (here, a train of flashes to the right of sounds). In an auditory posttest, the apparent location of the sound is shifted in the direction of the previously experienced conflict

It is generally agreed that these aftereffects reflect a recalibration process that is evoked to reduce the discrepancy between the senses. Most likely, this kind of recalibration is essential in achieving and maintaining a coherent intersensory representation of space, as in the case of prism adaptation (Held, 1965; Redding & Wallace, 1997; Welch, 1978). On a long-term scale, recalibration may compensate for growth of the body, head, and limbs, while on a short-term scale, it likely accommodates all kinds of changes in the acoustic environment that occur when, for example, one enters a new room.

Examining aftereffects has several interesting properties that are not available when testing immediate effects: One is that, during the posttest, observers do not need to ignore a visual distractor, because the test stimuli are presented unimodally. The advantage of this is that Stroop-like response conflicts between modalities, like an observer who points by mistake to a flash rather than a target sound, do not contaminate the picture. Another advantage is that one can probe for the occurrence of aftereffects at different stimulus values than the one used during exposure. This can tell one whether the changes were specific to the values used in the exposure situation or, instead, generalize to a range of neighboring values.

The magnitude of the aftereffect typically depends on the number of exposure trials and the spatial discrepancy experienced during exposure (usually between 5° and 15°). Usually, it is a fraction of that discordance, although it can vary considerably by about 10 %–50 % in humans (Bertelson et al., 2006; Frissen, Vroomen & de Gelder, 2012; Kopco, Lin, Shinn-Cunningham & Groh, 2009) and 25 % in monkeys (but see Recanzone, 1998, who obtained the same amount of aftereffect as the adapting displacement). When observers are adapted in a single location in space, visual recalibration of apparent sound location does not shift uniformly to the left or right, but the effect is bigger at the trained than at the untrained location (Bertelson et al., 2006). This location-specific aftereffect partly shifts with eye gaze (Kopco et al., 2009).

The transfer of the aftereffect has also been examined in the auditory frequency domain to investigate whether adaptation is specific for sound localization cues on the basis of interaural time differences (mainly used for low-frequency tones) and interaural level differences (mainly used for high-frequency tones). The critical examination is to use the same or different auditory frequencies in the exposure and test phases. The picture here is not entirely clear: Recanzone (1998) and Lewald (2002) reported that aftereffects did not transfer across frequencies of 750 and 3000 Hz (Bruns & Röder, 2012; Lewald, 2002; Recanzone, 1998), while Frissen and collaborators obtained transfer across even wider frequency differences of 400 and 6400 Hz (Frissen et al., 2003, 2005)

Spatial ventriloquism and its aftereffect are also effective in improving spatial hearing in monaural conditions when interaural difference cues are not available. For example, Strelnikov, Rosito and Barone (2011) had observers wear an ear plug for 5 days, during which time they were trained in five 1-h sessions to localize monaural sounds. Sound localization became most accurate if, during training, monoaural sounds were paired with spatially congruent flashes (in which case the flashes thus effectively evoked a ventriloquist effect because the monaural sounds were initially hard to localize). Training was less effective if participants received corrective feedback only after each response (i.e., right/wrong) or no feedback at all, thus demonstrating that the simultaneous flash was an effective “teacher” for sound location.

The speed with which the ventriloquist aftereffect builds up has also been examined. In traditional demonstration of the ventriloquism aftereffect, the acquisition takes minutes or even longer (Frissen et al., 2003; Recanzone, 1998). More recent evidence, though, has shown that the acquisition of a spatial ventriloquist aftereffect can be very fast, even after a single exposure to auditory–visual discrepancy, while the retention of the aftereffect is strong (Frissen et al., 2012; Wozny & Shams, 2011b). The ventriloquist aftereffect has also been examined in other modalities. Audiotactile ventriloquist aftereffects have been demonstrated by Bruns, Liebnau and Röder (2011a); Bruns, Spence and Röder (2011b). They also suggested that auditory space can be rapidly recalibrated to compensate for audiotactile spatial disparities. These rapidly established ventriloquism aftereffects likely reflect the fact that our perceptual system is in a continuous state of recalibration, an idea already proposed by Held (1965), but it remains to be examined whether these rapid adaptation effects differ from long-term adaptation.

Temporal ventriloquist aftereffects

It has only been discovered recently that prolonged exposure to relatively small cross-modal asynchronies (e.g., a sound trailing a light by 100 ms) result, as in the spatial domain, in compensatory aftereffects. Eventually, this asynchrony between the senses—or more precisely, a fraction thereof—may go unnoticed, and it becomes the reference for what is “in sync.” Both Fujisaki, Shimojo, Kashino and Nishida (2004) and Vroomen, Keetels, de Gelder and Bertelson (2004) first showed this in a similar paradigm (see Fig. 5): They exposed observers to a train of sounds and light flashes with a constant but small delay (~ − 200– ~ +200 ms) and then tested them in an audiovisual TOJ or simultaneity judgment (SJ) task. Both studies found that at the point where the two stimuli were perceived to be maximally synchronous, the point of subjective simultaneity (PSS), or the area in which the two stimuli were perceived as synchronous (the temporal window of simultaneity), was shifted (or expanded) in the direction of the exposure lag (Fujisaki et al., 2004; Vroomen et al., 2004).

Setup for measuring a temporal ventriloquist aftereffect: Observers are exposed for a prolonged time to an audiovisual temporal conflict (a sound appearing after a flash). In a posttest, observers then judge the temporal order of a flash and sound (“sound-late” or “sound-early”). After exposure to sound-late stimuli, a test sound appearing at an unexpected short interval after the flash is experienced to occur before the flash

Since then, many other studies have reported similar effects, extending these findings to other modalities, including tactile and motor–sensory timing and other domains like audiovisual speech (for an extensive review on perception of synchrony, see Vroomen & Keetels, 2010).

A particularly interesting finding of the temporal ventriloquist aftereffect is that repeated delays between actions and the perceptual consequence of that action can also result in a shift of the PSS such that the immediate feedback seems to precede the causative act (i.e., consequence-before-cause). Stetson, Cui, Montague and Eagleman (2006) examined this by using a fixed delay between participants’ actions (keypresses) and subsequent sensations (flashes). Observers often perceived flashes at unexpectedly short delays after the keypress as occurring before the keypress, thus demonstrating a recalibration of motor–sensory TOJs (Stetson et al., 2006). Similar findings have also been reported when the visual feedback consists of the otherwise naturalistic image of one’s own hand, projected on a mirror via a time-delayed camera, rather than an artificial flash (Keetels & Vroomen, 2012).

Of current interest is determining the exact locus at which temporal adaptation occurs. One view is that there are sensory-specific criterion shifts for when visual or auditory stimuli are detected. On this account, audiovisual asynchrony adaptation is achieved by a modulation of processing speed such that lagging stimuli are sped up and/or leading stimuli are slowed down (Di Luca, Machulla & Ernst, 2009; Navarra, Hartcher-O’Brien, Piazza & Spence, 2009). Ultimately, these shifts in the processing speed of the involved modalities may bring the two signals into temporal alignment with one another. One prediction of this account is that unimodal stimulus detection in the adjusted modality becomes slower for the leading modality and/or faster for the lagging modality. In line with this view, Navarra et al. (2009) reported that after audiovisual adaptation to sound-lagging stimuli, unimodal detection of the sound was sped up. Likewise, Di Luca et al. (2009) reported that auditory and visual latency shifts were dependent on the reliability of the involved modality.

Another prediction of this sensory-specific criterion-shift account is that the adjusted modality causes a shift of equal magnitude in other cross-modal combinations. For example, Sugano et al. (2010) compared lag adaption in motor–visual and motor–auditory pairings (i.e., a finger tap followed by a delayed click or a delayed flash) and reported that the PSS was uniformly shifted within and across modalities. Adaptation to a delayed tap–click thus shifted not only the perceived timing of a tap–click test stimulus, but also the perceived timing of a tap–flash test stimulus (and vice versa). They argued that this pattern was most easily accounted for by assuming that the timing of the motor component (when was the tap?) shifted.

Yet another prediction of the sensory-specific criterion-shift account is that adaptation to asynchrony produces uniform recalibration across a whole range of SOAs. Observers who shift their PSS by 30 ms to sound-leading thus should do this across a whole range of SOAs. Interestingly, contrary to this prediction, it has been reported that the magnitude of the induced shift is not equal for each SOA but actually increases as the SOA of the test stimulus moves away from the adapted delayFootnote 1 (Roach, Heron, Whitaker & McGraw, 2011). Roach et al. examined this by adapting observers to an audiovisual delay of either 100-ms sound-first or 100-ms light-first trials and then measured the perceived magnitude of temporal separation at a wider range of SOAs. The authors found that the magnitude of the induced bias was practically zero at the adapted delays themselves (if compared with no delay—i.e., 0-ms adaptation baseline) but increased as the SOA of the test stimulus moved away from that of the adaptor. To explain these findings, Roach et al. proposed that multisensory timing is represented by a dedicated population code of neurons that are each specifically tuned to different asynchronies. Intersensory timing is represented by the distributed activity across these neurons. When observers adapt to a specific delay—say, audiovisual pairs of 100 ms sound-first—it results in a reduction of the response gain of the neurons around the adapted delay of 100-ms sound-first. A simultaneous sound–light pair (at 0-ms lag) then causes a repulsive shift of the population response profile away from the adapted SOA, and a simultaneous pair is then perceived as ‘light-first’ (see also Cai, Stetson & Eagleman, 2012, for a similar model).

Another line of research has examined whether temporal recalibration is stimulus specific or, rather, generalizes across different stimulus values. Navarra, García-Morera and Spence (2012) reported that audiovisual temporal adaptation only partly generalizes across auditory frequencies, since exposure to lagging sounds of 250 Hz caused shifts of the PSS in an SJ task if the test sounds were of the same frequency (250 Hz) or slightly different (300 Hz) but the effect was smaller (although still significant) if the test sound was 2500 Hz. In a further quest for stimulus specificity, Roseboom and Arnold (2011) adapted observers to a male actor on the left of the center of a screen whose lip movements lagged the sound track, whereas a female actor was shown on the right of the screen whose lip movements preceded the soundtrack. Results showed that audiovisual synchrony estimates for each actor were shifted toward the preceding audiovisual timing relationship for that actor. Temporal recalibrations thus occurred in positive and negative directions concurrently. This refutes the idea of a generalized timing mechanism but, rather, supports the idea that perceivers can form multiple concurrent estimates of appropriate timing for audiovisual synchrony. In a similar vein, Heron, Roach, Hanson, McGraw and Whitaker (2012) showed that observers were able to simultaneously adapt to two opposing temporal relationships, provided stimuli were segregated in space. Perceivers thus could concurrently be adapted to “sound-first on the left” and “flash-first on the right.” Interestingly, no stimulus-specific recalibration was found when the spatial segregation was replaced by contextual stimulus features like “high-pitched sound-first” and “low-pitched sound late.” This may suggest that audiovisual timing is spatially selective or, alternatively, that adapters need to be sufficiently different from each other so that separate timing relations can be maintained.

Spatial and temporal criteria for intersensory pairing

The underlying notion for both spatial and temporal ventriloquism is that the brain integrates, despite small deviances in space and time, signals from different modalities into a single multisensory event. Critically, when the deviance between the signals in space or time is too large, the signals likely originate from different events, in which case there is no reason to “bind” the information streams. Consequently, there is then also no reason to fuse, integrate, or recalibrate, because two separate events are perceived. This notion raises the question of whether spatial and temporal ventriloquism actually depends on the same a priori criteria for intersensory binding. Surprisingly, from the literature, it appears that this is most likely not the case. There is a well-established finding that a variety of multisensory illusions are preserved over a time window of several hundred milliseconds surrounding simultaneity, giving rise to the notion of a “temporal window of integration” (Colonius & Diederich, 2004; Dixon & Spitz, 1980; van Wassenhove, Grant & Poeppel, 2007). In the same vein, one can adopt a “spatial window of integration” for when multisensory illusions are likely to occur. The question is whether these putative spatial and temporal windows of integration are the same for spatial and temporal ventriloquism. From the literature, it appears that they are quite different. For spatial ventriloquism, several behavioral and physiological studies have shown that the spatial ventriloquist effect disappears when the audiovisual temporal alignment is outside a −100 – + 300 ms window (−100 ms = sound before vision; +300 ms = sound after vision), while the horizontal spatial alignment should not exceed ~15° (Godfroy et al., 2003; Hairston, Wallace, Vaughan, Stein, Norris & Schirillo, 2003; Lewald & Guski, 2003; Slutsky & Recanzone, 2001; Radeau & Bertelson, 1977), although the specific degree of tolerated disparities could take a wide range (Wallace, Roberson, Hairston, Stein, Vaughan & Schirillo, 2004).

For temporal ventriloquism, though, these windows are quite different, since the temporal audiovisual asynchrony should not exceed ~200 ms, whereas spatial disparity plays almost no role. Concerning the temporal window, Morein-Zamir et al. (2003) reported that accessory auditory stimuli could shift the perceived time of occurrence of visual stimuli if presented within ~200 ms (Morein-Zamir et al., 2003). The double-flash illusion (the illusory flashing in the presence of multiple beeps) also declines when audiovisual asynchrony exceeds ~70 ms (Shams et al., 2000). Jaekl and Harris (2007) reported that temporal cross-capture of audiovisual stimuli takes place within a temporal disparity of ~125 ms (Jaekl & Harris, 2007). These relatively narrow temporal criteria for temporal ventriloquism to take effect may reflect the narrow integration time of polysensory neurons in the brain (Meredith, Nemitz & Stein, 1987; Recanzone, 2003).

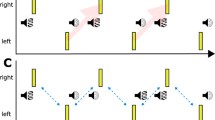

The most striking difference with spatial ventriloquism, though, is that temporal ventriloquism is hardly affected by spatial discordance (Bruns & Getzmann, 2008; Keetels & Vroomen, 2008a; Recanzone, 2003; Vroomen & Keetels, 2006). Vroomen and Keetels (2006) examined this in a setup shown in Fig. 6.

Observers judged which of two flashes (upper or lower) appeared first. Sensitivity for visual temporal order improved, relative to a silent control condition, when clicks were presented in an AVVA style (temporal ventriloquism [TV]). This TV-effect was not affected by whether the sounds came from the same location as rather than a different location than the lights (a), were static rather than moving (b), and came from the same rather than the opposite side of fixation (c). The laterally presented sounds in panel c were potent because they did capture visuospatial attention, but this did not affect TV (Vroomen & Keetels, 2006)

Observers were asked to judge whether a lower or upper LED was presented first (a visual TOJ task) while two accessory sounds were sandwiched in an AVVA style at ±100-ms SOAs such that they improved the visual JND (= temporal ventriloquism). Crucially, the improvement by the sounds was equal when sounds came from the same location as or a different location than the lights (Fig. 6a), for static sound sounds from the same location or for dynamic sounds with apparent motion from left to right or right to left (Fig. 6b), and for sounds and lights coming from the same side or the opposite sides of central fixation (Fig. 6c). In the setup of Fig. 6c, it could also be demonstrated in a visual detection task that the lateral sounds actually captured visuospatial attention, because observers were faster to detect a flash when the sounds came from the same, rather than the opposite, side of fixation. The sounds thus captured visuospatial attention (see, e.g., Driver & Spence, 1998). Temporal ventriloquism thus appears to be independent of the spatial separation between sound and flash, despite the fact that the location of the sounds was potent enough to capture visuospatial attention (see also Keetels & Vroomen, 2008a, for similar effects with tactile–visual stimuli). For audiovisual temporal recalibration, it also appears that spatial misalignment between sound and flashes does not decrease temporal recalibration (Keetels & Vroomen, 2007), although audiovisual spatial alignment can be of importance when sounds and flashes are presented in continuous streams with an ambiguous temporal ordering (Yarrow, Roseboom & Arnold, 2011). To summarize, it appears that temporal ventriloquism has, in comparison with spatial ventriloquism, a somewhat smaller window of temporal integration but a much wider, if not a nonexisting, window of spatial integration.

The role of attention for the spatial ventriloquist effect

An important controversy regarding the mechanism of multisensory binding is the degree to which it operates automatically, without the need for attention (e.g., Talsma, Senkowski, Soto-Faraco & Woldorff, 2010). In the visual domain, adaptation effects that were once thought to be entirely stimulus-driven have since proved to be remarkably susceptible to the attentional state of the observer (e.g., Verstraten & Ashida, 2005). Does the same apply to spatial and temporal ventriloquism?

The initial evidence about the role of attention suggested that spatial ventriloquism is largely an automatic phenomenon (e.g., Alais & Burr, 2004a; Bertelson & Aschersleben, 1998; Bertelson, Pavani, Ladavas, Vroomen & de Gelder, 2000a; Bertelson, Vroomen, de Gelder & Driver, 2000b; Bonath, Noesselt, Martinez, Mishra, Schwiecker, Heinze & Hillyard, 2007; Driver, 1996; Vroomen, Bertelson & de Gelder, 2001a, b). This assumption is supported by the observation that the ventriloquist effect is quite robust even if participants are instructed to be aware of the spatial discrepancy between the auditory and visual stimuli (Radeau & Bertelson, 1974) or, alternatively, the task becomes nontransparent by adopting a psychophysical staircase procedure (Bertelson & Aschersleben, 1998). Behavioral evidence from spatial cuing studies has also provided converging evidence demonstrating that (spatial) ventriloquism can be dissociated from where endogenous and exogenously cued visual attention is oriented (Bertelson et al., 2000a; Vroomen et al., 2001a, b). The automatic nature of the ventriloquist illusion is further supported by a study with patients with left visual neglect who consistently failed to detect a stimulus presented in their left visual field; nevertheless, their pointing to a sound that was delivered simultaneously with the unseen flash was shifted in the direction of the visual stimulus (Bertelson et al., 2000a).

More recently, though, some authors have questioned the full automaticity of the ventriloquist effect and suggested that attention might at least have a modulating influence (Fairhall & Macaluso, 2009; Röder & Büchel, 2009; Sanabria, Soto-Faraco & Spence, 2007b). Maiworm, Bellantoni, Spence and Röder (2012) examined whether audiovisual binding, as indicated by the magnitude of the ventriloquist effect, is influenced by threatening auditory stimuli presented prior to the ventriloquist experiment. This emotional stimulus manipulation resulted in a reduction of the magnitude of the subsequently measured ventriloquist effect in both hemifields, as compared with a control group exposed to a similar attention-capturing but nonemotional manipulation. This piece of evidence was taken to show that the ventriloquist illusion is not fully automatic, although there is no straightforward explanation why it is reduced. Borjon, Shepherd, Todorov and Ghazanfar (2011) also reported a novel finding of visual gaze steering auditory spatial attention. Specifically, visual perception of eye gaze and arrow cues presented slightly before sounds shifted the apparent origin of these sounds (delivered through headphones) in the direction by the arrows or eye gaze. In both conditions, the shifts were equivalent, suggesting a generic, supramodal attentional influence by visual cues on immediate sound localization. The authors claimed that they could distinguish (in their mathematical model) a simple response bias from a genuine perceptual shift, although this seems questionable to us. A simple response bias might be that whenever an observer is unsure about sound location, he/she responds in the direction of the eyes or arrow. The sounds whose location is ambiguous should then show a shift by gaze or arrows, but not the nonambiguous clearly localizable sounds. This was the actual pattern in the data, and it seems, therefore, that future studies need to examine the exact nature of this gaze/arrow effect on sound location in more detail.

The putative role of attention for the spatial ventriloquist aftereffect

The extent to which the ventriloquist aftereffect depends on attention has also been examined. A study by Eramudugolla, Kamke, Soto-Faraco and Mattingley (2011) reported that a robust auditory spatial ventriloquist aftereffect could be induced when participants fixated on a central visual stimulus while the audiovisual adaptors (a click sound with a spatially discordant flash) were presented in the periphery. A ventriloquist aftereffect was thus obtained despite the fact that the visual inducer had not been in the focus of attention. Possibly, though, despite central fixation, attention could have slipped through to the visual inducer. In a further attempt to examine the role of focal attention, the authors used a dual-task paradigm in which participants had to detect, during the exposure phase, a visual target at central fixation that was either easy or difficult to detect. In contrast with the notion that the difficult task would deplete attentional resources necessary for adaptation, these authors found that increasing load from low to high levels did not abolish the aftereffect but, in some conditions, actually enhanced it. The underlying basis of this load effect on the ventriloquist aftereffect remains unknown, but the results are in clear contradiction with the notion that attention increases (or is necessary for) intersensory binding. Clearly, though, further testing is necessary to resolve this controversy regarding the mechanisms of multisensory integration and the degree to which spatial ventriloquism might operate automatically.

The role of attention for the temporal ventriloquist effect

There is substantial evidence that the brain has “hard-wired” mechanisms for extracting spatial information from sound and vision, but the neural evidence for “hard-wired” mechanism to extract the relative timing from different senses is much weaker. In fact, explicit judgments about cross-modal temporal order can be very difficult and require high-level processing, which is quite unlike spatial judgments about sound and flashes, like pointing or saccades that are mainly driven in an automatic fashion. One simple but important observation is that detection of cross-modal synchrony can become almost impossible if the presentation rates are above 5 Hz, which is far below the temporal limits of the individual visual or auditory systems (Benjamins, van der Smagt & Verstraten, 2008; Fujisaki & Nishida, 2005, 2007). Fujisaki et al. proposed that audiovisual asynchrony perception is mediated by a ‘mid-level” mechanism that first needs to extract salient auditory and visual features before making temporal cross-correlations across sensory channels (Fujisaki & Nishida, 2005, 2007, 2008, 2010), as opposed to earlier detection by specialized low-level sensors. Further evidence in support of the idea that intersensory binding needs temporally salient events comes from the “pip-and-pop” paradigm (Van der Burg, Olivers, Bronkhorst & Theeuwes, 2008). These authors reported that only transient pips (as opposed to slowly changing sounds) can make a synchronized color/luminance change in the visual periphery more salient (Van der Burg, Cass, Olivers, Theeuwes & Alais, 2010). In addition, the capturing effect of transient sounds requires that visual attention be spread over the visual field rather than focused on fixation. The visual stimulus thus needs some attentional resources before a sound can capture its onset (Van der Burg, Olivers & Theeuwes, 2012).

It also appears that the auditory capturing stimulus needs to be segregated from the background. Keetels, Stekelenburg and Vroomen (2007) examined this in a visual TOJ task in which paired lights were embedded in a train of auditory beeps (see Fig. 7).

a Sounds presented in a train of other sounds need to differ in pitch, rhythm, or location to capture flashes. b When the sounds are identical to the other sounds, there is no intersensory binding with the flashes, because sounds are now grouped as one stream. Within-modality auditory grouping then takes priority over cross-modal audiovisual binding (Keetels et al., 2007)

The capturing sounds (those temporally closest to the visual flashes) had either the same features as or different features (like pitch, rhythm, or location) from the flanker sounds. The authors found that temporal ventriloquism occurred only when the two capturing sounds differed from the flankers, which made them stand out, thus demonstrating that (intramodal) grouping of the sounds in the auditory stream took priority over intersensory pairing. Audiovisual temporal ventriloquism thus requires salient auditory and visual stimuli with a sharp rise time of energy. The extent to which spatial ventriloquism requires similarly “sharp” auditory and visual transient stimuli to bias sounds in the spatial domain has, to the best of our knowledge, not been examined in a systematic way.

The role of attention for the temporal ventriloquist aftereffect

When studying audiovisual temporal recalibration, the majority of studies tried to ensure that observers actually saw the visual part of the audiovisual adapter by engaging observers in either a visual fixation task or a visual detection task of oddball stimuli that changed in luminance or size (Fujisaki et al., 2004; Keetels & Vroomen, 2007, 2008b; Navarra et al., 2009; Takahashi, Saiki & Watanabe, 2008; Vroomen et al., 2004). Heron et al. (2012; Heron, Roach, Whitaker & Hanson, 2010), though, had observers focus during adaptation on the temporal relationship of the adapting stimulus itself by using audiovisual oddball stimuli that differed in their temporal relation from the adapters. When observers selectively attended to the temporal relationship, the aftereffect was almost tripled relative to situations in which selective attention was focused on visual features of the same stimuli. Attending to the temporal order of the adapting stimuli itself thus appeared to be an effective booster of temporal recalibration, although it is important to note that diverting attention away from temporal order did not abolish the basic effect. This thus suggests that aftereffects of temporal recalibration may depend more on high-level processing than do aftereffects in spatial ventriloquism, but this idea needs further testing.

The role of cognitive factors for intersensory binding

When information is presented in two modalities, a decision has to be made (usually unconscious) about whether these two information sources represent a single object/event or multiple objects/events. This putative intersensory binding process likely involves an assessment of the degree of concordance of the total sensory inputs with a unitary source (Bertelson, 1998; Radeau, 1994a, b; Radeau & Bertelson, 1977, 1987). Only if the evidence points to a unitary source is information in the involved modalities integrated. A vexing question about this binding processing is whether higher-order cognitive factors play a role. Early studies examined factors that could bias intersensory binding or pairing toward “single” or “multiple” events. One of the factors considered was the compellingness of the situation. These early studies used fairly realistic situations, simulating real-life events such as a voice speaking and the concurrent sight of a face (e.g., Bertelson et al., 1994; Warren, Welch & McCarthy, 1981), or the sight of whistling kettles (Jackson, 1953) or of beating drums (Radeau & Bertelson, 1977, Experiment 1). Radeau and Bertelson (1977), for example, combined a voice with a realistic face (the sight of the speaker) and a simplified visual input (light flashes in synchrony with the amplitude peaks of the sound) and found that exposure to these two situations produced comparable spatial ventriloquist effects, suggesting that realism plays little if any role in spatial ventriloquism.

A critique on these older studies, though, is that comparisons were made across arbitrary stimulus classes (e.g., flashes vs. faces). Bertelson, Vroomen, Wiegeraad and de Gelder (1994) avoided this stimulus confound and obtained more direct evidence in an experiment in which observers heard, on each trial, a speech sound, either /ama/ or /ana/, from an array of seven hidden loudspeakers. At the same time, observers saw on a centrally located screen a face, either upright or inverted, that articulated /ama/ or /ana/ or remained still (the baseline; see Fig. 8).

Observers report what they hear (/ma/ or /na/) and point to where the sound comes from. The video of the speaker attracts, as compared with a static face, the apparent location of the sound (spatial ventriloquism), and it biases the identity of the perceived sound when sound and face are incongruent (McGurk effect). Inverting the face reduces the McGurk effect, but not spatial ventriloquism (Bertelson et al., 1994)

Participants had two tasks: They pointed toward the apparent origin of the sound, and they reported what had been said. The orientation of the face had a large effect on “what” was perceived (i.e., the McGurk effect; McGurk & MacDonald, 1976), but not on “where” the sound came from, because the upright and inverted faces were equally effective in attracting the apparent location of the speech sound. The spatial ventriloquist effect was also equally big for when the sound and the face were congruent (e.g., hearing /ama/ and seeing /ama/) or incongruent (hearing /ana/ and seeing /ama/). For speech sounds, it thus appeared that the orientation of the face and statistical co-occurrence between sound and lip movements did not affect spatial ventriloquism.

Similar questions have been asked more recently by a number of investigators, but with a slightly different approach. One prediction is that for strongly paired stimuli, it is difficult to judge the relative temporal order or the relative spatial location of the individual components, because they are fused (e.g., Arrighi, Alais & Burr, 2006; Kohlrausch & van de Par, 2005; Levitin, MacLean, Matthews, Chu & Jensen, 2000; Vatakis, Ghazanfar & Spence, 2008; Vatakis & Spence, 2007, 2008). Vatakis was the first to report such a “unity” effect in the temporal domain with audiovisual speech stimuli (human voices and moving lips) that were either gender matched (i.e., voices and moving lips belonging to the same person) or mismatched (i.e., voices and moving lips belonging to a different person). When the voice and the face were gender congruent, more multisensory binding took place, leading to a “unity effect,” which was evidenced by poor discrimination thresholds for audiovisual temporal asynchronies. Subsequent studies, though, showed that this phenomenon could not be observed when participants were presented with realistic nonspeech stimuli (Vatakis et al., 2008). Vroomen and Stekelenburg (2011) argued that these comparisons might suffer from the fact that stimuli were compared that differed on a number of low-level acoustic and visual dimensions. To address this concern, they used sine-wave speech (SWS) replicas of pseudowords and the corresponding video of a face that articulated these words. SWS is artificially degraded speech that, depending on instruction, is perceived as either speech or nonspeech whistles. Using these identical SWS stimuli, the authors found that listeners in speech and nonspeech modes were equally sensitive at judging audiovisual temporal order. In contrast, when the same stimuli were used to measure the McGurk effect, they found that the phonetic content of the SWS was integrated with lipread speech only if the SWS stimuli were perceived as speech, but not if perceived as nonspeech. Listeners in speech mode, but not those in nonspeech mode, thus bound sound and vision, but judging audiovisual temporal order was unaffected by this. The evidence for or against a role of cognitive factors in intersensory binding is, at present, thus rather mixed and waits further testing.

The role of synesthetic congruency for intersensory binding

Another factor that may contribute to the “compellingness” of the situation and, thus, may affect intersensory binding is synesthetic congruency. Synesthetic congruency, also referred to as crossmodal correspondences (Spence, 2011), refers to natural or semantic correspondences between stimulus features such as pitch/loudness in the auditory dimension with size/brightness in the visual dimension (Evans & Treisman, 2010; Gallace & Spence, 2006; Guzman-Martinez, Ortega, Grabowecky, Mossbridge & Suzuki, 2012; Makovac & Gerbino, 2010; Parise & Spence, 2008, 2009, 2012; Spence, 2011; Sweeny, Guzman-Martinez, Ortega, Grabowecky & Suzuki, 2012). As an example, people usually associate higher-pitched sounds with smaller/higher/brighter/sharper objects and lower-pitched sounds with larger/lower/dimmer/rounder objects (Hubbard, 1996), although this is not always consistent across cultures (Dolscheid, Shayan, Majid & Casasanto, 2011). In accord with the unity assumption, Parise and Spence (2009) reported that the relative temporal order and relative spatial position of synesthetically congruent pitch/size pairs (high–small or low–large) were more difficult to judge than in incongruent pairs (high–large or low–small; see Fig. 9). These findings cannot be explained in terms of simple response biases or strategies, and the reduced sensitivity for congruent pairings in space and time likely may reflect a stronger binding between the unisensory signals (see also Bien, ten Oever, Goebel & Sack, 2012; Parise & Spence, 2008).

a Large or small flashes were combined with high- or low-pitched tones in a synesthetically congruent (low–large, high–small) or incongruent (low–small, high–large) fashion. b Participants judged whether the sound or flalsh came second. c Judgments of temporal order were more sensitive (steeper curve = smaller just noticeable difference) with incongruent than with congruent pairs, presumably because congruent pairs fuse (Parise & Spence, 2009)

It might also be worth looking at what is known about the neural mechanisms of synesthetic congruency effects. The study by Bien et al. (2012) investigated pitch–size associations in spatial ventriloquism with transcranial magnetic stimulation (TMS) and event-related potentials (ERPs). As in Parise and Spence (2009), observers had more difficulty judging the location of low- or high-pitched tones when these tones were combined with small or large visual circles in a congruent fashion (small–high or large–low) rather than an incongruent fashion (small–low or large–high). ERP recordings showed that the P2 component at right parietal recording sites was, around 250 ms after stimulus onset, also more positive for congruent than for incongruent pairings. In addition, continuous theta-burst TMS applied over the right intraparietal sulcus diminished this congruency effect, thus indicating that the right intraparietal sulcus was likely involved in synesthetic congruency.

Audiovisual synesthetic congruency has also been examined in an fMRI study by Sadaghiani, Maier and Noppeney (2009), who examined whether sounds bias visual motion perception. They used a visual-selective attention paradigm in which observers discriminated the direction of visual motion at several levels of reliability while an irrelevant auditory stimulus was presented that was congruent, absent, or incongruent. The auditory stimulus could be a train of sounds moving from left to right or right to left (termed real auditory motion), a train of static sounds changing in pitch in an upward or downward direction (inducing “synesthetic motion” and biasing visual motion in the vertical direction), or a speech sound saying “up” or “down.” At the behavioral level, all three sounds induced an equally large directional bias of the visual motion percept. At the neural level, though, only the first stimulus (sounds moving in a leftward or rightward direction) influenced the classic visual motion processing area in the left human motion complex (hMT+/V5+), while the speech stimulus—and to a lesser extent, the pitched sounds—influenced the right intraparietal sulcus. This indicates that only natural motion signals were integrated in audiovisual motion areas, whereas the influence of speech and pitch emerged primarily in higher-level convergence regions.

Theoretical accounts and models for immediate effect and aftereffect

Bayesian approaches

When conflicting information is presented via two or more modalities, the modality having the best acuity usually dominates. For a long time, this finding was known as the modality appropriateness and precision hypothesis (Welch & Warren, 1980). In audiovisual spatial ventriloquism, the visual modality has better spatial acuity, and the auditory stimulus is therefore usually biased toward the visual stimulus (Howard & Templeton, 1966). In temporal ventriloquism, on the contrary, the auditory modality is more precise in discriminating the temporal relations, and the perceived arrival time of a visual event is therefore pulled toward the sound. A modern variant of this idea is that stimulus integration follows Bayesian laws. The idea here is that auditory and visual cues are combined in an optimal way by weighting each cue relative to an estimate of its noisiness, rather than one modality capturing the other (Alais & Burr, 2004b; Burr & Alais, 2006; Sato, Toyoizumi & Aihara, 2007).

Several studies have modeled audiovisual interactions with Bayesian inference. The general understanding of the Bayesian approach is that an inference is based on two factors, the likelihood and the prior. The likelihood represents the sensory noise in the environment or in the brain, whereas the prior captures the statistics of the events in the environment (Alais & Burr, 2004b; Battaglia, Jacobs & Aslin, 2003; Burr & Alais, 2006; Ernst & Banks, 2002; Sato et al., 2007; Shams & Beierholm, 2010; Shi et al., 2010; Witten & Knudsen, 2005). With a localization task, Alais and Burr (2004b) demonstrated that the ventriloquist effect results from near-optimal integration. When visual localization is good, vision dominates and captures sound, but for severely blurred visual stimuli, sound captures vision. Körding et al. (2007) extended this idea by formulating an ideal-observer model that first infers whether two sensory cues likely originate from the same event (the intersensory binding) and only then estimates the location from the two sources. They also argued that the capacity to infer causal structure is not limited to conscious, high-level cognition but is performed continually and effortless in perception (Körding et al., 2007).

Bayesian inference in spatial ventriloquism has also been examined with saccadic eye or head movements (Bell, Meredith, Van Opstal & Munoz, 2005; Van Wanrooij, Bell, Munoz & Van Opstal, 2009). When audiovisual stimuli are spatially aligned within ~20° of vertical separation and observers have to orient to an auditory or a visual target, both latency and accuracy improve, relative to the unisensory and misaligned conditions. This thus indicates a rule of “best-of-both-worlds” (Corneil, Van Wanrooij, Munoz & Van Opstal, 2002), in which observers benefit from the spatial accuracy provided by the visual component, and a shorter latency onset that is triggered by the auditory component. Prior expectations about the audiovisual spatial alignment also matter: That is, participants are faster on aligned trials when 100 % of the trials are aligned, rather than only 10 % of the trials (Van Wanrooij, Bremen & Van Opstal, 2010), thus suggesting that audiovisual binding may have a dynamic component that depends on the evidence for stimulus congruency as acquired from prior experience.

In the spatial ventriloquist effect, locations computed by vision, sound, and touch need to be coordinated. Each sensory modality, though, encodes the position of objects in different frames of reference. Visual stimuli are represented by neurons with receptive fields on the retina, auditory stimuli by neurons with receptive fields around the head, and tactile stimuli by neurons with receptive fields on the skin. A change in eye position, head position, or body posture will result in a change in the correspondence between the visual, auditory, and tactile neural responses that encode the same object. To combine these different information streams, the brain must therefore take into account the positions of the receptors in space. Pouget and colleagues have developed a framework to examine how these computations may be performed (Deneve, Latham & Pouget, 2001; Pouget, Deneve & Duhamel, 2002). This theory, targeting multisensory spatial integration and sensorimotor transformations, is based on a neural architecture that combines basis functions and attractor dynamics. Basis function units are used to solve the recoding problem and provide a biologically plausible solution for performing spatial transformations (Poggio, 1990; Pouget & Sejnowski, 1994, 1997), whereas attractor dynamics are used for optimal statistical inference. Most recently, Magosso, Cuppini and Ursino (2012) proposed a neural network model to account for spatial ventriloquism and the ventriloquist aftereffects, using two reciprocally interconnected unimodal layers (unimodal visual and auditory neurons). This model fits nicely with related biological mechanisms (Ben-Yishai, Bar-Or & Sompolinsky, 1995; Ghazanfar & Schroeder, 2006; Georgopoulos, Taira & Lukashin, 1993; Martuzzi, Murray, Michel, Thiran, Maeder, Clarke & Meuli, 2007; Rolls & Deco, 2002; Schroeder & Foxe, 2005). Both neural network models thus suggest that neural processing may be based on probabilistic population coding. The models dovetail well with in vivo observations and nicely explain the spatial ventriloquist effect and its aftereffect

Computational approaches on temporal ventriloquism: Bayes and low-level neural models

The Bayesian approach has also been adopted to understand temporal ventriloquism (Burr et al., 2009; Hartcher-O'Brien & Alais, 2011; Ley et al., 2009; Shi et al., 2010). Interestingly, different from the findings in spatial ventriloquism, all these studies report that the quantitative fit (dominance of auditory over visual in temporal perception) was less perfect than predicted by maximum likelihood estimation, although temporal localization of audiovisual stimuli was better than for the visual sense alone.

Miyazaki, Yamamoto, Uchida and Kitazawa (2006) hypothesized that Bayesian calibration is at work during judgments regarding audiovisual temporal order but that the effect is concealed behind lag adaptation mechanism (Miyazaki et al., 2006). By canceling lag adaptation by using two pitches of sounds, they successfully uncovered “Bayesian calibration” that was working behind lag adaptation (Yamamoto, Miyazaki, Iwano & Kitazawa, 2012). In a recent study, Sato and Aihara (2009, 2011) proposed a unifying Bayesian model to account for temporal ventriloquism aftereffects. According to the model, both lag adaptation and “Bayesian calibration” can be regarded as Bayesian adaptation, the former as adaptation of the likelihood function and the latter as adaptation of the prior probability. The model incorporates trial-by-trial update rules for the size of lag adaptation and the estimate of the peak of prior distribution.

Another interesting approach to understanding the neural and computational mechanism underlying temporal recalibration has been proposed by Roach et al. (2011) for audiovisual timing and by Cai et al. (2012) for motor–visual timing. In both accounts, the relative timing of two modalities is represented by the distributed activity across a relatively small number of neurons tuned to different delays. There is an algorithm that reads out this population code. In Roach et al., exposure to a specific delay selectively reduces the gain of neurons tuned to this delay, resulting in a repulsive shift of the population response to subsequent stimuli away from the adapted value. Cai et al. adopted a somewhat different approach to simulate adaptation to delayed feedback by changing the input weights of the delay-sensitive neurons. It seems that both models are well able to explain temporal recalibration, but at this stage, there is not sufficient evidence to reject either of the models. An appeal of this approach is that it is computationally specific and that shifts in perceived simultaneity following asynchrony adaptation could actually arise from computationally similar processes known to underlie classic sensory adaptation phenomena, such as the tilt aftereffect.

Neural mechanisms in spatial ventriloquism

To what extent is a sound that originates from, say, 5° on the left neurally identical to a sound that actually originates from the center but is ventriloquized by 5° to the left? This question relates to an ongoing debate about whether spatial ventriloquism reveals a genuine sensory process or is just the by-product of response biases. The sensory account emphasizes that inputs from the two modalities are combined at early stages of processing and that only the product of the integration process is available to conscious awareness (Bertelson, 1999; Colin, Radeau, Soquet, Dachy & Deltenre, 2002; Kitagawa & Ichihara, 2002; Stekelenburg, Vroomen & de Gelder, 2004; Vroomen et al., 2001a, b; Vroomen & de Gelder, 2003). The decisional account suggests that inputs from the two modalities are available independently and that the behavioral results mainly originate from response interference, decisional biases, or cognitive biases (Alais & Burr, 2004a; Meyer & Wuerger, 2001; Sanabria, Spence & Soto-Faraco, 2007; Wuerger et al., 2003).

The ERP technique, owing to its excellent temporal resolution, may provide a tool to further assess the level at which the ventriloquism effect occurs. The mismatch negativity (MMN), which indexes the automatic detection of a deviant sound rarely occurring in a sequence of standard background sounds, is assumed to be elicited at a preattentive level (Carles, 2007; Näätänen, Paavilainen, Rinne & Alho, 2007). It is known that a shift in the location of a sound can evoke an MMN. From the literature, it appears that a similar MMN can be evoked by an illusory shift when the location of a sound is ventriloquized by a flash, thus emphasizing the sensory nature of the phenomenon. Several studies have indeed reported that the MMN is reduced when the illusory perception of auditory spatial separation is diminished by the ventriloquist effect (Colin et al., 2002), while others have reported that an MMN can be evoked when an illusory shift (but physically stationary) sound is induced by a concurrent flash (Stekelenburg & Vroomen, 2009; Stekelenburg et al., 2004).

Bonath et al. (2007) combined ERPs with event-related functional magnetic resonance imaging (fMRI) to demonstrate that a precisely timed biasing of the left–right balance of auditory cortex activity by the discrepant visual inputs underlies the ventriloquist illusion. ERP recordings showed that the presence of the ventriloquist illusion was associated with a laterally biased cortical activity between 230 and 270 ms (i.e., the N260 component) as revealed in auditory–visual interaction waveforms. This N260 component was colocalized with a lateralized blood-oxygen-level-dependent (BOLD) response located in the posterior/medial region of the auditory cortex in the planum temporale (Bonath et al., 2007). Bruns and Röder (2010a) also substantiated the N260 as a signature for audiotactile spatial discrepancies. Furthermore, Bertini, Leo, Avenanti and Ladavas (2010) used repetitive transcranial magnetic stimulation (rTMS) to investigate an auditory localization task and replicated the finding that cortical visual processing in the occipital cortex modulates the ventriloquism effect (Bonath et al., 2007). From these results, it thus appears that sound localization is biased by vision and touch around 260 ms, with neural consequences in the auditory cortex (see also Bien et al., 2012). It should be noted, though, that this time course is relatively late if compared with the initial processing of sound location that already takes place at the level of the brainstem. A sound from central location that is ventriloquized by 5° to the left is, at least in the initial processing stages, thus not identical to a sound that originates from 5° on the left. Neural identity—or better, neural similarity—for these two sounds likely occurs later, around 260 ms, in the auditory cortex. It remains for future studies, though, to examine the exact nature of this.

Single neuron approach

Groundbreaking neurophysiological studies of the superior colliculus (SC) have established many principles of our understanding of multisensory processing at the level of single neurons (Meredith & Stein, 1983), and they continue to improve our understanding of multisensory integration in general (Stein & Stanford, 2008). This endeavor has made it possible to examine the mechanism of spatial ventriloquism on the single neuron basis. However, in order to probe the underlying neural mechanisms of the ventriloquist effect at the single neuron level, a suitable animal model where invasive studies can be conducted needs to be identified. Studies have documented several critical regions of the nonhuman primate brain that have multisensory responses, including the superior temporal sulcus (e.g., Benevento, Fallon, Davis & Rezak, 1977; Bruce, Desimone & Gross, 1981, Cusick, 1997), the parietal lobe (e.g., Cohen, Russ & Gifford, 2005, Mazzoni, Bracewell, Barash & Andersen, 1996, Stricanne, Andersen & Mazzoni, 1996), and the frontal lobe (Benevento et al., 1977; Russo & Bruce, 1994). Each of these areas could potentially be involved in multisensory processing that leads to the spatial ventriloquism effect. Direct evidence is lacking, though, due to lacking techniques and experimental methodology, given that it is difficult to have an animal perform a task in which an illusion may (or may not) occur, especially for a one-shot discrimination task of stimulus localization (Recanzone & Sutter, 2008).

Concluding remarks

The spatial and temporal ventriloquist illusions continue to serve as extremely valuable tools for studying multimodal integration. However, they also call for further endeavors of research. One challenge for the near future will be to design experiments that assess spatial and temporal ventriloquism in naturalistic environments so as to examine the role of cognitive factors for intersensory pairing. Another challenge is to specify how the brain represents stimulus reliability of different modalities and how it dynamically weighs stimulus timing and location. Bayesian observers and neural network models assume a correspondence between the architecture of the models and its underlying biological substrates (Ma & Pouget, 2008; Magosso et al., 2012; Stein & Stanford, 2008), but this awaits further proof. This “linking” holds the promise that a potential unifying theory based on an integrative “behavior–neurophysiology–computation” model is awaiting that will give a comprehensive depiction of spatial and temporal ventriloquism. Such a model would clearly facilitate our understanding of some of the basic principles of intersensory interactions in general, even if such understanding needs continuous refining.

References

Alais, D., & Burr, D. (2004a). No direction-specific bimodal facilitation for audiovisual motion detection. Cognitive Brain Research, 19, 185–194.

Alais, D., & Burr, D. (2004b). The ventriloquist effect results from near-optimal bimodal integration. Current Biology, 14, 257–262.

Arrighi, R., Alais, D., & Burr, D. (2006). Perceptual synchrony of audiovisual streams for natural and artificial motion sequences. Journal of Vision, 6, 260–268.

Aschersleben, G., & Bertelson, P. (2003). Temporal ventriloquism: Crossmodal interaction on the time dimension. 2. Evidence from sensorimotor synchronization. International Journal of Psychophysiology, 50, 157–163.

Battaglia, P. W., Jacobs, R. A., & Aslin, R. N. (2003). Bayesian integration of visual and auditory signals for spatial localization. Journal of the Optical Society of America. A, Optics, Image Science, and Vision, 20, 1391–1397.

Bedford, F. L. (1989). Constraints on learning new mappings between perceptual dimensions. Journal of Experimental Psychology. Human Perception and Performance, 15, 232–248.

Benjamins, J. S., van der Smagt, M. J., & Verstraten, F. A. (2008). Matching auditory and visual signals: Is sensory modality just another feature? Perception, 37, 848–858.

Bell, A. H., Meredith, M. A., Van Opstal, A. J., & Munoz, D. P. (2005). Crossmodal integration in the primate superior colliculus underlying the preparation and initiation of saccadic eye movements. Journal of Neurophysiology, 93, 3659–3673.

Benevento, L. A., Fallon, J., Davis, B. J., & Rezak, M. (1977). Auditory-visual interaction in single cells in the cortex of the superior temporal sulcus and the orbital frontal cortex of the macaque monkey. Experimental Neurology, 57, 849–872.

Ben-Yishai, R., Bar-Or, R. L., & Sompolinsky, H. (1995). Theory of orientation tuning in visual cortex. Proceedings of the National Academy of Sciences, 92, 3844–3848.

Bertelson, P. (1998). Starting from the ventriloquist: The perception of multimodal events. In M. Sabourin, F. I. M. Craik, & M. Robert (Eds.), Advances in psychological science. Vol.2: Biological and cognitive aspects (pp. 419–439). Sussex: Psychology Press.

Bertelson, P. (1999). Ventriloquism: A case of cross-modal perceptual grouping. In G. Aschersleben, T. Bachmann, & J. Müsseler (Eds.), Cognitive contributions to the perception of spatial and temporal events (pp. 347–362). Amsterdam: Elsevier.

Bertelson, P., & Aschersleben, G. (1998). Automatic visual bias of perceived auditory location. Psychonomic Bulletin & Review, 5, 482–489.

Bertelson, P., & Aschersleben, G. (2003). Temporal ventriloquism: Crossmodal interaction on the time dimension. 1. Evidence from auditory-visual temporal order judgment. International Journal of Psychophysiology, 50, 147–155.

Bertelson, P., Frissen, I., Vroomen, J., & de Gelder, B. (2006). The aftereffects of ventriloquism: Patterns of spatial generalization. Perception & Psychophysics, 68, 428–436.

Bertelson, P., Pavani, F., Ladavas, E., Vroomen, J., & de Gelder, B. (2000a). Ventriloquism in patients with unilateral visual neglect. Neuropsychologia, 38, 1634–1642.

Bertelson, P., & Radeau, M. (1981). Cross-modal bias and perceptual fusion with auditory-visual spatial discordance. Perception & Psychophysics, 29, 578–584.

Bertelson, P., & Radeau, M. (1987). Adaptation to auditory–visual conflict: Have top-down influences been overestimated here also? Madrid: Paper presented at the 2nd meeting of the European Society for Cognitive Psychology.

Bertelson, P., Vroomen, J., de Gelder, B., & Driver, J. (2000b). The ventriloquist effect does not depend on the direction of deliberate visual attention. Perception & Psychophysics, 62, 321–332.

Bertelson, P., Vroomen, J., Wiegeraad, G., & de Gelder, B. (1994). Exploring the relation between McGurk interference and ventriloquism. Proceedings of the International Congress on Spoken Language Processing, 559–562.

Bertini, C., Leo, F., Avenanti, A., & Ladavas, E. (2010). Independent mechanisms for ventriloquism and multisensory integration as revealed by theta-burst stimulation. European Journal of Neuroscience, 31, 1791–1799.

Bien, N., ten Oever, S., Goebel, R., & Sack, A. T. (2012). The sound of size crossmodal binding in pitch-size synesthesia: A combined TMS, EEG and psychophysics study. NeuroImage, 59, 663–672.

Blakemore, S. J., Bristow, D., Bird, G., Frith, C., & Ward, J. (2005). Somatosensory activations during the observation of touch and a case of vision-touch synaesthesia. Brain, 128, 1571–1583.

Bonath, B., Noesselt, T., Martinez, A., Mishra, J., Schwiecker, K., Heinze, H. J., & Hillyard, S. A. (2007). Neural basis of the ventriloquist illusion. Current Biology, 17, 1697–1703.

Borjon, J. I., Shepherd, S. V., Todorov, A., & Ghazanfar, A. A. (2011). Eye-gaze and arrow cues influence elementary sound perception. Proceedings of the Royal Society. B:Biological Sciences, 278, 1997–2004.

Brancazio, L., & Miller, J. L. (2005). Use of visual information in speech perception: Evidence for a visual rate effect both with and without a McGurk effect. Perception & Psychophysics, 67, 759–769.

Bresciani, J. P., & Ernst, M. O. (2007). Signal reliability modulates auditory-tactile integration for event counting. Neuroreport, 18, 1157–1161.

Bruce, C., Desimone, R., & Gross, C. G. (1981). Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. Journal of Neurophysiology, 46, 369–384.

Bruns, P., & Getzmann, S. (2008). Audiovisual influences on the perception of visual apparent motion: Exploring the effect of a single sound. Acta Psychologica, 129, 273–283.

Bruns, P., Liebnau, R., & Röder, B. (2011a). Cross-modal training induces changes in spatial representations early in the auditory processing pathway. Psychological Science, 22, 1120–1126.

Bruns, P., & Röder, B. (2010a). Tactile capture of auditory localization: An event related potential study. European Journal of Neuroscience, 31, 1844–1857.

Bruns, P., & Röder, B. (2010b). Tactile capture of auditory localization is modulated by hand posture. Experimental Psychology, 57, 267–274.

Bruns, P., Spence, C., & Röder, B. (2011b). Tactile recalibration of auditory spatial representations. Experimental Brain Research, 209, 333–344.

Bruns, P., & Röder, B. (2012). Frequency specificity of the ventriloquism aftereffect revisited. Poster presented at 4th International Conference on Auditory Cortex. August 31st – September 3rd, 2012 in Lausanne, Switzerland

Burr, D., & Alais, D. (2006). Combining visual and auditory information. Progress in Brain Research, 155, 243–258.

Burr, D., Banks, M. S., & Morrone, M. C. (2009). Auditory dominance over vision in the perception of interval duration. Experimental Brain Research, 198, 49–57.

Caclin, A., Soto-Faraco, S., Kingstone, A., & Spence, C. (2002). Tactile “capture” of audition. Perception & Psychophysics, 64, 616–630.

Cai, M. A., Stetson, C., & Eagleman, D. M. (2012). A neural model for temporal order judgments and their active recalibration: A common mechanism for space and time? Frontiers in Psychology, 3, 470.

Calvert, G. A., Spence, C., & Stein, B. E. (2004). The Handbook of multisensory processes. Cambridge: MIT Press.

Canon, L. K. (1970). Intermodality inconsistency of input and directed attention as determinants of the nature of adaptation. Journal of Experimental Psychology, 84, 141–147.

Carles, E. (2007). The mismatch negativity 30 years later: How far have we come? Journal of Psychophysiology, 21, 129–132.

Chen, L., Shi, Z., & Müller, H. J. (2010). Influences of intra- and crossmodal grouping on visual and tactile Ternus apparent motion. Brain Research, 1354, 152–162.

Chen, L., Shi, Z., & Müller, H. J. (2011). Interaction of Perceptual Grouping and Crossmodal Temporal Capture in Tactile Apparent-Motion. PLoS One, 6(2), e17130.

Chen, L., & Zhou, X. (2011). Capture of intermodal visual/tactile apparent motion by moving and static sounds. Seeing and Perceiving, 24, 369–389.