Abstract

This paper presents a PC-based, real-time machine vision system for fabric defect detection. The system consists of two main parts: a hardware platform and a software algorithm. In the hardware platform, the image acquisition subsystem and the transmission operate synchronously, with motion and acquisition synchronized through the encoder and the video capture card. In addition, a double-buffer technique with an alternating acquisition mode is applied to improve real-time performance. Each defect detection algorithm is treated as a single detection unit integrated into the software system, and different detection units are applied to different fabrics and defects to improve detection efficacy. A variety of experiments indicate that the proposed system offers enterprises a lower-cost, higher-performance and more expandable solution.

1 Introduction

Quality control and product testing directly affect economic returns, so they are crucial for every enterprise in the textile industry. According to statistical data, defects reduce the value of fabric by 45 % to 60 % [2], which strongly affects its market competitiveness. Defect detection is therefore the main task of product quality checking for textile enterprises. Human vision inspection still dominates practical production [8] despite its low efficiency, poor accuracy, high miss rate and dependence on human factors, and it no longer meets the needs of the modern textile industry. It is reported that the detection rate falls below 60 % when an experienced inspector checks a batch of 2 m wide cloth at a speed of 30 m/s [3]. Continuous visual inspection also causes eye fatigue, damage to eyesight and an exponential decline in detection rate. Human vision inspection is thus likely to be phased out, and a low-cost, high-performance, real-time detection system is urgently needed. In recent years, much research on automatic fabric defect detection has been carried out at home and abroad. Most approaches fall into four classes: structure based, statistics based, model based and spectral analysis based [9]. Many of the algorithms are classical image processing methods, such as the fast Fourier transform, the Gabor filter [10], the gray level co-occurrence matrix and the wavelet transform [6, 7]. However, these methods do not generalize to varied target objects, and most have only been validated in simulation rather than in industrial settings. In addition, their relatively poor efficiency and real-time performance limit their value for solving practical industrial problems.

This paper presents a machine vision detection system based on a personal computer (PC) for fabric inspection, with low cost, high accuracy (0.264 mm/pixel) and good real-time performance. Because standard components are selected, the transmission and the image acquisition subsystem give the whole system good scalability. Each algorithm is treated as a single detection unit integrated into the software system. Furthermore, the double-buffer [3] alternating acquisition technique, image mosaicking [4], a database and report printing strengthen the real-time and input-output capabilities of the system. Finally, the software system and the hardware platform are combined through a feature-rich graphical user interface, which makes the system convenient to operate.

The paper is organized as follows. Section 2 describes the system hardware platform in detail. Section 3 introduces the core detection methods, namely the Otsu method (Otsu) and the golden image subtraction method (GIS), and then presents the graphical user interface. Section 4 gives the performance tests and experimental results, including detection effectiveness, precision and real-time performance.

2 Hardware Platform

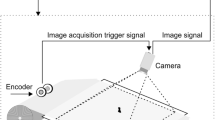

The hardware platform of the machine vision detection system mainly comprises three parts: the mechanical frame, the transmission and the image acquisition subsystem. The proposed system can interact with looms, which enables fully automatic production. The design of the whole system is shown in Fig. 1. The transmission and the image acquisition subsystem play essential roles in the detection system.

2.1 Transmission

The transmission is made up of shafts, motors, transducers and an encoder. It contains several driven shafts and three driving shafts that are driven by motors. When the system runs at high speed, fabric shaking leads to poor acquisition quality in practice. To solve this problem, the driven shafts are installed as a shaft group, which increases the fabric tension on the shafts and eliminates fabric shaking in the detection area.

The transmission in the machine vision detection system operates as follows:

(1) Commands are transmitted from the computer to the transducers over the RS-485 bus using the Modbus protocol.

(2) Once the commands have been received, the transducers drive the motors.

(3) The rolling shafts driven by the motors move the fabric under test.

(4) The encoder, which is driven by the shafts, generates a pulse signal that serves as the external trigger signal for synchronous acquisition.
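As an illustration of step (1), the following minimal sketch builds a Modbus command frame in Python. It assumes Modbus RTU framing, which is common on RS-485 links but is not stated in the text. The slave address, register address and setpoint value are hypothetical and do not come from the transducers used in the system.

```python
def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: initial value 0xFFFF, reflected polynomial 0xA001."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def write_single_register(slave: int, register: int, value: int) -> bytes:
    """Build a Modbus RTU 'Write Single Register' (function 0x06) frame."""
    body = bytes([slave, 0x06]) + register.to_bytes(2, "big") + value.to_bytes(2, "big")
    crc = crc16_modbus(body)
    # Modbus RTU transmits the CRC low byte first.
    return body + crc.to_bytes(2, "little")


# Hypothetical values: slave address 1, speed register 0x2001, setpoint 1500.
frame = write_single_register(slave=1, register=0x2001, value=1500)
print(frame.hex(" "))
```

A receiver can validate such a frame by recomputing the CRC over all eight bytes, which yields zero for an undamaged frame.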

2.2 Image Acquisition

The image acquisition subsystem is a critical part of the whole system and affects detection performance. It involves line scan cameras, a video capture card, an illuminant and a high-performance computer.

Image acquisition is executed in four steps:

(1) The encoder, driven by a shaft, outputs the external trigger signal to the PCI video capture card.

(2) The video capture card receives the external trigger signal through its I/O port and controls the exposure of the CCD cameras, collecting one row of the image (2048 pixels) via the Camera Link ports.

(3) Steps 1 and 2 are repeated, and the object image is collected row by row until 500 rows have been acquired and a frame (2048 \(\times \) 500) is captured.

(4) The video capture card sends the image data to RAM through the PCI port of the computer, and the image is displayed on a VGA monitor.
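The row-by-row assembly in steps (1) to (3) can be sketched as follows. This is a simplified software model, not the capture card's actual firmware or SDK: the class `FrameAssembler` and its method `on_trigger` are hypothetical names, and the rows are synthetic stand-ins for camera data.

```python
from typing import List, Optional

LINE_WIDTH = 2048   # pixels per scanned row (from the text)
FRAME_ROWS = 500    # rows per frame (from the text)


class FrameAssembler:
    """Collects one row per trigger pulse and emits a frame every `rows` rows."""

    def __init__(self, width: int = LINE_WIDTH, rows: int = FRAME_ROWS) -> None:
        self.width = width
        self.rows = rows
        self._pending: List[bytes] = []

    def on_trigger(self, row: bytes) -> Optional[List[bytes]]:
        """Handle one encoder-triggered exposure; return a full frame or None."""
        if len(row) != self.width:
            raise ValueError("unexpected row length")
        self._pending.append(row)
        if len(self._pending) < self.rows:
            return None
        frame, self._pending = self._pending, []
        return frame


assembler = FrameAssembler()
frames = []
for pulse in range(1200):                      # simulate 1200 encoder pulses
    row = bytes([pulse % 256]) * LINE_WIDTH    # stand-in for one camera row
    frame = assembler.on_trigger(row)
    if frame is not None:
        frames.append(frame)
print(len(frames), len(frames[0]), len(frames[0][0]))
```

Because each row is tied to one encoder pulse, the frame height corresponds to a fixed length of fabric regardless of the running speed.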

The image acquisition subsystem is shown in Fig. 2.

To capture high-quality images of the target object, E2V SM2-CL series line scan CCD cameras are selected. Their line scan frequency reaches 28 kHz. Each camera has a resolution of 2048 pixels and is equipped with a Camera Link port with a data rate of 60 Mb/s, which meets the need for image acquisition at high speed and resolution.

For automated optical inspection equipment, the quality of image acquisition depends on the illuminant. An LED matrix illuminant is chosen for system lighting because of its long life, energy saving, uniform and stable output, and suitability for continuous operation. In practice, both incident light and transmitted light are integrated in the system. The incident light enhances the fabric surface texture and defects, while the transmitted light is installed below the target object to make background defects stand out.

The video capture card and the computer play key roles in image acquisition and processing. A BitFlow Neon-CL series video capture card is selected for image acquisition because of its advanced data processing ability, high-speed Camera Link ports, PCI data exchange interface and rich external I/O ports. Owing to its dual-core FPGA and DSP structure, the card has an acquisition speed of 85 MHz at 24 bit, which supports real-time performance. In addition, the video capture card is responsible for synchronizing the image acquisition subsystem with the transmission.

A concurrent multi-camera working strategy is introduced to handle wide fabrics. The cameras are installed at the same distance, receive the same external trigger signal and output synchronized images. In Fig. 3, the coordinate system of the detection area is built with the X axis along the running direction and the Y axis along the width direction. The scan area of each camera has its own coordinate system, which shares the X axis and is offset along the Y axis. This coordinate system makes it possible to locate defects, and, combined with the image mosaic algorithm, all scanned images are fused to build up full-width detection.
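The per-camera coordinate offset can be illustrated with a short sketch that maps a pixel in one camera image to global fabric coordinates. It assumes that adjacent cameras abut exactly with no overlap and that one scanned row corresponds to 0.264 mm of travel (square pixels). Neither assumption is stated in the text, and the names `Camera` and `to_global_mm` are hypothetical.

```python
from dataclasses import dataclass

MM_PER_PIXEL = 0.264      # resolution quoted in the paper
PIXELS_PER_ROW = 2048     # line scan camera width in pixels


@dataclass(frozen=True)
class Camera:
    index: int            # 0 for the first camera along the width
    y_offset_mm: float    # position of its first pixel on the global Y axis


def to_global_mm(cam: Camera, row: int, col: int, rows_before: int = 0):
    """Map pixel (row, col) of one camera image to global (x, y) in millimetres.

    x runs along the fabric running direction, y across the fabric width.
    rows_before is the number of rows scanned before this frame started.
    """
    x_mm = (rows_before + row) * MM_PER_PIXEL
    y_mm = cam.y_offset_mm + col * MM_PER_PIXEL
    return x_mm, y_mm


# Assumption: cameras abut exactly, so each offset is a whole scan width.
scan_width_mm = PIXELS_PER_ROW * MM_PER_PIXEL
cameras = [Camera(i, i * scan_width_mm) for i in range(3)]

x, y = to_global_mm(cameras[1], row=100, col=10, rows_before=500)
print(round(x, 3), round(y, 3))
```

In a real installation the Y offsets would come from calibration, since neighbouring scan areas usually overlap slightly to support image mosaicking.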

3 Detection Algorithm

In the proposed system, the machine vision detection algorithm has a strong effect on detection performance. For practical industrial applications, precision and running speed are the key factors in the study of detection algorithms. Current research has not produced a single detection algorithm that can deal with the full variety of fabrics and defects found in practical production, so a combination of multiple algorithms is proposed for fabric defect detection. Every detection algorithm is treated as a detection unit in the software system, and different algorithms are applied to the target fabrics they suit best, which makes the system more robust and more flexible. Figure 4 gives the model of the detection method.

To achieve a real-time system, the algorithms must meet strict requirements on operational efficiency and time consumption. Consider, for example, three line scan CCD cameras selected to give a full-width scan of 1500 mm wide cloth running at 80 m/min, with defects of size 1 mm \(\times \) 1 mm on the fabric surface required to be detected. The calculation period for each image must be strictly limited to one third of a single acquisition cycle. That is to say, three images (resolution 0.264 mm/pixel) of size 2048 \(\times \) 500 must be processed within the period needed to capture one frame. The parallel working mode of the double-buffer alternating acquisition technique is shown in Fig. 5. While the data in Buffer A is being processed after acquisition, Buffer B captures new image data, and the two buffers then swap roles in a continuing cycle. Compared with the serial data processing mode of a single buffer, the double-buffer alternating acquisition technique doubles the running efficiency of the system, which improves real-time data processing.
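The alternating use of Buffer A and Buffer B can be sketched with two threads and two queues. This is a minimal model of the idea under stated assumptions, not the system's C++ implementation: the acquisition and detection steps are replaced by placeholders, and all names are hypothetical.

```python
import queue
import threading

NUM_FRAMES = 6

free_buffers = queue.Queue()      # buffers ready to be filled by acquisition
full_buffers = queue.Queue()      # buffers waiting to be processed
for name in ("A", "B"):
    free_buffers.put({"name": name, "frame_id": None})

log = []


def acquire():
    """Producer: fill whichever buffer is free, then hand it to processing."""
    for frame_id in range(NUM_FRAMES):
        buf = free_buffers.get()          # blocks while both buffers are in use
        buf["frame_id"] = frame_id        # stand-in for capturing 2048 x 500 pixels
        full_buffers.put(buf)
    full_buffers.put(None)                # sentinel: no more frames


def process():
    """Consumer: process a full buffer while the other one is being filled."""
    while True:
        buf = full_buffers.get()
        if buf is None:
            break
        log.append((buf["frame_id"], buf["name"]))   # stand-in for defect detection
        free_buffers.put(buf)             # return the buffer for reuse


producer = threading.Thread(target=acquire)
consumer = threading.Thread(target=process)
producer.start()
consumer.start()
producer.join()
consumer.join()
print(log)
```

With a single producer and a single consumer, the frames are handled in order and the buffers alternate A, B, A, B. Acquisition only stalls if processing a frame takes longer than capturing the next one, which is why the processing time must stay within the frame period.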

3.1 Otsu Algorithm

Otsu's algorithm, the method of maximum between-class variance, divides the test image into two classes: foreground and background [11]. In this system, the background corresponds to the image texture and the foreground to surface defects. The algorithm yields a suitable threshold T for defect segmentation, which separates the fabric defects from the background texture.

Because of this principle, the Otsu algorithm is fast and has a short detection period, which makes it well suited to fast fabric detection. Figure 6 shows the procedure of the algorithm.

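Assume that the test fabric image has L gray levels, that the number of pixels at level q is \(n_{q}\), and that the total number of pixels in the image is \(n=n_{0}+n_{1}+\ldots +n_{L-1}\). Under uniform quantization, the gray level probability \(P_{q}\) is given in Eq. (1). \(\omega (K)\) and \(\mu (K)\) denote the cumulative gray level probability and the cumulative mean of the pixels up to gray level K, as shown in Eqs. (2) and (3):

\[P_{q}=\frac{n_{q}}{n},\quad q=0,1,\ldots ,L-1 \qquad (1)\]

\[\omega (K)=\sum _{q=0}^{K}P_{q} \qquad (2)\]

\[\mu (K)=\sum _{q=0}^{K}qP_{q} \qquad (3)\]

If the test image is divided into two classes by the gray level threshold T, class \(C_{0}\) represents the image background texture (gray levels from 0 to T) and class \(C_{1}\) represents surface defects (gray levels from \(T+1\) to \(L-1\)). The probabilities of class \(C_{0}\) and class \(C_{1}\) are defined in Eq. (4):

\[\omega _{0}=\sum _{q=0}^{T}P_{q}=\omega (T),\qquad \omega _{1}=\sum _{q=T+1}^{L-1}P_{q}=1-\omega (T) \qquad (4)\]

The mean values of the two classes are shown in Eq. (5):

\[\mu _{0}=\frac{\mu (T)}{\omega (T)},\qquad \mu _{1}=\frac{\mu (L-1)-\mu (T)}{1-\omega (T)} \qquad (5)\]

The average gray value of the whole image can be expressed as Eq. (6):

\[\mu =\mu (L-1)=\sum _{q=0}^{L-1}qP_{q}=\omega _{0}\mu _{0}+\omega _{1}\mu _{1} \qquad (6)\]

The between-class variance, in the standard form of Otsu's method, is given by Eq. (7):

\[\sigma ^{2}_{B}=\omega _{0}(\mu _{0}-\mu )^{2}+\omega _{1}(\mu _{1}-\mu )^{2}=\omega _{0}\omega _{1}(\mu _{0}-\mu _{1})^{2} \qquad (7)\]

The threshold T in \([0, L-1]\) at which the variance \(\sigma ^{2}_{B}\) between the two classes reaches its maximum is the optimal segmentation threshold of the Otsu algorithm. The image is then binarized with threshold T.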
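The threshold search can be illustrated with a short pure-Python sketch that evaluates the between-class variance for every candidate threshold and keeps the maximizer. It uses the standard cumulative form of Otsu's criterion. The function names and the toy pixel values are illustrative only, and a production system would more likely rely on an optimized library routine.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold T that maximizes the between-class variance."""
    n = len(pixels)
    hist = [0] * levels
    for value in pixels:
        hist[value] += 1
    prob = [count / n for count in hist]                 # gray level probabilities
    mean_total = sum(q * p for q, p in enumerate(prob))  # mean of the whole image

    best_t, best_var = 0, -1.0
    omega = 0.0   # cumulative probability of class C0 (levels 0..T)
    mu = 0.0      # cumulative first moment of class C0
    for t in range(levels - 1):
        omega += prob[t]
        mu += t * prob[t]
        if omega <= 0.0 or omega >= 1.0:
            continue                                     # one class is empty
        # Between-class variance expressed through the cumulative sums.
        var_between = (mean_total * omega - mu) ** 2 / (omega * (1.0 - omega))
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def binarize(pixels, t):
    """Pixels above T become 1 (defect candidate), the rest 0 (texture)."""
    return [1 if value > t else 0 for value in pixels]


# Toy image: texture around level 50 with a few bright defect pixels near 200.
image = [48, 50, 52, 50, 49, 51, 200, 205, 50, 198]
t = otsu_threshold(image)
print(t, binarize(image, t))
```

The search visits each gray level once, so its cost is dominated by building the histogram, which is consistent with the short detection period claimed for this method.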

3.2 Golden Image Subtraction Method

The golden image subtraction method (GIS) is an algorithm specialized for defects on fabric with repeating patterns, such as grey cloth, plaid cloth and other cloth with a regularly repeated pattern [5].

The algorithm procedure has three main stages: preprocessing, defect detection and post-processing. First, preprocessing is applied after image acquisition: Gaussian smoothing suppresses the image background texture and random noise, and histogram equalization then enhances the defects. Second, defects are segmented from the test image using a threshold obtained by training on the reference image. Finally, the detection results of the GIS algorithm are median filtered to eliminate noise.

The GIS algorithm is based on image subtraction, as shown in Fig. 7. Defects are segmented from the test image by subtracting the golden template image from a sub-image of the same size taken from the test image. The GIS energy is defined in Eq. (8):

\[R(x,y)=\frac{1}{m\times n}\sum _{i=1}^{m}\sum _{j=1}^{n}\left| G(i,j)-H_{xy}(i,j)\right| \qquad (8)\]

where \(x=1,...,M-m+1\) and \(y=1,...,N-n+1\) for a test image of size \(M\,\times \,N\). The golden template image G is extracted from the reference image with size \(m\,\times \,n\), which must be larger than the smallest repeating pattern in the test image. The sub-image \(H_{xy}=H_{xy}(i,j)\) extracted from the test image has the same size as G. R at each position is thus the mean absolute difference between G and H. The GIS algorithm is executed as follows:

(1) Extract the golden template image G of size \(m\,\times \,n\) from the reference image.

(2) According to Eq. (8), the GIS energy of a pixel is obtained by calculating the mean absolute difference between G (the golden template image) and H (the sub-image extracted from the test image). As shown in Fig. 8, sliding from the 1st pixel to the \((M-m+1)\)-th pixel in each row and from the 1st row to the \((N-n+1)\)-th row creates a GIS energy matrix of size \((M-m+1)\times (N-n+1)\).

(3) The GIS energy matrix R is returned as the output of GIS detection.

(4) Every element of the GIS energy matrix of the test image is compared with the threshold T. An element larger than T is set to 1; otherwise it is set to 0. This yields a binary image of size \((M-m+1)\times (N-n+1)\). Because the reference image has a repeating pattern and no defects, the values in its GIS energy matrix are uniform. Defects differ markedly from normal areas in the test image, so their energy jumps above the threshold. The threshold T is defined as the maximum value of the GIS energy matrix of the reference image.
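The four steps above can be sketched in pure Python on a toy patterned image. This is an unoptimized illustration that omits the preprocessing and post-processing stages. The function names, the checkerboard pattern and the defect value are assumptions made for the example.

```python
def gis_energy(image, golden):
    """Slide the golden template over the image and return the energy matrix R.

    R[y][x] is the mean absolute difference between the template and the
    same-size sub-image whose top-left corner is at row y, column x.
    """
    rows, cols = len(image), len(image[0])
    t_rows, t_cols = len(golden), len(golden[0])
    energy = []
    for y in range(rows - t_rows + 1):
        line = []
        for x in range(cols - t_cols + 1):
            total = 0
            for j in range(t_rows):
                for i in range(t_cols):
                    total += abs(golden[j][i] - image[y + j][x + i])
            line.append(total / (t_rows * t_cols))
        energy.append(line)
    return energy


def gis_detect(test, reference, golden):
    """Threshold the test energy with the maximum energy of the reference image."""
    threshold = max(max(row) for row in gis_energy(reference, golden))
    return [[1 if e > threshold else 0 for e in row]
            for row in gis_energy(test, golden)]


def checker(rows, cols):
    """Defect-free toy fabric: a checkerboard with a period of 2 pixels."""
    return [[10 if (r + c) % 2 == 0 else 20 for c in range(cols)] for r in range(rows)]


reference = checker(6, 6)
golden = [row[:2] for row in reference[:2]]   # 2 x 2 template, one pattern period
test = checker(6, 6)
test[3][3] = 200                              # inject a single bright defect pixel
mask = gis_detect(test, reference, golden)
for row in mask:
    print(row)
```

Every template position that covers the defect pixel exceeds the reference maximum, so the defect appears in the binary image as a block the size of the template.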

In post-processing, the result of GIS thresholding is contaminated by white noise and random noise and cannot be used directly as the final detection result. It is filtered to reduce the effect of noise and improve detection accuracy. In validation, median filtering gave the best noise smoothing; the final detection results are given in Sect. 4.
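The effect of median filtering on a thresholded result can be sketched as follows. The 3 \(\times \) 3 window and the clipping of windows at the image border are assumptions, since the text does not specify the filter size or the border handling.

```python
def median_filter(mask, radius=1):
    """Median filter with a (2*radius+1) square window, clipped at the borders."""
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                mask[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
            ]
            window.sort()
            # Lower median, so an even-sized border window does not favour 1.
            out[r][c] = window[(len(window) - 1) // 2]
    return out


noisy = [
    [0, 0, 0, 0, 0, 1],   # isolated noise pixel in the corner
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],   # 3 x 3 defect region
    [0, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
clean = median_filter(noisy)
for row in clean:
    print(row)
```

The isolated pixel disappears while the core of the defect region survives. The corners of the region are also rounded off, which is the usual trade-off of a median filter on binary images.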

3.3 Graphical User Interface

To validate the proposed algorithms and put the detection system into practical use, a complete software system with a well-designed graphical user interface was developed. The software of the proposed machine vision detection system is written in C++ on the 32-bit Windows 7 operating system, and the detection algorithms are developed with the C++ library, OpenCV and the software development kit of the video capture card. The graphical user interface is built in the Visual C++ MFC development environment. Figure 9 gives the framework of the machine vision software system.

As shown in Fig. 9, the software of the machine vision detection system mainly consists of three modules.

(1) The user management module provides functions such as adding users, deleting users, system login and authority management.

(2) As shown in Fig. 10, the system control module implements real-time online detection. Users can operate the whole system, including transmission, image acquisition and image processing. Furthermore, the operation status, captured images and detection results are shown in the graphical user interface during detection to support human-machine interaction.

The menu and toolbar at the top offer control functions including direction control, speed control and printer control. The captured image and the detection result are shown in real time in the middle area. The operation status of the system is shown at the bottom, where the user can see how fast the system is running and how many defects have been detected.

After automatic detection, all defect information collected during detection, such as positions, types and saving paths, is written to the database, and the saved data is then available for printing, analysis and further work.

(3) The data analysis and statistics module provides offline analysis and statistics of the defect information gathered during production.
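The defect records written by the control module and queried by the statistics module can be sketched with an in-memory SQLite database. The text does not name the database engine or its schema, so SQLite, the table layout, the column names and the sample records are all assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")   # a real system would use a file on disk
conn.execute(
    """
    CREATE TABLE defects (
        id          INTEGER PRIMARY KEY,
        x_mm        REAL NOT NULL,   -- position along the running direction
        y_mm        REAL NOT NULL,   -- position across the fabric width
        defect_type TEXT NOT NULL,
        image_path  TEXT NOT NULL    -- where the defect image was saved
    )
    """
)

# Hypothetical records standing in for the output of an inspection run.
records = [
    (1250.0, 310.5, "hole", "defects/0001.png"),
    (4820.4, 87.2, "oil stain", "defects/0002.png"),
    (9033.1, 644.0, "hole", "defects/0003.png"),
]
conn.executemany(
    "INSERT INTO defects (x_mm, y_mm, defect_type, image_path) VALUES (?, ?, ?, ?)",
    records,
)
conn.commit()

# Offline statistics: number of defects per type.
summary = conn.execute(
    "SELECT defect_type, COUNT(*) FROM defects GROUP BY defect_type ORDER BY defect_type"
).fetchall()
print(summary)
```

Storing positions in millimetres rather than pixels keeps the records meaningful if the camera configuration changes between runs.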

4 Results and Discussion

This section tests the hit rate, fault rate [1], detection precision, real-time performance and other properties of the machine vision detection system. The hit rate and fault rate are validated through offline and online experiments. In the tests of real-time performance and running speed, the time consumption of a single detection period is taken as the benchmark. All tests are run indoors at ambient temperature under natural light.

4.1 Algorithm Effectiveness

First, offline tests of the detection algorithms are executed. All defect sample images are taken from the textile surface defect reference benchmark database of Technische Universität Hamburg-Harburg in Germany. About fifty defect test images of size 256 \(\times \) 256 and 52 defect-free reference images are selected. The samples cover 13 kinds of fabric texture and 9 kinds of common defect. The detection results for some of the selected sample images are shown in Fig. 11.

In the online test, a batch of grey cloth 500 m long and 1 m wide is inspected at a speed of 30 m/min; some of the detection results are given in Fig. 12.

The offline-online experiment results are given in Table 1. The hit rate (HR), missing rate (MR) and fault rate (FR) are given in Eqs. (9), (10) and (11), respectively.

It can be seen that many different kinds of fabric defects are detected well.

4.2 Performance Verification

The computer is in charge of control and processing, so system performance depends closely on the computer hardware. The hardware configuration is: CPU i7-4790 at 3.6 GHz, 8 GB of DDR3 RAM and an Nvidia GTX745 GPU. After installation and debugging of the image acquisition subsystem, the resolution of the captured image reaches 0.264 mm per pixel. Every 1 mm \(\times \) 1 mm area on the fabric surface is therefore covered by about 16 pixels, so the system can readily detect defects at the 1 mm level. The line scan CCD cameras selected for the proposed system have a resolution of 2048 pixels, and each output frame has a height of 500 rows. That is to say, an output image of size 2048 \(\times \) 500 from each camera needs to be processed. Each camera has a scan width of about 540 mm (0.264 mm/pixel \(\times \) 2048 pixels). Thanks to the scalability of the system, hardware can be added to achieve a full-width scan as the project requires.
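The scan-width arithmetic can be checked with a few lines of Python, which also estimate how many cameras a given fabric width requires. The sketch assumes abutting cameras with no overlap, and the helper `cameras_needed` is a hypothetical name.

```python
import math

MM_PER_PIXEL = 0.264      # resolution quoted in the paper
PIXELS_PER_ROW = 2048     # pixels per camera row

scan_width_mm = MM_PER_PIXEL * PIXELS_PER_ROW     # width covered by one camera
pixels_per_mm2 = (1.0 / MM_PER_PIXEL) ** 2        # pixels covering a 1 mm x 1 mm area


def cameras_needed(fabric_width_mm: float) -> int:
    """Smallest number of abutting cameras whose scan widths cover the fabric."""
    return math.ceil(fabric_width_mm / scan_width_mm)


print(round(scan_width_mm, 3))
print(round(pixels_per_mm2, 1))
print(cameras_needed(1000), cameras_needed(1500))
```

Under these assumptions one camera covers about 540.7 mm, so two cameras suffice for 1 m wide cloth and three for 1500 mm wide cloth, which matches the configurations described in the text. The exact pixel count per square millimetre comes out slightly above 14; the figure of 16 presumably reflects rounding 1/0.264 up to 4 pixels per side.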

Two cameras are selected to inspect a batch of cloth with a width of 1 m. The time consumption of a single calculation period is taken as the benchmark for verifying the real-time performance of the system. Table 2 lists the processing times recorded for each kind of defect image in the online test; every five experimental results are grouped to obtain an average.

The experimental results show that the running time of the proposed detection method is shorter than the period needed to capture a single frame, so the real-time requirement is fulfilled and the maximum running speed of the system can exceed 80 m/min.
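The time budget implied by a running speed of 80 m/min can be worked out directly from the figures in the paper. The sketch assumes square pixels, so that one scanned row corresponds to 0.264 mm of fabric travel, and it assumes that the images from three cameras are processed one after another within a frame period.

```python
MM_PER_PIXEL = 0.264        # resolution along the running direction (assumed square pixels)
ROWS_PER_FRAME = 500        # rows per frame
MAX_LINE_RATE_HZ = 28_000   # camera line scan frequency quoted in the paper


def frame_period_s(speed_m_per_min: float) -> float:
    """Time needed to acquire one frame at the given fabric speed."""
    speed_mm_per_s = speed_m_per_min * 1000.0 / 60.0
    frame_length_mm = ROWS_PER_FRAME * MM_PER_PIXEL
    return frame_length_mm / speed_mm_per_s


def line_rate_hz(speed_m_per_min: float) -> float:
    """Trigger rate needed to scan one row per MM_PER_PIXEL of fabric travel."""
    return speed_m_per_min * 1000.0 / 60.0 / MM_PER_PIXEL


period = frame_period_s(80)
print(round(period * 1000, 1), "ms per frame")
print(round(period * 1000 / 3, 1), "ms per image with three cameras")
print(round(line_rate_hz(80)), "lines per second")
```

Under these assumptions a frame spans 132 mm of fabric and takes about 99 ms to capture, leaving roughly 33 ms per image when three cameras are used. The required trigger rate of about 5 kHz is well below the 28 kHz line scan frequency of the camera.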

5 Conclusion

A machine vision detection system for fabric defects is presented on a personal computer platform. Compared with embedded platforms, the system has the advantages of lower cost and stronger scalability. Furthermore, it can cooperate with other textile equipment such as looms or inspection machines, which enables automatic production. The scalability of the hardware makes the system suitable for a variety of target objects. A traditional single detection algorithm finds it increasingly difficult to handle a variety of fabrics and defects. In the software system, each algorithm is therefore treated as a detection unit for a certain kind of defect, so the more schemes are integrated, the more kinds of defects can be detected. The proposed method, which integrates Otsu and GIS, differs from purely theoretical research in its real-time performance, high detection rate and practical applicability. Combined with the double-buffer alternating acquisition technique, the detection scheme becomes more efficient. The graphical user interface makes the system easy to operate and puts it into practical use. With the image acquisition subsystem, the system resolution reaches 0.264 mm per pixel, which is precise enough to distinguish defects at the 1 mm level. In the online and offline experiments, the maximum detection speed is 80 m/min with a detection rate above 90 %.

References

1. Schneider, D., Holtermann, T., Merhof, D.: A traverse inspection system for high precision visual on-loom fabric defect detection. Mach. Vis. Appl. 25(6), 1585–1599 (2014)

2. Stojanovic, R.: Real-time vision-based system for textile fabric inspection. Real-Time Imaging 7(6), 507–518 (2001)

3. Cho, C.-S., Chung, B.-M.: Development of real-time vision-based fabric inspection system. IEEE Trans. Ind. Electron. 52(4), 1073–1079 (2005)

4. Sari-Sarraf, H.: Vision system for on-loom fabric inspection. IEEE Trans. Ind. Appl. 35(6), 1252–1259 (1999)

5. Ngan, H.Y.T., et al.: Wavelet based methods on patterned fabric defect detection. Pattern Recogn. 38(4), 559–576 (2005)

6. Jing, J., Hang, L.: Combined fabric defects detection approach and quadtree decomposition. J. Ind. Text. 41(4), 331–344 (2012)

7. Wang, J.-W.: Singular value decomposition combined with wavelet transform for LCD defect detection. Electron. Lett. 48(5), 266–267 (2012)

8. Chandra, J.K., Datta, A.K.: Detection of defects in fabrics using subimage-based singular value decomposition. J. Text. Inst. 104(3), 295–304 (2013)

9. Mahajan, P.M., Kolhe, S.R., Patil, P.M.: A review of automatic fabric defect detection techniques. Adv. Comput. Res. 1(2), 18–29 (2009)

10. Kumar, A., Pang, G.: Defect detection in textured materials using Gabor filters. IEEE Trans. Ind. Appl. 38(2), 425–440 (2002)

11. Xue, J.-H., Titterington, D.M.: t-Tests, F-tests and Otsu’s methods for image thresholding. IEEE Trans. Image Process. 20(8), 2392–2396 (2011)

Acknowledgments

The authors gratefully acknowledge the Scientific Research Program funded by the Natural Science Foundation of China (61301276), the Xi’an Polytechnic University Young Scholar Backbone Supporting Plan and the Discipline Construction Funds of Xi’an Polytechnic University (107090811). This project was also supported by the Xi’an Polytechnic University Scientific Research Foundation for Doctors (BS1416).

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Li, P., Zhao, Z., Zhang, L., Zhang, H., Jing, J. (2015). The Real-Time Vision System for Fabric Defect Detection with Combined Approach. In: Zhang, YJ. (eds) Image and Graphics. Lecture Notes in Computer Science(), vol 9219. Springer, Cham. https://doi.org/10.1007/978-3-319-21969-1_41

DOI: https://doi.org/10.1007/978-3-319-21969-1_41

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21968-4

Online ISBN: 978-3-319-21969-1

eBook Packages: Computer Science, Computer Science (R0)