Abstract

The ability to use digital technologies to live, work and learn in today’s knowledge-based society is considered to be an essential competence. In schools, digital technologies such as smart devices offer new possibilities to improve student learning, but research is still needed to explain how to effectively apply them. In this paper we developed an instrument to investigate the digital competences of students based on constructs from the DIGCOMP framework and in the contexts of learning science and mathematics in school and outside of school. Pilot testing results of 173 students from the 6th and 9th grades (M = 12.7 and 15.7 years of age, respectively) were analyzed to remove unnecessary items from the instrument. The pilot study also showed preliminary smart device usage patterns that require confirmation by a large-scale study. Digitally competent use of smart devices may help facilitate widespread use of computer-based resources in science education.

1 Introduction

The ability to use digital technologies to live, work and learn in today’s knowledge-based society is considered to be so essential that in 2006 the European Parliament and Council specifically acknowledged digital competence as one of eight key competences necessary for personal fulfilment, active citizenship, social cohesion and employability [1]. More recently, a report commissioned by the European Commission entitled DIGCOMP: A Framework for Developing and Understanding Digital Competence in Europe identified 21 digital competences and grouped them into 5 areas: Information, Communication, Content Creation, Safety and Problem Solving [2]. The DIGCOMP framework was developed based on a review of the literature on the concept of digital competence [3], an analysis of 15 different case-studies [4] and consultations with 95 experts [5]. In the context of the DIGCOMP framework the term ‘digital competence’ is defined to be a set of knowledge, skills and attitudes needed by citizens to use ICT to achieve goals related to work, employability, learning, leisure, inclusion and/or participation in society [2].

In Estonia the DIGCOMP framework was used as a basis for introducing a new general competence (digital competence) into the national curriculum in August 2014. This general competency requirement defines digital competence as:

the ability to use new digital technology to cope in a rapidly changing society when studying, acting and communicating as a citizen; to find and store information through digital tools and judge its relevance and credibility; to participate in digital content creation, including making and using text, pictures and multimedia; to use digital tools and strategies to solve problems; to interact and collaborate in digital environments; to be aware of risks and protect one’s privacy, personal information and digital identity in online environments; to abide by the same morals and values in online environments as in everyday life [6].

Now that education policy in Estonia explicitly expects schools to play a major role in developing students’ digital competence [7], there is a need to understand digital competence as it is defined in the national curriculum and study how it informs teaching and learning. Since the DIGCOMP framework served as the basis for defining digital competence in the Estonian national curriculum it is important to investigate how that framework can be practically applied in schools to develop the various digital competences listed in the curriculum requirements. At a practical level the suitability of the DIGCOMP framework for school-age students has not been established. The framework describes individual competences using analogous terms (e.g., I can browse for, search for and filter information online) that may be perceived by some to actually address separate competences. Unlike survey items about digital skills found in the Eurostat Community Survey on ICT Usage in Households and by Individuals [8], which asks respondents whether they perform specific activities (e.g., Do you use the Internet to read online news sites?), the DIGCOMP self-assessment items tend to be more abstract (e.g., I can browse the Internet for information; I can search for information online). Therefore, there is a need to study whether the relatively abstract terms used to describe digital competences in the DIGCOMP framework are understood in a consistent manner by school-age students.

Engaging young people in science and mathematics is especially important today. These subjects are fundamental for building the next generation of innovators in today’s technology-driven economy. However, studies show that students in Europe [9], including Estonia [10], have low motivation towards learning science and mathematics. A potential solution is to build on the interest young people show in smart devices (smartphones, tablet computers) and to integrate new pedagogies such as BYOD (Bring Your Own Device) into the science and mathematics classroom [11]. Computer-based science resources, such as those found in the online learning environment Go-Lab [12], offer opportunities for students to learn science through personalized experiments and apply a scientific inquiry methodology using their smartphone or tablet. Initial results of implementing Go-Lab science scenarios on Wi-Fi enabled tablets have been positive with 15-year-old students [13]. Further uptake of Go-Lab resources for large-scale use in education can benefit from a better understanding of the digital competence of today’s students. There is still a lack of research about the use of smart devices by students to learn science and mathematics [14]. The instrument developed in this study is a first step towards collecting accurate data about the use of smart devices by students and provides data to make preliminary inferences about the digitally competent use of smart devices by students to learn science and mathematics.

2 Method

The instrument in this study aimed to collect data from school-age students about their use of smart devices and their digitally competent use of smart devices to learn science and mathematics. It adopts some useful layout formats and scales from the survey instrument used by PISA to investigate use of ICT by students [15], and similarly uses a self-report questionnaire to collect data about how often students perform certain activities in specific contexts. The activities in this instrument are related directly to the digital competences described in the DIGCOMP framework. The contexts include in school and outside of school learning, as well as a context to measure use of smart devices for activities not related to school learning.

2.1 Designing and Developing the Instrument

The DIGCOMP framework [2] was examined closely to determine which constructs related to digital competence to study. The framework identifies five main areas of digital competence (Information, Communication, Content Creation, Safety and Problem Solving), which are interpreted in this study as our main constructs. The 21 individual digital competences in the DIGCOMP framework are then taken to be subscales of these main constructs. Selection of which digital competences to include in the instrument was influenced by our choice to employ a self-report questionnaire. Maderick et al. [16] concluded that self-assessment of digital competences that can otherwise be assessed through criterion-referenced tests is not accurate or valid. They reported that participants in their study (preservice teachers) overestimated their digital competence through self-assessment [16]. We decided that the constructs Safety and Problem Solving can be better assessed by tests and therefore excluded these two main constructs from this instrument. Likewise, 6 of the 13 subscales in the remaining three main constructs were judged to be more appropriately measured by assessing actual performance on a task than by a self-report usage survey, and these subscales were also excluded. The remaining 7 digital competences included in this instrument were: browsing, searching and filtering information; storing and retrieving information; interacting through technologies; sharing information and content; collaborating through digital channels; developing content; and integrating and re-elaborating.

Definitions given in the DIGCOMP framework for each of the 7 digital competences under consideration appear to include similar and perhaps redundant terminology. For example, the digital competence Browsing, searching and filtering information is described to be “To access and search for online information, to articulate information needs, to find relevant information, to select resources effectively, to navigate between online sources, to create personal information strategies.” [2, p. 17]. Within that definition there are 6 separate clauses and even the title of the digital competence includes three similar terms (browsing, searching and filtering). Hence, to create items for our instrument based on the 7 selected digital competences we decided to write three versions of each digital competence and test them in a pilot study. The three variations were generated by looking at the terms used in the DIGCOMP definition and choosing those believed to be most readily understood by our target audience (school-age students). Table 1 gives an overview of the instrument items that were developed. The item order was chosen such that each version of a digital competence is asked once before asking alternative versions of the same digital competence.
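The ordering rule described above can be illustrated with a short sketch. It assumes that the seven competences are cycled in the order in which they are listed earlier in this section and that the three wordings are simply labelled versions 1 to 3; the function name `item_order` is hypothetical, and the actual item texts are those of Table 1.

```python
# Hypothetical sketch: round-robin ordering of 7 competences x 3 wordings.
# The cycling order of the competences is an assumption for illustration.
COMPETENCES = [
    "browsing, searching and filtering information",
    "storing and retrieving information",
    "interacting through technologies",
    "sharing information and content",
    "collaborating through digital channels",
    "developing content",
    "integrating and re-elaborating",
]
VERSIONS = 3  # three alternative wordings per competence


def item_order(competences, versions):
    """Return (item_number, competence, version) tuples in round-robin order.

    Every competence is asked once (version 1) before any competence is
    asked again with an alternative wording (version 2, then version 3).
    """
    order = []
    number = 1
    for version in range(1, versions + 1):
        for competence in competences:
            order.append((number, competence, version))
            number += 1
    return order


ITEMS = item_order(COMPETENCES, VERSIONS)
for number, competence, version in ITEMS:
    print(f"item {number:2d}: {competence} (version {version})")
```

Under this assumed ordering, the three wordings of any one competence are always seven positions apart, so a respondent answers six other items before meeting an alternative wording of the same competence.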

The question format and frequency scale for presenting the items in our instrument are similar to the survey instrument used by PISA to investigate use of ICT by students [15]. However, an important difference is that we were interested in studying how often a student performs a certain activity in four differing contexts. These contexts not only give information about the use of smart devices in school, where current teaching practice may not yet apply such digital technology to a wide extent, but also about the use of smart devices outside of school, where usage may be more prevalent. The four differing contexts are: (1) at school for tasks assigned by a science or mathematics teacher, (2) outside of school for tasks assigned by a science or mathematics teacher (e.g., homework), (3) outside of school for learning additional science or mathematics information not required by a teacher, and (4) outside of school for activities not related to school learning. These four contexts describe possible ways students are currently using smart devices. Table 2 summarizes the structure of the instrument and shows how a question about a single digital competence item is presented in a matrix layout for the four differing contexts.

2.2 Implementing the Instrument

We decided to administer the instrument electronically on tablet computers for several reasons: the age of our target audience, the need to maintain their interest in completing the questionnaire, our desire to record analytics data such as time spent per question, our need for flexible styling and formatting of content, and the need for a solution that scales to large numbers of respondents. In addition, electronic administration of the instrument needed to work offline to ensure that locations with poor or no Wi-Fi coverage would still permit data collection. Given all these requirements, we proceeded to create the technical solution ourselves.

The technical solution for administering the instrument on tablet computers is based on saving an HTML file to a tablet computer’s internal memory and running the file locally in the tablet’s web browser. The HTML file was created using the hypertext markup language to define the layout and style of the instrument and the JavaScript programming language to add interactivity to the instrument.

Figure 1 shows how the instrument appears on both iPad and Android tablets. The interactive functionality in the HTML file included forward and backward buttons to navigate between different items, checks to ensure only one response to single-choice questions, checks for nonresponse and an alert message to a respondent that they cannot continue until all questions are answered, calculation of a unique timestamp when a respondent selects their first response, and calculation of the time spent per question. A submit button at the end of the instrument was also created and when pressed saved all the data into a format that could be easily read by a spreadsheet application for data analysis. Another HTML file was used to upload (when it was possible to establish a Wi-Fi Internet connection with the tablet) all the data (multiple respondent data may have been collected on a single tablet) to an online spreadsheet file.
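The interactive behaviour described above (navigation, nonresponse checks, a first-response timestamp, time spent per item and a spreadsheet-readable export) can be sketched as follows. This is not the authors' code: the instrument itself was written in HTML and JavaScript, whereas the sketch below uses Python purely to illustrate the logic. The class name `QuestionnaireSession`, its methods, the short context labels and the CSV column names are all hypothetical.

```python
import csv
import io
import time

# The four contexts are those described for the instrument; the short
# labels and every class/method name here are hypothetical.
CONTEXTS = ("school_assigned", "outside_assigned", "outside_extra", "non_school")


class QuestionnaireSession:
    """Holds one respondent's answers and the time spent on each item."""

    def __init__(self, n_items, clock=time.time):
        self.n_items = n_items
        self.clock = clock
        self.current = 0  # index of the item currently on screen
        self.answers = [{} for _ in range(n_items)]
        self.seconds = [0.0] * n_items
        self.first_response_at = None
        self._shown_at = clock()

    def answer(self, context, value):
        """Record a single-choice response for one context of the current item."""
        if context not in CONTEXTS:
            raise ValueError(f"unknown context: {context}")
        if self.first_response_at is None:
            self.first_response_at = self.clock()  # timestamp of first response
        # Overwriting by key guarantees at most one response per context.
        self.answers[self.current][context] = value

    def is_complete(self):
        """True when every context of the current item has a response."""
        return all(c in self.answers[self.current] for c in CONTEXTS)

    def _leave_item(self):
        """Add the time since the item was shown to its running total."""
        now = self.clock()
        self.seconds[self.current] += now - self._shown_at
        self._shown_at = now

    def forward(self):
        """Go to the next item; refuse (return False) on nonresponse."""
        if not self.is_complete():
            return False  # the caller would show the alert message here
        self._leave_item()
        self.current = min(self.current + 1, self.n_items - 1)
        return True

    def backward(self):
        """Go back one item; earlier answers stay editable."""
        self._leave_item()
        self.current = max(self.current - 1, 0)

    def to_csv(self):
        """Serialize the session as a header row plus one data row."""
        header = ["first_response_at"]
        row = [self.first_response_at]
        for i in range(self.n_items):
            for context in CONTEXTS:
                header.append(f"item{i + 1}_{context}")
                row.append(self.answers[i].get(context, ""))
            header.append(f"item{i + 1}_seconds")
            row.append(round(self.seconds[i], 1))
        buffer = io.StringIO()
        writer = csv.writer(buffer)
        writer.writerow(header)
        writer.writerow(row)
        return buffer.getvalue()
```

Passing the clock in as a parameter is a design choice of this sketch: it lets the timing logic be checked with a fake clock, independently of how the real instrument obtains the time in the browser.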

2.3 Pilot Study

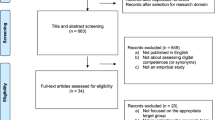

A pilot study was organized to test the instrument with the target audience and gather data to improve the reliability, practicality and conciseness of the instrument. Recall that three potentially redundant items were created for each digital competence construct (see Table 1). Statistical analysis and feedback from the pilot study suggested shortening the instrument via removal of unnecessary items.

A total of 173 students from the 6th and 9th grades (mean ages of 12.7 and 15.7 years, respectively) from 2 urban and 2 rural schools participated in the pilot study.

The instrument was completed voluntarily by students who had returned an informed consent form (the form required parental approval for participation and was distributed at least one week before administration of the instrument). Two trained university students familiar with the questionnaire traveled to schools on separate occasions to administer the instrument. They were available to answer questions about the instrument from students.

3 Results

The results of data collection from the pilot study were analyzed and used to remove ineffective items from the instrument. Analysis of the pilot study data also revealed some interesting preliminary usage patterns of smart devices by students.

3.1 Time Spent Per Item

The data collected included analytics on the time spent per item. Since each item appeared separately on the tablet screen, it was possible to record the time spent completing that particular item. Figure 2 shows the average time spent per item (in seconds) for each of the 21 items, separately for 6th and 9th grade students. At the beginning there is a learning curve as students become familiar with the questionnaire structure and format. After the first few questions, students in general answer subsequent items more quickly. One concern with the instrument was response burden. However, even at the end of the questionnaire the average time spent per item remains above 10 s. Thus, it does not appear that students tire or begin responding hastily on the final items.
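The per-grade averages and the 10 s check described above could be computed from logged times roughly as follows. This is a minimal sketch, not the analysis actually used: the function names are hypothetical, and the records are made-up numbers chosen only to exercise the code; they are not pilot data.

```python
from collections import defaultdict
from statistics import mean


def mean_seconds_per_item(records):
    """Average time per item within each grade.

    records: iterable of (grade, item_number, seconds) tuples.
    Returns {grade: {item_number: mean_seconds}}.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for grade, item, seconds in records:
        grouped[grade][item].append(seconds)
    return {
        grade: {item: mean(times) for item, times in sorted(items.items())}
        for grade, items in grouped.items()
    }


def rushed_items(means, threshold=10.0):
    """List (grade, item) pairs whose mean time falls below the threshold."""
    return sorted(
        (grade, item)
        for grade, items in means.items()
        for item, seconds in items.items()
        if seconds < threshold
    )


# Made-up records for illustration only; NOT data from the pilot study.
RECORDS = [
    (6, 1, 40.0), (6, 1, 50.0), (6, 21, 14.0), (6, 21, 12.0),
    (9, 1, 30.0), (9, 1, 34.0), (9, 21, 8.0), (9, 21, 9.0),
]
MEANS = mean_seconds_per_item(RECORDS)
print(MEANS)
print(rushed_items(MEANS))
```

In the pilot data reported here, such a check would flag no items, since the average time per item stayed above 10 s throughout.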

It should be noted that before students began answering these items related to digital competences there was a section asking them to enter data about their background (name, age, gender, grades, etc.) and a section about availability of smart devices. These sections together took 6th graders an average time of 3.34 min to complete and 9th graders an average time of 2.95 min to complete.

3.2 Final Version of the Instrument

Two stages of analysis were used to remove ineffective items from the instrument. The first stage relied on calculating internal consistency using Cronbach’s alpha coefficient. The items were grouped under the three main constructs (see Table 1) and analyzed to see whether their removal would improve the internal consistency (reliability) of the instrument. For items 1, 2, 3, 6, 12, 13, 16, and 18, removal increased Cronbach’s alpha in at least one of the four contexts studied. All of these items were removed except for item 6. Unlike the other items, the frequency distribution for item 6 showed a greater number of ‘every day’ responses than its two alternative versions. It also showed the fewest ‘never or hardly ever’ responses. This suggested that students understood item 6 better and were therefore able to report performing the activity more often, whereas confusion about the alternative wordings may have led students to report lower frequencies for the same activity.
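The first-stage criterion can be sketched as an "alpha if item deleted" computation. The code below is a minimal illustration using the standard formula for Cronbach's alpha, alpha = k/(k-1) * (1 - sum of item variances / variance of total scores); it is not the software the analysis was run with, the function names are hypothetical, and the score matrix is a toy example rather than pilot data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondent rows of numeric item scores."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) and of the per-respondent totals.
    item_variances = [variance([row[j] for row in scores]) for j in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def alpha_if_item_deleted(scores):
    """Map each item index to the alpha of the scale without that item."""
    k = len(scores[0])
    return {
        j: cronbach_alpha([[v for i, v in enumerate(row) if i != j] for row in scores])
        for j in range(k)
    }


# Toy data (5 respondents x 3 items), NOT pilot data. The third item is
# deliberately inconsistent with the first two.
TOY_SCORES = [
    [1, 1, 3],
    [2, 2, 1],
    [3, 3, 4],
    [4, 4, 1],
    [5, 5, 5],
]
FULL_ALPHA = cronbach_alpha(TOY_SCORES)
DELETED = alpha_if_item_deleted(TOY_SCORES)

# An item is a removal candidate when the scale is more consistent without it.
CANDIDATES = [j for j, alpha in DELETED.items() if alpha > FULL_ALPHA]
print(round(FULL_ALPHA, 3), CANDIDATES)
```

In the study this comparison was made within each main construct and separately for each of the four contexts, so an item was flagged if its removal raised alpha in at least one context.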

After the first stage of item removal there remained 3 items in the Information construct, 6 items in the Communication construct, and 5 items in the Content Creation construct. It was decided to perform another stage of item removal with the aim of obtaining 3 to 4 items in each construct. For the second stage of item removal it was also decided that there must be at least one item representing each of the 7 digital competences in the instrument. The second stage of item removal relied in part on examining the skewness of the response distributions and in part on consideration of item wording. Two researchers compared the remaining items using these criteria and concluded that items 11, 14 and 19 should be removed. Thus, the final instrument was reduced from 21 items to 11 items. Each of the 7 digital competences measured by our final instrument is represented by at least one item: browsing, searching and filtering information (items 8 and 15); storing and retrieving information (item 9); interacting through technologies (items 10 and 17); sharing information and content (item 4); collaborating through digital channels (item 5); developing content (items 6 and 20); and integrating and re-elaborating (items 7 and 21).
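The item bookkeeping across the two stages can be checked mechanically. The sketch below uses only the item numbers reported in this section; the variable names are hypothetical.

```python
# All numbers below are taken from the text of Section 3.2.
ALL_ITEMS = set(range(1, 22))  # 21 pilot items

FLAGGED_STAGE_1 = {1, 2, 3, 6, 12, 13, 16, 18}  # alpha rose when removed
KEPT_DESPITE_FLAG = {6}  # retained because of its response distribution
REMOVED_STAGE_1 = FLAGGED_STAGE_1 - KEPT_DESPITE_FLAG
REMOVED_STAGE_2 = {11, 14, 19}  # skewness and item wording

FINAL_BY_COMPETENCE = {
    "browsing, searching and filtering information": {8, 15},
    "storing and retrieving information": {9},
    "interacting through technologies": {10, 17},
    "sharing information and content": {4},
    "collaborating through digital channels": {5},
    "developing content": {6, 20},
    "integrating and re-elaborating": {7, 21},
}

after_stage_1 = ALL_ITEMS - REMOVED_STAGE_1
final_items = after_stage_1 - REMOVED_STAGE_2
listed_items = set().union(*FINAL_BY_COMPETENCE.values())

print(len(after_stage_1))           # items left after stage 1
print(sorted(final_items))          # items in the final instrument
print(final_items == listed_items)  # removals agree with the final listing
```

The counts agree with the text: 14 items remain after the first stage (3 + 6 + 5), 11 remain after the second, and every one of the 7 competences keeps at least one item.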

3.3 Smart Device Usage Patterns of 6th and 9th Grade Students

Although space limitations prevent a detailed presentation and discussion of the pilot study results, some preliminary patterns are apparent. Table 3 presents the pilot study results categorized by main construct, context and student grade level. It can be seen that use of smart devices outside of school for purposes other than school learning results in the highest frequency responses. It is also evident that smart devices are used considerably less for Content Creation than for Information or Communication. These results begin to show that the developed instrument is useful for extracting patterns in students’ smart device use. More detailed analysis with a larger, representative sample is needed to draw generalizable conclusions and to indicate which background characteristics of students can explain the smart device usage patterns.

4 Discussion

Applying this instrument on a large scale in schools is expected to give us an overview of the current situation of how students use smart devices and provide data to assess the digital competence of primary and secondary school students. It is important to keep in mind that this initial instrument is based on a self-report survey. Research shows that the accuracy of self-evaluation instruments cannot always be assumed because students tend to be overconfident in rating their skills [17]. It is also important to note that some of the digital competences might not be assessed with high validity in self-report tests [16]. For this reason we did not focus in our study on assessing two competence areas of the DIGCOMP framework, namely the areas of Safety and Problem Solving. International assessments of computer and information literacy, such as ICILS [18], use computer-based interactive tasks to assess how well students can solve problems. In order to ensure that students can solve problems with ICT or problems in using ICT they actually need to face these problems and come up with solutions. The solutions they present should then be evaluated (how appropriate the solution is and how fast it was obtained). For the same reason, it is not reasonable to ask if a student is able to use his or her smart device safely or can avoid safety issues like viruses. It is something that needs actual testing or observation of students’ behavior in action. Tests and/or observations are also needed to increase the validity of self-report surveys in the competence areas of Information, Communication and Content Creation. Therefore, we see a need for future studies to evaluate the validity of the results of the current study by triangulation, using other methods to characterize students’ digital competence.

It is also noted that assessment of digital competences appears to have logical links to computer-based assessment, since demonstration of task-based performance of digital competence is most reasonably done in digital environments. Computer-based assessment of problem solving skills has been used by the PISA test since 2006, and more recently in 2015 PISA began assessing collaborative problem solving skills using virtual computer agents [19]. Thus, the assessment of digital competences in a computer-based environment seems to be feasible and warrants further study.

Nevertheless, the instrument developed in the current study is an important first step towards understanding the learning opportunities offered by smart technologies in science and mathematics classrooms. It provides an essential overview of how students are currently using smart devices. The next step is to integrate data collection tools in smart devices so that automatic data collection can serve approaches for learning analytics and support students in improving their digital competence. A better understanding of students’ digital competence will help facilitate quicker uptake of computer-based science resources such as those provided by the Go-Lab project [12]. Initial results of implementing Go-Lab science scenarios on Wi-Fi enabled tablets have shown positive results [13].

5 Conclusion

In conclusion, a self-report questionnaire was developed, pilot tested and refined, and is now ready for large-scale implementation. The instrument surveys how often students use a smart device to perform a digitally competent activity in four differing contexts. The contexts relate to in-school and out-of-school learning of science and mathematics, as well as a context about using smart devices outside of school for activities not related to school learning. The results of a pilot study with 173 students from the 6th and 9th grades were analyzed to select which items from the DIGCOMP framework are best understood by school-age children. Implementation of this instrument on a large scale will allow us to accurately determine how often, in which ways and for which digitally competent activities students use smart devices. Moreover, it is expected that analysis of large-scale data will reveal which background characteristics of students best explain their smart device use.

References

1. European Parliament and the Council: Recommendation of the European parliament and of the council of 18 December 2006 on key competences for lifelong learning. Official Journal of the European Union, L394/310 (2006)

2. Ferrari, A.: DIGCOMP: A Framework for Developing and Understanding Digital Competence in Europe. Publications Office of the European Union, Luxembourg (2013)

3. Ala-Mutka, K.: Mapping Digital Competence: Towards a Conceptual Understanding. Publications Office of the European Union, Luxembourg (2011)

4. Ferrari, A.: Digital Competence in Practice: Analysis of Frameworks. Publications Office of the European Union, Luxembourg (2012)

5. Janssen, J., Stoyanov, S.: Online Consultation on Experts’ Views on Digital Competence. Publications Office of the European Union, Luxembourg (2012)

6. Põhikooli riiklik õppekava: [National curriculum for basic schools]. Riigi Teataja I, 29.08.2014, 20 (2014). https://www.riigiteataja.ee/akt/129082014020. Accessed 26 Feb 2016

7. Republic of Estonia Ministry of Education and Research: The Estonian Lifelong Learning Strategy 2020. Tallinn (2014). https://hm.ee/en/activities/digital-focus

8. European Schoolnet and University of Liege: Survey of schools: ICT in education. Benchmarking access, use and attitudes to technology in Europe’s schools. Final Report (ESSIE). European Union, Brussels (2013). http://ec.europa.eu/digital-agenda/sites/digital-agenda/files/KK-31-13-401-EN-N.pdf

9. Sjøberg, S., Schreiner, C.: The ROSE project. An overview and key findings (2010). http://roseproject.no/network/countries/norway/eng/nor-Sjoberg-Schreiner-overview-2010.pdf

10. Teppo, M., Rannikmäe, M.: Paradigm shift for teachers: more relevant science teaching. In: Holbrook, J., Rannikmäe, M., Reiska, P., Ilsley, P. (eds.) The Need for a Paradigm Shift in Science Education for Post-Soviet Societies: Research and Practice (Estonian Example), pp. 25–46. Peter Lang GmbH, Frankfurt (2008)

11. Song, Y.: Bring your own device (BYOD) for seamless science inquiry in a primary school. Comput. Educ. 74, 50–60 (2014)

12. de Jong, T., Sotiriou, S., Gillet, D.: Innovations in STEM education: the Go-Lab federation of online labs. Smart Learn. Environ. 1, 1–16 (2014)

13. Mäeots, M., Siiman, L., Kori, K., Eelmets, M., Pedaste, M., Anjewierden, A.: The role of a reflection tool in enhancing students’ reflection. In: Proceedings of the 10th International Technology, Education and Development Conference (INTED), 7th–9th March 2016, Valencia, Spain (2016, in press)

14. Crompton, H., Burke, D., Gregory, K.H., Gräbe, C.: The use of mobile learning in science: a systematic review. J. Sci. Educ. Technol. (2016). doi:10.1007/s10956-015-9597-x, (Published online before print 14 Jan 2015)

15. OECD: PISA 2012 ICT Familiarity Questionnaire. OECD, Paris (2011)

16. Maderick, J.A., Zhang, S., Hartley, K., Marchand, G.: Preservice teachers and self-assessing digital competence. J. Educ. Comput. Res. (2015). doi:10.1177/0735633115620432, (Published online before print 24 Dec 2015)

17. Dunning, D., Heath, C., Suls, J.: Flawed self-assessment: implications for health, education, and the workplace. Psychol. Sci. Publ. Interest 5, 69–106 (2004)

18. Fraillon, J., Schulz, W., Ainley, J.: International Computer and Information Literacy Study: Assessment Framework. IEA, Amsterdam (2013)

19. OECD: PISA 2015: Draft Collaborative Problem Solving Framework (Web-Based Material) (2013). http://www.oecd.org/pisa/pisaproducts/Draft%20PISA%202015%20Collaborative%20Problem%20Solving%20Framework%20.pdf. Accessed 29 Sept 2015

Acknowledgments

This study was partially funded by the Estonian Research Council through the institutional research funding project “Smart technologies and digital literacy in promoting a change of learning” (Grant Agreement No. IUT34-6). This study was also partially funded by the European Union in the context of the Go-Lab project (Grant Agreement No. 317601) under the Information and Communication Technologies (ICT) theme of the 7th Framework Programme for R&D (FP7). This document does not represent the opinion of the European Union, and the European Union is not responsible for any use that might be made of its content.

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Siiman, L.A. et al. (2016). An Instrument for Measuring Students’ Perceived Digital Competence According to the DIGCOMP Framework. In: Zaphiris, P., Ioannou, A. (eds) Learning and Collaboration Technologies. LCT 2016. Lecture Notes in Computer Science(), vol 9753. Springer, Cham. https://doi.org/10.1007/978-3-319-39483-1_22

DOI: https://doi.org/10.1007/978-3-319-39483-1_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39482-4

Online ISBN: 978-3-319-39483-1

eBook Packages: Computer Science, Computer Science (R0)