Abstract

Quantifying \(T_2\) and \(T_2^*\) relaxation times from MRI becomes a standard tool to assess modifications of biological tissues over time or differences between populations. However, due to the relationship between the relaxation time and the associated MR signals such an analysis is subject to error. In this work, we provide a Bayesian analysis of this relationship. More specifically, we build posterior distributions relating the raw (spin or gradient echo) acquisitions and the relaxation time and its modifications over acquisitions. Such an analysis has three main merits. First, it allows to build hierarchical models including prior information and regularisations over voxels. Second, it provides many estimators of the parameters distribution including the mean and the \(\alpha \)-credible intervals. Finally, as credible intervals are available, testing properly whether the relaxation time (or its modification) lies within a certain range with a given credible level is simple. We show the interest of this approach on synthetic datasets and on two real applications in multiple sclerosis.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

Relaxometry imaging provides a way to quantify modifications of biological tissues over time or differences between different populations. In this context, the problem of estimating \(T_2\) values from echo train acquisitions is discussed in many works [10–12]. Since we deal with quantitative values, being able to then detect and assess significant differences and changes seems an important goal to achieve. However, to our knowledge, there is still a lack of statistical method to analyse such data. In this work, we focus on the analysis of the \(T_2\) or \(T_2^*\) modification between two time-points (e.g. baseline versus 3 months later or pre versus post contrast agent injection) for a given subject. A naive approach to perform such a task consists in first computing the \(T_2\) maps for the pre and post acquisitions using an optimisation algorithm and then in comparing the variation level inside a region of interest -typically multiple sclerosis lesions- to the variation inside the normal appearing white matter (NAWM). However, this solution may drive to important issues. The reproducibility error of \({T_{2}}\) and \({T_{2}^{*}}\) maps is indeed significantly smaller in the NAWM than in regions with higher intensities. This makes the task, in the best case, complex and, in the worst, error prone with many false positive detections in the higher intensities regions.

In fact, due to the form of the relationship relating the MR signal and the relaxation time, the uncertainty of estimation increases with the relaxation time (see [7] for illustrating experiments on phantoms). In this work, we provide a Bayesian analysis of this relationship. More specifically, we build posterior distributions relating the raw (spin or gradient echo) acquisitions and the relaxation time and its modification over time. These posterior distributions extract the relevant information from the data and provide complete and coherent characterisations of the parameters distribution. Our approach has three main advantages over the existing \({T_{2}}\) and \({T_{2}^{*}}\) estimation methods. First, it allows to build complex models including prior belief on parameters or regularisations over voxels. Second, it provides many estimators of the parameters distribution including the mean and \(\alpha \)-credible highest posterior density (HPD) intervals. Finally, once the credible intervals estimated, testing properly whether the relaxation time (or its modification) lies to a certain range given a credible level becomes simple.

The article is organized as follows. In Sect. 2, we describe a set of models to analyse the \({T_{2}}\) and \({T_{2}^{*}}\) relaxation times. More specifically, in Sect. 2.1, we give a posterior for the \({T_{2}}\) (or \({T_{2}^{*}}\)) estimation. In Sect. 2.2, we give a procedure to assess differences of \({T_{2}}\) in a voxel between two time points at a given credible level. Then, in Sect. 2.3, we slightly modify the posterior so that the estimation is not anymore performed voxel-wise but region-wise leading to non-independent multivariate estimations and testings. In Sect. 2.4, we propose a prior to use the extended phase graph function instead of the exponential decay function used in the previous models. Then, in Sect. 3, we assess our method on synthetic data. In Sect. 4, we provide two examples of applications on Multiple Sclerosis data. Finally, in Sect. 5, we discuss this work and give perspectives.

2 Models

2.1 Bayesian Analysis of \(T_2\) Relaxometry

For a given voxel in a volume, the MR signal \(S_i\) for a given echo time \(\tau _i\) can be related to the two (unknown) characteristics of the observed tissue \({T_{2}}\) and \(M\) (where \(M\) accounts for a combination of several physical components) through:

where \(f_{{t_{2}},m}(\tau _i)=m\cdot \exp \left( -\frac{\tau _i}{{t_{2}}} \right) \) and \(N(\mu ,\sigma ^2)\) is the normal distribution with mean \(\mu \) and variance \(\sigma ^2\). The Gaussian error term allows to account for measurement noise as well as for model inadequacy (due to e.g. multi exponential decay of the true signal, partial volumes or misalignment). Then we consider that for all \(i \ne j\) \((S_i | {t_{2}},m,\sigma ) \perp (S_j | {t_{2}},m,\sigma )\) (\(\perp \) standing for independence).

The associated reference prior [1] for \(\sigma \) and \((M,{T_{2}})\) in different groups writes:

where \(l_k({t_{2}})=\sum _i \tau _i^k \exp (-2\frac{\tau _i}{{t_{2}}})\) for \(k=0,1,2\) and where \(1_{A}(x)=1\) if \(x \in A\) and 0 elsewhere. Notice that the upper limit for \(M\) and positive lower limit for \({T_{2}}\) in the prior support are needed to ensure posterior properness. This prior leads to invariance of the inference under reparametrisation of the exponential decay function. Moreover, as it will be shown in Sect. 2.1, it provides satisfying performances whatever the actual values of \({T_{2}}\) (so, under normal and pathological conditions). Estimators for the resulting marginal posterior \(p({T_{2}}|(s_i))\) can then be computed using a Markov Chain Monte Carlo algorithm (details in Sect. 3).

2.2 Bayesian Analysis of \(T_2\) Modification

We are now concerned with the following question: how to assess that the \({T_{2}}\) value associated to a given voxel has changed between two acquisitions with a given minimal credible level. Let call \(X_{a}\) the random variable associated to a quantity for the pre acquisitions and \(X_{b}\) for the post acquisitions. We assume that the volumes are aligned and model for the pre acquisition:

and introduce \(C\) as the \({T_{2}}\) modification between the two acquisitions through:

where (additionally to above independences) for all i, j \((S_{b,i} | {t_{2}},c,m_{b},\sigma _{b}) \perp (S_{a,j} | {t_{2}},m_{a},\sigma _{a})\). From Eq. 2 we can define the prior:

that defines, with Eqs. 3 and 4, the marginal posterior for (among others) the \({T_{2}}\) modification \(p(C|(s_{a,i}),(s_{b,i}) )\). Then a voxel can be defined as negatively (resp. positively) altered at \(\alpha \) level, if the \(\alpha \)-credible HPD interval for \(C\) does not contain any positive (resp. negative) value (see [8] for a testing perspective).

The previous model of variation \({T_{2}}_{b}={T_{2}}_{a}+C\) was dedicated to \({T_{2}}\) modification. Another important alternative model of variation states that when adding a contrast agent to a biological tissue the effect on its \({T_{2}}\) property is additive with the rate \(1/{T_{2}}\, : \, 1/{T_{2}}_{b}=1/{T_{2}}_{a}+C_R\) and that \(C_R\) (we use this notation to distinguish it from the above C) is proportional to the contrast agent concentration. From the posterior of \({T_{2}}\) and \(C\) designed above, its posterior writes: \( p(C_R=c_R|(s_{a,i}),(s_{b,i})) = p(\frac{-C}{{T_{2}}(C+{T_{2}})}=c_R|(s_{a,i}),(s_{b,i})).\)

2.3 Region-Wise Analysis

The models proposed previously allow a voxel-wise analysis where each voxel is processed independently from others. Performing a grouped inference for all the voxels of a given region (e.g. lesion) can be performed by adding a supplemental layer to the model. Let us use j to index voxels, then one can replace each prior \(\varPi _2(c^j)\) by for example: \(\varPi _2(c^j|\mu _C,\sigma _C) =\psi _{N}(\mu _C-c^j,\sigma ^2_C) (\psi _{N}\) being the Normal kernel). We then assume that \(\forall i_1,i_2\) and \(j_1 \ne j_2, (S_{i_1}^{j_1} | {t_{2}}^{j_1},m^{j_1},\sigma ^{j_1}) \perp (S_{i_2}^{j_2} | {t_{2}}^{j_2},m^{j_2},\sigma ^{j_2})\) (in particular, we consider the errors as independent between voxels). For the two hyperparameters \(\mu _C\) and \(\sigma _C\), we use the weakly informative priors (see [5] for details) \(\mu _C \sim N(0,10^{6})\) (approximating the uniform density over \(\mathrm{{I}}\!\mathrm{R}\)) and \(\sigma _C \sim \mathrm {Cauchy}(x_0=0,\gamma =100) I_{\mathrm{{I}}\!\mathrm{R}^{+}}\) (allowing \(\sigma _C\) to go well below 400ms), where \(I_{\mathrm{{I}}\!\mathrm{R}^+}\) denotes a left-truncation. Such a model allows the set of inferences over the \((C^j)\) to be performed not independently thus dealing in a natural way with multiple comparisons by shrinking exceptional \(C^j\) toward its estimated region-wise distribution [6] and improving the overall inference. Depending on the expected regularity of the \(C^j\) within the regions, we can alternatively opt for a Cauchy density and/or add an informative prior for the error variances \(\sigma ^2_{a,i}\) and \(\sigma ^2_{b,i}\) to favour goodness of the fit. Similarly, a spatial Markovian regularisation can also be considered.

2.4 Using the Extended Phase Graph

When the signals \((s_i)_{i=1:N}\) are obtained using sequences of multiple echoes spin echoes (e.g. CMPG sequence), the exponential decay function is only a rough approximation of the relation between \({T_{2}}\) and the MR signals. Some solutions to adapt it to this situation exist [10] and could be easily added to our model. In the following, we propose a broader solution that consists in replacing the exponential decay by the Extended Phase Graph function (EPG) [9] that relates the signal \(S_i\) to two other quantities (additionally to \({T_{2}}\) and \(M\)) so that \((S_i)=EPG({T_{2}},M,T_1,B_1,(\tau _i)) + {\epsilon }\) (\(T_1\) being the spin-lattice relaxation time, \(B_1\) the field inhomogeneity i.e. multiplicative departure from the nominal flip angle and \({\epsilon }\) representing the noise term of Eq. 1). This function is complicated (product of \(N\, 3\, {\times }\,3\) matrices involving non-linearly the different parameters) and derivating a dedicated reference prior would be cumbersome. Nevertheless, it consists of small departures from the exponential function and mainly depends on \(M\) and \({T_{2}}\). Thus a reasonable choice consists in using the same priors as those derived for the exponential function. Then, an informative prior is designed for \(B_1\). In practice, \(B_1\) typically takes 95 % of its values in [0.4, 1.6] (we deliberatively use this symmetric form to avoid \(B_1=1\) to be a boundary of the prior support) so we set \(B_1 \sim \mathop {\mathrm {Gamma}}(k=8.5,\theta =0.1)\). We did the same for \(T_1\) by choosing a gamma distribution with 95 % of its density in \([20,2000] (T_1 \sim \mathop {\mathrm {Gamma}}(2.3,120)\)). In practice, \(T_1\) has a very small impact on the EPG and on the inference. Then the EPG model can be used by simply replacing \(f_{{t_{2}},m}(\tau _i)\) by \(EPG({t_{2}},m,t_1,b_1,(\tau _i))\) (prior sensitivity analyses for \(T_1\) and \(B_1\) give satisfying results).

3 Results on Synthetic Data

3.1 Implementation, Datasets and Convergence Diagnosis

For the sake of concision, for the previous models, we only exhibited the likelihoods, the priors and the independence assumptions. The resulting posteriors can be obtained using the Bayes rule. Then for each marginal posterior \(p({T_{2}}|(s_{i}))\) (Sect. 2.1), \(p(C|(s_{a,i}),(s_{b,i}))\) (Sect. 2.2) and \(p((C^j)|(s^j_{a,i}),(s^j_{b,i}))\) (Sect. 2.3), we get its statistics using the “logarithm scaling” adaptive one variable at a time Metropolis-Hastings algorithm [13]. We used 10k samples (40k for the region-wise model) after discarding the first 5k (20k for the region-wise model). Convergence has been assessed using the Geweke score [2].

The data we use in this section are numerically generated \(T_2\) spin echo acquisitions with 7 echo times \(\tau \) equally spaced by 13.8 ms and a repetition time of 1 s. Since the \(EPG({t_{2}},t_1,b_1,(\tau _i))\) function is time consuming and many function calls are needed, it is tabulated on a fine grid for different values of \({t_{2}}, t_1\) and \(b_1\). All the results given below are those using the EPG (for both generating data and inference), those obtained using the exponential decay are slightly better and lead to the same conclusion.

3.2 Results

\({T_{2}}\) estimation: We run 400 estimations for different configurations of \({T_{2}}\) and \(\sigma \) (realistic values are randomly given to \(T_1, B_1\) and \(M\)). Results are summarized in Table 1 and illustrate how the length of the credible intervals increases as expected with \(T_2\) and \(\sigma \). Moreover, these intervals exhibit excellent coverage properties, illustrating the interest of the derived priors in the absence of prior information for \(T_2\). Notice that other choices of prior such as the reference prior with \(\sigma \) and \(M\) as nuisance parameters [1] do not lead us to analytical solutions.

\(C\) estimation: For different configurations of \({T_{2}}, \sigma \) and \(C\), we analyse the specificity (denoted \(p^-\)) and sensitivity (denoted \(p^{+}\)) of the estimator for C modification (Sect. 2.2) for \(\alpha \)=0.05. Results are summarized in Table 2 and illustrate that for the used acquisition protocol, detecting low \({T_{2}}\) modifications in high values is limited with a 0.95 specificity level. Such simulations can be used to design decision rules providing an optimized specificity/sensitivity trade-off for given \({T_{2}}/C\) values by adjusting \(\alpha \). We also observe that the region-wise model can lead to strong improvements of the performances.

4 Two Applications on Real Data

The present method has been developed in a clinical context including a dataset of about 50 pre/post acquisitions from relapsing-remitting multiple sclerosis patients. In this section, we exemplified applications of our method on a few data from this study. Data are acquired on a 3T Verio MRI scanner (Siemens Healthcare, 32-channel head coil) and consists of volumes of \(192\, {\times }\,192\, {\times }\,44\) voxels of size \(1.3\,{\times }\,1.3\, {\times }\,3\) \(\mathrm{mm}^3\). The sequence parameters are the same as those used in Sect. 3. For each patient, all volumes are rigidly co-registered using a robust block-matching method [3]. No other preprocessing is applied.

4.1 Assessing USPIO Enhancement in MS Lesion

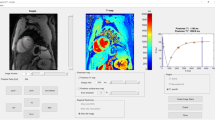

Ultra small superparamagnetic iron oxide (USPIO) is a contrast agent used to assess macrophagic activity that reduces the \({T_{2}}\) relaxation of the surrounding tissues [4]. We use, in this section, sets of pre-post injection acquisitions to detect the presence of USPIO in MS lesions using our model. Figure 1 displays detection with a \(5\,\%\) level for a patient acquired twice without USPIO injection and for a patient with potentially active lesions. The pre-post \(T_2\) relaxation maps illustrate the difficulty to label the lesions as enhanced or not by simply using the point estimates. The map for the patient without USPIO injection, for which our method does not detect any voxels, highlights this point. More generally, on this data we never detected more than 5 % of the voxels. For the active patient with USPIO injection, the method detects lesion 1 -for which we have independent clues that it is effectively active (i.e. high variation of MTR, FA and ADC values between baseline and acquisitions 3 months later)- as enhanced. By contrast, lesion 2 -for which we had no initial clue that it was actually active- is not detected as enhanced, while it shows a substantially positive pre-post signal.

Pictures (a) and (b): acquisitions performed without USPIO injection (so all lesions should be detected as negative). (a) superposition of a \(T_2\) weighted image, the lesion segmentation mask (white patches) and the enhanced voxels (red) for the 5 % level and (b) the pre-post \(T_2\) relaxation map (using ML estimates). Pictures (c) and (d): an active patient. Same displays than for (a) and (b) (Color figure online)

4.2 Assessing \(T_2\) Recovery in an Active MS Lesion

In this section, we use our model to assess the evolution of the \(T_2\) associated to a MS lesion just after its emergence \((m_0)\) and 3 (\(m_3\)) and 6 (\(m_6\)) months after. To illustrate the interest of the credible intervals, we display the minimal plausible C values for the 0.05 level i.e. the lower bound of the 0.05-credible interval for \([m_3,m_0]\) (Fig. 2.a) and the upper one for \([m_6,m_3]\) (Fig. 2.b). Notice that, for a sake of clarity, we quantify here differences from \(m_3\) to \(m_0\) so that expected difference \(C\) (a decrease of the \(T_2\) signals over time) is positive (and similarly for \(m_3\) and \(m_6\)). These maps offer a quantitative scale to compare lesions recovery among and within patient. More precisely, for lesion 3, the minimal admissible \(C\) is positive for \([m_3, m_0]\) (thus the interval does not contain 0) demonstrating that the lesion is recovering during this period. By contrast, this is not the case for the other lesions of the slice and for lesion 3 at \([m_6,m_3]\) (not displayed): it cannot be excluded with a 5 % level that the recovering process is finished. Moreover, the maximal admissible value for \([m_6,m_3]\) (Fig. 2.b) is far lower than the minimal one for \([m_3,m_0]\). We also give the minimal USPIO concentration i.e. \(C_R\) changes for the 5 % level (Fig. 2.c): we observe a good adequacy between USPIO concentration and \(T_2\) change for \([m_0,m_3]\).

5 Discussion and Conclusion

In this paper, we proposed a Bayesian analysis of \(T_2/T_2^*\) relaxation time assessment and modification. Then, we showed the interesting properties of our models on synthetic datasets. Finally, we exemplified the interest of the obtained credible intervals with two applications. As illustrated, when available, interval estimates can be more powerful than point ones to address naturally complex issues in medical imaging. Moreover, this work can be extended in several ways:

-

It can be used as a basis for the development of more informative hierarchical models (this is a motivation for considering a Bayesian setting). For example, the \(B_1^j\)s are spatially correlated. This could be accounted for adding a supplemental layer to the model (similarly to what proposed in Sect. 2.3). An informative prior for \(\sigma ^j\) could also be of interest to get less conservative intervals.

-

In this work, we considered the errors (modelled by the error term of Eq. 1 or Eqs. 3 and 4) as not correlated between voxels and modelling correlated errors (with unknown structure) would be of interest and is a challenge to meet.

-

The method is computationally intensive (about 2 s per voxel for the EPG region-wise approach) and for more demanding applications, other strategies e.g. maximisation over nuisance parameters could be investigated.

More generally, we think there is room for potential applications of interval estimation in e.g. \(T_1\) or DTI and hope this work will encourage such development.

References

Berger, J.O., et al.: The formal definition of reference priors. Ann. Stat. 37(2), 905–938 (2009)

Brooks, S., et al.: Handbook of Markov Chain Monte Carlo. Chapman & Hall/CRC Handbooks of Modern Statistical Methods. CRC Press, Boca Raton (2011)

Commowick, O., et al.: Block-matching strategies for rigid registration of multimodal medical images. In: ISBI, pp. 700–703, May 2012

Corot, C., et al.: Recent advances in iron oxide nanocrystal technology for medical imaging. Adv. Drug Deliv. Rev. 58(14), 1471–1504 (2006)

Gelman, A.: Prior distributions for variance parameters in hierarchical models. Bayesian Anal. 1(3), 515–534 (2006)

Gelman, A., et al.: Why we (usually) don’t have to worry about multiple comparisons. J. Res. Educ. Effectiveness 5(2), 189–211 (2012)

Kjos, B.O., et al.: Reproducibility of T1 and T2 relaxation times calculated from routine mr imaging sequences: phantom study. Am. J. Neuroradiol. 6(2), 277–283 (1985)

Kruschke, J.K.: Bayesian assessment of null values via parameter estimation and model comparison. Perspect. Psychol. Sci. 6(3), 299–312 (2011)

Matthias, W.: Extended phase graphs: dephasing, RF pulses, and echoes - pure and simple. J. Magn. Reson. Imaging 41(2), 266–295 (2015)

Milford, D., et al.: Mono-exponential fitting in T2-relaxometry: relevance of offset and first echo. PLoS ONE 10(12), e0145255 (2015). Fan X Editor

Neumann, D., et al.: Simple recipe for accurate T2 quantification with multi spin-echo acquisitions. Magn. Reson. Mater. Phys. Biol. Med. 27(6), 567–577 (2014)

Petrovic, A., et al.: Closed-form solution for T2 mapping with nonideal refocusing of slice selective CPMG sequences. Magn. Reson. Med. 73, 818–827 (2015)

Roberts, G.O., Rosenthal, J.S.: Examples of adaptive MCMC. J. Comput. Graph. Stat. 18(2), 349–367 (2009)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Combès, B., Kerbrat, A., Commowick, O., Barillot, C. (2016). A Bayesian Model to Assess \(T_2\) Values and Their Changes Over Time in Quantitative MRI. In: Ourselin, S., Joskowicz, L., Sabuncu, M.R., Unal, G., Wells, W. (eds) Medical Image Computing and Computer-Assisted Intervention - MICCAI 2016. MICCAI 2016. Lecture Notes in Computer Science(), vol 9902. Springer, Cham. https://doi.org/10.1007/978-3-319-46726-9_66

Download citation

DOI: https://doi.org/10.1007/978-3-319-46726-9_66

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46725-2

Online ISBN: 978-3-319-46726-9

eBook Packages: Computer ScienceComputer Science (R0)