Abstract

In this paper we present an approach for the georeferencing of historical World War II images by registering the images to present-day satellite imagery, with the aim of supporting the risk assessment of unexploded ordnances. We propose to exploit the local geometry of corresponding interest points in a Hough voting scheme to identify the most likely transformation parameters between the images. Our method combines the evidences from local as well as global correspondences and uses a spatial zoning rule to establish solutions with preferably uniformly distributed correspondences. An experimental evaluation is conducted on a set of 42 pairs of historical and present-day images and reveals the outstanding performance of our method compared to state-of-the-art image matching and registration algorithms, including commonly used hypothesize-and-verify and graph matching methods.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Assessing the risk of UneXploded Ordnances (UXOs) is an important concern for public safety [20]. Nowadays, a particular risk still comes from World War II bombing, as it is assumed that 10–\(30\%\) of bombs remained unexploded [4]. UXO risk assessment involves the analysis of aerial photographs taken after bombing [17]. Indications of past bombardment such as craters can be used by analysts to derive such risk maps, but demands for a tedious prior georeferencing process by manually registering the images with modern satellite imagery.

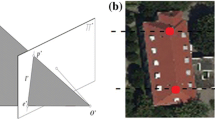

In this paper we present an approach to register old World War II images with modern satellite images for automatic georeferencing. While being a typical image registration problem [25], this task poses specific challenges that demand for a well-adapted solution. First and foremost, the time spans of over 70 years lead to changes in image content of varying degrees, as shown in Fig. 1. Furthermore, the historical images are grayscale only and are affected by over- or underexposure, uneven illumination, low spatial resolution, blurring, sensor noise or cloud coverage. Due to this challenges, existing solutions for registering historical aerial photographs rely on manual interaction steps like line and point feature detection [18], prior rough alignment [7] or registration of a reference image [1, 10].

In image registration, commonly feature-based registration techniques are exploited due to their ability to ground the registration only on a few salient image parts, which makes them robust against occlusions and other image deterioration effects and allows to handle complex spatial transformation between the images [25]. These techniques consist of a feature matching step followed by a spatial verification step, where outlier correspondences are filtered out and the transformation with highest support from the set of putative matches is identified. A dominant example for spatial verification are hypothesize-and-verify methods such as RANSAC [8] and similar techniques [21], where transformation model hypotheses are created from randomly chosen correspondence samples and evaluated by means of congruence with the remaining correspondences. However, these estimators are only effective if the extracted local features are discriminative enough to deliver a certain inlier ratio in the set of putative matches. For weaker local features the feature matching step can be coupled with the spatial verification step to increase the inlier ratio, as done by graph matching methods [6, 22]. Here feature matching is cast as an optimization problem involving both the similarities of the local feature vectors as well as pairwise or higher-order geometric consistencies of matches. However, graph matching is only computationally tractable for extracted feature sets in the order of \(10^1\)–\(10^3\) [6], thus allowing only a small set of initial candidate matches resulting in low recall.

The method presented in this paper builds upon the idea of using Hough voting in the transformation space, as used for object recognition [14], image retrieval [11] or image co-segmentation [5]. Due to the available local geometry of interest points, each single correspondence is able to cast a weighted vote based on feature similarity. Although a single correspondence gives only a weak evidence about the image transformation, stronger evidences are produced the higher the number of correspondences, and false votes can be effectively ruled out. As adding new correspondences has only linear costs, we can rely the transformation estimation on much more correspondences (in our case in the order of \(10^5\)). This is especially effective for high-resolution remote sensing image data, as all small local structures in the comparatively large earth surface areas covered are possibly useful for image registration. Hence, we transfer the idea of Hough voting from single correspondences to our problem of old-to-new image aerial registration and propose two reasonable extensions to improve the performance: the combination of local and global image similarities and the use of a correspondence zoning scheme to favor solutions with spatially evenly distributed correspondences.

The remainder of this paper is organized as follows. Section 2 reviews related work in the registration of remote sensing images in general and aerial WWII images in particular. Our methodology is described in Sect. 3. In Sect. 4, quantitative results are reported and discussed. Concluding remarks are given in Sect. 5.

2 Related Work

Registration of Remote Sensing Images: Image registration plays a major role in various remote sensing applications, such as image fusion, change detection and georeferencing [12]. Depending on the specific scenario, the effectiveness of an algorithm is mainly determined by weather it uses the global image information or rather focuses on local parts of the image [24]. Global techniques aim at optimizing the transformation parameters based on a global similarity metric of pixel intensities, e.g. mutual information [13]. They are usually favored when the detection of salient structures in the image is not possible and accurate sub-pixel registration is privileged, but suffer from high computational load and local minima trapping and are thus limited to registration problems with a bounded search space of transformation parameters, e.g. the fine registration of roughly aligned image pairs [13]. Therefore, local techniques are more prominently used in general scenarios [15, 16], as here the registration is based on a few salient features, which makes them also more robust against dissimilar, non-matchable image parts and other types of appearance changes. Typical appearance changes that are considered between remote sensing images are different modalities [16], illumination effects or disaster damages [3]. Automatic multitemporal image registration is also followed [9, 19, 23], but commonly not for such long time-spans as in our case of historical-to-modern image registration.

Registration of WWII Aerial Images to Present-Day Satellite Imagery: only a few works consider the problem addressed in our paper, with Murino et al. [18] being the first to provide a semi-automatic solution. In their approach, all possible matches between interactively selected line and point features are included for a RANSAC homography estimation. Automatic alignment of aerial images from WWII was also addressed in the GeoMemories project [1], but only between the historical images, whereas the actual georeferencing is achieved by selecting a reference historical image with known coordinates. The same principle is followed by Jao et al. [10], as historical-to-historical image registration has to deal with much less image variations and thus spatially verified SIFT feature matching is a suitable choice. Another solution to simplify the problem is presented by Cléry et al. [7]. Here a coarse initial registration is assumed, which can then be automatically refined by matching line features to a topographic map of the area. In contrast, our method does not make such assumption and represents, to the best of our knowledge, the first fully automatic approach to register aerial WWII images to present-day satellite imagery with a-priori unknown orientation and translation relation.

3 Methodology

In our application scenario, historical aerial images cover an area of 2.5–16 km\(^2\) and are registered to regions-of-interest in modern satellite imagery with a size of 1.5–10 times the size of the aerial image. We exploit the already known approximate image scale of the historical images derived from the recorded aircraft altitude and camera focal length to scale-normalize both images and limit the Hough parameter space to translation and rotation only. A preliminary inspection of our test data revealed an error of the estimated image scales of only 4.7%, with the maximum being at 30%. Hence, neglecting scale differences in local feature extraction and the Hough transformation space is a justified choice, whereas the small scale differences are respected in the final estimation of the image transformation from the correspondences responsible for the global peak in Hough space.

3.1 Hough Voting from Corresponding Local Interest Point Geometry

Extraction of local image features, e.g. SIFT features [14], from an image delivers descriptor vectors \(\mathbf {d}_i\) as well as local feature frames \(\mathbf {f}_i = (x_i,y_i,\sigma _i,\theta _i)\). Here, \((x_i,y_i)\) is the feature location relative to the image center, \(\sigma _i\) is its scale and \(\theta _i\) is the orientation. When registering image \(I^\prime \) to image \(I^{\prime \prime }\), we first compute similarities between all features \(\mathbf {d}^\prime _i\) and \(\mathbf {d}^{\prime \prime }_j\) as \(s_{i,j} = (||\mathbf {d}^\prime _i - \mathbf {d}^{\prime \prime }_j ||_2)^{-1}\). For computation of the Hough space, we take the subset \(\mathcal {M}\) containing the N matches with highest similarity, \(\mathbf {m}_{i,j} = (\mathbf {f}^\prime _i,\mathbf {f}^{\prime \prime }_j) \in \mathcal {M}\). Each \(\mathbf {m}_{i,j} \in \mathcal {M}\) votes for a rigid transformation in the 3D Hough Space \(H(x_{\mathbf {m}_{i,j}}, y_{\mathbf {m}_{i,j}}, \theta _{\mathbf {m}_{i,j}})\), with

H is initialized with zeros and updated as

for each \(\mathbf {m}_{i,j} = (\mathbf {f}^\prime _i,\mathbf {f}^{\prime \prime }_j) \in \mathcal {M}\).

After the N votes are cast, the transformation parameters \(x^{*}\), \(y^{*}\) and \(\theta ^{*}\) are identified at the maximum value in H. We then select the matches \(\mathcal {M}^{*}\) that voted for a similar transformation by thresholding the translation and rotation differences, i.e.

with \(T_t\) and \(T_\theta \) being the thresholding values for translation and orientation, respectively. From all correspondences in \(\mathcal {M}^*\), we estimate the final similarity transformation to account also for small scale differences between the images.

3.2 Combination of Votes from Local and Global Features

The strong appearance changes between old and new images not only decreases the discriminative power of the extracted features, but also affects the repeatability of interest point detection. Therefore, we rather apply a dense feature extraction scheme where local features are sampled on a regular grid with a fixed feature scale.

In this case, the selection of feature scale is influential to the performance of feature matching as it decides the level of image details to be compared. For multitemporal remote sensing data, both smaller and larger structures are potentially helpful: while smaller scales capture finer details like buildings, larger scales capture rougher structures like the courses of rivers and streets, which are likely less affected by changes over time. Therefore, we combine evidences from correspondences of both local and global image features by constructing two Hough spaces \(H_l\) and \(H_g\) and join them to the combined Hough space \(H_c\) as

where \(\lambda \) serves as weighting parameter.

3.3 Correspondence Zoning

Dense feature extraction ensures that features are uniformly distributed in both images, but the feature points belonging to correspondences responsible for a certain peak in Hough space might be concentrated on very few image parts with high appearance similarity. However, spatially uniform distributed correspondences should be preferred over clustered ones, since the estimation of the global image transformation is more robust and the final result is more trustable as it is grounded on evidences from different image parts.

Therefore, we utilize a zoning procedure that ensures that only one vote is allowed between two image regions, and consequently gives preference to solutions with spatially uniformly distributed correspondences. For this purpose, we set up an indicator function \(Z(x^{\prime }_i, y^{\prime }_i, x^{\prime \prime }_j,y^{\prime \prime }_j) \in \{0,1\}\) that defines if a correspondence between the points \((x^{\prime }_i, y^{\prime }_i)\) and \((x^{\prime \prime }_j,y^{\prime \prime }_j)\) has already been used. The Hough space update of Eq. 3 for \(H_l\) is applied only if \(Z(x^{\prime }_i, y^{\prime }_i, x^{\prime \prime }_j,y^{\prime \prime }_j) = 0\) and after an update with the match \(\mathbf {m}_{i,j}\), \(Z(x^\prime ,y^\prime ,x^{\prime \prime },y^{\prime \prime })\) is set to 1 for all \((x^\prime ,y^\prime ) \in \mathcal {N}_{x^{\prime }_i, y^{\prime }_i}\) and all \((x^{\prime \prime },y^{\prime \prime }) \in \mathcal {N}_{x^{\prime \prime }_j,y^{\prime \prime }_j}\), with \(\mathcal {N}_{x,y}\) specifying a neighborhood system of the point (x, y).

The effect of correspondence zoning is exemplarily shown in Fig. 2. When no correspondence zoning is applied, nearby erroneous matches produce a global peak in Hough space, resulting in a wrong registration result (Fig. 2a). However, with correspondence zoning the influence of nearby matches is reduced and the final matches of the global Hough peak are correct and more uniformly distributed over the image (Fig. 2b).

3.4 Implementation Details

In our implementation, we use SIFT features [14] to describe both the local and global image patches. For the local Hough space \(H_l\), features are extracted on a regular grid with an interval of 40 m and a patch size of 120 m, based on empirical tests. For each feature the orientation \(\theta _i\) is determined from the dominant gradient direction within the patch [14]. For the global Hough space \(H_g\), we extract a SIFT feature over the whole historical image area for 18 regularly spaced orientations. Features of the same size are extracted from the present-day satellite image with a fixed orientation and an interval of 100 m.

For the discretization of the Hough space, we use a step size of one pixel for the translation parameters and \(\frac{2\pi }{18}\) for the orientation. Due to the rougher discretization of the orientation parameter, we use bilinear interpolation to distribute the value of \(\theta _i\) to adjacent bins. For the correspondence zoning, a circular neighborhood with a radius of 80 m is used. Other parameters of our method are empirically set as follows: we equally weight the contribution of the local and global Hough space (\(\lambda \,=\,0.5\) in Eq. 5), take the \(N\,=\,10^5\) best correspondences to fill the Hough space, and set the thresholding values for the final inlier correspondences in Eq. 4 to \(T_t=100\) m and \(T_\theta \,=\,\frac{2\pi }{36}\).

4 Experiments

In this section we report quantitative results of our method on annotated test data and compare it to other image matching and registration algorithms proposed in literature.

Dataset and Evaluation Protocol: Our dataset consists of 8 reference satellite images from urban and non-urban areas in Austria. For each reference image, 3–11 historical aerial images are available, leading to a total of 42 image pairs. All images have been scale-normalized to a spatial resolution of 1 m prior to processing. Manually selected ground truth correspondences are used to measure the root mean squared error (RMSE) [2] of the image transformations determined by the different evaluated algorithms.

Algorithms: We compare our method to the following algorithms:

SIFT+RANSAC: standard SIFT feature matching [14] with RANSAC [8] for spatial verification serves as baseline performance for the comparison. For a fair comparison, we again use a dense feature extraction with fixed scale, set to 360 m in this case for best performance. Putative matches are achieved by SIFT matching with an inlier ratio threshold of 1.3 [14]. Additionally, RANSAC solutions of the similarity transform are validated only if the scale difference \(\varDelta s\) is within the bounds \( (1/T_{\varDelta s}) \le \varDelta s \le T_{\varDelta s}\), with \(T_{\varDelta s}\) set to 1.4.

Locally Linear Transforming (LLT) [15]: similar to RANSAC, LLT is a method for the simultaneous transformation estimation and outlier removal from a set of putative matches, but embedded in a maximum-likelihood framework with a locally linear constraint. The method is included in the evaluation as it showed an outstanding performance compared to other robust estimators on remote sensing data [15]. In our evaluation, we applied the rigid transformation LLT version to the same set of putative matches as for SIFT+RANSAC. We used the parameter settings reported in [15], but changed the uniform distribution parameter from 10 to 5 due to a better performance (see [15] for details).

Position-Scale-Orientation-SIFT (PSO-SIFT) [16]: PSO-SIFT is another recently proposed method for remote sensing image registration. Like our method, it is also based on statistical evidences from the local geometry of correspondences. However, transformation parameters are treated individually and their modes are only used for an enhanced distance metric of local descriptors.

Progressive Graph Matching (PGM) [6]: PGM is a general image matching algorithm based on graph matching. Starting with a set of initial matches, graph matching results are iteratively enriched with correspondences and re-matched. We use the SIFT matching results from SIFT+RANSAC to initialize PGM and apply RANSAC to its final matching results for transformation estimation.

Additionally, we report results on various versions of our Hough voting (HV) method in order to demonstrate the effects of the proposed improvements of local-global feature combination and correspondence zoning: a version with local Hough voting only (\(\text {HV}_\text {local}\)), global Hough voting only (\(\text {HV}_\text {global}\)), combined Hough voting according to Eq. 5 (\(\text {HV}_\text {local+global}\)), and the full method with correspondence zoning (\(\text {HV}_\text {local+global,CZ}\)).

Results and Discussion: In Fig. 3 the number of correct registration results achieved with the various algorithms are plotted. Correctness of a result is determined by comparing its RMSE with the threshold on the logarithmically scaled x-axis of the plot. It can be seen that the competing methods perform poorly on the test data set compared to the proposed methods. SIFT+RANSAC and LLT have a similar low correct registration rate which demonstrates the limits of outlier removal methods when weak feature descriptions produce too low inlier ratios in the set of putative matches. PGM has a slightly higher correct registration rate for higher acceptable errors due to its correspondence enrichment, but still does not reach the performance of our method.

The results shown in Fig. 3 also verify the effectiveness of combining local and global correspondences as well as zoning the local correspondences. \(\text {HV}_\text {local+global}\) shows a better performance than \(\text {HV}_\text {local}\) and \(\text {HV}_\text {global}\), and the full method \(\text {HV}_\text {local+global,CZ}\) with correspondence zoning gives another significant performance boost. Examples of correct registration results are shown in Fig. 4. For these examples, \(\text {HV}_\text {local+global,CZ}\) is the only method able to achieve a correct registration. Nevertheless, it is evident from the results that correctly registering images with such a high time distance is an enormously challenging problem, as even the best performing method \(\text {HV}_\text {local+global,CZ}\) is only able to register around 45 % of the cases with an error of less than 350 m.

The generally low precision of our Hough voting methods is primarily a result of the regular feature sampling with a step size of 40 m. This is a necessary compromise as feature detection has shown to have very unreliable repeatability, but on the other hand regular sampling prevents a precise localization of corresponding features. As part of future research, we plan to investigate fine registration as postprocessing step with a flexible transformation model, also to account for the actually non-linear spatial transformation between the images.

Runtime Analysis: In Table 1 we compare the average runtimes of our MATLAB implementation of Hough voting to the runtimes of the competing methods on the same machine. The tests for the competing methods have been performed with the original MATLAB implementations provided by the authors. Due to the high number of matches to be processed (\(N\,=\,10^5\)), the better registration performance of our method comes at the price of an considerably longer runtime than the outlier removal methods SIFT+RANSAC and LLT. It can also be seen that correspondence zoning does not only give a boost in registration performance, but also saves around 8% of computation time as not all of the N matches have to be evaluated for their local transformation and included in the Hough space.

5 Conclusions

Registration of aerial images from the times of WWII to modern satellite imagery proves to be a challenging problem due to the severe changes between images. Therefore, previously published approaches rely on strong initial assumptions about the geometric relation of images or have to make use of a manual step in the processing pipeline. In this paper, we introduced a Hough voting strategy that allows for the fully automatic historical-to-modern aerial image registration with a-priori unknown translation and orientation differences.

Although our method outperforms state-of-the-art methods for this kind of problem, it offers much potential for further improving the performance. For instance, our voting strategy can be easily extended to combined Hough spaces leveraging multiple descriptors for matching. The encoded evidences about relative geometric relations between images can also be integrated to reason about the overall geometric relations of images in a groupwise registration scenario. Additionally, a final fine registration step can be used to obtain a more precise solution. These issues, besides adapting the methodology to other domains, will be investigated for future research.

References

Abrate, M., Bacciu, C., Hast, A., Marchetti, A., Minutoli, S., Tesconi, M.: Geomemories a platform for visualizing historical, environmental and geospatial changes in the italian landscape. ISPRS Int. J. Geo-Inf. 2(2), 432–455 (2013)

Bouchiha, R., Besbes, K.: Comparison of local descriptors for automatic remote sensing image registration. Sig. Image Video Process. 9(2), 463–469 (2015)

Bowen, F., Hu, J., Du, E.Y.: A multistage approach for image registration. IEEE Trans. Cybern. 46(9), 2119–2131 (2016)

Busé, M.S.: WWII ordnance still haunts europe and the asia-pacific rim. J. Conv. Weapons Destr. 4(2), 83–87 (2016)

Chen, H.Y., Lin, Y.Y., Chen, B.Y.: Co-segmentation guided hough transform for robust feature matching. IEEE PAMI 37(12), 2388–2401 (2015)

Cho, M., Lee, K.M.: Progressive graph matching: making a move of graphs via probabilistic voting. In: CVPR, pp. 398–405 (2012)

Cléry, I., Pierrot-Deseilligny, M., Vallet, B.: Automatic georeferencing of a heritage of old analog aerial photographs. ISPRS Ann. 2(3), 33–40 (2014)

Fischler, M.A., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–395 (1981)

Gong, M., Zhao, S., Jiao, L., Tian, D., Wang, S.: A novel coarse-to-fine scheme for automatic image registration based on sift and mutual information. IEEE GRS 52(7), 4328–4338 (2014)

Jao, F.J., Chu, H.J., Tseng, Y.H.: Historical image registration and land-use land-cover change analysis. Environments 1(2), 181–189 (2014)

Jégou, H., Douze, M., Schmid, C.: Improving bag-of-features for large scale image search. IJCV 87(3), 316–336 (2010)

Le Moigne, J., Netanyahu, N.S., Eastman, R.D.: Image Registration for Remote Sensing. Cambridge University Press, Cambridge (2011)

Liang, J., Liu, X., Huang, K., Li, X., Wang, D., Wang, X.: Automatic registration of multisensor images using an integrated spatial and mutual information (SMI) metric. IEEE GRS 52(1), 603–615 (2014)

Lowe, D.: Distinctive image features from scale-invariant keypoints. IJCV 60(2), 91–110 (2004)

Ma, J., Zhou, H., Zhao, J., Gao, Y., Jiang, J., Tian, J.: Robust feature matching for remote sensing image registration via locally linear transforming. IEEE GRS 53(12), 6469–6481 (2015)

Ma, W., Wen, Z., Wu, Y., Jiao, L., Gong, M., Zheng, Y., Liu, L.: Remote sensing image registration with modified sift and enhanced feature matching. IEEE GRSL 14(1), 3–7 (2017)

Merler, S., Furlanello, C., Jurman, G.: Machine learning on historic air photographs for mapping risk of unexploded bombs. In: Roli, F., Vitulano, S. (eds.) ICIAP 2005. LNCS, vol. 3617, pp. 735–742. Springer, Heidelberg (2005). doi:10.1007/11553595_90

Murino, V., Castellani, U., Etrari, A., Fusiello, A.: Registration of very time-distant aerial images. In: ICIP, vol. 3, pp. 989–992. IEEE (2002)

Nagarajan, S., Schenk, T.: Feature-based registration of historical aerial images by area minimization. ISPRS J. Photogramm. Remote Sens. 116, 15–23 (2016)

Paunila, S.: Managing residual clearance: learning from Europe’s past. J. Conv. Weapons Destr. 18(1), 22–25 (2015)

Raguram, R., Frahm, J.M., Pollefeys, M.: A comparative analysis of RANSAC techniques leading to adaptive real-time random sample consensus. In: Forsyth, D., Torr, P., Zisserman, A. (eds.) ECCV 2008. LNCS, vol. 5303, pp. 500–513. Springer, Heidelberg (2008). doi:10.1007/978-3-540-88688-4_37

Sanromà, G., Alquézar, R., Serratosa, F.: A new graph matching method for point-set correspondence using the EM algorithm and softassign. CVIU 116(2), 292–304 (2012)

Vakalopoulou, M., Karantzalos, K., Komodakis, N., Paragios, N.: Simultaneous registration and change detection in multitemporal, very high resolution remote sensing data. In: CVPR Workshops, pp. 61–69 (2015)

Wen, G.J., Lv, J.J., Yu, W.X.: A high-performance feature-matching method for image registration by combining spatial and similarity information. IEEE GRS 46(4), 1266–1277 (2008)

Zitova, B., Flusser, J.: Image registration methods: a survey. IVC 21(11), 977–1000 (2003)

Acknowledgements

This work is supported by the Austrian Research Promotion Agency (FFG) under project grant 850695. The authors wish to thank Luftbilddatenbank Dr. Carls GmbH.

Acquisition of historical aerial imagery: Luftbilddatenbank Dr. Carls GmbH; Sources of historical aerial imagery: National Archives and Records Administration (Washington, D.C.) and Historic Environment Scotland (Edinburgh).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Zambanini, S., Sablatnig, R. (2017). A Hough Voting Strategy for Registering Historical Aerial Images to Present-Day Satellite Imagery. In: Battiato, S., Gallo, G., Schettini, R., Stanco, F. (eds) Image Analysis and Processing - ICIAP 2017 . ICIAP 2017. Lecture Notes in Computer Science(), vol 10484. Springer, Cham. https://doi.org/10.1007/978-3-319-68560-1_53

Download citation

DOI: https://doi.org/10.1007/978-3-319-68560-1_53

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68559-5

Online ISBN: 978-3-319-68560-1

eBook Packages: Computer ScienceComputer Science (R0)