Abstract

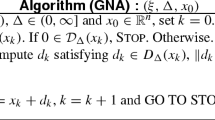

An extension of the Gauss–Newton method for nonlinear equations to convex composite optimization is described and analyzed. Local quadratic convergence is established for the minimization of $h \circ F$ under two conditions, namely, $h$ has a set of weak sharp minima, $C$, and there is a regular point of the inclusion $F(x) \in C$. This result extends a similar convergence result due to Womersley (this journal, 1985), which employs the assumption of a strongly unique solution of the composite function $h \circ F$. A backtracking line search is proposed as a globalization strategy. For this algorithm, a global convergence result is established, with a quadratic rate under the regularity assumption.
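The abstract leaves the iteration itself implicit. As a minimal sketch, assume the special case $h(y) = \|y\|_2$: then $C = \{0\}$ is a set of weak sharp minima (since $h(y) = \operatorname{dist}(y, C)$), and the inclusion $F(x) \in C$ is just $F(x) = 0$. One Gauss–Newton step takes the minimum-norm minimizer $d$ of the linearized model $h(F(x) + F'(x)d)$, then backtracks on $t$ until $h(F(x+td)) \le h(F(x)) + c\,t\,\Delta$, where $\Delta = h(F(x) + F'(x)d) - h(F(x))$. The sufficient-decrease rule, the constant $c = 0.1$, and the names `gauss_newton_composite`, `F_square`, and `F_circle` are illustrative assumptions, not the paper's exact algorithm. A general convex $h$ would need a convex subproblem solver in place of `numpy.linalg.lstsq`.

```python
import numpy as np


def gauss_newton_composite(F, J, x0, c=0.1, tol=1e-12, max_iter=50):
    """Hypothetical sketch: Gauss-Newton with backtracking for min h(F(x)).

    Assumes h(y) = ||y||_2, so C = argmin h = {0} and the linearized
    subproblem is solved exactly by a minimum-norm least-squares step.
    """
    h = np.linalg.norm  # assumed outer convex function
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        Fx, Jx = F(x), J(x)
        fx = h(Fx)
        if fx <= tol:
            break  # F(x) is (numerically) in C = {0}
        # Minimum-norm minimizer of d -> h(F(x) + J(x) d);
        # for the Euclidean norm this is the pseudoinverse step -J(x)^+ F(x).
        d, *_ = np.linalg.lstsq(Jx, -Fx, rcond=None)
        delta = h(Fx + Jx @ d) - fx  # predicted change in h(F(.)), <= 0
        if delta >= -tol:
            break  # linearization promises no decrease: stop
        # Backtracking: halve t until the sufficient-decrease test holds.
        t = 1.0
        while h(F(x + t * d)) > fx + c * t * delta and t > 1e-12:
            t *= 0.5
        x = x + t * d
    return x


# Square system: unit circle intersected with the line x0 = x1.
def F_square(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 1.0, x[0] - x[1]])


def J_square(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]])


# Underdetermined system: one equation, a whole circle of solutions.
def F_circle(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 1.0])


def J_circle(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]]])


x_square = gauss_newton_composite(F_square, J_square, [1.0, 0.5])
x_circle = gauss_newton_composite(F_circle, J_circle, [2.0, 1.0])
print(x_square, x_circle)
```

In the single-equation example, the solution set is a circle rather than an isolated point, so $h \circ F$ has no strongly unique minimizer there. The Jacobian still has full row rank on the circle, and the iterates converge, which appears to illustrate the kind of non-isolated case the regularity assumption is meant to cover.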

References

A. Ben-Israel, “A Newton–Raphson method for the solution of systems of equations,” Journal of Mathematical Analysis and Applications 15 (1966) 243–252.

S.C. Billups and M.C. Ferris, “Solutions to affine generalized equations using proximal mappings,” Mathematical Programming Technical Report 94-15 (Madison, WI, 1994).

P.T. Boggs, “The convergence of the Ben-Israel iteration for nonlinear least squares problems,” Mathematics of Computation 30 (1976) 512–522.

J.M. Borwein, “Stability and regular points of inequality systems,” Journal of Optimization Theory and Applications 48 (1986) 9–52.

J.V. Burke, “Algorithms for solving finite dimensional systems of nonlinear equations and inequalities that have both global and quadratic convergence properties,” Report ANL/MCS-TM-54, Mathematics and Computer Science Division, Argonne National Laboratory (Argonne, IL, 1985).

J.V. Burke, “Descent methods for composite nondifferentiable optimization problems,” Mathematical Programming 33 (3) (1985) 260–279.

J.V. Burke, “An exact penalization viewpoint of constrained optimization,” SIAM Journal on Control and Optimization 29 (1991) 968–998.

J.V. Burke and M.C. Ferris, “Weak sharp minima in mathematical programming,” SIAM Journal on Control and Optimization 31 (1993) 1340–1359.

J.V. Burke and R.A. Poliquin, “Optimality conditions for non-finite valued convex composite functions,” Mathematical Programming 57 (1) (1992) 103–120.

J.V. Burke and P. Tseng, “A unified analysis of Hoffman's bound via Fenchel duality,” SIAM Journal on Optimization, to appear.

L. Cromme, “Strong uniqueness. A far reaching criterion for the convergence analysis of iterative procedures,” Numerische Mathematik 29 (1978) 179–193.

R. De Leone and O.L. Mangasarian, “Serial and parallel solution of large scale linear programs by augmented Lagrangian successive overrelaxation,” in: A. Kurzhanski et al., eds., Optimization, Parallel Processing and Applications, Lecture Notes in Economics and Mathematical Systems, Vol. 304 (Springer, Berlin, 1988) pp. 103–124.

J.E. Dennis and R.B. Schnabel, Numerical Methods for Unconstrained Optimization and Nonlinear Equations (Prentice-Hall, Englewood Cliffs, NJ, 1983).

M.C. Ferris, “Weak sharp minima and penalty functions in mathematical programming,” Ph.D. Thesis, University of Cambridge (Cambridge, 1988).

R. Fletcher, “Generalized inverse methods for the best least squares solution of non-linear equations,” The Computer Journal 10 (1968) 392–399.

R. Fletcher, “Second order correction for nondifferentiable optimization,” in: G.A. Watson, ed., Numerical Analysis, Lecture Notes in Mathematics, Vol. 912 (Springer, Berlin, 1982) pp. 85–114.

R. Fletcher, Practical Methods of Optimization (Wiley, New York, 2nd ed., 1987).

U.M. Garcia-Palomares and A. Restuccia, “A global quadratic algorithm for solving a system of mixed equalities and inequalities,” Mathematical Programming 21 (3) (1981) 290–300.

K. Jittorntrum and M.R. Osborne, “Strong uniqueness and second order convergence in nonlinear discrete approximation,” Numerische Mathematik 34 (1980) 439–455.

K. Levenberg, “A method for the solution of certain nonlinear problems in least squares,” Quarterly of Applied Mathematics 2 (1944) 164–168.

K. Madsen, “Minimization of nonlinear approximation functions,” Ph.D. Thesis, Institute of Numerical Analysis, Technical University of Denmark (Lyngby, 1985).

J. Maguregui, “Regular multivalued functions and algorithmic applications,” Ph.D. Thesis, University of Wisconsin (Madison, WI, 1977).

J. Maguregui, “A modified Newton algorithm for functions over convex sets,” in: O.L. Mangasarian, R.R. Meyer and S.M. Robinson, eds., Nonlinear Programming 3 (Academic Press, New York, 1978) pp. 461–473.

O.L. Mangasarian, “Least-norm linear programming solution as an unconstrained minimization problem,” Journal of Mathematical Analysis and Applications 92 (1) (1983) 240–251.

O.L. Mangasarian, “Normal solutions of linear programs,” Mathematical Programming Study 22 (1984) 206–216.

O.L. Mangasarian and S. Fromovitz, “The Fritz John necessary optimality conditions in the presence of equality and inequality constraints,” Journal of Mathematical Analysis and Applications 17 (1967) 37–47.

D.W. Marquardt, “An algorithm for least-squares estimation of nonlinear parameters,” SIAM Journal of Applied Mathematics 11 (1963) 431–441.

J.M. Ortega and W.C. Rheinboldt, Iterative Solution of Nonlinear Equations in Several Variables (Academic Press, New York, 1970).

M.R. Osborne and R.S. Womersley, “Strong uniqueness in sequential linear programming,” Journal of the Australian Mathematical Society. Series B 31 (1990) 379–384.

B.T. Polyak, Introduction to Optimization (Optimization Software, New York, 1987).

M.J.D. Powell, “General algorithm for discrete nonlinear approximation calculations,” in: C.K. Chui, L.L. Schumaker and J.D. Ward, eds., Approximation Theory IV (Academic Press, New York, 1983) pp. 187–218.

H. Rådström, “An embedding theorem for spaces of convex sets,” Proceedings of the American Mathematical Society 3 (1952) 165–169.

S.M. Robinson, “Extension of Newton's method to nonlinear functions with values in a cone,” Numerische Mathematik 19 (1972) 341–347.

S.M. Robinson, “Normed convex processes,” Transactions of the American Mathematical Society 174 (1972) 127–140.

S.M. Robinson, “Stability theory for systems of inequalities, Part I: linear systems,” SIAM Journal on Numerical Analysis 12 (1975) 754–769.

S.M. Robinson, “Regularity and stability for convex multivalued functions,” Mathematics of Operations Research 1 (1976) 130–143.

S.M. Robinson, “Stability theory for systems of inequalities, Part II: Differentiable nonlinear systems,” SIAM Journal on Numerical Analysis 13 (1976) 497–513.

R.T. Rockafellar, Convex Analysis (Princeton University Press, Princeton, NJ, 1970).

R.T. Rockafellar, “First- and second-order epi-differentiability in nonlinear programming,” Transactions of the American Mathematical Society 307 (1988) 75–108.

R.S. Womersley, “Local properties of algorithms for minimizing nonsmooth composite functions,” Mathematical Programming 32 (1) (1985) 69–89.

Additional information

This material is based on research supported by National Science Foundation Grants CCR-9157632 and DMS-9102059, the Air Force Office of Scientific Research Grant F49620-94-1-0036, and the United States–Israel Binational Science Foundation Grant BSF-90-00455.

Cite this article

Burke, J.V., Ferris, M.C. A Gauss–Newton method for convex composite optimization. Mathematical Programming 71, 179–194 (1995). https://doi.org/10.1007/BF01585997
