Abstract

The set-membership affine projection (SM-AP) algorithm has many desirable characteristics such as fast convergence speed, low power consumption due to data-selective updates, and low misadjustment. The main reason hindering the widespread use of the SM-AP algorithm is the lack of analytical results related to its steady-state performance. In order to bridge this gap, this paper presents an analysis of the steady-state mean square error (MSE) of a general form of the SM-AP algorithm. The proposed analysis results in closed-form expressions for the excess MSE and misadjustment of the SM-AP algorithm, which are also applicable to many other algorithms. This work also provides guidelines for the analysis of the whole family of SM-AP algorithms. The analysis relies on the energy conservation method and has the attractive feature of not assuming a specific model for the input signal. In addition, the choice of the upper bound for the error of the SM-AP algorithm is addressed for the first time. Simulation results corroborate the accuracy of the proposed analysis.

Notes

In this paper, the convergence of an algorithm must be understood as the convergence of its MSE sequence \(\{ \operatorname{E}[e^{2}(k)] \}_{k \in\mathbb{N}}\) to its steady-state value.

A projection of a point \(z\) on a set \(Z\) is any point \(z_{p} \in Z\) that is closest to \(z\).
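As an illustration of this definition, the following minimal sketch projects a coefficient vector onto a set of the form \(\{ \mathbf{v} : |d - \mathbf{v}^{T}\mathbf{x}| \leq \overline{\gamma} \}\), which is the kind of constraint set that appears in the discussion of assumption As-6. The function name and the numerical values are hypothetical and chosen only for illustration; the input vector is assumed to be nonzero.

```python
def project_onto_constraint_set(w, x, d, gamma_bar):
    """Return the point of {v : |d - v.x| <= gamma_bar} closest to w."""
    e = d - sum(wi * xi for wi, xi in zip(w, x))  # error produced by w
    if abs(e) <= gamma_bar:
        return list(w)  # w already belongs to the set, so it is its own projection
    energy = sum(xi * xi for xi in x)  # ||x||^2, assumed nonzero
    # Move along x just far enough to reach the nearest bounding hyperplane.
    step = (e - gamma_bar * (1.0 if e > 0 else -1.0)) / energy
    return [wi + step * xi for wi, xi in zip(w, x)]


x = [1.0, 2.0]
w_p = project_onto_constraint_set([0.0, 0.0], x, 5.0, 1.0)
residual = 5.0 - sum(wi * xi for wi, xi in zip(w_p, x))
print(w_p, residual)
```

After the projection, the magnitude of the residual equals the bound, i.e., the projected point lies on the boundary of the set.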

The motivation is to highlight the similarities between the SM-AP and AP algorithms.

The same behavior is also valid for L=0. The only reason we did not plot a curve for L=0 is that the point \(\tau_{o}\) at which such a curve changes its inclination is outside the range of \(\tau\) shown in Fig. 3. In addition, if we were to increase the range of \(\tau\) to accommodate the case L=0, it would become harder to distinguish the other values of \(\tau_{o}\) for 1≤L≤4.

References

J.R. Deller, Set-membership identification in digital signal processing. IEEE ASSP Mag. 6, 4–20 (1989)

P.S.R. Diniz, Adaptive Filtering: Algorithms and Practical Implementation, 3rd edn. (Springer, New York, 2008)

P.S.R. Diniz, Convergence performance of the simplified set-membership affine projection algorithm. Circuits Syst. Signal Process. 30, 439–462 (2011)

P.S.R. Diniz, S. Werner, Set-membership binormalized data reusing LMS algorithms. IEEE Trans. Signal Process. 51, 124–134 (2003)

E. Fogel, Y.-F. Huang, On the value of information in system identification—bounded noise case. Automatica 18, 229–238 (1982)

S.L. Gay, S. Tavathia, The fast affine projection algorithm, in Proc. IEEE ICASSP 1995, May, Detroit, MI, USA (1995), pp. 3023–3026

S. Gollamudi, S. Nagaraj, S. Kapoor, Y.-F. Huang, Set-membership filtering and a set-membership normalized LMS algorithm with an adaptive step size. IEEE Signal Process. Lett. 5, 111–114 (1998)

S. Gollamudi, S. Nagaraj, S. Kapoor, Y.-F. Huang, Set-membership adaptive equalization and updator-shared implementation for multiple channel communications systems. IEEE Trans. Signal Process. 46, 2372–2384 (1998)

L. Guo, Y.-F. Huang, Set-membership adaptive filtering with parameter-dependent error bound tuning, in Proc. IEEE ICASSP 2005, May, Philadelphia, PA, USA (2005), pp. IV-369–IV-372

M.V.S. Lima, P.S.R. Diniz, Steady-state analysis of the set-membership affine projection algorithm, in Proc. IEEE ICASSP 2010, March, Dallas, TX, USA (2010), pp. 3802–3805

M.V.S. Lima, P.S.R. Diniz, On the steady-state MSE performance of the set-membership NLMS algorithm, in Proc. IEEE ISWCS 2010, York, UK (2010), pp. 389–393

W.A. Martins, M.V.S. Lima, P.S.R. Diniz, Semi-blind data-selective equalizers for QAM, in Proc. IEEE SPAWC2008, July, Recife, Brazil (2008), pp. 501–505

S. Nagaraj, S. Gollamudi, S. Kapoor, Y.-F. Huang, BEACON: an adaptive set-membership filtering technique with sparse updates. IEEE Trans. Signal Process. 47, 2928–2941 (1999)

K. Ozeki, T. Umeda, An adaptive filtering algorithm using an orthogonal projection to an affine subspace and its properties. Electron. Commun. Jpn. 67(A), 19–27 (1984)

A. Papoulis, Probability, Random Variables, and Stochastic Processes, 3rd edn. (McGraw Hill, New York, 1991)

R. Price, A useful theorem for nonlinear devices having Gaussian inputs. IEEE Trans. Inf. Theory IT-4, 69–72 (1958)

S.G. Sankaran, A.A.L. Beex, Convergence behavior of affine projection algorithms. IEEE Trans. Signal Process. 48, 1086–1096 (2000)

A.H. Sayed, Fundamentals of Adaptive Filtering (Wiley/IEEE, New York, 2003)

A.H. Sayed, M. Rupp, Error-energy bounds for adaptive gradient algorithms. IEEE Trans. Signal Process. 44, 1982–1989 (1996)

F.C. Schweppe, Recursive state estimate: unknown but bounded errors and system inputs. IEEE Trans. Autom. Control 13, 22–28 (1968)

H.-C. Shin, A.H. Sayed, Mean-square performance of a family of affine projection algorithms. IEEE Trans. Signal Process. 52, 90–102 (2004)

S. Werner, P.S.R. Diniz, Set-membership affine projection algorithm. IEEE Signal Process. Lett. 8, 231–235 (2001)

N.R. Yousef, A.H. Sayed, A unified approach to the steady-state and tracking analyses of adaptive filters. IEEE Trans. Signal Process. 49, 314–324 (2001)

www.lps.ufrj.br/~markus (click on the link Publications in the menu)

Additional information

Thanks to CNPq and FAPERJ, Brazilian research agencies, for funding this work.

Appendices

Appendix A: Proof of Proposition 1

Proof

Squaring the Euclidean norm of both sides of Eq. (27), we have

and since \(\tilde{\boldsymbol{\varepsilon}} (k)= - \mathbf {X}^{T}(k) \Delta\mathbf{w}(k+1)\) and \(\tilde{\mathbf{e}}(k) = -\mathbf{X}^{T}(k) \Delta\mathbf {w}(k)\), it follows that

Then, removing the equal terms on both sides of the last equation, we get

□

Appendix B: Correlation Expression

Utilizing Eq. (23), we can eliminate \(\tilde {\boldsymbol{\varepsilon}} (k)\) from Eq. (29), and since \(\hat{\mathbf{R}}(k)\) and \(\hat{\mathbf{S}}(k)\) are symmetric matrices, after some manipulations, it follows that

Using \(\mathbf{e}(k) = \tilde{\mathbf{e}}(k) + \mathbf{n}(k)\) (see Eq. (25)) and defining

Eq. (52) can be rewritten as

Expanding the equation above and considering \(\hat{\mathbf{S}}(k) \approx\hat{\mathbf{R}}^{-1} (k)\) (see statement St-4), we get

Using the relation \(\underline{\tilde{\mathbf{e}}}^{T} (k) \hat{\mathbf{S}}(k) \mathbf {n}(k) = \mathbf{n}^{T} (k) \hat{\mathbf{S}}(k) \underline{\tilde{\mathbf{e}}} (k)\), applying the trace to the equation above, and using the property \(\operatorname{tr}\{ \mathbf{A}\mathbf{B}\} = \operatorname{tr}\{\mathbf{B}\mathbf{A}\}\), we can write

Assuming that at steady-state, \(\hat{\mathbf{S}}(k)\) is uncorrelated with the random matrices \(\underline{\tilde{\mathbf{e}}}(k) \underline{\tilde{\mathbf{e}}}^{T}(k)\), \(\underline{\tilde{\mathbf{e}}}(k) \mathbf{n}^{T}(k)\), \(\underline{\tilde{\mathbf{e}}}(k) \boldsymbol{\gamma}^{T}(k)\), \(\mathbf{n}(k)\mathbf{n}^{T}(k)\), and \(\mathbf{n}(k)\boldsymbol{\gamma}^{T}(k)\) (see assumption As-2), we get

It is possible to eliminate the dependence on \(\underline{\tilde {\mathbf{e} }}(k)\) by substituting (53) in the equation above as follows:

Appendix C: Calculating \(\mathrm{E}{[\tilde{\mathbf{e}}(k)\tilde{\mathbf{e}}^{T}(k)]}\)

Examining the \((i+1)\)th row of Eqs. (24) and (25), we have

for \(i=0,\ldots,L\). Since \(\hat{\mathbf{R}}(k) \hat{\mathbf{S}}(k) \approx \mathbf{I}\) (see statement St-4), the \((i+1)\)th row of Eq. (23) is

Using Eq. (58) to replace \(e_{i}(k)\) in the equation above, it follows that

Squaring the equation above, we get

Note that the noiseless a posteriori error vector is related to the noiseless a priori error vector through the following relation:

Now, considering Eq. (61) at iteration k−1, substituting Eq. (62) into Eq. (61), and taking the expected value, we get

where we considered that at steady-state, \(P_{\operatorname{up}} (k)\) is a constant \(P_{\operatorname{up}}\).

Expanding Eq. (63), we get

which can be simplified using Eq. (32), the relation \(\operatorname{E} [ \gamma_{i}^{2}(k-1) ] = \overline{\gamma}^{2} \text { for } i = 0, 1, \ldots, L\), and \(\operatorname{E} [ n^{2}(k) ] = \sigma_{n}^{2}\) for all k (see Definition 1), leading to

Assuming that at steady-state, \(\tilde{e}_{i}(k-1)\) is a zero-mean Gaussian RV (see assumption As-4), we can apply Result 2 to Eq. (65), leading to the following relation:

where

Utilizing the relation \(e_{i}(k-1) = \tilde{e}_{i}(k-1) + n(k-1-i)\) in order to remove the dependence on the a priori error signal and rearranging the terms, we get

where we have used \(\operatorname{E} [{e}_{i}^{2}(k-1) ] = \operatorname{E} [{e}_{i}^{2}(k) ]\) and \(\operatorname{E} [\tilde{e}_{i}^{2}(k-1) ] = \operatorname{E} [\tilde {e}_{i}^{2}(k) ]\) (see statement St-2), and we neglected the terms depending on \(\operatorname{E} [ n(k-1-i) \tilde {e}_{i}(k-1) ]\) (see statement St-3).

Assuming that \(\rho_{i}(k) \approx\rho_{0}(k) \text{ for $i = 0, 1, \ldots, L$ }\) (see assumption As-5), in order to simplify the mathematical manipulations, we can rewrite the recursion given by (68) as

where

By induction, for 0≤i≤L−1, one can prove that

Assuming that \(\operatorname{E} [ \tilde{\mathbf{e}}(k) \tilde{\mathbf {e}}^{T}(k) ]\) is diagonally dominant (see assumption As-3), we can write

with \(\mathbf{A}_{1} = \operatorname{diag} \{ 1, a, a^{2}, \dots, a^{L} \}\), and \(\mathbf{A}_{2} = \operatorname{diag} \{ 0, 1, 1+a, \dots, \sum_{l=0}^{L-1} a^{l} \}\).
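The structure of \(\mathbf{A}_{1}\) and \(\mathbf{A}_{2}\) is what one obtains by unrolling a first-order linear recursion. The sketch below assumes, purely for illustration, a recursion of the form \(x_{i+1} = a x_{i} + b\) (the symbol `b` and the numerical values are hypothetical) and checks that its solution is \(x_{i} = [\mathbf{A}_{1}]_{ii} x_{0} + [\mathbf{A}_{2}]_{ii} b\).

```python
def diag_a1(a, L):
    """Diagonal of A_1 = diag{1, a, a^2, ..., a^L}."""
    return [a ** i for i in range(L + 1)]


def diag_a2(a, L):
    """Diagonal of A_2 = diag{0, 1, 1 + a, ..., sum_{l=0}^{L-1} a^l}."""
    return [sum(a ** l for l in range(i)) for i in range(L + 1)]


def iterate_recursion(x0, a, b, L):
    """Unroll the assumed recursion x_{i+1} = a * x_i + b for i = 0, ..., L - 1."""
    values = [x0]
    for _ in range(L):
        values.append(a * values[-1] + b)
    return values


a, b, x0, L = 0.5, 3.0, 2.0, 3  # illustrative values only
closed_form = [p * x0 + q * b for p, q in zip(diag_a1(a, L), diag_a2(a, L))]
unrolled = iterate_recursion(x0, a, b, L)
print(closed_form, unrolled)
```

Both lists coincide, which is the induction argument in numerical form: the geometric terms collect in \(\mathbf{A}_{1}\) and the partial geometric sums collect in \(\mathbf{A}_{2}\).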

Appendix D: Modeling \(\rho_{0}(k)\)

Equation (67) shows that \(\rho_{0}(k)\) is completely specified by \(\operatorname{E}[e_{0}^{2}(k)]\). Therefore, we propose the following approximation for \(\operatorname{E}[e_{0}^{2}(k)]\):

where

The approximation above originates from experimental observations and is a refinement of the approximation used in [3] for a simplified version of the SM-AP algorithm, namely the SM-AP algorithm with simple-choice constraint vector (SCCV). Observing the SCCV (see [2, 22]), it is clear that the SM-AP algorithm with fixed-modulus error-based constraint vector is more sensitive to the data reuse factor L, since the SCCV is chosen in such a way that many degrees of freedom are discarded. This dependence is represented in the approximation for \(\operatorname{E}[e_{0}^{2}(k)]\).

Appendix E: Modeling \(P_{\operatorname{up}}\)

Using arguments based on the central limit theorem [15], we can consider that, for a sufficiently large k, the error signal \(e_{0}(k) = d(k) - \mathbf{w}^{T}(k)\mathbf{x}(k)\) will have a Gaussian distribution. This can also be observed in practice by plotting the histogram of \(e_{0}(k)\), as done in [18]. In addition, due to the signal model (see Definition 1), the error signal is a zero-mean random variable.

The probability of updating the filter coefficients at a certain iteration k is given by \(P_{\operatorname{up}}(k) = P[ |e_{0}(k)| > \overline{\gamma}]\). After convergence it can be written as

\[ P_{\operatorname{up}} = 2 Q \biggl( \frac{\overline{\gamma}}{\sigma_{e}} \biggr), \tag{75} \]

where \(\sigma_{e}^{2}\) is the variance of \(e_{0}(k)\), and \(Q(\cdot)\) is the complementary Gaussian cumulative distribution function, defined as \(Q ( x ) = \int_{x}^{\infty}{\frac{1}{\sqrt{2\pi }}e^{-t^{2}/2} \,dt}\).
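For a zero-mean Gaussian error with standard deviation \(\sigma_{e}\), the two-sided tail probability \(P[|e_{0}(k)| > \overline{\gamma}]\) equals \(2Q(\overline{\gamma}/\sigma_{e})\). The following minimal sketch evaluates it with the standard-library complementary error function, using the identity \(Q(x) = \frac{1}{2}\operatorname{erfc}(x/\sqrt{2})\). The function names and the numerical values are illustrative assumptions.

```python
import math


def q_function(x):
    """Gaussian tail probability Q(x) = 0.5 * erfc(x / sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def update_probability(gamma_bar, sigma_e):
    """P[|e| > gamma_bar] for a zero-mean Gaussian e with std sigma_e."""
    return 2.0 * q_function(gamma_bar / sigma_e)


# Illustrative values: the threshold grows relative to the error std.
for ratio in (1.0, 2.0, 3.0):
    print(ratio, update_probability(ratio, 1.0))
```

The probability of update drops quickly as \(\overline{\gamma}/\sigma_{e}\) grows, which is the data-selective behavior that the Gaussian model is meant to capture.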

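If we use the independence assumption, the variance of the error can be written as \(\sigma^{2}_{e}=\sigma_{n}^{2} + \operatorname{E} [\Delta\mathbf{w}^{T}(k) \mathbf{R} \Delta\mathbf{w}(k) ]\), and utilizing the Rayleigh quotient [2], we can determine the region of possible values for \(\sigma_{e}^{2}\),

\[ \sigma_{n}^{2} + \lambda_{\min} \operatorname{E} [ \| \Delta\mathbf{w}(k) \|^{2} ] \leq \sigma_{e}^{2} \leq \sigma_{n}^{2} + \lambda_{\max} \operatorname{E} [ \| \Delta\mathbf{w}(k) \|^{2} ], \tag{76} \]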

where \(\lambda_{\min}\) and \(\lambda_{\max}\) are the smallest and the largest eigenvalues of the autocorrelation matrix \(\mathbf{R}\), respectively. Since both \(\lambda_{\min}, \lambda_{\max} \geq 0\) [2] and, at steady-state, we expect \(\operatorname{E} [ \| \Delta\mathbf{w}(k) \|^{2} ]\) to be small, we can consider \(\sigma_{e}^{2}\) to be close to \(\sigma_{n}^{2}\). So, the following model seems reasonable:

where η is a small positive constant that must be chosen as

These values of η were empirically determined and tested in many simulation scenarios. In fact, the results are not very sensitive to the choice of η. Any choice of η∈[0.1,0.3] leads to good results. If some information about the autocorrelation of the input signal is available, one should choose η as in Eq. (78) in order to enhance the theoretical approximations.
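The Rayleigh-quotient argument behind the range of \(\sigma_{e}^{2}\) can be checked numerically. The sketch below is only an illustration under stated assumptions: a hypothetical \(2 \times 2\) autocorrelation matrix with unit diagonal and off-diagonal entry `RHO` (so its eigenvalues are \(1 \pm \texttt{RHO}\)), and small random coefficient deviations drawn with a fixed seed. None of these values come from the paper.

```python
import random

RHO = 0.5                      # hypothetical correlation between adjacent samples
R = [[1.0, RHO], [RHO, 1.0]]   # hypothetical autocorrelation matrix
LAMBDA_MIN, LAMBDA_MAX = 1.0 - RHO, 1.0 + RHO  # eigenvalues of this R
SIGMA_N2 = 0.01                # hypothetical noise variance


def quadratic_form(r, v):
    """Compute v^T r v for a 2x2 matrix r."""
    return sum(v[i] * r[i][j] * v[j] for i in range(2) for j in range(2))


rng = random.Random(0)
deviations = [[rng.gauss(0.0, 0.05), rng.gauss(0.0, 0.05)] for _ in range(2000)]

# Sample estimates of E[||dw||^2] and of sigma_e^2 = sigma_n^2 + E[dw^T R dw].
mean_norm2 = sum(v[0] ** 2 + v[1] ** 2 for v in deviations) / len(deviations)
sigma_e2 = SIGMA_N2 + sum(quadratic_form(R, v) for v in deviations) / len(deviations)

lower = SIGMA_N2 + LAMBDA_MIN * mean_norm2
upper = SIGMA_N2 + LAMBDA_MAX * mean_norm2
print(lower, sigma_e2, upper)
```

When the coefficient deviations are small, both bounds stay close to the noise variance, which is the observation that motivates modeling \(\sigma_{e}^{2}\) as slightly larger than \(\sigma_{n}^{2}\).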

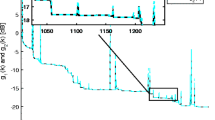

Figure 7 depicts the steady-state probability of update \(P_{\operatorname{up}}\) for different values of L, considering the Basic Scenario of Sect. 5. As can be observed, the theoretical curve follows closely the experimental one for L=0. When L≠0, however, we observe that the minimum value of \(P_{\operatorname{up}}\) stabilizes at a value different from 0.

The formula for \(P_{\operatorname{up}}\) given in Eq. (75) does not take into account that the Gaussian assumption is not valid for L>0. As can be seen in Fig. 7, the steady-state probability of updating of the SM-AP algorithm increases with L, and for L>0, the tail of the curve does not fall to 0. So, in order to properly estimate \(P_{\operatorname{up}}\), we need to add a constant, leading to the following expression:

where \(P_{\min}\) is a rough estimate of the smallest value that \(P_{\operatorname{up}}\) assumes as a function of \(\overline{\gamma}\). Table 2 summarizes the values of \(P_{\min}\) that were used in our experiments. These values provided good steady-state MSE results in different scenarios, especially for values of \(\tau\) that yield low steady-state probability of update, which agrees with assumption As-6 used to simplify the expression for the EMSE of the SM-AP algorithm. Moreover, the approximation given by Eq. (79) has the attractive feature of not being very sensitive to small variations in \(P_{\min}\).
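A minimal sketch of the correction described above, assuming that the constant is simply added to the Gaussian-tail term. The clipping at 1 is a safeguard added here so that the result remains a valid probability, and the value of `p_min` is hypothetical rather than taken from Table 2.

```python
import math


def q_function(x):
    """Gaussian tail probability Q(x) = 0.5 * erfc(x / sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def update_probability_with_floor(gamma_bar, sigma_e, p_min):
    """Gaussian-tail estimate of P_up plus a constant floor (assumed additive form)."""
    gaussian_part = 2.0 * q_function(gamma_bar / sigma_e)
    return min(1.0, gaussian_part + p_min)  # clipping is an added safeguard


P_MIN = 0.02  # hypothetical floor, for illustration only
for gamma_bar in (0.0, 1.0, 2.0, 4.0, 10.0):
    print(gamma_bar, update_probability_with_floor(gamma_bar, 1.0, P_MIN))
```

For large thresholds the Gaussian term vanishes and the estimate settles at the floor, mimicking the nonzero tail observed for L>0.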

Appendix F: Assumptions and Statements

Now we discuss the assumptions and statements used in the paper. The assumptions are:

As-1 The RV \(p(k)\) is independent of the event \(\{ |e_{0}(k)| > \overline{\gamma}\}\). This assumption is reasonable at steady-state, when it is expected that the algorithm updates in different directions while always keeping \(\mathbf{w}(k)\) close to \(\mathbf{w}_{o}\), no matter the value of \(|e_{0}(k)|\).

As-2 At steady-state \(\hat{\mathbf{S}}(k)\) is uncorrelated with the random matrices \(\underline{\tilde{\mathbf{e}}}(k) \underline{\tilde{\mathbf{e}}}^{T}(k)\), \(\underline{\tilde{\mathbf{e}}}(k) \mathbf{n}^{T}(k)\), \(\underline{\tilde{\mathbf{e}}}(k) \boldsymbol{\gamma}^{T}(k)\), \(\mathbf{n}(k)\mathbf{n}^{T}(k)\), and \(\mathbf{n}(k)\boldsymbol{\gamma}^{T}(k)\). Uncorrelatedness assumptions are required in all adaptive filtering analyses in order to maintain mathematical tractability. The RVs \(\hat{\mathbf{S}}(k)\) and \(\mathbf{n}(k)\mathbf{n}^{T}(k)\) are uncorrelated due to Definition 1. The other uncorrelatedness assumptions are motivated by the fact that \(\hat{\mathbf{S}}(k)\) varies slowly with k, especially for high values of N.

As-3 Diagonally dominant assumption. Since \(\operatorname{E} [ \tilde{\mathbf{e}}(k) \tilde{\mathbf{e}}^{T}(k) ]\), \(\operatorname{E} [ \boldsymbol{\gamma}(k) \boldsymbol{\gamma}^{T} (k) ]\), and \(\operatorname{E} [ \mathbf{n} (k) \mathbf{n}^{T}(k) ]\) are autocorrelation matrices, they have higher values on the main diagonal. In addition, from Definition 2 and Result 2, the cross-correlation matrices presented in Eq. (30) can be written as a sum of one of the autocorrelation matrices with the cross-correlation matrix \(\operatorname{E} [ \tilde{\mathbf{e}} (k) \mathbf{n}^{T} (k) ] \approx\mathbf{0}\), see Eq. (36).

As-4 At steady-state \(\tilde{e}_{i}(k-1)\) is a zero-mean Gaussian RV. By using this assumption together with the distribution of \(n(k)\) given in Definition 1, we have that \(n(k-1-i)\) and \(e_{i}(k-1)\) as well as \(\tilde{e}_{i}(k-1)\) and \(e_{i}(k-1)\) are jointly Gaussian RVs [15]. So, we can apply Result 2 to Eq. (65).

As-5 At steady-state \(\rho_{i}(k) \approx \rho_{0}(k)\), for \(i=0,\ldots,L\). This is reasonable for small values of L; e.g., for L=0, the relation given by assumption As-5 is an equality rather than an approximation.

As-6 \(P_{\operatorname{up}} \ll1\), or μ≪1, or \(P_{\operatorname{up}} \mu\ll1\). Since μ=1 for the SM-AP algorithm, we must have \(P_{\operatorname{up}} \ll1\). This is not true for small values of \(\overline{\gamma}\). For example, in the limiting case where \(\overline{\gamma}\rightarrow 0\), we have \(P_{\operatorname{up}} \rightarrow1\). This implies that the proposed theoretical MSE expressions of the SM-AP algorithm are less accurate for small values of \(\overline{\gamma}\). However, from Chebyshev’s inequality [15, 18] we know that \(P[\mathbf{w}_{o} \in\mathcal{H}(k)] = P[|d(k) - \mathbf{w}_{o}^{T} \mathbf{x}(k)| \leq\overline{\gamma}] = 1 - P[| n(k) | > \overline{\gamma}] \geq1 - ( {\sigma_{n}}/{\overline{\gamma}} )^{2} \), i.e., in order to have \(\mathbf{w}_{o} \in\mathcal{H}(k)\) with high probability, we must choose \(\overline{\gamma}\gg\sigma_{n}\) (in fact, we know that Chebyshev’s inequality provides a conservative lower bound; for n(k) satisfying Definition 1(c), e.g., choosing \(\overline{\gamma}=2\sigma_{n}\) makes \(P[\mathbf{w}_{o} \in\mathcal{H}(k)] \approx 95\,\%\)). On the other hand, for too large values of \(\overline{\gamma}\), the algorithm may not update at all, thus not converging to a point close to \(\mathbf{w}_{o}\).
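The gap between the Chebyshev bound and the actual probability in As-6 can be made concrete with a short computation. The sketch below assumes, as the 95 % figure suggests, that the noise is zero-mean Gaussian; the function names are illustrative.

```python
import math


def chebyshev_lower_bound(ratio):
    """Lower bound 1 - (sigma_n / gamma_bar)^2, with ratio = gamma_bar / sigma_n."""
    return max(0.0, 1.0 - 1.0 / ratio ** 2)


def gaussian_probability(ratio):
    """P[|n| <= gamma_bar] for zero-mean Gaussian n, with ratio = gamma_bar / sigma_n."""
    return math.erf(ratio / math.sqrt(2.0))


for ratio in (1.0, 2.0, 3.0):
    print(ratio, chebyshev_lower_bound(ratio), gaussian_probability(ratio))
```

For \(\overline{\gamma} = 2\sigma_{n}\), the bound only guarantees a probability of 0.75, whereas the Gaussian model gives about 0.95, which illustrates how conservative Chebyshev's inequality is in this setting.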

As-7 The elements on the main diagonal of \(\operatorname{E} [ \hat{\mathbf{S}}(k) ]\) are equal. This assumption on the input-signal model is required to maintain the mathematical tractability of the problem at hand. Note that, for L=0, this is always true (i.e., not an assumption), and for L=1, this is equivalent to satisfying \(\operatorname{E} [ \| \mathbf{x}(k) \|^{2} ] = \operatorname{E} [ \| \mathbf {x}(k-1) \|^{2} ]\), which is very likely to be a good approximation, especially for long vectors (since the difference between the terms on the left-hand side and right-hand side corresponds to just one sample/element) or for well-behaved input signals (e.g., stationary signals). In addition, since \(\mathbf{A}_{1}\) is a diagonal matrix, the ratio \(\frac{ \operatorname{tr} \{ \mathbf{A}_{1} \operatorname{E} [ \hat {\mathbf{S}}(k) ] \} }{ \operatorname{tr} \{ \operatorname{E} [ \hat{\mathbf{S}}(k) ] \} }\) represents a weighted mean of the elements on the main diagonal of \(\mathbf{A}_{1}\), whose weights are the diagonal elements of \(\operatorname{E} [ \hat{\mathbf{S}}(k) ]\). This assumption enables us to replace the weighted mean with an arithmetic mean, which is much easier to compute and also avoids the problem of determining the weights.
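The effect of As-7 on the trace ratio can be illustrated with a small numerical example. In the sketch below, the diagonal of \(\mathbf{A}_{1}\) is built from a hypothetical value of \(a\), and the diagonal of \(\operatorname{E}[\hat{\mathbf{S}}(k)]\) is replaced by made-up weights; only the diagonal entries matter because \(\mathbf{A}_{1}\) is diagonal.

```python
def weighted_mean_ratio(a1_diag, s_diag):
    """tr{A_1 E[S]} / tr{E[S]} when A_1 is diagonal (only diagonals are needed)."""
    return sum(a * s for a, s in zip(a1_diag, s_diag)) / sum(s_diag)


def arithmetic_mean(a1_diag):
    """Plain average of the diagonal entries of A_1."""
    return sum(a1_diag) / len(a1_diag)


a, L = 0.5, 2                                 # hypothetical values
a1_diag = [a ** i for i in range(L + 1)]      # diag{1, a, a^2}

equal_weights = [4.0, 4.0, 4.0]               # As-7 holds exactly
unequal_weights = [4.0, 4.2, 3.9]             # As-7 holds only approximately

print(arithmetic_mean(a1_diag))
print(weighted_mean_ratio(a1_diag, equal_weights))
print(weighted_mean_ratio(a1_diag, unequal_weights))
```

With equal weights the ratio coincides with the arithmetic mean, and with nearly equal weights it deviates only slightly, which is why the assumption is harmless for well-behaved input signals.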

The statements used in the analysis are:

St-1 \(\mathbf{X}(k)\) has full column rank. This guarantees the existence of \(\hat{\mathbf{R}}^{-1} (k)\) and is usually true for a tapped-delay-line structure with a random input signal during steady-state. An exception would be a signal that is constant during a long interval, but this is not likely to occur with random input signals.

St-2 At steady-state \(\operatorname{E} [{e}_{i}^{2}(k-1) ] = \operatorname{E} [{e}_{i}^{2}(k) ]\) and \(\operatorname{E} [\tilde{e}_{i}^{2}(k-1) ] = \operatorname{E} [\tilde {e}_{i}^{2}(k) ]\). Since the starting point of the analysis is to assume that the algorithm converged in order to analyze its steady-state behavior, the sequence \(\{ \operatorname{E} [{e}_{i}^{2}(k) ] \}_{k\in\mathbb{N}}\) converges, and, therefore, the first equality in St-2 always holds at steady-state. The second equality follows by a combination of the first equality and the noise model given in Definition 1.

St-3 At steady-state \(\operatorname{E} [ n(k-1-i) \tilde {e}_{i}(k-1) ]\) can be neglected. This statement implies that \(\operatorname{E} [ n(k-1-i) \tilde{e}_{i}(k-1) ] \approx0\) for all i. Recalling Result 1 and Definition 1, we know that, for i=0, \(\operatorname{E} [ n(k-1) \tilde{e}_{0}(k-1) ] = 0\). Note that St-3 becomes less accurate as i grows (or equivalently, for large values of L).

St-4 \(\hat{\mathbf{S}}(k) \approx\hat{\mathbf {R}}^{-1} (k)\). This follows from St-1 and the fact that δ is chosen as a small constant used to avoid numerical instability problems that may occur especially in the first iterations, i.e., δ≪1 as described in Sect. 3.2.
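Statements St-1 and St-4 can be illustrated together for L=1. The sketch below assumes the usual affine-projection conventions, which are not restated in this part of the text: \(\mathbf{X}(k) = [\mathbf{x}(k)\ \mathbf{x}(k-1)]\) with tapped-delay-line regressors, \(\hat{\mathbf{R}}(k) = \mathbf{X}^{T}(k)\mathbf{X}(k)\), and \(\hat{\mathbf{S}}(k) = [\hat{\mathbf{R}}(k) + \delta\mathbf{I}]^{-1}\). The signals and the value of `DELTA` are hypothetical.

```python
def regressor(signal, k, n_taps):
    """Tapped-delay-line vector [s(k), s(k-1), ..., s(k-n_taps+1)]."""
    return [signal[k - j] for j in range(n_taps)]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def gram_2x2(c0, c1):
    """R_hat = X^T X for X = [c0 c1], i.e., data reuse factor L = 1."""
    return [[dot(c0, c0), dot(c0, c1)], [dot(c1, c0), dot(c1, c1)]]


def det_2x2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def regularized_inverse_2x2(m, delta):
    """S_hat = (R_hat + delta * I)^{-1} (assumed form of the regularized inverse)."""
    a, b = m[0][0] + delta, m[0][1]
    c, d = m[1][0], m[1][1] + delta
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matmul_2x2(p, q):
    return [[sum(p[i][l] * q[l][j] for l in range(2)) for j in range(2)]
            for i in range(2)]


N_TAPS, K, DELTA = 3, 4, 1e-6
varying = [1.0, -2.0, 0.5, 3.0, -1.0]   # hypothetical non-constant input
constant = [1.0] * 5                    # input that violates St-1

r_varying = gram_2x2(regressor(varying, K, N_TAPS), regressor(varying, K - 1, N_TAPS))
r_constant = gram_2x2(regressor(constant, K, N_TAPS), regressor(constant, K - 1, N_TAPS))

# St-4: with full column rank and small delta, R_hat * S_hat is close to I.
product = matmul_2x2(r_varying, regularized_inverse_2x2(r_varying, DELTA))
max_deviation = max(abs(product[i][j] - (1.0 if i == j else 0.0))
                    for i in range(2) for j in range(2))
print(det_2x2(r_varying), det_2x2(r_constant), max_deviation)
```

The constant input produces identical columns in \(\mathbf{X}(k)\) and hence a singular \(\hat{\mathbf{R}}(k)\), while the non-constant input yields a nonsingular matrix for which the regularized inverse is practically indistinguishable from the true inverse.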

Cite this article

Lima, M.V.S., Diniz, P.S.R. Steady-State MSE Performance of the Set-Membership Affine Projection Algorithm. Circuits Syst Signal Process 32, 1811–1837 (2013). https://doi.org/10.1007/s00034-012-9545-4

DOI: https://doi.org/10.1007/s00034-012-9545-4