Abstract

The massive consumption of antibiotics in the ICU is responsible for substantial ecological side effects that promote the dissemination of multidrug-resistant bacteria (MDRB) in this environment. Strikingly, up to half of ICU patients receiving empirical antibiotic therapy have no definitively confirmed infection, while de-escalation and shortened treatment duration are insufficiently considered in those with documented sepsis, highlighting the potential benefit of implementing antibiotic stewardship programs (ASP) and other quality improvement initiatives. The objective of this narrative review is to summarize the available evidence, emerging options, and unsolved controversies for the optimization of antibiotic therapy in the ICU. Published data notably support the need for better identification of patients at risk of MDRB infection, more accurate diagnostic tools enabling a rule-in/rule-out approach for bacterial sepsis, an individualized reasoning for the selection of single-drug or combination empirical regimen, the use of adequate dosing and administration schemes to ensure the attainment of pharmacokinetics/pharmacodynamics targets, concomitant source control when appropriate, and a systematic reappraisal of initial therapy in an attempt to minimize collateral damage on commensal ecosystems through de-escalation and treatment-shortening whenever conceivable. This narrative review also aims at compiling arguments for the elaboration of actionable ASP in the ICU, including improved patient outcomes and a reduction in antibiotic-related selection pressure that may help to control the dissemination of MDRB in this healthcare setting.

This narrative review summarizes the available evidence, emerging options, and unsolved controversies for the optimization of antibiotic therapy in the ICU. The potential benefit of antibiotic stewardship programs to improve patient outcomes and reduce the ecological side effects of these drugs is also discussed.

Introduction

Antibiotics are massively used in ICUs around the world [1]. While the adequacy and the early implementation of empirical coverage are pivotal for curing patients with community- and hospital-acquired sepsis, antimicrobial therapy is not always targeted and, in more than one out of two cases, may be prescribed in patients without confirmed infections. Moreover, antibiotic de-escalation is insufficiently considered. The resulting selection pressure together with the incomplete control of cross-colonization with multidrug-resistant bacteria (MDRB) makes the ICU an important determinant of the spread of these pathogens in the hospital. As instrumental contributors to antimicrobial stewardship programs (ASP), intensivists should be at the leading edge of the conception, optimization, and promotion of therapeutic schemes for severe infections and sepsis, including the limitation of antimicrobial overuse.

In this narrative review based on a literature search (MEDLINE database) completed in September 2018, we sought to summarize recent advances and emerging perspectives for the optimization of antibiotic therapy in the ICU, notably better identification of patients at risk of MDRB infection, more accurate diagnostic tools enabling a rule-in/rule-out approach for bacterial sepsis, an individualized reasoning for the selection of single-drug or combination empirical regimen, the use of adequate dosing and administration schemes to ensure the attainment of pharmacokinetics/pharmacodynamics targets, concomitant source control when appropriate, and a systematic reappraisal of initial therapy in an attempt to minimize collateral damage on commensal ecosystems through de-escalation and treatment-shortening whenever conceivable. We also aimed to compile arguments for the elaboration of actionable ASP in the ICU, including improved patient outcomes and a reduction in antibiotic-related selection pressure that may help to control the dissemination of MDRB in this healthcare setting.

How antimicrobial therapy influences bacterial resistance

The burden of infections due to extended-spectrum beta-lactamase-producing Enterobacteriaceae (ESBL-E) and MDR Pseudomonas aeruginosa is rising steadily, carbapenem-resistant Acinetobacter baumannii and carbapenemase-producing Enterobacteriaceae (CRE) are spreading globally, while methicillin-resistant Staphylococcus aureus (MRSA) and vancomycin-resistant enterococci generate major issues in several geographical areas [2,3,4,5,6,7,8,9,10,11,12,13,14]. These trends now apply for both ICU-acquired infections and imported bacterial sepsis as a result of the successful dissemination of MDRB in hospital wards and other healthcare environments (Fig. 1).

Fig. 1 Current resistance rates in major pathogens responsible for hospital-acquired infections according to World Health Organization (WHO) regions. 3GCR third-generation cephalosporin-resistant, CR carbapenem-resistant, MDR multidrug-resistant, MR methicillin-resistant. Data were extracted from the WHO Antimicrobial Resistance Global Report 2014 [171], National Healthcare Safety Network/Centers for Disease Control and Prevention Report 2011–2014 [11], European Antimicrobial Resistance Surveillance Network Annual Report 2016 [172], International Nosocomial Infection Control Consortium Report 2010–2015 [10], CHINET Surveillance Network Report 2014 [14], and other references [5, 12, 13]. Available resistance rates in the specific context of ICU-acquired infections are in the upper ranges of reported values for all geographical areas.

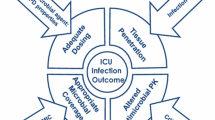

Up to 70% of ICU patients receive empirical or definite antimicrobial therapy on a given day [1]. The average volume of antibiotic consumption in this population has been recently estimated as 1563 defined daily doses (DDD) per 1000 patient-days (95% confidence interval 1472–1653)—that is, almost three times higher than in ward patients, with marked disparities for broad-spectrum agents such as third-generation cephalosporins [15]. Whilst most of the underlying mechanisms ensue from a succession of sporadic genetic events that are not directly induced by antibiotics, the selection pressure exerted by these drugs stands as a potent driver of bacterial resistance (Fig. 2) [16, 17].
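
The consumption metric quoted above can be made concrete with a short calculation. The Python sketch below converts the grams of each antibiotic dispensed into defined daily doses and normalizes the total to 1000 patient-days. The function name, the drug labels, and every numeric input (including the DDD values) are hypothetical placeholders chosen for illustration; real DDD values should be taken from the WHO ATC/DDD index.

```python
def ddd_per_1000_patient_days(grams_used, ddd_grams, patient_days):
    """Return antibiotic consumption in DDD per 1000 patient-days.

    grams_used   -- dict: drug label -> grams dispensed over the period
    ddd_grams    -- dict: drug label -> grams in one defined daily dose
    patient_days -- total patient-days in the unit over the same period
    """
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    total_ddd = 0.0
    for drug, grams in grams_used.items():
        # Number of DDDs for one drug = grams dispensed / grams per DDD
        total_ddd += grams / ddd_grams[drug]
    return 1000.0 * total_ddd / patient_days


# Hypothetical one-month figures for a single ICU (illustrative only)
grams_used = {"drug_a": 600.0, "drug_b": 450.0}
ddd_grams = {"drug_a": 2.0, "drug_b": 3.0}  # placeholder DDD values
rate = ddd_per_1000_patient_days(grams_used, ddd_grams, patient_days=500)
print(f"{rate:.0f} DDD per 1000 patient-days")
```

With these made-up inputs, the unit would report 900 DDD per 1000 patient-days (300 DDD of the first drug plus 150 DDD of the second, over 500 patient-days).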

Fig. 2 Drivers of antimicrobial resistance in the ICU. MDRB multidrug-resistant bacteria, ASP antimicrobial stewardship programs, ICP infection control programs. “Direct” selection pressure indicates the selection of a pathogen with resistance to the administered drug. Green vignettes indicate the positioning of countermeasures. ASP may notably encompass every intervention aimed at limiting the ecological impact of antimicrobial agents, including rationalized empirical initiation, choice of appropriate drugs with the narrowest spectrum of activity (especially against resident intestinal anaerobes) and minimal bowel bioavailability, and reduced treatment duration [173]. ICP may include educational interventions to ensure a high level of compliance with hand hygiene and other standard precautions, targeted contact precautions in MDRB carriers (e.g., carbapenemase-producing Enterobacteriaceae), appropriate handling of excreta, and environment disinfection [167].

At the patient level, antimicrobial exposure allows the overgrowth of pathogens with intrinsic or acquired resistance to the administered drug within commensal ecosystems or, to a lesser extent, at the site of infection. Of note, some mechanisms may confer resistance to various classes, notably the overexpression of efflux pumps in non-fermenting Gram-negative bacteria, thereby resulting in the selection of MDR mutants following only a single-drug exposure [18]. At the ICU scale, consumption volumes of a given class correlate with resistance rates in clinical isolates, including for carbapenems or polymyxins [19,20,21,22,23,24,25,26], although this may fluctuate depending on bacterial species and settings [27, 28].

Yet, in addition to its clinical spectrum, anti-anaerobic properties should be considered when appraising the ecological impact of each antibiotic [29]. Indeed, acquisition of MDR Gram-negative bacteria through in situ selection, cross-transmission, or environmental reservoirs may be eased by antimicrobial-related alterations of the normal gut microbiota—primarily resident anaerobes—and the colonization resistance that it confers [30]. A prior course of anti-anaerobic drugs may notably predispose to colonization with ESBL-E [31], AmpC-hyperproducing Enterobacteriaceae [32], or CRE [33, 34]. The degree of biliary excretion of the drug appears as another key factor to appraise its potential impact on intestinal commensals [35,36,37].

Risk factors for multidrug-resistant pathogens

The clinical value of identifying risk factors for MDRB infection is to guide empirical therapy before culture results, that is, pathogen identification and antimicrobial susceptibility testing (AST), become available. However, no single algorithm can predict an MDRB infection given the complex interplay between the host, the environment, and the pathogen, thus requiring an individualized probabilistic approach for the selection of empirical drugs (Table 1).

Colonization markedly amplifies the risk of subsequent infection with a given MDRB. However, the positive predictive value of this risk factor never exceeds 50%, whatever the colonizer [2, 38,39,40]. For instance, ESBL-E infections occur during the ICU stay in only 10–25% of ESBL-E carriers [41]. Whether an MDRB carrier becomes infected is related to a further series of factors that may or may not be related to those associated with the risk of acquired colonization [2, 38, 39]. Overall, the presence or absence of documented carriage should not be considered as the unique requisite for the choice of empirical therapy.
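
To illustrate why carriage alone is a weak predictor, the following Python sketch computes positive and negative predictive values from a 2×2 table. The counts are hypothetical: a 15% infection rate among carriers was chosen to fall within the 10–25% range cited above, and the other cells are invented for the example. They are not data from the referenced studies.

```python
def predictive_values(tp, fp, fn, tn):
    """Return (PPV, NPV) for a binary risk factor.

    tp -- carriers who develop infection with the carried MDRB
    fp -- carriers who do not
    fn -- non-carriers who develop that infection
    tn -- non-carriers who do not
    """
    ppv = tp / (tp + fp)
    npv = tn / (tn + fn)
    return ppv, npv


# Hypothetical cohort: 100 carriers (15 infected), 900 non-carriers (9 infected)
ppv, npv = predictive_values(tp=15, fp=85, fn=9, tn=891)
print(f"PPV = {ppv:.2f}, NPV = {npv:.2f}")
```

In this invented cohort, a positive screening result predicts infection in only 15% of carriers, whereas a negative result is far more informative (NPV of 0.99), which is consistent with treating carriage as one input among several rather than as a sole criterion.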

Patients with advanced co-morbid illnesses, prolonged hospital stays, use of invasive procedures, and prior antibiotic exposure are at increased risk of MDRB infections [42, 43].

The patient location is another determinant of risk as there are vast differences in the epidemiology of MDRB globally, regionally, and even within hospitals in the same city [2, 44]. Reasons for these discrepancies may include socioeconomic factors as well as variations in case-mix, antimicrobial consumption, and hygiene practices.

When not to start antimicrobials in the ICU

Although mixed [45], the available evidence supports a beneficial effect of prompt antibiotic administration on survival rates in sepsis and septic shock, irrespective of the number of organ dysfunctions [46,47,48,49]. However, the clinical diagnosis of sepsis is challenging in critically ill patients having multiple concurrent disease processes, with up to 50% of febrile episodes being of non-infectious origin [50]. Furthermore, collection of microbiological evidence for infection is typically slow, and previous antibiotic exposure may render results unreliable. Indeed, cultures remain negative in 30–80% of patients clinically considered infected [51, 52]. Uncertainty regarding antibiotic initiation in patients with suspected lower respiratory tract infection is further complicated by the fact that as many as one-third of pneumonia cases requiring ICU admission are actually viral [53, 54].

In 2016, the Sepsis-3 task force introduced the quick sepsis-related organ failure assessment score (qSOFA), a bedside clinical tool for early sepsis detection [55]. Although the predictive value of qSOFA for in-hospital mortality has been the focus of several external validation studies [56], it remains to be investigated whether this new score may help to rationalize antibiotic use in patients with suspected infection. Yet, published data suggest that qSOFA may lack sensitivity for early identification of patients meeting the Sepsis-3 criteria for sepsis [57].

Hence, antibiotics are mostly used empirically in ICU patients [58]. A provocative before–after study, however, suggested that aggressive empirical antibiotic use might be harmful in this population [59]. In fact, a conservative approach—with antimicrobials started only after confirmed infection—was associated with a more than 50% reduction in adjusted mortality as well as higher rates of appropriate initial therapy and shorter treatment durations.

Biomarkers may help to identify or—perhaps more importantly—rule out bacterial infections in this setting, thus limiting unnecessary antibiotic use and encouraging clinicians to search for alternative diagnoses. Many cytokines, cell surface markers, soluble receptors, complement factors, coagulation factors, and acute phase reactants have been evaluated for sepsis diagnosis [60], yet most offer only poor discrimination [61]. Procalcitonin (PCT) levels are high in bacterial sepsis but remain fairly low in viral infections and most cases of non-infectious systemic inflammatory response syndrome (SIRS). However, a PCT-based algorithm for initiation (or escalation) of antibiotic therapy in ICU patients neither decreases overall antimicrobial consumption nor shortens time to adequate therapy or improves patient outcomes [62]. Thus, PCT is currently not recommended as part of the decision-making process for antibiotic initiation in ICU patients [49].

Considering the complexity of the host response and biomarker kinetics, a combined approach which integrates the clinical pretest probability of infection could facilitate the discrimination between bacterial sepsis and non-infectious SIRS in emergency departments and probably also in critically ill patients [63]. Given their high sensitivity, such multi-marker panels may be primarily used to rule out sepsis, albeit only in a subset of patients as a result of their suboptimal specificity (Table E1). In contrast, novel molecular assays for rapid pathogen detection in clinical samples show good specificity, yet poor sensitivity, thus providing a primarily rule-in method for infection (see below). For the foreseeable future, however, physicians will remain confronted with considerable diagnostic uncertainty and, in many cases, still have to rely on their clinical judgment for decisions to withhold or postpone antimicrobial therapy.
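
The rule-out and rule-in reasoning described above can be sketched with likelihood ratios, which update a clinical pretest probability after a test result. The Python example below is a minimal illustration; the pretest probability and the sensitivity and specificity figures are hypothetical and do not describe any specific biomarker panel or molecular assay.

```python
def lr_positive(sensitivity, specificity):
    """Likelihood ratio of a positive result."""
    return sensitivity / (1.0 - specificity)


def lr_negative(sensitivity, specificity):
    """Likelihood ratio of a negative result."""
    return (1.0 - sensitivity) / specificity


def post_test_probability(pretest, likelihood_ratio):
    """Convert probability to odds, apply the likelihood ratio, convert back."""
    pretest_odds = pretest / (1.0 - pretest)
    post_odds = pretest_odds * likelihood_ratio
    return post_odds / (1.0 + post_odds)


pretest = 0.40  # hypothetical clinical pretest probability of bacterial sepsis

# Hypothetical high-sensitivity, low-specificity panel: useful when negative
p_after_negative_panel = post_test_probability(pretest, lr_negative(0.95, 0.60))

# Hypothetical low-sensitivity, high-specificity assay: useful when positive
p_after_positive_assay = post_test_probability(pretest, lr_positive(0.60, 0.95))

print(f"after negative panel: {p_after_negative_panel:.2f}")
print(f"after positive assay: {p_after_positive_assay:.2f}")
```

Under these assumed characteristics, a negative panel lowers the probability of bacterial sepsis from 0.40 to about 0.05, while a positive assay raises it to about 0.89. The converse results (a positive panel or a negative assay) shift the probability much less, which is why each tool is suited to only one direction of inference.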

Impact of immune status

The host immune status is a key factor for the initial choice of antimicrobial therapy in the ICU [64]. Solid organ transplant recipients receiving immunosuppressive medications to prevent allograft rejection can present with sepsis or septic shock and very few or even no typical warning signs such as fever or leukocytosis. The level of required immunosuppression and the site of infections vary according to the allograft type; the timing of infection from original transplant surgery delineates the occurrence of nosocomial sepsis and opportunistic infections (Table 2) [65]. In hematological or solid cancer patients receiving cytoablative chemotherapy, the duration and level of neutropenia will be essential factors for the choice of empirical therapy [66, 67]. HIV-infected patients are not only susceptible to community-acquired infections but also to a vast panel of opportunistic infections depending on CD4 cell count [68]. Other host immune profiles encompass immunoglobulin deficiencies and iatrogenic immunosuppression (Table 2) [69]. Because immunocompromised patients may have multiple concomitant dysfunctional immune pathways, co-infections (bacterial and/or viral and/or fungal) are possible and, when suspected, require several antimicrobials as part of empirical therapy. Of note, ageing has been associated with impairments in both innate and adaptive immunity that may predispose to severe bacterial infections; yet, the impact of immunosenescence on the management of ICU patients warrants further investigation [70, 71].

Early microbiological diagnosis: from empirical to immediate adequate therapy

The concepts of empirical therapy and de-escalation originate from the timeframe of routine bacteriological diagnosis. With culture-based methods, the turnaround time from sampling to AST results is 48 h or more, leaving much uncertainty about the adequacy of empirical coverage at the acute phase of sepsis. Molecular diagnostic solutions have therefore been developed to accelerate the process without losing performance in terms of sensitivity and specificity.

A wide array of automated PCR-based systems targeting selected pathogens and certain resistance markers have recently been introduced (Table 3). Several panels are now widely available in clinical laboratories for specific clinical contexts (e.g., suspected bloodstream infections, pneumonia, or meningoencephalitis), offering a “syndromic approach” to microbiological diagnosis [72, 73]. Syndromic tests can be run with minimal hands-on time and identify pathogens faster than conventional methods (i.e., 1.5–6 h), especially when implemented as point-of-care systems. However, these tests remain expensive (> 100 USD per test) and must be performed alongside conventional cultures, which they cannot entirely replace. They also provide partial information about antibiotic susceptibility since only a limited number of acquired resistance genes are screened (e.g., those encoding ESBL or carbapenemase). Overall, further investigations are warranted to fully appraise their potential impact on patient outcomes [72].

A next step will be the daily use of clinical metagenomics, that is, the sequencing of nucleic acids extracted directly from a given clinical sample for the identification of all bacterial pathogens and their resistance determinants [74]. Fast sequencers such as the Nanopore (Oxford Nanopore Technologies, Oxford, UK) allow turnaround times of 6–8 h at costs similar to those of syndromic tests [75, 76]. This approach can also assess the host response at the infection site by sequencing the retro-transcribed RNA, possibly adding to its diagnostic yield [77]. Nonetheless, significant improvements in nucleic acid extraction rates, antibiotic susceptibility inference, and the translation of results into actionable data must be made before clinical metagenomics can be part of routine diagnostic algorithms.

Besides new-generation tools, rapidly applicable information can still be obtained from culture-based methods such as direct AST on lower respiratory tract samples (time from sample collection ca. 24 h) [78] or lab automation with real-time imaging of growing colonies—for instance, the Accelerate Pheno™ system (Accelerate Diagnostics, Tucson, AZ) provides AST results in 6–8 h from a positive blood culture [79].

To be effective, all these tests must be integrated into the clinical workflow, thereby raising other organizational challenges and requiring the implementation of ASP [80].

The right molecule(s) but avoid the wrong dose

Key features to appraise the optimal dosing of a given antibiotic include the minimum inhibitory concentration (MIC) of the pathogen and the site of infection. Still, for most cases, clear guidance on how to adapt the dose on the basis of such characteristics is lacking, leaving much uncertainty on this issue. Defining the right dose in patients with culture-negative sepsis is a further challenge, although targeting potential pathogens with the highest MICs may appear to be a reasonable approach.

Underdosing of antibiotics is frequent in critically ill patients. Indeed, up to one out of six patients receiving beta-lactams does not reach the minimal concentration target (i.e., free antibiotic concentrations above the MIC of the pathogen during more than 50% of the dosing interval), and many more do not reach the target associated with maximal bacterial killing (i.e., concentrations above 4 × MIC during 100% of the dosing interval) [81]. This is particularly worrisome in the first hours of therapy when a maximal effect is highly desirable. Unfortunately, no standard remedy for this problem is available, and the solution depends on the physicochemical properties of the drug (e.g., hydrophilic versus lipophilic), patient characteristics, administration scheme, and the use of organ support (e.g., renal replacement therapy or extracorporeal membrane oxygenation) [82].
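
The two beta-lactam targets defined above can be checked numerically once a concentration-time profile is assumed. The Python sketch below uses a deliberately simplified model (a single intravenous bolus, one compartment, first-order elimination) that is an assumption of this example rather than a model taken from the cited studies; the peak concentration, half-life, dosing interval, and MIC are hypothetical.

```python
import math


def fraction_above(c0, half_life_h, tau_h, threshold):
    """Fraction of the dosing interval with free concentration above threshold.

    Assumes mono-exponential decay from a free peak concentration c0 (mg/L)
    after an IV bolus, over one dosing interval of tau_h hours.
    """
    if c0 <= threshold:
        return 0.0
    k = math.log(2) / half_life_h            # elimination rate constant (1/h)
    t_above = math.log(c0 / threshold) / k   # time until C(t) falls to threshold
    return min(t_above / tau_h, 1.0)


# Hypothetical patient and pathogen
c0, half_life_h, tau_h, mic = 64.0, 2.0, 8.0, 8.0

frac_mic = fraction_above(c0, half_life_h, tau_h, mic)
frac_4mic = fraction_above(c0, half_life_h, tau_h, 4.0 * mic)

minimal_target_met = frac_mic > 0.5     # above MIC for more than 50% of interval
maximal_target_met = frac_4mic >= 1.0   # above 4 x MIC for 100% of interval

print(f"fT>MIC = {frac_mic:.2f}, fT>4xMIC = {frac_4mic:.2f}")
print(minimal_target_met, maximal_target_met)
```

In this invented case the concentration stays above the MIC for about 75% of the interval, so the minimal target is met, but it exceeds 4 × MIC for only about 25% of the interval, so the target associated with maximal bacterial killing is missed.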

The volume of distribution—an important determinant of adequate antibiotic concentrations—is not measurable in critically ill patients. Yet, those with evidence for increased volume of distribution (e.g., positive fluid balance) require a higher loading dose to rapidly ensure adequate tissue concentrations, particularly for hydrophilic antibiotics, and for both intermittent and continuous infusion schemes [83]. This first dose must not be adapted to the renal function for antibiotics with predominant or exclusive renal clearance.
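
The link between volume of distribution and loading dose can be shown with the standard one-compartment relation, loading dose = target concentration × volume of distribution. The Python sketch below is illustrative only: the target concentration, body weight, and both volume values are hypothetical, and the function name is introduced for this example.

```python
def loading_dose_mg(target_conc_mg_per_l, vd_l_per_kg, weight_kg):
    """Loading dose (mg) needed to reach a target concentration.

    Uses dose = C_target x Vd. Clearance does not appear in this relation,
    which is why the first dose is not reduced for impaired renal function.
    """
    return target_conc_mg_per_l * vd_l_per_kg * weight_kg


# Hypothetical hydrophilic drug in an 80 kg patient, target 40 mg/L
usual_dose = loading_dose_mg(40.0, vd_l_per_kg=0.25, weight_kg=80.0)
expanded_dose = loading_dose_mg(40.0, vd_l_per_kg=0.40, weight_kg=80.0)

print(f"usual Vd: {usual_dose:.0f} mg, expanded Vd: {expanded_dose:.0f} mg")
```

With these assumed values, expanding the volume of distribution from 0.25 to 0.40 L/kg raises the dose needed to reach the same concentration from 800 mg to 1280 mg.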

Many antibiotics used in the ICU are cleared by the kidneys; so, dosing adaptation for subsequent infusions must be considered in case of acute kidney injury (AKI) or augmented renal clearance (i.e., a measured creatinine clearance of 130 mL/min/1.73 m2 or higher). This latter situation is associated with lower antibiotic exposure [84] and implies higher maintenance doses to keep concentrations at the targeted level, yet therapeutic drug monitoring (TDM) appears necessary to avoid overdosing.
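
Because the definition above relies on a measured, body-surface-normalized creatinine clearance, a short worked example may help. The Python sketch below computes clearance from a timed urine collection, normalizes it to 1.73 m² using the Mosteller formula for body surface area (a choice made for this example; other formulas exist), and applies the 130 mL/min/1.73 m² threshold quoted in the text. All patient values are hypothetical.

```python
import math

ARC_THRESHOLD = 130.0  # mL/min/1.73 m2, threshold quoted in the text


def bsa_mosteller(height_cm, weight_kg):
    """Body surface area (m2) by the Mosteller formula."""
    return math.sqrt(height_cm * weight_kg / 3600.0)


def measured_crcl(urine_creat, plasma_creat, urine_volume_ml, collection_min):
    """Measured creatinine clearance (mL/min).

    Urine and plasma creatinine must be expressed in the same unit.
    """
    return (urine_creat * urine_volume_ml) / (plasma_creat * collection_min)


def normalized_crcl(crcl_ml_min, bsa_m2):
    """Clearance scaled to a standard body surface area of 1.73 m2."""
    return crcl_ml_min * 1.73 / bsa_m2


# Hypothetical 8-hour collection: 480 mL of urine, creatinine in micromol/L
crcl = measured_crcl(8000.0, 50.0, urine_volume_ml=480.0, collection_min=480.0)
bsa = bsa_mosteller(height_cm=180.0, weight_kg=80.0)
crcl_norm = normalized_crcl(crcl, bsa)
has_arc = crcl_norm >= ARC_THRESHOLD

print(f"{crcl:.0f} mL/min, BSA {bsa:.2f} m2, {crcl_norm:.1f} mL/min/1.73 m2")
print("augmented renal clearance:", has_arc)
```

For this invented patient, the measured clearance of 160 mL/min corresponds to about 138 mL/min/1.73 m² after normalization, which meets the definition of augmented renal clearance.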

These features can be integrated into pharmacokinetics (PK)/pharmacodynamics (PD) optimized dosing which can be considered a three-step process (Fig. 3). PK models can be used when selecting the dose for each of these steps [85] even if these predictions are estimations only with still important intra- and inter-individual variations. These are nowadays available in several stand-alone software packages, and integration in prescription drug monitoring systems (PDMS) will be the next step. TDM can be used to further refine therapy for many antibiotics [86].

Fig. 3 Sequential optimization of antimicrobial pharmacokinetics in critically ill patients. In obese patients, the dosing regimen should be adapted on the basis of lean body weight or adjusted body weight for hydrophilic drugs (e.g., beta-lactams or aminoglycosides) and on the basis of lean body weight for lipophilic drugs (e.g., fluoroquinolones or glycylcyclines); see Ref. [174] for details. Dosing regimens for the first antibiotic dose (unchanged, increased, or doubled) are proposed by comparison with those usually prescribed in non-critically ill patients. PD pharmacodynamics, MIC minimal inhibitory concentration, AUC area under the curve, ARC augmented renal clearance, TDM therapeutic drug monitoring, AKI acute kidney injury, CRRT continuous renal replacement therapy, CrCL creatinine clearance.

Is there a role for routine therapeutic drug monitoring?

TDM may be employed to minimize the risk of antimicrobial toxicity and maximize drug efficacy through optimized PK, especially for aminoglycosides and glycopeptides. Indeed, high peak levels of aminoglycosides over the pathogen MIC appear beneficial in patients with ventilator-associated pneumonia or other life-threatening MDRB infections [87,88,89], while adequate trough vancomycin concentrations improve the clinical response in those with bloodstream infection due to MRSA [90].

However, the role of routine TDM in optimizing beta-lactam dosing remains controversial. The main issues that currently prevent the implementation of such a strategy in clinical practice are (1) the lack of a standardized method to reliably measure beta-lactam concentrations with a high intercenter reproducibility, (2) the delayed results of TDM for clinicians (i.e., the lack of a “point-of-care” for beta-lactam TDM in most hospitals), (3) the optimal timing and number of samples to adequately describe the time course of drug concentrations, (4) the fact that the association between insufficient beta-lactam concentrations and the increased risk of therapeutic failure or impaired outcome is based only on retrospective studies, (5) the absence of clinical data showing a potential role of adequate beta-lactam levels in the emergence of resistant strains, (6) the poor characterization of the optimal duration of beta-lactam levels exceeding the MIC of the infective pathogen, when available, or of the optimal PK target in case of empirical therapy, and (7) the time needed to obtain the MIC of the infective pathogen, which precludes an adequate targeted therapy using PK principles [81, 91]. It is therefore possible that epidemiological cutoff (ECOFF) values are an acceptable option [92], but further studies are needed before the routine TDM of beta-lactams becomes available in most ICUs. Interestingly, high beta-lactam concentrations may result in drug-related neurotoxicity, which represents another potential role for TDM in critically ill patients [93, 94].

Key questions about antimicrobials

New and long-established antimicrobials

Polymyxins are considered the cornerstone of therapy for infections due to extremely drug-resistant (XDR) Gram-negative bacteria, including carbapenem-resistant A. baumannii, P. aeruginosa, and K. pneumoniae. Of note, recent studies indicate that colistin and polymyxin B are associated with less renal and neurological toxicity than previously reported. Several questions remain incompletely addressed, including the need and type of combination therapies, optimal dosing regimen, ways to prevent the emergence of resistance, and role of aerosolized therapy. Fosfomycin may also have a role in these infections.

Drugs newly approved or in late development phase mainly include ceftolozane–tazobactam, ceftazidime–avibactam, ceftaroline–avibactam, aztreonam–avibactam, carbapenems combined with new beta-lactamase inhibitors (e.g., vaborbactam, relebactam), cefiderocol, plazomicin, and eravacycline (Table 4). These drugs have mainly been tested in complicated urinary tract infection, complicated intra-abdominal infections (cIAI), or skin and soft tissue infection (SSTI). Limited data are currently available in ICU patients [95], notably for dosing optimization in severe MDRB infections. Piperacillin–tazobactam appears less effective than carbapenems in bloodstream infections caused by ESBL-E [96, 97]; however, ceftolozane–tazobactam and ceftazidime–avibactam might be considered as carbapenem-sparing options for treatment of such infections in areas with high prevalence of CRE. The real question is this: should we still spare carbapenems, or should we rather spare the new antibiotics?

In addition to glycopeptides, long-established antibiotics with activity against MRSA mainly include daptomycin (e.g., for bloodstream infections) and linezolid (e.g., for hospital-acquired pneumonia, HAP) [98, 99]. These alternatives may be preferred in patients with risk factors for AKI. Daptomycin appears safe even at high doses and in prolonged regimens, with rhabdomyolysis representing a rare, reversible side effect. Conversely, linezolid has been linked with several adverse events most often associated with specific risk factors (e.g., renal impairment, underlying hematological disease, or extended therapy duration), suggesting a role for TDM in patients at high risk of toxicity. Next, “new-generation” cephalosporins such as ceftaroline and ceftobiprole have been approved for the treatment of MRSA infections and seem promising in overcoming the limitations associated with the older compounds. Other new agents with activity against MRSA include lipoglycopeptides (dalbavancin, oritavancin, and telavancin), fluoroquinolones (delafloxacin, nemonoxacin, and zabofloxacin), an oxazolidinone (tedizolid), a dihydrofolate reductase inhibitor (iclaprim), and a tetracycline (omadacycline); yet, the yield of these new options remains to be investigated in critically ill patients with severe MRSA infection [100].

Single-drug or combination regimen

The question of whether antibiotic combinations provide a beneficial effect beyond the empirical treatment period remains unsettled. Meta-analyses of randomized controlled trials (RCTs) comparing beta-lactams vs. beta-lactams combined with another agent demonstrate no difference in clinical outcomes in a variety of infections caused by Gram-negative pathogens; however, patients with sepsis or septic shock were underrepresented [101, 102]. In contrast, a meta-analysis of randomized and observational studies focused on sepsis or septic shock showed that combination therapy is beneficial in high-risk patients (i.e., projected mortality rate greater than 25%) [103]. This positive impact may be especially pronounced in neutropenic patients and when a pathogen with reduced antimicrobial susceptibility is involved (e.g., P. aeruginosa) [104].

To date, there is no RCT to examine whether combination therapy is superior to monotherapy for CRE infections. Observational studies suggest that the benefit of combination therapy is mainly observed in patients with serious underlying diseases or high pretreatment probability of death (e.g., septic shock) [105,106,107,108,109]. The most effective regimen is challenging to define, as only one of the aforementioned studies reported survival benefit with a specific drug combination (colistin plus tigecycline plus meropenem) after adjustment for potential confounders [109].

Although there have been five RCTs and several meta-analyses for the treatment of carbapenem-resistant A. baumannii infections, the optimal treatment regimen has not yet been determined [110,111,112,113,114,115]. Notably, none of the RCTs demonstrated a survival benefit with combination therapy, although one study showed a better clinical response with colistin plus high-dose ampicillin/sulbactam and three studies reported faster microbiological clearance when combining colistin with rifampin or fosfomycin. A recent meta-analysis, however, demonstrated survival benefit in bacteremic patients who were receiving high doses of colistin (more than 6 MIU per day) in combination with another agent [116].

Continuous prolonged or intermittent administration of beta-lactams and other time-dependent antimicrobials

The proportion of the interdose interval with drug concentration above the pathogen MIC is predictive of efficacy for time-dependent antibiotics, including beta-lactams. This parameter may be increased by reducing the interdose interval and/or by using extended infusions (EI) over 3–4 h or continuous infusion (CI). Stochastic models show that prolonged beta-lactam infusions increase the probability of target attainment against isolates with borderline MIC, especially in patients with ARC or increased volume of distribution [117].
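
The effect of infusion duration on time above the MIC can be illustrated with a simple simulation. The Python sketch below is not one of the stochastic models cited above; it assumes a one-compartment model with zero-order infusion and first-order elimination, considers the first dose only, and uses hypothetical values for dose, volume of distribution, half-life, and MIC.

```python
import math


def concentration(t, dose_mg, infusion_h, volume_l, k):
    """Concentration (mg/L) at time t (h) after the start of a single infusion."""
    rate = dose_mg / infusion_h              # infusion rate (mg/h)
    plateau = rate / (k * volume_l)          # level reached if infused forever
    if t <= infusion_h:
        return plateau * (1.0 - math.exp(-k * t))
    c_end = plateau * (1.0 - math.exp(-k * infusion_h))
    return c_end * math.exp(-k * (t - infusion_h))


def fraction_time_above(mic, dose_mg, infusion_h, tau_h,
                        volume_l, half_life_h, steps=8000):
    """Fraction of the dosing interval with concentration at or above the MIC."""
    k = math.log(2) / half_life_h
    above = 0
    for i in range(steps):
        t = (i + 0.5) * tau_h / steps        # midpoint of each time slice
        if concentration(t, dose_mg, infusion_h, volume_l, k) >= mic:
            above += 1
    return above / steps


# Hypothetical scenario: 1000 mg every 8 h, V = 20 L, half-life 1 h, MIC 8 mg/L
common = dict(mic=8.0, dose_mg=1000.0, tau_h=8.0, volume_l=20.0, half_life_h=1.0)
frac_short = fraction_time_above(infusion_h=0.5, **common)
frac_extended = fraction_time_above(infusion_h=4.0, **common)

print(f"0.5 h infusion: {frac_short:.0%} of the interval above the MIC")
print(f"4 h infusion:   {frac_extended:.0%} of the interval above the MIC")
```

Under these assumptions, the same dose keeps concentrations above the MIC for roughly 35% of the interval when given over 0.5 h and roughly 53% when given over 4 h. The short half-life was chosen to mimic rapid elimination; with slower elimination or a lower MIC the difference between schemes shrinks.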

Most RCTs comparing intermittent versus prolonged beta-lactam infusions could not find significant differences in outcomes. However, in a recent meta-analysis of RCTs comparing prolonged (EI or CI) and intermittent infusions of antipseudomonal beta-lactams in patients with sepsis, prolonged infusion was associated with improved survival, including when carbapenems or beta-lactam/beta-lactam inhibitor combinations were analyzed separately [118]. Prolonged infusions might only be needed in some patients—e.g., those with beta-lactam underdosing using intermittent administration schemes, or infections caused by isolates with elevated MICs. Because these features cannot be anticipated, it seems reasonable to consider the use of prolonged infusions of sufficiently stable antipseudomonal beta-lactams in all patients with sepsis.

For some other drugs such as vancomycin, the ratio area under the curve/MIC is considered the PK/PD parameter predictive of efficacy (Fig. 3). A recent meta-analysis suggested that continuous vancomycin infusion is associated with lower nephrotoxicity but not better cure or lower mortality than intermittent infusions [119]; nevertheless, included studies had many limitations and further investigations are needed to address this issue.
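
For drugs governed by the AUC/MIC ratio, a rough estimate follows from the steady-state relation AUC over 24 h = daily dose / clearance, which holds under linear pharmacokinetics. The Python sketch below applies it with hypothetical values for dose, clearance, and MIC; it is an illustration of the index, not a dosing tool, and no efficacy threshold is assumed.

```python
def auc24(daily_dose_mg, clearance_l_per_h):
    """Steady-state area under the curve over 24 h (mg.h/L), linear PK assumed."""
    return daily_dose_mg / clearance_l_per_h


def auc_mic_ratio(daily_dose_mg, clearance_l_per_h, mic_mg_per_l):
    """AUC24/MIC ratio for a given pathogen MIC."""
    return auc24(daily_dose_mg, clearance_l_per_h) / mic_mg_per_l


# Hypothetical patient: 2000 mg per day, clearance 4 L/h
ratio_mic_1 = auc_mic_ratio(2000.0, 4.0, mic_mg_per_l=1.0)
ratio_mic_2 = auc_mic_ratio(2000.0, 4.0, mic_mg_per_l=2.0)

print(f"AUC24/MIC = {ratio_mic_1:.0f} (MIC 1 mg/L), {ratio_mic_2:.0f} (MIC 2 mg/L)")
```

In this simplified model the AUC depends only on the daily dose and clearance, not on whether the dose is infused continuously or intermittently, so the two schemes differ in peak and trough levels rather than in total exposure. Doubling the MIC halves the ratio (500 versus 250 with the values above).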

De-escalation: impact in practice

Conceptually, de-escalation is a strategy whereby the provision of effective antibiotic treatment is achieved, while minimizing unnecessary exposure to broad-spectrum agents that would promote the development of resistance. Practically, it consists in the reappraisal of antimicrobial therapy as soon as AST results are available. However, no clear consensus on de-escalation components exists and various definitions have been used (e.g., changing the “pivotal” agent for a drug with a narrower spectrum and/or lower ecological effects on microbiota, or discontinuing an antimicrobial combination), resulting in equivocal interpretation of the available evidence [120, 121].

De-escalation is applied in only 40–50% of inpatients with bacterial infection [121]. This reflects physician reluctance to narrow the covered spectrum when caring for severely ill patients with culture-negative sepsis and/or MDRB carriage [120]. Importantly, the available evidence does not suggest a detrimental impact of de-escalation on outcomes [120, 122], including in high-risk patients such as those with bloodstream infections, severe sepsis, VAP, and neutropenia [123, 124]. However, further well-designed RCTs are needed to definitively resolve this issue.

Increasing physician confidence and compliance with de-escalation has become a cornerstone of ASP. Paradoxically, there is a lack of clinical data regarding the impact of de-escalation on antimicrobial consumption and emergence of resistance [120]. While this strategy has been associated with reduced use of certain antimicrobial classes [125, 126], no study has demonstrated that it decreases overall antimicrobial consumption, and an increase in antibiotic exposure has even been observed [123, 125, 127]. Similarly, the few studies that addressed this point reported no impact, or only a marginal effect, of de-escalation on the individual hazard of MDRB acquisition or local prevalence of MDRB [125,126,127].

In light of these uncertainties, efforts should focus on microbiological documentation to increase ADE rates in patients with sepsis. New diagnostic tools should be exploited to hasten pathogen identification and AST availability. Lastly, human data on the specific impact of each antimicrobial on commensal ecosystems and the risk of MDRB acquisition are needed to optimize antibiotic streamlining and further support de-escalation strategies [37, 128].

Duration of antibiotic therapy and antibiotic resistance

Prolonged durations of antibiotic therapy have been associated with the emergence of antimicrobial resistance [129]. Yet, short-course antibiotic therapy has been shown to be effective and safe in a number of infections, including community-acquired pneumonia, VAP, urinary tract infections, cIAI, and even some types of bacteremia [130,131,132,133,134,135,136]. The shortening of antibiotic durations on the basis of PCT kinetics has also been shown to be safe, including in patients with sepsis [51, 52]. However, the recent ProACT trial failed to confirm the ability of PCT to reduce the duration of antibiotic exposure compared to usual care in suspected lower respiratory tract infections [137]. Given the extent of overruling of PCT-based recommendations in available RCTs and the relatively long duration of therapy in control groups, the question remains unresolved. In particular, the efficacy and costs of PCT when an active ASP is in place remain to be evaluated.

Many national and international guidelines encourage physicians to shorten the overall duration of antibiotic therapy for a number of infections. Shorter courses are now recommended for pneumonia, urinary tract infections, and cIAI with source control [49, 138,139,140,141,142]. Despite these recommendations, recent studies suggest that antibiotics are still administered for excessive durations, thereby offering further opportunities for ASP [143, 144]. Clinicians should nonetheless be aware that, under some circumstances, short-course therapy may be detrimental to patient outcomes, especially in case of prolonged neutropenia, lack of adequate source control, infection due to XDR Gram-negative bacteria, and endovascular or foreign body infections [130, 145].

Source control

Source control to eliminate infectious foci follows principles of drainage, debridement, device removal, compartment decompression, and often deferred definitive restoration of anatomy and function [146]. If required, source control is a major determinant of outcome, more so than early adequate antimicrobial therapy [147,148,149], and should never be considered as “covered” by broad-spectrum agents. Therefore, surgical and radiological options for intervention must be systematically discussed, especially in patients with cIAI or SSTI. The efficacy of source control is time-dependent [150,151,152,153] and adequate procedures should therefore be performed as rapidly as possible in patients with septic shock [49], while longer delays may be acceptable in closely monitored stable patients. Failure of source control should be considered in cases of persistent or new organ failure despite resuscitation and appropriate antimicrobial therapy, and requires (re)imaging and repeated or alternative intervention. Importantly, source control procedures should include microbiological sampling whenever possible to facilitate ADE initiatives.

Antibiotic stewardship programs in the ICU

Implementing ASP in the ICU improves antimicrobial utilization and reduces broad-spectrum antimicrobial use, incidence of infections and colonization with MDRB, antimicrobial-related adverse events, and healthcare-associated costs, all without an increase in mortality [26, 154, 155]. According to the ESCMID Study Group for Antimicrobial Stewardship, ASP should be approached as “a coherent set of actions which promote using antimicrobials in ways that ensure sustainable access to effective therapy for all who need them” [156]. Therefore, ASP should be viewed as a quality improvement initiative, requiring (1) an evidence-based, ideally bundled, change package, (2) a clear definition of goals, indicators, and targets, (3) a dynamic measurement and data collection system with feedback to prescribers, (4) a strategy for building capacity, and (5) a plan to identify and approach areas for improvement and solve quality gaps. This necessarily implies the appointment of a member of the ICU staff as a leader with expertise in the field of antimicrobial therapy and prespecified functions for the implementation of the local ASP.

Three main kinds of interventions may be used in ASP [157,158,159]:

- Restrictive, in which one tries to reduce the number of opportunities for bad behavior, such as formulary restrictions, pre-approval by a senior ASP physician (either an external infectious disease specialist or a specified expert in the ICU team), and automatic stop orders

- Collaborative or enhancement, in which one tries to increase the number of opportunities and decrease barriers for good behavior, such as education of prescribers, implementation of treatment guidelines, promotion of ADE, use of PK/PD concepts, and prospective audit and feedback to providers

- Structural, which may include the use of computerized antibiotic decision support systems, faster diagnostic methods for antimicrobial resistance, antibiotic consumption surveillance systems, ICU leadership commitment, staff involvement, and daily collaboration between ICU staff, pharmacists, infection control units, and microbiologists

The implementation of ASP should account for the need for a rapid response from the system in case of severe infection (e.g., a delay in the first antimicrobial dose caused by overly restrictive pharmacy-driven prescription policies should be regarded as unacceptable).

By consensus, an ASP should rest on multifaceted interventions to achieve its fundamental goals (Table 5), namely improving outcomes and decreasing antimicrobial-related collateral damage in infected patients. Yet, the weight of each component must be customized according to the context and culture of every single ICU in terms of habits for antibiotic prescription, MDRB prevalence, local organizational aspects, and available resources. For this purpose, concepts of implementation science should be applied, that is, identifying barriers and facilitators that impact the staff’s compliance with guidelines in order to design and execute a structured plan for improvement [160].

The appropriate dashboard in the ICU

The availability of constantly updated information is pivotal to improve decision-making processes in the ICU [161, 162]. As the epidemiology of MDRB is continuously evolving, close monitoring of local resistance patterns may help to rationalize the empirical use of broad-spectrum antibiotics in this setting. With the expanding utilization of electronic medical records and applications specifically developed for the ICU, streaming analytics can provide dashboards containing real-time and easily accessible data for intensivists [162, 163]. Such dashboards should capture data from medical records and microbiology systems, display an intuitive and user-friendly interface, and be available on both ICU computers and mobile devices to allow easy access to actionable data at the bedside. Finally, a complete dashboard should include information not only on dynamics of resistance patterns but also on local antimicrobial consumption, adherence to protocols of care and antibiotic guidelines, healthcare-associated infections (e.g., source, type, severity), and general patient characteristics (e.g., comorbidities, severity of illness, main diagnosis, and length of the ICU stay) (Fig. 4). Although studies demonstrating the efficacy of such dashboards in reducing resistance have not been published so far, these tools could allow a structured audit-feedback approach that is one of the cornerstones of ASP implementation in the ICU [164,165,166].
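Two of the dashboard indicators mentioned above, local resistance patterns and antimicrobial consumption, can be derived from routine data with very little logic. The sketch below is only an illustration under stated assumptions: the record layout (`organism`, `antibiotic`, `result`), the function names, and the sample values are hypothetical, and days of therapy per 1000 patient-days is used here as one common consumption metric, not one prescribed by the text.

```python
from collections import defaultdict


def resistance_rates(ast_records):
    """Return {(organism, antibiotic): (n_resistant, n_tested, proportion_resistant)}."""
    counts = defaultdict(lambda: [0, 0])  # [resistant, tested] per pair
    for rec in ast_records:
        key = (rec["organism"], rec["antibiotic"])
        counts[key][1] += 1
        if rec["result"] == "R":
            counts[key][0] += 1
    return {key: (r, n, r / n) for key, (r, n) in counts.items()}


def days_of_therapy_per_1000_patient_days(antibiotic_days, patient_days):
    """Consumption density: antibiotic days normalized to 1000 patient-days."""
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    return 1000 * antibiotic_days / patient_days


# Hypothetical extract from a microbiology system, for illustration only.
records = [
    {"organism": "P. aeruginosa", "antibiotic": "meropenem", "result": "R"},
    {"organism": "P. aeruginosa", "antibiotic": "meropenem", "result": "S"},
    {"organism": "P. aeruginosa", "antibiotic": "meropenem", "result": "S"},
    {"organism": "K. pneumoniae", "antibiotic": "ceftriaxone", "result": "R"},
]

for (organism, antibiotic), (r, n, share) in resistance_rates(records).items():
    print(f"{organism} / {antibiotic}: {r}/{n} resistant ({100 * share:.0f}%)")

print(days_of_therapy_per_1000_patient_days(antibiotic_days=450, patient_days=1500))
```

Showing the numerator and denominator alongside each proportion is a deliberate choice: with the small isolate counts typical of a single ICU, a percentage alone can be misleading at the bedside.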

Concluding remarks

Both the poor outcomes associated with bacterial sepsis and the current epidemiology of MDRB underscore the need to improve the management of antibiotic therapy in ICU patients. Well-designed studies remain warranted to definitively address several aspects of this issue, notably the clinical input of rapid diagnostic tools and TDM, the potential benefit of combination versus single-drug therapies, the optimal dosing regimens before the availability of AST results or for patients with culture-negative sepsis, and the prognostic yield of ASP. Although beyond the scope of this review, the exploitation of other research axes may further help to control the spread of MDRB in the ICU setting, including optimization of infection control policies [167], a comparative appraisal of the impact of broad-spectrum antibiotics on the gut microbiota through novel metagenomics approaches [168], and the evaluation of emerging options such as orally administered antimicrobial-adsorbing charcoals, probiotics, or fecal microbiota transplantation to protect or restore the commensal ecosystems of ICU patients [29, 169, 170].

References

Versporten A, Zarb P, Caniaux I, Gros MF, Drapier N, Miller M et al (2018) Antimicrobial consumption and resistance in adult hospital inpatients in 53 countries: results of an internet-based global point prevalence survey. Lancet Glob Health 6(6):e619–e629

Detsis M, Karanika S, Mylonakis E (2017) ICU acquisition rate, risk factors, and clinical significance of digestive tract colonization with extended-spectrum beta-lactamase-producing Enterobacteriaceae: a systematic review and meta-analysis. Crit Care Med 45(4):705–714

Kollef MH, Chastre J, Fagon JY, Francois B, Niederman MS, Rello J et al (2014) Global prospective epidemiologic and surveillance study of ventilator-associated pneumonia due to Pseudomonas aeruginosa. Crit Care Med 42(10):2178–2187

Bulens SN, Yi SH, Walters MS, Jacob JT, Bower C, Reno J et al (2018) Carbapenem-nonsusceptible Acinetobacter baumannii, 8 US metropolitan areas, 2012-2015. Emerg Infect Dis 24(4):727–734

Hsu LY, Apisarnthanarak A, Khan E, Suwantarat N, Ghafur A, Tambyah PA (2017) Carbapenem-resistant Acinetobacter baumannii and Enterobacteriaceae in South and Southeast Asia. Clin Microbiol Rev 30(1):1–22

Rodriguez CH, Balderrama Yarhui N, Nastro M, Nunez Quezada T, Castro Canarte G, Magne Ventura R et al (2016) Molecular epidemiology of carbapenem-resistant Acinetobacter baumannii in South America. J Med Microbiol 65(10):1088–1091

Nowak J, Zander E, Stefanik D, Higgins PG, Roca I, Vila J et al (2017) High incidence of pandrug-resistant Acinetobacter baumannii isolates collected from patients with ventilator-associated pneumonia in Greece, Italy and Spain as part of the MagicBullet clinical trial. J Antimicrob Chemother 72(12):3277–3282

Lob SH, Biedenbach DJ, Badal RE, Kazmierczak KM, Sahm DF (2015) Antimicrobial resistance and resistance mechanisms of Enterobacteriaceae in ICU and non-ICU wards in Europe and North America: SMART 2011-2013. J Glob Antimicrob Resist 3(3):190–197

Bonomo RA, Burd EM, Conly J, Limbago BM, Poirel L, Segre JA et al (2018) Carbapenemase-producing organisms: a global scourge. Clin Infect Dis 66(8):1290–1297

Rosenthal VD, Al-Abdely HM, El-Kholy AA, AlKhawaja SAA, Leblebicioglu H, Mehta Y et al (2016) International nosocomial infection control consortium report, data summary of 50 countries for 2010–2015: device-associated module. Am J Infect Control 44(12):1495–1504

Weiner LM, Webb AK, Limbago B, Dudeck MA, Patel J, Kallen AJ et al (2016) Antimicrobial-resistant pathogens associated with healthcare-associated infections: summary of data reported to the National Healthcare Safety Network at the Centers for Disease Control and Prevention, 2011-2014. Infect Control Hosp Epidemiol 37(11):1288–1301

Gao L, Lyu Y, Li Y (2017) Trends in drug resistance of Acinetobacter baumannii over a 10-year period: nationwide data from the China surveillance of antimicrobial resistance program. Chin Med J (Engl) 130(6):659–664

Bonell A, Azarrafiy R, Huong VTL, Viet TL, Phu VD, Dat VQ et al (2018) A systematic review and meta-analysis of ventilator associated pneumonia in adults in Asia; an analysis of national income level on incidence and etiology. Clin Infect Dis. https://doi.org/10.1093/cid/ciy543

Hu FP, Guo Y, Zhu DM, Wang F, Jiang XF, Xu YC et al (2016) Resistance trends among clinical isolates in China reported from CHINET surveillance of bacterial resistance, 2005-2014. Clin Microbiol Infect 22(Suppl 1):S9–S14

Bitterman R, Hussein K, Leibovici L, Carmeli Y, Paul M (2016) Systematic review of antibiotic consumption in acute care hospitals. Clin Microbiol Infect 22(6):561

Holmes AH, Moore LS, Sundsfjord A, Steinbakk M, Regmi S, Karkey A et al (2016) Understanding the mechanisms and drivers of antimicrobial resistance. Lancet 387(10014):176–187

Barbier F, Luyt CE (2016) Understanding resistance. Intensive Care Med 42(12):2080–2083

Ruppé E, Woerther PL, Barbier F (2015) Mechanisms of antimicrobial resistance in Gram-negative bacilli. Ann Intensive Care 5:21

Abdallah M, Badawi M, Amirah MF, Rasheed A, Mady AF, Alodat M et al (2017) Impact of carbapenem restriction on the antimicrobial susceptibility pattern of Pseudomonas aeruginosa isolates in the ICU. J Antimicrob Chemother 72(11):3187–3190

Zagorianou A, Sianou E, Iosifidis E, Dimou V, Protonotariou E, Miyakis S et al (2012) Microbiological and molecular characteristics of carbapenemase-producing Klebsiella pneumoniae endemic in a tertiary Greek hospital during 2004–2010. Euro Surveill 17(7):20088

Meyer E, Schwab F, Schroeren-Boersch B, Gastmeier P (2010) Dramatic increase of third-generation cephalosporin-resistant E. coli in German intensive care units: secular trends in antibiotic drug use and bacterial resistance, 2001–2008. Crit Care 14(3):R113

Bassetti M, Cruciani M, Righi E, Rebesco B, Fasce R, Costa A et al (2006) Antimicrobial use and resistance among Gram-negative bacilli in an Italian intensive care unit. J Chemother 18(3):261–267

Samonis G, Korbila IP, Maraki S, Michailidou I, Vardakas KZ, Kofteridis D et al (2014) Trends in isolation of intrinsically resistant to colistin Enterobacteriaceae and association with colistin use in a tertiary hospital. Eur J Clin Microbiol Infect Dis 33(9):1505–1510

Jacoby TS, Kuchenbecker RS, Dos Santos RP, Magedanz L, Guzatto P, Moreira LB (2010) Impact of hospital-wide infection rate, invasive procedures use and antimicrobial consumption on bacterial resistance inside an intensive care unit. J Hosp Infect 75(1):23–27

Fihman V, Messika J, Hajage D, Tournier V, Gaudry S, Magdoud F et al (2015) Five-year trends for ventilator-associated pneumonia: correlation between microbiological findings and antimicrobial drug consumption. Int J Antimicrob Agents 46(5):518–525

Kaki R, Elligsen M, Walker S, Simor A, Palmay L, Daneman N (2011) Impact of antimicrobial stewardship in critical care: a systematic review. J Antimicrob Chemother 66(6):1223–1230

Bell BG, Schellevis F, Stobberingh E, Goossens H, Pringle M (2014) A systematic review and meta-analysis of the effects of antibiotic consumption on antibiotic resistance. BMC Infect Dis 14:13

Nijssen S, Fluit AC, van de Vijver D, Top J, Willems R, Bonten MJM (2010) Effects of reducing beta-lactam antibiotic pressure on intestinal colonization of antibiotic-resistant Gram-negative bacteria. Intensive Care Med 36(3):512–519

Ruppe E, Burdet C, Grall N, de Lastours V, Lescure FX, Andremont A et al (2018) Impact of antibiotics on the intestinal microbiota needs to be re-defined to optimize antibiotic usage. Clin Microbiol Infect 24(1):3–5

Pamer EG (2016) Resurrecting the intestinal microbiota to combat antibiotic-resistant pathogens. Science 352(6285):535–538

Razazi K, Derde LP, Verachten M, Legrand P, Lesprit P, Brun-Buisson C (2012) Clinical impact and risk factors for colonization with extended-spectrum beta-lactamase-producing bacteria in the intensive care unit. Intensive Care Med 38(11):1769–1778

Poignant S, Guinard J, Guiguon A, Bret L, Poisson D-M, Boulain T et al (2015) Risk factors and outcomes of intestinal carriage of AmpC-hyperproducing Enterobacteriaceae in ICU patients. Antimicrob Agents Chemother 60(3):1883–1887

Hilliquin D, Le Guern R, Thepot Seegers V, Neulier C, Lomont A, Marie V et al (2018) Risk factors for acquisition of OXA-48-producing Klebsiella pneumoniae among contact patients: a multicentre study. J Hosp Infect 98(3):253–259

Papadimitriou-Olivgeris M, Marangos M, Fligou F, Christofidou M, Bartzavali C, Anastassiou ED et al (2012) Risk factors for KPC-producing Klebsiella pneumoniae enteric colonization upon ICU admission. J Antimicrob Chemother 67(12):2976–2981

Tan BK, Vivier E, Ait Bouziad K, Zahar JR, Pommier C, Parmeland L et al (2018) A hospital-wide intervention replacing ceftriaxone with cefotaxime to reduce rate of healthcare-associated infections caused by extended-spectrum beta-lactamase-producing Enterobacteriaceae in the intensive care unit. Intensive Care Med 44(5):672–673

Grohs P, Kerneis S, Sabatier B, Lavollay M, Carbonnelle E, Rostane H et al (2014) Fighting the spread of AmpC-hyperproducing Enterobacteriaceae: beneficial effect of replacing ceftriaxone with cefotaxime. J Antimicrob Chemother 69(3):786–789

Woerther PL, Lepeule R, Burdet C, Decousser JW, Ruppe E, Barbier F (2018) Carbapenems and alternative beta-lactams for the treatment of infections due to ESBL-producing Enterobacteriaceae: what impact on intestinal colonization resistance? Int J Antimicrob Agents 52:762–770

Kao KC, Chen CB, Hu HC, Chang HC, Huang CC, Huang YC (2015) Risk factors of methicillin-resistant Staphylococcus aureus infection and correlation with nasal colonization based on molecular genotyping in medical intensive care units: a prospective observational study. Medicine (Baltimore) 94(28):e1100

Ziakas PD, Anagnostou T, Mylonakis E (2014) The prevalence and significance of methicillin-resistant Staphylococcus aureus colonization at admission in the general ICU setting: a meta-analysis of published studies. Crit Care Med 42(2):433–444

Raman G, Avendano EE, Chan J, Merchant S, Puzniak L (2018) Risk factors for hospitalized patients with resistant or multidrug-resistant Pseudomonas aeruginosa infections: a systematic review and meta-analysis. Antimicrob Resist Infect Control 7:79

Zahar JR, Blot S, Nordmann P, Martischang R, Timsit JF, Harbarth S et al (2018) Screening for intestinal carriage of ESBL-producing Enterobacteriaceae in critically ill patients: expected benefits and evidence-based controversies. Clin Infect Dis. https://doi.org/10.1093/cid/ciy864

Mazzeffi M, Gammie J, Taylor B, Cardillo S, Haldane-Lutterodt N, Amoroso A et al (2017) Healthcare-associated infections in cardiac surgery patients with prolonged intensive care unit stay. Ann Thorac Surg 103(4):1165–1170

van Vught LA, Klein Klouwenberg PM, Spitoni C, Scicluna BP, Wiewel MA, Horn J et al (2016) Incidence, risk factors, and attributable mortality of secondary infections in the intensive care unit after admission for sepsis. JAMA 315(14):1469–1479

Tabah A, Koulenti D, Laupland K, Misset B, Valles J, de Bruzzi Carvalho F et al (2012) Characteristics and determinants of outcome of hospital-acquired bloodstream infections in intensive care units: the EUROBACT International Cohort Study. Intensive Care Med 38(12):1930–1945

Sterling SA, Puskarich MA, Glass AF, Guirgis F, Jones AE (2017) The impact of the Sepsis-3 septic shock definition on previously defined septic shock patients. Crit Care Med 45(9):1436–1442

Ferrer R, Martinez ML, Goma G, Suarez D, Alvarez-Rocha L, de la Torre MV et al (2018) Improved empirical antibiotic treatment of sepsis after an educational intervention: the ABISS-Edusepsis study. Crit Care 22(1):167

Liu VX, Fielding-Singh V, Greene JD, Baker JM, Iwashyna TJ, Bhattacharya J et al (2017) The timing of early antibiotics and hospital mortality in sepsis. Am J Respir Crit Care Med 196(7):856–863

Seymour CW, Gesten F, Prescott HC, Friedrich ME, Iwashyna TJ, Phillips GS et al (2017) Time to treatment and mortality during mandated emergency care for sepsis. N Engl J Med 376(23):2235–2244

Rhodes A, Evans LE, Alhazzani W, Levy MM, Antonelli M, Ferrer R et al (2017) Surviving Sepsis Campaign: international guidelines for management of sepsis and septic shock: 2016. Intensive Care Med 43(3):304–377

Laupland KB, Zahar JR, Adrie C, Schwebel C, Goldgran-Toledano D, Azoulay E et al (2012) Determinants of temperature abnormalities and influence on outcome of critical illness. Crit Care Med 40(1):145–151

Lam SW, Bauer SR, Fowler R, Duggal A (2018) Systematic review and meta-analysis of procalcitonin-guidance versus usual care for antimicrobial management in critically ill patients: focus on subgroups based on antibiotic initiation, cessation, or mixed strategies. Crit Care Med 46(5):684–690

Schuetz P, Wirz Y, Sager R, Christ-Crain M, Stolz D, Tamm M et al (2018) Effect of procalcitonin-guided antibiotic treatment on mortality in acute respiratory infections: a patient level meta-analysis. Lancet Infect Dis 18(1):95–107

van Someren Greve F, Juffermans NP, Bos LDJ, Binnekade JM, Braber A, Cremer OL et al (2018) Respiratory viruses in invasively ventilated critically ill patients—a prospective multicenter observational study. Crit Care Med 46(1):29–36

Loubet P, Voiriot G, Houhou-Fidouh N, Neuville M, Bouadma L, Lescure FX et al (2017) Impact of respiratory viruses in hospital-acquired pneumonia in the intensive care unit: a single-center retrospective study. J Clin Virol 91:52–57

Singer M, Deutschman CS, Seymour CW, Shankar-Hari M, Annane D, Bauer M et al (2016) The third international consensus definitions for sepsis and septic shock (Sepsis-3). JAMA 315(8):801–810

Fernando SM, Tran A, Taljaard M, Cheng W, Rochwerg B, Seely AJE et al (2018) Prognostic accuracy of the quick sequential organ failure assessment for mortality in patients with suspected infection: a systematic review and meta-analysis. Ann Intern Med 168(4):266–275

Williams JM, Greenslade JH, McKenzie JV, Chu K, Brown AFT, Lipman J (2017) Systemic inflammatory response syndrome, quick sequential organ function assessment, and organ dysfunction: insights from a prospective database of ED patients with infection. Chest 151(3):586–596

Klein Klouwenberg PM, Cremer OL, van Vught LA, Ong DS, Frencken JF, Schultz MJ et al (2015) Likelihood of infection in patients with presumed sepsis at the time of intensive care unit admission: a cohort study. Crit Care 19:319

Hranjec T, Rosenberger LH, Swenson B, Metzger R, Flohr TR, Politano AD et al (2012) Aggressive versus conservative initiation of antimicrobial treatment in critically ill surgical patients with suspected intensive-care-unit-acquired infection: a quasi-experimental, before and after observational cohort study. Lancet Infect Dis 12(10):774–780

Parlato M, Cavaillon JM (2015) Host response biomarkers in the diagnosis of sepsis: a general overview. Methods Mol Biol 1237:149–211

Parlato M, Philippart F, Rouquette A, Moucadel V, Puchois V, Blein S et al (2018) Circulating biomarkers may be unable to detect infection at the early phase of sepsis in ICU patients: the CAPTAIN prospective multicenter cohort study. Intensive Care Med 44(7):1061–1070

Layios N, Lambermont B, Canivet JL, Morimont P, Preiser JC, Garweg C et al (2012) Procalcitonin usefulness for the initiation of antibiotic treatment in intensive care unit patients. Crit Care Med 40(8):2304–2309

Mearelli F, Fiotti N, Giansante C, Casarsa C, Orso D, De Helmersen M et al (2018) Derivation and validation of a biomarker-based clinical algorithm to rule out sepsis from noninfectious systemic inflammatory response syndrome at emergency department admission: a multicenter prospective study. Crit Care Med 46(9):1421–1429

Kalil AC, Syed A, Rupp ME, Chambers H, Vargas L, Maskin A et al (2015) Is bacteremic sepsis associated with higher mortality in transplant recipients than in nontransplant patients? A matched case-control propensity-adjusted study. Clin Infect Dis 60(2):216–222

Kalil AC, Sandkovsky U, Florescu DF (2018) Severe infections in critically ill solid organ transplant recipients. Clin Microbiol Infect 24:1257–1263

NCCN (2018) Clinical practice guidelines in oncology. Prevention and treatment of cancer-related infections. Version 1.2018. https://www.nccn.org/store/login/login.aspx?ReturnURL=https://www.nccn.org/professionals/physician_gls/pdf/infections.pdf. Accessed 1 Sept 2018

Schnell D, Azoulay E, Benoit D, Clouzeau B, Demaret P, Ducassou S et al (2016) Management of neutropenic patients in the intensive care unit (NEWBORNS EXCLUDED) recommendations from an expert panel from the French Intensive Care Society (SRLF) with the French Group for Pediatric Intensive Care Emergencies (GFRUP), the French Society of Anesthesia and Intensive Care (SFAR), the French Society of Hematology (SFH), the French Society for Hospital Hygiene (SF2H), and the French Infectious Diseases Society (SPILF). Ann Intensive Care 6(1):90

European AIDS Clinical Society guidelines. Updated yearly. http://www.eacsociety.org/Guidelines.aspx. Accessed 1 Sept 2018

Singh JA, Cameron C, Noorbaloochi S, Cullis T, Tucker M, Christensen R et al (2015) Risk of serious infection in biological treatment of patients with rheumatoid arthritis: a systematic review and meta-analysis. Lancet 386(9990):258–265

Venet F, Monneret G (2018) Advances in the understanding and treatment of sepsis-induced immunosuppression. Nat Rev Nephrol 14(2):121–137

Martin S, Perez A, Aldecoa C (2017) Sepsis and immunosenescence in the elderly patient: a review. Front Med 4:20

Ramanan P, Bryson AL, Binnicker MJ, Pritt BS, Patel R (2018) Syndromic panel-based testing in clinical microbiology. Clin Microbiol Rev 31(1):e00024-17

van de Groep K, Bos MP, Savelkoul PHM, Rubenjan A, Gazenbeek C, Melchers WJG et al (2018) Development and first evaluation of a novel multiplex real-time PCR on whole blood samples for rapid pathogen identification in critically ill patients with sepsis. Eur J Clin Microbiol Infect Dis 37(7):1333–1344

Ruppe E, Baud D, Schicklin S, Guigon G, Schrenzel J (2016) Clinical metagenomics for the management of hospital- and healthcare-acquired pneumonia. Future Microbiol 11(3):427–439

Charalampous T, Richardson H, Kay GL, Baldan R, Jeanes C, Rae D et al (2018) Rapid diagnosis of lower respiratory infection using nanopore-based clinical metagenomics. bioRxiv. https://doi.org/10.1101/387548

Pendleton KM, Erb-Downward JR, Bao Y, Branton WR, Falkowski NR, Newton DW et al (2017) Rapid pathogen identification in bacterial pneumonia using real-time metagenomics. Am J Respir Crit Care Med 196(12):1610–1612

Langelier C, Zinter MS, Kalantar K, Yanik GA, Christenson S, O’Donovan B et al (2018) Metagenomic sequencing detects respiratory pathogens in hematopoietic cellular transplant patients. Am J Respir Crit Care Med 197(4):524–528

Le Dorze M, Gault N, Foucrier A, Ruppe E, Mourvillier B, Woerther PL et al (2015) Performance and impact of a rapid method combining mass spectrometry and direct antimicrobial susceptibility testing on treatment adequacy of patients with ventilator-associated pneumonia. Clin Microbiol Infect 21(5):468

Lutgring JD, Bittencourt C, McElvania TeKippe E, Cavuoti D, Hollaway R, Burd EM (2018) Evaluation of the accelerate pheno system: results from two academic medical centers. J Clin Microbiol 56(4):e01672-17

Timbrook TT, Morton JB, McConeghy KW, Caffrey AR, Mylonakis E, LaPlante KL (2017) The effect of molecular rapid diagnostic testing on clinical outcomes in bloodstream infections: a systematic review and meta-analysis. Clin Infect Dis 64(1):15–23

Roberts JA, Paul SK, Akova M, Bassetti M, De Waele JJ, Dimopoulos G et al (2014) DALI: defining antibiotic levels in intensive care unit patients: are current beta-lactam antibiotic doses sufficient for critically ill patients? Clin Infect Dis 58(8):1072–1083

Roberts JA, Taccone FS, Lipman J (2016) Understanding PK/PD. Intensive Care Med 42(11):1797–1800

De Waele JJ, Lipman J, Carlier M, Roberts JA (2015) Subtleties in practical application of prolonged infusion of beta-lactam antibiotics. Int J Antimicrob Agents 45(5):461–463

Bergen PJ, Bulitta JB, Kirkpatrick CM, Rogers KE, McGregor MJ, Wallis SC et al (2017) Substantial impact of altered pharmacokinetics in critically ill patients on the antibacterial effects of meropenem evaluated via the dynamic hollow-fiber infection model. Antimicrob Agents Chemother 61(5):e02642-16

Tangden T, Ramos Martin V, Felton TW, Nielsen EI, Marchand S, Bruggemann RJ et al (2017) The role of infection models and PK/PD modelling for optimising care of critically ill patients with severe infections. Intensive Care Med 43(7):1021–1032

Huttner A, Harbarth S, Hope WW, Lipman J, Roberts JA (2015) Therapeutic drug monitoring of the beta-lactam antibiotics: what is the evidence and which patients should we be using it for? J Antimicrob Chemother 70(12):3178–3183

Duszynska W, Taccone FS, Hurkacz M, Kowalska-Krochmal B, Wiela-Hojenska A, Kubler A (2013) Therapeutic drug monitoring of amikacin in septic patients. Crit Care 17(4):R165

Brasseur A, Hites M, Roisin S, Cotton F, Vincent JL, De Backer D et al (2016) A high-dose aminoglycoside regimen combined with renal replacement therapy for the treatment of MDR pathogens: a proof-of-concept study. J Antimicrob Chemother 71(5):1386–1394

Pajot O, Burdet C, Couffignal C, Massias L, Armand-Lefevre L, Foucrier A et al (2015) Impact of imipenem and amikacin pharmacokinetic/pharmacodynamic parameters on microbiological outcome of Gram-negative bacilli ventilator-associated pneumonia. J Antimicrob Chemother 70(5):1487–1494

Prybylski JP (2015) Vancomycin trough concentration as a predictor of clinical outcomes in patients with Staphylococcus aureus bacteremia: a meta-analysis of observational studies. Pharmacotherapy 35(10):889–898

Wong G, Brinkman A, Benefield RJ, Carlier M, De Waele JJ, El Helali N et al (2014) An international, multicentre survey of beta-lactam antibiotic therapeutic drug monitoring practice in intensive care units. J Antimicrob Chemother 69(5):1416–1423

Mouton JW, Muller AE, Canton R, Giske CG, Kahlmeter G, Turnidge J (2018) MIC-based dose adjustment: facts and fables. J Antimicrob Chemother 73(3):564–568

Beumier M, Casu GS, Hites M, Wolff F, Cotton F, Vincent JL et al (2015) Elevated beta-lactam concentrations associated with neurological deterioration in ICU septic patients. Minerva Anestesiol 81(5):497–506

Imani S, Buscher H, Marriott D, Gentili S, Sandaradura I (2017) Too much of a good thing: a retrospective study of beta-lactam concentration-toxicity relationships. J Antimicrob Chemother 72(10):2891–2897

Torres A, Zhong N, Pachl J, Timsit JF, Kollef M, Chen Z et al (2018) Ceftazidime-avibactam versus meropenem in nosocomial pneumonia, including ventilator-associated pneumonia (REPROVE): a randomised, double-blind, phase 3 non-inferiority trial. Lancet Infect Dis 18(3):285–295

Harris PNA, Tambyah PA, Lye DC, Mo Y, Lee TH, Yilmaz M et al (2018) Effect of piperacillin-tazobactam vs meropenem on 30-day mortality for patients with E. coli or Klebsiella pneumoniae bloodstream infection and ceftriaxone resistance: a randomized clinical trial. JAMA 320(10):984–994

Bertolini G, Nattino G, Tascini C, Poole D, Viaggi B, Carrara G et al (2018) Mortality attributable to different Klebsiella susceptibility patterns and to the coverage of empirical antibiotic therapy: a cohort study on patients admitted to the ICU with infection. Intensive Care Med 44(10):1709–1719

Murray KP, Zhao JJ, Davis SL, Kullar R, Kaye KS, Lephart P et al (2013) Early use of daptomycin versus vancomycin for methicillin-resistant Staphylococcus aureus bacteremia with vancomycin minimum inhibitory concentration > 1 mg/L: a matched cohort study. Clin Infect Dis 56(11):1562–1569

Wunderink RG, Niederman MS, Kollef MH, Shorr AF, Kunkel MJ, Baruch A et al (2012) Linezolid in methicillin-resistant Staphylococcus aureus nosocomial pneumonia: a randomized, controlled study. Clin Infect Dis 54(5):621–629

Abbas M, Paul M, Huttner A (2017) New and improved? A review of novel antibiotics for Gram-positive bacteria. Clin Microbiol Infect 23(10):697–703

Tamma PD, Cosgrove SE, Maragakis LL (2012) Combination therapy for treatment of infections with gram-negative bacteria. Clin Microbiol Rev 25(3):450–470

Paul M, Lador A, Grozinsky-Glasberg S, Leibovici L (2014) Beta lactam antibiotic monotherapy versus beta lactam-aminoglycoside antibiotic combination therapy for sepsis. Cochrane Database Syst Rev 1:CD003344

Kumar A, Safdar N, Kethireddy S, Chateau D (2010) A survival benefit of combination antibiotic therapy for serious infections associated with sepsis and septic shock is contingent only on the risk of death: a meta-analytic/meta-regression study. Crit Care Med 38(8):1651–1664

Ripa M, Rodriguez-Nunez O, Cardozo C, Naharro-Abellan A, Almela M, Marco F et al (2017) Influence of empirical double-active combination antimicrobial therapy compared with active monotherapy on mortality in patients with septic shock: a propensity score-adjusted and matched analysis. J Antimicrob Chemother 72(12):3443–3452

Daikos GL, Tsaousi S, Tzouvelekis LS, Anyfantis I, Psichogiou M, Argyropoulou A et al (2014) Carbapenemase-producing Klebsiella pneumoniae bloodstream infections: lowering mortality by antibiotic combination schemes and the role of carbapenems. Antimicrob Agents Chemother 58(4):2322–2328

Tumbarello M, Trecarichi EM, De Rosa FG, Giannella M, Giacobbe DR, Bassetti M et al (2015) Infections caused by KPC-producing Klebsiella pneumoniae: differences in therapy and mortality in a multicentre study. J Antimicrob Chemother 70(7):2133–2143

Falcone M, Russo A, Iacovelli A, Restuccia G, Ceccarelli G, Giordano A et al (2016) Predictors of outcome in ICU patients with septic shock caused by Klebsiella pneumoniae carbapenemase-producing K. pneumoniae. Clin Microbiol Infect 22(5):444–450

Gutierrez-Gutierrez B, Salamanca E, de Cueto M, Hsueh PR, Viale P, Pano-Pardo JR et al (2017) Effect of appropriate combination therapy on mortality of patients with bloodstream infections due to carbapenemase-producing Enterobacteriaceae (INCREMENT): a retrospective cohort study. Lancet Infect Dis 17(7):726–734

Tumbarello M, Viale P, Viscoli C, Trecarichi EM, Tumietto F, Marchese A et al (2012) Predictors of mortality in bloodstream infections caused by Klebsiella pneumoniae carbapenemase-producing K. pneumoniae: importance of combination therapy. Clin Infect Dis 55(7):943–950

Aydemir H, Akduman D, Piskin N, Comert F, Horuz E, Terzi A et al (2013) Colistin vs. the combination of colistin and rifampicin for the treatment of carbapenem-resistant Acinetobacter baumannii ventilator-associated pneumonia. Epidemiol Infect 141(6):1214–1222

Durante-Mangoni E, Signoriello G, Andini R, Mattei A, De Cristoforo M, Murino P et al (2013) Colistin and rifampicin compared with colistin alone for the treatment of serious infections due to extensively drug-resistant Acinetobacter baumannii: a multicenter, randomized clinical trial. Clin Infect Dis 57(3):349–358

Sirijatuphat R, Thamlikitkul V (2014) Preliminary study of colistin versus colistin plus fosfomycin for treatment of carbapenem-resistant Acinetobacter baumannii infections. Antimicrob Agents Chemother 58(9):5598–5601

Paul M, Daikos GL, Durante-Mangoni E, Yahav D, Carmeli Y, Benattar YD et al (2018) Colistin alone versus colistin plus meropenem for treatment of severe infections caused by carbapenem-resistant gram-negative bacteria: an open-label, randomised controlled trial. Lancet Infect Dis 18(4):391–400

Zusman O, Altunin S, Koppel F, Dishon Benattar Y, Gedik H, Paul M (2017) Polymyxin monotherapy or in combination against carbapenem-resistant bacteria: systematic review and meta-analysis. J Antimicrob Chemother 72(1):29–39

Makris D, Petinaki E, Tsolaki V, Manoulakas E, Mantzarlis K, Apostolopoulou O et al (2018) Colistin versus colistin combined with ampicillin-sulbactam for multiresistant Acinetobacter baumannii ventilator-associated pneumonia treatment: an open-label prospective study. Indian J Crit Care Med 22(2):67–77

Vardakas KZ, Mavroudis AD, Georgiou M, Falagas ME (2018) Intravenous colistin combination antimicrobial treatment vs. monotherapy: a systematic review and meta-analysis. Int J Antimicrob Agents 51(4):535–547

Sime FB, Roberts MS, Roberts JA (2015) Optimization of dosing regimens and dosing in special populations. Clin Microbiol Infect 21(10):886–893

Vardakas KZ, Voulgaris GL, Maliaros A, Samonis G, Falagas ME (2018) Prolonged versus short-term intravenous infusion of antipseudomonal beta-lactams for patients with sepsis: a systematic review and meta-analysis of randomised trials. Lancet Infect Dis 18(1):108–120

Hao JJ, Chen H, Zhou JX (2016) Continuous versus intermittent infusion of vancomycin in adult patients: a systematic review and meta-analysis. Int J Antimicrob Agents 47(1):28–35

Tabah A, Cotta MO, Garnacho-Montero J, Schouten J, Roberts JA, Lipman J et al (2016) A systematic review of the definitions, determinants, and clinical outcomes of antimicrobial de-escalation in the intensive care unit. Clin Infect Dis 62(8):1009–1017

Weiss E, Zahar JR, Lesprit P, Ruppe E, Leone M, Chastre J et al (2015) Elaboration of a consensual definition of de-escalation allowing a ranking of beta-lactams. Clin Microbiol Infect 21(7):649

Silva BN, Andriolo RB, Atallah AN, Salomao R (2013) De-escalation of antimicrobial treatment for adults with sepsis, severe sepsis or septic shock. Cochrane Database Syst Rev 3:CD007934

Mokart D, Slehofer G, Lambert J, Sannini A, Chow-Chine L, Brun JP et al (2014) De-escalation of antimicrobial treatment in neutropenic patients with severe sepsis: results from an observational study. Intensive Care Med 40(1):41–49

Paul M, Dickstein Y, Raz-Pasteur A (2016) Antibiotic de-escalation for bloodstream infections and pneumonia: systematic review and meta-analysis. Clin Microbiol Infect 22(12):960–967

Leone M, Bechis C, Baumstarck K, Lefrant JY, Albanese J, Jaber S et al (2014) De-escalation versus continuation of empirical antimicrobial treatment in severe sepsis: a multicenter non-blinded randomized noninferiority trial. Intensive Care Med 40(10):1399–1408

Weiss E, Zahar JR, Garrouste-Orgeas M, Ruckly S, Essaied W, Schwebel C et al (2016) De-escalation of pivotal beta-lactam in ventilator-associated pneumonia does not impact outcome and marginally affects MDR acquisition. Intensive Care Med 42(12):2098–2100

De Bus L, Denys W, Catteeuw J, Gadeyne B, Vermeulen K, Boelens J et al (2016) Impact of de-escalation of beta-lactam antibiotics on the emergence of antibiotic resistance in ICU patients: a retrospective observational study. Intensive Care Med 42(6):1029–1039

Ruppe E, Martin-Loeches I, Rouze A, Levast B, Ferry T, Timsit JF (2018) What’s new in restoring the gut microbiota in ICU patients? Potential role of faecal microbiota transplantation. Clin Microbiol Infect 24(8):803–805

D’Agata EM, Magal P, Olivier D, Ruan S, Webb GF (2007) Modeling antibiotic resistance in hospitals: the impact of minimizing treatment duration. J Theor Biol 249(3):487–499

Chastre J, Wolff M, Fagon JY, Chevret S, Thomas F, Wermert D et al (2003) Comparison of 8 vs 15 days of antibiotic therapy for ventilator-associated pneumonia in adults: a randomized trial. JAMA 290(19):2588–2598

Klompas M, Li L, Menchaca JT, Gruber S (2017) Ultra-short-course antibiotics for patients with suspected ventilator-associated pneumonia but minimal and stable ventilator settings. Clin Infect Dis 64(7):870–876

Sandberg T, Skoog G, Hermansson AB, Kahlmeter G, Kuylenstierna N, Lannergard A et al (2012) Ciprofloxacin for 7 days versus 14 days in women with acute pyelonephritis: a randomised, open-label and double-blind, placebo-controlled, non-inferiority trial. Lancet 380(9840):484–490

Chotiprasitsakul D, Han JH, Cosgrove SE, Harris AD, Lautenbach E, Conley AT et al (2018) Comparing the outcomes of adults with Enterobacteriaceae bacteremia receiving short-course versus prolonged-course antibiotic therapy in a multicenter, propensity score-matched cohort. Clin Infect Dis 66(2):172–177

Montravers P, Tubach F, Lescot T, Veber B, Esposito-Farese M, Seguin P et al (2018) Short-course antibiotic therapy for critically ill patients treated for postoperative intra-abdominal infection: the DURAPOP randomised clinical trial. Intensive Care Med 44(3):300–310

Royer S, DeMerle KM, Dickson RP, Prescott HC (2018) Shorter versus longer courses of antibiotics for infection in hospitalized patients: a systematic review and meta-analysis. J Hosp Med 13(5):336–342

Hanretty AM, Gallagher JC (2018) Shortened courses of antibiotics for bacterial infections: a systematic review of randomized controlled trials. Pharmacotherapy 38(6):674–687

Huang DT, Yealy DM, Filbin MR, Brown AM, Chang CH, Doi Y et al (2018) Procalcitonin-guided use of antibiotics for lower respiratory tract infection. N Engl J Med 379(3):236–249

Mazuski JE, Tessier JM, May AK, Sawyer RG, Nadler EP, Rosengart MR et al (2017) The Surgical Infection Society revised guidelines on the management of intra-abdominal infection. Surg Infect 18(1):1–76

Woodhead M, Blasi F, Ewig S, Garau J, Huchon G, Ieven M et al (2011) Guidelines for the management of adult lower respiratory tract infections—full version. Clin Microbiol Infect 17(Suppl 6):E1–E59

Mandell LA, Wunderink RG, Anzueto A, Bartlett JG, Campbell GD, Dean NC et al (2007) Infectious Diseases Society of America/American Thoracic Society consensus guidelines on the management of community-acquired pneumonia in adults. Clin Infect Dis 44(Suppl 2):S27–S72

Gupta K, Hooton TM, Naber KG, Wullt B, Colgan R, Miller LG et al (2011) International clinical practice guidelines for the treatment of acute uncomplicated cystitis and pyelonephritis in women: a 2010 update by the Infectious Diseases Society of America and the European Society for Microbiology and Infectious Diseases. Clin Infect Dis 52(5):e103–e120

Torres A, Niederman MS, Chastre J, Ewig S, Fernandez-Vandellos P, Hanberger H et al (2017) International ERS/ESICM/ESCMID/ALAT guidelines for the management of hospital-acquired pneumonia and ventilator-associated pneumonia: Guidelines for the management of hospital-acquired pneumonia (HAP)/ventilator-associated pneumonia (VAP) of the European Respiratory Society (ERS), European Society of Intensive Care Medicine (ESICM), European Society of Clinical Microbiology and Infectious Diseases (ESCMID) and Asociacion Latinoamericana del Torax (ALAT). Eur Respir J 50(3):1700582

Klein EY, Van Boeckel TP, Martinez EM, Pant S, Gandra S, Levin SA et al (2018) Global increase and geographic convergence in antibiotic consumption between 2000 and 2015. Proc Natl Acad Sci USA 115(15):E3463–E3470

ECDC (2017) Summary of the latest data on antibiotic consumption in the European Union: ESAC-Net surveillance data, November 2017. https://ecdc.europa.eu/sites/portal/files/documents/Final_2017_EAAD_ESAC-Net_Summary-edited%20-%20FINALwith%20erratum.pdf. Accessed 13 Oct 2018

Kollef MH, Chastre J, Clavel M, Restrepo MI, Michiels B, Kaniga K et al (2012) A randomized trial of 7-day doripenem versus 10-day imipenem-cilastatin for ventilator-associated pneumonia. Crit Care 16(6):R218

Schein M, Marshall J (2004) Source control for surgical infections. World J Surg 28(7):638–645

Martinez ML, Ferrer R, Torrents E, Guillamat-Prats R, Goma G, Suarez D et al (2017) Impact of source control in patients with severe sepsis and septic shock. Crit Care Med 45(1):11–19

Chao WN, Tsai CF, Chang HR, Chan KS, Su CH, Lee YT et al (2013) Impact of timing of surgery on outcome of Vibrio vulnificus-related necrotizing fasciitis. Am J Surg 206(1):32–39

Karvellas CJ, Abraldes JG, Zepeda-Gomez S, Moffat DC, Mirzanejad Y, Vazquez-Grande G et al (2016) The impact of delayed biliary decompression and anti-microbial therapy in 260 patients with cholangitis-associated septic shock. Aliment Pharmacol Ther 44(7):755–766

Bloos F, Ruddel H, Thomas-Ruddel D, Schwarzkopf D, Pausch C, Harbarth S et al (2017) Effect of a multifaceted educational intervention for anti-infectious measures on sepsis mortality: a cluster randomized trial. Intensive Care Med 43(11):1602–1612

Bloos F, Thomas-Ruddel D, Ruddel H, Engel C, Schwarzkopf D, Marshall JC et al (2014) Impact of compliance with infection management guidelines on outcome in patients with severe sepsis: a prospective observational multi-center study. Crit Care 18(2):R42

Azuhata T, Kinoshita K, Kawano D, Komatsu T, Sakurai A, Chiba Y et al (2014) Time from admission to initiation of surgery for source control is a critical determinant of survival in patients with gastrointestinal perforation with associated septic shock. Crit Care 18(3):R87