Abstract

The paper concerns \(M\)-estimation with probabilistic models of geodetic observations that is called \(M_{\mathcal {P}}\) estimation. The special attention is paid to \(M_{\mathcal {P}}\) estimation that includes the asymmetry and the excess kurtosis, which are basic anomalies of empiric distributions of errors of geodetic or astrometric observations (in comparison to the Gaussian errors). It is assumed that the influence function of \(M_{\mathcal {P}}\) estimation is equal to the differential equation that defines the system of the Pearson distributions. The central moments \(\mu _{k},\, k=2,3,4\), are the parameters of that system and thus, they are also the parameters of the chosen influence function. The \(M_{\mathcal {P}}\) estimation that includes the Pearson type IV and VII distributions (\(M_{\mathrm{PD(l)}}\) method) is analyzed in great detail from a theoretical point of view as well as by applying numerical tests. The chosen distributions are leptokurtic with asymmetry which refers to the general characteristic of empirical distributions. Considering \(M\)-estimation with probabilistic models, the Gram–Charlier series are also applied to approximate the models in question (\(M_{\mathrm{G-C}}\) method). The paper shows that \(M_{\mathcal {P}}\) estimation with the application of probabilistic models belongs to the class of robust estimations; \(M_{\mathrm{PD(l)}}\) method is especially effective in that case. It is suggested that even in the absence of significant anomalies the method in question should be regarded as robust against gross errors while its robustness is controlled by the pseudo-kurtosis.

Similar content being viewed by others

1 Introduction and motivation

When adjusting geodetic observations, anomalies (in relation to the conventional assumptions) are more often considered. The expected anomalies concern probability distributions and/or moments of measurement errors. In this context, for example, the observations which are affected by gross errors are considered. Such disturbances are identified in two basic ways. The first way assumes that gross errors are regarded as random variables which have the same variance as other measurement errors but differ from them in expected values. Thus, identification is carried out before the least squares adjustment and by applying various testing approaches (e.g., Baarda 1968; Prószyński 1997; Cen et al. 2003). Another approach is to use a robust method of estimation, including \(L\)-estimation or \(R\)-estimation (Huber 1981; Hodges and Lehmann 1963; Duchnowski 2011, 2013); \(L_{1}\)-norm estimation (Marshall 2002) or \(M\)-estimation (Huber 1981). While applying robust \(M\)-estimation, it is usually assumed that gross errors are realizations of random variables which differ from other measurement errors in variances.

Anomalies which concern the expected value and/or the variance are however not the only ones which can be expected in the sets of geodetic observations. The problem can concern the higher order moments and hence asymmetry and excess coefficients (namely, anomalies in relation to the normal distribution). In such a case, usage of \(M\)-estimation with an adequate probabilistic model of measurement errors gives the opportunity to estimate the parameters of the functional model.

If the vector of the independent observations y is described by the functional model y \(=\) F(X) \(+\) v, \(M\)-estimation can be considered as the solution of the optimization problem \(\min _\mathbf{X} \phi (\mathbf{y};\mathbf{X}) = \phi (\mathbf{y};{\hat{\mathbf{X}}})\) with the objective function

(e.g., Huber 1981; Hampel et al. 1986). Here \(v_i =y_i -F_i\) (X) is a random error of the observation. The error distribution \(P_{\theta _i}\) is indexed with the parameter \(\theta _{i}\epsilon \Theta \) (\(\Theta \) is a space of the parameter). The class of the distributions \({\mathcal {P}}=\{{P_{\theta _i} :\theta \in \Theta }\}\) will be regarded as a probabilistic model of the observation error, and after some suitable rearrangements of the parameters, as a probabilistic model of the observation. The functions \(\rho (y_i ;\mathbf{X})=\rho (v_i)\) are arbitrary components of the objective function \(\phi (\mathbf{y};\mathbf{X})\) and usually only in general terms they are referred to the probabilistic models of the observations. However, the probabilistic models are strongly related to the background of \(M\)-estimation, namely the maximum likelihood method (ML-method, see, e.g., Serfling 1980; Huber 1981; Winkelmann and Boes 2006).

One should notice that, for example, the least squares method (LS-method) also belongs to the class of \(M\)-estimation. In such case \(\rho (y;\mathbf{X})=\rho (v)=pv^{2}\). Here, \(p~\propto ~{\mu }_{2}^{-1}\) is the weight of the observation related to the variance \({\mu }_{2}={\sigma }^{2}\) which is the central moment \({\mu }_{k}={\mu }_{k} (v)=E\{[v-E(v)]^{k}]\}\) for \(k=2\) (\(\sigma \) is the standard deviation, \(E\)(\(\circ )\) is the expected value). On the other hand, the subclass of robust \(M\)-estimates is of great interest (Huber 1981; Hampel et al. 1986; Serfling 1980). Application of that subclass in geodetic adjustment is analyzed, for example, in Krarup and Kubik 1983; Yang 1994; Huang and Mertikas 1995; Gui and Zhang 1998. Generally, robust \(M\)-estimates are determined by using the function \(\rho (v )=\mathop {p}\limits ^{\frown } v^{2} \), where \({\mathop {p}\limits ^{\frown }}\propto \) \({{\mathop {\mu }\limits ^{\frown }}_2^{-1}} \) is the equivalent weight (\({\mathop {\mu }\limits ^{\frown }}_2={\mathop {\sigma }\limits ^{\frown }}^{2}\) is the equivalent variance; see, e.g., Krarup and Kubik 1983; Yang 1994, 1999; Koch 1996).

Considering \(M\)-estimation, which applies the presented components of the objective function, only two moments of \(v\) should be determined, namely \(E(v)\) and \({\mu }_{2}\)(usually \(E(v)=0\)). Thus, the classes of distributions indexed with the parameter \({\theta }~{\in }~\{E(v),~{\mu }_{2}\}\) are the implicit probabilistic models. The class of symmetric and mesokurtic distributions is a very important group here. It consists of distributions for which the asymmetry coefficient \({\beta }_{1}={\mu }_{3}^{2}/{\mu }_{2}^{3}\) is equal to zero and the kurtosis \(\beta \) \(_{2}\) = \(\mu \) \(_{4}\)/\(\mu \) \(_{2}^{2}\) is equal to 3 (within the paper we will also use the asymmetry coefficient defined as \(\gamma _1 =\mathrm{sgn} (\mu _3 )\beta _1^{1/2} \) and the excess \(\gamma \) \(_{2}=\) \(\beta \) \(_{2}-3\)). Note, that \(\beta \) \(_{1}=0\) and \(\beta \) \(_{2}=3\) if a random variable is normally distributed, \(\hbox {ND}[E(v),\;\mu _2 ]\).

Applying such \(M\)-estimation we should hope that the values of the higher moments are close to the values for the normal distribution, namely \({\mu }_{3}={\mu }_{5} \cong 0, {\mu }_{4}/{\mu }_{2}^{2} \cong 3\) etc. (similar assumptions might also concern other robust methods, see, for example, Duchnowski 2013). Such hope can be based on the Gaussian theory of measurement errors; however, analyses of observations, among others geodetic measurements, show that this is not always justified. The non-zero asymmetry and excess may also concern the algorithms for the Gaussian white noise simulations. For example, the algorithm that was analyzed in Hu et al. (2001) showed the kurtosis \({\beta }_{2}=3.10 \div ~3.15 \) (it can be checked that the simulator of the Gaussian random numbers in MatLab is also not free of such anomalies; which will be used in the empirical part of the paper).

Determination of deviations from the theoretical values of the moments is especially important in the case of precise observations. For example, Dzhun’ (1992) showed that every astronomical instrument gives measurement errors with a certain kurtosis, which is mostly close to \({\beta }_{2}=3.8\). In contemporary astrometric experiments the kurtosis is even bigger, for example, within the project MERIT \({\beta }_{2}=4.858\) (Dzhun’ 2012). Here, the asymmetry coefficient is equal to \({\beta }_{1}=0.0048\). The high value of the kurtosis means that the random errors of the measurements are concentrated around zero more than in the case of the Gaussian errors. Winter (1978) obtained high positive excess when he analyzed the distribution of measurements of a precise distance meter. Similar property can also be observed for other measurement errors, e.g., Wassef (1959) obtained \({\beta _{2}=3.8 }\) for precise leveling and Kukuča (1967) for hydrostatic leveling (\({\beta }_{1}=0.012, {\beta }_{2}=4.325\)).

Description of measurement errors only by the second moments (or their estimates, for example, by RMS) is also not enough in relation to modern techniques of measurements, like the satellite laser ranging (SLR) or the global positioning system (GPS). Hu et al. (2001) analyzed normalized residuals of SLR observations for Lageos-2 from 13 stations. The kurtosis varied within the interval \({\beta }_{2}=2.69 \div 9.46\) which depended on the range of the additional parameters (e.g., difference of orbits, the Earth orientation parameters). It is worth noting that only in one of the six cases, the kurtosis obtained value \(<\)3. The similar values, namely \({\beta }_{2} = 2.98 \div 9.26\), were obtained for the regional GPS network (GPS data of 10 sites, Hu et al. 2001). The authors of both analyses did not compute the asymmetry coefficients, however, the values obtained for the third moment \({\mu }_{3}=-1.51\div 0.01\) (for SLR data) and \({\mu }_{3}=-0.06\div 0.12\) (for GPS data) showed the slight asymmetry especially in the first case. Luo et al. (2011) presented the analysis of the influences of different factors on the probability distribution of GNSS observables (phase measurements from the SAPOS \(^{\circledR })\). The analysis concerned the representative studentized double difference residuals (SDDR) and the results are consistent with the presented in the previous paragraph. For 268 SDDR time series (5 baselines) and for the significance level \(\alpha \) = 0.01, the authors obtained \(\gamma \) \(_{1}=-0.11 \div 0.11\) and \(\beta \) \(_{2}=2.79 \div 3.29\) (with the predominance of \(\beta \) \(_{2}>3\)).

The review of the kurtosis and asymmetry of the empirical distributions of the observations shows that the distributions are generally leptokurtic (\(\beta \) \(_{2}>3\)) with more or less significant asymmetry (\(\gamma \) \(_{1}\ne 0\)). Romanowski and Green (1983) showed that the leptokurtosis is a dominant property of real sets of observations, especially of high precision ones. Platykurtic distributions (\(\beta \) \(_{2}<3\)) are also possible, however, they are very rare.

Approximation of empirical distributions of measurement errors, which kurtosis and asymmetry are known, can be conveniently done by applying theoretical distributions which are “controlled” by the coefficients \(\beta \) \(_{1 }\) and \(\beta \) \(_{2}\). To choose such distribution one can use probability estimation methods. Among others, one can apply the methods based on the Pearson distributions (Pearson 1920), the saddlepoint approximation (Daniels 1954), maximum entropy principle (MEP) (Jaynes 1957) and the Johnson system (Johnson 1949). The extensive comparative analysis which is presented in (Xi et al. 2012) shows that none of those methods has an overall advantage over others. However, the Pearson system presents better accuracy for moderate asymmetry and for a wide range of kurtosis. Note that for large asymmetry the method which gains the advantage is MEP (probabilistic density function approximated by maximizing the entropy subject to the known moments). We showed previously that one should not expect such large anomalies for geodetic observation sets. Thus, but also on the basis of the previous papers of the author (Wiśniewski 1985, 1987, 1991), we will assume that the system of the Pearson distributions (PD-system) which contains types I \(\div \) XII (Pearson 1920; Elderton 1953; Friori and Zenga 2009; Xi et al. 2012) is the set of theoretical distributions which are steered by the four first moments.

The density functions of the PD-system have different shapes: skewed or symmetric, limited or unlimited range, bell-, U-, J-shaped (including the Gaussian bell curve as a boundary case). It is obvious that only a few types of PD-system can be applied in the theory of measurement errors. Symmetric leptokurtic distributions are described by the Pearson distributions of the type VII (PVII). Such distributions were applied, for example, to analyze errors of precise levelling (Wassef 1959) and to approximate observation errors in astrometry (Dzhun’ 1969, 1991, 1992). PVII were also the basis for the analysis of how kurtosis \(\beta \) \(_{2}>3\) affects LS-estimates (Wiśniewski 1985). The Pearson distributions of the type IV (PIV) are asymmetric leptokurtic distributions which under \(\beta \) \(_{1}\) \(\rightarrow \) 0 tends to PVII. Analyzing empirical distributions of geodetic measurement errors one could also consider type I (\(\beta \) \(_{1}\) \(>\) 0, \(\beta \) \(_{2}\) \(<\) 3) and its boundary case, namely type II (\(\beta \) \(_{1}\) = 0, \(\beta \) \(_{2}\) \(<\) 3). However, there are some problems which result from the limited range of the random variable as well as the resulting U-shaped weight function (Wiśniewski 1987, 1989, 1991). Fortunately empirical distributions of observations are rarely platykurtic (Romanowski and Green 1983). Thus, such distributions will not be discussed within the paper in detail.

For small asymmetry and moderate excess one can consider replacing a theoretical distribution by its expansion derived from the normal distribution. This can be done by applying the expansion with respect to orthogonal polynomials which is called the Gram–Charlier of the type A (see, e.g., Elderton 1953; Kolassa 2006; Berberan-Santos 2007).

It is important to know how non-normal distribution influences the estimates obtained. The problem is a general one and from such point of view it is considered, for example, in Mooijaart (1985); Klein and Moosbrugger (2000); Mukhopadhyay (2005). It is also an issue discussed in the applied statistics, for example, in marketing Andreassen et al. (2006) and in econometrics Nagahara (2011). The influence of deviation of an empirical distribution from the normal distribution on LS-estimates in the case of geodetic networks was discussed in Gleinsvik (1971,1972) and Wiśniewski (1985). It was shown that the asymmetry of the distribution, which changed the expected value of the measurement errors, also influenced the bias of the LS-estimates of the parameters of the observation models. Such influences are reinforced or weakened by the kurtosis.

To consider the asymmetry or excess of observations during an adjustment procedure it is necessary to assume a certain probabilistic model of observations, which can be acceptable. Such model results in a particular optimization problem of ML-method, or equivalent particular influence and weight functions of \(M\)-estimation. Within this paper we assume that leptokurtic distributions with asymmetry are described by the Pearson distribution of the types IV or VII; or are approximated by the Gram–Charlier series (G–C series). The optimization problem of ML-method with the application of the Pearson distributions was considered in my previous papers (Wiśniewski 1987, 1989, 1991). Dumalski and Wiśniewski (1994) proposed a method of adjustment based on G–C series. The general form of the weight function, which is related to the systems of the Pearson distributions, was presented in Dzhun’ (2011). We will propose a new variant of \(M\)-estimation which depends on the variance but also on the kurtosis and the asymmetry of the observation error distribution.

2 Theoretical foundations

2.1 ML and \(M_{\mathcal {P}}\) estimates

Let \(P_{\theta _i } \in {\mathcal {P}}\) be distributions of independent random errors \(v_{i }\) and let they be indexed with the parameters: \(E_{i} = E(v_{i}),{\mu }_{2,i} = {\mu _{2} (v_{i})} = {\sigma }_{i}^{2}, {\mu }_{3,i} = {\mu }_{3} (v_{i})\) and \({\mu }_{4,i} = {\mu }_{4} (v_{i})\). To estimate the moments \({\mu }_{3 }\) and \({\mu }_{4 }\) one can apply the methods proposed in the following papers (Wiśniewski 1995, 1996; Kasietczuk 1997). When we are interested in estimation of only one of these parameters, then it is usually assumed that the others are fixed (however, it is not necessary to know their particular values). The set of distributions that are possible probabilistic models of geodetic observation errors will be denoted as \(W_v =\{P_{\theta _i } :\;v_i \in \mathfrak {I}_v ,\;\theta _i \in \mathfrak {I}_\theta \subset {\Theta }\}\); where \(\mathfrak {I}_{v }\) is the set of possible values of random errors, for example, \(\mathfrak {I}_{v}=\langle a_{1},a_{2}\rangle ;\mathfrak {I}_{\theta }\) is the set of practically acceptable values of the parameter \({\theta }_{i}\). Thus, we choose a probabilistic model of measurement errors from the distributions that belong to \(W_v\) (Wiśniewski 1987).

Let \(\mathbf{y = AX + v}\) be a conventional functional model of the observation vector \(\mathbf{y}=[y_1 ,\ldots ,y_n ]^{\mathrm{T}} (\mathbf{v}=[v_1 ,\ldots ,v_n ]^{\mathrm{T}}\) is a vector of random errors, \(\mathbf{A}\in R^{n,r}\), \(\hbox {rank} (\mathbf{A})=r\), is a known matrix of coefficients, \(\mathbf{X}\in (\Theta =R^{r})\) is a vector of unknown parameters). Additionally, let independent observations \(y_i =\mathbf{a}_i \mathbf{X}+v_{i},i=1,2,\ldots ,n, (\mathbf{a}_i -i\)th row of the matrix A) have a fixed probability density function (PDF). Thus, each observation brings a piece of \(f\)-information \(I(y_i ;\mathbf{X})=-\ln f(y_i ;\mathbf{X})=-\ln f(v_i )\) (see, e.g., Jones and Jones 2000; Wiśniewski 2009). Considering the parameter X, all observations included within the vector \(\mathbf{y}\) bring the global amount of information as follows

Note, that Eq. (2) is also an empirical version of Shannon’s entropy (e.g. Ferrari and Yang 2010). Considering the global amount of information, one can search such estimate of the parameter \(\mathbf{X}\) which minimizes the amount of information included in the set of the observations (also information which is rather unexpected). Thus, the estimate \({ \hat{\mathbf{X}}}\) should solve the optimization problem \(\min _\mathbf{X} I(\mathbf{y};\mathbf{X})=I(\mathbf{y};{ \hat{\mathbf{X}}})\) (assuming that such extremum exists). For example, let \(v_i \sim \hbox {ND}[E(v_i )=0,\;\sigma _i^2 ]\) and \(y_i \sim \hbox {ND}[E(y_i )=\mathbf{a}_i \mathbf{X},\;\sigma _i^2 ]\), then on the basis of the following PDF, \(f(y_i ;\mathbf{X})=f(v_i )\propto \exp (-p_i v_i^2 /2)\), one can write \(I(y_i ;\mathbf{X})=p_i v_i^2 /2\) and hence \(I(\mathbf{y};\mathbf{X})\propto \sum _{i=1}^n {p_i v_i^2 } \). Since LS-estimate of the parameter X minimizes the objective function \(\phi (\mathbf{y};\mathbf{X})=\sum _{i=1}^n {p_i v_i^2 }\) then, for the normal distributions, it also minimizes the amount of \(f\)-information related to the parameter X. However, such estimates do not show such property for the other distribution which belongs to the set \(W_v \). In such a case, the global amount of information is minimized by ML-estimates. Here, we look for the estimate \({ \hat{\mathbf{X}}}\) which maximizes the likelihood

(see, e.g., Serfling 1980; Huber 1981; Koch 1990). Since \(\max _\mathbf{X} \mathcal{L} (\mathbf{y};\mathbf{X}) \Leftrightarrow \min _\mathbf{X} [-\mathcal{L} (\mathbf{y};\mathbf{X})=I(\mathbf{y};\mathbf{X})=\phi (\mathbf{y};\mathbf{X})]\), where

and \({\rho } (v_{i})=-\ln f(v_{i})\), then the idea of maximum likelihood is equivalent to the postulate of the minimum \(f\)-information related to \({\hat{\mathbf{X}}}\).

Now, let PDF be at least a twice differentiable function. Then, the Newton method can be applied to solve the optimization problem \(\min _\mathbf{X} \phi (\mathbf{y};\mathbf{X})\). Such solution uses the gradient and the Hessian of the function of Eq. (4) (see, e.g., Teunissen 1990). Thus, let us write the following form for the gradient

where \(\mathbf{b} (\mathbf{v})=[b(v_1 ),\ldots ,b(v_n )]^{\mathrm{T}}\) is a vector consisting of the following elements

and \(\partial v_i /\partial \mathbf{X}=-\mathbf{a}_i \) (if it is convenient and not misleading, the derivatives \(\mathrm{d}^{j}f/ \mathrm{d}f^{j}, j=1,2,3,4,\ldots \) will be denoted as \(f^{\prime }, f^{\prime \prime }, f^{\prime \prime \prime }, f^{(4)},\ldots \)). If \(v_i \sim \hbox {ND}[E(v_i )=0, \sigma _i^2 ]\) and \(f(v_{i})\propto \) exp(\(-p_{i}v_{i}^{2}\)/2), then \(b(v_{i})=p_{i}v_{i}\).

To find out the form of the Hessian of the objective function of Eq. (4), one can write

The diagonal elements of the matrix \(\mathbf{R} (\mathbf{X})=\hbox {Diag[}r(v_1 ),\ldots ,r(v_n )]\) are computed as the values of the following function

which is also known as the rigor function (e.g. Kamiński and Wiśniewski 1994). The necessary condition for the minimum of the function from Eq. (4) is that the Hessian from Eq. (7) be positively defined. It is obvious that such condition is satisfied for \(\forall i:r(v_{i})~>\) 0.

The gradient of Eq. (5) and the Hessian of Eq. (7) are the basis for the following iterative procedure (\(j=0,1,\ldots ,k)\)

with the iterative change of the parameter

The determination procedure of the ML-estimate of the vector \(\mathbf{X}\) ends for such \({\hat{\mathbf{X}}}=\mathbf{X}^{k}\) that \(\mathbf{g}_\phi (\mathbf{X}^{k})=\mathbf{0}\) and (\(\mathbf{X}^{k+1}-\mathbf{X}^{k}=\mathbf{0})\Leftrightarrow \mathrm{d}\mathbf{X}^{k+1}=\mathbf{0}\).

ML-estimates can also be considered as a special case of the broader class of the estimates, namely \(M\)-estimates which are determined on the basis of influence functions. Let us consider a set of probability distributions \({\mathcal {P}}=\{P_\mathbf{X} :\;\mathbf{X}\in R^{r}\}\) with cumulative distributions \(F(y_i ;\mathbf{X})=F(v_i)\), then one can write the related influence function in the following form (Hampel 1974; Serfling 1980; Huber 1981; Hampel et al. 1986; Yang 1997)

where \(c_{\mathrm{IF}}\) = \(\smallint {\psi }^{\prime }\mathrm{d}F\). Due to the proportion \(\mathrm{IF} (y_i ;\mathbf{X},F)\propto \psi (v_i )\), the function \({\psi } (v_{i})\) is also very often called the influence function. \({\hat{\mathbf{X}}}\) is an \(M\)-estimate of the parameter X if it solves the following equation (see, e.g., Hampel et al. 1986; Yang 1999; Yang et al. 1999)

where \(\mathbf{\psi } (\mathbf{v})=[\psi (v_1 ),\ldots ,\psi (v_n )]^{\mathrm{T}}\). The function \(\psi (y_i ,\mathbf{X})\) can be written (in relation to the objective function from Eq. (1)) as follows

where

Let

be a weight function (Huber 1981; Hampel et al. 1986; Youcai and Mertikas 1995; Yang 1997). Then, for the fixed functions \({\rho }(v_{i})\) one can write that \(\mathbf{g}_\phi (\mathbf{X})=\mathbf{A}^{\mathrm{T}}\mathbf{\psi }(\mathbf{v})\). Taking into account the expressions in Eqs. (14) and (15), and considering the following vector \(\mathbf{\psi }(\mathbf{v})=\mathbf{w}(\mathbf{v})\mathbf{v}\), the Eq. (12) can be rewritten as \(\mathbf{A}^{\mathrm{T}}\mathbf{\psi }(\mathbf{v})=\mathbf{A}^{\mathrm{T}}\mathbf{w}(\mathbf{v})\mathbf{v}=\mathbf{0}\), where \(\mathbf{w}(\mathbf{v})\) is a diagonal matrix with the elements \(\mathbf{w}(\mathbf{v})_{ii} =w(v_i )\). For the model \(\mathbf{v=y}-\mathbf{AX}\), one can obtain the following iterative solution

where \({\hat{\mathbf{v}}=\mathbf{y}}-{\mathbf{A}\hat{\mathbf{X}}}\).

From a practical point of view, the approximation of the asymptotic covariance matrix is satisfactory in that case (Yang 1997). Thus, let

where \(\hat{{\sigma }}_{0,\psi }^2\) is an iterative estimate of the variance coefficient computed as

Note that Eqs. (17) and (18) follow from the application of the empirical influence function \(\mathrm{EIF}(y_i ,\mathbf{X})=[\mathbf{A}^{\mathrm{T}}\mathbf{w}(\mathbf{v})\mathbf{A}]^{-1}\mathbf{a}_i^\mathrm{T} w(v_i )v_i \) (Yang 1997).

The estimate from Eq. (16), which solves the Eq. (12), also satisfies the necessary condition \(\mathbf{g}_\phi (\mathbf{X})=\mathbf{0}\) for the minimum of the function from Eq. (1). For \({\rho }(v_{i})=-\ln f(v_{i})\), the following equality holds (see, e.g., Youcai and Mertikas 1995; Dzhun’ 2011)

The estimate from Eq. (16) together with the weight function from Eq. (19) can be regarded as \(M_{\mathcal {P}} \) estimator with the explicit probabilistic model \({\mathcal {P}}=\{P_\mathbf{X} :\;\mathbf{X}\in R^{r}\}\).

In the case of LS-method, where \({\rho }(v_{i}) = p_{i}v_{i}^{2}\), we get \({\psi }(v_{i}) = 2p_{i}v_{i }\) and \(w(v_{i}) = p_{i}~\propto ~{\psi }(v_{i})/v_{i}\). Such variant of \(M_{\mathcal {P}} \) estimation is related to the set of normal distributions \({\mathcal {P}}=\{\hbox {ND}[E(v_i )=0,\;\sigma _i^2 ]\}\). Another example of the probabilistic models in \(M_{\mathcal {P}}\) estimation is the following set \({\mathcal {P}}=\{(1-\varepsilon )P_{\mathbf{X},\alpha } +\varepsilon P_{\mathbf{X},\beta } :\;\mathbf{X}\in R^{r}\}, 0 \le \varepsilon \le \) 1, which is the basis for robust \(M\)-estimation (Huber 1981; Hampel et al. 1986). Here, we assume that \(P_{\mathbf{X},\alpha } \in W_v \) is an acceptable distribution of measurement errors, while \(P_{\mathbf{X},\beta } \) is an unacceptable (strange) distribution. Considering LS-method with the equivalent weights \(\mathord {\mathrm{\scriptscriptstyle \frown }\over {p}}_{i} \propto \mathord {\mathrm{\scriptscriptstyle \frown }\over {\mu }} _{2,i}^{-1} \), we can write that \(P_{\mathbf{X},\alpha } =P_{\mathbf{X}/\mu _2 } =\hbox {ND}[\mathbf{a}_i \mathbf{X},\;\sigma _i^2 ]\) and \(P_{\mathbf{X},\beta } =P_{\mathbf{X}/\mathord {\mathrm{\scriptscriptstyle \frown }\over {\mu }} _2 } =\hbox {ND}[\mathbf{a}_i \mathbf{X},\;\mathord { \mathrm{\scriptscriptstyle \frown }\over {\sigma }} _i^2 ]\), where \(\mathord {\mathrm{\scriptscriptstyle \frown }\over {\sigma }} _i^2 =\mathord {\mathrm{\scriptscriptstyle \frown }\over {\mu }} _{2,i} \) is the equivalent variance.

Remember that usually \(\mathord {\mathrm{\scriptscriptstyle \frown }\over {p}}_{i}=t(v_i )p_i \), where \(0 \le t(v_{i}) \le 1\) is an attenuation function (e.g., Krarup and Kubik 1983; Yang 1994; Yang et al. 2002). In such a case, the equivalent variance can be written as \(\mathord {\mathrm{\scriptscriptstyle \frown }\over {\mu }} _{2,i} =\mu _{2,i} /t(v_i )\). Probabilistic models are also applied in a development of \(M\)-estimation, namely \(M_\mathrm{split}\) estimation (Wiśniewski 2009, 2010; Duchnowski and Wiśniewski 2011). In the basic variant of the method in question, it is assumed that \({\mathcal {P}}=\{P_{\mathbf{X}_\alpha } ,P_{\mathbf{X}_\beta } :\;\mathbf{X}_\alpha ,\mathbf{X}_\beta \in R^{r}\}\subset W_v.\) \(\mathbf{X}_\alpha \) and \(\mathbf{X}_\beta \) are acceptable variants of the same parameter X. Assuming that \({\mathcal {P}}\) is a set of normal distributions that differ from each other in the parameter X, we get the squared \(M_{\mathrm{split}}\) estimation with the following components of the objective function \(\rho (\mathbf{X}_\alpha ,\mathbf{X}_\beta )=v_{i\alpha }^2 v_{i\beta }^2 \).

2.2 Influence, weight and rigor functions in \(M_{\mathcal {P}} \) estimation: robustness of the method

When \({\mathcal {P}}\) is a set of distributions which are symmetric about \(v\) = 0, then \(E\) = \(E(v)\) = 0 and the mode \(M_{0 }\) is at the origin of the coordinate system, namely \(M_{0}\) = 0. For such distributions, it holds that \({\psi }(v)=-{\psi }(-v)\), and the weight functions \(w(v)\) as well as the rigor functions are symmetric ones. For asymmetric distributions, the expected value moves away from the mode with the increase of the asymmetry coefficient, with \(\hbox {sgn}(\delta )=\hbox {sgn}(\gamma _1 )\), where \({\delta }~=~E~-~M_{0}\) is the shift between the expected value and the mode. For such distributions the origin on the coordinate system might lay: except the mode (\(\hbox {PDF}_{(M_0 \ne 0)} )\), at the mode (\(\hbox {PDF}_{(M_0 =0)} )\) or at the expected value (\(\hbox {PDF}_{(E=0)} )\).

For the mode of the variable \(v\) and for the arbitrary \(\epsilon \! >\! 0\) it holds that \(\forall v_{0}~\ne ~M_{0}:P(v~\in ~{\Delta M}_{0(\epsilon )})>P(v~\in ~{\Delta v}_{0(\epsilon )})\) where \({\Delta M}_{0(\epsilon )}=\langle M_{0}-\epsilon ,~M_{0}+\epsilon \rangle \) and \({\Delta v}_{0(\epsilon )}=\langle v_{0}-\epsilon ,v_{0}+\epsilon \rangle \). The postulate of the minimum information is satisfied by the application of the individual information \(I(y;\mathbf{X})=I(v-s)=I(\tilde{v})\) where \(s=M_{0 }\) for \(\hbox {PDF}_{(M_0 \ne 0)} , s=0\) for \(\hbox {PDF}_{(M_0 =0)}\) and \(s=-{\delta }\) for \(\hbox {PDF}_{(E=0)}\). In such case, \(M_{\mathcal {P}} \) estimates should follow from the influence function determined on the basis of the objective function which is shifted, namely on the basis of its components \(\rho (v-s)=\rho (\tilde{v})\). Thus

where

is a weight function which is determined with respect to the mode \(M_{0}\). If \(v=M_{0}\ne 0\) then \(w_{s}(M_{0})~=~{\psi }_{s}(M_{0})/M_{0}\). However, if \(M_{0}=0\) then lim \(_{v\rightarrow 0}w_{s}(v)~=~{\psi }^{\prime }_{s}\)(0) = \(r_{s}\)(0) (such value was derived by Kadaj (1988) for the symmetric distributions and for the conventional influence function). Considering the functions from Eqs. (20) and (21), one can obtained the following rigor function

If the weight function \(w_{s}(v)\) has a maximum at \(v\) = \(M_{0}\), then \(w^{\prime }_{s}(M_{0})\) = 0 and \(r_{s}(M_{0})\) = 2\(w_{s}(M_{0})\). It does not follow that every rigor function of Eq. (22) has a maximum at that point. Considering that \(r^{\prime }_{s}(v)\) = 2 [\(2w^{\prime }_{s}(v)+vw^{\prime \prime }_{s}(v)\)] it holds only if \(w^{\prime \prime }_{s}(M_{0})=0\). From a theoretical point of view, such property is adverse; however, one can propose a following corrected rigor function

that is free of such property. Note that for such corrected rigor function \({r}'_{s,M_0 } (v)=4{w}'_s (v)+2v{w}''_s (v)-2M_0 {w}''_s (v)\), hence \(\max _v r_{s,M_0 } (v)=r_{s,M_0 } (M_0 )\) holds independently of the value of the derivative \(w^{\prime \prime }_{s}(v)\) at \(M_{0}\).

While analyzing the expected properties of \(M_{\mathcal {P}} \) estimates we can apply the influence function \(\mathrm{IF}(y;\mathbf{X},F)\propto \psi (v)\) to determine the following parameters: the maximum sensitivity to gross errors, \({\gamma }^{*}~=~ \mathrm{sup}~_{v}{\vert }{\psi }(v){\vert }\); the local-shift sensitivity, \({\lambda }^{*}~=~\mathrm{sup}~_{v}{\vert }[{\psi }(v~+~{\delta v})~-~{\psi }(v)]/{\delta v}{\vert }\) and the rejection point \({\rho }_{v}^{*}~=~\mathrm{inf}~\{v^{*}:{\psi }(v)~=~0,~{\vert }v{\vert }~>~v^{*}\) } (Hampel 1974; Hampel et al. 1986). In the case of asymmetric distributions, one can consider left- or right-handed rejection points, namely \({\rho }_{-}^{*}~=~\mathrm{inf}~\{v_{-}^{*}:{\psi }(v)~=~0,~v~<~v_{-}^{*}\)} and \({\rho }_{+}^{*}~=~\mathrm{inf}~\{v_{+}^{*}:{\psi }(v)~=~0,~v~>~v_{+}^{*}\}\). For \({\gamma }_{1}\) = 0

we obtain \({\vert }{\rho }_{-}^{*}{\vert }~=~{\vert }{\rho }_{+}^{*}{\vert }~=~{\rho }^{*}\). Additionally, we also consider a parameter which describes the maximum sensitivity to observation disturbances

Such parameter is of course related to the sensitivity \({\lambda }^{*}\) (for the influence function \(\mathrm{IF}(y-s;\mathbf{X},F)\propto \psi (v-s)=\psi _s (v))\). The important problem is that this parameter is not achieved at the mode. However, if it relates to the corrected rigor function, namely if

then \(\max _v r_{s,M_0 } (v)=r_{s,M_0 } (M_0 )=\lambda _{r,M_0 }^{*} \Leftrightarrow \mathrm{max}~_{v}w_{s}(v) = w_{s}(M_{0}) = {\lambda }_{w}^{*}\) (if only such extreme points exist).

The class of \(M\)-estimates can be divided into the following subclasses (Kadaj 1988): robust \(K_- \), neutral \(K_0 \) and weak \(K_+ \). Let us extend such classification to \(M_{\mathcal {P}} \) estimates, thus let

where \({\Delta M}_{0(l)} = \langle M_{0}~-~l,~M_{0}~+~l\rangle , l~\ge 0\). For example, in the case of LS-method and the set of the normal distributions we have \(\forall v:w_{s}(v)~=~{\lambda }_{w}^{*}\) = \(p\), hence \(\mathrm{LS}\equiv M_{{\mathcal {P}}=\{\mathrm{ND}[0,\sigma ^{2}]\}} \in K_0 \). In the case of \(M\)-estimation with the probabilistic model \({\mathcal {P}}=\{(1-\varepsilon )P_\alpha +\varepsilon P_\beta \}\) with implicit distributions \(P_{\alpha }\) and \(P_{\beta }\), and with application of the Huber function (Huber 1981), one can write the following forms of the weight functions (for \(\bar{{v}}=v/\sigma \) and, e.g., \(l=2.0, 2.5, 3.0\))

Here \(\forall \bar{{v}}\in \Delta \bar{{v}}=\langle {-l,\;l} \rangle : \;w(\bar{{v}})=\lambda _w^{*} \) and \(\forall \bar{{v}}\notin \Delta \bar{{v}}:w(\bar{{v}})<\lambda _w^{*} \), where \({\lambda }_{w}^{*}~=~1 (\Delta \bar{{v}}=\langle {-l,\;l} \rangle \) is the interval for acceptable standardized random errors \(\bar{{v}})\). Thus, such method of \(M\)-estimation, which will be denoted as \(M_{H}\), belongs to the subclass \(K_- \). On the other hand \(M_{\mathcal {P}}\) estimations which have U-shaped weight functions will belong to the subclass of weak estimations \(K_+ \).

3 Probabilistic models

3.1 Pearson distributions

The Pearson distribution system can play an important role in \(M_{\mathcal {P}}\) estimation with the weight function from Eq. (19). The probability density functions of PD-system are solutions of the following differential equation (Pearson 1920; Elderton 1953; Hald 2007; Nagahara 2007, 2011; Friori and Zenga 2009; Dzhun’ 2011; Xi et al. 2012)

where:

The papers cited above also showed other variants of the differential equation in question which depends on the parameter notation. The special role of the Pearson distributions in \(M\)-estimation with probabilistic models follows from the fact that Eq. (28) can be regarded as the influence function of \(M_{\mathcal {P}} \) estimation, namely \({\psi }(v)=-{\Omega }(v)=-f^{\prime }(v)/f(v)\). Such assumption refers to the general proposition of the application the expression \({\Omega }(v)/v\) as the weight function in ML-method (Dzhun’ 2011).

The asymmetry \({\beta }_{1}={\gamma }_{1}^{2}\) and kurtosis \({\beta }_{2 }\) are the parameters in Eq. (28), hence they are also parameters of the influence function \({\psi }(v)=-{\Omega }(v)\). This allows us to obtain \(M\)-estimates which can consider anomalies in observation distributions (in this paper context). The range of the steering parameters of \(M\)-estimation might have also another meaning. For example, suppose that \({\beta }_{2 }\) is not necessarily the real kurtosis of the distribution, then it can be the parameter which influences the robustness of \(M\)-estimates (this will be noted in the next part of the paper).

The Eq. (28) has three basic solutions, namely three function types: type I, IV and VI and additionally 10 transitional functions (including the probability density function of the normal distribution). The solution in question depends on the roots of the equation \({\sigma }^{2}c_{0}-{\sigma c}_{1}v+c_{2}v^{2}=0\), and are classified by the criterion (e.g. Elderton 1953; Hald 2007)

If \(k<0\), then two real roots exist which differ from each other in the sign. In such a case we get PDF of type I (\(\hbox {PD{F}'PI}_{\beta _1 >0,\beta _2 <3} )\), which under \({\beta }_{1}=0\) changes into type II (\(\hbox {PD{F}'PII}_{\beta _1 =0,\beta _2 <3} )\), and if additionally \({\beta }_{2}=3\) it changes into PDF of the normal distribution (\(\hbox {PD{F}'ND}_{\beta _1 =0,\beta _2 =3} )\). PDFs of the types I and II have limited range and are usually bell-shaped, but may be U-shaped and J-shaped (Elderton 1953). If \(0<k<1\), then the roots are complex, and the solution of the Eq. (28) is the function of type IV, for which

Probability density functions from Eq. (31) have unlimited range and are bell-shaped. If \(k>1\), the roots are real and of the same sign, and one can get the curve of type VI (if \(k=1\), then we can get the curve of type V). Those curves as well as other transitional curves are rarely applied for PDF. Since distributions of geodetic observations are usually leptokurtic, most of all we will be interested in the distributions PIV (\(0<k<1\)) and PVII (\(k=0\)) (note the general relation from Eq. (31)). The leptokurtic model which includes the chosen classes of the distributions will be denoted as \({{\mathcal {P}}_{\mathrm{PD}(l)}} =\{\hbox {PIV, PVII}:\sigma ,\beta _1 ,\beta _2 >3\}\). \(M_{\mathcal {P}} \) estimation which applies such a model will be denoted as \(M_{\mathrm{PD}(l)}\). Consequently, \(\mathcal {P}_{\mathrm{PD}(p)} =\{\hbox {PI, PII}:\sigma ,\beta _1 ,\beta _2 <3\}\) is a model of platykurtic distributions and \(\mathcal {P}_{\mathrm{PD}(m)} =\{\hbox {PVII}:\sigma ,\beta _1 ,\beta _2 =3\}\) is a model of mesokurtic distributions.

Considering the model \({\mathcal {P}}_{\mathrm{PD}(l)} \), then \(k\) should be within the interval \(0 \le k<1\). If \(k=0\) and additionally \({\beta }_{1}=0, {\beta }_{2}=3\), then \({\mathcal {P}}_{\mathrm{PD}(l)} \rightarrow {\mathcal {P}}_{\mathrm{PD}(m)} \equiv {\mathcal {P}}_{\mathrm{ND}} \). The conditions concerning the value of \(k \) entail limitations of values of \({\beta }_{1 }\) and \({\beta }_{2}\). Generally speaking, in the case of \(\hbox {PD{F}'PIV}_{\beta _1 >0,\beta _2 >3} \) the tolerance of increasing asymmetry of the platykurtic distribution becomes greater in the case of increasing kurtosis. If the excess is small and the asymmetry is large, then for such PDF \(k \) exceeds the acceptable limits (Fig. 1).

For the function (28), the origin of the coordinate system is at the point \(E=E(v)=0\) (Elderton 1953). The distance between the expected value \(E={\sigma c}_{1}/2 c_{2}\) and the mode \(M_{0}={\sigma c}_{1}(c_{0}+c_{2})/2c_{2}(c_{0}+3c_{2})\) is equal to \({\delta }=E-M_{0}={\sigma c}_{1}/(c_{0}+3c_{2})\) (for PVII \({\beta }_{1}=0\), hence \(c_{1}=0\) and \({\delta }=0\)). Thus creating the shifted influence function \(\psi (v-s)=\psi (\tilde{v})\) (with the shift \(s=-{\delta }\)) one can write

Such influence function is related to the following \(\hbox {PD{F}'PIV}\), which mode \(M_{0 }\) coincides with the origin (Elderton 1953; Wiśniewski 1987, 1989)

where: \(v_{M_0 ,a} =(v-M_0 )/a, a={\sigma z}/4, {\vartheta }={\gamma }_{1}(c_{0}^{2}-c_{2}^{2})/{zc}_{2}^{2},m=(c_{0}+3c_{2})/2 c_{2}, z=2(4 c_{0}c_{2}-c_{1}^{2})^{1/2}/c_{2}\). For such PDF one can write the objective function of ML-method as follows \(\rho (v)=m\ln (1+v_{M_0 ,a}^2 )+\vartheta {}\hbox {arctg}v_{M_0 ,a}^2 \). If \({\beta }_{1}=0\) and \(v_{M_0 ,a} =v_a =v/a\), then one can obtain \(\hbox {PD{F}'PVII}\) in the form \(f(v)\propto \)(1+\(v_{a}^{2})^{-m}\), and hence \({\rho }(v)=m\)ln(1+\(v_{a}^{2})\).

The weight function which corresponds to the influence function from Eq. (32) has the following form

Now let us find the point at which such function has the maximum. The derivative \(w^{\prime }_{\delta }(v)\) is equal to zero for such \(\tilde{v}=v+\delta =v+\sigma c_1 (c_0 +3c_2 )\), for which

and hence

If \({\beta }_{2}>\)3, then for each \(v \ne M_{0 }\) it holds that \(r_{\delta ,M_0 } (v)\le \lambda _{r,M_0 }^{*} \) and \({ w_\delta (v)< \lambda _w^{*}}\). Thus, \(M_{\mathcal {P}} \) estimates, in which the model \({\mathcal {P}}_{\mathrm{PD}(l)} =\{\hbox {PIV, PVII}:\sigma ,\beta _1 \ge 0,\beta _2 >3\}\) is applied, belong to the class of robust estimation, namely \(M_{\mathrm{PD}(l)} \in K_- \). The graphs of the functions which characterize that method are presented in Fig. 2. The graphs of the weight functions for the models \({\mathcal {P}}_{\mathrm{PD}(l)} =\{\hbox {PIV}:\sigma =1,\beta _1 >0,\beta _2 >3\}\) and \({\mathcal {P}}_{\mathrm{PD}(l)} =\{\hbox {PVII}:\sigma =1,\beta _1 =0,\beta _2 >3\}\) are presented in Figs. 3 and 4.

Weight functions of \(M_{\mathcal {P}} \) estimation and LS for \({\mathcal {P}}_{\mathrm{PD}(l)} =\{\hbox {PVII:}\, \sigma =1,\beta _1 =0,\, 3<\beta _2 \le 8\}{\mathop {\rightarrow }^{\beta _2 \rightarrow 3_+ }}{\mathcal {P}}_{\mathrm{ND}} =\{\mathrm{ND}\hbox {:}\, \sigma =1,\beta _1 =0,\beta _2 =3\}\)

For the sake of comparing, let us now consider the weight functions of \(M_{\mathcal {P}} \) estimation with the platykurtic Pearson distributions of type I and II. If the mode is at the origin, then \(\hbox {PD{F}'PI}\) can be written as follows (Elderton 1953; Wiśniewski 1987, 1989)

where: \(m_{1}=-2 m+{\vartheta }, m_{2}=-2 m-{\vartheta }\). The quantity \(z\), which appears in the parameter \({\vartheta }\) (see, Eq. (33)), can be written as \(z=2(c_{1}^{2}-4c_{0}c_{2})^{1/2}/c_{2}\). For \({\beta }_{1}=0\) one can write that \(f(v)\propto (1-v^{2}/a^{2})^{m}\). For the distributions PI and PII, the variable \(v\) can vary within the interval \(\langle a_{1},a_{2}\rangle \), with \(a_{1}={\sigma m}_{1}z/ 2(m_{1}+m_{2})\) and \(a_{2}=-a_{1}m_{2}/m_{1}\). If \({\beta }_{1}=0\), then \(c_{0}=4{\beta }_{2}, c_{1}=0, c_{2}=2{\beta }_{2}-6, {\vartheta }=0, m_{1}=m_{2}=m\) and \(z=2(-4c_{0}c_{2})^{1/2}/c_{2 }\) (for \({\beta }_{2}<3\) and acceptable values of the asymmetry coefficient \({\beta }_{1}\), the quantity \(c_{2 }\) has negative values). In such a case \(a_{1}={\sigma mz}/4 m={\sigma z}/\)4, then for the distribution PII we can write \(a_{1}=-a_{2}=-[2{\beta }_{2}/({\beta }_{2}-3)]^{1/2}\).

The weight functions of \(M_{\mathcal {P}} \) estimation with symmetric lepto- and platykurtic distributions \({\mathcal {P}}_{\mathrm{PD}(l,p)} =\{\hbox {PII, PVII:} \sigma =1, \beta _1 =0, \beta _2 \}\) is presented in Fig. 5.

For the distributions PI and PII it holds that \(\forall v \ne M_{0}: w_{\delta }(v) > {\lambda }_{w}^{*}\). Thus, \(M_{\mathcal {P}}\) estimates for \({\mathcal {P}}_{\mathrm{PD}(p)} =\{\hbox {PI, PII:}\, \sigma , \beta _1 \ge 0, \beta _2 <3\}\) belong to the class of the weak estimates \(K_+ \). Such models might be interesting in some particular estimation problems. However, such problems are beyond the scope and objective of this paper, thus the models in question will not be discussed here anymore.

3.2 Gram–Charlier series

Assume that the set of distributions \({\mathcal {P}}=\{f(v):\mu _k ,\;k=2,3,4,\ldots \}\subset W_v \) is not much different from the set \({\mathcal {P}}_{\mathrm{PD}(m)} =\{\hbox {ND}:\sigma ,\;\gamma _1 =0,\;\gamma _2 =0\}\). Then, the function \(f(v)\) can be replaced by the sum \(f(v) = f_{\mathrm{ND}}(v) + {\zeta }(v; {\mu }_{2}, {\mu }_{3}, \ldots )\), where the remainder term \({\zeta }(v)\) is expanded as a series of \(v\) (e.g. Elderton 1953). Here, we will apply an orthogonal expansion, namely the Gram–Charlier A series (Charlier 1906; Tomozawa 1974; Berberan-Santos 2007). A similar asymptotic expansion was also proposed by Edgeworth (see, e.g., Elderton 1953; Crawford and Walsh 1960; Kolassa 2006). The Gram–Charlier series (G–C) can be presented as polynomials which are orthogonal with respect to PDF’ND, namely (e.g. Hald 2007)

where \(h(\bar{{v}})_\infty =\sum _{j=0}^\infty {\lambda _j (-1)^{j}H_j (\bar{{v}})/j!} \) (for \(\bar{{v}}=v/\sigma )\) in which, \(H_j (\bar{{v}})\) is the Hermite polynomial of degree\(~j \) for which \(H_j^{(i)} (\bar{{v}})=jH_{j-1}^{(i-1)} (\bar{{v}})\). We usually apply the first few polynomials of G–C series. For example, if \(j\) = 4 then: \(H_0 (\bar{{v}})=1, H_1 (\bar{{v}})=\bar{{v}}, H_2 (\bar{{v}})=\bar{{v}}^{2}-1, H_3 (\bar{{v}})=\bar{{v}}^{3}-3\bar{{v}}, H_4 (\bar{{v}})=\bar{{v}}^{4}-6\bar{{v}}^{2}+3\).

If PDF in the set of distributions \({\mathcal {P}}=\{f(v):\sigma ,\;\gamma _1 ,\;\gamma _2 \}\) is replaced by the function from Eq. (38), then the component of the objective function of ML-method with the approximating model \(\tilde{\mathcal {P}}_{\mathrm{G-C}} =\{\tilde{f}_\infty (v):\sigma ,\;\gamma _1 ,\;\gamma _2 \}\) takes the following form

This is the basis for determination of the influence function of \(M_{\mathcal {P}} \) estimation as well as the rigor function of ML-method, hence

The orthogonality condition can be used to determine the coefficients \({\lambda }_{i}\), thus (see, e.g., Elderton 1953): \({\lambda }_{0} = 1, {\lambda }_{1} = {\lambda }_{2} = 0,{\lambda }_{3} = - {\gamma }_{1}, {\lambda }_{4} = {\gamma }_{2}, {\lambda }_{5} = - {\mu }_{5}/{\sigma }^{5} + 10{\mu }_{3}/{\sigma }^{3}\) etc. The terms of the G–C series for which \(j>4\) include the respective moments of the order higher than \(k=4\). In accordance with the paper assumptions (the distribution parameters are the moments of at most the fourth order), we will not consider such terms of the G–C series. Such limitation of the expansion leads to the following expression

and to the respective derivatives. Considering estimation of the parameters from the functional model y \({=}\) AX \({+}\) v, the series \(\tilde{f}(v)_4 =f_{\mathrm{ND}} (v)h(\bar{{v}})_4 \) can be sufficient in many cases, especially for small asymmetry and moderate excess. However, the G–C series that are discussed here are not expected to have the same properties as non-approximated PDFs (for example, for particular intervals of the variable \(v\), \(\tilde{f}(v)_4 \) might attain negative values). The functions that characterize \(M_{\mathcal {P}} \) estimation with the approximating model \(\tilde{\mathcal {P}}=\{\tilde{f}(v)_4 :\sigma ,\;\gamma _1 ,\;\gamma _2 \}\), which will be denoted as \(M_{\mathrm{G-C}}\), are presented in Figs. 6 and 7.

The influence and weight functions show some unfavorable features for bigger values of observation errors and growing excess (e.g., there is a point \(q\) from which the functions tend to the respective functions of LS-method). For some values of the excess, there are also points \({\lambda }_{w,1}^{*}\) and \({\lambda }_{w,2}^{*}\) at which the weight function has the local maxima (see, Fig. 7). When analyzing the weight function \(w(v)~=~{\psi }(v)/v\) which is related to the influence function (40), it is worth noting that for the non-zero excess, \(\lim _{v\rightarrow 0_- } w(v)=\lambda _{w,1}^{*} =-\infty \) and \(\lim _{v\rightarrow 0_+ } w(v)=\lambda _{w,2}^{*} =+\infty \) (fluctuation of the weight function). Thus, in the close neighborhood of the point \(v=0\), the weight function should be numerically corrected. For example, let \({\vert }v_{i}{\vert }~<~\epsilon _{v }\) then \(w(v_{i}):=w(v_{i-1})\), where \(\epsilon _{v }\) is the boundary point for acceptable fluctuation of the weight function. Note, that such discontinuity does not concern the rigor function \(r(v)\) (in the next section, \(M_{\mathcal {P}} \) estimates with the correction of the weight function are verified by the corresponding ML-estimates)

4 Numerical tests

The basic properties of \(M_{\mathcal {P}} \) estimation with the probabilistic model \({\mathcal {P}}_{\mathrm{PD(l)}} =\{\hbox {PIV,PVII:}\;\sigma ,\;\beta _1 ,\;\beta _2 >3\}(M_{\mathrm{PD(l)}}\) method) as well as with the approximating model \(\tilde{\mathcal {P}}_{\mathrm{G-C}} =\{\tilde{f}(v)_4 \}(M_{\mathrm{G-C} }\) method), are illustrated with an example of a simulated levelling network (Fig. 8). The network consists of the five fixed points and four new points (\(A, B, C, D)\) and 16 height differences which are measured. It is assumed that each height difference is measured \(s\) times (in the main tests \(s=4\)). Thus, the known matrix of coefficient can be written as \(\mathbf{A}=\mathbf{A}_0 \otimes \mathbf{1}_s\), where \(\mathbf{A}_0 \in R^{n,r}\) is a matrix related to single measurements of the height differences \(h_{i}\), and \(\mathbf{1}_s =[1_1 ,\ldots ,1_s ]^{\mathrm{T}}(\otimes \) is the Kronecker product). All point heights are assumed to be equal to zero, namely \(\mathbf{X}=[H_A ,H_B ,H_C ,H_D ]^{\mathrm{T}}=\mathbf{0}\), hence all measurement results are equal to respective simulated measurement errors.

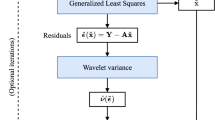

In accordance with the objective of the paper, the measurement errors are characterized by non-zero asymmetry and the excess. For small samples (for example, when \(n=64\)), sets which are simulated by the Gaussian random number generators show some anomalies in comparison with the assumed normal distribution. Such property concerns also the one-dimensional generator randn (\(n\), 1) (\(n \) is a number of the generated numbers) which is the component of MatLab. We are interested in anomalies which concern the asymmetry and the excess of measurement distribution. Thus, the simulation is to generate numbers \(v_{i}, i=1, \ldots , n\), with the application of the function in question (for the theoretical values of \(E(v)=0, {\sigma }=1\) and \({\gamma }_{1}=0, {\beta }_{2}=3\)). Then, for such generated set, one can compute the empirical moments \(\mathord {\mathrm{\scriptscriptstyle \smile }\over {E}} =n^{-1}\sum _{i=1}^n {v_i } , \mathord {\mathrm{\scriptscriptstyle \smile }\over {\mu }} _{{k}}=n^{-1}\sum _{i=1}^n {(v_i -\mathord {\mathrm{\scriptscriptstyle \smile }\over {E}} )^{k}} , k=2,3,4\), and hence the empirical values of the asymmetry coefficients \(\mathord {\mathrm{\scriptscriptstyle \smile }\over {\gamma }} _{{1}}=\mathord {\mathrm{\scriptscriptstyle \smile }\over {\mu }} _{3} /(\mathord {\mathrm{\scriptscriptstyle \smile }\over {\sigma }})^{3}, \mathord {\mathrm{\scriptscriptstyle \smile }\over {\beta }} _{{1}}=(\mathord {\mathrm{\scriptscriptstyle \smile }\over {\gamma }} _1)^{2}\) and the empirical value of the kurtosis \(\mathord {{\scriptscriptstyle \smile }\over {\beta }} _{{2}}= \mathord {{\scriptscriptstyle \smile }\over {\mu }} _4 /(\mathord {{\scriptscriptstyle \smile }\over {\sigma }} )^{4}\) and the excess \(\mathord {\mathrm{\scriptscriptstyle \smile }\over {\gamma }} _{{2}}=\mathord {\mathrm{\scriptscriptstyle \smile }\over {\beta }} _{{2}}-{3}\). To simplify the notation the empirical values will be denoted as the theoretical values, respectively. However, one should realize that the estimates which will be determined afterwards may be disturbed by the difference between theoretic and empiric value of the respective moments. \(M_{\mathcal {P}} \) estimates with the models \(\mathcal {P}_{\mathrm{PD(l)}} \) and \(\tilde{\mathcal {P}}_{\mathrm{G-C}} \) are compared to the neutral LS-estimates and to the robust Huber \(M\)-estimates (see, Eq. (27)). To compare the estimate, we will apply the root-mean-squared (for \({\hat{\mathbf{X}}}={\hat{\mathbf{X}}}_{\mathrm{PD(l)}} ,\;{\hat{\mathbf{X}}}_{\mathrm{G-C}} ,\;{\hat{\mathbf{X}}}_H )\)

To verify \(M_{\mathcal {P}} \) estimates from Eq. (16), they are compared with the respective ML-estimates which are computed on the basis of the algorithm in Eqs. (9) and (10).

The very important problem which concerns the Huber method (\(M_{H})\) is to choose the value of \( l \) that defines the interval \(\Delta \bar{{v}}=\langle {-l,l} \rangle \) for acceptable random errors \(\bar{{v}}\). It is especially significant in the case of asymmetric distributions. For example, if the distribution has a positive asymmetry coefficient, then some rather large positive errors should be acceptable as random ones (a right heavy tail which is acceptable), thus they should not be treated as gross errors. Figure 9 shows six chosen histograms of the simulated observations (such sets will be applied to show the properties of \(M_{\mathcal {P}} \) estimates). Such observations are not affected by gross errors, thus LS-estimates and \( M_{H }\) estimates of the parameter X should be equal to each other. The analyses, which are obtained for the vectors \(\mathbf{y}_1 \) and \(\mathbf{y}_3 \), show that the equality \({\hat{\mathbf{X}}}_{\mathrm{LS}} {=\hat{\mathbf{X}}}_{M_H } \) holds for \(l \ge 3\) (Table 1). Despite some disturbances of the vector \({\hat{\mathbf{X}}}_{M_H } \), the value \(l=2.5\), which is often chosen in practice, can also be acceptable (this value will be chosen to the numerical tests). The smaller values of \(l \) might lead to unacceptable \(M_{H}\) estimates of the parameter X (arbitrary reduction of the influence of some observations which is not justified by the probabilistic model).

The new methods are supposed to be robust against outliers thus they are compared with the method which belongs to the class of robust \(M\)-estimation. Tests were carried out for many simulated sets of observations (usually \(s=4\), \(n=64\)), including the sets which were also affected by gross errors. Mostly, it holds that \(\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{PD(l)}} } <\mathrm{RMS}_{{\hat{\mathbf{X}}}_{\mathrm{G-C}} } <\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{LS}} } \), and when gross errors occur \(\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{PD(l)}} } <\mathrm{RMS}_{{ \hat{\mathbf{X}}}_H } \) (in each case \(M_{\mathrm{PD(l)} }\) and \(M_{\mathrm{G-C} }\) estimates with the correction of the weight function for \(\epsilon _{v}~=~0.1{\sigma }\) were equal to the corresponding ML-estimates). Note that if the observation sets are free of gross errors and have small asymmetry then \({\hat{\mathbf{X}}}_{\mathrm{PD(l)}} { =\hat{\mathbf{X}}}_{\mathrm{G-C}} { =\hat{\mathbf{X}}}_{\mathrm{LS}} \) (regardless of the value of the kurtosis), which is in line with theoretical predictions. The detailed results of the tests for the simulated vectors \(\mathbf{y}_1 ,\ldots ,\mathbf{y}_6 \) are presented in Table 2. Gross errors of \(g=10\) and \(g=20\) affected the first measurement of the observation sets.

The \(M_{\mathrm{PD(l)}}\) estimates obtained are closer to the theoretical assumed values of the vector \(\mathbf{X=0}\) than LS- and \(M_{H }\) estimates. The approximating model \(\tilde{\mathcal {P}}_{\mathrm{G-C}} =\{\tilde{f}(v)_4 \}\) is not promising here, especially in the case of the observation sets which are affected by gross errors. This is due to the already mentioned properties of the influence function and the weight function of the method (for some values of \(v\), they both tend to the corresponding functions of the neutral LS-estimation). It is also worth noting that \(M_{\mathrm{PD(l)} }\) estimates are more robust than \(M_{H }\) estimates for \(l=2.5\). This becomes illuminating if one compares the ratios \(r_H =\mathrm{RMS}_{{ \hat{\mathbf{X}}}_H } /\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{LS}} } \) and \(r_{\mathrm{PD(l)}} =\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{PD(l)}} } /\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{LS}} } \), which are listed in Table 3. In one particular case, in which the gross error was relatively small, \(M_{H }\) estimate was worse than the conventional LS-estimate.

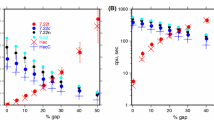

Now let us compare the estimates \(\hat{{\sigma }}_{0,\psi } \) of the standard deviation coefficient obtained for the methods compared here (their values are presented in Table 4). The results obtained are similar to the results of the estimation of the parameter vector of the functional model. Thus, in the case of \(M_{\mathrm{G-C} }\) method, the estimate \(\hat{{\sigma }}_{{0,\psi }}=[{ \hat{\mathbf{v}}}^{\mathrm{T}}\mathbf{w}(\mathbf{v}){ \hat{\mathbf{v}}}/(n-r)]^{1/2}\)is very similar to the non-robust estimate with the weight function \(\mathbf{w}(\mathbf{v})=\sigma ^{-2}\mathbf{I}_n \). On the other hand, the estimates obtained in \(M_{H }\) and \(M_{\mathrm{PD(l)} }\) methods are similar to each other. However, one should expect the larger values of the estimate for larger values of the gross error in the case of \(M_{\mathrm{PD(l)} }\) method (in comparison to the theoretical value \({\sigma }_{0}=1\)). To verify such tendency, another test was carried out. It was assumed a smaller observation set (\(s=2\), \(n=32\)) with the moderate asymmetry and excess (\({\sigma }=1.19, {\gamma }_{1}=-0.18, {\gamma }_{2}=0.18\)). Thus, we limited redundancy of the observations as well as the distribution anomalies. Such assumptions allow us to avoid blurring of the influence of gross errors on the estimation results. The gross errors of the values \(g\) = 10, \(g\) = 20, \(g\) = 40 and \(g\) = 80 affected the first measurement (as it was previously). The test results show that the estimate of the coefficient \({\sigma }_{0}\) obtained by applying \(M_{\mathrm{PD(l)} }\) method is a little bit worse than that obtained in the case of \(M_{H }\) method (see, Fig. 10). However, such difference is not growing with the increase of the value of the gross error.

Such specific properties of \(M_{\mathrm{PD(l)} }\) estimates (consideration of the known asymmetry and excess) seems substantial, however, in some particular cases it would be more important to make the estimate of X be highly robust (and hence also the estimate of the variance coefficient from Eq. (18)). \(M_{\mathrm{PD(l)} }\) estimate can be more robust if we increase artificially the value of the kurtosis. Thus, let us consider the previously used observation set for which \({\beta }_{2} = 3.18\) (\(s = 2\), \(n = 32\), \({\sigma } = 1.19\), \({\gamma }_{1} = -0.18\), \({\gamma }_{2} = 0.18\)), and the same values of the gross error. Then, one can obtain the results for which \(\mathrm{RMS}_{{\hat{\mathbf{X}}}_{\mathrm{PD(l)}}} \) and \(\hat{{\sigma }}_{0,\psi }\) are presented in Fig. 11 (for \(3.18 \le {\beta }_{2}~\le \) 6.00) and in Table 5, respectively.

5 Conclusions

The Pearson distributions of types IV and VII are regarded as probabilistic models of \(M_{\mathcal {P}} \) estimation which is discussed in the paper. Such variant of \(M_{\mathcal {P}} \) estimation is denoted as \(M_{\mathrm{PD(l)}}\). The platykurtic distributions of types I and II seem also theoretically interesting. However, application of those distributions as probabilistic models of observation errors should be justified convincingly, and the respective optimization procedure of \(M_{\mathrm{PD(p)} }\) estimation should be proposed. In the case of the probabilistic models with bounded domains, one should apply appropriate objective functions with constraints. In the theoretical part of the paper we paid attention only to the weight functions of \(M_{\mathcal {P}} \) estimations with such models. The distributions PIV and PVII, for which the values of the asymmetry coefficient and the excess are acceptable, were assumed to be an alternative for some distributions which belong to the set \(W_v \). We also considered that a non-Gaussian probability density function can be approximated by series, first of all by the Gram–Charlier series. The influence functions and the weight functions derived from the approximated PDF are the basis for \(M_{\mathcal {P}} \) estimation which is denoted as \(M_{\mathrm{G-C}}\).

Considering the influence and weight functions, both \(M_{\mathrm{PD(l)} }\) and \(M_{\mathrm{G-C} }\) estimation can be regarded as robust \(M\)-estimations. Thus, the estimates obtained by applying those methods (the estimates of the parameters of the functional model and the estimates of the variance coefficient) were compared with LS-estimates and the robust estimates of the Huber method (\(M_{H})\). The theoretical analysis show that \(M_{\mathrm{G-C} }\) formally belongs to the class of robust estimations, however, for the larger values of gross errors, the robustness of the method is lost. The conclusion is also confirmed by the numerical tests. It follows that \(M_{\mathrm{G-C} }\) estimation is only “a bit” robust against gross errors. For example, if the gross error is equal to \(g\) = 20, then the ratio \(\mathrm{RMS}_{{\hat{\mathbf{X}}}_{\mathrm{G-C}} } /\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{LS}} } \) is within the range 0.82 \(\div \) 1.07. Similar ration computed for \(M_{\mathrm{PD(l)} }\) estimates is within the range 0.11 \(\div \) 0.37. The results of \(M_{\mathrm{G-C} }\) estimation are also not promising for the sets which are not affected by gross errors (in comparison with \(M_{\mathrm{PD(l)} }\) estimates). In such case, the ratio \(\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{G-C}} } /\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{LS}} } \) is within the range 0.20 \(\div \) 0.83 (and 0.16 \(\div \) 0.76 for \(M_{\mathrm{PD(l)} }\) estimates). If the further terms of the G–C series were applied, one would obtain better results. However, in such a case the higher order moments, namely \({\mu }_{5}, {\mu }_{6 }\) etc. should be known. Such moments are rarely determined for sets of geodetic observations so far.

\(M_{\mathrm{PD(l)} }\) estimates are closer to the true values of the parameter \(\mathbf{X=0}\) than the corresponding LS-estimates in most cases which are presented in the paper (Sect. 4 shows several such examples, see Tables 2 and 3). This conclusion concerns sets affected by gross errors as well as the sets which are free of such errors. One should pay attention to robustness of \(M_{\mathrm{PD(l)} }\) estimates. Comparing those estimates with the Huber estimates (for rather rigorous value of \(l\) = 2.5), we can say that the ratio \(\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{PD(l)}} } /\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{LS}} } \)is smaller than \(\mathrm{RMS}_{{ \hat{\mathbf{X}}}_H } /\mathrm{RMS}_{{ \hat{\mathbf{X}}}_{\mathrm{LS}} } \). The tests presented show that for the gross error of \(g\) = 20 we obtained the ratio values within the range 0.18 \(\div \) 0.52 for \(M_{H } \) estimates and 0.11 \(\div \) 0.37 for \(M_{\mathrm{PD(l)} }\) estimates.

Estimation of the variance coefficients plays a major role in quality assessment of the adjustment results (Sect. 4 presents the values of the respective estimates). Robustness of the estimate \(\hat{{\sigma }}_{0,\psi }^2 \) seems to be important from a theoretical as well as a practical point of view. For the sets which are not affected by gross errors, the values of the estimates of the variance coefficient obtained for the methods compared are similar (see, Table 4 for \(g\) = 0). If gross errors occur, then the estimates of the coefficient \({\sigma }_{0}^{2}\) obtained in the case of \(M_{\mathrm{PD(l)} }\) method are a little bit larger than those for \(M_{H }\) estimation (however, they are always much smaller than non-robust estimates obtained with application of the weight function \(\mathbf{w}(\mathbf{v})=\sigma ^{-2}\mathbf{I}_n )\). The special tests show that the estimates \(\hat{{\sigma }}_{0,\psi }^2 \) obtained in \(M_{\mathrm{PD(l)} }\) method do not get worse with the growing value of the gross error.

The robustness of \(M_{\mathrm{PD(l)} }\) estimates is related to value of the kurtosis. Remember that \(M_{\mathcal {P}} \) method is a weak estimation in the case of \({\beta }_{2}<\) 3 (platykurtic distributions) and the probabilistic model \(\mathcal {P}_{\mathrm{PD(p)}} =\{\hbox {PI},\;\hbox {PII}\}\). For \({\beta }_{2}\) = 3 and the model \({\mathcal {P}}_{\mathrm{PD(m)}} \equiv {\mathcal {P}}_{\mathrm{ND}} \) (LS-method), it becomes a neutral estimation. Robustness of the method increases with the growing value of the kurtosis (if the asymmetry coefficient is the same). Thus, if \({\beta }_{1}\) = 0, then \(M_{\mathrm{PD(l)} }\) estimation can be regarded as a robust \(M\)-estimation which robustness is adjusted by \({\beta }_{2}\). In such a case the kurtosis becomes only a parameter which controls the robustness of the method (pseudo-kurtosis); hence it does not have to be related to the real values of the second and fourth moments. In such an approach, the Gaussian distributions with an unacceptable admixture are replaced with the Pearson distributions with the equivalent kurtosis which is artificially overestimated. Such replacement of the distribution is just formal, however, it might be of importance to statistical interpretation of the results (for example, it can be done by applying appropriate statistical tests, which requires some additional theoretical researches and analyses). In respect of robust \(M\)-estimation, such an approach is related to changes in the influence function and the weight function which are easy to be planned.

References

Andreassen TW, Lorentzen BG, Olsson H (2006) The impact of non-normality and estimation methods in SEM on satisfaction research in marketing. Qual Quant 40:39–58

Baarda W (1968) A test procedure for use in geodetic networks. Neth Geod Comm Publ Geod, New Ser 2(5):27–55

Berberan-Santos MN (2007) Expressing a probability density function in terms of another PDF: a generalized Gram–Charlier expansion. J Math Chem 42:585–594

Cen M, Li Z, Ding X, Zhuo J (2003) Gross error diagnostics before least squares adjustment of observations. J Geod 77:503–513

Charlier CVL (1906) Researches into the theory of probability. Hâkan Ohlsson, Lund

Crawford JR, Walsh JE (1960) Empirical examination of Edgeworth series. Ann Inst Stat Math 1:13–26

Daniels HE (1954) Saddlepoint approximations in statistics. Ann Math Stat 25(4):631–650

Duchnowski R (2011) Robustness of the strategy for testing leveling mark stability based on rank tests. Surv Rev 43:687–699

Duchnowski R (2013) Hodges–Lehmann estimates in deformation analysis. J Geod 87:873–884

Duchnowski R, Wiśniewski Z (2011) Estimation of the shift between parameters of functional models of geodetic observations by applying M\(_{split}\) estimation. J Surv Eng 138:1–8

Dumalski A, Wiśniewski Z (1994) Concept of adjustment method with application of Edgeworth series. Polish Acad Sci Geod Cartogr 43:219–237

Dzhun’ IV (1969) Pearson VII type distribution in errors of latitude oscillation observations. Astrom Astrofiz 2:101–115

Dzhun’ IV (1991) Pearson VII type distribution of errors of HIS laser observations. Kinem Fiz Nebes Tel 7:82–91

Dzhun’ IV (1992) Pearson distribution of type VII used to approximate observation errors in astronomy. Meas Tech 35:277–282

Dzhun’ IV (2011) Method for diagnostics of mathematical models in theoretical astronomy and astrometry. Kinemat Phys Celest Bodies Math Proc Astron Data 27:260–264

Dzhun’ IV (2012) What should be the observation–calculation residuals in modern astrometric experiments. Kinemat Phys Celest Bodies Math Proc Astron Data 28:72–80

Elderton WP (1953) Frequency curves and correlation. Cambridge University Press, London

Ferrari D, Yang Y (2010) Maximum L\(_{q}\)-likelihood estimation. Ann Stat 38:753–783

Friori AM, Zenga M (2009) Karl Pearson and the origin of kurtosis. Int Stat Rev 77:40–50

Gleinsvik P (1971) Zur Leistungsfähigkeit der Methode der kleinsten Quadrate bei Ausgleichung nicht normalverteilter Beobachtungen. Theoretische Untersuchungen. ZfV, Heft 6:224–233

Gleinsvik P (1972) Zur Leistungsfähigkeit der Methode der kleinsten Quadrate bei Ausgleichung nicht normalverteilter Beobachtungen. Experimentelle Prüfung. ZfV, Heft 9:385–393

Gui Q, Zhang J (1998) Robust biased estimation and its applications in geodetic adjustment. J Geod 72:430–435

Hald A (2007) A history of parametric statistical inference from Bernoulli to Fisher, 1713–1935. Sources and studies in the history of mathematics and physical sciences. Springer Science+Business Media, LLC, pp 111–129

Hampel FR (1974) The influence curve and its role in robust estimation. J Am Stat Assoc 69:383–397

Hampel FR, Ronchetti EM, Rousseuw PJ, Stahel WA (1986) Robust statistics. The approach based on influence functions. Wiley, New York

Hodges JL, Lehmann EL (1963) Estimates of location based on rank tests. Ann Math Stat 34:598–611

Hu X, Huang C, Liao X (2001) A new solution assessment approach and its application to space geodesy data analysis. Celest Mech Dyn Astron 81:265–278

Huang Y, Mertikas SP (1995) On the design of robust regression estimators. Manuscr Geod 20:145–160

Huber PJ (1981) Robust statistics. The approach based on influence functions. Wiley, New York

Jaynes ET (1957) Information theory and statistical mechanics. Phys Rev 106(4):620–630

Johnson NL (1949) Systems of frequency curves generated by methods of translation. Biometrika 36:149–176

Jones GA, Jones JM (2000) Information and coding theory. Springer undergraduate mathematics series. Springer, Heidelberg

Kadaj R (1988) Eine verallgemeinerte Klasse von Schätzverfahren mit praktischen Anwendungen. ZfV 113:157–166

Kamiński W, Wiśniewski Z (1994) The method of growing rigor for the adjustment of geodetic observations contaminated by gross errors. Manuscr Geod 19:55–61

Kasietczuk B (1997) Estimation of asymmetry and kurtosis coefficients in the process of geodetic network adjustment by the least-squares method. J Geod 7:131–136

Klein A, Moosbrugger H (2000) Maximum likelihood of latent interaction effects with the LMS method. Psychometrica 65:457– 474

Koch KR (1990) Bayesian inference with geodetic applications. Springer, Berlin

Koch KR (1996) Robuste Parameterschätzung. Allg Vermess Nach 103:1–18

Kolassa JE (2006) Series approximation methods in statistics. Lecture Notes on Statistics, vol 88. Spinger, Berlin, pp 31–62

Krarup T, Kubik K (1983) The Danish method; experience and philosophy. Deutsche Geodätische Kommission, München, Reihe A, Heft 7:131–134

Kukuča J (1967) Some problems in estimating the accuracy of a measuring method. Studia Geoph et Geod 11:21–33

Luo X, Mayre M, Heck B (2011) On the probability distribution of GNSS carrier phase observations. GPS Solut 15:369–379

Marshall J (2002) L\(_{1}\)-norm pre analysis measures for geodetic networks. J Geod 76:334–344

Mooijaart A (1985) Factor analysis for non-normal variables. Psychometrika 50:323–342

Mukhopadhyay N (2005) Dependence or independence of the sample mean and variance in non -IID or non-normal cases and the role of some tests of independence. Recents advances in applied probability. Springer Science + Business Media, Inc., Book Chapter, pp 397–426

Nagahara Y (2007) A method of calculating the downside risk by multivariate nonnormal distributions. Asia Pac Finan Mark 15:175–184

Nagahara Y (2011) Using nonnormal distributions to analyze the relationship between stock returns in Japan and US. Asia Pac Finan Mark 18:429–443

Pearson K (1920) The fundamental problem of practical statistics. Statistics 13:1–16

Prószyński W (1997) Measuring the robustness potential of the least-squares estimation: geodetic illustration. J Geod 71:652–659

Romanowski M, Green E (1983) Reflexions on the kurtosis of samples of errors. Bull Géod 57:62–82

Serfling R (1980) Approximation theorems of mathematical statistics. Wiley, New York

Teunissen PJG (1990) Nonlinear least squares. Manuscr Geod 15:137–150

Tomozawa Y (1974) Quasinormal distributions and expansion at the mode. J Stat Phys 11:195–204

Wassef AM (1959) Note of the application of mathematical statistics to the analysis of levelling errors. Bull Géod 52:19–26

Winkelmann R, Boes S (2006) Analysis of microdata. Springer, Berlin

Winter R (1978) Theoretische und praktische untersuchungen zu modulierten Normalverteilungen nach Romanowski. Wissenschaftliche Arbeiten der Lehrstühle für Geodäsie, Photogrammetrie und Kartographie an der Technischen Universität Hannover

Wiśniewski Z (1985) The effect of the asymmetry of geodetic observation error distribution on the results of adjustment by least squares method. Polish Acad Sci Geod Cartogr 34:11–21

Wiśniewski Z (1987) Method of geodetic network adjustment in extend to probabilistic measurement error properties. Sci Bull Staszic Acad Min Metall Geod 95:73–88

Wiśniewski Z (1989) The RP method. Part I-III (in Polish). Geodezja i Kartografia 38:25–69

Wiśniewski Z (1991) Comparative categories in analysis of the methods of geodetic observations adjustment. Sci Bull Staszic Acad Min Metall Geod 112:41–55

Wiśniewski Z (1995) Moment vectors and their estimation after least squares adjustment. Bollettino di Geodesia e Scienze Affini 54:373–386

Wiśniewski Z (1996) Estimation of the third and fourth order central moments of measurement errors from sums of powers of least squares adjustment residuals. J Geod 70:256–262

Wiśniewski Z (2009) Estimation of parameters in a split functional model of geodetic observations (\(M_{split}\) estimation). J Geod 83:105–120

Wiśniewski Z (2010) \(M_{split(q) }\)estimation: estimation of parameters in a multi split functional model of geodetic observations. J Geod 84:355–372

Xi Z, Hu C, Youn BD (2012) A comparative study of probability estimation methods for reliability analysis. Struct Multidiscip Optim 45:33–52

Yang Y (1994) Robust estimation for dependent observations. Manuscr Geod 19:10–17

Yang Y (1997) Estimators of covariance matrix at robust estimation based on influence functions. ZfV 122:166–174

Yang Y (1999) Robust estimation of geodetic datum transformation. J Geod 73:268–274

Yang Y, Cheng MK, Shum CK, Tapley BD (1999) Robust estimation of systematic errors of satellite laser range. J Geod 73:345–349

Yang Y, Song L, Xu T (2002) Robust estimation for correlated observations based on bifactor equivalent weights. J Geod 76:353–358

Youcai H, Mertikas SP (1995) On the design of robust regression estimators. Manuscr Geod 20:145–160

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Wiśniewski, Z. M-estimation with probabilistic models of geodetic observations. J Geod 88, 941–957 (2014). https://doi.org/10.1007/s00190-014-0735-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00190-014-0735-7