Abstract

We derive a simple criterion that ensures uniqueness, Lipschitz stability and global convergence of Newton’s method for the finite dimensional zero-finding problem of a continuously differentiable, pointwise convex and monotonic function. Our criterion merely requires to evaluate the directional derivative of the forward function at finitely many evaluation points and for finitely many directions. We then demonstrate that this result can be used to prove uniqueness, stability and global convergence for an inverse coefficient problem with finitely many measurements. We consider the problem of determining an unknown inverse Robin transmission coefficient in an elliptic PDE. Using a relation to monotonicity and localized potentials techniques, we show that a piecewise-constant coefficient on an a-priori known partition with a-priori known bounds is uniquely determined by finitely many boundary measurements and that it can be uniquely and stably reconstructed by a globally convergent Newton iteration. We derive a constructive method to identify these boundary measurements, calculate the stability constant and give a numerical example.

Similar content being viewed by others

1 Introduction

New technologies for medical imaging, non-destructive testing, or geophysical exploration are often based on mathematical inverse coefficient problems, where the coefficient of a partial differential equation is to be reconstructed from (partial) knowledge of its solutions. A prominent example is the emerging technique of electrical impedance tomography (EIT), cf. [1, 12, 14, 23, 24, 31, 62, 63, 83, 86, 88, 90, 95, 101, 103], and the references therein for a broad overview. Inverse coefficient problems are usually highly non-linear and ill-posed, and uniqueness and stability questions, as well as the design and the theoretical study of reconstruction algorithms are extremely challenging topics in theoretical and applied research.

In practical applications, only finitely many measurements can be made, the unknown coefficient has to be parametrized with finitely many variables (e.g., by assuming piecewise-constantness on a given partition), and physical considerations typically limit the unknown coefficient to fall between certain a-priori known bounds. Thus, after shifting and rescaling, a practical inverse coefficient problem can be put in the form of finding the zero \(x\in [0,1]^n\) of a non-linear function \(F:\ \mathbb {R}^n\rightarrow \mathbb {R}^m\),

It is of utmost importance to determine what measurements make this finite-dimensional inverse (or zero-finding) problem uniquely solvable, to evaluate the stability of the solution with respect to model and measurement errors, and to design convergent numerical reconstruction algorithms.

In this paper, we will study this problem under the assumption that F is a pointwise monotonic and convex function, which often arises in elliptic inverse coefficient problems (cf. our remarks on the end of this introduction). We will derive a simple criterion that implies the existence of a zero, and the injectivity of F in a certain neighborhood of \([0,1]^n\). It also allows us to quantify the Lipschitz stability constant of the left inverse \(F^{-1}\) and, for \(n=m\), ensures global convergence of Newton’s method. The criterion is easy-to-check as it merely requires to evaluate the directional derivative \(F'(z)d\) for a finite number of evaluation points z and finitely many directions d.

We then show how our result can be applied to the inverse problem of determining a Robin transmission coefficient in an elliptic PDE from the associated Neumann-to-Dirichlet operator that is motivated by EIT-based corrosion detection [53]. We assume that the Robin coefficient is piecewise-constant on a-priori known partition of the interface into n parts, and that we a-priori know upper and lower bounds for the Robin coefficient’s values. We then show how to construct n boundary measurements that uniquely determine the n unknown Robin values, and for which a standard Newton method globally converges. We also quantify the stability of the solution with respect to errors, and numerically demonstrate our result on a simple setting.

Let us give some references to put our result in the context of existing literature. The arguably most famous elliptic inverse coefficient problem is the Calderón problem [27, 28], that arises in EIT, cf. [10, 30, 34, 44, 72, 79,80,81, 89, 98] for an incomplete list of seminal breakthroughs for the uniqueness question in an infinite-dimensional setting.

Several recent works have addressed uniqueness and Lipschitz stability questions for the problem of determining finitely many parameters (e.g., by assuming piecewise-constantness) from finitely or infinitively many measurements in inverse coefficient problems, cf., [2,3,4,5,6,7, 11, 15, 18,19,20,21,22, 32, 48, 51, 53, 68, 69, 71, 76, 77, 87, 93, 96, 104, 105]. To the knowledge of the author, the results presented herein, is the first on explicitly calculating those measurements that uniquely determine the unknown parameters, and, together with [53], it is the first result to explicitly calculate the Lipschitz constant for a given setting. Moreover, we obtain the unique existence of a solution also for noisy measurements, so that Lipschitz stability also yields an error estimate in the case of noise.

Reconstruction algorithms for inverse coefficient problems typically rely on Newton-type approaches or on globally minimizing a non-convex regularized data-fitting functional. Both approaches require an initial guess close to the unknown solution, so that most algorithms are only known to converge locally. For the sake of rigor, it should be noted at this point, that the difficulty in this context is not to construct globally convergent methods but computationally feasible globally convergent methods. To elaborate on this point, let us consider a minimization problem with a continuous functional \(f:\ I\rightarrow \mathbb {R}\) over a finite-dimensional interval \(I\subseteq \mathbb {R}^n\). A trivial optimization algorithm is to choose a countable dense subset \((q_m)_{m\in \mathbb {N}}\subset I\), and setting \(x_0:=q_0\),

Then, obviously, any accumulation point of \((x_m)_{m\in \mathbb {N}}\) is a global minimizer of f. But this type of approach requires a completely unfeasible amount of function evaluations and is thus usually disregarded in practice. Note, however, that together with estimates on the convergence range of an iterative algorithm as in the recent preprint of Alberti and Santacesaria [3], and the progress of parallel computing power, these type of approaches may become feasible at least for lower dimension numbers.

To obtain (computationally feasible) globally convergent algorithms, quasi-reversibility and convexification ideas have been developed in the the seminal work of Klibanov et al., cf., e.g. [16, 17, 74, 75]. Alternatively, one can use very specific properties of the considered problem, cf., e.g., the global convergence result of Knudsen, Lassas, Mueller and Siltanen [78] for the d-bar method for EIT, or resort to only reconstructing the shape of an anomaly, cf. [54, 55, 58, 59, 64, 66, 67] for some globally convergent approaches. The theory developed in this work shows that, somewhat surprisingly, global convergence holds for the standard zero-finding Newton method, when the right measurements are being used, and this also implies fast quadratic convergence. On the other hand, so far, our theory does not allow to utilize more measurements than unknowns or to explicitly add regularization, which would be advantageous in practical applications. Moreover, the calculated measurements tend to become more or more oscillatory for higher dimensional problems. Hence, so far, we can only expect our approach to be practically feasible for relatively few unknowns where discretization sufficiently regularizes the ill-posed problem.

On the methodological side, this work builds upon [48, 53] and stems from the theory of combining monotonicity estimates with localized potentials, cf. [9, 13, 25, 35, 43, 45, 46, 50,51,52, 56,57,58,59, 61, 94] for related works, and [29, 37,38,39,40, 49, 54, 55, 60, 85, 97, 99, 100, 102, 106] for practical monotonicity-based reconstruction methods. In this work, the monotonicity and convexity of the forward function is based on the so-called monotonicity relation which goes back to Ikehata, Kang, Seo, and Sheen [65, 70]. The existence of measurements that fulfill the extra criterion on the directional derivative evaluations follows from localized potentials arguments [41]. Hence, it might be possible to extend the theory developed herein to other elliptic inverse coefficient problems where monotonicity and localized potentials results are also available. Note however, that this extension is not obvious since the localized potentials results for the herein considered Robin transmission problem are stronger than those known for other coefficient problems such as EIT.

Finally, it should be noted, that the monotonicity and localized potentials techniques evolved from the factorization method [26, 42, 47, 73, 82], and that global convergence for Newton’s method for finite-dimensional zero-finding problems is a classical result for pointwise convex functions that are inverse monotonic (also called Collatz monotone [33]), cf., e.g., the book of Ortega and Rheinboldt [91, Thm. 13.3.2]. Such problems arise, e.g., in solving non-linear elliptic PDEs. Roughly speaking, one might be tempted to say, that elliptic forward coefficient problems lead to inverse monotonic convex function, and inverse elliptic coefficient problems lead to forward monotonic convex functions. Our extra criterion on the directional derivative evaluations allows us to write our forward monotonic function as an affine transformation of an inverse monotonic function in a certain region and (together with some technical arguments to ensure the iterates staying in this region), this is the major key in proving our global convergence result.

The paper is organized as follows. In Sect. 2, we prove uniqueness, stability and global convergence of the Newton method for continuously differentiable, pointwise convex and monotonic functions under a simple extra condition on the directional derivatives. In Sect. 3, we apply this result to an inverse Robin coefficient problem, and show how to determine those measurements that uniquely and stably determine the unknown coefficient with a desired resolution via a globally convergent Newton iteration. We also give a numerical example in Sect. 3. Throughout this paper, we take the somewhat lengthy, but hopefully reader-friendly approach of first presenting less technical intermediate results to motivate our approach.

2 Uniqueness, stability and global Newton convergence

We consider a continuously differentiable, pointwise convex and monotonic function

where \(n,m\in \mathbb {N}\), \(m\ge n \ge 2\), and U is a convex open set. In this section, we will derive a simple criterion that implies injectivity of F on a multidimensional interval. The criterion also allows us to estimate the Lipschitz stability constant of the left inverse \(F^{-1}\) and, for \(n=m\), ensures global convergence of Newton’s method.

Remark 1

Throughout this work, ”\(\le \)” is always understood pointwise for finite-dimensional vectors and matrices, and \(x\not \le y\) denotes the converse, i.e., that \(x-y\) has at least one positive entry.

Monotonicity and convexity are understood with respect to this pointwise partial order, i.e., \(F:\ U\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^m\) is monotonic if

and F is convex if

We also say that a function F is anti-monotone if \(-F\) is monotonic.

For continuously differentiable functions, it is easily shown that monotonicity is equivalent to

and thus equivalent to \(F'(x)\ge 0\). Convexity is known to be equivalent to

cf., e.g., [91, Thm. 13.3.2]. All the proofs in this section use the monotonicity and convexity assumption in the form (1) and (2).

Throughout this work, we denote by \(e_j\in \mathbb {R}^n\) the jth unit vector in \(\mathbb {R}^n\), \(\mathbb {1}:=(1,1,\ldots ,1)^T\in \mathbb {R}^n\), and \(e_j':=\mathbb {1}-e_j\). \(I_n\in \mathbb {R}^{n\times n}\) denotes the n-dimensional identity matrix, and \(\mathbb {1} \mathbb {1}^T\in \mathbb {R}^{n\times n}\) is the matrix containing 1 in all of its entries.

2.1 A simple criterion for uniqueness and Lipschitz stability

Before we state our result in its final form in Sect. 2.3, we derive two weaker (and less technical) results that motivate our arguments and may be of independent interest. We first show a simple criterion that yields injectivity of F and allows us to estimate the Lipschitz stability constant of its left inverse \(F^{-1}\).

Theorem 1

Let \(F:\ U\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^m\), \(m\ge n\ge 2\), be a continuously differentiable, pointwise convex and monotonic function on a convex open set U containing \([-1,3]^n\). If, additionally,

then the following holds:

-

(a)

F is injective on \([0,1]^n\).

-

(b)

\(F'(x)\in \mathbb {R}^{m\times n}\) is injective for all \(x\in [0,1]^n\).

-

(c)

With

$$\begin{aligned} L:=2 \left( \min _{j=1,\ldots ,n}\ \max _{k=1,\ldots ,m} e_k^T F'(-e_j+ 3e_j') \left( e_j - 3e_j'\right) \right) ^{-1}>0, \end{aligned}$$(4)we have that for all \(x,y\in [0,1]\)

$$\begin{aligned} \Vert x-y\Vert _\infty \le L \Vert F(x)-F(y)\Vert _\infty , \quad \text { and } \quad \Vert F'(x)^{-1}\Vert _\infty \le L, \end{aligned}$$where \(F'(x)^{-1}\in \mathbb {R}^{n\times m}\) denotes the left inverse of \(F'(x)\in \mathbb {R}^{m\times n}\).

To prove Theorem 1, we will first formulate an auxiliary lemma, which will also be used in our more technical results. Note that assumption (5) in the following lemma simply means that a row permutation of the non-negative matrix \(F'(x)\in \mathbb {R}^{m\times n}\) is strictly diagonally dominant in its first n rows.

Lemma 1

Let \(F:\ U\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^m\), \(m\ge n\ge 2\), be continuously differentiable, pointwise convex and monotonic on a convex open set U, and let \(L>0\).

-

(a)

If \(x\in U\) fulfills

$$\begin{aligned} \max _{k=1,\ldots ,m} e_k^T F'(x) \left( e_j - e_j'\right) \ge L^{-1} \quad \text { for all } j\in \{1,\ldots ,n\}, \end{aligned}$$(5)then \(F'(x)\in \mathbb {R}^{m\times n}\) is injective, and its left inverse fulfills \( \Vert F'(x)\Vert _\infty ^{-1}\le L\).

-

(b)

If, additionally, \(y\in U\) also fulfills (5), then

$$\begin{aligned} \Vert x-y\Vert _\infty \le L \Vert F(x)-F(y)\Vert _\infty . \end{aligned}$$

Proof

-

(a)

For all \(0\ne d \in \mathbb {R}^n\), at least one of the entries of \(\frac{d}{ \Vert d\Vert _\infty }\) must be either 1 or \(-1\), so that there exists \(j\in \{1,\ldots ,n\}\) with either

$$\begin{aligned} \frac{d}{ \Vert d\Vert _\infty }\ge e_j-e_j', \quad \text { or } \quad \frac{d}{ \Vert d\Vert _\infty }\le -e_j+e_j'. \end{aligned}$$We thus obtain from the monotonicity assumption (1) that either

$$\begin{aligned} F'(x)\frac{d}{ \Vert d\Vert _\infty }\ge F'(x)(e_j-e_j'), \quad \text { or } \quad F'(x)\frac{-d}{ \Vert d\Vert _\infty }\ge F'(x)(e_j-e_j'). \end{aligned}$$In both cases, it follows from (5) that

$$\begin{aligned} \frac{ \Vert F'(x)d\Vert _\infty }{ \Vert d\Vert _\infty }\ge \max _{k=1,\ldots ,m} e_k^T F'(x) \left( e_j - e_j'\right) \ge L^{-1}. \end{aligned}$$This proves injectivity of \(F'(x)\) and the bound on its left inverse.

-

(b)

Likewise, for \(x\ne y\), either

$$\begin{aligned} \frac{x-y}{ \Vert y-x\Vert _\infty }\ge e_j-e_j', \quad \text { or } \quad \frac{y-x}{ \Vert x-y\Vert _\infty }\ge e_j-e_j', \end{aligned}$$so that by monotonicity (1) and assumption (5), either

$$\begin{aligned} \max _{k=1,\ldots ,m} e_k^T F'(x)\frac{y-x}{ \Vert x-y\Vert _\infty } \ge L^{-1}, \text { or } \max _{k=1,\ldots ,m} e_k^T F'(y)\frac{x-y}{ \Vert x-y\Vert _\infty } \ge L^{-1}. \end{aligned}$$By convexity (2), it then follows that

$$\begin{aligned} \Vert F(y)-F(x)\Vert _\infty&= \max _{k=1,\ldots ,m} |e_k^T (F(y)-F(x))|\\&= \max _{k=1,\ldots ,m} \max \{ e_k^T (F(y)-F(x)), e_k^T (F(x)-F(y)) \}\\&\ge \max _{k=1,\ldots ,m} \max \{ e_k^T F'(x)(y-x), e_k^T F'(y)(x-y) \}\\&\ge \Vert y-x\Vert _\infty L^{-1}. \end{aligned}$$

\(\square \)

We can now prove Theorem 1 with Lemma 1.

Proof of Theorem 1

Let \(j\in \{1,\ldots ,n\}\). Writing

we have that

Thus we deduce from (1) and (2) that for all \(x\in [0,1]^n\)

With the definition of L in (4), this shows that

so that (a), (b) and (c) follow from Lemma 1. \(\square \)

2.2 A simple criterion for global convergence of the Newton iteration

We will now show how to ensure that a convex monotonic function F has a unique zero, and that the Newton method globally converges against this zero.

Theorem 2

Let \(F:\ U\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^n\), \(n\ge 2\), be continuously differentiable, pointwise convex and monotonic on a convex open set U. If \([-2,n(n+3)]^n\subset U\), and

with

then the following holds:

-

(a)

F is injective on \([-1,n]^n\), \(F'(x)\) is invertible for all \(x\in [-1,n]^n\), and for all \(x,y\in [-1,n]^n\)

$$\begin{aligned} \Vert x-y\Vert _\infty \le L \Vert F(x)-F(y)\Vert _\infty , \quad \text { and } \quad \Vert F'(x)^{-1}\Vert _\infty \le L, \end{aligned}$$(8)where

$$\begin{aligned} L:=(n+2) \left( \min _{j=1,\ldots ,n}\ \max _{k=1,\ldots ,n} e_k^T F'(z^{(j)})d^{(j)}\right) ^{-1}>0. \end{aligned}$$(9) -

(b)

If, additionally, \(F(0)\le 0\le F(\mathbb {1})\), then there exists a unique

$$\begin{aligned} {\hat{x}}\in \left( \textstyle -\frac{1}{n-1},1+\frac{1}{n-1}\right) ^n\quad \text { with } \quad F({\hat{x}})=0, \end{aligned}$$and this is the only zero of F in \([-1,n]^n\). The Newton iteration sequence

$$\begin{aligned} x^{(k+1)}:=x^{(k)}- F'(x^{(k)})^{-1}F(x^{(k)})\quad \text {with initial value}\, x^{(0)}:=\mathbb {1} \end{aligned}$$(10)is well defined (i.e., \(F'(x^{(k)})\) is invertible in each step) and converges against \({\hat{x}}\). Furthermore, for all \(k\in \mathbb {N}\), \(x^{(k)}\in (-1,n)^n\), and

$$\begin{aligned} 0\le M{\hat{x}}\le M x^{(k+1)}\le M x^{(k)}\le Mx^{(0)}=(n+1)\mathbb {1}, \end{aligned}$$where \(M:=\mathbb {1}\mathbb {1}^T+ I_n\in \mathbb {R}^{n\times n}\). The rate of convergence of \(x_k\rightarrow {\hat{x}}\) is superlinear. If \(F'\) is locally Lipschitz, then the rate of convergence is quadratic.

To prove Theorem 2 we will first show the following lemma.

Lemma 2

Under the assumptions and with the notations of Theorem 2, the following holds:

-

(a)

For all \(x\in [-1,n]^n\),

$$\begin{aligned} \max _{k=1,\ldots ,n} e_k^T F'(x)(e_j-n e_j')\ge L^{-1}. \end{aligned}$$ -

(b)

F is injective on \([-1,n]^n\), \(F'(x)\in \mathbb {R}^{n\times n}\) is invertible for all \(x\in [-1,n]^n\), and, for all \(x,y\in [-1,n]^n\),

$$\begin{aligned} \Vert x-y\Vert _\infty \le L \Vert F(x)-F(y)\Vert _\infty , \quad \text { and } \quad \Vert F'(x)^{-1}\Vert _\infty \le L. \end{aligned}$$ -

(c)

For all \(x\in [-1,n]^n\), and all \(0\ne y\in \mathbb {R}^n\)

$$\begin{aligned} F'(x)y\ge 0 \quad \text { implies } \quad \max _{j=1,\ldots ,n} y_j= \Vert y\Vert _\infty \ \text { and } \ \min _{j=1,\ldots ,n} y_j> -\frac{1}{n} \Vert y\Vert _\infty . \end{aligned}$$ -

(d)

M is invertible, \(M^{-1}=I_n-\frac{1}{n+1}\mathbb {1} \mathbb {1}^T\). For all \(x\in [-1,n]^n\), and \(y\in \mathbb {R}^n\)

$$\begin{aligned} F'(x)y\ge 0 \quad \text { implies } \quad My\ge 0, \end{aligned}$$and thus \(M F'(x)^{-1}\ge 0\).

Proof

-

(a)

Let \(j\in \{1,\ldots ,n\}\). Using \(z^{(j)}=-2 e_j + n(n+3) e_j'\), we have that for all \(x\in [-1,n]^n\)

$$\begin{aligned} d^{(j)}=e_j - (n^2+3n+1) e_j' \le x-z^{(j)}\le (n+2)e_j - n(n+2)e_j' \end{aligned}$$and thus it follows from monotonicity (1) and convexity (2) that

$$\begin{aligned} F'(x) \left( e_j - n e_j'\right)&=\frac{1}{n+2} F'(x) \left( (n+2)e_j - n(n+2)e_j'\right) \\&\ge \frac{1}{n+2} F'(x) \left( x - z^{(j)}\right) \ge \frac{1}{n+2} \left( F(x) - F(z^{(j)}) \right) \\&\ge \frac{1}{n+2} F'(z^{(j)}) \left( x - z^{(j)}\right) \ge \frac{1}{n+2} F'(z^{(j)}) d^{(j)}, \end{aligned}$$which proves (a) using the definition of L in (9).

-

(b)

Since (a) implies a fortiori that for all \(x\in [-1,n]^n\)

$$\begin{aligned} \max _{k=1,\ldots ,n} e_k^T F'(x)(e_j- e_j')\ge L^{-1}, \end{aligned}$$the assertion (b) follows from Lemma 1.

-

(c)

Let \(x\in [-1,n]^n\), and \(0\ne y\in \mathbb {R}^n\). If there exists an index \(j\in \{1,\ldots ,n\}\) with \(y_j\le -\frac{1}{n} \Vert y\Vert _\infty \), then \(y\le -\frac{1}{n} \Vert y\Vert _\infty e_j + \Vert y\Vert _\infty e_j'\), so that

$$\begin{aligned} - \frac{n}{ \Vert y\Vert _\infty } y \ge e_j -n e_j', \quad \text { and thus, by (a),} \quad - \frac{n}{ \Vert y\Vert _\infty } F'(x) y\not \le 0. \end{aligned}$$By contraposition, this shows that

$$\begin{aligned} F'(x)y\ge 0 \quad \text { implies } \quad \min _{j=1,\ldots ,n} y_j> -\frac{1}{n} \Vert y\Vert _\infty , \end{aligned}$$which also shows that \( \Vert y\Vert _\infty = \max _{j=1,\ldots ,n} y_j\).

-

(d)

It is easily checked that

$$\begin{aligned} \left( I_n-\frac{1}{n+1}\mathbb {1} \mathbb {1}^T \right) \left( \mathbb {1} \mathbb {1}^T+I_n\right) =I_n, \end{aligned}$$which shows that M is invertible and \(M^{-1}=I_n-\frac{1}{n+1}\mathbb {1} \mathbb {1}^T\)

Moreover, using (c) it follows that \(F'(x)y\ge 0\) implies that for all \(k\in \mathbb {N}\),

$$\begin{aligned} \sum _{j=1}^n y_j + y_k\ge \max _{j=1,\ldots ,n} y_j + n \min _{j=1,\ldots ,n} y_j\ge 0. \end{aligned}$$so that \(F'(x)y\ge 0\) implies \(My=(\mathbb {1} \mathbb {1}^T+I_n)y\ge 0\). This also shows that

$$\begin{aligned} F'(x) F'(x)^{-1} y=y\ge 0 \quad \text { implies } \quad M F'(x)^{-1} y\ge 0, \end{aligned}$$and thus \(M F'(x)^{-1}\ge 0\).

\(\square \)

Note that by Lemma 2(d), \({\tilde{F}}(x):=F(M^{-1}x)\) is a convex function with Collatz monotone derivative [33], i.e. \({\tilde{F}}'(x)^{-1}= M F'(M^{-1} x)^{-1}\ge 0\). If the Newton iterates do not leave the region where convexity and Collatz monotony holds, then classical results on monotone Newton methods (cf., e.g., Ortega and Rheinboldt [91, Thm. 13.3.4]) yield global Newton convergence for \({\tilde{F}}\), and thus for F since the Newton method is invariant under linear transformation. The following lemma utilizes some arguments from the classical result [91, Thm. 13.3.4] for our situation.

Lemma 3

Let \(F:\ U\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^n\) be continuously differentiable and pointwise convex on a convex open set U containing zero, and let \(M\in \mathbb {R}^{n\times n}\). We assume that for some point \(x\in U\), \(F'(x)\in \mathbb {R}^{n\times n}\) is invertible,

Then for all \(t\in [0,1]\)

fulfills \(0\le Mx^{(t)}\le Mx\). Moreover, if \(x^{(t)}\in U\) then \(F(x^{(t)})\ge 0\).

Proof

The assumptions (11) and the convexity (2) yield that for all \(t\in [0,1]\)

Moreover,

so that \(Mx\ge Mx^{(t)}\ge (1-t)Mx\ge 0\) is proven.

If \(x^{(t)}\in U\), then we also obtain from the convexity assumption (2) that

which shows that \(F(x^{(t)})\ge (1-t)F(x)\ge 0\). \(\square \)

Proof of Theorem 2

Theorem 2(a) has been proven in Lemma 2(b).

To prove (b), let \(x^{(k)}\in (-1,n)^n\) with \(F(x^{(k)})\ge 0\) and \(0\le M x^{(k)}\le M\mathbb {1}\). Then, by (a), \(F'(x^{(k)})\in \mathbb {R}^{n\times n}\) is invertible, so that

is well defined.

We will prove that \(x^{(k+1)}\in (-1,n)^n\). We argue by contradiction, and assume that this is not the case. Then, by continuity, there exists \(t\in (0,1]\) with \(x^{(k+t)}\in [-1,n]^n{\setminus } (-1,n)^n\) and, by lemma 3,

Convexity (2) then yields that

and using Lemma 2(c) this would imply that

For all \(l\in \{1,\ldots ,n\}\), we obtain from (12) and (13)

Hence, \(\max _{j=1,\ldots ,n}\, x^{(k+t)}_j< n\), so that \(x^{(k+t)}\in (-1,n)^n\). Since this contradicts \(x^{(k+t)}\in [-1,n]^n{\setminus } (-1,n)^n\), we have proven that \(x^{(k+1)}\in (-1,n)^n\).

Using Lemma 3 again, this shows that for all

the next Newton iterate \(x^{(k+1)}\) is well-defined and also fulfills

Hence, for \(x^{(0)}:=\mathbb {1}\), the Newton algorithm produces a well-defined sequence \(x^{(k)}\in (-1,n)^n\) for which \(Mx^{(k)}\) is monotonically non-increasing and bounded. Hence, \((Mx^{(k)})_{k\in \mathbb {N}}\) and thus also \((x^{(k)})_{k\in \mathbb {N}}\) converge. We define

Since F is continuously differentiable and \(F'({\hat{x}})\) is invertible, it follows from the Newton iteration formula (10) that \(F({\hat{x}})=0\). Also, the monotone convergence of \((Mx^{(k)})_{k\in \mathbb {N}}\) shows that

Moreover, since this is the standard Newton iteration, the convergence speed is superlinear and the speed is quadratic if \(F'\) is Lipschitz continuous in a neighbourhood of \({\hat{x}}\).

It only remains to show that \({\hat{x}}\in (-\frac{1}{n-1},\frac{n}{n-1})^n\subset (-1,2)^n\). For this, we use the convexity to obtain

which then implies by Lemma 2(c) that

From this we obtain that

which yields \(\min _{j=1,\ldots ,n}\, {\hat{x}}_j> -\frac{1}{n-1}\). Using (14) again, we then obtain

which shows \(\max _{j=1,\ldots ,n}\, {\hat{x}}_j<\frac{n}{n-1}\). \(\square \)

2.3 A result with tighter domain assumptions

Our results in Sects. 2.1 and 2.2 require the considered function F to be defined (and convex and monotonic) on a much larger set than \([0,1]^n\). For some applications (such as the inverse coefficient problem in Sect. 3), the following more technical variant of Theorem 2 is useful, as it allows us treat the case where the domain of definition is an arbitrarily small neighbourhood of \([0,1]^n\).

Theorem 3

Let \(\epsilon >0\) and \(c\ge 2+\frac{2}{\epsilon }\). Let \(F:\ U\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^n\), \(n\ge 2\), be continuously differentiable, pointwise convex and monotonic on a convex open set U. If \([-\frac{1+\epsilon }{cn}-\frac{\epsilon }{2cn},1+2\epsilon ]^n\subset U\), and

where \(K:={\text {ceil}}(\frac{2cn}{\epsilon }\left( 1+\epsilon + \frac{1+\epsilon }{cn}\right) )\in \mathbb {N}\),

then the following holds on \(O:=(-\frac{1+\epsilon }{cn},1+\epsilon )^n\supset [0,1]^n\).

-

(a)

F is injective on \({\overline{O}}\). For all \(x,y\in {\overline{O}}\), \(F'(x)\) is invertible and

$$\begin{aligned} \Vert x-y\Vert _\infty \le L \Vert F(x)-F(y)\Vert _\infty , \quad \text { and } \quad \Vert F'(x)^{-1}\Vert _\infty \le L, \end{aligned}$$where

$$\begin{aligned} L:= \left( \min _{\begin{array}{c} j=1,\ldots ,n,\\ k=1,\ldots ,K \end{array}}\ \max _{l=1,\ldots ,n} e_l^T F'(z^{(j,k)})d^{(j)}\right) ^{-1}>0. \end{aligned}$$(18) -

(b)

If, additionally, \(F(0)\le 0\le F(\mathbb {1})\), then there exists a unique

$$\begin{aligned} {\hat{x}}\in {\overline{O}} \quad \text { with } \quad F({\hat{x}})=0. \end{aligned}$$The Newton iteration sequence

$$\begin{aligned} x^{(i+1)}:=x^{(i)}- F'(x^{(i)})^{-1}F(x^{(i)})\quad \text {with initial value}\, x^{(0)}:=\mathbb {1} \end{aligned}$$(19)is well defined (i.e., \(F'(x^{(i)})\) is invertible in each step) and converges against \({\hat{x}}\). For all \(i\in \mathbb {N}\)

$$\begin{aligned} x^{(i)}&\in O , \quad \text { and } \quad 0\le M{\hat{x}}\le M x^{(i+1)}\le M x^{(i)}\le Mx^{(0)}=(1+cn)\mathbb {1}, \end{aligned}$$where \(M:=\mathbb {1} \mathbb {1}^T+(1+(c-1)n)I_n\in \mathbb {R}^{n\times n}\). The rate of convergence of \(x_i\rightarrow {\hat{x}}\) is superlinear. If \(F'\) is locally Lipschitz then the rate of convergence is quadratic.

To prove Theorem 3 we first prove a variant of Lemma 2 with tighter domain assumptions.

Lemma 4

Under the assumptions and with the notations of Theorem 3, the following holds:

-

(a)

For all \(x\in {\overline{O}}\), and \(j\in \{1,\ldots ,n\}\),

$$\begin{aligned} \max _{l=1,\ldots ,n} e_l^T F'(x)(e_j-c n e_j')\ge L^{-1}. \end{aligned}$$ -

(b)

F is injective on \({\overline{O}}\). For all \(x,y\in {\overline{O}}\), \(F'(x)\) is invertible,

$$\begin{aligned} \Vert x-y\Vert _\infty \le L \Vert F(x)-F(y)\Vert _\infty , \quad \text { and } \quad \Vert F'(x)^{-1}\Vert _\infty \le L. \end{aligned}$$ -

(c)

For all \(x\in {\overline{O}}\), and \(0\ne y\in \mathbb {R}^n\)

$$\begin{aligned} F'(x)y\ge 0 \ \text { implies } \ \max _{j=1,\ldots ,n} y_j= \Vert y\Vert _\infty \ \text { and } \ \min _{j=1,\ldots ,n} y_j> -\frac{1}{c n} \Vert y\Vert _\infty . \end{aligned}$$ -

(d)

M is invertible, \(M^{-1}=\frac{1}{1+(c-1)n} \left( I_n- \frac{1}{1+cn} \mathbb {1} \mathbb {1}^T \right) \). For all \(x\in {\overline{O}}\), and \(y\in \mathbb {R}^n\)

$$\begin{aligned} F'(x)y\ge 0 \quad \text { implies } \quad My\ge 0, \end{aligned}$$and thus \(M F'(x)^{-1}\ge 0\).

Proof

We use the same arguments as in Lemma 2. To prove (a) let \(j\in \{1,\ldots ,n\}\) and \(x\in {\overline{O}}=[-\frac{1+\epsilon }{cn},1+\epsilon ]^n\). Then, by the definition of K, there exists \(k\in \{1,\ldots ,K\}\), so that

It follows from the definition of \(z^{(j,k)}\) and \(d^{(j)}\) in (16) and (17) that

We thus obtain

which proves (a). Since this also implies a fortiori that

(b) follows from Lemma 1.

To show (c) by contraposition, let \(x\in {\overline{O}}\), \(0\ne y\in \mathbb {R}^n\), and assume that for some index \(j\in \{1,\ldots ,n\}\), we have that \(y_j\le -\frac{1}{cn} \Vert y\Vert _\infty \). Then \(y\le -\frac{1}{cn} \Vert y\Vert _\infty e_j + \Vert y\Vert _\infty e_j'\), so that

By contraposition, this shows that

and the latter also implies that \( \Vert y\Vert _\infty = \max _{j=1,\ldots ,n} y_j\).

For the proof of (d), it is easily checked that

which shows the invertibility of M and the asserted formula for \(M^{-1}\). Moreover, for all \(y\in \mathbb {R}^n\), and \(l\in \{1,\ldots ,n\}\),

Hence, using (c), for all \(x\in {\overline{O}}\), \(F'(x)y\ge 0\) implies \(My\ge 0\). As this also shows that

we have \(M F'(x)^{-1}\ge 0\). \(\square \)

Proof of Theorem 3

We proceed as in the proof of Theorem 2. Assertion (a) has already been proven in Lemma 4(b).

To prove (b), let \(x^{(i)}\in O\) with \(F(x^{(i)})\ge 0\) and \(0\le M x^{(i)}\le M\mathbb {1}\). Then, by (a), \(F'(x^{(i)})\in \mathbb {R}^{n\times n}\) is invertible, so that

is well defined.

We will prove that \(x^{(i+1)}\in O=(-\frac{1+\epsilon }{cn},1+\epsilon )^n\). We argue by contradiction, and assume that this is not the case. Then, by continuity, there exists \(t\in (0,1]\) with \(x^{(i+t)}\in {\overline{O}}{\setminus } O\), so that, by Lemma 3,

Convexity (2) then yields that

and using Lemma 4(c) this would imply

For all \(l\in \{1,\ldots ,n\}\), we obtain from (20) and (21)

and thus

An elementary computation shows that

where we used \(cn> 1\) for the first inequality, and we used the assumption \(c\ge 2+\frac{2}{\epsilon }=\frac{2+\epsilon }{\epsilon }+1\) for the last inequality. Hence, for all \(l\in \{1,\ldots ,n\}\),

This contradicts \(x^{(i+t)}\in {\overline{O}}{\setminus } O\), and thus shows that \(x^{(i+1)}\in O\).

Using Lemma 3 again, this shows that for all

the next Newton iterate \(x^{(i+1)}\) is well-defined and also fulfills

Hence, for \(x^{(0)}:=\mathbb {1}\), the Newton algorithm produces a well-defined sequence \(x^{(i)}\in O\) for which \(Mx^{(i)}\) is monotonically non-increasing and bounded. Hence, \((Mx^{(i)})_{i\in \mathbb {N}}\) and thus also \((x^{(i)})_{k\in \mathbb {N}}\) converge. We define

Since F is continuously differentiable and \(F'({\hat{x}})\) is invertible, it follows from the Newton iteration formula that \(F({\hat{x}})=0\). Also, the monotone convergence of \((Mx^{(i)})_{i\in \mathbb {N}}\) shows that

Moreover, since this is the standard Newton iteration, the convergence speed is superlinear and the speed is quadratic if \(F'\) is Lipschitz continuous in a neighbourhood of \({\hat{x}}\). \(\square \)

3 Application to an inverse Robin transmission problem

We will now show how to use our result to obtain uniqueness, stability and global convergence results for an inverse coefficient problem with finitely many measurements. More precisely, we show how to choose finitely many measurements so that they uniquely determine the unknown coefficient function with a given resolution, Lipschitz stability holds, and Newton’s method globally converges.

3.1 The setting

We consider the inverse Robin transmission problem for the Laplace equation from [53], that is motivated by corrosion detection. Note that similar problems have also been studied for the Helmholtz equation under the name conductive boundary condition or transition boundary condition [8, 84]. We first introduce the idealized infinite-dimensional forward and inverse problem following [53] and then study the case of finitely many measurements.

3.1.1 The infinite-dimensional forward and inverse problem

Let \({\varOmega } \subset \mathbb {R}^d\) (\(d\ge 2\)), be a bounded domain with Lipschitz boundary \(\partial {\varOmega }\) and let D be an open subset of \({\varOmega }\), with \({\overline{D}}\subset {\varOmega }\), Lipschitz boundary \({\varGamma }:=\partial D\) and connected complement \({\varOmega }{\setminus }\overline{D}\), cf. Fig. 1 in the numerical section for a sketch of the setting.

We assume that \({\varOmega }\) describes an electrically conductive imaging domain, with a-priori known conductivity that we set to 1 for the ease of presentation. (Note that all of the following results remain valid if the conductivity in D and in \({\varOmega }{\setminus }\overline{D}\) are a-priori known spatially dependent functions as long as they are sufficiently regular to allow unique continuation arguments). We assume that corrosion effects on the interface \({\varGamma }\) can be modelled with an unknown Robin transmission parameter \(\gamma \in L^\infty _+({\varGamma })\), where \(L^\infty _+\) denotes the subset of \(L^\infty \)-functions with positive essential infima.

Applying an electrical current flux \(g\in L^2(\partial {\varOmega })\) on the boundary \(\partial {\varOmega }\) then yields an electric potential \(u\in H^1({\varOmega })\) solving the following Robin transmission problem with Neumann boundary values

where \(\nu \) is the unit normal vector to the interface \({\varGamma }\) or \(\partial {\varOmega }\) pointing outward of D, resp., \({\varOmega }\), and

denote the jump of the Dirichlet, resp., Neumann trace values on \({\varGamma }\), with the superscript ”\(+\)” denoting that the trace is taken from \({\varOmega }{\setminus } D\) and ”−” denoting the trace taken from D. In the following, we often denote the solution of (22)–(25) by \(u_\gamma ^{(g)}\) to point out its dependence on the Robin transmission coefficient \(\gamma \) and the Neumann boundary data g.

It is easily seen that this problem is equivalent to the variational formulation of finding \(u_\gamma ^{(g)}\in H^1({\varOmega })\) such that

and that (26) is uniquely solvable by the Lax–Milgram-Theorem. Hence, we can define the Neumann-to-Dirichlet map

It is easy to show that \({\varLambda }(\gamma )\) is a compact self-adjoint linear operator.

One may regard \({\varLambda }(\gamma )\) as an idealized model (the so-called continuum model) of all electric current/voltage measurements that can be carried out on the outer boundary \(\partial {\varOmega }\). Hence the infinite-dimensional inverse coefficient problem of determining a Robin transmission coefficient from boundary measurements can be formulated as the problem to

We summarize some more properties of the infinite-dimensional forward mapping \({\varLambda }\) in the following lemma.

Lemma 5

-

(a)

The non-linear mapping

$$\begin{aligned} {\varLambda }:\ L^\infty _+({\varGamma })\rightarrow {\mathcal {L}}(L^2(\partial {\varOmega })), \quad \gamma \mapsto {\varLambda }(\gamma ) \end{aligned}$$is Fréchet differentiable. Its derivative

$$\begin{aligned} {\varLambda }':\ L^\infty _+({\varGamma })\rightarrow {\mathcal {L}}(L^\infty ({\varGamma }),{\mathcal {L}}(L^2(\partial {\varOmega }))) \end{aligned}$$is given by the bilinear form

$$\begin{aligned} \int _{\partial {\varOmega }} g \left( {\varLambda }'(\gamma )\delta \right) h\,{\mathrm{d}} s = -\int _{\varGamma } \delta u_\gamma ^{(g)}u_\gamma ^{(h)} \,{\mathrm{d}} s, \end{aligned}$$(27)for all \(\gamma \in L^\infty _+({\varGamma })\), \(\delta \in L^\infty ({\varGamma })\), and \(g,h\in L^2(\partial {\varOmega })\), where \(u_\gamma ^{(g)}\in H^1({\varOmega })\) solves (26). \({\varLambda }'\) is locally Lipschitz continuous and \({\varLambda }'(\gamma )\delta \in {\mathcal {L}}(L^2(\partial {\varOmega }))\) is compact and self-adjoint.

-

(b)

For all \(g\in L^2(\partial {\varOmega })\) and all \(\gamma _1,\gamma _2\in L^\infty _+({\varOmega })\),

$$\begin{aligned} \int _{\partial {\varOmega }} g \left( {\varLambda }(\gamma _2)-{\varLambda }(\gamma _1) \right) g \,{\mathrm{d}} s \ge \int _{\partial {\varOmega }} g \left( {\varLambda }'(\gamma _1) (\gamma _2-\gamma _1)\right) g \,{\mathrm{d}} s. \end{aligned}$$ -

(c)

For all \(\gamma \in L^\infty _+({\varOmega })\), \(\delta \in L^\infty ({\varOmega })\), and \(g\in L^2(\partial {\varOmega })\),

$$\begin{aligned} \delta (x)\ge 0 \text { for}\, x\in {\varOmega }\,\text { a.e.} \quad \text { implies } \quad \int _{\partial {\varOmega }} g \left( {\varLambda }'(\gamma )\delta \right) g \,{\mathrm{d}} s\le 0. \end{aligned}$$

Proof

Obviously, for all \(\gamma \in L^\infty _+({\varGamma })\) and \(\delta \in L^\infty ({\varGamma })\), (27) defines a compact self-adjoint linear operator \({\varLambda }'(\gamma )\delta \in {\mathcal {L}}(L^2(\partial {\varOmega }))\). Moreover, it follows from the monotonicity estimate in [53, Lemma 4.1] that for all \(\delta \in L^\infty ({\varGamma })\) (that are sufficiently small so that \(\gamma +\delta \in L^\infty _+({\varGamma })\))

and thus

This shows that \({\varLambda }\) is Fréchet differentiable for all \(\gamma \in L^\infty _+({\varGamma })\), and that \({\varLambda }'(\gamma )\) is its derivative. Since it is easily shown that \(u_\gamma ^{(g)}\in H^1({\varOmega })\) depends locally Lipschitz continuously on \(\gamma \in L^\infty _+({\varGamma })\), it also follows that \({\varLambda }'\) is locally Lipschitz continuous. This proves (a).

(b) is shown in [53, Lemma 4.1], and (c) follows from (27). \(\square \)

Note that Lemma 5 shows that \({\varLambda }\) is a convex, anti-monotone function with respect to the pointwise partial order on \(L^\infty _+({\varOmega })\), and the Loewner partial order in the space of compact self-adjoint operators on \(L^2(\partial {\varOmega })\). These properties are the key to formulate the finite-dimensional inverse problem as a zero finding problem for a pointwise convex and monotonic forward function.

3.1.2 The inverse problem with finitely many measurements

In practical applications, it is natural to assume that the unknown Robin transmission coefficient is a piecewise constant function, i.e., \(\gamma (x)=\sum _{j=1}^n \gamma _j \chi _j(x)\) where \(\gamma _j>0\) and \(\chi _j:=\chi _{{\varGamma }_j}\) are the characteristic functions on pairwise disjoint subsets \({\varGamma }_j\subseteq {\varGamma }\) of a given partition \({\varGamma }=\bigcup _{j=1}^n {\varGamma }_j\). For the ease of notation, here and in the following, we identify a piecewise constant function \(\gamma (x)=\sum _{j=1}^n \gamma _j \chi _j(x)\in L^\infty ({\varGamma })\) with the vector \(\gamma =(\gamma _1,\ldots ,\gamma _n)\in \mathbb {R}^n\). We also simply write a for the constant function \(\gamma (x)=a\), and for the vector \((a,\ldots ,a)\in \mathbb {R}^n\) (and use b analogously). Throughout this work we always assume that \(n\ge 2\).

It is also natural to assume that one knows bounds \(a,b\in \mathbb {R}\) on the unknown Robin transmission coefficient, so that \(0<a\le \gamma _j\le b\) for all \(j=1,\ldots ,n\). For the semi-discretized inverse problem of reconstructing the finite-dimensional coefficient vector \(\gamma \in [a,b]^n\subset \mathbb {R}^n\) from the (infinite-dimensional) measurements \({\varLambda }(\gamma )\), the results in [53, Thm. 2.1 and 2.2] show uniquely solvability and Lipschitz stability. Moroever, [53, Thm. 5.2] shows how to explicitly calculate the Lipschitz constant for a given setting using arguments similar to (and inspiring) Sect. 2 in this work.

We now go one step further and assume that we can only measure finitely many components of \({\varLambda }(\gamma )\), i.e., that we can measure

for a finite number of Neumann boundary data \(g_j,h_j\in L^2(\partial {\varOmega })\). Hence, the fully discretized inverse Robin transmission problem leads to the finite dimensional non-linear inverse problem to

The following practically important questions are then to be answered: Given bounds [a, b] and a partition of \({\varGamma }\) (i.e., a desired resolution), how many and which Neumann boundary functions \(g_j\), \(h_j\) should be used, so that \(F(\gamma )\) uniquely determines \(\gamma \)? How good is the stability of the resulting inverse problem with regard to noisy measurements? How can one construct a globally convergent numerical algorithm to practically determine \(\gamma \) from \(F(\gamma )\)? And how good will the solution be in the case that the true Robin transmission function \(\gamma \) is not piecewise constant?

The following subsections show how these questions can be answered using the theory developed in Sect. 2. For this, let us first observe, that the symmetric choice \(g_j=h_j\) leads to an inverse problem with a pointwise convex and monotonic forward function.

Lemma 6

Let \(b>a>0\), \(g_1,\ldots ,g_n\in L^2(\partial {\varOmega })\), and

F is a pointwise convex and anti-monotone, continuously differentiable function with locally Lipschitz continuous derivative \(F'(\gamma )\in \mathbb {R}^{n\times n}\), where

Given a vector \(y=(y_j)_{j=1}^n\in \mathbb {R}^n\) with \(F(b)\le y\le F(a)\), we define the rescaled function

with \(r(\xi ):=b\mathbb {1}-(b-a)\xi \). Then \({\varPhi }\) is a pointwise convex and monotonic, continuously differentiable function with locally Lipschitz continuous derivative, and \({\varPhi }'(\xi )=-F'(r(\xi ))\) for all \(\xi \in U\). Also, \({\varPhi }(0)\le 0\le {\varPhi }(1)\).

Proof

This follows immediately from Lemma 5. \(\square \)

3.2 Uniqueness, stability and global Newton convergence

We summarize the assumptions on the setting: Let \({\varOmega } \subset \mathbb {R}^d\) (\(d\ge 2\)), be a bounded domain with Lipschitz boundary \(\partial {\varOmega }\) and let D be an open subset of \({\varOmega }\), with \({\overline{D}}\subset {\varOmega }\), Lipschitz boundary \({\varGamma }:=\partial D\) and connected complement \({\varOmega }{\setminus }\overline{D}\). We assume that the true unknown Robin transmission coefficient \({{\hat{\gamma }}}\in L^\infty _+({\varGamma })\) is bounded by \(b\ge {{\hat{\gamma }}}(x)\ge a\) for \(x\in {\varGamma }\) a.e., with a-priori known bounds \(b>a>0\), and that \({{\hat{\gamma }}}=\sum _{j=1}^n {{\hat{\gamma }}}_j \chi _j\), \(\chi _j:=\chi _{{\varGamma }_j}\), is piecewise constant on an a-priori known partition \({\varGamma }=\bigcup _{j=1}^n {\varGamma }_j\) into \(n\in \mathbb {N}\), \(n\ge 2\), pairwise disjoint measurable subsets \({\varGamma }_j\subset {\varGamma }\) with positive measure.

We will show how to construct n Neumann boundary functions \(g_j\in L^2(\partial {\varOmega })\), so that \(\gamma \in [a,b]^n\) is uniquely determined by the n measurements

We also quantify the Lipschitz stability constant of this inverse problem, and show that the inverse problem can be numerically solved with a globally convergent Newton method. Our main tool is to reformulate the finite-dimensional inverse problem as a zero finding problem for the pointwise monotonic and convex function \({\varPhi }\) introduced in Lemma 6. Then we use a relation to the concept of localized potentials [41] to prove that we can choose the measurements \(g_j\) in such a way that \({\varPhi }\) also fulfills the additional assumptions on the directional derivative of the forward function as required by our Newton convergence theory in Sect. 2.

At this point, let us stress that our theory in Sect. 2 allows us to find \(m=n\) measurements that uniquely recover the n unknown components of the Robin coefficient, but it also requires to use exactly those \(m=n\) measurements to ensure global convergence of Newton’s method. In practice, it would be highly desirable to utilize additional measurements for the sake of redundancy and error reduction. But our convergence theory in Sect. 2 does not cover the case \(m>n\) yet, and an extension to, e.g., Gauss–Newton or Levenberg–Marquardt methods seems far from trivial. Moreover, for some applications, it would be desirable to also treat the interior boundary \({\varGamma }\) as unknown. But it is not clear whether the interplay of parametrization, differentiability and localized potentials results that is required to fulfill the assumptions of Sect. 2 can also be extended to this case. Hence, in all of the following we will only consider the case where the interior boundary is known and utilize exactly \(m=n\) measurements.

3.2.1 Choosing the measurements (for specific bounds)

To demonstrate the key idea, we will first consider the specific (and rather restrictive) case where the bounds a, b fufill

since this case can be treated by simply combining Theorem 2 with a known localized potentials result from [53]. The case of general bounds \(b>a>0\) is more technical and requires an extended result on simultaneously localized potentials. It will be treated in Sect. 3.2.2.

Theorem 4

Given bounds \(b>a>0\) that fulfill \(n(n+3)<\frac{b}{b-a}\), we define the piecewise constant functions \(\kappa ^{(j)}\in L^\infty _+({\varGamma })\), \(j=1,\ldots ,n\), by setting

-

(a)

If, for all \(j=1,\ldots ,n\), \(g_j\in L^2(\partial {\varOmega })\) fulfills

$$\begin{aligned} \lambda ^{(j)}:= \int _{{\varGamma }_j} |u_{\kappa ^{(j)}}^{g_j}|^2 \,{\mathrm{d}} s - (n^2+3n+1)\int _{{\varGamma }{\setminus } {\varGamma }_j} |u_{\kappa ^{(j)}}^{g_j} |^2 \,{\mathrm{d}} s>0, \end{aligned}$$(29)then the finite-dimensional non-linear inverse problem of determining

$$\begin{aligned} \gamma =\left( \gamma _j\right) _{j=1}^n\in [a,b]^n \quad \text { from }\quad F(\gamma ):=\left( \int _{\partial {\varOmega }} g_j {\varLambda }(\gamma ) g_j\,{\mathrm{d}} s\right) _{j=1}^n\in \mathbb {R}^n \end{aligned}$$has a unique solution in \([a,b]^n\), and \(\gamma \) depends Lipschitz continuously on \(F(\gamma )\). Moreover, the iterates of Newton’s method applied to the problem of determining \(\gamma \) from \(F(\gamma )\), with initial value \(\gamma ^{(0)}=a\in \mathbb {R}^n\), quadratically converge to the unique solution \(\gamma \) (see Lemma 7 for more details on the properties of F).

-

(b)

In any large enough finite-dimensional subspace of \(L^2(\partial {\varOmega })\), one can find \(g_1,\ldots ,g_n\) fulfilling (29) by the following construction:

Let \((f_m)_{m\in \mathbb {N}}\subseteq L^2(\partial {\varOmega })\) be a sequence of vectors with dense linear span in \(L^2(\partial {\varOmega })\). Let \(j\in \{1,\ldots ,n\}\), \(m\in \mathbb {N}\), and let \(M^{(j)}_m\in \mathbb {R}^{m\times m}\) be the symmetric matrix with entries (\(l,k=1,\ldots ,m\))

$$\begin{aligned} e_l^T M^{(j)}_m e_k:=\int _{{\varGamma }_j} u_{\kappa ^{(j)}}^{f_l} u_{\kappa ^{(j)}}^{f_k} \,{\mathrm{d}} s - (n^2+3n+1)\int _{{\varGamma }{\setminus } {\varGamma }_j} u_{\kappa ^{(j)}}^{f_l} u_{\kappa ^{(j)}}^{f_k} \,{\mathrm{d}} s. \end{aligned}$$Then, for sufficiently large dimension \(m\in \mathbb {N}\), the matrix \(M^{(j)}_m\in \mathbb {R}^{m\times m}\) has a positive eigenvalue \(\lambda ^{(j)}>0\). For a corresponding normalized eigenvector \(v^{(j)}=(v^{(j)}_1,\ldots ,v^{(j)}_m)\in \mathbb {R}^m\), (29) is fulfilled by

$$\begin{aligned} g_j:=\sum _{k=1}^m v^{(j)}_k f_k\in L^2(\partial {\varOmega }). \end{aligned}$$

To prove Theorem 4, we formulate the consequences of our Newton convergence theory in Sect. 2 in the following lemma.

Lemma 7

If \(n(n+3)<\frac{b}{b-a}\) then

where \(I:=(a-\frac{b-a}{n-1},b+\frac{b-a}{n-1})^n\), and \(I':=\left[ b-n(b-a),2b-a\right] ^n\). If, for all \(j=1,\ldots ,n\), \(g_j\in L^2(\partial {\varOmega })\) fulfills (29), then the function

has the following properties

-

(a)

F is pointwise convex, anti-monotone, and continuously differentiable.

-

(b)

F is injective on \(I'\), and its Jacobian \(F'(\gamma )\) is invertible for all \(\gamma \in I'\).

-

(c)

With \(L:=(n+2) \left( \min _{j=1,\ldots ,n}\ \lambda ^{(j)} \right) ^{-1}\), we have that for all \(\gamma ,\gamma '\in I'\)

$$\begin{aligned} \Vert \gamma -\gamma '\Vert _\infty \le L \Vert F(\gamma )-F(\gamma ')\Vert _\infty \quad \text { and } \quad \Vert F'(\gamma )^{-1}\Vert _\infty \le L. \end{aligned}$$ -

(d)

For all \(\gamma \in [a,b]^n\), \(F(b)\le F(\gamma ) \le F(a)\). Moreover, for all \(y\in \mathbb {R}^n\) with \(F(b)\le y \le F(a)\) there exists a unique \(\gamma \in I\) with \(F(\gamma )=y\). The Newton iteration

$$\begin{aligned} \gamma ^{(i+1)}:=\gamma ^{(i)}-F'(\gamma ^{(i)})^{-1} \left( F(\gamma ^{(i)}) - y \right) , \ \text { started with}\, \gamma ^{(0)}:=a \in \mathbb {R}^n, \end{aligned}$$produces a sequence \((\gamma ^{(i)})_{i\in \mathbb {N}}\subset I'\) that converges quadratically to \(\gamma \).

Proof

We define r and \({\varPhi }\) as in Lemma 6. Then,

so that the interval inclusions follow from the anti-monotonicity of r.

Lemma 6 yields assertion (a) and that \({\varPhi }:\ U\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^n\) is a continuously differentiable pointwise convex and monotonic function on \(U=(-\infty ,\textstyle \frac{b}{b-a})^n\). The assumption \(n(n+3)<\frac{b}{b-a}\) guarantees that U contains \([-2,n(n+3)]^n\). Moreover, using that

it follows from Lemma 6 and (29) that

so that \({\varPhi }\) fulfills the assumptions of Theorem 2.

It follows that \({\varPhi }\) is injective on \([-1,n]^n\), and that for all \(\xi ,\xi '\in [-1,n]^n\), \({\varPhi }'(\xi )\) is invertible,

This proves that F fulfills (b) and (c) on \(r([-1,n]^n)=I'\).

The first assertion in (d) follows from the anti-monotonicity of F. For the remaining assertions in (d) note that, by Lemma 6, \({\varPhi }'\) is also locally Lipschitz continuous, and \({\varPhi }(0)\le 0\le {\varPhi }(1)\). Hence, Theorem 2(b) yields that there exists a unique \(\xi \in (-\frac{1}{n-1}, 1+\frac{1}{n-1} ) ^n\) with \({\varPhi }(\xi )=0\), and thus a unique \(\gamma \in I\) that solves \(F(\gamma )=y\).

Moreover, Theorem 2(b) yields that the Newton iteration applied to \({\varPhi }\) with initial value \(\xi ^{(0)}=\mathbb {1}\) does not leave the interval \((-1,n)^n\) and quadratically converges against the unique solution \(\xi \) of \({\varPhi }(\xi )=0\). Since, the Newton iteration is invariant under invertible linear transformations, this yields that the Newton iteration applied to F with \(\gamma ^{(0)}:=a\in \mathbb {R}^n\) does not leave \(I'\) and converges quadratically against the unique solution \(\gamma \) of \(F(\gamma )=y\). \(\square \)

Proof of Theorem 4

-

(a)

follows from Lemma 7.

-

(b)

Let \(j\in \{1,\ldots ,n\}\). From the localized potentials result in [53, Lemma 4.3], it follows that there exists \(g\in L^2(\partial {\varOmega })\) with

$$\begin{aligned} \int _{{\varGamma }_j} |u_{\kappa ^{(j)}}^{g}|^2 \,{\mathrm{d}} s - (n^2+3n+1)\int _{{\varGamma }{\setminus } {\varGamma }_j} |u_{\kappa ^{(j)}}^{g} |^2 \,{\mathrm{d}} s>1. \end{aligned}$$By density and continuity, for sufficiently large \(m\in \mathbb {N}\), there exists a function \(f\in {\mathrm {span}}\{f_1,\ldots ,f_m \}\) with

$$\begin{aligned} \int _{{\varGamma }_j} |u_{\kappa ^{(j)}}^{f}|^2 \,{\mathrm{d}} s - (n^2+3n+1)\int _{{\varGamma }{\setminus } {\varGamma }_j} |u_{\kappa ^{(j)}}^{f} |^2 \,{\mathrm{d}} s>\frac{1}{2}. \end{aligned}$$Writing \(f=\sum _{l=1}^m v_l f_l\) with \(v_l\in \mathbb {R}\), and \(v:=(v_l)_{l=1}^m\in \mathbb {R}^m\), we thus have

$$\begin{aligned} v^T M_m^{(j)} v=\int _{{\varGamma }_j} |u_{\kappa ^{(j)}}^{f}|^2 \,{\mathrm{d}} s - (n^2+3n+1)\int _{{\varGamma }{\setminus } {\varGamma }_j} |u_{\kappa ^{(j)}}^{f} |^2 \,{\mathrm{d}} s>\frac{1}{2}. \end{aligned}$$This shows that the symmetric matrix \(M_m^{(j)}\in \mathbb {R}^{m\times m}\) must have a positive eigenvalue when its dimension \(m\in \mathbb {N}\) is sufficiently large. On the other hand, every normalized eigenvector \(v^{(j)}=(v^{(j)}_1,\ldots ,v^{(j)}_m)\in \mathbb {R}^m\) corresponding to a positive eigenvector \(\lambda ^{(j)}>0\) fulfills

$$\begin{aligned} 0 < \lambda ^{(j)}&= (v^{(j)})^T M_m^{(j)} v^{(j)}\\&= \int _{{\varGamma }_j} |u_{\kappa ^{(j)}}^{g_j}|^2 \,{\mathrm{d}} s - (n^2+3n+1)\int _{{\varGamma }{\setminus } {\varGamma }_j} |u_{\kappa ^{(j)}}^{g_j} |^2 \,{\mathrm{d}} s, \end{aligned}$$with \(g_j:=\sum _{k=1}^m v^{(j)}_k f_k\in L^2(\partial {\varOmega })\), so that (b) is proven.

\(\square \)

3.2.2 Choosing the measurements (for general bounds)

We now show how to treat the case of general bounds \(b>a>0\).

Theorem 5

Given \(b>a>0\), choose \(0<\epsilon <\frac{a}{2(b-a)}\), and set \(c:=2+\frac{2}{\epsilon }\), \( K:={\text {ceil}}\left( \frac{2cn}{\epsilon }\left( 1+\epsilon + \frac{1+\epsilon }{cn}\right) \right) , \) and \(\beta := \frac{1+\epsilon +cn+2\epsilon cn}{\epsilon }\). Let \(\kappa ^{(j,k)}\in L^\infty _+({\varGamma })\) denote the piecewise constant function

-

(a)

If \(g_j\in L^2(\partial {\varOmega })\), \(j=1,\ldots ,n\), fulfills

$$\begin{aligned} \lambda _{j,k}&:= \frac{1}{2}\int _{{\varGamma }_j} |u_{\kappa ^{(j,k)}}^{g_j}|^2 \,{\mathrm{d}} s - \beta \int _{{\varGamma }{\setminus } {\varGamma }_j} |u_{\kappa ^{(j,k)}}^{g_j}|^2 \,{\mathrm{d}} s>0, \end{aligned}$$(31)for all \(k=1,\ldots ,K\), then the finite-dimensional non-linear inverse problem of determining

$$\begin{aligned} \gamma =\left( \gamma _j\right) _{j=1}^n\in [a,b]^n \quad \text { from }\quad F(\gamma ):=\left( \int _{\partial {\varOmega }} g_j {\varLambda }(\gamma ) g_j\,{\mathrm{d}} s\right) _{j=1}^n\in \mathbb {R}^n \end{aligned}$$has a unique solution in \([a,b]^n\), and \(\gamma \) depends Lipschitz continuously \({\varLambda }(\gamma )\). Moreover, the iterates of Newton’s method applied to the problem of determining \(\gamma \) from \(F(\gamma )\), with initial value \(\gamma ^{(0)}=a\in \mathbb {R}^n\), quadratically converge to the unique solution \(\gamma \) (see Lemma 8 for more details on the properties of F).

-

(b)

In any large enough finite dimensional subspace of \(L^2(\partial {\varOmega })\), one can find \(g_1,\ldots ,g_n\) fulfilling (31) by the following construction:

Let \((f_m)_{m\in \mathbb {N}}\subseteq L^2(\partial {\varOmega })\) be a sequence of vectors with dense linear span in \(L^2(\partial {\varOmega })\). Let \(j\in \{1,\ldots ,n\}\), \(m\in \mathbb {N}\), and \(v^{(j)}=(v^{(j)}_1,\ldots ,v^{(j)}_m)\in \mathbb {R}^m\) be a normalized eigenvector corresponding to a largest eigenvalue of the symmetric matrix

$$\begin{aligned} M_m^{(j)}:=\frac{\alpha }{2} S^{(j)} - \beta \sum _{k=1}^K R^{(j,k)} \in \mathbb {R}^{m\times m}, \end{aligned}$$where \(\alpha :=( 1+ \frac{b-a}{b} (1+\epsilon + \frac{1+\epsilon }{cn}))^{-1}\), and for \(i,l=1,\ldots ,m\),

$$\begin{aligned} e_i^T S^{(j)} e_l:=\int _{{\varGamma }} u_{\kappa ^{(j,1)}}^{f_i} u_{\kappa ^{(j,1)}}^{f_l} \,{\mathrm{d}} s, \ \text { and } \ e_i^T R^{(j,k)} e_l:=\int _{{\varGamma }{\setminus } {\varGamma }_j} u_{\kappa ^{(j,k)}}^{f_i} u_{\kappa ^{(j,k)}}^{f_l} \,{\mathrm{d}} s, \end{aligned}$$Then, for sufficiently large dimension \(m\in \mathbb {N}\), (31) is fulfilled by

$$\begin{aligned} g_{j}:=\sum _{l=1}^m v^{(j)}_l f_l\in {\mathrm {span}}\{f_1,\ldots ,f_m \}\subset L^2(\partial {\varOmega }). \end{aligned}$$

As in Sect. 3.2.1, we prove Theorem 5(a) by applying our Newton convergence theory from Sect. 2 in the following lemma.

Lemma 8

We have that

If, for all \(j=1,\ldots ,n\), \(g_j\in L^2(\partial {\varOmega })\) fulfills (31) for all \(k=1,\ldots ,K\), then the function

has the following properties:

-

(a)

F is pointwise convex, anti-monotone, and continuously differentiable.

-

(b)

F is injective on I, and its Jacobian \(F'(\gamma )\) is invertible for all \(\gamma \in I\).

-

(c)

For all \(\gamma ,\gamma '\in I\),

$$\begin{aligned} \Vert \gamma -\gamma '\Vert _\infty \le L \Vert F(\gamma )-F(\gamma ')\Vert _\infty \quad \text { and } \quad \Vert F'(\gamma )^{-1}\Vert _\infty \le L, \end{aligned}$$where \(L:=\left( \min _{j=1,\ldots ,n,\ k=1,\ldots ,K}\ \lambda _{j,k} \right) ^{-1}\).

-

(d)

For all \(\gamma \in [a,b]^n\), \(F(b)\le F(\gamma ) \le F(a)\). Moreover, for all \(y\in \mathbb {R}^n\) with \(F(b)\le y \le F(a)\) there exists a unique \(\gamma \in I\) with \(F(\gamma )=y\). The Newton iteration

$$\begin{aligned} \gamma ^{(i+1)}:=\gamma ^{(i)}-F'(\gamma ^{(i)})^{-1} \left( F(\gamma ^{(i)}) - y \right) , \ \text { started with } \ \gamma ^{(0)}:=a \in \mathbb {R}^n, \end{aligned}$$produces a sequence \((\gamma ^{(i)})_{i\in \mathbb {N}}\subset I\) that converges quadratically to \(\gamma \).

Proof

We define r and \({\varPhi }\) as in Lemma 6. Then \(r([0,1]^n)=[a,b]^n\) and

Lemma 6 yields assertion (a) and that \({\varPhi }:\ U\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^n\) is a continuously differentiable pointwise convex and monotonic function on \(U=(-\infty ,\textstyle \frac{b}{b-a})^n\), with locally Lipschitz continuous \({\varPhi }'\), and \({\varPhi }(0)\le 0\le {\varPhi }(1)\). Also, U contains \([-\frac{1+\epsilon }{cn}-\frac{\epsilon }{2cn},1+2\epsilon ]^n\) since \(\epsilon <\frac{a}{2(b-a)}\).

Moreover, with \(z^{(j,k)},d^{(j)}\in \mathbb {R}^n\) defined by (16) and (17), we have that

It thus follows from Lemma 6 and (31) that

so that \({\varPhi }\) fulfills the assumptions of Theorem 3 which then yields the assertions (b)–(d). \(\square \)

To prove Theorem 5(b), we need to ensure that there exist Neumann data \(g_j\in L^2(\partial {\varOmega })\) so that the corresponding solutions \(u_{\kappa ^{(j,k)}}^{g_j}\) are much larger on \({\varGamma }_j\) than on \({\varGamma }{\setminus } {\varGamma }_j\), and this property has to hold for several Robin transmission coefficients \(\kappa ^{(j,k)}\), \(k=1,\ldots ,K\), simultaneously.

Note that for fixed \(j\in \{1,\ldots ,n\}\) the Robin transmission coefficients \(\kappa ^{(j,k)}\) only differ on \({\varGamma }_j\). Hence, the following lemma will allow us to estimate \(u_{\kappa ^{(j,k)}}^{g_j}\) on \({\varGamma }_j\) by \(u_{\kappa ^{(j,1)}}^{g_j}\).

Lemma 9

Let \(\gamma ^{(1)},\gamma ^{(2)}\in L^\infty _+({\varGamma })\) with \(\gamma ^{(1)}=\gamma ^{(2)}\) on \({\varGamma }{\setminus } {\varGamma }_0\), where \({\varGamma }_0\) is a measurable subset of \({\varGamma }\) with positive measure. Then, for all \(g\in L^2(\partial {\varOmega })\), the corresponding solutions \(u_1,u_2\in H^1({\varOmega })\) of (22)–(25) with \(\gamma =\gamma ^{(1)}\), and \(\gamma =\gamma ^{(2)}\), respectively, fulfill

Proof

We proceed analogously to [50, Lemma 3.6]. It follows from the variational formulation (26) that

Hence, we obtain

and the assertion follows. \(\square \)

The next lemma will allow us to construct \(u_{\kappa ^{(j,k)}}^{g_j}\) for which \(u_{\kappa ^{(j,1)}}^{g_j}\) is large on \({\varGamma }_j\) (and thus by Lemma 10 all \(u_{\kappa ^{(j,k)}}^{g_j}\) are large on \({\varGamma }_j\)), and at the same time all \(u_{\kappa ^{(j,k)}}^{g_j}\) are small on \({\varGamma }{\setminus } {\varGamma }_j\).

Lemma 10

Let \({\varGamma }_0\) be a measurable subset of \({\varGamma }\) with positive measure, \(K\in \mathbb {N}\), and \(\gamma ^{(1)},\gamma ^{(2)},\ldots ,\gamma ^{(K)}\in L^\infty _+({\varGamma })\).

Then, for all \(C>0\), there exists \(g\in L^2(\partial {\varOmega })\), so that the corresponding solutions \(u_1,u_2,\ldots ,u_K\in H^1({\varOmega })\) of (22)–(25) fulfill

Proof

The existence of simultaneously localized potentials for the fractional Schrödinger equation has recently been shown in [51, Theorem 3.11], and we proceed similarly in this proof. Following the original localized potentials approach in [41], we start by reformulating the assertion as operator range (non-)inclusions, by introducing the operators

where \(k\in \{1,\ldots ,K\}\), \(v_0\in H^1({\varOmega })\) solves

and \(v_k\in H^1({\varOmega })\) solves

It is easily shown (see, e.g., the proof of [53, Theorem 3.1]) that the adjoints of these operators are given by

where \(u_1,\ldots ,u_K\in H^1({\varOmega })\) solve (22)–(25) with Neumann boundary data g and Robin transmission coefficients \(\gamma ^{(1)},\ldots ,\gamma ^{(K)}\), respectively.

By a simple normalization argument, the assertion is now equivalent to showing that

Using a functional analytic relation between operator ranges and the norms or their adjoints (cf., [41, Lemma 2.5], [36, Cor. 3.5]), the property (34) (and thus the assertion) is proven if we can show that

We prove (35) by contradiction, and assume that

Then, for every \(f_0\in L^2({\varGamma }_0)\), there exist \(f_1,\ldots ,f_K\in L^2({\varGamma }{\setminus } {\varGamma }_0)\), so that

Let \(v_0,\ldots ,v_K\in H^1({\varOmega })\) be the associated solutions from the definition of \(A_0,\ldots ,A_K\) (with \(f=f_k\)), and set \(v:=v_1+\cdots +v_K-v_0\). Then \(v|_{\partial {\varOmega }}=0\), and \(\partial _\nu v|_{\partial {\varOmega }}=0\), so that by unique continuation \(v=0\) in \({\varOmega }{\setminus } {\overline{D}}\). But this also yields that \(v|_{{\varGamma }}=0\), and from this we obtain that \(v=0\) in D, so that \(v=0\) in all of \({\varOmega }\).

Hence, using (32) and (33), we obtain for all \(w\in H^1({\varOmega })\),

and this shows that

However, since this holds for all \(f_0\in L^2({\varGamma }_0)\), this would imply that

where

are the compact trace operator and the continuous multiplication operator by \(\gamma ^{(1)}-\gamma ^{(k)}\). Hence, the closed infinite-dimensional space \(L^2({\varGamma }_0)\) would be the range of a compact operator, which is not possible cf., e.g., [92, Thm. 4.18]. This contradiction shows that (35) must hold, and thus the assertion is proven. \(\square \)

Proof of Theorem 5

-

1.

follows from Lemma 8.

-

2.

Let \(j\in \{1,\ldots ,n\}\). As in the proof of Theorem 4(b), it follows from the simultaneously localized potentials result in Lemma 10, and a density argument, that \(M_m^{(j)}\in \mathbb {R}^{m\times m}\) will have a positive eigenvalue if the dimension \(m\in \mathbb {N}\) is sufficiently large. Hence, for sufficiently large m, an eigenvector \(v^{(j)}\in \mathbb {R}^m\) corresponding to a largest eigenvalue of \(M_m^{(j)}\) will fulfill (with \(g_j:=\sum _{l=1}^m v^{(j)}_l f_l\in L^2(\partial {\varOmega })\))

$$\begin{aligned} 0 \le (v^{(j)})^T M_m^{(j)} v^{(j)}&=\frac{\alpha }{2} \int _{{\varGamma }_j} |u_{\kappa ^{(j,1)}}^{g_j}|^2 \,{\mathrm{d}} s - \beta \sum _{k=1}^K \int _{{\varGamma }{\setminus } {\varGamma }_j} |u_{\kappa ^{(j,k)}}^{g_j}|^2 \,{\mathrm{d}} s. \end{aligned}$$Using that for all \(k\in \{1,\ldots ,K\}\)

$$\begin{aligned} \inf (\kappa ^{(j,1)}|_{{\varGamma }_j})&= b+ (b-a)\left( \frac{2+3\epsilon }{2cn}\right) \ge b,\\ \Vert \kappa ^{(j,k)} - \kappa ^{(j,1)}\Vert _{L^\infty ({\varGamma }_j)},&\le (b-a) (K-1)\frac{\epsilon }{2cn} \le (b-a) \left( 1+\epsilon + \frac{1+\epsilon }{cn}\right) , \end{aligned}$$we have that

$$\begin{aligned} 1+ \frac{ \Vert \kappa ^{(j,k)} - \kappa ^{(j,1)}\Vert _{L^\infty ({\varGamma }_j)}}{\inf (\kappa ^{(j,1)}|_{{\varGamma }_j})} \le 1+ \frac{b-a}{b} \left( 1+\epsilon + \frac{1+\epsilon }{cn}\right) =\frac{1}{\alpha }, \end{aligned}$$and thus it follows from Lemma 9

$$\begin{aligned}&\frac{1}{2} \int _{{\varGamma }_j} |u_{\kappa ^{(j,k)}}^{g_j}|^2 \,{\mathrm{d}} s - \beta \int _{{\varGamma }{\setminus } {\varGamma }_j} |u_{\kappa ^{(j,k)}}^{g_j}|^2 \,{\mathrm{d}} s\\&\quad \ge \frac{\alpha }{2} \int _{{\varGamma }_j} |u_{\kappa ^{(j,1)}}^{g_j}|^2 \,{\mathrm{d}} s - \beta \sum _{k'=1}^K \int _{{\varGamma }{\setminus } {\varGamma }_j} |u_{\kappa ^{(j,k')}}^{g_j}|^2 \,{\mathrm{d}} s \ge 0. \end{aligned}$$Hence, (31) is fulfilled.

\(\square \)

Remark 2

Regarding the formulation of Theorem 5(b), note that we actually prove that the matrix \(M_m^{(j)}\in \mathbb {R}^{m\times m}\) has a positive eigenvalue if the dimension m is sufficiently large, and that an eigenvector corresponding to a positive eigenvalue leads to a boundary current \(g_j\) that fulfills (31). But the estimates that we use in the proof of 5(b) are far from being sharp, so that it seems worth checking (31) already for eigenvectors to a largest eigenvalue that is not yet positive.

3.3 Numerical results

We test our results on the simple example setting shown in Fig. 1. \({\varOmega }\subset \mathbb {R}^2\) is the two-dimensional unit circle, and D is a square with corner coordinates \((0,-0.1)\), (0, 0.3), \((-0.4,0.3)\) and \((-0.4,-0.1)\). The boundary \({\varGamma }=\partial D\) is decomposed into \(n=4\) parts \({\varGamma }_1,\ldots ,{\varGamma }_4\) denoting (in this order) the right, top, left, and bottom side of the square. We assume that the unknown true Robin transmission coefficient \({{\hat{\gamma }}}:\ {\varGamma }\rightarrow \mathbb {R}\) is a-priori known to be bounded by \(a:=1\) and \(b:=2\) and that it is known to be piecewise-constant with respect to the partition of \({\varGamma }\), i.e.,

Recall that, for the ease of notation, we identify a piecewise-constant function \(\gamma \in L^2({\varGamma })\) with the vector \((\gamma _1,\ldots ,\gamma _4)\in \mathbb {R}^4\), and simply write a for the constant function \(\gamma (x)=a\), and for the vector \((a,a,a,a)\in \mathbb {R}^4\) (and use b analogously).

3.3.1 Choosing the measurements

We first apply Theorem 5 to construct \(n=4\) Neumann boundary functions \(g_1,\ldots , g_4\in L^2(\partial {\varOmega })\) so that the four measurements

and the Newton method applied to F globally converges.

To implement Theorem 5, we choose \(\epsilon :=0.9\,\frac{a}{2(b-a)}\), which yields \(K=173\), and \((f_m)_{m\in \mathbb {N}}\subset L^2(\partial {\varOmega })\) as the standard trigonometric polynomial basis functions

with \(\varphi \) denoting the angle of a point on the unit circle \(\partial {\varOmega }\). For each \(j\in \{1,\ldots ,n\}\), we then calculate the matrix \(M_m^{(j)}\in \mathbb {R}^{m\times m}\) starting with \(m=1\), and increase the dimension \(m\in \mathbb {N}\), until an eigenvector \(v^{(j)}\in \mathbb {R}^m\) corresponding to a largest eigenvalue of this matrix has the property that

fulfills (31) for all \(k=1,\ldots ,K\). To calculate the entries of \(M_m^{(j)}\), and for checking (31), the required solutions \(u^{f_i}_{\kappa ^{(j,k)}}\) of the Robin transmission problem (22)–(25) with Neumann boundary functions \(f_i\), \(i=1,\ldots ,m\) and Robin transmission coefficients \(\kappa ^{(j,k)}\), as defined in (30), were obtained using the commercial finite element software Comsol.

For our setting we had to increase the dimension up to at most \(m=15\), so that all \(g_j\) are trigonometric polynomials of order less or equal 7. Figure 2 shows the boundary functions \(g_j\) plotted with respect to the boundary angle on the unit circle \(\partial {\varOmega }\).

Four boundary currents \(g_1,\ldots ,g_4\in L^2(\partial {\varOmega })\) for which the measurements \(F(\gamma ):=\left( \int _{\partial {\varOmega }} g_j {\varLambda }(\gamma ) g_j \,{\mathrm{d}} s\right) _{j=1}^4\) uniquely determine \(\gamma \in [a,b]^4\subset \mathbb {R}^4\) by a globally convergent Newton method

From checking (31), we also obtain the Lipschitz stability constant for \(F(\gamma ):=\left( \int _{\partial {\varOmega }} g_j {\varLambda }(\gamma ) g_j \,{\mathrm{d}} s\right) _{j=1}^4\) as described in lemma 8(c). For our setting we obtain the stability estimate

where I is the slightly enlarged interval from Lemma 8.

Note that here and in the following we consider the measurement error relative to \( \Vert F(2)-F(1)\Vert _\infty \) as this is the width of the measuring range \(F(1)\ge F(\gamma )\ge F(2)\).

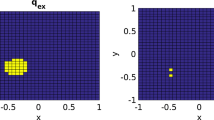

The property (31) can be interpreted in the sense that the boundary current \(g_j\) generates an electric potential for which the corresponding solution \(|u_{\kappa }^{g_j}|^2\) is much larger on \({\varGamma }_j\) than on the remaining boundary \({\varGamma }{\setminus } {\varGamma }_j\), and that this simultaneously holds for several (but finitely many) Robin transmission coefficients \(\kappa =\kappa ^{(j,k)}\). To illustrate this localization property, Fig. 3 shows \(|u_{\kappa }^{g_j}|^2\) (in logarithmic scale) for \(k=1\) and \(k=K\).

Plot of \(\log |u_{\kappa }^{g_j}|^2\) generated by the four boundary currents \(g_j\) in Fig. 2 for \(j=1,\ldots ,4\) (first to fourth column) and \(\kappa =\kappa ^{(j,k)}\) for \(k=1\) (first line) and \(k=K\) (last line) showing the localization on boundary part \({\varGamma }_j\)

Let us make a comment on improving the computation time. Note that the properties of F only depend on whether the used Neumann functions \(g_j\) have the desired property (31), and that our rigorous approach of constructing \(g_j\) is computationally more expensive than checking whether some given \(g_j\) fulfills (31). In fact, for fixed \(j\in \{1,\ldots ,n\}\), the construction of the matrix \(M_m^{(j)}\) requires solving the PDE (22)–(25) for all combinations of K different Robin transmission coefficients and m different Neumann boundary values. On the other hand, checking whether a given Neumann boundary function \(g_j\) has the desired property (31) only requires solving the PDE (22)–(25) for K different Robin transmission coefficients and the single Neumann boundary function \(g_j\). Moreover, as long as \(g_j\) does not fulfill (31), the checking might require very few PDE solutions if (31) already fails to hold for a small k.

Hence, one might try computationally cheaper heuristic approaches to construct \(g_j\) that satisfy (31). In our experiments, we successfully used the ad-hoc approximation

(which only requires 2m PDE solutions), and always found that increasing the dimension \(m\in \mathbb {N}\) lead to Neumann boundary function \(g_j\) fulfilling (31) for all \(k\in \{1,\ldots ,K\}\). Moreover, in our experiments, we found the functions \(g_j\) constructed with this faster heuristic approach virtually identical to those constructed with the exact matrix \(M_m^{(j)}\) from Theorem 5.

3.3.2 Global convergence of Newton’s method

We numerically study the theoretically predicted global convergence of the standard Newton method when applied to the measurements \(g_1,\ldots ,g_4\) constructed in the last subsection. We slightly change the definition of F and define the measurements relative to the known lower bound \(\gamma =a\)

and numerically evaluate F using that, for all \(g\in L^2(\partial {\varOmega })\), and \(\gamma \in L^\infty _+({\varGamma })\),

which immediately follows from the variational formulation of the Robin transmission problem (26). Note that this approach is numerically more stable than calculating \({\varLambda }(\gamma )\) and \({\varLambda }(a)\) separately as it avoids loss of significance effects.

We choose the true coefficient value as \({{\hat{\gamma }}}:=(1.3,1.8,1.5,1.9)\), and first test the reconstruction for noiseless data \(y^\delta :={\hat{y}}:=F({{\hat{\gamma }}})\). Starting with the lower bound \(\gamma ^{(0)}:=(1,1,1,1)\), we implement the standard Newton method

where the (j, l)th entry of the Jacobian matrix \(F'(\gamma )\in \mathbb {R}^{4\times 4}\) is given by \(\int _{{\varGamma }_l} |u^{(g_j)}_\gamma |^2 \,{\mathrm{d}} s\), cf. Lemma 6.

We repeat this for noisy data with relative noise level \(\delta >0\), that we obtain by adding a vector with random entries to \({\hat{y}}\), so that \( \Vert y^\delta -{\hat{y}}\Vert _\infty =\delta \Vert F(b)\Vert _\infty \). Note that \(F(a)=0\), so that this chooses the norm level relative to the measurement range. For the noiseless case \(\delta =0\) we committed the so-called inverse crime of using the same forward solver (i.e., the same finite element mesh) for simulating the data \({\hat{y}}=F({{\hat{\gamma }}})\) and for evaluating F and \(F'\) in the Newton iteration. For the noisy cases \(\delta >0\), we used a different mesh for the forward and inverse solvers.

Figure 4 shows the error of the first Newton iterations for the case \(\delta =0\), \(\delta =10^{-5}\), \(\delta =10^{-3}\), and \(\delta =10^{-1}\), and demonstrates the theoretically predicted quadratic convergence properties. At this point, let us stress that also for noisy data \(y^\delta \), Lemma 8 yields that there exists a unique solution \(y^\delta \in I\supseteq [a,b]^n\) of

and that the standard Newton method converges to this solution \(\gamma ^\delta \), as long as \(y^\delta \) lies within the bounds \(F(a)\ge y^\delta \ge F(b)\) (which is easily guaranteed by capping or flooring the values in \(y^\delta \)). Moreover, the obtained solution will satisfy the error estimate

due to the stability estimate (36) obtained in the last subsection.

We finish this subsection with an example where the true Robin transmission coefficient \({{\hat{\gamma }}}\in L^\infty _+({\varGamma })\) is not piecewise constant but within the a-priori known bounds

Then \({\hat{y}}=\left( \int _{\partial {\varOmega }} g_j \left( {\varLambda }(\gamma ) - {\varLambda }(a)\right) g_j \,{\mathrm{d}} s\right) _{j=1}^4\in \mathbb {R}^4\) will still satisfy \(F(a)\ge {\hat{y}} \ge F(b)\), so that there exists a unique solution \(\gamma \in I\supseteq [a,b]^n\) of \(F(\gamma )={\hat{y}}\), i.e., there exists a unique piecewise constant Robin transmission coefficient that leads to the same measured data as the true non-piecewise-constant coefficient function. The Newton iteration applied to \({\hat{y}}\) (or a noisy version \(y^\delta \)) will globally converge to this piecewise constant solution \(\gamma \) (or an approximation \(\gamma ^\delta \)), see Fig. 5 for a numerical example.

3.3.3 Effect of interval width and number or unknowns

Our result in Theorem 5 holds for any a-priori known bounds \(b>a>0\) and any number of unknowns \(n\in \mathbb {N}\). Thus, in theory, we can treat arbitrary large intervals [a, b] and arbitrary fine resolutions of \({\varGamma }\). However, numerically, the constructed trigonometric polynomials \(g_j\) will quickly become more and more oscillatory, and the calculated Lipschitz constants will quickly increase.

To demonstrate the effect of the interval width, we proceed as in Sect. 3.3.1 to calculate four boundary currents \(g_1,\ldots ,g_4\in L^2(\partial {\varOmega })\) that uniquely determine \(\gamma \in [a,b]^4\) and yield global Newton convergence for \(a=1\), and \(b\in \{2,3,4,5\}\). Table 1 shows the dimension of the trigonometric polynomial subspace of \(L^2(\partial {\varOmega })\) that contains \(g_1,\ldots ,g_4\) and the obtained Lipschitz constant for the inverse problem of determining \(\gamma \) from the corresponding measurements.

To demonstrate the effect of the number of unknowns, we then replace the square D by regular polygons with \(n=3\), \(n=4\), \(n=5\), and \(n=6\) sides keeping the polygon center and circumradius the same as in the square (\(n=4\)) case. We assume that \(\gamma \) is piecewise constant with respect to the polygon sides. As in Sect. 3.3.1 we then calculate n boundary currents \(g_1,\ldots ,g_n\in L^2(\partial {\varOmega })\) that uniquely determine \(\gamma \in [1,2]^n\) and yield global Newton convergence. The required dimension of the trigonometric polynomial subspace of \(L^2(\partial {\varOmega })\) and the obtained Lipschitz constant are shown in Table 2.

In both situations, the boundary currents quickly become highly oscillatory, and the calculated stability constant worsens. Hence, at the current state, our approach will only be feasible for moderate contrasts and relatively few unknowns as stated in the introduction. It should be noted, however, that our criterion (31) in Theorem 5 is sufficient but possibly not necessary for uniqueness, Lipschitz stability and global Newton convergence. The constructed boundary currents and the calculated Lipschitz constants may be far from optimal. Since our result is (to the knowledge of the author) the very first on uniqueness, global convergence and explicit Lipschitz stability constants for a discretized inverse coefficient problem, there may well be room for improvement and significantly sharper estimates that could practically yield in less oscillations and better stability constants.

4 Conclusions

We have derived a method to determine which (finitely many) measurements uniquely determine the unknown coefficient in an inverse coefficient problem with a given resolution, and proved global convergence of Newton’s method for the resulting discretized non-linear inverse problem. Our method also allows to explicitly calculate the Lipschitz stability constant, and yields an error estimate for noisy data. To the knowledge of the author, these are the first such results for discretized inverse coefficient problems.