Abstract

We consider Pareto optimal matchings (POMs) in a many-to-many market of applicants and courses where applicants have preferences, which may include ties, over individual courses and lexicographic preferences over sets of courses. Since this is the most general setting examined so far in the literature, our work unifies and generalizes several known results. Specifically, we characterize POMs and introduce the Generalized Serial Dictatorship Mechanism with Ties (GSDT) that effectively handles ties via properties of network flows. We show that GSDT can generate all POMs using different priority orderings over the applicants, but it satisfies truthfulness only for certain such orderings. This shortcoming is not specific to our mechanism; we show that any mechanism generating all POMs in our setting is prone to strategic manipulation. This is in contrast to the one-to-one case (with or without ties), for which truthful mechanisms generating all POMs do exist.

1 Introduction

We study a many-to-many matching market that involves two finite disjoint sets, a set of applicants and a set of courses. Each applicant finds a subset of courses acceptable and has a preference ordering, not necessarily strict, over these courses. Courses do not have preferences. Moreover, each applicant has a quota on the number of courses she can attend, while each course has a quota on the number of applicants it can admit.

A matching is a set of applicant-course pairs such that each applicant is paired only with acceptable courses and the quotas associated with the applicants and the courses are respected. The problem of finding an “optimal” matching given the above market is called the Course Allocation problem (CA). Although various optimality criteria exist, Pareto optimality (or Pareto efficiency) remains the most popular one (see, e.g., [1, 2, 8, 9, 19]). Pareto optimality is a fundamental concept that economists regard as a minimal requirement for a “reasonable” outcome of a mechanism. A matching is a Pareto optimal matching (POM) if there is no other matching in which no applicant is worse off and at least one applicant is better off. Our work examines Pareto optimal many-to-many matchings in the setting where applicants’ preferences may include ties. In the special case where each applicant and course has quota equal to one, our setting reduces to the extensively studied House Allocation problem (HA) [1, 15, 21], also known as the Assignment problem [4, 12]. Computational aspects of HA have been examined thoroughly [2, 18] and particularly for the case where applicants’ preferences are strict. In [2] the authors provide a characterization of POMs in the case of strict preferences and utilize it in order to construct polynomial-time algorithms for checking whether a given matching is a POM and for finding a POM of maximum size. They also show that any POM in an instance of HA with strict preferences can be obtained through the well-known Serial Dictatorship Mechanism (SDM) [1]. SDM is a straightforward greedy algorithm that allocates houses sequentially according to some exogenous priority ordering of the applicants.

Recently, the above results have been extended in two different directions. The first one [17] considers HA in settings where preferences may include ties. Prior to [17], few works in the literature had considered extensions of SDM to such settings. The difficulty regarding ties, observed already in [20], is that the assignments made in the individual steps of the SDM are not unique, and an unsuitable choice may result in an assignment that violates Pareto optimality. To see this, consider a setting with two applicants \(a_{1}\) and \(a_{2}\), and two houses \(h_{1}\) and \(h_{2}\). Assume that \(a_{1}\) finds both houses acceptable and is indifferent between them, and that \(a_{2}\) finds only \(h_{1}\) acceptable. The only Pareto optimal matching for this setting is \(\{(a_{1}, h_{2}),(a_{2}, h_{1})\}\). Assume that \(a_{1}\) is served first and that, as both houses are equally acceptable to her, she is assigned \(h_{1}\) (after an arbitrary tie-breaking). Then, when \(a_{2}\)’s turn arrives, no house that she finds acceptable remains, so she is left unmatched, and the resulting matching is not Pareto optimal. In [5] and [20] an implicit extension of SDM is provided (in the former case for dichotomous preferences, where an applicant’s preference list comprises a single tie containing all acceptable houses), but without an explicit description of an algorithmic procedure. Krysta et al. [17] describe a mechanism called the Serial Dictatorship Mechanism with Ties (SDMT) that combines SDM with the use of augmenting paths to ensure Pareto optimality. SDMT includes an augmentation step, in which applicants already assigned a house may exchange it for another, equally preferred one, to enable another applicant to take a house that is most preferred given the assignments made so far. 
They also show that any POM in an instance of HA with ties can be obtained by an execution of SDMT and also provide the so-called Random Serial Dictatorship Mechanism with Ties (RSDMT) whose (expected) approximation ratio is \(\frac {e}{e-1}\) with respect to the maximum-size POM.
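The two-applicant example above can be replayed in a few lines. The sketch below is a naive serial dictatorship with arbitrary tie-breaking (it is not SDMT, which additionally uses augmenting paths); the function name `naive_sdm` and the list-of-ties encoding of preferences are assumptions made for illustration.

```python
def naive_sdm(order, prefs, houses):
    """Serve applicants in `order`; each takes one free house from her best tie.

    prefs[a] is a list of ties (lists of houses), most preferred tie first.
    Ties are broken arbitrarily: the first listed free house is taken.
    """
    free = set(houses)
    matching = {}
    for a in order:
        for tie in prefs[a]:
            available = [h for h in tie if h in free]
            if available:
                matching[a] = available[0]
                free.remove(available[0])
                break
    return matching


# a1 is indifferent between h1 and h2; a2 accepts only h1.
prefs = {"a1": [["h1", "h2"]], "a2": [["h1"]]}
unlucky = naive_sdm(["a1", "a2"], prefs, ["h1", "h2"])

# The same mechanism with the tie listed in the other order.
prefs_alt = {"a1": [["h2", "h1"]], "a2": [["h1"]]}
lucky = naive_sdm(["a1", "a2"], prefs_alt, ["h1", "h2"])
```

With the first tie-breaking, `a2` stays unmatched; with the second, both applicants are matched. The outcome therefore depends on an arbitrary choice that the mechanism itself has to make carefully.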

The second direction [8] extends the results of [2] to the many-to-many setting (i.e., CA) with strict preferences, while also allowing for a structure of applicant-wise acceptable sets that is more general than the one implied by quotas. Namely, [8] assumes that each applicant selects from a family of course subsets that is downward closed with respect to inclusion. This work provides a characterization of POMs assuming that the preferences of applicants over sets of courses are obtained from their (strict) preferences over individual courses in a lexicographic manner. Using this characterization, it is shown that deciding whether a given matching is a POM can be accomplished in polynomial time. In addition, [8] generalizes SDM to provide the Generalized Serial Dictatorship Mechanism (GSD), which can be used to obtain any POM for CA under strict preferences. The main idea of GSD is to allow each applicant to choose not her most preferred set of courses at once but, instead, only one course at a time (i.e., the most preferred among non-full courses that can be added to the courses already chosen). This result is important as the version of SDM where an applicant chooses immediately her most preferred set of courses cannot obtain all POMs.
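A minimal sketch of the one-course-at-a-time idea, assuming plain quotas b and q rather than the more general downward-closed families of [8]. The function name `gsd_sketch` and the encoding of the priority ordering as a sequence in which an applicant may appear several times are illustrative assumptions, not the formulation of [8].

```python
def gsd_sketch(sequence, prefs, b, q):
    """One course per turn, strict preferences.

    sequence: applicants in serving order (an applicant may appear repeatedly)
    prefs[a]: acceptable courses of a, most preferred first (no ties)
    b[a], q[c]: applicant and course quotas
    """
    matching = {a: [] for a in prefs}
    load = {c: 0 for c in q}
    for a in sequence:
        if len(matching[a]) >= b[a]:
            continue  # applicant is already full
        for c in prefs[a]:
            # most preferred non-full course not yet held by a
            if c not in matching[a] and load[c] < q[c]:
                matching[a].append(c)
                load[c] += 1
                break
    return matching


prefs = {"a1": ["c1", "c2"], "a2": ["c1", "c2"]}
b = {"a1": 2, "a2": 2}
q = {"c1": 1, "c2": 1}

all_at_once = gsd_sketch(["a1", "a1", "a2", "a2"], prefs, b, q)
interleaved = gsd_sketch(["a1", "a2", "a1", "a2"], prefs, b, q)
```

In this toy instance the interleaved outcome cannot be reached if each applicant takes her most preferred set at once, since whoever is served first would take both courses.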

Our Contribution

In the current work, we combine the directions appearing in [17] and [8] to explore the many-to-many setting in which applicants have preferences, which may include ties, over individual courses. We extend these preferences to sets of courses lexicographically, for three reasons: lexicographic set preferences naturally describe human behavior [13], they have already been considered in models of exchange of indivisible goods [8, 11], and they possess theoretically interesting properties, including responsiveness [16].

We provide a characterization of POMs in this setting, leading to a polynomial-time algorithm for testing whether a given matching is Pareto optimal. We introduce the Generalized Serial Dictatorship Mechanism with Ties (GSDT) that generalizes both SDMT and GSD. SDM assumes a priority ordering over the applicants, according to which applicants are served one by one by the mechanism. Since in our setting applicants can be assigned more than one course, each applicant can return to the ordering several times (up to her quota), each time choosing just one course. The idea of using augmenting paths [17] has to be employed carefully to ensure that during course shuffling no applicant exchanges a previously assigned course for a less preferred one. To achieve this, we utilize methods and properties of network flows. Although we prove that GSDT can generate all POMs using different priority orderings over applicants, we also observe that some of the priority orderings guarantee truthfulness whereas others do not. That is, there may exist priority orderings for which some applicant benefits from misrepresenting her preferences. This is in contrast to SDM and SDMT in the one-to-one case, where all executions of these mechanisms induce truthfulness. This shortcoming, however, is not specific to our mechanism, since we establish that any mechanism generating all POMs is prone to strategic manipulation by one or more applicants.

For an extended abstract containing the results presented in this paper, see [7].

Organization of the Paper

In Section 2 we define our notation and terminology. The characterization is provided in Section 3, while GSDT is presented in Section 4. A discussion on applicants’ incentives in GSDT is provided in Section 5. Avenues for future research are discussed in Section 6.

2 Preliminary Definitions of Notation and Terminology

Let \(A=\{a_{1},a_{2},\cdots ,a_{n_{1}}\}\) be the set of applicants, \(C=\{c_{1},c_{2}, \cdots , c_{n_{2}}\}\) the set of courses, and let [i] denote the set {1,2,…, i}. Each applicant a has a quota b(a) that denotes the maximum number of courses a can accommodate into her schedule, and likewise each course c has a quota q(c) that denotes the maximum number of applicants it can admit. Each applicant finds a subset of courses acceptable and has a transitive and complete preference ordering, not necessarily strict, over these courses. We write \(c \succ_{a} c^{\prime}\) to denote that applicant a (strictly) prefers course c to course c′, and \(c \simeq_{a} c^{\prime}\) to denote that a is indifferent between c and c′. We write \(c \succeq_{a} c^{\prime}\) to denote that a either prefers c to c′ or is indifferent between them, and say that a weakly prefers c to c′.

Because of indifference, each applicant divides her acceptable courses into indifference classes such that she is indifferent between the courses in the same class and has a strict preference over courses in different classes. Let \(C^{a}_{t}\) denote the t’th indifference class, or tie, of applicant a, where \(t \in [n_{2}]\). The preference list of any applicant a is the tuple of sets \(C^{a}_{t}\), i.e., \(P(a) = (C^{a}_{1}, C^{a}_{2} , \cdots ,C^{a}_{n_{2}})\); we assume that \(C^{a}_{t} = \emptyset \) implies \(C^{a}_{t^{\prime }} = \emptyset \) for all t′>t. Occasionally we consider P(a) to be a set itself and write c∈P(a) instead of \(c \in C^{a}_{t}\) for some t. We denote by \(\mathcal {P}\) the joint preference profile of all applicants, and by \(\mathcal {P}(-a)\) the joint profile of all applicants except a. Under these definitions, an instance of CA is denoted by \(I=(A, C, \mathcal {P}, b, q)\). Such an instance appears in Table 1.

A (many-to-many) assignment μ is a subset of A×C. For a∈A, μ(a)={c∈C:(a, c)∈μ} and for c∈C, μ(c)={a∈A:(a, c)∈μ}. An assignment μ is a matching if μ(a)⊆P(a) (and thus μ is individually rational) and |μ(a)|≤b(a) for each a∈A, and |μ(c)|≤q(c) for each c∈C. We say that a is exposed if |μ(a)|<b(a), and is full otherwise. Analogous definitions of exposed and full hold for courses.

For an applicant a and a set of courses S, we define the generalized characteristic vector \(\chi_{a}(S)\) as the vector \((|S\cap C_{1}^{a}|, |S\cap C_{2}^{a}|,\ldots ,|S\cap C_{n_{2}}^{a}| )\). We assume that for any two sets of courses S and U, a prefers S to U if and only if \(\chi_{a}(S) >_{lex} \chi_{a}(U)\), i.e., if and only if there is an indifference class \(C_{t}^{a}\) such that \(|S\cap C_{t}^{a}|>|U\cap C_{t}^{a}|\) and \(|S\cap C_{t^{\prime }}^{a}|= |U\cap C_{t^{\prime }}^{a}|\) for all t′<t. If a neither prefers S to U nor U to S, then she is indifferent between S and U. We write \(S \succ_{a} U\) if a prefers S to U, \(S \simeq_{a} U\) if a is indifferent between S and U, and \(S \succeq_{a} U\) if a weakly prefers S to U.
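The lexicographic comparison can be sketched directly, since Python compares tuples lexicographically. The names `char_vector` and `compare_sets` are hypothetical, and the preference list is encoded as a list of ties holding only the non-empty indifference classes, which is an assumption of this sketch.

```python
def char_vector(ties, courses):
    """Generalized characteristic vector: one count per indifference class."""
    chosen = set(courses)
    return tuple(len(chosen & set(tie)) for tie in ties)


def compare_sets(ties, s, u):
    """Return 1 if S is preferred to U, -1 if U is preferred to S, 0 if indifferent."""
    x, y = char_vector(ties, s), char_vector(ties, u)
    return (x > y) - (x < y)  # tuple comparison is lexicographic


# Applicant with classes ({c1, c2}, {c3}, {c4}).
ties = [["c1", "c2"], ["c3"], ["c4"]]
v = char_vector(ties, {"c1", "c3"})
r1 = compare_sets(ties, {"c1", "c3"}, {"c2", "c4"})   # (1,1,0) vs (1,0,1)
r2 = compare_sets(ties, {"c1"}, {"c3", "c4"})         # (1,0,0) vs (0,1,1)
r3 = compare_sets(ties, {"c1"}, {"c2"})               # (1,0,0) vs (1,0,0)
```

Note that a single course from a better class outweighs any number of courses from worse classes, as the second comparison shows.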

A matching μ is a Pareto optimal matching (POM) if there is no other matching in which some applicant is better off and none is worse off. Formally, μ is Pareto optimal if there is no matching μ′ such that \(\mu^{\prime}(a) \succeq_{a} \mu(a)\) for all a∈A, and \(\mu ^{\prime }(a^{\prime }) {\succ }_{a^{\prime }} \mu (a^{\prime })\) for some a′∈A. If such a μ′ exists, we say that μ′ Pareto dominates μ.
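As a sanity check of the definition, the following sketch tests whether one matching Pareto dominates another. It assumes that both inputs are already valid matchings and that preferences are given as lists of ties; the names `chi` and `pareto_dominates` are illustrative only.

```python
def chi(ties, courses):
    """Characteristic vector of a course set with respect to a list of ties."""
    chosen = set(courses)
    return tuple(len(chosen & set(tie)) for tie in ties)


def pareto_dominates(prefs, mu_new, mu_old):
    """True if no applicant is worse off in mu_new and at least one is better off.

    prefs[a]: list of ties; mu_new[a], mu_old[a]: sets of courses (missing = empty).
    """
    someone_better = False
    for a, ties in prefs.items():
        x = chi(ties, mu_new.get(a, ()))
        y = chi(ties, mu_old.get(a, ()))
        if x < y:          # applicant a is worse off
            return False
        if x > y:          # applicant a is strictly better off
            someone_better = True
    return someone_better


prefs = {"a1": [["h1", "h2"]], "a2": [["h1"]]}
mu_old = {"a1": {"h1"}}                      # a2 unmatched
mu_new = {"a1": {"h2"}, "a2": {"h1"}}
forward = pareto_dominates(prefs, mu_new, mu_old)
backward = pareto_dominates(prefs, mu_old, mu_new)
```

Testing Pareto optimality this way would require enumerating all matchings, which is why a structural characterization is needed for an efficient test.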

A deterministic mechanism ϕ maps an instance to a matching, i.e. ϕ:I↦μ where I is a CA instance and μ is a matching in I. A randomized mechanism ϕ maps an instance to a distribution over possible matchings. Applicants’ preferences are private knowledge and an applicant may prefer not to reveal her preferences truthfully. A deterministic mechanism is dominant strategy truthful (or just truthful) if all applicants always find it best to declare their true preferences, no matter what other applicants declare. Formally speaking, for every applicant a and every possible declared preference list P′(a), \(\phi (P(a),\mathcal {P}(-a))\succeq _{a}\phi (P^{\prime }(a),\mathcal {P}(-a))\), for all \(P(a), \mathcal {P}(-a)\). A randomized mechanism ϕ is universally truthful if it is a probability distribution over deterministic truthful mechanisms.

3 Characterizing Pareto Optimal Matchings

Manlove [18, Sec. 6.2.2.1] provided a characterization of Pareto optimal matchings in HA with preferences that may include indifference. He defined three different types of coalitions with respect to a given matching such that the existence of any means that a subset of applicants can trade among themselves (possibly using some exposed course) ensuring that, at the end, no one is worse off and at least one applicant is better off. He also showed that if no such coalition exists, then the matching is guaranteed to be Pareto optimal. We show that this characterization extends to the many-to-many setting, although the proof is more complex and involved than in the one-to-one setting. We then utilize the characterization in designing a polynomial-time algorithm for testing whether a given matching is Pareto optimal.

In what follows we assume that in each sequence \(\mathfrak {C}\) no applicant or course appears more than once.

An alternating path coalition with respect to μ comprises a sequence \(\mathfrak {C}=\langle c_{j_{0}}, a_{i_{0}}, c_{j_{1}}, a_{i_{1}},\ldots ,c_{j_{r-1}},a_{i_{r-1}},c_{j_{r}}\rangle \) where r ≥ 1, \(c_{j_{k}} \in \mu (a_{i_{k}})\) (0≤k≤r−1), \(c_{j_{k}} \not \in \mu (a_{i_{k-1}})\) (1≤k≤r), \(a_{i_{0}}\) is full, and \(c_{j_{r}}\) is an exposed course. Furthermore, \(a_{i_{0}}\) prefers \(c_{j_{1}}\) to \(c_{j_{0}}\) and, if r ≥ 2, \(a_{i_{k}}\) weakly prefers \(c_{j_{k+1}}\) to \(c_{j_{k}}\) (1≤k≤r−1).

An augmenting path coalition with respect to μ comprises a sequence \(\mathfrak {C}=\langle a_{i_{0}}, c_{j_{1}},a_{i_{1}}, \ldots ,c_{j_{r-1}},a_{i_{r-1}},c_{j_{r}}\rangle \) where r ≥ 1, \(c_{j_{k}} \in \mu (a_{i_{k}})\) (1≤k≤r−1), \(c_{j_{k}} \not \in \mu (a_{i_{k-1}})\) (1≤k≤r), \(a_{i_{0}}\) is an exposed applicant, and \(c_{j_{r}}\) is an exposed course. Furthermore, \(a_{i_{0}}\) finds \(c_{j_{1}}\) acceptable and, if r ≥ 2, \(a_{i_{k}}\) weakly prefers \(c_{j_{k+1}}\) to \(c_{j_{k}}\) (1≤k≤r−1).

A cyclic coalition with respect to μ comprises a sequence \(\mathfrak {C}=\langle c_{j_{0}}, a_{i_{0}}, c_{j_{1}}, a_{i_{1}},\ldots ,c_{j_{r-1}}, a_{i_{r-1}}\rangle \) where r ≥ 2, \(c_{j_{k}} \in \mu (a_{i_{k}})\) (0≤k≤r−1), and \(c_{j_{k}} \not \in \mu (a_{i_{k-1}})\) (1≤k≤r). Furthermore, \(a_{i_{0}}\) prefers \(c_{j_{1}}\) to \(c_{j_{0}}\) and \(a_{i_{k}}\) weakly prefers \(c_{j_{k+1}}\) to \(c_{j_{k}}\) (1≤k≤r−1). (All subscripts are taken modulo r when reasoning about cyclic coalitions).

We define an improving coalition to be an alternating path coalition, an augmenting path coalition or a cyclic coalition.

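Given an improving coalition \(\mathfrak {C}\), the matching

$$\mu^{\mathfrak{C}} = \bigl(\mu \setminus \{(a_{i_{k}}, c_{j_{k}}) : \delta \leq k \leq r-1\}\bigr) \cup \{(a_{i_{k}}, c_{j_{k+1}}) : 0 \leq k \leq r-1\} \qquad (1)$$

is defined to be the matching obtained from μ by satisfying \(\mathfrak {C}\) (δ = 1 in the case that \(\mathfrak {C}\) is an augmenting path coalition, otherwise δ = 0).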
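Satisfying a coalition can be read as follows: every applicant on the sequence gives up the course that precedes her (if there is one) and receives the course that follows her, wrapping around for cyclic coalitions. The sketch below implements this reading; the function name `satisfy`, the representation of μ as a set of pairs, and the requirement that applicant and course names be distinct are assumptions made for illustration.

```python
def satisfy(mu, seq, applicants):
    """Return the matching obtained from `mu` by satisfying the coalition `seq`.

    mu: set of (applicant, course) pairs
    seq: alternating sequence of courses and applicants, as in the definitions
    applicants: set of applicant names (disjoint from course names)
    """
    new_mu = set(mu)
    n = len(seq)
    for idx, x in enumerate(seq):
        if x not in applicants:
            continue
        if idx > 0:
            new_mu.discard((x, seq[idx - 1]))   # give up the preceding course
        # receive the following course; the modulo only matters for a
        # cyclic coalition, whose last element is an applicant
        new_mu.add((x, seq[(idx + 1) % n]))
    return new_mu


applicants = {"a1", "a2"}
mu = {("a1", "h1")}
# Augmenting path coalition <a2, h1, a1, h2>: a2 and h2 are exposed,
# and a1 is indifferent between h1 and h2.
improved = satisfy(mu, ["a2", "h1", "a1", "h2"], applicants)
```

In an augmenting path coalition the first element is an applicant with no preceding course, so nothing is removed for her, which matches the role of δ = 1.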

We will soon show that improving coalitions are at the heart of characterizing Pareto optimal matchings (Theorem 2). The next lemma will come in handy in the proof of Theorems 2 and 10. We say that a sequence of applicants and courses is a pseudocoalition if it satisfies all conditions of an improving coalition except that some courses or applicants may appear more than once.

Lemma 1

Let a matching μ in an instance I of CA admit a pseudocoalition K of length ℓ for some finite ℓ. Then μ admits an improving coalition \(\mathfrak {C}\) of length at most ℓ.

Proof

We prove this by induction on ℓ. Obviously ℓ ≥ 2. For the base case, it is easy to see that K itself is an augmenting path coalition (where r = 1) if ℓ = 2, an alternating path coalition (where r = 1) if ℓ = 3, a cyclic coalition (where r = 2) if ℓ = 4 and K ends with an applicant, and an augmenting path coalition (where r = 2) if ℓ = 4 and K ends with a course. Assume that the claim holds for all pseudocoalitions of length d, d<ℓ. We show that it also holds for any given pseudocoalition K of length ℓ. In the rest of the proof we show that either K is in fact an improving coalition, or we can derive a shorter pseudocoalition K′ from K, hence completing the proof.

The Case for a Repeated Course

Assume that a course c appears more than once in K. We consider two different scenarios.

1. If c is not the very first element of K, then K is of the following form, where \(c=c_{j_{x}}=c_{j_{y}}\):

$$\dots, a_{i_{x-1}}, \overleftrightarrow{c_{j_{x}}, a_{i_{x}}, \dots}, c_{j_{y}}, \dots $$

In this case, we simply delete the portion of K under the arrow. Note that in the new sequence \(c_{j_{y}}\) appears right after \(a_{i_{x-1}}\), but \(c_{j_{y}}=c_{j_{x}}\). Hence we have obtained a shorter sequence, and it is easy to verify that this new sequence is a pseudocoalition.

2. If c is the first element of K, then K is of the following form, where \(c=c_{j_{0}}=c_{j_{y}}\):

$$\overleftrightarrow{c_{j_{0}}, a_{i_{0}}, \dots, a_{i_{y-1}}}, c_{j_{y}}, \dots $$

In this case, we keep only the portion of K under the arrow, i.e., our new sequence starts with \(c_{j_{0}}\) and ends with \(a_{i_{y-1}}\). The new sequence is shorter and it is easy to verify that it is a pseudocoalition.

The Case for a Repeated Applicant

Assume that an applicant a appears more than once in K. We consider the three different possible scenarios.

1. If a is neither the first nor the last element of K, then K is of the following form, where \(a=a_{i_{x}}=a_{i_{y}}\):

$$\dots, c_{j_{x}}, \overleftrightarrow{a_{i_{x}}, c_{j_{x+1}}, \ldots, a_{i_{y-1}}}, c_{j_{y}}, a_{i_{y}}, c_{j_{y+1}}, \dots$$

Note that \(c_{j_{x+1}} \succeq _{a_{i_{x}}} c_{j_{x}}\) and \(c_{j_{y+1}} \succeq _{a_{i_{x}}} c_{j_{y}}\) (since \(a_{i_{x}}=a_{i_{y}}\)). We consider two different cases.

   (a) If \(c_{j_{x+1}} {\succ }_{a_{i_{x}}} c_{j_{y}}\), then we keep only the portion of K under the arrow and add \(c_{j_{y}}\) to the beginning. That is, the new sequence is \(K^{\prime }=\langle c_{j_{y}}, a_{i_{x}}, c_{j_{x+1}}, \ldots , a_{i_{y-1}} \rangle \). K′ is shorter than K and it is easy to verify that it is a pseudocoalition.

   (b) If \(c_{j_{y}} \succeq _{a_{i_{x}}} c_{j_{x+1}}\), then \(c_{j_{y+1}} \succeq _{a_{i_{x}}} c_{j_{x}}\). Then we simply remove the portion of K from \(c_{j_{x+1}}\) up to \(a_{i_{y}}\). That is, the new sequence is \(K^{\prime } =\langle {\ldots } c_{j_{x}}, a_{i_{x}}, c_{j_{y+1}}\ldots \rangle \) which is shorter than K. Note that if either \(c_{j_{x+1}} \succ _{a_{i_{x}}} c_{j_{x}}\) or \(c_{j_{y+1}} \succ _{a_{i_{x}}} c_{j_{y}}\), then \(c_{j_{y+1}} \succ _{a_{i_{x}}} c_{j_{x}}\). Hence it is easy to verify that K′ is a pseudocoalition.

2. If a is the first element of K but not the last one, then K is of the following form, where \(a=a_{i_{0}}=a_{i_{y}}\):

$$a_{i_{0}}, \overleftrightarrow{c_{j_{1}}, \ldots, c_{j_{y}}, a_{i_{y}}}, c_{j_{y+1}}, \dots$$

Notice that \(a_{i_{0}}\) is exposed, finds \(c_{j_{y+1}}\) acceptable, and is not matched to it under μ. Hence we simply remove the portion of K under the arrow and get \(K^{\prime } =\langle a_{i_{0}}, c_{j_{y+1}}, \ldots \rangle \). K′ is shorter than K and it is easy to verify that it is a pseudocoalition.

3. If a is the last element of K but not the first one, then K is of the following form, where \(a=a_{i_{x}}=a_{i_{y}}\):

$$c_{j_{0}}, \ldots, c_{j_{x}}, \overleftrightarrow{a_{i_{x}}, c_{j_{x+1}}, \ldots, a_{i_{y-1}}}, c_{j_{y}}, a_{i_{y}}$$

Note that, as \(a_{i_{x}}=a_{i_{y}}\), \(c_{j_{x+1}} \succeq _{a_{i_{x}}} c_{j_{x}}\) and \(c_{j_{0}} \succeq _{a_{i_{x}}} c_{j_{y}}\). Furthermore, \(a_{i_{x}}\) is not matched to \(c_{j_{0}}\) in μ. We consider two different cases.

   (a) If \(c_{j_{x+1}} {\succ }_{a_{i_{x}}} c_{j_{y}}\), then we do as we did in Case 1(a). That is, we keep only the portion of K under the arrow and add \(c_{j_{y}}\) to the beginning; the new sequence is \(K^{\prime }=\langle c_{j_{y}}, a_{i_{x}}, c_{j_{x+1}}, \ldots , a_{i_{y-1}} \rangle \). K′ is shorter than K and it is easy to verify that it is a pseudocoalition.

   (b) If \(c_{j_{y}} \succeq _{a_{i_{x}}} c_{j_{x+1}}\), then \(c_{j_{0}} \succeq _{a_{i_{x}}} c_{j_{x+1}} \succeq _{a_{i_{x}}} c_{j_{x}}\). Then we simply remove the portion of K from \(c_{j_{x+1}}\) until the end of the sequence. That is, the new sequence is \(K^{\prime } =\langle c_{j_{0}}, {\ldots } ,c_{j_{x}}, a_{i_{x}}\rangle \). K′ is shorter than K and it is easy to verify that it is a pseudocoalition.

Note that we do not need to check the case where K starts and ends with an applicant, since then K would not fit the definition of an improving coalition.

The following theorem gives a necessary and sufficient condition for a matching to be Pareto optimal.

Theorem 2

Given a CA instance I, a matching μ is a Pareto optimal matching in I if and only if μ admits no improving coalition.

Proof

Let μ be a Pareto optimal matching in I. Assume to the contrary that μ admits an improving coalition \(\mathfrak {C}\). It is fairly easy to see that matching \(\mu ^{\mathfrak {C}}\) obtained from μ according to equation (1) Pareto dominates μ, a contradiction.

Conversely, let μ be a matching in I that admits no improving coalition, and suppose to the contrary that it is not Pareto optimal. Therefore, there exists some matching μ′≠μ such that μ′ Pareto dominates μ. Let \(G_{\mu ,\mu ^{\prime }} = \mu \oplus \mu ^{\prime }\) be the graph representing the symmetric difference of μ and μ′. \(G_{\mu ,\mu ^{\prime }}\) is hence a bipartite graph with applicants on one side and courses on the other; by abusing notation, we may use a or c to refer to a node in \(G_{\mu ,\mu ^{\prime }}\) corresponding to applicant a∈A or course c∈C, respectively. Note that each edge of \(G_{\mu ,\mu ^{\prime }}\) belongs either to μ, in which case we call it a μ-edge, or to μ′, in which case we call it a μ′-edge.

We first provide an intuitive sketch of the rest of the proof. Our goal is to find an improving coalition, hence contradicting the assumption that μ does not admit one. We start with an applicant \(a_{i_{0}}\) who strictly prefers μ′ to μ. We choose a μ′-edge \((a_{i_{0}},c_{j_{1}})\) such that \(c_{j_{1}}\) belongs to the first indifference class where μ′ is better than μ for \(a_{i_{0}}\). Either this edge already represents an improving coalition (which is the case if \(c_{j_{1}}\) is exposed in μ) or there exists a μ-edge incident to \(c_{j_{1}}\). In this fashion, we continue by adding edges that alternately belong to μ′ (when we are at an applicant node) and μ (when we are at a course node), never passing the same edge twice (by simple book-keeping). Most importantly, we always make sure that when we are at an applicant node, we choose a μ′-edge such that the applicant weakly prefers the course incident to the μ′-edge to the course incident to the preceding μ-edge. We argue that ultimately we either reach an exposed course (which implies that an augmenting or an alternating path coalition exists in μ) or are able to identify a cyclic coalition in μ.

Let us first provide some definitions and facts. Let A′ be the set of applicants who prefer μ′ to μ; i.e., A′ = {a|μ′(a)≻ a μ(a)}. Moreover, for each a∈A, let \(\mu ^{\prime }_{\setminus \mu }(a) = \mu ^{\prime }(a)\setminus \mu (a)\) and \(\mu _{\setminus \mu ^{\prime }}(a) = \mu (a)\setminus \mu ^{\prime }(a)\). Likewise, for each course c∈C, let \(\mu _{\setminus \mu ^{\prime }}(c) = \mu (c)\setminus \mu ^{\prime }(c)\) and \(\mu ^{\prime }_{\setminus \mu }(c) = \mu ^{\prime }(c)\setminus \mu (c)\). Note that these sets will be altered during the course of the proof. In what follows and in order to simplify presentation, we will say that we remove a μ′-edge (a, c) from \(G_{\mu ,\mu ^{\prime }}\) to signify that we remove c from \(\mu ^{\prime }_{\setminus \mu }(a)\) and remove a from \(\mu ^{\prime }_{\setminus \mu }(c)\); similarly, we say that we remove a μ-edge (a, c) from \(G_{\mu ,\mu ^{\prime }}\) to signify that we remove c from \(\mu _{\setminus \mu ^{\prime }}(a)\) and remove a from \(\mu _{\setminus \mu ^{\prime }}(c)\). The facts described below follow from the definitions and the assumption that μ′ Pareto dominates μ. Let Υ be a set containing applicants (initially, Υ = ∅); we will explain later what this set will come to contain.

- Fact 1: For every course c that is full under μ, it is the case that \(|\mu _{\setminus \mu ^{\prime }}(c)| \geq |\mu ^{\prime }_{\setminus \mu }(c)|\).

- Fact 2: For every applicant a∈A′∖Υ, there exists \(\ell ^{*}_{a}\leq n_{2}\) such that \(|C^{a}_{\ell } \cap \mu ^{\prime }_{\setminus \mu }(a)| = |C^{a}_{\ell } \cap \mu _{\setminus \mu ^{\prime }}(a)|\) for all \(\ell <\ell ^{*}_{a}\), and \(|C^{a}_{\ell _{a}^{*}} \cap \mu ^{\prime }_{\setminus \mu }(a)| > |C^{a}_{\ell _{a}^{*}} \cap \mu _{\setminus \mu ^{\prime }}(a)|\). Hence, \(C^{a}_{\ell _{a}^{*}}\) denotes the first indifference class of a in which μ′ is better than μ for a.

- Fact 3: For every applicant a∈A′ that is full in μ, there exists \(\ell ^{+}\), \(\ell ^{*}_{a} < \ell ^{+}\leq n_{2}\), such that \(|C^{a}_{\ell ^{+}} \cap \mu _{\setminus \mu ^{\prime }}(a)| > |C^{a}_{\ell ^{+}} \cap \mu ^{\prime }_{\setminus \mu }(a)|\).

In what follows, we iteratively remove edges from \(G_{\mu ,\mu ^{\prime }}\) (so as not to visit an edge twice and to maintain the aforementioned facts) and create a corresponding path \(\mathfrak {C}\). We show that, in all possible cases, this path implies an improving coalition in μ, thus contradicting our assumption.

As μ′ Pareto dominates μ, A′ is nonempty. Let \(a_{i_{0}}\) be an applicant in A′. By Fact 2, there exists a course \(c_{j_{1}} \in C^{a_{i_{0}}}_{\ell _{a_{i_{0}}}^{*}} \cap \mu ^{\prime }_{\setminus \mu }(a_{i_{0}})\). Hence, we remove edge \((a_{i_{0}}, c_{j_{1}})\) from \(G_{\mu ,\mu ^{\prime }}\) and add it to \(\mathfrak {C}\), i.e., \(\mathfrak {C} = \langle a_{i_{0}}, c_{j_{1}}\rangle \). If \(c_{j_{1}}\) is exposed, then an improving coalition is implied. More specifically, if \(a_{i_{0}}\) is also exposed in μ, then \(\mathfrak {C} = \langle a_{i_{0}},c_{j_{1}}\rangle \) is an augmenting path coalition. Otherwise, if \(a_{i_{0}}\) is full in μ, then it follows from Fact 3 that there exists a course \(c_{j_{0}}\in \mu _{\setminus \mu ^{\prime }}(a_{i_{0}})\) such that \(c_{j_{1}} {\succ }_{a_{i_{0}}} c_{j_{0}}\). Then \(\mathfrak {C}=\langle c_{j_{0}}, a_{i_{0}}, c_{j_{1}}\rangle \) is an alternating path coalition.

If, on the other hand, \(c_{j_{1}}\) is full, we continue our search for an improving coalition in an iterative manner (as follows) until we either reach an exposed course (in which case we show that an augmenting or alternating coalition is found) or revisit an applicant that belongs to A′ (in which case we show that a cyclic coalition is found). Note that \(a_{i_{0}}\in \mu ^{\prime }_{\setminus \mu }(c_{j_{1}})\), hence it follows from Fact 1 that \(|\mu _{\setminus \mu ^{\prime }}(c_{j_{1}})| \geq 1\). At the start of each iteration k ≥ 1, we have:

$$\mathfrak{C} = \langle a_{i_{0}}, c_{j_{1}}, a_{i_{1}}, \ldots, a_{i_{k-1}}, c_{j_{k}}\rangle$$

such that, by the construction of \(\mathfrak {C}\) (through the iterative procedure), every applicant \(a_{i_{x}}\) on \(\mathfrak {C}\) weakly prefers the course that follows her on \(\mathfrak {C}\) (i.e., \(c_{j_{x+1}}\), to which she is matched in μ′ but not in μ) to the course that precedes her (i.e., \(c_{j_{x}}\), to which she is matched in μ but not in μ′). Moreover, \(a_{i_{0}}\) is either exposed and can accommodate one more course, namely \(c_{j_{1}}\), or is full and hence there exists a course \(c_{j_{0}}\in \mu _{\setminus \mu ^{\prime }}(a_{i_{0}})\) such that \(a_{i_{0}}\) strictly prefers \(c_{j_{1}}\) to \(c_{j_{0}}\). Notice that \(\mathfrak {C}\) would imply an improving coalition (using Lemma 1) if \(c_{j_{k}}\) is exposed.

During the iterative procedure, set Υ includes those applicants who strictly prefer the course that follows them on \(\mathfrak {C}\) (held under μ′) to the course that precedes them (held under μ). That is, \({\Upsilon }=\{a_{i_{k}}\in \mathfrak {C}: c_{j_{k+1}}\succ _{a_{i_{k}}} c_{j_{k}}\}\cup \{a_{i_{0}}\}\). Note that applicant \(a_{i_{0}}\) is always included in Υ, since either she is exposed, or \(\exists c_{j_{0}}\in \mu _{\setminus \mu ^{\prime }}(a_{i_{0}})\) such that \(c_{j_{1}} {\succ }_{a_{i_{0}}}c_{j_{0}}\).

The Iterative Procedure

We repeat the following procedure until either the last course on \(\mathfrak {C}\), \(c_{j_{k}}\), is exposed or Case 3 is reached. If \(c_{j_{k}}\) is full, then by Fact 1 there must exist an applicant \(a_{i_{k}}\in \mu _{\setminus \mu ^{\prime }}(c_{j_{k}})\); note that \(a_{i_{k}} \neq a_{i_{k-1}}\). Remove \((a_{i_{k}},c_{j_{k}})\) from \(G_{\mu ,\mu ^{\prime }}\). Let \(C^{a_{i_{k}}}_{\ell }\) be the indifference class of \(a_{i_{k}}\) to which \(c_{j_{k}}\) belongs. We consider three different cases.

- Case 1: \(|C^{a_{i_{k}}}_{\ell } \cap \mu ^{\prime }_{\setminus \mu }(a_{i_{k}})| \geq |C^{a_{i_{k}}}_{\ell } \cap \mu _{\setminus \mu ^{\prime }}(a_{i_{k}})|\). Then there must exist a course \(c_{j_{k+1}} \in C^{a_{i_{k}}}_{\ell } \cap \mu ^{\prime }_{\setminus \mu }(a_{i_{k}})\); note that \(c_{j_{k}} \neq c_{j_{k+1}}\). Hence, we remove \((a_{i_{k}},c_{j_{k+1}})\) from \(G_{\mu ,\mu ^{\prime }}\) and append \(\langle a_{i_{k}}, c_{j_{k+1}}\rangle \) to \(\mathfrak {C}\).

- Case 2: \(|C^{a_{i_{k}}}_{\ell } \cap \mu ^{\prime }_{\setminus \mu }(a_{i_{k}})| < |C^{a_{i_{k}}}_{\ell } \cap \mu _{\setminus \mu ^{\prime }}(a_{i_{k}})|\) and \(a_{i_{k}} \notin {\Upsilon }\). Recall that μ′ Pareto dominates μ, hence it must be that \(a_{i_{k}} \in A^{\prime }\). Therefore it follows from Fact 2 that \(\ell ^{*}_{a_{i_{k}}} < \ell \) and there exists a course \(c_{j_{k+1}} \in C^{a_{i_{k}}}_{\ell ^{*}_{a_{i_{k}}}} \cap \mu ^{\prime }_{\setminus \mu }(a_{i_{k}})\); note that \(c_{j_{k}} \neq c_{j_{k+1}}\). Hence, we remove \((a_{i_{k}},c_{j_{k+1}})\) from \(G_{\mu ,\mu ^{\prime }}\) and append \(\langle a_{i_{k}}, c_{j_{k+1}}\rangle \) to \(\mathfrak {C}\). Moreover, we add \(a_{i_{k}}\) to Υ.

- Case 3: \(|C^{a_{i_{k}}}_{\ell } \cap \mu ^{\prime }_{\setminus \mu }(a_{i_{k}})| < |C^{a_{i_{k}}}_{\ell } \cap \mu _{\setminus \mu ^{\prime }}(a_{i_{k}})|\) and \(a_{i_{k}} \in {\Upsilon }\); note that this case cannot happen when k = 1. Let \(a_{i_{z}}\) be the first occurrence of \(a_{i_{k}}\) which has resulted in \(a_{i_{k}}\) being added to Υ; i.e., 0≤z<k and \(a_{i_{z}} = a_{i_{k}}\). Therefore, \(\mathfrak {C}\) is of the form \(\langle a_{i_{0}}, c_{j_{1}}, a_{i_{1}},\ldots , a_{i_{z}}, c_{j_{z+1}}, {\ldots }, a_{i_{k-1}}, c_{j_{k}}\rangle \). Let us consider \(\mathfrak {C}^{\prime }=\langle c_{j_{k}}, a_{i_{z}}, c_{j_{z+1}}, {\ldots }, a_{i_{k-1}}\rangle \). Since \(a_{i_{z}} = a_{i_{k}}\in {\Upsilon }\), \(a_{i_{z}} \in A^{\prime }\) and, by the construction of \(\mathfrak {C}\), \(c_{j_{z+1}}\in C^{a_{i_{k}}}_{\ell ^{*}_{a_{i_{k}}}}\), it follows from Fact 2 that \(\ell ^{*}_{a_{i_{z}}} < \ell \), which implies (using the assumption of Case 3) that \(c_{j_{z+1}} {\succ }_{a_{i_{z}}} c_{j_{k}}\).

If at any iteration k we find a \(c_{j_{k}}\) that is exposed in μ or we arrive at Case 3, then an improving coalition is implied (using Lemma 1), which contradicts our assumption on μ. Otherwise (cases 1 and 2), we continue with a new iteration. However, since the number of edges is bounded, this iterative procedure is bound to terminate, either by reaching an exposed course or by reaching Case 3.

It follows from Theorem 2 that, in order to test whether a given matching μ is Pareto optimal, it suffices to check whether μ admits an improving coalition. To check this, we construct the extended envy graph associated with μ.

Definition 1

The extended envy graph \(G(\mu )=(V_{G(\mu )},E_{G(\mu )})\) associated with a matching μ is a weighted digraph with \(V_{G(\mu )}=V_{A}\cup V_{C}\cup V_{\mu }\), where \(V_{C}=\{c: c\in C\}\), \(V_{A}=\{a: a\in A\}\) and \(V_{\mu }=\{ac: (a,c)\in \mu \}\), and \(E_{G(\mu )}=E_{G(\mu )}^{1}\cup E_{G(\mu )}^{2}\cup E_{G(\mu )}^{3}\) where:

- \(E_{G(\mu )}^{1}=\{(c,a): a\in A,\ c\in C \text{ and } |\mu (c)|<q(c)\}\cup \{(c,ac^{\prime }): c\in C \text{ and } |\mu (c)|<q(c)\}\), with weight equal to 0;

- \(E_{G(\mu )}^{2}=\{(a,c): a\in A,\ c\in P(a)\setminus \mu (a) \text{ and } |\mu (a)|<b(a)\}\cup \{(a,a^{\prime }c): a\in A\setminus \{a^{\prime }\},\ c\in P(a)\setminus \mu (a) \text{ and } |\mu (a)|<b(a)\}\), with weight equal to −1; and

- \(E_{G(\mu )}^{3}=\{(ac,c^{\prime }): c^{\prime }\in P(a)\setminus \mu (a) \text{ and } c^{\prime }\succeq _{a} c\}\cup \{(ac,a^{\prime }c^{\prime }): c^{\prime }\in P(a)\setminus \mu (a) \text{ and } c^{\prime }\succeq _{a} c\}\), with weight equal to 0 if \(c^{\prime }\simeq _{a} c\) or −1 if \(c^{\prime }\succ _{a} c\).

That is, G(μ) includes one node per course, one node per applicant and one node per applicant-course pair that participates in μ. With respect to \(E_{G(\mu )}\), a ‘0’ (‘−1’) arc is an arc with weight 0 (−1). There is a ‘0’ arc from each exposed course node to every non-course node in G(μ) (arcs in \(E_{G(\mu )}^{1}\)). Moreover, there is a ‘−1’ arc from each exposed applicant node a to every course node or applicant-course node whose corresponding course is in P(a)∖μ(a) (arcs in \(E_{G(\mu )}^{2}\)). Finally, there is an arc from each applicant-course node ac to every course node or applicant-course node whose corresponding course c′ is not in μ(a) and satisfies \(c^{\prime }\succeq _{a} c\). The weight of such an arc is 0 if \(c^{\prime }\simeq _{a} c\) or −1 if \(c^{\prime }\succ _{a} c\) (arcs in \(E_{G(\mu )}^{3}\)).

The following theorem establishes the connection between Pareto optimality of a given matching and the absence of negative cost cycles in the extended envy graph corresponding to that matching.

Theorem 3

A matching μ is Pareto optimal if and only if its extended envy graph G(μ) has no negative cost cycles.

Proof

First we show that if G(μ) has no negative cost cycle, then μ is Pareto optimal. Assume for a contradiction that μ is not Pareto optimal; this means that there exists an improving coalition \(\mathfrak {C}\). We examine each of the three different types of improving coalition separately. Assume that \(\mathfrak {C}\) is an alternating path coalition, i.e., \(\mathfrak {C} = \langle c_{j_{0}}, a_{i_{0}}, c_{j_{1}}, a_{i_{1}},\ldots ,c_{j_{r-1}},a_{i_{r-1}},c_{j_{r}}\rangle \) where r ≥ 1, \((a_{i_{k}},c_{j_{k}}) \in \mu \) (0≤k≤r−1), \((a_{i_{k-1}},c_{j_{k}}) \not \in \mu \) (1≤k≤r), \(a_{i_{0}}\) is full, and \(c_{j_{r}}\) is an exposed course. Furthermore, \(c_{j_{1}}\succ _{a_{i_{0}}}c_{j_{0}}\) and, if r ≥ 2, \(c_{j_{k+1}}\succeq _{a_{i_{k}}} c_{j_{k}}\) (1≤k≤r−1). By Definition 1, there exists in G(μ) an arc \((a_{i_{k}}c_{j_{k}},a_{i_{k+1}}c_{j_{k+1}})\) for each 0≤k≤r−2, an arc \((a_{i_{r-1}}c_{j_{r-1}},c_{j_{r}})\), and an arc \((c_{j_{r}},a_{i_{0}}c_{j_{0}})\), thus creating a cycle K in G(μ). Since all arcs of K have weight 0 or −1 and \((a_{i_{0}}c_{j_{0}},a_{i_{1}}c_{j_{1}})\) has weight −1 (because \(c_{j_{1}}\succ _{a_{i_{0}}}c_{j_{0}}\)), K is a negative cost cycle, a contradiction. A similar argument can be used for the case where \(\mathfrak {C}\) is an augmenting path coalition; our reasoning on the implied cycle would start with a ‘−1’ arc \((a_{i_{0}},a_{i_{1}}c_{j_{1}})\) and would conclude with a ‘0’ arc \((c_{j_{r}},a_{i_{0}})\). Finally, for a cyclic coalition \(\mathfrak {C}\), the implied negative cost cycle includes all arcs \((a_{i_{k}}c_{j_{k}},a_{i_{k+1}}c_{j_{k+1}})\) for 0≤k≤r−1 taken modulo r, where at least one arc has weight −1 (otherwise, \(\mathfrak {C}\) would not be a cyclic coalition). In all cases, the existence of an improving coalition implies a negative cost cycle in G(μ), a contradiction.

Next, we show that if μ is Pareto optimal, then G(μ) has no negative cost cycles. We prove this by contradiction. Let μ be a POM, and assume that G(μ) has a negative cost cycle. Then there must also exist one that is simple and of minimal length. Hence, without loss of generality, let K be a minimal (length) simple negative cost cycle in G(μ). We first consider the case where K contains only nodes in \(V_{\mu }\). We create a sequence \(\mathcal {K}\) corresponding to K as follows. Starting from an applicant-course node of K with an outgoing ‘−1’ arc, say \(a_{i_{0}}c_{j_{0}}\), we first place \(c_{j_{0}}\) in \(\mathcal {K}\) followed by \(a_{i_{0}}\), and continue by repeating this process for all nodes in K. It is then easy to verify, by Definition 1 and the definition of a cyclic coalition, that K corresponds to a sequence \(\mathcal {K}=\langle c_{j_{0}},a_{i_{0}},\ldots ,c_{j_{r-1}},a_{i_{r-1}}\rangle \) that satisfies all conditions of a cyclic coalition with the exception that some courses or applicants may appear more than once. But this means that, by Lemma 1, μ admits an improving coalition, which contradicts that μ is a POM.

To conclude the proof, we consider the case where K includes at least one node from \(V_{A}\) or \(V_{C}\). Note that if K contains a node from \(V_{A}\), then it also contains a node from \(V_{C}\). This is because, by Definition 1, the only incoming arcs to an applicant node are of type \(E^{1}_{G(\mu )}\). Therefore K includes at least one course node. We now claim that, since K is of minimal length, it contains only one course node. Assume for a contradiction that K contains more than one course node. Since K is a negative cost cycle, it contains at least one ‘−1’ arc, which leaves an applicant node a (or an applicant-course node ac′). Let c be the first course node in K that appears after this ‘−1’ arc. Recall that for c to be in K, c must be exposed in μ, and there exists a ‘0’ arc from c to any applicant or applicant-course node of G(μ). Hence, there also exists such an arc from c to a (or to ac′), thus forming a negative cost cycle smaller than K, a contradiction. Note that this also implies that K contains at most one node in \(V_{A}\). This is because, by Definition 1, the only incoming arcs to nodes in \(V_{A}\) are from nodes in \(V_{C}\); therefore the claim follows from the facts that c is the only course node in K and K is a simple cycle. We create a sequence \(\mathcal {K}\) corresponding to K as follows. If K contains an applicant node a, we start \(\mathcal {K}\) with a. If K contains a course node c, we end \(\mathcal {K}\) with c. Any applicant-course node a′c′ is handled by placing c′ first and then a′. It is now easy to verify, by Definition 1 and the definitions of augmenting and alternating path coalitions, that K corresponds to a sequence \(\mathcal {K}=\langle a_{i_{0}},c_{j_{1}},a_{i_{1}},\ldots ,c_{j_{r-1}},a_{i_{r-1}},c_{j_{r}}\rangle \) (if K contains an applicant node) or a sequence \(\mathcal {K}=\langle c_{j_{0}},a_{i_{0}},\ldots ,c_{j_{r-1}},a_{i_{r-1}},c_{j_{r}}\rangle \) (if K contains no applicant node), such that \(\mathcal {K}\) satisfies all conditions of either an augmenting path coalition (if K contains an applicant node) or an alternating path coalition (if K contains no applicant node), with the exception that some courses or applicants may appear more than once. But this means that, by Lemma 1, μ admits an augmenting path coalition (or an alternating path coalition), which contradicts that μ is a POM. □

Theorem 3 implies that, to test whether a matching μ is Pareto optimal, it suffices to create the corresponding extended envy graph and check whether it contains a negative cost cycle. It is easy to see that we can create G(μ) in polynomial time. To test whether G(μ) admits a negative cost cycle, we can use one of the various algorithms that exist in the literature (see [10] for a survey), e.g., Moore-Bellman-Ford, which terminates in \(O(|V_{G(\mu )}||E_{G(\mu )}|)\) time. However, as all arcs in G(μ) have cost either 0 or −1, and hence integer costs, we can use the faster algorithm of [14] which, for our setting, terminates in \(O(\sqrt {|V_{G(\mu )}|}|E_{G(\mu )}|)\) time.

Corollary 4

Given a CA instance I and a matching μ, we can check in polynomial time whether μ is Pareto optimal in I.
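The test behind Corollary 4 can be sketched in a few lines of Python. The sketch below is only an illustration under stated assumptions: the function name `is_pareto_optimal`, the dictionary-based encoding of preferences (a list of ties per applicant, most preferred first) and the node labels are hypothetical, and the negative cycle check is a plain Moore-Bellman-Ford pass rather than the faster algorithm of [14]. The arcs follow Definition 1.

```python
def is_pareto_optimal(prefs, b, q, mu):
    """Return True iff the extended envy graph of `mu` has no negative cost cycle.

    prefs: applicant -> list of ties (lists of courses), most preferred first
    b, q : applicant / course quotas;  mu: set of (applicant, course) pairs
    """
    rank = {a: {c: t for t, tie in enumerate(ties) for c in tie}
            for a, ties in prefs.items()}
    held = {a: {c for x, c in mu if x == a} for a in prefs}
    load = {c: sum(1 for _, y in mu if y == c) for c in q}
    pairs = sorted(mu)

    def targets(c):
        # Course node of c plus every applicant-course node that holds c.
        return [("C", c)] + [("M", x, y) for x, y in pairs if y == c]

    arcs = []  # triples (tail, head, weight)
    for c in q:
        if load[c] < q[c]:                       # E^1: exposed course, weight 0
            arcs += [(("C", c), ("A", a), 0) for a in prefs]
            arcs += [(("C", c), ("M", x, y), 0) for x, y in pairs]
    for a in prefs:
        if len(held[a]) < b[a]:                  # E^2: exposed applicant, weight -1
            for c in rank[a]:
                if c not in held[a]:
                    arcs += [(("A", a), v, -1) for v in targets(c)]
    for a, c in pairs:                           # E^3: weight 0 (tie) or -1 (strict)
        for c2, t2 in rank[a].items():
            if c2 not in held[a] and t2 <= rank[a][c]:
                w = 0 if t2 == rank[a][c] else -1
                arcs += [(("M", a, c), v, w) for v in targets(c2)]

    nodes = ({("A", a) for a in prefs} | {("C", c) for c in q}
             | {("M", a, c) for a, c in pairs})
    dist = dict.fromkeys(nodes, 0)               # implicit zero-cost super-source
    for _ in range(len(nodes)):
        changed = False
        for u, v, w in arcs:
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
                changed = True
        if not changed:
            return True                          # distances settled: no negative cycle
    return False                                 # still relaxing after |V| passes


# Two applicants, two unit-capacity courses (the instance of Example 13).
prefs = {"a1": [["c2"], ["c1"]], "a2": [["c1"]]}
b = {"a1": 2, "a2": 1}
q = {"c1": 1, "c2": 1}
print(is_pareto_optimal(prefs, b, q, {("a1", "c2"), ("a2", "c1")}))
print(is_pareto_optimal(prefs, b, q, {("a1", "c1")}))
```

Initializing every distance to 0 plays the role of a super-source connected to all nodes, so a single run detects a negative cycle anywhere in the graph. The quadratic arc enumeration is chosen for readability, not efficiency.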

4 Constructing Pareto Optimal Matchings

We propose an algorithm for finding a POM in an instance of CA. It is, in a certain sense, a generalization of Serial Dictatorship and is therefore named the Generalized Serial Dictatorship Mechanism with Ties (GSDT). The algorithm starts by setting the quotas of all applicants to 0 and those of the courses to the original values given by q. At each stage i, the algorithm selects a single applicant whose original capacity has not been reached, and increases only her capacity by 1. The algorithm terminates after \(B={\sum }_{a\in A} b(a)\) stages, i.e., once the original capacities of all applicants have been reached. In that respect, the algorithm assumes a ‘multisequence’ \({\Sigma }=(a^{1},a^{2},\ldots ,a^{B})\) of applicants such that each applicant a appears b(a) times in Σ; e.g., for the instance of Table 1 and the sequence \({\Sigma }=(a_{1},a_{1},a_{2},a_{2},a_{3},a_{2},a_{3})\), the vector of capacities evolves as follows:

\[(0,0,0),\ (1,0,0),\ (2,0,0),\ (2,1,0),\ (2,2,0),\ (2,2,1),\ (2,3,1),\ (2,3,2).\]

Let us denote the vector of applicants’ capacities in stage i by \(b^{i}\), i.e., \(b^{0}\) is the all-zeroes vector and \(b^{B}=b\). Clearly, each stage corresponds to an instance \(I^{i}\) similar to the original instance except for the capacities vector \(b^{i}\). At each stage i, our algorithm obtains a matching \(\mu ^{i}\) for the instance \(I^{i}\). Since the single matching of stage 0, i.e., the empty matching, is a POM in \(I^{0}\), the core idea is to modify \(\mu ^{i-1}\) in such a way that if \(\mu ^{i-1}\) is a POM with respect to \(I^{i-1}\) then \(\mu ^{i}\) is a POM with respect to \(I^{i}\). To achieve this, the algorithm relies on the following flow network.
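To make the stage-wise capacities concrete, here is a minimal Python sketch that lists the capacity vector after each stage of a multisequence. The function name `capacity_vectors` and the string labels for applicants are assumptions made for illustration only; the sequence is the one quoted above for the instance of Table 1.

```python
def capacity_vectors(applicants, sigma):
    """Return the capacity vector after each of the stages 0, 1, ..., len(sigma)."""
    cap = dict.fromkeys(applicants, 0)          # stage 0: all capacities are zero
    history = [tuple(cap[a] for a in applicants)]
    for a in sigma:                             # stage i raises one capacity by 1
        cap[a] += 1
        history.append(tuple(cap[x] for x in applicants))
    return history


sigma = ["a1", "a1", "a2", "a2", "a3", "a2", "a3"]
for i, vec in enumerate(capacity_vectors(["a1", "a2", "a3"], sigma)):
    print(i, vec)
```

The last vector equals the original quota vector b, since each applicant a occurs exactly b(a) times in Σ.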

Consider the digraph D = (V, E). Its node set is \(V=A\cup T\cup C\cup \{\sigma ,\tau \}\), where σ and τ are the source and the sink and the vertices in T correspond to the ties in the preference lists of all applicants; i.e., T has a node (a, t) per applicant a and tie t such that \({C^{a}_{t}} \neq \emptyset \). Its arc set is \(E=E_{1}\cup E_{2}\cup E_{3}\cup E_{4}\), where \(E_{1}=\{(\sigma ,a): a\in A\}\), \(E_{2}=\{(a,(a,t)): a\in A, {C^{a}_{t}} \neq \emptyset \}\), \(E_{3}=\{((a,t),c): c\in {C^{a}_{t}}\}\) and \(E_{4}=\{(c,\tau ): c\in C\}\). The graph D for the instance of Table 1 appears in Fig. 1, where an oval encircles all the vertices of T that correspond to the same applicant, i.e., one vertex per tie.

Fig. 1 Digraph D for the instance I from Table 1

Using digraph D = (V, E), we obtain a flow network \(N^{i}\) at each stage i of the algorithm, i.e., a network corresponding to instance \(I^{i}\), by appropriately varying the capacities of the arcs. (For an introduction to network flow algorithms see, e.g., [3].) The capacity of each arc in \(E_{3}\) is always 1 (since each course may be received at most once by each applicant) and the capacity of an arc \(e=(c,\tau )\in E_{4}\) is always q(c). The capacities of all arcs in \(E_{1}\cup E_{2}\) are initially 0 and, at stage i, the capacities of only certain arcs associated with applicant \(a^{i}\) are increased by 1. For this reason, for each applicant a we use the variable curr(a) that indicates her ‘active’ tie; initially, curr(a) is set to 1 for all a∈A.

In stage i, the algorithm computes a maximum flow \(f^{i}\) whose saturated arcs in \(E_{3}\) indicate the corresponding matching \(\mu ^{i}\). The algorithm starts with \(f^{0}=0\) and \(\mu ^{0}=\emptyset \). Let \(a^{i}\) be the copy of an applicant a∈A considered in stage i. The algorithm increases by 1 the capacity of arc \((\sigma ,a)\in E_{1}\) (i.e., the applicant is allowed to receive an additional course). It then examines the tie curr(a) to check whether the additional course can be received from tie curr(a). To do this, the capacity of arc \((a,(a,curr(a)))\in E_{2}\) is increased by 1. The network in stage i where tie \(curr(a^{i})\) is examined is denoted by \(N^{i,curr(a^{i})}\). If there is an augmenting σ−τ path in this network, the algorithm augments the current flow \(f^{i-1}\) to obtain \(f^{i}\), accordingly augments \(\mu ^{i-1}\) to obtain \(\mu ^{i}\) (i.e., it sets \(\mu ^{i}\) to the symmetric difference of \(\mu ^{i-1}\) and all pairs (a, c) for which there is an arc ((a, t), c) in the augmenting path) and proceeds to the next stage. Otherwise, it decreases the capacity of (a,(a, curr(a))) by 1 (but not the capacity of arc (σ, a)) and increases curr(a) by 1 to examine the next tie of a; if all (non-empty) ties have been examined, the algorithm proceeds to the next stage without augmenting the flow. Note that an augmenting σ−τ path in the network \(N^{i,curr(a^{i})}\) corresponds to an augmenting path coalition in \(\mu ^{i-1}\) with respect to \(I^{i}\).

A formal description of GSDT is provided by Algorithm 1, where w(e) denotes the capacity of an arc e∈E and ⊕ denotes symmetric difference. Observe that all arcs in \(E_{2}\) are saturated, except for the arc corresponding to the current applicant and tie; thus any augmenting path has one arc from each of \(E_{1}\), \(E_{2}\) and \(E_{4}\) and all other arcs from \(E_{3}\). As a consequence, the number of courses each applicant receives at stage i in any tie cannot decrease at any subsequent step. Also, \(\mu ^{i}\) dominates \(\mu ^{i-1}\) with respect to instance \(I^{i}\) if and only if there is a flow in \(N^{i}\) that saturates all arcs in \(E_{2}\).
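The stages described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a transcription of Algorithm 1: the function name `gsdt`, the node labels, and the use of breadth-first search for finding an augmenting path are all choices made here, ties are indexed from 0 rather than 1, and every tie is assumed to be non-empty. Preferences are given as a list of ties per applicant, most preferred first.

```python
from collections import deque


def gsdt(prefs, q, sigma):
    """Run the staged flow procedure and return the matching as a set of pairs."""
    res = {}  # residual capacities: res[u][v]

    def add_arc(u, v, cap):
        res.setdefault(u, {})[v] = cap
        res.setdefault(v, {}).setdefault(u, 0)   # reverse arc for the residual graph

    for c, quota in q.items():
        add_arc(("C", c), "tau", quota)              # E4, capacity q(c)
    for a, ties in prefs.items():
        add_arc("sigma", ("A", a), 0)                # E1, initially 0
        for t, tie in enumerate(ties):
            add_arc(("A", a), ("T", a, t), 0)        # E2, initially 0
            for c in tie:
                add_arc(("T", a, t), ("C", c), 1)    # E3, capacity 1

    def augment():
        # Breadth-first search for a sigma-tau path in the residual network.
        parent = {"sigma": None}
        queue = deque(["sigma"])
        while queue:
            u = queue.popleft()
            for v, r in res[u].items():
                if r > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if "tau" not in parent:
            return False
        v = "tau"
        while parent[v] is not None:                 # push one unit along the path
            u = parent[v]
            res[u][v] -= 1
            res[v][u] += 1
            v = u
        return True

    curr = dict.fromkeys(prefs, 0)                   # active tie of each applicant
    for a in sigma:
        res["sigma"][("A", a)] += 1                  # one more course allowed for a
        while curr[a] < len(prefs[a]):
            tail, head = ("A", a), ("T", a, curr[a])
            res[tail][head] += 1
            if augment():
                break                                # stage done, curr(a) unchanged
            res[tail][head] -= 1                     # undo and move to the next tie
            curr[a] += 1

    # Saturated E3 arcs (residual capacity 0) are the matched pairs.
    return {(a, c) for a, ties in prefs.items() for t, tie in enumerate(ties)
            for c in tie if res[("T", a, t)][("C", c)] == 0}


prefs = {"a1": [["c1", "c2"]], "a2": [["c1"]]}
print(gsdt(prefs, {"c1": 1, "c2": 1}, ["a1", "a2"]))
```

In the small usage example, a1 is indifferent between c1 and c2 and is considered first; when a2 arrives, the augmenting path reroutes a1 within her tie so that a2 can receive c1. Which of several augmenting paths is taken depends on the search order, so the sketch returns one of the matchings GSDT may produce for a given Σ.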

To prove the correctness of GSDT, we need two intermediate lemmas. Let \(e_{t} \in \mathbb {R}^{n_{2}}\) be the vector having 1 at entry t and 0 elsewhere.

Lemma 5

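Let \(N^{i,t}\) be the network at stage i while tie t of applicant \(a^{i}\) is examined. Then, there is an augmenting path with respect to \(f^{i-1}\) in \(N^{i,t}\) if and only if there is a matching μ such that

\[\chi _{a}(\mu (a))=\chi _{a}(\mu ^{i-1}(a)) \text{ for each } a\in A\setminus \{a^{i}\} \quad \text{and}\quad \chi _{a^{i}}(\mu (a^{i}))=\chi _{a^{i}}(\mu ^{i-1}(a^{i}))+e_{t}.\]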

Proof

Note that the flows \(f^{i-1}\) and \(f^{i}\), corresponding to \(\mu ^{i-1}\) and \(\mu ^{i}\) respectively, are feasible in \(N^{i}\). Moreover, \(f^{i-1}\) is feasible in \(N^{i,t}\) and, if there is an augmenting path with respect to \(f^{i-1}\) in \(N^{i,t}\), then \(f^{i}\) is feasible in \(N^{i,t}\) too.

If there is a path augmenting \(f^{i-1}\) by 1 in \(N^{i,t}\), thus obtaining \(f^{i}\), the number of courses assigned per tie and applicant remains identical except for \(a^{i}\), who receives an extra course from tie t. Thus, for \(\mu =\mu ^{i}\), \(\chi _{a}(\mu (a))\) is identical to \(\chi _{a}(\mu ^{i-1}(a))\) for all a∈A except for \(a^{i}\), whose characteristic vector has its t’th entry increased by 1.

Conversely, if the above equation holds, the flow corresponding to μ is larger than \(f^{i-1}\) in the network \(N^{i,t}\). Thus standard network flow theory implies the existence of a path augmenting \(f^{i-1}\). As a side remark, since the network \(N^{i,t}\) has the same arc capacities as the network \(N^{i-1}\) except for the arcs \((\sigma ,a^{i})\) and \((a^{i},(a^{i},t))\), this augmenting path includes these two arcs. □

Lemma 6

Let \(S\succeq _{a} U\) and |S|≥|U|. If \(c_{S}\) and \(c_{U}\) denote a least preferred course of applicant a in S and U, respectively, then \(S\setminus \{c_{S}\}\succeq _{a} U\setminus \{c_{U}\}\).

Proof

For convenience, we denote \(S\setminus \{c_{S}\}\) by S′ and \(U\setminus \{c_{U}\}\) by U′. Let

\[\chi _{a}(S)=(s_{1},\ldots ,s_{n_{2}}),\quad \chi _{a}(S^{\prime })=(s^{\prime }_{1},\ldots ,s^{\prime }_{n_{2}})\]

and

\[\chi _{a}(U)=(u_{1},\ldots ,u_{n_{2}}),\quad \chi _{a}(U^{\prime })=(u^{\prime }_{1},\ldots ,u^{\prime }_{n_{2}}).\]

If \(S\simeq _{a} U\), then \(c_{S}\simeq _{a} c_{U}\) and \(\chi _{a}(S)=\chi _{a}(U)\), therefore \(\chi _{a}(S^{\prime })=\chi _{a}(U^{\prime })\) and \(S^{\prime }\simeq _{a} U^{\prime }\). Otherwise, \(S\succ _{a} U\) implies that there is \(k\in [n_{2}]\) such that \(s_{j}=u_{j}\) for each j<k and \(s_{k}>u_{k}\).

If there is j ≥ k+1 with \(s_{j}>0\), then \(c_{S}\in {C_{r}^{a}}\) with r ≥ k+1. It follows that \(s^{\prime }_{k}=s_{k}>u_{k}\geq u^{\prime }_{k}\) while \(s^{\prime }_{j} \geq u^{\prime }_{j}\) for all j<k, thus \(\chi _{a}(S^{\prime })>_{lex}\chi _{a}(U^{\prime })\), i.e., \(S^{\prime }\succ _{a} U^{\prime }\). The same follows if \(s_{k}\geq u_{k}+2\) because then \(s^{\prime }_{k} \geq s_{k}-1 > u_{k}\geq u^{\prime }_{k}\).

It remains to examine the case where \(s_{j}=0\) for all j ≥ k+1 and \(s_{k}=u_{k}+1\). In this case, \(c_{S}\in {C_{k}^{a}}\) thus \(s^{\prime }_{k}=s_{k}-1=u_{k}\), while |S|≥|U| implies that either \(u_{j}=0\) for all j ≥ k+1 or there is a single k′>k such that \(u_{k^{\prime }}=1\). In the former case \(c_{U}\in {C_{r}^{a}}\) for r≤k thus \(\chi _{a}(S^{\prime })>_{lex}\chi _{a}(U^{\prime })\), whereas in the latter one \(u^{\prime }_{k}=u_{k}=s_{k}-1=s^{\prime }_{k}\) and \(s^{\prime }_{j}=u^{\prime }_{j}=0\) for all j>k, hence \(\chi _{a}(S^{\prime })=\chi _{a}(U^{\prime })\). □
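Lemma 6 can also be sanity-checked by brute force on a small preference list. The Python sketch below is an illustration only: the helper names `chi`, `drop_worst` and `lemma6_holds` are hypothetical, and lexicographic preference over sets is modelled by comparing characteristic vectors as Python tuples, which compare lexicographically.

```python
from itertools import combinations


def chi(S, ties):
    """Characteristic vector of S: number of courses of S in each tie."""
    return tuple(sum(1 for c in tie if c in S) for tie in ties)


def drop_worst(S, ties):
    """Remove one least preferred course of S (S must be non-empty)."""
    for tie in reversed(ties):                  # scan from the worst tie upwards
        worst = [c for c in tie if c in S]
        if worst:
            return S - {worst[0]}
    raise ValueError("empty set")


def lemma6_holds(ties):
    """Check the claim of Lemma 6 for all non-empty subsets S, U of the courses."""
    courses = [c for tie in ties for c in tie]
    subsets = [frozenset(x) for r in range(1, len(courses) + 1)
               for x in combinations(courses, r)]
    return all(chi(drop_worst(S, ties), ties) >= chi(drop_worst(U, ties), ties)
               for S in subsets for U in subsets
               if chi(S, ties) >= chi(U, ties) and len(S) >= len(U))


ties = [["c1", "c2"], ["c3"], ["c4", "c5"]]     # three ties, best first
print(lemma6_holds(ties))
```

The size condition |S|≥|U| is needed: with the ties above, S = {c1} is preferred to U = {c3, c4}, yet after dropping a worst course from each, the empty set is not weakly preferred to {c3}.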

Theorem 7

For each i, the matching \(\mu ^{i}\) obtained by GSDT is a POM for instance \(I^{i}\).

Proof

We apply induction on i. Clearly, \(\mu ^{0}=\emptyset \) is the single matching in \(I^{0}\) and hence a POM in \(I^{0}\). We assume that \(\mu ^{i-1}\) is a POM in \(I^{i-1}\) and prove that \(\mu ^{i}\) is a POM in \(I^{i}\).

Assume to the contrary that \(\mu ^{i}\) is not a POM in \(I^{i}\). This implies that there is a matching ξ in \(I^{i}\) that dominates \(\mu ^{i}\). Then, for all a∈A, \(\xi (a)\succeq _{a} \mu ^{i}(a)\succeq _{a} \mu ^{i-1}(a)\). Recall that the capacities of all applicants in \(I^{i}\) are as in \(I^{i-1}\) except for the capacity of \(a^{i}\), which has been increased by 1. Hence, for all \(a\in A\setminus \{a^{i}\}\), |ξ(a)| does not exceed the capacity of a in instance \(I^{i-1}\), namely \(b^{i-1}(a)\), while \(|\xi (a^{i})|\) may exceed \(b^{i-1}(a^{i})\) by at most 1.

Moreover, it holds that \(|\xi (a^{i})|\geq |\mu ^{i}(a^{i})|\). Assuming to the contrary that \(|\xi (a^{i})|<|\mu ^{i}(a^{i})|\) yields that ξ is feasible also in instance \(I^{i-1}\). In addition, \(|\xi (a^{i})|<|\mu ^{i}(a^{i})|\) implies that it cannot be the case that \(\xi (a^{i}) \simeq _{a^{i}} \mu ^{i}(a^{i})\), and this, together with \(\xi (a^{i}) \succeq _{a^{i}} \mu ^{i}(a^{i})\succeq _{a^{i}} \mu ^{i-1}(a^{i})\), yields \(\xi (a^{i}) \succ _{a^{i}} \mu ^{i}(a^{i}) \succeq _{a^{i}} \mu ^{i-1}(a^{i})\). But then ξ dominates \(\mu ^{i-1}\) in \(I^{i-1}\), a contradiction to \(\mu ^{i-1}\) being a POM in \(I^{i-1}\).

Let us first examine the case in which GSDT enters the ‘while’ loop and finds an augmenting path, hence \(\mu ^{i}\) dominates \(\mu ^{i-1}\) in \(I^{i}\) only with respect to applicant \(a^{i}\), who receives an additional course. This is one of her worst courses in \(\mu ^{i}(a^{i})\), denoted by \(c_{\mu }\). Let \(c_{\xi }\) be a worst course for \(a^{i}\) in \(\xi (a^{i})\). Let also ξ′ and μ′ denote \(\xi \setminus \{(a^{i},c_{\xi })\}\) and \(\mu ^{i}\setminus \{(a^{i},c_{\mu })\}\), respectively. Observe that both ξ′ and μ′ are feasible in \(I^{i-1}\), while having shown that \(|\xi (a^{i})|\geq |\mu ^{i}(a^{i})|\) implies through Lemma 6 that ξ′ weakly dominates μ′, which in turn weakly dominates \(\mu ^{i-1}\) by Lemma 5. Since \(\mu ^{i-1}\) is a POM in \(I^{i-1}\), \(\xi ^{\prime }(a)\simeq _{a} \mu ^{\prime }(a)\simeq _{a} \mu ^{i-1}(a)\) for all a∈A; therefore ξ dominates \(\mu ^{i}\) only with respect to \(a^{i}\), and \(c_{\xi } \succ _{a^{i}} c_{\mu }\). Overall, \(\xi (a)\simeq _{a} \mu ^{i}(a)\simeq _{a} \mu ^{i-1}(a)\) for all \(a\in A\setminus \{a^{i}\}\) and \(\xi (a^{i}) \succ _{a^{i}} \mu ^{i}(a^{i})\succ _{a^{i}} \mu ^{i-1}(a^{i})\).

Let \(t_{\xi }\) and \(t_{\mu }\) be the ties of applicant \(a^{i}\) containing \(c_{\xi }\) and \(c_{\mu }\), respectively, where \(t_{\xi }<t_{\mu }\) because \(c_{\xi } \succ _{a^{i}} c_{\mu }\). Then, Lemma 5 implies that there is a path augmenting \(f^{i-1}\) (i.e., the flow corresponding to \(\mu ^{i-1}\)) in the network \(N^{i,t_{\xi }}\). Let also t′ be the value of \(curr(a^{i})\) at the beginning of stage i. Since we examine the case where GSDT enters the ‘while’ loop and finds an augmenting path, \(C^{a^{i}}_{t^{\prime }} \neq \emptyset \). Thus, t′ indexes the least preferred tie from which \(a^{i}\) has a course in \(\mu ^{i-1}\). The same holds for ξ′ since \(\xi ^{\prime }(a^{i}) \simeq _{a^{i}} \mu ^{i-1}(a^{i})\). Because ξ′ is obtained by removing from \(a^{i}\) its worst course in \(\xi (a^{i})\), that course must belong to a tie of index no smaller than t′, i.e., \(t^{\prime }\leq t_{\xi }\). This, together with \(t_{\xi }<t_{\mu }\), yields \(t^{\prime }\leq t_{\xi }<t_{\mu }\), which implies that GSDT should have obtained ξ instead of \(\mu ^{i}\) at stage i, a contradiction.

It remains to examine the cases where, at stage i, GSDT does not enter the ‘while’ loop or enters it but finds no augmenting path. For both these cases, \(\mu ^{i}=\mu ^{i-1}\), thus ξ dominating \(\mu ^{i}\) means that ξ is not feasible in \(I^{i-1}\) (since it would then also dominate \(\mu ^{i-1}\)). Then, it holds that \(|\xi (a^{i})|\) exceeds \(b^{i-1}(a^{i})\) by 1, thus \(|\xi (a^{i})|>|\mu ^{i}(a^{i})|\), yielding \(\xi (a^{i}) \succ _{a^{i}} \mu ^{i}(a^{i})\). Let \(t_{\xi }\) be defined as above and let t′ now be the most preferred tie from which \(a^{i}\) has more courses in ξ than in \(\mu ^{i}\). Clearly, \(t^{\prime }\leq t_{\xi }\) since \(t_{\xi }\) indexes the least preferred tie from which \(a^{i}\) has a course in ξ. If \(t^{\prime }<t_{\xi }\), then the matching ξ′, defined as above, is feasible in \(I^{i-1}\) and dominates \(\mu ^{i-1}\) because \(\xi ^{\prime }(a^{i}) \succ _{a^{i}} \mu ^{i-1}(a^{i})\), a contradiction; the same holds if \(t^{\prime }=t_{\xi }\) and \(a^{i}\) has in ξ at least two more courses from \(t_{\xi }\) than in \(\mu ^{i}\). Otherwise, \(t^{\prime }=t_{\xi }\) and \(a^{i}\) has in ξ exactly one more course from \(t_{\xi }\) than in \(\mu ^{i}\); that, together with \(|\xi (a^{i})|>|\mu ^{i}(a^{i})|\) and the definition of \(t_{\xi }\), implies that the index of the least preferred tie from which \(a^{i}\) has a course in \(\mu ^{i-1}\) and, therefore, the value of \(curr(a^{i})\) at the beginning of stage i, is at most t′. But then GSDT should have obtained ξ instead of \(\mu ^{i}\) at stage i, a contradiction. □

The following statement is now direct.

Corollary 8

GSDT produces a POM for instance I.

To derive the complexity bound for GSDT, let us denote by L the length of the preference profile in I, i.e., the total number of courses in the preference lists of all applicants. Notice that \(|E_{3}|=L\) and neither the size of any matching in I nor the total number of ties in all preference lists exceeds L.

Within one stage, several searches in the network might be needed to find a tie of the active applicant for which the current flow can be augmented. However, each tie is unsuccessfully explored at most once, hence each search either augments the flow, thus adding a pair to the current matching, or moves to the next tie. So the total number of searches performed by the algorithm is bounded by the size of the obtained matching plus the number of ties in the preference profile, i.e., it is O(L). A search requires a number of steps that is linear in the number of arcs in the current network (i.e., \(N^{i,curr(a^{i})}\)), but as at most one arc per \(E_{1}\), \(E_{2}\) and \(E_{4}\) is used, any search needs \(O(|E_{3}|)=O(L)\) steps. This leads to a complexity bound of \(O(L^{2})\) for GSDT.

The next theorem will come in handy when implementing Algorithm 1, as it implies that for each applicant a only one node in T corresponding to a has to be maintained at a time.

Theorem 9

Let \(N^{i,t}\) be the network at stage i while tie t of applicant \(a^{i}\) is examined. Then, there is no augmenting path with respect to \(f^{i-1}\) in \(N^{i,t}\) that has an arc of the form \(((a^{j},\ell ),c)\) where j≤i and \(\ell <curr(a^{j})\).

Proof

Assume otherwise. Let \(\mathfrak {P}\) be such an augmenting path that is found in round i and used to obtain \(\mu ^{i}\). Hence \(\mathfrak {P}\) corresponds to an augmenting path coalition \(\mathfrak {C}\) of the form \(\langle a^{i},c_{r},\ldots ,c_{s},a^{j},c_{q},\ldots \rangle \), where \(c_{r}\) is in the t’th indifference class of \(a^{i}\) and both \(c_{s}\) and \(c_{q}\) are in the ℓ’th indifference class of \(a^{j}\), with \(\ell <curr(a^{j})\). Note that, as ℓ ≥ 1, we have \(curr(a^{j})>1\). It then follows from the description of Algorithm 1 that either \(a^{j}\) is matched to at least one course in tie \(curr(a^{j})\) under \(\mu ^{i}\) or she is exposed (which would be the case when \(curr(a^{j})>n_{2}\) or \(C^{a^{j}}_{curr(a^{j})} =\emptyset \)).

We first show that \(a^{i}\) and \(a^{j}\) are not the same applicant. Otherwise, \(\mathfrak {C}^{\prime } = \langle a^{j}, c_{q}, {\ldots } \rangle \), obtained from \(\mathfrak {C}\) by discarding all courses and applicants that appear before \(a^{j}\), is an augmenting path coalition with respect to \(\mu ^{i-1}\) in \(I^{i}\). Clearly the matching obtained from \(\mu ^{i-1}\) by satisfying \(\mathfrak {C}^{\prime }\) Pareto dominates \(\mu ^{i}\), as ℓ<t, contradicting that \(\mu ^{i}\) is a POM in \(I^{i}\).

In the remainder of the proof we assume that \(a^{i}\neq a^{j}\). Let us first consider the case where \(a^{j}\) is matched to a course in \(C^{a^{j}}_{curr(a^{j})}\) under \(\mu ^{i}\), and therefore by Lemma 1 to a course \(c\in C^{a^{j}}_{curr(a^{j})}\) under \(\mu ^{i-1}\). Let \(\mathfrak {C}^{\prime } = \langle c,a^{j},c_{q},\dots \rangle \), i.e., \(\mathfrak {C}^{\prime }\) is obtained from \(\mathfrak {C}\) by discarding all courses and applicants that appear before \(a^{j}\) and replacing them with c. Since \(\ell <curr(a^{j})\), we have \(c_{q} {\succ }_{a^{j}} c\). It is then easy to see that \(\mathfrak {C}^{\prime }\) is an alternating path coalition with respect to \(\mu ^{i-1}\) in \(I^{i-1}\), and hence \(\mu ^{i-1}\) is not a POM in \(I^{i-1}\), a contradiction. We now consider the case where \(a^{j}\) is exposed in \(\mu ^{i}\), and hence exposed in \(\mu ^{i-1}\) with respect to \(I^{i-1}\). Let \(\mathfrak {C}^{\prime } = \langle a^{j},c_{q},\dots \rangle \), i.e., \(\mathfrak {C}^{\prime }\) is obtained from \(\mathfrak {C}\) by discarding all courses and applicants that appear before \(a^{j}\). It is clear that \(\mathfrak {C}^{\prime }\) is an augmenting path coalition with respect to \(\mu ^{i-1}\) in \(I^{i-1}\), contradicting that \(\mu ^{i-1}\) is a POM in \(I^{i-1}\). □

Next we show that GSDT can produce any POM. Our proof makes use of a subgraph of the extended envy graph of Definition 1.

Theorem 10

Given a CA instance I and a POM μ, there exists a suitable priority ordering over applicants Σ given which GSDT can produce μ.

Proof

Given an instance I and a POM μ, let G = (V, E) be a digraph such that V = {ac:(a, c)∈μ} and there is an arc from ac to a′c′ if a≠a′, c′∉μ(a) and \(c^{\prime }\succeq _{a} c\). An arc (ac, a′c′) has weight −1 if a prefers c′ to c and has weight 0 if she is indifferent between the two courses. Note that G is a subgraph of the extended envy graph G(μ) introduced in Definition 1. We say that ac envies a′c′ if (ac, a′c′) has weight −1.

Note that if there exists an applicant-course pair (a, c)∈μ and a course c′ such that \(c^{\prime }\succ _{a} c\) and (a, c′)∉μ, then c′ must be full under μ, or else μ admits an alternating path coalition and is not a POM.

Moreover, all arcs in any given strongly connected component (SCC) of G have weight 0. To see this, note that by the definition of a SCC, there is a path from any node to every other node in the component. Hence, if there is an arc in a SCC with weight −1, then there must be a cycle of negative weight in that SCC. It is then straightforward to see that, by Lemma 1, μ admits a cyclic coalition, a contradiction.

It then follows that if \(c^{\prime }\succ _{a} c\), then ac′ and ac cannot belong to the same SCC. Should that occur, there would be an arc (of weight 0) from ac′ to some vertex \(a^{\prime }c^{*}\) in the same SCC, implying that \(c^{*}\simeq _{a} c^{\prime }\) and thus \(c^{*}\succ _{a} c\). This in turn would yield that there is an arc of weight −1 from ac to \(a^{\prime }c^{*}\) in this SCC, a contradiction.

We create the condensation G′ of the graph G. It follows from the definition of SCCs that G′ is a DAG. Hence G′ admits a topological ordering. Let X′ be a reversed topological ordering of G′ and X be an ordering of the vertices in G that is consistent with X′ (the vertices within one SCC may be ordered arbitrarily). Let Σ be an ordering over applicant copies that is consistent with X (we can think of it as obtained from X by removing the courses). We show that GSDT can produce μ given Σ. Note that technically Σ must contain b(a) copies of each applicant a. However, as μ is Pareto optimal, upon obtaining μ the algorithm will not be able to allocate any more courses to any of the applicants, even if we append more applicant copies to the end of Σ.

We continue the proof with an induction. Recall that X is an ordering over the matched pairs in μ. We let \(X(a^{i})=c\), where \(a^{i}c\) is the ith element of X. Let \(\mu ^{i}\) denote the matching that corresponds to the first i elements of X; hence \(\mu ^{|\mu |}=\mu \). We claim that, given Σ, GSDT is able to produce \(\mu ^{i}\) at stage i, after augmenting \(f^{i-1}\) through an appropriate augmenting path.

For the base case, note that \(a^{1}X(a^{1})\) does not envy any other vertex in G and hence it can only be that \(X(a^{1}) \in C^{a^{1}}_{1}\). It is then easy to see that the path \(\langle \sigma ,a^{1},(a^{1},1),X(a^{1}),\tau \rangle \) is a valid augmenting path in \(N^{1,1}\) and hence GSDT might choose it.

Assume that GSDT produces \(\mu ^{\ell }\) at the end of each stage ℓ for all ℓ<i. We prove that it can produce \(\mu ^{i}\) at the end of stage i. Assume, for a contradiction, that this is not the case. Let r denote the indifference class of \(a^{i}\) to which \(X(a^{i})\) belongs. Note that course \(X(a^{i})\) is not full in \(I^{i}\), so if the path \(\langle \sigma ,a^{i},(a^{i},r),X(a^{i}),\tau \rangle \) is not chosen by GSDT, it must be the case that GSDT finds an augmenting path in \(N^{i,t}\) for some t<r. Let \(\mathfrak {C}\) denote the corresponding augmenting path coalition with respect to the matching \(\mu ^{i-1}\), which would be of the form

\[\mathfrak {C}=\langle a^{i},c_{j_{1}},a^{i_{1}},c_{j_{2}},a^{i_{2}},\ldots ,c_{j_{y-1}},a^{i_{y-1}},c_{j_{y}}\rangle ,\]

where \(c_{j_{1}} \in C^{a^{i}}_{t}\) and \(c_{j_{y}}\) is exposed in \(\mu ^{i-1}\). It follows, from the definition of an augmenting path, that there is an edge \((a^{i_{k}}c_{j_{k}}, a^{i_{k+1}}c_{j_{k+1}})\) in G for all k, 1≤k≤y−2. Furthermore, as \(c_{j_{1}} {\succ }_{a^{i}} \mu (a^{i})\), there is an edge of weight −1 in G from \(a^{i}X(a^{i})\) to \(a^{i_{1}}c_{j_{1}}\); therefore \(a^{i_{1}}c_{j_{1}}\) belongs to a SCC of higher priority than the one to which \(a^{i}X(a^{i})\) belongs. If \(c_{j_{y}}\) is exposed in μ, then \(\mathfrak {C}^{\prime }\), obtained by adding \(X(a^{i})\) to the beginning of \(\mathfrak {C}\), is an alternating path coalition in μ, a contradiction to μ being a POM. Therefore \(c_{j_{y}}\) must be full in μ.

As \(c_{j_{y}}\) is not full in \(\mu ^{i-1}\) and full in μ, there must exist an \(a^{z}\), z>i, such that \((a^{z},c_{j_{y}}) \in \mu \). It follows, from the augmenting path coalition \(\mathfrak {C}\), that there is a path in G from \(a^{i_{1}}c_{j_{1}}\) to \(a^{z}c_{j_{y}}\). If there is also a path from \(a^{z}c_{j_{y}}\) to \(a^{i_{1}}c_{j_{1}}\), then the two vertices belong to the same SCC; as \(a^{i_{1}}c_{j_{1}}\) belongs to a SCC of higher priority than the one to which \(a^{i}X(a^{i})\) belongs, so must \(a^{z}c_{j_{y}}\), implying that \(a^{z}c_{j_{y}}\) must have appeared before \(a^{i}X(a^{i})\) in X, a contradiction to z>i. If there is no such path, then \(a^{z}c_{j_{y}}\) belongs to a SCC that is prioritized even over the SCC to which \(a^{i_{1}}c_{j_{1}}\) belongs, and hence must have appeared before \(a^{i}X(a^{i})\) in X, a contradiction to z>i. □
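The ordering Σ used in the proof of Theorem 10 can be computed with a standard strongly connected components routine. The following Python sketch is an illustration under stated assumptions: the function name `priority_ordering` and the data encoding are hypothetical, and Tarjan's algorithm is used here because it emits the SCCs in reverse topological order of the condensation, which is the order X′ the proof asks for. It returns the ordering X over matched pairs and the induced ordering over applicant copies.

```python
def priority_ordering(prefs, mu):
    """Return (X, Sigma): matched pairs in reversed topological SCC order."""
    rank = {a: {c: t for t, tie in enumerate(ties) for c in tie}
            for a, ties in prefs.items()}
    held = {a: {c for x, c in mu if x == a} for a in prefs}
    nodes = sorted(mu)
    # Arc ac -> a'c' if a != a', c' is acceptable to a, c' not in mu(a), c' >=_a c.
    succ = {(a, c): [(a2, c2) for a2, c2 in nodes
                     if a2 != a and c2 in rank[a] and c2 not in held[a]
                     and rank[a][c2] <= rank[a][c]]
            for a, c in nodes}

    index, low, stack, on_stack, order = {}, {}, [], set(), []

    def visit(v):                       # recursive Tarjan SCC search
        index[v] = low[v] = len(index)
        stack.append(v)
        on_stack.add(v)
        for w in succ[v]:
            if w not in index:
                visit(w)
                low[v] = min(low[v], low[w])
            elif w in on_stack:
                low[v] = min(low[v], index[w])
        if low[v] == index[v]:          # v is the root of an SCC: emit it
            while True:
                w = stack.pop()
                on_stack.discard(w)
                order.append(w)
                if w == v:
                    break

    for v in nodes:
        if v not in index:
            visit(v)
    return order, [a for a, _ in order]


prefs = {"a1": [["c1"], ["c2"]], "a2": [["c1"], ["c2"]]}
X, sigma = priority_ordering(prefs, {("a1", "c2"), ("a2", "c1")})
print(X, sigma)
```

In the usage example both applicants prefer c1 to c2, and the pair holding c1 is envied, so it comes first in X. As noted in the proof, further copies of applicants may be appended to Σ to reach b(a) occurrences each. The recursion is adequate for small instances; an iterative variant would be preferable for long preference profiles.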

5 Truthfulness of Mechanisms for POMs

It is well-known that the SDM for HA is truthful, regardless of the given priority ordering over applicants. We will show shortly that GSDT is not necessarily truthful, but first prove that this property does hold for some priority orderings over applicants.

Theorem 11

GSDT is truthful given Σ if, for each applicant a, all occurrences of a in Σ are consecutive.

Proof

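Without loss of generality, let the applicants appear in Σ in the following order:

\[{\Sigma }=(\underbrace{a_{1},\ldots ,a_{1}}_{b(a_{1})\text{ times}},\underbrace{a_{2},\ldots ,a_{2}}_{b(a_{2})\text{ times}},\ldots ,\underbrace{a_{n_{1}},\ldots ,a_{n_{1}}}_{b(a_{n_{1}})\text{ times}}).\]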

Assume to the contrary that some applicant benefits from misrepresenting her preferences. Let \(a_{i}\) be the first such applicant in Σ, who reports \(P^{\prime }(a_{i})\) instead of \(P(a_{i})\) in order to benefit, and let \(\mathcal {P}^{\prime }=(P^{\prime }(a_{i}),\mathcal {P}(-a_{i}))\). Let μ denote the matching returned by GSDT using ordering Σ on instance \(I =(A,C,\mathcal {P},b,q)\) (i.e., the instance in which applicant \(a_{i}\) reports truthfully) and ξ the matching returned by GSDT using Σ but on instance \(I^{\prime } =(A,C,\mathcal {P}^{\prime },b,q)\). Let \(s=\left ({\sum }_{\ell <i} b(a_{\ell })\right )+1\), i.e., s is the first stage in which our mechanism considers applicant \(a_{i}\). Let j be the first stage of GSDT such that \(a_{i}\) prefers \(\xi ^{j}\) to \(\mu ^{j}\), where \(s\leq j<s+b(a_{i})\).

Given that applicants \(a_{1},\ldots ,a_{i-1}\) report the same in I as in I′ and all their occurrences in Σ are before stage j, Lemma 5 yields \(\mu ^{j}(a_{\ell })\simeq _{a_{\ell }}\xi ^{j}(a_{\ell })\) for ℓ = 1,2,…, i−1. Also \(\mu ^{j}(a_{\ell })=\xi ^{j}(a_{\ell })=\emptyset \) for \(\ell =i+1,i+2,\ldots ,n_{1}\), since no such applicant has been considered before stage j. But then, all applicants apart from \(a_{i}\) are indifferent between \(\mu ^{j}\) and \(\xi ^{j}\); therefore \(a_{i}\) preferring \(\xi ^{j}\) to \(\mu ^{j}\) implies that \(\mu ^{j}\) is not a POM in \(I^{j}\), a contradiction to Theorem 7. □

The next result then follows directly from Theorem 11.

Corollary 12

GSDT is truthful if all applicants have quota equal to one.

There are priority orderings for which an applicant may benefit from misreporting her preferences, even if preferences are strict. This phenomenon has also been observed in a slightly different context [6]. Let us also provide an example.

Example 13

Consider a setting with applicants \(a_{1}\) and \(a_{2}\) and courses \(c_{1}\) and \(c_{2}\), for which \(b(a_{1})=2\), \(b(a_{2})=1\), \(q(c_{1})=1\), and \(q(c_{2})=1\). Let I be an instance in which \(c_{2} {\succ }_{a_{1}}c_{1}\) and \(a_{2}\) finds only \(c_{1}\) acceptable. This setting admits two POMs, namely \(\mu _{1}=\{(a_{1},c_{2}),(a_{2},c_{1})\}\) and \(\mu _{2}=\{(a_{1},c_{1}),(a_{1},c_{2})\}\).

GSDT returns \(\mu _{1}\) for \({\Sigma }=(a_{1},a_{2},a_{1})\). If \(a_{1}\) misreports by stating that she prefers \(c_{1}\) to \(c_{2}\), GSDT returns \(\mu _{2}\) instead of \(\mu _{1}\). Since \(\mu _{2} {\succ }_{a_{1}} \mu _{1}\), GSDT is not truthful given Σ.
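Example 13 can be replayed with a few lines of Python. The sketch below relies on an assumption that holds here but not in general: all preferences are strict, so every tie is a singleton, no augmenting path can reroute another applicant, and each stage reduces to the current applicant copy picking her most preferred course that is neither full nor already hers. The function name `sequential_picks` is hypothetical.

```python
def sequential_picks(prefs, q, sigma):
    """Strict-preference special case: each copy in sigma picks its best free course."""
    left = dict(q)                              # remaining capacity per course
    mu = {a: [] for a in prefs}
    for a in sigma:
        for c in prefs[a]:                      # strict list, best first
            if left[c] > 0 and c not in mu[a]:
                mu[a].append(c)
                left[c] -= 1
                break
    return {a: set(cs) for a, cs in mu.items()}


q = {"c1": 1, "c2": 1}
sigma = ["a1", "a2", "a1"]
truthful = sequential_picks({"a1": ["c2", "c1"], "a2": ["c1"]}, q, sigma)
misreport = sequential_picks({"a1": ["c1", "c2"], "a2": ["c1"]}, q, sigma)
print(truthful)    # a1 receives only c2, a2 receives c1
print(misreport)   # a1 receives both courses, a2 receives nothing
```

Under her true preferences, a1 compares the two outcomes lexicographically: both contain her top course c2, and the second additionally contains c1, so the misreport is profitable.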

The above observation seems to be a deficiency of GSDT. We conclude by showing that no mechanism capable of producing all POMs is immune to this shortcoming.

Theorem 14

There is no universally truthful randomized mechanism that produces all POMs in CA, even if applicants’ preferences are strict and all courses have quota equal to one.

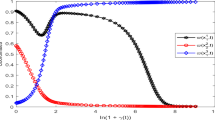

Proof

The instance \(I_{1}\) in Fig. 2 admits three POMs, namely \(\mu _{1} = \{(a_{1}, c_{1}),(a_{2}, c_{2})\}\), \(\mu _{2} = \{(a_{1}, c_{1}),(a_{1}, c_{2})\}\) and \(\mu _{3} = \{(a_{1}, c_{2}),(a_{2}, c_{1})\}\). Assume that a universally truthful randomized mechanism ϕ produces all these matchings. Then there must be a deterministic realization of it, denoted as \(\phi ^{D}\), that returns \(\mu _{1}\) given \(I_{1}\). Let us examine the outcome of \(\phi ^{D}\) under the slightly different applicants’ preferences shown in Fig. 2, bearing in mind that \(\phi ^{D}\) is truthful.

- Under \(I_{2}\), \(\phi ^{D}\) must return \(\mu _{2}\). The only other POM under \(I_{2}\) is \(\mu _{3}\), but if \(\phi ^{D}\) returns \(\mu _{3}\), then \(a_{2}\) under \(I_{1}\) has an incentive to lie and declare only \(c_{1}\) acceptable (as in \(I_{2}\)).

- Under \(I_{3}\), \(\phi ^{D}\) must return \(\mu _{2}\). The only other POM under \(I_{3}\) is \(\mu _{3}\), but if \(\phi ^{D}\) returns \(\mu _{3}\), then \(a_{1}\) under \(I_{3}\) has an incentive to lie and declare that she prefers \(c_{1}\) to \(c_{2}\) (as in \(I_{2}\)).

\(I_{4}\) admits two POMs, namely \(\mu _{2}\) and \(\mu _{3}\). If \(\phi ^{D}\) returns \(\mu _{2}\), then \(a_{1}\) under \(I_{1}\) has an incentive to lie and declare that she prefers \(c_{2}\) to \(c_{1}\) (as in \(I_{4}\)). If \(\phi ^{D}\) returns \(\mu _{3}\), then \(a_{2}\) under \(I_{3}\) has an incentive to lie and declare \(c_{2}\) acceptable, in addition to \(c_{1}\), and less preferred than \(c_{1}\) (as in \(I_{4}\)). Thus overall \(\phi ^{D}\) cannot return a POM under \(I_{4}\) while maintaining truthfulness. □
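
The case analysis above is small enough to check by brute force. The sketch below rests on assumptions that should be read as such: the preference lists of the four instances are reconstructed from the proof text (Fig. 2 is not reproduced here), and the applicant quotas \(b(a_{1})=2\), \(b(a_{2})=1\) are inferred from the listed POMs. The helper names are illustrative. The script enumerates all matchings, filters the Pareto optimal ones under lexicographic preferences, and then searches for a deterministic choice of one POM per instance that returns \(\mu _{1}\) on the first instance and admits no profitable unilateral misreport among the four instances.

```python
from itertools import product

APPLICANTS = ("a1", "a2")
COURSES = ("c1", "c2")
QUOTA = {"a1": 2, "a2": 1}       # assumed applicant quotas; each course has quota 1

# Preference lists (most preferred first), reconstructed from the proof text.
INSTANCES = {
    "I1": {"a1": ["c1", "c2"], "a2": ["c1", "c2"]},
    "I2": {"a1": ["c1", "c2"], "a2": ["c1"]},
    "I3": {"a1": ["c2", "c1"], "a2": ["c1"]},
    "I4": {"a1": ["c2", "c1"], "a2": ["c1", "c2"]},
}
MU1 = {"a1": frozenset({"c1"}), "a2": frozenset({"c2"})}
MU2 = {"a1": frozenset({"c1", "c2"}), "a2": frozenset()}
MU3 = {"a1": frozenset({"c2"}), "a2": frozenset({"c1"})}


def all_matchings(prefs):
    """Give each course to one applicant who accepts it, or leave it empty."""
    options = [[None] + [a for a in APPLICANTS if c in prefs[a]] for c in COURSES]
    for owners in product(*options):
        m = {a: frozenset(c for c, o in zip(COURSES, owners) if o == a)
             for a in APPLICANTS}
        if all(len(m[a]) <= QUOTA[a] for a in APPLICANTS):
            yield m


def profile(pref, bundle):
    """0/1 vector over the preference list; lexicographically larger is better."""
    return tuple(int(c in bundle) for c in pref)


def dominates(m2, m1, prefs):
    """True if nobody is worse off in m2 than in m1 and somebody is better off."""
    p1 = [profile(prefs[a], m1[a]) for a in APPLICANTS]
    p2 = [profile(prefs[a], m2[a]) for a in APPLICANTS]
    return all(x >= y for x, y in zip(p2, p1)) and p2 != p1


def poms(prefs):
    ms = list(all_matchings(prefs))
    return [m for m in ms if not any(dominates(o, m, prefs) for o in ms)]


def is_truthful(choice):
    """No applicant gains by a unilateral misreport within the four instances."""
    for i, true_p in INSTANCES.items():
        for j, lie_p in INSTANCES.items():
            changed = [a for a in APPLICANTS if true_p[a] != lie_p[a]]
            if len(changed) != 1:
                continue
            a = changed[0]
            if profile(true_p[a], choice[j][a]) > profile(true_p[a], choice[i][a]):
                return False
    return True


pom_sets = {name: poms(p) for name, p in INSTANCES.items()}
names = list(INSTANCES)
survivors = []
for combo in product(*(pom_sets[n] for n in names)):
    choice = dict(zip(names, combo))
    if choice["I1"] == MU1 and is_truthful(choice):
        survivors.append(choice)
print(len(survivors))   # prints 0: no truthful choice returns mu_1 on I1
```

Dropping the requirement that the first instance is mapped to \(\mu _{1}\) leaves truthful choices available (for example, always returning \(\mu _{2}\)), which is consistent with the statement that the difficulty arises only when every POM must be reachable.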

6 Future Work

Our work raises several questions. A particularly important problem is to investigate the expected size of the matching produced by the randomized version of GSDT. It is also interesting to characterize the priority orderings that imply truthfulness in GSDT. Given such a characterization, it would be interesting to compute the expected size of the matching produced by a randomized GSDT in which the randomization is taken over the priority orderings that induce truthfulness.

References

Abdulkadiroǧlu, A., Sönmez, T.: Random serial dictatorship and the core from random endowments in house allocation problems. Econometrica 66(3), 689–701 (1998)

Abraham, D.J., Cechlárová, K., Manlove, D.F., Mehlhorn, K.: Pareto optimality in house allocation problems. In: Proc. ISAAC ’04, volume 3341 of LNCS, pp. 3–15. Springer (2004)

Ahuja, R. K., Magnanti, T. L., Orlin, J. B.: Network Flows: Theory, Algorithms, and Applications. Prentice-Hall, Inc., Upper Saddle River (1993)

Bogomolnaia, A., Moulin, H.: A new solution to the random assignment problem. J. Econ. Theory 100(2), 295–328 (2001)

Bogomolnaia, A., Moulin, H.: Random matching under dichotomous preferences. Econometrica 72(1), 257–279 (2004)

Budish, E., Cantillon, E.: The multi-unit assignment problem: Theory and evidence from course allocation at Harvard. Amer. Econ. Rev. 102(5), 2237–71 (2012)

Cechlárová, K., Eirinakis, P., Fleiner, T., Magos, D., Manlove, D., Mourtos, I., Oceľáková, E., Rastegari, B.: Pareto optimal matchings in many-to-many markets with ties. In: Proceedings of the Eighth International Symposium on Algorithmic Game Theory (SAGT’15), pp. 27–39 (2015)

Cechlárová, K., Eirinakis, P., Fleiner, T., Magos, D., Mourtos, I., Potpinková, E.: Pareto optimality in many-to-many matching problems. Discret. Optim. 14(0), 160–169 (2014)

Chen, N., Ghosh, A.: Algorithms for Pareto stable assignment. In: Conitzer, V., Rothe, J. (eds.) Proc. COMSOC ’10, pp. 343–354. Düsseldorf University Press (2010)

Cherkassky, B. V., Goldberg, A. V.: Negative-cycle detection algorithms. Math. Program. 85, 227–311 (1999)

Fujita, E., Lesca, J., Sonoda, A., Todo, T., Yokoo, M.: A complexity approach for core-selecting exchange with multiple indivisible goods under lexicographic preferences. In: Proc. AAAI ’15 (2015)

Gärdenfors, P.: Assignment problem based on ordinal preferences. Manag. Sci. 20(3), 331–340 (1973)

Gigerenzer, G., Goldstein, D. G.: Reasoning the fast and frugal way: Models of bounded rationality. Psychol. Rev. 103(4), 650–669 (1996)

Goldberg, A.V.: Scaling algorithms for the shortest paths problem. Technical Report STAN-CS-92-1429, Stanford University (Stanford, CA, US) (1992)

Hylland, A., Zeckhauser, R.: The efficient allocation of individuals to positions. J. Polit. Econ. 87(2), 293–314 (1979)

Klaus, B., Miyagawa, E.: Strategy-proofness, solidarity, and consistency for multiple assignment problems. Int. J. Game Theory 30, 421–435 (2001)

Krysta, P., Manlove, D., Rastegari, B., Zhang, J.: Size versus truthfulness in the House Allocation problem. Technical Report 1404.5245, Computing Research Repository, Cornell University Library. A shorter version appeared in the Proceedings of EC’14 (2014)

Manlove, D.F.: Algorithmics of Matching Under Preferences. World Scientific (2013)

Saban, D., Sethuraman, J.: The complexity of computing the random priority allocation matrix. In: Proc. WINE ’13, volume 8289 of LNCS, pp. 421. Springer (2013)

Svensson, L. G.: Queue allocation of indivisible goods. Soc. Choice Welfare 11(4), 323–330 (1994)

Zhou, L.: On a conjecture by Gale about one-sided matching problems. J. Econ. Theory 52(1), 123–135 (1990)

Acknowledgments

This research has been co-financed by the European Union (European Social Fund - ESF) and Greek national funds under Thales grant MIS 380232 (Eirinakis, Mourtos), by grant EP/K010042/1 from the Engineering and Physical Sciences Research Council (Manlove, Rastegari), grants VEGA 1/0344/14, 1/0142/15 from the Slovak Scientific grant agency VEGA (Cechlárová), student grant VVGS-PF-2014-463 (Oceľáková) and OTKA grant K108383 (Fleiner). The authors gratefully acknowledge the support of COST Action IC1205 on Computational Social Choice.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Cite this article

Cechlárová, K., Eirinakis, P., Fleiner, T. et al. Pareto Optimal Matchings in Many-to-Many Markets with Ties. Theory Comput Syst 59, 700–721 (2016). https://doi.org/10.1007/s00224-016-9677-1
