Abstract

The inherent stochasticity of gene expression in the context of regulatory networks profoundly influences the dynamics of the involved species. Mathematically speaking, the propagators which describe the evolution of such networks in time are typically defined as solutions of the corresponding chemical master equation (CME). However, it is not possible in general to obtain exact solutions to the CME in closed form, which is due largely to its high dimensionality. In the present article, we propose an analytical method for the efficient approximation of these propagators. We illustrate our method on the basis of two categories of stochastic models for gene expression that have been discussed in the literature. The requisite procedure consists of three steps: a probability-generating function is introduced which transforms the CME into (a system of) partial differential equations (PDEs); application of the method of characteristics then yields (a system of) ordinary differential equations (ODEs) which can be solved using dynamical systems techniques, giving closed-form expressions for the generating function; finally, propagator probabilities can be reconstructed numerically from these expressions via the Cauchy integral formula. The resulting ‘library’ of propagators lends itself naturally to implementation in a Bayesian parameter inference scheme, and can be generalised systematically to related categories of stochastic models beyond the ones considered here.


1 Introduction

1.1 Motivation

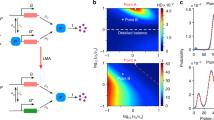

Understanding the process of gene expression in the context of gene regulatory networks is indispensable for gaining insight into the fundamentals of numerous biological processes. However, gene expression can be highly stochastic in nature, both in prokaryotic and in eukaryotic organisms; see e.g. the work by Elowitz et al. (2002), Raj and Oudenaarden (2008), Shahrezaei and Swain (2008b), and the references therein. This inherent stochasticity has a profound influence on the dynamics of the involved species, in particular when their abundance is low. Therefore, gene expression is often appropriately described by stochastic models (Bressloff 2014; Karlebach and Shamir 2008; Thattai and Oudenaarden 2001; Wilkinson 2009). A schematic of the canonical model for gene expression is depicted in Fig. 1. Here, the processes of transcription, translation, and degradation are approximated by single rates.

Fig. 1 The canonical model of gene expression. Transcription of mRNA occurs with rate \(\nu _0\); mRNA is translated to protein with rate \(\nu _1\). Both mRNA and protein decay, with rates \(d_0\) and \(d_1\), respectively. Figure courtesy of Shahrezaei and Swain (2008a) (Copyright (2008) National Academy of Sciences, U.S.A.)

To test the validity of such stochastic models, a comparison with experimental data needs to be performed. The development of experimental techniques, such as time-lapse fluorescence microscopy (Coutu and Schroeder 2013; Elowitz et al. 2002; Larson et al. 2011; Muzzey and Oudenaarden 2009; Raj et al. 2006; Young et al. 2011), allows for real-time tracking of gene expression dynamics in single cells, providing mRNA or protein abundance time series, such as those depicted in Fig. 2. To select between competing hypotheses on the underlying regulatory networks given measurement data, as well as to infer the values of the corresponding model parameters, we can apply Bayesian inference theory to calculate the likelihood of a given model, which is constructed as follows.

The abundance of a protein, denoted by n, is sampled at times \(t_i\), see Fig. 2, yielding a list of measurement pairs \((t_i,n_i)\) from which transitions \((\Delta t,n_i \rightarrow n_{i+1})\) between states can be extracted; here, \(\Delta t = t_{i+1}-t_i\) is the fixed sampling interval. Next, the underlying stochastic model with parameter set \(\Theta \) is used to calculate the probabilities of these transitions, which are denoted by \(P_{n_{i+1} | n_i}(\Delta t)\). These so-called propagators give the probability of \(n_{i+1}\) protein being present after time \(\Delta t\), given an initial protein abundance of \(n_i\). The log-likelihood \(L(\Theta |D)\) of the parameter set \(\Theta \), given the observed data D, is now defined in terms of the propagators as

\[ L(\Theta |D) = \sum _{i} \ln P_{n_{i+1} | n_i}(\Delta t). \tag{1.1} \]

To infer the values of parameters in the model, propagators are calculated for a wide range of parameter combinations, resulting in a ‘log-likelihood landscape’; the maximal value of the log-likelihood as a function of the model parameters yields the most likely parameter values, given the experimental data. An example realisation of the above procedure can e.g. be found in the work by Feigelman et al. (2015).
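As a minimal sketch of how (1.1) turns a list of samples into a log-likelihood landscape, the Python snippet below uses a deliberately simple stand-in for the propagator. Protein is produced at a constant rate k and decays at unit rate (time measured in protein lifetimes), and there is no gene switching. For this immigration-death process the propagator is the convolution of a binomial distribution (surviving initial protein) and a Poisson distribution (newly produced protein). The function names, the time series and the grid over k are hypothetical and purely illustrative.

```python
import math


def propagator_immigration_death(n, n0, t, k):
    """Toy propagator P_{n|n0}(t): constant production at rate k, unit decay rate.
    Survivors of the n0 initial proteins are Binomial(n0, e^-t); proteins made
    during [0, t] are Poisson(k (1 - e^-t)); the propagator is their convolution."""
    p = math.exp(-t)
    lam = k * (1.0 - p)
    total = 0.0
    for j in range(min(n, n0) + 1):          # j = number of surviving initial proteins
        survivors = math.comb(n0, j) * p**j * (1.0 - p) ** (n0 - j)
        produced = math.exp(-lam) * lam ** (n - j) / math.factorial(n - j)
        total += survivors * produced
    return total


def log_likelihood(counts, dt, k):
    """Eq. (1.1): sum of log propagators over consecutive samples (n_i, n_{i+1})."""
    return sum(math.log(propagator_immigration_death(n1, n0, dt, k))
               for n0, n1 in zip(counts[:-1], counts[1:]))


counts = [10, 12, 11, 14, 13, 12, 15, 14]   # hypothetical protein time series
dt = 0.2                                    # sampling interval (protein lifetimes)
grid = [5.0 + 0.5 * i for i in range(41)]   # candidate production rates k
landscape = [(k, log_likelihood(counts, dt, k)) for k in grid]
k_best, L_best = max(landscape, key=lambda pair: pair[1])
print("most likely k on the grid:", k_best)
```

Replacing the toy propagator with propagators of models A or B, and the one-dimensional grid with a grid over all parameters in \(\Theta \), would give the landscape described above.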

To calculate the log-likelihood in (1.1) accurately, we must be able to extract the values of the propagators from the underlying stochastic model for any desired combination of parameters in \(\Theta \). In particular, we need to be able to calculate the propagator \(P_{n|n_0}(t)\) as a function of time t for any initial protein number \(n_0\). Unfortunately, the complexity of the stochastic models involved makes it very difficult to obtain explicit expressions for these probabilities. Some analytical progress can be made under a steady-state approximation, i.e. when the system is assumed to evolve for long enough to converge to a time-independent state. However, the sampling interval \(\Delta t\) used for obtaining experimental data, as seen in Fig. 2, is often short relative to the protein lifetime. That lifetime is a natural time scale for the system dynamics. Hence the evolution of the probabilities \(P_{n|n_0}(t)\) should be studied over short times, in contrast to the steady-state or long-evolution-time approximations that have previously been employed to derive analytical results (Bokes et al. 2012a; Hornos et al. 2005; Iyer-Biswas and Jayaprakash 2014; Shahrezaei and Swain 2008a).

The complex nature of stochastic models for gene expression has led to the widespread use of stochastic simulation techniques, such as Gillespie’s algorithm (Gillespie 1977), with the aim of predicting values for the associated propagators from these models; see Feigelman et al. (2016) for recent work combining stochastic simulation with a particle filtering approach. However, these approaches can still be very time-consuming, due to the (relatively) high dimensionality of the model parameter space, combined with the fact that, for each combination of parameter values, the stochastic model has to be simulated sufficiently many times to yield a probability distribution that can be used to infer the corresponding propagator. For that reason, it is desirable to be able to obtain explicit expressions for the propagator \(P_{n|n_0}(t)\) directly in terms of the model parameters, if necessary in an appropriate approximation.
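To make the cost of the simulation route concrete, here is a minimal sketch of Gillespie's direct method for the canonical model of Fig. 1. It estimates \(P_{n|n_0}(t)\) from a histogram of many independent trajectories. The initial mRNA number is an assumption (zero by default), since the protein propagator alone does not fix it. All function names are hypothetical.

```python
import random
from collections import Counter


def ssa_canonical(m, n, t_end, nu0, nu1, d0, d1, rng):
    """One trajectory of the canonical model (Fig. 1) by Gillespie's direct
    method, started from m mRNA and n protein; returns the protein number
    at time t_end."""
    t = 0.0
    while True:
        rates = (nu0, nu1 * m, d0 * m, d1 * n)  # transcription, translation, decays
        total = sum(rates)
        if total == 0.0:
            return n                      # absorbing state: nothing can happen
        t += rng.expovariate(total)       # waiting time to the next reaction
        if t > t_end:
            return n
        u = rng.random() * total          # choose which reaction fires
        if u < rates[0]:
            m += 1
        elif u < rates[0] + rates[1]:
            n += 1
        elif u < rates[0] + rates[1] + rates[2]:
            m -= 1
        else:
            n -= 1


def estimate_propagator(n0, t, params, runs=20000, m0=0, seed=1):
    """Histogram estimate of P_{n|n0}(t); params = (nu0, nu1, d0, d1).
    The initial mRNA number m0 is an assumption (default: no mRNA)."""
    rng = random.Random(seed)
    counts = Counter(ssa_canonical(m0, n0, t, *params, rng) for _ in range(runs))
    return {n: c / runs for n, c in sorted(counts.items())}


dist = estimate_propagator(10, 0.5, (2.0, 5.0, 10.0, 1.0), runs=2000)
```

Each propagator estimate requires thousands of trajectories, and its Monte Carlo error decreases only like the inverse square root of `runs`. This cost is incurred again for every parameter combination.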

1.2 Analytical method

In the present article, we develop an analytical method for the efficient evaluation of time-dependent propagators in stochastic gene expression models, for arbitrary values of the model parameters. The results of our analysis can be implemented in a straightforward fashion in a Bayesian parameter inference framework, as outlined above.

To demonstrate our approach, we analyse two different stochastic models for gene expression. The first model, henceforth referred to as ‘model A’, is a model that incorporates autoregulation, where transcription and translation are approximated by single rates and protein can either stimulate or inhibit its own production by influencing the activity of DNA; see Fig. 3. That model was first studied by Iyer-Biswas and Jayaprakash (2014) via a steady-state approximation. The second model, henceforth referred to as ‘model B’, models both mRNA and protein explicitly and again incorporates DNA switching between an active and an inactive state; see Fig. 4. That model was first studied by Shahrezaei and Swain (2008a) in a long-evolution-time approximation.

Fig. 3 Schematic of model A, a gene expression model with autoregulation. Base figure courtesy of Shahrezaei and Swain (2008a) (Copyright (2008) National Academy of Sciences, U.S.A.)

Both model A and model B are formulated in terms of the chemical master equation (CME), which is the accepted mathematical representation of stochastic gene expression in the context of the model categories considered here; cf. Iyer-Biswas and Jayaprakash (2014) and Shahrezaei and Swain (2008a), respectively. Mathematically speaking, the CME is an infinite-dimensional system of linear ordinary differential equations (ODEs) that describes the evolution in time of the probabilities of observing a specific state in the system, given some initial state. Numerous approaches have been suggested for the (approximate) solution of the CME; see e.g. Popović et al. (2016) and the references therein for details. Our method relies on a combination of various techniques from the theory of differential equations and dynamical systems; specifically, we perform three consecutive steps, as follows.

1. CME system \(\rightarrow \) PDE system: We introduce a probability-generating function to convert the CME into (a system of) partial differential equations (PDEs).

2. PDE system \(\rightarrow \) ODE system: Applying the method of characteristics, combined if necessary with perturbation techniques, we transform the system of PDEs obtained in step 1 into a dynamical system, that is, a system of ODEs.

3. ODE system \(\rightarrow \) Explicit solution: Making use of either special functions (model A) or multiple-time-scale analysis (model B), we obtain explicit solutions to the dynamical system found in step 2.

We emphasise that the ‘characteristic system’ of ODEs obtained in step 2 is low-dimensional, in contrast to the underlying CME system. It also exhibits additional structure, which allows for the derivation of a closed-form analytical approximation for the associated generating function.

To convert the results of the above procedure into solutions to the original stochastic model, the three steps involved in our analysis have to be reversed. To that end, we require the following three ingredients:

1. Initial conditions are originally stated in terms of the CME. They first have to be reformulated in terms of the corresponding system of PDEs to ensure well-posedness. Initial conditions can then be extracted for the dynamical system obtained via the method of characteristics, reversing step 3.

2. To transform solutions to the characteristic system into solutions to the underlying PDE system, the associated ‘characteristic transformation’ has to be inverted, reversing step 2.

3. Lastly, solutions to the CME have to be extracted from solutions to the resulting PDE system, reversing step 1. Although the correspondence between the two sets of solutions is exact in theory, the complexity of the expressions involved precludes the efficient analytical reconstruction of propagators from their generating functions. Therefore, we propose a novel hybrid analytical-numerical approach which relies on the Cauchy integral formula.
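As an illustration of the last ingredient, the sketch below recovers the coefficients of a generating function from its values on a circle \(|z|=r\). It applies the trapezoidal rule to the Cauchy integral formula \(P_n = \frac{1}{2\pi \mathrm{i}}\oint F(z)\,z^{-n-1}\,\mathrm{d}z\). This is not necessarily the scheme developed in Sect. 4. The radius r, the number of nodes N and the Poisson test case are illustrative assumptions.

```python
import cmath
import math


def coefficients_from_gf(F, n_max, N=256, r=1.0):
    """Approximate P_0, ..., P_{n_max} from a generating function F(z) via the
    Cauchy integral formula, discretised with the trapezoidal rule on |z| = r."""
    values = [F(r * cmath.exp(2j * math.pi * k / N)) for k in range(N)]
    probs = []
    for n in range(n_max + 1):
        s = sum(v * cmath.exp(-2j * math.pi * k * n / N) for k, v in enumerate(values))
        probs.append((s / (N * r**n)).real)   # imaginary part is round-off only
    return probs


# Check against a Poisson distribution, whose generating function is exp(lam (z - 1)).
lam = 4.0
P = coefficients_from_gf(lambda z: cmath.exp(lam * (z - 1.0)), n_max=10)
exact = [math.exp(-lam) * lam**n / math.factorial(n) for n in range(11)]
print(max(abs(a - b) for a, b in zip(P, exact)))  # expected to be tiny
```

With nodes \(z_k = r\,\mathrm{e}^{2\pi \mathrm{i}k/N}\), the computed \(P_n\) equals \(P_n + P_{n+N}r^{N} + P_{n+2N}r^{2N} + \dots \). N should therefore exceed the range of protein numbers that carry appreciable probability.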

The various steps in our analytical method, as indicated above, are represented in Fig. 5. It is important to mention that the implementation of Bayesian parameter inference, as outlined in Sect. 1.1, is not a topic for the present article; rather, the aim here is to describe our method, and to present analytical results which can readily be implemented in the context of parameter inference. The article hence realises the first stage of our research programme; the natural next stage, which is precisely that implementation, will be the subject of a follow-up article by the same authors.

1.3 Outline

The present article is organised as follows. In Sect. 2, we apply the analytical method outlined in Sect. 1.2 to model A, the gene expression model with autoregulation. Here, we use a perturbative approach to incorporate the autoregulatory aspects of the model; the resulting dynamical system can be solved in terms of confluent hypergeometric functions, see §13 of the NIST Digital Library of Mathematical Functions. In Sect. 3, the same method is applied to model B, the model that explicitly incorporates transcription. We also indicate how autoregulation can be added to that model, and how the resulting extended model can be analysed on the basis of our treatment of model A. The analysis carried out in Sects. 2 and 3 yields a ‘library’ of explicit asymptotic expressions for the probability-generating functions associated with the underlying stochastic models. To obtain computable expressions for their propagators, we introduce a novel hybrid analytical–numerical approach in Sect. 4. That approach can be readily implemented in the Bayesian parameter inference framework that motivated our analysis; see Sect. 1.1. We conclude with a discussion of our results, and an outlook to future work, in Sect. 5.

2 Model A: gene expression with autoregulation

We first demonstrate our analytical method in the context of an autoregulatory stochastic gene expression model, as presented by Iyer-Biswas and Jayaprakash (2014); see also Fig. 3. In the original article (Iyer-Biswas and Jayaprakash 2014), a Poisson representation was used to obtain analytical descriptions for time-independent solutions to the model. For a visual guide to the upcoming analysis, the reader is referred to Fig. 5.

2.1 Stochastic model and CME

The basic stochastic model for gene expression is represented by the reaction scheme

The gene can hence switch between the inactive state D and the active state \(D^*\), with switching rates \(c_f\) and \(c_b\), respectively. The active gene produces protein (P) with rate \(p_b\), while protein decays with rate \(p_d\).

The autoregulatory part of the model is implemented as either positive or negative feedback:

In the case of autoactivation, viz. (2.2a), protein induces activation of the gene with activation rate a, thereby accelerating its own production; in the case of autorepression, viz. (2.2b), protein deactivates the active gene with repression rate r, impeding its own production.

The CME system that is associated to the reaction scheme in (2.1), with autoactivation as in (2.2a), is given by

Here, \(P^{(j)}_n(t)\) (\(j=0,1\)) represents the probability of n protein being present at time t while the gene is either inactive (0) or active (1). The time variable is nondimensionalised by the protein decay rate \(p_d\); other model parameters are scaled as

Analogously, the CME system for the case of autorepression, as defined in (2.2b), is given by

Remark 2.1

A priori, it is possible to incorporate both autoactivation and autorepression in a single model by merging systems (2.3) and (2.5). However, autoactivation and autorepression act in opposite directions, so their effects would partially cancel, resulting in net activation or net repression. The simultaneous inclusion of both effects would hence introduce superfluous terms and parameters, which could be considered poor modelling practice. Therefore, we model the two autoregulation mechanisms separately.
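For checking generating-function results numerically, a brute-force alternative is to integrate a truncated version of the CME directly. The sketch below does this for model A, using dimensional rates rather than the scaled parameters of (2.4). It makes the following assumptions, together with all function names, which are hypothetical:

- \(c_f\) is the rate of \(D \rightarrow D^*\) and \(c_b\) that of \(D^* \rightarrow D\).
- The autoregulation reactions in (2.2) switch the gene at rates \(a\,n\) and \(r\,n\) without consuming protein.
- Production is blocked at the truncation level \(N-1\), so that total probability is conserved.

```python
def cme_rhs(P0, P1, cf, cb, pb, pd, a=0.0, r=0.0):
    """Time derivative of a truncated model-A CME with dimensional rates.

    P0[n], P1[n]: probability of n proteins with the gene inactive (D) or
    active (D*). Assumed reactions (a hypothetical reading of (2.1), (2.2)):
    D -> D* at rate cf + a*n, D* -> D at rate cb + r*n (protein not consumed),
    D* -> D* + P at rate pb, P -> 0 at rate pd*n.
    """
    N = len(P0)
    dP0 = [0.0] * N
    dP1 = [0.0] * N
    for n in range(N):
        on = (cf + a * n) * P0[n]     # gene switches on
        off = (cb + r * n) * P1[n]    # gene switches off
        dP0[n] += off - on
        dP1[n] += on - off
        for P, dP in ((P0, dP0), (P1, dP1)):
            dP[n] -= pd * n * P[n]                 # decay out of state n
            if n + 1 < N:
                dP[n] += pd * (n + 1) * P[n + 1]   # decay into state n
        if n + 1 < N:                              # production blocked at n = N - 1,
            dP1[n] -= pb * P1[n]                   # so probability is conserved
            dP1[n + 1] += pb * P1[n]
    return dP0, dP1


def rk4_step(P0, P1, h, rates):
    """One classical Runge-Kutta step for the linear system above."""
    def shift(x, k, c):
        return [xi + c * ki for xi, ki in zip(x, k)]
    k1 = cme_rhs(P0, P1, *rates)
    k2 = cme_rhs(shift(P0, k1[0], h / 2), shift(P1, k1[1], h / 2), *rates)
    k3 = cme_rhs(shift(P0, k2[0], h / 2), shift(P1, k2[1], h / 2), *rates)
    k4 = cme_rhs(shift(P0, k3[0], h), shift(P1, k3[1], h), *rates)

    def combine(x, i):
        return [xi + h / 6 * (a1 + 2 * a2 + 2 * a3 + a4)
                for xi, a1, a2, a3, a4 in zip(x, k1[i], k2[i], k3[i], k4[i])]
    return combine(P0, 0), combine(P1, 1)


def propagator_model_A(n0, t, chi, cf, cb, pb, pd, a=0.0, r=0.0, N=60, steps=1000):
    """Approximate P_{n|n0}(t), summed over both gene states; chi is the
    probability that the gene is off at t = 0 (cf. Definition 2.4)."""
    P0 = [0.0] * N
    P1 = [0.0] * N
    P0[n0] = chi
    P1[n0] = 1.0 - chi
    h = t / steps
    rates = (cf, cb, pb, pd, a, r)
    for _ in range(steps):
        P0, P1 = rk4_step(P0, P1, h, rates)
    return [x + y for x, y in zip(P0, P1)]


P = propagator_model_A(n0=5, t=0.5, chi=0.5, cf=1.0, cb=1.0, pb=10.0, pd=1.0, a=0.05)
print(sum(P))  # total probability, conserved up to round-off
```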

2.2 Generating function PDE

Rather than investigating the dynamics of (2.3) and (2.5) numerically, using stochastic simulation, we aim to employ an analytical approach. To that end, we define the probability-generating functions \(F^{(j)}(z,t)\) (\(j=0,1\)) as follows; see e.g. Gardiner (2009):

\[ F^{(j)}(z,t) = \sum _{n=0}^{\infty } P^{(j)}_n(t)\, z^n \qquad (j=0,1). \tag{2.6} \]

In the case of autoactivation, the generating functions \(F^{(j)}(z,t)\) can be seen to satisfy

if the coefficients \(P^{(j)}_n(t)\) in (2.6) obey the CME system (2.3); likewise, in the case of autorepression, (2.5) gives rise to

Both (2.7) and (2.8) are systems of coupled, linear, first-order, hyperbolic partial differential equations (PDEs). Systems of this type are typically difficult to analyse; existing techniques only provide general results (Courant and Hilbert 1962; Taylor 2011).

To allow for an explicit analysis of systems (2.7) and (2.8), we make the following assumption:

Assumption 2.2

We assume that the autoactivation rate a in (2.2) is small in comparison with the other model parameters; specifically, we write

where \(0<\delta <1\) is sufficiently small. Likewise, we assume that the autorepression rate r is small in comparison with the other model parameters, writing

Previous work on the inclusion of autoregulatory effects in model selection by Feigelman et al. (2016) suggests that, in the context of Nanog expression in mouse embryonic stem cells, autoregulation rates are indeed small compared to other model parameters.

Based on Assumption 2.2, we can expand the generating functions \(F^{(j)}\) (\(j=0,1\)) as power series in \(\delta \):

\[ F^{(j)} = \sum _{m=0}^{\infty } \delta ^m F^{(j)}_m \qquad (j=0,1). \tag{2.11} \]

Substitution of (2.11) into (2.7) yields

analogously, we substitute (2.11) into (2.8) to find

We observe that, in (2.12) and (2.13), the same leading-order differential operator acts on both \(F^{(0)}_m\) and \(F^{(1)}_m\), which allows us to apply the method of characteristics to solve the equations for \(F^{(j)}_m\) (\(j=0,1\)) simultaneously. In particular, we emphasise that, mathematically speaking, the resulting perturbation is regular in the perturbation parameter \(\delta \).

2.3 Dynamical systems analysis

In this section, we apply the method of characteristics to derive the ‘characteristic equations’ associated with the PDE systems (2.12) and (2.13), respectively. These characteristic equations are systems of ODEs, which are naturally analysed in the language of dynamical systems.

2.3.1 Autoactivation

We first consider the case of autoactivation; to that end, we rewrite system (2.12) as

where we have introduced the new variable

\[ v = z - 1. \tag{2.15} \]

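The differential operator \(\partial _t + v \partial _v\) in Eq. (2.14) gives rise to characteristics \(\xi (s;v_0)\) that obey the characteristic equation

\[ \frac{\mathrm{d}\xi }{\mathrm{d}s} = \xi , \qquad \xi (0;v_0) = v_0; \tag{2.16} \]

these characteristics can thus be expressed as

\[ \xi (s;v_0) = v_0\,\mathrm{e}^{s}. \tag{2.17} \]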

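Since the partial differential operators in (2.14) transform into

\[ \partial _t + v\,\partial _v = \partial _s, \qquad \partial _v = \mathrm{e}^{-s}\,\partial _{v_0}, \tag{2.18} \]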

we arrive at the following system:

Note that Eq. (2.19) is a recursive (nonhomogeneous) system of ordinary differential equations for \(F^{(j)}_m\) (\(j=0,1\)). Henceforth, we will therefore refer to (2.19) as such, while retaining the use of partial derivatives \(\partial _s\) due to the presence of \(\partial _{v_0}\) in the corresponding right-hand sides.

To solve system (2.19), we rewrite it as a second-order ODE for \(F^{(0)}_m\): we hence obtain

which can be solved recursively to determine \(F^{(0)}_m\) for any \(m\ge 0\). To simplify (2.20), we introduce the variable

which transforms the partial derivatives \(\partial _s\) and \(\partial _{v_0}\) into

Equation (2.20) hence reads

Using (2.19a), we can express the second component \(F^{(1)}_m\) in terms of \(F^{(0)}_m\) as

At leading order, i.e. for \(m=0\), (2.23) reduces to

the solutions of which can be expressed in terms of the confluent hypergeometric function \({}_1 F_1\), see §13 of the NIST Digital Library of Mathematical Functions, to yield

Using (2.24), we can determine

with \(F^{(0)}_0\) as given in (2.26); for an explicit expression, see Eq. (A.1) in Appendix A.
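For numerical evaluation, \({}_1F_1(a;b;x) = \sum _{k\ge 0} \frac{(a)_k}{(b)_k}\frac{x^k}{k!}\) can be summed directly for moderate |x|. It solves Kummer's equation \(x\,y'' + (b-x)\,y' - a\,y = 0\) (NIST DLMF §13.2). The sketch below is a minimal stand-alone implementation with a finite-difference check of that equation. For production use, an established routine such as `scipy.special.hyp1f1` or `mpmath.hyp1f1` would be preferable. The parameter values are arbitrary.

```python
import math


def hyp1f1(a, b, x, rtol=1e-15, max_terms=100000):
    """Kummer's function 1F1(a; b; x) = sum_k (a)_k / (b)_k * x^k / k!,
    summed term by term (b must not be 0, -1, -2, ...). Suitable for
    moderate |x|; large negative x suffers from cancellation."""
    term = 1.0
    total = 1.0
    for k in range(max_terms):
        term *= (a + k) / (b + k) * x / (k + 1)   # ratio of consecutive terms
        total += term
        # stop once past the largest terms and the tail is negligible
        if k > abs(x) and abs(term) <= rtol * abs(total):
            break
    return total


def kummer_residual(a, b, x, h=1e-4):
    """x y'' + (b - x) y' - a y with y = 1F1(a; b; .), via central differences."""
    y_minus, y_0, y_plus = (hyp1f1(a, b, x + d) for d in (-h, 0.0, h))
    dy = (y_plus - y_minus) / (2 * h)
    d2y = (y_plus - 2 * y_0 + y_minus) / h**2
    return x * d2y + (b - x) * dy - a * y_0


print(hyp1f1(1.0, 2.0, 2.0), (math.exp(2.0) - 1.0) / 2.0)  # should agree
print(kummer_residual(0.7, 1.3, 1.5))                         # should be small
```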

The expression for \(F^{(0)}_0(w)\) in (2.26) allows us to determine the first-order correction \(F^{(0)}_1(w)\): substituting \(m=1\) in (2.23), we obtain

Next, we apply the method of variation of constants to express the solution to (2.28) as

where

with \(F^{(0)}_0\) as given in (2.26). Finally, we may again use (2.24) to determine

with \(F^{(0)}_0\) and \(F^{(0)}_1\) as given in (2.26) and (2.29), respectively.
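The variation-of-constants step behind (2.29) follows the generic formula for \(y'' + p(x)\,y' + q(x)\,y = f(x)\), with fundamental solutions \(y_1, y_2\) and Wronskian \(W = y_1y_2' - y_1'y_2\):

\[ y_p(x) = -y_1(x)\int _{x_1}^{x} \frac{y_2 f}{W}\,\mathrm{d}\sigma + y_2(x)\int _{x_2}^{x} \frac{y_1 f}{W}\,\mathrm{d}\sigma . \]

Here the lower limits play the role of free constants such as \(c_3\) and \(c_4\). The sketch below applies this formula, with Simpson quadrature, to the toy problem \(y'' - y = 1\), for which \(y_1 = \mathrm{e}^x\) and \(y_2 = \mathrm{e}^{-x}\). The choice \(x_1 = x_2 = 0\) then gives \(\cosh x - 1\). The example is illustrative only and is not Eq. (2.28) itself.

```python
import math


def simpson(g, lo, hi, n=200):
    """Composite Simpson rule for the integral of g over [lo, hi] (n even)."""
    h = (hi - lo) / n
    s = g(lo) + g(hi)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * g(lo + i * h)
    return s * h / 3


def particular_solution(x, y1, y2, dy1, dy2, f, x1=0.0, x2=0.0):
    """Variation of constants for y'' + p y' + q y = f, given fundamental
    solutions y1, y2 and their derivatives; x1, x2 are the free lower
    integration limits."""
    def W(s):  # Wronskian y1 y2' - y1' y2
        return y1(s) * dy2(s) - dy1(s) * y2(s)
    A = -simpson(lambda s: y2(s) * f(s) / W(s), x1, x)
    B = simpson(lambda s: y1(s) * f(s) / W(s), x2, x)
    return A * y1(x) + B * y2(x)


# Toy problem y'' - y = 1 with y1 = e^x, y2 = e^-x: exact answer cosh(x) - 1.
y = particular_solution(1.0, math.exp, lambda s: math.exp(-s),
                        math.exp, lambda s: -math.exp(-s), lambda s: 1.0)
print(y, math.cosh(1.0) - 1.0)
```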

At this point in the analysis, the constants \(c_{1}\) and \(c_{2}\) in (2.26) and the integration limits \(c_{3}\) and \(c_{4}\) in (2.29) remain undetermined. To fix these constants, and thereby determine a unique solution to (2.19), we have to prescribe appropriate initial conditions.

2.3.2 Autorepression

Given the analysis of autoactivation in the previous subsection, the case of autorepression can be analysed in an analogous manner. Employing the same characteristics as before, recall (2.17), we obtain

from system (2.13); cf. Eq. (2.19). Next, we rewrite (2.32) as a second-order ODE for \(F^{(1)}_m\), using again the variable transformation in (2.21):

which can be solved recursively to obtain \(F^{(1)}_m\) for any \(m\ge 0\). The first component \(F^{(0)}_m\) can be expressed in terms of \(F^{(1)}_m\) as

to leading order, we thus obtain

and

The corresponding equation for the first-order correction \(F^{(1)}_1\) reads

which can be solved via the method of variation of constants to give

here,

with \(F^{(1)}_0\) as in (2.35). The first-order correction \(F^{(0)}_1\) to the first component is hence given by

with \(F^{(1)}_0\) and \(F^{(1)}_1\) as in (2.35) and (2.38), respectively. As in the case of autoactivation, the constants \(\hat{c}_{1}\) and \(\hat{c}_2\) in (2.35) and the integration limits \(\hat{c}_{3}\) and \(\hat{c}_4\) in (2.38) remain undetermined, and have to be fixed through suitable initial conditions.

2.4 Initial conditions

To determine appropriate initial conditions for the dynamical systems (2.19) and (2.32), we consider the original CME systems (2.3) and (2.5), respectively.

At time \(t=0\), we impose an initial protein number \(n=n_0\), which implies

\[ P^{(0)}_n(0) + P^{(1)}_n(0) = \delta _{n,n_0} \tag{2.41} \]

for the probabilities \(P^{(j)}_n(t)\) (\(j=0,1\)); here, \(\delta _{n,n_0}\) denotes the standard Kronecker symbol, with \(\delta _{n,n_0}=1\) for \(n=n_0\) and \(\delta _{n,n_0}=0\) otherwise. Using the definition of the generating functions \(F^{(j)}(z,t)\) in (2.6), we find that

\[ F^{(0)}(v,0) + F^{(1)}(v,0) = (1+v)^{n_0}, \tag{2.42} \]

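taking into account the change of variables in (2.15). Thus, (2.42) provides an initial condition for the PDE systems (2.7) and (2.8). Given the power series expansion in (2.11), we infer that the coefficients \(F^{(j)}_m(v,t)\) (\(j=0,1\)) satisfy

\[ F^{(0)}_0(v,0) + F^{(1)}_0(v,0) = (1+v)^{n_0}, \qquad F^{(0)}_m(v,0) + F^{(1)}_m(v,0) = 0 \quad (m \ge 1), \tag{2.43} \]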

which holds for both (2.12) and (2.13).

To be able to interpret the initial conditions in (2.43) in the context of the dynamical systems (2.19) and (2.32), we revisit the method of characteristics, which was used to map the PDE systems (2.12) and (2.13) to the former, respectively.

The characteristics of the differential operator \(\partial _t + v \partial _v(=\partial _s)\) in (2.19) and (2.32) are the integral curves of the vector field (1, v). Geometrically speaking, these characteristic curves foliate the (t, v)-plane over the v-axis. Therefore, each characteristic can be identified by its base point, which is the point where the characteristic curve intersects the v-axis, at \(v=v_0\); see Eq. (2.17) and Fig. 6.
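A minimal numerical sketch of this geometry follows, assuming nothing beyond the vector field (1, v). It integrates the characteristic through a base point with the classical fourth-order Runge-Kutta scheme and compares the result with the closed form \(v = v_0\,\mathrm{e}^{s}\). It then maps a point (t, v) back to its base point via \(v_0 = v\,\mathrm{e}^{-t}\). Function names are hypothetical.

```python
import math


def characteristic(s, v0):
    """Closed-form characteristic of d/dt + v d/dv through (0, v0): (t, v) = (s, v0 e^s)."""
    return s, v0 * math.exp(s)


def base_point(t, v):
    """Inverse transformation: parameter s and base point v0 of the characteristic through (t, v)."""
    return t, v * math.exp(-t)


def integrate_characteristic(v0, s_end, steps=1000):
    """Integrate dt/ds = 1, dv/ds = v from (0, v0) with classical RK4."""
    h = s_end / steps
    v = v0
    for _ in range(steps):
        k1 = v
        k2 = v + 0.5 * h * k1
        k3 = v + 0.5 * h * k2
        k4 = v + h * k3
        v += h / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return s_end, v   # t = s exactly, since dt/ds = 1


t, v = integrate_characteristic(-0.4, 1.5)
print(v, characteristic(1.5, -0.4)[1])  # numerical vs closed form
print(base_point(t, v))                 # recovers (1.5, approximately -0.4)
```

The trivial characteristic \(v_0 = 0\) stays on the line \(\{v=0\}\) for all s, which is used in Remark 2.3 below.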

Equivalently, each characteristic can be represented as a graph over the t-axis. Indeed, the differential equation (2.16) for the v-component of a characteristic curve can be solved to obtain the natural parametric description of a characteristic ‘fibre’ with base point \(v=v_0\), which is given by \((s,v_0 \mathrm{e}^{s})\). Here, the parameter s along the characteristic is chosen such that the characteristic intersects the v-axis at \(s=0\). Given that choice, it is natural to identify the parameter s along the characteristic with the time variable t. Hence, the initial conditions in (2.43), which determine a relation between \(F^{(0)}_m\) and \(F^{(1)}_m\) on the v-axis, can be interpreted on every characteristic as

\[ F^{(0)}_0(0;v_0) + F^{(1)}_0(0;v_0) = (1+v_0)^{n_0}, \qquad F^{(0)}_m(0;v_0) + F^{(1)}_m(0;v_0) = 0 \quad (m \ge 1), \tag{2.44} \]

which again holds for both (2.19) and (2.32).

Remark 2.3

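For CME systems such as (2.3) and (2.5), it is customary to impose a ‘normalisation’ condition of the form

\[ \sum _{n=0}^{\infty } \big [ P^{(0)}_n(t) + P^{(1)}_n(t) \big ] = 1 \quad \text{for all } t \ge 0, \tag{2.45} \]

as \(P^{(0)}_n(t)\) and \(P^{(1)}_n(t)\) represent probabilities. Recast in the framework of generating functions, recall (2.6), the above normalisation condition yields the boundary condition

\[ F^{(0)}(1,t) + F^{(1)}(1,t) = 1 \quad \text{for all } t \ge 0. \tag{2.46} \]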

It is worthwhile to note that (2.46) is automatically satisfied whenever (2.43) is imposed: by adding the two equations in system (2.12)—or, equivalently, in (2.13)—one sees that \(F^{(0)}_m+F^{(1)}_m\) satisfies

The line \(\{v=0\}\) is represented by the ‘trivial’ characteristic, which is identified by \(v_0=0\); therefore, on that characteristic, we impose (2.43) to find

which implies that \(F^{(0)}_0 + F^{(1)}_0 = 1\) for all s, as well as that \(F^{(0)}_m + F^{(1)}_m\) vanishes identically for all \(m \ge 1\). Substituting these results into the power series representation for \(F^{(j)}(z,t)\) (\(j=0,1\)) in (2.11), we obtain (2.46).

At this point, it is important to observe that Eq. (2.43) determines a line of initial conditions in the phase spaces of the dynamical systems (2.19) and (2.32). Therefore, at every order in \(\delta \), we can only fix one of the two free parameters that arise in the solution of the corresponding differential equations. In particular, (2.43) only fixes either \(c_1\) or \(c_2\) in (2.26), and either \(c_3\) or \(c_4\) in (2.29), and so forth. That indeterminacy motivates us to introduce a new parameter \(\chi \), which is defined as follows:

Definition 2.4

Consider the CME systems (2.3) and (2.5). We define \(\chi (n_0)\) to be the probability that the gene modelled by the reaction scheme in (2.1) is switched off at time \(t=0\), given an initial protein number \(n_0\).

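Definition 2.4 immediately specifies initial conditions for systems (2.3) and (2.5) via

\[ P^{(0)}_n(0) = \chi (n_0)\,\delta _{n,n_0}, \qquad P^{(1)}_n(0) = \big (1-\chi (n_0)\big )\,\delta _{n,n_0}; \tag{2.49} \]

the above expression, in turn, provides us with a complete set of initial conditions for the PDE systems (2.7) and (2.8), to wit

\[ F^{(0)}(z,0) = \chi (n_0)\, z^{n_0}, \qquad F^{(1)}(z,0) = \big (1-\chi (n_0)\big )\, z^{n_0}. \tag{2.50} \]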

Here, we allow for the fact that \(\chi (n_0)\) may depend on other model parameters, and in particular on the autoregulation rates a and r; for a discussion of alternative options, see Sect. 4.1. We therefore expand \(\chi (n_0)\) as a power series in \(\delta \),

The above expansion can be used to infer a complete set of initial conditions for the PDE systems (2.12) and (2.13), yielding

By the same reasoning that inferred (2.44) from (2.43), we can conclude that the complete set of initial conditions for the dynamical systems (2.19) and (2.32) is given by

We can now use the conditions in (2.53) to determine the free constants \(c_{1}\) and \(c_{2}\) in (2.26), which yields

Analogously, for \(\hat{c}_{1}\) and \(\hat{c}_{2}\) in (2.35), we obtain

with \(c_{1}\) and \(c_{2}\) as in (2.54). Using conversion formulas found in §13.3(i) of the NIST Digital Library of Mathematical Functions, one can show that the expressions resulting from (2.35) and (2.36) match those in (2.27) and (2.26), respectively, as expected; see also Eq. (A.1). Explicit expressions for the integration limits \(c_{3}\) and \(c_{4}\) in (2.29), as well as for the corresponding limits \(\hat{c}_{3}\) and \(\hat{c}_{4}\) in (2.38), can be found in Appendix A.
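To illustrate the initial data implied by Definition 2.4, the sketch below builds the two initial probability vectors \(P^{(0)}_n(0) = \chi (n_0)\,\delta _{n,n_0}\) and \(P^{(1)}_n(0) = (1-\chi (n_0))\,\delta _{n,n_0}\) for a hypothetical choice of \(n_0\) and \(\chi (n_0)\). It assumes, consistently with the operator \(\partial _t + v\,\partial _v\), that the shifted variable in (2.15) is \(v = z - 1\). The code then checks three properties. First, \(F^{(0)}+F^{(1)}\) equals \((1+v)^{n_0}\) at t = 0. Second, the sum equals 1 at v = 0, as required by normalisation. Third, its derivative at v = 0 returns the mean protein number.

```python
def gf_shifted(P, v):
    """Generating function in the shifted variable: sum_n P[n] (1 + v)^n,
    assuming v = z - 1."""
    return sum(p * (1.0 + v) ** n for n, p in enumerate(P))


# Hypothetical initial data: n0 protein, gene off with probability chi.
n0, chi, N = 7, 0.3, 20
P0 = [chi if n == n0 else 0.0 for n in range(N)]          # gene inactive
P1 = [1.0 - chi if n == n0 else 0.0 for n in range(N)]    # gene active

for v in (-0.5, 0.0, 0.8):
    total = gf_shifted(P0, v) + gf_shifted(P1, v)
    print(v, total, (1.0 + v) ** n0)   # the two columns agree; total is 1 at v = 0

h = 1e-6  # derivative at v = 0 gives the mean protein number, here n0
mean = (gf_shifted(P0, h) + gf_shifted(P1, h)
        - gf_shifted(P0, -h) - gf_shifted(P1, -h)) / (2 * h)
print(mean)
```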

2.5 Inverse transformation

The final step towards providing explicit solutions to the PDE systems (2.7) and (2.8) consists in interpreting the solutions to the dynamical systems (2.19) and (2.32), with initial conditions as in (2.53), as solutions to the PDE systems (2.12) and (2.13), respectively. To that end, we again consider the corresponding characteristics from a geometric viewpoint.

As mentioned in Sect. 2.4, the (t, v)-coordinate plane is foliated by characteristics, which are the integral curves of the vector field (1, v); recall Fig. 6. Hence, any point (t, v) lies on a unique characteristic. Flowing backward along that characteristic to its intersection with the v-axis, we can determine the corresponding base point \(v_0\) by the inverse transformation

\[ s = t, \qquad v_0 = v\,\mathrm{e}^{-t}, \tag{2.56} \]

since we have identified the parameter along the characteristic (s) with the time variable (t).

To determine the value of \(F^{(j)}_m(v,t)\) (\(j=0,1\)), interpreted as a solution to the PDE system (2.12) or (2.13), we proceed as follows. We first apply the inverse transformation in (2.56) to establish on which characteristic the coordinate pair (t, v) lies. For that characteristic, identified by its base point \(v_0\), we then find the solution to the dynamical system (2.19) or (2.32), which is a function of s and \(v_0\). Next, we substitute \(s = t\) and \(v_0 = v\mathrm{e}^{-t}\) into that solution to obtain an explicit expression for the solution to the PDE system (2.12) or (2.13):

\[ F^{(j)}_m(v,t) = F^{(j)}_m(s;v_0)\big |_{s=t,\ v_0=v\,\mathrm{e}^{-t}}, \tag{2.57} \]

where \(F^{(j)}_m\) (\(j=0,1\)) on the right-hand side denotes the solution to (2.19) or (2.32), with initial conditions as in (2.53). Lastly, we substitute \(F^{(j)}_m(v,t)\) into the power series in (2.11) to obtain an explicit solution to (2.7) or (2.8), to the desired order in \(\delta \).
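A toy example of this procedure follows, assuming pure protein decay with no gene switching and no production (not model A itself). The generating function then satisfies \(\partial _t F + v\,\partial _v F = 0\) with \(F(v,0) = (1+v)^{n_0}\), so F is constant along characteristics: \(G(s;v_0) = (1+v_0)^{n_0}\). Substituting \(s = t\) and \(v_0 = v\,\mathrm{e}^{-t}\) gives \(F(v,t) = (1 + v\,\mathrm{e}^{-t})^{n_0}\). This is the generating function of a binomial distribution with success probability \(\mathrm{e}^{-t}\). The sketch checks both facts numerically; the names are hypothetical.

```python
import math

n0 = 8  # hypothetical initial protein number


def G(s, v0):
    """Toy solution on the characteristic with base point v0: constant in s."""
    return (1.0 + v0) ** n0


def F(v, t):
    """PDE solution obtained from G by the inverse transformation s = t, v0 = v e^{-t}."""
    return G(t, v * math.exp(-t))


def pde_residual(v, t, h=1e-5):
    """dF/dt + v dF/dv by central differences; should vanish."""
    dF_dt = (F(v, t + h) - F(v, t - h)) / (2 * h)
    dF_dv = (F(v + h, t) - F(v - h, t)) / (2 * h)
    return dF_dt + v * dF_dv


t = 0.7
p = math.exp(-t)  # survival probability of a single protein
binom = [math.comb(n0, n) * p**n * (1 - p) ** (n0 - n) for n in range(n0 + 1)]
print(pde_residual(0.3, 0.5))                                   # close to zero
print(F(-0.5, t), sum(b * 0.5**n for n, b in enumerate(binom)))  # z = 0.5 on both sides
```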

Remark 2.5

The geometric interpretation of characteristics that was used to motivate the inverse transformation in (2.56) also shows that the introduction of the new system parameter \(\chi \) in Definition 2.4 is necessary for the generating functions \(F^{(j)}\) to be determined uniquely as solutions to (2.7) or (2.8), even if we are only interested in their sum \(F^{(0)}(v,t) + F^{(1)}(v,t)\). The crucial point is that any free constants obtained in the process of solving the dynamical systems (2.19) and (2.32)—or, equivalently, their second-order one-dimensional counterparts (2.20) and (2.33), respectively—are constant in s. In other words, they are constant along the particular characteristic on which the dynamical system is solved. These constants—such as e.g. \(c_{1}\) and \(c_{2}\) in (2.26)—can, and generally will, depend on the base point \(v_0\) of the characteristic; see for example (2.54). The inverse transformation in (2.56) that is used to reconstruct the solution to the original PDE from that of the corresponding dynamical system would then yield undetermined functions \(c(v\,\mathrm{e}^{-t})\) in the resulting solutions to (2.7) and (2.8), respectively.

2.6 Summary of main result

To summarise Sect. 2, we combine the analysis of the previous subsections to state our main result.

Main result: The PDE system (2.7) can be solved for sufficiently small autoactivation rates a; see Assumption 2.2. Its solutions \(F^{(j)}(z,t)\) (\(j=0,1\)) are expressed as power series in the small parameter \(\delta \); recall (2.11). The coefficients \(F^{(j)}_m(z,t)\) in these series, written in terms of the shifted variable v defined in (2.15), can be found by

(1) recursively solving the second-order ODE (2.20) and using the identity in (2.24), incorporating the initial conditions in (2.53);

(2) and, subsequently, applying the inverse transformation in (2.56) to the resulting solutions.

Likewise, we can solve the PDE system (2.8) for sufficiently small autorepression rates r; see Assumption 2.2. Its solutions \(F^{(j)}(z,t)\) are again expressed as power series in the small parameter \(\delta \); cf. (2.11). The coefficients \(F^{(j)}_m(z,t)\) in these series, written in terms of the shifted variable v defined in (2.15), can be found by

(1) recursively solving the second-order ODE (2.33) and using the identity in (2.34), incorporating the initial conditions in (2.53);

(2) and, subsequently, applying the inverse transformation in (2.56) to the resulting solutions.

To illustrate the procedure described above, we state the resulting explicit expressions for the leading-order solution to (2.7)—or, equivalently, to (2.8)—in terms of the original variables z and t:

Note that similar expressions were derived by Iyer-Biswas et al. (2009), where an analogous generating function approach was applied under two assumptions: that the gene is initially inactive (i.e. that \(\chi (n_0)=1\); see Definition 2.4), and that the initial protein number \(n_0\) is zero. With these choices, the expression for \(F^{(0)}_0+F^{(1)}_0\) from (2.58) can be seen to coincide with that found in Equations (5) through (7) of Iyer-Biswas et al. (2009), using §13.3.4 and §13.2.39 of the NIST Digital Library of Mathematical Functions.

3 Model B: gene expression with explicit transcription

In this section, we apply our analytical method to model B, a stochastic gene expression model presented by Shahrezaei and Swain (2008a) which explicitly incorporates the transcription stage in the expression process, as well as DNA switching; see also Fig. 4. In the original article by Shahrezaei and Swain (2008a), a generating function approach was used to obtain analytical expressions for the time-independent (‘stationary’) solution to the model. For a visual guide to the upcoming analysis, the reader is again referred to Fig. 5.

3.1 Stochastic model and CME

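The model for stochastic gene expression considered here is given by the reaction scheme

$$\begin{aligned} D \;\underset{k_1}{\overset{k_0}{\rightleftharpoons }}\; D^*, \qquad D^* \xrightarrow {\nu _0} D^* + M, \qquad M \xrightarrow {\nu _1} M + P, \qquad M \xrightarrow {d_0} \varnothing , \qquad P \xrightarrow {d_1} \varnothing . \end{aligned}$$(3.1)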

The modelled gene can hence switch between inactive and active states which are denoted by D and \(D^*\), respectively, with corresponding switching rates \(k_0\) and \(k_1\). The active gene is transcribed to mRNA (M) with rate \(\nu _0\); mRNA is translated to protein (P) with rate \(\nu _1\). Finally, mRNA decays with rate \(d_0\), while protein decays with rate \(d_1\).
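To make the reaction scheme (3.1) concrete, here is a minimal sketch of Gillespie's stochastic simulation algorithm for model B without autoregulation. It is not part of the analytical method developed here; samples of this kind could, for instance, serve as synthetic data or as a Monte Carlo check on approximate propagators. The function name, the encoding of the state as a tuple \((g, m, n)\) and the rate values in the example are illustrative assumptions.

```python
import random


def simulate_model_b(k0, k1, nu0, nu1, d0, d1, state, t_end, seed=None):
    """Gillespie simulation of model B without autoregulation.

    state = (g, m, n): g = 0 for the inactive gene D, g = 1 for the active
    gene D*, m = number of mRNA, n = number of protein. Rates are dimensional.
    Returns the state at time t_end.
    """
    rng = random.Random(seed)
    g, m, n = state
    t = 0.0
    while True:
        # Propensities of the six reactions, in the order of the scheme.
        a = [
            k0 * (1 - g),  # D  -> D*      (activation)
            k1 * g,        # D* -> D       (deactivation)
            nu0 * g,       # D* -> D* + M  (transcription)
            nu1 * m,       # M  -> M + P   (translation)
            d0 * m,        # M  -> 0       (mRNA decay)
            d1 * n,        # P  -> 0       (protein decay)
        ]
        a_tot = sum(a)
        if a_tot == 0.0:
            return g, m, n           # no reaction can fire any more
        t += rng.expovariate(a_tot)  # exponential waiting time
        if t > t_end:
            return g, m, n
        # Pick reaction i with probability a[i] / a_tot.
        i = rng.choices(range(6), weights=a)[0]
        if i == 0:
            g = 1
        elif i == 1:
            g = 0
        elif i == 2:
            m += 1
        elif i == 3:
            n += 1
        elif i == 4:
            m -= 1
        else:
            n -= 1


# Example: crude empirical estimate of a propagator moment (illustrative rates).
samples = [simulate_model_b(0.5, 0.5, 5.0, 10.0, 1.0, 0.1, (1, 0, 0), 2.0, seed=s)
           for s in range(200)]
mean_n = sum(n for _, _, n in samples) / len(samples)
```

Histogramming such samples gives a Monte Carlo estimate of \(P^{(j)}_{m,n}(t)\) for a fixed initial state, which is the quantity the analytical method aims to approximate without simulation.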

As in model A, autoregulatory terms can be added to the core reaction scheme in (3.1). Since both mRNA and protein are modelled explicitly, we can identify four distinct autoregulatory mechanisms, in analogy to those in (2.2a) and (2.2b):

Autoactivation can be achieved either by mRNA or by protein, with rates \(a_M\) or \(a_P\), respectively; similarly, autorepression can occur either through mRNA or through protein, with respective rates \(r_M\) or \(r_P\).

The CME system associated to the reaction scheme in (3.1) is given by

Here, \(P^{(j)}_{m,n}(t)\) (\(j=0,1\)) represents the probability of m mRNA and n protein being present at time t while the gene is either inactive (0) or active (1). As in (2.3) and (2.5), the time variable is nondimensionalised by the protein decay rate \(d_1\); other model parameters are scaled as

We note that the above scaling was also used by Shahrezaei and Swain (2008a). The effects of incorporating the autoregulatory mechanisms in (3.2) into the CME system (3.3) are specified in Table 1.

3.2 Generating function PDE

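Since the probabilities \(P^{(j)}_{m,n}(t)\) (\(j=0,1\)) in (3.3) depend on both the mRNA number m and the protein number n, we introduce probability-generating functions that are defined by double power series:

$$\begin{aligned} F^{(j)}(w,z,t) = \sum _{m=0}^\infty \sum _{n=0}^\infty P^{(j)}_{m,n}(t)\, w^m z^n \quad (j=0,1). \end{aligned}$$(3.5)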

For coefficients \(P^{(j)}_{m,n}(t)\) that obey the CME system (3.3), the associated generating functions \(F^{(j)}(w,z,t)\) satisfy

The effects of incorporating the autoregulatory mechanisms in (3.2) into system (3.6) are specified in Table 2.
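As a small illustration of the double power series (3.5), the sketch below evaluates a truncated series with NumPy and recovers two familiar properties: \(F(1,1)\) is the total probability, and \(\partial _z F\) at \((w,z)=(1,1)\) is the mean protein number. The probability table (independent Poisson laws) is an assumption made only for the example; in practice such a table might come from, e.g., a histogram of simulated data.

```python
import math

import numpy as np
from numpy.polynomial import polynomial as npoly


def generating_function(prob, w, z):
    """F(w, z) = sum_{m,n} prob[m, n] * w**m * z**n for a truncated table prob."""
    return npoly.polyval2d(w, z, prob)


def mean_protein(prob):
    """dF/dz at (w, z) = (1, 1), which equals sum_{m,n} n * prob[m, n]."""
    return npoly.polyval2d(1.0, 1.0, npoly.polyder(prob, axis=1))


# Illustrative table: independent Poisson mRNA (mean 2) and protein (mean 5) numbers.
K = 40
pm = np.array([math.exp(-2.0) * 2.0**k / math.factorial(k) for k in range(K)])
pn = np.array([math.exp(-5.0) * 5.0**k / math.factorial(k) for k in range(K)])
prob = np.outer(pm, pn)                        # prob[m, n] = P_{m,n}

print(generating_function(prob, 1.0, 1.0))     # total probability, close to 1
print(mean_protein(prob))                      # close to 5
```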

3.3 Dynamical systems analysis

As before, the PDE system (3.6) for the generating function can be reformulated as a system of ODEs via the method of characteristics. The differential operator \(\partial _t + (z-1)\partial _z +\gamma (w-1)\partial _w-\gamma \mu (z-1)w \partial _w\) now gives rise to the characteristic system

$$\begin{aligned} \dot{u}&= \gamma \left( u-\mu (u+1)v\right) , \end{aligned}$$(3.7a)$$\begin{aligned} \dot{v}&= v, \end{aligned}$$(3.7b)

where \(\dot{u} = \frac{\text {d} u}{\text {d} s}\) is the derivative along the characteristic, which is parametrised by s. For simplicity, we have introduced the new variables u and v, which are defined as

$$\begin{aligned} u = w-1 \quad \text {and} \quad v = z-1, \end{aligned}$$(3.8)

respectively. On the resulting characteristics, Eq. (3.6) yields the system of ODEs

First, we note that the characteristic system (3.7) can be solved explicitly in terms of the incomplete Gamma function \(\Gamma (a,z)\) (see §8.2(i) of the NIST Digital Library of Mathematical Functions), yielding

However, due to its complexity, the expression for u(s) given in (3.10a) cannot be used to obtain explicit expressions for the generating functions \(F^{(j)}\) that solve system (3.9). Therefore, inspired by the analyses of Shahrezaei and Swain (2008a) and Popović et al. (2016), we make the following assumption:

Assumption 3.1

We assume that the decay rate of protein (\(d_1\)) is much smaller than the decay rate of mRNA (\(d_0\)); specifically, we write

$$\begin{aligned} \frac{d_1}{d_0} = \frac{1}{\gamma } = \varepsilon , \end{aligned}$$(3.11)

where \(0<\varepsilon <1\) is sufficiently small.

The resulting scale separation between mRNA and protein decay rates is well documented in many microbial organisms, including bacteria and yeast (Shahrezaei and Swain 2008a; Yu et al. 2006).

For clarity of presentation, we make an additional assumption here.

Assumption 3.2

We assume that all other model parameters \(\kappa _0\), \(\kappa _1\), \(\lambda \), and \(\mu \), as defined in (3.4), are \(\mathcal {O}(1)\) in \(\varepsilon \).

Remark 3.3

Although Assumption 3.2 is not strictly necessary for the upcoming analysis, it simplifies the presentation. The analytical scheme presented in this section can be applied in a straightforward fashion when Assumption 3.2 fails, which is particularly relevant in view of previous work (Shahrezaei and Swain 2008a; Feigelman et al. 2015), where the CME system (3.3) is studied for parameter values far beyond the range implied by Assumption 3.2.

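Using Assumption 3.1, we can write the characteristic system (3.7) as

$$\begin{aligned} \varepsilon \dot{u}&= u-\mu (u+1)v, \end{aligned}$$(3.12a)$$\begin{aligned} \dot{v}&= v. \end{aligned}$$(3.12b)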

Since \(\varepsilon \) is assumed to be small, we can classify (3.12) as a singularly perturbed slow-fast system in standard form; see Kuehn (2015). A comprehensive slow-fast analysis of Eq. (3.12) was carried out by Popović et al. (2016); we highlight some relevant aspects of that analysis here.

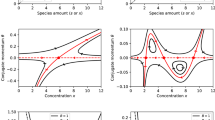

System (3.12) gives rise to a critical manifold \(C_0 = \left\{ (u,v)\, \big |\, u = \frac{\mu v}{1-\mu v}\right\} \), on which the right-hand side of the u-equation in (3.12) vanishes. If \(\varepsilon \) is sufficiently small, orbits of (3.12) can be separated into slow and fast segments, using Fenichel’s geometric singular perturbation theory (Kuehn 2015). The critical manifold \(C_0\) is normally repelling for \(v<\frac{1}{\mu }\), since the derivative of the right-hand side of the u-equation with respect to u equals \(1-\mu v>0\) there; in other words, orbits converge to \(C_0\) in backward time at an exponential rate. For initial conditions asymptotically close to \(C_0\), orbits initially follow \(C_0\) closely for some time, after which they move away from \(C_0\) under the fast dynamics; see also Fig. 7.
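The following sketch checks these statements numerically for assumed example values \(\mu = 2\) and \(\varepsilon = 0.05\). It assumes the slow-fast form \(\varepsilon \dot{u} = u-\mu (u+1)v\), \(\dot{v} = v\) for (3.12), which is what the characteristic operator gives with \(u = w-1\), \(v = z-1\) and \(\gamma = 1/\varepsilon \); a hand-written classical Runge-Kutta (RK4) integrator keeps the example dependency-free. It illustrates the geometry only and is not part of the analytical construction.

```python
def fast_rhs(u, v, mu):
    """Right-hand side of the u-equation in fast time: u' = u - mu*(u+1)*v."""
    return u - mu * (u + 1.0) * v


def critical_manifold(v, mu):
    """u-coordinate of C0 = {u = mu*v/(1 - mu*v)}, for v != 1/mu."""
    return mu * v / (1.0 - mu * v)


def normal_rate(v, mu):
    """d(fast_rhs)/du = 1 - mu*v; positive values mean C0 repels."""
    return 1.0 - mu * v


def flow_slow_fast(u, v, mu, eps, s_end, steps=20000):
    """Classical RK4 for eps*du/ds = u - mu*(u+1)*v, dv/ds = v."""
    def rhs(u, v):
        return fast_rhs(u, v, mu) / eps, v

    h = s_end / steps
    for _ in range(steps):
        k1 = rhs(u, v)
        k2 = rhs(u + 0.5 * h * k1[0], v + 0.5 * h * k1[1])
        k3 = rhs(u + 0.5 * h * k2[0], v + 0.5 * h * k2[1])
        k4 = rhs(u + h * k3[0], v + h * k3[1])
        u += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6.0
        v += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6.0
    return u, v


mu, eps = 2.0, 0.05
v0 = -0.5                          # z = 0.5, inside the unit disc
u_c = critical_manifold(v0, mu)    # a point on C0
print(fast_rhs(u_c, v0, mu))       # zero: C0 is the zero set of the fast field
print(normal_rate(v0, mu))         # positive: C0 is normally repelling here
# Starting on C0 (hence O(eps) off the slow manifold), the orbit is pushed away.
u_end, v_end = flow_slow_fast(u_c, v0, mu, eps, s_end=0.5)
print(abs(u_end - critical_manifold(v_end, mu)))   # large
```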

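To analyse the fast dynamics of system (3.12), we introduce the fast variable \(\sigma = \frac{s}{\varepsilon }\); in terms of \(\sigma \), (3.12) is hence expressed as

$$\begin{aligned} u'&= u-\mu (u+1)v, \end{aligned}$$(3.13a)$$\begin{aligned} v'&= \varepsilon v, \end{aligned}$$(3.13b)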

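where \(u' = \frac{\text {d} u}{\text {d} \sigma }\). We can solve (3.13b) explicitly and write the result as a power series in \(\varepsilon \), which yields

$$\begin{aligned} v(\sigma ) = v_0\, \text {e}^{\varepsilon \sigma } = v_0 \sum _{k=0}^\infty \frac{(\varepsilon \sigma )^k}{k!}. \end{aligned}$$(3.14)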

Expressing the solution to (3.13a) as a power series in \(\varepsilon \), as well, i.e. writing

substituting (3.15) into (3.13a), and making use of (3.14), we find

with initial conditions

The first two terms in (3.15) are thus given by

We now employ the expansion in (3.15) to obtain explicit expressions for the generating functions \(F^{(j)}\) (\(j=0,1\)). In the fast variable \(\sigma = \frac{s}{\varepsilon }\), system (3.9) takes the form

As in the analysis of model A, recall Sect. 2.3, we rewrite system (3.19) as a second-order ODE for \(F^{(0)}\) to find

Next, we use (3.19a) to express \(F^{(1)}\) in terms of \(F^{(0)}\) as

To incorporate the expansion for u in (3.15), we also expand \(F^{(j)}\) (\(j=0,1\)) in powers of \(\varepsilon \), writing

Substitution of (3.22) into (3.20) then yields

and

for \(n \ge 2\). By combining (3.21) with (3.22), we obtain

and

for \(n \ge 0\). We can solve Eqs. (3.23) and (3.24) iteratively—taking into account the additional condition on \(F^{(0)}_0\) in (3.25)—to find

for the first three terms which, upon substitution into (3.26), yields

here, \(f_i\) and \(g_i\) are free constants, to be determined by initial conditions; see Sect. 3.4.

Contrary to common practice in the study of slow-fast systems such as (3.19), we forgo a detailed analysis of the slow system (3.9) and continue our discussion with the determination of appropriate initial conditions; cf. Sect. 2.4. For details on why the slow dynamics are disregarded, the reader is referred to Remark 3.5.

3.4 Initial conditions and inverse transformation

To complete our analytical method, we discuss the determination of initial conditions and the reconstruction of the solution to the original PDE system (3.6), as was done for model A in Sects. 2.4 and 2.5, respectively.

3.4.1 Initial conditions

We follow the reasoning of Sect. 2.4, and determine appropriate initial conditions by considering the original CME system (3.3).

At time \(t=0\), we prescribe initial mRNA and protein numbers \(m_0\) and \(n_0\), respectively. As in Sect. 2.4, we again introduce the parameter \(\chi \), which is defined as follows; compare Definition 2.4:

Definition 3.4

Consider the CME system (3.3). We define \(\chi (m_0,n_0)\) to be the probability that the gene modelled by the reaction scheme in (3.1) is switched off at time \(t=0\), given initial mRNA and protein numbers \(m_0\) and \(n_0\), respectively.

For the probabilities \(P^{(j)}_{m,n}(t)\) (\(j=0,1\)), Definition 3.4 implies that

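Using the definition of the generating functions \(F^{(j)}(w,z,t)\) in (3.5), we find

$$\begin{aligned} F^{(0)}(w,z,0)&= \chi (m_0,n_0)\, w^{m_0} z^{n_0} = \chi (m_0,n_0)\,(u+1)^{m_0}(v+1)^{n_0}, \\ F^{(1)}(w,z,0)&= \left( 1-\chi (m_0,n_0)\right) w^{m_0} z^{n_0} = \left( 1-\chi (m_0,n_0)\right) (u+1)^{m_0}(v+1)^{n_0}, \end{aligned}$$(3.30)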

taking into account the change of variables in (3.8).

Next, we can infer, e.g. from (3.10) and well-known properties of the incomplete Gamma function (see §8.2 of the NIST Digital Library of Mathematical Functions), that solutions to (3.7) exist globally, i.e. for all s. In particular, given an arbitrary triple \((s_*,u_*,v_*)\), we can apply the inverse flow of (3.7) to flow \((u_*,v_*)\) backward over a time \(s_*\). We conclude that the characteristics of the operator \(\partial _t +(z-1)\partial _z + \gamma (w-1)\partial _w -\gamma \mu (z-1)w \partial _w = \partial _t + v \partial _v + \gamma u \partial _u - \gamma \mu (u+1)v \partial _u\), which are given by the orbits of system (3.7), foliate the (t, u, v)-coordinate space over the \(\left\{ t=0\right\} \)-plane when the parameter along the characteristic (s) is identified with the time variable (t). We can therefore uniquely identify such a characteristic, interpreted as a fibre over the \(\left\{ t=0\right\} \)-plane, by its base point. Because orbits of (3.7) provide a parametrisation of the underlying characteristics, the coordinates of that base point are given by \((0,u_0,v_0)\), where \((u_0,v_0)\) are the initial values of the corresponding orbit of (3.7). Hence, the conditions in (3.30) yield, on each characteristic,

$$\begin{aligned} F^{(0)}\big |_{s=0}&= \chi (m_0,n_0)\,(u_0+1)^{m_0}(v_0+1)^{n_0}, \\ F^{(1)}\big |_{s=0}&= \left( 1-\chi (m_0,n_0)\right) (u_0+1)^{m_0}(v_0+1)^{n_0}. \end{aligned}$$(3.31)

As \(s=0\) implies \(\sigma = 0\), we can apply the initial conditions in (3.31) to the solutions of the ODE system (3.19) in order to determine the free constants \(f_i\) and \(g_i\) in (3.27) and (3.28). Combining the power series expansion in (3.22) with (3.31), we obtain

which implies

3.4.2 Inverse transformation

Since the (t, u, v)-coordinate space is foliated by the characteristics of the operator \(\partial _t +v \partial _v + \gamma u \partial _u - \gamma \mu (u+1)v \partial _u\), any point (t, u, v) lies on a unique characteristic. Flowing backward along that characteristic to its intersection with the \(\left\{ t=0\right\} \)-plane, we can determine the corresponding base point \((0,u_0,v_0)\) by inverting the relations in (3.10). Since the dynamics of the v-coordinate do not depend on u, we may use (2.56) to express \(v_0\) in terms of t and v only; as \(\dot{v} = v\), this gives \(v_0 = v\,\text {e}^{-t}\). Taking the resulting expression as input for inverting (3.10a), we obtain the inverse characteristic transformation

with

Under Assumption 3.1, we can employ the power series expansion in (3.15), in combination with the recursive set of ODEs in (3.16) and initial conditions as in (3.17), as an alternative to (3.35a) to obtain \(u_0\) as a function of u, v (or \(v_0\)), and \(\sigma \). Rewriting the result as a power series in \(\varepsilon \), we find

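naturally, (3.14) gives rise to

$$\begin{aligned} v_0 = v\, \text {e}^{-\varepsilon \sigma } = v \sum _{k=0}^\infty \frac{(-\varepsilon \sigma )^k}{k!}. \end{aligned}$$(3.37)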

Since the independent variable in (3.16) is \(\sigma \), and since s is naturally identified with t, we replace \(\sigma \) with \(\frac{t}{\varepsilon }\) in (3.36) to obtain a perturbative expansion for \(u_0(t,u,v)\).
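To get a feel for the leading-order behaviour of the expansion in \(\sigma = t/\varepsilon \), one can freeze v at \(v_0\) in the fast equation \(u' = u-\mu (u+1)v\), since v only changes on the slow scale. This gives \(u' = (1-\mu v_0)u-\mu v_0\), with solution \(u(\sigma ) = c + (u_0-c)\,\text {e}^{(1-\mu v_0)\sigma }\) and \(c = \mu v_0/(1-\mu v_0)\), so that \(u_0 \approx c + (u-c)\,\text {e}^{-(1-\mu v_0)\sigma }\), while \(v_0 = v\,\text {e}^{-t}\) holds exactly. This frozen-v formula is our own illustrative leading-order computation and is not claimed to reproduce (3.36) term by term. The sketch below compares it with a numerical backward flow of the characteristic system (in the slow-fast form \(\varepsilon \dot{u} = u-\mu (u+1)v\), \(\dot{v} = v\)) at \(\sigma = 1\), for assumed parameter values and two values of \(\varepsilon \); the discrepancy should shrink roughly in proportion to \(\varepsilon \).

```python
import math


def base_point_numerical(t, u, v, eps, mu, steps=20000):
    """Flow eps*du/ds = u - mu*(u+1)*v, dv/ds = v backward from s = t to s = 0 (RK4)."""
    def rhs(u, v):
        return (u - mu * (u + 1.0) * v) / eps, v

    h = -t / steps                       # negative step: backward in s
    for _ in range(steps):
        k1 = rhs(u, v)
        k2 = rhs(u + 0.5 * h * k1[0], v + 0.5 * h * k1[1])
        k3 = rhs(u + 0.5 * h * k2[0], v + 0.5 * h * k2[1])
        k4 = rhs(u + h * k3[0], v + h * k3[1])
        u += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6.0
        v += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6.0
    return u, v


def base_point_leading_order(t, u, v, eps, mu):
    """Frozen-v leading-order approximation of the base point (u0, v0)."""
    v0 = v * math.exp(-t)                # exact: the v-dynamics decouple from u
    lam = 1.0 - mu * v0                  # positive for v0 < 1/mu
    c = mu * v0 / lam                    # u-coordinate of C0 above v0
    sigma = t / eps                      # fast time
    return c + (u - c) * math.exp(-lam * sigma), v0


mu, u, v = 0.5, 0.2, -0.5
errors = []
for eps in (0.01, 0.001):
    t = eps                              # fast time sigma = t/eps = 1
    u0_num, v0_num = base_point_numerical(t, u, v, eps, mu)
    u0_lo, v0_lo = base_point_leading_order(t, u, v, eps, mu)
    errors.append(abs(u0_num - u0_lo))
    print(eps, errors[-1], abs(v0_num - v0_lo))
```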

The solution to the PDE system (3.6) can now be found to the desired order in \(\varepsilon \) by applying the inverse transformation in (3.34) to the solutions given in (3.27) and (3.28), taking into account the values of \(f_i\) and \(g_i\) in (3.33). In other words,

where \(F^{(j)}_n\) (\(j=0,1\)) on the right-hand side of the above expression denotes the solution to Eqs. (3.23a)–(3.26), with initial conditions as in (3.32).

Remark 3.5

The absence of any detailed analysis of the characteristic system in its slow formulation, Eq. (3.12), can be justified as follows, by considering the corresponding phase space, as depicted in Fig. 7.

(a) For arbitrary initial conditions \((u_0,v_0)\), the dominant dynamics are fast, since the critical manifold \(C_0\) is normally repelling. In other words, solutions are generally repelled away from \(C_0\) under the fast dynamics.

(b) All orbits that have their initial conditions on the same Fenichel fibre are exponentially close (in \(\varepsilon \)) to each other near the slow manifold \(C_\varepsilon \) that is associated with the critical manifold \(C_0\). Therefore, flowing backward from (u, v) to \((u_0,v_0)\), as expressed through the inverse transformation in (3.34) that yields the corresponding PDE solution, may introduce exponentially large terms in the transformation, precluding any sensible series expansion.

Thus, although the construction of a composite expression of \(F^{(j)}\) that is (initially) slow and (ultimately) fast, as a solution to systems (3.12) and (3.13), certainly makes sense from a dynamical systems perspective, the extreme lack of sensitivity of orbits to their initial conditions \((u_0,v_0)\) may prevent such a composite expansion from being useful for obtaining solutions to the original PDE system (3.6).

Remark 3.6

In Sect. 3.3, explicit expressions are given for the expansion of \(F^{(0)}\) up to \(\mathcal {O}(\varepsilon ^2)\) only, cf. (3.27); similarly, \(F^{(1)}\) is approximated to \(\mathcal {O}(\varepsilon )\) in (3.28), for the sake of brevity. It is worthwhile to note that a lower bound on the order of the expansion is stipulated by the application (recall Sect. 1.1): the sampling time \(\Delta t\) can be considered as a minimum time interval over which the results of our analysis should be (reasonably) accurate. To that end, we have to compare \(\Delta t\) with \(\varepsilon = \frac{1}{\gamma }\), the parameter defining the fast time scale on which the above analytical results have been derived. We can then apply the classical theory of Poincaré expansions (Verhulst 2000) to infer that if, for example, \(\Delta t = \mathcal {O}(1)\), which implies \(\Delta t = \mathcal {O}(\varepsilon ^{-1})\) in the fast time variable \(\sigma \), the generating functions \(F^{(j)}\) should be expanded at least up to \(\mathcal {O}(\varepsilon ^2)\) for the resulting approximation to be accurate to \(\mathcal {O}(\varepsilon )\).

3.5 Autoregulation

The inclusion of any type of autoregulation into system (3.6)—which is equivalent to the addition of model terms from Table 2 to the right-hand sides of the corresponding equations—precludes the direct application of the method of characteristics, as the resulting partial differential operators in Eqs. (3.6a) and (3.6b) do not coincide anymore. To resolve that complication, we follow the approach of Sect. 2, making the following assumption:

Assumption 3.7

We assume that the autoregulation rates \(a_M\), \(r_M\), \(a_P\), and \(r_P\), as defined in (3.2), are small in comparison with the protein decay rate \(d_1\); specifically, we write

where \(0<\delta <1\) is sufficiently small.

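Next, we expand the generating functions \(F^{(j)}\) (\(j=0,1\)) as power series in \(\delta \); recall (2.11):

$$\begin{aligned} F^{(j)}(w,z,t) = \sum _{m=0}^\infty \delta ^m F^{(j)}_m(w,z,t). \end{aligned}$$(3.40)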

To demonstrate the procedure, we include mRNA autoactivation in (3.6), see again Table 2; the analysis of the remaining autoregulatory mechanisms can be performed in a similar fashion. Substitution of (3.40) now yields

cf. (2.12). System (3.41) is then amenable to the method of characteristics. In fact, employing the same characteristics as in the unperturbed setting, recall (3.7), we find

while the partial differential operators in Table 2 transform into

here, \(u(s;u_0,v_0)\) and \(v(s;v_0)\) are as given in (3.10). Thus, the mRNA autoactivation system (3.41) transforms to

To obtain explicit solutions to system (3.44), we adopt Assumption 3.1 and revert to the fast time scale \(\sigma = \frac{s}{\varepsilon }\) to write the dynamical system (3.44) as a second-order ODE for \(F^{(0)}_m\), which yields

Using (3.44), we can express \(F^{(1)}_m\) in terms of \(F^{(0)}_m\) as

To solve (3.45) recursively, we expand \(F^{(j)}_m\) (\(j=0,1\)) in powers of \(\varepsilon \):

$$\begin{aligned} F^{(j)}_m = \sum _{n=0}^\infty \varepsilon ^n F^{(j)}_{m,n}; \end{aligned}$$(3.47)

recall Eq. (3.22). Together with the series expansion for u in (3.15), we thus obtain

and

for \(n \ge 2\); compare with Eqs. (3.23a), (3.23b), and (3.24). The coefficients \(G^{(0)}_{m,n}\) in the above expression are defined from an expansion of the autoregulation term as

From (3.46), we obtain

and

for \(n \ge 0\); recall (3.25) and (3.26). To solve Eqs. (3.48) through (3.53) iteratively, we fix m, the order of the expansion in \(\delta \), and determine the solution to the desired order in n, the order of the expansion in \(\varepsilon \). Then, we increase m to \(m+1\) and use the result as input for the dynamics at order \(m+1\). This repeated iteration yields an explicit expression for the generating functions \(F^{(j)}\) (\(j=0,1\)) as double asymptotic series in both \(\delta \) and \(\varepsilon \).

The determination of appropriate initial conditions is largely analogous to the non-autoregulated case; see Sect. 3.4.1. However, with the inclusion of autoregulation into model B, we need to incorporate the possibility that \(\chi (m_0,n_0)\) depends on the corresponding autoregulation rates. As in the case of model A, we expand \(\chi (m_0,n_0)\) as a power series in \(\delta \):

recall (2.51). In that case, the initial conditions for \(F^{(j)}_{m,n}\) can be inferred from (3.32) to give

Solutions to Eqs. (3.48)–(3.53) that incorporate the conditions in (3.55) for all types of autoregulation introduced in (3.2) can be found in Appendix B. Finally, our previous results on the inverse characteristic transformation in the non-autoregulated case from Sect. 3.4.2 can now be applied in a straightforward fashion to give solutions to the PDE system (3.6) with added autoregulation.

3.6 Summary of main result

To summarise Sect. 3, we combine the analysis of the previous subsections to state our main result.

Main result: The PDE system (3.6) can be solved for sufficiently large values of \(\gamma \); see Assumption 3.1. Its solutions \(F^{(j)}(w,z,t)\) (\(j=0,1\)) are expressed as power series in the small parameter \(\varepsilon =\frac{1}{\gamma }\); cf. (3.22). The coefficients \(F_n^{(j)}(w,z,t)\) in these series, written in terms of the shifted variables u and v defined in (3.8), can be found by

(1) solving recursively the second-order ODEs (3.23a) through (3.24) and using the identities in (3.25) and (3.26), incorporating the initial conditions in (3.32);

(2) subsequently applying the inverse transformations in (3.36) and (3.37) to the resulting solutions;

(3) and, finally, substituting \(\sigma = \frac{t}{\varepsilon }\).

To illustrate the procedure described above, we state the resulting explicit expressions for the leading-order solution to (3.6) in terms of the original variables w, z, and t here:

Note that the sum \(F_0^{(0)} + F_0^{(1)}\) corresponds precisely to the leading-order fast expansion found in Equation (21) of Bokes et al. (2012b).

If autoregulation as in (3.2) is incorporated into model B, the main result can be formulated as follows.

Main result (autoregulatory extension): The PDE system (3.6) incorporating any one type of autoregulation from Table 2 can be solved as long as \(\gamma \) is sufficiently large and \(\delta \) is sufficiently small; see Assumptions 3.1 and 3.7, respectively. Its solutions \(F^{(j)}(w,z,t)\) (\(j=0,1\)) are expressed as double power series in the small parameters \(\delta \) and \(\varepsilon \), viz.

$$\begin{aligned} F^{(j)}(w,z,t) = \sum _{m=0}^\infty \sum _{n=0}^\infty \delta ^m \varepsilon ^n F^{(j)}_{m,n}(w,z,t). \end{aligned}$$(3.57)

The coefficients \(F^{(j)}_{m,n}\) in these series, written in terms of the shifted variables u and v defined in (3.8), can be found by

(1a) solving recursively the second-order ODEs (3.48) through (3.50) for fixed m and using the identities in (3.52) and (3.53), incorporating the initial conditions in (3.55);

(1b) increasing m to \(m+1\), and repeating step (1a) until a sufficient accuracy in \(\delta \) (and \(\varepsilon \)) is attained;

(2) subsequently applying the inverse transformations in (3.36) and (3.37) to the resulting solutions;

(3) and, finally, substituting \(\sigma = \frac{t}{\varepsilon }\).

4 From generating function to propagator

The final step in our analytical method consists in reconstructing the probabilities \(P^{(j)}_n\) (model A) and \(P^{(j)}_{m,n}\) (model B), respectively, from the explicit expressions for the associated generating functions \(F^{(j)}\) (\(j=0,1\)), which were the main analytical outcome of Sects. 2 and 3.

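In principle, the relation between probabilities and probability-generating functions is clear from the definition of the latter, and is given in (2.6) and (3.5), respectively. Specifically, probabilities can be expressed in terms of derivatives of their generating functions as follows:

$$\begin{aligned} P^{(j)}_n(t) = \frac{1}{n!}\,\frac{\partial ^n F^{(j)}}{\partial z^n}(0,t), \qquad P^{(j)}_{m,n}(t) = \frac{1}{m!\,n!}\,\frac{\partial ^{m+n} F^{(j)}}{\partial w^m\, \partial z^n}(0,0,t). \end{aligned}$$(4.1)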

However, explicit expressions for the nth and \((m+n)\)th order derivatives, respectively, of these generating functions become progressively unwieldy with increasing m and n. Indeed, given the generating functions \(F^{(j)}\) obtained previously, which combine (2.26) for specific initial conditions as in (2.54) with the inverse characteristic transformation in (2.56), finding explicit expressions for derivatives of arbitrary order is very difficult, if it is possible at all (see Footnote 1).

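To complete the final step towards approximating propagators for parameter inference in the present setting, we abandon the requirement of deriving explicit expressions for the probabilities \(P^{(j)}_n\) and \(P^{(j)}_{m,n}\). Instead, we use the standard Cauchy integral formula for derivatives of holomorphic functions to write

$$\begin{aligned} P^{(j)}_n(t) = \frac{1}{2\pi \text {i}} \oint _{\gamma _A} \frac{F^{(j)}(z,t)}{z^{n+1}}\, \text {d}z, \qquad P^{(j)}_{m,n}(t) = \frac{1}{(2\pi \text {i})^2} \oint _{\gamma _B} \frac{F^{(j)}(w,z,t)}{w^{m+1} z^{n+1}}\, \text {d}w\, \text {d}z; \end{aligned}$$(4.2)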

here, \(\gamma _A\) is a suitably chosen contour around \(z=0\), while \(\gamma _B\) is a (double) contour around \((w,z) = (0,0)\).

The above expression of probabilities as integrals is well suited for an efficient numerical implementation, which is naturally incorporated into the realisation of the parameter inference scheme discussed in Sect. 1. From a numerical perspective, the integral formula in (4.2) has the additional advantage that the values of \(F^{(j)}\) on (a discretisation of) the integral contours \(\gamma _{A}\) and \(\gamma _{B}\), respectively, only have to be determined once to yield propagators for any values of m and n and fixed initial states \(m_0\) and \(n_0\). Moreover, we are free to choose the integration contours \(\gamma _{A}\) and \(\gamma _{B}\), which allows us to accelerate the calculation of these integrals; see Bornemann (2011). Here, we note that the choice of circular integration contours with unit radius, and subsequent discretisation of those contours as M-sided and N-sided polygons, respectively, coincides with the ‘Fourier mode’ approach, as presented by Bokes et al. (2012a).
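As a minimal sketch of how (4.2) can be discretised, the code below applies the trapezoidal rule on circles of radius r (one circle per variable), which turns the contour integrals into discrete Fourier transforms computed with NumPy's FFT. The generating function is passed in as a Python callable; in the present setting it would evaluate the explicit expressions for \(F^{(j)}\) from Sects. 2 and 3, whereas here Poisson generating functions with known coefficients are used as stand-ins. The function names and the default values of r, M and N are illustrative assumptions. With r = 1 this reduces to the Fourier-mode approach mentioned above; radii below 1 damp aliasing from coefficients with index at least N but amplify rounding errors in high-index coefficients (cf. Bornemann 2011).

```python
import math

import numpy as np


def propagator_1d(F, N=64, r=1.0):
    """Approximate P_n, n = 0..N-1, from F(z) via the Cauchy formula on |z| = r.

    The N-point trapezoidal rule on the circle turns the contour integral
    into an FFT; F must accept a complex NumPy array.
    """
    z = r * np.exp(2j * np.pi * np.arange(N) / N)
    coeffs = np.fft.fft(F(z)) / N
    return (coeffs / r ** np.arange(N)).real


def propagator_2d(F, M=32, N=64, rw=1.0, rz=1.0):
    """Approximate P_{m,n} from F(w, z) on the torus |w| = rw, |z| = rz."""
    w = rw * np.exp(2j * np.pi * np.arange(M) / M)
    z = rz * np.exp(2j * np.pi * np.arange(N) / N)
    W, Z = np.meshgrid(w, z, indexing="ij")   # W[j, k] = w_j, Z[j, k] = z_k
    coeffs = np.fft.fft2(F(W, Z)) / (M * N)
    scale = np.outer(rw ** np.arange(M), rz ** np.arange(N))
    return (coeffs / scale).real


# Check against generating functions with known coefficients (Poisson laws).
p1 = propagator_1d(lambda z: np.exp(3.0 * (z - 1.0)))
exact1 = [math.exp(-3.0) * 3.0**n / math.factorial(n) for n in range(10)]
P2 = propagator_2d(lambda w, z: np.exp(2.0 * (w - 1.0) + 5.0 * (z - 1.0)))
```

Because the contour values of F do not depend on m or n, a single evaluation of F on the grid yields all propagator entries up to the grid size at once.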

Remark 4.1

By introducing the Cauchy integral formula for derivatives of holomorphic functions in (4.2), we implicitly assume that the integration contours \(\gamma _{A}\) and \(\gamma _{B}\) are chosen such that they lie completely within the open neighbourhoods of the origin in \(\mathbb {C}\) and \(\mathbb {C}^2\), respectively, where the canonical complex extensions of the generating functions \(F^{(j)}\)—which exist by the Cauchy-Kowalevski theorem—are holomorphic. In other words, \(\gamma _{A}\) and \(\gamma _{B}\) must be chosen such that any poles of \(F^{(j)}\) lie outside of these integration contours. The expansion for \(u_0\) in (3.36) shows that this is not a moot point: the generating functions \(F^{(j)}\) resulting for model B, as established in Sect. 3, will generically have a pole at \(v = \frac{1}{\mu }\), i.e. at \(z = 1 +\frac{1}{\mu }\). As \(\mu \) is positive, by (3.4), choosing the z-contour of \(\gamma _B\) to be a circle with at most unit radius allows us to avoid that pole.
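The point made in this remark is easy to reproduce with a toy example. In the sketch below, the generating function of a geometric distribution, \(F(z) = (1-p)/(1-pz)\), which has a single pole at \(z = 1/p\), stands in for \(F^{(j)}\); this choice is an assumption made purely for illustration. With a contour of radius 1 the trapezoidal rule recovers \(P_n = (1-p)p^n\), whereas a circle of radius 3 encloses the pole at \(z = 2\), and the same computation then returns the coefficients of a different (Laurent) expansion, which here are essentially zero.

```python
import numpy as np


def taylor_coeffs(F, N=64, r=1.0):
    """Trapezoidal-rule Cauchy coefficients of F on the circle |z| = r."""
    z = r * np.exp(2j * np.pi * np.arange(N) / N)
    return (np.fft.fft(F(z)) / N / r ** np.arange(N)).real


p = 0.5                                  # pole at z = 1/p = 2


def F(z):
    """Generating function of the geometric law P_n = (1 - p) * p**n."""
    return (1.0 - p) / (1.0 - p * z)


exact = (1.0 - p) * p ** np.arange(5)
inside = taylor_coeffs(F, r=1.0)[:5]     # contour avoids the pole
outside = taylor_coeffs(F, r=3.0)[:5]    # contour encloses the pole
print(np.max(np.abs(inside - exact)))    # rounding-level error
print(np.max(np.abs(outside - exact)))   # order-one error
```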

4.1 Incorporation of \({\pmb \chi }\)

In the course of the analysis presented in Sects. 2 and 3, the introduction of the parameter \(\chi \) was necessary to obtain definite, explicit expressions for the generating functions as solutions to the PDE systems (2.7), (2.8), and (3.6); see Definitions 2.4 and 3.4. The successful implementation of these expressions in a parameter inference scheme requires us to decide how to incorporate that new parameter. We identify three options here.

1. Before implementing parameter inference, we can marginalise over the new parameter \(\chi \) to eliminate it altogether, using a predetermined measure \(\text {d} \mu (\chi )\), which adds an integration step to the requisite numerical scheme.

2. We can make a choice for \(\chi \) that is based on the specifics of the model under consideration. Thus, exploiting the Markov property of the stochastic models underlying (2.3), (2.5), and (3.3), we may use the switching rates and any autoregulation rates to express \(\chi \) in model A as

$$\begin{aligned} \chi (n_0)&= \frac{c_b}{c_b+c_f+a n_0}&\text {(autoactivation)}, \end{aligned}$$(4.3a)$$\begin{aligned} \chi (n_0)&= \frac{c_b+r n_0}{c_b+r n_0+c_f}&\text {(autorepression)}; \end{aligned}$$(4.3b)

the corresponding expressions for model B read

$$\begin{aligned} \chi (m_0,n_0)&= \frac{k_1}{k_0 + k_1}&\text {(no autoregulation)}, \end{aligned}$$(4.4a)$$\begin{aligned} \chi (m_0,n_0)&= \frac{k_1}{k_0 + k_1 + a_M m_0}&\text {(mRNA autoactivation)}, \end{aligned}$$(4.4b)$$\begin{aligned} \chi (m_0,n_0)&= \frac{k_1 + r_M m_0}{k_0 + k_1 + r_M m_0}&\text {(mRNA autorepression)}, \end{aligned}$$(4.4c)$$\begin{aligned} \chi (m_0,n_0)&= \frac{k_1}{k_0 + k_1 + a_P n_0}&\text {(protein autoactivation)}, \end{aligned}$$(4.4d)$$\begin{aligned} \chi (m_0,n_0)&= \frac{k_1 + r_P n_0}{k_0 + k_1 + r_P n_0}&\text {(protein autorepression)}. \end{aligned}$$(4.4e)

3. We can determine \(\chi \) ‘experimentally’ by including it in the parameter set that is to be inferred in the (numerical) process of parameter inference.
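For the second option, the expressions (4.3) and (4.4) are simple enough to transcribe directly. The helpers below do so; the function names and the string labels for the type of autoregulation are our own illustrative choices, and the relevant autoregulation rate (a or r for model A; \(a_M\), \(r_M\), \(a_P\) or \(r_P\) for model B) is passed as a single argument `rate`.

```python
def chi_model_a(c_b, c_f, n0, kind, rate):
    """chi(n0) for model A, following (4.3a) and (4.3b); rate is a or r."""
    if kind == "activation":
        return c_b / (c_b + c_f + rate * n0)
    if kind == "repression":
        return (c_b + rate * n0) / (c_b + rate * n0 + c_f)
    raise ValueError(f"unknown autoregulation type: {kind}")


def chi_model_b(k0, k1, m0, n0, kind="none", rate=0.0):
    """chi(m0, n0) for model B, following (4.4a)-(4.4e)."""
    if kind == "none":
        return k1 / (k0 + k1)
    if kind == "mRNA activation":
        return k1 / (k0 + k1 + rate * m0)
    if kind == "mRNA repression":
        return (k1 + rate * m0) / (k0 + k1 + rate * m0)
    if kind == "protein activation":
        return k1 / (k0 + k1 + rate * n0)
    if kind == "protein repression":
        return (k1 + rate * n0) / (k0 + k1 + rate * n0)
    raise ValueError(f"unknown autoregulation type: {kind}")


# Repression by protein raises the probability that the gene starts switched off.
print(chi_model_b(1.0, 3.0, 0, 0))                             # no autoregulation
print(chi_model_b(1.0, 3.0, 0, 10, "protein repression", 0.1))
```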

Note that option 2 has been anticipated in the analysis of model A, by introducing the series expansion in (2.51). Indeed, by Assumption 2.2, we can expand \(\chi (n_0)\) as

Likewise, when autoregulation is added to model B, Assumption 3.7 implies an expansion for \(\chi (m_0,n_0)\) of the form

5 Discussion and outlook

In the present article, we have developed an analytical method for obtaining explicit, fully time-dependent expressions for the probability-generating functions that are associated with models for stochastic gene expression. Moreover, we have presented a computationally efficient approach which allows us to derive model predictions (in the form of propagators) from these generating functions, using the Cauchy integral formula. It is important to note that our method does not make any steady-state or long-evolution-time approximations. On the contrary, the perturbative nature of our approach makes it best suited to relatively short (or intermediate) time scales; see also Remark 3.6. As argued in Sect. 1.1, such relatively short evolution times arise naturally in the calculation of quantities such as the log-likelihood defined in Eq. (1.1). Therefore, our analytical approach is well suited to implementation in a Bayesian parameter inference scheme such as the one outlined in Sect. 1.1.

As mentioned in Sects. 2.2 and 3.5, the introduction of Assumptions 2.2 and 3.7 in our analysis of the systems of PDEs and ODEs that are obtained via the generating function approach is necessary for determining explicit expressions for the generating functions themselves. Therefore, we can only be certain of the validity of our approach if we assume that the autoregulation rates are small in comparison with other model parameters, as is done there. Moreover, in the analysis of model B, we have to assume that the protein decay rate is smaller than the decay rate of mRNA; recall Assumption 3.1. That assumption is valid for a large class of (microbial) organisms (Shahrezaei and Swain 2008a; Yu et al. 2006); however, it is by no means generic, as the two decay rates are often comparable in mammalian cells (Schwanhäusser et al. 2011; Vogel and Marcotte 2012). Since the accuracy of the explicit approximations for the generating functions derived here is quantified in terms of orders of the perturbation parameter(s) (see e.g. Remark 3.6), violation of Assumptions 2.2, 3.1, or 3.7 will decrease the predictive power of the results obtained with the analytical method developed in this article.

The method which is described in Sect. 1.2, and outlined visually in Fig. 5, hence provides a generic framework for the analysis of stochastic gene expression models such as model A (Fig. 3 and Sect. 2.1) and model B (Fig. 4 and Sect. 3.1). Note that, for example, the steady-state and long-evolution-time approximations derived by Shahrezaei and Swain (2008a) could be extended to autoregulatory systems via the same approach. However, as is apparent from the (differences between the) analysis presented in Sects. 2 and 3, the ‘path to an explicit solution’ is highly model-dependent. The decision on which analytical techniques to apply, such as the perturbative expansion postulated in (3.22), has to be made on a case-by-case basis. The success of the method presented in the article fully depends on whether the resulting dynamical systems can be solved explicitly. To that end, it is highly beneficial that the systems (2.19) and (3.19) obtained here are linear, which is a direct consequence of the fact that all reactions described in the reaction schemes in (2.1) and (2.2), as well as in (3.1) and (3.2), are of first order. Inclusion of second-order reactions would introduce both nonlinear terms and second-order differential operators in the PDE systems for the corresponding generating functions, which would severely increase the complexity of these systems, thus preventing us from obtaining explicit solutions.

The method presented in this article, and the results thus obtained, can be seen, first and foremost, as the natural extension of previous work by Popović et al. (2016). Analytical results for the classes of models studied here can be found in several earlier articles. We mention the article by Shahrezaei and Swain (2008a), where a leading-order approximation was obtained in a long-evolution-time and steady-state limit. Bokes et al. (2012a) derived analytical expressions for stationary distributions in a model that is equivalent to that considered by Popović et al. (2016). For that model, too, a time-scale separation was exploited by Bokes et al. (2012b), in a manner similar to the present article, to obtain leading-order analytical expressions on both time scales. The model that is referred to as model A in Sect. 2 was analysed in a steady-state setting by Iyer-Biswas and Jayaprakash (2014) via the Poisson integral transform. A similar model was studied by Hornos et al. (2005), where a generating function approach was used; making a steady-state Ansatz, the authors were able to obtain an explicit solution for the generating function in terms of special (Kummer) functions; see also the NIST Digital Library of Mathematical Functions. The same model was later solved in a fully time-dependent context by Ramos et al. (2011), after a cleverly chosen variable substitution, in terms of another class of special (Heun) functions; cf. again the NIST Digital Library of Mathematical Functions.

Other authors have attempted to solve several classes of CMEs directly, i.e. without resorting to generating function techniques or integral transforms. A noteworthy example is the work of Jahnke and Huisinga (2007) on monomolecular systems. Another, more recent example can be found in the work by Iserles and MacNamara (2017), where exact solutions are determined for explicitly time-dependent isomerisation models.

It is important to emphasise that the ‘time dependence’ referred to in the title of the present article is solely due to the dynamic nature of the underlying stochastic process, and that it hence manifests exclusively through time derivatives in the associated CMEs, such as e.g. in (2.3). In particular, none of the model parameters are time-dependent, as opposed to, for example, the system studied by Iserles and MacNamara (2017). The inclusion of such explicitly time-dependent parameters would be a starting point for incorporating the influence of (extrinsic) noise in the context of the model categories considered in the article.

The availability of analytical expressions for generating functions does, in principle, allow one to gain insight into the underlying processes by studying the explicit form of those expressions, as has been done, e.g., by Bokes et al. (2012a, b). However, the complexity of the processes we analyse here seems to preclude such insights. For example, the integrals over confluent hypergeometric functions that appear in (2.29) cannot themselves be efficiently expressed in terms of (other) special functions. Still, that complication does not necessarily pose an obstacle to the application we ultimately have in mind, namely Bayesian parameter inference. Since the last step in our method (the extraction of propagators from generating functions; see Sect. 4) is numerical, the precise functional form of the generating function is not important. The mere fact that an explicit expression can be obtained suffices for the application of the Cauchy integral formula, where these generating functions enter into the calculation of the appropriate integrals; see again Sect. 4.
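The Cauchy-integral step mentioned above can be sketched as follows. Writing \(z = r e^{i\theta}\) turns \(p_n = \frac{1}{2\pi i}\oint G(z)\,z^{-n-1}\,dz\) into the average of \(G(z)\,z^{-n}\) over \(\theta\), a periodic integrand for which the \(N\)-point trapezoidal rule is a natural discretisation. The function name, the default radius \(r = 1\) and the node count are assumptions for illustration, not necessarily the discretisation used in Sect. 4; the Poisson generating function \(G(z) = \exp(\mu(z-1))\) stands in for the model generating functions because its coefficients are known exactly.

```python
import cmath
import math


def propagator_from_gf(G, n, radius=1.0, nodes=128):
    """Approximate the n-th Taylor coefficient of G via the Cauchy integral formula.

    p_n = (1 / (2*pi*i)) * contour integral of G(z) / z**(n + 1) over |z| = radius.
    With z = radius * exp(i*theta) this equals the average of G(z) * z**(-n) over
    theta, which the N-point trapezoidal rule approximates.
    """
    total = 0j
    for m in range(nodes):
        z = radius * cmath.exp(2j * math.pi * m / nodes)
        total += G(z) * z ** (-n)
    # the coefficients of a probability generating function are real
    return (total / nodes).real


# Usage with a stand-in generating function whose coefficients are known exactly
mu = 4.0


def G(z):
    return cmath.exp(mu * (z - 1.0))  # Poisson generating function


for n in range(5):
    exact = math.exp(-mu) * mu ** n / math.factorial(n)
    print(n, propagator_from_gf(G, n), exact)
```

Only point evaluations of \(G\) are required, which is why an explicit expression for the generating function, whatever its functional form, is sufficient for this step.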

The analytical approach explored in this article is, of course, not the only feasible way of obtaining numerical values for propagator probabilities, which can in turn serve as input for a Bayesian parameter inference scheme. For an example of a direct numerical method in which the Cauchy integral plays a central role, the reader is referred to MacNamara (2015). Our main motivation for pursuing an analytical alternative is to reduce the need for potentially lengthy numerical simulations. An efficient implementation of the resulting expressions can (significantly) reduce computation times; see, for example, Bornemann (2011). The optimisation of the underlying numerical procedures is, however, beyond the scope of the present article in particular, and of our research programme in general.
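As a hedged illustration of why implementation details govern accuracy (cf. Bornemann 2011), the sketch below shows that, on the unit circle, the \(N\)-point trapezoidal approximation of \(p_n\) returns the aliased sum \(\sum_{j\ge 0} p_{n+jN}\) up to round-off, so \(N\) must exceed the range of counts that carry appreciable probability. A Poisson distribution with mean 30 is again an illustrative stand-in; the function names and node counts are assumptions.

```python
import cmath
import math


def trapezoidal_coefficient(G, n, nodes):
    """N-point trapezoidal approximation of the n-th coefficient of G on |z| = 1."""
    total = 0j
    for m in range(nodes):
        z = cmath.exp(2j * math.pi * m / nodes)
        total += G(z) * z ** (-n)
    return (total / nodes).real


def poisson_pmf(n, mu):
    # evaluated in log space so that large n does not overflow mu**n
    return math.exp(-mu + n * math.log(mu) - math.lgamma(n + 1))


mu, n = 30.0, 5


def G(z):
    return cmath.exp(mu * (z - 1.0))


exact = poisson_pmf(n, mu)
for nodes in (32, 64, 128):
    approx = trapezoidal_coefficient(G, n, nodes)
    # coefficients with indices n, n + N, n + 2N, ... all alias onto index n
    aliased = sum(poisson_pmf(n + j * nodes, mu) for j in range(50))
    print(nodes, abs(approx - exact), abs(approx - aliased))
```

With 32 nodes the error is dominated by \(p_{37}\), which is far larger than \(p_5\) itself; with 128 nodes it drops to round-off level. Choosing a radius \(r < 1\) damps the aliased terms by \(r^{jN}\) but amplifies round-off by roughly \(r^{-n}\); balancing these effects is discussed in detail by Bornemann (2011).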

The analytical results presented in this article are ready for implementation in a Bayesian parameter inference framework. An analysis of the performance of the resulting approximations to the associated generating functions, in the spirit of Feigelman et al. (2015), where parameter inference is tested on simulated data based on specific stochastic models, is ongoing work. Moreover, the successful application of our analytical method to specific model categories, such as those represented by Model A and Model B, suggests several feasible extensions of the ‘model library’ for which explicit expressions for the corresponding generating functions can be constructed. Stochastic models comprising multiple proteins represent a natural next stage, bringing the analysis of toggle switch-type models within reach. In addition, one could begin exploring the vast field of gene regulatory networks by considering a simple two-protein system with, for example, activator-inhibitor interaction. Under the assumption of small interaction rates, the resulting PDE system for the associated generating function would be directly amenable to the analytical method described in this article. The analysis of these and similar systems could be a topic for future research.
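A minimal sketch of how a propagator library could feed a Bayesian scheme, under strong simplifying assumptions: a single species following the immigration–death process with known degradation rate \(\gamma\), counts observed at equally spaced times \(\Delta t\), a flat prior on the production rate \(k\) over a finite grid, and the Markov property, so that the likelihood factorises into one-step propagators. The propagator from \(n_{\mathrm{prev}}\) molecules is read off the generating function \((1+s(z-1))^{n_{\mathrm{prev}}}\exp(\mu(z-1))\), with \(s = e^{-\gamma\Delta t}\) and \(\mu = (k/\gamma)(1-s)\) (binomial survival of the initial molecules combined with Poisson production), via the trapezoidal Cauchy rule. Function names, grid and data are hypothetical; this is not the inference framework referred to above.

```python
import cmath
import math


def propagator(n_next, n_prev, dt, k, gamma, nodes=64):
    """P(n_next at t + dt | n_prev at t) for the immigration-death process.

    Generating function: (1 + s(z - 1))**n_prev * exp(mu (z - 1)), with
    s = exp(-gamma dt) and mu = (k / gamma)(1 - s); coefficients are extracted
    with the trapezoidal Cauchy rule on the unit circle.
    """
    s = math.exp(-gamma * dt)          # survival probability of one molecule
    mu = k / gamma * (1.0 - s)         # mean number of molecules produced in dt
    total = 0j
    for m in range(nodes):
        z = cmath.exp(2j * math.pi * m / nodes)
        gf = (1.0 + s * (z - 1.0)) ** n_prev * cmath.exp(mu * (z - 1.0))
        total += gf * z ** (-n_next)
    # floor tiny or slightly negative round-off values so that log() is defined
    return max((total / nodes).real, 1e-300)


def grid_posterior(counts, dt, gamma, k_grid):
    """Normalised posterior over k_grid, assuming a flat prior and Markov data."""
    log_lik = []
    for k in k_grid:
        log_lik.append(sum(math.log(propagator(b, a, dt, k, gamma))
                           for a, b in zip(counts[:-1], counts[1:])))
    shift = max(log_lik)               # subtract the maximum to avoid underflow
    weights = [math.exp(v - shift) for v in log_lik]
    total = sum(weights)
    return [w / total for w in weights]


# Usage with a hypothetical time series sampled at dt = 1 and known gamma = 1
counts = [0, 4, 7, 9, 11, 10, 8, 12, 10, 9]
k_grid = [0.5 * i for i in range(1, 41)]
post = grid_posterior(counts, dt=1.0, gamma=1.0, k_grid=k_grid)
print(k_grid[post.index(max(post))])
```

Replacing `propagator` by an entry from a library of model-specific generating functions would leave `grid_posterior` unchanged, which is the sense in which such a library plugs into inference.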

Notes

In some specific cases, however, such explicit expressions can be found; see e.g. Popović et al. (2016).

References

Bokes P et al (2012a) Exact and approximate distributions of protein and mRNA levels in the low-copy regime of gene expression. J Math Biol 64(5):829–854. https://doi.org/10.1007/s00285-011-0433-5

Bokes P et al (2012b) Multiscale stochastic modelling of gene expression. J Math Biol 65(3):493–520. https://doi.org/10.1007/s00285-011-0468-7

Bornemann F (2011) Accuracy and stability of computing high-order derivatives of analytic functions by Cauchy integrals. Found Comput Math 11(1):1–63. https://doi.org/10.1007/s10208-010-9075-z

Bressloff PC (2014) Stochastic gene expression and regulatory networks. In: Stochastic processes in cell biology, Interdisciplinary applied mathematics, vol 41, chap 6. Springer, pp 269–340. ISBN:978-3-319-08488-6. https://doi.org/10.1007/978-3-319-08488-6_6

Courant R, Hilbert D (1962) Methods of mathematical physics, vol II: partial differential equations. Wiley. ISBN:978-0-471-50439-9. https://doi.org/10.1002/9783527617234

Coutu DL, Schroeder T (2013) Probing cellular processes by long-term live imaging—historic problems and current solutions. J Cell Sci 126:3805–3815. https://doi.org/10.1242/jcs.118349

Elowitz MB et al (2002) Stochastic gene expression in a single cell. Science 297(5584):1183–1186. https://doi.org/10.1126/science.1070919

Feigelman J, Popović N, Marr C (2015) A case study on the use of scale separation-based analytical propagators for parameter inference in models of stochastic gene regulation. J Coupled Syst Multiscale Dyn 3(2):164–173. https://doi.org/10.1166/jcsmd.2015.1074

Feigelman J et al (2016) Analysis of cell lineage trees by exact Bayesian inference identifies negative autoregulation of Nanog in mouse embryonic stem cells. Cell Syst 3(5):480–490. https://doi.org/10.1016/j.cels.2016.11.001

Gardiner C (2009) Stochastic methods: a handbook for the natural and social sciences, 4th edn, vol 13. Springer series in synergetics. Springer. ISBN:978-3-540-70712-7. http://www.springer.com/978-3-540-70712-7

Gillespie DT (1977) Exact stochastic simulation of coupled chemical reactions. J Phys Chem 81(25):2340–2361. https://doi.org/10.1021/j100540a008

Hornos JEM et al (2005) Self-regulating gene: an exact solution. Phys Rev E Stat Nonlinear Biol Soft Matter Phys 72:051907. https://doi.org/10.1103/PhysRevE.72.051907

Iserles A, MacNamara S (2017) Magnus expansions and pseudospectra of master equations. Preprint. arXiv:1701.02522

Iyer-Biswas S, Jayaprakash C (2014) Mixed Poisson distributions in exact solutions of stochastic autoregulation models. Phys Rev E Stat Nonlinear Biol Soft Matter Phys. https://doi.org/10.1103/PhysRevE.90.052712

Iyer-Biswas S, Hayot F, Jayaprakash C (2009) Stochasticity of gene products from transcriptional pulsing. Phys Rev E Stat Nonlinear Biol Soft Matter Phys. https://doi.org/10.1103/PhysRevE.79.031911

Jahnke T, Huisinga W (2007) Solving the chemical master equation for monomolecular reaction systems analytically. J Math Biol 54(1):1–26. https://doi.org/10.1007/s00285-006-0034-x

Karlebach G, Shamir R (2008) Modelling and analysis of gene regulatory networks. Nat Rev Mol Cell Biol 9:770–780. https://doi.org/10.1038/nrm2503

Kuehn C (2015) Multiple time scale dynamics, vol 191. Applied mathematical sciences. Springer. ISBN:978-3-319-12315-8. https://doi.org/10.1007/978-3-319-12316-5

Larson DR et al (2011) Real-time observation of transcription initiation and elongation on an endogenous yeast gene. Science 332(6028):475–478. https://doi.org/10.1126/science.1202142

MacNamara S (2015) Cauchy integrals for computational solutions of master equations. In: Sharples J, Bunder J (eds) Proceedings of the 17th biennial computational techniques and applications conference, CTAC-2014, vol 56. ANZIAM Journal, pp C32–C51. http://journal.austms.org.au/ojs/index.php/ANZIAMJ/article/view/9345

Muzzey D, van Oudenaarden A (2009) Quantitative time-lapse fluorescence microscopy in single cells. Annu Rev Cell Dev Biol 25:301–327. https://doi.org/10.1146/annurev.cellbio.042308.113408

NIST Digital Library of Mathematical Functions. http://dlmf.nist.gov/, Release 1.0.14 of 2016-12-21. Olver FWJ, Olde Daalhuis AB, Lozier DW, Schneider BI, Boisvert RF, Clark CW, Miller BR, Saunders BV (eds). http://dlmf.nist.gov/

Popović N, Marr C, Swain PS (2016) A geometric analysis of fast-slow models for stochastic gene expression. J Math Biol 72(1):87–122. https://doi.org/10.1007/s00285-015-0876-1

Raj A, van Oudenaarden A (2008) Nature, nurture or chance: stochastic gene expression and its consequences. Cell 135(2):216–226. https://doi.org/10.1016/j.cell.2008.09.050

Raj A et al (2006) Stochastic mRNA synthesis in mammalian cells. PLoS Biol 4(10):1707–1719. https://doi.org/10.1371/journal.pbio.0040309

Ramos AF, Innocentini GCP, Hornos JEM (2011) Exact time-dependent solutions for a self-regulating gene. Phys Rev E Stat Nonlinear Biol Soft Matter Phys. https://doi.org/10.1103/PhysRevE.83.062902

Schwanhäusser B et al (2011) Global quantification of mammalian gene expression control. Nature 473:337–342. https://doi.org/10.1038/nature10098

Shahrezaei V, Swain PS (2008a) Analytical distributions for stochastic gene expression. Proc Nat Acad Sci USA 105(45):17256–17261. https://doi.org/10.1073/pnas.0803850105

Shahrezaei V, Swain PS (2008b) The stochastic nature of biochemical networks. Curr Opin Biotechnol 19(4):369–374. https://doi.org/10.1016/j.copbio.2008.06.011

Taylor M (2011) Partial differential equations I: basic theory. 2nd edn, vol 115. Applied mathematical sciences. Springer. ISBN:978-1-4419-7054-1. https://doi.org/10.1007/978-1-4419-7055-8

Thattai M, van Oudenaarden A (2001) Intrinsic noise in gene regulatory networks. Proc Nat Acad Sci USA 98(15):8614–8619. https://doi.org/10.1073/pnas.151588598

Verhulst F (2000) Nonlinear differential equations and dynamical systems. Universitext, 2nd edn. Springer, Berlin. ISBN:978-3-540-60934-6. https://doi.org/10.1007/978-3-642-61453-8

Vogel C, Marcotte EM (2012) Insights into the regulation of protein abundance from proteomic and transcriptomic analyses. Nat Rev Genet 13:227–232. https://doi.org/10.1038/nrg3185

Wilkinson DJ (2009) Stochastic modelling for quantitative description of heterogeneous biological systems. Nat Rev Genet 10:122–133. https://doi.org/10.1038/nrg2509

Young JW et al (2011) Measuring single-cell gene expression dynamics in bacteria using fluorescence time-lapse microscopy. Nat Protocols 7:80–88. https://doi.org/10.1038/nprot.2011.432

Yu J et al (2006) Probing gene expression in live cells, one protein molecule at a time. Science 311(5767):1600–1603. https://doi.org/10.1126/science.1119623

Acknowledgements

The authors thank Peter Swain and Adri Olde Daalhuis (both University of Edinburgh) for their valuable input during discussions at earlier stages of the article. Moreover, the authors are grateful to three anonymous reviewers whose comments and suggestions improved the original manuscript. This work has been supported by the Leverhulme Trust, through Research Project Grant RPG-2015-017 (‘A geometric analysis of multiple-scale models for stochastic gene expression’).


Appendices

Model A: explicit expressions

An explicit expression for (2.27) can be stated as follows:

which can be shown to be equal to (2.35) using §13.3(i) of NIST Digital Library of Mathematical Functions when \(\hat{c}_1 = c_1 \frac{\kappa _f}{\kappa _b}\) and \(\hat{c}_2 = c_2\). Explicit expressions involving the integration limits \(c_{3}\) and \(c_{4}\) in (2.29) are obtained as

and

respectively, with \(F^{(0)}_0\) as in (2.26). Similarly, explicit expressions involving the integration limits \(\hat{c}_{3}\) and \(\hat{c}_{4}\) in (2.38) read

and

respectively, with \(F^{(1)}_0\) as in (2.35).
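Relations of the kind collected in §13.3(i) of NIST Digital Library of Mathematical Functions can be checked numerically before they are used to match two closed-form expressions such as (2.27) and (2.35). The sketch below verifies one contiguous relation for Kummer's function, \((b-a)M(a-1,b,z)+(2a-b+z)M(a,b,z)-aM(a+1,b,z)=0\), by direct summation of the series \(M(a,b,z)=\sum_{k\ge 0}\frac{(a)_k}{(b)_k\,k!}z^k\). Which relations from §13.3(i) are actually needed for (2.27) is not assumed here; the test points and the truncation at 200 terms are illustrative choices suited to moderate \(|z|\).

```python
import math


def kummer_m(a, b, z, terms=200):
    """Kummer's function M(a, b, z) = sum_k (a)_k / ((b)_k k!) z**k by direct summation.

    Adequate for moderate |z| and b not a non-positive integer.
    """
    term, total = 1.0, 1.0
    for k in range(terms):
        term *= (a + k) / (b + k) * z / (k + 1)   # ratio of consecutive terms
        total += term
    return total


def contiguous_residual(a, b, z):
    """Residual of (b - a) M(a-1, b, z) + (2a - b + z) M(a, b, z) - a M(a+1, b, z)."""
    return ((b - a) * kummer_m(a - 1.0, b, z)
            + (2.0 * a - b + z) * kummer_m(a, b, z)
            - a * kummer_m(a + 1.0, b, z))


for a, b, z in [(0.5, 1.5, 2.0), (1.3, 2.7, -3.0), (2.0, 0.8, 1.2)]:
    print(a, b, z, contiguous_residual(a, b, z))

# Sanity check: M(a, a, z) = exp(z)
print(kummer_m(1.0, 1.0, 1.5), math.exp(1.5))
```

Residuals at round-off level confirm the relation at the sampled points; for large \(|z|\) the direct series loses accuracy, and an asymptotic or library evaluation would be preferable.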

Model B with autoregulation: explicit expressions

To leading order in \(\delta \), i.e. for \(m=0\), the right-hand side of Eq. (3.45) vanishes; hence, the expressions in Eqs. (3.20) and (3.21) apply. In other words, we can identify \(F^{(j)}_{0,n}\) with \(F^{(j)}_n\) as given in (3.27) and (3.28), for \(j=0,1\) and \(n= 0,1,2\). The free constants \(f_i\) and \(g_i\) are as stated in (3.33), with \(\chi \) replaced by \(\chi _0\). In the following subsections, we present explicit expressions for the first-order correction in \(\delta \), i.e. for \(m=1\), for all autoregulatory scenarios listed in (3.2).

1.1 mRNA autoactivation

We can solve (3.45) to second order in \(\varepsilon \), i.e. for \(n=2\), applying the initial conditions in (3.55) to obtain

which yields

where

1.2 mRNA autorepression

For the case of mRNA autorepression, we replace \( \alpha _M (u+1)\left( \frac{\partial u}{\partial u_0}\right) ^{-1}\partial _{u_0} F^{(0)}_{m-1}\) in Eqs. (3.45) and (3.46) with

which yields

Hence, it follows that

where

1.3 Protein autoactivation

For the case of protein autoactivation, we replace \(\alpha _M (u+1)\left( \frac{\partial u}{\partial u_0}\right) ^{-1}\partial _{u_0} F^{(0)}_{m-1}\) in Eqs. (3.45) and (3.46) with

which yields

Hence, it follows that

where

1.4 Protein autorepression

For the case of protein autorepression, we replace \(\alpha _M (u+1)\left( \frac{\partial u}{\partial u_0}\right) ^{-1}\partial _{u_0} F^{(0)}_{m-1}\) in Eqs. (3.45) and (3.46) with

which yields

It follows that

where

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.
