Abstract

Purpose

To quantify the inter-observer variability of manual delineation of lesions and organ contours in CT to establish a reference standard for volumetric measurements for clinical decision making and for the evaluation of automatic segmentation algorithms.

Materials and methods

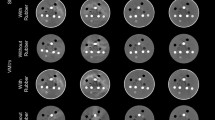

Eleven radiologists manually delineated 3193 contours of liver tumours (896), lung tumours (1085), kidney contours (715) and brain hematomas (497) on 490 slices of clinical CT scans. A comparative analysis of the delineations was then performed to quantify the inter-observer delineation variability with standard volume metrics and with new group-wise metrics for delineations produced by groups of observers.
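As an illustration of what a pairwise volume overlap comparison can look like, the following minimal sketch treats each delineation as a set of pixel coordinates and measures the disagreement between two observers as one minus the ratio of intersection to union. This definition is an assumption made for the example; the abstract does not spell out the exact formula used in the study. The helper `square` and the toy `observers` list are hypothetical.

```python
from itertools import combinations


def overlap_variability(a, b):
    """Percentage of the union of two pixel sets that the observers disagree on."""
    union = a | b
    if not union:
        return 0.0  # two empty delineations agree trivially
    return 100.0 * (1.0 - len(a & b) / len(union))


def mean_pairwise_variability(delineations):
    """Average overlap_variability over every pair of observers."""
    pairs = list(combinations(delineations, 2))
    if not pairs:
        raise ValueError("need at least two delineations")
    return sum(overlap_variability(a, b) for a, b in pairs) / len(pairs)


def square(x0, y0, size):
    """Hypothetical toy delineation: a filled square of pixel coordinates."""
    return {(x, y) for x in range(x0, x0 + size) for y in range(y0, y0 + size)}


# Three toy observers who outline the same structure with a one-pixel shift.
observers = [square(0, 0, 10), square(1, 0, 10), square(0, 1, 10)]
print(f"{mean_pairwise_variability(observers):.1f}%")
```

Representing delineations as coordinate sets keeps the sketch free of dependencies; on real CT slices the same ratios would more likely be computed on binary masks.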

Results

The mean volume overlap variability values and ranges (in %) between the delineations of two observers were: liver tumours 17.8 [-5.8,+7.2]%, lung tumours 20.8 [-8.8,+10.2]%, kidney contours 8.8 [-0.8,+1.2]% and brain hematomas 18 [-6.0,+6.0]%. For any two randomly selected observers, the mean delineation volume overlap variability was 5–57%. The mean variability captured by groups of two, three and five observers was 37%, 53% and 72%; eight observers accounted for 75–94% of the total variability. For all cases, 38.5% of the delineation non-agreement was due to parts of the delineation of a single observer disagreeing with the others. No statistical difference was found for the delineation variability between the observers based on their expertise.
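The group-wise figures can be read with the help of a small sketch. It assumes, purely for illustration, that the variability of a group is the set of pixels marked by at least one observer but not by all of them, that the share "captured" by k observers is the average size of that region over all k-observer subsets relative to the full group, and that single-observer disagreement covers pixels where exactly one observer differs from all the others. These definitions and all function names are assumptions for the example, not the study's published metrics.

```python
from collections import Counter
from itertools import combinations


def variability_region(delineations):
    """Pixels marked by at least one observer but not by every observer."""
    union = set().union(*delineations)
    consensus = set.intersection(*delineations)
    return union - consensus


def captured_fraction(delineations, k):
    """Mean share of the full variability region seen by subsets of k observers."""
    total = len(variability_region(delineations))
    if total == 0:
        return 1.0  # nothing to capture when everyone agrees
    subsets = list(combinations(delineations, k))
    return sum(len(variability_region(s)) for s in subsets) / (len(subsets) * total)


def single_outlier_fraction(delineations):
    """Share of the variability region where exactly one observer differs."""
    n = len(delineations)
    votes = Counter(p for d in delineations for p in d)
    region = variability_region(delineations)
    if not region:
        return 0.0
    # One vote: a lone observer included the pixel; n - 1 votes: a lone observer left it out.
    lone = sum(1 for p in region if votes[p] in (1, n - 1))
    return lone / len(region)


def square(x0, y0, size):
    """Hypothetical toy delineation: a filled square of pixel coordinates."""
    return {(x, y) for x in range(x0, x0 + size) for y in range(y0, y0 + size)}


a, b = square(0, 0, 10), square(2, 0, 10)
group = [a, a, a, b]  # three observers agree, one is shifted
print(captured_fraction(group, 2), single_outlier_fraction(group))
```

In this toy group only pairs that include the shifted observer see any disagreement, which shows how a small sample of observers can miss much of the variability present in the full group.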

Conclusion

The variability of manual delineations between observers is large and spans a wide range across structures and pathologies. Two and even three observers may not be sufficient to establish the full range of inter-observer variability.

Key Points

• This study quantifies the inter-observer variability of manual delineation of lesions and organ contours in CT.

• The variability of manual delineations between two observers can be significant. Two and even three observers capture only a fraction of the full range of inter-observer variability observed in common practice.

• Quantifying inter-observer manual delineation variability is necessary to establish a reference standard for radiologist training and evaluation and for the evaluation of automatic segmentation algorithms.

Abbreviations

- CT: Computed tomography

- HD LED: High-definition light-emitting diode

- IRB: Institutional review board

- MDCT: Multiple-detector computed tomography

Acknowledgements

We thank Dr. Alexander Benstein and the team of radiologists of the Department of Radiology, Hadassah Hebrew University Medical Center, Jerusalem, Israel, for their participation in the manual delineation project. We also thank Dr. Tammy Riklin-Raviv, Ben Gurion University of the Negev, for providing the brain CT scans and the brain hematoma delineations.

Funding

This study has received partial funding from the Israel Ministry of Science, Technology and Space, grant 53681, 2016-19, from the Oppenheimer Applied Research Grant, The Hebrew University, and from TUBITAK ARDEB grant no. 110E264, 2015-16.

Ethics declarations

Guarantor

The scientific guarantor of this publication is Prof. Leo Joskowicz.

Conflict of interest

The authors of this manuscript declare no relationships with any companies whose products or services may be related to the subject matter of the article.

Statistics and biometry

No complex statistical methods were necessary for this paper.

Informed consent

Written informed consent was waived by the institutional review board.

Ethical approval

Institutional review board approval was obtained.

Methodology

• retrospective

• experimental

• performed at one institution

Cite this article

Joskowicz, L., Cohen, D., Caplan, N. et al. Inter-observer variability of manual contour delineation of structures in CT. Eur Radiol 29, 1391–1399 (2019). https://doi.org/10.1007/s00330-018-5695-5

DOI: https://doi.org/10.1007/s00330-018-5695-5