Abstract

We introduce multilevel versions of Dyson Brownian motions of arbitrary parameter \(\beta >0\), generalizing the interlacing reflected Brownian motions of Warren for \(\beta =2\). Such processes unify \(\beta \) corners processes and Dyson Brownian motions in a single object. Our approach is based on the approximation by certain multilevel discrete Markov chains of independent interest, which are defined by means of Jack symmetric polynomials. In particular, this approach allows to show that the levels in a multilevel Dyson Brownian motion are intertwined (at least for \(\beta \ge 1\)) and to give the corresponding link explicitly.

Similar content being viewed by others

1 Introduction

1.1 Preface

The Hermite general \(\beta >0\) ensemble of rank \(N\) is a probability distribution on the set of \(N\) tuples of reals \(z_1<z_2<\cdots <z_N\) with density proportional to

When \(\beta =2\), that density describes the joint distribution of the eigenvalues of a random Hermitian \(N\times N\) matrix \(M\), whose diagonal entries are i.i.d. real standard normal random variables, while real and imaginary parts of its entries above the diagonal are i.i.d. normal random variables of variance \(1/2\). The law of such a random matrix is referred to as the Gaussian Unitary Ensemble (GUE) (see e.g. [3, 20, 32]) and it has attracted much attention in the mathematical physics literature following the seminal work of Wigner in the 50s. Similarly, the case of \(\beta =1\) describes the joint distribution of eigenvalues of a real symmetric matrix sampled from the Gaussian Orthogonal Ensemble (GOE) and the case \(\beta =4\) corresponds to the Gaussian Symplectic Ensemble (GSE) (see e.g. [3, 20, 32] for the detailed definitions).

It is convenient to view the realizations of (1.1) as point processes on the real line and there are two well-known ways of adding a second dimension to that picture. The first one is to consider an \(N\)-dimensional diffusion known as Dyson Brownian motion (see [32, Chapter 9], [3, Section 4.3] and the references therein) which is the unique strong solution of the system of stochastic differential equations

with \(W_1,W_2,\ldots ,W_N\) being independent standard Brownian motions. If one solves (1.2) with zero initial condition, then the distribution of the solution at time \(1\) is given by (1.1). When \(\beta =2\) and one starts with zero initial condition, the diffusion (1.2) has two probabilistic interpretations: it can be either viewed as a system of \(N\) independent standard Brownian motions conditioned never to collide via a suitable Doob’s \(h\)-transform; or it can be regarded as the evolution of the eigenvalues of a Hermitian random matrix whose elements evolve as (independent) Brownian motions.

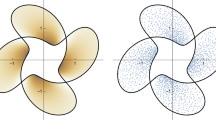

An alternative way of adding a second dimension to the ensemble in (1.1) involves the so-called corner processes. For \(\beta =2\) take a \(N\times N\) GUE matrix \(M\) and let \(x^N_1\le x^N_2\le \cdots \le x^N_N\) be its ordered eigenvalues. More generally for every \(1\le k\le N\) let \(x^k_1\le x^k_2 \le \cdots \le x^k_k\) be the eigenvalues of the top-left \(k\times k\) submatrix (“corner”) of \(M\). It is well-known that the eigenvalues interlace in the sense that \(x_i^k\le x_i^{k-1}\le x_{i+1}^k\) for \(i=1,\dots ,k-1\) (see Fig. 1 for a schematic illustration of the eigenvalues).

The joint distribution of \(x^k_i\), \(1\le i\le k\le N\) is known as the GUE-corners process (some authors also use the name “GUE-minors process”) and its study was initiated in [4] and [26]. The GUE-corners process is uniquely characterized by two properties: its projection to the set of particles \(x^N_1,x^N_2,\ldots ,x^N_N\) is given by (1.1) with \(\beta =2\) (normalized to a probability density), and the conditional distribution of \(x^k_i\), \(1\le i\le k\le N-1\) given \(x^N_1,x^N_2,\ldots ,x^N_N\) is uniform on the polytope defined by the interlacing conditions above, see [4, 21]. Due to the combination of the gaussianity and uniformity embedded into its definition, the GUE–corners process appears as a universal scaling limit for a number of 2d models of statistical mechanics, see [22–24, 26, 38].

Similarly, one can construct corners processes for \(\beta =1\) and \(\beta =4\), see e.g. [33]. Extrapolating the resulting formulas for the joint density of eigenvalues to general values of \(\beta >0\) one arrives at the following definition.

Definition 1.1

The Hermite \(\beta \) corners process of variance \(t>0\) is the unique probability distribution on the set of reals \(x^k_i\), \(1\le i\le k\le N\) subject to the interlacing conditions \(x_i^k\le x_i^{k-1}\le x_{i+1}^k\) whose density is proportional to

The fact that the projection of the Hermite \(\beta \) corners process of variance \(1\) onto level \(k\) (that is, on the coordinates \(x_1^k,x^k_2,\ldots ,x_k^k\)) is given by the corresponding Hermite \(\beta \) ensemble of (1.1) can be deduced from the Dixon-Anderson integration formula (see [2, 17]), which was studied before in the context of Selberg integrals (see [44], [20, Chapter 4]). One particular case of the Selberg integral is the evaluation of the normalizing constant for the probability density of the Hermite \(\beta \) ensemble of (1.1). We provide more details in this direction in Sect. 2.2.

The ultimate goal of the present article is to combine Dyson Brownian motions and corner processes in a single picture. In other words, we aim to introduce a relatively simple diffusion on interlacing particle configurations whose projection on a fixed level is given by Dyson Brownian motion of (1.2), while its fixed time distributions are given by the Hermite \(\beta \) corners processes of Definition 1.1.

One would think that a natural way to do this (at least for \(\beta =1,2,4\)) is to consider an \(N\times N\) matrix of suitable Brownian motions and to project it onto the (interlacing) set of eigenvalues of the matrix and its top-left \(k\times k\) corners, thus generalizing the original construction of Dyson. However, the resulting stochastic process ends up being quite nasty even in the case \(\beta =2\). It is shown in [1] that already for \(N=3\) (and at least some initial conditions) the projection is not a Markov process. When one considers only two adjacent levels (that is, the projection onto \(x_1^N,x^N_2,\ldots ,x_N^N; x_1^{N-1},x^{N-1}_2,\ldots ,x_{N-1}^{N-1}\)), then it can be proven (see [1]) that the projection is Markovian, but the corresponding SDE is very complicated.

An alternative elegant solution for the case \(\beta =2\) was given by Warren [46]. Consider the process \((Y^k_i:1\le i\le k\le N)\) defined through the following inductive procedure: \(Y^1_1\) is a standard Brownian motion with zero initial condition; given \(Y^1_1\), the processes \(Y^2_1\) and \(Y^2_2\) are constructed as independent standard Brownian motions started at zero and reflected on the trajectory of \(Y^1_1\) in such a way that \(Y^2_1(t)\le Y^1_1(t)\le Y^2_2(t)\) holds for all \(t\ge 0\). More generally, having constructed the processes on the first \(k\) levels (that is, \(Y^m_i\), \(1\le i\le m\le k\)) one defines \(Y^{k+1}_i\) as an independent standard Brownian motion started at \(0\) and reflected on the trajectories of \(Y^k_{i-1}\) and \(Y^k_{i}\) in such a way that \(Y^{k-1}_{i-1}(t)\le Y^k_i(t)\le Y^{k-1}_i(t)\) remains true for all \(t\ge 0\) (see [46] and also [24] for more details). Warren shows that the projection of the dynamics on a level \(k\) (that is, on \(Y^k_1,Y^k_2,\ldots ,Y^k_k\)) is given by a \(k\)-dimensional Dyson Brownian Motion of (1.2) with \(\beta =2\), and that the fixed time distributions of the process \((Y^k_i:1\le i\le k\le N)\) are given by the Hermite \(\beta \) corners processes of Definition 1.1 with \(\beta =2\).

Our aim is to construct a generalization of the Warren process for general values of \(\beta \). In other words, we want to answer the question “What is the general \(\beta \) analogue of the reflected interlacing Brownian Motions of [46]?”.

1.2 Our results

Our approach to the construction of the desired general \(\beta \) multilevel stochastic process is based on discrete space approximation. In [24] we proved that the reflected interlacing Brownian motions of [46] can be obtained as a diffusive scaling limit for a class of stochastic dynamics on discrete interlacing particle configurations. The latter dynamics are constructed from independent random walks by imposing the local block/push interactions between particles to preserve the interlacing conditions. The special cases of such processes arise naturally in the study of two-dimensional statistical mechanics systems such as random stepped surfaces and various types of tilings (cf. [6, 9, 10, 34]).

In Sect. 2 we introduce a deformation \(X^{multi}_{disc}(t)\) of these processes depending on a positive parameter \(\theta \) (which is omitted from the notation, and with \(\theta =1\) corresponding to the previously known case). The resulting discrete space dynamics is an intriguing interacting particle system with global interactions whose state space is given by interlacing particle configurations with integer coordinates. Computer simulations of this dynamics for \(\theta =1/2\) and \(\theta =2\) can be found at [25].

We further study the diffusive limit of \(X^{multi}_{disc}(s)\) under the rescaling of time \(s=\varepsilon ^{-1} t\) and \(\varepsilon ^{-1/2}\) scaling of space as \(\varepsilon \downarrow 0\). Our first result is that for any fixed \(\theta >0\), the rescaled processes are tight as \(\varepsilon \downarrow 0\), see Theorem 5.1 for the exact statement. The continuous time, continuous space processes \(Y^{mu}(t)\), defined as subsequential limits \(\varepsilon \downarrow 0\) of the family \(X^{multi}_{disc}(s)\), are our main heros and we prove a variety of results about them for different values of \(\theta \).

-

(1)

For any \(\theta \ge 2\) we show in Theorem 5.2 that \(Y^{mu}(t)\) satisfies the system of SDEs (1.4) below.

-

(2)

For any \(\theta \ge 1/2\) and any \(1\le k\le N\) we show in Theorem 5.3 that if \(Y^{mu}(t)\) is started from a \(\theta \)–Gibbs initial condition (zero initial condition is a particular case), then the \(k\)–dimensional restriction of the \(N(N-1)/2\)–dimensional process \(Y^{mu}(t)\) to the level \(k\) is a \(2\theta \)–Dyson Brownian motion, that is, the vector \((Y^{mu}(t)^k_1, Y^{mu}(t)^k_2,\dots ,Y^{mu}(t)^k_k)\) solves (1.2) with \(\beta =2\theta \) and suitable independent standard Brownian motions \(W_1(t),W_2(t),\dots ,W_k(t)\).

-

(3)

For any \(\theta > 0\) we show that if \(Y^{mu}(t)\) is started from zero initial condition, then its distribution at time \(t\) is the Hermite \(2\theta \) corners process of variance \(t\), that is, the corresponding probability density is proportional to (1.3) with \(\beta =2\theta \). In fact, we prove a more general statement, see Theorem 5.3 and Corollary 5.4.

-

(4)

For \(\theta =1\) the results of [24] yield that \(Y^{mu}(t)\) is the collection of reflected interlacing Brownian motions of [46].

The above results are complemented by the following uniqueness theorem for the system of SDEs (1.4). In particular, it implies that for \(\theta \ge 2\) all the subsequential limits \(Y^{mu}(t)\) of \(X^{multi}_{disc}(s)\) as \(\varepsilon \downarrow 0\) are the same.

Theorem 1.2

(Theorem 4.1) For any \(N\in \mathbb {N}\) and \(\theta >1\) the system of SDEs

where \(W_i^k\), \(1\le i\le k\le N\) are independent standard Brownian motions, possesses a unique weak solution taking values in the cone

for any initial condition \(Y(0)\) in the interior \(\mathcal {G}^N\) of \(\overline{\mathcal {G}^N}\).

It would be interesting to extend all the above results to general \(\theta >0\). We believe (but we do not have a proof) that the identification of \(Y^{mu}(t)\) with a solution of (1.4) is valid for all \(\theta >1\) and that the identification of the projection of \(Y^{mu}(t)\) onto the \(N\)–th level with a \(\beta =2\theta \)–Dyson Brownian motion is valid for any \(\theta >0\). On the other hand, \(Y^{mu}(t)\) cannot be a solution to (1.4) for \(\theta \le 1\). Indeed, we know that when \(\theta =1\) the process \(Y^{mu}(t)\) is a collection of reflected interlacing Brownian motions which hints that one should introduce additional local time terms in (1.4). In addition, the interpretation of the solution to (1.4) as a generalization of the one-dimensional Bessel process to a process in the Gelfand–Tseitlin cone suggests that the corresponding process for \(\theta <1\) is no longer a semimartingale and should be defined and studied along the lines of [42, Chapter XI, Exercise (1.26)].

1.3 Our methods

Our approach to the construction and study of the discrete approximating process \(X^{multi}_{disc}(s)\) is related to Jack symmetric polynomials. Recall that Jack polynomials \(J_{\lambda }(x_1,x_2,\ldots ,x_N;\theta )\), indexed by Young diagrams \(\lambda \) and a positive parameter \(\theta \), are eigenfunctions of the Sekiguchi differential operators ([43], [31, Chapter VI, Section 10], [20, Chapter 12])

One can also define \(J_{\lambda }(x_1,x_2,\ldots ,x_N;\theta )\) as limits of Macdonald polynomials \(P_\lambda (\cdot ;q,t)\) as \(q,t\rightarrow 1\) in such a way that \(t=q^{\theta }\) (see [31]). For the special values \(\theta =1/2,\,1,\,2\) these polynomials are spherical functions of Gelfand pairs \(O(N)\subset U(N)\), \(U(N)\subset U(N)\times U(N)\), \(U(2N)\subset Sp(N)\), respectively, and are also known as Zonal polynomials (see e.g. [31, Chapter 7] and the references therein). It is known that spherical functions of compact type (corresponding to the above Gelfand pairs) degenerate to the spherical functions of Euclidian type, which in our case are related to real symmetric, complex Hermitian and quaternionic Hermitian matrices, respectively (see e.g. [35, Section 4] and the references therein). In particular, in the case \(\theta =1\) this is a manifestation of the fact that the tangent space to the unitary group \(U(N)\) at identity can be identified with the set of Hermitian matrices. Due to all these facts it comes at no surprise that Hermite \(\beta \) ensembles can be obtained as limits of discrete probabilistic structures related to Jack polynomials with parameter \(\theta =\beta /2\).

On the discrete level our construction of the multilevel stochastic dynamics is based on a procedure introduced by Diaconis and Fill [16], which has recently been used extensively in the study of Markov chains on interlacing particle configurations (see e.g. [6, 7, 9–11]). The idea is to use commuting Markov operators and conditional independence to construct a multilevel Markov chain with given single level marginals. In our case these operators can be written in terms of Jack polynomials. In the limit the commutation relation we use turns into the following statement, which might be of independent interest.

Let \(P_N(t;\beta )\) denote the Markov transition operators of the \(N\)-dimensional Dyson Brownian Motion of (1.2) and let \(L^N_{N-1}(\beta )\) denote the Markov transition operator corresponding to conditioning the \((N-1)\)-st level (that is, \(x^{N-1}_1,x^{N-1}_2,\ldots ,x^{N-1}_{N-1}\)) on the \(N\)-th level (that is, \(x^N_1,x^N_2,\ldots ,x^N_N\)) in the Hermite \(\beta \) corners process.

Proposition 1.3

(Corollary of Theorem 5.3) For any \(\beta \ge 1\) the links \(L^N_{N-1}(\beta )\) given by the stochastic transition kernels

intertwine the semigroups \(P_N(t;\beta )\) and \(P_{N-1}(t;\beta )\) in the sense that

The latter phenomenon can be subsumed into a general theory of intertwinings for diffusions, which for example also includes the findings in [45] and [39].

Due to the presence of singular drift terms neither existence, nor uniqueness of the solution of (1.4) is straightforward. When dealing with systems of SDEs with singular drift terms one typically shows the existence and uniqueness of strong solutions by truncating the singularity first (thus, obtaining a well-behaved system of SDEs) and by proving afterwards that the solution cannot reach the singularity in finite time using suitable Lyapunov functions, see e.g. [3, proof of Proposition 4.3.5]. However, for \(1<\theta <2\) the solutions of (1.4) do reach some of the singularities. A similar phenomenon occurs in the case of the \(\beta \)–Dyson Brownian motion (1.2) with \(0<\beta <1\), for which the existence and uniqueness theorem was established in [14] using the theory of multivalued SDEs; however, in the multilevel setting we lack a certain monotonicity property which plays a crucial role in [14]. In addition, due to the intrinsic asymmetry built into the drift terms the solution of (1.4) seems to be beyond the scope of the processes that can be constructed using Dirichlet forms (see e.g. [40] for Dirichlet form constructions of symmetric diffusions with a singular drift at the boundary of their domain and for the limitations of that method). Instead, by localizing in time, using appropriate Lyapunov functions, and applying the Girsanov Theorem, we are able to reduce (1.4) to a number of non-interacting Bessel processes, whose existence and uniqueness is well-known. This approach has an additional advantage over Dirichlet form type constructions, since it allows to establish convergence to the solution of (1.4) via martingale problem techniques, which is how our proof of Theorem 5.2 goes.

Note also that for \(\theta =1\) (\(\beta =2\)) the interactions in the definition of \(X^{multi}_{disc}(s)\) become local. We have studied the convergence of such dynamics to the process of Warren in [24], with the proof being based on the continuity of a suitable Skorokhod reflection map. For general values of \(\theta >0\) neither the discrete dynamics, nor the continuous dynamics can be obtained as the image of an explicitly known process under the Skorokhod reflection map of [24].

1.4 Further developments and open problems

It would be interesting to study the asymptotic behavior of both the discrete and the continuous dynamics as the number of levels \(N\) goes to infinity. There are at least two groups of questions here.

The global fluctuations of Dyson Brownian motions as \(N\rightarrow \infty \) are known to be Gaussian (see [3, Section 4.3]); moreover, the limiting covariance structure can be described by the Gaussian Free Field (see [5, 12]). In addition, the asymptotic fluctuations of the Hermite \(\beta \) corners processes of Definition 1.1 are also Gaussian and can be described via the Gaussian Free Field (cf. [12]). This raises the question of whether the 3-dimensional global fluctuations of the solution to (1.4) are also asymptotically (as \(N\rightarrow \infty \)) Gaussian and how the limiting covariance structure might look like. A partial result in this direction was obtained for \(\beta =2\) in [9].

The edge fluctuations (that is, the fluctuations of the rightmost particle as \(N\rightarrow \infty \)) in the Hermite \(\beta \) ensemble given by (1.1) can be described via the \(\beta \)-Tracy–Widom distribution (see [41]). Moreover, in the present article we link the Hermite \(\beta \) ensemble to a certain discrete interacting particle system. This suggests that one might find the \(\beta \)-Tracy–Widom distribution in the limit of the edge fluctuations of that interacting particle system or its simplified versions.

2 Discrete space dynamics via Jack polynomials

2.1 Preliminaries on Jack polynomials

In this section we collect certain facts about Jack symmetric polynomials. A reader familiar with these polynomials can proceed to Sect. 2.2. Our notations generally follow the ones in [31].

In what follows \(\Lambda ^N\) is the algebra of symmetric polynomials in \(N\) variables. In addition, we let \(\Lambda \) be the algebra of symmetric polynomials in countably many variables, that is, of symmetric functions. An element of \(\Lambda \) is a formal symmetric power series of bounded degree in the variables \(x_1,x_2,\dots \). One way to view \(\Lambda \) is as an algebra of polynomials in the Newton power sums \(p_k=\sum _i (x_i)^k\). There exists a unique canonical projection \(\pi _N:\,\Lambda \rightarrow \Lambda _N\), which sets all variables except for \(x_1,x_2,\ldots ,x_N\) to zero (see [31, Chapter 1, Section 2] for more details).

A partition of size \(n\), or a Young diagram with \(n\) boxes, is a sequence of non-negative integers \(\lambda _1\ge \lambda _2\ge \cdots \ge 0\) such that \(\sum _i \lambda _i=n\). \(|\lambda |\) stands for the number of boxes in \(\lambda \) and \(\ell (\lambda )\) is the number of non-empty rows in \(\lambda \) (that is, the number of non-zero sequence elements \(\lambda _i\) in \(\lambda \)). Let \(\mathbb {Y}\) denote the set of all Young diagrams, and \(\mathbb {Y}^N\) the set of all Young diagrams \(\lambda \) with at most \(N\) rows (that is, such that \(\lambda _{N+1}=0\)). Typically, we will use the symbols \(\lambda \), \(\mu \) for Young diagrams. We adopt the convention that the empty Young diagram \(\emptyset \) with \(|\emptyset |=0\) also belongs to \(\mathbb {Y}\) and \(\mathbb {Y}^N\). For a box \(\,\square =(i,j)\) of a Young diagram \(\lambda \) (that is, a pair \((i,j)\) such that \(\lambda _i\ge j\)), \(a(i,j;\lambda )\) and \(l(i,j;\lambda )\) are its arm and leg lengths:

where \(\lambda '_j\) is the row length in the transposed diagram \(\lambda '\) defined by

Further, \(a'(i,j)\), \(l'(i,j)\) stand for the co-arm and the co-leg lengths, which do not depend on \(\lambda \)

When it is clear from the context which Young diagram is used, we omit it from the notation and write simply \(a(i,j)\) (or \(a(\square )\)) and \(l(i,j)\) (or \(l(\square )\)).

We write \(J_\lambda (\,\cdot ;\theta )\) for Jack polynomials, which are indexed by Young diagrams \(\lambda \) and positive reals \(\theta \). Many facts about these polynomials can be found in [31, Chapter VI, Section 10]. Note however that in that book Macdonald uses the parameter \(\alpha \) given by our \(\theta ^{-1}\). We use \(\theta \), following [27]. \(J_\lambda \) can be viewed either as an element of the algebra \(\Lambda \) of symmetric functions in countably many variables \(x_1,x_2,\ldots \), or (specializing all but finitely many variables to zeros) as a symmetric polynomial in \(x_1,x_2,\ldots ,x_N\) from the algebra \(\Lambda ^N\). In both interpretations the leading term of \(J_\lambda \) is given by \(x_1^{\lambda _1} x_2^{\lambda _2}\cdots x_{\ell (\lambda )}^{\lambda _{\ell (\lambda )}}\). When \(N\) is finite, the polynomials \(J_\lambda (x_1,\dots ,x_N;\theta )\) are known to be the eigenfunctions of the Sekiguchi differential operator:

The eigenrelation (2.1) can be taken as a definition for the Jack polynomials. We also need dual polynomials \(\widetilde{J}_\lambda \) which differ from \(J_\lambda \) by an explicit multiplicative constant:

Next, we recall the definition of skew Jack polynomials \(J_{\lambda /\mu }\). Take two infinite sets of variables \(x\) and \(y\), and consider a Jack polynomial \(J_\lambda (x,y;\theta )\). The latter is, in particular, a symmetric polynomial in the \(x\) variables. The coefficients \(J_{\lambda /\mu }(y;\theta )\) in its decomposition in the linear basis of Jack polynomials in the \(x\) variables are symmetric polynomials in the \(y\) variables and are referred to as skew Jack polynomials:

Similarly, one writes

It is known (see e.g. [31, Chapter VI, Section 10]) that \(J_{\lambda /\mu }(y;\theta )=\widetilde{J}_{\lambda /\mu }(y;\theta )=0\) unless \(\mu \subset \lambda \), which means that \(\lambda _i\ge \mu _i\) for \(i=1,2,\dots \). Also \(J_{\lambda /\emptyset }(y;\theta )=J_{\lambda }(y;\theta )\) and \(\widetilde{J}_{\lambda /\emptyset }(y;\theta )=\widetilde{J}_{\lambda }(y;\theta )\).

Throughout the article the parameter \(\theta \) remains fixed and, thus, we usually omit it, writing simply \(J_\lambda (x)\), \(\widetilde{J}_\lambda (x)\), \(J_{\lambda /\mu }(x)\), \(\widetilde{J}_{\lambda /\mu }(x)\).

A specialization \(\rho \) is an algebra homomorphism from \(\Lambda \) to the set of complex numbers. A specialization is called Jack-positive if its values on all (skew) Jack polynomials with a fixed parameter \(\theta >0\) are real and non-negative. The following statement gives a classification of all Jack-positive specializations.

Proposition 2.1

([27]) For any fixed \(\theta >0\), Jack-positive specializations can be parameterized by triplets \((\alpha ,\beta ,\gamma )\), where \(\alpha \), \(\beta \) are sequences of real numbers with

and \(\gamma \) is a non-negative real number. The specialization corresponding to a triplet \((\alpha ,\beta ,\gamma )\) is given by its values on the Newton power sums \(p_k\), \(k\ge 1\):

The specialization with all parameters taken to be zero is called the empty specialization. This specialization maps a polynomial to its constant term (that is, the degree zero summand).

We prepare the following explicit formulas for Jack-positive specializations for future use.

Proposition 2.2

([31, Chapter VI, (10.20)]) Consider the Jack-positive specialization \(\mathfrak {a}^N\) with \(\alpha _1=\alpha _2=\cdots =\alpha _N=\mathfrak {a}\) and all other parameters set to zero. We have

Taking the limit \(N\rightarrow \infty \) of specializations \(\left( \frac{s}{N}\right) ^N\) of Proposition 2.2 we obtain the following.

Proposition 2.3

Consider the Jack-positive specialization \(\mathfrak {r}_s\) with \(\gamma =s\) and all other parameters set to zero. We have

Certain specializations of skew Jack polynomials also admit explicit formulas. We say that two Young diagrams \(\lambda \) and \(\mu \) interlace and write \(\mu \prec \lambda \) if

Proposition 2.4

For any complex number \(\mathfrak {a}\ne 0\), the specialization value \(J_{\lambda /\mu }(\mathfrak {a}^1)\) vanishes unless \(\mu \prec \lambda \). In the latter case,

where \(k\) is any integer satisfying \(\ell (\lambda )\le k\) and we have used the Pochhammer symbol notation

When \(\mu \) differs from \(\lambda \) by one box \(\lambda =\mu \sqcup (i,j)\) the formula can be simplified to read in terms of \(\widetilde{J}_{\lambda /\mu }\)

Note that the arm lengths in the latter formula are computed with respect to the (smaller) diagram \(\mu \).

Proof

The evaluation of (2.4) is known as the branching rule for Jack polynomials and is also a limit of a similar rule for Macdonald polynomials, see e.g. [31, (7.14’), Section VII, Chapter VI] or [36, (2.3)]. The formula (2.5) is obtained from (2.4) using (2.2). However, this computation is quite involved and we also provide an alternative way: formulas [31, (7.13), (7.14), Chapter VI] relate the skew Macdonald polynomials to certain functions \(\varphi _{\lambda /\mu }\). Further, formulas [31, (6.20),(6.24), Chapter VI] give explicit expressions for \(\varphi _{\lambda /\mu }\), and [31, Section 10, Chapter VI] explains that (skew) Jack polynomials are obtained from (skew) Macdonald polynomials parametrized by pairs \((q,t)\) by the limit transition \(q\rightarrow 1\), \(t=q^{\theta }\). This limit in the expression for \(\varphi _{\lambda /\mu }\) of [31, (6.24), Chapter VI] gives (2.5). \(\square \)

We also need the following two summation formulas for Jack polynomials.

Proposition 2.5

Take two specializations \(\rho _1\), \(\rho _2\) such that the series \(\sum _{k=1}^{\infty } \frac{p_k(\rho _1)\,p_k(\rho _2)}{k}\) is absolutely convergent, and define

Then

and more generally for any \(\nu ,\kappa \in \mathbb {Y}\)

Proof

(2.6) is the specialized version of a Cauchy-type identity for Jack polynomials, see e.g. [31, (10.4), Section 10, Chapter VI]. The latter is also a \((q,t)\rightarrow (1,1)\) limit of a similar identity for Macdonald polynomials [31, (4.13), Section 4, Chapter VI], as is explained in [31, Section 10, Chapter VI]. Similarly, (2.7) is the specialized version of the limit of a skew-Cauchy identity for Macdonald polynomials, see e.g. [31, Exercise 6, Section 7, Chapter VI]. \(\square \)

2.2 Probability measures related to Jack polynomials

We start with the definition of Jack probability measures which is based on (2.6).

Definition 2.6

Given two Jack-positive specializations \(\rho _1\) and \(\rho _2\) such that the series \( \sum _{k=1}^{\infty } \frac{p_k(\rho _1)\,p_k(\rho _2)}{k} \) is absolutely convergent, the Jack probability measure \(\mathcal {J}_{\rho _1;\rho _2}\) on \(\mathbb {Y}\) is defined through

with the normalization constant being given by

Remark 2.7

The construction of probability measures via specializations of symmetric polynomials was originally suggested by Okounkov in the context of Schur measures [37]. Recently, similar constructions for more general polynomials have led to many interesting results starting from the paper [7] by Borodin and Corwin. We refer to [8, Introduction] for the chart of probabilistic objects which are linked to various degenerations of Macdonald polynomials.

The following statement is a corollary of Propositions 2.2, 2.3 and formula (2.2).

Proposition 2.8

Take specializations \(1^N\) and \(\mathfrak {r}_s\) of Propositions 2.2 and 2.3, respectively. Then \(\mathcal {J}_{1^N;\mathfrak {r}_s}(\lambda )\) vanishes unless \(\lambda \in \mathbb {Y}^N\), and in the latter case we have

Next, we consider limits of the measures \(\mathcal {J}_{1^N;\mathfrak {r}_s}\) under a diffusive rescaling of \(s\) and \(\lambda \). Define the open Weyl chamber \({\mathcal {W}^N}=\{y\in \mathbb {R}^N:y_1< y_2 <\cdots < y_N\}\) and let \(\overline{\mathcal {W}^N}\) be its closure.

Proposition 2.9

Fix some \(N\in \mathbb {N}\). Then under the rescaling

the measures \(\mathcal {J}_{1^N;\mathfrak {r}_s}\) converge weakly in the limit \(\varepsilon \rightarrow 0\) to the probability measure with density

on the closed Weyl chamber \(\overline{\mathcal {W}^N}\) where

Note that we have chosen the notation is such a way that the row lengths \(\lambda _i\) are non-increasing, while the continuous coordinates \(y_i\) are non-decreasing in \(i\).

Proof of Proposition 2.9

We start by observing that (2.10), (2.11) define a probability density, namely that the total mass of the corresponding measure is equal to one. Indeed, the computation of the normalization constant is a particular case of the Selberg integral (see [20, 32, 44]). Since \(\mathcal {J}_{1^N;\mathfrak {r}_s}\) is also a probability measure, it suffices to prove that as \(\varepsilon \rightarrow 0\)

with the error term \(o(1)\) being uniformly small on compact subsets of \({\mathcal {W}^N}\). The product over boxes in the first row of \(\lambda \) in (2.9) is (with the convention \(\lambda _{N+1}=0\))

where we have written \(A(\varepsilon )\sim B(\varepsilon )\) for \(\lim _{\varepsilon \rightarrow 0} \frac{A(\varepsilon )}{B(\varepsilon )}=1\). Further,

Therefore, the factors coming from the first row of \(\lambda \) in (2.9) are asymptotically given by

Performing similar computations for the other rows we get

which finishes the proof. \(\square \)

We now proceed to the definition of probability measures on multilevel structures associated with Jack polynomials. Let \(\mathbb {GT}^{(N)}\) denote the set of sequences of Young diagrams \(\lambda ^1\prec \lambda ^2\prec \cdots \prec \lambda ^N\) such that \(\ell (\lambda ^i)\le i\) for every \(i\) and the Young diagrams interlace, that is,

The following definition is motivated by the property (2.3) of skew Jack polynomials.

Definition 2.10

A probability distribution \(P\) on arrays \((\lambda ^1\prec \dots \prec \lambda ^N)\in \mathbb {GT}^{(N)}\) is called a Jack–Gibbs distribution, if for any \(\mu \in \mathbb {Y}^N\) such that \(P(\lambda ^N=\mu )>0\) the conditional distribution of \(\lambda ^1\dots ,\lambda ^{N-1}\) given \(\lambda ^N=\mu \) is

Remark 2.11

When \(\theta =1\), (2.12) implies that the conditional distribution of \(\lambda ^1,\dots ,\lambda ^{N-1}\) is uniform on the polytope defined by the interlacing conditions.

One important example of a Jack–Gibbs measure is given by the following definition, see [7, 8, 11, 13] for a review of related constructions in the context of Schur and, more generally, Macdonald polynomials.

Definition 2.12

Given a Jack-positive specialization \(\rho \) such that \(\sum \nolimits _{k=1}^{\infty } \frac{p_k(\rho )}{k}<\infty \) we define the ascending Jack process \(\mathcal {J}^{asc}_{\rho ;N}\) as the probability measure on \(\mathbb {GT}^{(N)}\) given by

Remark 2.13

If \(\rho \) is the empty specialization, then \(\mathcal {J}^{asc}_{\rho ;N}\) assigns mass \(1\) to the single element of \(\mathbb {GT}^{(N)}\) such that \(\lambda ^i_j=0\), \(1\le i\le j\le N\).

Lemma 2.14

The formula (2.13) defines a Jack–Gibbs probability distribution. Furthermore, for any \(1\le k \le N\) the projection of \(\mathcal {J}^{asc}_{\rho ;N}\) to \((\lambda ^1,\dots ,\lambda ^k)\) is \(\mathcal {J}^{asc}_{\rho ;k}\), and the projection of \(\mathcal {J}^{asc}_{\rho ;N}\) to \(\lambda ^k\) is \(\mathcal {J}_{\rho ;1^k}\).

Proof

The formula (2.4) yields that \(J_{\lambda /\mu }(1^1)\) vanishes unless \(\mu \prec \lambda \), thus, the support of \(\mathcal {J}^{asc}_{\rho ;N}\) is indeed a subset of \(\mathbb {GT}^{(N)}\). Now we can sum (2.13) sequentially over \(\lambda ^1\), ..., \(\lambda ^{N-1}\) using (2.3). This proves that the projection of \(\mathcal {J}^{asc}_{\rho ;N}\) to \(\lambda ^N\) is \(\mathcal {J}_{\rho ;1^N}\). Thus, since \(\mathcal {J}_{\rho ;1^N}\) is a probability measure, so is \(\mathcal {J}^{asc}_{\rho ;N}\). Further, dividing \(\mathcal {J}^{asc}_{\rho ;N}(\lambda ^1,\dots ,\lambda ^N)\) by \(\mathcal {J}_{\rho ;1^N}(\lambda ^N)\) we get the conditional distribution (2.12), which proves that (2.13) is Jack–Gibbs.

To compute the projection onto \((\lambda ^1,\dots ,\lambda ^k)\) we sum (2.13) sequentially over \(\lambda ^N\),..., \(\lambda ^{k+1}\) using (2.7) and arrive at \(\mathcal {J}^{asc}_{\rho ;k}\). In order to further compute the projection to \(\lambda ^k\) we also sum over \(\lambda ^1,\dots ,\lambda ^{k-1}\) using (2.3) and get \(\mathcal {J}_{\rho ;1^k}\). \(\square \)

Define the (open) Gelfand–Tsetlin cone via

and let \(\overline{\mathcal {G}^N}\) be its closure. A natural continuous analogue of Definition 2.10 is:

Definition 2.15

An absolutely continuous (with respect to the Lebesgue measure) probability distribution \(P\) on arrays \(y\in {\mathcal {G}^N}\) is called \(\theta \)–Gibbs, if the conditional distribution of the first \(N-1\) levels \(y^k_i\), \(1\le i \le k\le N-1\) given the \(N\)–th level \(y^N_1,\dots ,y^N_N\) has density

To see that (2.14) indeed defines a probability measure, one can use a version of the Dixon-Anderson identity (see [2, 17], [20, Chapter 4]), which reads

where the integration is performed over the domain

Applying (2.15) sequentially to integrate the density in (2.14) with respect to the variable \(y^1_1\), then the variables \(y^2_1\), \(y^2_2\) and so on, we eventually arrive at \(1\).

Proposition 2.16

Let \(P(q)\), \(q=1,2,\dots \) be a sequence of Jack–Gibbs measures on \(\mathbb {GT}^{(N)}\), and for each \(q\) let \(\{\lambda ^k_i(q)\}\) be a \(P(q)\)–distributed random element of \(\mathbb {GT}^{(N)}\). Suppose that there exist two sequences \(m(q)\) and \(b(q)\) such that \(\lim _{q\rightarrow \infty } b(q)=\infty \) and as \(q\rightarrow \infty \) the \(N\)–dimensional vector

converges weakly to a random vector whose distribution is absolutely continuous with respect to the Lebesgue measure. Then the whole \(N(N+1)/2\)–dimensional vector

also converges weakly and its limiting distribution is \(\theta \)–Gibbs.

Proof

Since we deal with probability distributions converging to another probability distribution, it suffices to check that the quantity in (2.12) (written in the rescaled and reordered coordinates \(y^j_i=\frac{\lambda _{j+1-i}^j-m(q)}{b(q)}\)) converges to (2.14), uniformly on compact subsets of \({\mathcal {G}^N}\).

\(J_{\lambda /\mu }(1^1)\) can be evaluated according to the identity (2.4). Thus, with the notation \( f(\alpha )=\frac{\Gamma (\alpha +1)}{\Gamma (\alpha +\theta )}\) we have

The asymptotics \(f(\alpha )\sim \alpha ^{1-\theta }\) as \(\alpha \rightarrow \infty \) shows

It remains to analyze the asymptotics of \(J_{\mu }(1^N)\) in the denominator of (2.12). To this end, we use the expression for \(J_{\mu }(1^N)\) in Proposition 2.2 and recall that \(\mu \) can be identified with \(\lambda ^N\) to find that the product over the boxes in the first row of \(\mu \) asymptotically (in the limit \(q\rightarrow \infty \)) behaves as

Performing the same computations for the other rows we find that, as \(q\rightarrow \infty \),

One obtains the desired convergence to (2.14) by putting together the asymptotics of the factors in (2.12) and multiplying the result by the term \(b(q)^{N(N-1)/2}\) coming from the space rescaling. \(\square \)

As a combination of Propositions 2.9 and 2.16 we obtain the following statement.

Corollary 2.17

Fix some \(N\in \mathbb {N}\). Then, under the rescaling

the measures \(\mathcal {J}^{asc}_{\mathfrak {r}_s;N}\) converge weakly in the limit \(\varepsilon \rightarrow 0\) to the probability measure on the Gelfand–Tsetlin cone \({\mathcal {G}^N}\) with density

where

Note that the probability measure of (2.16) is precisely the Hermite \(\beta =2\theta \) corners process with variance \(t\) of Definition 1.1.

Remark 2.18

When \(\theta =1\), the factors \((y_j^n-y_i^n)^{2-2\theta }\) and \(|y^n_a-y^{n+1}_b|^{\theta -1}\) in (2.16) disappear, and the conditional distribution of \(y^1, y^2,\dots ,y^{N-1}\) given \(y^N\) becomes uniform on the polytope defined by the interlacing conditions. This distribution is known to be that of eigenvalues of corners of a random Gaussian \(N\times N\) Hermitian matrix sampled from the Gaussian Unitary Ensemble (see e.g. [4]). Similarly, for \(\theta =1/2\) and \(\theta =2\) one gets the joint distribution of the eigenvalues of corners of the Gaussian Orthogonal Ensemble and the Gaussian Symplectic Ensemble, respectively (see e.g. [33], [35, Section 4]).

2.3 Dynamics related to Jack polynomials

We are now ready to construct the stochastic dynamics related to Jack polynomials. Similar constructions for Schur, \(q\)-Whittacker and Macdonald polynomials can be found in [6, 7, 9, 11].

Definition 2.19

Given two specializations \(\rho ,\rho '\) define their union \((\rho ,\rho ')\) through the formulas

where \(p_k\), \(k\ge 1\) are the Newton power sums as before.

Let \(\rho \) and \(\rho '\) be two Jack-positive specializations such that \(H_\theta (\rho ;\rho ')<\infty \). Define matrices \(p^{\uparrow }_{\lambda \rightarrow \mu }\) and \(p^{\downarrow }_{\lambda \rightarrow \mu }\) with rows and columns indexed by Young diagrams as follows:

The next three propositions follow from (2.3), (2.6), (2.7) (see also [6, 7, 11] for analogous results in the cases of Schur, \(q\)-Whittacker and Macdonald polynomials).

Proposition 2.20

The matrices \(p^{\uparrow }_{\lambda \rightarrow \mu }\) and \(p^{\downarrow }_{\lambda \rightarrow \mu }\) are stochastic, that is, all matrix elements are non-negative, and for every \(\lambda \in \mathbb {Y}\) we have

Proposition 2.21

For any \(\mu \in \mathbb {Y}\) and any Jack-positive specializations \(\rho _1,\rho _2,\rho _3\) we have

Proposition 2.22

The following commutation relation on matrices \(p^\uparrow _{\lambda \rightarrow \mu }\) and \(p^\downarrow _{\lambda \rightarrow \mu }\) holds:

Let \(X^N_{disc}(s)\), \(s\ge 0\) denote the continuous time Markov chain on \(\mathbb {Y}^N\) with transition probabilities given by \(p^{\uparrow }(1^N;\mathfrak {r}_s)\), \(s\ge 0\) (and arbitrary initial condition \(X^N_{disc}(0)\in \mathbb {Y}^N\)). We record the jump rates of \(X^N_{disc}\) for later use.

Proposition 2.23

The jump rates of the Markov chain \(X^N_{disc}\) on \(\mathbb {Y}^N\) are given by

Explicitly, for \(\mu =\lambda \sqcup (i,j)\) we have

Remark 2.24

While the jump rates \(q_{\lambda \rightarrow \mu }\) are explicit, we are not aware of any fairly simple formulas for the transition probabilities \(p^{\uparrow }(1^N;\mathfrak {r}_s)\), \(s\ge 0\) of \(X^N_{disc}\).

Proof of Proposition 2.23

The formula (2.20) is readily obtained from the definition of the transition probabilities in (2.18). In order to get (2.21) we note that \(J_\lambda (1^N)\) and \(J_\mu (1^N)\) have been computed in Proposition 2.2. Further, observe that \(\widetilde{J}_{\mu /\lambda }\) is a symmetric polynomial of degree \(1\), thus, it is proportional to the sum of indeterminates \(p_1\). Therefore, \(\widetilde{J}_{\mu /\lambda }(1^1)=\widetilde{J}_{\mu /\lambda }(\mathfrak {r}_1)\) and we can use the formula (2.5) to evaluate it.

The following proposition will prove useful below.

Proposition 2.25

The process \(|X^N_{disc}|\,{:=}\,\sum _{i=1}^N (X^N_{disc})_i\) is a Poisson process with intensity \(N\theta \).

Proof

According to Proposition 2.23 the process \(|X^N_{disc}|\) increases by \(1\) with rate

In order to evaluate the latter sum we use Pieri’s rule for Jack polynomials, which is a formula for the product of a Jack polynomial with the sum of indeterminates and in our case reads

The proof of Pieri’s fule (for Macdonald polynomials, with the case of Jack polynomials being given by the limit transition \(q\rightarrow 1\), \(t=q^{\theta }\)) can be found in [31, (6.24) and Section 10 in Chapter VI]. Note that in the formulas of [31] the notation \(\varphi _{\mu /\lambda }\) is used for \(\widetilde{J}_{\mu /\lambda }(\mathfrak {r}_1)=\widetilde{J}_{\mu /\lambda }(1^1)\) and the \(g_1\) there is proportional to our \(p_1\). \(\square \)

Proposition 2.21 implies the following statement.

Proposition 2.26

Suppose that the initial condition \(X^N_{disc}(0)\) is the empty Young diagram, that is, \(\lambda _1=\lambda _2=\cdots =\lambda _N=0\). Then, for any fixed \(s>0\), the law of \(X^N_{disc}(s)\) is given by \(\mathcal {J}_{1^N;\mathfrak {r}_s}\) which was computed explicitly in Proposition 2.8.

Our next goal is to define a stochastic dynamics on \(\mathbb {GT}^{(N)}\). The construction we use is parallel to those of [6, 7, 9–11]; it is based on an idea going back to [16], which allows to couple the dynamics of Young diagrams of different sizes. We start from the degenerate discrete time dynamics \(\lambda ^0(n)=\emptyset \), \(n\in \mathbb {N}_0\) and construct the discrete time dynamics of \(\lambda ^1,\lambda ^2,\ldots ,\lambda ^N\) inductively. Given \(\lambda ^{k-1}(n)\), \(n\in \mathbb {N}_0\) and a Jack-positive specialization \(\rho \) we define the process \(\lambda ^k(n)\), \(n\in \mathbb {N}_0\) with a given initial condition \(\lambda ^k(0)\) satisfying \(\lambda ^{k-1}(0)\prec \lambda ^k(0)\) as follows. We let the distribution of \(\lambda ^k(n+1)\) depend only on \(\lambda ^k(n)\) and \(\lambda ^{k-1}(n+1)\) and be given by

Carrying out this procedure for \(k=1,2,\ldots ,N\) we end up with a discrete time Markov chain \(\hat{X}^{multi}_{disc}(n;\rho )\), \(n\in \mathbb {N}_0\) on \(\mathbb {GT}^{(N)}\).

Definition 2.27

Define the continuous time dynamics \(X^{multi}_{disc}(s)\), \(s\ge 0\) on \(\mathbb {GT}^{(N)}\) with an initial condition \(X^{multi}_{disc}(0)\in \mathbb {GT}^{(N)}\) as the distributional limit

where all dynamics \(\hat{X}^{multi}_{disc}(\cdot ;\mathfrak {r}_\varepsilon )\) are started from the initial condition \(X^{multi}_{disc}(0)\) and the specialization \(\mathfrak {r}_\varepsilon \) is defined as in Proposition 2.3.

Remark 2.28

Alternatively, we could have started from the specialization \(\rho \) with a single \(\alpha \) parameter \(\alpha _1=\varepsilon \) and we would have arrived at the same continuous time dynamics. Analogous constructions of the continuous time dynamics in the context of Schur and Macdonald polynomials can be found in [9] and [7].

Note that when \(\lambda \prec \kappa \) the term \(\widetilde{J}_{\kappa /\lambda }(\mathfrak {r}_\varepsilon )\) is of order \(\varepsilon ^{|\kappa |-|\lambda |}\) as \(\varepsilon \rightarrow 0\). Therefore, the leading order term in the sum on the right-hand side of (2.22) comes from the choice \(\kappa =\lambda \) unless that \(\kappa \) violates \(\mu \prec \kappa \), in which case the leading term corresponds to taking \(\kappa =\mu \). Moreover, the first-order terms come from the choices \(\kappa =\lambda \sqcup \square \) and the resulting terms turn into the jump rates of the continuous time dynamics. Summing up, the continuous time dynamics \(X^{multi}_{disc}(s)\), \(s\ge 0\) looks as follows: given the trajectory of \(\lambda ^{k-1}\), a box \(\square \) is added to the Young diagram \(\lambda ^k\) at time \(t\) at the rate

In particular, the latter jump rates incorporate the following push interaction: if the coordinates of \(\lambda ^{k-1}\) evolve in a way which violates the interlacing condition \(\lambda ^{k-1}\prec \lambda ^{k}\), then the appropriate coordinate of \(\lambda ^k\) is pushed in the sense that a box is added immediately to the Young diagram \(\lambda ^k\) to restore the interlacing. The factors on the right-hand side of (2.23) are explicit and given by (2.4) and (2.5). Simulations of the continuous time dynamics for \(\theta =0.5\) and \(\theta =2\) can be found at [25].

The following statement is based on the results of Propositions 2.20, 2.21, 2.22 and can be proved by the argument of [9, Sections 2.2, 2.3], see also [6, 7, 11].

Proposition 2.29

Suppose that \(X^{multi}_{disc}(s)\), \(s\ge 0\) is started from a random initial condition with a Jack–Gibbs distribution. Then:

-

the restriction of \(X^{multi}_{disc}(s)\) to level \(N\) coincides with \(X^{N}_{disc}(s)\), \(s\ge 0\) started from the restriction to level \(N\) of the initial condition \(X^{multi}_{disc}(0)\);

-

the law of \(X^{multi}_{disc}(s)\) at a fixed time \(s>0\) is a Jack–Gibbs distribution. Moreover, if \(X^{multi}_{disc}(0)\) has law \(\mathcal {J}^{asc}_{\rho ;N}\), then \(X^{multi}_{disc}(s)\) has law \(\mathcal {J}^{asc}_{\rho ,\mathfrak {r}_s;N}\).

Remark 2.30

In fact, there is a way to generalize Proposition 2.29 to a statement describing the restriction of our multilevel dynamics started from Jack–Gibbs initial conditions to any monotone space-time path (meaning that we look at level \(N\) for some time, then at level \(N-1\) and so on). We refer the reader to [9, Proposition 2.5] for a precise statement in the setting of multilevel dynamics based on Schur polynomials.

3 Convergence to Dyson Brownian motion

The goal of this section is to prove that the Markov chain \(X^N_{disc}(s)\) converges in the diffusive scaling limit to a Dyson Brownian Motion.

To start with, we recall the existence and uniqueness result for Dyson Brownian motions with \(\beta >0\) (see e.g. [3, Proposition 4.3.5] for the case \(\beta \ge 1\) and [14, Theorem 3.1] for the case \(0<\beta <1\)).

Proposition 3.1

For any \(N\in \mathbb {N}\) and \(\beta >0\), the system of SDEs

\(i=1,2,\ldots ,N\), with \(W_1,W_2,\ldots ,W_N\) being independent standard Brownian motions, has a unique strong solution taking values in the Weyl chamber \( \overline{\mathcal {W}^N}\) for any initial condition \(X(0)\in \overline{\mathcal {W}^N}\). Moreover, for all initial conditions, the stopping time

is infinite with probability one if \(\beta \ge 1\) and finite with positive probability if \(0<\beta <1\).

We write \(D^N=D([0,\infty ),\mathbb {R}^N)\) for the space of right-continuous paths with left limits taking values in \(\mathbb {R}^N\) and endow it with the usual Skorokhod topology (see e.g. [19]).

Theorem 3.2

Fix \(\theta \ge 1/2\) and let \(\varepsilon >0\) be a small parameter. Let the \(N\)–dimensional stochastic process \(Y^N_\varepsilon (t)=(Y^N_\varepsilon (t)_1,\dots ,Y^N_\varepsilon (t)_N)\) be defined through

where \((X^N_{disc})_i\) is \(i\)-th coordinate of the process \(X^N_{disc}\). Suppose that, as \(\varepsilon \rightarrow 0\), the initial conditions \(Y^N_\varepsilon (0)\) converge to a point \(Y(0)\) in the interior of \(\overline{\mathcal {W}^N}\). Then the process \(Y^N_{\varepsilon }(t)\) converges in the limit \(\varepsilon \downarrow 0\) in law on \(D^N\) to the \(\beta =2\theta \)–Dyson Brownian motion, that is, to the unique strong solution of (3.1) with \(\beta =2\theta \).

Remark 3.3

We believe that Theorem 3.2 should hold for any \(\theta >0\). However, the case \(0<\theta <1/2\) presents additional technical challenges, since there the stopping time \(\tau \) of (3.2) may be finite.

Let us first present the plan of the proof of Theorem 3.2. In Step 1 we study the asymptotics of the jump rates of \(X^N_{disc}\) in the scaling limit of Theorem 3.2. In Step 2 we prove the tightness of the processes \(Y^N_\varepsilon \) as \(\varepsilon \rightarrow 0\). In Step 3 we show that subsequential limits of that family solve the SDE (3.1). This fact and the uniqueness of the solution to (3.1) yield together Theorem 3.2.

3.1 Step 1: Rates

\(Y^N_\varepsilon (t)\) is a continuous time Markov process with state space \(\overline{\mathcal {W}^N}\), a (constant) drift of \(-\varepsilon ^{-1/2}\) in each coordinate and jump rates

where \(\hat{y}\), \(\hat{y'}\) are the vectors (viewed as Young diagrams) obtained from \(y\), \(y'\) by reordering the components in decreasing order, and the intensities \(q_{\lambda \rightarrow \mu }\) are given in Proposition 2.23. If we write \(y'\approx _\varepsilon y\) for vectors \(y'\), \(y\) which differ in exactly one coordinate with the difference being \(\varepsilon ^{1/2}\), then \(p^N_\varepsilon (y,y',t)=0\) unless \(y'\approx _\varepsilon y\). As we will see, in fact, \(p^N_\varepsilon (y,y',t)\) does not depend on \(t\).

Now, take two sequences \(y'\approx _\varepsilon y\) with \(y'_{N+1-i}-y_{N+1-i}=\varepsilon ^{1/2}\) for some fixed \(i\in \{1,2,\ldots ,N\}\). Define Young diagrams \(\lambda \) and \(\mu \) via \(\lambda _l=\frac{t}{\theta }\,\varepsilon ^{-1}+y_{N+1-l}\,\varepsilon ^{-1/2}\), \(\mu _l=\frac{t}{\theta }\,\varepsilon ^{-1}+y'_{N+1-j}\,\varepsilon ^{-1/2}\). Then \(\mu _i=\lambda _i+1\) and \(\mu _l=\lambda _l\) for \(j\ne i\). Also, set \(j=\lambda _i+1\), so that \(\mu =\lambda \sqcup (i,j)\).

Lemma 3.4

For sequences \(y'\approx _\varepsilon y\) differing in the \((N+1-i)\)-th coordinate as above, we have in the limit \(\varepsilon \rightarrow 0\):

where the error \(O(1)\) is uniform on compact subsets of the open Weyl chamber \(\mathcal {W}^N\).

Proof

Using Proposition 2.23 we have

Now, for any \(y\) the corresponding Young diagram \(\lambda \) has \(\lambda _N\) columns of length \(N\), \((\lambda _{N-1}-\lambda _N)\) columns of length \((N-1)\), \((\lambda _{N-2}-\lambda _{N-1})\) columns of length \((N-2)\) etc. Therefore, the latter expression for \(p^N_\varepsilon (y,y',t)\) can be simplified to

\(\square \)

with the remainder \(O(1)\) being uniform over \(y\) such that \(|y_{N+1-i}-y_{N+1-j}|>\delta \) for \(j\ne i\) and a fixed \(\delta >0\).

3.2 Step 2: Tightness

Let us show that the family \(Y^N_\varepsilon \), \(\varepsilon \in (0,1)\) is tight on \(D^N\). To this end, we aim to apply the necessary and sufficient condition for tightness of [19, Corollary 3.7.4] and need to show that, for any fixed \(t\ge 0\), the random variables \(Y^N_\varepsilon (t)\) are tight on \(\mathbb {R}^N\) as \(\varepsilon \downarrow 0\) and that for every \(\Delta >0\) and \(T>0\) there exists a \(\delta >0\) such that

We first explain how to obtain the desired controls on \((Y^N_\varepsilon (t))_+\) (the vector of positive parts of the components of \(Y^N_\varepsilon (t)\)) and

To control \((Y^N_\varepsilon (t))_+\) and the expressions in (3.6) we proceed by induction over the index of the coordinates in \(Y^N_\varepsilon \). For the first coordinate \((Y^N_\varepsilon )_1\) the explicit formula (3.4) in Step 1 shows that the jump rates of the process \((Y^N_\varepsilon )_1\) are bounded above by \(\varepsilon ^{-1}\). Hence, a comparison with a Poisson process with jump rate \(\varepsilon ^{-1}\), jump size \(\varepsilon ^{1/2}\) and drift \(-\varepsilon ^{-1/2}\) shows that \(((Y^N_\varepsilon )_1(t))_+\) and the expression in (3.6) for \(i=1\) behave in accordance with the conditions of Corollary 3.7.4 in [19] as stated above. Next, we consider \((Y^N_\varepsilon )_i\) for some \(i\in \{2,3,\ldots ,N\}\). In this case, the formula (3.4) in Step 1 shows that, whenever the spacing \((Y^N_\varepsilon )_i-(Y^N_\varepsilon )_{i-1}\) exceeds \(\Delta /3\), the jump rate of \((Y^N_\varepsilon )_i\) is bounded above by

Let us show that \((Y^N_\varepsilon )_i\) can be coupled with a Poisson jump process \(R_\varepsilon \) with jump size \(\varepsilon ^{1/2}\), jump rate given by the right-hand side of the last inequality and drift \(-\varepsilon ^{-1/2}\), so that, whenever \((Y^N_\varepsilon )_i-(Y^N_\varepsilon )_{i-1}\) exceeds \(\Delta /3\) and \((Y^N_\varepsilon )_i\) has a jump to the right, the process \(R_\varepsilon \) has a jump to the right as well.

To do this, recall that (by definition) the law of the jump times of \(Y^N_\varepsilon \) can be described as follows. We take \(N\) independent exponential random variables \(a_1,\dots ,a_N\) with means \(r_j(Y^N_\varepsilon )\), \(j=1,\dots ,N\) defined by (3.3) with \(y\) and \(y'\) differing in the \(j\)-th coordinate. If we let \(k\) be the index for which \(a_k=\min (a_1,\dots ,a_N)\), then at time \(a_k\) the \(k\)-th particle (that is, \((Y^N_\varepsilon )_k\)) jumps. After this jump we repeat the procedure again to determine the next jump.

Let \(M\) denote the right-hand side of (3.7) and consider in each time interval between the jumps of \(Y^N_\varepsilon \) an additional independent exponential random variable \(b\) with mean \(M-r_i(Y^N_\varepsilon )\) if \((Y^N_\varepsilon )_i-(Y^N_\varepsilon )_{i-1}\) exceeds \(\Delta /3\) and with mean \(M\) otherwise. Now, instead of considering \(\min (a_1,\dots ,a_N)\), we consider \(\min (a_1,\dots ,a_N,b)\). If the minimum is given by \(b\), then no jump happens and the whole procedure is repeated. Now, we define the jump times of process \(R_\varepsilon \) to be all times when the clock of the \(i\)-th particle rings provided that \((Y^N_\varepsilon )_i-(Y^N_\varepsilon )_{i-1}\) exceeds \(\Delta /3\), and also all times when the auxilliary random variable \(b\) constitutes the minimum. One readily checks that \(R_\varepsilon \) is given by a Poisson jump process of constant intensity \(M\) and drift \(-\varepsilon ^{-1/2}\).

We further use the convergence of \(R_\varepsilon \) to Brownian motion with drift \(3(i-1)\theta /\Delta \), which implies the tightness and conditions (3.5) are satisfied for \(R_\varepsilon \). Now we get the desired control for \(((Y^N_\varepsilon )_i(t))_+\) and the quantities in (3.6) by invoking the induction hypothesis when spacing \((Y^N_\varepsilon )_i-(Y^N_\varepsilon )_{i-1}\) is less than \(\Delta /3\) and by comparison with \(R_\varepsilon \) when the spacing is larger.

It remains to observe that \((Y^N_\varepsilon (t))_-\) (the vector of negative parts of the components of \(Y^N_\varepsilon \)) and

can be dealt with in a similar manner (but considering the rightmost particle first and moving from right to left). Together these controls yield the conditions of [19, Corollary 3.7.4].

We also note that, since the maximal size of the jumps tends to zero as \(\varepsilon \downarrow 0\), any limit point of the family \(Y^N_\varepsilon \), \(\varepsilon \in (0,1)\) as \(\varepsilon \downarrow 0\) must have continuous paths (see e.g. [19, Theorem 3.10.2]).

Remark 3.5

Note that in the proof of the tightness result the condition \(\theta \ge 1/2\) is not used.

3.3 Step 3: SDE for subsequential limits

Throughout this section we let \(Y^N\) be an arbitrary limit point of the family \(Y^N_\varepsilon \) as \(\varepsilon \downarrow 0\). Our goal is to identify \(Y^N\) with the solution of (3.1). We pick a sequence of \(Y^N_\varepsilon \) which converges to \(Y^N\) in law, and by virtue of the Skorokhod Embedding Theorem (see e.g. Theorem 3.5.1 in [18]) may assume that all processes involved are defined on the same probability space and that the convergence holds in the almost sure sense. In the rest of this section all limits \(\varepsilon \rightarrow 0\) are taken along this sequence.

Let \(\mathcal {F}\) denote the set of all infinitely differentiable functions on \(\overline{\mathcal {W}^N}\) whose support is a compact subset of \(\mathcal {W}^N\). Define

where \(\partial \overline{\mathcal {W}^N}\) denotes the boundary of \(\overline{\mathcal {W}^N}\) and \(\mathrm{dist}\) stands for the \(L^\infty \) distance:

Clearly, \({\mathcal {F}} =\bigcup _{\delta >0} {\mathcal {F}}_\delta \).

For functions \(f\in {\mathcal {F}}\) we consider the processes

Here, \(f_{y_i}\) (\(f_{y_iy_i}\) resp.) stands for the first (second resp.) partial derivative of \(f\) with respect to \(y_i\).

In Step 3a we show that the processes in (3.8) are martingales and identify their quadratic covariations. In step 3b we use the latter results to derive the SDEs for the processes \(Y^N_1\),..., \(Y^N_N\).

Step 3a. We now fix an \(f\in \mathcal {F}_\delta \) for some \(\delta >0\) and consider the family of martingales

Lemma 3.4 implies that the integrand in (3.9) behaves asymptotically as

where \(b_{i}(y)=\sum _{j\ne i} \frac{\theta }{y_{i}-y_j}\). Note also that, for any fixed function \(f\in \mathcal {F}\), the error terms can be bounded uniformly for all sequences \(y'\approx _\varepsilon y\) as above, since \(f\) and all its partial derivatives are bounded and vanish in a neighborhood of the boundary \(\partial \overline{{\mathcal {W}}^N}\) of \(\overline{{\mathcal {W}}^N}\).

By taking the limit of the corresponding martingales \(M^f_\varepsilon \) for a fixed \(f\in \mathcal {F}\) and noting that their limit \(M^f\) can be bounded uniformly on every compact time interval, we conclude that \(M^f\) must be a martingale as well.

In order to proceed further we recall the following definitions. For a real-valued function \(f\) defined on an interval \([0,T]\) (\(T\) can be \(+\infty \) here), its quadratic variation \(\langle f\rangle (t)\) is defined for \(0\le t\le T\) via

where \(\mathfrak {P}\) ranges over all ordered collections of points \(0=t_0<t_1<\dots <t_k=t\) with \(k\) being arbitrary, and \(||\mathfrak {P}||=\min \nolimits _{1\le i\le k} (t_i-t_{i-1})\). Similarly, for two function \(f\) and \(g\) their quadratic covariation \(\langle f,g\rangle (t)\) is defined as

Lemma 3.6

For any two functions \(g,h\in {\mathcal {F}}\), the quadratic covariation of \(M^{g}\) and \(M^{h}\) is given by

Proof

Due to the polarization identity

it is enough to consider the case \(g=h\), that is, to determine the quadratic variation \(\langle M^g\rangle (t)\).

We proceed by finding the limit of the quadratic variation processes \(\langle M^g_\varepsilon \rangle \) of \(M^g_\varepsilon \) as \(\varepsilon \rightarrow 0\). For each \(\varepsilon >0\) and \(j=1,\dots ,N\) define \(\mathcal {S}^{j}_{\varepsilon }\) as the (random) set of all times when the \(j\)-th coordinate of \(Y^N_\varepsilon \) jumps. Note that the sets \(\mathcal {S}^{j}_{\varepsilon }\) are pairwise disjoint and their union \(\bigcup _{j=1}^N S^j_\varepsilon \) is a Poisson point process of intensity \(\varepsilon ^{-1}N\) (see Proposition 2.25).

Recall that the quadratic variation process \(\langle M^g_\varepsilon \rangle (t)\) of \(M^g_\varepsilon \) is given by the sum of squares of the jumps of the process \(M^g_\varepsilon \) (see e.g. [15, Proposition 8.9]) and conclude

with a uniform error term \(O(\varepsilon )\). Suppose that \(g\in \mathcal {F}_{2\delta }\) and consider new \(N\) pairwise disjoint sets \(\widehat{\mathcal {S}}^{j}_{\varepsilon }\), \(j=1,\dots ,N\) satisfying \(\bigcup _{j=1}^N \widehat{\mathcal {S}}^{j}_{\varepsilon } = \bigcup _{j=1}^N {\mathcal {S}}^{j}_{\varepsilon }\) and defined through the following procedure. Take any \(r\in \bigcup _{j=1}^N S^{j}_{\varepsilon }\) and suppose that \(r\in \mathcal {S}^{k}_{\varepsilon }\). If \(\mathrm{dist}(Y^N_\varepsilon (r),\partial \overline{\mathcal {W}^N})\ge \delta \), then put \(r\in \widehat{\mathcal {S}}^k_\varepsilon \). Otherwise, take an independent random variable \(\kappa \) sampled from the uniform distribution on the set \(\{1,2,\dots ,N\}\) and put \(r\in \widehat{\mathcal {S}}^\kappa _\varepsilon \). The definition implies that, for small \(\varepsilon \),

with a uniform error term \(O(\varepsilon )\). Now, take any two reals \(a<b\). We claim that the sets \(\widehat{\mathcal {S}}^{k}_{\varepsilon }\) satisfy the following property almost surely:

Indeed, the Law of Large Numbers for Poisson Point Processes implies

On the other hand, Lemma 3.4 implies the following uniform asymptotics as \(\varepsilon \rightarrow 0\):

where \(\Theta _{<t}\) is the \(\sigma \)-algebra generated by the point process \(\widehat{\mathcal {S}}^{k}_\varepsilon \), \(j=1,\dots ,N\) up to time \(t\). Therefore, the conditional distribution of \(|\widehat{\mathcal {S}}^{k}_{\varepsilon } \cap [a,b]|\) given \(|(\bigcup _{k=1}^N \widehat{\mathcal {S}}^{k}_{\varepsilon }) \cap [a,b]|\) can be sandwiched between two binomial distributions with parameters \(\frac{1}{N}\pm C(\varepsilon )\), where \(\lim _{\varepsilon \rightarrow 0} C(\varepsilon )=0\) (see e.g. [30, Lemma 1.1]). Now, (3.14) and the Law of Large Numbers for the Binomial Distribution imply (3.13).

It follows that the sums in (3.12) approximate the corresponding integrals and we obtain

Note that for each \(g\in {\mathcal {F}}\), both \(M^g_\varepsilon (t)^2\) and \(\langle M^g_\varepsilon \rangle (t)\) are uniformly integrable on compact time intervals (this can be shown for example by another comparison with a Poisson jump process). Further, one of the properties of the quadratic variation (see e.g. [19, Chapter 2, Proposition 6.1]) is that \(M^g_\varepsilon (t)^2-\langle M^g_\varepsilon \rangle (t)\) is a martingale. Sending \(\varepsilon \rightarrow 0\) (see e.g. [19, Chapter 7, Problem 7] for a justification) it follows that the process

is a martingale. On the other hand, since \(M^g(t)\) is continuous in \(t\) (see the end of Step 2), its quadratic variation \(\langle M^g\rangle (t)\) is a unique increasing predictable process such that \(M^g(t)-\langle M^g\rangle (t)\) is a martingale (see [19, Chapter 2, Section 6]). We conclude that

\(\square \)

Step 3b. We are now ready to derive the SDEs for the processes \(Y_1^N,\dots ,Y_N^N\). Define the stopping times \(\tau _\delta \), \(\delta >0\) by

Our next aim is to derive the stochastic integral equations for the processes

Let \(f_j\), \(j=1,\dots ,N\), be an arbitrary function from \({\mathcal {F}}\) such that \(f_j(\mathbf{y})=y_j\) for \(\mathbf{y}\) inside the box \(|y_j|\le 1/\delta \) and such that \(\mathrm{dist}(\mathbf{y},\partial \overline{\mathcal {W}^N})\ge \delta \). The results of Step 3a imply that the processes \(M^{{f}_j}\) are martingales. Note that the definition of stopping times \(\tau _\delta \) imply that on the time interval \([0,\tau _\delta ]\) the processes \(M^{{f}_j}\) and \(M^{y_j}\) almost surely coincide. At this point we can use Lemma 3.6 to conclude that

are martingales with quadratic variations given by \(t\wedge \tau _\delta \) and with the quadratic covariation between any two of them being zero. We may now apply the Martingale Representation Theorem in the form of [29, Theorem 3.4.2] to deduce the existence of independent standard Brownian motions \(W_1,\ldots ,W_N\) (possibly on an extension of the underlying probability space) such that

To finish the proof of Theorem 3.2 it remains to observe that Proposition 3.1 implies

with probability one.

Remark 3.7

An alternative way to derive the system of SDEs for the components of \(Y^N\) is to use [29, Chapter 5, Proposition 4.6]. We will employ this strategy in Sect. 5.3 due to the lack of a straightforward generalization of Lemma 3.6 to the multilevel setting.

3.4 Zero initial condition

A refinement of the proof of Theorem 3.2 involving Proposition 2.9 allows us to deal with the limiting process which is started from \(0\in \overline{{\mathcal {W}}^N}\).

Corollary 3.8

Fix \(\theta \ge 1\). In the notations of Theorem 3.2 and assuming the convergence of the initial conditions to \(0\in \overline{\mathcal {W}^N}\), the process \(X^N_{disc}\) converges in the limit \(\varepsilon \downarrow 0\) in law on \(D^N\) to the \(\beta =2\theta \)–Dyson Brownian motion started from \(0\in \overline{{\mathcal {W}}^N}\), that is, to the unique strong solution of (3.1) with \(\beta =2\theta \) and \(Y(0)=0\in \overline{{\mathcal {W}}^N}\).

Proof

Using Proposition 2.9 and arguing as in the proof of Theorem 3.2 one obtains the convergence of the rescaled versions of the process \(X^N_{disc}\) on every time interval \([t,\infty )\) with \(t>0\) to the solution of (3.1) starting according to the initial distribution of (2.10). Since (2.10) converges to the delta–function at the origin as \(t\rightarrow 0\), we identify the limit points of the rescaled versions of \(X^N_{disc}\) with the solution of (3.1) started from \(0\in \overline{{\mathcal {W}}^N}\). \(\square \)

4 Existence and uniqueness for multilevel DBM

The aim of this section is to prove an analogue of Proposition 3.1 for the multilevel Dyson Brownian motion.

Theorem 4.1

For any \(N\in \mathbb {N}\) and \(\theta > 1\) (that is, \(\beta =2\theta > 2\)), and for any initial condition \(X(0)\) in the interior of \(\overline{\mathcal {G}^N}\), the system of SDEs

with \(W_i^k\), \(1\le i\le k\le N\) being independent standard Brownian motions, possesses a unique weak solution taking values in the Gelfand–Tsetlin cone \(\overline{\mathcal {G}^N}\).

Proof

Given a stochastic process \(X(t)\) taking values in \(\overline{\mathcal {G}^N}\), for any fixed \(\delta >0\), let \(\widehat{\tau }_{\delta }(X)\) denote

that is, the first time when two particles on adjacent levels are at the distance of at most \(\delta \). Further, we define the stopping time \(\tau _\delta [X]\) as the first time when three particles on adjacent levels are at the distance of at most \(\delta \):

Figure 2 shows schematically the six possible triplets of nearby particles at time \(\tau _\delta \).

The following proposition will be proved in Sect. 4.1.

Proposition 4.2

For any \(N\in \mathbb {N}\), \(\delta >0\) and \(\theta > 1\) (that is, \(\beta =2\theta >2\)), and for any initial condition \(X(0)\) in the interior of \(\overline{\mathcal {G}^N}\) the system of stochastic integral equations

with \(W_i^k\), \(1\le i\le k\le N\) being independent standard Brownian motions, possesses a unique weak solution.

In view of Proposition 4.2 we can consider a product probability space which supports independent weak solutions of (4.2) for all \(\delta >0\) and all initial conditions in the interior of \(\overline{\mathcal {G}^N}\). Choosing a sequence \(\delta _l\), \(l\in \mathbb {N}\) decreasing to zero, we can define on this space a process \(X\) such that the law of \(X(t\wedge \tau _{\delta _1}[X])\), \(t\ge 0\) coincides with the law of the solution of (4.2) with \(\delta =\delta _1\) and initial condition \(X(0)\), the law of \(X((\tau _{\delta _1}[X]+t)\wedge \tau _{\delta _2}[X])\), \(t\ge 0\) is given by the law of the solution of (4.2) with \(\delta =\delta _2\) and initial condition \(X(\tau _{\delta _1[X]})\) etc. The uniqueness part of Proposition 4.2 now shows that, for each \(l\in \mathbb {N}\), the law of \(X(t\wedge \tau _{\delta _l[X]})\), \(t\ge 0\) is that of the weak solution of (4.2) with \(\delta =\delta _l\). Since the paths of \(X\) are continuous by construction and hence \(\lim _{l\rightarrow \infty } \tau _{\delta _l}[X]=\tau _0[X]\), we have constructed a weak solution of the system

with \(W_i^k\), \(1\le i\le k\le N\) being independent standard Brownian motions as before. In addition, we note that the law of the solution to (4.3) is uniquely determined. Indeed, for any \(\delta >0\), the process \(X\) stopped at time \(\tau _\delta \) would give a solution to (4.2). Uniqueness of the latter for any \(\delta >0\) now readily implies the uniqueness of the weak solution to (4.3). At this point, Theorem 4.1 is a consequence of the following statement which will be proved in Sect. 4.2.

Proposition 4.3

Suppose that \(X(0)\) lies in the interior of the cone \(\overline{\mathcal {G}^N}\) and let \(X\) be a solution to (4.3).

-

(a)

If \(\theta > 1\), then almost surely \(\tau _0[X]=\infty .\)

-

(b)

If \(\theta \ge 2\), then almost surely \( \widehat{\tau }_{0}[X]=\infty .\)

4.1 Proof of Proposition 4.2

Our proof of Proposition 4.2 is based on a Girsanov change of measure that will dramatically simplify the SDE in consideration. We refer the reader to [29, Section 3.5] and [29, Section 5.3] for general information about Girsanov’s theorem and weak solutions of SDEs.

We start with the uniqueness part. Fix \(N\in \{1,2,\ldots \}\), \(\theta \ge 1\), \(\delta >0\), and let \(X\) be a solution of (4.2). Let \(\mathcal I\) denote the set of \(N(N+1)/2\) pairs \((k,i)\), \(k=1,\dots ,N\), \(i=1,\dots ,k\) which represent different coordinates (particles) in the process \(X\). We will subdivide \(\mathcal I\) into disjoint singletons and pairs of neighboring particles, that is, pairs of the form \(((k,i),(k-1,i))\) or \(((k,i),(k-1,i-1))\). We call any such subdivision a pair-partition of \(\mathcal I\). An example is shown in Fig. 3.

Lemma 4.4

There exists a sequence of stopping times \(0=\sigma _0\le \sigma _1\le \sigma _2\le \dots \le \tau _\delta [X]\) and (random) pair–partitions \(A_1, A_2,\dots \) such that

-

for any \(n=1,2,\dots \), any \(\sigma _{n-1}\le t < \sigma _n\), any two pairs \((k,i)\), \((k',i')\), \(1\le i\le k\le N\), \(1\le i'\le k'\le N\), \(|k-k'|\le 1\), we have \(|X^{k}_i(t)-X^{k'}_{i'}(t)|\ge \delta /2\) unless the pair \(((k,i),(k',i'))\) is one of the pairs of the pair-partition \(A_n\), and

-

for any \(n=1,2,\dots \), either \(\sigma _n=\tau _\delta \) or \(|X^k_i(\sigma _{n+1})-X^k_i(\sigma _n)|\ge \delta /2\) for some \((k,i)\).

Proof

Define the (random) sets \({\mathcal {B}}^k_i\), \({\mathcal {D}}^k_i\) by setting

Note that these sets are closed due to the continuity of the trajectories of \(X\), which in turn is a consequence of (4.2). Define \(A(t;\delta )\) as a pair-partition such that pair \(((k,i),(k-1,i))\) belongs to \(A(t;\delta )\) iff \(t\in {\mathcal {B}}^k_i\) and pair \(((k,i),(k-1,i-1))\) belongs to \(A(t;\delta )\) iff \(t\in {\mathcal {D}}^k_i\). Define \(A(t,\delta /2)\) similarly. The definition of \(\tau _\delta [X]\) implies that such pair-partitions \(A(t;\delta /2)\subset A(t;\delta )\) are well-defined for any \(0\le t\le \tau _\delta [X]\).

Now, we define \(\sigma _n\) and \(A_n\) inductively. First, set \(\sigma _0=0\). Further, for \(n=1,2,\dots \) let \(A_n=A(\sigma _{n-1};\delta )\) and set \(\sigma _n\) to be the minimal \(t\) satisfying \(\tau _\delta [X]\ge t\ge \sigma _{n-1}\) and such that \(A(t;\delta /2)\) has a pair which \(A(\sigma _{n-1};\delta )\) does not have. Since the sets \({\mathcal {B}}^k_i\), \({\mathcal {D}}^k_i\) are closed, either such \(t\) exists or no new pairs are added after time \(\sigma _{n-1}\) and up to time \(\tau _\delta [X]\). In the latter case we set \(\sigma _n=\tau _\delta \). \(\square \)

Next, we fix a \(T>0\), set \(I_n=[\sigma _{n-1},\sigma _{n})\), \(n=1,2,\dots \) and apply a Girsanov change of measure (see e.g. [29, Theorem 5.1, Chapter 3] and note that Novikov’s condition as in [29, Corollary 5.13, Chapter 3] is satisfied due to the boundedness of the integrand in the stochastic exponential) with a density of the form

so that under the new measure \(\widetilde{P}\) for every fixed \(k\), \(i\), \(n\) and \(0\le t\le T\):

where \(\tilde{W}^k_i\), \(1\le i\le k\le N\) are independent standard Brownian motions under the measure \(\widetilde{P}\). We claim that the solution of the resulting system of SDEs (4.5) is pathwise unique on \([0,\lim _{n\rightarrow \infty } \sigma _n)\) (that is, for any two strong solutions of (4.5) adapted to the same Brownian filtration, the quantities \(\lim _{n\rightarrow \infty } \sigma _n\) for the two solutions will be the same with probability one and the trajectories of the two solutions on \([0,\lim _{n\rightarrow \infty } \sigma _n)\) will be identical with probability one). Indeed, on each time interval \(\sigma _{n-1}\le t \le \sigma _n\) the system (4.5) splits into \(|A_n|\) non-interacting systems of SDEs each of which consists of one equation

if \((k,i)\) is a singleton in \(A_n\), or of a system of two equations

if \(((k,i),(k-1,i'))\) is a pair in \(A_n\). Therefore, one can argue by induction over \(n\) and, once pathwise uniqueness of the triplet \(((X(t\wedge \sigma _{n-1}):\,t\ge 0),\sigma _{n-1},A_{n-1})\) is established, appeal to the pathwise uniqueness for (4.6), (4.8) and (4.7) (the latter being the equation for the Bessel process of dimension \(\theta >1\), see [42, Section 1, Chapter XI]) to deduce the pathwise uniqueness of the triplet \(((X(t\wedge \sigma _n):\,t\ge 0),\sigma _n,A_n)\).

The SDEs in (4.5) also allow us to prove the following statement.

Lemma 4.5

The identity \(\lim _{n\rightarrow \infty } \sigma _n=\tau _\delta [X]\) holds with probability one.

Proof

It suffices to show that \(\lim _{n\rightarrow \infty } \sigma _n\wedge T = \tau _\delta [X]\wedge T\) for any given \(T>0\). Indeed, then

and \(\lim _{n\rightarrow \infty } \sigma _n\le \tau _\delta [X]\) holds by the definitions of the stopping times involved. If for some \(n\) we have \(\tau _\delta [X]\wedge T=\sigma _n\wedge T\), then we are done. Otherwise, \(\sigma _n<T\) for all \(n\) and the definition in Lemma 4.4 shows that \(|X^k_i(\sigma _{n+1})-X^k_i(\sigma _n)|\ge \delta /2\) for some \((k,i)\). In addition, (4.5) yields that, under the measure \(\tilde{P}\), \(|X^k_i(\sigma _{n+1})-X^k_i(\sigma _n)|\) is bounded above by the sum of absolute values of the increments of at most two Brownian motions and one Bessel process in time \((\sigma _{n+1}-\sigma _n)\). Since the trajectories of such processes are uniformly continuous on the compact interval \([0,T]\) with probability one, there exist two constants \(c>0\) and \(p>0\) such that \(\tilde{P}(\sigma _{n+1}-\sigma _n>c)>p\). Consequently, \(\sigma _n/c\) stochastically dominates a binomial random variable \(Bin(n,p)\). In view of the law of large numbers for the latter, this is a contradiction to \(\sigma _n<T\) for all \(n\). \(\square \)

Now, we make a Girsanov change of measure back to the original probability measure and conclude that the joint law of \(X(t\wedge \tau _\delta \wedge T)\), \(t\ge 0\), \(\sigma _n\wedge T\) and \(\tau _\delta \wedge T\) under the original probability measure is determined by such law under the measure \(\widetilde{P}\) (the justification for this conclusion can be found for example in the proof of [29, Proposition 5.3.10]). Since the latter is uniquely defined (by the law of the solution to (4.5)), so is the former. Finally, since \(T>0\) was arbitrary, we conclude that the joint law of \(X(t\wedge \tau _\delta [X])\), \(t\ge 0\) and \(\tau _\delta [X]\) is uniquely determined.

To construct a weak solution to (4.2) we start with a probability space \((\Omega ,{{\mathcal {F}}},\mathbb {P})\) that supports a family of independent standard Brownian motions \(\tilde{W}^k_i\), \(1\le i\le k\le N\). In addition, we note (see [42, Section XI] for a proof) that to each pair of Brownian motions of the form \((\tilde{W}^k_i,\,\tilde{W}^{k-1}_{i-1})\) or \((\tilde{W}^k_i,\,\tilde{W}^{k-1}_i)\) and all initial conditions we can associate the unique strong solutions of the SDEs

defined on the same probability space.

We will now construct an \(N(N+1)/2\)-dimensional process \(X(t)\), \(t\ge 0\), stopping times \(\tau _\delta \), \(\sigma _n\), \(n=0,1,2,\dots \) and pair-partitions \(A_n\), \(n=0,1,2,\dots \) which satisfy the conditions of Lemma 4.4 and the system of equations (4.5).

The construction proceeds for each \(\omega \in \Omega \) independently, and is inductive. If the initial condition \(X(0)\) is such that \(\tau _\delta [X]=0\), then there is nothing to prove. Otherwise, we set \(\sigma _0=0\) and \(A_1=A(0;\delta )\) (see the proof of Lemma 4.4 for the definition of \(A(t;\delta )\)). Next, we define \(\hat{X}\) as the unique strong solution of

with initial condition \(\hat{X}(0)=X(0)\).

Now, we can define \(\sigma _1\) as in Lemma 4.4, but with \(\hat{X}(t)\) instead of \(X(t)\). After this, we set \(X(t)\) to be equal to \(\hat{X}(t)\) on the time interval \([0,\sigma _1]\). We further define \(A_2=A(\sigma _1;\delta )\) and repeat the above procedure to define \(X(t)\) on the time interval \([\sigma _1,\sigma _2]\). Iterating this process we can define \(X(t)\) up to time \(\tau _\delta [X]\) thanks to Lemma 4.5. We extend it to all \(t\ge 0\) by setting \(X^k_i(t)=X^k_i(\tau _\delta [X])\) for \(t>\tau _\delta [X]\).

Next, we apply the Girsanov Theorem as in the uniqueness part to conclude that, for each \(T>0\), there exists a probability measure \(\mathbb {Q}_T\) which is absolutely continuous with respect to \(\mathbb {P}\) and such that the representation

holds with \(W^k_i\), \(1\le i\le k\le N\) being independent standard Brownian motions under \(\mathbb {Q}_T\).

Finally, replacing \(T\) by a sequence \(T_n\uparrow \infty \) and using the Kolmogorov Extension Theorem (see e.g. [28, Theorem 6.16] and note that the consistency condition is satisfied due to the uniqueness of the solution to (4.2)), we deduce the existence of processes \(X^k_i\), \(1\le i\le k\le N\) defined on a suitable probability space which solve (4.2).

4.2 Proof of Proposition 4.3