Abstract

We show that there exists an ergodic conductance environment such that the weak (annealed) invariance principle holds for the corresponding continuous time random walk but the quenched invariance principle does not hold.


1 Introduction

Let \(d\ge 2\) and let \( E_d\) be the set of all non-oriented edges in the \(d\)-dimensional integer lattice, that is, \(E_d = \{e = \{x,y\}: x,y \in {\mathbb Z}^d, |x-y|=1\}\). Let \(\{\mu _e\}_{e\in E_d}\) be a random process with non-negative values, defined on some probability space \((\Omega , \mathcal {F}, \mathrm{\mathbb {P} })\). The process \(\{\mu _e\}_{e\in E_d}\) represents random conductances. We write \(\mu _{xy} = \mu _{yx} = \mu _{\{x,y\}}\) and set \(\mu _{xy}=0\) if \(\{x,y\} \notin E_d\). Set

$$\begin{aligned} \mu _x = \sum _{y} \mu _{xy}, \qquad P(x,y) = \frac{\mu _{xy}}{\mu _x}, \end{aligned}$$

with the convention that \(0/0=0\) and \(P(x,y)=0\) if \(\{x,y\} \notin E_d\). For a fixed \(\omega \in \Omega \), let \(X = \{X_t, t\ge 0, P^x_\omega , x \in {\mathbb Z}^d\}\) be the continuous time random walk on \({\mathbb Z}^d\), with transition probabilities \(P(x,y) = P_\omega (x,y)\), and exponential waiting times with mean \(1/\mu _x\). The corresponding expectation will be denoted \(E_\omega ^x\). For a fixed \(\omega \in \Omega \), the generator \(\mathcal {L}\) of \(X\) is given by

$$\begin{aligned} \mathcal {L} f(x) = \frac{1}{2} \sum _{y} \mu _{xy} \bigl(f(y)-f(x)\bigr). \end{aligned}$$(1.1)

In [4] this is called the variable speed random walk (VSRW) among the conductances \(\mu _e\). (We have inserted here a factor of \(\frac{1}{2}\)—see Remark 1.5(5).) This model, of a reversible (or symmetric) random walk in a random environment, is often called the random conductance model (RCM).
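To make the dynamics concrete, here is a minimal Python sketch (our own illustration; the names `vsrw_path` and `rate_factor` are hypothetical, not from the paper) that simulates \(X\) on \({\mathbb Z}^2\) for a user-supplied conductance function by the standard next-event (Gillespie) method. It assumes the rates of the generator (1.1), so a jump from \(x\) to a neighbour \(y\) occurs at rate \(\mu _{xy}/2\) and the next site is chosen with probability \(P(x,y)\); setting `rate_factor=1` instead gives holding times with mean \(1/\mu _x\).

```python
import random

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # nearest-neighbour moves in Z^2


def vsrw_path(conductance, x0, t_max, rng, rate_factor=0.5):
    """Simulate the variable speed random walk on Z^2 up to time t_max.

    conductance(x, y) returns mu_xy >= 0 for neighbouring sites x, y (tuples).
    A jump x -> y occurs at rate rate_factor * mu_xy, so the holding time at x
    is exponential with rate rate_factor * mu_x, where mu_x = sum_y mu_xy.
    Returns the list of (jump time, new position), starting with (0.0, x0).
    """
    t, x = 0.0, x0
    path = [(t, x)]
    while True:
        nbrs = [(x[0] + dx, x[1] + dy) for dx, dy in STEPS]
        rates = [rate_factor * conductance(x, y) for y in nbrs]
        total = sum(rates)
        if total == 0.0:
            return path  # all conductances at x vanish: the walk is stuck
        t += rng.expovariate(total)  # exponential holding time at x
        if t > t_max:
            return path
        # next site y is chosen with probability mu_xy / mu_x = P(x, y)
        x = rng.choices(nbrs, weights=rates)[0]
        path.append((t, x))
```

For example, with \(\mu _e\equiv 1\) a long run of `vsrw_path(lambda x, y: 1.0, (0, 0), 5000.0, random.Random(1))` makes roughly 2 jumps per unit time, about half of them vertical, in line with Remark 1.5(5).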

We are interested in functional Central Limit Theorems (CLTs) for the process \(X\). Given any process \(X\), for \(\varepsilon >0\), set \(X^{(\varepsilon )}_t = \varepsilon X_{t /\varepsilon ^2}, \,t\ge 0\). Let \(\mathcal {D}_T = D([0,T], \mathbb {R}^d)\) denote the Skorokhod space, and let \(\mathcal {D}_\infty =D([0,\infty ), \mathbb {R}^d)\). Write \(d_S\) for the Skorokhod metric and \(\mathcal {B}(\mathcal {D}_T)\) for the \(\sigma \)-field of Borel sets in the corresponding topology. Let \(X\) be the canonical process on \(\mathcal {D}_\infty \) or \(\mathcal {D}_T, \,P_{\text {BM}}\) be Wiener measure on \((\mathcal {D}_\infty , \mathcal {B}(\mathcal {D}_\infty ))\) and let \(E_{\text {BM}}\) be the corresponding expectation. We will write \(W\) for a standard Brownian motion. It will be convenient to assume that \(\{\mu _e\}_{e\in E_d}\) are defined on a probability space \((\Omega , \mathcal {F}, \mathrm{\mathbb {P} })\), and that \(X\) is defined on \((\Omega , \mathcal {F}) \times (\mathcal {D}_\infty , \mathcal {B}(\mathcal {D}_\infty ))\) or \((\Omega , \mathcal {F}) \times (\mathcal {D}_T, \mathcal {B}(\mathcal {D}_T))\). We also define the averaged or annealed measure \(\mathbf{P}\) on \((\mathcal {D}_\infty , \mathcal {B}(\mathcal {D}_\infty ))\) or \((\mathcal {D}_T, \mathcal {B}(\mathcal {D}_T))\) by

$$\begin{aligned} \mathbf{P}(G) = \mathrm{\mathbb {E} }\, P^0_\omega (G). \end{aligned}$$

Definition 1.1

For a bounded function \(F\) on \(\mathcal {D}_T\) and a constant matrix \(\Sigma \), let \(\Psi ^F_\varepsilon = E^0_\omega F(X^{(\varepsilon )})\) and \(\Psi ^F_\Sigma = E_{\text {BM}}F(\Sigma W)\). In the remaining part of the definition we assume that \(\Sigma \) is not identically zero.

-

(i)

We say that the Quenched Functional CLT (QFCLT) holds for \(X\) with limit \(\Sigma W\) if for every \(T>0\) and every bounded continuous function \(F\) on \(\mathcal {D}_T\) we have \(\Psi ^F_\varepsilon \rightarrow \Psi ^F_\Sigma \) as \(\varepsilon \rightarrow 0\), with \(\mathrm{\mathbb {P} }\)-probability 1.

-

(ii)

We say that the Weak Functional CLT (WFCLT) holds for \(X\) with limit \(\Sigma W\) if for every \(T>0\) and every bounded continuous function \(F\) on \(\mathcal {D}_T\) we have \(\Psi ^F_\varepsilon \rightarrow \Psi ^F_\Sigma \) as \(\varepsilon \rightarrow 0\), in \(\mathrm{\mathbb {P} }\)-probability.

-

(iii)

We say that the Averaged (or Annealed) Functional CLT (AFCLT) holds for \(X\) with limit \(\Sigma W\) if for every \(T>0\) and every bounded continuous function \(F\) on \(\mathcal {D}_T\) we have \( \mathrm{\mathbb {E} }\Psi ^F_\varepsilon \rightarrow \Psi _{\Sigma }^F\). This is the same as standard weak convergence with respect to the probability measure \(\mathbf{P}\).

Since the functions \(F\) in this definition are bounded, it is immediate that QFCLT \(\Rightarrow \) WFCLT \(\Rightarrow \) AFCLT. One could consider a more general form of the WFCLT and QFCLT in which one allows the matrix \(\Sigma \) to depend on the environment \(\mu _\cdot ({\omega })\). However, if the environment is stationary and ergodic, then \(\Sigma \) is a shift-invariant function of the environment, so it must be \(\mathrm{\mathbb {P} }\)-a.s. constant.
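The implication WFCLT \(\Rightarrow \) AFCLT is where boundedness of \(F\) is used. Writing \(\Delta _\varepsilon = |\Psi ^F_\varepsilon - \Psi ^F_\Sigma |\), which satisfies \(\Delta _\varepsilon \le 2\Vert F\Vert _\infty \), we have for every \(\eta >0\)

```latex
\[
\bigl|\mathbb{E}\,\Psi^F_\varepsilon-\Psi^F_\Sigma\bigr|
\le \mathbb{E}\,\Delta_\varepsilon
\le \eta+2\|F\|_\infty\,\mathbb{P}(\Delta_\varepsilon>\eta),
\]
```

so convergence in probability gives \(\limsup _{\varepsilon \rightarrow 0}|\mathrm{\mathbb {E} }\Psi ^F_\varepsilon -\Psi ^F_\Sigma |\le \eta \) for every \(\eta >0\). The first implication is simply the fact that almost sure convergence implies convergence in probability.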

In [12] it is proved that if \(\mu _e\) is a stationary ergodic environment with \(\mathrm{\mathbb {E} }\mu _e<\infty \) then the WFCLT holds (here \(\Sigma \equiv 0\) is allowed). It is an open question whether the QFCLT holds under these hypotheses. For the QFCLT in the case of percolation see [7, 15, 18], and for the Random Conductance Model with \(\mu _e\) i.i.d., see [2, 4, 10, 16]. In the i.i.d. case the QFCLT holds (with \(\Sigma \not \equiv 0\)) for any distribution of \(\mu _e\) provided \(p_+=\mathrm{\mathbb {P} }(\mu _e>0) > p_c\), where \(p_c\) is the critical probability for bond percolation in \({\mathbb Z}^d\).

Definition 1.2

For \(1\le i < j \le d\) let \(T_{ij}\) be the isometry of \({\mathbb Z}^d\) defined by interchanging the \(i\)th and \(j\)th coordinates, and \(T_i\) be the isometry defined by \(T_i(x_1, \dots , x_i, \dots , x_d) = (x_1, \dots , - x_i, \dots , x_d)\). We say that an environment \((\mu _e)\) on \({\mathbb Z}^d\) is symmetric if the law of \((\mu _e)\) is invariant under \(T_i, 1\le i\le d\) and \(\{ T_{ij}, 1\le i < j \le d\}\).

If \((\mu _e)\) is stationary, ergodic and symmetric, and the WFCLT holds with limit \(\Sigma W\) then the limiting covariance matrix \(\Sigma ^T \Sigma \) must also be invariant under symmetries of \({\mathbb Z}^d\), so must be a constant \(\sigma \ge 0\) times the identity.
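The last step can be checked mechanically. The Python sketch below (helper names are ours) averages a \(d\times d\) matrix \(A\) over all signed permutation matrices, which form the group generated by the reflections \(T_i\) and the swaps \(T_{ij}\), and obtains \((\mathrm{tr}\,A/d)\) times the identity. A matrix invariant under every group element equals its own group average, which is why an invariant covariance matrix must be a multiple of the identity.

```python
import itertools


def signed_permutation_matrices(d):
    """Yield every d x d signed permutation matrix (the group generated by T_i, T_ij)."""
    for perm in itertools.permutations(range(d)):
        for signs in itertools.product((1, -1), repeat=d):
            M = [[0] * d for _ in range(d)]
            for i in range(d):
                M[i][perm[i]] = signs[i]
            yield M


def conjugate(M, A):
    """Return M A M^T (plain nested lists)."""
    d = len(A)
    MA = [[sum(M[i][k] * A[k][j] for k in range(d)) for j in range(d)] for i in range(d)]
    return [[sum(MA[i][k] * M[j][k] for k in range(d)) for j in range(d)] for i in range(d)]


def group_average(A):
    """Average of M A M^T over all signed permutation matrices M."""
    d = len(A)
    group = list(signed_permutation_matrices(d))
    avg = [[0.0] * d for _ in range(d)]
    for M in group:
        C = conjugate(M, A)
        for i in range(d):
            for j in range(d):
                avg[i][j] += C[i][j] / len(group)
    return avg
```

Averaging over the signs kills every off-diagonal entry, and averaging over the permutations spreads the diagonal evenly, giving \(\sigma = \mathrm{tr}\,A/d\).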

Our first result concerns the relation between the weak and averaged FCLT. In general, of course, for a sequence of random variables \(\xi _n\), convergence of \(\mathrm{\mathbb {E} }\xi _n\) does not imply convergence in probability. However, in the context of the RCM, the AFCLT and WFCLT are equivalent.
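A trivial example: if each \(\xi _n\) takes the values 0 and 1 with probability \(1/2\), then

```latex
\[
\mathbb{E}\,\xi_n=\tfrac12\ \text{ for all } n,
\qquad\text{but}\qquad
\mathbb{P}\bigl(|\xi_n-\tfrac12|=\tfrac12\bigr)=1,
\]
```

so \(\xi _n\) does not converge in probability to \(1/2\). Theorem 1.3 rules out this behaviour for \(\Psi ^F_\varepsilon \) once the AFCLT holds.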

Theorem 1.3

Suppose the AFCLT holds. Then the WFCLT holds.

A slightly more general result is given in Theorem 2.14 below. Our second result concerns the relation between the weak and quenched FCLT.

Theorem 1.4

Let \(d=2\) and \(p<1\). There exists a symmetric stationary ergodic environment \(\{\mu _e\}_{e\in E_2}\) with \(\mathrm{\mathbb {E} }(\mu _e^p \vee \mu _e^{-p})<\infty \) and a sequence \(\varepsilon _n \rightarrow 0\) such that

-

(a)

the WFCLT holds for \(X^{(\varepsilon _n)}\) with limit \(W\), but

-

(b)

the QFCLT does not hold for \(X^{(\varepsilon _n)}\) with limit \( \Sigma W\) for any \(\Sigma \).

Remark 1.5

-

1.

Under the weaker condition that \(\mathrm{\mathbb {E} }\mu _e^p<\infty \) and \(\mathrm{\mathbb {E} }\mu _e^{-q}<\infty \) with \(p<1, \,q<1/2\) we have the full WFCLT for \(X^{(\varepsilon )}\) as \(\varepsilon \rightarrow 0\), i.e., not just along a sequence \(\varepsilon _n\). However, the proof of this is considerably harder and longer than that of Theorem 1.4(a); see [5]. (Since our environment has \(\mathrm{\mathbb {E} }\mu _e = \infty \) we cannot use the results of [12].) We have chosen to use in this paper essentially the same environment as in [5], although for Theorem 1.4 a slightly simpler environment would have been sufficient.

-

2.

Biskup [9] has proved that the QFCLT holds with \(\sigma >0\) if \(d=2\) and \((\mu _e)\) are symmetric and ergodic with \(\mathrm{\mathbb {E} }( \mu _e \vee \mu _e^{-1})<\infty \).

-

3.

See Remark 6.4 for how our example can be adapted to \({\mathbb Z}^d\) with \(d\ge 3\); in that case we have the same moment conditions as in Theorem 1.4.

-

4.

In [1] it is proved that the QFCLT holds (in \({\mathbb Z}^d, \,d\ge 2\)) for stationary symmetric ergodic environments \((\mu _e)\) under the conditions \(\mathrm{\mathbb {E} }\mu _e^p <\infty , \,\mathrm{\mathbb {E} }\mu _e^{-q}<\infty \), with \(p^{-1}+q^{-1} <2/d\).

-

5.

If \(\mu _e \equiv 1\) then due to the normalisation factor \(\frac{1}{2}\) in (1.1), the vertical jumps of \(X\) occur at rate 1, and the FCLT holds for \(X\) with limit \(W\).

The remainder of the paper after Sect. 2 constitutes the proof of Theorem 1.4. The argument is split into several sections. In the proof, we will discuss the conditions listed in Definition 1.1 for \(T=1\) only, as it is clear that the same argument works for general \(T>0\).

2 Averaged and weak invariance principles

The basic setup will be slightly more general in this section than in the introduction. As in the Introduction, let \((\Omega , \mathcal {F}, \mathrm{\mathbb {P} })\) be a probability space, fix some \(T>0\) and let \(\mathcal {D}=\mathcal {D}_T\) in this section (although we will also use \(\mathcal {D}_{2T}\)). Recall that \(X\) is the coordinate/identity process on \(\mathcal {D}\). Let \(C(\mathcal {D})\) be the family of all functions \(F: \mathcal {D}\rightarrow \mathbb {R}\) which are continuous in the Skorokhod topology. In the following definition, \(P^{\omega }_n\) will stand for a probability measure (not necessarily arising from an RCM) on \(\mathcal {D}\) for \(\omega \in \Omega \) and \(n\ge 1\). We will also refer to a probability measure \(P_0\) on \(\mathcal {D}\). The corresponding expectations will be denoted \(E^{\omega }_n \) and \( E_0\). The following definition was first introduced in [14], see also [12].

Definition 2.1

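We will say that \(P^{\omega }_n\) converge weakly in measure to \(P_0\) if for each bounded \(F\in C(\mathcal {D})\),

$$\begin{aligned} E^{\omega }_n F(X) \rightarrow E_0 F(X) \quad \text {in } \mathrm{\mathbb {P} }\text {-probability, as } n\rightarrow \infty . \end{aligned}$$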

Let \(\delta _n \rightarrow 0\), let \(\Lambda _n = \delta _n {\mathbb Z}^d\), and let \(\lambda _n\) be counting measure on \(\Lambda _n\) normalized so that \(\lambda _n \rightarrow dx\) weakly, where \(dx\) is Lebesgue measure on \(\mathbb {R}^d\). Suppose that for each \({\omega }\) and \(n \ge 1\) we have Markov processes \(X^{(n)}=(X_t, t\ge 0, P^x_{{\omega },n}, x \in \Lambda _n)\) with values in \(\Lambda _n\). The corresponding expectations will be denoted \(E^x_{{\omega },n}\). Write

$$\begin{aligned} T^{({\omega },n)}_t f(x) = E^x_{{\omega },n} f(X_t), \quad x \in \Lambda _n, \end{aligned}$$

for the semigroup of \(X^{(n)}\). Since we are discussing weak convergence, it is natural to put the index \(n\) in the probability measures \(P^x_{{\omega },n}\) rather than the process; however we will sometimes abuse notation and refer to \(X^{(n)}\) rather than \(X\) under the laws \((P^x_{{\omega },n})\). Recall that \(W\) denotes a standard Brownian motion.

For the remainder of this section, we will suppose that the following Assumption holds.

Assumption 2.2

-

1.

For each \({\omega }\), the semigroup \(T^{({\omega }, n)}_t\) is self-adjoint on \(L^2( \Lambda _n, \lambda _n)\).

-

2.

The \(\mathrm{\mathbb {P} }\) law of the ‘environment’ for \(X^{(n)}\) is stationary. More precisely, for \(x \in \Lambda _n\) there exist measure preserving maps \(T_x : \Omega \rightarrow \Omega \) such that for all bounded measurable \(F\) on \(\mathcal {D}_T\),

$$\begin{aligned} E^x_{{\omega },n} F( X)&= E^0_{T_x {\omega },n} F( X+x) , \end{aligned}$$(2.2)

$$\begin{aligned} \mathrm{\mathbb {E} }E^0_{T_x {\omega },n} F( X)&= \mathrm{\mathbb {E} }E^0_{{\omega },n} F( X). \end{aligned}$$(2.3)

-

3.

The AFCLT holds, that is for all \(T>0\) and bounded continuous \(F\) on \(\mathcal {D}_T\),

$$\begin{aligned} \mathrm{\mathbb {E} }E^0_{{\omega },n} F(X) \rightarrow E_{\text {BM}}F(X). \end{aligned}$$

Given a function \(F \) from \(\mathcal {D}_T\) to \(\mathbb {R}\) set

Note that combining (2.2) and (2.3) we obtain

Set

Note that \(\mathcal {T}^{(n)}_t\) is not in general a semigroup. Write \(K_t\) for the semigroup of Brownian motion on \(\mathbb {R}^d\). We also need notation for expectation of general functions \(F\) on \(\mathcal {D}_T\), so we define

Using this notation, the AFCLT states that for \(F \in C(\mathcal {D}_T)\)

Definition 2.3

Fix \(T>0\) and recall that \(\mathcal {D}=\mathcal {D}_T\). Write \(d_U\) for the uniform metric, i.e.,

$$\begin{aligned} d_U(w,w') = \sup _{0\le t\le T} |w(t)-w'(t)|. \end{aligned}$$

Recall that we defined \(d_S(w,w')\) to be the usual Skorokhod metric on \(\mathcal {D}\). We have \(d_S(w,w') \le d_U(w,w')\), but the topologies given by the two metrics are distinct. Let \(\mathcal {M}(\mathcal {D})\) be the set of measurable \(F\) on \(\mathcal {D}\). A function \(F\in \mathcal {M}(\mathcal {D})\) is uniformly continuous in the uniform norm on \(\mathcal {D}\) if there exists \(\rho (\varepsilon )\) with \(\lim _{\varepsilon \rightarrow 0} \rho (\varepsilon ) =0\) such that if \(w, w' \in \mathcal {D}_T\) with \(d_U(w,w')\le \varepsilon \) then

$$\begin{aligned} |F(w)-F(w')| \le \rho (\varepsilon ). \end{aligned}$$(2.5)

Write \(C_U(\mathcal {D})\) for the set of \(F\) in \(\mathcal {M}(\mathcal {D})\) which are uniformly continuous in the uniform norm. Note that we do not have \(C_U(\mathcal {D}) \subset C(\mathcal {D})\).
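A standard example illustrates both remarks. Take \(T=1\), \(0<h<1/2\), a unit vector \(e_1\), and the paths \(w=e_1\mathbf {1}_{[1/2,1]}\) and \(w_h=e_1\mathbf {1}_{[1/2+h,1]}\). Assuming \(d_S\) is the usual \(J_1\) metric \(d_S(w,w')=\inf _\lambda \max \bigl (\sup _t|\lambda (t)-t|,\ \sup _t|w(\lambda (t))-w'(t)|\bigr )\), the infimum being over increasing homeomorphisms \(\lambda \) of \([0,1]\), the piecewise linear \(\lambda \) with \(\lambda (0)=0\), \(\lambda (1/2+h)=1/2\), \(\lambda (1)=1\) satisfies \(w\circ \lambda =w_h\), so

```latex
\[
d_U(w,w_h)=1\ \text{ for all } h\in(0,\tfrac12),
\qquad
d_S(w,w_h)\le\sup_{t\in[0,1]}|\lambda(t)-t|=h\longrightarrow 0 .
\]
```

Moreover \(F(w)=\langle w(1/2),e_1\rangle \) satisfies (2.5) with \(\rho (\varepsilon )=\varepsilon \), so \(F\in C_U(\mathcal {D})\); but \(F(w_h)=0\) while \(F(w)=1\), so \(F\notin C(\mathcal {D})\).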

Let \(C^1_0(\mathbb {R}^d)\) denote the set of continuously differentiable functions with compact support. Let \(\mathcal {A}_m\) be the set of \(F\) such that

$$\begin{aligned} F(w) = \prod _{i=1}^m f_i(w(t_i)), \end{aligned}$$(2.6)

where \(0 \le t_1 \le \dots \le t_m \le T, \,f_i \in C^1_0(\mathbb {R}^d)\), and let \(\mathcal {A}= \bigcup _m \mathcal {A}_m\).

Lemma 2.4

Let \(F \in \mathcal {A}\). Then \(F \in C_U(\mathcal {D})\), and \(\mathcal {K}F \in C_b(\mathbb {R}^d) \cap L^1(\mathbb {R}^d)\).

Proof

Let \(F \in \mathcal {A}_m\) be given by (2.6). Choose \(C\ge 2\) so that \(||f_i||_\infty \le C\) and \(|f_i(x)-f_i(y)| \le C|x-y|\) for all \(x,y ,i\). Then

Since \(f_i\) are bounded and continuous, so is \(\mathcal {K}F\). Also, \(|F| \le C^{m-1} |f_1(w(t_1))|\), so

\(\square \)
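The uniform continuity in Lemma 2.4 can be made explicit. Assuming the product form \(F(w)=\prod _{i=1}^m f_i(w(t_i))\) of (2.6), and with \(C\) as in the proof, write \(a_i=f_i(w(t_i))\) and \(b_i=f_i(w'(t_i))\). Telescoping the product gives

```latex
\[
|F(w)-F(w')|
=\Bigl|\sum_{k=1}^m\Bigl(\prod_{i<k}b_i\Bigr)(a_k-b_k)\Bigl(\prod_{i>k}a_i\Bigr)\Bigr|
\le \sum_{k=1}^m C^{m-1}\,C\,|w(t_k)-w'(t_k)|
\le m\,C^m\,d_U(w,w'),
\]
```

so (2.5) holds with \(\rho (\varepsilon )=mC^m\varepsilon \).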

Lemma 2.5

For all \(F \in \mathcal {M}(\mathcal {D})\),

Proof

By the stationarity of the environment,

The result for \(U^{({\omega },n)}\) is then immediate. \(\square \)

Lemma 2.6

Let \(F \in C_U(\mathcal {D}_T)\). Then \( T^{({\omega },n)} F_x(0), \, U^{({\omega },n)} F_x(0)\), and \(\mathcal {T}^{(n)} F(x)\) are uniformly continuous on \(\Lambda _n\) for every \(n \in {\mathbb {N}}\), with a modulus of continuity which is independent of \(n\).

Proof

If \(|x-y| \le \varepsilon \) then \(d_U(w+x,w+y)\le \varepsilon \), so if \(F\in C_U(\mathcal {D}_T)\) and \(\rho \) is such that (2.5) holds, then \(|F_x(w)-F_y(w)| \le \rho (\varepsilon )\), and hence

This implies the uniform continuity of \( T^{({\omega },n)} F_x(0)\) and \( U^{({\omega },n)} F_x(0)\). By (2.7),

so the uniform continuity of \(\mathcal {T}^{(n)} F(x)\) follows from that of \(T^{({\omega },n)} F_x(0)\). \(\square \)

Lemma 2.7

Let \(F \in \mathcal {A}\). Then

Proof

The AFCLT (Assumption 2.2(3)) implies that \(\mathrm{\mathbb {E} }P^0_{{\omega }, n}\) converge weakly to \(P_{\text {BM}}\). Hence the finite dimensional distributions of \(X^{(n)}\) converge to those of \(W\), and this is equivalent to (2.8). \(\square \)

Let \(C_b(\mathbb {R}^d)\) denote the space of bounded continuous functions on \(\mathbb {R}^d\).

Lemma 2.8

Let \(F \in \mathcal {A}\), and \(h \in C_b(\mathbb {R}^d)\cap L^1(\mathbb {R}^d)\). Then

Proof

This is immediate from (2.8) and the uniform continuity proved in Lemma 2.6. \(\square \)

The next lemma gives the key construction in this section: using the self-adjointness of \(T^{({\omega },n)}_t\) we can linearise expectations of products. A similar idea is used in [19] in the context of transition densities.

Let \(F \in \mathcal {A}_m\) be given by (2.6). Set \(s_j=t_m-t_{m-j}\), and let

Note that \(\widehat{F}\) is defined on functions \(w \in \mathcal {D}_{2T}\) (not \(\mathcal {D}_T\)). Write \({\langle f,g \rangle }_n\) for the inner product in \(L^2(\lambda _n)\) and \({\langle f,g \rangle }\) for the inner product in \(L^2(\mathbb {R}^d)\).

Lemma 2.9

With \(F\) and \(\widehat{F}\) as above,

Proof

Using the Markov property of \(X^{(n)}\)

Hence we obtain

Using the self-adjointness of \(T^{({\omega },n)}_t\) gives

Continuing in this way we obtain

The proof for \(\mathcal {K}\) is exactly the same. \(\square \)
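The mechanism of Lemma 2.9 can be seen in a toy finite model (our own illustration, not the setting of the paper). For a symmetric transition matrix \(P\), here a lazy walk on a cycle, which is self-adjoint with respect to counting measure, moving powers of \(P\) across the inner product reverses the order in which the functions are applied: for \(1\le k_1\le k_2\),
\(\sum _x g(x)E^x[f_1(X_{k_1})f_2(X_{k_2})] = {\langle g, P^{k_1}(f_1 P^{k_2-k_1}f_2) \rangle } = {\langle f_2, P^{k_2-k_1}(f_1 P^{k_1}g) \rangle }\). The sketch checks both equalities, the first by brute-force enumeration of paths.

```python
import itertools
import random


def lazy_cycle(n):
    """Lazy walk on Z/nZ: stay w.p. 1/2, each neighbour w.p. 1/4 (a symmetric matrix)."""
    P = [[0.0] * n for _ in range(n)]
    for x in range(n):
        P[x][x] += 0.5
        P[x][(x + 1) % n] += 0.25
        P[x][(x - 1) % n] += 0.25
    return P


def power_apply(P, f, k):
    """Return P^k f, i.e. the function x -> E^x f(X_k)."""
    n = len(f)
    for _ in range(k):
        f = [sum(P[x][y] * f[y] for y in range(n)) for x in range(n)]
    return f


def inner(f, g):
    return sum(a * b for a, b in zip(f, g))


def pointwise(f, g):
    return [a * b for a, b in zip(f, g)]


def forward_backward(n=6, k1=1, k2=3, seed=0):
    """Compute the two-time expectation three ways; assumes 1 <= k1 <= k2."""
    rng = random.Random(seed)
    g, f1, f2 = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(3)]
    P = lazy_cycle(n)
    # sum_x g(x) E^x[f1(X_k1) f2(X_k2)] via the Markov property
    forward = inner(g, power_apply(P, pointwise(f1, power_apply(P, f2, k2 - k1)), k1))
    # the same number after moving each power of P across the inner product
    backward = inner(f2, power_apply(P, pointwise(f1, power_apply(P, g, k1)), k2 - k1))
    # brute force over all paths (X_1, ..., X_k2) started from each x
    brute = 0.0
    for x in range(n):
        for path in itertools.product(range(n), repeat=k2):
            prob, prev = 1.0, x
            for y in path:
                prob *= P[prev][y]
                prev = y
            brute += g[x] * prob * f1[path[k1 - 1]] * f2[path[k2 - 1]]
    return forward, backward, brute
```

The "backward" expression is the same kind of expectation, but for the walk started from \(f_2\) and with the time gaps taken in reverse order, which is the role played by \(s_j=t_m-t_{m-j}\) and \(\widehat{F}\) above.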

Lemma 2.10

Let \(F \in \mathcal {A}\). Then

Proof

We have

Thus

Since \(\mathcal {K}F\) is continuous we have

Taking \(h= \mathcal {K}F\) and using Lemma 2.4, Lemma 2.8 gives that

Let \(f_m\) and \(\widehat{F}\) be as in the previous lemma. Then

Again by Lemma 2.8 and (2.11),

Adding the limits of the three terms in (2.13), we obtain (2.12). \(\square \)

Lemma 2.11

Let \(F \in \mathcal {A}\). Then

Proof

The previous lemma gives

Using Lemma 2.5 we have

and using the uniform continuity of \(U^{({\omega },n)}F_x(0)\) gives (2.14). \(\square \)

Write \(\mathrm{\mathbb {D} }\) for the set of dyadic rationals.

Proposition 2.12

Given any subsequence \((n_k)\) there exists a subsequence \((n'_k)\) of \((n_k)\) and a set \(\Omega _0\) with \(\mathrm{\mathbb {P} }(\Omega _0)=1\), such that for any \({\omega }\in \Omega _0\) and \(q_1 \le q_2 \le \cdots \le q_m\) with \(q_i \in \mathrm{\mathbb {D} }\), the random vectors \((X_{q_i}, i=1, \dots ,m)\) under \(P^0_{{\omega },n'_k}\) converge in distribution to \((W_{q_i}, i=1, \dots , m)\).

Proof

Let \(\mathrm{\mathbb {D} }_T=[0,T] \cap \mathrm{\mathbb {D} }\). Fix a finite set \(q_1 \le \dots \le q_m\) with \(q_i \in \mathrm{\mathbb {D} }_T\). Then convergence of \((X_{q_i}, i=1, \dots ,m, P^0_{{\omega },n} )\) is determined by a countable set of functions \(F_i \in \mathcal {A}_m\). So by Lemma 2.11 we can find nested subsequences \((n^{(i)}_k)\) of \((n_k)\) such that for each \(i\)

A diagonalization argument then implies that there exists a subsequence \(n''_k\) such that \((X_{q_i}, i=1, \dots , m, P^0_{{\omega },n''_k} )\) converge in distribution to \((W_{q_i}, i=1, \dots , m)\). Since the collection of finite sets \(\{q_1, \dots , q_m\} \subset \mathrm{\mathbb {D} }\) is countable, an additional diagonalization argument then implies that there exists a subsequence \((n'_k)\) such that this convergence holds for all such finite sets. \(\square \)

Lemma 2.13

If AFCLT holds then “tightness in probability” holds, i.e., for any \(\delta >0\) there exist \(\delta _1>0\) and \(n_1\) such that for \(n \ge n_1\), there is a set \(A_n\) of \(\omega \) with \(\mathrm{\mathbb {P} }(A_n) \ge 1- \delta \), such that for \(\omega \in A_n\),

Proof

If AFCLT holds then, by the Skorokhod Lemma, we can construct \(X^{(n)}\) and \(W\) on a common probability space, in such a way that each \(X^{(n)}\) has the distribution \(\mathrm{\mathbb {E} }P^0_{\omega ,n}\) and \(X^{(n)} \rightarrow W\) in the Skorokhod topology, a.s.

Fix any \(\delta >0\). By continuity of Brownian motion there exists \(\delta _1>0\) such that

If a sequence of processes converges in the Skorokhod topology to a continuous process then it also converges uniformly on \([0,T]\). Hence, in view of (2.17), there exists \(n_1\) such that for \(n\ge n_1\),

This implies that for \(n\ge n_1\), there is a set \(A_n\) of \(\omega \) with \(\mathrm{\mathbb {P} }(A_n) \ge 1- \sqrt{2\delta }\), such that for \(\omega \in A_n\),

It is elementary to convert the form of this estimate to the form given in the lemma. \(\square \)

Theorem 2.14

If Assumption 2.2 holds then \( P^0_{{\omega },n}\) converge weakly in measure to \(P_{\text {BM}}\).

Proof

Fix any \(T>0\), an arbitrarily small \(\varepsilon >0\) and any bounded function \(F \in C(\mathcal {D}_T)\). Let \(W\) denote Brownian motion and suppose that processes \(Y\) and \(W\) are defined on the same probability space, for which we use the generic notation \(P\) and \(E\). It is easy to see that one can find \(\delta \in (0, \varepsilon /2)\) so small that if the process \(Y\) satisfies

then

Let \(\delta _1>0\) be so small that (2.16) and (2.17) hold with the present choice of \(\delta \). Suppose that \(0 = q_1 \le q_2 \le \dots \le q_m = T\) are dyadic rationals and \(q_k - q_{k-1} \le \delta _1\) for all \(k\) (note that we can assume that \(T\) is a dyadic rational without loss of generality). By Proposition 2.12, we can find a sequence \(n_k\) such that the joint distributions of the random variables \((X_{q_i}, i=1, \dots ,m)\) under \(P^0_{{\omega },n_k}\) converge to the distribution of \((W_{q_i}, i=1, \dots , m)\), as \(k\rightarrow \infty , \,\mathrm{\mathbb {P} }\)-a.s. By the Skorokhod Lemma, we can construct \((X^{\omega ,n_k}_{q_i}, i=1, \dots ,m)\) and \((W^{\omega ,n_k}_{q_i}, i=1, \dots , m)\) on the same probability space \((\Omega _\omega , \mathcal {F}_\omega , P_\omega )\) so that

\((X^{\omega ,n_k}_{q_i}, i=1, \dots ,m)\) has the same distribution under \(P_\omega \) as \((X_{q_i}, i=1, \dots ,m)\) under \(P^0_{{\omega },n_k}\), and \((W^{\omega ,n_k}_{q_i}, i=1, \dots , m)\) has the same distribution under \(P_\omega \) as Brownian motion (sampled at a finite number of times).

Using conditional probabilities and enlarging the probability space, if necessary, we can assume that there exist processes \((X^{\omega ,n_k}_t, 0\le t \le T)\) and \((W^{\omega ,n_k}_t, 0\le t \le T)\) on the same probability space \((\Omega _\omega , \mathcal {F}_\omega , P_\omega )\) such that \((X^{\omega ,n_k}_t, 0\le t \le T)\) has the same distribution under \(P_\omega \) as \((X_t, 0\le t \le T)\) under \(P^0_{{\omega },n_k}, \,(W^{\omega ,n_k}_t, 0\le t \le T)\) is Brownian motion, and all the conditions stated in the previous paragraph hold for these processes sampled at \(q_i, i=1, \dots ,m\); in particular, (2.20) holds.

It follows from (2.20) that there exist an event \(H\) with \(\mathrm{\mathbb {P} }(H) > 1-\delta \) and \(k_1\) such that for \(k\ge k_1\) and each \(\omega \in H\),

By Lemma 2.13, for \(k\ge k_2\), there is a set \(A_k\) of \(\omega \) with \(\mathrm{\mathbb {P} }(A_k) \ge 1- \delta \), such that for \(\omega \in A_k\),

Since \((X^{\omega ,n_k}_t, 0\le t \le T)\) has the same distribution under \(P_\omega \) as \((X^{(n_k)}_t, 0\le t \le T)\) under \(P^0_{{\omega },n_k}\), it follows from (2.22) that for \(k\ge k_2\), there is a set \(A_k\) of \(\omega \) with \(\mathrm{\mathbb {P} }(A_k) \ge 1- \delta \), such that for \(\omega \in A_k\),

For similar reasons, (2.17) implies that

We now combine (2.21), (2.23) and (2.24) to conclude that for \(k \ge k_1 \vee k_2\), there is a set \(H\cap A_{k}\) of \(\omega \) with \(\mathrm{\mathbb {P} }(H\cap A_{k}) \ge 1- 2\delta \), such that for \(\omega \in H\cap A_{k}\),

In view of (2.18)–(2.19) this implies that for \(k \ge k_1 \vee k_2\), there is a set \(H\cap A_{k}\) of \(\omega \) with \(\mathrm{\mathbb {P} }(H\cap A_{k}) \ge 1- 2\delta \), such that for \(\omega \in H\cap A_{k}\),

Set \(\xi _n = |E^0_{\omega ,n} F(X) - E F(W) |\); since \(\delta < \varepsilon /2\), (2.25) implies that

We now extend this result to the whole sequence, and claim that there exists \(n_1\) such that

Suppose not: then there exists a subsequence \(n^*_k\) with \(\mathrm{\mathbb {P} }( \xi _{n^*_k} > \varepsilon ) \ge \varepsilon \) for all \(k\). However, by Proposition 2.12, we can find a subsequence \(n_k\) of \(n^*_k\) such that the joint distributions of the random variables \((X_{q_i}, i=1, \dots ,m)\) under \(P^0_{{\omega },n_k}\) converge to the distribution of \((W_{q_i}, i=1, \dots , m)\), as \(k\rightarrow \infty , \,\mathrm{\mathbb {P} }\)-a.s. Applying the argument above to this subsequence, we have a contradiction to (2.26). Thus (2.27) holds, and this completes the proof of the theorem. \(\square \)

3 Construction of the environment

The remainder of this paper is concerned with the proof of Theorem 1.4. The main idea of the proof is as follows. We choose a sequence \(a_n\) of integers, with \(a_n \gg a_{n-1}\), and \(a_n/a_{n-1} = m_n \in {\mathbb Z}\). For each \(n\) we define an ergodic tiling of \({\mathbb Z}^2\) into (disjoint) squares, each with \(a_n^2\) points. Write \(\mathcal {S}_n\) for the collection of these squares; they are defined so that each square in \(\mathcal {S}_n\) is the union of \(m_n^2\) squares in \(\mathcal {S}_{n-1}\). In each square in \(\mathcal {S}_n\) we place 4 obstacles of diameter \(O(b_n)\), where \(b_n \simeq n^{-1/2} a_n\). The obstacles are chosen so that the resulting environment is symmetric. Let \(F_n\) be the event that \(0\) is within a distance \(O(b_n)\) of an obstacle at scale \(n\). The obstacles are such that if \(F_n\) holds then the rescaled process \(Z_n=(b_n^{-1} X_{b_n^2 t}, 0\le t \le 1)\) will be far from a Brownian motion. Thus if \(F_n\) holds i.o. then the QFCLT will fail. On the other hand, if \(\mathrm{\mathbb {P} }(F_n) \rightarrow 0\) then with high probability \(Z_n\) will be close to BM, and (after some work) we do have the WFCLT.

We now begin by giving the construction of the sets \(\mathcal {S}_n\) and the associated environment. Let \(\Omega = (0,\infty )^{E_2}\), and \(\mathcal {F}\) be the Borel \(\sigma \)-algebra defined using the usual product topology. Then every \(t\in {\mathbb Z}^2\) defines a translation \(T_t \) of the environment by \(t\). Stationarity and ergodicity of the measures defined below will be understood with respect to these transformations.

All constants (often denoted \(c_1, c_2\), etc.) are assumed to be strictly positive and finite. For a set \(A \subset {\mathbb Z}^2\) let \(E(A)\) be the set of edges of \({\mathbb Z}^2\) with both endpoints in \(A\), that is, the edge set of \(A\) regarded as an induced subgraph of \({\mathbb Z}^2\). Let \(E_h(A)\) and \(E_v(A)\) respectively be the set of horizontal and vertical edges in \(E(A)\). Write \(x \sim y\) if \(\{x,y\}\) is an edge in \({\mathbb Z}^2\). Define the exterior boundary of \(A\) by

Let also

Finally define balls in the \(\ell ^\infty \) norm by \(B_\infty (x,r)= \{y: ||x-y||_\infty \le r\}\); of course this is just the square with center \(x\) and side \(2r\).

Let \(\{a_n\}_{n\ge 0}\), \(\{ \beta _n\}_{n \ge 1}\) and \(\{b_n\}_{n\ge 1}\) be strictly increasing sequences of positive integers growing to infinity with \(n\), with

We will impose a number of conditions on these sequences in the course of the paper. We collect these conditions here so that the reader can check that all conditions can be satisfied simultaneously. There is some redundancy in the conditions, for easy reference. (Some additional conditions on \(b_n/a_{n-1}\) are needed for the proof in [5] of the full WFCLT for \((X^{(\varepsilon )})\).)

-

(i)

\(a_n\) is even for all \(n\).

-

(ii)

For each \(n \ge 1, \,a_{n-1}\) divides \(b_n\), and \(b_n\) divides \(\beta _n\) and \(a_n\).

-

(iii)

\(b_1 \ge 10^{10}\).

-

(iv)

\(a_n/\sqrt{2n} \le b_n \le a_n / \sqrt{n} \) for all \(n\), and \(b_n \sim a_n/\sqrt{n}\).

-

(v)

\(b_{n+1} \ge 2^n b_n\) for all \(n\).

-

(vi)

\(b_n > 40 a_{n-1}\) for all \(n\).

- (vii)

-

(viii)

\(100b_n < \beta _n \le b_n n^{1/4} < 3 \beta _n < a_n/10\) for all \(n\).

These conditions do not define the \(a_n\)’s and \(b_n\)’s uniquely. It is easy to check that there exist sequences that satisfy all the conditions: if \(a_i,b_i,\beta _i\) have been chosen for all \(i\in \{1,\ldots , n-1\}\), then if \(b_n\) is chosen large enough (taking care to respect the divisibility condition in (ii)), it will satisfy all the conditions imposed on it with respect to constants of smaller indices. Then one can choose \(a_n\) and \(\beta _n\) so that the remaining conditions are satisfied.

We set

We begin our construction by defining a collection of squares in \({\mathbb Z}^2\). Let

Thus \(\mathcal {S}_n(x)\) gives a tiling of \({\mathbb Z}^2\) by disjoint squares of side \(a_n-1\) and period \(a_n\). We say that the tiling \(\mathcal {S}_{n-1}(x_{n-1})\) is a refinement of \(\mathcal {S}_n(x_n)\) if every square \(Q \in \mathcal {S}_n(x_n)\) is a finite union of squares in \(\mathcal {S}_{n-1}(x_{n-1})\). It is clear that \(\mathcal {S}_{n-1}(x_{n-1})\) is a refinement of \(\mathcal {S}_n(x_n)\) if and only if \(x_n = x_{n-1}+ a_{n-1}y\) for some \(y \in {\mathbb Z}^2\).
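The refinement criterion can be checked by brute force over one period. The Python sketch below assumes (our reading of the definition of \(\mathcal {S}_n(x)\), whose display is not reproduced here) that \(\mathcal {S}_n(x)\) consists of the squares \(x + a_n y + [0,a_n-1]^2\), \(y\in {\mathbb Z}^2\), so that the tile containing \(z\) is indexed coordinatewise by \(\lfloor (z-x)/a_n\rfloor \); it also assumes \(a_{n-1}\) divides \(a_n\). The function names are hypothetical.

```python
def tile_index(z, x, a):
    """Index of the tile of the period-a tiling anchored at x that contains z in Z^2."""
    return ((z[0] - x[0]) // a, (z[1] - x[1]) // a)


def refines(x_fine, a_fine, x_coarse, a_coarse):
    """True if every fine tile lies inside a single coarse tile.

    Since a_fine divides a_coarse, the whole picture is invariant under
    translation by a_coarse * Z^2, so it suffices to check the fine tiles
    whose corners lie in one coarse period box.
    """
    assert a_coarse % a_fine == 0
    m = a_coarse // a_fine
    for i in range(m):
        for j in range(m):
            corner = (x_fine[0] + a_fine * i, x_fine[1] + a_fine * j)
            seen = {tile_index((corner[0] + u, corner[1] + v), x_coarse, a_coarse)
                    for u in range(a_fine) for v in range(a_fine)}
            if len(seen) > 1:
                return False  # a coarse boundary cuts this fine tile
    return True
```

For instance `refines((1, 2), 3, (4, 8), 9)` is true because \((4,8)-(1,2)\in 3{\mathbb Z}^2\), while `refines((1, 2), 3, (5, 8), 9)` is false.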

Take \(\mathcal {O}_1\) uniform in \(B'_1\), and for \(n\ge 2\) take \(\mathcal {O}_n\), conditional on \((\mathcal {O}_1, \dots , \mathcal {O}_{n-1})\), to be uniform in \(B'_n \cap ( \mathcal {O}_{n-1} + a_{n-1}{\mathbb Z}^2)\). We now define random tilings by letting

Let \(\eta _n, \,K_n\) be positive constants; we will have \(\eta _n \ll 1 \ll K_n\). We define conductances on \(E_2\) as a limit of conductances defined for \(n=1,2,\ldots \), as follows. For each \(n\), the conductances will be the same (up to translation) on every tile of \(\mathcal {S}_n\). Recall that \(a_n\) is even, and let \(a_n' = \frac{1}{2} a_n\). Let

We first define conductances \(\nu ^{0,n}_e\) for \(e \in E(C_n)\). Let

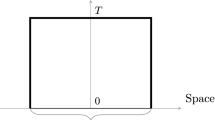

Thus the set \(D^{00}_n \cup D_n^{01}\) resembles the letter I (see Fig. 1).

For an edge \(e \in E(C_n)\) we set

Fig. 2: The obstacle set \(D_n^0\). Each obstacle is a copy, in some cases a rotated one, of the obstacle set given in Fig. 1.

We then extend \(\nu ^{n,0}\) by symmetry to \(E(B_n)\). More precisely, for \(z =(x,y) \in B_n\), let \(R_1 z=( y,x)\) and \(R_2z = (a_n-y,a_n-x)\), so that \(R_1\) and \(R_2\) are reflections in the lines \(y=x\) and \(x+y=a_n\). We define \(R_i\) on edges by \(R_i (\{x,y\}) = \{R_i x, R_i y \}\) for \(x,y \in B_n\). We then extend \(\nu ^{0,n}\) to \(E( B_n)\) so that \(\nu ^{0,n}_e = \nu ^{0,n}_{R_1 e }=\nu ^{0,n}_{R_2 e }\) for \(e \in E(B_n)\). We define the obstacle set \(D_n^0\) by setting (see Fig. 2),

Note that \(\nu ^{n,0}_e=1\) for every edge adjacent to the boundary of \(B_n\), or indeed within a distance \( a_n/4\) of this boundary. If \(e=(x,y)\), we will write \(e-z = (x-z,y-z)\). Next we extend \(\nu ^{n,0}\) to \(E_2\) by periodicity, i.e., \(\nu ^{n,0}_e = \nu ^{n,0}_{e+ a_n x}\) for all \(x\in {\mathbb Z}^2\). Finally, we define the conductances \(\nu ^n\) by translation by \(\mathcal {O}_n\), so that

We also define the obstacle set at scale \(n\) by

We illustrate two levels of the construction in Fig. 3.
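The two reflections \(R_1, R_2\) used above generate a group of order four: both are involutions, they commute, and \(R_1R_2\) is the half-turn \(z\mapsto (a_n,a_n)-z\) about the centre \((a'_n,a'_n)\). So every edge has an orbit of at most four edges, and the symmetric extension of the conductances only copies values along these orbits. The Python sketch below checks this for a small even \(a\) standing in for \(a_n\); it assumes, for the check only, that the maps act on the box \([0,a]^2\), and the helper names are ours.

```python
def R1(z, a):
    """Reflection in the line y = x."""
    x, y = z
    return (y, x)


def R2(z, a):
    """Reflection in the line x + y = a."""
    x, y = z
    return (a - y, a - x)


def image_of_edge(R, e, a):
    """Image of the unordered edge e = {z, z'} under the point map R."""
    return frozenset(R(z, a) for z in e)


def box_edges(a):
    """Nearest-neighbour edges with both endpoints in [0, a]^2."""
    horiz = {frozenset({(x, y), (x + 1, y)}) for x in range(a) for y in range(a + 1)}
    vert = {frozenset({(x, y), (x, y + 1)}) for x in range(a + 1) for y in range(a)}
    return horiz | vert


def orbit(e, a):
    """Orbit of the edge e under the group {id, R1, R2, R1 R2}."""
    def half_turn(z, a):
        return R1(R2(z, a), a)
    return {e} | {image_of_edge(R, e, a) for R in (R1, R2, half_turn)}
```

For example, with \(a=6\) the edge \(\{(0,0),(1,0)\}\) has the four images \(\{(0,0),(1,0)\}\), \(\{(0,0),(0,1)\}\), \(\{(6,6),(6,5)\}\) and \(\{(6,6),(5,6)\}\).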

We define the environment \(\mu ^n_e\) inductively by

Once we have proved the limit exists, we will set

Theorem 3.1

-

(a)

For each \(n\) the environments \((\nu ^n_e, e\in E_2), \,(\mu ^n_e, e\in E_2)\) are stationary, symmetric and ergodic.

-

(b)

The limit (3.2) exists \(\mathrm{\mathbb {P} }\)-a.s.

-

(c)

The environment \((\mu _e, e \in E_2)\) is stationary, symmetric in the sense of Definition 1.2, and ergodic with respect to the group of translations of \({\mathbb Z}^2\).

Proof

(a) For \(x=(x_1,x_2) \in {\mathbb Z}^2\) define the modulo \(a\) value of \(x\) as the unique \((y_1,y_2)\in [0,a-1]^2\) such that \(x_1\equiv y_1\) (mod \(a\)) and \(x_2\equiv y_2\) (mod \(a\)). We say that \(x,y\in {\mathbb Z}^2\) are equivalent modulo \(a\) if their modulo \(a\) values are the same, and denote it by \(x\equiv y\) mod \(a\).

Let \(\mathcal {K}_n\) be the set of \(n\)-tuples \((x_1,\ldots , x_n)\) with \(x_i\in (x_{i-1}+a_{i-1}{\mathbb Z}^2)\cap [0,a_i-1]^2\) (with the convention \(a_0=1, x_0=0\)). Denote the uniform measure on \(\mathcal {K}_n\) by \(\mathrm{\mathbb {P} }_n\). Note that \((\mathcal {O}_1,\ldots ,\mathcal {O}_n)\) is distributed according to \(\mathrm{\mathbb {P} }_n\).

Let \(U_n\) be a uniformly chosen element of \([0,a_n-1]^2\cap {\mathbb Z}^2\). Then since each \(a_{i-1}\) divides \(a_i\), the distribution of \((U_n+a_1 {\mathbb Z}^2,\ldots , U_n +a_n {\mathbb Z}^2)\) is stationary, symmetric and ergodic with respect to the isometries \((\hat{T}_t, t \in {\mathbb Z}^2)\) defined by

Let \(\beta \) be the bijection between \([0,a_n-1]^2\,\cap \,{\mathbb Z}^2\) and \(\mathcal {K}_n\) defined as \(\beta (t)=(x_1,\ldots , x_n)\), where \(x_i\) is the mod \(a_i\) value of \(t\). The push-forward of the uniform measure for \(U_n\) is then the uniform measure on \(\mathcal {K}_n\). Furthermore, \(\beta \) commutes with translations in the sense that if \(\beta (t)=(x_1,\ldots , x_n)\) and \(\tau \in {\mathbb Z}^2\), then \(\beta (t+\tau ) =(x_1+\tau ,\ldots , x_n+\tau )\), where addition in the \(i\)’th coordinate is understood modulo \(a_i\). Similarly, \(\beta \) commutes with rotations and reflections. Hence symmetry, stationarity and ergodicity of \((\mathcal {O}_1+a_1{\mathbb Z}^2, \ldots , \mathcal {O}_n +a_n{\mathbb Z}^2)\) follow from those of \((U_n+a_1{\mathbb Z}^2, \ldots , U_n+a_n{\mathbb Z}^2)\). Symmetry, stationarity and ergodicity of \((\nu ^n_e, e\in E_2)\) and \((\mu ^n_e, e\in E_2)\) follow from the fact that \((\nu ^n_e, e\in E_2)\) and \((\mu ^n_e, e\in E_2)\) are deterministic functions of \((\mathcal {O}_1+a_1{\mathbb Z}^2, \ldots , \mathcal {O}_n +a_n{\mathbb Z}^2)\), and these functions commute with graph isomorphisms of \({\mathbb Z}^2\).
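For small parameters the bijection \(\beta \) can be checked exhaustively. The Python sketch below (helper names are ours; the toy values \(a=(2,4,12)\) are even and each divides the next, as in conditions (i)–(ii), but are otherwise arbitrary) enumerates \(\mathcal {K}_n\) directly from its definition with the convention \(a_0=1, x_0=0\), and confirms that \(t\mapsto (t \bmod a_1,\ldots ,t \bmod a_n)\) maps \([0,a_n-1]^2\cap {\mathbb Z}^2\) onto \(\mathcal {K}_n\), which has exactly \(a_n^2\) elements, and commutes with translations.

```python
import itertools


def mod_vec(t, a):
    """Modulo-a value of t in Z^2 (Python's % already returns values in [0, a-1])."""
    return (t[0] % a, t[1] % a)


def enumerate_K(a):
    """All (x_1, ..., x_n) with x_i in (x_{i-1} + a_{i-1} Z^2) cap [0, a_i - 1]^2,
    using the convention a_0 = 1, x_0 = 0."""
    tuples, prev_a = [()], 1
    for ai in a:
        new = []
        for tup in tuples:
            x_prev = tup[-1] if tup else (0, 0)
            for x in itertools.product(range(ai), repeat=2):
                if (x[0] - x_prev[0]) % prev_a == 0 and (x[1] - x_prev[1]) % prev_a == 0:
                    new.append(tup + (x,))
        tuples, prev_a = new, ai
    return tuples


def beta(t, a):
    """The map t -> (t mod a_1, ..., t mod a_n)."""
    return tuple(mod_vec(t, ai) for ai in a)
```

Injectivity is immediate because the last coordinate of \(\beta (t)\) is \(t\) itself; the check confirms that the image is all of \(\mathcal {K}_n\).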

(b) \(B_n\) contains more than \(2a_n^2\) edges, of which fewer than \(100 b_n\) are such that \(\nu ^{n,0}_e\ne 1\). So by the stationarity of \(\nu ^n\),

The convergence in (3.2) then follows by the Borel–Cantelli lemma.

(c) The definition (3.2) and (a) show that \((\mu _e, e\in E_2)\) is stationary and symmetric, so all that remains to be proved is ergodicity.

Denote by \(\mathcal {K}_\infty \) the family of sequences \((x_1,x_2,\ldots )\), satisfying \(x_i\in (x_{i-1}+a_{i-1}{\mathbb Z}^2)\,\cap \,[0,a_i-1]^2\) for every \(i\). Let \(\mathcal {G}_\infty \) be the \(\sigma \)-field generated by \((\mathcal {O}_1,\mathcal {O}_2,\ldots )\), and (by a slight abuse of notation) for the rest of this proof let \(\mathrm{\mathbb {P} }\) be the law of \((\mathcal {O}_1,\mathcal {O}_2,\ldots )\). Let \(\mathcal {G}_n\) be the sub-\(\sigma \)-field of \(\mathcal {G}_\infty \) generated by \((\mathcal {O}_1,\ldots ,\mathcal {O}_n)\).

If \((x_1,x_2,\ldots )\in \mathcal {K}_\infty \) and \(t\in {\mathbb Z}^2\), define the \(\mathrm{\mathbb {P} }\)-preserving transformation \(t+(x_1,x_2,\ldots )\) as \((t+x_1,t+x_2,\ldots )\), where addition in the \(i\)’th coordinate is modulo \(a_i\). To show ergodicity of \((\mu _e, e \in E_2)\), it is enough to prove ergodicity of \((\mathcal {O}_1,\mathcal {O}_2,\ldots )\), because \((\mu _e, e \in E_2)\) is a deterministic function of it, and this function commutes with graph isomorphisms of \({\mathbb Z}^2\).

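Now let \(A\in \mathcal {G}_\infty \) be invariant, and suppose for contradiction that there is some \(\varepsilon >0\) such that \(\varepsilon < \mathrm{\mathbb {P} }(A)< 1-\varepsilon \). There exists some \(n\) and \(B\in \mathcal {G}_n\) with the property that \(\mathrm{\mathbb {P} }(A\triangle B)<\varepsilon /4\) (where \(\triangle \) is the symmetric difference operator). This also implies that \(3\varepsilon /4<\mathrm{\mathbb {P} }(B)<1- 3\varepsilon /4\). Since \(A+t=A\) and translation by \(t\) preserves \(\mathrm{\mathbb {P} }\), we have for \(t \in {\mathbb Z}^2\) that \(\mathrm{\mathbb {P} }(B\triangle (B+t)) \le \mathrm{\mathbb {P} }(B\triangle A)+\mathrm{\mathbb {P} }(A\triangle (A+t))+\mathrm{\mathbb {P} }((A+t)\triangle (B+t)) = 2\,\mathrm{\mathbb {P} }(A\triangle B)<\varepsilon /2\).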

We now show that we can choose \(t\) so that \(\mathrm{\mathbb {P} }(B\triangle (B+t)) \ge 2\mathrm{\mathbb {P} }(B)\mathrm{\mathbb {P} }(\mathcal {K}_\infty {\setminus }B)\ge \varepsilon /2\), giving a contradiction.
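The final inequality \(2\mathrm{\mathbb {P} }(B)\mathrm{\mathbb {P} }(\mathcal {K}_\infty {\setminus }B)\ge \varepsilon /2\) follows from the bounds on \(\mathrm{\mathbb {P} }(B)\) by concavity. Note that \(\varepsilon < \mathrm{\mathbb {P} }(A)< 1-\varepsilon \) forces \(\varepsilon <1/2\); the short computation is:

```latex
\[
  \varphi(p) = 2p(1-p), \qquad
  2\,\mathbb{P}(B)\,\mathbb{P}(\mathcal{K}_\infty\setminus B) = \varphi(\mathbb{P}(B)),
\]
\[
  \min_{3\varepsilon/4 \le p \le 1-3\varepsilon/4} \varphi(p)
  = \varphi\!\left(\tfrac{3\varepsilon}{4}\right)
  = \tfrac{3\varepsilon}{2}\left(1 - \tfrac{3\varepsilon}{4}\right)
  \ge \tfrac{\varepsilon}{2}
  \quad\Longleftrightarrow\quad \varepsilon \le \tfrac{8}{9}.
\]
```

Since \(\varphi \) is concave and symmetric about \(1/2\), its minimum on the interval is attained at an endpoint, and the condition \(\varepsilon \le 8/9\) holds because \(\varepsilon <1/2\).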

For an \(E\in \mathcal {G}_n\) denote by \(E_n\) the subset of \(\mathcal {K}_n\) such that \((\mathcal {O}_1,\mathcal {O}_2,\ldots )\in E\) if and only if \((\mathcal {O}_1,\ldots , \mathcal {O}_n)\in E_n\). Note that \(\mathrm{\mathbb {P} }(E)=\mathrm{\mathbb {P} }_n (E_n)\). So we want to show that for any \(B\in \mathcal {G}_n\) there exists a \(t\) such that \(\mathrm{\mathbb {P} }_n (B_n\triangle (B_n+t)) \ge 2\mathrm{\mathbb {P} }_n(B_n)\mathrm{\mathbb {P} }_n(\mathcal {K}_n{\setminus }B_n)\).

Consider the following average:

Use

and change the order of summation to obtain

It follows from (3.3)–(3.4) that there exists a \(t\in [0,a_n-1]^2\) such that \(\mathrm{\mathbb {P} }_n (B_n\triangle (B_n+t)) \ge 2\mathrm{\mathbb {P} }_n(B_n)\mathrm{\mathbb {P} }_n(\mathcal {K}_n{\setminus }B_n)\). \(\square \)
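The averaging step can be checked numerically. Via \(\beta \), \(\mathcal {K}_n\) with \(\mathrm{\mathbb {P} }_n\) is identified with the torus \([0,a_n-1]^2\) with uniform measure, and translation by \(t\) becomes a torus shift. For any \(B\) one then has the exact identity that the average over \(t\) of \(\mathrm{\mathbb {P} }_n(B\triangle (B+t))\) equals \(2\mathrm{\mathbb {P} }_n(B)(1-\mathrm{\mathbb {P} }_n(B))\), because \(\sum _t |B\cap (B+t)| = |B|^2\); some \(t\) must do at least as well as the average. We take this to be the content of the omitted displays (3.3)–(3.4), which are not reproduced here. The sketch works on a small torus with a random \(B\) (side 6 and density 0.3 are arbitrary choices) and verifies the identity in exact rational arithmetic.

```python
import random
from fractions import Fraction
from itertools import product

def shift(B, t, a):
    """Translate a subset B of the torus (Z / aZ)^2 by t."""
    return {((x + t[0]) % a, (y + t[1]) % a) for (x, y) in B}

def average_symmetric_difference(B, a):
    """Average over t in [0, a-1]^2 of the uniform measure of B △ (B + t)."""
    size = a * a
    total = Fraction(0)
    for t in product(range(a), repeat=2):
        total += Fraction(len(B ^ shift(B, t, a)), size)
    return total / size

a = 6
rng = random.Random(1)
B = {x for x in product(range(a), repeat=2) if rng.random() < 0.3}
p = Fraction(len(B), a * a)
print(average_symmetric_difference(B, a) == 2 * p * (1 - p))  # True
```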

4 Choice of \(K_n\) and \(\eta _n\)

Let

and let \(X^n\) be the associated Markov process.

Proposition 4.1

For each \(n \ge 1\) there exists a constant \(\sigma _n\), depending only on \(\eta _i, \,K_i, \,1\le i \le n\), such that the QFCLT holds for \(X^n\) with limit \(\sigma _n W\).

Proof

Since \(\mu _e^n\) is stationary, symmetric and ergodic, and \(\mu ^n_e\) is uniformly bounded and bounded away from 0, the result follows from [4, Theorem 6.1]; see also Remarks 6.2 and 6.5 in that paper. (While [18, Theorem 1.1] is stated for the i.i.d. case, the argument there also works in the ergodic case.) \(\square \)

Next, we recall (from [6] for example) how \(\sigma _n\) is connected with the electrical conductivity across a square of side \(a_n\). Let \(k \in \{a_{n-1}, b_n, a_n\}\), and let

Thus \(\mathcal {Q}_k\) gives a tiling of \({\mathbb Z}^2\) by squares of side \(k\) which are disjoint except for their boundaries. Given \(Q \in \mathcal {Q}_k\) and \(m \in \{n-1,n\}\) set

For \(f: Q \rightarrow \mathbb {R}\) set

Thus \(\kappa _n^{-2}\) is just the effective resistance across the square \(B_n\) when bonds are assigned conductances \(\tilde{\mu }^{B_n,n}\).
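The omitted display (4.2) is a Dirichlet-type variational problem. As an illustration only, here is a Python sketch assuming the standard formulation: potential 0 on the bottom row, 1 on the top row, free left and right sides, and plain conductances. The boundary modification \(\tilde{\mu }^{Q,m}\) of the text is not reproduced, so with unit conductances the answer is \((k+1)/k\) rather than exactly 1. The function name `effective_conductance` is hypothetical. The usage lines also illustrate the monotonicity (Rayleigh's principle) behind the bound \(\kappa _n^2(1)\le 1\) in the proof of Theorem 4.2.

```python
def effective_conductance(k, cond, sweeps=2000):
    """Effective conductance between the bottom row (x2 = 0) and the top row (x2 = k)
    of the square {0, ..., k}^2, with free (reflecting) left and right sides.

    cond maps an edge (x, y), endpoints in sorted order, to its conductance;
    edges not listed get conductance 1.  The potential f is held at 0 on the
    bottom row and 1 on the top row and relaxed to be harmonic elsewhere
    (Gauss-Seidel); the returned value is its energy sum_e c_e (f(x) - f(y))^2.
    """
    nodes = [(i, j) for i in range(k + 1) for j in range(k + 1)]

    def c(x, y):
        return cond.get((min(x, y), max(x, y)), 1.0)

    def neighbours(x):
        i, j = x
        for y in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
            if 0 <= y[0] <= k and 0 <= y[1] <= k:
                yield y

    f = {x: 1.0 if x[1] == k else 0.0 for x in nodes}
    for _ in range(sweeps):
        for x in nodes:
            if 0 < x[1] < k:  # not on the top or bottom row
                weights = [(c(x, y), f[y]) for y in neighbours(x)]
                total = sum(w for w, _ in weights)
                if total > 0:
                    f[x] = sum(w * fy for w, fy in weights) / total
    return sum(c(x, y) * (f[x] - f[y]) ** 2
               for x in nodes for y in neighbours(x) if x < y)

print(round(effective_conductance(4, {}), 6))  # 1.25 = (k + 1) / k for unit conductances
# Rayleigh monotonicity: lowering one conductance cannot raise the effective conductance
print(effective_conductance(4, {((2, 1), (2, 2)): 0.1}) < 1.25)  # True
```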

Fix \(n \ge 1\) and for simplicity consider the environment \(\mu ^n\) in the case when \(\mathcal {O}_n=0\). Then \(\mu ^n\) has period \(a_n\) (in both coordinate directions), and \(\mu ^n_{xy}\) for \(x,y \in B_n\) is symmetric with respect to all the symmetries of the square \(B_n\). Because of this symmetry, the limiting conductance matrix will be a multiple \(\sigma _n\) of the identity, and it is sufficient to calculate the variance of \(X^n\) in one coordinate direction.

We wish to construct an \(\mathcal {L}_n\)-harmonic function \(h_n: {\mathbb Z}^2 \rightarrow \mathbb {R}\) so that for all \((x_1, x_2)\in {\mathbb Z}^2\) we have:

It is easy to see by the maximum principle that if such a function exists it is unique. Given such a function \(h_n\), writing \(X^n_t=(X^{n,1}_t, X^{n,2}_t)\) we have \(| h_n(X^{n}_t) - X^{n,2}_t| \le a_n\) and \(h_n(X^n)\) is a martingale. Set

The function \(g_n\) also has period \(a_n\) on \({\mathbb Z}^2\), i.e. \(g_n(x) = g_n(x')\) if \(x-x' \in a_n {\mathbb Z}^2\).

Recall that \(B'_n=[0,a_n-1]^2 \cap {\mathbb Z}^2\), and let \(\psi : {\mathbb Z}^2 \rightarrow B'_n\) be the natural function which maps \({\mathbb Z}^2\) onto the torus \(B'_n\). So \(\psi \) is the identity on \(B'_n\) and has period \(a_n\). Let \(Y_t = \psi (X^n_t)\); then \(Y\) is a Markov process on \(B'_n\) with stationary measure \(\nu _x = a_n^{-2}\) for each \(x \in B'_n\). Then

So, by the ergodic theorem for \(Y\),

To construct \(h_n\) we use the resistance problem (4.2) in the square \(Q=B_n\). Let \(f_n\) be the minimising function for (4.2). By the maximum principle \(f_n\) is unique, and so using the symmetry of \(\mu ^n\) with respect to reflections in the lines \(x_1=a_n/2\) and \(x_2=a_n/2\) we deduce that for \((x_1,x_2) \in B_n\),

Given this function \(f_n\) we construct \(h_n\) by setting

where \(e_1=(1,0)\) and \(e_2=(0,1)\). The function \(h_n\) satisfies (4.3) and is clearly \(\mathcal {L}_n\)-harmonic in the interior of \(B_n\). Some straightforward calculations show that it is also harmonic at points \(x \in {\partial }_i B_n\), and consequently it is harmonic on \({\mathbb Z}^2\). Since \(h_n\) is constant on the lines \(\{(i,j a_n ), 0\le i \le a_n\}\) for \(j=0,1\) we have, using the symmetries of \(h_n\), that

Thus from (4.4)

We now set

Theorem 4.2

There exist constants \(K_n \in [1, 50b_n]\) such that \(\sigma _n=1\) for all \(n\).

Proof

Let \(n \ge 1\); we can assume that \(K_i, 1\le i \le n-1\) have been chosen so that \(\sigma _i=1\) for \(i \le n-1\).

Since \(\sigma _n\) is non-random, we can simplify our notation and avoid the need for translations by assuming that \(\mathcal {O}_k=0\) for \(k=1, \dots , n\); note that this event has strictly positive probability. For \(K \in [0,\infty )\) let \(\kappa ^{2}_n(K)\) be the effective conductance across \(B_n\) as given by (4.2) if we take \(K_n=K\). Since \(B_n\) is finite, \(\kappa _n^2(K)\) is a continuous non-decreasing function of \(K\). We will show that \(\kappa _n^2(1) \le 1\) and \(\kappa ^2_n(K)>1\) for sufficiently large \(K\); by continuity it follows that there exists a \(K_n\) such that \(\kappa ^2_n(K_n)=1\), and thus \(\sigma ^2_n(K_n)=1\).

If \(K=1\) then we have \(\mu ^n_e \le \mu ^{n-1}_e\), with strict inequality for the edges in \(D_n\). By Rayleigh's monotonicity principle, and since \(\sigma _{n-1}=1\), we thus have \(\kappa ^2_n(1) \le 1\). To obtain a lower bound on \(\kappa _n^2(K)\), we use the dual characterization of effective resistance in terms of flows of minimal energy (see [13], and [3] for use in a similar context to the one here).
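The dual (Thomson) characterization says that the effective resistance is the minimum of the energy \(\sum _e I(e)^2/\mu _e\) over unit flows, attained by the electrical current. The smallest nontrivial case, two edges in parallel, can be checked exactly. The conductances 3 and 1 are arbitrary, and `unit_flow_energy` is a hypothetical helper.

```python
from fractions import Fraction

def unit_flow_energy(theta, c1, c2):
    """Energy sum_e I(e)^2 / c_e of the unit flow that sends theta along edge 1
    (conductance c1) and 1 - theta along edge 2 (conductance c2)."""
    return theta ** 2 / c1 + (1 - theta) ** 2 / c2

c1, c2 = Fraction(3), Fraction(1)
r_eff = 1 / (c1 + c2)          # effective resistance of two edges in parallel
theta_star = c1 / (c1 + c2)    # current splits in proportion to conductance

energies = [unit_flow_energy(Fraction(i, 20), c1, c2) for i in range(21)]
print(min(energies) == r_eff, unit_flow_energy(theta_star, c1, c2) == r_eff)  # True True
```

Every admissible flow has energy at least the effective resistance, so the energy of any explicitly constructed flow is an upper bound for \(\kappa ^{-2}\), which is how the flows \(I'\) and \(J\) are used below.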

Let \(Q\) be a square in \(\mathcal {Q}_k\), with lower left corner \(w=(w_1,w_2)\). Let \(Q'\) be the rectangle obtained by removing the top and bottom rows of \(Q\):

A flow on \(Q\) is an antisymmetric function \(I\) on \(Q \times Q\) which satisfies \(I(x,y)=0\) if \(x \not \sim y, \,I(x,y)=-I(y,x)\), and

Let \({\partial }^+ Q =\{ (x_1, w_2+k): w_1 \le x_1 \le w_1+k \}\) be the top of \(Q\). The flux of a flow \(I\) is

For a flow \(I\) and \(m \in \{n-1,n\}\) set

This is the energy of the flow \(I\) in the electrical network given by \(Q\) with conductances \((\widetilde{\mu }^{m,Q}_e)\). If \(\mathcal {I}(Q)\) is the set of flows on \(Q\) with flux 1, then

Let \(I_{n-1}\) be the optimal flow for \(\kappa ^{-2}_{n-1}\). The square \(B_n\) consists of \(m_n^2 = a_n^2/a_{n-1}^2\) copies of \(B_{n-1}\); define a preliminary flow \(I'\) by placing a replica of \(m_n^{-1} I_{n-1}\) in each of these copies. For each square \(Q \in \mathcal {Q}_{a_{n-1}}\) with \(Q\subset B_n\) we have \(E^{n-1}_Q(I',I')=m_n^{-2}\), and since there are \(m_n^2\) of these squares we have \(E^{n-1}_{B_n}(I',I')=1\).
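The bookkeeping for \(I'\) uses only that energy is quadratic in the flow, together with \(E(I_{n-1},I_{n-1})=\kappa _{n-1}^{-2}=1\), which we take to follow from the inductive choice \(\sigma _{n-1}=1\) (this is implicit in the statement \(E^{n-1}_Q(I',I')=m_n^{-2}\)):

```latex
\[
  E^{n-1}_Q(I',I') = m_n^{-2}\,E^{n-1}_{B_{n-1}}(I_{n-1},I_{n-1}) = m_n^{-2}\,\kappa_{n-1}^{-2} = m_n^{-2},
  \qquad
  E^{n-1}_{B_n}(I',I') = m_n^{2}\cdot m_n^{-2} = 1,
\]
\[
  \text{flux of } I' \text{ through the top of } B_n
  = \underbrace{m_n}_{\text{columns of copies}} \cdot \underbrace{m_n^{-1}}_{\text{flux per column}} = 1 .
\]
```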

We now look at the tiling of \(B_n\) by squares in \(\mathcal {Q}_{b_n}\); recall that \( \ell _n = a_n/b_n\) and that \(\ell _n\) is an integer. For each \(Q \in \mathcal {Q}_{b_n}\) we have \(E^{n-1}_Q(I',I')= \ell _n^{-2}\). Label these squares by \((i,j)\) with \(1\le i,j\le \ell _n\).

We now describe modifications to the flow \(I'\) in a square \(Q\) of side \(b_n\). For simplicity, take first \(Q=[0, b_n]^2\). Set \(A_1 = \{ x =(x_1, x_2) \in Q: x_1 \ge x_2\}\), and \(A_2 = \{ x =(x_1, x_2) \in Q: x_2 \ge x_1\}\). Given any edge \(e=(x,y)\) in \(E(Q)\), either \(x,y \in A_1\) or else \(x,y \in A_2\). For \(x =(x_1,x_2) \in Q\) set \(r(x)=(x_2,x_1)\). Define a new flow by

The flow \(I'\) runs from bottom to top of the square, and the modified flow \(I^*\) begins at the bottom, and emerges on the left side of the square. As in [3, Proposition 3.2] we have \(E_Q(I^*,I^*)\le E_Q(I',I')=\ell _n^{-2}\). Thus ‘making a flow turn a corner’ costs no more, in terms of energy, than letting it run straight on.

Suppose we now consider the flow \(I'\) in a column \((i_1, j), 1\le j \le \ell _n\), and we wish to make the flow avoid an obstacle square \((i_1, j_1)\). Then we can make the flow take a left turn in \((i_1, j_1-1)\), and then a right turn in \((i_1-1, j_1-1)\) so that it resumes its overall vertical direction. This gives rise to two flows in \((i_1-1, j_1-1)\): the original flow \(I'\) plus the new flow. As in [3], the combined flow in the square \((i_1-1, j_1-1)\) has energy less than \(4 \ell _n^{-2}\). If we carry the combined flow vertically through the square \((i_1-1,j_1)\), and make the similar modifications above the obstacle, then we obtain overall a new flow \(J'\) which matches \(I'\) except on the 6 squares \((i,j), i_1-1\le i \le i_1, j_1-1\le j \le j_1+1\). The energy of the original flow in these 6 squares is \(6\ell _n^{-2}\), while the new flow will have energy less than \(14\ell _n^{-2}\): we have a ‘cost’ of at most \(4\ell _n^{-2}\) in each of the 3 squares \((i_1-1,j), j_1-1\le j \le j_1+1\), zero in \((i_1,j_1)\) and at most \(\ell _n^{-2}\) in each of the two remaining squares. Thus the overall energy cost of the diversion is at most \(8 \ell _n^{-2}\) (see Fig. 4).
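Since energy is quadratic in the flow, the sum of two flows with energies \(e_1,e_2\) has energy at most \(2e_1+2e_2\) (apply \((u+v)^2\le 2u^2+2v^2\) edge by edge); this is one way to see the bound \(4\ell _n^{-2}\) for the combined flow. The tally of the six squares, in units of \(\ell _n^{-2}\), can then be checked mechanically; the helper name `combined_energy_bound` is hypothetical.

```python
from fractions import Fraction

def combined_energy_bound(e1, e2):
    """Upper bound for the energy of the sum of two flows with energies e1, e2,
    from (u + v)^2 <= 2u^2 + 2v^2 applied edge by edge."""
    return 2 * e1 + 2 * e2

unit = Fraction(1)  # one unit = ell_n**-2, the energy of I' in one square of side b_n

detour_column = 3 * combined_energy_bound(unit, unit)  # squares (i1-1, j), j1-1 <= j <= j1+1
obstacle_square = 0 * unit                             # square (i1, j1): no flow
remaining_squares = 2 * unit                           # (i1, j1-1) and (i1, j1+1), as quoted in the text

new_energy = detour_column + obstacle_square + remaining_squares
old_energy = 6 * unit
print(new_energy, new_energy - old_energy)  # 14 8
```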

We now use a similar procedure to construct a modification of \(I'\) in \(B_n\) with conductances \((\tilde{\mu }_e^{B_n,n})\). We have four obstacles, two oriented vertically and resembling an \(I\), and two horizontal ones. The crossbars on the \(I\), that is the sets \(D^{01}\), contain vertical edges with conductance \(\eta _n \ll 1\). We therefore modify \(I'\) to avoid these edges, and the squares with side \(b_n\) which contain them.

Consider the left vertical \(I\), which has center \((a_n'-\beta _n, a_n')\). Let \((i_1,j_1)\) be the square which contains at the top the bottom left branch of the \(I\), so that this square has top right corner \((a'_n-\beta _n, a'_n-10b_n)\). The top of this square contains vertical edges with conductance \(\eta _n\), so we need to build a flow which avoids these. We therefore (as above) make the flow in the column \(i_1\) take a left turn in square \((i_1,j_1-1)\), a right turn in \((i_1-1,j_1-1)\), carry it vertically through \((i_1-1,j_1)\), take a right turn in \((i_1-1,j_1+1)\) and carry it horizontally through \((i_1,j_1+1)\) into the edges of high conductance at the right side of \((i_1,j_1+1)\). The same pattern is then repeated on the other 3 branches of the left obstacle \(I\), and on the other vertical obstacle.

We now bound the energy of the new flow \(J\), and initially we make the calculations just for the change in columns \(i_1-1\) and \(i_1\) below and to the left of the point \((a_n'-\beta _n, a_n')\). Write \(M=10\) for half the overall height of the obstacle, measured in units of \(b_n\). There are \(2(M+2)\) squares in this region where \(I'\) and \(J\) differ; these have labels \((i,j)\) with \(i=i_1-1, i_1\) and \(j_1-1\le j \le j_1+ M\). We begin by calculating the energy if \(K=\infty \). In 3 of these squares the new flow \(J\) has energy at most \(4 \ell _n^{-2}\), in \(M+1\) of them it has energy at most \(\ell _n^{-2}\), and in the remaining \(M\) it has zero energy. So writing \(R\) for this region we have \(E_R(I',I')= (2M+4)\ell _n^{-2} \), while

So

This bound is for \(K=\infty \). Now suppose that \(K<\infty \). The vertical high conductance part of the obstacle carries a current \(2 /\ell _n\) and has height \(M b_n\), so the energy of \(J\) on its edges is at most

The last inequality holds because \(\ell _n \ge \sqrt{n}\). Finally it is necessary to modify \(I'\) near the 4 ends of the two horizontal obstacles. For this, we just modify \(I'\) in squares of side \(a_{n-1}\), and arguments similar to the above show that for the new flow \(J\) in this region \(R'\), which consists of \(4+ 2 b_n/a_{n-1}\) squares of side \(a_{n-1}\), we have

The new flow \(J\) avoids the edges where \(\mu ^n_e=\eta _n\). Combining these terms we obtain for the whole square \(B_n\), using (4.8)–(4.10),

So if \(K' = 50 b_n\), we have

Hence there exists \(K_n \in [1,50 b_n)\) such that \(\kappa _n^2(K_n)=1\). \(\square \)
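For the case \(K=\infty \), the comparison in the region \(R\) follows directly from the square counts quoted in the proof (energies in units of \(\ell _n^{-2}\)). This is a worked version of that count, not a reproduction of the omitted displays:

```latex
\[
  E_R(J,J) \le \big(3\cdot 4 + (M+1)\cdot 1 + M\cdot 0\big)\,\ell_n^{-2} = (M+13)\,\ell_n^{-2},
  \qquad
  E_R(I',I') = (2M+4)\,\ell_n^{-2},
\]
\[
  E_R(I',I') - E_R(J,J) \ge (M-9)\,\ell_n^{-2} = \ell_n^{-2} \qquad (M = 10).
\]
```

So with \(M=10\) the diversion in \(R\) saves at least \(\ell _n^{-2}\) of energy when \(K=\infty \).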

Lemma 4.3

Let \(p<1\). Then \(\mathrm{\mathbb {E} }\mu _e^p < \infty \), and \(\mathrm{\mathbb {E} }\mu _e^{-p} <\infty \).

Proof

Since \(\mu _e^n = \eta _n =b_n^{-1-1/n}\) on a proportion \(cb_n/a_n^2\) of the edges in \(B_n\), we have

Here we used the fact that \(b_n \ge 2^{n}\). Similarly,

\(\square \)

Remark 4.4

Using (4.5) and the methods of [3], one can show that for small enough \(\delta \), \(\kappa _n^2(\delta b_n) < 1\), so that \(K_n \asymp b_n\) and consequently \(\mathrm{\mathbb {E} }\mu _e =\infty \). Note that we also have

From now on we take \(K_n\) to be such that \(\sigma _n=1\) for all \(n\).

5 Weak invariance principle

Let \(X=(X_t, t \in \mathbb {R}_+, P^x_{\omega }, x \in {\mathbb Z}^d)\) be the process with generator (1.1) associated with the environment \((\mu _e)\). Recall (4.1) and the definition of \(X^n\), and define \(X^{(n,\varepsilon )}\) by

Let \(P^{\omega }_n(\varepsilon )\) be the law of \(X^{(n,\varepsilon )}\) on \(\mathcal {D}=\mathcal {D}_1\), and \(P^{\omega }(\varepsilon )\) be the law of \(X^{(\varepsilon )}\).

Recall that the Prokhorov distance \({d_P}\) between probability measures on \(\mathcal {D}_1\) is defined as follows (see [8, p. 238]). For \(A \subset \mathcal {D}\), let \(\mathcal {B}(A,\varepsilon ) = \{x\in \mathcal {D}: d_S (x, A) < \varepsilon \}\). For probability measures \(P\) and \(Q\) on \(\mathcal {D}, \,{d_P}(P,Q) \) is the infimum of \(\varepsilon >0\) such that \(P(A) \le Q(\mathcal {B}(A,\varepsilon )) + \varepsilon \) and \(Q(A) \le P(\mathcal {B}(A,\varepsilon )) + \varepsilon \) for all Borel sets \(A \subset \mathcal {D}\). Recall that convergence in the metric \({d_P}\) is equivalent to the weak convergence of measures.
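The definition can be made concrete on a finite metric space, where the condition over Borel sets becomes a finite check over subsets. The brute-force sketch below is only a toy: the Prokhorov distance in the text lives on \(\mathcal {D}_1\) with the Skorokhod metric \(d_S\), which this does not attempt, and the function name, tolerance and floating-point slack are our assumptions. It uses that the set of admissible \(\varepsilon \) is closed upwards and always contains \(\varepsilon =1\), so bisection on \([0,1]\) applies.

```python
from itertools import combinations

def prokhorov_distance(points, p, q, dist, tol=1e-9):
    """Prokhorov distance between probability vectors p, q on a finite metric space,
    by brute force over all nonempty subsets A and bisection in eps (toy sizes only)."""
    n = len(points)
    slack = 1e-12  # guards against floating-point rounding in the comparisons

    def mass_near(mass, A, eps):
        # mass of the open eps-neighbourhood {x : d(x, A) < eps}
        return sum(mass[j] for j in range(n)
                   if any(dist(points[j], points[i]) < eps for i in A))

    def admissible(eps):
        for r in range(1, n + 1):
            for A in combinations(range(n), r):
                if sum(p[i] for i in A) > mass_near(q, A, eps) + eps + slack:
                    return False
                if sum(q[i] for i in A) > mass_near(p, A, eps) + eps + slack:
                    return False
        return True

    lo, hi = 0.0, 1.0  # eps = 1 is always admissible, so d_P <= 1
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if admissible(mid):
            hi = mid
        else:
            lo = mid
    return hi

def line_distance(x, y):
    return abs(x - y)

print(round(prokhorov_distance([0.0, 0.3], [1, 0], [0, 1], line_distance), 6))        # 0.3
print(round(prokhorov_distance([0.0, 10.0], [0.5, 0.5], [1, 0], line_distance), 6))   # 0.5
```

The first example moves a point mass a short distance, so \(d_P\) is the distance moved; in the second, half the mass is far away, so \(d_P\) is the mass that cannot be matched nearby.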

To prove the WFCLT it is sufficient to prove:

Theorem 5.1

There exists a sequence \((b_n)\) such that if \(\varepsilon _n = 1/b_n\) then \( \lim _{n \rightarrow \infty } {d_P}( P^{\omega }(\varepsilon _n), P_{\text {BM}}) =0\) in \(\mathrm{\mathbb {P} }\)-probability.

Proof

Let \(n \ge 1\) and suppose that \(a_k, b_k\) have been chosen for \(k \le n-1\). By Proposition 4.1 we have for each \({\omega }\) that \({d_P}( P^{\omega }_{n-1}(\varepsilon ), P_{\text {BM}}) \rightarrow 0\) as \(\varepsilon \rightarrow 0\). Note that the environment \(\mu ^{n-1}\) takes only finitely many values, since it is determined by \((\mathcal {O}_1,\ldots ,\mathcal {O}_{n-1})\). So we can choose \(b_n\) large enough so that

Now for \(\lambda >1\) set

We have

We can couple the processes \(X^{n-1}\) and \(X\) so that the two processes agree up to the first time \(X^{n-1}\) hits the obstacle set \(\bigcup _{k=n}^\infty D_k\). Let \(\xi _n({\omega }) = \min \{ |x| : x \in \bigcup _{k=n}^\infty D_k({\omega })\} \), and

Let \(m \ge n\), and consider the probability that 0 is within a distance \(\lambda b_n\) of \(D_m\). Then \(\mathcal {O}_m\) has to lie in a set of area \(c \lambda b_n b_m\), and so

Thus

Suppose that \({\omega }\in F_n\) and \(n\ge 2\) so that \(n^{-1} < \lambda /2\). Then using the coupling above, we have

If now \(\delta >0\), choose \(\lambda >1\) such that \(P_{\text {BM}}(G(\lambda /2)^c) < \delta /2\), and then \(N> 2/ \delta \) large enough so that \(\mathrm{\mathbb {P} }(F_n^c) < \delta \) for \(n \ge N\). Combining the estimates above, if \(n \ge N\) and \({\omega }\in F_n\), then \({d_P}( P^{\omega }(\varepsilon _n), P_{\text {BM}}) < \delta \). Hence for \(n \ge N\), \(\mathrm{\mathbb {P} }( {d_P}( P^{\omega }(\varepsilon _n), P_{\text {BM}}) > \delta ) \le \mathrm{\mathbb {P} }(F_n^c) < \delta \), which proves the convergence in probability. \(\square \)

6 Quenched invariance principle does not hold

We will prove that the QFCLT does not hold for the processes \(X^{(\varepsilon _n)}\), and will argue by contradiction. If the QFCLT holds for \(X\) with limit \(\Sigma W\) then since the WFCLT holds for \(X^{(\varepsilon _n)}\) with diffusion constant 1 in every direction (by isotropy of the environment), \(\Sigma \) must be the identity matrix.

Let \(w^0_n=( a'_n - 10 b_n -1, a'_n-\beta _n)\) be the centre point on the left edge of the lowest of the four \(n\)th level obstacles in the set \(D^0_n\), and let \(z^0_n=w^0_n - \left( \tfrac{1}{2} b_n,0\right) \). Thus \(z^0_n\) is situated a distance \(\frac{1}{2}b_n\) to the left of \(w^0_n\) (see Fig. 5). Let

Lemma 6.1

For \(\lambda >0\) the event \(\{0 \in H_n(\lambda ) \}\) occurs for infinitely many \(n\), \(\mathrm{\mathbb {P} }\)-a.s.

Proof

Let \(\mathcal {G}_k=\sigma (\mathcal {O}_1, \dots , \mathcal {O}_k)\). Given the values of \(\mathcal {O}_1, \dots , \mathcal {O}_{n-1}\), the r.v. \(\mathcal {O}_n\) is uniformly distributed over \(m_n^2\) points, with spacing \(a_{n-1}\), and has to lie in a square with side \(2 \lambda b_n \) in order for the event \(\{0 \in H_n(\lambda ) \}\) to occur. Thus approximately \(( 2\lambda b_n/a_{n-1})^2\) of these values of \(\mathcal {O}_n\) will cause \(\{0 \in H_n(\lambda ) \}\) to occur. So

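The conclusion then follows from Lévy's extension of the second Borel–Cantelli lemma: if \(A_n \in \mathcal {G}_n\) and \(\sum _n \mathrm{\mathbb {P} }(A_n \mid \mathcal {G}_{n-1}) = \infty \) a.s., then \(A_n\) occurs for infinitely many \(n\) a.s. \(\square \)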
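Spelled out, with \(m_n=a_n/a_{n-1}\) and \(\ell _n=a_n/b_n\), the count in the proof gives:

```latex
\[
  \mathbb{P}\big(0\in H_n(\lambda)\,\big|\,\mathcal{G}_{n-1}\big)
  \approx \frac{(2\lambda b_n/a_{n-1})^2}{m_n^{2}}
  = \frac{4\lambda^2 b_n^2}{a_n^2}
  = \frac{4\lambda^2}{\ell_n^{2}} .
\]
```

The conditional Borel–Cantelli argument therefore needs \(\sum _n \ell _n^{-2}=\infty \). This holds, for example, if \(\ell _n^2\) is of order \(n\), the two-dimensional case of the choice \(b_n=a_n n^{-1/d}\) in Remark 6.4; that this is the choice made for \(d=2\) is an assumption here, since the definition of \(b_n\) is not reproduced in this section.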

Lemma 6.2

With \(\mathrm{\mathbb {P} }\)-probability 1, the event \(G_n(\lambda ) = \{ H_n(\lambda ) \cap (\bigcup _{m=n+1}^\infty D_m ) \ne \emptyset \}\) occurs for only finitely many \(n\).

Proof

Let \(m>n\). Then as in the previous lemma, by considering possible positions of \(\mathcal {O}_m\), we have

Since \(b_{m} \ge 2^m b_{m-1} \ge 2^m b_n\),

and the conclusion follows by Borel–Cantelli. \(\square \)

The first two lemmas have shown, first, that 0 is close to an \(n\)th level obstacle infinitely often, and second, that higher level obstacles do not interfere. Our final task is to show that in this situation the process \(X\) is unlikely to cross the strip of low conductance edges.

Lemma 6.3

Suppose that \(0 \in H_n(1/8)\) and \(H_n(4) \cap \big (\bigcup _{m=n+1}^\infty D_m\big ) =\emptyset \). Write \(X_t=(X^1_t, X^2_t)\), and let

Then there exists a constant \(A_{n-1}=A_{n-1}(\eta _1, K_1, \dots , \eta _{n-1}, K_{n-1})\) such that

Proof

Let \(w_n=(x_n,y_n)\) be the element of \(\{ w^0_n + \mathcal {O}_n + a_n x, x\in {\mathbb Z}^2\}\) which is closest to 0. Then, under the hypotheses of the Lemma, we have \(3 b_n/8 \le x_n \le 5 b_n/8\), and \(|y_n| \le b_n/8\). Thus the square \(B_\infty (0,2b_n)\) intersects the obstacle set \(D_n\), but does not intersect \(D_m\) for any \(m>n\). Hence if \(F\) holds then we can couple \(X^n\) and \(X\) so that \(X^n_t=X_t\) for \(0 \le t\le b_n^2\).

Let \(\mathbb {H}=\{ (x,y): x \le x_n \}\), and \(J=B \cap {\partial }_i \mathbb {H}\). If \(F\) holds then \(X^n\) has to cross the line \(J\), and therefore has to cross an edge of conductance \(\eta _n\). Let \(Y\) be the process with edge conductances \(\mu '_e\), where \(\mu '_e=\mu ^{n-1}_e\) except that \(\mu '_e=0\) if \(e=\{ (x_n,y), (x_n+1,y)\}\) for \(y \in {\mathbb Z}\). Thus the line \({\partial }_i \mathbb {H}\) is a reflecting barrier for \(Y\). Let

be the amount of time spent by \(Y\) in \(J\), and

Assuming that \(G\) holds, let \(\xi _1\) be an exponential random variable with mean 1, independent of \(Y\), set \(T= \inf \{s: L_s > \xi _1/\eta _n \}\), let \(X^n_t=Y_t\) on \([0,T)\), and let \(X^n_T = Y_T + (1,0)\). Note that one can complete the definition of \(X^n_t\) for \(t\ge T\) in such a way that the process \(X^n\) has the same distribution as the process defined by (4.1). We have

So

The process \(Y\) has conductances bounded away from 0 and infinity on \(\mathbb {H}\), so by [11] \(Y\) has a transition probability \(p_t(w,z)\) which satisfies

In addition if \(r=|w-z|\ge A\) then \(p_t(w,z) \le p_r(w,z)\). Here \(A=A_{n-1}\) is a possibly large constant which depends on \((\eta _i, K_i, 1\le i \le n-1)\). We can take \(A \ge 10\). For \(w\in J\) we have \(|w| \ge b_n/4\) and so provided \(b_n \ge 8A\),

So since \(|J| \le 2b_n\),

Finally, the construction of \(X^n\) from \(Y\) gives that \(P^0_{\omega }(F) \le P^0_{\omega }( G \cap \{ T \le b_n^2 \} )\). \(\square \)
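The exponential clock construction of \(T\) is what converts occupation time into a crossing probability. Conditionally on \(Y\), and with \(\xi _1\) independent of \(Y\), one has \(\{T\le t\}=\{\xi _1\le \eta _n L_t\}\) up to a null set, so \(P(T\le t\mid Y)=1-e^{-\eta _n L_t}\le \eta _n L_t\). The Monte Carlo sketch below checks this for one fixed value of \(L_t\); the numbers eta = 0.01 and L_t = 12 and the function name are illustrative assumptions.

```python
import math
import random

def crossing_probability_mc(eta, occupation, samples=200_000, seed=0):
    """Monte Carlo estimate of P(T <= t | Y), where T = inf{s : L_s > xi / eta},
    xi ~ Exp(1) is independent of Y, and `occupation` is the value L_t."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(samples) if rng.expovariate(1.0) / eta <= occupation)
    return hits / samples

eta, L_t = 0.01, 12.0
exact = 1 - math.exp(-eta * L_t)  # P(xi <= eta * L_t)
estimate = crossing_probability_mc(eta, L_t)
print(round(exact, 4), exact <= eta * L_t)  # 0.1131 True
print(abs(estimate - exact) < 0.01)          # True (Monte Carlo error is about 7e-4 here)
```

Taking expectations in \(1-e^{-\eta _n L_t}\le \eta _n L_t\) is what makes a small \(\eta _n\), together with a bound on the expected time \(Y\) spends in \(J\), give a small probability of crossing.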

Proof of Theorem 1.4(b)

We now choose \(b_n\) large enough so that for all \(n\ge 2\),

Let \(W_t = (W^1_t, W^2_t)\) denote two-dimensional Brownian motion with \(W_0=0\), and let \(P_{\text {BM}}\) denote its distribution. For a two-dimensional process \(Z=(Z^1, Z^2)\), define the event

The support theorem implies that \(p_1 := P_{\text {BM}}(F(W)) >0\). Write \(F_n = F(X^{(\varepsilon _n)})\).

Let \(N_1 = N_1({\omega })\) be such that the event \(G_n(4)\) defined in Lemma 6.2 does not occur for \(n \ge N_1\). Let \(\Lambda =\Lambda ({\omega })\) be the set of \(n > N_1\) such that \(0 \in H_n\left( \tfrac{1}{8}\right) \). Then \(\mathrm{\mathbb {P} }(\Lambda \hbox { is infinite})=1\) by Lemma 6.1. By Lemma 6.3 and the choice of \(b_n\) in (6.1) we have \( P^0_{\omega }( F_n) < cn^{-1}\) for \(n\in \Lambda \). So

Thus whenever \(\Lambda ({\omega })\) is infinite the sequence of processes \( (X^{(\varepsilon _n)}_t, t \in [0,1], P^0_{\omega }), \, n \ge 1, \) cannot converge to \(W\), and the QFCLT therefore fails. \(\square \)

Remark 6.4

We can construct similar obstacle sets in \({\mathbb Z}^d\) with \(d \ge 3\), and we now outline briefly the main differences from the \(d=2\) case.

We take \(b_n = a_n n^{-1/d}\), so that \(\sum b_n^d/a_n^d =\infty \), and the analogue of Lemma 6.2 holds. In a cube of side \(a_n\) we take \(2d\) obstacle sets, arranged in symmetric fashion around the centre of the cube. Each obstacle has an associated ‘direction’ \(i \in \{1, \dots , d\}\). An obstacle of direction \(i\) consists of \(2 b_n^{d-1}\) edges of low conductance \(\eta _n\), arranged in two \((d-1)\)-dimensional ‘plates’ a distance \(M b_n\) apart, with each edge in the direction \(i\). The two plates are connected by \((d-1)\)-dimensional plates of high conductance \(K_n\). Thus the total number of edges in the obstacles is \(c b_n^{d-1}\), so taking \(a_n/a_{n-1}\) large enough, we have \(\sum b_n^{d-1}/a_n^d<\infty \), and the same arguments as in Sect. 3 show that the environment is well defined, stationary and ergodic.

The conductivity across a cube of side \(N\) in \({\mathbb Z}^d\) is of order \(N^{d-2}\). Thus if we write \(\sigma ^2_n(\eta _n, K_n)\) for the limiting diffusion constant of the process \(X^n\), and \(R_n=R_n(\eta _n,K_n)\) for the effective resistance across a cube of side \(a_n\), then (4.5) is replaced by:

For the QFCLT to fail, we need \(\eta _n = o(b_n^{-1})\), as in the two-dimensional case. With this choice we have \(R_n(\eta _n, 0)^{-1} < a_n^{d-2}\), and as in Theorem 4.2 we need to show that if \(K_n\) is large enough then \(R_n(\eta _n, K_n)^{-1} > a_n^{d-2}\).

Recall that \(\ell _n=a_n/b_n\). Let \(I'\) be as in Theorem 4.2; then \(I'\) has flux \(\ell _n^{-d+1}\) across each sub-cube \(Q'\) of side \(b_n\). If the sub-cube does not intersect the obstacles at level \(n\), then \(E_{Q'}(I',I')= \ell _n^{-d} a_n^{2-d}\). The ‘cost’ of diverting \(I'\) around a low conductance obstacle is therefore of order \(c \ell _n^{-d} a_n^{2-d}= c b_n^{-d+2} \ell _n^{-2d+2}\) (see [17]). As in Theorem 4.2 we divert the flow onto the regions of high conductance, so as to obtain some cubes in which the new flow has zero energy. To estimate the energy in the high conductance bonds, note that we have \(2(d-1)b_n^{d-2}\) sets of parallel paths of edges of high conductance, and each path is of length \(M b_n\), so the flow in each edge is \(F_n= \ell _n^{-d+1}/\big ((2d-2)\, b_n^{d-2}\big )\). Hence the total energy dissipation in the high conductance edges is

We therefore need

that is, we need to choose \(K_n > c M b_n\) for some constant \(c\). Since

we find that in \(d\ge 3\) our example also has \(\mathrm{\mathbb {E} }\mu _e^{\pm p}<\infty \) if and only if \(p<1\).
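Assuming each of the \(2(d-1)b_n^{d-2}\) high conductance paths is a series of \(Mb_n\) edges of conductance \(K_n\), each carrying the current \(F_n\), the energy \(\sum _e I(e)^2/\mu _e\) on these edges can be computed and compared with the diversion cost \(c\,b_n^{2-d}\ell _n^{-2d+2}\). This is a reconstruction under those assumptions, not the omitted display itself:

```latex
\[
  2(d-1)\,b_n^{d-2}\cdot M b_n \cdot \frac{F_n^2}{K_n}
  = 2(d-1)\,b_n^{d-2}\cdot M b_n \cdot \frac{\ell_n^{-2d+2}}{(2d-2)^2\, b_n^{2d-4}\,K_n}
  = \frac{M\, b_n^{3-d}\,\ell_n^{-2d+2}}{2(d-1)\,K_n},
\]
\[
  \frac{M\, b_n^{3-d}\,\ell_n^{-2d+2}}{2(d-1)\,K_n} \le c\, b_n^{2-d}\,\ell_n^{-2d+2}
  \quad\Longleftrightarrow\quad
  K_n \ge \frac{M b_n}{2(d-1)\,c},
\]
```

which is consistent, up to the value of the constant, with the requirement \(K_n > cMb_n\).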

References

Andres, S., Deuschel, J.-D., Slowik, M.: Invariance principle for the random conductance model in a degenerate ergodic environment. Preprint (2013)

Andres, S., Barlow, M.T., Deuschel, J.-D., Hambly, B.M.: Invariance principle for the random conductance model. Probab. Theory Rel. Fields 156(3–4), 535–580 (2013)

Barlow, M.T., Bass, R.F.: On the resistance of the Sierpinski carpet. Proc. R. Soc. Lond. A 431, 345–360 (1990)

Barlow, M.T., Deuschel, J.-D.: Invariance principle for the random conductance model with unbounded conductances. Ann. Probab. 38, 234–276 (2010)

Barlow, M.T., Burdzy, K., Timar, A.: Comparison of quenched and annealed invariance principles for random conductance model: Part II. In: Festschrift for M. Fukushima (to appear). World Scientific, New York (2015)

Bensoussan, A., Lions, J.-L., Papanicolaou, G.: Asymptotic analysis for periodic structures. North-Holland, Amsterdam (1978)

Berger, N., Biskup, M.: Quenched invariance principle for simple random walk on percolation clusters. Probab. Theory Rel. Fields 137(1–2), 83–120 (2007)

Billingsley, P.: Convergence of probability measures, 2nd edn. In: Wiley Series in Probability and Statistics: Probability and Statistics. A Wiley-Interscience Publication. Wiley, New York (1999)

Biskup, M.: Recent progress on the random conductance model. Probab. Surv. 8, 294–373 (2011)

Biskup, M., Prescott, T.M.: Functional CLT for random walk among bounded random conductances. Electron. J. Probab. 12(paper 49), 1323–1348 (2007)

Delmotte, T.: Parabolic Harnack inequality and estimates of Markov chains on graphs. Rev. Math. Iberoam. 15, 181–232 (1999)

De Masi, A., Ferrari, P.A., Goldstein, S., Wick, W.D.: An invariance principle for reversible Markov processes. Applications to random motions in random environments. J. Stat. Phys. 55, 787–855 (1989)

Doyle, P., Snell, J.L.: Random Walks and Electric Networks. Mathematical Association of America, Washington, DC (1984). arXiv:math/0001057

Kipnis, C., Varadhan, S.R.S.: Central limit theorem for additive functionals of reversible Markov processes and applications to simple exclusions. Commun. Math. Phys. 104(1), 1–19 (1986)

Mathieu, P., Piatnitski, A.: Quenched invariance principles for random walks on percolation clusters. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 463(2085), 2287–2307 (2007)

Mathieu, P.: Quenched invariance principles for random walks with random conductances. J. Stat. Phys. 130(5), 1025–1046 (2008)

McGillivray, I.: Resistance in higher-dimensional Sierpiński carpets. Potential Anal. 16(3), 289–303 (2002)

Sidoravicius, V., Sznitman, A.-S.: Quenched invariance principles for walks on clusters of percolation or among random conductances. Probab. Theory Rel. Fields 129(2), 219–244 (2004)

Zhikov, V.V., Piatnitskii, A.L.: Homogenization of random singular structures and random measures (Russian). Izv. Ross. Akad. Nauk Ser. Mat. 70(1), 23–74 (2006); translation in Izv. Math. 70(1), 19–67 (2006)

Acknowledgments

We are grateful to Emmanuel Rio for very helpful advice, and Pierre Mathieu, Jean-Dominique Deuschel and Marek Biskup for some very useful discussions. In addition we want to thank a referee for very careful reading.


Additional information

Research supported in part by NSF Grant DMS-1206276, by NSERC, Canada, and Trinity College, Cambridge, and by MTA Rényi “Lendulet” Groups and Graphs Research Group.


Cite this article

Barlow, M., Burdzy, K. & Timár, Á. Comparison of quenched and annealed invariance principles for random conductance model. Probab. Theory Relat. Fields 164, 741–770 (2016). https://doi.org/10.1007/s00440-015-0618-8


DOI: https://doi.org/10.1007/s00440-015-0618-8