Abstract

The mean field limit of large-population symmetric stochastic differential games is derived in a general setting, with and without common noise, on a finite time horizon. Minimal assumptions are imposed on equilibrium strategies, which may be asymmetric and based on full information. It is shown that approximate Nash equilibria in the n-player games admit certain weak limits as n tends to infinity, and every limit is a weak solution of the mean field game (MFG). Conversely, every weak MFG solution can be obtained as the limit of a sequence of approximate Nash equilibria in the n-player games. Thus, the MFG precisely characterizes the possible limiting equilibrium behavior of the n-player games. Even in the setting without common noise, the empirical state distributions may admit stochastic limits which cannot be described by the usual notion of MFG solution.

Similar content being viewed by others

1 Introduction

A decade of active research on mean field games (MFGs) has been driven by a primarily intuitive connection with large-population stochastic differential games of a certain symmetric type. The idea, which began with the pioneering work of Lasry and Lions [31] and Huang et al. [21], is that a large-population game of this type should behave similarly to its MFG counterpart, which may be thought of as an infinite-player version of the game. Rigorous analysis of this connection, however, remains restricted in scope. Following [21], the vast majority of the literature works backward from the mean field limit, in the sense that a solution of the MFG is used to construct approximate Nash equilibria for the corresponding n-player games for large n. Fewer papers [2, 14, 15, 31] have approached from the other direction: given for each n a Nash equilibrium for the n-player game, in what sense (if any) do these equilibria converge as n tends to infinity? The goal of this paper is to address both of these problems in a general framework.

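More precisely, we study an n-player stochastic differential game, in which the private state processes \(X^1,\ldots ,X^n\) of the agents (or players) are given by the following dynamics:

$$\begin{aligned} dX^i_t = b(t,X^i_t,{\widehat{\mu }}^n_t,\alpha ^i_t)dt + \sigma (t,X^i_t,{\widehat{\mu }}^n_t)dW^i_t + \sigma _0(t,X^i_t,{\widehat{\mu }}^n_t)dB_t, \quad {\widehat{\mu }}^n_t = \frac{1}{n}\sum _{k=1}^n\delta _{X^k_t}. \end{aligned}$$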

Here \(B,W^1,\ldots ,W^n\) are independent Wiener processes, \(\alpha ^i\) is the control of agent i, and \({\widehat{\mu }}^n\) is the empirical distribution of the state processes. We call \(W^1,\ldots ,W^n\) the independent or idiosyncratic noises, since agent i feels only \(W^i\) directly, and we call B the common noise, since each agent feels B equally. The reward to agent i of the strategy profile \((\alpha ^1,\ldots ,\alpha ^n)\) is

$$\begin{aligned} J_i(\alpha ^1,\ldots ,\alpha ^n) = {\mathbb {E}}\left[ \int _0^Tf(t,X^i_t,{\widehat{\mu }}^n_t,\alpha ^i_t)dt + g(X^i_T,{\widehat{\mu }}^n_T)\right] . \end{aligned}$$

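Agent i seeks to maximize this reward, and so we say that \((\alpha ^1,\ldots ,\alpha ^n)\) form an \(\epsilon \)-Nash equilibrium (or an approximate Nash equilibrium) if

$$\begin{aligned} J_i(\alpha ^1,\ldots ,\alpha ^n) \ge J_i(\alpha ^1,\ldots ,\alpha ^{i-1},\beta ,\alpha ^{i+1},\ldots ,\alpha ^n) - \epsilon , \quad i=1,\ldots ,n, \end{aligned}$$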

for each admissible alternative strategy \(\beta \). Intuitively, if the number of agents n is very large, a single representative agent has little influence on the empirical measure flow \(({\widehat{\mu }}^n_t)_{t \in [0,T]}\), and so this agent expects to lose little in the way of optimality by ignoring her own effect on the empirical measure. Crucially, the system is symmetric in the sense that the same functions \((b,\sigma ,\sigma _0)\) and (f, g) determine the dynamics and objectives of each agent, and thus we may hope to learn something of the entire system from the behavior of a single representative agent.
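To make the n-player dynamics and rewards concrete, the following sketch simulates one Euler-Maruyama path of the system with common noise and returns each agent's realized reward. Everything model-specific is a hypothetical choice for illustration, not taken from the text: scalar states, \(\lambda = N(0,1)\), drift \(b = a + (\text{mean}(\mu ) - x)\), constant volatilities, \(f = -a^2/2\) and \(g = -(x - \text{mean}(\mu ))^2\). The helper name `simulate_n_player` is likewise hypothetical. Note that a single common increment dB is shared by all agents while each agent receives its own dW.

```python
import math
import random

# Hypothetical coefficients (illustrative assumptions, not from the paper):
#   b(t, x, mu, a) = a + (m(mu) - x), where m(mu) is the mean of mu
#   sigma, sigma0 constant
#   f(t, x, mu, a) = -a**2 / 2,  g(x, mu) = -(x - m(mu))**2

def simulate_n_player(control, n, T=1.0, steps=100, sigma=1.0, sigma0=0.5, seed=0):
    """One Euler-Maruyama path of the n-player system with common noise.

    control(t, X) returns a list of n actions and may depend on the whole
    state vector X (full information). Returns (paths, rewards): paths[k][i]
    approximates X^i at time k*T/steps, and rewards[i] is agent i's realized
    reward along this single path. Averaging rewards over independent seeds
    gives a Monte Carlo estimate of J_i.
    """
    rng = random.Random(seed)
    dt = T / steps
    X = [rng.gauss(0.0, 1.0) for _ in range(n)]  # i.i.d. initial states, lambda = N(0, 1)
    paths = [X[:]]
    running = [0.0] * n
    for k in range(steps):
        a = control(k * dt, X)
        m = sum(X) / n                          # mean of the empirical measure
        dB = rng.gauss(0.0, math.sqrt(dt))      # common noise: one increment for everyone
        X = [x + (ai + m - x) * dt
             + sigma * rng.gauss(0.0, math.sqrt(dt))  # idiosyncratic noise W^i
             + sigma0 * dB
             for x, ai in zip(X, a)]
        running = [r - 0.5 * ai * ai * dt for r, ai in zip(running, a)]
        paths.append(X[:])
    mT = sum(X) / n
    rewards = [r - (x - mT) ** 2 for r, x in zip(running, X)]
    return paths, rewards
```

For example, `simulate_n_player(lambda t, X: [0.0] * len(X), n=50)` runs the uncontrolled system. Because the control receives the full vector X, the sketch also covers the full-information (and possibly asymmetric) strategies discussed later.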

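The mean field game is specified precisely in Sect. 2, and it follows this intuition by treating n as infinite. Loosely speaking, a strong MFG solution is a \(({\mathcal {F}}^B_t = \sigma (B_s : s\le t))_{t \in [0,T]}\)-adapted measure-valued process \((\mu _t)_{t \in [0,T]}\) satisfying \(\mu _t = \text {Law}(X^{\alpha ^*}_t \ | \ {\mathcal {F}}^B_t)\) for each t, where \(X^{\alpha ^*}\) is an optimally controlled state process coming from the following stochastic optimal control problem:

$$\begin{aligned}&\sup _\alpha {\mathbb {E}}\left[ \int _0^Tf(t,X^\alpha _t,\mu _t,\alpha _t)dt + g(X^\alpha _T,\mu _T)\right] , \\&dX^\alpha _t = b(t,X^\alpha _t,\mu _t,\alpha _t)dt + \sigma (t,X^\alpha _t,\mu _t)dW_t + \sigma _0(t,X^\alpha _t,\mu _t)dB_t. \end{aligned}$$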

In other words, with the process \((\mu _t)_{t \in [0,T]}\) treated as fixed, the representative agent solves an optimal control problem. The requirement \(\mu _t = \text {Law}(X^{\alpha ^*}_t \ | \ {\mathcal {F}}^B_t)\), often known as a consistency condition, assures us that this decoupled optimal control problem is truly representative of the entire population, and we may think of the measure flow \((\mu _t)_{t \in [0,T]}\) as an equilibrium.
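The conditional law \(\text {Law}(X_t \ | \ {\mathcal {F}}^B_t)\) in the consistency condition can be approximated by freezing one common noise path and simulating many particles driven by independent idiosyncratic noises. The sketch below does this for a toy linear model chosen only for illustration (the control is frozen to \(\alpha = -X\), so there is no optimization step, and \(X_0 = 0\)); it is not a method for computing MFG solutions. In this toy model the conditional mean of the Euler scheme given the frozen increments follows an explicit recursion, which the particle mean should reproduce up to Monte Carlo error. The function name is hypothetical.

```python
import math
import random

def conditional_mean_check(N=10000, T=1.0, steps=100, sigma=1.0, sigma0=0.5, seed=1):
    """Particle approximation of the conditional law given one common noise path.

    Toy assumptions (not from the paper): dX = -X dt + sigma dW + sigma0 dB,
    X_0 = 0. A single path of B is drawn and shared by all N particles, which
    receive independent W increments. Returns (particle mean at T, exact
    conditional mean at T of the Euler scheme given the frozen B increments).
    """
    rng = random.Random(seed)
    dt = T / steps
    dBs = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(steps)]  # frozen common path
    X = [0.0] * N
    c = 0.0  # conditional mean: c_{k+1} = (1 - dt) c_k + sigma0 dB_k
    for dB in dBs:
        X = [x - x * dt + sigma * rng.gauss(0.0, math.sqrt(dt)) + sigma0 * dB for x in X]
        c = (1 - dt) * c + sigma0 * dB
    return sum(X) / N, c
```

The particles do not collapse to a point (their spread reflects the idiosyncratic noise), but their empirical measure moves randomly with B, which is the sense in which \(\mu _t\) is a random, \({\mathcal {F}}^B_t\)-measurable measure.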

The analysis of this paper focuses on mean field games with common noise, but both of the volatility coefficients \(\sigma \) and \(\sigma _0\) are allowed to be degenerate. Hence, our results cover the usual mean field games without common noise (where \(\sigma _0 \equiv 0\)) as well as deterministic mean field games (where \(\sigma \equiv \sigma _0 \equiv 0\)). The literature on mean field games with common noise is quite scarce so far, but some general analysis is provided in the recent papers [1, 5, 9, 11], and some specific models were studied in [12, 19]. This paper can be seen as a sequel to [11], from which we borrow many definitions and a handful of lemmas. It is emphasized in [11] that strong solutions are quite difficult to obtain when common noise is present, and this leads to a notion of weak MFG solution. Weak solutions, defined carefully in Sect. 2.2, differ most significantly from strong solutions in that the measure flow \((\mu _t)_{t \in [0,T]}\) need not be \(({\mathcal {F}}^B_t)_{t \in [0,T]}\)-adapted, and the consistency condition is weakened to something like \(\mu _t = \text {Law}(X^{\alpha ^*}_t \ | \ {\mathcal {F}}^{B,\mu }_t)\), where \({\mathcal {F}}^{B,\mu }_t = \sigma (B_s,\mu _s : s \le t)\). Additionally, weak MFG solutions allow for relaxed (i.e. measure-valued) controls which need not be adapted to the filtration generated by the inputs \((X_0,B,W,\mu )\) of the control problem.

Although this weaker notion of MFG solution was introduced in [11] to develop an existence and uniqueness theory for MFGs with common noise, the main result of this paper is to assert that this notion is the right one from the point of view of the finite-player game, in the sense that weak MFG solutions characterize the limits of approximate Nash equilibria. The main results are stated in full generality in Sects. 2.4 and 2.5, but let us state them loosely for now in a simplified form: First, we show that if for each n we are given an \(\epsilon _n\)-Nash equilibrium \((\alpha ^{n,1},\ldots ,\alpha ^{n,n})\) for the n-player game, where \(\epsilon _n \rightarrow 0\), then the family \((\text {Law}(B,{\widehat{\mu }}^n))_{n=1}^\infty \) is tight, and every weak limit agrees with the law of \((B,\mu )\) coming from some weak MFG solution. Second, we show conversely that every weak MFG solution can be obtained as a limit in this way.

Specializing our results to the case without common noise uncovers something unexpected. In the literature thus far, an MFG solution is defined in terms of a deterministic equilibrium \((\mu _t)_{t \in [0,T]}\), corresponding to our notion of strong MFG solution. Even when there is no common noise, a weak MFG solution still involves a stochastic equilibrium, and because of our main theorems we must therefore expect the limits of the finite-player empirical measures to remain stochastic. Moreover, we demonstrate by a simple example that a stochastic equilibrium is not necessarily just a randomization among the family of deterministic equilibria. Hence, the solution concept considered thus far in the literature on mean field games (without common noise) does not fully capture the limiting dynamics of finite-player approximate Nash equilibria. This is unlike the case of McKean–Vlasov limits (see [16, 32, 34]), which can be seen as mean field games with no control. We prove some admittedly difficult-to-apply results which nevertheless shed some light on this phenomenon: The fundamental obstruction is the adaptedness required of controls, which renders the class of admissible controls quite sensitive to whether or not \((\mu _t)_{t \in [0,T]}\) is stochastic.

Our first theorem, regarding the convergence of arbitrary approximate equilibria (open-loop, full-information, and possibly asymmetric), is arguably the more novel of our two main theorems. It appears to be the first result of its kind for mean field games with common noise, with the exception of the linear quadratic model of [12] for which explicit computations are available. However, even in the setting without common noise we substantially generalize the few existing results.

Several papers, such as the recent [9] dealing with common noise, contain purely heuristic derivations of the MFG as the limit of n-player games. The intuition guiding such derivations is as follows (and let us assume there is no common noise for the sake of simplicity): If n is large, a single agent in a large population should lose little in the way of optimality if she ignores the small feedbacks arising through the empirical measure flow \(({\widehat{\mu }}^n_t)_{t \in [0,T]}\). If each of the n identical agents does this, then we expect to see symmetric strategies which are nearly independent and ideally of the form \({\hat{\alpha }}(t,X^i_t)\), for some feedback control \({\hat{\alpha }}\) common to all of the agents. From the theory of McKean–Vlasov limits, we then expect that \(({\widehat{\mu }}^n_t)_{t \in [0,T]}\) converges to a deterministic limit. This intuition, however, is largely unsubstantiated and, we will argue, inaccurate in general.

Lasry and Lions [30, 31] first attacked this problem rigorously using PDE methods, working with an infinite time horizon and strong simplifying assumptions on the data, and their results were later generalized by Feleqi [14]. Bardi and Priuli [2, 3] justified the MFG limit for certain linear-quadratic problems, and Gomes et al. [17] studied models with finite state space. Substantial progress was made in a very recent paper of Fischer [15], which deserves special mention also because both the level of generality and the method of proof are quite similar to ours; we will return to this shortly.

With the exception of [15], the aforementioned results share the important limitation that the agents have only partial information: the control of agent i may depend only on her own state process \(X^{n,i}\) or Wiener process \(W^i\). Our results allow for arbitrary full-information strategies, settling a conjecture of Lasry and Lions (stated in Remark x after [31, Theorem 2.3] for the case of infinite time horizon and closed-loop controls). Combined in [14, 30, 31] with the assumption that the state process coefficients \((b,\sigma )\) do not depend on the empirical measure, the assumption of partial information leads to the immensely useful simplification that the state processes of the n-player games are independent. By showing then that they are also asymptotically identically distributed, the aforementioned heuristic argument can be made precise.

Fischer [15], on the other hand, allows for full-information controls but characterizes only the deterministic limits of \(({\widehat{\mu }}^n_t)_{t \in [0,T]}\) as MFG equilibria. Assuming that the limit is deterministic implicitly restricts the class of n-player equilibria in question. By characterizing even the stochastic limits of \(({\widehat{\mu }}^n_t)_{t \in [0,T]}\), which we show are in fact quite typical, we impose no such restriction on the equilibrium strategies of the n-player games. This is not to say, however, that our results completely subsume those of [15], which work with a more general notion of local approximate equilibria and which notably include conditions under which the assumption of a deterministic limit can be verified.

Our second main theorem, which asserts that every weak MFG solution is attainable as a limit of finite-player approximate Nash equilibria, is something of an abstraction of the kind of limiting result most commonly discussed in the MFG literature. In a tradition beginning with [21] and continued by the majority of the probabilistic papers on the subject [4, 6, 8, 13, 27], an optimal control from an MFG solution is used to construct approximate equilibria for the finite-player games. Although our result applies in more general settings, our conclusions are duly weaker, in the sense that the approximate equilibria we construct do not necessarily consist of particularly tangible (i.e. distributed or even symmetric) strategies. We emphasize that the goal of this work is not to construct nice approximate equilibria but rather to characterize all possible limits of approximate equilibria.

It is worth emphasizing that this paper makes no claims whatsoever regarding the existence or uniqueness of equilibria for either the n-player game or the MFG. Rather, we show that if a sequence of n-player approximate equilibria exists, then its limits are described by weak MFG solutions. Conversely, if a weak MFG solution exists, then it is achieved as the limit of some sequence of n-player approximate equilibria. Hence, existence of a weak MFG solution is equivalent to existence of a sequence of n-player approximate equilibria. Note, however, that the main Assumption A of this paper actually guarantees the existence of a weak MFG solution, because of the recent results of [11]. Far more results are available for MFGs without common noise; refer to the surveys [7, 18] and the recent book [4] for a wealth of well-posedness results and for further discussion of MFG theory in general.

The paper is organized as follows. Section 2 defines the MFG and the corresponding n-player games, before stating the main limit Theorem 2.6 and its converse, Theorem 2.11, along with several useful corollaries. Section 3 specializes the results to the more familiar setting without common noise and explains the gap between weak and strong solutions. Section 4 provides some background on weak solutions of MFGs with common noise, borrowed from [11], before we turn to the proofs of the main results in Sects. 5, 6, and 7. Section 5 is devoted to the proof of Theorem 2.6, while Sect. 6 contains the proof of the converse Theorem 2.11. Finally, Sect. 7 explains how to carefully specialize these two theorems to the setting without common noise.

2 The mean field limit with common noise

After establishing some notation, this section first concisely defines the mean field game. We work with the same definitions and nearly the same assumptions as [11], to which the reader is referred for a more thorough discussion. Then, the n-player game is formulated precisely, allowing for somewhat more general information structures than one usually finds in the literature on stochastic differential games. This generality is not just for its own sake; it will play a crucial role in the proofs later.

2.1 Notation and standing assumptions

For a topological space E, let \({\mathcal {B}}(E)\) denote the Borel \(\sigma \)-field, and let \({\mathcal {P}}(E)\) denote the set of Borel probability measures on E. For \(p \ge 1\) and a separable metric space (E, d), let \({\mathcal {P}}^p(E)\) denote the set of \(\mu \in {\mathcal {P}}(E)\) satisfying \(\int _Ed^p(x,x_0)\mu (dx) < \infty \) for some (and thus for any) \(x_0 \in E\). Let \(\ell _{E,p}\) denote the p-Wasserstein distance on \({\mathcal {P}}^p(E)\), given by

$$\begin{aligned} \ell _{E,p}(\mu ,\nu ) := \inf \left\{ \int _{E \times E}d^p(x,y)\gamma (dx,dy) : \gamma \in {\mathcal {P}}(E \times E) \text { has marginals } \mu \text { and } \nu \right\} ^{1/p}. \end{aligned}$$

Unless otherwise stated, the space \({\mathcal {P}}^p(E)\) is equipped with the metric \(\ell _{E,p}\), and all continuity and measurability statements involving \({\mathcal {P}}^p(E)\) are with respect to \(\ell _{E,p}\) and the corresponding Borel \(\sigma \)-field. The analysis of the paper will make routine use of several topological properties of the spaces \({\mathcal {P}}^p(E)\) and \({\mathcal {P}}^p({\mathcal {P}}^p(E))\), especially when E is a product space. All of the results we need, well known or not, are summarized in Appendices A and B of [29].
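As a small illustration of \(\ell _{E,p}\), the sketch below computes the p-Wasserstein distance between two uniform empirical measures with the same number of atoms in the special case \(E = {\mathbb {R}}\), \(d(x,y) = |x-y|\) (a restriction assumed here for simplicity). On the real line the monotone coupling, which pairs the sorted atoms, is optimal for \(p \ge 1\), so no optimization is needed; in higher dimensions one would instead have to solve an assignment or transport problem. The function name is hypothetical.

```python
def wasserstein_p_1d(xs, ys, p=2.0):
    """p-Wasserstein distance between (1/n) sum delta_{x_i} and (1/n) sum delta_{y_i} on R.

    Assumes E = R with d(x, y) = |x - y| and equal numbers of atoms; in this
    case the sorted (monotone) coupling is optimal for every p >= 1.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two nonempty samples of equal size")
    if p < 1:
        raise ValueError("p must be >= 1")
    xs, ys = sorted(xs), sorted(ys)
    return (sum(abs(x - y) ** p for x, y in zip(xs, ys)) / len(xs)) ** (1.0 / p)
```

For instance, shifting every atom by 1 gives distance 1 for every p, while permuting the atoms leaves the empirical measure, and hence the distance 0, unchanged.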

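We are given a time horizon \(T > 0\), three exponents \((p',p,p_\sigma )\) with \(p \ge 1\), a control space A, an initial state distribution \(\lambda \in {\mathcal {P}}({\mathbb {R}}^d)\), and the following functions:

$$\begin{aligned} (b,f)&: [0,T] \times {\mathbb {R}}^d \times {\mathcal {P}}^p({\mathbb {R}}^d) \times A \rightarrow {\mathbb {R}}^d \times {\mathbb {R}}, \\ (\sigma ,\sigma _0)&: [0,T] \times {\mathbb {R}}^d \times {\mathcal {P}}^p({\mathbb {R}}^d) \rightarrow {\mathbb {R}}^{d \times m} \times {\mathbb {R}}^{d \times m_0}, \\ g&: {\mathbb {R}}^d \times {\mathcal {P}}^p({\mathbb {R}}^d) \rightarrow {\mathbb {R}}. \end{aligned}$$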

Assume throughout the paper that the following Assumption A holds. This is exactly Assumption A of [11], except that here we require that \(p' \ge 2\) and that \((b,\sigma ,\sigma _0)\) are Lipschitz not only in the state argument but also in the measure argument.

Assumption A

- (A.1) A is a closed subset of a Euclidean space. (More generally, as in [20], a closed \(\sigma \)-compact subset of a Banach space would suffice.)

- (A.2) The exponents satisfy \(p' > p \ge 1 \vee p_\sigma \) and \(p' \ge 2 \ge p_\sigma \ge 0\), and also \(\lambda \in {\mathcal {P}}^{p'}({\mathbb {R}}^d)\).

- (A.3) The functions b, \(\sigma \), \(\sigma _0\), f, and g of \((t,x,\mu ,a)\) are jointly measurable and are continuous in \((x,\mu ,a)\) for each t.

- (A.4) There exists \(c_1 > 0\) such that, for all \((t,x,y,\mu ,\nu ,a) \in [0,T] \times {\mathbb {R}}^d \times {\mathbb {R}}^d \times {\mathcal {P}}^p({\mathbb {R}}^d) \times {\mathcal {P}}^p({\mathbb {R}}^d) \times A\),

$$\begin{aligned}&|b(t,x,\mu ,a) - b(t,y,\nu ,a)| + |(\sigma ,\sigma _0)(t,x,\mu ) - (\sigma ,\sigma _0)(t,y,\nu )| \\&\quad \le c_1\left( |x-y| + \ell _{{\mathbb {R}}^d,p}(\mu ,\nu )\right) , \end{aligned}$$

and

$$\begin{aligned} |b(t,0,\delta _0,a)|&\le c_1(1 + |a|), \\ |(\sigma \sigma ^\top + \sigma _0\sigma _0^\top )(t,x,\mu )|&\le c_1\left[ 1 + |x|^{p_\sigma } + \left( \int _{{\mathbb {R}}^d}|z|^p\mu (dz)\right) ^{p_\sigma /p}\right] . \end{aligned}$$

- (A.5) There exist \(c_2, c_3 > 0\) such that, for each \((t,x,\mu ,a) \in [0,T] \times {\mathbb {R}}^d \times {\mathcal {P}}^p({\mathbb {R}}^d) \times A\),

$$\begin{aligned}&|g(x,\mu )| \le c_2\left( 1 + |x|^p + \int _{{\mathbb {R}}^d}|z|^p\mu (dz)\right) ,\\&\quad -c_2\left( 1 + |x|^p + \int _{{\mathbb {R}}^d}|z|^p\mu (dz) + |a|^{p'}\right) \le f(t,x,\mu ,a) \\&\quad \le c_2\left( 1 + |x|^p + \int _{{\mathbb {R}}^d}|z|^p\mu (dz)\right) - c_3|a|^{p'}. \end{aligned}$$

While these assumptions are fairly general, they do not cover all linear-quadratic models. Because of the requirement \(p' > p\), the running objective f may grow quadratically in a only if its growth in \((x,\mu )\) is strictly subquadratic. This requirement is important for compactness purposes, both for the results of this paper and for the existence results of [11, 29]. In fact, [11, 29] provide examples of MFGs with \(p'=p\) which do not admit solutions even though they verify the rest of Assumption A. Existence results for this somewhat delicate boundary case have been obtained in [6, 8, 10, 12] by assuming some additional inequalities between coefficients. It seems feasible to expect our main results to adapt to such settings, but we do not pursue this here.

2.2 Relaxed controls and mean field games

Define \({\mathcal {V}}\) to be the set of measures q on \([0,T] \times A\) with first marginal equal to Lebesgue measure, i.e. \(q([s,t] \times A) = t-s\) for \(0 \le s \le t \le T\), satisfying also

$$\begin{aligned} \int _{[0,T] \times A}|a|^pq(dt,da) < \infty . \end{aligned}$$

Since these measures have mass T, we may endow \({\mathcal {V}}\) with a suitable scaling of the p-Wasserstein metric. Each \(q \in {\mathcal {V}}\) may be identified with a measurable function \([0,T] \ni t \mapsto q_t \in {\mathcal {P}}^p(A)\), determined uniquely (up to a.e. equality) by \(dt\,q_t(da) = q(dt,da)\). It is known that \({\mathcal {V}}\) is a Polish space, and in fact if A is compact then so is \({\mathcal {V}}\); see [29, Appendix A] for more details. The elements of \({\mathcal {V}}\) are called relaxed controls, and \(q \in {\mathcal {V}}\) is called a strict control if it satisfies \(q(dt,da) = dt\,\delta _{\alpha _t}(da)\) for some measurable function \([0,T] \ni t \mapsto \alpha _t \in A\). Finally, if we are given a measurable process \((\Lambda _t)_{t \in [0,T]}\) with values in \({\mathcal {P}}(A)\), defined on some measurable space and satisfying \(\int _0^T\int _A|a|^p\Lambda _t(da)dt < \infty \), we write \(\Lambda = dt\,\Lambda _t(da)\) for the corresponding random element of \({\mathcal {V}}\).
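A relaxed control can be pictured, after discretization, as a time grid on which each cell carries a probability vector over candidate actions; a strict control puts all mass on one action per cell. The sketch below uses this picture under two stated assumptions: A is replaced by a finite grid `A_grid` (a hypothetical discretization) and time is split into uniform cells. It checks the Lebesgue first-marginal condition and approximates the moment \(\int |a|^pq(dt,da)\). All function names are hypothetical.

```python
def strict_control(alpha, A_grid):
    """Discretized strict control q(dt, da) = dt delta_{alpha_t}(da).

    alpha lists the actions alpha_{t_k} on a uniform time grid, and A_grid is a
    finite list standing in for A. Row k is the probability vector q_{t_k} on
    A_grid; each alpha_{t_k} must lie on the grid (otherwise the row is all zeros).
    """
    return [[1.0 if a == ak else 0.0 for a in A_grid] for ak in alpha]


def is_relaxed_control(q, tol=1e-12):
    # First marginal equal to Lebesgue measure: every row q_{t_k} is a probability vector.
    return all(min(row) >= 0 and abs(sum(row) - 1.0) < tol for row in q)


def p_moment(q, A_grid, T, p):
    """Riemann-sum approximation of the integral of |a|^p against q(dt, da) over [0, T] x A."""
    dt = T / len(q)
    return sum(dt * sum(w * abs(a) ** p for w, a in zip(row, A_grid)) for row in q)
```

A row such as `[0.5, 0.0, 0.5]` on the grid `[-1.0, 0.0, 2.0]` is a genuinely relaxed control: it randomizes between the actions -1 and 2 at each time and cannot be written as a Dirac mass.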

, we write \(\Lambda \!=\! dt\Lambda _t(da)\) for the corresponding random element of \({\mathcal {V}}\).

Let us define some additional canonical spaces. For a positive integer k let \({\mathcal {C}}^k = C([0,T];{\mathbb {R}}^k)\) denote the set of continuous functions from [0, T] to \({\mathbb {R}}^k\), and define the truncated supremum norms \(\Vert \cdot \Vert _t\) on \({\mathcal {C}}^k\) by

$$\begin{aligned} \Vert x\Vert _t := \sup _{s \in [0,t]}|x_s|, \quad t \in [0,T]. \end{aligned}$$

Unless otherwise stated, \({\mathcal {C}}^k\) is endowed with the norm \(\Vert \cdot \Vert _T\) and its Borel \(\sigma \)-field. For \(\mu \in {\mathcal {P}}({\mathcal {C}}^k)\), let \(\mu _t \in {\mathcal {P}}({\mathbb {R}}^k)\) denote the image of \(\mu \) under the map \(x \mapsto x_t\). Let

$$\begin{aligned} {\mathcal {X}} := {\mathcal {C}}^m \times {\mathcal {V}} \times {\mathcal {C}}^d. \end{aligned}\tag{2.3}$$

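This space will house the idiosyncratic noise, the relaxed control, and the state process. Let \(({\mathcal {F}}^{\mathcal {X}}_t)_{t \in [0,T]}\) denote the canonical filtration on \({\mathcal {X}}\), where \({\mathcal {F}}^{\mathcal {X}}_t\) is the \(\sigma \)-field generated by the maps

$$\begin{aligned} {\mathcal {X}} \ni (w,q,x) \mapsto \left( w_s,\, x_s,\, q(C)\right) , \quad s \le t, \ C \in {\mathcal {B}}([0,s] \times A). \end{aligned}$$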

For \(\mu \in {\mathcal {P}}({\mathcal {X}})\), let \(\mu ^x := \mu ({\mathcal {C}}^m \times {\mathcal {V}}\times \cdot )\) denote the \({\mathcal {C}}^d\)-marginal. Finally, for ease of notation let us define the objective functional \(\Gamma : {\mathcal {P}}^p({\mathcal {C}}^d) \times {\mathcal {V}}\times {\mathcal {C}}^d \rightarrow {\mathbb {R}}\) by

$$\begin{aligned} \Gamma (\mu ,q,x) := \int _0^T\int _Af(t,x_t,\mu _t,a)q_t(da)dt + g(x_T,\mu _T). \end{aligned}$$
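The objective functional \(\Gamma \) integrates the running reward against the relaxed control and adds the terminal reward. The sketch below evaluates a left-point Riemann approximation of \(\Gamma (\mu ,q,x)\) under illustrative assumptions: scalar states, each time marginal \(\mu _{t_k}\) represented by a finite sample, the relaxed control given as rows of weights on a finite action grid, and user-supplied callables `f` and `g` (hypothetical stand-ins for the model data).

```python
def gamma_objective(f, g, mu_marginals, q, A_grid, x_path, T):
    """Left-point Riemann approximation of Gamma(mu, q, x).

    mu_marginals[k]: a sample (list of floats) standing in for mu_{t_k}, k = 0..K.
    q: K rows of weights on A_grid (a discretized relaxed control).
    x_path: K + 1 states x_{t_0}, ..., x_{t_K}.
    f(t, x, mu_t, a) and g(x, mu_T) are user-supplied callables.
    """
    K = len(q)
    dt = T / K
    running = sum(
        dt * sum(w * f(k * dt, x_path[k], mu_marginals[k], a)
                 for w, a in zip(q[k], A_grid) if w > 0)  # integrate f against q_{t_k}
        for k in range(K))
    return running + g(x_path[K], mu_marginals[K])
```

With \(f = -a^2\), \(g = 0\), \(T = 1\), and the strict control \(a \equiv 1\), the approximation returns \(-1\), matching the exact value of \(\Gamma \).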

The following definition of weak mean field game (MFG) solution is borrowed from [11].

Definition 2.1

A weak MFG solution with weak control (with initial state distribution \(\lambda \)), or simply a weak MFG solution, is a tuple \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P,B,W,\mu ,\Lambda ,X)\), where \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P)\) is a complete filtered probability space supporting \((B,W,\mu ,\Lambda ,X)\) satisfying

- (1) \((B_t)_{t \in [0,T]}\) and \((W_t)_{t \in [0,T]}\) are independent \(({\mathcal {F}}_t)_{t \in [0,T]}\)-Wiener processes of respective dimension \(m_0\) and m, the process \((X_t)_{t \in [0,T]}\) is \(({\mathcal {F}}_t)_{t \in [0,T]}\)-adapted with values in \({\mathbb {R}}^d\), and \(P \circ X_0^{-1} = \lambda \). Moreover, \(\mu \) is a random element of \({\mathcal {P}}^p({\mathcal {X}})\) such that \(\mu (C)\) is \({\mathcal {F}}_t\)-measurable for each \(C \in {\mathcal {F}}^{\mathcal {X}}_t\) and \(t \in [0,T]\).

- (2) \(X_0\), W, and \((B,\mu )\) are independent.

- (3) \((\Lambda _t)_{t \in [0,T]}\) is \(({\mathcal {F}}_t)_{t \in [0,T]}\)-progressively measurable with values in \({\mathcal {P}}(A)\) and

$$\begin{aligned} {\mathbb {E}}^P\int _0^T\int _A|a|^p\Lambda _t(da)dt < \infty . \end{aligned}$$

Moreover, \(\sigma (\Lambda _s : s \le t)\) is conditionally independent of \({\mathcal {F}}^{X_0,B,W,\mu }_T\) given \({\mathcal {F}}^{X_0,B,W,\mu }_t\), for each \(t \in [0,T]\), where

$$\begin{aligned} {\mathcal {F}}^{X_0,B,W,\mu }_t&= \sigma \left( X_0,B_s,W_s,\mu (C) : s \le t, \ C \in {\mathcal {F}}^{\mathcal {X}}_t\right) . \end{aligned}$$

- (4) The state equation holds:

$$\begin{aligned} dX_t = \int _Ab(t,X_t,\mu ^x_t,a)\Lambda _t(da)dt + \sigma (t,X_t,\mu ^x_t)dW_t + \sigma _0(t,X_t,\mu ^x_t)dB_t. \end{aligned}\tag{2.5}$$

- (5) If \(({\widetilde{\Omega }}',({\mathcal {F}}'_t)_{t \in [0,T]},P')\) is another filtered probability space supporting \((B',W',\mu ',\Lambda ',X')\) satisfying (1)-(4) and \(P \circ (B,\mu )^{-1} = P' \circ (B',\mu ')^{-1}\), then

$$\begin{aligned} {\mathbb {E}}^P\left[ \Gamma (\mu ^x,\Lambda ,X)\right] \ge {\mathbb {E}}^{P'}\left[ \Gamma (\mu '^x,\Lambda ',X')\right] . \end{aligned}$$

- (6) \(\mu \) is a version of the conditional law of \((W,\Lambda ,X)\) given \((B,\mu )\).

If also there exists an A-valued process \((\alpha _t)_{t \in [0,T]}\) such that \(P(\Lambda _t = \delta _{\alpha _t} \ a.e. \ t)=1\), then we say the weak MFG solution has strict control. If this \((\alpha _t)_{t \in [0,T]}\) is progressively measurable with respect to the completion of \(({\mathcal {F}}^{X_0,B,W,\mu }_t)_{t \in [0,T]}\), we say the weak MFG solution has strong control. If \(\mu \) is a.s. B-measurable, then we have a strong MFG solution (with either weak control, strict control, or strong control).
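In the state equation (4) the drift is averaged against the relaxed control \(\Lambda _t\), and a strict control is the special case \(\Lambda _t = \delta _{\alpha _t}\). The sketch below discretizes this averaged drift with an Euler-Maruyama step. For illustration only, it assumes a scalar state, constant volatilities, a finite action grid, and a deterministic list of means standing in for the measure flow (in the definition above, \(\mu \) is random and b may depend on the whole measure). The function name is hypothetical.

```python
import math
import random

def relaxed_euler_path(b, sigma, sigma0, Lam, A_grid, mu_mean, x0, T, seed=0):
    """Euler-Maruyama for dX = (int_A b(t, X, mu, a) Lam_t(da)) dt + sigma dW + sigma0 dB.

    Illustrative assumptions: scalar state, constant sigma and sigma0, Lam given
    as K rows of weights on the finite grid A_grid, and the measure flow
    summarized by a deterministic list mu_mean[k] passed to b(t, x, m, a).
    """
    rng = random.Random(seed)
    K = len(Lam)
    dt = T / K
    x, path = x0, [x0]
    for k in range(K):
        # Relaxed drift: average b over the action distribution Lam_{t_k}.
        drift = sum(w * b(k * dt, x, mu_mean[k], a) for w, a in zip(Lam[k], A_grid))
        x = (x + drift * dt
             + sigma * rng.gauss(0.0, math.sqrt(dt))    # increment of W
             + sigma0 * rng.gauss(0.0, math.sqrt(dt)))  # increment of B
        path.append(x)
    return path
```

When b is affine in a, randomizing between actions -1 and 3 with equal weights produces the same drift as the strict control \(a \equiv 1\); for b nonlinear in a, the relaxed drift generally differs from the drift of any single action.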

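Given a weak MFG solution \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P,B,W,\mu ,\Lambda ,X)\), we may view \((X_0,B,W,\mu ,\Lambda ,X)\) as a random element of the canonical space

$$\begin{aligned} \Omega := {\mathbb {R}}^d \times {\mathcal {C}}^{m_0} \times {\mathcal {C}}^m \times {\mathcal {P}}^p({\mathcal {X}}) \times {\mathcal {V}} \times {\mathcal {C}}^d. \end{aligned}\tag{2.6}$$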

A weak MFG solution thus induces a probability measure on \(\Omega \), which itself we would like to call an MFG solution, as it is really the object of interest more than the particular probability space. (The initial state \(X_0\) is singled out mostly for notational convenience later on and to be consistent with the paper [11].) The following definition will be reformulated in Sect. 4 in a more intrinsic manner.

Definition 2.2

If \(P \in {\mathcal {P}}(\Omega )\) satisfies \(P = P' \circ (X_0,B,W,\mu ,\Lambda ,X)^{-1}\) for some weak MFG solution \((\Omega ',({\mathcal {F}}'_t)_{t \in [0,T]},P',B,W,\mu ,\Lambda ,X)\), then we refer to P itself as a weak MFG solution. Naturally, we may also refer to P as a weak MFG solution with strict control or strong control, or as a strong MFG solution, under the analogous additional assumptions.

2.3 Finite-player games

This section describes a general form of the finite-player games, allowing controls to be relaxed and adapted to general filtrations.

An n-player environment is defined to be any tuple \({\mathcal {E}}_n = (\Omega _n,({\mathcal {F}}^n_t)_{t \in [0,T]},{\mathbb {P}}_n,\xi ,B,W)\), where \((\Omega _n,({\mathcal {F}}^n_t)_{t \in [0,T]},{\mathbb {P}}_n)\) is a complete filtered probability space supporting an \({\mathcal {F}}^n_0\)-measurable \(({\mathbb {R}}^d)^n\)-valued random variable \(\xi = (\xi ^1,\ldots ,\xi ^n)\) with law \(\lambda ^{\times n}\), an \(m_0\)-dimensional \(({\mathcal {F}}^n_t)_{t \in [0,T]}\)-Wiener process B, and an nm-dimensional \(({\mathcal {F}}^n_t)_{t \in [0,T]}\)-Wiener process \(W = (W^1,\ldots ,W^n)\), independent of B. For simplicity, we consider i.i.d. initial states \(\xi ^1,\ldots ,\xi ^n\) with common law \(\lambda \), although it is presumably possible to generalize this. Perhaps all of the notation here should be parametrized by \({\mathcal {E}}_n\) or an additional index for n, but, since we will typically focus on a fixed sequence of environments \(({\mathcal {E}}_n)_{n=1}^\infty \), we avoid complicating the notation. Indeed, the subscript n on the measure \({\mathbb {P}}_n\) will be enough to remind us on which environment we are working at any moment.

Until further notice, we work with a fixed n-player environment \({\mathcal {E}}_n\). An admissible control is any \(({\mathcal {F}}^n_t)_{t \in [0,T]}\)-progressively measurable \({\mathcal {P}}(A)\)-valued process \((\Lambda _t)_{t \in [0,T]}\) satisfying

$$\begin{aligned} {\mathbb {E}}^{{\mathbb {P}}_n}\int _0^T\int _A|a|^p\Lambda _t(da)dt < \infty . \end{aligned}$$

An admissible strategy is a vector of n admissible controls. The set of admissible controls is denoted \({\mathcal {A}}_n({\mathcal {E}}_n)\), and accordingly the set of admissible strategies is the Cartesian product \({\mathcal {A}}_n^n({\mathcal {E}}_n)\). A strict control is any control \(\Lambda \in {\mathcal {A}}_n({\mathcal {E}}_n)\) such that \({\mathbb {P}}_n(\Lambda _t = \delta _{\alpha _t}, \ a.e. \ t) = 1\) for some \(({\mathcal {F}}^n_t)_{t \in [0,T]}\)-progressively measurable A-valued process \((\alpha _t)_{t \in [0,T]}\), and a strict strategy is any vector of n strict controls. Given an admissible strategy \(\Lambda =(\Lambda ^1,\ldots ,\Lambda ^n) \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\), define the state processes \(X[\Lambda ] := (X^1[\Lambda ],\ldots ,X^n[\Lambda ])\) by

$$\begin{aligned} dX^i_t[\Lambda ]&= \int _Ab(t,X^i_t[\Lambda ],{\widehat{\mu }}^x_t[\Lambda ],a)\Lambda ^i_t(da)dt + \sigma (t,X^i_t[\Lambda ],{\widehat{\mu }}^x_t[\Lambda ])dW^i_t + \sigma _0(t,X^i_t[\Lambda ],{\widehat{\mu }}^x_t[\Lambda ])dB_t, \\ X^i_0[\Lambda ]&= \xi ^i, \quad {\widehat{\mu }}^x_t[\Lambda ] = \frac{1}{n}\sum _{k=1}^n\delta _{X^k_t[\Lambda ]}. \end{aligned}$$

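Note that Assumption A ensures that a unique strong solution of this SDE system exists. Indeed, the Lipschitz assumption of (A.4) and the obvious inequality

$$\begin{aligned} \ell ^p_{{\mathbb {R}}^d,p}\left( \frac{1}{n}\sum _{k=1}^n\delta _{x_k},\frac{1}{n}\sum _{k=1}^n\delta _{y_k}\right) \le \frac{1}{n}\sum _{k=1}^n|x_k-y_k|^p, \quad (x_1,\ldots ,x_n), (y_1,\ldots ,y_n) \in ({\mathbb {R}}^d)^n, \end{aligned}$$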

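together imply, for example, that the function

$$\begin{aligned} ({\mathbb {R}}^d)^n \ni (x_1,\ldots ,x_n) \mapsto b\left( t,x_i,\frac{1}{n}\sum _{k=1}^n\delta _{x_k},a\right) \in {\mathbb {R}}^d \end{aligned}$$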

is Lipschitz, uniformly in (t, a). A standard estimate using assumption (A.4), which is worked out in Lemma 5.1, shows that \({\mathbb {E}}^{{\mathbb {P}}_n}[\Vert X^i[\Lambda ]\Vert _T^p] < \infty \) for each \(\Lambda \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\), \(n \ge i \ge 1\).
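The "obvious inequality" holds because pairing \(x_k\) with \(y_k\) is one admissible coupling of the two empirical measures, while \(\ell _{{\mathbb {R}}^d,p}\) takes the infimum over all couplings. The sketch below checks this numerically on random samples in the special case d = 1 (an assumption made so that the optimal coupling is simply the sorted one); the function names are hypothetical.

```python
import random

def w_p_empirical_1d(xs, ys, p):
    # On R the sorted (monotone) coupling is optimal for p >= 1.
    return (sum(abs(x - y) ** p for x, y in zip(sorted(xs), sorted(ys))) / len(xs)) ** (1 / p)

def index_coupling_cost(xs, ys, p):
    # Cost of the (generally suboptimal) coupling that pairs x_k with y_k.
    return (sum(abs(x - y) ** p for x, y in zip(xs, ys)) / len(xs)) ** (1 / p)

def check_empirical_lipschitz(n=20, p=2.0, trials=100, seed=0):
    """Return True if W_p(empirical x, empirical y) <= index-coupling cost in every trial."""
    rng = random.Random(seed)
    for _ in range(trials):
        xs = [rng.uniform(-1, 1) for _ in range(n)]
        ys = [rng.uniform(-1, 1) for _ in range(n)]
        if w_p_empirical_1d(xs, ys, p) > index_coupling_cost(xs, ys, p) + 1e-12:
            return False
    return True
```

The inequality can be strict: for \(x = (0,1)\) and \(y = (1,0)\) the two empirical measures coincide, so their distance is 0, although the index coupling costs 1.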

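The value for player i corresponding to a strategy \(\Lambda = (\Lambda ^1,\ldots ,\Lambda ^n) \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) is defined by

$$\begin{aligned} J_i(\Lambda ) := {\mathbb {E}}^{{\mathbb {P}}_n}\left[ \int _0^T\int _Af(t,X^i_t[\Lambda ],{\widehat{\mu }}^x_t[\Lambda ],a)\Lambda ^i_t(da)dt + g(X^i_T[\Lambda ],{\widehat{\mu }}^x_T[\Lambda ])\right] , \end{aligned}$$

where \({\widehat{\mu }}^x_t[\Lambda ] = \frac{1}{n}\sum _{k=1}^n\delta _{X^k_t[\Lambda ]}\) is the empirical measure of the states at time t.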

Note that \(J_i(\Lambda )\) is well defined and \(J_i(\Lambda ) < \infty \) because of the upper bounds of assumption (A.5), but it is possible that \(J_i(\Lambda ) = -\infty \), since we do not require that an admissible control possess finite moment of order \(p'\). Given a strategy \(\Lambda = (\Lambda ^1,\ldots ,\Lambda ^n) \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) and a control \(\beta \in {\mathcal {A}}_n({\mathcal {E}}_n)\), define a new strategy \((\Lambda ^{-i},\beta ) \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) by

$$\begin{aligned} (\Lambda ^{-i},\beta ) := (\Lambda ^1,\ldots ,\Lambda ^{i-1},\beta ,\Lambda ^{i+1},\ldots ,\Lambda ^n). \end{aligned}$$

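Given \(\epsilon = (\epsilon _1,\ldots ,\epsilon _n) \in [0,\infty )^n\), a relaxed \(\epsilon \)-Nash equilibrium in \({\mathcal {E}}_n\) is any strategy \(\Lambda \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) satisfying

$$\begin{aligned} J_i(\Lambda ) \ge \sup _{\beta \in {\mathcal {A}}_n({\mathcal {E}}_n)}J_i((\Lambda ^{-i},\beta )) - \epsilon _i, \quad i=1,\ldots ,n. \end{aligned}$$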

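Naturally, if \(\epsilon _i=0\) for each \(i=1,\ldots ,n\), we use the simpler term Nash equilibrium, as opposed to 0-Nash equilibrium. A strict \(\epsilon \)-Nash equilibrium in \({\mathcal {E}}_n\) is any strict strategy \(\Lambda \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) satisfying

$$\begin{aligned} J_i(\Lambda ) \ge \sup _{\beta \in {\mathcal {A}}_n({\mathcal {E}}_n) \text { strict}}J_i((\Lambda ^{-i},\beta )) - \epsilon _i, \quad i=1,\ldots ,n. \end{aligned}$$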

Note that the optimality is required only among strict controls.
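In numerical experiments one can only test finitely many deviations, so the equilibrium gaps computed this way are lower bounds for the smallest admissible \(\epsilon _i\), which involve a supremum over a whole class of controls. The sketch below assumes the values \(J_i(\Lambda )\) and \(J_i((\Lambda ^{-i},\beta _j))\) have already been estimated (for instance by Monte Carlo) for a hypothetical finite list of candidate deviations \(\beta _j\), and returns the per-agent gaps together with their average as a summary. Both function names are hypothetical.

```python
def nash_gaps(J_eq, J_dev):
    """Per-agent equilibrium gaps relative to a finite family of deviations.

    J_eq[i]: (estimated) value J_i(Lambda) of agent i at the candidate equilibrium.
    J_dev[i][j]: (estimated) value J_i((Lambda^{-i}, beta_j)) for deviation beta_j.
    Because only finitely many beta_j are tested, each returned gap is a lower
    bound for the smallest eps_i making Lambda an eps-Nash equilibrium.
    """
    return [max(0.0, max(devs) - v) for v, devs in zip(J_eq, J_dev)]

def average_gap(eps):
    # Average of the per-agent gaps, (1/n) * sum_i eps_i.
    return sum(eps) / len(eps)
```

Here agent 1 can gain about 0.2 by deviating while agent 2 cannot gain at all, so the gaps are roughly (0.2, 0) and their average is roughly 0.1.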

The role of the filtration \(({\mathcal {F}}^n_t)_{t \in [0,T]}\) in the environment \({\mathcal {E}}_n\) is mainly to specify the class of admissible controls. We are particularly interested in the sub-filtration generated by the Wiener processes and initial states; define \(({\mathcal {F}}^{s,n}_t)_{t \in [0,T]}\) to be the \({\mathbb {P}}_n\)-completion of

$$\begin{aligned} \sigma \left( \xi ,B_s,W_s : s \le t\right) , \quad t \in [0,T]. \end{aligned}$$

Of course, \({\mathcal {F}}^{s,n}_t \subset {\mathcal {F}}^n_t\) for each t. Let us say that \(\Lambda \in {\mathcal {A}}_n({\mathcal {E}}_n)\) is a strong control if \({\mathbb {P}}_n(\Lambda _t = \delta _{\alpha _t} \ a.e. \ t)=1\) for some \(({\mathcal {F}}^{s,n}_t)_{t \in [0,T]}\)-progressively measurable A-valued process \((\alpha _t)_{t \in [0,T]}\). Naturally, a strong strategy is a vector of strong controls. A strong \(\epsilon \)-Nash equilibrium in \({\mathcal {E}}_n\) is any strong strategy \(\Lambda \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) such that

$$\begin{aligned} J_i(\Lambda ) \ge \sup _{\beta \in {\mathcal {A}}_n({\mathcal {E}}_n) \text { strong}}J_i((\Lambda ^{-i},\beta )) - \epsilon _i, \quad i=1,\ldots ,n. \end{aligned}$$

Remark 2.3

Equivalently, a strong \(\epsilon \)-Nash equilibrium in \({\mathcal {E}}_n = (\Omega _n,({\mathcal {F}}^n_t)_{t \in [0,T]},{\mathbb {P}}_n,\xi ,B,W)\) is a strict \(\epsilon \)-Nash equilibrium in \({\widetilde{{\mathcal {E}}}}_n := (\Omega _n,({\mathcal {F}}^{s,n}_t)_{t \in [0,T]},{\mathbb {P}}_n,\xi ,B,W)\).

The most common type of Nash equilibrium considered in the literature is, in our terminology, a strong Nash equilibrium. The next proposition assures us that our equilibrium concept using relaxed controls (and general filtrations) truly generalizes this more standard situation, thus permitting a unified analysis of all of the equilibria described thus far. The proof is deferred to Appendix A.1.

Proposition 2.4

On any n-player environment \({\mathcal {E}}_n\), every strong \(\epsilon \)-Nash equilibrium is also a strict \(\epsilon \)-Nash equilibrium, and every strict \(\epsilon \)-Nash equilibrium is also a relaxed \(\epsilon \)-Nash equilibrium.

Remark 2.5

Another common type of strategy in dynamic game theory is called closed-loop. Whereas our strategies (also called open-loop) are specified by processes, a closed-loop (strict) strategy is specified by feedback functions \(\phi _i : [0,T] \,\times \,({\mathbb {R}}^d)^n \rightarrow A\), for \(i=1,\ldots ,n\), to be evaluated along the path of the state process. In the model of Carmona et al. [12], both the open-loop and closed-loop equilibria are computed explicitly for the n-player games, and they are shown to converge to the same MFG limit. A natural question, which this paper does not attempt to answer, is whether or not closed-loop equilibria converge to the same MFG limit that we obtain in Theorem 2.6.

2.4 The main limit theorem

We are now ready to state the first main result, Theorem 2.6, and its corollaries. The proof is deferred to Sect. 5. Given an admissible strategy \(\Lambda = (\Lambda ^1,\ldots ,\Lambda ^n) \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) defined on some n-player environment \({\mathcal {E}}_n = (\Omega _n,({\mathcal {F}}^n_t)_{t \in [0,T]},{\mathbb {P}}_n,\xi ,B,W)\), define (on \(\Omega _n\)) the random element \({\widehat{\mu }}[\Lambda ]\) of \({\mathcal {P}}^p({\mathcal {X}})\) (recalling the definition of \({\mathcal {X}}\) from (2.3)) by

As usual, we identify a \({\mathcal {P}}(A)\)-valued process \((\Lambda ^i_t)_{t \in [0,T]}\) with the random element \(\Lambda ^i = dt\Lambda ^i_t(da)\) of \({\mathcal {V}}\). Recall the definition of the canonical space \(\Omega \) from (2.6).

Theorem 2.6

Suppose Assumption A holds. For each n, let \(\epsilon ^n = (\epsilon ^n_1,\ldots ,\epsilon ^n_n) \in [0,\infty )^n\), and let \({\mathcal {E}}_n = (\Omega _n,({\mathcal {F}}^n_t)_{t \in [0,T]},{\mathbb {P}}_n,\xi ,B,W)\) be any n-player environment. Assume

Suppose for each n that \(\Lambda ^n = (\Lambda ^{n,1},\ldots ,\Lambda ^{n,n}) \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) is a relaxed \(\epsilon ^n\)-Nash equilibrium, and let

Then \((P_n)_{n=1}^\infty \) is relatively compact in \({\mathcal {P}}^p(\Omega )\), and each limit point is a weak MFG solution.

Remark 2.7

Averaging over \(i=1,\ldots ,n\) in (2.10) circumvents the problem that the strategies \((\Lambda ^{n,1},\ldots ,\Lambda ^{n,n})\) need not be exchangeable, and we note that the limiting behavior of \({\mathbb {P}}_n \circ (B,{\widehat{\mu }}[\Lambda ^n])^{-1}\) can always be recovered from that of \(P_n\). To interpret the definition of \(P_n\), note that we may write

where \(U_n\) is a random variable independent of \({\mathcal {F}}^n_T\), uniformly distributed among \(\{1,\ldots ,n\}\), constructed by extending the probability space \(\Omega _n\). In words, \(P_n\) is the joint law of the processes relevant to a randomly selected representative agent. Of course, Theorem 2.6 specializes when there is exchangeability, in the following sense. For any set E, any element \(e = (e^1,\ldots ,e^n) \in E^n\), and any permutation \(\pi \) of \(\{1,\ldots ,n\}\), let \(e_\pi := (e^{\pi (1)},\ldots ,e^{\pi (n)})\). If

is independent of the choice of permutation \(\pi \), then so is

It then follows that

Theorem 2.6 is stated in considerable generality, without even the standard convexity assumptions on the objective functions f and g. It includes quite degenerate cases, such as the case of no objectives, where \(f \equiv g \equiv 0\) and A is compact. In this case, any strategy profile whatsoever in the n-player game is a Nash equilibrium, and any weak control can arise in the limit. Exploiting results of [11], the following corollaries demonstrate how, under various additional convexity assumptions, we may refine the conclusion of Theorem 2.6 by ruling out certain types of limits, such as those involving relaxed controls.

Corollary 2.8

Suppose the assumptions of Theorem 2.6 hold, and assume also that for each \((t,x,\mu ) \in [0,T] \times {\mathbb {R}}^d \times {\mathcal {P}}^p({\mathbb {R}}^d)\) the following subset of \({\mathbb {R}}^d \times {\mathbb {R}}\) is convex:

Then

is relatively compact in \({\mathcal {P}}^p({\mathcal {C}}^{m_0} \times {\mathcal {C}}^m \times {\mathcal {P}}^p({\mathcal {C}}^d) \times {\mathcal {C}}^d)\), and every limit is of the form \(P \circ (B,W,\mu ^x,X)^{-1}\), for some weak MFG solution with strict control \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P,B,W,\mu ,\Lambda ,X)\).

Proof

This follows from Theorem 2.6 and the argument of [11, Theorem 4.1]. Indeed, the latter shows that for every weak MFG solution with weak control \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P,B,W,\mu ,\Lambda ,X)\), there exists a weak MFG solution with strict control \(({\widetilde{\Omega }}',({\mathcal {F}}'_t)_{t \in [0,T]},P',B',W',\mu ',\Lambda ',X')\) such that \(P \circ (B,W,\mu ^x,X)^{-1} = P' \circ (B',W',\mu '^x,X')^{-1}\). \(\square \)

Corollary 2.9

Suppose the assumptions of Theorem 2.6 hold, and define \(P_n\) as in (2.10). Assume also that for each fixed \((t,\mu ) \in [0,T] \times {\mathcal {P}}^p({\mathbb {R}}^d)\), \((b,\sigma ,\sigma _0)(t,x,\mu ,a)\) is affine in (x, a), \(g(x,\mu )\) is concave in x, and \(f(t,x,\mu ,a)\) is strictly concave in (x, a). Then \((P_n)_{n=1}^\infty \) is relatively compact in \({\mathcal {P}}^p(\Omega )\), and every limit point is a weak MFG solution with strong control.

Proof

By [11, Proposition 4.4], the present assumptions guarantee that every weak MFG solution is a weak MFG solution with strong control. The claim then follows from Theorem 2.6. \(\square \)

Finally, we provide an example of the particularly favorable situation in which there is a unique MFG solution. Say that uniqueness in law holds for the MFG if any two weak MFG solutions induce the same law on \(\Omega \). The following corollary is an immediate consequence of Theorem 2.6 and the uniqueness result of [11, Theorem 6.2], which makes use of the monotonicity assumption of Lasry and Lions [31].

Corollary 2.10

Suppose the assumptions of Corollary 2.9 hold, and define \(P_n\) as in (2.10). Assume also that

(1) b, \(\sigma \), and \(\sigma _0\) have no mean field term, i.e. no \(\mu \) dependence,

(2) f is of the form \(f(t,x,\mu ,a) = f_1(t,x,a) + f_2(t,x,\mu )\),

(3) for each \(\mu ,\nu \in {\mathcal {P}}^p({\mathcal {C}}^d)\) we have

$$\begin{aligned}&\int _{{\mathcal {C}}^d}(\mu -\nu )(dx)\left[ g(x_T,\mu _T) - g(x_T,\nu _T) + \int _0^T\left( f_2(t,x,\mu ) - f_2(t,x,\nu )\right) dt\right] \nonumber \\&\quad \le 0. \end{aligned}$$

Then there exists a unique in law weak MFG solution, and it is a strong MFG solution with strong control. In particular, \(P_n\) converges in \({\mathcal {P}}^p(\Omega )\) to this unique MFG solution.
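To make condition (3) concrete, here is a small numerical sketch. It assumes a hypothetical specification that is not taken from the text: \(f_2 \equiv 0\) and \(g(x,m) = -x\,{\bar{m}}\) with \({\bar{m}} := \int y\,m(dy)\), which is linear (hence concave) in x. With this choice the left-hand side of condition (3) depends only on the time-T marginals and equals \(-({\bar{\mu }}_T - {\bar{\nu }}_T)^2 \le 0\). The code checks this for randomly generated finitely supported measures; it illustrates the inequality only and does not verify the other hypotheses of Corollary 2.10.

```python
import random

def mean(measure):
    """Mean of a finitely supported measure given as a list of (atom, weight) pairs."""
    return sum(x * w for x, w in measure)

def g(x, m):
    # Hypothetical terminal reward g(x, m) = -x * mean(m); linear, hence concave, in x.
    return -x * mean(m)

def monotonicity_lhs(mu_T, nu_T):
    """Left side of condition (3) with f_2 = 0: integral of (mu - nu)(dx) [g(x, mu_T) - g(x, nu_T)]."""
    def bracket(x):
        return g(x, mu_T) - g(x, nu_T)
    return sum(w * bracket(x) for x, w in mu_T) - sum(w * bracket(x) for x, w in nu_T)

def random_measure(rng, k=5):
    """Random probability measure on [-3, 3] with k atoms."""
    atoms = [rng.uniform(-3.0, 3.0) for _ in range(k)]
    raw = [rng.random() + 1e-9 for _ in range(k)]
    total = sum(raw)
    return [(x, r / total) for x, r in zip(atoms, raw)]

rng = random.Random(0)
worst = max(monotonicity_lhs(random_measure(rng), random_measure(rng)) for _ in range(1000))
print(worst)  # expected to be <= 0 up to rounding error
```

For instance, with \(\mu _T = \frac12(\delta _1 + \delta _3)\) and \(\nu _T = \delta _0\) the means differ by 2, so the left-hand side is \(-4\).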

2.5 The converse limit theorem

This section states and discusses a converse to Theorem 2.6. For this, we need an additional technical assumption, which we note holds automatically under Assumption A in the case that the control space A is compact.

Assumption B

The function f of \((t,x,\mu ,a)\) is continuous in \((x,\mu )\), uniformly in a, for each \(t \in [0,T]\). That is,

Moreover, there exists \(c_4 > 0\) such that, for all \((t,x,x',\mu ,\mu ',a)\),

Theorem 2.11

Suppose Assumptions A and B hold. Let \(P \in {\mathcal {P}}(\Omega )\) be a weak MFG solution, and for each n let \({\mathcal {E}}_n = (\Omega _n,({\mathcal {F}}^n_t)_{t \in [0,T]},{\mathbb {P}}_n,\xi ,B,W)\) be any n-player environment. Then there exist, for each n, \(\epsilon _n \ge 0\) and a strong \((\epsilon _n,\ldots ,\epsilon _n)\)-Nash equilibrium \(\Lambda ^n = (\Lambda ^{n,1},\ldots ,\Lambda ^{n,n})\) on \({\mathcal {E}}_n\), such that \(\lim _{n\rightarrow \infty }\epsilon _n = 0\) and

Combining Theorems 2.6 and 2.11 shows that the set of weak MFG solutions is exactly the set of limits of strong approximate Nash equilibria. More precisely, the set of weak MFG solutions is exactly the set of limits

where \(\Lambda ^n \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) are strong \(\epsilon ^n\)-Nash equilibria and \(\epsilon ^n=(\epsilon ^n_1,\ldots ,\epsilon ^n_n) \in [0,\infty )^n\) satisfies (2.9). The same statement is true when the word “strong” is replaced by “strict” or “relaxed”, because of Proposition 2.4. Similarly, combining Theorem 2.11 with Corollaries 2.8 and 2.9 yields characterizations of the mean field limit without recourse to relaxed controls.

Remark 2.12

In light of Remark 2.3, the statement of Theorem 2.11 is insensitive to the choice of environments \({\mathcal {E}}_n\). Without loss of generality, they may all be assumed to satisfy \({\mathcal {F}}^n_t = {\mathcal {F}}^{s,n}_t\) (where the latter filtration was defined in (2.8)) for each t; that is, the filtration may be taken to be the one generated by the process \((\xi ,B_t,W_t)_{t \in [0,T]}\).

Remark 2.13

It follows from the proofs of Theorems 2.6 and 2.11 that the values converge as well, in the sense that \(\frac{1}{n}\sum _{i=1}^nJ_i(\Lambda ^n)\) converges (along a subsequence in the case of Theorem 2.6) to the optimal value corresponding to the MFG solution.

Remark 2.14

Theorem 2.11 is admittedly abstract, and not as strong in its conclusion as the typical results of this nature in the literature. Namely, in the setting without common noise, it is usually argued as in [21] that a MFG solution may be used to construct not just any sequence of approximate equilibria, but rather one consisting of symmetric distributed strategies, in which the control of agent i is of the form \({\hat{\alpha }}(t,X^i_t)\) for some function \({\hat{\alpha }}\) which depends neither on the agent i nor the number of agents n. When the measure flow is stochastic under P (i.e. when the solution is weak or involves common noise), we may naturally look for strategies of the form \({\hat{\alpha }}(t,X^i_t,{\widehat{\mu }}^x_t)\). The techniques of this paper seem too abstract to yield a result of this nature, although a careful reading of the proof of Theorem 2.11 shows that two refinements are possible: First, we may take the n-player equilibrium \(\Lambda ^n\) to be exchangeable in the sense of Remark 2.7 if we only require it to be a relaxed equilibrium, not a strong one. Second, if the given MFG solution is strong, then the strong n-player equilibrium \(\Lambda ^n\) can be taken to be exchangeable in the sense of Remark 2.7. These details stray somewhat from the main objective of the paper, so we refrain from elaborating any further. On a related note, at the level of generality of Theorem 2.11 we do not expect to obtain a rate of convergence of \(\epsilon _n\), as in [8, 27].
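Symmetric distributed strategies of the kind mentioned above have every agent apply one feedback function \({\hat{\alpha }}(t,X^i_t,{\widehat{\mu }}^x_t)\) that depends on neither i nor n. The Python sketch below only illustrates the form of such a strategy profile in an n-player simulation without common noise. The dynamics \(dX^i_t = \alpha ^i_t\,dt + dW^i_t\), the control set \(A = [-1,1]\), the initial law N(0,1) and the feedback `alpha_hat` are all hypothetical choices, and nothing here claims that the resulting profile is an approximate Nash equilibrium.

```python
import math
import random

def alpha_hat(t, x, m_bar):
    """Hypothetical feedback: steer toward the empirical mean, clipped to A = [-1, 1].

    It depends on the empirical measure only through its mean m_bar, and on neither i nor n.
    """
    return max(-1.0, min(1.0, m_bar - x))

def simulate_n_player(n, T=1.0, steps=100, seed=0):
    """Euler scheme for dX^i_t = alpha_hat(t, X^i_t, mean of mu_hat^x_t) dt + dW^i_t (assumed dynamics)."""
    rng = random.Random(seed)
    dt = T / steps
    X = [rng.gauss(0.0, 1.0) for _ in range(n)]  # X^i_0 i.i.d. N(0, 1), an assumed initial law
    for k in range(steps):
        t = k * dt
        m_bar = sum(X) / n  # every agent sees the same empirical measure at time t
        X = [x + alpha_hat(t, x, m_bar) * dt + math.sqrt(dt) * rng.gauss(0.0, 1.0) for x in X]
    return X

states = simulate_n_player(200)
print(sum(states) / len(states))
```

All controls at a given step are computed from the same pre-update state vector, so the agents act simultaneously, and the same `alpha_hat` is reused unchanged for every n.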

3 The case of no common noise

The goal of this section is to specialize the main results to MFGs without common noise. Accordingly, we assume that \(\sigma _0 \equiv 0\) throughout this section. Assumption A permits degenerate volatility, but when \(\sigma _0 \equiv 0\) our general definition of weak MFG solution still involves the common noise B, which in a sense should no longer play any role. To be absolutely clear, we will rewrite the definitions and the two main theorems so that they do not involve a common noise; most notably, the notion of strong controls for the finite-player games is refined to very strong controls.

The proofs of the main results of Sect. 3.1, Proposition 3.3 and Theorem 3.4, are deferred to Sect. 7, where we will see how to deduce almost all of the results without common noise from those with common noise. Crucially, even without common noise, a weak MFG solution still involves a random measure \(\mu \), and the consistency condition becomes \(\mu = P((W,\Lambda ,X) \in \cdot \ | \ \mu )\). We illustrate by example just how different weak solutions can be from the strong solutions typically considered in the MFG literature, in which \(\mu \) is deterministic. Finally we close the section by discussing some situations in which weak solutions are concentrated on the family of strong solutions.

3.1 Definitions and results

First, let us state a simplified definition of MFG solution for the case \(\sigma _0 \equiv 0\), which is really just Definition 2.1 rewritten without B. Again, the following definition is relative to the initial state distribution \(\lambda \).

Definition 3.1

A weak MFG solution without common noise is a tuple \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P,W,\mu ,\Lambda ,X)\), where \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P)\) is a complete filtered probability space supporting \((W,\mu ,\Lambda ,X)\) satisfying

(1) \((W_t)_{t \in [0,T]}\) is an \(({\mathcal {F}}_t)_{t \in [0,T]}\)-Wiener process of dimension m, the process \((X_t)_{t \in [0,T]}\) is \(({\mathcal {F}}_t)_{t \in [0,T]}\)-adapted with values in \({\mathbb {R}}^d\), and \(P \circ X_0^{-1} = \lambda \). Moreover, \(\mu \) is a random element of \({\mathcal {P}}^p({\mathcal {X}})\) such that \(\mu (C)\) is \({\mathcal {F}}_t\)-measurable for each \(C \in {\mathcal {F}}^{\mathcal {X}}_t\) and \(t \in [0,T]\).

(2) \(X_0\), W, and \(\mu \) are independent.

(3) \((\Lambda _t)_{t \in [0,T]}\) is \(({\mathcal {F}}_t)_{t \in [0,T]}\)-progressively measurable with values in \({\mathcal {P}}(A)\) and

$$\begin{aligned} {\mathbb {E}}^P\int _0^T\int _A|a|^p\Lambda _t(da)dt < \infty . \end{aligned}$$

Moreover, \(\sigma (\Lambda _s : s \le t)\) is conditionally independent of \({\mathcal {F}}^{X_0,W,\mu }_T\) given \({\mathcal {F}}^{X_0,W,\mu }_t\), for each \(t \in [0,T]\), where

$$\begin{aligned} {\mathcal {F}}^{X_0,W,\mu }_t&= \sigma \left( X_0,W_s,\mu (C) : s \le t, \ C \in {\mathcal {F}}^{\mathcal {X}}_t\right) . \end{aligned}$$

(4) The state equation holds:

$$\begin{aligned} dX_t = \int _Ab(t,X_t,\mu ^x_t,a)\Lambda _t(da)dt + \sigma (t,X_t,\mu ^x_t)dW_t. \end{aligned} \tag{3.1}$$

(5) If \(({\widetilde{\Omega }}',({\mathcal {F}}'_t)_{t \in [0,T]},P')\) is another filtered probability space supporting \((W',\mu ',\Lambda ',X')\) satisfying (1-4) and \(P \circ \mu ^{-1} = P' \circ (\mu ')^{-1}\), then

$$\begin{aligned} {\mathbb {E}}^P\left[ \Gamma (\mu ^x,\Lambda ,X)\right] \ge {\mathbb {E}}^{P'}\left[ \Gamma (\mu '^x,\Lambda ',X')\right] . \end{aligned}$$

(6) \(\mu \) is a version of the conditional law of \((W,\Lambda ,X)\) given \(\mu \).
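The state equation (3.1) integrates the drift against the relaxed control \(\Lambda _t\). As a rough sketch, assuming that \(\Lambda _t\) has finite support, that the volatility is a constant, and that the drift is the hypothetical \(b(t,x,m,a) = a + (m - x)\), which depends on \(\mu ^x_t\) only through a given mean flow m(t), one Euler–Maruyama step simply averages b over the atoms of \(\Lambda _t\). A strict control is the special case of a single Dirac mass \(\delta _{\alpha _t}\).

```python
import math
import random

def b(t, x, m, a):
    # Hypothetical drift: the control plus attraction toward the mean m of mu^x_t.
    return a + (m - x)

def relaxed_drift(t, x, m, control):
    """Integral of b(t, x, m, a) against Lambda_t(da), for Lambda_t given as (action, weight) pairs."""
    return sum(w * b(t, x, m, a) for a, w in control)

def euler_maruyama(control_at, mean_flow, x0=0.0, T=1.0, steps=200, sigma=1.0, seed=0):
    """Euler-Maruyama scheme for (3.1) with constant volatility sigma (an assumption)."""
    rng = random.Random(seed)
    dt = T / steps
    x = x0
    path = [x]
    for k in range(steps):
        t = k * dt
        dW = math.sqrt(dt) * rng.gauss(0.0, 1.0)
        x = x + relaxed_drift(t, x, mean_flow(t), control_at(t)) * dt + sigma * dW
        path.append(x)
    return path

def mixed(t):
    return [(-1.0, 0.5), (1.0, 0.5)]  # relaxed control (delta_{-1} + delta_{1}) / 2

def strict(t):
    return [(0.0, 1.0)]  # strict control delta_0

def zero_mean(t):
    return 0.0  # assumed mean flow of mu^x_t

p_relaxed = euler_maruyama(mixed, zero_mean)
p_strict = euler_maruyama(strict, zero_mean)
print(max(abs(u - v) for u, v in zip(p_relaxed, p_strict)))
```

Because this hypothetical b is affine in a, the relaxed control \(\frac12(\delta _{-1}+\delta _1)\) and the strict control \(\delta _0\) produce the same path up to rounding when driven by the same noise; for a drift that is nonlinear in a, the two paths would generally differ.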

As in Definition 2.2, we may refer to the law \(P \circ (W,\mu ,\Lambda ,X)^{-1}\) itself as a weak MFG solution. Again, if also there exists an A-valued process \((\alpha _t)_{t \in [0,T]}\) such that \(P(\Lambda _t = \delta _{\alpha _t} \ a.e. \ t)=1\), then we say the MFG solution has strict control. If this \((\alpha _t)_{t \in [0,T]}\) is progressively measurable with respect to the completion of \(({\mathcal {F}}^{X_0,W,\mu }_t)_{t \in [0,T]}\), we say the MFG solution has strong control. If \(\mu \) is a.s.-constant, then we have a strong MFG solution without common noise. In this case, we may abuse the terminology somewhat by saying that a measure \({\widetilde{\mu }} \in {\mathcal {P}}^p({\mathcal {X}})\) is itself a strong MFG solution (without common noise), if there exists a weak MFG solution \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P,W,\mu ,\Lambda ,X)\) without common noise such that \(P(\mu = {\widetilde{\mu }}) = 1\).

Remark 3.2

Our notion of strong MFG solution (without common noise) with weak control corresponds to the solution concept adopted by the recent papers [15, 29]. On the other hand, a strong MFG solution (without common noise) with strong control corresponds to the usual definition of MFG solution in the literature [6, 8, 21]. This is not immediate, since our strong control is still required to be optimal among the class of weak controls, whereas the latter papers require optimality only relative to other strong controls. But in most cases, such as under Assumption A, optimality among strong controls implies optimality among weak controls, and thus our definition reduces to the standard one. This is the same phenomenon driving Propositions 2.4 and 3.3, and it is well known in control theory. Lemma 6.5 elaborates on this point; see also [24] or the more recent [26] for further discussion.

We continue to work with the definition of the n-player games of the previous section. Suppose we are given an n-player environment \({\mathcal {E}}_n = (\Omega _n,({\mathcal {F}}^n_t)_{t \in [0,T]},{\mathbb {P}}_n,\xi ,B,W)\), as was defined in Sect. 2.3. Let \(({\mathcal {F}}^{vs,n}_t)_{t \in [0,T]}\) denote the \({\mathbb {P}}_n\)-completion of \((\sigma (\xi ,W_s : s \le t))_{t \in [0,T]}\), that is, the filtration generated by the initial state and the idiosyncratic noises (but not the common noise). Let us say that a control \(\Lambda \in {\mathcal {A}}_n({\mathcal {E}}_n)\) is a very strong control if \({\mathbb {P}}_n(\Lambda _t = \delta _{\alpha _t} \ a.e. \ t) = 1\), for some \(({\mathcal {F}}^{vs,n}_t)_{t \in [0,T]}\)-progressively measurable A-valued process \((\alpha _t)_{t \in [0,T]}\). A very strong strategy is a vector of very strong controls. For \(\epsilon =(\epsilon _1,\ldots ,\epsilon _n) \in [0,\infty )^n\), a very strong \(\epsilon \)-Nash equilibrium in \({\mathcal {E}}_n\) is any very strong strategy \(\Lambda \in {\mathcal {A}}_n^n({\mathcal {E}}_n)\) such that

The very strong equilibrium is arguably the most natural notion of equilibrium in the case of no common noise, and it is certainly one of the most common in the literature. The proof of the following proposition is deferred to Appendix A.2.

Proposition 3.3

When \(\sigma _0\equiv 0\), every very strong \(\epsilon \)-Nash equilibrium is also a relaxed \(\epsilon \)-Nash equilibrium.

The following Theorem 3.4 rewrites Theorems 2.6 and 2.11 in the setting without common noise. Although this is mostly derived from Theorems 2.6 and 2.11, the proof is spelled out in Sect. 7, as it is not entirely straightforward.

Theorem 3.4

Suppose \(\sigma _0 \equiv 0\). Theorem 2.6 remains true if the term “weak MFG solution” is replaced by “weak MFG solution without common noise,” and if \(P_n\) is defined instead by

Theorem 2.11 remains true if “weak MFG solution” is replaced by “weak MFG solution without common noise,” if \(P_n\) is defined by (3.2), and if “strong” is replaced by “very strong.”

Since strong MFG solutions are more familiar in the literature on mean field games and presumably more accessible computationally, it would be nice to have a description of weak solutions in terms of strong solutions. We will see that this is not possible in general, and the investigation of this issue highlights the fundamental difference between stochastic and deterministic equilibria (i.e. weak and strong MFG solutions). First, a discussion of a special case will help to clarify the ideas.

3.2 A digression on McKean–Vlasov equations

When there is no control (when A is a singleton), the mean field game reduces to a McKean–Vlasov equation. In this case, an interesting simplification occurs: every weak solution is simply a randomization over strong solutions. More precisely, suppose we have a system of weakly interacting diffusions, given by

A common argument in the theory of McKean–Vlasov limits [16, 32, 34] is to show, under suitable assumptions on \(({\tilde{b}},{\tilde{\sigma }})\), that \((\mu ^n)_{n=1}^\infty \) is tight, and that every weak limit point (an element of \({\mathcal {P}}({\mathcal {P}}({\mathcal {C}}^d))\)) is concentrated on the set of solutions \(\mu \in {\mathcal {P}}({\mathcal {C}}^d)\) of the following strong McKean–Vlasov equation:

Consider also searching for a \({\mathcal {P}}({\mathcal {C}}^d)\)-valued random variable \(\mu \) satisfying the weak McKean–Vlasov equation:

It is not too difficult to convince yourself that a \({\mathcal {P}}({\mathcal {C}}^d)\)-valued random variable satisfies the weak McKean–Vlasov equation if and only if it almost surely satisfies the strong McKean–Vlasov equation. That is, every weak solution is supported on the set of strong solutions. In particular, we find that the set of strong McKean–Vlasov solutions is rich enough to characterize all of the possible limiting behaviors of the finite-particle systems.
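As a hedged illustration of such particle systems, the sketch below takes hypothetical coefficients \({\tilde{b}}(x,m) = {\bar{m}} - x\) and \({\tilde{\sigma }} \equiv 1\), where \({\bar{m}}\) denotes the mean of m. For this choice the strong McKean–Vlasov equation reads \(dX_t = ({\mathbb {E}}[X_t] - X_t)dt + dW_t\), so its mean is constant in time. In the n-particle system the drifts sum to zero, so the empirical mean moves only through the averaged noise \(\frac1n\sum _{i=1}^n W^i_t\), which is of order \(n^{-1/2}\).

```python
import math
import random

def simulate_particles(n, T=1.0, steps=100, seed=0):
    """Euler scheme for dX^i = (mean of mu^n_t - X^i) dt + dW^i, a hypothetical choice of (b~, sigma~)."""
    rng = random.Random(seed)
    dt = T / steps
    X = [rng.gauss(1.0, 1.0) for _ in range(n)]  # i.i.d. initial states, assumed law N(1, 1)
    mean0 = sum(X) / n
    for _ in range(steps):
        m = sum(X) / n  # mean of the empirical measure mu^n_t
        X = [x + (m - x) * dt + math.sqrt(dt) * rng.gauss(0.0, 1.0) for x in X]
    return mean0, sum(X) / n, X

m0, mT, particles = simulate_particles(2000)
print(m0, mT)  # the empirical mean moves only by the averaged noise, of order n ** -0.5
```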

In general, no such simplification is available for mean field games. Given a weak MFG solution (without common noise), we can still say that the random measure \(\mu \) is concentrated on the set of solutions of the corresponding McKean–Vlasov equation, but the optimality condition breaks down. This is essentially because the adaptedness requirement makes the class of admissible controls quite dependent on how random \(\mu \) is. To highlight this point, Sect. 3.3 below describes a model possessing weak MFG solutions which are not randomizations of strong MFG solutions. Section 3.4 discusses some partial results on when this simplification can occur in the MFG setting.

3.3 An illuminating example

This section describes a deceptively simple example which illustrates the difference between weak and strong solutions. Consider the time horizon \(T = 2\), the initial state distribution \(\lambda = \delta _0\), and the following data (still with \(\sigma _0 \equiv 0\)):

where for \(\nu \in {\mathcal {P}}^1({\mathbb {R}})\) we define \({\bar{\nu }} := \int x\nu (dx)\). Similarly, for \(\mu \in {\mathcal {P}}^1({\mathcal {X}})\) write \({\bar{\mu }}^x_t := \int _{{\mathbb {R}}}x\mu ^x_t(dx)\). Assumption A is verified by choosing \(p=2\), \(p_\sigma = 0\), and any \(p' > 2\). Let us first study the optimization problems arising in the MFG problem. Let \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,2]},P,W,\mu ,\Lambda ,X)\) satisfy (1-5) of Definition 3.1. For \(({\mathcal {F}}_t)_{t\in [0,2]}\)-progressively measurable \({\mathcal {P}}([-1,1])\)-valued processes \(\beta = (\beta _t)_{t\in [0,2]}\), define

where

Independence of W and \(\mu \) implies

where \({\mathcal {F}}^\beta _t := \sigma (\beta _s : s \le t)\). If it is also required that \({\mathcal {F}}^\beta _t\) is conditionally independent of \({\mathcal {F}}^{X_0,W,\mu }_2\) given \({\mathcal {F}}^{X_0,W,\mu }_t\), then

where the last equality follows from independence of \((X_0,W)\) and \(\mu \), and \({\mathcal {F}}^\mu _t := \sigma (\mu (C) : C \in {\mathcal {F}}^{\mathcal {X}}_t)\). Hence

Condition (5) of Definition 3.1 implies that \(\Lambda \) maximizes J over all such processes \(\beta \), which implies that \(\Lambda _t(\omega )\) must equal \(\delta _{\alpha ^*_t(\omega )}\) on the \((t,\omega )\)-set \(\{\alpha ^* \ne 0\}\), where

and we use the convention \(\text {sign}(0) := 0\).

Remark 3.5

This already highlights the key point: When \(\mu \) is deterministic, an optimal control is the constant \(\text {sign}({\bar{\mu }}^x_2)\), but when \(\mu \) is random, this control is inadmissible since it is not adapted.

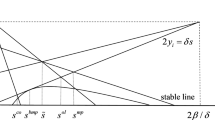

Proposition 3.6

Every strong MFG solution (without common noise) satisfies \({\bar{\mu }}^x_2 \in \{-2,0,2\}\) and \({\bar{\mu }}^x_t = t\,\mathrm {sign}({\bar{\mu }}^x_2)\).

Proof

Let \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,2]},P,W,\mu ,\Lambda ,X)\) satisfy Definition 3.1, with \(\mu \) deterministic. In this case, \(\alpha ^*_t = \text {sign}({\bar{\mu }}^x_2)\) for all t. Suppose that \({\bar{\mu }}^x_2 \ne 0\). Then \(\Lambda _t = \delta _{\alpha ^*_t}\) must hold \(dt \otimes dP\)-a.e., and thus

The consistency condition (6) of Definition 3.1 implies \({\bar{\mu }}^x_t = {\mathbb {E}}[X_t] = t\,\text {sign}({\bar{\mu }}^x_2)\). In particular, \({\bar{\mu }}^x_2 = 2\,\text {sign}({\bar{\mu }}^x_2)\), which implies \({\bar{\mu }}^x_2 = \pm 2\) since we assumed \({\bar{\mu }}^x_2 \ne 0\). \(\square \)
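The consistency step above reduces to the scalar fixed-point equation \(m = 2\,\text {sign}(m)\) for \(m = {\bar{\mu }}^x_2\), with the convention \(\text {sign}(0) := 0\). The short Python check below scans a grid of candidate values; it merely confirms the arithmetic, since the solution set \(\{-2,0,2\}\) is immediate by hand.

```python
def sign(v):
    # Convention sign(0) := 0, as in the text.
    return (v > 0) - (v < 0)

def consistent(m):
    # With the constant control sign(m) on [0, 2], the mean terminal state is 2 * sign(m).
    return m == 2 * sign(m)

candidates = [k / 2 for k in range(-8, 9)]  # grid -4.0, -3.5, ..., 4.0
solutions = [m for m in candidates if consistent(m)]
print(solutions)  # [-2.0, 0.0, 2.0]
```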

Proposition 3.7

There exists a weak MFG solution (without common noise) satisfying \(P({\bar{\mu }}^x_2 = 1) = P({\bar{\mu }}^x_2 = -1) = 1/2\).

Proof

Construct on some probability space \(({\widetilde{\Omega }},{\mathcal {F}},P)\) a random variable \(\gamma \) with \(P(\gamma = 1) = P(\gamma = -1) = 1/2\) and an independent Wiener process W. Let \(\alpha ^*_t = \gamma 1_{(1,2]}(t)\) for each t (noticing that this interval is open on the left), and define \(({\mathcal {F}}_t)_{t \in [0,2]}\) to be the complete filtration generated by \((W_t,\alpha ^*_t)_{t \in [0,2]}\). Let

Finally, let \(\Lambda = dt\delta _{\alpha ^*_t}(da)\), and define \(\mu := P((W,\Lambda ,X) \in \cdot \ | \ \gamma )\). Clearly \(\mu \) is \(\gamma \)-measurable. On the other hand, independence of \(\gamma \) and W implies

Thus \(\gamma \) is also \(\mu \)-measurable, and we conclude that \(\mu = P((W,\Lambda ,X) \in \cdot \ | \ \mu )\). It is straightforward to check that

Thus

Since \({\bar{\mu }}^x_2 = \gamma = \text {sign}(\gamma )\), we conclude that \(\alpha ^*_t = \text {sign}({\mathbb {E}}[{\bar{\mu }}^x_2 \ | \ {\mathcal {F}}^\mu _t])\). It is then readily checked using the previous arguments that \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,2]},P,W,\mu ,\Lambda ,X)\) is a weak MFG solution. \(\square \)
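The construction in this proof can be simulated directly. The sketch assumes, as the computation \({\mathbb {E}}[X_t] = t\,\text {sign}({\bar{\mu }}^x_2)\) in the proof of Proposition 3.6 suggests, that the drift equals the control, and it additionally assumes unit volatility, so that \(X_t = \gamma (t-1)^+ + W_t\) with \(X_0 = 0\) (recall \(\lambda = \delta _0\)). Estimating the conditional means of \(X_1\) and \(X_2\) given \(\gamma \) (equivalently given \(\mu \)) should return values near 0 and \(\gamma \), consistent with \({\bar{\mu }}^x_2 = \gamma \in \{-1,1\}\).

```python
import random

def simulate_prop37(samples=20000, seed=0):
    """Conditional means of X_1 and X_2 given gamma, assuming dX_t = alpha*_t dt + dW_t, X_0 = 0."""
    rng = random.Random(seed)
    x1 = {1: [], -1: []}
    x2 = {1: [], -1: []}
    for _ in range(samples):
        gamma = rng.choice((1, -1))  # P(gamma = 1) = P(gamma = -1) = 1/2
        w1 = rng.gauss(0.0, 1.0)  # W_1
        w2 = w1 + rng.gauss(0.0, 1.0)  # W_2 = W_1 + independent N(0, 1) increment
        # alpha*_t = gamma on (1, 2] and 0 on [0, 1], so X_t = gamma * max(t - 1, 0) + W_t.
        x1[gamma].append(w1)
        x2[gamma].append(gamma + w2)
    return {g: (sum(x1[g]) / len(x1[g]), sum(x2[g]) / len(x2[g])) for g in (1, -1)}

results = simulate_prop37()
print(results)  # values near {1: (0, 1), -1: (0, -1)}
```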

To be absolutely clear, the above two propositions imply the following: If \(S := \{\nu \in {\mathcal {P}}({\mathcal {X}}) : {\bar{\nu }}^x_2 \in \{-2,0,2\}\}\), then every strong MFG solution lies in S, but there exists a weak MFG solution with \(P(\mu \in S) = 0\).

Remark 3.8

The example of Proposition 3.7 can be modified to illustrate another strange phenomenon. The proof of Proposition 3.7 has \(\alpha ^*_t = \gamma \) for \(t \in (1,2]\) and \(\alpha ^*_t=0\) for \(t \le 1\). Instead, we could set \(\alpha ^*_t = \eta _t\) for \(t \le 1\), for any mean-zero \([-1,1]\)-valued process \((\eta _t)_{t \in [0,1]}\) independent of \(\gamma \) and W. The rest of the proof proceeds unchanged, yielding another weak MFG solution with the same conditional mean state \({\bar{\mu }}^x\), but with different conditional law \(\mu ^x\). (In fact, we could even choose \(\alpha ^*\) to be any mean-zero relaxed control on the time interval [0, 1].) Intuitively, for \(t \le 1\) we have \({\mathbb {E}}[{\bar{\mu }}^x_2 \ | \ {\mathcal {F}}^\mu _t] = 0\), and the choice of control on the time interval [0, 1] does not matter in light of (3.3); the agent then has some freedom to randomize her choice of control among the family of non-unique optimal choices. This type of randomization can typically occur when optimal controls are non-unique, and although it is unnatural in some sense, Theorem 2.6 indicates that this behavior can indeed arise in the limit from the finite-player games.
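This modification can be illustrated under the same hedged assumptions used for the construction of Proposition 3.7 (drift equal to the control, unit volatility, \(X_0 = 0\)) together with one hypothetical mean-zero choice: \(\eta _t := U\) for \(t \le 1\), with U uniform on \([-1,1]\) and independent of \((\gamma ,W)\). Conditionally on \(\gamma \), the terminal state becomes \(X_2 = U + \gamma + W_2\) instead of \(\gamma + W_2\). The conditional mean stays \(\gamma \), but the conditional variance rises from 2 to \(2 + 1/3\), so the conditional law \(\mu ^x\) changes.

```python
import random

def terminal_samples(gamma, randomize_early, samples=20000, seed=0):
    """Samples of X_2 given gamma, assuming dX_t = alpha*_t dt + dW_t and X_0 = 0."""
    rng = random.Random(seed)
    out = []
    for _ in range(samples):
        # Hypothetical eta_t := U for t <= 1, with U ~ Uniform[-1, 1] independent of (gamma, W).
        early = rng.uniform(-1.0, 1.0) if randomize_early else 0.0
        w2 = rng.gauss(0.0, 2.0 ** 0.5)  # W_2 ~ N(0, 2)
        out.append(early + gamma + w2)  # integral of alpha* over [0, 1], then over (1, 2], plus W_2
    return out

def mean_var(xs):
    """Sample mean and unbiased sample variance."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

base = mean_var(terminal_samples(1, randomize_early=False))
randomized = mean_var(terminal_samples(1, randomize_early=True))
print(base, randomized)  # means near 1; variances near 2 and 7/3
```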

3.4 Supports of weak solutions

In this section, we attempt to partially explain what permits the existence of weak solutions which are not randomizations among strong solutions. As was mentioned in Remark 3.5, the culprit is the adaptedness required of controls. Indeed, in the example of Sect. 3.3, very different optimal controls arise depending on whether or not the measure \(\mu \) is random. If \(\mu \) is deterministic, then so is the optimal control, and we may write this optimal control as a functional of \(\mu \) by

The problem is as follows: for each fixed deterministic \(\mu \), the optimal control \(({\hat{\alpha }}^D(t,\mu ))_{t \in [0,T]}\) is deterministic and thus trivially adapted, but when \(\mu \) is allowed to be random then this control is no longer adapted and thus no longer admissible. If, for a different MFG problem, it happens that \({\hat{\alpha }}^D\) is in fact progressively measurable with respect to \(({\mathcal {F}}^\mu _t)_{t \in [0,T]}\), then this control is still admissible when \(\mu \) is randomized; moreover, it should be optimal when \(\mu \) is randomized, since it was optimal for each realization of \(\mu \). The following results make this idea precise, but first some terminology will be useful. As usual we work under Assumption A at all times, and the initial state distribution \(\lambda \in {\mathcal {P}}^{p'}({\mathbb {R}}^d)\) is fixed.

Definition 3.9

We say that a function \({\hat{\alpha }} : [0,T] \times {\mathcal {C}}^m \times {\mathcal {C}}^d \times {\mathcal {P}}^p({\mathcal {X}}) \rightarrow A\) is a universally admissible control if:

(1) \({\hat{\alpha }}\) is progressively measurable with respect to the (universal completion of the) natural filtration \(({\mathcal {F}}^{W,X,\mu }_t)_{t \in [0,T]}\) on \({\mathcal {C}}^m \times {\mathcal {C}}^d \times {\mathcal {P}}^p({\mathcal {X}})\). Here \({\mathcal {F}}^{W,X,\mu }_t := \sigma (W_s,X_s,\mu (C) : s \le t, \ C \in {\mathcal {F}}^{\mathcal {X}}_t)\) for each t, where \((W,X,\mu )\) denotes the identity map on \({\mathcal {C}}^m \times {\mathcal {C}}^d \times {\mathcal {P}}^p({\mathcal {X}})\).

(2) For each fixed \(\nu \in {\mathcal {P}}^p({\mathcal {X}})\), the SDE

$$\begin{aligned} dX_t = b(t,X_t,\nu ^x_t,{\hat{\alpha }}(t,W,X,\nu ))dt + \sigma (t,X_t,\nu ^x_t)dW_t, \ X_0 \sim \lambda , \end{aligned} \tag{3.4}$$

is unique in joint law; that is, if we are given two pairs of processes \((W^i_t,X^i_t)_{t \in [0,T]}\), for \(i=1,2\), each satisfying (3.4), possibly on different filtered probability spaces but with \((W^i_t)_{t \in [0,T]}\) a Wiener process in either case, then \((W^1,X^1)\) and \((W^2,X^2)\) have the same law.

(3) Suppose we are given a filtered probability space \(({\widetilde{\Omega }},({\widetilde{{\mathcal {F}}}}_t)_{t \in [0,T]},{\widetilde{P}})\) supporting an \(({\widetilde{{\mathcal {F}}}}_t)_{t \in [0,T]}\)-Wiener process \({\widetilde{W}}\), an \({\widetilde{{\mathcal {F}}}}_0\)-measurable \({\mathbb {R}}^d\)-valued random variable \({\widetilde{\xi }}\) with law \(\lambda \), and a \({\mathcal {P}}^p({\mathcal {X}})\)-valued random variable \({\tilde{\mu }}\) independent of \(({\widetilde{\xi }},{\widetilde{W}})\) such that \({\tilde{\mu }}(C)\) is \({\widetilde{{\mathcal {F}}}}_t\)-measurable for each \(C \in {\mathcal {F}}^{\mathcal {X}}_t\) and \(t \in [0,T]\). Then there exists a strong solution \({\widetilde{X}}\) of the SDE

$$\begin{aligned} d{\widetilde{X}}_t = b(t,{\widetilde{X}}_t,{\tilde{\mu }}^x_t,{\hat{\alpha }} (t,{\widetilde{W}},{\widetilde{X}}, {\tilde{\mu }}))dt + \sigma (t,{\widetilde{X}}_t, {\tilde{\mu }}^x_t)d{\widetilde{W}}_t, \ {\widetilde{X}}_0 = {\widetilde{\xi }}, \end{aligned}$$

and it satisfies \({\mathbb {E}}\int _0^T|{\hat{\alpha }}(t,{\widetilde{W}},{\widetilde{X}},{\tilde{\mu }})|^pdt < \infty \).

If \({\hat{\alpha }}\) is a universally admissible control, we say it is locally optimal if for each fixed \(\nu \in {\mathcal {P}}^p({\mathcal {X}})\) there exists a complete filtered probability space \((\Omega ^{(\nu )},({\mathcal {F}}^{(\nu )}_t)_{t \in [0,T]},P^\nu )\) supporting a Wiener process \(W^\nu \) and a continuous adapted process \(X^\nu \) such that \((W^\nu ,X^\nu )\) satisfies the SDE (3.4) and:

(4) If \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P)\) supports an m-dimensional Wiener process W, a progressive \({\mathcal {P}}(A)\)-valued process \(\Lambda \) satisfying \({\mathbb {E}}^P\int _0^T\int _A|a|^p\Lambda _t(da)dt < \infty \), and a continuous adapted \({\mathbb {R}}^d\)-valued process X satisfying

$$\begin{aligned} dX_t = \int _Ab\left( t,X_t,\nu ^x_t,a\right) \Lambda _t(da)dt + \sigma \left( t,X_t,\nu ^x_t\right) dW_t, \ P \circ X_0^{-1} = \lambda , \end{aligned}$$

then

$$\begin{aligned} {\mathbb {E}}^{P^\nu }\left[ \Gamma \left( \nu ^x,dt\delta _{{\hat{\alpha }} (t,W^\nu ,X^\nu ,\nu )}(da),X^\nu \right) \right] \ge {\mathbb {E}}^P\left[ \Gamma (\nu ^x,\Lambda ,X)\right] . \end{aligned}$$

We need an additional Assumption C, which simply requires the uniqueness of the optimal controls. Some simple conditions are given in [11, Proposition 4.4] under which Assumption C holds: in particular, it suffices to assume that b and \(\sigma \) are affine in (x, a), that f is strictly concave in (x, a), and that g is concave in x.

Assumption C

If \(({\widetilde{\Omega }}^i,({\mathcal {F}}^i_t)_{t \in [0,T]},P^i,W^i,\mu ^i,\Lambda ^i,X^i)\) for \(i=1,2\) both satisfy (1-5) of Definition 3.1 as well as \(P^1 \circ (\mu ^1)^{-1} = P^2 \circ (\mu ^2)^{-1}\), then \(P^1 \circ (W^1,\mu ^1,\Lambda ^1,X^1)^{-1} = P^2 \circ (W^2,\mu ^2,\Lambda ^2,X^2)^{-1}\).

Theorem 3.10

Suppose Assumption C holds, and suppose that there exists a universally admissible and locally optimal control \({\hat{\alpha }} : [0,T] \times {\mathcal {C}}^m \times {\mathcal {C}}^d \times {\mathcal {P}}^p({\mathcal {X}}) \rightarrow A\). Then, for every weak MFG solution \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P,W,\mu ,\Lambda ,X)\) (without common noise), \(P \circ \mu ^{-1}\) is concentrated on the set of strong MFG solutions (without common noise). Conversely, if \(\rho \in {\mathcal {P}}^p({\mathcal {P}}^p({\mathcal {X}}))\) is concentrated on the set of strong MFG solutions (without common noise), then there exists a weak MFG solution (without common noise) with \(P \circ \mu ^{-1} = \rho \).

Proof

Let \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P,W,\mu ,\Lambda ,X)\) be a weak MFG solution (without common noise).

Step 1: We will first show that necessarily \(\Lambda _t = \delta _{{\hat{\alpha }}(t,W,X,\mu )}\) holds \(dt \otimes dP\)-a.e. On \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P)\) we may use (3) of Definition 3.9 to find a strong solution \(X'\) of the SDE

with \({\mathbb {E}}^P\int _0^T|{\hat{\alpha }}(t,W,X',\mu )|^pdt < \infty \). In particular, \(X'\) is adapted to the (completion of the) filtration \({\mathcal {F}}^{X_0,W,\mu }_t := \sigma (X_0,W_s,\mu (C) : s\le t, \ C \in {\mathcal {F}}^{\mathcal {X}}_t)\). Let \(\Lambda ' := dt\delta _{{\hat{\alpha }}(t,W,X',\mu )}(da)\). Then it is clear that \(({\widetilde{\Omega }},({\mathcal {F}}^{X_0,W,\mu }_t)_{t \in [0,T]},P,W,\mu ,\Lambda ',X')\) satisfies conditions (1-4) of Definition 3.1. Optimality of P implies

On the other hand, for \(P \circ \mu ^{-1}\)-a.e. \(\nu \in {\mathcal {P}}^p({\mathcal {X}})\), the following hold under \(P(\cdot \ | \ \mu =\nu )\):

-

W is a \(({\mathcal {F}}_t)_{t \in [0,T]}\)-Wiener process.

-

\((W,\Lambda ,X)\) satisfies

$$\begin{aligned} dX_t = \int _Ab\left( t,X_t,\nu ^x_t,a\right) \Lambda _t(da)dt + \sigma \left( t,X_t,\nu ^x_t\right) dW_t. \end{aligned}$$

-

\((W,X')\) solves the SDE (3.4).

From the local optimality of \({\hat{\alpha }}\) we conclude (keeping in mind the uniqueness condition (2) of Definition 3.9) that

Thus

By Assumption C, there is only one optimal control, and so \(\Lambda = \Lambda ' = dt\delta _{{\hat{\alpha }}(t,W,X',\mu )}(da)\), P-a.s. From uniqueness of solutions of the SDE we conclude that \(X=X'\) a.s. as well, completing the first step. (Note that we do not use the assumptions of Definition 3.9 for this last conclusion, but only the Lipschitz assumption (A.4).)

Step 2: Next, we show that \(P \circ \mu ^{-1}\) is concentrated on the set of strong MFG solutions. Using (2) and (3) of Definition 3.9, we know that for \(P \circ \mu ^{-1}\)-a.e. \(\nu \in {\mathcal {P}}^p({\mathcal {X}})\) there exists on some filtered probability space \((\Omega ^{(\nu )},({\mathcal {F}}^{(\nu )}_t)_{t \in [0,T]},P^{(\nu )})\) a weak solution \(X^\nu \) of the SDE

where \(W^\nu \) is an \(({\mathcal {F}}^{(\nu )}_t)_{t \in [0,T]}\)-Wiener process. From Step 1, on \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P)\) we have

It follows from the P-independence of \(\mu \), \(X_0\), and W along with the uniqueness in law of condition (2) of Definition 3.9 that

for \(P \circ \mu ^{-1}\)-a.e. \(\nu \in {\mathcal {P}}^p({\mathcal {X}})\). Since \(\mu = P((W,\Lambda ,X) \in \cdot \ | \ \mu )\), it follows that

We conclude that \(P \circ \mu ^{-1}\)-a.e. \(\nu \in {\mathcal {P}}^p({\mathcal {X}})\) is a strong MFG solution, or more precisely that

is a strong MFG solution. Indeed, we just verified condition (6) of Definition 3.1, and conditions (1-4) are obvious. The optimality condition (5) of Definition 3.1 is a simple consequence of the local optimality of \({\hat{\alpha }}\).

Step 3: We turn now to the converse. Let \(({\widetilde{\Omega }},{\mathcal {F}},P)\) be any probability space supporting a random variable \((\xi ,W,\mu )\) with values in \({\mathbb {R}}^d \times {\mathcal {C}}^m \times {\mathcal {P}}^p({\mathcal {X}})\) with law \(\lambda \times {\mathcal {W}}^m \times \rho \), where \({\mathcal {W}}^m\) is Wiener measure on \({\mathcal {C}}^m\). Let \(({\mathcal {F}}_t)_{t \in [0,T]}\) denote the P-completion of \((\sigma (\xi ,W_s,\mu (C) : s \le t, \ C \in {\mathcal {F}}^{\mathcal {X}}_t))_{t \in [0,T]}\). Solve strongly on \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P)\) the SDE

Condition (3) of Definition 3.9 makes this possible. Define \(\Lambda := dt\delta _{{\hat{\alpha }}(t,W,X,\mu )}(da)\). Clearly \(P \circ \mu ^{-1} = \rho \) by construction, and we claim that \(({\widetilde{\Omega }},({\mathcal {F}}_t)_{t \in [0,T]},P,W,\mu ,\Lambda ,X)\) is a weak MFG solution. Using hypothesis (1), it is clear that conditions (1-4) of Definition 3.1 hold, and thus we need only check the optimality condition (5) and the fixed point condition (6).

First, let \(({\widetilde{\Omega }}',({\mathcal {F}}'_t)_{t \in [0,T]},P',W',\mu ',\Lambda ',X')\) be an alternative probability space satisfying (1-4) of Definition 3.1 and \(P' \circ (\mu ')^{-1} = P \circ \mu ^{-1} = \rho \). The uniqueness in law condition (2) of Definition 3.9 implies that \(P((W,X) \in \cdot \ | \ \mu = \nu )\) is exactly the law of the solution of the SDE (3.4), for \(P \circ \mu ^{-1}\)-a.e. \(\nu \). Applying local optimality of \({\hat{\alpha }}\) for each \(\nu \), we conclude that

Integrate with respect to \(\rho \) on both sides to get \({\mathbb {E}}^P[\Gamma (\mu ^x,\Lambda ,X)] \ge {\mathbb {E}}^{P'}[\Gamma ((\mu ')^x,\Lambda ',X')]\), which verifies condition (5) of Definition 3.1. Finally, we check (6) by applying Step 1 to deterministic \(\mu \) and again using uniqueness of the SDE (3.4) to find that both (3.5) and (3.6) hold for \(\rho \)-a.e. \(\nu \). \(\square \)
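The construction in Step 3 draws \(\mu \) from \(\rho \) independently of \((\xi ,W)\) and then solves the controlled SDE strongly given \(\mu \). A minimal Monte Carlo sketch of that construction follows, under hypothetical choices that are not from this paper: \(d=m=1\), \(\lambda = N(0,1)\), \(b(t,x,\mu ,a)=a\), \(\sigma \equiv 1\), \(\rho \) a discrete law on two candidate flows, each summarized by a constant "mean" \(m_\nu \), and the illustrative feedback \({\hat{\alpha }}(t,w,x,\nu ) = m_\nu - x_t\). The candidate flows are placeholders, not verified strong MFG solutions; the sketch only shows that \(P \circ \mu ^{-1} = \rho \) holds by construction and that, conditionally on \(\mu = \nu \), X is the strong solution for that \(\nu \).

```python
import numpy as np

def step3_sample(rho_weights, flow_means, T=1.0, n_steps=100, n_paths=50000, seed=1):
    """Sample (xi, W, mu) from the product law lambda x Wiener x rho, then solve
    dX_t = alpha_hat(t, X, mu) dt + dW_t strongly by Euler-Maruyama.
    Hypothetical: lambda = N(0,1); rho is discrete on candidate flows indexed
    0..K-1; alpha_hat(t, x, mu) = m_mu - x_t (mean reversion toward m_mu)."""
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    # mu is drawn independently of (xi, W): an index into the candidate flows
    mu_idx = rng.choice(len(rho_weights), size=n_paths, p=rho_weights)
    m = np.asarray(flow_means, dtype=float)[mu_idx]
    x = rng.standard_normal(n_paths)  # xi ~ lambda = N(0, 1)
    for _ in range(n_steps):
        dW = np.sqrt(dt) * rng.standard_normal(n_paths)
        x = x + (m - x) * dt + dW  # the control depends on (X_t, mu) only
    return mu_idx, x

mu_idx, x_T = step3_sample([0.3, 0.7], [1.0, -1.0])
for k, m in enumerate([1.0, -1.0]):
    # empirical weight of flow k, conditional mean of X_T, exact mean m(1 - e^{-T})
    print(k, np.mean(mu_idx == k), np.mean(x_T[mu_idx == k]), m * (1 - np.exp(-1.0)))
```

The empirical frequency of each flow matches \(\rho \), and each conditional mean of \(X_T\) should be close to \(m_\nu (1-e^{-T})\), the exact mean of the Ornstein–Uhlenbeck dynamics for that fixed \(\nu \).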

3.5 Applications of Theorem 3.10

It is admittedly quite difficult to check that there exists a universally admissible, locally optimal control, and we will leave this problem open in all but the simplest cases. Note, however, that conditions (2) and (3) of Definition 3.9 hold automatically when \({\hat{\alpha }}(t,w,x,\nu ) = {\hat{\alpha }}'(t,w,x_0,\nu )\), for some \({\hat{\alpha }}' : [0,T] \times {\mathcal {C}}^m \times {\mathbb {R}}^d \times {\mathcal {P}}^p({\mathcal {X}}) \rightarrow A\).

A simple class of examples. Suppose \(A \subset {\mathbb {R}}^k\) is convex, \(g \equiv 0\), and \(f=f(t,\mu ,a)\) is twice differentiable in a with uniformly negative definite Hessian in a. That is, \(D_a^2f(t,\mu ,a) \le -\delta I\) in the sense of symmetric matrices for all \((t,\mu ,a)\), for some \(\delta > 0\), where I is the \(k \times k\) identity matrix. Suppose as usual that Assumption A holds. Define

It is straightforward to check that Assumption C holds and that \({\hat{\alpha }}\) is a universally admissible and locally optimal control. Of course, this example is simple in that the state process does not influence the optimization.
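In this example the optimization decouples across \((t,\nu )\): since \(g \equiv 0\) and f does not depend on the state, a natural candidate (an assumption here, consistent with the hypotheses above) is the pointwise maximizer of \(a \mapsto f(t,\nu ^x_t,a)\) over A, which is unique by strong concavity. A minimal sketch of computing such a maximizer by projected gradient ascent, for a hypothetical quadratic \(f(a) = -\tfrac{1}{2}a^\top Qa + c^\top a\) with \(Q \ge \delta I\) (Q and c stand in for whatever dependence on \((t,\nu ^x_t)\) the model has) and a box \(A = [\mathrm{lo},\mathrm{hi}]^k\); the function name is illustrative.

```python
import numpy as np

def argmax_concave_quadratic_on_box(Q, c, lo, hi, iters=500):
    """Projected gradient ascent for f(a) = -0.5 a^T Q a + c^T a over [lo, hi]^k.
    Q is symmetric positive definite, so f is strongly concave and the
    maximizer over the convex box is unique."""
    Q = np.asarray(Q, dtype=float)
    c = np.asarray(c, dtype=float)
    L = np.linalg.eigvalsh(Q).max()        # Lipschitz constant of grad f
    a = np.clip(np.zeros_like(c), lo, hi)  # feasible starting point
    for _ in range(iters):
        grad = c - Q @ a                   # gradient of f at a
        a = np.clip(a + grad / L, lo, hi)  # ascent step, then project onto the box
    return a

a_hat = argmax_concave_quadratic_on_box(np.diag([2.0, 4.0]), [1.0, 8.0], -1.0, 1.0)
print(a_hat)  # unconstrained maximizer (0.5, 2.0), clipped to the box
```

Projection onto a box is componentwise clipping; for a general convex A one would replace `np.clip` by the Euclidean projection onto A.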

A possible general strategy. The following approach may be more widely applicable. First, for a fixed \(\nu \in {\mathcal {P}}^p({\mathcal {X}})\), we may define the value function \(V[\nu ](t,x)\) of the corresponding optimal control problem in the usual way, and it should solve a Hamilton–Jacobi–Bellman (HJB) PDE of the form

where the Hamiltonian \(H : [0,T] \times {\mathbb {R}}^d \times {\mathcal {P}}^p({\mathbb {R}}^d) \times {\mathbb {R}}^d \times {\mathbb {R}}^{d \times d} \rightarrow {\mathbb {R}}\) is defined by

Suppose that we can show (as is well known to be possible in very general situations) that for each \(\nu \) the value function \(V[\nu ]\) is the unique (viscosity) solution of this HJB equation. Then, an optimal control can be obtained by finding a function \(s=s(t,x,\mu ,y,z)\) which attains the supremum in \(H(t,x,\mu ,y,z)\) for each \((t,x,\mu ,y,z)\), and setting

for each \((t,x,\nu ) \in [0,T] \times {\mathbb {R}}^d \times {\mathcal {P}}^p({\mathcal {X}})\). For each fixed, deterministic \(\nu \in {\mathcal {P}}^p({\mathcal {X}})\), this gives us the solution to the corresponding optimal control problem. The crux of this approach is to view \(\nu \) as variable and show that \({\hat{\alpha }}\) is universally admissible (it is clearly then locally optimal). For this it suffices to show that the value function \(V[\nu ](t,x)\) is adapted with respect to \(\nu \). More precisely, assuming \(V[\nu ](t,\cdot )\) is continuous for each \((t,\nu )\), we must show that \((t,\nu ) \mapsto V[\nu ](t,x)\) is progressively measurable for each x, using the canonical filtration on \({\mathcal {P}}^p({\mathcal {X}})\) given by \((\sigma (\mu (C) : C \in {\mathcal {F}}^{\mathcal {X}}_t))_{t \in [0,T]}\). Even better would be to show that V is Markovian, in the sense that \(V[\nu ](t,x) = {\widetilde{V}}(t,x,\nu ^x_t)\) for a measurable function \({\widetilde{V}}\). In general, V can easily fail to be adapted in this sense, as in the example of Sect. 3.3 above. But this naturally raises the question of what assumptions can be imposed on the model inputs to produce this adaptedness. In short, we must study the dependence of a family of HJB equations on a path-valued parameter.
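To make the selector s concrete, assume the standard form \(H(t,x,\mu ,y,z) = \sup _{a \in A}[b(t,x,\mu ,a)\cdot y + f(t,x,\mu ,a)] + \tfrac{1}{2}\mathrm{tr}[\sigma \sigma ^\top (t,x,\mu )z]\) and a hypothetical one-dimensional model with \(b = a\), \(f = -a^2/2\), constant \(\sigma \), and \(A=[-K,K]\). Then \(s = \min (\max (y,-K),K)\) in closed form; the sketch below recovers s and H by grid search, as one might when no closed form is available. In this toy model s does not depend on \((t,x,\mu )\), so it sheds no light on the adaptedness of \(V[\nu ]\), which remains the crux.

```python
import numpy as np

def hamiltonian_and_selector(y, z, K=1.0, sigma=1.0, n_grid=2001):
    """Grid-search evaluation of the hypothetical Hamiltonian
        H(y, z) = sup_{|a| <= K} [a * y - a**2 / 2] + 0.5 * sigma**2 * z
    (d = 1, b = a, f = -a^2/2, constant sigma). Returns (H, s), where s is
    the grid point attaining the supremum, i.e. an approximate selector."""
    a = np.linspace(-K, K, n_grid)  # endpoints -K and K are included exactly
    values = a * y - 0.5 * a**2     # b * y + f on the action grid
    i = int(np.argmax(values))
    return float(values[i] + 0.5 * sigma**2 * z), float(a[i])

for y in (0.3, 2.5):
    H, s = hamiltonian_and_selector(y, 0.0)
    print(y, s, min(max(y, -1.0), 1.0), H)  # grid selector vs closed form
```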

4 Mean field games on a canonical space

In this section, we begin to work toward the proofs of the main results announced in Sects. 2.4 and 2.5. This section briefly elaborates on the notion of mean field game solution on the canonical space, in order to state simpler conditions by which we may check that a measure P is a weak MFG solution, in the sense of Definition 2.2. The definitions and notations of this section are again mostly borrowed from [11], to which the reader is referred for more details.

First, we mention some notational conventions. We will routinely use the same letter \(\phi \) to denote the natural extension of a function \(\phi : E \rightarrow F\) to any product space \(E \times E'\), given by \(\phi (x,y) := \phi (x)\) for \((x,y) \in E \times E'\). Similarly, we will use the same symbol \(({\mathcal {F}}_t)_{t \in [0,T]}\) to denote the natural extension of a filtration \(({\mathcal {F}}_t)_{t \in [0,T]}\) on a space E to any product space \(E \times E'\), given by \(({\mathcal {F}}_t \otimes \{\emptyset , E'\})_{t \in [0,T]}\).

We will make use of the following canonical spaces, two of which have been defined already but are recalled for convenience:

From now on, let \(\xi \), B, W, \(\mu \), \(\Lambda \), and X denote the identity maps on \({\mathbb {R}}^d\), \({\mathcal {C}}^{m_0}\), \({\mathcal {C}}^m\), \({\mathcal {P}}^p({\mathcal {X}})\), \({\mathcal {V}}\), and \({\mathcal {C}}^d\), respectively. Note, for example, that our convention permits W to denote both the identity map on \({\mathcal {C}}^m\) and the projection from \(\Omega \) to \({\mathcal {C}}^m\). The canonical filtration \(({\mathcal {F}}^\Lambda _t)_{t \in [0,T]}\) is defined on \({\mathcal {V}}\) by

It is known (e.g. [29, Lemma 3.8]) that there exists an \(({\mathcal {F}}^\Lambda _t)_{t \in [0,T]}\)-predictable process \(\overline{\Lambda } : [0,T] \times {\mathcal {V}}\rightarrow {\mathcal {P}}(A)\) such that \(dt[\overline{\Lambda }(t,q)](da) = q\) for each q, or equivalently \(\overline{\Lambda }(t,q) = q_t\) for a.e. t, for each q. Then, we may think of \((\overline{\Lambda }(t,\cdot ))_{t \in [0,T]}\) as the canonical \({\mathcal {P}}(A)\)-valued process defined on \({\mathcal {V}}\), and it is clear that \({\mathcal {F}}^\Lambda _t = \sigma (\overline{\Lambda }(s,\cdot ) : s \le t)\). With this in mind, we may somewhat abusively write \(\Lambda _t\) in place of \(\overline{\Lambda }(t,\cdot )\), and with this notation \({\mathcal {F}}^\Lambda _t = \sigma (\Lambda _s : s \le t)\).
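A discretized sketch of the relation \(dt[\overline{\Lambda }(t,q)](da) = q\), under hypothetical choices of a uniform time grid and a finite action grid: a relaxed control is stored as an array of masses on (time bin, action), its time marginal must equal the bin length, and dividing each row by the bin length recovers \(q_t\) on that bin. A strict control \(t \mapsto \alpha _t\) embeds as \(dt\,\delta _{\alpha _t}(da)\), as in the definitions of \(\Lambda '\) and \(\Lambda \) in the proof of Theorem 3.10. The discretization hides the measure-theoretic content of the cited lemma, namely the existence of a predictable version of \(t \mapsto q_t\); the function names are illustrative.

```python
import numpy as np

def strict_to_relaxed(action_idx, n_actions, dt):
    """Discrete analogue of dt * delta_{alpha_t}(da): put mass dt on the cell
    (time bin i, action action_idx[i]) and zero elsewhere."""
    action_idx = np.asarray(action_idx)
    q = np.zeros((len(action_idx), n_actions))
    q[np.arange(len(action_idx)), action_idx] = dt
    return q

def disintegrate(q, dt):
    """Recover q_t(da) on each time bin by dividing by the time marginal,
    which must equal dt (Lebesgue measure) on every bin."""
    q = np.asarray(q, dtype=float)
    if not np.allclose(q.sum(axis=1), dt):
        raise ValueError("time marginal of q must be dt on every bin")
    return q / dt

# A genuinely relaxed control: each bin of length 0.1 splits its mass evenly
# between two actions, so q_t = (1/2, 1/2) on every bin.
mixed = np.full((4, 2), 0.05)
print(disintegrate(mixed, 0.1))
```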

The canonical processes B, W, and X generate obvious natural filtrations, denoted \(({\mathcal {F}}^B_t)_{t \in [0,T]}\), \(({\mathcal {F}}^W_t)_{t \in [0,T]}\), and \(({\mathcal {F}}^X_t)_{t \in [0,T]}\), respectively. We will frequently work with filtrations generated by several canonical processes, such as \({\mathcal {F}}^{\xi ,B,W}_t := \sigma (\xi ,B_s,W_s : s \le t)\) defined on \(\Omega _0\), and \({\mathcal {F}}^{\xi ,B,W,\Lambda }_t = {\mathcal {F}}^{\xi ,B,W}_t \otimes {\mathcal {F}}^{\Lambda }_t\) defined on \(\Omega _0 \times {\mathcal {V}}\). Our convention on canonical extensions of filtrations to product spaces permits the use of \(({\mathcal {F}}^{\xi ,B,W}_t)_{t \in [0,T]}\) to refer also to the filtration on \(\Omega _0 \times {\mathcal {V}}\) generated by \((\xi ,B,W)\), and it should be clear from context on which space the filtration is defined. Hence, the filtration \(({\mathcal {F}}^{\mathcal {X}}_t)_{t \in [0,T]}\) defined just before Definition 2.1 could alternatively be denoted \({\mathcal {F}}^{\mathcal {X}}_t = {\mathcal {F}}^{W,\Lambda ,X}_t\), but we stick with the former notation for consistency. Define the canonical filtration \(({\mathcal {F}}^\mu _t)_{t \in [0,T]}\) on \({\mathcal {P}}^p({\mathcal {X}})\) by

There is somewhat of a conflict in notation, between our use of \((\xi ,B,W)\) here as the identity map on \({\mathbb {R}}^d \times {\mathcal {C}}^{m_0} \times {\mathcal {C}}^m\) and our previous use (beginning in Sect. 2.3) of the same letters for random variables with values in \(({\mathbb {R}}^d)^n \times {\mathcal {C}}^{m_0} \times ({\mathcal {C}}^m)^n\), defined on an n-player environment \({\mathcal {E}}_n = (\Omega _n,({\mathcal {F}}^n_t)_{t \in [0,T]},{\mathbb {P}}_n,\xi ,B,W)\). However, we will almost exclusively discuss the random variables \((\xi ,B,W)\) through the lenses of various probability measures, and thus it should be clear from context (i.e. from the nearest notated probability measure) which random variables \((\xi ,B,W)\) we are working with at any given moment. For example, given \(P \in {\mathcal {P}}(\Omega )\), the notation \(P \circ (\xi ,B,W)^{-1}\) refers to a measure on \({\mathbb {R}}^d \times {\mathcal {C}}^{m_0} \times {\mathcal {C}}^m\). On the other hand, \({\mathbb {P}}_n\) is reserved for the measure on \(\Omega _n\) in a typical n-player environment, and so \({\mathbb {P}}_n \circ (\xi ,B,W)^{-1}\) refers to a measure on \(({\mathbb {R}}^d)^n \times {\mathcal {C}}^{m_0} \times ({\mathcal {C}}^m)^n\).

Recall that the initial state distribution \(\lambda \in {\mathcal {P}}^{p'}({\mathbb {R}}^d)\) is fixed throughout. Let \({\mathcal {M}}_\lambda \) denote the set of \(\rho \in {\mathcal {P}}^p(\Omega _0 \times {\mathcal {P}}^p({\mathcal {X}}))\) satisfying

-

(1)

\(\rho \circ \xi ^{-1} = \lambda \),

-

(2)

B and W are independent Wiener processes on \((\Omega _0 \times {\mathcal {P}}^p({\mathcal {X}}),({\mathcal {F}}^{\xi ,B,W,\mu }_t)_{t \in [0,T]},\rho )\).

(Note that the set \({\mathcal {M}}_\lambda \) was denoted \({\mathcal {P}}^p_c[(\Omega _0,{\mathcal {W}}_\lambda ) \leadsto {\mathcal {P}}^p({\mathcal {X}})]\) in [11]; we prefer this shorter notation mainly because we will make no use of it after this section.) For \(\rho \in {\mathcal {M}}_\lambda \), the class \({\mathcal {A}}(\rho )\) of admissible controls is the set of probability measures Q on \(\Omega _0 \times {\mathcal {P}}^p({\mathcal {X}}) \times {\mathcal {V}}\) satisfying:

-