Abstract

Background

Artificial intelligence (AI) and computer vision (CV) have revolutionized image analysis. In surgery, CV applications have focused on surgical phase identification in laparoscopic videos. We sought to apply CV techniques to identify operative phases in an endoscopic procedure, peroral endoscopic myotomy (POEM).

Methods

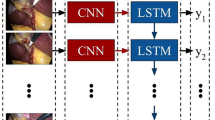

POEM videos were collected from Massachusetts General Hospital and Showa University Koto Toyosu Hospital. Surgeons labeled the videos with the following ground-truth phases: (1) Submucosal injection, (2) Mucosotomy, (3) Submucosal tunnel, (4) Myotomy, and (5) Mucosotomy closure. A deep-learning CV model, a convolutional neural network (CNN) combined with a long short-term memory (LSTM) network, was trained on 30 videos to create POEMNet. POEMNet was then used to identify operative phases in the remaining 20 videos, and its performance was compared with the surgeon-annotated ground truth.
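To illustrate how a CNN-LSTM can assign a phase to every frame, the sketch below implements only the temporal half in plain Python. It assumes each frame has already been reduced to a feature vector by a CNN (here hypothetical random vectors), runs a single-layer LSTM over the frame sequence, and maps each hidden state to one of the five POEM phases with a linear layer. The dimensions, untrained random weights, and helper names such as `make_params` and `lstm_phase_predictions` are illustrative assumptions, not POEMNet's actual architecture or parameters.

```python
import math
import random

PHASES = [
    "Submucosal injection",
    "Mucosotomy",
    "Submucosal tunnel",
    "Myotomy",
    "Mucosotomy closure",
]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(w, v):
    """Multiply matrix w (a list of rows) by vector v."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def make_params(feat_dim, hidden, n_classes, seed=0):
    """Hypothetical untrained weights; a real model would learn these."""
    rng = random.Random(seed)
    params = {}
    for gate in ("i", "f", "g", "o"):
        params["W" + gate] = rand_matrix(hidden, feat_dim, rng)  # input -> gate
        params["U" + gate] = rand_matrix(hidden, hidden, rng)    # hidden -> gate
        params["b" + gate] = [0.0] * hidden
    params["W_out"] = rand_matrix(n_classes, hidden, rng)        # hidden -> phase logits
    params["b_out"] = [0.0] * n_classes
    return params


def lstm_phase_predictions(frame_features, params, hidden):
    """Run a one-layer LSTM over per-frame CNN features and return one
    phase index per frame (argmax of the linear output layer)."""
    h = [0.0] * hidden
    c = [0.0] * hidden
    preds = []
    for x in frame_features:
        pre = {}
        for gate in ("i", "f", "g", "o"):
            wx = matvec(params["W" + gate], x)
            uh = matvec(params["U" + gate], h)
            pre[gate] = [a + b + bias for a, b, bias in zip(wx, uh, params["b" + gate])]
        i = [sigmoid(z) for z in pre["i"]]    # input gate
        f = [sigmoid(z) for z in pre["f"]]    # forget gate
        g = [math.tanh(z) for z in pre["g"]]  # candidate cell state
        o = [sigmoid(z) for z in pre["o"]]    # output gate
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        logits = [z + b for z, b in zip(matvec(params["W_out"], h), params["b_out"])]
        preds.append(max(range(len(logits)), key=logits.__getitem__))
    return preds


# Ten hypothetical frames with 8-dimensional CNN features (not real video data).
rng = random.Random(1)
FEAT_DIM, HIDDEN = 8, 6
features = [[rng.gauss(0, 1) for _ in range(FEAT_DIM)] for _ in range(10)]
params = make_params(FEAT_DIM, HIDDEN, len(PHASES))
preds = lstm_phase_predictions(features, params, HIDDEN)
print([PHASES[p] for p in preds])
```

Because the LSTM carries its hidden and cell state from frame to frame, each prediction can depend on earlier frames rather than on a single image in isolation, which is the usual motivation for pairing a per-frame CNN with a recurrent layer in phase recognition.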

Results

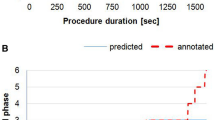

POEMNet’s overall phase identification accuracy was 87.6% (95% CI 87.4–87.9%). On a per-phase basis, the model performed well, with mean unweighted and prevalence-weighted F1 scores of 0.766 and 0.875, respectively. Performance was better for longer phases: accuracy was 70.6% for phases lasting under 5 min and 88.3% for longer phases.
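The summary metrics above can be made concrete with a short sketch, assuming per-frame ground-truth and predicted phase labels. Overall accuracy is the fraction of frames whose prediction matches the ground truth; per-phase F1 is 2PR/(P + R), treating each phase in turn as the positive class; the unweighted mean averages F1 equally across phases, while the prevalence-weighted mean weights each phase's F1 by its share of ground-truth frames. The toy labels are invented for illustration and are not study data, and the confidence-interval and duration-stratification procedures are not described in this section, so they are not reproduced here.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of frames whose predicted phase matches the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_phase_f1(y_true, y_pred, phases):
    """F1 = 2PR / (P + R) for each phase, treated as the positive class.
    Convention assumed here: F1 is 0.0 when precision and recall are both 0."""
    scores = {}
    for ph in phases:
        tp = sum(t == ph and p == ph for t, p in zip(y_true, y_pred))
        fp = sum(t != ph and p == ph for t, p in zip(y_true, y_pred))
        fn = sum(t == ph and p != ph for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores[ph] = (2 * precision * recall / (precision + recall)
                      if precision + recall else 0.0)
    return scores


def mean_f1(y_true, y_pred, phases):
    """Return (unweighted mean F1, prevalence-weighted mean F1)."""
    f1 = per_phase_f1(y_true, y_pred, phases)
    counts = Counter(y_true)  # ground-truth frames per phase
    unweighted = sum(f1.values()) / len(phases)
    weighted = sum(f1[ph] * counts[ph] for ph in phases) / len(y_true)
    return unweighted, weighted


# Toy per-frame labels using the Methods' phase numbers; not study data.
y_true = [1, 1, 1, 1, 2, 2, 3, 3, 3, 3]
y_pred = [1, 1, 1, 2, 2, 2, 3, 3, 3, 1]
print(accuracy(y_true, y_pred))            # 0.8
print(mean_f1(y_true, y_pred, [1, 2, 3]))  # approximately (0.802, 0.803)
```

A prevalence-weighted mean above the unweighted one, as in the reported 0.875 versus 0.766, indicates that the more frequent phases tend to have the higher F1 scores.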

Discussion

A deep-learning-based approach to CV, previously successful in laparoscopic video phase identification, translates well to endoscopic procedures. With continued refinements, AI could contribute to intraoperative decision-support systems and postoperative risk prediction.

Acknowledgements

This work was supported by a 2017 research award from the Natural Orifice Surgery Consortium for Assessment and Research (NOSCAR). Daniel Hashimoto was partly funded for this work by NIH Grant T32DK007754-16A1 and the MGH Edward D. Churchill Research Fellowship. The authors thank Caitlin Stafford, CCRP, for her assistance and support in research management.

Ethics declarations

Disclosures

Drs. Ban, Hashimoto, Meireles, Rosman, Rus, and Ward receive research support from Olympus Corporation. Dr. Hashimoto is a consultant for Verily Life Sciences, Johnson & Johnson Institute, Worrell Inc, Mosaic Research Management, and Gerson Lehrman Group. Dr. Rattner is a consultant for the Olympus Corporation. Dr. Meireles is a consultant for the Olympus Corporation and Medtronic. Dr. Inoue is a consultant for the Olympus and Top Corporations and received research support from the Olympus Corporation and Takeda Pharmaceutical Company. Drs. Rosman and Rus receive research support from Toyota Research Institute (TRI). Dr. Lillemoe has no conflicts of interest or financial ties to disclose.

About this article

Cite this article

Ward, T.M., Hashimoto, D.A., Ban, Y. et al. Automated operative phase identification in peroral endoscopic myotomy. Surg Endosc 35, 4008–4015 (2021). https://doi.org/10.1007/s00464-020-07833-9

DOI: https://doi.org/10.1007/s00464-020-07833-9