Abstract

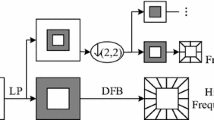

Methods based on multi-scale transforms (MST) have recently become popular for multi-focus image fusion because of their superior performance: the fused images retain more edge and texture detail. However, most MST-based methods operate at the pixel level, which requires a large amount of data processing. Moreover, the fusion strategies they use cannot completely preserve the clear pixels within the focused areas of the source images in the fused image. To solve these problems, this paper proposes a novel image fusion method based on focus-region-level partition and a pulse-coupled neural network (PCNN) in the nonsubsampled contourlet transform (NSCT) domain. A clarity evaluation function is constructed to determine which regions of each source image are in focus. Removing the focused regions from the source images yields the non-focus regions, which contain the edge pixels of the focused regions. Next, the non-focus regions are decomposed into a series of subimages using NSCT, and the subimages are fused using different strategies to obtain the fused non-focus regions. Finally, the fused result is obtained by combining the focused regions with the fused non-focus regions. Experimental results show that the proposed fusion scheme retains more clear pixels from the two source images and preserves more detail in the non-focus regions, and that it is superior to conventional methods in both visual inspection and objective evaluations.
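The region-level partition described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the abstract does not specify the clarity evaluation function, so the block-wise Laplacian energy used here is an assumed stand-in, and the names `block_clarity`, `partition`, and `fuse` and the parameters `block` and `ratio` are hypothetical. The NSCT decomposition and PCNN-based fusion of the non-focus regions are replaced by a plain average, purely as a placeholder.

```python
import numpy as np

def laplacian_energy(img):
    """Per-pixel squared response of a 4-neighbour Laplacian (edge-padded)."""
    p = np.pad(img.astype(float), 1, mode="edge")
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    return lap ** 2

def block_clarity(img, block=8):
    """Assumed clarity measure: Laplacian energy summed over block x block tiles."""
    e = laplacian_energy(img)
    h, w = e.shape
    h, w = h - h % block, w - w % block  # drop ragged borders for simplicity
    tiles = e[:h, :w].reshape(h // block, block, w // block, block)
    return tiles.sum(axis=(1, 3))

def partition(img_a, img_b, block=8, ratio=2.0):
    """Label each tile: 1 = focused in A, 2 = focused in B, 0 = undecided."""
    ca = block_clarity(img_a, block)
    cb = block_clarity(img_b, block)
    labels = np.zeros(ca.shape, dtype=np.uint8)
    labels[ca > ratio * cb] = 1
    labels[cb > ratio * ca] = 2
    return labels

def fuse(img_a, img_b, block=8, ratio=2.0):
    """Copy focused tiles from their source; average the undecided tiles."""
    labels = partition(img_a, img_b, block, ratio)
    # Expand the tile labels back to a per-pixel mask.
    mask = np.kron(labels, np.ones((block, block), dtype=np.uint8))
    h, w = mask.shape
    a = img_a[:h, :w].astype(float)
    b = img_b[:h, :w].astype(float)
    fused = 0.5 * (a + b)  # placeholder for the NSCT + PCNN fusion step
    fused[mask == 1] = a[mask == 1]
    fused[mask == 2] = b[mask == 2]
    return fused, labels
```

The design point this sketch tries to capture is that tiles judged clearly in focus are copied unchanged, so only the undecided tiles (label 0) would need the more expensive transform-domain fusion.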

Acknowledgements

The authors thank the editors and the anonymous reviewers for their careful work and valuable suggestions for this study. This study was supported by the National Natural Science Foundation of China (No. 61463052 and No. 61365001).

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Human and animal rights

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Communicated by V. Loia.

About this article

Cite this article

He, K., Zhou, D., Zhang, X. et al. Multi-focus image fusion combining focus-region-level partition and pulse-coupled neural network. Soft Comput 23, 4685–4699 (2019). https://doi.org/10.1007/s00500-018-3118-9

DOI: https://doi.org/10.1007/s00500-018-3118-9