Abstract

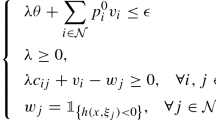

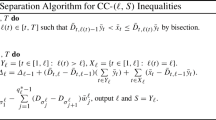

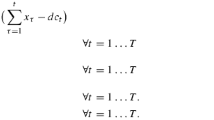

In this paper, we study data-driven chance constrained stochastic programs, or more specifically, stochastic programs with distributionally robust chance constraints (DCCs) in a data-driven setting to provide robust solutions for the classical chance constrained stochastic program facing ambiguous probability distributions of random parameters. We consider a family of density-based confidence sets based on a general \(\phi \)-divergence measure, and formulate DCC from the perspective of robust feasibility by allowing the ambiguous distribution to run adversely within its confidence set. We derive an equivalent reformulation for DCC and show that it is equivalent to a classical chance constraint with a perturbed risk level. We also show how to evaluate the perturbed risk level by using a bisection line search algorithm for general \(\phi \)-divergence measures. In several special cases, our results can be strengthened such that we can derive closed-form expressions for the perturbed risk levels. In addition, we show that the conservatism of DCC vanishes as the size of historical data goes to infinity. Furthermore, we analyze the relationship between the conservatism of DCC and the size of historical data, which can help indicate the value of data. Finally, we conduct extensive computational experiments to test the performance of the proposed DCC model and compare various \(\phi \)-divergence measures based on a capacitated lot-sizing problem with a quality-of-service requirement.

Acknowledgments

The authors thank the associate editor and the referees for their suggestions, which significantly improved the quality of this paper.

Additional information

An early version of this paper is available online at www.optimization-online.org/DB_HTML/2012/07/3525.html. This paper was presented at the Simulation Optimization Workshop, Viña del Mar, Chile, March 21–23, 2013, and at the 13th International Conference on Stochastic Programming, Bergamo, Italy, July 8–12, 2013.

Appendices

Appendix 1: Proof of Lemma 3

Proof

We prove each property as follows:

(i) By definition, \(\phi ^*\) is a supremum of linear functions and hence convex.

(ii) For any \(x_1, x_2 \in {\mathbb {R}}\) such that \(x_1 < x_2\), we have

$$\begin{aligned} x_1t - \phi (t) \ \le \ x_2t - \phi (t), \ \ \ \forall t \ge 0. \end{aligned}$$

Also, since \(\phi (t) = +\infty \) for \(t < 0\), we have

$$\begin{aligned} \phi ^*(x) = \ \sup _{t \in {\mathbb {R}}} \left\{ xt-\phi (t)\right\} = \ \sup _{t \ge 0} \left\{ xt-\phi (t)\right\} , \end{aligned}$$

and so

$$\begin{aligned} \phi ^*(x_1) = \ \sup _{t \ge 0} \left\{ x_1t-\phi (t)\right\} \ \le \ \sup _{t \ge 0} \left\{ x_2t-\phi (t)\right\} = \ \phi ^*(x_2). \end{aligned}$$

(iii) Since \(\phi (1) = 0\), choosing \(t = 1\) in the supremum gives

$$\begin{aligned} \phi ^*(x) = \ \sup _{t \ge 0} \left\{ xt-\phi (t)\right\} \ \ge \ x \cdot 1 - \phi (1) = \ x. \end{aligned}$$

(iv) We prove by contradiction. Suppose that \(\phi ^*(x) = m\) on the interval [a, b] and \(\phi ^*(y) = m' \ne m\) for some \(y < a\). First, we observe that \(m' < m\) because \(\phi ^*\) is nondecreasing. Second, since \(y < a < b\), there exists some \(\lambda \in (0, 1)\) such that \(a = \lambda y + (1-\lambda )b\). By the convexity of \(\phi ^*\), it follows that

$$\begin{aligned} \phi ^*(a) \ \le \ \lambda \phi ^*(y) + (1-\lambda ) \phi ^*(b) = \ \lambda m' + (1-\lambda ) m < m, \end{aligned}$$

which contradicts \(\phi ^*(a) = m\). \(\square \)
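The properties in this proof can be spot-checked numerically. The following Python sketch takes \(\phi (t) = (t-1)^2\) (the function treated in Appendix 3) as an illustrative choice, approximates \(\phi ^*\) by a grid search over \(t \in [0, 10]\), and checks convexity, monotonicity, and \(\phi ^*(x) \ge x\) at a few sample points. The grid bounds, the sample points, and all function names are assumptions made for this illustration only.

```python
def phi(t):
    """Illustrative divergence function phi(t) = (t - 1)^2 for t >= 0."""
    return (t - 1.0) ** 2


def conjugate(x, t_max=10.0, steps=10_000):
    """Approximate phi*(x) = sup_{t >= 0} {x t - phi(t)} on a uniform grid.

    The grid [0, t_max] is an assumption: it is wide enough for |x| <= 4,
    where the maximizer t = max(0, 1 + x / 2) is at most 3.
    """
    return max(x * (t_max * i / steps) - phi(t_max * i / steps)
               for i in range(steps + 1))


xs = [-4.0, -3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0, 4.0]  # equally spaced
values = [conjugate(x) for x in xs]

# (ii) phi* is nondecreasing.
is_nondecreasing = all(a <= b + 1e-12 for a, b in zip(values, values[1:]))

# (iii) phi*(x) >= x, because t = 1 is a grid point and phi(1) = 0.
dominates_identity = all(v >= x - 1e-12 for x, v in zip(xs, values))

# (i) midpoint convexity on consecutive triples of equally spaced points.
is_midpoint_convex = all(
    values[k] <= 0.5 * (values[k - 1] + values[k + 1]) + 1e-9
    for k in range(1, len(xs) - 1)
)

print(is_nondecreasing, dominates_identity, is_midpoint_convex)
```

For this particular \(\phi \), the approximated conjugate is constant on the sampled points up to \(x = -2\), which is consistent with property (iv): once \(\phi ^*\) is flat on an interval, it is flat everywhere to the left of it.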

Appendix 2: Proof of Remark 2

Proof

If \(\ell _{\phi } = +\infty \), then \(\ell _{\phi } \ge \overline{m}(\phi ^*)\) and the claim holds. Hence, without loss of generality, we can assume that \(\ell _{\phi } \,{<}\, +\infty \). In the remainder of the proof, we show that \(\ell _{\phi } + \delta \ge \overline{m}(\phi ^*)\) for any \(\delta \,{>}\, 0\), and the claim follows. Because \(\ell _{\phi } = \lim _{x \rightarrow +\infty } \phi (x)/x\), there exists a \(K \,{>}\, 0\) such that \(|\ell _{\phi } - \phi (x)/x| \le \delta /2\), and accordingly \(\ell _{\phi }x - \phi (x) \ge -\delta x /2\), for all \(x \ge K\). It follows that, for any \(M \in {\mathbb {R}}\), there exists an \(N := \max \{K, 2M/\delta \}\) such that

$$\begin{aligned} (\ell _{\phi } + \delta ) x - \phi (x) \ \ge \ \frac{\delta x}{2} \ \ge \ M, \ \ \ \forall x \ge N. \end{aligned}$$

It follows that \(\lim _{x \rightarrow +\infty }\{ (\ell _{\phi } + \delta ) x - \phi (x) \} = +\infty \). Hence,

$$\begin{aligned} \phi ^*(\ell _{\phi } + \delta ) = \ \sup _{x \ge 0} \left\{ (\ell _{\phi } + \delta ) x - \phi (x) \right\} = \ +\infty . \end{aligned}$$

Therefore, \(\ell _{\phi } + \delta \ge \overline{m}(\phi ^*)\) for any \(\delta > 0\), and the proof is completed. \(\square \)

Appendix 3: Proof of Proposition 2

Proof

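First, since \(\phi (x) = (x-1)^2\), we have

$$\begin{aligned} \phi ^*(x) = \left\{ \begin{array}{ll} -1, &{}\quad \hbox {if} \, x < -2, \\ \frac{1}{4}x^2 + x, &{}\quad \hbox {if} \, x \ge -2. \end{array}\right. \end{aligned}$$

Hence, \(\underline{m}(\phi ^*) = -2\) and \(\overline{m}(\phi ^*) = +\infty \). Second, we solve the problem

$$\begin{aligned} 1 - \alpha ' = \ \inf _{\begin{array}{c} z > 0,\\ z_0 \ge -2 \end{array}} \ \frac{\phi ^*(z_0) - z_0 + (1-\alpha )z + d}{\phi ^*(z_0) - \phi ^*(z_0-z)} \end{aligned}$$

to optimality, where we have made a change of variables by replacing \(z_0\) with \(z_0-z\). We let \(g(z_0, z)\) represent the objective function and discuss the following cases: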

1. If \(z_0 - z \le -2\), then \(\phi ^*(z_0-z) = -1\) and \(\phi ^*(z_0) = \frac{1}{4}z_0^2 + z_0\). It follows that

$$\begin{aligned} g(z_0, z) = \ \frac{\left( \frac{1}{4}z_0^2 + z_0 \right) -z_0+(1-\alpha )z+d}{\left( \frac{1}{4}z_0^2 + z_0 \right) - (-1)} = \ \frac{\frac{1}{4}z_0^2 + (1-\alpha )z + d}{\left( \frac{1}{2}z_0 + 1 \right) ^2}, \end{aligned}$$

and so

$$\begin{aligned} \frac{\partial g(z_0, z)}{\partial z_0} = \frac{\frac{1}{2}z_0-(1-\alpha )z-d}{\left( \frac{1}{2}z_0 + 1 \right) ^3}. \end{aligned}$$

Since \(z_0 \le z -2,\,z_0 \ge -2\) and \(\alpha < 1/2\) by assumption, we have \((1/2)z_0-(1-\alpha )z-d\le (\alpha -1/2)z-d-1 < 0\) and \(\frac{1}{2}z_0 + 1 \ge 0\). Hence, \(\partial g(z_0, z)/\partial z_0 < 0\) for any fixed z and it is optimal to choose \(z^*_0 = z-2\). It follows that

$$\begin{aligned} \inf _{\begin{array}{c} z > 0,\\ z_0 \ge -2 \end{array}} g(z_0, z) = \ \inf _{z > 0} g(z-2, z) = \inf _{z>0} \ 4(d+1)\left( \frac{1}{z}\right) ^2 - 4\alpha \left( \frac{1}{z}\right) + 1. \end{aligned}$$

Therefore, it is optimal to choose \(z^* = 2(d+1)/\alpha \) and

$$\begin{aligned} \inf _{\begin{array}{c} z > 0,\\ z_0 \ge -2 \end{array}} g(z_0, z) = \ 1-\frac{\alpha ^2}{d+1}. \end{aligned}$$

2. If \(z_0 -z \ge -2\), then \(\phi ^*(z_0-z) = \frac{1}{4}(z_0-z)^2 + (z_0-z)\) and \(\phi ^*(z_0) = \frac{1}{4}z_0^2 + z_0\). It follows that

$$\begin{aligned} g(z_0, z) = \ \frac{\left( \frac{1}{4}z_0^2 + z_0 \right) -z_0+(1-\alpha )z+d}{\left( \frac{1}{4}z_0^2 + z_0 \right) - \left( \frac{1}{4}(z_0-z)^2 + (z_0-z) \right) } = \ \frac{\frac{1}{4}z_0^2 + (1-\alpha )z + d}{\frac{1}{2}zz_0 + z - \frac{1}{4}z^2}, \end{aligned}$$

and so

$$\begin{aligned} \frac{\partial g(z_0, z)}{\partial z_0} = \frac{z\left( z_0^2 + (4-z)z_0 - 4(1-\alpha )z-4d\right) }{8\left( \frac{1}{2}zz_0 + z - \frac{1}{4}z^2\right) ^2}. \end{aligned}$$

For fixed z, we set \(\partial g(z_0, z)/\partial z_0 = 0\) and obtain

$$\begin{aligned} z_0 = \frac{(z-4)\pm \sqrt{z^2+8(1-2\alpha )z+16(d+1)}}{2}. \end{aligned}$$

Since \(z_0 \ge z-2\), we rule out the negative root and so

$$\begin{aligned} z^*_0 = \frac{(z-4)+\sqrt{z^2+8(1-2\alpha )z+16(d+1)}}{2} \end{aligned}$$

is a stationary point of \(g(z_0, z)\) with z fixed and the corresponding objective value is

$$\begin{aligned} g(z_0^*, z) = \ \frac{1}{2}\sqrt{16(d+1)\left( \frac{1}{z}\right) ^2+8(1-2\alpha )\left( \frac{1}{z}\right) +1} \ + \frac{1}{2}\left( 1-4\left( \frac{1}{z}\right) \right) . \end{aligned}$$

Now we show that \(z_0^*\) is an optimal solution for \(\inf _{z_0\ge z-2}g(z_0, z)\) with z fixed. We compare the value of \(g(z_0^*, z)\) with \(g(+\infty , z)\) and \(g(z-2, z)\) because \(+\infty \) and \(z-2\) are the end points of the feasible region of \(z_0\). We observe that \(g(+\infty , z) = +\infty \). Also, we have

$$\begin{aligned} g(z-2, z) = \ \frac{\frac{1}{4}(z-2)^2+(1-\alpha )z+d}{\frac{1}{2}z(z-2)+z-\frac{1}{4}z^2} = \ 4(d+1)\left( \frac{1}{z}\right) ^2 - 4\alpha \left( \frac{1}{z}\right) + 1, \end{aligned}$$

and \(g(z-2, z) \ge g(z_0^*, z)\). To see that, we compare the values of \(g(z-2, z)\) and \(g(z_0^*, z)\) by the following inequalities, where the inequalities below imply those above.

$$\begin{aligned}&g(z-2, z) \ge g(z_0^*, z) \\&\quad \Leftarrow 8(d+1)\left( \frac{1}{z}\right) ^2 - 8\alpha \left( \frac{1}{z}\right) + 2 \\&\quad \ge \sqrt{16(d+1)\left( \frac{1}{z}\right) ^2+8(1-2\alpha )\left( \frac{1}{z}\right) +1} + \left( 1-4\left( \frac{1}{z}\right) \right) \\&\quad \Leftarrow \left[ 8(d+1)\left( \frac{1}{z}\right) ^2 + 4(1-2\alpha )\left( \frac{1}{z}\right) + 1\right] ^2 \\&\quad \ge 16(d+1)\left( \frac{1}{z}\right) ^2+8(1-2\alpha )\left( \frac{1}{z}\right) +1\\&\quad \Leftarrow 16\left( \frac{1}{z}\right) ^2\left[ 2(d+1)\left( \frac{1}{z}\right) + (1-2\alpha )\right] ^2 \ge 0. \end{aligned}$$

Hence, \(\inf _{z_0\ge z-2}g(z_0, z) = g(z_0^*, z)\) with z fixed. Therefore, we have

$$\begin{aligned} \inf _{z > 0, z_0 \ge z-2} g(z_0, z) = \ \inf _{z > 0} \ \frac{1}{2}\sqrt{16(d+1)z^2+8(1-2\alpha )z+1} \ + \frac{1}{2}(1-4z), \end{aligned}$$

where we have replaced \(1/z\) by \(z\). Similarly, we set

$$\begin{aligned} \frac{\partial g(z_0^*, z)}{\partial z} = \ \frac{8(d+1)z+2(1-2\alpha )}{\sqrt{16(d+1)z^2+8(1-2\alpha )z+1}}-2 = \ 0, \end{aligned}$$

and obtain

$$\begin{aligned} z^* = \frac{\sqrt{d^2 + 4d(\alpha -\alpha ^2)}-(1-2\alpha )d}{4d(d+1)}. \end{aligned}$$

Therefore, we have

$$\begin{aligned} g(z_0^*, z^*) = \ 1-\alpha +\frac{\sqrt{d^2 + 4d(\alpha -\alpha ^2)}-(1-2\alpha )d}{2(d+1)}. \end{aligned}$$

Again, we compare the value of \(g(z_0^*, z^*)\) with \(g(z_0^*, +\infty )\) and \(g(z_0^*, 0)\) since \(+\infty \) and 0 are the end points of the feasible region of z. We observe that \(g(z_0^*, +\infty ) = +\infty \) and \(g(z_0^*, 0) = 1 \ge g(z_0^*, z^*)\), and hence

$$\begin{aligned} \inf _{z > 0, z_0 \ge z-2} g(z_0, z) = \ 1-\alpha +\frac{\sqrt{d^2 + 4d(\alpha -\alpha ^2)}-(1-2\alpha )d}{2(d+1)}. \end{aligned}$$

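Finally, we compare the optimal value of \(g(z_0, z)\) in the two cases. We claim that the optimal value obtained in the latter case is smaller (and hence globally optimal). To see that, we compare the two values by the following inequalities, where the inequalities below imply those above.

$$\begin{aligned}&1-\frac{\alpha ^2}{d+1} \ \ge \ 1-\alpha +\frac{\sqrt{d^2 + 4d(\alpha -\alpha ^2)}-(1-2\alpha )d}{2(d+1)} \\&\quad \Leftarrow 2(d+1)\alpha - 2\alpha ^2 + (1-2\alpha )d \ \ge \ \sqrt{d^2 + 4d(\alpha -\alpha ^2)} \\&\quad \Leftarrow d + 2(\alpha -\alpha ^2) \ \ge \ \sqrt{d^2 + 4d(\alpha -\alpha ^2)} \\&\quad \Leftarrow \left[ d + 2(\alpha -\alpha ^2)\right] ^2 \ \ge \ d^2 + 4d(\alpha -\alpha ^2) \\&\quad \Leftarrow 4(\alpha -\alpha ^2)^2 \ \ge \ 0. \end{aligned}$$

Therefore, the perturbed risk level is

$$\begin{aligned} \alpha ' = \ \alpha - \frac{\sqrt{d^2 + 4d(\alpha -\alpha ^2)}-(1-2\alpha )d}{2d+2}. \end{aligned}$$

\(\square \)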
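As a numerical sanity check of this proof, the following Python sketch evaluates the closed form \(\alpha ' = \alpha - \big (\sqrt{d^2 + 4d(\alpha -\alpha ^2)}-(1-2\alpha )d\big )/(2d+2)\), which follows from the optimal value of case 2 through \(1 - \alpha ' = g(z_0^*, z^*)\), and compares it with a brute-force grid minimization of the case 2 objective written in the variable \(u = 1/z\). The values \(\alpha = 0.10\) and \(d = 0.05\), the grid range, and all function names are illustrative assumptions.

```python
import math


def chi2_perturbed_risk(alpha, d):
    """Closed-form perturbed risk level for phi(x) = (x - 1)^2."""
    root = math.sqrt(d * d + 4.0 * d * (alpha - alpha * alpha))
    return alpha - (root - (1.0 - 2.0 * alpha) * d) / (2.0 * d + 2.0)


def case2_objective(u, alpha, d):
    """Case 2 objective after optimizing z0, written in u = 1 / z."""
    radicand = 16.0 * (d + 1.0) * u * u + 8.0 * (1.0 - 2.0 * alpha) * u + 1.0
    return 0.5 * math.sqrt(radicand) + 0.5 * (1.0 - 4.0 * u)


def grid_minimum(alpha, d, u_max=5.0, steps=100_000):
    """Brute-force minimum over u in (0, u_max]; u_max is an assumed bound."""
    return min(case2_objective(u_max * i / steps, alpha, d)
               for i in range(1, steps + 1))


alpha, d = 0.10, 0.05                      # illustrative values only
closed_form = chi2_perturbed_risk(alpha, d)
brute_force = 1.0 - grid_minimum(alpha, d)
case1_value = 1.0 - alpha ** 2 / (d + 1.0)  # optimal value of case 1

print(closed_form, brute_force)
```

With these inputs the two numbers agree to several decimal places, and the case 1 value is the larger of the two optimal values, as the final comparison in the proof claims. The grid bound `u_max` has to be enlarged if the stationary point \(z^*\) of case 2 falls outside it.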

Appendix 4: Proof of Proposition 3

Proof

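First, since \(\phi (x) = |x-1|\), we have

$$\begin{aligned} \phi ^*(x) = \left\{ \begin{array}{ll} -1, &{}\quad \hbox {if} \, x < -1, \\ x, &{}\quad \hbox {if} \, -1 \le x \le 1, \\ +\infty , &{}\quad \hbox {if} \, x > 1. \end{array}\right. \end{aligned}$$

Hence, \(\underline{m}(\phi ^*) = -1\) and \(\overline{m}(\phi ^*) = 1\). Second, we solve the problem

$$\begin{aligned} 1 - \alpha ' = \ \inf _{\begin{array}{c} z > 0,\\ -1 \le z_0 + z \le 1 \end{array}} \ \frac{\phi ^*(z_0+z) - z_0 - \alpha z + d}{\phi ^*(z_0+z) - \phi ^*(z_0)} \end{aligned}$$

to optimality. We let \(g(z_0, z)\) represent the objective function and discuss the following cases: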

1. If \(z_0 \le -1\), then \(\phi ^*(z_0) = -1\) and \(\phi ^*(z_0+z) = z_0+z\). It follows that

$$\begin{aligned} g(z_0, z) = \frac{(1-\alpha )z+d}{z_0+z+1}. \end{aligned}$$

Note here that for any given \(z,\,g(z_0, z)\) is a nonincreasing function of \(z_0\), due to the fact that \(z_0+z+1 \ge 0\). Meanwhile, \(z_0+z \le 1\). Hence, it is optimal to choose \(z_0^* = \min \{1-z, -1\}\) and so

$$\begin{aligned} g(z_0^*, z) = \left\{ \begin{array}{ll} \frac{(1-\alpha )z+d}{2}, &{}\quad \hbox {if} \, z \ge 2, \\ \frac{(1-\alpha )z+d}{z}, &{}\quad \hbox {if} \, z \le 2. \end{array}\right. \end{aligned}$$

Therefore, \(g(z_0^*, z)\) is nonincreasing in z on the interval (0, 2] and nondecreasing in z on the interval \([2, +\infty )\), and so the minimum is attained at \(z^* = 2\) with \(g(z_0^*, z^*) = 1-\alpha +\frac{d}{2}\).

2. If \(-1 \le z_0 \le 1\), then \(\phi ^*(z_0) = z_0\). Also, we have \(z \le 2\) and \(\phi ^*(z_0+z) = z_0+z\) because \(-1 \le z_0 + z\le 1\). Hence,

$$\begin{aligned} g(z_0, z) = \frac{(1-\alpha )z+d}{z} = 1 - \alpha + \frac{d}{z} \ge 1-\alpha +\frac{d}{2}, \end{aligned}$$

and the lower bound is attained at \(z^* = 2\). Therefore, \(g(z_0^*, z^*) = 1-\alpha +\frac{d}{2}\).

To sum up, we have \(1 - \alpha ' = g(z_0^*, z^*) = 1-\alpha +\frac{d}{2}\), or equivalently \(\alpha ' = \alpha -\frac{d}{2}\). \(\square \)
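The closed form \(\alpha ' = \alpha - d/2\) is easy to confirm numerically. The Python sketch below minimizes the piecewise objective \(g(z_0^*, z)\) from case 1 over a grid of z values and compares the result with the closed form. The inputs \(\alpha = 0.10\) and \(d = 0.04\), the grid, and the function names are illustrative assumptions; the sketch also assumes \(d < 2\alpha \) so that the resulting risk level stays positive.

```python
def variation_perturbed_risk(alpha, d):
    """Closed form alpha' = alpha - d / 2 for phi(x) = |x - 1|."""
    return alpha - d / 2.0


def objective_after_z0(z, alpha, d):
    """g(z0*, z) from case 1: the denominator is z for z <= 2 and 2 for z >= 2."""
    return ((1.0 - alpha) * z + d) / min(z, 2.0)


alpha, d = 0.10, 0.04                         # illustrative values only
grid = [i / 100.0 for i in range(1, 1001)]    # z in (0, 10], assumed range
best_z = min(grid, key=lambda z: objective_after_z0(z, alpha, d))

closed_form = variation_perturbed_risk(alpha, d)
brute_force = 1.0 - objective_after_z0(best_z, alpha, d)

print(best_z, closed_form, brute_force)
```

The grid minimizer lands on \(z = 2\), the kink at which the objective switches from decreasing to increasing, which matches the minimizer \(z^* = 2\) identified in both cases of the proof.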

Appendix 5: Proof of Proposition 4

Proof

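We divide the proof into two parts. In the first part, we show that the perturbed risk level

$$\begin{aligned} \alpha ' = \ 1 - \inf _{x \in (0, 1)} \left\{ \frac{e^{-d}x^{1-\alpha } - 1}{x-1} \right\} . \end{aligned} \tag{15}$$

In the second part, we show how to compute \(\alpha '\) by using bisection line search.

(Risk level) First, since \(\phi (x) = x\log x -x + 1\), we have \(\phi ^*(x) = e^x-1\). Hence, \(\underline{m}(\phi ^*) = -\infty \) and \(\overline{m}(\phi ^*) = +\infty \). Second, we solve the problem

$$\begin{aligned} 1 - \alpha ' = \ \inf _{z > 0, \, z_0 \in {\mathbb {R}}} \ \frac{e^{z_0+z} - z_0 - \alpha z + d - 1}{e^{z_0+z} - e^{z_0}} \end{aligned}$$

to optimality, where we let \(g(z_0, z)\) represent the objective function. Since

$$\begin{aligned} \frac{\partial g(z_0, z)}{\partial z_0} = \ \frac{z_0 + \alpha z - d}{e^{z_0+z} - e^{z_0}}, \end{aligned}$$

we have \(z_0^* = d-\alpha z\) by setting \(\partial g/\partial z_0 = 0\), and so

$$\begin{aligned} 1 - \alpha ' = \ \inf _{z> 0} \ g(d-\alpha z, z) = \ \inf _{z > 0} \ \frac{e^{z} - e^{-d}e^{\alpha z}}{e^{z} - 1} = \ \inf _{x \in (0, 1)} \ \frac{e^{-d}x^{1-\alpha } - 1}{x-1}, \end{aligned} \tag{16}$$

where Eq. (16) follows by replacing \((1/e^z)\) with x. Therefore, we have proved Eq. (15).

(Computation) We compute \(\alpha '\) by searching for the optimal solution of the minimization problem

$$\begin{aligned} \inf _{x \in (0, 1)} \ h(x) := \frac{e^{-d}x^{1-\alpha } - 1}{x-1}. \end{aligned}$$

First, by denoting \(1 - \alpha ' = \inf _{x\in (0, 1)} h(x)\), we have

$$\begin{aligned} h'(x) = \ \frac{1 - \alpha e^{-d}x^{1-\alpha } - (1-\alpha )e^{-d}x^{-\alpha }}{(x-1)^2}. \end{aligned}$$

It is clear that \((x-1)^2\) decreases as x increases. Meanwhile, since \(x < 1\) and \(x^{-\alpha -1} > x^{-\alpha }\), we have

$$\begin{aligned} \frac{{\hbox {d}}}{{\hbox {d}}x}\left[ 1 - \alpha e^{-d}x^{1-\alpha } - (1-\alpha )e^{-d}x^{-\alpha }\right] = \ \alpha (1-\alpha )e^{-d}\left( x^{-\alpha -1} - x^{-\alpha }\right) > 0. \end{aligned}$$

Therefore, \(h'(x)\) increases as x increases in (0, 1), and hence the function h(x) is convex over x in (0, 1). Because \(\displaystyle \lim _{x \rightarrow 0^+} h'(x) = -\infty \) and \(\displaystyle \lim _{x \rightarrow 1^-} h'(x) = +\infty \), we have:

$$\begin{aligned} \inf _{x \in (0, 1)} h(x) = \ h(x^*) \ \ \hbox {for some} \ x^* \in (0, 1) \ \hbox {with} \ h'(x^*) = 0. \end{aligned} \tag{17}$$

We can compute the optimal \(x^*\) by forcing

$$\begin{aligned} 1 - \alpha e^{-d}(x^*)^{1-\alpha } - (1-\alpha )e^{-d}(x^*)^{-\alpha } = \ 0, \end{aligned}$$

i.e., \((x^*)^{\alpha } = e^{-d}\alpha x^* + e^{-d}(1-\alpha )\). The intersection of functions \(x^{\alpha }\) and \(e^{-d}\alpha x + e^{-d}(1-\alpha )\) can be easily computed by a bisection line search. Finally, to achieve \(\epsilon \) accuracy, i.e., \(|\hat{x} - x^*| \le \epsilon \), of the incumbent probing value \(\hat{x}\), we only have to conduct S steps of bisection, such that \(2^{-S} \le \epsilon \). It follows that \(S \ge \left\lceil \log _2(\frac{1}{\epsilon }) \right\rceil \). \(\square \)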
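The bisection step described at the end of this proof can be sketched in a few lines of Python. The sketch below searches for the point in (0, 1) where \(x^{\alpha }\) meets \(e^{-d}\alpha x + e^{-d}(1-\alpha )\), runs \(\lceil \log _2(1/\epsilon ) \rceil \) halvings, and then evaluates \(\alpha ' = 1 - (e^{-d}(x^*)^{1-\alpha } - 1)/(x^* - 1)\), which is the objective obtained from the substitution \(x = 1/e^z\). The function name, the default tolerance, the sample inputs, and the objective written in this form are assumptions of this illustration, and the code assumes \(0< \alpha < 1\) and \(d > 0\).

```python
import math


def kl_perturbed_risk(alpha, d, eps=1e-12):
    """Perturbed risk level for phi(x) = x log x - x + 1 via bisection.

    Returns (alpha_prime, x_star), where x_star in (0, 1) solves
    x**alpha = exp(-d) * (alpha * x + 1 - alpha).
    """
    scale = math.exp(-d)

    def gap(x):
        # Concave in x, negative at x = 0 and positive at x = 1 when d > 0,
        # so it changes sign exactly once on (0, 1).
        return x ** alpha - scale * (alpha * x + 1.0 - alpha)

    lo, hi = 0.0, 1.0
    steps = math.ceil(math.log2(1.0 / eps))   # S >= ceil(log2(1 / eps))
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if gap(mid) < 0.0:
            lo = mid
        else:
            hi = mid

    x_star = 0.5 * (lo + hi)
    h_star = (scale * x_star ** (1.0 - alpha) - 1.0) / (x_star - 1.0)
    return 1.0 - h_star, x_star


alpha, d = 0.10, 0.05                          # illustrative values only
alpha_prime, x_star = kl_perturbed_risk(alpha, d)
print(alpha_prime, x_star)
```

Because \(x^*\) is a stationary point of the objective, an error of order \(\epsilon \) in \(x^*\) only produces an error of order \(\epsilon ^2\) in \(\alpha '\), so a fairly coarse tolerance is often enough in practice. With the default `eps=1e-12` the loop runs 40 halvings.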

Appendix 6: Proof of Proposition 6

Proof

First, the convergence claim follows from Proposition 5 because \(x = 1\) is the unique minimizer of function \(\phi _{\chi D2}(x) = (x-1)^2,\,x \ge 0\).

Second, we define

based on Proposition 2. Then, we have

Since \(d = d(N)\) by assumption, we have

The proof is completed by substituting the definition of \(g'(d)\) in (18) into Eq. (19). \(\square \)

Appendix 7: Proof of Proposition 7

Proof

The convergence claim follows from Proposition 5 because \(x = 1\) is the unique minimizer of function \(\phi _{\hbox {KL}}(x) = x \log (x) - x + 1,\,x \ge 0\). We divide the remainder of the proof into two parts. We develop the relationship between \(\alpha '\) and d in the first part, and compute the value of data in the second part.

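(Relationship between \(\alpha '\) and d) From Proposition 4, we have

$$\begin{aligned} 1 - \alpha ' = \ \inf _{x \in (0, 1)} \left\{ \frac{e^{-d}x^{1-\alpha } - 1}{x-1} \right\} . \end{aligned} \tag{20}$$

Also, the optimal objective value of the embedded optimization problem in equality (20) can be attained by some \(\bar{x} \in (0, 1)\) (based on claim (17) in the proof of Proposition 4), which is the stationary point of the objective function. It follows that

$$\begin{aligned} \left\{ \begin{array}{l} 1 - \alpha ' = \dfrac{e^{-d}\bar{x}^{1-\alpha } - 1}{\bar{x}-1}, \\ \bar{x}^{\alpha } = e^{-d}\alpha \bar{x} + e^{-d}(1-\alpha ). \end{array}\right. \end{aligned} \tag{21}$$

Solving this nonlinear equation system, we reformulate the first equation and then substitute the second equation into the first as follows:

$$\begin{aligned} (1-\alpha ')(\bar{x}-1) = \ e^{-d}\bar{x}^{1-\alpha } - 1 = \ \frac{\bar{x}}{\alpha \bar{x} + (1-\alpha )} - 1 = \ \frac{(1-\alpha )(\bar{x}-1)}{\alpha \bar{x} + (1-\alpha )}. \end{aligned}$$

Ruling out the solution \(\bar{x} = 1\), we have \(\bar{x} = \frac{\alpha '(1 - \alpha )}{\alpha (1-\alpha ')} \in (0, 1)\). Then, we substitute the solution of \(\bar{x}\) back into the second equation in (21) and obtain

$$\begin{aligned} e^{-d} = \ \left( \frac{\alpha '}{\alpha }\right) ^{\alpha }\left( \frac{1-\alpha '}{1-\alpha }\right) ^{1-\alpha }. \end{aligned} \tag{22}$$

Finally, by taking the natural logarithm on both sides of Eq. (22), we obtain that

$$\begin{aligned} d = \ \alpha \log \left( \frac{\alpha }{\alpha '}\right) + (1-\alpha )\log \left( \frac{1-\alpha }{1-\alpha '}\right) . \end{aligned} \tag{23}$$

(Value of data) From Eq. (23) we have

$$\begin{aligned} \frac{{\hbox {d}}d}{{\hbox {d}}\alpha '} = \ -\frac{\alpha }{\alpha '} + \frac{1-\alpha }{1-\alpha '} = \ \frac{\alpha ' - \alpha }{\alpha '(1-\alpha ')}. \end{aligned}$$

It is easy to observe that \({{\hbox {d}}d}\big /{{\hbox {d}}\alpha '}\) is a monotone function of \(\alpha '\) and \({{\hbox {d}}d}\big /{{\hbox {d}}\alpha '} \ne 0\). Hence, we have

$$\begin{aligned} \frac{{\hbox {d}}\alpha '}{{\hbox {d}}d} = \ \frac{\alpha '(1-\alpha ')}{\alpha ' - \alpha }. \end{aligned}$$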

Therefore,

\(\square \)
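The relationship between \(\alpha '\) and d used in this proof can be checked numerically. Substituting \(\bar{x} = \frac{\alpha '(1 - \alpha )}{\alpha (1-\alpha ')}\) into the stationarity condition \(\bar{x}^{\alpha } = e^{-d}(\alpha \bar{x} + 1 - \alpha )\) and taking logarithms gives \(d = \alpha \log (\alpha /\alpha ') + (1-\alpha )\log \big ((1-\alpha )/(1-\alpha ')\big )\), which is the Kullback–Leibler divergence between two Bernoulli distributions with parameters \(\alpha \) and \(\alpha '\). The Python sketch below assumes Eq. (23) takes this form, differentiates it analytically, and compares the result with a central finite difference. The function names, the sample values, and the step size are illustrative assumptions.

```python
import math


def kl_tolerance(alpha, alpha_prime):
    """d as a function of alpha' (the form assumed here for Eq. (23))."""
    return (alpha * math.log(alpha / alpha_prime)
            + (1.0 - alpha) * math.log((1.0 - alpha) / (1.0 - alpha_prime)))


def kl_tolerance_slope(alpha, alpha_prime):
    """Analytic derivative of kl_tolerance with respect to alpha'."""
    return (alpha_prime - alpha) / (alpha_prime * (1.0 - alpha_prime))


alpha, alpha_prime, step = 0.10, 0.04, 1e-6    # illustrative values only

# Central finite difference as an independent check of the analytic slope.
finite_difference = (kl_tolerance(alpha, alpha_prime + step)
                     - kl_tolerance(alpha, alpha_prime - step)) / (2.0 * step)
analytic = kl_tolerance_slope(alpha, alpha_prime)

# Sensitivity of alpha' to d, by the inverse function rule (slope is nonzero).
sensitivity = 1.0 / analytic

print(analytic, finite_difference, sensitivity)
```

For \(\alpha ' < \alpha \) the slope is negative, so a smaller divergence tolerance d corresponds to a perturbed risk level closer to \(\alpha \). Multiplying `sensitivity` by the derivative of d with respect to the data size would give a chain-rule estimate of how \(\alpha '\) changes with more data; that last step depends on the specific form of d(N), which is not reproduced here.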

About this article

Cite this article

Jiang, R., Guan, Y. Data-driven chance constrained stochastic program. Math. Program. 158, 291–327 (2016). https://doi.org/10.1007/s10107-015-0929-7

DOI: https://doi.org/10.1007/s10107-015-0929-7