Abstract

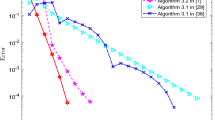

The augmented Lagrangian method (ALM) is widely used for solving constrained optimization problems. In practice, the subproblems that update the primal variables within the ALM framework can usually be solved only inexactly. The convergence and the local convergence speed of ALM have been extensively studied. However, the global convergence rate of the inexact ALM remains open for problems with nonlinear inequality constraints. In this paper, we consider general convex programs with both equality and inequality constraints. For these problems, we establish the global convergence rate of the inexact ALM and estimate its iteration complexity, measured by the number of gradient evaluations needed to produce a primal and/or primal-dual solution of a specified accuracy. We first establish an ergodic convergence rate result for the inexact ALM with either constant or geometrically increasing penalty parameters. Based on this result, we then apply Nesterov’s optimal first-order method to each primal subproblem and estimate the iteration complexity of the inexact ALM. We show that if the objective is convex, then \(O(\varepsilon ^{-1})\) gradient evaluations suffice to guarantee a primal \(\varepsilon \)-solution in terms of both primal objective error and feasibility violation. If the objective is strongly convex, the result improves to \(O(\varepsilon ^{-\frac{1}{2}}|\log \varepsilon |)\). Producing a primal-dual \(\varepsilon \)-solution requires more gradient evaluations in the convex case, namely \(O(\varepsilon ^{-\frac{4}{3}})\), while in the strongly convex case the number is still \(O(\varepsilon ^{-\frac{1}{2}}|\log \varepsilon |)\). Finally, we establish a nonergodic convergence rate result for the inexact ALM with geometrically increasing penalty parameters. This result is established only for the primal problem. We show that the nonergodic iteration complexity is of the same order as the ergodic one. Numerical experiments on quadratically constrained quadratic programming are conducted to compare the performance of the inexact ALM under different settings.
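To make the inexact ALM iteration concrete, the following is a minimal sketch on a two-variable toy problem with one equality and one nonlinear inequality constraint. The toy problem, the function name `inexact_alm`, and all parameter values are assumptions made for illustration and are not taken from the paper. The primal subproblem is solved by plain gradient descent with Armijo backtracking rather than Nesterov’s optimal first-order method, and the inequality constraint is handled with the classical augmented term \(\frac{1}{2\beta }\big ([z+\beta f_1({\mathbf {x}})]_+^2 - z^2\big )\).

```python
def inexact_alm(beta=1.0, outer_iters=100, inner_iters=500, tol=1e-6):
    """Inexact ALM on a hypothetical toy problem (not from the paper):

        minimize   (x1 - 3)^2 + (x2 - 1)^2
        subject to x1 - x2 = 0,   x1^2 + x2^2 - 2 <= 0.

    Its KKT point is x* = (1, 1) with multipliers y* = 2 (equality)
    and z* = 1 (inequality).
    """
    def eq(x):    # equality residual, should vanish
        return x[0] - x[1]

    def ineq(x):  # inequality function, should be <= 0
        return x[0] ** 2 + x[1] ** 2 - 2.0

    def aug_lag(x, y, z):
        r = eq(x)
        s = max(0.0, z + beta * ineq(x))
        f0 = (x[0] - 3.0) ** 2 + (x[1] - 1.0) ** 2
        return f0 + y * r + 0.5 * beta * r * r + (s * s - z * z) / (2.0 * beta)

    def grad(x, y, z):
        w = y + beta * eq(x)              # shifted equality multiplier
        s = max(0.0, z + beta * ineq(x))  # shifted inequality multiplier
        return [2.0 * (x[0] - 3.0) + w + 2.0 * s * x[0],
                2.0 * (x[1] - 1.0) - w + 2.0 * s * x[1]]

    x, y, z = [0.0, 0.0], 0.0, 0.0
    for _ in range(outer_iters):
        # Inexact primal update: gradient descent with Armijo backtracking,
        # stopped once the gradient norm of the augmented Lagrangian <= tol.
        for _ in range(inner_iters):
            g = grad(x, y, z)
            gn2 = g[0] ** 2 + g[1] ** 2
            if gn2 <= tol ** 2:
                break
            val, t = aug_lag(x, y, z), 1.0
            for _ in range(60):  # cap on the number of step halvings
                xn = [x[0] - t * g[0], x[1] - t * g[1]]
                if aug_lag(xn, y, z) <= val - 0.5 * t * gn2:
                    break
                t *= 0.5
            x = xn
        # Multiplier updates with constant penalty parameter beta.
        y = y + beta * eq(x)
        z = max(0.0, z + beta * ineq(x))
    return x, y, z


if __name__ == "__main__":
    x, y, z = inexact_alm()
    print("x =", x, "y =", y, "z =", z)
```

The sketch uses a fixed subproblem tolerance and a constant penalty parameter; the geometrically increasing penalty setting discussed above would instead enlarge `beta` between outer iterations.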

Notes

Although the global convergence rate in terms of the augmented dual objective can be easily derived from existing works (e.g., see our discussion in Sect. 5), it does not indicate the convergence speed from the perspective of the primal objective and feasibility.

By “simple”, we mean the proximal mapping of h is easy to evaluate, i.e., it is easy to find a solution to \(\min _{{\mathbf {x}}\in {\mathcal {X}}} h({\mathbf {x}}) + \frac{1}{2\gamma }\Vert {\mathbf {x}}-\hat{{\mathbf {x}}}\Vert ^2\) for any \(\hat{{\mathbf {x}}}\) and \(\gamma >0\).

Although [37] only considers the inequality constrained case, the results derived there apply to the case with both equality and inequality constraints.

Nedelcu et al. [29] assume that every subproblem is solved to the condition \(\langle \tilde{\nabla } {\mathcal {L}}_\beta ({\mathbf {x}}^{k+1},{\mathbf {y}}^k ), {\mathbf {x}}-{\mathbf {x}}^{k+1}\rangle \ge -O(\varepsilon ),\,\forall {\mathbf {x}}\in {\mathcal {X}}\), which is implied by \({\mathcal {L}}_\beta ({\mathbf {x}}^{k+1},{\mathbf {y}}^k )-\min _{{\mathbf {x}}\in {\mathcal {X}}}{\mathcal {L}}_\beta ({\mathbf {x}},{\mathbf {y}}^k )\le O(\varepsilon ^2)\) if \({\mathcal {L}}_\beta \) is smooth with respect to \({\mathbf {x}}\).
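To illustrate what a “simple” function means in the note on proximal mappings above, here is a small sketch for one familiar instance. It assumes \(h({\mathbf {x}})=\lambda \Vert {\mathbf {x}}\Vert _1\) and \({\mathcal {X}}={\mathbb {R}}^n\), a choice made only for illustration, in which case the minimizer of \(h({\mathbf {x}}) + \frac{1}{2\gamma }\Vert {\mathbf {x}}-\hat{{\mathbf {x}}}\Vert ^2\) is given coordinate-wise by soft-thresholding with threshold \(\gamma \lambda \). The function name `prox_l1` is hypothetical.

```python
def prox_l1(x_hat, gamma, lam):
    """Proximal mapping of h(x) = lam * ||x||_1 (an assumed example of a
    "simple" h): solves min_x lam*||x||_1 + ||x - x_hat||^2 / (2*gamma)
    over R^n by coordinate-wise soft-thresholding."""
    thr = gamma * lam
    out = []
    for v in x_hat:
        if v > thr:
            out.append(v - thr)   # shrink positive entries toward zero
        elif v < -thr:
            out.append(v + thr)   # shrink negative entries toward zero
        else:
            out.append(0.0)       # entries within the threshold vanish
    return out


if __name__ == "__main__":
    print(prox_l1([3.0, -0.5, 1.0], gamma=1.0, lam=1.0))
```

Each evaluation costs one pass over the coordinates, which is the kind of cheap closed-form step the note has in mind.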

References

Bazaraa, M.S., Sherali, H.D., Shetty, C.M.: Nonlinear Programming: Theory and Algorithms. Wiley, New York (2006)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Ben-Tal, A., Zibulevsky, M.: Penalty/barrier multiplier methods for convex programming problems. SIAM J. Optim. 7(2), 347–366 (1997)

Bertsekas, D.P.: Convergence rate of penalty and multiplier methods. In: 1973 IEEE Conference on Decision and Control Including the 12th Symposium on Adaptive Processes, vol. 12, pp. 260–264. IEEE (1973)

Bertsekas, D.P.: Nonlinear Programming. Athena Scientific, Belmont (1999)

Bertsekas, D.P.: Constrained Optimization and Lagrange Multiplier Methods. Academic Press, London (2014)

Birgin, E.G., Castillo, R., Martínez, J.M.: Numerical comparison of augmented Lagrangian algorithms for nonconvex problems. Comput. Optim. Appl. 31(1), 31–55 (2005)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Deng, W., Yin, W.: On the global and linear convergence of the generalized alternating direction method of multipliers. J. Sci. Comput. 66(3), 889–916 (2016)

Gao, X., Xu, Y., Zhang, S.: Randomized primal-dual proximal block coordinate updates. J. Oper. Res. Soc. China 7(2), 205–250 (2019)

Glowinski, R.: On alternating direction methods of multipliers: a historical perspective. In: Fitzgibbon, W., Kuznetsov, Y., Neittaanmäki, P., Pironneau, O. (eds.) Modeling, Simulation and Optimization for Science and Technology. Computational Methods in Applied Sciences, vol. 34. Springer, Dordrecht (2014)

Grant, M., Boyd, S., Ye, Y.: CVX: Matlab Software for Disciplined Convex Programming (2008)

Güler, O.: On the convergence of the proximal point algorithm for convex minimization. SIAM J. Control Optim. 29(2), 403–419 (1991)

Güler, O.: New proximal point algorithms for convex minimization. SIAM J. Optim. 2(4), 649–664 (1992)

Hamedani, E.Y., Aybat, N.S.: A primal-dual algorithm for general convex-concave saddle point problems. arXiv preprint arXiv:1803.01401 (2018)

He, B., Yuan, X.: On the acceleration of augmented Lagrangian method for linearly constrained optimization. Optimization Online (2010)

He, B., Yuan, X.: On the \({O}(1/n)\) convergence rate of the Douglas-Rachford alternating direction method. SIAM J. Numer. Anal. 50(2), 700–709 (2012)

Hestenes, M.R.: Multiplier and gradient methods. J. Optim. Theory Appl. 4(5), 303–320 (1969)

Kang, M., Kang, M., Jung, M.: Inexact accelerated augmented Lagrangian methods. Comput. Optim. Appl. 62(2), 373–404 (2015)

Kang, M., Yun, S., Woo, H., Kang, M.: Accelerated Bregman method for linearly constrained \(\ell _1\)-\(\ell _2\) minimization. J. Sci. Comput. 56(3), 515–534 (2013)

Lan, G., Monteiro, R.D.: Iteration-complexity of first-order augmented Lagrangian methods for convex programming. Math. Program. 155(1–2), 511–547 (2016)

Li, Z., Xu, Y.: First-order inexact augmented Lagrangian methods for convex and nonconvex programs: nonergodic convergence and iteration complexity. Preprint (2019)

Lin, T., Ma, S., Zhang, S.: Iteration complexity analysis of multi-block ADMM for a family of convex minimization without strong convexity. J. Sci. Comput. 69(1), 52–81 (2016)

Liu, Y.-F., Liu, X., Ma, S.: On the non-ergodic convergence rate of an inexact augmented Lagrangian framework for composite convex programming. Math. Oper. Res. 44(2), 632–650 (2019)

Lu, Z., Zhou, Z.: Iteration-complexity of first-order augmented Lagrangian methods for convex conic programming. arXiv preprint arXiv:1803.09941 (2018)

Monteiro, R.D., Svaiter, B.F.: Iteration-complexity of block-decomposition algorithms and the alternating direction method of multipliers. SIAM J. Optim. 23(2), 475–507 (2013)

Necoara, I., Nedelcu, V.: Rate analysis of inexact dual first-order methods application to dual decomposition. IEEE Trans. Autom. Control 59(5), 1232–1243 (2014)

Nedelcu, V., Necoara, I., Tran-Dinh, Q.: Computational complexity of inexact gradient augmented Lagrangian methods: application to constrained MPC. SIAM J. Control Optim. 52(5), 3109–3134 (2014)

Nedić, A., Ozdaglar, A.: Approximate primal solutions and rate analysis for dual subgradient methods. SIAM J. Optim. 19(4), 1757–1780 (2009)

Nedić, A., Ozdaglar, A.: Subgradient methods for saddle-point problems. J. Optim. Theory Appl. 142(1), 205–228 (2009)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Kluwer Academic Publisher, Norwell (2004)

Nesterov, Y.: Gradient methods for minimizing composite functions. Math. Program. 140(1), 125–161 (2013)

Ouyang, Y., Chen, Y., Lan, G., Pasiliao Jr., E.: An accelerated linearized alternating direction method of multipliers. SIAM J. Imaging Sci. 8(1), 644–681 (2015)

Ouyang, Y., Xu, Y.: Lower complexity bounds of first-order methods for convex-concave bilinear saddle-point problems. arXiv preprint arXiv:1808.02901 (2018)

Powell, M.J.: A method for non-linear constraints in minimization problems. In: Fletcher, R. (ed.) Optimization. Academic Press, New York (1969)

Rockafellar, R.T.: A dual approach to solving nonlinear programming problems by unconstrained optimization. Math. Program. 5(1), 354–373 (1973)

Rockafellar, R.T.: The multiplier method of Hestenes and Powell applied to convex programming. J. Optim. Theory Appl. 12(6), 555–562 (1973)

Rockafellar, R.T.: Augmented Lagrangians and applications of the proximal point algorithm in convex programming. Math. Oper. Res. 1(2), 97–116 (1976)

Schmidt, M., Roux, N.L., Bach, F.R.: Convergence rates of inexact proximal-gradient methods for convex optimization. In: Advances in Neural Information Processing Systems, pp. 1458–1466 (2011)

Tseng, P., Bertsekas, D.P.: On the convergence of the exponential multiplier method for convex programming. Math. Program. 60(1), 1–19 (1993)

Xu, Y.: Accelerated first-order primal-dual proximal methods for linearly constrained composite convex programming. SIAM J. Optim. 27(3), 1459–1484 (2017)

Xu, Y.: Primal-dual stochastic gradient method for convex programs with many functional constraints. arXiv preprint arXiv:1802.02724 (2018)

Xu, Y.: Asynchronous parallel primal-dual block coordinate update methods for affinely constrained convex programs. Comput. Optim. Appl. 72(1), 87–113 (2019)

Xu, Y., Yin, W.: A block coordinate descent method for regularized multiconvex optimization with applications to nonnegative tensor factorization and completion. SIAM J. Imaging Sci. 6(3), 1758–1789 (2013)

Xu, Y., Zhang, S.: Accelerated primal-dual proximal block coordinate updating methods for constrained convex optimization. Comput. Optim. Appl. 70(1), 91–128 (2018)

Yu, H., Neely, M.J.: A primal-dual type algorithm with the \({O} (1/t)\) convergence rate for large scale constrained convex programs. In: 2016 IEEE 55th Conference on Decision and Control (CDC), pp. 1900–1905. IEEE (2016)

Yu, H., Neely, M.J.: A simple parallel algorithm with an \({O}(1/t)\) convergence rate for general convex programs. SIAM J. Optim. 27(2), 759–783 (2017)

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work is partly supported by NSF Grant DMS-1719549.

Relation of the primal-dual \(\varepsilon \)-solutions in Definition 2 and (5.1)

In this section, we compare the two definitions of primal-dual \(\varepsilon \)-solutions given in Definition 2 and (5.1) for linearly constrained problems of the form (1.13) with \(f_0=g+h\). The analysis in the second part follows the proof of Theorem 2.1 in [25].

First, let \((\bar{{\mathbf {x}}},\bar{{\mathbf {y}}})\) be a point satisfying (5.1). Then it follows from (2.2) that

In addition, we have from the convexity of g and (5.1) that for any \({\mathbf {x}}\in {\mathcal {X}}\) and any constant \(\beta >0\),

Letting \({\mathbf {x}}={\mathbf {x}}^*\) in the above inequality gives \(f_0(\bar{{\mathbf {x}}}) - f_0({\mathbf {x}}^*) \le \varepsilon +\Vert \bar{{\mathbf {y}}}\Vert \sqrt{\varepsilon }\), and minimizing the left-hand side over \({\mathbf {x}}\in {\mathcal {X}}\) yields \(f_0(\bar{{\mathbf {x}}})-d_0(\bar{{\mathbf {y}}})\le \varepsilon +\Vert \bar{{\mathbf {y}}}\Vert \sqrt{\varepsilon }.\) Hence, \((\bar{{\mathbf {x}}},\bar{{\mathbf {y}}})\) is an \(O(\sqrt{\varepsilon })\)-solution in the sense of Definition 2.

On the other hand, let \((\bar{{\mathbf {x}}},\bar{{\mathbf {y}}})\) be a primal-dual \(\varepsilon \)-solution in Definition 2. Let

and

where \(L_0\) is the Lipschitz constant of \(\nabla g\). Then we have (cf. [45, Lemma 2.1]) \({\mathcal {L}}_0(\bar{{\mathbf {x}}},\bar{{\mathbf {y}}})-{\mathcal {L}}_0(\bar{{\mathbf {x}}}^+,\bar{{\mathbf {y}}})\ge \frac{L_0}{2}\Vert \bar{{\mathbf {x}}}^+-\bar{{\mathbf {x}}}\Vert ^2\). Since \(\Vert {\mathbf {A}}\bar{{\mathbf {x}}}-{\mathbf {b}}\Vert \le \varepsilon \) and \(f_0(\bar{{\mathbf {x}}})-d_0(\bar{{\mathbf {y}}})\le 2\varepsilon \), we have \({\mathcal {L}}_0(\bar{{\mathbf {x}}},\bar{{\mathbf {y}}})-d_0(\bar{{\mathbf {y}}}) \le \varepsilon \Vert \bar{{\mathbf {y}}}\Vert +2\varepsilon \). Noting \(d_0(\bar{{\mathbf {y}}})\le {\mathcal {L}}_0(\bar{{\mathbf {x}}}^+,\bar{{\mathbf {y}}})\), we have \(\frac{L_0}{2}\Vert \bar{{\mathbf {x}}}^+-\bar{{\mathbf {x}}}\Vert ^2\le \varepsilon \Vert \bar{{\mathbf {y}}}\Vert +2\varepsilon ,\) and thus \(\Vert \bar{{\mathbf {x}}}^+-\bar{{\mathbf {x}}}\Vert \le \sqrt{\frac{2\varepsilon (\Vert \bar{{\mathbf {y}}}\Vert +2)}{L_0}}\). By the triangle inequality, it holds that

In addition, we have from (A.1) the optimality condition

and thus

Therefore, \((\bar{{\mathbf {x}}}^+,\bar{{\mathbf {y}}})\) is an \(O(\sqrt{\varepsilon })\)-solution in the sense of (5.1).
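For reference, the estimates that bound \(\Vert \bar{{\mathbf {x}}}^+-\bar{{\mathbf {x}}}\Vert \) in the second part can be collected into a single chain. This is a sketch that assumes the standard Lagrangian \({\mathcal {L}}_0({\mathbf {x}},{\mathbf {y}})=f_0({\mathbf {x}})+\langle {\mathbf {y}},{\mathbf {A}}{\mathbf {x}}-{\mathbf {b}}\rangle \) and dual function \(d_0({\mathbf {y}})=\min _{{\mathbf {x}}\in {\mathcal {X}}}{\mathcal {L}}_0({\mathbf {x}},{\mathbf {y}})\) for the linearly constrained problem; these definitions are not restated in this section.

```latex
\[
\frac{L_0}{2}\|\bar{\mathbf{x}}^+-\bar{\mathbf{x}}\|^2
\;\le\; \mathcal{L}_0(\bar{\mathbf{x}},\bar{\mathbf{y}})-\mathcal{L}_0(\bar{\mathbf{x}}^+,\bar{\mathbf{y}})
\;\le\; \mathcal{L}_0(\bar{\mathbf{x}},\bar{\mathbf{y}})-d_0(\bar{\mathbf{y}})
\;=\; f_0(\bar{\mathbf{x}})-d_0(\bar{\mathbf{y}})
      +\langle \bar{\mathbf{y}},\mathbf{A}\bar{\mathbf{x}}-\mathbf{b}\rangle
\;\le\; 2\varepsilon+\varepsilon\|\bar{\mathbf{y}}\| .
\]
```

The first inequality is the descent estimate cited from [45, Lemma 2.1], the second uses \(d_0(\bar{{\mathbf {y}}})\le {\mathcal {L}}_0(\bar{{\mathbf {x}}}^+,\bar{{\mathbf {y}}})\), and the last combines the Cauchy-Schwarz inequality with \(\Vert {\mathbf {A}}\bar{{\mathbf {x}}}-{\mathbf {b}}\Vert \le \varepsilon \) and \(f_0(\bar{{\mathbf {x}}})-d_0(\bar{{\mathbf {y}}})\le 2\varepsilon \).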

Cite this article

Xu, Y. Iteration complexity of inexact augmented Lagrangian methods for constrained convex programming. Math. Program. 185, 199–244 (2021). https://doi.org/10.1007/s10107-019-01425-9

DOI: https://doi.org/10.1007/s10107-019-01425-9

Keywords

- Augmented Lagrangian method (ALM)

- Nonlinearly constrained problem

- First-order method

- Global convergence rate

- Iteration complexity