Abstract

Simulator training is becoming increasingly important for training in time-critical and dynamic situations. Hence, how simulator training in such domains is planned, carried out, and followed up becomes important. Based on a model prescribing such crucial aspects, ten decision-making training simulator facilities have been analyzed from an activity theoretical perspective. The analysis reveals several conflicts between the training that is carried out and the defined training objectives. Although limitations in technology and organization are often alleviated by proficient instructors, it is concluded that a structured approach to the design of training is needed to define the competencies and skills that ought to be trained, along with relevant, measurable training goals. Further, there is a need for a pedagogical model that takes the specifics of simulator training into account. Such a pedagogical model is needed to evaluate the training, and would make it possible to share experiences and compare facilities in a structured manner.


1 Introduction

Training and exercises are an essential part of learning complex skills and gaining competence and knowledge. With the introduction and use of computers, training possibilities using computer-based serious games and simulators have increased dramatically, particularly in domains that are inherently complex, time-critical, and involve high risk. Today, training for complex situations such as operating a nuclear power plant, managing emergencies, or military command and control can be designed and undertaken using simulators in which the computer visualizes the environment and its dynamics in relation to the user's actions. These domains are characterized by dynamic, time-critical and ill-structured problems that tend to be difficult to get an overview of and to represent comprehensively (Orasanu and Connolly 1993). Decisions in these domains often concern environmentally lethal and life-threatening situations. Thus, training in these domains involves making decisions and taking actions to avoid potentially severe consequences. In light of the potential offered by simulator training, and to make the most of this training, this study investigates the following research question:

- How is simulator training in time-critical and dynamic domains planned, carried out, and followed up?

Based on this question, the following more specific questions were formulated:

- How is training purposefully designed and conducted?
- How are performance, skills, and competencies evaluated and assessed?
- According to stakeholders investing in simulator training, what are the benefits of training in simulated environments?
- How are questions regarding the transfer of knowledge, competencies, and skills from training in simulators to real-world contexts handled?

The aim of the study is to present a general analysis of how simulator training in dynamic decision-making domains is designed, carried out, and evaluated. The empirical data used in the study were gathered from a variety of representative Swedish simulator training facilities. The analysis is based on an activity theoretical framework that makes it possible to structure, describe, and articulate plausible conflicts within an activity system (e.g., an instructor carrying out training, or an organization providing the training) or between activity systems. The results of such an analysis point to problems within and between organizations and, in the context of this study, thereby contribute solutions for the design of simulator training in dynamic decision-making domains.

The article is structured as follows. Section 2 provides background information concerning decision-making in time-critical dynamic domains, discusses related work, and concludes with a set of crucial aspects to take into account when designing simulator training. Section 3 presents the theoretical and analytical framework used in the study, and Sect. 4 discusses the methodological aspects. Next, the analysis and results are presented in Sect. 5 and further discussed in Sect. 6, where the results are also related to the key aspects of training established in Sect. 2. Finally, Sect. 7 concludes the article.

2 Dynamic decision-making simulator training

This section starts by describing and characterizing dynamic distributed decision-making in complex situations, which is the kind of decision-making that the simulator training investigated in this study seeks to prepare trainees for. Next, the related research literature is reviewed, pointing to a lack of empirically founded comparative research on simulator training in such domains. Finally, models that can guide resource-efficient design and development of simulator training, so as to ensure that training goals are met, are described, and a set of crucial aspects to be considered in simulator training is derived from their common characteristics as a basis for analyzing the results of this study.

2.1 Dynamic distributed decision-making in complex situations

In domains that are inherently complex, time-critical and involve high risk, decision-makers have to deal with imperfect, incomplete information about the target system or adversary, and thus need training to cope with this. Furthermore, these domains include social systems where several people need to coordinate their actions and decisions. These characteristics fall within the research area of dynamic decision-making, which was first defined by Edwards (1962) and later extended by Brehmer and Allard (1991) as decision-making in which:

- a series of decisions is required,
- the decisions are not independent,
- the state changes both autonomously and as a consequence of the decision-maker's actions, and
- the decisions have to be made in real time.

This definition assumes a cybernetic perspective, which implies that the decision-makers have a common goal, are continuously able to ascertain the state of the target system, are able to affect the system, and can form a model of the system's functioning (Brehmer 1992; Conant and Ashby 1970).

The problem is further complicated when information and decision-making are distributed among several individuals, which highlights a number of factors, including: having only a partial overview of the system and its state, having different knowledge and information processing capabilities, being at different geographical locations, having different tasks and possibilities to affect the state of the system, and, finally, having different positions in the command chain (Artman 2000; Artman and Wærn 1999; Brynielsson 2006). Furthermore, individual goals and decisions are not independent and may conflict. For training, this means that a team, constituting a control system, trains to coordinate and communicate their decisions, as well as other actions and observations, to accomplish a common goal, that goal being either reaching a steady state or completing a mission (Artman and Wærn 1999). The training includes individual decision-making, but the overarching goal is the collective decision-making and action capabilities required to reach a common goal.

All in all, training of dynamic distributed decision-making for complex, time-critical situations relies on emergent interactions that need to be taken into account. Training for these situations focuses more on acting appropriately within a given action space than on details such as the correct operation of instruments. Since the targeted domains are dynamic and to a large extent unpredictable, there are often no correct or prescribed actions and decisions. Acting and making decisions can instead be regarded as a constant and disciplined improvisation (Sawyer 2004). Training thus needs to prepare the decision-makers to coordinate their actions under such circumstances, i.e., the actions of the team need to match the dynamics of the system (Conant and Ashby 1970).

2.2 Design of simulator training

Much simulator training is conducted in domains that share the characteristics described in the previous section, and evaluations of simulator training typically focus on its effects on the operative environment. Research documented in the literature most often originates from individual studies (see, e.g., Remolina et al. 2009; Ney et al. 2014; Sellberg 2018; Wahl 2019) or meta-reviews of published articles. As others have noted (see, e.g., Lineberry et al. 2013), there is a significant amount of research on simulator training within the medical domain (see, e.g., the reviews by Lineberry et al. 2013; Cook et al. 2011; Issenberg et al. 2005). Reviews in the medical domain mainly focus on the relative effectiveness of simulation as compared to practice and intervention. Lineberry et al. (2013), however, report that it is difficult to draw meaningful overarching conclusions regarding the effectiveness of simulator training due to methodological problems. Although there admittedly are methodological issues involved in making such comparisons and drawing definitive conclusions regarding the effectiveness of simulator training, the potential benefits of training in simulators have been reported by Cook et al. (2013) in their meta-analysis. Further, the effects of simulator-based training seem to be enhanced by theory-predicted instructional design (ibid.).

In the domain of flight simulation and training, a meta-analysis of flight simulator training effectiveness has been conducted with the aim of identifying important characteristics associated with effective simulator training (Hays et al. 1992). In their research, the authors had to tackle many of the difficulties of conducting a meta-analysis caused by the lack of crucial information and data in reports and articles published in the field. Based on their findings, the authors conclude that the use of simulators in combination with aircraft training produced clear improvements as compared to aircraft training only. The effectiveness of simulator training is also reported to be influenced by the type and amount of training (ibid.), and the effectiveness has elsewhere been found to vary with the training methods used (see, e.g., Bailey et al. 1980). That is, the design of training, including both simulator training and aircraft training, influences the training outcome. In another meta-review on the effectiveness of using flight simulators in training, the authors report that very few studies focused on the transfer of skills (Bell and Waag 1998). However, in terms of subjectively experienced transfer, several studies point towards positive effects of simulator training (ibid.).

Another review of research and practice of simulator training was conducted from a human factors perspective (Stanton 1996). Although this review takes a broader scope than the relative effectiveness of simulator training, it does not focus on how simulator training is designed, carried out, and evaluated in different training domains. Reviews and studies thus tend to focus on simulation-based training and its effects within a certain domain. Hence, there is a lack of empirical research on how simulator training programs are designed, that is, how they are planned, carried out, and evaluated, across different domains that share the common characteristics of being complex, time-critical, and involving high risk.

2.3 Structured approaches to the design of training and evaluation

Regardless of the particular training environment or domain, for effective training to take place it is essential to design a process for analyzing, developing, delivering, and evaluating training that provides feedback between and across phases, and that supports the development of relevant measures of training effectiveness. Several different and partly overlapping models exist for this purpose, and they share the common characteristic of aiming to guide resource-efficient development in support of effective training and, ultimately, performance improvement (Artman et al. 2013). Analysis–Design–Development–Implementation–Evaluation, ADDIE (Branson et al. 1975), is for instance a process built around five phases, ranging from an analysis phase that clarifies the objectives, needs, and requirements for the training, to an evaluation phase whose purpose is to evaluate each phase of the process as well as the success of the training program. The approach was originally developed to formalize the process of developing military interservice training. Whereas the original process was considered hierarchical and sequential, current applications of it are dynamic and iterative between and across phases. Other frameworks and methods that can be used to design training (including how to plan, structure, carry out, and evaluate it) include, for instance, "training needs analysis" (Goldstein 1993) and "task and work analysis" (Wilson et al. 2012).

The study reported on in this article was built on established perspectives and design approaches. Building on common characteristics exemplified by the above-mentioned models, a set of crucial aspects to consider for planning, developing, carrying out, and evaluating training in simulator environments was defined. These aspects are:

- Define training goals: For effective training to take place, goals need to be well defined and linked both to higher-order goals and to lower-order, more detailed goals and measures.
- Conduct needs and requirements analyses: The goals that are defined need to be broken down into more detailed and measurable subgoals.
- Develop and design relevant scenarios: Scenarios used in training need to be tightly coupled with training goals and to provide relevant situations that, in turn, serve as important input to debriefing and to performance measurement.
- Conduct adapted and meaningful evaluation of performance: Evaluation of performance needs to enable deliberate and well-considered modifications and adjustments of training over time.
- Assume a pedagogical approach to training: The training equipment (simulators, etc.) and other tools used in training need to fit into the pedagogical approach that is assumed.
- Conduct debriefing after training: Debriefing (aided by supporting technologies and tools such as visualizations) enables discovery of and reflection on training results that go beyond single measures and standalone results.
- Motivate the trainee: Motivation is a basic and crucial prerequisite for learning.
- Make sure there is positive transfer to the operative environment: Although debated, the question of transfer should be taken into account to the extent possible.
- Define roles for instructors and others involved in carrying out training: The pedagogical approach that is assumed, and the way it is implemented, require clear definitions of roles and responsibilities.
- Clarify the organizational prerequisites: The prerequisites of an organization in terms of technology, competence, economy, personnel, etc., form design constraints and set the boundaries of what can be expected from a certain training program.

In this study, the aspects above were used as themes to be covered in semi-structured interviews with representatives of simulator and training facilities, and they also formed the basis for more specific questions and for the foci of observations conducted at the facilities. All of these aspects could, in a sense, be claimed to be rather intuitive and obvious to consider when designing training. In practice, however, organizational and other issues, as well as who is accountable for the training, constrain what is possible, which in turn affects the extent to which these aspects can be adhered to.

3 Theoretical and analytical framework: activity theory

Given the aim of the article, namely to take an overall domain-specific view on simulator training rather than focusing solely on the effects of the training as such, a theoretical and analytical framework is needed that purposefully permits structuring and analysis of simulator training on different levels of abstraction. Activity theory is described by Kuutti (1996) as a philosophical and cross-disciplinary framework for the study of human practices in real-life situations, where the context of the activity is included on both the individual and the social level. For example, entertainment-oriented games are played in contexts where various forms of sociocultural, extrinsic play activities take place around and beyond the ordinary game context (Ang et al. 2010).

The activity model used in this study is based on Engeström's (1987) model of activity systems, which expands on the work of Vygotsky (1986) and Leont'ev (1978). Engeström's model (see Fig. 1) consists of the subjects, objects, and outcomes of an activity, which are bound to the mediating artifacts in use as well as to the sociocultural context in which the activity takes place, in terms of rules, community of practice, and division of labor. The model depicts the framework within which human cognition is distributed. Furthermore, it describes not only the individuals who partake in the activity (the subject), but also the other people and the context of the activity that must be taken into account at the same time (Cole and Engeström 1993). Because activities are often connected to other activities, and are therefore subject to external influence, there are almost always conflicts or contradictions within or between elements, or between different activities. These conflicts or contradictions are seen as sources of possible development (Kuutti 1996).

The activity theoretical framework has been applied in several learning contexts, for instance within mobile learning (Nouri et al. 2014; Nouri and Cerratto-Pargman 2015; Dissanayeke et al. 2014, 2016), and has also previously been applied in the context of simulator training. In the medical domain, for instance, Ellaway (2014) describes a framework derived from Leont'ev (1978) and Engeström (1987) in which virtual patients are considered as activities instead of artifacts, and concludes that it is the use of virtual patients, and the ways they are used, that brings about educational value. In another study, Battista (2015) argues that activity theory ought to be used as a "theoretical lens," and demonstrates how the theory can be used to generate rich descriptions and analyses of participants' activities and of how they accomplish their goals during simulation-based training. In yet another study, from the domain of flight simulation and training, commercial pilots' views and opinions regarding flight simulator training were reviewed (Qi and Meloche 2009). The authors used activity theory as a framework in which flight simulator training is seen as a mediating artifact for achieving the pilots' goals. The pilots recognized the simulator as a type of knowledge management aid in that it shares, organizes, and uses the pilots' knowledge (ibid.).

In this work, a similar conceptualization and use of the theoretical and analytical framework has been made. The simulator training was characterized and analyzed on an organizational level as well as on an operational level, the latter from the perspective of an instructor carrying out the training. The purpose of conducting the analysis from an activity theoretical perspective is to enable the observation and description of conflicts, contradictions, etc., within elements and activities as well as between activity systems on different levels of abstraction. This analysis makes it possible to identify how, and to what extent, the training design of organizations and instructors adheres to structured approaches to the design of simulator training as described in Sect. 2.3, and how difficulties in adhering to these approaches are handled.

4 Method and data collection

The data collection consisted of visits to ten facilities that provide training in domains characterized as time-critical and dynamic. The selection of simulator facilities was partly based on convenience, i.e., the facilities' willingness to participate in the study. Among the facilities agreeing to participate, facilities from different domains and branches (military as well as civilian) were selected to enable a comparative analysis. The data collection aimed to obtain an understanding of each facility in general and of the training taking place there in particular. This included inspection of the simulators and other equipment used during training, as well as hands-on experience. At each facility, a qualitative interview lasting approximately 1–2 h was conducted with one or two interviewees. The interviewees were selected based on their experience, expertise, involvement, and responsibility. None of the interviewees was responsible for commissioning the training, but all were responsible for planning, carrying out, and developing training at their facilities. All interviewees were men aged 45–55 years. They were all experienced instructors and experts in the pedagogical approaches assumed at the facilities. In the military facilities, the instructors were officers with many years of experience within their respective armed forces services. In the civilian facilities, the instructors all had at least an undergraduate degree. The interview questions were based on ten themes that had been identified as vital for designing, planning, and carrying out simulator training, as described in more detail in Sect. 2.3.

Activity theory has been utilized to structure the data and the analysis. It has been used to explore inconsistencies within a specific facility, and how and to what extent organizations and instructors adhere to structured approaches for their design of simulator training. A further interest has been to explore possible tensions between the prescribed training goals, the provision of resources for carrying out the training, and the actual carrying out of training.

The interview questions were formulated based on the key aspects of simulator training defined in Sect. 2.3. Hence, the defined aspects structured the interviews and also functioned as an interview guide. The thematic analysis was theory driven, and the answers were therefore organized in terms of the nodes and relations defined in the activity theory model (see Sect. 3). The model prescribes a central role for the subject, which in the analysis and the presentation below was defined as the operative instructor (i.e., the interviewee) in one analysis and as the organization in the other (i.e., when the interviewee referred to general actors within the organization and/or to other organizational aspects). All authors participated in the processing and analysis of the interview data.
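The theory-driven coding described above can be pictured as tagging each interview statement with the level of analysis and with the activity-system nodes between which a conflict is expressed, and then counting how many facilities report a conflict on each relation (as in the "seven of the ten facilities" style of reporting in Sect. 5). The Python sketch below illustrates that idea only under this assumption: the study does not describe any software for its coding, and the names `CodedStatement` and `conflicts_by_relation`, the facility labels, and the example statements are hypothetical and not data from the study.

```python
from dataclasses import dataclass
from enum import Enum


class Node(Enum):
    """Elements of an activity system in Engeström's (1987) model."""
    SUBJECT = "subject"
    TOOL = "tool"
    OBJECT = "object"
    OUTCOME = "outcome"
    RULES = "rules"
    COMMUNITY = "community"
    DIVISION_OF_LABOR = "division of labor"


@dataclass(frozen=True)
class CodedStatement:
    """One interview statement coded against the model (hypothetical structure)."""
    facility: str        # anonymized facility label, e.g. "A" (hypothetical)
    level: str           # "instructor" or "organization": who is taken as subject
    relation: frozenset  # the nodes between which a conflict is expressed
    text: str            # a paraphrase of the statement


def conflicts_by_relation(statements, level):
    """Return, per node relation, the number of distinct facilities that report
    a conflict on the given level. Repeated statements from one facility count once."""
    facilities_per_relation = {}
    for s in statements:
        if s.level == level:
            facilities_per_relation.setdefault(s.relation, set()).add(s.facility)
    return {rel: len(facs) for rel, facs in facilities_per_relation.items()}


# Usage with made-up statements (illustrative only, not data from the study).
STO = frozenset({Node.SUBJECT, Node.TOOL, Node.OBJECT})
SRC = frozenset({Node.SUBJECT, Node.RULES, Node.COMMUNITY})
statements = [
    CodedStatement("A", "instructor", STO, "Few ready-made scenarios"),
    CodedStatement("A", "instructor", STO, "No log data for debriefing"),
    CodedStatement("B", "instructor", STO, "No log data for debriefing"),
    CodedStatement("B", "instructor", SRC, "Training goals not defined"),
]
counts = conflicts_by_relation(statements, "instructor")
print(counts[STO], counts[SRC])  # 2 1
```

Using a set of nodes (rather than an ordered pair) as the key reflects that the conflicts in Sect. 5 are named by the group of nodes involved, such as subject–tool–object, not by a direction.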

All ten facilities visited conduct simulator training, but to varying extents and in various ways. Seven of the ten facilities are part of the Swedish Armed Forces (army, air force, and navy), while the other three are civilian facilities. The ten facilities visited are:

Swedish Air Force

- Swedish Air Force Combat Simulation Centre (FLSC): At FLSC, simulated air combat training is conducted. All Swedish Air Force squadrons train at the facility regularly, and it is also used by foreign air force squadrons as well as for research purposes.
- Command, Control and Air Surveillance School: The school is part of the Swedish Air Force Air Combat Training School, and educates forward air controllers and air surveillance operators.
- Norrbotten Air Force Wing (F21): Together with F17 (Blekinge Air Force Wing), F21 is responsible for the surveillance of Swedish airspace. Simulator training is provided for complementary flight training and combat readiness training.
- Flying School: The school provides basic flight training, and is part of the Swedish Air Force Air Combat Training School.

Swedish Navy

- Naval Warfare Centre: As part of the overall responsibility to train soldiers and officers in naval warfare and amphibious operations, simulators are used for training within fields such as command and control, radio communication, etc.

Swedish Army

- Land Warfare Centre: The center is responsible for education, development, and training for prospective chiefs and specialist officers. At its command training facility, the center trains the army's different units with the help of simulator technology (Virtual Battlespace 3).
- Swedish Armed Forces International Centre (SWEDINT): The center provides education and training, using Virtual Battlespace 3, for military, police, and civilian personnel in support of peace support operations (PSO) led by, e.g., the UN, NATO, or the EU.

Civilian facilities

- Swedish Civil Contingencies Agency (MSB): MSB provides education and training within the field of societal safety and readiness. Training at the command training facility combines simulations and visualizations with role play.
- Chalmers University of Technology, Shipping and Marine Technology: The department provides education for ship's officers and marine professionals. Simulators are a vital part of the education in the form of, e.g., full mission bridge simulators, an engine operations simulator, and simulators for practicing radio communication.
- KSU Ringhals: The facility in Ringhals is part of the nationwide KSU Nuclear Power Safety and Education center, which was developed to make Swedish nuclear power safer. The facility provides education and simulator training for the operating staff of the nuclear power plant, using simulators that are identical to the control rooms.

First, each simulator facility was analyzed individually. The overall purpose of the analysis, however, has been to explicate general and generic as well as less common practices, and the design and organization of simulator training at diverse facilities, rather than to pinpoint difficulties, problems, or deficiencies at specific facilities. Hence, the identities of the individual facilities are not disclosed in the analysis.

5 Analysis and results

All the simulator facilities included in this study aim to deliver training in the skills necessary to manage complex situations and to make decisions in them. In general, most of the trainees are already skilled in handling and operating the systems and in the policies for their tasks, but need training and experience in decision-making and cooperation in complex, time-critical, and high-risk situations. The design of scenarios is, therefore, of utmost importance as a mediating tool for reaching the defined training objective.

The following sections account for the analyses conducted, focusing on conflicts in training activities that propagate through two levels of abstraction, referred to below as "the level of the instructor" and "the level of the facility/organization."

5.1 The level of the instructor

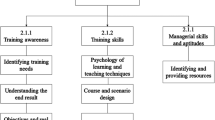

The design and planning of training is based on information from subject matter experts concerning what to train, grounded in defined training goals that emanate from documents, policies, and regulations defining professional practice. The outcome of the planning is one or more scenarios that subject the trainee to specific situations designed to elicit certain training objects, e.g., making well-informed decisions appropriate to the situation (see Fig. 2, nodes subject–tool–object).

The experience and role of the instructor thus become crucial to designing and carrying out training that meets the objective of professional training. This involves communicating with subject matter experts to design relevant scenarios that target specific learning goals; defining, together with technicians and subject matter experts, relevant performance measures that enable evaluation of training results; and meaningfully using the available tools to carry out debriefing and to follow up training results over time (i.e., covering all nodes in the activity system according to Fig. 2). The ability to make dynamic, in situ changes to a scenario, balancing its level of difficulty against the skills of the trainees, also relies heavily on the experience of the instructor.

While the background, training, and pedagogical experience of the instructor are crucial to designing and carrying out training, this dependence also makes training vulnerable in several respects. Relying too heavily on the individual experience and skills of the instructor obviously makes organizational change problematic. Other vulnerabilities include the risk of creating idiosyncratic scenario libraries, or of failing to maintain and document suitable scenario libraries and their use at all, as well as difficulties in staying up to date with technical and other developments (nodes subject–tool if scenarios are available, or subject–object if no scenario is available). The conflicts found during the analysis are presented in the following section.

5.1.1 Conflicts

The analysis of simulator training at the facilities revealed several conflicts. These concerned the relations between the instructor, the tools, and the other resources used to carry out simulator training (see Fig. 3).

Subject–tool–object: The instructors at seven of the ten facilities had limited access to a library of scenarios tailored to training specific competencies and skills. In practice, this means that the instructor needs to design and/or adapt existing scenarios to fit the training of specific skills at each training occasion. In other cases, the simulator facility lacks dynamic functionalities and systems, which in practice means that the instructor needs to design the training around a less dynamic course of events. These difficulties concern both training in the simulator environment and how debriefing after training can be designed and carried out. For instance, all of the instructors at the ten facilities commented in the interviews on difficulties concerning access to log data, quantitative performance measures, and visualization tools for use during debriefing. In particular, they expressed a need for quantitative performance measures to be able to follow up training results over time. This in turn may affect the extent to which the training objectives can be reached and ascertained. Regarding continuous retraining to uphold a certain level of proficiency and prevent skill decay, instructors raised concerns about how little time is devoted to this kind of training.

Subject–rules–community: At nine of the ten facilities, the instructors expressed the need for clearer directives and regulations and, more importantly, for access to well-defined training goals originating from the commissioning body. Without access to well-defined training goals, the instructor, in collaboration with subject matter experts, needs to agree upon and define which skills and competencies are to be trained at a given training occasion. While the absence of this information could be claimed to introduce a degree of flexibility and adaptability, it also makes it difficult for the instructor to plan training sessions in advance, including the design of appropriate training scenarios. This further makes it difficult for the instructor to design and plan consecutive and long-term training sessions in which the level of difficulty is successively increased and/or new challenges are introduced systematically. In some of the military schools, the instructors also stated that, due to the high pace within and between the educational programs, little time remains for reflection on the education carried out, the use of simulator training in education, etc. Another concern raised was that there is little time to experiment and try out new ideas.

5.2 The level of the facility/organization

The facility that hosts the simulator is an organization in its own right that is not directly dependent on the commissioning body. That is, the facility can structure its own organization in terms of processes, personnel and roles to design and provide adequate and appropriate training for those receiving it. The simulator, together with its inherent fidelity (discussed below), is the facility’s main tool for carrying out the required training. The simulator and its characteristics thus frame what training the facility can provide and how, as well as how the instructor and others involved can work and carry out the training.

The organization within which simulator training is carried out includes a commissioning body that procures the training. Ideally, the commissioning body should provide rules, regulations and competence profiles to define and facilitate training at the simulator facilities (see Fig. 4, nodes subject–rules–community–object). Furthermore, the commissioning body must have a clear understanding of the mindset of the professionals who will receive training.

As observed in the analysis, however, these rules, regulations and competence profiles are often not clearly defined and/or communicated to the facilities, which is problematic for facilities striving towards the objective of professional training. Engaging senior representatives as an integral part of the facilities’ operations can, to some extent, bridge this gap by providing information or input on scenario design that addresses the requirements of a specific training session. The conflicts observed at this level are presented below.

5.2.1 Conflicts

The conflicts observed during the analysis are illustrated in Fig. 5 and primarily concern the relations between the simulator facility, the tools used, the community and the division of labor.

Subject–tool–object A few facilities use low-fidelity simulators, mostly for economic reasons. In these cases, the training experience could be considered unrealistic. However, this does not necessarily impede the training outcome, which depends on which skills and competencies are trained in the simulator. For example, simulator training can focus on decision-making and communication skills rather than on the specifics of managing instruments, panels and other tools. This requires those planning and carrying out the training to target the skills and competencies that the simulator environment can support without creating negative transfer effects. In educational programs, there is also the opportunity to combine simulator training with real-world training and practice, which can help alleviate possible negative effects. This, in turn, places demands on the pedagogical approach adopted and on access to real-world training and practice. Broadly, the more general and generic the targeted skills, or the more basic the training, the less the need for high-fidelity simulators. That is, when the training targets decision-making in dynamic situations in general rather than during specific events or tactical situations, the simulator can be less dynamic and provide relatively simple pictures and movies rather than dynamic, immersive, fully replicated environments. At the same time, such training tends to be highly dependent on the instructors’ active intervention to enact the dynamics of a scenario. Thus, the facilities’ simulator functionality and fidelity propagate throughout the activity system and frame how training can be performed.

Subject–rules–community As previously observed, there is a conflict at the instructor level concerning the lack of access to information about training goals. At the facility level, this conflict manifests itself as a lack of communication and interaction between the commissioning body and the facilities, more specifically as limited access for a facility to the rules, regulations and competence profiles for the training. This primarily concerns the military facilities, where much of the information is classified. In practice, this means that the simulator facility has to find alternative ways of determining what the commissioning body (and the trainees) need from their simulator training. This not only increases the workload for the facility personnel but also complicates the planning of training programs and the pursuit of the objective of professional training.

Subject–division of labor A majority of the facilities have a somewhat fragile organization in that responsibility for operations rests with a few key individuals. This increases the workload for these individuals and, in effect, leaves little time to reflect on, experiment with, and further develop the facility and its activities. At the individual level, this affects, e.g., the development of pedagogical competence; at the facility level, it limits the possibility of trying out new pedagogical approaches. Although the simulator facilities have increased the amount of training in recent years in response to high demand, this growth creates a long-term problem given the reliance on key individuals and the little time that can be devoted to continued development. This and other interlevel conflicts between the activity systems are described in more detail in the next section.

5.3 Conflicts between the activity systems

The conflicts presented above concern inconsistencies within each level, i.e., with the instructor and the facility, respectively, as the unit of analysis. There are, however, also conflicts between these two levels of abstraction, as illustrated by Fig. 6.

The most serious conflict concerns the instructor’s role as simulator training designer and the division of labor within a facility (the node subject at the instructor level, and the node division of labor at the organizational level). The instructor is a key person and a scarce resource, which makes the facilities dependent on the instructor’s availability both strategically and operationally. The problem propagates throughout the levels of activity, since the design of the training programs (scenarios, pedagogical models, performance measures and debriefing) relies heavily on the instructor, who consequently has little time to reflect on, discuss and develop these aspects. Strategically, the facility thus becomes vulnerable. An obvious solution would be to employ more instructors, but this depends, among other things, on the financial situation of the facility.

The second interlevel conflict is between the tools available to the instructor and the object of the facility in terms of training and fostering appropriate skills (the node tool at the instructor level, and the node object at the organizational level). The tools the instructors use seldom focus on skills; rather, they measure scenario-specific performance. The conflict lies in the gap between what the facilities can offer the commissioning bodies and what they can actually assess and demonstrate through measurement and evaluation. This is in turn connected to the more general problem of measuring and ascertaining the effects of training.

Many of the conflicts within simulator training appear to be related to the tools put into the hands of the instructor, including information about training goals (regulations, directives and competence profiles). A more general problem concerns the extent to which training in the simulator environment is effective in reality. None of the facilities has adopted a structured approach to evaluating this fit or discrepancy. However, the facilities’ subjective assessment is that there is a degree of fit, since both the commissioning bodies and those receiving training are keen to continue using the facilities.

5.4 Observed conflicts related to the defined key aspects of training

Several conflicts within and between the levels of abstraction were observed in the analysis. Returning to the key aspects of simulator training defined in Sect. 2.3, i.e., the crucial aspects to be considered when designing, planning, developing, carrying out and evaluating training in simulator environments, Table 1 maps the observed conflicts to these aspects.

The analysis revealed several problems relating to the lack of information and regulations, and to how these are communicated to the facilities and to the instructors who carry out the training. Instructors in simulator training therefore need to be creative and find ways to work around and mitigate these problems. This also applies to more technology-oriented problems, such as low-fidelity simulators that lack, e.g., visualization tools for debriefing. In the worst case, the lack of information and communication can jeopardize the objective of professional training by hindering the design and planning of training, with the result that the facility is not used to its full potential.

There is thus a need for a holistic approach in which the prerequisites for training are decided at the organizational level and then communicated and propagated to the facilities and instructors, along with the resources needed to design and carry out the training as intended.

6 Discussion

In complex situations, the number of possible actions and decisions could be said to be infinite, or at least impossible to determine beforehand (Orasanu and Connolly 1993). Simulator training for dynamic decision-making in complex situations strives to narrow these down to a finite range of actions and decisions, i.e., those that are timely and appropriate given the context of the scenario. This calls for a structured approach to the design of simulator training, in which pedagogical approaches and the use of scenarios and performance measurements are specifically tailored to train the targeted skills (Branson et al. 1975; Cook et al. 2013).

This article has analyzed how simulator training is designed and carried out at ten simulator facilities. The results reveal several conflicting interdependencies between nodes, both within and between activity systems. The most alarming and recurring observation concerns communication and interaction between the commissioning body that orders simulator training and the organizations and facilities that design and carry out the training. More specifically, the observations concern how and to what degree rules, regulations and training goals are defined, articulated and communicated between the commissioning body and the simulator facility. The lack of communication puts the simulator facility at risk of failing to deliver professional training and of not being used to its full potential. This situation is most evident at the military simulator facilities, owing to confidentiality requirements and other legal regulations that must be adhered to. This study has thus shown the importance of organizational prerequisites for efficient and appropriate simulator training. In contrast to meta-reviews (see, e.g., Lineberry et al. 2013; Cook et al. 2011; Issenberg et al. 2005), which focus on transfer effectiveness, this study has focused on how efficient and appropriate training can or should be organized.

The question of how and to what degree rules, regulations and training goals are defined, articulated and communicated in turn influences the definition of specific training goals and relevant performance measures. This becomes particularly important when designing training programs and consecutive training sessions aimed at training specific skills. The extent to which well-defined performance measures (both quantitative and qualitative) can be obtained strongly influences the feedback that can be given during debriefing sessions and, depending on the pedagogical model used, the use of different representations and visualizations to spark collaborative as well as individual reflection. On the one hand, relying too heavily on subjective and qualitative measures during debriefings and follow-up of training results risks becoming too abstract and detached from specifics, which in turn could make it difficult to detect, e.g., skill decay. On the other hand, relying too heavily on quantitative and specific measures may come at the expense of reflection and abstraction (see Bell and Waag 1998). As pointed out by, e.g., Lineberry et al. (2013), such methodological issues make comparative analysis of transfer effectiveness problematic. Although transfer between simulator training and real-world situations is admittedly a difficult issue, the definition and use of performance measures also influence how and to what extent transfer effects can be measured and ascertained (Ramberg and Karlgren 1998; Artman et al. 2013; Ekanayake et al. 2013). Still, as the analysis in this article shows, some issues concern not only measures but also common ground and communication between different actors, communities and formal bodies.

6.1 Training for the wild

The conflicts highlighted in the analysis appear between and within different levels of abstraction and concern instructors, training facilities, commissioning bodies, and other stakeholders. Taken together, the conflicts raise concerns about the extent to which relevant training goals are actually and efficiently achieved. As indicated, a more general problem is how to ascertain that training in the simulator environment is effective in reality, which must be considered the ultimate goal of any training endeavor. This requires both that the training goals are relevant and that the degree to which they are achieved can be measured. Further, for the training to be efficient, it is also necessary to be able to evaluate and compare different ways of achieving the same training goals in order to design the most efficient training curriculum (see, e.g., Lineberry et al. 2013). In the following, the efficient achievement of relevant training goals is discussed further in light of the present study.

From a generic perspective, training goals can be achieved using several different training approaches. Such an approach might involve, e.g., theoretical studies, tabletop exercises, or hands-on training using a high-fidelity flight simulator. Further, a tool such as a computer-based training simulator can be used didactically in different ways to reach the same training goals, and these different uses may in turn give rise to different cognitive processes (see, e.g., Artman 2000). Hence, there is a need to make good use of the available resources (Salas et al. 1998), and efficient training requires the ability to evaluate and choose the best training approach. The instructors consider debriefing to be of utmost importance for the learning process, both for the individual and for the team or group. Still, relatively few formal debriefing tools are available at the analyzed facilities.

As indicated above, efficient achievement of relevant training goals requires the training design to be made explicit so that it can be used as a tool for systematic improvement. In this way, small adjustments to the training can be implemented and evaluated in a continuous effort to find better ways of reaching the training goals. To do this, the training goals need to be described so that they can be measured, and the training can then be designed to fulfill these goals to the greatest extent possible (Branson et al. 1975). The overarching idea is that any good training endeavor should remain continuously open to constructive criticism and further development, so as to improve the forms and processes for achieving the training goals. From a training simulator perspective, this means that one should assess the improvements that the simulator brings about compared with other ways of reaching the training goals, i.e., whether the simulator is actually better than, e.g., reading a book or training in a real-world scenario. Moreover, provided that the simulator does offer a superior alternative, one should also continuously assess the current use of the simulator and compare it with other possible ways of using the same simulator, e.g., whether the simulator training should be combined with other activities, how the simulator parameters should be varied, etc. As an example, Lilja et al. (2016) have studied how fighter pilot radio communication can potentially be used to improve air combat training debriefings.

The idea of using observations as empirical data for continuous reflection on, and improvement of, the training design is in line with contemporary educational practice and with action research methodology (Cohen et al. 2011). Sharing the key aspects of training proposed in the ADDIE model (Branson et al. 1975), this paradigm prescribes that curriculum design and development be governed by careful planning, observation, and reflection on the actions taking place “in the classroom,” and that small adjustments to the training design be implemented and evaluated iteratively.

6.2 Recommendations

From the perspective of the present study and the concerns raised in the analysis, two important overall conclusions can be drawn. First, the commissioning body and the training facility must work together to define measurable training goals that are both operationally relevant and amenable to assessment. For the commissioning body, this involves not only defining training goals prior to training but, equally importantly, revising them based on the actual impact of the training, i.e., assessing the operational value of the conducted training as a basis for improving the future design of training curricula. Second, the training facility needs to use the training goals as a basis for continuous reflection on, and further development of, the training design for the training to be efficient.

From an instructor viewpoint, it is important to maintain and develop both domain and pedagogical competence. This calls for a long-term strategy to avoid dependence on specific individuals for conducting the training. From an organizational viewpoint, there is a need for commonly accepted tools (technical as well as conceptual) for systematic assessment, simulation of complex training situations, and visualization of data. Without such tools, facilities tend to be forced to adopt inferior solutions that risk hindering efficient training. Also, to be able to learn from other facilities and organizations, explicit processes and a pedagogical frame of reference are needed.

7 Conclusions

In any design, be it of a digital artifact or of learning activities, sacrifices and trade-offs have to be made. In the design of simulator training for time-critical and dynamic situations, it is crucial to make these sacrifices and trade-offs explicit, so that the training can be meaningfully complemented by training on other platforms and in other contexts to ensure that the training goals are met.

The study presented herein has investigated how simulator training in time-critical and dynamic domains is planned, carried out, and followed up. As a general conclusion, all three investigated aspects (i.e., planning, carrying out, and following up) are to a great extent governed by the means (technological, conceptual, etc.) at one’s disposal rather than by the overarching training goals. As a consequence, it becomes difficult to measure and constructively criticize the training endeavor, which hinders continuous improvement.

The study was carried out in Sweden using a qualitative methodology. Hence, the generalizability of the findings can be questioned. Similar qualitative and comparative studies should therefore be performed in other countries to investigate, and possibly confirm, whether the same structural dependencies can be found or whether the challenges are unique to Swedish conditions.

References

Ang CS, Zaphiris P, Wilson S (2010) Computer games and sociocultural play: an activity theoretical perspective. Games Cult 5(4):354–380. https://doi.org/10.1177/1555412009360411

Artman H (2000) Team situation assessment and information distribution. Ergonomics 43(8):1111–1128. https://doi.org/10.1080/00140130050084905

Artman H, Wærn Y (1999) Distributed cognition in an emergency co-ordination center. Cogn Technol Work 1(4):237–246. https://doi.org/10.1007/s101110050020

Artman H, Borgvall J, Castor M, Jander H, Lindquist S, Ramberg R (2013) Towards the learning organisation: frameworks, methods, and tools for resource-efficient and effective training. Technical report FOI-R--3711--SE, Swedish Defence Research Agency, Stockholm, Sweden

Bailey J, Hughes RG, Jones WE (1980) Application of backward chaining to air-to-surface weapons delivery training. Technical report AFHRL-TR-79-63, Air Force Human Resources Laboratory, Brooks Air Force Base, Texas

Battista A (2015) Activity theory and analyzing learning in simulations. Simul Gaming 46(2):187–196. https://doi.org/10.1177/1046878115598481

Bell HH, Waag WL (1998) Evaluating the effectiveness of flight simulators for training combat skills: a review. Int J Aviat Psychol 8(3):223–242. https://doi.org/10.1207/s15327108ijap0803_4

Branson RK, Rayner GT, Cox JL, Furman JP, King FJ, Hannum WH (1975) Interservice procedures for instructional systems development (5 volumes). TRADOC Pamphlet 350-30, U.S. Army Training and Doctrine Command, Fort Monroe, Virginia

Brehmer B (1992) Dynamic decision making: human control of complex systems. Acta Psychologica 81(3):211–241. https://doi.org/10.1016/0001-6918(92)90019-A

Brehmer B, Allard R (1991) Dynamic decision making: the effects of task complexity and feedback delay. In: Rasmussen J, Brehmer B, Leplat J (eds) Distributed decision making: cognitive models for cooperative work, chap 16. Wiley, Chichester, pp 319–334

Brynielsson J (2006) A gaming perspective on command and control. Ph.D. thesis, KTH Royal Institute of Technology, Stockholm, Sweden. http://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-4029

Cohen L, Manion L, Morrison K (2011) Research methods in education, chap 18, 7th edn. Routledge, London, pp 344–361

Cole M, Engeström Y (1993) A cultural–historical approach to distributed cognition. In: Salomon G (ed) Distributed cognitions: psychological and educational considerations. Learning in doing: social, cognitive, and computational perspectives, chap 1. Cambridge University Press, Cambridge, pp 1–46

Conant RC, Ashby WR (1970) Every good regulator of a system must be a model of that system. Int J Syst Sci 1(2):89–97. https://doi.org/10.1080/00207727008920220

Cook DA, Hatala R, Brydges R, Zendejas B, Szostek JH, Wang AT, Erwin PJ, Hamstra SJ (2011) Technology-enhanced simulation for health professions education: a systematic review and meta-analysis. J Am Med Assoc 306(9):978–988. https://doi.org/10.1001/jama.2011.1234

Cook DA, Hamstra SJ, Brydges R, Zendejas B, Szostek JH, Wang AT, Erwin PJ, Hatala R (2013) Comparative effectiveness of instructional design features in simulation-based education: systematic review and meta-analysis. Med Teach 35(1):e867–e898. https://doi.org/10.3109/0142159X.2012.714886

Dissanayeke U, Hewagamage KP, Ramberg R, Wikramanayake G (2014) Study to initiate mobile learning among a group of young farmers from Kandy district: an activity theory based approach. Trop Agric Res 26(1):26–38. https://doi.org/10.4038/tar.v26i1.8069

Dissanayeke U, Hewagamage KP, Ramberg R, Wikramanayake G (2016) Developing and testing an m-learning tool to facilitate guided-informal learning in agriculture. Int J Adv ICT Emerg Reg 8(3):12–21. https://doi.org/10.4038/icter.v8i3.7165

Edwards W (1962) Dynamic decision theory and probabilistic information processing. Hum Factors 4(2):59–73. https://doi.org/10.1177/001872086200400201

Ekanayake HB, Backlund P, Ziemke T, Ramberg R, Hewagamage KP, Lebram M (2013) Comparing expert driving behavior in real world and simulator contexts. Int J Comput Games Technol. https://doi.org/10.1155/2013/891431

Ellaway RH (2014) Virtual patients as activities: exploring the research implications of an activity theoretical stance. Perspect Med Educ 3(4):266–277. https://doi.org/10.1007/s40037-014-0134-z

Engeström Y (1987) Learning by expanding: an activity-theoretical approach to developmental research. Orienta-Konsultit Oy, Helsinki

Goldstein IL (1993) Training in organizations: needs assessment, development, and evaluation, 3rd edn. Brooks/Cole Publishing Company, Pacific Grove

Hays RT, Jacobs JW, Prince C, Salas E (1992) Flight simulator training effectiveness: a meta-analysis. Milit Psychol 4(2):63–74. https://doi.org/10.1207/s15327876mp0402_1

Issenberg SB, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ (2005) Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach 27(1):10–28. https://doi.org/10.1080/01421590500046924

Kuutti K (1996) Activity theory as a potential framework for human–computer interaction research. In: Nardi BA (ed) Context and consciousness: activity theory and human–computer interaction, chap 2. MIT Press, Cambridge, pp 17–44

Leont’ev AN (1978) Activity, consciousness, and personality. Prentice Hall, Inc., Englewood Cliffs

Lilja H, Brynielsson J, Lindquist S (2016) Identifying radio communication inefficiency to improve air combat training debriefings. In: Proceedings of the 2016 interservice/industry training, simulation, and education conference (I/ITSEC 2016), National Training and Simulation Association (NTSA), Arlington, Virginia, paper no. 16287

Lineberry M, Walwanis M, Reni J (2013) Comparative research on training simulators in emergency medicine: a methodological review. Simul Healthc 8(4):253–261. https://doi.org/10.1097/SIH.0b013e31828715b1

Ney M, Gonçalves C, Balacheff N (2014) Design heuristics for authentic simulation-based learning games. IEEE Trans Learn Technol 7(2):132–141. https://doi.org/10.1109/TLT.2014.2316161

Nouri J, Cerratto-Pargman T (2015) Characterizing learning mediated by mobile technologies: a cultural–historical activity theoretical analysis. IEEE Trans Learn Technol 8(4):357–366. https://doi.org/10.1109/TLT.2015.2389217

Nouri J, Cerratto-Pargman T, Rossitto C, Ramberg R (2014) Learning with or without mobile devices? A comparison of traditional school field trips and inquiry-based mobile learning activities. Res Pract Technol Enhanc Learn 9(2):241–262

Orasanu J, Connolly T (1993) The reinvention of decision making. In: Klein GA, Orasanu J, Calderwood R, Zsambok CE (eds) Decision making in action: models and methods, chap 1. Ablex Publishing Corporation, Norwood, pp 3–20

Qi Y, Meloche J (2009) Acknowledging the importance of play, with the use of flight simulator training, as a way to advance knowledge management practices. Int J Knowl Cult Change Manag Annu Rev 9(8):155–179. https://doi.org/10.18848/1447-9524/CGP/v09i08/49779

Ramberg R, Karlgren K (1998) Fostering superficial learning. J Comput Assist Learn 14(2):120–129. https://doi.org/10.1046/j.1365-2729.1998.1420120.x

Remolina E, Ramachandran S, Stottler R, Davis A (2009) Rehearsing naval tactical situations using simulated teammates and an automated tutor. IEEE Trans Learn Technol 2(2):148–156. https://doi.org/10.1109/TLT.2009.24

Salas E, Bowers CA, Rhodenizer L (1998) It is not how much you have but how you use it: toward a rational use of simulation to support aviation training. Int J Aviat Psychol 8(3):197–208. https://doi.org/10.1207/s15327108ijap0803_2

Sawyer RK (2004) Creative teaching: collaborative discussion as disciplined improvisation. Educ Res 33(2):12–20. https://doi.org/10.3102/0013189X033002012

Sellberg C (2018) From briefing, through scenario, to debriefing: the maritime instructor’s work during simulator-based training. Cogn Technol Work 20(1):49–62. https://doi.org/10.1007/s10111-017-0446-y

Stanton NA (1996) Simulators: a review of research and practice. In: Stanton NA (ed) Human factors in nuclear safety, chap 7. Taylor & Francis, London, pp 114–137

Vygotsky LS (1986) Thought and language. MIT Press, Cambridge

Wahl AM (2019) Expanding the concept of simulator fidelity: the use of technology and collaborative activities in training maritime officers. Cogn Technol Work. https://doi.org/10.1007/s10111-019-00549-4

Wilson MA, Bennett W Jr, Gibson SG, Alliger GM (eds) (2012) The handbook of work analysis: methods, systems, applications and science of work measurement in organizations. Applied psychology series. Taylor & Francis, London

Acknowledgements

Open access funding was provided by the Swedish Defence Research Agency. Research funding was provided by the Swedish Armed Forces.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Aronsson, S., Artman, H., Brynielsson, J. et al. Design of simulator training: a comparative study of Swedish dynamic decision-making training facilities. Cogn Tech Work 23, 117–130 (2021). https://doi.org/10.1007/s10111-019-00605-z

DOI: https://doi.org/10.1007/s10111-019-00605-z