Abstract

A concerted research effort over the past two decades has heralded significant improvements in both the efficiency and effectiveness of time series classification. The consensus that has emerged in the community is that the best solution is a surprisingly simple one. In virtually all domains, the most accurate classifier is the nearest neighbor algorithm with dynamic time warping as the distance measure. However, the time complexity of dynamic time warping means that successful deployments on resource-constrained devices remain elusive. Moreover, the recent explosion of interest in wearable computing devices, which typically have limited computational resources, has greatly increased the need for very efficient classification algorithms. A classic technique to obtain the benefits of the nearest neighbor algorithm, without inheriting its undesirable time and space complexity, is to use the nearest centroid algorithm. Unfortunately, the unique properties of (most) time series data mean that the centroid typically does not resemble any of the instances, an unintuitive and underappreciated fact. In this paper we demonstrate that we can exploit a recent result by Petitjean et al. to allow meaningful averaging of “warped” time series, which then allows us to create super-efficient nearest “centroid” classifiers that are at least as accurate as their more computationally challenged nearest neighbor relatives. We demonstrate empirically the utility of our approach by comparing it to all the appropriate strawman algorithms on the ubiquitous UCR Benchmarks and with a case study in supporting insect classification on resource-constrained sensors.

Notes

Note that the cognitive science use of “ensemble” is unrelated to the more familiar machine learning meaning.

It actually finds the compact multiple alignment [28].

We use 42 datasets, i.e., all but two of the datasets of the archive; we have excluded the StarLightCurve and FetalECG for computational reasons.

In case of ties, we assign the average (or fractional) ranking. For example, if there is one winner, two classifiers tied for second place, and one loser, i.e., [1, 2, 2, 4], then the fractional ranking will be [1, 2.5, 2.5, 4].
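The fractional ranking described in the last note can be sketched in a few lines of Python. This is only an illustration: the function name `fractional_ranks` and the error rates are hypothetical, and lower scores are assumed to be better.

```python
def fractional_ranks(scores):
    """Rank scores in ascending order (lower is better).

    Tied scores all receive the mean of the positions they occupy.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next score ties with the current one.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        # Positions are 1-based, so the run covers start+1 .. end+1.
        mean_rank = (start + 1 + end + 1) / 2
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


# Hypothetical error rates for four classifiers on one dataset.
error_rates = [0.10, 0.20, 0.20, 0.35]
print(fractional_ranks(error_rates))  # [1.0, 2.5, 2.5, 4.0]
```

The two tied classifiers occupy positions 2 and 3, so each receives (2 + 3) / 2 = 2.5.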

References

Wang X, Mueen A, Ding H, Trajcevski G, Scheuermann P, Keogh E (2013) Experimental comparison of representation methods and distance measures for time series data. Data Min Knowl Discov 26(2):275–309

Bagnall A, Lines J (2014) An experimental evaluation of nearest neighbour time series classification. Technical report #CMP-C14-01, Department of Computing Sciences, University of East Anglia

Xi X, Keogh E, Shelton C, Wei L, Ratanamahatana CA (2006) Fast time series classification using numerosity reduction, in international conference on machine learning, pp 1033–1040

Rakthanmanon T, Campana B, Mueen A, Batista G, Westover B, Zhu Q, Zakaria J, Keogh E (2012) Searching and mining trillions of time series subsequences under dynamic time warping, in international conference on knowledge discovery and data mining, pp 262–270

Assent I, Wichterich M, Krieger R, Kremer H, Seidl T (2009) Anticipatory DTW for efficient similarity search in time series databases. Proc VLDB Endow 2(1):826–837

Kremer H, Günnemann S, Ivanescu A-M, Assent I, Seidl T (2011) Efficient processing of multiple DTW queries in time series databases. Scientific and statistical database management. Springer, Berlin, pp 150–167

Zhuang DE, Li GC, Wong AK (2014) Discovery of temporal associations in multivariate time series. IEEE Trans Knowl Data Eng 26(12):2969–2982

Petitjean F, Ketterlin A, Gançarski P (2011) A global averaging method for dynamic time warping, with applications to clustering. Pattern Recognit 44(3):678–693

Petitjean F, Forestier G, Webb GI, Nicholson AE, Chen Y, Keogh E (2014) Dynamic time warping averaging of time series allows faster and more accurate classification, in IEEE international conference on data mining, pp 470–479

Galton F (1907) Vox populi. Nature 75(1949):450–451

Tibshirani R, Hastie T, Narasimhan B, Chu G (2002) Diagnosis of multiple cancer types by shrunken centroids of gene expression. Proc Natl Acad Sci 99(10):6567–6572

Gou J, Yi Z, Du L, Xiong T (2012) A local mean-based k-nearest centroid neighbor classifier. Comput J 55(9):1058–1071

Hart PE (1968) The condensed nearest neighbor rule. IEEE Trans Inf Theory 14(3):515–516

Xi X, Ueno K, Keogh E, Lee D-J (2008) Converting non-parametric distance-based classification to anytime algorithms. Pattern Anal Appl 11(3–4):321–336

Additional material. http://www.tiny-clues.eu/Research/ICDM2014-DTW/index.php

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

Ariely D (2001) Seeing sets: representation by statistical properties. Psychol Sci 12(2):157–162

Alvarez GA (2011) Representing multiple objects as an ensemble enhances visual cognition. Trends Cognit Sci 15(3):122–131

Jenkins R, Burton A (2008) 100 % accuracy in automatic face recognition. Science 319(5862):435–435

Gusfield D (1997) Algorithms on strings, trees, and sequences: computer science and computational biology, Cambridge University Press, ch. 14 multiple string comparison—The Holy Grail, pp 332–367

Wang L, Jiang T (1994) On the complexity of multiple sequence alignment. J Comput Biol 1(4):337–348

Gupta L, Molfese DL, Tammana R, Simos PG (1996) Nonlinear alignment and averaging for estimating the evoked potential. IEEE Trans Biomed Eng 43(4):348–356

Wang K, Gasser T et al (1997) Alignment of curves by dynamic time warping. Ann Stat 25(3):1251–1276

Niennattrakul V, Ratanamahatana CA (2009) Shape averaging under time warping, in IEEE international conference on electrical engineering/electronics, computer, telecommunications and information technology, vol. 2, pp 626–629

Ongwattanakul S, Srisai D (2009) Contrast enhanced dynamic time warping distance for time series shape averaging classification, in International conference on interaction sciences: information technology, culture and human, ACM, pp 976–981

Feng D-F, Doolittle RF (1987) Progressive sequence alignment as a prerequisite to correct phylogenetic trees. J Mol Evol 25(4):351–360

Keogh E, Xi X, Wei L, Ratanamahatana CA (2011) The UCR time series classification/clustering homepage. http://www.cs.ucr.edu/~eamonn/time_series_data/

Petitjean F, Gançarski P (2012) Summarizing a set of time series by averaging: from Steiner sequence to compact multiple alignment. Theor Comput Sci 414(1):76–91

Petitjean F, Inglada J, Gançarski P (2012) Satellite image time series analysis under time warping. IEEE Trans Geosci Remote Sens 50(8):3081–3095

Petitjean F (2014) Matlab and Java source code for DBA. doi:10.5281/zenodo.10432

Kranen P, Seidl T (2009) Harnessing the strengths of anytime algorithms for constant data streams. Data Min Knowl Discov 19(2):245–260

Hu B, Rakthanmanon T, Hao Y, Evans S, Lonardi S, Keogh E (2011) Discovering the intrinsic cardinality and dimensionality of time series using MDL, in IEEE international conference on data mining, pp 1086–1091

Ratanamahatana CA, Keogh E (2005) Three myths about dynamic time warping data mining, in SIAM international conference on data mining, pp 506–510

Niennattrakul V, Ratanamahatana CA (2007) Inaccuracies of shape averaging method using dynamic time warping for time series data, in international conference on computational science. Springer, pp 513–520

Pekalska E, Duin RP, Paclík P (2006) Prototype selection for dissimilarity-based classifiers. Pattern Recognit 39(2):189–208

Ueno K, Xi X, Keogh E, Lee D-J (2006) Anytime classification using the nearest neighbor algorithm with applications to stream mining, in IEEE international conference on data mining, pp 623–632

Chen Y, Why A, Batista G, Mafra-Neto A, Keogh E (2014) Flying insect classification with inexpensive sensors. J Insect Behav 27(5):657–677

Goddard LB, Roth AE, Reisen WK, Scott TW et al (2002) Vector competence of California mosquitoes for west Nile virus. Emerging Infect Dis 8(12):1385–1391

Yang Y, Webb GI, Korb K, Ting K-M (2007) Classifying under computational resource constraints: anytime classification using probabilistic estimators. Mach Learn 69(1):35–53

Acknowledgments

This research was supported by the ARC DP120100553 and DP140100087, the NSF IIS-1161997, the Bill and Melinda Gates Foundation, Vodafone’s Wireless Innovation Project, the French-Australia Science Innovation Collaboration Grants PHC Grant No. 32571NA and the Air Force Office of Scientific Research, Asian Office of Aerospace Research under contracts FA2386-15-1-4017 and FA2386-15-1-4007.

Appendices

Appendix 1: proof of convergence of DBA

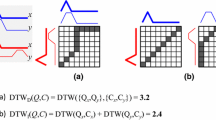

We want to prove that, at each iteration, DBA provides a better average sequence \(\overline{T} \), i.e., one with a lower sum of squares (Eq. 2). DTW is guaranteed to find the minimum-cost alignment between two sequences, which proves optimality for the first step of DBA (Table 1, Algorithm 2, lines 1–8). Proving convergence thus requires showing that, for a given multiple alignment M, the computed \(\overline{T} \) is optimal.

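Let \(M=DTW\_multiple\_alignment\left( {\overline{T} ,{\mathbf {D}}} \right) \) (Table 1, Algorithm 3) and \(M_{\ell } =M\left[ \ell \right] \). We start by rewriting the objective function (sum of squares, SS):

\[\hbox {SS}\left( {\overline{T} ,{\mathbf {D}}} \right) =\sum _{\ell =1}^{L} \sum _{e\in M_{\ell }} \left( {\overline{T} _{\ell } -e} \right) ^{2}\]

where \(L\) is the length of \(\overline{T} \), \(\overline{T} _{\ell }\) is its \(\ell \mathrm{th}\) element, and e is an element of a sequence of \({\mathbf {D}}\) that has been “linked” to the \(\ell \mathrm{th}\) element of \(\overline{T} \) by Dynamic Time Warping. Given that this function is a sum of squares and therefore has no maximum, it is minimized when its partial derivative with respect to each \(\overline{T} _{\ell }\) is 0:

\[\frac{\partial \, \hbox {SS}\left( {\overline{T} ,{\mathbf {D}}} \right) }{\partial \overline{T} _{\ell }} =2\sum _{e\in M_{\ell }} \left( {\overline{T} _{\ell } -e} \right) =0\quad \Longleftrightarrow \quad \overline{T} _{\ell } =\frac{1}{\left| {M_{\ell }} \right| }\sum _{e\in M_{\ell }} e\]

This leads to \(\hbox {SS}\left( {\overline{T} ,{\mathbf {D}}} \right) \) being minimized when every element \(\ell \) of \(\overline{T} \) is positioned as the mean of the \(\left| {M_{\ell }} \right| \) elements of \(M_{\ell }\). \(\square \)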
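The two steps of the argument can be checked numerically with a small Python sketch. It is a minimal illustration under stated assumptions, not the released DBA code: it uses unconstrained DTW with a squared pointwise cost, the helper names (`dtw_path`, `multiple_alignment`, `sum_of_squares`, `dba_update`) are hypothetical, and the toy data set `D` is made up.

```python
import math


def dtw_path(a, b):
    """Return one optimal DTW alignment of a and b as (i, j) index pairs."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = (a[i - 1] - b[j - 1]) ** 2
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    # Backtrack from the last cell to recover the alignment.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        step = min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
        if step == cost[i - 1][j - 1]:
            i, j = i - 1, j - 1
        elif step == cost[i - 1][j]:
            i -= 1
        else:
            j -= 1
    return path[::-1]


def multiple_alignment(avg, series):
    """M[l] collects every element of the data linked to avg[l] by DTW."""
    M = [[] for _ in avg]
    for s in series:
        for l, j in dtw_path(avg, s):
            M[l].append(s[j])
    return M


def sum_of_squares(avg, M):
    """Objective SS for a fixed multiple alignment M."""
    return sum((avg[l] - e) ** 2 for l in range(len(avg)) for e in M[l])


def dba_update(M):
    """Second step: set each element of the average to the mean of M[l]."""
    return [sum(m) / len(m) for m in M]


# Made-up toy data set and an initial average taken from it.
D = [[0.0, 1.0, 2.0, 1.0, 0.0],
     [0.0, 0.0, 1.0, 2.0, 1.0],
     [1.0, 2.0, 2.0, 1.0, 0.0]]
avg = list(D[0])

M = multiple_alignment(avg, D)          # step 1: align with DTW
new_avg = dba_update(M)                 # step 2: take per-position means
ss_before = sum_of_squares(avg, M)
ss_after = sum_of_squares(new_avg, M)   # same alignment, updated average
ss_realigned = sum_of_squares(new_avg, multiple_alignment(new_avg, D))
print(ss_before, ss_after, ss_realigned)
```

For a fixed alignment the mean cannot increase SS, and re-aligning the updated average with DTW cannot increase it either, so the three printed values are non-increasing from left to right.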

Appendix 2: quantitative evaluation of DBA

See Table 6.

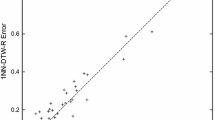

Appendix 3: representative samples of the full set of results available at [33]

Figure 9a presents the results on the electrocardiogram time series dataset (ECG 200), which records the electrical potential between two points on the surface of the body caused by a beating heart [27]. In this dataset, the proposed condensing methods that make use of the average (KMeans and AHC) outperform all other methods. As in our example for insect surveillance (Fig. 8), a better overall accuracy can be reached with a subset of prototypes than with the entire training set. The technique based on AHC reaches an error rate of 14 % with only 16 prototypes per class, while the full 1-NN algorithm requires more than 50 prototypes per class to obtain a 23 % error rate.

Fig. 9 Error rate (with standard deviation) of various data condensing techniques for every output training size from 1 per class to 100 per class (best viewed in color). The curves are slightly smoothed for visual clarity; the raw data spreadsheets are available at [33]

Figure 9b presents the results on the Gun/NoGun motion capture time series dataset. Here again, our average-based condensing techniques dominate state-of-the-art methods. It is interesting to observe the large reduction in the error rate with 2 to 5 items per class. This can be explained by the multimodality of the two classes of the dataset, which was created from recordings of the movements of people with different heights.

Figure 9c presents the results on the uWaveGestureLibrary(Z) time series dataset, which contains over 4000 samples of accelerometer readings for gesture recognition. This example shows that one prototype per class makes it possible to “explain” most of the variance in the classes of the dataset. This is another critical example, because gesture recognition systems not only have to be reliable, but often must also perform the recognition very quickly. With one prototype per class on this dataset, which is composed of more than 100 training time series for each class, our condensing technique offers a 100-fold speedup, with a loss in accuracy of only 5 %. This starkly contrasts with condensing using the best non-average-based method (K-medoids), for which the error rate increases by 14 % for the same speedup.
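The source of the speedup is simply the number of DTW computations per query, which the following Python sketch makes explicit. It is an illustration only: the toy data, the helper names (`dtw_distance`, `classify`, `bump`) and the hand-picked prototypes are assumptions, and the prototypes stand in for learned averages rather than being computed by DBA.

```python
import math


def dtw_distance(a, b):
    """Unconstrained DTW with squared pointwise cost, using two rows of memory."""
    prev = [0.0] + [math.inf] * len(b)
    for x in a:
        curr = [math.inf]
        for j, y in enumerate(b, start=1):
            curr.append((x - y) ** 2 + min(prev[j - 1], prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def classify(query, prototypes):
    """Nearest-prototype rule; returns (label, number of DTW computations)."""
    best_label, best_dist = None, math.inf
    for series, label in prototypes:
        dist = dtw_distance(query, series)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label, len(prototypes)


def bump(shift, sign, length=12):
    """Hypothetical toy series: a small bump (or dip) starting at index `shift`."""
    s = [0.0] * length
    for offset, value in enumerate([1.0, 2.0, 1.0]):
        s[shift + offset] = sign * value
    return s


# Toy training set: 100 series per class, differing only by a time shift.
train = [(bump(k % 5 + 1, +1), "up") for k in range(100)]
train += [(bump(k % 5 + 1, -1), "down") for k in range(100)]

# One hand-picked prototype per class stands in for a learned average.
condensed = [(bump(3, +1), "up"), (bump(3, -1), "down")]

query = bump(6, +1)
label_full, calls_full = classify(query, train)
label_small, calls_small = classify(query, condensed)
print(label_full, label_small, calls_full // calls_small)
```

With 100 training series per class replaced by one prototype per class, each query needs 2 DTW computations instead of 200, which is the 100-fold reduction in work; whether accuracy is preserved depends on how well the prototypes summarize each class.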

Cite this article

Petitjean, F., Forestier, G., Webb, G.I. et al. Faster and more accurate classification of time series by exploiting a novel dynamic time warping averaging algorithm. Knowl Inf Syst 47, 1–26 (2016). https://doi.org/10.1007/s10115-015-0878-8


DOI: https://doi.org/10.1007/s10115-015-0878-8