Abstract

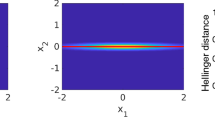

Metropolis algorithms for approximate sampling of probability measures on infinite dimensional Hilbert spaces are considered, and a generalization of the preconditioned Crank–Nicolson (pCN) proposal is introduced. The new proposal is able to incorporate information on the measure of interest. A numerical simulation of a Bayesian inverse problem indicates that a Metropolis algorithm with such a proposal performs independently of the state-space dimension and the variance of the observational noise. Moreover, a qualitative convergence result is provided by a comparison argument for spectral gaps. In particular, it is shown that the generalization inherits geometric convergence from the Metropolis algorithm with pCN proposal.

Similar content being viewed by others

Notes

In general elliptic PDEs can be solved in a weak sense by variational methods. Then adjoint methods known from PDE-constrained optimization and parameter identification can be employed to compute \(\nabla G(\varvec{\xi })\), see [31, Chapter 6] for details.

The empirical performance of each algorithm was best for this particular tuning.

We also studied other functions such as \(f(\varvec{\xi }) = \xi _1\), \(f(\varvec{\xi }) = \max _x {{\mathrm{\mathrm {e}}}}^{u(x,\varvec{\xi })}\) and \(f(\varvec{\xi }) = p(0.5,\varvec{\xi })\) but the results of the comparison were essentially the same.

We also estimated the \(\text {ESS}\) by batch means (100 batches of size \(10^4\)) to control our simulations. This led to similar results.

References

A. Beskos, G. Roberts, A. Stuart, and J. Voss. MCMC methods for diffusion bridges. Stoch. Dynam., 8(3):319–350, 2008.

V. Bogachev. Gaussian Measures. American Mathematical Society, 1998.

N. Bou-Rabee and M. Hairer. Nonasymptotic mixing of the MALA algorithm. IMA J. Numer. Anal., 33(1):80–110, 2013.

S. Cotter, G. Roberts, A. Stuart, and D. White. MCMC methods for functions: Modifying old algorithms to make them faster. Stat. Sci., 28(3):283–464, 2013.

T. Cui, K. Law, and Y. Marzouk. Dimension-independent likelihood-informed MCMC. Journal of Computational Physics, 304:109–137, 2016.

G. Da Prato and J. Zabczyk. Second Order Partial Differential Equations in Hilbert Spaces. Cambridge University Press, Cambridge, 2004.

R. Douglas. On majorization, factorization, and range inclusion of operators on Hilbert space. Proc. Amer. math. Soc., 17:413–415, 1966.

N. Dunford and J. Schwartz. Linear Operators, Part I: General Theory. Wiley-Interscience, New York, 1958.

O. Ernst, B. Sprungk, and H.-J. Starkloff. Analysis of the ensemble and polynomial chaos Kalman filters in Bayesian inverse problems. SIAM/ASA J. Uncertainty Quantification, 3(1):823–851, 2015.

C. Geyer. Practical Markov chain Monte Carlo. Stat. Sci., 7(4):473–483, 1992.

M. Girolami and B. Calderhead. Riemann manifold Langevin and Hamiltonian Monte Carlo methods. J. R. Stat. Soc. Ser. B, 73(2):123–214, 2010.

M. Hairer. An introduction to stochastic PDEs. Lecture notes, 2009.

M. Hairer, A. Stuart, and S. Vollmer. Spectral gaps for a Metropolis–Hastings algorithm in infinite dimensions. Ann. Appl. Probab., 24(6):2455–2490, 2014.

M. Hladnik and M. Omladič. Spectrum of the product of operators. Proc. Amer. math. Soc., 102(2):300–302, 1988.

Z. Hu, Z. Yao, and J. Li. On an adaptive preconditioned Crank–Nicolson algorithm for infinite dimensional Bayesian inferences. arXiv:1511.05838, 2015.

C. Kipnis and S. Varadhan. Central limit theorem for additive functionals of reversible Markov processes and applications to simple exclusions. Comm. Math. Phys., 104(1):1–19, 1986.

K. Law. Proposals which speed up function-space MCMC. J. Comput. Appl. Math., 262:127–138, 2014.

G. Lawler and A. Sokal. Bounds on the \(L^2\) spectrum for Markov chains and Markov processes: a generalization of Cheeger’s inequality. Trans. Amer. Math. Soc., 309(2):557–580, 1988.

A. Lee and K. Łatuszyński. Variance bounding and geometric ergodicity of Markov chain Monte Carlo kernels for approximate Bayesian computation. Biometrika, 101(3):655–671, 2014.

S. Livingstone. Geometric ergodicity of the Random Walk Metropolis with position-dependent proposal covariance. arXiv:1507.05780, 2015.

A. Mandelbaum. Linear estimators and measurable linear transformations on a Hilbert space. Z. Wahrscheinlichkeitstheorie verw. Gebiete, 65:385–397, 1984.

J. Martin, L. Wilcox, C. Burstedde, and O. Ghattas. A stochastic Newton MCMC method for large-scale statistical inverse problems with application to seismic inversion. SIAM J. Sci. Comput., 34(3):A1460–A1487, 2012.

F. Pinski, G. Simpson, A. Stuart, and H. Weber. Algorithms for Kullback–Leibler approximation of probability measures in infinite dimensions. SIAM J. Sci. Comput., 37(6):A2733–A2757, 2015.

G. Roberts and J. Rosenthal. Geometric ergodicity and hybrid Markov chains. Electron. Comm. Probab., 2(2):13–25, 1997.

G. Roberts and J. Rosenthal. Optimal scaling for various Metropolis–Hastings algorithms. Stat. Sci., 16(4):351–367, 2001.

D. Rudolf. Explicit error bounds for Markov chain Monte Carlo. Dissertationes Math. (Rozprawy Mat.), 485:1–93, 2012.

D. Rudolf and M. Ullrich. Positivity of hit-and-run and related algorithms. Electron. Commun. Probab., 18:1–8, 2013.

A. Stuart. Inverse problems: a Bayesian perspective. Acta Numer., 19:451–559, 2010.

L. Tierney. Markov chains for exploring posterior distributions. Ann. Stat., 22(4):1701–1762, 1994.

L. Tierney. A note on Metropolis–Hastings kernels for general state spaces. Ann. Appl. Probab., 8(1):1–9, 1998.

C. Vogel. Computational Methods for Inverse Problems. SIAM, Philadelphia, 2002.

Acknowledgements

We thank Oliver Ernst, Hans-Jörg Starkloff and Albrecht Böttcher for many fruitful discussions and the referees for their valuable comments which helped to improve the paper. D. R. was supported by the DFG Priority Program 1324 and the DFG Research Training Group 1523. B. S. was supported by the DFG Priority Program 1324.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Andrew Stuart.

Appendix

Appendix

1.1 Gaussian Measures

The following brief introduction to Gaussian measures is based on the presentations given in [6, Section 1] and [12, Section 3]. Another comprehensive reference for this topic is [2].

Let \({\mathcal {H}}\) be a Hilbert space with norm \(\Vert {\cdot }\Vert \) and inner-product \(\langle {\cdot }, {\cdot } \rangle \) and let \(\mathcal L^1_+({\mathcal {H}})\) denote the set of all linear, bounded, self-adjoint, positive and trace class operators \(A:{\mathcal {H}} \rightarrow {\mathcal {H}}\).

Let \(\mu \) be a measure on \(({\mathcal {H}}, \mathcal B({\mathcal {H}}))\), and for simplicity let us assume that \(\int _{{\mathcal {H}}} \Vert v\Vert ^2\, \mu (\mathrm {d}v) < \infty \). The mean \(m \in {\mathcal {H}}\) of \(\mu \) is defined as the Bochner integral \(m = \int _{{\mathcal {H}}} v\, \mu (\mathrm {d}v)\) and the covariance of \(\mu \) is the unique operator \(C\in \mathcal L^1_+({\mathcal {H}})\) given by

A measure \(\mu \) on \({\mathcal {H}}\) is called a Gaussian measure with mean \(m\in {\mathcal {H}}\) and covariance operator \(C\in \mathcal L^1_+({\mathcal {H}})\), denoted by N(m, C), iff

This definition is equivalent to \(\langle u \rangle _*\mu = N(\langle u, m\rangle , \langle \hbox {Cu}, u\rangle )\) for all \(u\in {\mathcal {H}}\) where \(\langle u \rangle : {\mathcal {H}} \rightarrow \mathbb {R}\) with \(\langle u \rangle (v) := \langle u, v\rangle \) and where \(\langle u \rangle _*\mu \) denotes the pushforward measure of \(\mu \) under the mapping \(\langle u \rangle \). Gaussian measures are uniquely determined by their mean and covariance, i.e., for any \(m\in {\mathcal {H}}\) and any \(C \in \mathcal L^1_+({\mathcal {H}})\) there exists a unique Gaussian measure \(\mu =N(m,C)\) on \({\mathcal {H}}\). Moreover, the set of random variables on \({\mathcal {H}}\) distributed according to a Gaussian measure is closed w.r.t. affine transformations. In detail, let \(X \sim N(m,C)\) be a Gaussian random variable on \({\mathcal {H}}\) and let \(b\in {\mathcal {H}}\) and \(T:{\mathcal {H}} \rightarrow {\mathcal {H}}\) be a bounded, linear operator, then due to [6, Proposition 1.2.3] we have

The Cameron–Martin space \({\mathcal {H}}_\mu \) of a Gaussian measure \(\mu = N(m,C)\) on \({\mathcal {H}}\) is defined as the image space \(\mathrm{Im}C^{1/2}\) which forms equipped with \(\langle u, v \rangle _{C^{-1}}:= \langle C^{-1/2}u, C^{-1/2}v\rangle \) again a Hilbert space. The space \({\mathcal {H}}_\mu \) has some surprising properties: It is the intersection of all measurable linear subspaces \(\mathcal X \subseteq {\mathcal {H}}\) with \(\mu (\mathcal X)=1\); if \(\ker C = \{0\}\) then \({\mathcal {H}}_\mu \) is dense in \({\mathcal {H}}\) and if \({\mathcal {H}}\) is infinite dimensional then \(\mu ({\mathcal {H}}_\mu )= 0\). Moreover, the space \({\mathcal {H}}_\mu \) plays an important role for the equivalence of Gaussian measures as rigorously expressed in the Cameron–Martin theorem below. Before stating the result we need some more notation.

In the following let \(\mu = N(0,C)\). For \(u\in {\mathcal {H}}_\mu \) we set

and understand \(W_u\) as an element of \(L_2(\mu )\). Since the mapping \({\mathcal {H}}_\mu \ni u \mapsto W_u \in L_2(\mu )\) is an isometry [6, Section 1.2.4], we can define for any \(u\in {\mathcal {H}}\)

where \(u_n \in {\mathcal {H}}_\mu \) and \(u_n \rightarrow u\) in \({\mathcal {H}}\) as \(n\rightarrow \infty \). And by [6, Proposition 1.2.7] it holds that

Hence, if \(h\in {\mathcal {H}}_\mu \), we understand \(\langle C^{-1} h, {\cdot } \rangle \) as \(\langle C^{-1/2} (C^{-1/2} h), {\cdot } \rangle \in L_2(\mu )\).

Theorem 21

(Cameron–Martin formula, [6, Theorem 1.3.6]) Let \(\mu = N(0, C)\) and \(\mu _h = N(h, C)\) be Gaussian measures on a separable Hilbert space \({\mathcal {H}}\). Then, \(\mu \) and \(\mu _h\) are equivalent iff \(h\in {\mathcal {H}}_\mu = \mathrm{Im}C^{1/2}\) in which case

Thus, two Gaussian measures N(m, C) and \(N(m+h, C)\) are only equivalent if \(h\in \mathrm{Im}C^{1/2}\). Consider now \(\mu = N(0,C)\) and \(\nu = N(0, Q)\) with \(C\ne Q\). Before stating a theorem about the equivalence of \(\mu \) and \(\nu \), we need some more notations. Let \(T:{\mathcal {H}} \rightarrow {\mathcal {H}}\) be in the following a self-adjoint trace class operator and let \((t_n)_{n\in \mathbb {N}}\) denote the sequence of its eigenvalues. We set

and define

where \(\varPi _N\) denotes the projection operator to \(\mathrm {span}\{e_1,\ldots ,e_N\}\) with \(e_n\) denoting the nth eigenvector of C. The existence of the \(\mu \text {-a.e.}\)-limit above is proven in [6, Proposition 1.2.10], and furthermore, if \(\langle Tu, u\rangle < \Vert u\Vert ^2\) holds for any \(u\in {\mathcal {H}}\), then by [6, Proposition 1.2.11] we have

Theorem 22

([6, Proposition 1.3.11]) Let \(\mu = N(0, C)\) and \(\nu = N(0, Q)\) be Gaussian measures on a separable Hilbert space \({\mathcal {H}}\). If \(T:= I - C^{-1/2}QC^{-1/2}\) is self-adjoint, trace class and satisfies \(\langle Tu, u\rangle < \Vert u\Vert ^2\) for any \(u\in {\mathcal {H}}\), then \(\mu \) and \(\nu \) are equivalent with

We note that the assumptions of Theorem 22 can be relaxed to \(I - C^{-1/2}QC^{-1/2}\) being Hilbert–Schmidt which is known as Feldman–Hajek theorem. Also in this case expression for the Radon–Nikodym derivative can be obtained, see [2, Corollary 6.4.11].

Finally, we recall two simple but useful facts resulting from a change of variables.

Lemma 23

Let \({\mathcal {H}}\) be a separable Hilbert space, \(0<s<\infty \) and \(h\in {\mathcal {H}}\).

-

Assume \(\mu = N(m,C)\), \(\nu = N(m+h, s^2C)\) on \({\mathcal {H}}\) and \(f:{\mathcal {H}} \rightarrow \mathbb {R}\). Then

$$\begin{aligned} \int _{{\mathcal {H}}} f(v) \mu (\mathrm {d}v) = \int _{{\mathcal {H}}} f\left( \frac{1}{s} (v - h)\right) \, \nu (\mathrm {d}v). \end{aligned}$$ -

Assume \(\mu _1 = N(m_1, C_1)\) and \(\mu _2 = N(m_2, C_2)\) are equivalent with \(\frac{\mathrm {d}\mu _2}{\mathrm {d}\mu _1}(u) = \pi (u)\). Then the measures \(\nu _1 = N(m_1+h, s^2C_1)\) and \(\nu _2 = N(m_2+h, s^2C_2)\) are also equivalent with

$$\begin{aligned} \frac{\mathrm {d}\nu _2}{\mathrm {d}\nu _1}(u) = \pi \left( \frac{u-h}{s}\right) . \end{aligned}$$

1.2 Proofs

The following proofs are rather operator theoretic and rely heavily on the holomorphic functional calculus. We refer to [8, Section VII.3] for a comprehensive introduction.

1.2.1 Proof of Lemma 2

From the proof of Proposition 1 we know that \((I + H_\varGamma )^{-1}:{\mathcal {H}} \rightarrow {\mathcal {H}}\) is self-adjoint and that \(\Vert (I + H_\varGamma )^{-1}\Vert \le 1\). Thus, \(I-s^2(I+H_\varGamma )^{-1}\) is also a self-adjoint, bounded and positive operator on \({\mathcal {H}}\) and its square root operator appearing in (16) exists. This yields the well definedness of \(A_\varGamma : \mathrm {Im}\,C^{1/2}\rightarrow {\mathcal {H}}\). We now prove that \(A_\varGamma \) is a bounded operator on \(\mathrm {Im}\,C^{1/2}\). For \(s=0\) we get \(A_\varGamma = I\) and the assertion follows, so that we assume \(s\in (0,1)\). Let us now define \(f:\mathbb {C}{\setminus } \{-1\} \rightarrow \mathbb {C}\) by

The function f is analytic in the complex half plane \(\{z \in \mathbb {C}: \mathfrak {R}(z) > s^2-1\}\), since \(\mathfrak {R}(1+z) > s^2\) implies

Denoting \(\gamma :=\Vert H_\varGamma \Vert \) the spectrum of \(H_\varGamma = C^{1/2}\varGamma C^{1/2}\) is contained in \([0,\gamma ]\). Then, since \(s<1\) we have that f is analytic in a neighborhood, say, \(\mathcal {N}[0,\gamma ]\) of \([0,\gamma ]\). Hence, by functional calculus we obtain

Due to analyticity we can approximate f by a sequence of polynomials \(p_n\) with degree n which converge uniformly on \(\mathcal {N}[0,\gamma ]\) to f for \(n\rightarrow \infty \). Then, by [8, Lemma VII.3.13] holds

for \(n \rightarrow \infty \). Since the polynomials \(p_n\) can be represented as \( p_n(z) = \sum _{k=0}^n a_k^{(n)} z^k\), we obtain further

By [14, Proposition 1] we have

where \(\mathrm{spec}({\cdot }\mid {\mathcal {H}})\) denotes the spectrum on \({\mathcal {H}}\), and, thus, we can conclude \(\Vert p_n(C\varGamma ) - f(C\varGamma ) \Vert _{{\mathcal {H}} \rightarrow {\mathcal {H}}} \rightarrow 0\) as \(n \rightarrow \infty \) again by [8, Lemma VII.3.13]. Hence,

and

where \(f(C\varGamma )\) is by construction a bounded operator on \({\mathcal {H}}\).

1.2.2 Proof of Lemma 12

By [7, Theorem 1] the relation \(\mathrm{Im}(\varDelta _\varGamma ) \subseteq \mathrm{Im}(C^{1/2})\) holds iff there exists a bounded operator \(B:{\mathcal {H}}\rightarrow {\mathcal {H}}\) such that

Thus, \(\mathrm{Im}(\varDelta _\varGamma ) \subseteq \mathrm{Im}(C^{1/2})\) is equivalent to \(C^{-1/2}\varDelta _\varGamma \) being bounded on \({\mathcal {H}}\). In order to construct and analyze the operator B, we define \(f:\mathbb {C}{\setminus }\{-1\} \rightarrow \mathbb {C}\) by

which is analytic in \(\{z \in \mathbb {C}: \mathfrak {R}(z) > s^2-1\}\), cf. the proof of Lemma 2, and particularly in

where \(\gamma := \Vert H_\varGamma \Vert \). We have the following representation

with

see [8, Chapter VII.3]. Hence, if we can prove that \(B = - f(H_\varGamma ) \, C^{-1/2}\) is a bounded operator on \({\mathcal {H}}\), we have shown the assertion.

To this end let \(p_n(z) = \sum _{k=0}^n a_k^{(n)} z^k\) be polynomials of degree n, with \(n\in \mathbb {N}\), which converge uniformly on V to f. Such polynomials exist due to the analyticity of f and by the fact that \(f(0) = 0\) we can assume w.l.o.g. that \(a_0^{(n)} = 0\) for all \(n\in \mathbb {N}\). This leads to

with \(q_{n-1}(z) := \sum _{k=1}^{n} a_{k}^{(n)} z^{k-1} = p_n(z)/z\). Now, [14, Proposition 1] implies that the operators \(C^{1/2}\varGamma C^{1/2}\) and \(\varGamma ^{1/2}C \varGamma ^{1/2}\) share the same spectrum, since C and \(\varGamma \) are positive. Thus, \(\mathrm{spec}(\varGamma ^{1/2}C \varGamma ^{1/2}\mid {\mathcal {H}}) \subset [0,\gamma ]\) and we have

Moreover, the polynomials \(q_n\) are a Cauchy sequence in \(C(\partial V)\), since

where \(\min _{\eta \in \partial V} |\eta | = \varepsilon > 0\) due to our choice of V. Thus, the polynomials \(q_n\) converge uniformly on \(\partial V\) to a function g. This implies that the operators \(q_n(\varGamma ^{1/2}C \varGamma ^{1/2})\) converge in the operator norm to a bounded operator

We arrive at

which yields

being bounded on \({\mathcal {H}}\).

Rights and permissions

About this article

Cite this article

Rudolf, D., Sprungk, B. On a Generalization of the Preconditioned Crank–Nicolson Metropolis Algorithm. Found Comput Math 18, 309–343 (2018). https://doi.org/10.1007/s10208-016-9340-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10208-016-9340-x