Abstract

Combining the classical theory of optimal transport with modern operator splitting techniques, we develop a new numerical method for nonlinear, nonlocal partial differential equations, arising in models of porous media, materials science, and biological swarming. Our method proceeds as follows: first, we discretize in time, either via the classical JKO scheme or via a novel Crank–Nicolson-type method we introduce. Next, we use the Benamou–Brenier dynamical characterization of the Wasserstein distance to reduce computing the solution of the discrete time equations to solving fully discrete minimization problems, with strictly convex objective functions and linear constraints. Third, we compute the minimizers by applying a recently introduced, provably convergent primal dual splitting scheme for three operators (Yan in J Sci Comput 1–20, 2018). By leveraging the PDEs’ underlying variational structure, our method overcomes stability issues present in previous numerical work built on explicit time discretizations, which suffer due to the equations’ strong nonlinearities and degeneracies. Our method is also naturally positivity and mass preserving and, in the case of the JKO scheme, energy decreasing. We prove that minimizers of the fully discrete problem converge to minimizers of the spatially continuous, discrete time problem as the spatial discretization is refined. We conclude with simulations of nonlinear PDEs and Wasserstein geodesics in one and two dimensions that illustrate the key properties of our approach, including higher-order convergence our novel Crank–Nicolson-type method, when compared to the classical JKO method.

Similar content being viewed by others

1 Introduction

Gradient flow methods are classical techniques for the analysis and numerical simulation of partial differential equations. Historically, such methods were exclusively based on gradient flows arising from a Hilbert space structure, particularly \(L^2({{\mathbb {R}}^d})\), but since the work of Jordan, Kinderlehrer, and Otto in the late 90’s [75, 93, 94], interest has emerged in a range of nonlinear, nonlocal partial differential equations that are gradient flows in the Wasserstein metric,

When \(\varOmega \ne {{\mathbb {R}}^d}\), we consider no-flux boundary conditions.

Equations of this form arise in a number of physical and biological applications, including models in granular media [12, 45, 46, 102], material science [71], and biological swarming [6, 39, 77]. Furthermore, many well-known equations may be written in this way: when \(V = W = 0\) and \(\alpha =1\), Eq. (1) reduces to the heat equation (\(m=1\)), porous medium equation (\(m>1\)), and fast diffusion equation (\(m<1\)) [103]. In the presence of a drift potential V, it becomes a Fokker–Planck equation (\(m=1\)) or nonlinear Fokker–Planck equation (\(m>1\)), as used in models of tumor growth [96, 100]. When the interaction potential W is given by a repulsive–attractive Morse or power-law potential,

we recover a range of nonlocal interaction models, which are repulsive at short length scales and attractive at long length scales [4, 5, 34, 101]. When \(W = (\varDelta )^{-1}\), the Newtonian potential, we have the Keller–Segel equation and its nonlinear diffusion variants [17, 19, 25, 26, 32, 41, 76]. Finally, as the diffusion exponent \(m \rightarrow +\infty \), we recover congested aggregation and drift equations arising in models of pedestrian crowd dynamics and shape optimization problems [23, 58, 67, 84, 90, 91].

In order to describe the gradient flow structure of equation (1), we begin by rewriting it as a continuity equation in \(\rho (x,t)\) for a velocity field v(x, t),

In this form, two key properties of the equation become evident: it is positivity preserving and conserves mass. In what follows, we will always consider nonnegative initial data, and we will typically renormalize so that the mass of the initial data equals one, i.e., \(\rho _0 \in {{\mathcal {P}}}_{ac}(\varOmega )\), where \( {{\mathcal {P}}}_{ac}(\varOmega )\) is the set of probability measures on \(\varOmega \) that are absolutely continuous with respect to Lebesgue measure. Furthermore, as our objective is to develop a numerical method for these equations, we will exclusively consider the case when \(\varOmega \) is a bounded domain. Throughout, we commit a mild abuse of notation and identify all such probability measures with their densities, \(d \rho (x) = \rho (x) \mathrm {d}x\).

As discovered by Otto [93], given an energy \({\mathcal {E}}: {{\mathcal {P}}}_{ac}(\varOmega ) \rightarrow {\mathbb {R}}\cup \{ +\infty \}\), we may formally define its gradient with respect to the Wasserstein metric \(d_{\mathcal {W}}\) using the formula

(See Sect. 2.1 for the definition of the Wasserstein metric \(d_{\mathcal {W}}\).) In this way, gradient flows of \({\mathcal {E}}\), \(\partial _t \rho = - \nabla _{d_{\mathcal {W}}} {\mathcal {E}}(\rho )\), correspond to solutions of the continuity equation with velocity \(v = - \nabla \frac{\delta {\mathcal {E}}}{ \delta \rho }\). In particular, Eq. (3) is the gradient flow of the energy

Differentiating the energy (4) along solutions of (3), one formally obtains that the energy is decreasing along the gradient flow

which coincides with the theoretical interpretation of gradient flows as solutions that evolve in the direction of steepest descent of an energy, where the notion of steepest descent is induced by the Wasserstein metric structure.

A key feature of equations of the form (3) is the competition between repulsive and attractive effects. For repulsive–attractive interaction kernels W, as in equation (2), these effects can arise purely through nonlocal interactions, leading to rich structure of the steady states [4, 13, 14, 34, 65]. For purely attractive interaction kernels W, as in the Keller–Segel equation, the competition instead arises from the combination of nonlocal interaction with diffusion. In this case, different choices of interaction kernel W, diffusion exponent m, and initial data \(\rho _0\) can lead to widely different behavior—from bounded solutions being globally well posed to smooth solutions blowing up in finite time [17, 19, 25, 26, 32, 41].

1.1 Summary of Numerical Approach

The goal of the present work is to develop new numerical approach for partial differential equations of the form (1) that combine gradient flow methods with modern operator splitting techniques. Our approach applies to equations of this form with any combination of diffusion \(\alpha U'_m(\rho )\) \((\alpha \ge 0\)), drift V, or interaction \(W*\rho \) terms—in particular, it is not necessary for diffusion to be present in order for our scheme to converge.

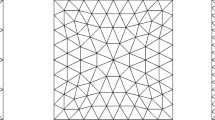

The main idea of our approach is to discretize the PDE/Wasserstein gradient flow at two levels. First, we consider a time discretization of the gradient flow with time step \(\tau \) (see Fig. 1b), either given by the classical JKO scheme (Eq. (6) below) or a new Crank–Nicolson inspired variant (Eq. (7) below). This reduces computation of the gradient flow to solving a sequence of infinite-dimensional minimization problems. Then, we consider a dynamical reformulation of these minimization problems, stemming from Benamou and Brenier’s dynamic characterization of the Wasserstein metric, by which the problem becomes the minimization of a strictly convex integral functional subject to a linear PDE constraint (see Fig. 1c). At this level, the problem remains continuous in space and time. We conclude by considering a further discretization of the problem, with inner time step \((\varDelta t)\) and spatial discretization \((\varDelta x)\), by taking piecewise constant approximations of the functions and using a finite difference approximation of the PDE constraint (see Fig. 1d). In this final, fully discrete form, we then compute the minimizer using modern operator splitting techniques, applying Yan’s recent extension of the classical primal dual algorithm for minimizing sums of three convex functions [106].

Our paper is organized as follows. In Sect. 1.2, we discuss the relationship between our numerical approach and previous work. In Sect. 1.3, we summarize our contribution. In Sect. 2, we describe the details of our numerical method. Along with numerically simulating Wasserstein gradient flows, our method also provides, as a special case, a new method for computing Wasserstein geodesics and the Wasserstein distance between probability densities; see Remark 1. In Sect. 3, we prove that, provided a smooth, positive solution of the continuum JKO scheme exists and the energy corresponding to the PDE is sufficiently regular, then minimizers of the fully discrete problem exist (Theorem 1), the objective functions of the discrete problems \(\varGamma \)-converge to the objective function of the continuum problem (Theorem 2), and thus, solutions of the fully discrete scheme converge, up to a subsequence, to a solution of the continuum scheme (Theorem 3). As a special case, we also recover convergence of a numerical method for computing Wasserstein geodesics, similar to that introduced by Papadakis, Péyre, and Oudet [95]. Finally, in Sect. 4, we provide several numerical simulations illustrating our approach in both one and two dimensions, computing Wasserstein geodesics, nonlinear Fokker–Planck equations, aggregation diffusion equations, and other related equations.

1.2 Details of Approach and Comparison with Previous Work

1.2.1 Classical Numerical PDE Methods

We now compare our approach to existing numerical methods. Perhaps the most common numerical approach for equations of the form (1) is to consider the equation as an advection–diffusion equation and apply classical finite difference, finite volume, or Galerkin discretizations [3, 29, 54, 66, 85]. However, when such methods are based on explicit time discretizations, they suffer from stability constraints due either to the degeneracy of the diffusion (when \(m>1\)) or the nonlocality from the interaction potential W. (See for instance the mesa problem [83].) Implicit time discretizations, on the other hand, are computationally intensive, due to the difficulty of matrix inversion, even when the implicit steps are solved by smart iterative methods to avoid the high computation cost of convolution [3].

Another common approach is to leverage structural similarities between (3) and equations from fluid dynamics to develop particle methods [14, 27, 30, 36, 43, 48, 57, 60, 88, 92]. Until recently, the key limitation of such methods has been developing approaches to incorporate diffusion. Following the analogy with the Navier–Stokes equations, stochastic particle methods have been proposed in the case of linear diffusion (\(m=1\)) [72,73,74, 86]. More recently the first two authors and Patacchini developed a deterministic blob method for linear and nonlinear diffusion (\(m \ge 1\)) [31]. On the one hand, particle methods naturally conserve mass and positivity, and they can also be designed to respect the underlying gradient flow structure of the equation, including the energy dissipation property (5). On the other hand, a large number of particles are often required to resolve finer properties of solutions.

In contrast with such classical methods, our method introduces an auxiliary momentum variable m and an additional inner layer of time discretization, which enlarges the dimension of the problem. However, as later pointed out in [80], the inner layer of time can be discretized with just one step without violating the overall first-order accuracy, there completely eliminating the additional cost introduced by the inner layer. Another major advantage of our approach is that, by reforming the PDE problem into an optimization problem, we obtain unconditional stability (for the JKO discretization, see Eq. (6) below) while avoiding the inversion of a full matrix in the general implicit setting, which is extremely expensive, especially in higher dimensions; see for instance [3]. Finally, compared to other implicit methods, such as the backward Euler method, the suboptimization problems can be solved independently at each gridpoint, and therefore are massively parallelizable and suitable for high-dimensional problems.

1.2.2 Variational Methods

Compared to the classical numerical PDE approaches described in the previous section, a more modern class of numerical methods leverages the gradient flow structure of (1) to approximate solutions of the PDE by solving a sequence of minimization problems. This is the approach we take in the present work. Originally introduced by Jordan, Kinderlehrer, and Otto as a technique for computing solutions of the Fokker–Planck equation (Eq. (1), \(W=0\), \(m=1\)) [75], this scheme approximates the solution \(\rho (x,t)\) at time t by solving the following sequence of n minimization problems with time step \(\tau = t/n\),

The JKO scheme is precisely the analogue of the implicit Euler method in the infinite-dimensional Wasserstein space. The constraint \(\rho \in {{\mathcal {P}}}_{ ac}(\varOmega )\) ensures that the method is positivity and mass preserving, and the fact that \(d_{\mathcal {W}}^2(\rho ,\rho ^n) \ge 0\) ensures the energy decreasing along the scheme, \({\mathcal {E}}(\rho ^{n+1}_\tau ) \le {\mathcal {E}}(\rho ^{n}_\tau )\).

Under sufficient assumptions on the underlying domain \(\varOmega \), drift potential V, interaction potential W, and initial data \(\rho _0\) (see Sect. 2.1), the solution of the JKO scheme \(\rho ^n_\tau \) converges to the solution \(\rho (x,t)\) of the partial differential equation (1), with a first-order rate in terms of the time step \(\tau = t/n\) [2, Theorem 4.0.4],

In our numerical simulations, we observe that this discretization error dominates other errors in our numerical method; see Sects. 4.2.1 and 4.2.2. Consequently, we also introduce a new time discretization, in analogy with the Crank–Nicolson method

The connection between the above scheme and the classical Crank–Nicolson discretization can be seen by considering the optimality conditions for (7):

Like the JKO scheme, our Crank–Nicolson inspired method is also positivity and mass-preserving, though it is not energy decreasing. In Figs. 7, 8, and 10 of our numerics section, we conduct a preliminary analysis of the rate of convergence of this method, which verifies that it is indeed higher order than the JKO scheme. As the goal of the present work is primarily the development of fully discrete numerical schemes, we leave a thorough analysis of the rate of convergence of our Crank–Nicolson inspired method as \(\tau \rightarrow 0\) to future work.

On the one hand, our Crank–Nicolson inspired method (7) is not the first higher-order method proposed for metric space gradient flows: Matthes and Plazotta developed a provably second-order scheme for general metric space gradient flows by generalizing the backward differentiation formula [89]. The Matthes–Plazotta method, however, requires two evaluations of the Wasserstein distance at each outer time step and thus is less practical for our purpose of numerically computing gradient flows in higher dimensions. Another method was introduced by Legendre and Turinici [79] based on the midpoint method. This method can be reformulated as the classical JKO step with half time step followed by an extrapolation. This extrapolation step could be implemented by solving the corresponding continuity equation either explicitly or implicitly; however, solving the equation explicitly could potentially violate conservation of positivity, while solving it implicitly would require an additional matrix inversion. Another higher-order variational method was also proposed in [78], which resembles explicit Runge–Kutta methods and, again, require two or more evaluations of the Wasserstein distance at each outer time step.

1.2.3 Numerical Methods for the Wasserstein Distance

To use either the classical JKO scheme (6) or our new Crank–Nicolson inspired scheme (7) as a basis for numerical simulations, one must first develop a fully discrete approximation of the minimization problem at each step of the scheme. Here, the main numerical difficulty arises in approximating the Wassserstein distance, and there are several different approaches for dealing with this term. First, one can reformulate the Wasserstein distance in terms of a Monge–Ampére equation with nonstandard boundary conditions [11, 68], though difficulties arise due to the lack of a comparison principle [70]. Second, one can reframe the problem as a classical \(L^2({{\mathbb {R}}^d})\) gradient flow at the level of diffeomorphisms [16, 37, 47, 49, 69], but to pursue this approach, one has to overcome complications arising from the underlying geometry and the structure of the PDE system for the diffeomorphisms. Third, one can discretize the Wasserstein distance term as a finite-dimensional linear program, overcoming the lack of strict convexity of the objective function by adding a small amount of entropic regularization [8, 55, 61]. (For a detailed survey of computational optimal transport, we refer the reader to the recent book by Péyre and Cuturi for [97].)

A fourth approach for computing the Wasserstein distance, and the one which we develop in the present work, is to consider a dynamic formulation due to Benamou and Brenier [7]. This reframes the problem as a strictly convex optimization problem with linear PDE constraints, which can be discretized using Benamou and Brenier’s original augmented Lagrangian method ALG2 or, more generally, a range of modern proximal splitting methods, as shown by Papadakis, Peyre, and Oudet [95]. (See also [21, 22] for related work on mean field games.) Adding an additional Fisher information term in this dynamic formulation (in analogy with entropic regularization) has also been explored in [82].

Only recently have these above approaches for computing the Wasserstein distance been integrated with the JKO scheme (6) in order to simulate partial differential equations of the form (1). The Monge–Ampére approach extends naturally, though the presence of a diffusion term \(\alpha U'_m(\rho )\) for \(\alpha >0\) is required to enforce convexity constraints at the discrete level [10]. Similarly, entropic regularization (or the addition of a Fisher information term) vastly accelerates the computation of gradient flows, but at the level of the partial differential equation, this corresponds to introducing numerical diffusion, which may disrupt the delicate balance between aggregation and diffusion inherent in PDEs of this type [28, 55, 82]. Finally, Benamou and Brenier’s dynamic reformulation of the Wasserstein distance has also been adopted in recent work to approximate gradient flows [9]. A key benefit of this latter approach when compared to entropic regularization is that it leads to an optimization problem in \(N_x^d \times N_t\) variables, where \(N_x\) and \(N_t\) are the number of spatial and temporal gridpoints, whereas the latter leads to an optimization problem in \(N_x^{2d}\) variables.

In the present work, we further develop this last approach, using Benamou and Brenier’s dynamic reformulation of the Wasserstein distance to simulate Wasserstein gradient flows, via both the classical JKO scheme (6) and our new Crank–Nicolson inspired scheme (7). This leads to a sequence of minimization problems (Fig. 1C), which we discretize (Fig. 1D) and then solve using a modern primal dual three operator splitting scheme due to Yan [106], instead of the classical ALG2 method. See Sect. 2 for a detailed description of our approach.

Due to the fact that we use operator splitting methods to compute the minimizer in Benamou and Brenier’s dynamic formulation of the Wasserstein distance, our work can be seen as an extension of previous work by Papadakis, Peyre, and Oudet [95], which applied similar two operator splitting schemes to simulate the Wasserstein distance. However, there are a few key differences between our approach and previous work. First, we are able to implement the primal dual splitting scheme in a manner that does not require matrix inversion of the finite difference operator, which reduces the computational cost. Second, we succeed in obtaining the exact expression for the proximal operator, which allows our method to be truly positivity preserving, while other similar methods are only positivity preserving in the limit as \(\varDelta x, \varDelta t \rightarrow 0\); see Remark 5. Third, instead of imposing the linear PDE constraint in Benamou and Brenier’s dynamic reformulation exactly, via a finite difference approximation, we allow the linear PDE constraint to hold up to an error of order \(\delta >0\), which can be tuned according to the spatial discretization \((\varDelta x)\), the inner temporal discretization \((\varDelta t)\), and the outer time step \(\tau \) to respect the order of accuracy of the finite difference approximation; see Remark 3. Numerically, this allows our method to converge in fewer iterations, without any reduction in accuracy, as demonstrated in Fig. 3. From a theoretical perspective, the fact that we only require the PDE constraint to hold up to an error of order \(\delta > 0\) makes it possible to prove convergence of minimizers of the fully discrete problem to minimizers of the JKO scheme (6), since minimizers of the fully discrete problem always exist for \(\delta >0\), which is not the case when the PDE constraint is enforced exactly (\(\delta = 0\)); see Remark 8 and Theorem 1.

1.3 Contribution

The main components of our numerical method for computing solutions to (1) are:

-

(a)

an outer time discretization, of either JKO (6) or Crank–Nicolson type (7) (Fig. 1B)

-

(b)

a dynamic interpretation of the Wasserstein distance (Fig. 1C), which when discretized via finite difference approximations leads to a sequence of constrained optimization problems (Fig. 1D)

-

(c)

an application of modern three operator splitting schemes for solving these optimization problems.

Our main contributions are:

-

Unlike classical explicit methods, our JKO-type method is unconditionally stable. Unlike classical implicit methods, it achieves this stability without an expensive matrix inversion.

-

In practice, we observe that our Crank–Nicolson-type method performs even better than our JKO-type method, in terms of rate of convergence with respect to the outer time step (see Figs. 7, 8, and 10). We leave a thorough analysis of the rate of this convergence of this method to future work.

-

By formulating our optimization problem with a linear inequality constraint instead of a linear equality constraint, our algorithm converges in fewer iterations when compared to related algorithms for Wasserstein geodesics; see Remark 3 and Fig. 3.

-

We prove convergence of our fully discrete method (Fig. 1D) to the JKO scheme (Fig. 1B, C) as the spatial discretization and inner time discretization go to zero.

2 Numerical Method

2.1 Dynamic Formulation of JKO Scheme

As described in the previous section, our numerical method for computing the JKO scheme is based on the following dynamic reformulation of the Wasserstein distance due to Benamou and Brenier [7]:

where \((\rho ,v)\in AC(0,1;{{\mathcal {P}}}(\varOmega ) )\times L^1(0,1; L^2(\rho ))\) belongs to the constraint set \({\mathcal {C}}_0\) provided that

where \(\nu \) is the outer unit normal on the boundary of the domain \(\varOmega \). A curve \(\rho \) in \({{\mathcal {P}}}(\varOmega )\) is absolutely continuous in time, denoted \(\rho \in AC(0,1;{{\mathcal {P}}}(\varOmega ) )\), if there exists \(w \in L^1(0,1)\) so that \(d_{\mathcal {W}}(\rho (\cdot , t_0), \rho (\cdot , t_1)) \le \int _{t_0}^{t_1} w(s) \mathrm {d}s\) for all \(0< t_0 \le t_1 < 1\). The PDE constraint (9 and 10) holds in the duality with smooth test functions on \({{\mathbb {R}}^d}\times [0,1]\), i.e., for all \(f \in C^\infty _c({{\mathbb {R}}^d}\times [0,1])\),

This dynamic reformulation reduces the problem of finding the Wasserstein distance between any two measures to identifying the curve in \({{\mathcal {P}}}(\varOmega )\) that connects them with minimal kinetic energy. However, the objective function (8) is not strictly convex, and the PDE constraint (9) is nonlinear. For these reasons, in Benamou and Brenier’s original work, they restrict their attention to the case \(\rho (\cdot , t) \in {{\mathcal {P}}}_{ac}(\varOmega )\) and introduce the momentum variables \(m = v \rho \), in order to rewrite (8) as

where

and \((\rho ,m) \in AC(0,1; {{\mathcal {P}}}_{ac}(\varOmega )) \times L^1(0,1;L^2(\rho ^{-1}))\) belong to the constraint set \({\mathcal {C}}_1\) provided that

After this reformulation, the integral functional

is strictly convex along linear interpolations and lower semicontinuous with respect to weak-* convergence [1, Example 2.36], and the PDE constraint is linear. As an immediate consequence, one can conclude that minimizers are unique. Furthermore, for any \(\rho _0, \rho _1 \in {{\mathcal {P}}}_{ac}(\varOmega )\), a direct computation shows that the minimizer \(({{\bar{\rho }}}, {{\bar{m}}})\) is given by the Wasserstein geodesic from \(\rho _0\) to \(\rho _1\),

where T is the optimal transport map from \(\rho _0\) to \(\rho _1\). (See [2, 98, 105] for further background on optimal transport.) Consequently, given any minimizer \(({{\bar{\rho }}}, {{\bar{m}}})\) of (12), we can recover the optimal transport plan T via the following formula:

Building upon Benamou and Brenier’s dynamic reformulation of the Wasserstein distance, one can also consider a dynamic reformulation of the JKO scheme (6). In particular, substituting (12) in (6) leads to the following dynamic JKO scheme:

Problem 1

(Dynamic JKO) Given \(\tau >0\), \({\mathcal {E}}\), and \(\rho _0\), solve the constrained optimization problem,

where \((\rho ,m) \in AC(0,1; {{\mathcal {P}}}_{ac}(\varOmega )) \times L^1(0,1;L^2(\rho ^{-1}))\) belong to the constraint set \({\mathcal {C}}\) provided that

We emphasize that the requirement \(\rho (x,t) \in {{\mathcal {P}}}_{ac}(\varOmega )\) for all \( t\in [0,1]\) ensures that \(\rho (x,t) \ge 0\).

Remark 1

(Wasserstein geodesics) Note that for any \(\rho _1 \in {{\mathcal {P}}}_{ac}(\varOmega )\), we may take

in which case Problem 1 reduces to the Benamou–Brenier formulation of the Wasserstein distance (12). Consequently, the numerical method we develop for Problem 1 offers, as a particular case, a provably convergent numerical method for computing the Wasserstein geodesic and Wasserstein distance between \(\rho _0\) and \(\rho _1\). On the one hand, there are many alternative methods for computing Wasserstein geodesics in Euclidean space. Indeed, the many algorithms described in the introduction for computing the Wasserstein distance also provide an optimal transport plan, which can be linearly interpolated to give the Wasserstein geodesic [8, 11, 55, 61, 68, 97]. On the other hand, our method is distinguished because it could be more naturally extended to variants of the Wasserstein metric built on the Benamou–Brenier formulation [33, 64, 87], as well as to Wasserstein geodesics on non-Euclidean manifolds, where the geodesic equations on the underlying manifold may no longer be explicit, so that one cannot pass directly from the optimal transport plan to the Wasserstein geodesic.

Remark 2

(existence and uniqueness of minimizers) If the underling domain \(\varOmega \) is convex and the energy \({\mathcal {E}}\) is proper, lower semicontinuous, coercive, and \(\lambda \)-convex along generalized geodesics, and also satisfies \( \{ \mu : {\mathcal {E}}(\mu ) < +\infty \} \subseteq {{\mathcal {P}}}_{ac}(\varOmega )\), then, for \(\tau >0\) sufficiently small, there exists a unique solution to Problem 1 [2, Theorem 4.0.4, Theorem 8.3.1]. In particular, these assumptions are satisfied by the energy \({\mathcal {G}}_{\rho _1}\) (18), as well as by the drift–diffusion interaction energy from the introduction (4), for U as in Eq. (3), \(V, W \in C^2(\varOmega )\). (See, for example, [2, Section 9.3] or [56] for more general conditions on U, V, W.)

Thus, if we denote by \(({\bar{\rho }},{\bar{m}})\) the minimizer of Problem 1, then for \(\tau >0\) sufficiently small, the proximal map,

is well defined for all \(\rho _0 \in D({\mathcal {E}})\). Furthermore, the energy decreases under the proximal map,

which can be seen by comparing the value of the objective function at the minimizer \(({\overline{\rho }},{\overline{m}})\) to the value of the objective function at \((\rho (x,0), 0) \in {\mathcal {C}}\) and using that \(\varPhi (\rho ,m) \ge 0\).

Given \(\rho _0 \in D({\mathcal {E}})\), if we recursively define the discrete time gradient flow sequence

then, taking \(\tau = t/n\), \(\rho ^n_\tau \) converges to \(\rho (x,t)\), the gradient flow of the energy \({\mathcal {E}}\) with initial data \(\rho _0\) at time t, and under mild regularity assumptions on \(\rho _0\), we have

In this way, the classical JKO scheme provides a first-order approximation of the gradient flow [2, Theorem 4.0.4]. In our numerical simulations, we observe that this discretization error dominates other errors in our numerical method; see Sects. 4.2.1 and 4.2.2. For this reason, we introduce the following new scheme, inspired by the Crank–Nicolson method.

Problem 2

(Crank–Nicolson Inspired Dynamic JKO) Given \(\tau >0\), \({\mathcal {E}}\), and \(\rho _0\), solve the constrained optimization problem,

where \((\rho ,m) \in AC(0,1; {{\mathcal {P}}}_{ac}(\varOmega )) \times L^1(0,1;L^2(\rho ^{-1}))\) belong to the constraint set \({\mathcal {C}}\) provided that

In Sect. 4.2.2, we provide numerical examples comparing the above method to the classical JKO scheme from Problem 1, illustrating that it achieves a higher-order rate of convergence in practice (see Figs. 7, 8, and 10), in spite of the fact that that it lacks the energy decay property of Problem 1. Under what conditions a higher-order analogue of inequality (21) holds for the new scheme is an interesting open question that we leave to future work, as the main goal of the present work is the development of fully discrete numerical methods for computing minimizers of Problem 1 and 2. By iterating either of these minimization problems, as in Eq. (20), we obtain a numerical method for simulating Wasserstein gradient flows.

2.2 Fully Discrete JKO

We now turn to the discretization of the dynamic JKO scheme, Problem 1, and the Crank–Nicolson inspired scheme, Problem 2. We begin by noting that the Crank–Nicolson inspired Problem 2 can be rewritten in the same form as Problem 1 by considering the energy

Using this observation, we will now describe our discretization of both problems simultaneously.

2.2.1 Discretization of Functions and Domain

Given an n-dimensional hyperrectangle \(S = \varPi _{I=1}^{n} [a_I,b_I] \subseteq {\mathbb {R}}^{n}\), we discretize it as a union of cubes \(Q_i\), \(i \in {\mathbb {N}}^n\), where in the lth direction, we suppose there are \(N_l\) intervals of spacing \(({ \mathbf \varDelta z})_l = (b_l-a_l)/N_l\):

Piecewise constant functions with respect to this discretization are given by

To discretize Problem 1, we take \(S = {\overline{\varOmega }} \times [0,1] \subseteq {\mathbb {R}}^{d +1}\), where \({\overline{\varOmega }} = \varPi _{i=1}^{n} [a_i,b_i] \). For any \(i \in {\mathbb {N}}^{d+1}\), write \(i = (j,k)\), for the spatial index \(j \in {\mathbb {N}}^d\) and the temporal index \(k \in {\mathbb {N}}\). We let \( N_x \in {\mathbb {N}} \) denote the number of intervals in each spatial direction and \(N_t \in {\mathbb {N}}\) denote the number of intervals in the temporal direction. Take \(\mathbf{\varDelta z} = (\mathbf{\varDelta x}, \varDelta t)\) for \((\mathbf{\varDelta x})_l = (\varDelta x) >0\) for all \(l = 1, \dots , d\) and \(\varDelta t >0\).

We consider piecewise constant approximations \((\rho ^h,m^h)\) of the functions \((\rho ,m)\), with coefficients denoted by \((\rho _{j,k}, m_{j,k})\). For any \( (\rho ,m) \in C({\overline{\varOmega }} \times [0,1])\), one such approximation is the pointwise piecewise approximation \(({\hat{\rho }}^h, {\hat{m}}^h)\), obtained by defining the coefficients \(({\hat{\rho }}_{j,k}, {\hat{m}}_{j,k})\) to be the value of \((\rho ,m)\) on a regular grid of spacing \((\varDelta x) \times (\varDelta t)\):

where \({\mathbf {1}} = [1,1, \dots , 1]^t \in {\mathbb {N}}^d\). Note that, whenever \((\rho ,m) \in C({\overline{\varOmega }} \times [0,1])\), we have that \(({\hat{\rho }}^h, {\hat{m}}^h)\) converges to \((\rho ,m)\) uniformly.

2.2.2 Discretization of Energy Functionals

Next, we approximate the energy functionals by discrete energies \({\mathcal {E}}^h\), beginning with energies of the form (4). Given a piecewise constant function \(\rho ^h\) with coefficients \(\rho _j\),

where \(V^h(x) = \sum _{j} V_j 1_{Q_j}(x)\) is a piecewise constant approximation of V(x) and \(W^h(x,y) = \sum _{j,l} W_{j,l} 1_{Q_j}(x)1_{Q_j}(y) \) is a piecewise constant approximation of \(W(x-y)\). Here, \(W_{j,l} = W(|x_j - x_l|)\) symmetric, i.e., \(W_{j,l} = W_{l,j}\).

Likewise, for energies of the form (4), we consider the following discretization of the energy \({\mathcal {H}}_{\rho _0}\) from Eq. (22) for the Crank–Nicolson inspired scheme, Problem 2,

Finally, to compute Wasserstein geodesics between two measures \(\rho _0, \rho _1 \in {{\mathcal {P}}}_{ac}(\varOmega )\), we consider a discretization of the energy \({\mathcal {G}}_{\rho _1}\) from Eq. (18). Given a piecewise constant approximation \(\rho _1^h\) of \(\rho _1\) and \(\delta \ge 0\), define

2.2.3 Discretization of Derivative Operators

Let \(D^h_t \rho ^h \) and \(D^h_x m^h\) denote the discrete time derivative and spatial divergence on \(\varOmega \times [0,1]\) and let \(\nu ^h\) denote the discrete outer unit normal of \(\varOmega \). (See Hypothesis 3 for the precise requirements we impose on each of these discretizations). For example, in one dimension we may choose a centered difference in space and a forward Euler method in time,

or a Crank–Nicolson method,

We compute these discretizations of the derivatives at the boundary by extending \(m_{j,k} \) to be zero in the direction of the outer unit normal vector. As we can only expect these approximations of the temporal and spatial derivatives to hold up to an error term, we relax the equality constraints from (17) in the following discrete dynamic JKO scheme.

2.2.4 Discrete Dynamic JKO

The discretizations described in the previous sections lead to a fully discrete dynamic JKO problem:

Problem \(1_{j,k}\) (Discrete Dynamic JKO) Fix \(\tau , \delta _1, \delta _2, \delta _3, \delta _4 >0\), \({\mathcal {E}}^h\), and \(\rho _0^h\). Solve the constrained optimization problem,

where \((\rho _{j,k},m_{j,k})\) belong to the constraint set \({\mathcal {C}}^h\) provided that for all j, k,

The inequalities (30) enforce the PDE constraint and the boundary condition; the inequalities (31) enforce the mass constraint and the initial conditions. Recall that, by definition of \(\varPhi \) in Eq. (13), \(\varPhi (\rho _{j,k}, m_{j,k})< +\infty \) only if \(\rho _{j,k}\) is nonnegative. Consequently, if a minimizer \(\rho _{j,k}\) exists, it must be nonnegative.

Remark 3

(relaxation of PDE constraints) A key element of our numerical method is that we relax the equality constraint (17) at the fully discrete level. This reflects the fact that even an exact solution of the continuum PDE will only satisfy the discrete constraints (30-31) up to an error term depending on the order of the finite difference operators.

We allow the choice of \(\delta _i\) to vary for each of the above constraints. However, when the desired exact solution is sufficiently smooth, the optimal choice of \(\delta _i\) for a second-order discretization of the spatial and temporal derivatives is

where \(\tau >0\) is the size of the timestep in the outer time discretization; see equations (6-7). As we will demonstrate in Fig. 3 of our numerics section, relaxing the PDE constraint accelerates convergence to a minimizer of the fully discrete Problem \(1_{j,k}\) without any loss of accuracy with respect to the exact continuum solution.

Finally, note that while the discrete PDE constraint (30) automatically enforces the mass constraint up to order \(\delta _1^2 + \delta _2^2\), we choose to impose the mass constraint separately via the first Eq. in (31). This leads to better performance in examples where the exact solution is not smooth enough to satisfy the discrete PDE constraint up to a high order of accuracy but imposing a stricter mass constraint leads to a higher quality numerical solution; see Fig. 4.

Under sufficient hypotheses on the discrete energy \({\mathcal {E}}^h\) and the initial data \(\rho _0^h\), minimizers of Problem \(1_{j,k}\) exist; see Theorem 1. Furthermore, this discrete dynamic JKO scheme preserves the energy decreasing property of the original JKO scheme. To see this, note that, given an energy \({\mathcal {E}}^h\), time step \(\tau >0\), and initial data \((\rho _0^h)_j\) we may define the fully discrete proximal map by

where \(({\overline{\rho }}_{j,k}, {\overline{m}}_{j,k})\) is any minimizer of Problem \(1_{j,k}\). Independently of which minimizer is chosen, we have

which can be seen by comparing the value of the objective function at the minimizer \(({\overline{\rho }}_{j,k},{\overline{m}}_{j,k})\) to the value of the objective function at \((\rho _{j,k}, m_{j,k}) = ((\rho _0)_j, 0) \in {\mathcal {C}}\) and using the fact that \(\varPhi \ge 0\). Furthermore, by iterating the fully discrete proximal map, we may construct a fully discrete gradient flow sequence

In analogy with the continuum case, we will use this fully discrete JKO scheme to simulate gradient flows. (See Algorithm 3.)

2.3 Primal Dual Algorithms for Fully Discrete JKO

In order to find minimizers of Problem \(1_{j,k}\), we apply a primal dual operator splitting method. Since the constraints in Problem \(1_{j,k}\) are linear inequality constraints, we may rewrite them in the form \(\Vert {{\tilde{{\mathsf {A}}}}}_i {u} - {{\tilde{b}}}_i\Vert _2 \le \delta _i\) for \(i = 1,2,3,4\), where \({u} = (\mathbf {\rho }, \mathbf {m})\), and \(\mathbf {\rho }\) and \(\mathbf {m}\) are vector representations of the matrices \(\rho _{j,k}\) and \(m_{j,k}\). (See the Appendix A for explicit formulas for \(\tilde{\mathsf {A}}_i\) and \({{\tilde{b}}}_i\), in one spatial dimension). Similarly, we may rewrite the first term of the objective function (29) in terms of u, defining

We consider two cases for the energy term in the objective function. When the energy is of the form \({\mathcal {G}}^h_{\rho _1}\), as in Eq. (26), we reframe the problem by removing the energy from the objective function and adding \( \sum _j |\rho _{j, N_t}- (\rho _1^h)_j |^2 (\varDelta x)^d \le \delta _5^2 \) to the constraints (30) and (31), denoting \(\Vert {\mathsf {A}}_i {u} - b_i\Vert _2 \le \delta _i\), for \(i = 1,2,3,4,5\), as the modified constraints. On the other hand, when the energy is of the form (24) or (25), we rewrite it in terms of u as

In particular, if we let \({\mathsf {S}}\) be the selection matrix

then \(F({u}) = {\mathcal {F}}^h({\mathsf {S}}{u})\) and \(H(u) = {\mathcal {H}}_{\rho _0}^h({\mathsf {S}}{u})\), where \({\mathcal {F}}^h\) and \({\mathcal {H}}_{\rho _0}^h\) are defined in (24) and (25), respectively.

This leads to the following two optimization problems:

Problem 3(a)

\(\min _{{u}} \varPhi ({u}) + {\mathfrak {i}}_{ \mathbf{{ \delta }}}({\mathsf {A}}{u}),\) \({\mathfrak {i}}_{ {\varvec{\delta }}} ( {\mathsf {A}}{u}) = \left\{ \begin{array}{cl} 0 &{} \Vert {\mathsf {A}}_i {u} - b_i\Vert _{2} \le \delta _i , \ i = 1, \dots ,5 \\ +\infty , &{} \text { otherwise.} \end{array} \right. \)

Problem 3(b)

\(\min _{{u}} \varPhi ({u}) + 2 \tau E({u}) + {\mathfrak {i}}_{\tilde{\varvec{\delta }}} ({{\tilde{{\mathsf {A}}}}} {u})\), \({\mathfrak {i}}_{\tilde{\varvec{\delta }}} ({{\tilde{{\mathsf {A}}}}} {u}) = \left\{ \begin{array}{cl} 0 &{} \Vert {{\tilde{{\mathsf {A}}}}}_i {u} - \tilde{b}_i\Vert _{2} \le {{\tilde{\delta }}}_i , \ i = 1,\dots ,4 \\ +\infty , &{} \text { otherwise.} \end{array} \right. \)

To compute the Wasserstein distance, we solve Problem 2.3, and to compute the gradient flow of an energy, we iterate Problem 2.3\(O(\frac{1}{\tau })\) times, for either \(E(u) = F(u)\) (classical JKO) or \(E(u) = H(u)\) (Crank–Nicolson inspired scheme).

Primal-dual methods for solving optimization problems in which the objective function is the sum of two convex functions, as in Problem 2.3, are widely available [52]. However, analogous methods for optimizations problems in which the objective function is the sum of three convex functions, as in Problem 2.3, have only recently emerged [62, 106]. In particular, in Algorithm 1, for Problem 2.3, we use Chambolle and Pock’s well-known primal dual algorithm, and in Algorithm 2, for Problem 2.3, we use Yan’s recent extension of this algorithm to objective functions with three convex terms. Both algorithms offer an extended range of primal and dual step sizes \(\lambda \) and \(\sigma \) and low per-iteration complexity, due to the sparseness of \({\mathsf {S}}\), \({\mathsf {A}}\), and \({\tilde{{\mathsf {A}}}}\). Note specifically that the success of Algorithm 1 depends on the ease of computing the proximal operators related to \(\phi \) and \({\mathfrak {i}}_\delta \), and therefore if we simply group the additional energy term in Problem 2.3 to either \(\phi \) or \({\mathfrak {i}}_\delta \), it would violate such property. Instead, we shall consider E(u) as a separate term and take advantage of its smoothness, as shown in Algorithm 2. Finally, in Algorithm 3, we describe how Algorithm 2 can be iterated to approximate the full JKO sequence and, consequently, solutions of a range of nonlinear partial differential equations of Wasserstein gradient flow type.

To initialize both algorithms, we choose \(\phi ^0\) and \(m^0\) to be zero vectors, and for \(\rho ^0\), we let its components at the initial time (i.e., \(k = 0\)) be \(\rho _0(x)\) evaluated on an equally spaced grid of width \(\varDelta x\), and other times to be zero. The stopping criteria consist of checking the PDE constraint (30)–(31) along with the convergence monitors:

The proximal operator, which appears in Algorithms 1 and 2, is defined by

For both \(h = \sigma i_{\varvec{\delta }}^*\) and \(h = \lambda \varPhi \), there are explicit formulas for the proximal operators. By Moreau’s identity, we may write \(\text {Prox}_{\sigma i_{\varvec{\delta }}^*}(x)\) in terms of projections onto balls of radius \(\delta _i\) centered at \(b_i\) for the ith portion of vector x:

For the proximal operator of \(\varPhi \), as shown by Peyré, Papadakis, and Oudet [95, Proposition 1],

where \(\rho ^*\) is the largest real root of the cubic polynomial equation \(P(x) := (x-\rho )(x+\lambda )^2-\frac{\lambda }{2}|m|^2 = 0,\) and \(m^*\) can be obtained by \(m^* = \rho ^*m/(\rho ^*+\lambda )\). By computing the proximal operator exactly, our primal dual method is positivity preserving, respecting a key property of the original Problems 1 and \(1_{j,k}\).

As the computations of both proximal operators (35), (36) are component-wise, they can easily be parallelized. Likewise, the computation of the gradient \(\nabla E\) is also component-wise:

Remark 4

(discrete convolution) As written, the above functionals involves a computation of the convolutions \(\sum _{l } W_{j,l} \rho _{l, N_t}\) and \(\sum _{l } W_{j,l} \rho _{l,0}\), which can be achieved efficiently using the fast Fourier transform. Note that since the product of the discrete Fourier transforms of two vectors is the Fourier transform of the circular convolution and the interaction potential \(W_{j-k}=W(x_j-x_k)\) is not a periodic function, we need zero-padding for computing the convolution. For the 1D case, we can first use the fast Fourier transform to compute the circular convolution of \(\mathbf {W}=(W_j)_{j=-N_x+2}^{N_x-2}\) and \((\mathbf {\rho }, ~ (\mathbf {0})_{N_x-2})\), and then extract the last \(N_x-1\) elements, which are the desired convolution \(\sum _{k } W_{j-k} \rho _k\) for \(1\le j \le N_x-1\).

Embedding Algorithm 2 to the JKO iteration, we have the following algorithm for Wasserstein gradient flows.

Note that line 6 in Algorithm 3 is to construct a better initial guess for \(\rho \) at each JKO iteration by applying an extrapolation.

Remark 5

(Comparison of our numerical method to previous work) Our definition of the indicator function in Problems 3(a) and 3 (b) differs from previous work, and as a result, our primal-dual algorithm does not require the inversion of the matrix \({\mathsf {A}}{\mathsf {A}}^T\) [7, 95], which makes it quite efficient in high dimensions thanks to the sparsity of \({\mathsf {A}}\). A similar approach is taken in a recent preprint [81] to compute the earth mover’s distance \(W_1\), though, in this context, the earth mover’s distance is dissimilar from the Wasserstein distance, since it does not require an extra time dimension and is thus a lower-dimensional problem.

A second difference between our method and the approach in previous works is that, since P(x) has at most one strictly positive root, it can be obtained by the general solution formula for cubic polynomials with real coefficients. Therefore, in our numerical simulations, we may compute the proximal operator \(\text {Prox}_{\lambda \varPhi }(u)\) by using this general solution formula, rather than via Newton iteration [95]. As a consequence, our method is truly positivity preserving, as opposed to positivity preserving in the limit as \(\varDelta x, \varDelta t \rightarrow 0\).

We close this section by recalling sufficient conditions on the primal and dual step sizes \(\sigma \) and \(\lambda \) that ensure Algorithms 1 and 2 converge to minimizers of Problems 2.3 and 2.3.

Proposition 1

(Convergence of Algorithm 1, c.f. [52]) Suppose \(\sigma \lambda <1/\lambda _{max}({{\mathsf {A}}}{{\mathsf {A}}}^t)\) and a minimizer of Problem 2.3 exists. Then, as \(\mathrm {Iter_{max}} \rightarrow +\infty ,\) and \(\epsilon _1\), \(\epsilon _2 \rightarrow 0\) in the stopping criteria (33) (34), the output \({u}^*\) of Algorithm 1 converges to a minimizer of Problem 2.3.

Proposition 2

(Convergence of Algorithm 2, c.f. [106]) Suppose that the discrete energy E(u) defined in Eq. (32) is proper, lower semi-continuous, convex, and there exists \(\beta >0\) such that \(\left\langle u_1-u_2, \nabla _u E(u_1) - \nabla _u E(u_2)\right\rangle \ge \beta \Vert \nabla E(u_1) - \nabla E(u_2)\Vert ^2\). Suppose further that \(\sigma \lambda <1/\lambda _{max}({\tilde{{\mathsf {A}}}}{\tilde{{\mathsf {A}}}}^t)\), \(\lambda <2\beta \), and a minimizer of Problem 2.3 exists. Then, as \(\mathrm{Iter_{max}} \rightarrow +\infty \) and \(\epsilon _1\), \(\epsilon _2 \rightarrow 0\), the output \(u^*\) converges to a minimizer of Problem 2.3.

Note here that the co-coercivity requirement on \(\nabla E\) in the above proposition is equivalent to require the Lipschitz continuity of \(\nabla E\), i.e., \(\Vert \nabla _u E(u_1) - \nabla _u E(u_2)\Vert \le \frac{1}{\beta } \Vert u_1 - u_2\Vert \). For the energy of the form (4), this requirement reduces to the boundedness of \(U''(\rho )\) and W, which can be satisfied independent of the numerical resolution if we consider bounded solution (no finite time blow up in \(\rho \)) and nonsingular interaction kernel. In the case when W is singular, for example when W is a Newtonian interaction potential, we approximate W by a continuous function via convolution with a mollifier; see Remark 7.

3 Convergence

We now prove the convergence of solutions of the fully discrete JKO scheme, Problem \(1_{j,k}\), to a solution of the continuum JKO scheme, Problem 1. We begin, in Sect. 3.1, by describing the hypotheses we place on the underlying domain \(\varOmega \), the energy \({\mathcal {E}}\), the initial data \(\rho _0\), and the discretization operators. Then, in Sect. 3.2, we show that minimizers of Problem \(1_{j,k}\) exist, provided the discretization is sufficiently refined. Finally, in Sect. 3.3, we prove that any sequence of minimizers of Problem \(1_{j,k}\) has a subsequence that converges to a minimizer of Problem 1. In order for our finite difference approximation to converge, we assume throughout that a smooth, positive minimizer of the continuum JKO scheme Problem 1 exists. See hypothesis (H6) and Remark 9 for further discussion of this assumption.

3.1 Hypotheses

We impose the following hypotheses on the underlying domain, energy, and discretization operators.

-

(H1)

\(\varOmega = \varPi _{i=1}^d (a_i,b_i) \subseteq {{\mathbb {R}}^d}\), for \(a_i< b_i \in {\mathbb {R}}\). We assume that the spacing of the spatial discretization \((\varDelta x) >0 \) and the temporal discretization \((\varDelta t)>0\) are both functions of h satisfying \(\lim _{h \rightarrow 0} (\varDelta x) = \lim _{h \rightarrow 0} (\varDelta t) = 0\).

-

(H2)

For any piecewise constant function \(\rho ^h\) on \(\varOmega \), the discrete energy functional \({\mathcal {E}}^h\) has one of the following forms, as described in Sect. 2.2.2:

-

(a)

\( {\mathcal {F}}^h(\rho ^h) = \sum _{j} \left( U( \rho _j) + V_j \rho _j \right) {{(\varDelta x)^d}}+ \sum _{j,l} W_{j, l} \rho _j \rho _{l} (\varDelta x)^{2d} \)

-

(b)

\( {\mathcal {H}}^h_{\rho _0}(\rho ^h) = \frac{1}{2} {\mathcal {F}}^h(\rho ^h) + \frac{1}{2} \sum _{j} \left( U'( (\rho _0)_j) + V_j +\sum _l W_{j, l} (\rho _0)_l (\varDelta x)^{d} \right) \rho _j (\varDelta x)^{d} \)

-

(c)

\({{\mathcal {G}}}_{\rho _1}^h(\rho _j) := {\left\{ \begin{array}{ll} 0 &{}\text { if } \sum _j |\rho _j - ( {\rho }^h_1)_j |^2 (\varDelta x)^d \le \delta _5^2 \\ +\infty &{}\text { otherwise.} \end{array}\right. } \)

We place the following assumptions on U, V, and W and the target measure \(\rho _1\):

-

(i)

Either \(U \equiv 0\) or \(U \in C([0, +\infty ))\) is convex, \(U \in C^1((0,+\infty ))\), \(\lim _{r \rightarrow +\infty } \frac{U(r)}{r} = +\infty \), and \(U(0) = 0\);

-

(ii)

\( V^h(x) := \sum _{j \in {\mathbb {Z}}^d} V_{j} 1_{Q_{j}}(x) \) and \(W^h(x,y) := \sum _{(j,l) \in {\mathbb {Z}}^d \times {\mathbb {Z}}^d} W_{j,l} 1_{Q_{j}}(x) 1_{Q_{l}}(y)\) are piecewise constant approximations of \(V,W \in C( {\overline{\varOmega }})\) converging uniformly on \({\overline{\varOmega }}\).

-

(iii)

\(\rho _1 \in C^1({\overline{\varOmega }})\) and \(\rho _1^h\) is a pointwise piecewise constant approximation of \(\rho _1\).

-

(a)

-

(H3)

\(D^{h}_t\) and \(D^{h}_x\) are finite difference approximations of the time derivative and spatial divergence. We assume that \(D^h_t\) is a forward Euler method in time, whereas \(D^h_x\) can be given by an explicit or implicit scheme of first or higher order. We denote by \(D^{-h}_t\) and \(D^{-h}_x\) the dual operators with respect to the \(\ell ^2\) inner product, and we assume the following integration by parts formulas hold for all piecewise constant functions \(\rho ^h,f^h: [0,1] \rightarrow {\mathbb {R}}\),

$$\begin{aligned} \int _0^1 D^{h}_t \rho ^h f^h \mathrm {d}t = \left( \left. \rho ^h f^h \right| _{0}^1 \right) -\int _0^1 \rho ^h D^{-h}_t f^h \mathrm {d}t \end{aligned}$$and if \(m^h:\varOmega \rightarrow {\mathbb {R}}^d\), \(f^h : \varOmega \rightarrow {\mathbb {R}}\),

$$\begin{aligned} \int _{\varOmega } D^{h}_x m^h f^h \mathrm {d}x = \int _{\partial \varOmega } f^h m^h \cdot \nu ^h \mathrm {d}x - \int _{\varOmega } m^h D^{-h}_x f^h \mathrm {d}x , \end{aligned}$$where \(\nu ^h: {\overline{\varOmega }} \rightarrow {{\mathbb {R}}^d}\) is the discrete outer unit normal of \(\varOmega \). Finally, we assume there exists \(C>0\) depending on the domain \({\overline{\varOmega }} \times [0,1]\), so that, for any \(f \in C^1({\overline{\varOmega }} \times [0,1]; {\mathbb {R}})\) and \(v \in C^1({\overline{\varOmega }} \times [0,1]; {\mathbb {R}}^d)\), if \(({f}^h,{v}^h)\) are pointwise piecewise constant approximations,

$$\begin{aligned} \Vert D^{h}_t {f}^h - \partial _t f\Vert _\infty&\le C\Vert \partial _t^2 f \Vert _\infty (\varDelta t) ,&\Vert D^{-h}_t {f}^h - \partial _t f \Vert _\infty&\le C\Vert \partial _t^2 f \Vert _\infty (\varDelta t) \\ \Vert D^{h}_x {v}^h - \nabla \cdot v\Vert _\infty&\le C \Vert D^2 v\Vert _\infty (\varDelta x),&\Vert D^{-h}_x {f}^h - \nabla f \Vert _\infty&\le C \Vert D^2 f \Vert _\infty (\varDelta x) \\ \Vert {v}^h \cdot \nu ^h - v \cdot \nu \Vert _\infty&\le C \Vert v\Vert _\infty (\varDelta x) . \end{aligned}$$(See Sect. 2.2.3 for finite difference approximations satisfying these hypotheses.)

-

(H4)

The constraint relaxation parameters \(\delta _1, \delta _2, \delta _3, \delta _4 \ge 0\) are functions of h with \( \lim _{h \rightarrow 0} \delta _i = 0\), for all i. If the energy is of the form (H2c), we require that \(\delta _5\) is a function of h satisfying \( \lim _{h \rightarrow 0} \delta _5 = 0\) and \( \lim _{h \rightarrow 0} \left( \varDelta x + \varDelta t\right) /\delta _5 = 0\).

-

(H5)

The initial data of the continuum problem satisfy \(\rho _0 \in C^1({\overline{\varOmega }})\) and \( {\rho }_0^h\) is a pointwise piecewise constant approximation of \(\rho _0\).

-

(H6)

Given the domain, energy, and initial data described in the previous hypotheses, there exists a minimizer \(( {\rho }, {m})\) of the continuum Problem 1 satisfying \( {\rho } \in C^2([0,1]; C^1({\overline{\varOmega }}))\), \( {\rho } >0\), and \( {m} \in C^1([0,1]; C^2({\overline{\varOmega }}))\).

To ease notation in the following convergence proof, we observe that Problem \(1_{j,k}\) may be rewritten as follows in terms of \((\rho ^h, m^h)\), the piecewise constant functions on \(\varOmega \times [0,1]\) corresponding to the coefficients \((\rho _{j,k}, m_{j,k})\).

Problem \({1^h}\) (Discrete Dynamic JKO) Fix \(\tau , \delta _1, \delta _2, \delta _3, \delta _4 >0\), \({\mathcal {E}}^h\), and \(\rho _0^h\). Solve the constrained optimization problem,

where \((\rho ^h,m^h)\) belong to the constraint set \({\mathcal {C}}^h\) provided that they are piecewise constant functions on \(\varOmega \times [0,1]\) and the following inequalities hold

Similarly, we may rewrite the definition of the discrete energies in hypothesis (H2) in terms of a piecewise constant functions \(\rho ^h\) on \(\varOmega \) corresponding to \(\rho _j\),

Recall that, by definition of \(\varPhi \) in equation (13), \(\varPhi (\rho ^h, m^h)< +\infty \) only if \(\rho ^h\) is nonnegative. Consequently, if a minimizer \(\rho \) exists, it must be nonnegative.

We conclude this section with several remarks on the sharpness of the preceding hypotheses.

Remark 6

(assumption on domain \(\varOmega )\) In hypothesis (H1), we assume that \(\varOmega \) is an n-dimensional hyperrectangle. We impose this assumption for simplicity, as it provides an natural interpretation of the discretized outer unit normal \(\nu ^h\), which is essential in imposing the boundary conditions for our PDE constraint at the discrete level. More generally, our convergence result can be extended to any Lipschitz domain, as long as sufficient care is taken to define the discrete outer unit normal and the corresponding no flux boundary conditions.

Remark 7

(assumption on energy) As described in hypothesis (H2), our convergence result applies to internal U, drift V, and interaction W potentials that are sufficiently regular on \({\overline{\varOmega }}\). Our assumptions on U are classical and ensure that the internal energy is lower semicontinuous with respect to weak-* convergence [2, Remark 9.3.8]. Our assumptions on V and W, on the other hand, are somewhat stronger, and in practice, one often encounters partial differential equations for which the corresponding choices of V and W are not continuous. However, there are robust methods for approximating these potentials by continuous functions that ensure convergence of the gradient flows. For example, the second author and Topaloglu provide sufficient conditions on discontinuous interaction potentials W for which gradient flows of the regularized interaction potential, \(W_\varepsilon := W*\varphi _\varepsilon \) for a smooth mollifier \(\varphi _\varepsilon \), converge to gradient flows of the original interaction potential W, as well as conditions that ensure minimizers of \(W_\varepsilon \) converge to minimizers of W [59]. (The convergence of general stationary points of \(W_\epsilon \) that are not global minimizers to stationary point of W remains open.)

Remark 8

(assumption on \(\delta _5)\) In hypothesis (H4), it is essential that \(\delta _5\) not vanish too quickly with respect to other parameters in the discretization. A simple illustration of this fact arises in the case that \(\delta _1 \equiv \delta _2 \equiv \delta _3 \equiv \delta _4 \equiv 0\). In this case, we cannot choose \(\delta _5 \equiv 0\), since our pointwise piecewise approximation of the initial data \(\rho _0^h\) will not generally have the same mass as our pointwise piecewise approximation of the target measure \(\rho _1^h\), and if they do not have the same mass, minimizers of the discrete problem do not exist. Consequently, it would be impossible to prove that minimizers of the fully discrete problem converge to minimizers of the continuum problem. On the one hand, this does not greatly impact the performance of our numerical method, as can be seen by considering previous work by Papadakis, Péyre, and Oudet, which numerically implements this approach [95]. On the other hand, our numerical simulation in Fig. 3 indicates that poor choice of the relaxation parameters can cause the method to iterate longer than necessary, without any improvement in accuracy.

Our requirement that \(\lim _{h \rightarrow 0} (\varDelta x + \varDelta t)/\delta _5 =0\) is sufficient to fix this problem and ensure convergence of the method, and this requirement is nearly sharp. To see this, note that, for an arbitrary pointwise piecewise approximation \(\rho _0^h\) of a continuous function \(\rho _0\), we cannot in general achieve accuracy of \(| \int _\varOmega \rho _0^h - \int _\varOmega \rho _0|\) better than \(O (\varDelta x)\). If either \(\delta _1\) and \(\delta _3\), the parameters for the PDE constraint and the mass constraint, are chosen arbitrarily small, then \(|\int _\varOmega \rho ^h(\cdot ,1) - \int _\varOmega \rho _0^h|\) can likewise be made arbitrarily small. Thus, since \(\rho _0, \rho _1 \in {{\mathcal {P}}}_{ac}(\varOmega )\),

so we much have \(\delta _5 \ge O(\varDelta x)\). While a CFL-type condition is not necessary for the stability of our discretization of the PDE constraint, since \(\rho \) and m indeed become coupled in the continuum limit (see Eqs. (8) and (12)), one should expect \((\varDelta t) \le O(\varDelta x)\) to give the best balance between computational accuracy and cost, and we indeed observe this numerically. Combining these facts shows that enforcing that \(\delta _5\) cannot decay faster than \(O(\varDelta x + \varDelta t)\) by assuming \(\lim _{h \rightarrow 0} (\varDelta x + \varDelta t)/\delta _5 =0\) is nearly optimal.

Remark 9

(assumption on existence of smooth, positive minimizer) In hypothesis (H6), we suppose that there exists a sufficiently regular minimizer \(({\overline{\rho }},{\overline{m}})\), \({\bar{\rho }}>0\), of the continuum problem. Our proof of the existence of minimizers of the fully discrete problem and our proof that minimizers of the discrete problems converge to a minimizer of the continuum problem as \(h \rightarrow 0\) strongly rely on this assumption. In particular, the smoothness assumption allows us to use convergence of the finite difference operators, described in hypothesis (H3), to construct an element of \({\mathcal {C}}^h\) in Proposition 3. The positivity assumption allows us to conclude that \(\nabla _{\rho , m} \varPhi \) is uniformly bounded on the range of \({\bar{\rho }}\), which we use to prove the \(\limsup \) inequality for the recovery sequence in Theorem 2(b).

From the perspective of approximating gradient flows, which are solutions of diffusive partial differential equations (3), such regularity and positivity can be guaranteed as long as the initial data are smooth and positive and either the diffusion is sufficiently strong or the drift and interaction terms do not cause loss of regularity. On the other hand, developing conditions on the energy and initial data that ensure such regularity and positivity holds at the level of the JKO scheme, for minimizers of Problem 1, remains largely open: results on the propagation of \(L^p({{\mathbb {R}}^d})\) or BV bounds along the scheme have only recently emerged [17, 50, 63].

From the perspective of approximating Wasserstein geodesics, the now classical regularity theory developed by Caffarelli and Urbas ensures that if the source and target measures \(\rho _0\) and \(\rho _1\) are smooth and strictly positive, then the minimizer of Problem 1\(({\bar{\rho }},{\bar{m}})\) is also smooth and strictly positive. (See, for example, [105, Section 4.3] and [2, Section 8.3].)

Along with this analytical justification for our smoothness and positivity assumptions, our numerical results also indicate that such assumptions are in general necessary. For example in Fig. 4, we observe that if the source and target measure of a Wasserstein geodesic are not sufficiently smooth, the numerical solution introduces artificial regularity. Likewise, even in Fig. 6, we observe that the numerical simulation is strictly positive (though very close to zero in places), while the exact solution is identically zero outside of its support. Still, in spite of the fact that our theoretical convergence result requires smoothness and positivity assumptions, in practice our numerical method still performs well on nonsmooth or nonpositive problems, provided that the spatial and temporal discretization are taken to be sufficiently small; see Figs. 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, and 18.

Finally, these types of smoothness and positivity assumptions are typically needed in convergence proofs for numerical methods based on the JKO scheme. For example, in a method based on the Monge Ampére approximation of the Wasserstein distance, the exact solution is required to be uniformly bounded above and below [10]. Likewise, while rigorous convergence results for fully discrete numerical methods based on entropic or Fisher information regularization remain open, since these methods correspond to introducing numerical diffusion at the level of the PDE, they automatically enforce smoothness and positivity [28, 55, 82].

3.2 Existence of Minimizers

We now show that, under the hypotheses described in the previous section, minimizers of the fully discrete JKO scheme, Problem \({1^h}\), exist for all \(h>0\) sufficiently small. We begin with the following proposition, which constructs a specific element in the constraint set \({\mathcal {C}}^h\), which we will use both in our proof of existence of minimizers and in our \(\varGamma \)-convergence results in the next section.

Proposition 3

(construction of element in \({\mathcal {C}}^h)\) Suppose that hypotheses (H1)–(H6) hold, and choose \((\rho , m) \in {\mathcal {C}}\) satisfying \( {\rho } \in C^2([0,1]; C^1({\overline{\varOmega }}))\), \( {\rho } >0\), and \( {m} \in C^1([0,1]; C^2({\overline{\varOmega }}))\). Then for \(h>0\) sufficiently small, there exists \(({\tilde{\rho }}^h, {\tilde{m}}^h) \in {\mathcal {C}}^h\) satisfying \(({\tilde{\rho }}^h, {\tilde{m}}^h) \xrightarrow {h \rightarrow 0} (\rho ,m)\) uniformly on \(\varOmega \times [0,1]\) and

If, in addition, the energy satisfies hypothesis (H2c) and \({\mathcal {E}}(\rho (\cdot , 1))<+\infty \), then we have

for all \(h>0\) sufficiently small.

Proof

We construct \((\rho ^h,m^h) \in {\mathcal {C}}^h\) as follows. Let \({\hat{m}}^h\) be a pointwise piecewise constant approximation of m; see Eq. (23). Recall that \(\nu ^h\) is the discrete outer unit normal vector. We define \({\tilde{m}}^h: \varOmega \times [0,1] \rightarrow {{\mathbb {R}}^d}\) component-wise to respect the no flux boundary conditions, letting \(({\tilde{m}}^h)_l\) denote the lth component of the vector for \(l = 1, \dots , d\). If \(x \in \partial \varOmega \), then we define

Otherwise, we take \({\tilde{m}}^h(x,t) = {\hat{m}}^h(x,t)\). Define \({\tilde{\rho }}^h: \varOmega \times [0,1] \rightarrow {\mathbb {R}}\) so that \({\tilde{\rho }}^h(x,0) = {\rho }_0^h\) and \(D^{h}_t {\tilde{\rho }}^h(x,t) + D^h_x {\tilde{m}}^h(x,t) \equiv 0\).

We begin by showing that \(({\tilde{\rho }}^h, {\tilde{m}}^h) \in {\mathcal {C}}^h\). By construction, for all \(h >0\),

Taking \(f^h \equiv 1\) in Hypothesis (H3) and applying the PDE constraint ensures that, for all \(s \in [0,1]\) and \(k \in {\mathbb {N}}\) so that \(k (\varDelta t) \le s < (k+1) \varDelta t\),

Thus, we also obtain

This concludes the proof that \(({\tilde{\rho }}^h, {\tilde{m}}^h) \in {\mathcal {C}}^h\).

We now show that \(({\tilde{\rho }}^h, {\tilde{m}}^h) \rightarrow (\rho ,m)\) uniformly on \(\varOmega \times [0,1]\) as \(h \rightarrow 0\). We begin by proving convergence of \({\tilde{m}}^h\) to m. Due to hypothesis (H1) on our domain \(\varOmega \), whenever \(e_i \cdot \nu ^h(x) \ne 0\), there exists \(y \in \partial \varOmega \) so that \(|y-x| \le 2\sqrt{d} (\varDelta x)\) and \( \nu (y) = e_i\). Thus, whenever \(e_i \cdot \nu ^h(x) \ne 0\), the continuum boundary condition \(m(y,t) \cdot \nu (y) = 0\) ensures that for all \(t \in [0,1]\),

We also have that, for all \((x,t) \in \varOmega \times [0,1]\),

Therefore, for all \((x,t) \in \varOmega \times [0,1]\), there exists \(C_m = C_m(d, \Vert Dm\Vert _\infty , \Vert \partial _t m \Vert _\infty )>0\) so that

We now prove the convergence of \({\tilde{\rho }}^h\) to \(\rho \). Since \((\rho ,m)\) is a classical solution of the PDE constraint and \({\tilde{\rho }}^h: \varOmega \times [0,1] \rightarrow {\mathbb {R}}\) is defined by the conditions that \({\tilde{\rho }}^h(x,0) = {\hat{\rho }}_0^h\) and \(D^{h}_t {\tilde{\rho }}^h(x,t) + D^h_x {\tilde{m}}^h(x,t) \equiv 0\), for \((x,t) \in \varOmega \times [0,1]\) and \(k \in {\mathbb {N}}\) so that \(k (\varDelta t) \le t < (k+1) (\varDelta t)\), we have

Since \({\tilde{\rho }}^h \rightarrow \rho \) uniformly and \(\rho >0\), we immediately obtain (39).

Finally, suppose the energy satisfies (H2c). Since \({\mathcal {E}}(\rho (\cdot , 1)) = {\mathcal {G}}_{\rho _1}(\rho (\cdot , 1))< +\infty \), we have \(\rho (\cdot , 1) = \rho _1\). By inequality (41) and the fact that \(\rho ^h_1\) is a pointwise piecewise approximation of \(\rho (\cdot , 1)\),

where \(C_{\rho ,m} = C_{\rho ,m}(\varOmega ,\Vert \nabla \rho \Vert _\infty ,\Vert \nabla \cdot m \Vert _\infty , \Vert D^2m\Vert _\infty )>0\). By hypothesis (H4), \(\lim _{h \rightarrow 0} \frac{(\varDelta x + \varDelta t)}{\delta _5} \rightarrow 0\). Thus, for h sufficiently small,

which completes the proof.

\(\square \)

Theorem 1

(minimizers of discrete dynamic JKO exist) Suppose that hypotheses (H1)–(H6) hold. Then for all \(h>0\) sufficiently small, a minimizer of Problem \({1^h}\) exists.

Proof

First, we note that Proposition 3 ensures that, for \(h>0\) sufficiently small, the constraint set \({\mathcal {C}}^h\) is nonempty and contains some \((\rho ^h,m^h)\) satisfying \(\rho ^h > 0\). If the energy satisfies (H2a) or (H2b), then we immediately obtain \({\mathcal {E}}^h(\rho ^h( \cdot , 1))<+\infty \). Similarly, if the energy satisfies (H2c), then inequality (40) in Proposition 3 again ensures that \({\mathcal {E}}^h(\rho ^h(\cdot ,1)) < +\infty \).

Since \(\varPhi (\rho ^h,m^h)<+\infty \) whenever \(\rho ^h \ge 0\), this ensures that value of the objective function in the discrete minimization problem \({1^h}\) is not identically \(+\infty \) on the constraint set. Therefore,

and we may choose a minimizing sequence \((\rho ^h_n, m^h_n) \in {\mathcal {C}}^h\) that converges to the infimum. We may assume, without loss of generality, that

To conclude the proof of the theorem, we will now show that there exists \((\rho ^h_*, m^h_*)\) so that a subsequence of \((\rho ^h_n, m^h_n)\) converges to \((\rho ^h_*, m^h_*)\) uniformly on \(\varOmega \times [0,1]\). Then, since the objective functional \({\mathcal {E}}^h\) is lower semi-continuous along uniformly convergent sequences [1, Example 2.36] and the constraint set \({\mathcal {C}}^h\) is closed under uniform convergence for fixed \(h>0\), \( (\rho ^h_*, m^h_*)\) must be a minimizer of the fully discrete problem.

In order to obtain compactness of \((\rho ^h_n, m^h_n)\), first note that (42) ensures \( \varPhi ( \rho ^h, m^h) < +\infty \) on \({\overline{\varOmega }} \times [0,1]\), so \(\rho ^h \ge 0\) on \({\overline{\varOmega }}\). Furthermore, the mass constraint (38) ensures that there exists \(R = R(h) >0\), depending on \(\varOmega \), \((\varDelta x)\), \((\varDelta t)\), and \(\delta _3\) so that \(|\rho ^h_n(x,t)| \le R\) for all \((x,t) \in \varOmega \times [0,1]\). Therefore, the vector of coefficients \((\rho ^h_n)_{j,k}\) for this piecewise constant function satisfies \((\rho ^h_n)_{j,k} \in B_R(0) \subseteq {\mathbb {R}}^{N_x^d N_t}\). Consequently, by the Heine–Borel theorem, there exists a vector \((\rho ^h_*)_{j,k} \in {\mathbb {R}}^{N_x^d N_t}\) so that, up to a subsequence, \((\rho ^h_n)_{j,k} \rightarrow (\rho ^h_*)_{j,k}\). Therefore, if \(\rho ^h_*\) denotes the corresponding piecewise constant function, we have that, up to taking a subsequence which we again denote by \(\rho ^h_n(x,t)\), \(\lim _{n \rightarrow +\infty } \rho ^h_n(x,t) = \rho ^h_*(x,t)\) uniformly on \(\varOmega \times [0,1]\).

Next, we show that

If the energy satisfies (H2c), then \({\mathcal {E}}^h(\rho ^h_n(\cdot , 1)) \ge 0\) for all n, and the above inequality is immediate. If the energy satisfies (H2a) or (H2b), then this follows from the fact that U is bounded below on \([0, +\infty ]\), V and W are bounded below on \({\overline{\varOmega }}\) and \(\rho ^h_n(x,t) \rightarrow \rho ^h_*(x,t)\) uniformly.

Combining (43) and (44), we obtain

Furthermore, since \(0 \le \rho ^h_n(x,t) \le R\) for all \((x,t) \in \varOmega \times [0,1]\), \(n \in {\mathbb {N}}\), we have

Therefore, combining (45) and (46), we obtain that there exists \(R' = R'(h)>0\), depending on \(\varOmega \), \((\varDelta x)\), \((\varDelta t)\), and \(\delta _3\), so that \(|m^h_n(x,t)| \le R'\) for all \((x,t) \in \varOmega \times [0,1]\). Arguing as before, the Heine–Borel theorem ensures that, up to a subsequence, \(\lim _{n \rightarrow +\infty } m^h_n(x,t) = m^h_*(x,t)\) uniformly on \(\varOmega \times [0,1]\), for some piecewise constant function \(m^h_*(x,t)\). This gives the result.

\(\square \)

3.3 Convergence of Minimizers

We now prove that minimizers of the discrete dynamic JKO scheme, Problem \({1^h}\) converge to minimizers of Problem 1 as \(h \rightarrow 0\). We begin with the following lemma, showing that any \((\rho ^h, m^h) \in {\mathcal {C}}^h\) satisfies a weak form of the PDE constraint, in the limit as \(h \rightarrow 0\).

Lemma 1

(properties of \({\mathcal {C}}^h)\) Suppose that hypotheses (H1)–(H6) hold, and fix \((\rho ^h,m^h) \in {\mathcal {C}}^h\) so that \(\int _0^1 \int _\varOmega \varPhi (\rho ^h, m^h) <+\infty \) for each \(h>0\). Then \(\rho ^h(\cdot , 0) \rightarrow \rho _0\) in \(L^2(\varOmega )\), and there exist \(\rho \in {{\mathcal {P}}}(\varOmega \times [0,1])\) and \(\mu \in {{\mathcal {P}}}(\varOmega )\) so that, up to a subsequence, \(\rho ^h {\mathop {\rightharpoonup }\limits ^{*}}\rho \) and \(\rho ^h(\cdot , 1) {\mathop {\rightharpoonup }\limits ^{*}}\mu \). Furthermore, for any piecewise constant function \(f^h\) with \(\sup _{h>0} \Vert f^h \Vert _{L^2(\varOmega \times [0,1])} +\Vert f^h \Vert _{L^2(\partial \varOmega \times [0,1])} < +\infty \), we have

Proof

By hypothesis (H6), \( \rho ^h_0 \rightarrow \rho _0\) uniformly on \({\overline{\varOmega }}\). Likewise, the constraint on the initial data (38) and (H4) ensure \(\lim _{h \rightarrow 0} \Vert \rho ^h(\cdot , 0) - {\rho }_0^h \Vert _{L^2(\varOmega )} \le \lim _{h \rightarrow 0} \delta _4 = 0\). Thus, \(\rho ^h(\cdot , 0) \rightarrow \rho _0\) in \({L^2(\varOmega )}\).

We now turn to Eq. (47). By the PDE constraint and boundary conditions (37) and summation by parts, via hypotheses (H3),

where, in the last line, we use that (H4) ensures \(\delta _2, \delta _4 \rightarrow 0\) and the fact that \(f^h\) is bounded uniformly in h in \(L^2(\varOmega \times [0,1]\) and \(L^2(\partial \varOmega \times [0,1])\).

Next, we show that there exist \(\rho \in {{\mathcal {P}}}(\varOmega \times [0,1])\) and \(\mu \in {{\mathcal {P}}}(\varOmega )\) so that, up to a subsequence, \(\rho ^h {\mathop {\rightharpoonup }\limits ^{*}}\rho \) and \(\rho ^h(\cdot , 1) {\mathop {\rightharpoonup }\limits ^{*}}\mu \). By Hölder’s inequality and the mass constraint (38),

where, in the last line, we use that (H4) ensures \(\delta _3 \rightarrow 0\). Since hypothesis (H6) ensures \(\rho _0^h \rightarrow \rho _0\) uniformly and \(\int _\varOmega \rho _0 = 1\), we obtain,

Furthermore, since \(\int _0^1 \int _\varOmega \varPhi (\rho ^h,m^h) < +\infty \) for each \(h >0\), we must have \(\rho ^h \ge 0\) on \(\varOmega \times [0,1]\), and the above equation ensures \(\sup _{h >0} \Vert \rho ^h\Vert _{L^1(\varOmega \times [0,1])} < +\infty \). Thus, classical functional analysis results ensure there exists a subsequence that converges to some \(\rho \in {{\mathcal {P}}}(\varOmega \times [0,1])\) in the weak-* topology (see, e.g., [20, Section 3]).

Finally, taking \(f^h \equiv 1\) in Eq. (47) gives,

Arguing as above, we obtain that, up to a further subsequence, \(\rho ^h(\cdot , 1) {\mathop {\rightharpoonup }\limits ^{*}}\mu (\cdot )\) for \(\mu \in {{\mathcal {P}}}(\varOmega )\). \(\square \)

We now prove that the discrete energies \({\mathcal {E}}^h\) are lower semicontinuous along weak-* convergent sequences.

Proposition 4

(Lower semicontinuity of energies along weak-* convergent sequences) Suppose that hypotheses (H1)–(H6) hold. Then, for any sequence of piecewise constant functions \(\rho ^h: \varOmega \rightarrow {\mathbb {R}}\) such that \(\rho ^h {\mathop {\rightharpoonup }\limits ^{*}}\rho \), we have \(\liminf _{h \rightarrow 0} {\mathcal {E}}^h(\rho ^h) \ge {\mathcal {E}}(\rho )\).

Proof

First, suppose the energy satisfies (H2a). Since the piecewise constant approximations \({\hat{V}}^h\) and \({\hat{W}}^h\) converge to V and W uniformly, for any sequence \(\rho ^h {\mathop {\rightharpoonup }\limits ^{*}}\rho \),

Furthermore, our assumptions on U guarantee that the internal energy term is lower semicontinuous with respect to weak-* convergence [2, Remark 9.3.8], so \(\liminf _{h \rightarrow 0} \int _\varOmega U(\rho ^h(x)) \mathrm {d}x \ge \int _\varOmega U(\rho (x)) \mathrm {d}x\). Combining this with equations (48-49) gives the result.

Next, suppose the energy satisfies (H2b). Since \(\rho _0>0\) on the compact set \({\overline{\varOmega }}\) and \(U'\) is uniformly continuous on \(\rho _0({\overline{\varOmega }}) \subset (0, +\infty )\), the fact that hypothesis (H6) ensures \({\hat{\rho }}_0^h \rightarrow \rho _0\) uniformly ensures \(U'({\hat{\rho }}_0^h) \rightarrow U'(\rho _0)\) uniformly. Therefore,

Likewise, since \({\hat{V}}^h\) and \({\hat{W}}^h\) converge to V and W uniformly, we also have

Combining these limits with the \(\liminf \) inequality for energies of the form (H2a) gives the result.

Finally, suppose the energy satisfies (H2c). Without loss of generality, we may assume that \(\liminf _{h \rightarrow 0} {\mathcal {G}}^h_{\rho _1}(\rho ^h) <+\infty \), so that up to a subsequence, \({\mathcal {G}}^h_{\rho _1}(\rho ^h) \equiv 0\) and \( \lim _{h \rightarrow 0} \Vert \rho ^h - \rho ^h_1 \Vert _{L^2(\varOmega )} = 0\). By uniqueness of limits, \(\rho = \rho _1\). Thus, since \({\mathcal {G}}^h_{\rho _1} \ge 0\), we have \(\liminf _{h \rightarrow 0} {\mathcal {G}}^h_{\rho _1}(\rho ^h) \ge 0 = {\mathcal {G}}_{\rho _1}(\rho ) \).

\(\square \)

We now apply Proposition 4 to prove the \(\varGamma \)-convergence of Problem \({1^h}\) to Problem 1.

Theorem 2

(\(\varGamma \)-convergence of discrete to continuum JKO) Suppose hypotheses (H1)–(H6) hold.

-

(a)

If \((\rho ^h, m^h) \in {\mathcal {C}}^h\) with \((\rho ^h, m^h) {\mathop {\rightharpoonup }\limits ^{*}}(\rho ,m)\), then \((\rho ,m) \in {\mathcal {C}}\) and

$$\begin{aligned}&\liminf _{h \rightarrow 0} \int _0^1 \int _\varOmega \varPhi ( \rho ^h, m^h) \mathrm {d}x \mathrm {d}t + 2 \tau {\mathcal {E}}^h(\rho ^h(\cdot , 1)) \\&\quad \ge \int _0^1 \int _\varOmega \varPhi ( \rho , m ) \mathrm {d}x \mathrm {d}t + 2 \tau {\mathcal {E}}(\rho (\cdot , 1)) . \end{aligned}$$ -

(b)

For any \((\rho , m) \in {\mathcal {C}}\) satisfying \(\rho \in C^2([0,1]; C^1({\overline{\varOmega }}))\), \(\rho >0\), and \(m \in C([0,1]; C^2({\overline{\varOmega }})\), there exists a sequence \(({\tilde{\rho }}^h, {\tilde{m}}^h) \in {\mathcal {C}}^h\) so that \(({\tilde{\rho }}^h ,{\tilde{m}}^h) \rightarrow (\rho ,m)\) uniformly and

$$\begin{aligned}&\limsup _{h \rightarrow 0} \int _0^1 \int _\varOmega \varPhi ( {\tilde{\rho }}^h, {\tilde{m}}^h) \mathrm {d}x \mathrm {d}t + 2 \tau {\mathcal {E}}^h({\tilde{\rho }}^h(\cdot , 1)) \\&\quad \le \int _0^1 \int _\varOmega \varPhi ( \rho , m ) \mathrm {d}x \mathrm {d}t + 2 \tau {\mathcal {E}}(\rho (\cdot , 1)). \end{aligned}$$

Proof

We first prove part (a). Suppose \((\rho ^h, m^h) \in {\mathcal {C}}^h\), with \(\rho ^h {\mathop {\rightharpoonup }\limits ^{*}}\rho \) and \(m^h {\mathop {\rightharpoonup }\limits ^{*}}m\). We begin by showing that the limit \((\rho ,m)\) belongs to \({\mathcal {C}}\). Fix \(f \in C^\infty (\varOmega \times [0,1])\) and let \(f^h\) be a pointwise piecewise constant approximation of f. (See Eq. (23).) By Lemma 1 and hypothesis (H3),