Abstract

Integrated pest management relies on insect pest monitoring to support the decision to counteract a given level of infestation and to select the appropriate control method. The classic approach to insect pest monitoring is based on placing a series of traps in individual infested areas, which are then checked by human operators at regular intervals. This strategy entails high labor costs and, given what a single operator can achieve, provides poor spatial and temporal resolution. The adoption of image sensors to monitor insect pests can offer several practical advantages. The purpose of this review is to summarize the progress made on automatic traps, with a particular focus on camera-equipped traps. Software and image recognition algorithms can support automatic traps in identifying and/or counting insect species from pictures. Given the high image resolution achievable and the opportunity to exploit wireless data transfer systems, insect captures can be checked remotely, limiting field visits. Real-time, online pest monitoring systems accessible from a distant location open the opportunity to measure insect population dynamics constantly and simultaneously in a large number of traps with a limited human labor requirement. The current limitations are the high cost, the low power autonomy and the low picture quality of some prototypes, together with the need for further improvements in fully automated pest detection. Limits and benefits resulting from several case studies are examined with a view to the future development of technology-driven insect pest monitoring and management.


Key Message

- Insect pest monitoring is typically performed by human operators through costly and time-consuming on-site visits, resulting in limited spatial and temporal resolution;
- The advent of new technology in remote sensing, electronics and informatics opens the opportunity for monitoring from a distant location;
- The use of camera-equipped traps allows optimization of monitoring costs and effectiveness;
- Image analysis algorithms can provide automatic detection and counting of insect pests captured in traps with limited human support.

Monitoring insect pests with new technologies

Insect pest monitoring is typically performed in agriculture and forestry to assess the pest status in given locations (e.g., greenhouse, field, orchard/vineyard, forest) by collecting information about the presence, abundance, and distribution of the target pest. Within integrated pest management programs in agriculture, the final goal of insect pest monitoring is to provide growers with a practical decision-making tool. For instance, intervention thresholds are essential to counteract a given insect pest infestation in a specific field at the best moment, optimizing the control strategy and the grower's inputs on that crop. Monitoring data can also feed phenological prediction models that forecast insect population outbreaks, providing additional information to improve control techniques and optimize insecticide usage (Dent 2000). Similarly, in forestry, the detection and monitoring of both native insect pests and invasive species is crucial to setting up suitable management programs, considering the severe impact that a forest insect species can have on the biodiversity, ecology, and economy of the infested area (Brockerhoff et al. 2006).

Insect pests and beneficials may be monitored using a wide range of techniques, including visual inspection, suction traps and passive methods (McCravy 2018). The latter are among the most widely used and typically consist of pitfall and sticky traps for agricultural pests and multifunnel or panel traps for forest pests. Monitoring traps may be colored (chromotropic traps) or baited with attractants, such as pheromones or food baits. In practice, the monitoring and management of a wide range of insect pests relies on trap captures to estimate pest population density and predict damage (Suckling 2016). The traps are then inspected by skilled operators, who must visit every location on a regular basis to count the trapped insects and determine their species in every single trap (Southwood and Henderson 2000). Another option for insect pest monitoring is active sampling, either by assessing signs of damage or, for particular species, through canopy beating (i.e., frappage), as for psyllids in apple orchards (Fischnaller et al. 2017). In both cases, the operator has to visit the observation points in person. These manpower-assisted monitoring systems are consistently associated with high labor costs and, in some cases, may result in low efficiency, limited promptness of reaction and a sample size inadequate to the needs. When a monitoring visit to an agricultural or forest parcel finds negligible infestation levels, the field visit could have been avoided or postponed; however, with traditional approaches, direct human presence in situ is strictly required to confirm the actual infestation status. When data acquisition and analysis are performed manually after a field visit, awareness of the updated pest pressure is typically delayed, the data cannot be synchronized across multiple locations, and they may be biased by subjective evaluations.
In addition, human-driven insect pest monitoring is often performed in easily accessible locations, at a limited number of points and, at best, at weekly intervals for practicality and cost reasons. These aspects are a major limitation of the classic monitoring approach: they yield poor spatial and temporal resolution in insect pest monitoring programs, which occasionally fail to support timely decisions about insect pest control.

In the era of globalization, ‘transdisciplinary’ is also a keyword in agriculture and forestry, given the economic, environmental, and social sustainability of agricultural and forest systems. Expert systems [such as decision support systems (DSSs)] are a clear example of this transdisciplinarity (Yelapure and Kulkarni 2012; Deepthi and Sreekantha 2017). DSSs, which have been developed in numerous forms for many insect pests, diseases and weeds (Damos 2015), can be applied with concrete benefits for growers in a given productive area, integrating several topics and simultaneously addressing numerous issues, including trap-based monitoring information (Jones et al. 2010). In this context, the advent of high technology has widened the possibilities for insect pest monitoring. The turning point has likely been the availability of real-time monitoring through remote sensing (Ennouri et al. 2020). Given that insect trapping, with trap designs and baits chosen according to the target species, is the most widespread approach to insect pest monitoring, being able to see what happens inside an insect trap from a distant location offers a significant opportunity to rethink how insect populations are monitored.

Several research articles have aimed to improve insect pest monitoring by adding electronic devices to monitoring traps. The first examples date back to the 1980s, with optical sensors integrated into sex-pheromone-baited traps to automatically count captures of the tobacco budworm Heliothis virescens Fabricius and the cabbage looper Trichoplusia ni Hübner (both Lepidoptera: Noctuidae) (Hendricks 1985). A significant advance was the remote transmission of recorded data to a computer, as in the case of the automatic detection of the boll weevil Anthonomus grandis Boheman (Coleoptera: Curculionidae) (Hendricks 1990). One of the first applications of cameras to insect pest monitoring was reported by Kondo et al. (1994) to monitor the Asiatic rice borer Chilo suppressalis Walker (Lepidoptera: Crambidae) and the tobacco cutworm Spodoptera litura Fabricius (Lepidoptera: Noctuidae). During the last 25 years, thanks to significant technological progress and in parallel with the development of camera traps for wildlife monitoring (Rovero et al. 2013), camera devices have been increasingly exploited for insect pest monitoring. A basic literature search in a scientific database (Web of Science 2020) with the keywords ‘camera* OR video* OR smart OR automatic OR automated OR electronic OR long distance OR remote’ AND ‘monitoring OR detection’ AND ‘insect OR pest’ in publication titles over the last century shows that more than 90% of the papers found (out of fewer than one hundred) were published in the last 25 years (Fig. 1), indicating that this is a fairly recent discipline. The present review focuses on insect pest monitoring with camera-equipped traps, presenting the advantages and limits of using a camera, and therefore capture pictures, to replace the classic manpowered on-site trap check in both agricultural and forestry frameworks.

Literature published on the topic of remote insect pest monitoring with new technologies (searched in Web of Science 2020 using the keywords ‘camera* OR video* OR smart OR automatic OR automated OR electronic OR long distance OR remote’ AND ‘monitoring OR detection’ AND ‘insect OR pest’ in publication titles over the last century)

Camera-equipped traps: design based on species

Typically, an automatic trap equipped with a camera involves two modules: hardware and software. The hardware typically comprises the trap structure, which contains the bait and retains the trapped insects, and an electronic box including the camera, a data transmission modem, a battery and, possibly, an external power supply such as a solar panel. The software comprises the online repository in which the capture pictures are stored and accessed, plus optional image analysis algorithms to automatically identify and count the captures. Trap design may vary according to the target pest, as detailed in this section.

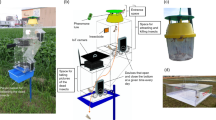

Various papers describe prototypes of camera devices coupled with a sticky trap (sticky liners, in which the insect is immobilized in the glue and dies), primarily for monitoring adult moths, given that many lepidopteran species have a known long-range sex pheromone (Suckling 2016). For instance, Guarnieri et al. (2011) built an automatic trap prototype by adding data acquisition and data transfer systems to a commercial trap (Pomotrap®, currently Carpo® by Isagro S.p.A., Milan, Italy) to monitor the codling moth Cydia pomonella L. (Lepidoptera: Tortricidae) in apple orchards (Fig. 2a). A similar prototype was proposed by Ünlü et al. (2019) to monitor the European grapevine moth Lobesia botrana Denis & Schiffermüller (Lepidoptera: Tortricidae) in vineyards. The latter study proposed a delta-shaped trap (Fig. 2b) with a high-definition (HD) digital camera, a solar panel, a charging unit, a battery and a general packet radio service (GPRS) modem incorporated into the structure. Another example using sticky delta traps is reported by Shaked et al. (2018), where the so-called ‘Jackson trap’ was equipped with a camera device for the automatic monitoring of the Mediterranean fruit fly Ceratitis capitata Wiedemann (Diptera: Tephritidae) (Fig. 2c, d). Bucket traps are typically adopted to monitor fruit flies, as described by Doitsidis et al. (2017), where a camera-based electronic McPhail trap was used to remotely monitor the olive fruit fly Bactrocera oleae Gmelin (Diptera: Tephritidae) (Fig. 2e). Remote monitoring using capture images has not been limited to lepidopteran or dipteran species; several papers report different trap designs fitted with camera devices for other insect pests, such as some Coleoptera. In particular, López et al. (2012) modified a common bucket trap for the red palm weevil Rhynchophorus ferrugineus (Olivier) (Coleoptera: Dryophthoridae) to host image sensors, while Selby et al. (2014) equipped the conventional pyramidal trap used to monitor the plum curculio Conotrachelus nenuphar Herbst (Coleoptera: Curculionidae) with a camera. Similarly, Rassati et al. (2016) coupled camera devices with a multifunnel trap to establish remote monitoring of forest longhorn beetles (Coleoptera: Cerambycidae) and bark beetles (Coleoptera: Scolytinae) (Fig. 2f, g and h).

Experimental prototypes of automatic traps integrated with electronics. Camera-based sticky traps reported by Guarnieri et al. 2011 (a); Ünlü et al. 2019 (b); Shaked et al. 2018 (c and d). Camera-based bucket traps reported by Doitsidis et al. 2017 (e); Rassati et al. 2016 (f, g and h). Infrared sensor-based bucket traps reported by Jiang et al. 2008 (i and j); Holguin et al. 2010 (k and l)

In all these cases, the key aspect regarding the trap design is to provide a similar trap size and shape to what is usually assumed to work effectively for that given target pest, minimizing the electronic package dimension and avoiding changing the trap entrance features. In fact, the efficacy of a new prototype needs to be validated in comparison to commercially available traps as clearly reported by Guarnieri et al. (2011). In that study, for instance, the prototype trap opening width was not modified, and despite the new trap design (that included an upper box containing the electronic components compared to the commercial Pomotrap®), no difference in the trap efficiency (i.e., number of captures) was reported between the automatic prototype and the unmodified commercial trap.

Types of digital camera

Assessing insect occurrence and identity remotely from capture pictures requires sufficiently high image resolution and sharpness, particularly in relation to insect size and morphometric characteristics. The choice of camera must therefore guarantee adequate picture quality at limited cost and power consumption. For example, López et al. (2012) selected a low-resolution camera (the C328-7640 color camera from Comedia, which produces JPEG-compressed images at a maximum resolution of 640 × 480 pixels from the OmniVision OV7640 image sensor) for R. ferrugineus, given that it is a large weevil. Smaller insects, such as fruit flies, require a higher-resolution camera. For instance, Doitsidis et al. (2017) selected a 2-Mpixel camera and Shaked et al. (2018) a 5-Mpixel camera (Omni Vision OV5647 NOIR Rasp Pi) to monitor B. oleae and C. capitata, respectively. For C. pomonella, which is approximately 15–20 mm long, Guarnieri et al. (2011) used a programmable smartphone (S60 3rd edition devices, with the Symbian ver.9.0 operating system) with a built-in 3-Mpixel camera, which provided sufficient image quality to remotely recognize the captures. Selby et al. (2014), instead, modified an automatic camera trap equipped with a pyroelectric sensor, originally designed for monitoring mammals, to detect the entrance of the plum curculio C. nenuphar (5 mm long) into the collecting container. These cameras (Extreme 2 model, GSM Outdoors, Grand Prairie, TX; and L-20 model, Moultrie Feeders, Alabaster, AL) are triggered by an infrared motion sensor and activated any time an organism enters the photo space. Multiple shots are thus taken in quick succession, and all photos are stored on a memory card with a time and date stamp. Finally, Rassati et al. (2016) evaluated the performance of two cameras obtained by modifying home security cameras equipped with a wide-angle lens. These cameras were internally equipped with a rechargeable battery pack, a subscriber identity module (SIM) card, a general packet radio service (GPRS) modem for connection, and a secure digital (SD) memory card. The two cameras, RedEye® and BioCam® (both iDefigo/Mi5 Security, Auckland, New Zealand), differed in image resolution (1 Mpixel and 3 Mpixel, respectively) and in the presence of an additional external rechargeable battery pack, a solar panel and a built-in flash (present only in the BioCam). These authors took pictures of wood-boring insects (Coleoptera: Cerambycidae and Scolytinae), and only the largest species, such as longhorn beetles (> 8 mm long) and, among scolytines, Ips spp. (4–5 mm long), could be identified to genus level from the images.
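To make the link between sensor resolution and insect size concrete, the short Python sketch below estimates how many pixels span an insect, given the number of sensor pixels along one image axis and the width of the imaged trap surface along that axis. It is a back-of-the-envelope illustration, not a method from the cited studies: the 200-mm field width and the 5-mm insect length are hypothetical values, the function names are illustrative, and lens distortion, focus and motion blur are ignored. The pixel counts used below (640 for the OV7640-based camera, 2592 for a 5-Mpixel OV5647 sensor) are the horizontal resolutions of those sensors.

```python
import math


def pixels_across_insect(insect_mm: float, sensor_px: int, fov_mm: float) -> float:
    """Approximate number of pixels spanning an insect along one image axis.

    insect_mm: insect length along that axis (hypothetical value)
    sensor_px: number of sensor pixels along the same axis
    fov_mm:    width of the imaged trap surface along that axis (assumed)
    Ignores lens distortion, focus and motion blur.
    """
    mm_per_px = fov_mm / sensor_px  # size of one pixel projected onto the trap surface
    return insect_mm / mm_per_px


def min_sensor_px(insect_mm: float, fov_mm: float, target_px: float) -> int:
    """Smallest pixel count along one axis that puts target_px pixels on the insect."""
    return math.ceil(target_px * fov_mm / insect_mm)
```

Under these assumptions, a 5-mm insect spans 16 pixels with the 640-pixel sensor and about 65 pixels with the 2592-pixel sensor, which illustrates why smaller insects call for higher-resolution cameras (or a narrower field of view).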

Of all these papers, very few provided even minimal information about optical issues (e.g., cone of vision, focal length, depth of field), all of which are key to producing a focused and sharp image of the target insect. For instance, Guarnieri et al. (2011) reported that the cone of vision was less than 210 mm in height, and Selby et al. (2014) reduced the camera focusing distance from several meters to 9 cm; overall, however, the optical description is often poor and incomplete. Finally, the higher the optical quality of the camera, the higher the cost of the device, which may limit extensive use in pest monitoring. For example, Rassati et al. (2016) reported that the RedEye® and BioCam® security cameras used in their study cost about as much as a medium–high range smartphone, which likely limits the potential for mass adoption of these devices.

Picture acquisition

To optimize energy consumption and guarantee sufficient operating longevity, photo collection is typically programmed at defined time intervals, resulting in a limited number of records per day. The major limitation of taking repeated shots is power consumption, which is mainly related to data transmission. Therefore, the automatic traps developed to date are typically set to take one picture per day (Guarnieri et al. 2011; Ünlü et al. 2019). However, up to three photos per day have been reported for experimental prototypes (Rassati et al. 2016), and multiple daily shots are now also offered by Trapview® (EFOS d.o.o, Hruševje, Slovenia) commercial automatic traps (Trapview 2020). Lucchi et al. (2018) adapted these commercial camera-based traps to monitor the daily activity of L. botrana males and identify the best time intervals for scheduling synthetic sex pheromone releases, optimizing the mating disruption technique with puffer dispensers. In that study, the camera-equipped traps were customized with increased battery capacity and larger solar panels (compared to the standard units supplied by the company) to provide enough power to take one picture every 30 min (i.e., up to 48 pictures per day over the whole study duration of 130–140 days). With this power upgrade, the devices could deliver very frequent capture records. In contrast, truly real-time and continuous monitoring (i.e., 24 h per day without interruption) is typically a feature of detection sensors other than cameras. For instance, infrared sensors generate an electric signal, and thus a count, every time an insect passes through a detection space. These sensors make it possible to investigate insect activity and behavior in detail. For instance, Kim et al. (2011) demonstrated, using uninterrupted infrared-based monitoring (operating for more than 6 months and covering the whole seasonal flight period of the pest), that the diel rhythm of attraction of male oriental fruit moths Grapholita molesta Busck (Lepidoptera: Tortricidae) to the female sex pheromone spanned from 4:00 pm to midnight. A motion sensor circuit based on an infrared beam was also used by Selby et al. (2014) to trigger the camera, as described in the previous section on camera types. In that study, the camera always recorded the presence of arthropods in the photo space, but generalist predators, such as jumping spiders (Araneae: Salticidae) and earwigs (Dermaptera: Forficulidae), lingering at the trap entrance generated multiple photo events and, in some cases, blocked the sensor.
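The arithmetic behind interval-based acquisition is simple but worth making explicit, because every additional picture carries an energy cost for capture and transmission. The Python sketch below assumes pictures are taken at a fixed interval that divides the day evenly (a simplification; actual trap firmware may schedule differently), and the function names are hypothetical. With a 30-min interval it reproduces the figures reported by Lucchi et al. (2018): 48 pictures per day, that is, at most 6240 to 6720 pictures over 130 to 140 days, while a hypothetical 8-h interval gives three pictures per day.

```python
from datetime import datetime, timedelta

MINUTES_PER_DAY = 24 * 60


def pictures_per_day(interval_min: int) -> int:
    """Number of pictures in 24 h when one is taken every interval_min minutes."""
    if interval_min <= 0 or MINUTES_PER_DAY % interval_min != 0:
        raise ValueError("interval must be a positive divisor of 1440 minutes")
    return MINUTES_PER_DAY // interval_min


def capture_times(interval_min: int, start: str = "00:00") -> list:
    """Clock times (HH:MM) of the scheduled pictures over one day."""
    t0 = datetime.strptime(start, "%H:%M")
    return [(t0 + timedelta(minutes=i * interval_min)).strftime("%H:%M")
            for i in range(pictures_per_day(interval_min))]


def total_pictures(interval_min: int, days: int) -> int:
    """Upper bound on pictures over a study, assuming no missed shots."""
    return pictures_per_day(interval_min) * days
```

Shifting `start` is one way to move a single daily picture away from problematic times such as early-morning condensation on the lens.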

Picture acquisition can therefore be set at defined, limited times or triggered every time an insect enters a gate and activates a motion sensor. In the first case, which is the most commonly adopted, the achievable temporal resolution is greater than with classic manpower-assisted monitoring, typically moving from weekly to daily records. It is important to choose the most appropriate time for the daily picture, taking into account external light and weather conditions (e.g., brightness, shadows cast by the sun, dew and water condensation on the camera lens in the early morning), which can affect image quality. With the second option, the frequency of capture records is greatly increased by taking a picture at every trap entry (continuous monitoring), which is better suited to research purposes. However, since the sensors used to trigger the picture cannot identify the insect species, lure and trap selectivity and trap design need to be carefully considered, because they can compromise picture acquisition and data collection, as reported by Selby et al. (2014).

Data transmission systems

Among the case studies cited in this review, only one prototype (Selby et al. 2014) lacked a data transfer system. Remote communication between the trap and a control station makes it possible to avoid, limit or postpone field visits. In the study of Selby et al. (2014), having to wait for a field visit or the end of the monitoring period, not only to check the trap captures but also to discover and fix operating problems and component failures, was a limitation. For instance, photo omissions due to sensor wire failure (attributable to weak, broken or wet circuit connections discovered when physically checking the traps) and water leakage (which compromised camera reliability in the first year of study and caused a sensor short circuit due to moisture during the second year) were detected in situ by a human operator, since there was no communication between the automatic trap and a remote control station. Apart from this example, camera devices are usually coupled with a data transmission system. In Guarnieri et al. (2011), a programmable smartphone was used to take capture pictures and send them over an Enhanced Data rates for GSM Evolution/general packet radio service (EDGE/GPRS) network; similarly, in Ünlü et al. (2019), the camera images of the captures were accessed daily via the Internet thanks to a GPRS modem that allowed remote data transmission. In Doitsidis et al. (2017), once the capture picture was taken and stored locally on the SD memory card, wireless transmission was initiated through the global system for mobile communications (GSM) module. Likewise, in the case of commercial automatic traps (Lucchi et al. 2018), a cellular network was used to send the capture data to an accessible web application where the pictures were stored.

The automatic trap prototypes described so far were designed to work independently of other devices, each with its own data transmission system. A different approach consists of many camera-equipped traps interconnected with one another and sharing a single gateway for data transfer. López et al. (2012) evaluated a prototype autonomous wireless image sensor network for R. ferrugineus monitoring that worked in unattended mode (with no need for maintenance during the operational life of the trap), reducing costs and increasing the temporal resolution of monitoring, with capture data available in real time through an Internet connection. They deployed their image sensor-equipped traps uniformly within the monitoring area (i.e., following a mesh pattern) to create a trap network in which each trap (a node) was in contact with at least one other trap (another node) in a connected wireless sensor network that communicated with a control station (i.e., a PC). Each sensor node was composed of a camera, a radio transceiver, and a microcontroller able to manage and transfer images. The point-to-point communication distance varies with the radio transceiver (and its transmission power), the radio coverage (and environmental noise), and the data transmission rate (itself partly a function of image quality). In their study, the authors operated with very limited area coverage, with a maximum of 140 m between each node and the control station (assuming a single-hop scenario in which each node communicated directly with the control station). The same approach of interconnected nodes was also adopted by Tirelli et al. (2011) in a greenhouse and by Priya et al. (2013) and Shaked et al. (2018) in the field.
In these cases, one central control station collected all the pictures from the surrounding nodes and relayed all the data from the node network outside the field. This approach makes it possible to create a high-resolution monitoring grid within each location.
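The difference between single-hop operation, as in the trial of López et al. (2012), and relaying pictures from node to node can be illustrated with a simple reachability check. In the Python sketch below, the node coordinates are hypothetical, the 140-m radio range is borrowed from that trial but treated as a fixed circle (real range depends on transceiver power, noise and data rate), and multi-hop relaying is an illustrative extension rather than the configuration tested in the study. A node counts as connected if it lies within range of the control station or, when relaying is allowed, within range of any node that is already connected (breadth-first search).

```python
from collections import deque
from math import dist


def reachable_nodes(nodes, station, max_range_m, multi_hop=True):
    """Return the set of node ids that can deliver data to the control station.

    nodes:       dict mapping node id -> (x, y) position in metres (hypothetical layout)
    station:     (x, y) position of the control station
    max_range_m: assumed maximum point-to-point radio range
    multi_hop:   if False, only nodes within range of the station count (single-hop)
    """
    direct = {n for n, p in nodes.items() if dist(p, station) <= max_range_m}
    if not multi_hop:
        return direct
    # Breadth-first search outward from the directly connected nodes:
    # a node joins if it is within range of any node already reached.
    reached = set(direct)
    queue = deque(direct)
    while queue:
        current = queue.popleft()
        for other, pos in nodes.items():
            if other not in reached and dist(nodes[current], pos) <= max_range_m:
                reached.add(other)
                queue.append(other)
    return reached
```

For a hypothetical row of traps at 100, 200, 300 and 600 m from the station, only the first is reachable in single-hop mode, whereas relaying extends coverage to the first three; the isolated trap at 600 m stays disconnected either way, which is why node spacing constrains grid resolution.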

Traps can be monitored remotely via the Internet using wireless communication technology. In some cases, each trap operates individually (single-trap monitoring unit). In other case studies, however, a network of traps is interconnected and operates as a complex of devices whose data transfer relies on a single shared gateway (multiple nodes with a central control station). While the advantage of an independent trap is clear (i.e., it can be placed anywhere a connection is available, regardless of the location of other traps), networks of interconnected nodes can considerably increase monitoring resolution in a given location (depending on the number of nodes and the feasible distance between traps).

Insect pest identification and count

Capture identification and counting can be performed manually or by exploiting image processing software. According to Sciarretta and Calabrese (2019), the monitoring systems can be classified as (i) fully automated, when the system is equipped with software for image interpretation and species identification of the captured insects; (ii) semiautomated, when a remote human operator has to identify and count the captured insects by watching the images taken by the camera-equipped trap.

In some of the case studies reported here (Guarnieri et al. 2011; Rassati et al. 2016; Shaked et al. 2018; Ünlü et al. 2019), the captured insects were checked manually and remotely, with the images viewed by a human observer on a computer or smartphone. This approach requires a trained observer at the control station to properly identify the insect species and, although it avoids the field visit, the process is time-consuming. As reported by Ünlü et al. (2019), manual counting and identification can provide very high accuracy on the number of target moths captured. However, these semiautomated systems require a certain number of man-hours, so part of the labor cost of checking traps cannot be avoided. In addition, insect size can be an issue. In Rassati et al. (2016), all the trapped wood-boring insects were identified to family level from the pictures. For longhorn beetles, it was always possible to reach genus level. For bark beetles, however, only one genus (i.e., Ips) was large enough to be determined through manual picture analysis.

To assist the observer, image processing algorithms can be used to identify and automatically count the insects (Silveira and Monteiro 2009; Priya et al. 2013; Kang et al. 2014; Upadhyay and Ingole 2014; Wen et al. 2015; Ding and Taylor 2016; Bjerge et al. 2020). In some cases, the accuracy of the automatic count needs to be verified and validated by a human expert. For instance, Trapview® commercial camera-based automatic traps automatically process high-resolution pictures, providing a very precise basic count of the captures for different insect pest species, with manual confirmation included as part of the customer service (Trapview 2020) (Fig. 3). Similar commercial services with automatic insect counts are currently offered by other companies for several insect pests, such as iSCOUT® (Pessl Instruments 2020) and SightTrap® (Insect Limited 2020).
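The combination of automatic counting and expert confirmation is often organized as confidence-based triage: detections the software is confident about are counted directly, while uncertain ones are queued for a human. The Python sketch below illustrates that general pattern only; it does not describe how Trapview® or any other commercial service works, and the `Detection` record, the species labels and the 0.8 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str          # species name predicted by a hypothetical detector
    confidence: float   # detector score in [0, 1]


def triage(detections, threshold=0.8):
    """Split detections into auto-accepted counts per label and a review queue."""
    counts = {}
    review = []
    for d in detections:
        if d.confidence >= threshold:
            counts[d.label] = counts.get(d.label, 0) + 1
        else:
            review.append(d)  # left for the remote human operator to confirm
    return counts, review
```

Raising the threshold shifts work from the algorithm to the operator; the appropriate value would have to be chosen from validation against manual counts.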

Automatic traps equipped with high-resolution cameras, solar panels, temperature and humidity sensors and an electronic system for data acquisition and transfer controllable from distant locations: self-cleaning funnel type trap (a) and capture picture of Helicoverpa armigera Hübner automatically marked and counted (b); Trapview standard trap installed in an apple orchard (c) and capture picture of Cydia pomonella L. automatically marked and counted (d) (TrapView, Hruševje, Slovenia)

The development of fully automated insect counts was originally based on motion sensors (Hendricks 1985, 1990; Kliewe 1998; Tabuchi et al. 2006), which have been exploited for several insect pests in recent decades. Photointerruption sensors (e.g., infrared sensors generating an electric signal) are a quick way to count hundreds of individuals automatically, as in the case of fruit flies, for instance with a double-counting method for the oriental fruit fly Bactrocera dorsalis Hendel (Diptera: Tephritidae) (Jiang et al. 2008, 2013; Okuyama et al. 2011) (Fig. 2i, j) or for C. capitata (Goldshtein et al. 2017). However, this electric signal-based approach can, in some cases, result in very poor accuracy, as shown by Holguin et al. (2010) for the automated counting of C. pomonella and G. molesta with electronic bucket traps (Fig. 2k, l). In their system, moths entering the trap were exposed to an insecticide-impregnated strip before falling through the funnel into the trap and passing the optical sensor. However, these tortricid moths were not killed immediately and remained active inside the trap, flying up and down through the sensor several times and thus causing the counts to be overestimated.
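Why a live moth inflates an electronic count can be seen from how such counters typically work: each transition of the beam from clear to blocked is counted as one insect. The Python sketch below counts those rising edges in a sampled 0/1 beam signal, with an optional lockout window that ignores re-triggers shortly after a counted event. Both the sampling model and the lockout are illustrative assumptions, not the designs of Holguin et al. (2010) or the double-counting method of Jiang et al.; the point is that the counter sees interruptions, not individuals.

```python
def count_interruptions(samples, lockout=0):
    """Count beam interruptions in a sampled photo-interrupter signal.

    samples: sequence of 0/1 readings (1 = beam blocked), one per time step
    lockout: number of time steps after a counted event during which new
             interruptions are ignored (a simple, hypothetical debounce)
    """
    count = 0
    previous = 0
    last_event = None
    for t, s in enumerate(samples):
        if s == 1 and previous == 0:  # rising edge: beam has just been blocked
            if last_event is None or t - last_event > lockout:
                count += 1
                last_event = t
        previous = s
    return count
```

A moth that crosses the beam three times is counted three times with no lockout; a lockout of five steps suppresses the second crossing but not the third, so a time window reduces but cannot eliminate the overestimation when insects stay active inside the trap.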

Image processing techniques and computer vision have been applied for the automatic identification of several insect pests, such as the diamondback moth Plutella xylostella L. (Lepidoptera: Plutellidae) (Shimoda et al. 2006), the Queensland fruit fly Bactrocera tryoni Froggatt (Diptera: Tephritidae) (Liu et al. 2009), and the rice bug Leptocorisa chinensis Dallas (Hemiptera: Alydidae) (Fukatsu et al. 2012). Doitsidis et al. (2017) developed a detection and recognition algorithm using machine vision techniques to allow the automatic insect count of B. oleae. However, when similar species are attracted to the same trap, a trained operator is needed to identify the target species. For instance, the McPhail ‘e-trap’ developed for B. oleae (Doitsidis et al. 2017) also attracted other tephritid species, such as C. capitata, which were easily distinguished by the human operator but not by the computer algorithm.
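A classic baseline for counting insects on a uniform sticky-trap background is thresholding followed by connected-component labeling: dark pixels are grouped into blobs, and blobs above a minimum size are counted. The Python sketch below implements that baseline on a grayscale image stored as a list of lists; it is not the algorithm of Doitsidis et al. (2017), and the threshold and minimum area are hypothetical values that would need calibration. It also exposes the limitation described above: a blob counter counts every dark object, so it cannot separate B. oleae from C. capitata, and touching insects merge into a single blob.

```python
from collections import deque


def count_blobs(image, threshold=128, min_area=3):
    """Count dark blobs (candidate insects) on a light trap background.

    image:     2-D list of grayscale values (0 = black, 255 = white)
    threshold: pixels darker than this are treated as foreground
    min_area:  blobs with fewer pixels are discarded as noise (dust, debris)
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] >= threshold:
                continue
            # Flood-fill this dark region using 4-connectivity, measuring its area
            area = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                area += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] < threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if area >= min_area:
                blobs += 1
    return blobs
```

Species-level identification requires additional features (shape, color, wing pattern) or a trained classifier on top of such a segmentation step.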

Counting and interpretation errors can also occur when capture pictures are read remotely by the human eye, since lure selectivity and the simultaneous occurrence of several species with similar morphological characteristics can strongly affect the accuracy of capture identification. In her Ph.D. thesis, Hári (2014) noted the difficulty of remote identification when G. molesta and the plum fruit moth Grapholita funebrana Treitschke (Lepidoptera: Tortricidae), which are similar in appearance and size, are captured simultaneously. In general, however, a highly specific trap and bait together with high-quality pictures provide good accuracy in insect pest identification and counting (both manual and automatic).

Power supply for the electronic devices

López et al. (2012) demonstrated that, in remote monitoring, wireless image transmission is the most energy-demanding operation, and that it can be optimized by improving the image compression system. In their work, the authors selected a lithium thionyl chloride battery for its high performance and longevity (over 10 years). To minimize power consumption, the operating software was set to standby mode when not running, and the image capture period could be defined with capture cycles ranging from 30 min to one day. Power consumption in running mode was a function of the image size and the image capture period and increased in the case of packet delivery errors (due to interference in the data transmission). In Guarnieri et al. (2011), a battery-powered external power unit was added to give the electronic devices an operating life of approximately two months. A similar life span was observed by Rassati et al. (2016) using an external rechargeable battery pack. Doitsidis et al. (2017) reported that a 12-V, 7-Ah battery was sufficient for continuous operation throughout the summer monitoring period. In Ünlü et al. (2019), a 12-V, 7-Ah battery plus a solar panel and a charging unit allowed monitoring of L. botrana flights throughout the entire season (from April to September). Similarly, in Shaked et al. (2018), a 12-V, 24-Ah battery together with a battery charge controller, a step-down converter (from 12 to 5 V) and a solar panel (5, 15 or 50 W, depending on the experiment) allowed field trials to run uninterruptedly for up to three months (depending on pest occurrence and crop phenological stage). Finally, in Selby et al. (2014), the batteries of some electronic components had to be changed every 2–4 days for proper operation, owing to the energy requirements of each electronic device in their prototype and the authors' choice of battery for it. In that study, the camera unit required four C batteries, the white LEDs and the infrared emitter required two AA batteries each, and the infrared detector required a 9-V battery (all conventional, disposable alkaline batteries, chosen for cost reasons). However, in the discussion of their work, Selby et al. (2014) outlined a practical improvement to overcome this power supply limit, for instance using different rechargeable batteries, possibly in combination with a solar panel.
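The dependence of power autonomy on the capture period can be made explicit with a simple duty-cycle energy budget: the average current is the time-weighted mean of the active and standby currents, and battery life is the usable capacity divided by that average. The Python sketch below uses hypothetical current draws and a hypothetical 80% usable-capacity fraction; it ignores temperature effects, self-discharge, converter losses and retransmissions after packet errors, so it is a rough first estimate, not a figure from the cited studies.

```python
def average_current_a(active_current_a: float, active_s: float,
                      standby_current_a: float, cycle_s: float) -> float:
    """Time-weighted mean current over one capture cycle (amperes)."""
    if not 0 <= active_s <= cycle_s:
        raise ValueError("active time must lie within the cycle")
    return (active_current_a * active_s
            + standby_current_a * (cycle_s - active_s)) / cycle_s


def battery_life_days(capacity_ah: float, avg_current_a: float,
                      usable_fraction: float = 0.8) -> float:
    """Days of operation from the usable part of the battery capacity."""
    return capacity_ah * usable_fraction / avg_current_a / 24.0


# Hypothetical device: 0.5 A for 60 s per cycle (capture + transmission),
# 5 mA in standby, powered by a 7-Ah battery.
for label, cycle_s in (("30-min cycle", 30 * 60), ("daily cycle", 24 * 3600)):
    i_avg = average_current_a(0.5, 60, 0.005, cycle_s)
    print(f"{label}: {i_avg * 1000:.1f} mA average, "
          f"{battery_life_days(7.0, i_avg):.1f} days")
```

Under these assumptions, moving from a 30-min to a daily capture cycle extends the estimated life from about 11 to about 44 days, which is why the capture period is a key configuration parameter.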

For independent trap units installed in the field without direct access to the power grid, the power supply depends on a sufficiently durable battery and/or on combining a rechargeable battery with a solar panel. In the automatic trap prototypes examined in this review that used additional external batteries or solar panels, the power supply was never an issue.
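Whether a solar panel can keep a battery charged is, to a first approximation, a daily energy balance: panel rating × equivalent peak-sun hours × system efficiency, compared with the daily load. The sketch below also estimates how many sunless days the battery alone could cover. Apart from the three panel ratings mentioned for Shaked et al. (2018), all inputs (3 peak-sun hours, 70% efficiency, a 12-V system, a 21.5-mA average draw measured at the battery) are hypothetical placeholders to be replaced with site and device data.

```python
def daily_harvest_wh(panel_w: float, peak_sun_h: float, efficiency: float = 0.7) -> float:
    """Energy delivered to the battery per day (Wh), lumped efficiency."""
    return panel_w * peak_sun_h * efficiency


def daily_load_wh(system_v: float, avg_current_a: float) -> float:
    """Energy drawn by the trap electronics per day (Wh)."""
    return system_v * avg_current_a * 24.0


def autonomy_days(battery_v: float, capacity_ah: float, load_wh: float,
                  usable_fraction: float = 0.8) -> float:
    """Days the battery alone can sustain the load without any solar input."""
    return battery_v * capacity_ah * usable_fraction / load_wh


load = daily_load_wh(12.0, 0.0215)  # hypothetical average draw
for panel_w in (5, 15, 50):
    surplus = daily_harvest_wh(panel_w, 3.0) - load
    print(f"{panel_w:>2} W panel: {surplus:+.1f} Wh/day")
print(f"Autonomy without sun: {autonomy_days(12.0, 7.0, load):.1f} days")
```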

Requirements for developing an efficient camera-equipped trap prototype

Camera-equipped traps can be tailored relatively easily, given the necessary electronics and informatics expertise. As reported in Table 1, some basic requirements need to be taken into account during prototype development to guarantee optimal system functionality. Selby et al. (2014) set six criteria for the evaluation of their automatic prototypes, including the transferability of the prototype design into mass-produced units. This aspect should always be considered from the beginning of prototype development. In particular, electronic components should be selected for high quality, low cost, easy accessibility and wide availability, so that a worthwhile prototype could potentially be exploited on a large scale.

To achieve the transferability of a trap prototype to end users, a few of the numerous aspects listed in Table 1 are particularly crucial: (i) a proper trap design and trapping mechanism for the species to be monitored (Muirhead-Thompson 2012); (ii) sufficient image quality, provided by high-resolution cameras; (iii) a suitable power supply covering an adequate life span of the electronic components; and (iv) an affordable cost allowing extensive adoption of the tool.

Device and monitoring costs are decisive for the adoption of new technologies, including automatic traps. A recurrent gap in the publications examined in this review is the lack of economic evaluation, i.e., a cost–benefit analysis of the effective value of an automated monitoring system, including the costs of materials (camera device and other electronic parts, software and algorithms). One of the few examples that included an economic evaluation was provided by Ünlü et al. (2019). These authors stated that the main advantage of their camera-equipped trap was the possibility of performing accurate monitoring in a remote location, thereby solving the logistic issue and saving time, labor and money. According to the authors' calculation, a camera-equipped trap cost ca. 250 $, while a weekly field visit to their remote location cost 125 $. Thus, six months of in situ monitoring (ca. 24 weekly visits, i.e., ca. 3000 $) cost twelve times the value of the automatic trap. Since the economic aspect is decisive in the decision to adopt an automatic pest monitoring system on a large scale, it should be better addressed in future publications to provide more monetary data for comparing prototypes.
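The cost comparison reported by Ünlü et al. (2019) can be reproduced with a few lines of arithmetic. The sketch below uses their figures (ca. 250 $ per trap, 125 $ per weekly visit) and assumes 24 weekly visits over six months; it deliberately leaves out items the source does not quantify, such as data subscriptions, lure replacement and maintenance, which a full cost–benefit analysis would need to include.

```python
import math


def visit_cost_ratio(trap_cost: float, visit_cost: float, n_visits: int) -> float:
    """Cost of n in situ visits expressed as multiples of one automatic trap."""
    return visit_cost * n_visits / trap_cost


def break_even_visits(trap_cost: float, visit_cost: float) -> int:
    """Smallest number of avoided visits that pays for the trap."""
    return math.ceil(trap_cost / visit_cost)


print(visit_cost_ratio(250, 125, 24))  # 12.0
print(break_even_visits(250, 125))     # 2
```

On these figures, the trap pays for itself once it replaces two weekly visits; any recurring costs of the automatic system would push this break-even point later.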

Reasons to adopt an automatic trap and possible limits in their use

There are several advantages in adopting automatic traps equipped with a camera device for insect pest monitoring, as listed in Table 2. Given the continuous progress of new technologies, once these advancements become affordable for growers and forest managers, automated monitoring could be widely exploited worldwide in numerous agricultural, horticultural and forest systems for several insect pest species. The possibility of detecting insect pest occurrence remotely and creating digital records of its population dynamics, from both a spatial and a temporal point of view, will provide users with a very powerful tool to face current and future challenges in insect pest monitoring and management. For instance, area-wide monitoring based on automatic traps can use a higher number of observation points with a less aggregated distribution than is currently feasible with human-based monitoring (which is usually concentrated in a limited number of easily reached points). This approach can create the conditions to better understand the large-scale dynamics of insect distribution and to benefit from this knowledge, as reported by Jiang et al. (2008, 2013) and Potamitis et al. (2017).

Camera-equipped traps can directly benefit growers by supporting the management of new invasive agricultural pests already present in a given area, through the adaptation of either prototypes or commercial trap designs to the species of interest. For instance, the brown marmorated stink bug Halyomorpha halys Stål (Hemiptera: Pentatomidae) is effectively monitored with aggregation pheromone-baited pyramid traps (Acebes-Doria et al. 2020), but its monitoring could benefit from trap automation for survey, early detection, and area-wide programs. Camera-equipped pyramidal trap prototypes, such as the one described by Selby et al. (2014), could be adapted to optimize and implement the monitoring and management of this harmful agricultural pest. The versatility of these camera-based prototypes, which are flexible and adaptable to different pests (Selby et al. 2014), relies on the possibility of monitoring different species with similar behavior simply by changing the attractant. For example, with sufficient power autonomy, the prototypes of Selby et al. (2014) could be adopted for the continuous monitoring of invasive species such as H. halys. Commercial solutions specifically designed for H. halys also already exist, such as the iSCOUT® Bug (Pessl Instruments 2020).

Camera-based insect pest monitoring can also be adopted in forestry as a complementary tool to aerial and field surveys of forest pests. To date, remote sensing (exploiting images from satellites or unmanned aerial vehicles, i.e., drones) can facilitate the creation of infestation maps together with classical aerial and direct field scouting (Hall et al. 2016; Torresan et al. 2017; Zhang et al. 2019). The inclusion of camera traps for insect pests, paired with remote sensing, can further enhance forest monitoring programs, permitting early detection of harmful species and prediction of outbreak risk from the monitoring data (Ayres and Lombardero 2018; Choi and Park 2019). These monitoring improvements can also be exploited for quarantine pests, for example, to detect B. tryoni in fruit and vegetable shipments for Australian exports (Liu et al. 2009) or forest longhorn and bark beetles not yet present in the European Union (Rassati et al. 2016). In fact, web-based automatic monitoring can be effectively adopted for the early detection of invasive alien species at high-risk sites, such as a country's points of entry (i.e., international ports and airports) (Poland and Rassati 2019). A trap-based monitoring program for quarantine species requires frequent inspections of the captures to confirm the absence of the target insect pest or to guarantee a prompt reaction in case of detection. The trap check, which is typically performed manually by a human operator in situ, can be replaced with automatic remote observation of trap captures, limiting direct human intervention to cases of real need, as described by Rassati et al. (2016) for wood-boring beetles. Finally, camera-based monitoring could also be applied within eradication programs for invasive species (Martinez et al. 2020).

In addition to image-based classification, other systems are available to automatically detect and monitor insect pests, such as infrared sensors and acoustic sensors, which have been exploited for several insect species (Cardim Ferreira Lima et al. 2020). The main advantage of a trap equipped with an image sensor (i.e., a camera taking pictures) over automatic traps based on, for instance, light-dependent resistors or infrared sensors (e.g., those adopted in Holguin et al. 2010; Kim et al. 2011; Potamitis et al. 2017) is the availability of a picture of the captured insects that a human operator can check and verify remotely at any time. In Potamitis et al. (2017), for example, photointerruption optoelectronic sensors (based, for instance, on LED emitters and photodiode receivers) allowed a very precise count (i.e., high accuracy) of the red palm weevil or of grain beetles captured in funnel, pyramid or pitfall traps. Nevertheless, since this sensor only registers an arthropod entering the trap, every insect is counted regardless of its identity. Similarly, Kim et al. (2011) demonstrated a very high correlation between the electric signal generated by an infrared sensor and the actual captures of G. molesta inside automatic cone-type traps installed in apple orchards. However, the high accuracy of the insect counts was substantially due to the high specificity of the sex pheromone lure, and some overestimations occurred because of captures of non-target arthropods or of G. molesta males that triggered the sensor but escaped the trap. Stored high-quality images can unequivocally clarify the real situation inside the traps, avoiding these misidentification issues. Regarding trapping efficiency, Kim et al. (2011) reported that the automatic cone-type trap was less efficient than the standard wing-type sticky trap and suggested modifying its structure to force insects to pass through the sensing area and thus be counted. Comparing the same trap type (i.e., bucket traps), Holguin et al. (2010) showed that total moth captures in the automatic traps were approximately half of those recorded in similar standard traps without the electronic circuit, which likely caused the low capture rate (probably through the ultrasonic vibrations of the clock circuits and the electromagnetic fields generated by the detection circuits). These issues are absent in camera-equipped traps, where identification and counting are based on image analysis rather than on in situ electric sensors.
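The point that a high correlation does not rule out systematic overcounting can be checked numerically. The sketch below computes Pearson's correlation coefficient and the mean bias between sensor counts and manual counts of the same traps; the two count series are invented for illustration and do not come from Kim et al. (2011) or any other cited study.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long series."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two series of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / math.sqrt(sxx * syy)


def mean_bias(reference: Sequence[float], measured: Sequence[float]) -> float:
    """Average of (measured - reference); positive values mean overcounting."""
    if len(reference) != len(measured) or not reference:
        raise ValueError("need two non-empty series of equal length")
    return sum(m - r for r, m in zip(reference, measured)) / len(reference)


# Invented counts for five trap checks: manual inspection vs. sensor signal.
manual = [2, 5, 9, 14, 20]
sensor = [3, 6, 11, 16, 23]
print(f"r = {pearson_r(manual, sensor):.4f}, "
      f"bias = {mean_bias(manual, sensor):+.1f} insects per check")
```

With these invented data the correlation exceeds 0.99 while the sensor still overcounts by almost two insects per check on average, the kind of discrepancy a stored image would allow an operator to resolve.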

Nevertheless, some elements still restrain a massive adoption of automated devices for insect pest monitoring in both agriculture and forestry. First, the cost of such technology is usually not affordable for individual growers or forest managers, although the costs of electronic components and batteries have decreased considerably in the last few years. In the private sector, the use of camera-equipped automatic traps is currently restricted mainly to producer cooperatives, in which both the costs and the added value of automatic traps are shared among several growers of a common crop, who gain shared information. Another example is given by large companies that wish to improve the technical aspects of their pest management and simultaneously exploit this investment in marketing actions that enhance their brand (for instance, important wineries producing expensive wines). In the public sector, such monitoring tools are likely more suitable at the regional or national level for plant protection services in both agriculture and forestry. Indeed, the strength of camera-equipped automatic traps lies in the creation of trap networks that cover large areas, providing real-time area-wide information on specific insect pest infestations. A second limit encountered in reviewing various research papers on camera-equipped trap prototypes is the low power autonomy of some of these systems. Another limit can be the low resolution of the capture pictures. However, both issues have been largely solved in commercial automatic traps and can no longer be considered limits for the practical adoption of camera-based monitoring. Finally, a fundamental limitation of these tools is the limited availability and level of automated pest identification and counting. Several commercial options require manual identification of the species, or at least manual validation, leaving room for further improvements in fully automated pest detection systems.

Challenges for further developments

Agriculture is in the middle of a digital revolution. Specifically, the huge amount of data available and the relatively low cost of collecting and transmitting it (e.g., digital weather stations, in situ sensors for several soil–plant–environment parameters, drones, and satellites) represent an enormous opportunity. Agricultural operators are now facing the ‘Big Data Analysis’ prospect: organizing, aggregating and interpreting the massive amount of available digital data with sophisticated algorithms to drive decisions based on data interpretation, prediction, and inference, potentially on a global scale (Fan et al. 2014; Weersink et al. 2018). In addition, computer vision (Paul et al. 2020), machine learning (Liakos et al. 2018), deep learning (Kamilaris and Prenafeta-Boldú 2018), neural networks (Patil and Vohra 2020), fuzzy logic (Kale and Patil 2019) and artificial intelligence (Jha et al. 2019) can reduce human intervention and effort, optimize inputs and maximize outputs. Moreover, all this information is embedded in the highest level of connectivity that humankind has ever witnessed. The concept of the ‘internet of things’ (IoT), defined by Granell et al. (2020) as the ‘holistic proposal to enable an ecosystem of varied, heterogeneous networked objects and devices to speak to and interact with each other’, is opening countless perspectives for the near future (Lakhwani et al. 2019). Information and communications technology (ICT) has reached a new frontier with the IoT, with a significant impact on precision agriculture and other fields of agriculture (Sreekantha and Kavya 2017; Khanna and Kaur 2019). This advancement is also due to achievements in wireless communication technology (Rehman et al. 2014) and in the Global System for Mobile Communications (GSM) (Sudarshan et al. 2019) applied to agricultural systems. Practical applications of the IoT to insect monitoring and management with camera-equipped traps have recently been published, for instance, to monitor crawling insects such as cockroaches (Blattodea), beetle pests of stored food (Coleoptera) and ants (Hymenoptera: Formicidae) in urban environments (Eliopoulos et al. 2018), and to control the coffee berry borer Hypothenemus hampei Ferrari (Coleoptera: Curculionidae) in coffee crops (Figueiredo et al. 2020).
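As a concrete illustration of how a camera-equipped trap could act as an IoT node, the sketch below serializes one capture report into a JSON message and parses it back. The field names, the placeholder image URL on example.org, the coordinates and the choice of JSON are assumptions made for illustration; they do not follow any specific commercial trap or standard schema, and the transport layer (e.g., a GSM or other wireless link) is not shown.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class TrapReport:
    """One capture report sent by a hypothetical camera-equipped trap."""
    trap_id: str
    captured_at: str      # ISO 8601 timestamp in UTC
    latitude: float
    longitude: float
    target_species: str
    count: int            # automatic count, possibly still to be validated
    validated: bool       # True once an operator has checked the picture
    image_url: str        # where the full-resolution picture is stored
    battery_v: float      # supply voltage, useful to anticipate power failures


def to_message(report: TrapReport) -> str:
    """Encode a report as a compact, key-sorted JSON string."""
    return json.dumps(asdict(report), sort_keys=True)


def from_message(message: str) -> TrapReport:
    """Decode a JSON string produced by `to_message`."""
    return TrapReport(**json.loads(message))


report = TrapReport(
    trap_id="trap-017",
    captured_at="2020-06-15T05:00:00Z",
    latitude=45.40,
    longitude=11.88,
    target_species="Lobesia botrana",
    count=3,
    validated=False,
    image_url="https://example.org/traps/trap-017/2020-06-15.jpg",
    battery_v=12.4,
)
message = to_message(report)
print(message)
```

Sending the picture by reference (a URL) rather than inline keeps the periodic message small, in line with the observation that image transmission is the most energy-demanding operation.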

Given this unique opportunity to apply high technology, electronics and informatics knowledge, and data analysis to insect pest monitoring, automatic traps equipped with camera devices will support the implementation of management programs by providing precise insect identification and updated counts remotely, in both agriculture and forestry. Compared with other automated insect detection systems, such as infrared sensors, a high-quality image of the captures allows the trap to be checked directly from the office with the same accuracy as an on-site inspection. Camera-based insect monitoring can be exploited not only for pest monitoring but also for early detection and survey, allowing a prompt reaction, especially to invasive species. Traps could potentially be interconnected among sites to create networks at local, regional, national, continental, and global scales, as reported by Potamitis et al. (2017). In these networks, digital automatic trap information could be integrated into a ‘Big Data’ system together with several other environmental and geographical parameters, such as geocoordinates, weather trends, forecasting models for pest species, and control techniques (e.g., grower interventions in agricultural and horticultural crops, or forest pest management programs in forestry). Such integrated systems will enable the exploitation of the temporal and spatial distributions of the pests captured by the traps to enhance and optimize their control with a technology-driven approach in conventional and organic management programs.
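The integration step described above amounts, at its simplest, to joining trap records with other data sources on shared keys such as site and date, and then aggregating along space or time. The sketch below joins hypothetical daily trap counts with hypothetical daily weather records and totals captures per site; the site names, fields and values are invented, and a real system would typically rely on a database or data-analysis library rather than plain dictionaries.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

TrapCount = Tuple[str, str, int]  # (site, ISO date, number of captures)
WeatherKey = Tuple[str, str]      # (site, ISO date)


def integrate(counts: List[TrapCount],
              weather: Dict[WeatherKey, Dict[str, float]]) -> List[dict]:
    """Attach the weather record of the same site and day to each trap count."""
    merged = []
    for site, day, n in counts:
        record = {"site": site, "date": day, "captures": n}
        # Days without weather data simply keep no weather fields.
        record.update(weather.get((site, day), {}))
        merged.append(record)
    return merged


def totals_by_site(records: List[dict]) -> Dict[str, int]:
    """Total captures per site, a minimal spatial summary of the network."""
    totals: Dict[str, int] = defaultdict(int)
    for r in records:
        totals[r["site"]] += r["captures"]
    return dict(totals)


counts = [
    ("orchard-A", "2020-06-01", 4),
    ("orchard-A", "2020-06-02", 9),
    ("orchard-B", "2020-06-01", 0),
    ("orchard-B", "2020-06-02", 2),
]
weather = {
    ("orchard-A", "2020-06-01"): {"t_mean_c": 18.5, "rain_mm": 0.0},
    ("orchard-A", "2020-06-02"): {"t_mean_c": 21.0, "rain_mm": 0.0},
    ("orchard-B", "2020-06-01"): {"t_mean_c": 17.0, "rain_mm": 6.2},
}
records = integrate(counts, weather)
print(totals_by_site(records))  # {'orchard-A': 13, 'orchard-B': 2}
```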

References

Acebes-Doria AL, Agnello AM, Alston DG, Andrews H, Beers EH, Bergh JC, Bessin R, Blaauw BR, Buntin GD, Burkness EC, Chen S, Cottrell TE, Daane KM, Fann LE, Fleischer SJ, Guédot C, Gut LJ, Hamilton GC, Hilton R, Hoelmer KA, Hutchison WD, Jentsch P, Krawczyk G, Kuhar TP, Lee JC, Milnes JM, Nielsen AL, Patel DK, Short BD, Sial AA, Spears LR, Tatman K, Toews MD, Walgenbach JD, Welty C, Wiman NG, van Zoeren J, Leskey TC (2020) Season-long monitoring of the brown marmorated stink bug (Hemiptera: Pentatomidae) throughout the United States using commercially available traps and lures. J Econ Entomol 113(1):159–171. https://doi.org/10.1093/jee/toz240

Ayres MP, Lombardero MJ (2018) Forest pests and their management in the Anthropocene. Can J For Res 48(3):292–301. https://doi.org/10.1139/cjfr-2017-0033

Bjerge K, Sepstrup MV, Nielsen JB, Helsing F, Hoye TT (2020) A light trap and computer vision system to detect and classify live moths (Lepidoptera) using tracking and deep learning. bioRxiv. https://doi.org/10.1101/2020.03.18.996447

Brockerhoff EG, Liebhold AM, Jactel H (2006) The ecology of forest insect invasions and advances in their management. Can J For Res 36(2):263–268. https://doi.org/10.1139/x06-013

Cardim Ferreira Lima M, de Almeida D, Leandro ME, Valero C, Pereira Coronel LC, Gonçalves Bazzo CO (2020) Automatic detection and monitoring of insect pests—a review. Agriculture 10(5):161. https://doi.org/10.3390/agriculture10050161

Choi WI, Park YS (2019) Monitoring, assessment and management of forest insect pests and diseases. Forests 10:865. https://doi.org/10.3390/f10100865

Damos P (2015) Modular structure of web-based decision support systems for integrated pest management. A review. Agron Sustain Dev 35(4):1347–1372. https://doi.org/10.1007/s13593-015-0319-9

Deepthi MB, Sreekantha DK (2017, March) Application of expert systems for agricultural crop disease diagnoses—A review. In: 2017 International conference on inventive communication and computational technologies (ICICCT), p. 222–229, IEEE. https://doi.org/10.1109/ICICCT.2017.7975192

Dent D (2000) Sampling, monitoring and forecasting. Insect pest management, 2nd edn. CABI, Wallingford, pp 14–46

Ding W, Taylor G (2016) Automatic moth detection from trap images for pest management. Comput Electron Agr 123:17–28. https://doi.org/10.1016/j.compag.2016.02.003

Doitsidis L, Fouskitakis GN, Varikou KN, Rigakis II, Chatzichristofis SA, Papafilippaki AK, Birouraki AE (2017) Remote monitoring of the Bactrocera oleae (Gmelin) (Diptera: Tephritidae) population using an automated McPhail trap. Comput Electron Agr 137:69–78. https://doi.org/10.1016/j.compag.2017.03.014

Eliopoulos P, Tatlas NA, Rigakis I, Potamitis I (2018) A “smart” trap device for detection of crawling insects and other arthropods in urban environments. Electronics 7(9):161. https://doi.org/10.3390/electronics7090161

Ennouri K, Triki MA, Kallel A (2020) Applications of remote sensing in pest monitoring and crop management. In: Keswani C (ed) Bioeconomy for sustainable development. Springer, Singapore, pp 65–77

Fan J, Han F, Liu H (2014) Challenges of big data analysis. Natl Sci Rev 1(2):293–314. https://doi.org/10.1093/nsr/nwt032

Figueiredo VAC, Mafra S, Rodrigues J (2020) A Proposed IoT Smart Trap using Computer Vision for Sustainable Pest Control in Coffee Culture. arXiv preprint arXiv:2004.04504

Fischnaller S, Parth M, Messner M, Stocker R, Kerschbamer C, Reyes-Dominguez Y, Janik K (2017) Occurrence of different Cacopsylla species in apple orchards in South Tyrol (Italy) and detection of apple proliferation phytoplasma in Cacopsylla melanoneura and Cacopsylla picta. Cicadina 17:37–51

Fukatsu T, Watanabe T, Hu H, Yoichi H, Hirafuji M (2012) Field monitoring support system for the occurrence of Leptocorisa chinensis Dallas (Hemiptera: Alydidae) using synthetic attractants, Field Servers, and image analysis. Comput Electron Agr 80:8–16. https://doi.org/10.1016/j.compag.2011.10.005

Goldshtein E, Cohen Y, Hetzroni A, Gazit Y, Timar D, Rosenfeld L, Grinshpon Y, Hoffman A, Mizrach A (2017) Development of an automatic monitoring trap for Mediterranean fruit fly (Ceratitis capitata) to optimize control applications frequency. Comput Electron Agr 139:115–125. https://doi.org/10.1016/j.compag.2017.04.022

Granell C, Kamilaris A, Kotsev A, Ostermann FO, Trilles S (2020) Internet of things. In: Guo H, Goodchild MF, Annoni A (eds) Manual of digital earth. Springer, Singapore, pp 387–423

Guarnieri A, Maini S, Molari G, Rondelli V (2011) Automatic trap for moth detection in integrated pest management. Bull Insectology 64(2):247–251

Hall RJ, Castilla G, White JC, Cooke BJ, Skakun RS (2016) Remote sensing of forest pest damage: a review and lessons learned from a Canadian perspective. Can Entomol 148(S1):S296–S356. https://doi.org/10.4039/tce.2016.11

Hári K (2014) A gyümölcsmolyok elleni környezetkímélő növényvédelem fejlesztésének hazai lehetőségei = Possibilities in development of environmentally friendly control of fruit moths in Hungary. Doctoral dissertation, Budapesti Corvinus Egyetem. https://doi.org/10.14267/phd.2014064

Hendricks DE (1985) Portable electronic detector system used with inverted-cone sex pheromone traps to determine periodicity and moth captures. Environ Entomol 14(3):199–204. https://doi.org/10.1093/ee/14.3.199

Hendricks DE (1990) Electronic system for detecting trapped boll weevils in the field and transferring incident information to a computer. Southwest Entomol 15(1):39–48

Holguin GA, Lehman BL, Hull LA, Jones VP, Park J (2010) Electronic traps for automated monitoring of insect populations. IFAC Proc Vol 43(26):49–54. https://doi.org/10.3182/20101206-3-JP-3009.00008

Jha K, Doshi A, Patel P, Shah M (2019) A comprehensive review on automation in agriculture using artificial intelligence. Artif Intell Agric 2:1–12. https://doi.org/10.1016/j.aiia.2019.05.004

Jiang JA, Tseng CL, Lu FM, Yang EC, Wu ZS, Chen CP, Lin SH, Lin KC, Liao CS (2008) A GSM-based remote wireless automatic monitoring system for field information: a case study for ecological monitoring of the oriental fruit fly, Bactrocera dorsalis (Hendel). Comput Electron Agr 62(2):243–259. https://doi.org/10.1016/j.compag.2008.01.005

Jiang JA, Lin TS, Yang EC, Tseng CL, Chen CP, Yen CW, Zheng XY, Liu CY, Liu RH, Chen YF, Chang WY, Chang WY (2013) Application of a web-based remote agro-ecological monitoring system for observing spatial distribution and dynamics of Bactrocera dorsalis in fruit orchards. Precis Agric 14(3):323–342. https://doi.org/10.1007/s11119-012-9298-x

Jones VP, Brunner JF, Grove GG, Petit B, Tangren GV, Jones WE (2010) A web-based decision support system to enhance IPM programs in Washington tree fruit. Pest Manag Sci 66(6):587–595. https://doi.org/10.1002/ps.1913

Kale SS, Patil PS (2019) Data mining technology with fuzzy logic, neural networks and machine learning for agriculture. Data management, analytics and innovation. Springer, Singapore, pp 79–87

Kamilaris A, Prenafeta-Boldú FX (2018) Deep learning in agriculture: a survey. Comput Electron Agric 147:70–90. https://doi.org/10.1016/j.compag.2018.02.016

Kang SH, Cho JH, Lee SH (2014) Identification of butterfly based on their shapes when viewed from different angles using an artificial neural network. J Asia-Pac Entomol 17(2):143–149. https://doi.org/10.1016/j.aspen.2013.12.004

Khanna A, Kaur S (2019) Evolution of Internet of Things (IoT) and its significant impact in the field of precision agriculture. Comput Electron Agric 157:218–231. https://doi.org/10.1016/j.compag.2018.12.039

Kim Y, Jung S, Kim Y, Lee Y (2011) Real-time monitoring of oriental fruit moth, Grapholita molesta, populations using a remote sensing pheromone trap in apple orchards. J Asia-Pac Entomol 14(3):259–262. https://doi.org/10.1016/j.aspen.2011.03.008

Kliewe V (1998) Elektronisch gesteuerte Zeitfalle zur Untersuchung der tageszeitlichen Aktivität von Bodenarthropoden. Beiträge zur Entomol = Contrib Entomol 48(2):541–543

Kondo A, Sano T, Tanaka F (1994) Automatic record using camera of diel periodicity of pheromone trap catches. Jpn J Appl Entomol Zool 38:197–199. https://doi.org/10.1303/jjaez.38.197

Lakhwani K, Gianey H, Agarwal N, Gupta S (2019) Development of IoT for smart agriculture a review. In: Rathore V, Worring M, Mishra D, Joshi A, Maheshwari S (eds) Emerging trends in expert applications and security. Springer, Singapore, pp 425–432

Liakos K, Busato P, Moshou D, Pearson S, Bochtis D (2018) Machine learning in agriculture: a review. Sensors 18(8):2674. https://doi.org/10.3390/s18082674

Insect Limited (2020) https://www.insectslimited.com/sighttrap. Accessed 9 Nov 2020

Liu Y, Zhang J, Richards M, Pham B, Roe P, Clarke A (2009) Towards continuous surveillance of fruit flies using sensor networks and machine vision. In: 2009 5th International conference on wireless communications, networking and mobile computing, pp 1–5. https://doi.org/10.1109/WICOM.2009.5303034

López O, Rach MM, Migallon H, Malumbres M, Bonastre A, Serrano J (2012) Monitoring pest insect traps by means of low-power image sensor technologies. Sensors 12(11):15801–15819. https://doi.org/10.3390/s121115801

Lucchi A, Sambado P, Royo ABJ, Bagnoli B, Benelli G (2018) Lobesia botrana males mainly fly at dusk: video camera-assisted pheromone traps and implications for mating disruption. J Pest Sci 91(4):1327–1334. https://doi.org/10.1007/s10340-018-1002-0

Martinez B, Reaser JK, Dehgan A, Zamft B, Baisch D, McCormick C, Giordano AJ, Aicher R, Selbe S (2020) Technology innovation: advancing capacities for the early detection of and rapid response to invasive species. Biol Invasions 22:75–100. https://doi.org/10.1007/s10530-019-02146-

McCravy KW (2018) A review of sampling and monitoring methods for beneficial arthropods in agroecosystems. Insects 9:170. https://doi.org/10.3390/insects9040170

Muirhead-Thompson RC (2012) Trap responses of flying insects: the influence of trap design on capture efficiency. Academic Press, Cambridge

Okuyama T, Yang EC, Chen CP, Lin TS, Chuang CL, Jiang JA (2011) Using automated monitoring systems to uncover pest population dynamics in agricultural fields. Agric Syst 104(9):666–670. https://doi.org/10.1016/j.agsy.2011.06.008

Patil B, Vohra M (2020) Contribution of neural networks in different applications. In: Sathiyamoorthi V (ed) Handbook of research on applications and implementations of machine learning techniques. IGI Global, Hershey, pp 305–316

Paul A, Ghosh S, Das AK, Goswami S, Choudhury SD, Sen S (2020) A review on agricultural advancement based on computer vision and machine learning. In: Mandal J, Bhattacharya D (eds) Emerging technology in modelling and graphics. Springer, Singapore, pp 567–581

Pessl Instruments (2020) http://metos.at/iscout/. Accessed 9 Nov 2020

Poland TM, Rassati D (2019) Improved biosecurity surveillance of non-native forest insects: a review of current methods. J Pest Sci 92(1):37–49. https://doi.org/10.1007/s10340-018-1004-y

Potamitis I, Eliopoulos P, Rigakis I (2017) Automated remote insect surveillance at a global scale and the internet of things. Robotics 6(3):19. https://doi.org/10.3390/robotics6030019

Priya CT, Praveen K, Srividya A (2013) Monitoring of pest insect traps using image sensors & dspic. Int J Eng Trends Tech 4(9):4088–4093

Rassati D, Faccoli M, Chinellato F, Hardwick S, Suckling DM, Battisti A (2016) Web-based automatic traps for early detection of alien wood-boring beetles. Entomol Exp Appl 160(1):91–95. https://doi.org/10.1111/eea.12453

Rehman A, Abbasi AZ, Islam N, Shaikh ZA (2014) A review of wireless sensors and networks’ applications in agriculture. Comput Stand Inter 36(2):263–270. https://doi.org/10.1016/j.csi.2011.03.004

Rovero F, Zimmermann F, Berzi D, Meek P (2013) “Which camera trap type and how many do I need?” A review of camera features and study designs for a range of wildlife research applications. Hystrix 24(2):148–156. https://doi.org/10.4404/hystrix-24.2-6316

Sciarretta A, Calabrese P (2019) Development of automated devices for the monitoring of insect pests. Curr Agric Res 7(1):19–25. https://doi.org/10.12944/CARJ.7.1.03

Selby RD, Gage SH, Whalon ME (2014) Precise and low-cost monitoring of plum curculio (Coleoptera: Curculionidae) pest activity in pyramid traps with cameras. Environ Entomol 43(2):421–431. https://doi.org/10.1603/EN13136

Shaked B, Amore A, Ioannou C, Valdés F, Alorda B, Papanastasiou S, Goldshtein E, Shenderey C, Leza M, Pontikakos C, Perdikis D, Tsiligiridis T, Tabilio MR, Sciarretta A, Barceló C, Athanassiou C, Miranda MA, Alchanatis V, Papadopoulos N, Nestel D (2018) Electronic traps for detection and population monitoring of adult fruit flies (Diptera: Tephritidae). J Appl Entomol 142(1–2):43–51. https://doi.org/10.1111/jen.12422

Shimoda N, Kataoka T, Okamoto H, Terawaki M, Hata SI (2006) Automatic pest counting system using image processing technique. J Japan Soc Agric Mach JSAM 68(3):59–64. https://doi.org/10.11357/jsam1937.68.3_59

Silveira M, Monteiro A (2009) Automatic recognition and measurement of butterfly eyespot patterns. Biosystems 95(2):130–136. https://doi.org/10.1016/j.biosystems.2008.09.004

Southwood TRE, Henderson PA (2000) Ecological methods, 3rd edn. Wiley, Oxford

Sreekantha DK, Kavya AM (2017) Agricultural crop monitoring using IOT: a study. In: 2017 11th International conference on intelligent systems and control (ISCO), pp 134–139. https://doi.org/10.1109/ISCO.2017.7855968

Suckling DM (2016) Monitoring for surveillance and management. In: Allison JD, Cardé RT (eds) Pheromone communication in moths: evolution, behavior, and application. Univ of California Press, Oakland, pp 337–347

Sudarshan KG, Hegde RR, Sudarshan K, Patil S (2019) Smart agriculture monitoring and protection system using IOT. Persp Commun, Emb-Syst Signal-process-PiCES 2(12):308–310

Tabuchi K, Moriya S, Mizutani N, Ito K (2006) Recording the occurrence of the bean bug Riptortus clavatus (Thunberg) (Heteroptera: Alydidae) using an automatic counting trap. Jpn J Appl Entomol Z 50(2):123–129

Tirelli P, Borghese NA, Pedersini F, Galassi G, Oberti R (2011) Automatic monitoring of pest insects traps by Zigbee-based wireless networking of image sensors. In: 2011 International instrumentation and measurement technology conference. IEEE, pp 1–5. https://doi.org/10.1109/IMTC.2011.5944204

Torresan C, Berton A, Carotenuto F, Di Gennaro SF, Gioli B, Matese A, Miglietta F, Vagnoli C, Zaldei A, Wallace L (2017) Forestry applications of UAVs in Europe: a review. Int J Remote Sens 38(8–10):2427–2447. https://doi.org/10.1080/01431161.2016.1252477

Trapview (2020) https://www.trapview.com/v2/en/. Accessed 9 Nov 2020

Ünlü L, Akdemir B, Ögür E, Şahin İ (2019) Remote monitoring of European Grapevine Moth, Lobesia botrana (Lepidoptera: Tortricidae) population using camera-based pheromone traps in vineyards. Turkish J A F Sci Tech 7(4):652–657. https://doi.org/10.24925/turjaf.v7i4.652-657.2382

Upadhyay AJ, Ingole PV (2014) Automatic monitoring of pest insects traps using image processing. Int J Manage, IT Eng IJMIE 4(3):165–168. https://doi.org/10.11591/telkomnika.v12i8.6272

Web of Science (2020) https://apps.webofknowledge.com/. Accessed 18 Aug 2020

Weersink A, Fraser E, Pannell D, Duncan E, Rotz S (2018) Opportunities and challenges for big data in agricultural and environmental analysis. Annu Rev Resour Econ 10:19–37. https://doi.org/10.1146/annurev-resource-100516-053654

Wen C, Wu D, Hu H, Pan W (2015) Pose estimation-dependent identification method for field moth images using deep learning architecture. Biosys Eng 136:117–128. https://doi.org/10.1016/j.biosystemseng.2015.06.002

Yelapure SJ, Kulkarni RV (2012) Literature review on expert system in agriculture. Int J Comput Sci Inf Technol Adv Res 3(5):5086–5089

Zhang J, Huang Y, Pu R, Gonzalez-Moreno P, Yuan L, Wu K, Huang W (2019) Monitoring plant diseases and pests through remote sensing technology: a review. Comput Electron Agric 165:104943. https://doi.org/10.1016/j.compag.2019.104943

Funding

Open access funding provided by Libera Università di Bolzano within the CRUI-CARE Agreement. This work received no external funding.

Author information

Contributions

MP, FV and SA: Conceptualization and methodology; MP: Literature search; MP: Writing—original draft preparation; MP, FV and SA: Writing—review and editing; SA: Supervision. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Nicolas Desneux.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Preti, M., Verheggen, F. & Angeli, S. Insect pest monitoring with camera-equipped traps: strengths and limitations. J Pest Sci 94, 203–217 (2021). https://doi.org/10.1007/s10340-020-01309-4

DOI: https://doi.org/10.1007/s10340-020-01309-4