Abstract

This institutional self-study investigated the use of text-matching software (TMS) to prevent plagiarism by students in a Canadian university that did not have an institutional license for TMS at the time of the study. Assignments from a graduate-level engineering course were analyzed using iThenticate®. During the initial phase of the study, similarity scores from the first student assignments (N = 132) were collected to determine a baseline level of textual similarity. Students were then offered an educational intervention workshop on academic integrity. Another set of similarity scores from consenting participants’ second assignments (n = 106) was then collected, and a statistically significant assignment effect (p < 0.05) was found between the similarity scores of the two assignments. The results of this study indicate that TMS, when used in conjunction with educational interventions about academic integrity, can be useful to students and educators to prevent and identify academic misconduct. This study adds to the growing body of empirical research about academic integrity in Canadian higher education and, in particular, in engineering fields.

The purpose of this institutional self-study (Bertram Gallant and Drinan 2008) was to investigate the use of text-matching software (TMS) among engineering graduate students at a university in Western Canada. This project falls within the university’s commitment to upholding the fundamental values of academic integrity: fairness, respect, responsibility, trust, honesty, and courage (International Center for Academic Integrity [ICAI] 2014). This project grew out of conversations on campus about the possibility of using commercially available TMS. To determine whether such software would be useful on our campus, we undertook a small-scale pilot study. To align our study with institutional strategic commitments to enrich the quality and breadth of learning for our students, and to focus on improving student experience, we purposefully designed our research as an institutional self-study. This intentional design sharpened the focus on plagiarism prevention and student learning, rather than plagiarism detection for the purposes of catching students and imposing sanctions. Some studies have focused on the consequences of academic misconduct, noting consequences can be both a deterrent and a response to breaches of integrity (Austin et al. 2005; Christensen Hughes and Bertram Gallant 2016; Genereux and McLeod 1995). Our study focused more intently on pro-active approaches to prevention, which aligns with the position of scholars who advocate for a teaching and learning approach to academic integrity (Bertram Gallant 2008, 2017; Halgamuge 2017; Morris 2016; Price 2002).

Literature Review

As our study was situated within the Canadian context, we begin our review of the literature there, as this broad, national context sets the stage for subsequent topics we address: academic integrity in engineering-related fields and the use of TMS.

Higher Education in Canada

With an estimated population of just over 37 million (Statistics Canada 2019), Canada has a little less than one-tenth of the population of the United States and about two-thirds of that of the United Kingdom. Tertiary education includes public and private universities and colleges, while trade schools and polytechnics are classified as non-tertiary (Statistics Canada 2018b). The most recent available statistics show that 93% of Canadian adults aged 25 to 34 have attained a high school diploma, with 57% of adults aged 25 to 64 having completed some form of tertiary education, the highest rate among OECD countries (Statistics Canada 2018b).

In 2015–2016, Canada allocated 6% of its GDP to educational institutions, which is higher than the OECD average of 5%, though the allocation varies between provinces and territories (Statistics Canada 2018b). During the 2016–2017 academic year (for which the most recent statistics are available), the total number of students enrolled in post-secondary institutions was reported to be 2,051,865, of whom 135,528 were enrolled in architecture, engineering, and related technologies (Statistics Canada 2018a), with men accounting for 79.5% of total enrollments in those fields.

Higher education in Canada is decentralized (Eaton 2019). The accreditation of undergraduate engineering programs is managed by a national accreditation body, Engineers Canada. The Canadian Degree Qualifications Framework provides guidance to universities on quality assurance (Council of Ministers of Education, Canada 2007; McKenzie 2018), which is not regulated nationally, as it is in the United Kingdom or Australia, for example.

Academic Integrity in Higher Education

Since the 1960s, research in the United States and elsewhere has established consistently widespread academic misconduct in higher education. Reporting rates have varied depending on the study conducted. In an early seminal study, Bowers (1966) found that 27% of college students self-reported that they had engaged in plagiarism themselves; 14% self-reported having turned in papers completed in part or in whole by a third party; and 13% self-reported having cheated on an exam (p. 83). Later work by McCabe and Treviño (1996) found that 52% of students self-reported having copied from another student, 26% self-reported having plagiarized, and 54% reported having copied material without footnoting (p. 31). Rates of misconduct among Canadian students are similar.

One seminal study in Canada merits highlighting. Christensen Hughes and McCabe (2006a, 2006b) conducted the largest study of academic misconduct in Canadian history, surveying 14,913 undergraduate students, 1308 graduate students, and 1902 faculty across eleven post-secondary institutions. The results showed that academic misconduct among Canadian students begins before they reach post-secondary levels. Of particular interest for our study is that 35% of graduate students studying in Canada reported that they “engaged in one or more instances of serious cheating on written work” (Christensen Hughes and McCabe 2006a, p. 11), despite 77% of respondents reporting that they had been informed about institutional policies relating to academic misconduct.

Notwithstanding Christensen Hughes and McCabe’s (2006a, 2006b) large-scale study more than a decade ago, research relating to academic integrity in Canada has lagged behind other countries (Eaton and Edino 2018). However, in recent years, advocacy, research, and knowledge mobilization in this field have been gaining momentum (Bretag 2019a, 2019b; Christensen Hughes 2017; Eaton et al. 2019; McKenzie 2018). The reason for this may be the small but repeated efforts of individuals in the Canadian academic integrity community who have organized regional meetings, attended conferences, and formed networks (McKenzie 2018).

Academic integrity in professional education has been a topic of particular interest among a select group of Canadian scholars who are concerned there may be a relationship between student breaches of integrity in school and misconduct or unethical behaviour in professional contexts after graduation. This topic has been explored in a variety of fields among Canadian scholars including education (Bens 2010), health sciences (Austin et al. 2005, 2006; Miron 2016) and engineering (Roncin 2013; Smith et al. 2017).

Academic Integrity in Engineering

Although the fundamental values of academic integrity (ICAI 2014) are regarded as having a certain universality, and institutional policies identify expectations across a particular campus, there are still notable differences in what academic integrity means across disciplines (Simon 2016). Studies on academic integrity in engineering conducted in the United States have shown that engineering students report high rates of cheating behaviours, a close second to business students (McCabe 1997; McCabe et al. 2012). In their review of seven studies over 11 years, Finelli, Harding and Carpenter (2007) found that engineering students reported engaging in cheating behaviours more than students in other disciplines, regardless of opportunities to cheat. This is especially concerning because those who behave unethically in university are more likely to behave that way in the workplace (Harding et al. 2004).

Other research on academic integrity in engineering has demonstrated that students often lack awareness of and skills to avoid academic misconduct. One way to respond to this is through engaging students and providing clear ethical frameworks, which Colby and Sullivan (2008) reported as effective. Although not focused on engineering programs, Broekleman-Post (2008) also reported that faculty can safeguard against cheating and misconduct by discussing cheating. This is especially relevant in engineering programs where faculty may be perceived to turn a “blind eye” to students copying each other’s lab reports, for example (Parameswaran and Devi 2006).

Little research has been conducted in Canada in this field (Smith et al. 2017), but there are some notable exceptions. Hu (2001) used a qualitative case study to explore academic writing among 16 Chinese graduate students in sciences and engineering at the University of British Columbia, Canada. Hu also interviewed seven faculty members and reviewed institutional documentation such as course outlines. Study findings showed that the students worked extraordinarily hard to attain academic achievement, taking full course loads, presenting at conferences, and dedicating long hours of study in their programs, but they still struggled with presenting their ideas and research in English. The workloads and time pressures of students taking programs in sciences and engineering were noted as a complicating factor. One particular finding is noteworthy. The students consistently reported being told by faculty members that their writing had to be of publishable quality, but the feedback and support the students received to improve their writing varied greatly among instructors and supervisors. The students reported that the majority of faculty members chose to focus on content, offering little in the way of support to improve the technical aspects of the students’ writing. They felt they needed to develop their writing skills but did not receive adequate support to do so. Different expectations among individual faculty members, as well as variation in the type and thoroughness of the feedback provided by individual faculty members, proved to be a source of frustration for the students, with lack of feedback being noted as particularly frustrating.

Another study focused on engineering programs in Canada was conducted by Smith and Maw (2018) at the University of Saskatchewan and the University of Regina, which are located in the same province. A survey custom-designed for use in Canada was administered to undergraduate and graduate engineering students, as well as faculty (n = 567). Cheating among students was also linked to institutional reputation. Smith and Maw (2018) pointed out that “cheating damages engineering schools in three fundamental ways. It short-circuits the achievement of learning objectives, it erodes the integrity of the evaluation system that is the basis for professional accreditation, and it demoralizes community members who do not cheat” (p. 2). Findings showed a significant negative correlation between academic achievement and self-reported cheating behaviours. A lower academic average was found to be a factor in academic misconduct among Canadian engineering students in this study. Of particular interest was a finding that contradicted the often unchallenged assumption that “the only way to survive within their engineering program would be to engage in academic dishonesty frequently” (Smith and Maw 2018, p. 4). Results showed that this alleged culture of cheating in engineering did not significantly account for self-reported instances of cheating, at least among this particular group of students from Saskatchewan. The authors concluded that more research is needed to better understand academic misconduct among engineering students in Canada.

Text-Matching Software

TMS has been identified as a technology-enhanced way to combat academic misconduct, in particular plagiarism, in a digital age. Although it is sometimes referred to as “plagiarism detection software” (Kloda and Nicholson 2005; Weber-Wulff 2016), this term can be misleading. Such software can identify textual similarities between an uploaded document and other documents in a database. Only a human can determine whether or not the textual similarities suggest that plagiarism has taken place (Weber-Wulff 2016). Advantages of TMS include using the software as a formative tool to help students learn how to improve their writing (Weber-Wulff 2016). Disadvantages include similarity reports being difficult to interpret correctly and incorrect identification of reference material as being non-original (Weber-Wulff 2016). In addition, patchwriting might not be identified by TMS, even when it is an indication that material may have been used without attribution (Weber-Wulff 2016).

There are numerous commercially available systems, with Turnitin® being arguably the most widely used. It is typically licensed as a campus-wide product. iThenticate®, on the other hand, is similar to Turnitin® and is owned by the same parent company; however, it is more often used by academic journals and publishers, as it also contains ProQuest databases but does not contain student work. Initially, any documents, including student assignments, were added to Turnitin®’s proprietary database, but now uploaders can opt out of that feature. Documents uploaded to iThenticate® are not added to its corpus of data. Other commercially available products include, but may not be limited to, Compilatio, Copyscape, Docoloc, Duplichecker, Ephorus, OAPS, PlagAware, Plagiarisma, PlagiarismDetect, PlagiarismFinder, PlagScan, PlagTracker, Strike Plagiarism, and Urkund (Weber-Wulff 2016). In addition, there are a number of non-commercial TMS programs that have been developed, but a discussion of them is beyond the scope of this paper.

TMS has been studied in some depth in higher education contexts around the world for more than a decade (Badge and Scott 2009; Batane 2010; Bruton and Childers 2016; Wood 2004). However, despite its ever-increasing worldwide popularity (Bruton and Childers 2016), TMS has not been universally adopted in Canadian higher education institutions (Bretag 2019b; Kloda and Nicholson 2005). Strawczynski (2004) explored the difficulties faced at McGill University in the province of Quebec with the adoption of TMS. In the 2003–2004 academic year, that university adopted Turnitin® on a trial basis. Jesse Rosenfeld, a second-year student at McGill, received failing grades on his final assignments after refusing to submit his work to Turnitin®, which was a course mandate. He cited copyright infringement of his work and took the matter to the courts (Strawczynski 2004). The case received nationwide media attention, with the judge ruling in favour of Rosenfeld. By 2013 McGill no longer licensed Turnitin® (McGill University 2013). Although it is impossible to say for certain, it is conceivable that the outcome of this legal case may have led other Canadian institutions to become reluctant to adopt TMS, due to fear of litigation. It is not known how many higher education institutions in Canada currently use TMS.

Five years after McGill dropped its license for Turnitin®, Zaza and McKenzie (2018) published the results of a study in which they surveyed 820 students, 67 teaching assistants, and 53 faculty members at a Canadian university about the use of Turnitin®, which was provided as an option on campus. Eleven percent of instructors and 39% of students reported using the TMS only once. Use of the software was positively correlated with knowledge of the software. In other words, individuals who knew how to use the TMS were more likely to do so. Students’ responses about the software were generally positive, with 51% being satisfied or very satisfied with the software, and 70% having no concerns about using the TMS. Adequate resourcing was identified as a challenge with the software. Of the remaining responses, 16% were blank and 14% reported having concerns, mainly about added stress and privacy. Even when the institution provided resources on how to use the TMS, fewer than half of the respondents reported accessing the resources. A key point of interest was that students reported that academic staff could have better explained the software and what to do if the software found a text match. Although respondents were generally satisfied with the TMS, it was found that students needed more information and support from their instructors to use it more effectively (Zaza and McKenzie 2018).

TMS in Engineering Education

Cooper and Bullard (2014) investigated the use of TMS, in this case Turnitin®, in chemical engineering courses at the University of North Carolina in the United States. They found that the tool was useful to identify students in need of educational supports and resources for understanding and acting with academic integrity. Ali (2013) also investigated engineering student and faculty experiences with Turnitin® and found that both groups positively regarded its usefulness in minimizing and detecting plagiarism. Of the respondents who found the TMS useful, 80% noted that Turnitin® helped them better identify areas of concern in their writing and revise it prior to submission, and 70% noted that it helped them better understand plagiarism.

However, Cox (2012) reported that the use of TMS, specifically Turnitin®, may be limited as an educational tool. Her study focused on academic interventions: instructional support and software training. Student participants in her study reported benefiting more from the workshops and instructional support than from using the software, although they did find value in its use. Limits to TMS are also noted by Kaner and Fielder (2008), who suggested that TMS may be used by students as a way of avoiding detection, rather than as a tool to avoid plagiarism, noting the potential to modify settings in the software to generate more favourable outcomes. Oghigian et al. (2015) examined Turnitin® similarity reports of 68 science and engineering papers written by students in a Japanese university. At first glance, 99% of the papers contained textual similarities to documents in the Turnitin® database; however, on closer investigation, many of these similarities were excluded as false positives, leaving 29% of the papers with potentially plagiarized materials. The authors further argued that in these cases plagiarism was not intentional, and there was little evidence of intent to deceive. The authors advised TMS users to interpret similarity results with caution.

At the conclusion of our review of the literature, we found no evidence of TMS being studied specifically in engineering graduate programs in Canada. We developed our study to advance knowledge of academic integrity within this particular context. Our study was guided by these research questions:

1. How can TMS be used for plagiarism prevention with graduate students?

2. How can TMS be used as a tool for educating students about what plagiarism is and how to avoid it?

3. What is the baseline of textual similarity on student assignments in a graduate-level engineering course?

4. What impact, if any, can a workshop on academic integrity and TMS have on similarity scores in a graduate-level engineering course?

Conceptual Framing: Institutional Self-Study

We have framed our study around Bertram Gallant and Drinan’s (2008) four-stage model of academic integrity institutionalization. Each stage represents continuing development of an institution with regard to academic integrity, with the first being the least developed and the final being the most mature. The four stages are a) Recognition and Commitment, b) Response Generation, c) Implementation, and d) Institutionalization. Institutional research that acknowledges the importance of academic integrity on a campus and develops responses to it is an indicator of commitment to improvement. In order for an institution to develop innovative and evidence-informed educational interventions to support academic integrity, it must first uncover what is happening on its own campus. Self-study can be a key step to developing a culture of academic integrity at an institution (Bertram Gallant and Drinan 2008).

Although the volume of academic misconduct research in Canada may not be as robust as in other regions, studies conducted in this country have often used an institutional self-study approach (Austin et al. 2005; Austin et al. 2006; Bens 2010; Hu 2001; Taylor et al. 2004; Zaza and McKenzie 2018), frequently naming the institutions in the knowledge mobilization of the research. Our project followed in the footsteps of previous self-studies in Canada, and elsewhere, as part of an institutional commitment not only to uphold academic integrity but also to improve it, and to showcase the university’s commitment to student learning and success through evidence-based approaches.

Research Design

This study took place within the engineering faculty of a large university in Western Canada. The institutional self-study was bounded within one professional development course required for several departments of engineering graduate students. The study was also bounded by time, in that it took place over one 12-week semester in winter 2019. Three particular highlights make this case noteworthy. First, the course has traditionally been team-taught by two or more full-time faculty members, all of whom have been recognized with institutional teaching excellence awards. Second, the team of instructors that designed the course included explicit instruction on plagiarism prevention. This strategy has been specifically recommended for engineering students for more than 20 years (Kennedy 1992), though to the best of our knowledge it has yet to be embedded in instructional practice on a wide scale. Finally, although the course was a requirement, it used a pass/fail evaluation system, meaning that no percentage grades or letter grades were assigned to students.

Two assignments from the course were included in the study. The first assignment was used to establish a baseline for the data in terms of text matches identified by TMS. The second assignment provided additional data after students had been debriefed about the nature of the study, and had received a teaching and learning intervention to help them develop a deeper understanding of plagiarism prevention.

Ethical Considerations

This project received approval from the institutional research ethics review board (REB17–0372). In order to obtain an accurate baseline measure of textual similarity, students could not be made aware of the research before submission of their first assignment so as to not influence their behaviours. For this reason, a deception model was used, and students were made aware of the study only after the similarity scores on their first assignment were determined.

Because of the deception component of this study, considerable harm reduction measures were taken. For example, the research associate hired for the project was not a student, as the institutional REB requested that no student have access to peer grades or work; and individual data from consenting students could not be disclosed.

Additionally, our research ethics board approval stipulated that student work not be uploaded to or saved in a database of any kind. This decision was made in favour of protecting students’ intellectual property. In order for the study to proceed, permission was also required from the institutional legal counsel in order to obtain a license to TMS. As a result of these consultations, iThenticate® was the product selected for the purposes of this research. The process to obtain approval for all aspects of the study, from both the research ethics board and legal counsel, was extraordinarily rigorous and required approximately nine months.

All assignments uploaded to iThenticate® were available only to the uploader through a password-protected account. Assignments were not saved to iThenticate®’s database or used in any way beyond the scope of individual text comparison. Owned by Turnitin®, iThenticate® is most often used to examine academic manuscripts prior to publication. According to iThenticate® (2018), uploaded texts are compared with “60 billion web pages and 155 million content items, including 49 million works from 800 scholarly publisher participants of Crossref Similarity Check” (para. 1).

Research Participants

Participants included engineering graduate students enrolled in Master of Science or Doctor of Philosophy programs (N = 132). Baseline scores were collected, anonymized, and retained from all students in the course, with the permission of the research ethics board. Subsequent to the collection of baseline data, students received an in-class debrief of the deception protocol. The purpose of the study was explained to them, along with the rationale for collecting baseline data without their prior knowledge. Following the deception debrief, 106 (80%) students consented to carry on with the study, had their second assignment analyzed with iThenticate®, and received their personal similarity reports. We are unable to speculate as to why the remaining 20% did not consent to continue with the project, but not all students attended class that day, and others may simply have not been interested.

Data Sources

Two assignments were selected for this research project: an impact study and a short conference-style paper. The course was evaluated on a pass/fail basis, so the two assignments were purposefully brief to provide a fair workload to the students.

The first assignment was a one-page abstract on the impact of a seminal study in students’ research areas. The students were asked to choose a work, find related publications that used it, and perform an impact analysis. The main objectives of this assignment were to become familiar with a seminal work in their research area, to learn how to search different databases to find related work, and to practice writing.

The second assignment took the form of a short conference paper, written in a style that is typical for the students. The paper included an introduction illustrating how the student’s research project fit into their research field, a brief overview of key methods used to collect and analyze data, a summary of initial data collection and analysis, and a concluding section. The submitted papers were organized into predefined themes aligned with areas of research. Students who submitted papers within a specific theme performed a double-blind review of three thematically similar papers from other students. The students discussed their reviews at a mock technical program committee meeting. Because this assignment was approached from the perspective of a writer and a reviewer, this was an excellent opportunity to reinforce concepts related to academic integrity. It should be noted that TMS analysis was done on the initial submissions of assignment 2 papers prior to peer review.

Procedures

Each paper (baseline N = 132; assignment 2 n = 106) was run through iThenticate®. Quotes and bibliographies were excluded from the similarity report to avoid artificially inflating scores. For each text uploaded, iThenticate® assigns a similarity score in the form of a percentage, representing how much of the input text is similar to texts in the database. All similarities are highlighted, and original sources are provided in the report.
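To make concrete how a percentage score of this kind can arise, the following is a toy sketch only. The matching method used by iThenticate® is proprietary and is not described in this paper, so the three-word window, the function names, and the sample sentences below are all illustrative assumptions rather than a description of the product.

```python
import re


def word_ngrams(text: str, n: int = 3) -> list[tuple[str, ...]]:
    """Split text into lowercase words and return every run of n consecutive words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


def similarity_percent(submission: str, sources: list[str], n: int = 3) -> float:
    """Toy score: percentage of the submission's word n-grams found in any source.

    This is an assumed, simplified stand-in for a text-matching score,
    not the algorithm of any commercial product.
    """
    submitted = word_ngrams(submission, n)
    if not submitted:
        return 0.0
    known = set()
    for source in sources:
        known.update(word_ngrams(source, n))
    matched = sum(1 for gram in submitted if gram in known)
    return 100.0 * matched / len(submitted)


# Hypothetical example texts, invented for illustration.
paper = "the quick brown fox jumps over the lazy dog"
database = ["a quick brown fox jumps high"]
print(f"{similarity_percent(paper, database):.1f}%")
```

Even in this simplified form, a nonzero score only indicates overlapping wording; as noted earlier, a human reader must still decide whether a match reflects plagiarism, a quotation, or common phrasing.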

Phase I – Collection and Analysis of Baseline Data

A research team member was added to the course’s learning management system as a teaching assistant. After the first assignment was electronically submitted, all assignments were downloaded and labeled by student ID number. Assignments were then input into iThenticate® for analysis, and similarity scores were recorded in a spreadsheet on a password-protected computer.

Phase II – Workshop on Academic Integrity

In order to take a holistic and educational approach to the use of TMS in class, members of the research team designed a workshop to accompany the TMS intervention. Ten days after the first assignment was submitted, a member of the research team provided a 75-minute workshop to the students. In the class, she used tangible examples of dishonesty to introduce the topic and foster discussion about intellectual property. She also reviewed the university’s policies on academic integrity and described examples of academic misconduct such as cheating and plagiarism. Students were also provided with instruction and practice on avoiding plagiarism through paraphrasing and citation techniques and were given resources for extra information and additional practice. Finally, TMS was introduced and explained. A variety of similarity result exemplars were shown to introduce students to TMS tools and explain how to interpret similarity scores. The workshop facilitator also addressed the advantages of TMS, such as its usefulness as a tool to flag areas of concern and better understand plagiarism, along with some of its disadvantages, including the time commitment to understand similarity reports and to interpret their results.

Phase III – Deception Debriefing and Solicitation of Consent

One week following the workshop, the research team member returned to the class. She revealed the deception, read the deception debriefing, and distributed and reviewed consent forms. She requested consent for continued participation, allowing her to access and analyze the second assignment using iThenticate® TMS. She explained how she had analyzed all of the first assignments and showed the class baseline results in an aggregated, non-identifying format. She also returned each similarity report—sealed in an individual envelope and labeled with a student ID number—to the corresponding student. Signed consent forms were received from 80% of the students, who agreed to participate in the subsequent phase of the project.

Phase IV – Collection and Analysis of Post-Intervention (Assignment 2) Data

The second assignments of consenting participants were downloaded shortly after submission. They were analyzed with iThenticate® using the same parameters as in Phase I. Similarity reports were emailed directly to consenting participants for their own information via the learning management system.

Findings

To establish a baseline level of textual similarity, all first assignment submissions were analyzed with iThenticate®, which assigned similarity scores to each one. Analysis of the student submissions revealed that the mean similarity score was 19.71% (Mdn = 14.5%).

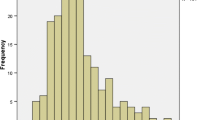

Ethics clearance for this study permitted us to retain baseline data from Assignment 1 regardless of the students’ continued participation; however, it is useful to compare the scores of all students with the scores of those students who consented to continue with the study. The mean baseline score of all 132 students was slightly higher (19.71%, SD = 18.01) than the mean baseline score of the 106 students who consented to continued participation (18.74%, SD = 18.04), but the difference was not statistically significant (t = 1.00, df = 106, p = .32) and the distributions of the scores were similar, as seen in Fig. 1.

Between the collection of the baseline scores and Assignment 2, students were provided with an academic integrity workshop and a deception debriefing, where they were informed about the study. To determine if there were any differences between the baseline scores and Assignment 2 scores, the second assignments submitted by consenting participants were analyzed using iThenticate®. The mean similarity score for Assignment 2 (n = 106) was 15.08% (SD = 17.17), and the median similarity score was 8%. Of the papers, 19 (17.92%) had similarity scores over 25%, and six (5.66%) had scores over 50%. Although these numbers may seem high, there were some complexities that contributed to this, which are explained later in this section. For further analysis, the generalized estimating equations (GEE) procedure was selected due to its flexibility, as it models the mean response rather than individual covariates, which was important given the correlated and unbalanced nature of the data. GEE was performed through the GENLIN procedure under SPSS 24.0, and the results revealed a statistically significant assignment effect with χ²(1) = 4.880, p = .027. Figure 1 displays the estimated marginal means of the similarity scores for the baseline (n = 132) and Assignment 2 (n = 106) measures.
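The threshold percentages reported above follow directly from the counts and the sample size. The short sketch below reproduces that arithmetic; the helper names and the small list of example scores are hypothetical, introduced only for illustration, and are not the study data.

```python
def percent_of(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to two decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100.0 * count / total, 2)


def count_over(scores: list[float], threshold: float) -> int:
    """Count how many similarity scores are strictly above the threshold."""
    return sum(1 for score in scores if score > threshold)


N_ASSIGNMENT_2 = 106  # consenting participants, as reported in the text

# Counts taken from the text: 19 papers over 25%, six papers over 50%.
print(percent_of(19, N_ASSIGNMENT_2))
print(percent_of(6, N_ASSIGNMENT_2))

# Hypothetical scores (NOT study data) showing how such counts are obtained.
example_scores = [8, 12, 30, 55, 4, 26]
print(count_over(example_scores, 25), count_over(example_scores, 50))
```

The two percentages agree with the reported 17.92% and 5.66%. Because the comparison is strict, a paper scoring exactly 25% would not be counted as over 25%.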

Further analysis of Assignment 2 data revealed two important complexities. First, it was discovered that 15 of the 106 assignments (14%) had inflated scores because the software did not identify their reference lists for exclusion and thus included them in the results. The mean score of these papers was 23.79% (SD = 20.38), with a median of 18%. There were no erroneous inclusions of bibliographies in the baseline data.

Additionally, eight of the papers contained instances of self-citation. For Assignment 2, students were to produce an extended abstract, and they could draw on their own work. In some cases, they reported on previously published research, which was not against the institution’s academic integrity policies. The instructor was aware of this situation. Self-citation affected the similarity scores of these eight papers. The mean score for these papers was 49.75% (SD = 25.26), and the median was 46%.
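The subgroup figures above allow a rough consistency check on how much the two complexities pulled the overall Assignment 2 mean upward. The sketch below backs out the mean of the remaining papers from the reported overall mean and the two subgroup means. It assumes the 15 papers with reference list errors and the eight papers with self-citation do not overlap, and it works from rounded published values, so the result is an approximate, implied figure and not one reported by the study. The function name `implied_subgroup_mean` is hypothetical.

```python
def implied_subgroup_mean(total_n, total_mean, groups):
    """Back out the size and mean of the remaining papers.

    groups is a list of (n, mean) pairs for known, non-overlapping subgroups.
    """
    known_n = sum(n for n, _ in groups)
    known_sum = sum(n * m for n, m in groups)
    rest_n = total_n - known_n
    rest_mean = (total_n * total_mean - known_sum) / rest_n
    return rest_n, rest_mean


# Reported values: 106 papers with mean 15.08; 15 papers with mean 23.79
# (reference list error); 8 papers with mean 49.75 (self-citation).
rest_n, rest_mean = implied_subgroup_mean(
    106, 15.08, [(15, 23.79), (8, 49.75)]
)
print(rest_n, round(rest_mean, 2))
```

Under these assumptions the remaining papers have an implied mean of roughly 10%, noticeably lower than the overall mean of 15.08%.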

Table 1 presents all the categories of collected data in aggregate. Figure 2 illustrates the distribution of similarity scores for all Assignment 1 papers (baseline), all Assignment 2 papers, and Assignment 2 papers without reference errors or self-citation.

To get a better idea of the potential of the software, an additional analysis compared only the similarity scores of the 83 students for whom Assignment 2 did not lead to inflated results due to self-citation or the reference list error. Once again, the GEE method was performed through the GENLIN procedure under SPSS 24.0. The results show a statistically significant assignment effect, χ²(1) = 9.196, p = .002. Figure 3 displays estimated marginal means of similarity scores for the 83 students for whom there were no self-citation or reference list errors on Assignment 2.
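The p-values reported for the two assignment effects can be checked directly from the chi-square statistics. The sketch below does not rerun the GEE model, which would require the raw data. It only converts a chi-square statistic with one degree of freedom into its upper-tail probability, using the fact that such a statistic is the square of a standard normal variable. The function name `chi2_df1_pvalue` is hypothetical.

```python
import math


def chi2_df1_pvalue(stat):
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom.

    With df = 1, X = Z**2 for a standard normal Z, so
    P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(stat / 2))


# Statistics reported in the text for the two GEE analyses.
for label, stat in [("all 106 participants", 4.880),
                    ("83 participants", 9.196)]:
    p = chi2_df1_pvalue(stat)
    print(f"{label}: chi2(1) = {stat:.3f}, p = {p:.3f}")
```

Both values agree with the reported p = .027 and p = .002 to three decimal places.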

Discussion

Our research has demonstrated that plagiarism exists in various forms and was apparent in a graduate-level engineering course. Furthermore, TMS has the potential to help students and faculty better understand and avoid plagiarism, and more importantly, incidents of plagiarism can be significantly reduced by better education. In this section we use Bertram Gallant and Drinan’s (2008) four-stage model to situate the discussion of the outcomes of this project.

Stage 1, Recognition and Commitment: As at other institutions, student academic misconduct was already a concern prior to this study, not only in engineering but also across campus. Our university recognized that despite commitments to address academic misconduct through policies and academic support services, transgressions were still occurring.

Stage 2, Response Generation: In response to concerns about academic misconduct, we recognized that TMS could be a useful educational tool to support students and faculty to develop a culture of academic integrity. We looked toward an “intentional, systemic approach to change” (Bertram Gallant and Drinan 2008, p. 31) by developing a study to investigate the usefulness of TMS as a tool to prevent plagiarism. We designed our study to incorporate TMS into a workshop on academic integrity and to trial it in one course to learn baseline levels of textual similarity and explore its potential as an educational tool.

Stage 3, Response Implementation: This stage has largely been described in this article. We undertook the research reported here, and the data we collected demonstrated that TMS is potentially useful for the prevention and detection of plagiarism.

Stage 4, Institutionalization: Based on the implications of our findings, we hope that the results of this study will prompt our institution to consider adopting TMS as part of a commitment to fostering a culture of academic integrity. We also hope that our work will inspire other institutions of higher education to recognize the scope of plagiarism on campus and commit themselves to addressing it. In the rest of this section, we discuss the study’s results in greater detail.

The mean level of textual similarity found in this study was similar to the 20.5% reported by Batane (2010). One difference between these results and others is that out of 132 students, 37 (28%) had similarity scores over 25% and 11 (8.3%) had scores over 50%. This is much higher than what was reported by CAVAL Collaborative Solutions (2002), where out of over 1700 student papers from across disciplines, 8.9% had similarity scores greater than 25%. We suspect this may be because the earlier report is now quite out of date and reflects a different landscape, where many sources were not available electronically and not included in TMS databases.

The use of TMS and similarity reports can significantly benefit students and researchers and increase the integrity of academic programs. Similarity reports provide students, faculty, and teaching assistants with evidence-informed information about student writing, which may be especially appreciated by students in engineering fields. During the course of this research, many students had legitimate questions about how to identify and avoid plagiarism, and the similarity reports provided them with valuable feedback to help them improve their writing. It was clear that they wanted to use their results as a learning tool and better their writing skills. TMS can help students independently identify problematic paraphrasing, patchwriting, or inadvertently uncited materials.

Faculty, too, can benefit from similarity reports produced by TMS. They can identify students who would benefit from additional support and training to better understand plagiarism and develop skills for citing and paraphrasing. TMS can help educators provide actionable and formative feedback. Faculty can also use the evidence in similarity reports to identify what is likely to be intentional plagiarism, saving time that would otherwise be spent manually finding and documenting it.

Although TMS offers many advantages to various stakeholders in higher education, the use of the software is not without its drawbacks. TMS has the potential for punitive applications, and this potential is greatest when the plagiarism identified is unintentional or the result of poor academic writing or research skills. Academic misconduct cannot be identified by a similarity score alone. It is crucial to analyze and interpret similarity scores produced by TMS, rather than taking scores at face value. Although scores may indeed reveal plagiarism, results may be confounded by software errors, common phrases, and technical jargon, among other things (Weber-Wulff 2016). Further, TMS is only one tool in the toolbox; it cannot be seen as a replacement for academic integrity workshops, tutorials, or education. It also does not replace human detection, and faculty should be aware that there are users who find ways to work around the software, with some websites offering strategies to avoid the detection of plagiarized materials (Carter and Blanford 2016). Furthermore, there is a need for student and faculty training with the software. One misinformed student belief that surfaced during the course of this study was that a similarity score of zero is ideal or necessary. All people involved in using the software need to understand how to interpret and use similarity results.

The software reports should not be used as the only measure for determining plagiarism. Upon determining a baseline level of textual similarity of student work and looking more carefully at the similarity reports, a more nuanced view of textual similarity emerged. Almost all the work submitted had some textual similarity, with only 10 of 132 (7.58%) papers assigned similarity scores of zero. Of the remaining 122 assignments, the results were heterogeneous. The vast majority contained textual similarities that were neither worrisome nor unexpected, such as terms and phrases common to academic or technical writing. Others contained examples of what could be considered cases where paraphrasing was either poorly done or the citation was unclear. Another problem was self-plagiarism, where students copied their own previous work. These incidences of unintentional plagiarism and self-plagiarism can be significantly reduced through proper training and education. Only a few works contained what can be considered intentional plagiarism, with large components of the submitted assignment copied and pasted from one or more sources with the intent to pass the work off as the student’s own.

Furthermore, no TMS program is perfect. As we discovered, there were times when the software did not function as intended; for example, out of 106 submissions of the second assignment, 15 similarity reports erroneously included the reference lists, despite the filter settings. This resulted in artificially inflated similarity scores, underscoring the importance of investigating and interpreting results carefully. There is no magic score or threshold, and all results need to be considered on a case-by-case basis.

Text-Matching Software in Canadian Higher Education

The use of TMS has been more limited in Canadian higher education contexts for a variety of reasons outlined in the literature review (Bretag 2019b; Kloda and Nicholson 2005; Strawczynski 2004; Zaza and McKenzie 2018). Research such as this, despite its narrow scope, provides evidence of the usefulness of TMS as a tool to promote academic integrity when coupled with instructional and administrative supports and policies.

We framed our project around Bertram Gallant and Drinan’s (2008) four-stage model of academic integrity institutionalization. The four developmental stages are a) Recognition and Commitment, b) Response Generation, c) Response Implementation, and d) Institutionalization. In this study, we were able to generate meaningful dialogue about the benefits and drawbacks of commercially available TMS. Above and beyond the results reported in this study, we were able to advance the institutional dialogue about the importance of incorporating pro-active pedagogical approaches to supporting graduate students’ learning.

At the beginning of this article we noted that institution-level research can help campus stakeholders affirm the importance of academic integrity. Then, educators and administrators can develop ways to respond to breaches of integrity as part of an overall institutional commitment to improve. We noted that for an institution to develop innovative and evidence-informed responses to support academic integrity, it must first uncover what is happening on its own campus. In the case of this study, we discovered that graduate engineering students benefitted from the combination of the use of TMS and direct instruction.

Limitations

This study had a number of limitations. First, as an institutional self-study, the results are not generalizable to other contexts. Second, the two data sets that were compared were not equal for a number of reasons. The baseline assignment and second assignment were of different lengths with different instructions. Furthermore, permitted self-citation and software errors affected the quality of the similarity reports from the second assignment. Finally, it is possible that the statistically significant reductions in similarity scores on the second assignment were due to unknown extraneous factors not considered in the scope of this research.

Future Research Directions

The results of this study are promising and indicate the potential for TMS to be used for the identification of and education about plagiarism in a graduate-level engineering course, yet they represent only two assignments from one cohort of learners. Further longitudinal research with other students in other faculties is warranted. Future research might allow students to access and use TMS as well. Another possible area of research is to investigate how TMS can help identify different types of plagiarism by more closely investigating the types of textual similarities flagged by the software. As research about academic misconduct in the field of engineering has been limited in Canada compared with other countries, not only is more research needed, but larger-scale, multi-institutional studies are needed in Canada (Eaton and Edino 2018).

Implications for Policy and Practice

Compared to other countries, Canada has been slow to adopt TMS in higher education (Bretag 2019b; Kloda and Nicholson 2005; Strawczynski 2004; Zaza and McKenzie 2018). This study demonstrates that the software offers some value to a variety of stakeholders in post-secondary institutions by providing students with examples of do’s and don’ts while writing a manuscript. We argue that with proper support and training mechanisms in place, more universities could consider licensing and adopting TMS for students and faculty.

Conclusions

Whether due to increased ease of access to materials online or the increased ease of identifying plagiarized materials, plagiarism is a growing concern in higher education. This study investigated the potential for TMS within a graduate-level course at a Western Canadian university. Our research determined the levels of textual similarity of student assignments and explored how TMS can be used to detect plagiarism and educate students about plagiarism, specifically in the field of graduate engineering programs. The high levels of textual similarity we discovered when establishing a baseline emphasize the importance of educating students about plagiarism and how to avoid it. Furthermore, we found that an intervention in the form of a workshop about academic integrity, paraphrasing, and TMS appeared to be effective in reducing similarity scores for a subsequent assignment. When comparing assignment scores before and after the intervention, statistically significant differences were noted.

TMS has the potential to support student and faculty understanding of plagiarism. However, the adoption of TMS alone is not sufficient to reduce instances of plagiarism. The implementation of this software on campus needs to be accompanied by explicit academic integrity education as well as resourcing in the form of human support for academic staff and students.

Bertram Gallant and Drinan (2008) pointed out that institutional self-study can be a crucial step in developing a culture of academic integrity at an institution. We found that to be the case in this study, because the process of conducting it generated deep discussions within the research team itself, which included members from three different faculties. In addition, it generated robust discussion within our institutional research ethics board, as noted in our methods section. We cannot draw conclusions about the development of the entire institution based on an inquiry conducted in a single faculty, but we can say that as a result of this work, we have been able to take a more pro-active approach not only to plagiarism prevention, but to upholding and enacting integrity in the graduate engineering program. For example, members of our research team have collaborated successfully with colleagues in the school of engineering on ongoing educational development for engineering faculty members about how to focus more on pro-active and preventative approaches to upholding and enacting integrity. In turn, this has led to deeper and sustained discussions about the need to connect academic integrity in school to professional ethics in industry.

This study is situated as one example of how the institution has been engaging in a more intentional effort to build an explicit culture of integrity. Additional evidence of the institutional commitment to academic integrity can be found in our other recent work (Eaton and Edino 2018; Eaton 2019; Crossman et al. 2020). Perhaps the most noteworthy evidence of an institutional commitment to academic integrity is that since the time of this study, the university created a new secondment position dedicated to academic integrity, appointing the principal investigator of this study to the role for a two-year period. The secondment role is situated within the centre for teaching and learning, and the terms of reference include further developing the institutional culture of integrity on campus.

References

Ali, H. I. H. (2013). Minimizing cyber-plagiarism through Turnitin: Faculty’s & students’ perspectives. International Journal of Applied Linguistics and English Literature. https://doi.org/10.7575/aiac.ijalel.v.2n.2p.33.

Austin, Z., Simpson, S., & Reynen, E. (2005). ‘The fault lies not in our students, but in ourselves’: Academic honesty and moral development in health professions education—Results of a pilot study in Canadian pharmacy. Teaching in Higher Education. https://doi.org/10.1080/1356251042000337918.

Austin, Z., Collins, D., Remillard, A., Kelcher, S., & Chiu, S. (2006). Influence of attitudes toward curriculum on dishonest academic behavior. American Journal of Pharmaceutical Education. https://doi.org/10.5688/aj700350.

Badge, J., & Scott, J. (2009). Dealing with plagiarism in the digital age. http://evidencenet.pbworks.com/w/page/19383480/Dealing-with-plagiarism-in-the-digital-age. Accessed 25 September 2019.

Batane, T. (2010). Turning to Turnitin to fight plagiarism among university students. Educational Technology & Society, 13(2), 1–12.

Bens, S. L. (2010). Senior education students’ understandings of academic honesty and dishonesty (Doctoral thesis). http://hdl.handle.net/10388/etd-09192010-154127. Accessed 25 September 2019.

Bertram Gallant, T. (2008). Academic integrity in the twenty-first century: A teaching and learning imperative. Hoboken, NJ: Wiley.

Bertram Gallant, T. (2017). Academic integrity as a teaching & learning issue: From theory to practice. Theory Into Practice. https://doi.org/10.1080/00405841.2017.1308173.

Bertram Gallant, T., & Drinan, P. (2008). Toward a model of academic integrity institutionalization: Informing practice in postsecondary education. Canadian Journal of Higher Education, 38(2), 25–43.

Bowers, W. J. (1966). Student dishonesty and its control in college. New York: Bureau of Applied Social Research, Columbia University.

Bretag, T. (2019a). Academic integrity: A global Community of Scholars. 1–12. Paper presented at the Canadian Symposium on Academic Integrity, Calgary, Canada. https://prism.ucalgary.ca/handle/1880/110280. Accessed 16 January 2020.

Bretag, T. (2019b). Contract cheating research: Implications for Canadian universities. Paper presented at the Canadian symposium on academic integrity, Calgary, Canada. http://hdl.handle.net/1880/110279. Accessed 10 September 2019.

Broeckelman-Post, M. A. (2008). Faculty and student classroom influences on academic dishonesty. IEEE Transactions on Education, 51(2), 206–211. https://doi.org/10.1109/TE.2007.910428.

Bruton, S., & Childers, D. (2016). The ethics and politics of policing plagiarism: A qualitative study of faculty views on student plagiarism and Turnitin®. Assessment & Evaluation in Higher Education. https://doi.org/10.1080/02602938.2015.1008981.

Carter, C. B., & Blanford, C. F. (2016). Plagiarism and detection. Journal of Materials Science, 51, 7047–7048. https://doi.org/10.1007/s10853-016-0004-7.

Christensen Hughes, J. M. (2017). Understanding academic misconduct: Creating robust cultures of integrity. Paper presented at the University of Calgary, Canada. http://hdl.handle.net/1880/110083. Accessed 25 September 2019.

Christensen Hughes, J., & Bertram Gallant, T. (2016). Infusing ethics and ethical decision making into the curriculum. In T. Bretag (Ed.), Handbook of academic integrity (pp. 1055–1073). Singapore: Springer Singapore.

Christensen Hughes, J. M., & McCabe, D. L. (2006a). Academic misconduct within higher education in Canada. Canadian Journal of Higher Education, 36(2), 1–21.

Christensen Hughes, J. M., & McCabe, D. L. (2006b). Understanding academic misconduct. Canadian Journal of Higher Education, 36(1), 49–63.

Colby, A., & Sullivan, W. M. (2008). Ethics teaching in undergraduate engineering education. Journal of Engineering Education, 97(3), 327–338.

CAVAL Collaborative Solutions. (2002). Victorian vice-chancellors’ electronic plagiarism detection pilot project. CAVAL Collaborative Solutions: Bundoora, Australia.

Cooper, M. E., & Bullard, L. G. (2014). Application of plagiarism screening software in the chemical engineering curriculum. Chemical Engineering Education, 48(2), 90–96.

Council of Ministers of Education, Canada. (2007).

Cox, S. (2012). Use of Turnitin and a class tutorial to improve referencing and citation skills in engineering students. In W. Aung, V. Ilic, O. Mertanen, J. Moscinski, & J. Uhomoibhi (Eds.), Innovations 2012 (pp. 109–118). Potomac, MD: iNEER.

Crossman, K., Sabbaghan, S., Eaton, S. E., & Lock, J. (2020). Plagiarism or sloppiness? A pilot study of student and professor perceptions of plagiarism. Paper presented at the International Center for Academic Integrity (ICAI) 2020 Conference, Portland, OR.

Eaton, S. E. (2019). Overview of higher education in Canada. In J. M. Jacob & R. Heydon (Eds.), Bloomsbury education and childhood studies. London, UK: Bloomsbury.

Eaton, S. E., & Edino, R. I. (2018). Strengthening the research agenda of educational integrity in Canada: A review of the research literature and call to action. International Journal of Educational Integrity, 14(1). https://doi.org/10.1007/s40979-018-0028-7.

Eaton, S. E., Crossman, K., & Edino, R. I. (2019). Academic integrity in Canada: An annotated bibliography. Retrieved from Calgary: http://hdl.handle.net/1880/110130.

Finelli, C. J., Harding, T. S., Carpenter, D. D. & Mayhew, M. (2007). Academic integrity among engineering undergraduates: Seven years of research by the E^3 Team. Paper presented at 2007 American Society for Engineering Education annual conference & exposition, Honolulu, Hawaii. https://peer.asee.org/2805. Accessed 25 September 2019.

Genereux, R. L., & McLeod, B. A. (1995). Circumstances surrounding cheating: A questionnaire study of college students. Research in Higher Education, 36(6), 687–704.

Halgamuge, M. N. (2017). The use and analysis of anti-plagiarism software: Turnitin tool for formative assessment and feedback. Computer Applications in Engineering Education, 25(6), 895–909. https://doi.org/10.1002/cae.21842.

Harding, T. S., Carpenter, D. D., Finelli, C. J., & Passow, H. J. (2004). Does academic dishonesty relate to unethical behavior in professional practice? An exploratory study. Science and Engineering Ethics. https://doi.org/10.1007/s11948-004-0027-3.

Hu, J. (2001). The academic writing of Chinese graduate students in sciences and engineering: Processes and challenges (Doctoral thesis). http://hdl.handle.net/2429/12959. Accessed 25 September 2019.

International Center for Academic Integrity. (2014). The fundamental values of academic integrity (2nd ed.). https://academicintegrity.org/wp-content/uploads/2017/12/Fundamental-Values-2014.pdf. Accessed 25 September 2019.

iThenticate. (2018). About iThenticate plagiarism detection software. http://www.ithenticate.com/about. Accessed 25 September 2019.

Kaner, C., & Fiedler, R. L. (2008). A cautionary note on checking software engineering papers for plagiarism. IEEE Transactions on Education. https://doi.org/10.1109/TE.2007.909351.

Kennedy, I. G. (1992). Educating engineers in how to do research. In 3D Africon Conference. Africon ‘92 Proceedings, https://doi.org/10.1109/AFRCON.1992.624559.

Kloda, L., & Nicholson, K. (2005). Plagiarism detection software and academic integrity: The Canadian perspective. Paper presented at the Librarians’ Information Literacy Annual Conference, Imperial College, London, UK. http://hdl.handle.net/10760/7079. Accessed 20 September 2019.

McCabe, D. L. (1997). Classroom cheating among natural science and engineering majors. Science and Engineering Ethics. https://doi.org/10.1007/s11948-997-0046-y.

McCabe, D. L., & Treviño, L. K. (1996). What we know about cheating in college longitudinal trends and recent developments. Change: The Magazine of Higher Learning, 1(1), 28–33. https://doi.org/10.1080/00091383.1996.10544253.

McCabe, D. L., Butterfield, K. D., & Treviño, L. K. (2012). Cheating in college: Why students do it and what educators can do about it. JHU Press.

McGill University. (2013). Termination of Turnitin service. https://www.mcgill.ca/it/channels/news/termination-turnitin-service-227074.

McKenzie, A. M. (2018). Academic integrity across the Canadian landscape. Canadian Perspectives on Academic Integrity. https://doi.org/10.11575/cpai.v1i2.54599.g42964.

Miron, J. B. (2016). Academic integrity and senior nursing undergraduate clinical practice (Doctoral thesis). https://qspace.library.queensu.ca/handle/1974/14708. Accessed 25 September 2019.

Morris, E. J. (2016). Academic integrity: A teaching and learning approach. In T. Bretag (Ed.), Handbook of academic integrity (pp. 1037–1053). Singapore: Springer Singapore.

Oghigian, K., Rayner, M., & Chujo, K. (2015). A quantitative evaluation of Turnitin from an L2 science and engineering perspective. CALL-EJ, 17(1), 1–18.

Parameswaran, A., & Devi, P. (2006). Student plagiarism and faculty responsibility in undergraduate engineering labs. Higher Education Research and Development, 25(3), 263–276.

Price, M. (2002). Beyond “gotcha!”: Situating plagiarism in policy and pedagogy. College Composition and Communication, 54(1), 88–115. https://doi.org/10.2307/1512103.

Roncin, A. (2013). Thoughts on engineering ethics in Canada. In Proceedings of the 2013 Canadian Engineering Education Association Conference, https://doi.org/10.24908/pceea.v0i0.4909.

Simon. (2016). Academic integrity in non-text based disciplines. In T. Bretag (Ed.), Handbook of academic integrity (pp. 763–782). Singapore: Springer Singapore.

Smith, D. M., & Maw, S. (2018). Supplementary results of the CAIS-1 survey on cheating in undergraduate engineering programs in Saskatchewan. In Proceedings of the 2017 Canadian Engineering Education Association Conference, https://doi.org/10.24908/pceea.v0i0.10578.

Smith, D. M., Bens, S., Wagner, D., & Maw, S. (2017). A literature review on the culture of cheating in undergraduate engineering programs. In Proceedings of the 2016 Canadian Engineering Education Association Conference, https://doi.org/10.24908/pceea.v0i0.6536.

Statistics Canada. (2018a). Canadian postsecondary enrolments and graduates, 2016/2017. https://www150.statcan.gc.ca/n1/daily-quotidien/181128/dq181128c-eng.htm. Accessed 20 September 2019.

Statistics Canada. (2018b). Education indicators in Canada: An international perspective, 2018. https://www150.statcan.gc.ca/n1/en/pub/81-604-x/81-604-x2018001-eng.pdf. Accessed 20 September 2019.

Statistics Canada. (2019). Table 17-10-0005-01: Population estimates on July 1st, by age and sex. https://doi.org/10.25318/1710000501-eng.

Strawczynski, J. (2004). When students won’t Turnitin: An examination of the use of plagiarism prevention services in Canada. Education & Law Journal, 14(2), 167–190.

Taylor, K. L., Usick, B. L., & Paterson, B. L. (2004). Understanding plagiarism: The intersection of personal, pedagogical, institutional, and social contexts. Journal on Excellence in College Teaching, 15(3), 153–174.

Weber-Wulff, D. (2016). Plagiarism detection software: Promises, pitfalls, and practices. In T. Bretag (Ed.), Handbook of academic integrity (pp. 625–638). Singapore: Springer Singapore.

Wood, G. (2004). Academic original sin: Plagiarism, the internet, and librarians. The Journal of Academic Librarianship. https://doi.org/10.1016/j.acalib.2004.02.011.

Zaza, C., & McKenzie, A. (2018). Turnitin® use at a Canadian university. The Canadian Journal for the Scholarship of Teaching and Learning. https://doi.org/10.5206/cjsotl-rcacea.2018.2.4.

Acknowledgements

We wish to thank Dr. Tak Fung, University of Calgary, for his support with the statistical analysis of the data. We thank colleagues at the University of Calgary who reviewed early drafts of this paper, including Dr. Jenny Godley.

Availability of Data and Material

Due to constraints imposed by the institutional REB, we are not permitted to share the raw data in any form, including, but not limited to student data and individual similarity scores.

Funding

This project was funded by a University of Calgary Teaching and Learning Grant.

Author information

Contributions

Eaton, Yates, Behjat, Fear and Trifkovic conceptualized and designed the project. Eaton supervised the project and led the ethics application. Crossman collected and analyzed the data. All authors collaborated on the manuscript writing.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eaton, S.E., Crossman, K., Behjat, L. et al. An Institutional Self-Study of Text-Matching Software in a Canadian Graduate-Level Engineering Program. J Acad Ethics 18, 263–282 (2020). https://doi.org/10.1007/s10805-020-09367-0

DOI: https://doi.org/10.1007/s10805-020-09367-0