Abstract

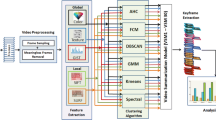

The rapid growth of video data demands video summarization methods that are both effective and efficient, so that users can quickly browse and comprehend large amounts of video content. Managing access to video content in real time is a formidable task when an enormous amount of recorded audiovisual data is generated every second. In this paper we propose an Eratosthenes Sieve based key-frame extraction approach for video summarization (VS) that is suited to real-time applications. The Eratosthenes Sieve is used to partition the frames of a video, up to its total of N frames, into a set of prime-numbered frames and a set of non-prime-numbered frames. The k-means clustering procedure is applied to these sets to extract the key-frames quickly. The challenge is to find the optimal number of clusters, which is achieved by employing the Davies-Bouldin Index (DBI). DBI is a cluster validation technique that lets users of this free-parameter-based VS approach choose the desired number of key-frames without incurring additional computational cost. Moreover, the proposed approach accommodates both local-perspective and global-perspective videos. Using the Eratosthenes Sieve substantially improves the performance of the clustering procedure. Qualitative and quantitative evaluations and a complexity analysis compare the performance of the proposed model with that of state-of-the-art models. Experimental results on two benchmark datasets with various types of videos show that the proposed method outperforms the state-of-the-art models on F-measure.
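The two building blocks named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes frames are numbered 1 to N, that each frame is already described by a numeric feature vector, and that Euclidean distance is used. The function names `split_frames_by_primality` and `davies_bouldin_index` are hypothetical, and the k-means step itself is omitted; the DBI function only scores a clustering that some other routine has produced.

```python
from math import isqrt, sqrt


def split_frames_by_primality(n_frames):
    """Sieve of Eratosthenes over frame numbers 1..n_frames (assumed 1-based).

    Returns (prime_numbered_frames, non_prime_numbered_frames).
    """
    if n_frames < 1:
        raise ValueError("n_frames must be at least 1")
    is_prime = [True] * (n_frames + 1)
    is_prime[0] = is_prime[1] = False  # 0 and 1 are not prime
    for p in range(2, isqrt(n_frames) + 1):
        if is_prime[p]:
            # Cross out every multiple of p, starting at p * p.
            for multiple in range(p * p, n_frames + 1, p):
                is_prime[multiple] = False
    primes = [i for i in range(1, n_frames + 1) if is_prime[i]]
    non_primes = [i for i in range(1, n_frames + 1) if not is_prime[i]]
    return primes, non_primes


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def centroid(points):
    """Component-wise mean of a non-empty list of feature vectors."""
    return [sum(column) / len(points) for column in zip(*points)]


def davies_bouldin_index(clusters):
    """Davies-Bouldin Index of a clustering; lower means better separation.

    `clusters` is a list of at least two non-empty clusters with distinct
    centroids, each cluster being a list of feature vectors.
    """
    centers = [centroid(c) for c in clusters]
    # Scatter of a cluster: mean distance of its points to its centroid.
    scatter = [
        sum(euclidean(p, m) for p in c) / len(c)
        for c, m in zip(clusters, centers)
    ]
    k = len(clusters)
    worst_ratios = [
        max(
            (scatter[i] + scatter[j]) / euclidean(centers[i], centers[j])
            for j in range(k)
            if j != i
        )
        for i in range(k)
    ]
    return sum(worst_ratios) / k
```

In a pipeline of the kind the abstract describes, one would presumably run k-means on each of the two frame sets for several candidate values of k and keep the k with the lowest DBI; that selection loop is an assumption here, not a detail given in the abstract.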

Cite this article

Kumar, K., Shrimankar, D.D. & Singh, N. Eratosthenes sieve based key-frame extraction technique for event summarization in videos. Multimed Tools Appl 77, 7383–7404 (2018). https://doi.org/10.1007/s11042-017-4642-9

DOI: https://doi.org/10.1007/s11042-017-4642-9