Abstract

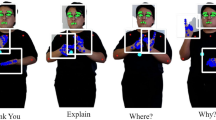

Machines cannot easily understand and interpret three-dimensional (3D) data. In this study, we propose the use of graph matching (GM) on 3D motion capture data for Indian sign language recognition. The classification and recognition of 3D motion signs is treated as an adaptive GM (AGM) problem. However, current models for solving the AGM problem have two major drawbacks. First, spatial matching can be performed only on a fixed set of frames with a fixed number of nodes. Second, temporal matching divides the entire 3D dataset into a fixed number of pyramids. The proposed approach addresses these problems by employing interframe GM for spatial matching and multiple intraframe GM for temporal matching. To test the proposed model, we created a 3D sign language dataset of 200 continuous sign language sentences using a motion capture setup with eight cameras. The method is also validated on the 3D motion capture benchmark action datasets HDM05 and CMU. The results show that our approach increases the accuracy of recognizing signs in continuous sentences.
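To make the idea of matching motion capture frames as graphs concrete, the following is a minimal sketch and not the method of this paper. It assumes each frame is a list of labelled 3D joint positions in a fixed order, builds a complete graph per frame with pairwise joint distances as edge weights, and compares two frame graphs by their mean absolute edge difference. To compare sequences with different numbers of frames, it uses dynamic time warping as a simple stand-in for the adaptive temporal matching described above. All function names (`frame_graph`, `graph_distance`, `sequence_distance`) are hypothetical.

```python
import math


def frame_graph(joints):
    """Build the edge-weight matrix of one frame.

    joints: list of (x, y, z) tuples, one per marker, in a fixed order.
    Returns an n x n matrix of pairwise Euclidean distances.
    """
    n = len(joints)
    return [[math.dist(joints[i], joints[j]) for j in range(n)] for i in range(n)]


def graph_distance(g1, g2):
    """Mean absolute difference of edge weights between two frame graphs.

    Assumption: both graphs have the same nodes in the same order, which
    holds for labelled motion capture markers but not for general graphs.
    """
    n = len(g1)
    if n != len(g2):
        raise ValueError("graphs must have the same number of nodes")
    if n < 2:
        return 0.0
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            total += abs(g1[i][j] - g2[i][j])
    return total / (n * (n - 1) / 2)


def sequence_distance(seq_a, seq_b):
    """Align two frame sequences of possibly different lengths.

    Uses dynamic time warping over per-frame graph distances. This is an
    illustrative substitute, not the temporal matching of the paper.
    """
    graphs_a = [frame_graph(f) for f in seq_a]
    graphs_b = [frame_graph(f) for f in seq_b]
    rows, cols = len(graphs_a), len(graphs_b)
    cost = [[math.inf] * (cols + 1) for _ in range(rows + 1)]
    cost[0][0] = 0.0
    for i in range(1, rows + 1):
        for j in range(1, cols + 1):
            d = graph_distance(graphs_a[i - 1], graphs_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[rows][cols]


# Toy usage with three joints per frame (made-up coordinates).
pose_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
pose_b = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
same_sign_slower = sequence_distance([pose_a, pose_b], [pose_a, pose_a, pose_b])
different_sign = sequence_distance([pose_a, pose_a], [pose_b, pose_b])
```

Because the edge weights are distances between joints, the frame graph does not change when the signer is translated in space, and the warping step lets a sequence with a held or repeated pose still align at zero cost with its faster counterpart.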

Acknowledgements

This work was supported in part by the research project scheme titled “Visual – Verbal Machine Interpreter Fostering Hearing Impaired and Elderly”, by the “Technology Interventions for Disabled and Elderly” programme of the Department of Science and Technology, SEED Division, Govt. of India, Ministry of Science and Technology under Grant SEED/TIDE/013/2014(G).

Cite this article

Kumar, D.A., Sastry, A.S.C.S., Kishore, P.V.V. et al. Indian sign language recognition using graph matching on 3D motion captured signs. Multimed Tools Appl 77, 32063–32091 (2018). https://doi.org/10.1007/s11042-018-6199-7

DOI: https://doi.org/10.1007/s11042-018-6199-7