Abstract

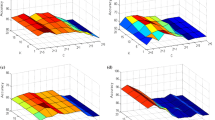

Real-world (RW) datasets are usually imbalanced, i.e., the numbers of negative and positive class samples differ significantly. As a result, most conventional classifiers perform poorly on RW classification problems. To handle class imbalance in RW datasets, this paper presents a novel density-weighted twin support vector machine (DWTWSVM) for binary class imbalance learning (CIL). Further, to improve the computational speed of DWTWSVM, a density-weighted least squares twin support vector machine (DWLSTSVM) is also proposed for the CIL problem, in which the optimization problem is solved by considering equality constraints and the 2-norm of the slack variables. The key idea behind both models is that, during training, the data points are given weights based on their importance, i.e., the majority class samples are given more importance than the minority class samples. Simulations are carried out on a synthetic imbalanced dataset and several real-world imbalanced datasets. The performance of the proposed DWTWSVM and DWLSTSVM, in terms of F1-score, G-mean, recall and precision, is compared with that of the support vector machine (SVM), twin SVM (TWSVM), least squares TWSVM (LSTWSVM), fuzzy TWSVM (FTWSVM), improved fuzzy least squares TWSVM (IFLSTWSVM) and density-weighted SVM for binary CIL. Finally, a statistical study based on F1-score and G-mean on the RW datasets is carried out to verify the efficacy and usability of the proposed models.
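To make the evaluation measures named above concrete, the following minimal Python sketch computes precision, recall, F1-score and G-mean from binary predictions. It assumes the common definitions (G-mean as the geometric mean of the true-positive rate and the true-negative rate) and treats the minority class as the positive label; the function name `imbalance_metrics` and the toy labels are illustrative assumptions and are not taken from the authors' MATLAB code.

```python
import math


def imbalance_metrics(y_true, y_pred, positive=1):
    """Return precision, recall, F1-score and G-mean for binary labels.

    Assumption: `positive` marks the minority class. Ratios with a zero
    denominator are reported as 0.0 (a convention chosen for this sketch).
    """
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1  # minority sample correctly detected
            else:
                fp += 1  # majority sample flagged as minority
        else:
            if t == positive:
                fn += 1  # minority sample missed
            else:
                tn += 1  # majority sample correctly rejected

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0        # true-positive rate
    specificity = tn / (tn + fp) if (tn + fp) else 0.0   # true-negative rate
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    g_mean = math.sqrt(recall * specificity)

    return {"precision": precision, "recall": recall,
            "f1": f1, "g_mean": g_mean}


# Toy imbalanced example: 2 positive and 8 negative samples.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
print(imbalance_metrics(y_true, y_pred))
```

In this toy example, 8 of the 10 predictions are correct, yet only one of the two minority samples is detected, so recall is 0.5. F1-score and G-mean both depend on minority-class recall, so they reflect that weakness in a way plain accuracy does not, which is presumably why such measures are preferred for CIL.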




Ethics declarations

Conflict of interest

The authors declare no conflict of interest. The MATLAB code for the proposed models is available at: https://github.com/Barenya/densitycode


Cite this article

Hazarika, B.B., Gupta, D. Density Weighted Twin Support Vector Machines for Binary Class Imbalance Learning. Neural Process Lett 54, 1091–1130 (2022). https://doi.org/10.1007/s11063-021-10671-y


DOI: https://doi.org/10.1007/s11063-021-10671-y