Abstract

In this work, we advocate using Bayesian techniques for inversely identifying material parameters for multiscale crystal plasticity models. Multiscale approaches for modeling polycrystalline materials may significantly reduce the effort necessary for characterizing such material models experimentally, in particular when a large number of cycles is considered, as typical for fatigue applications. Even when appropriate microstructures and microscopic material models are identified, calibrating the individual parameters of the model to some experimental data is necessary for industrial use, and the task is formidable as even a single simulation run is time consuming (although less expensive than a corresponding experiment). For solving this problem, we investigate Gaussian process based Bayesian optimization, which iteratively builds up and improves a surrogate model of the objective function, at the same time accounting for uncertainties encountered during the optimization process. We describe the approach in detail, calibrating the material parameters of a high-strength steel as an application. We demonstrate that the proposed method improves upon comparable approaches based on an evolutionary algorithm and performing derivative-free methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

To reduce the tremendous effort involved in experimentally characterizing the (high-cycle) fatigue properties of components made from polycrystalline materials (Mughrabi et al. 1981), complementary analytical (Stephens et al. 2000) and computational strategies (McDowell 1996; Sangid 2013) are sought. To produce predictions with high quality, the heterogeneity of polycrystalline materials on a lower length scale needs to be accounted for Kasemer et al. (2020). Multiscale models based on crystal plasticity (CP) (Roters et al. 2010; Hochhalter et al. 2020) provide the missing link between polycrystalline microstructures, e.g., based on real 3D image data (Diehl et al. 2017), furnished with comparatively simple single-crystal material models (Lian et al. 2019; Wulfinghoff et al. 2013) and the ensuing macroscopic material properties.

For modeling the response to cyclic loading accurately, the Bauschinger effect, which concerns a decrease of the resistance to plastic deformation also when a change in loading directions, for instance from tension to compression, takes place, needs to be accounted for Cruzado et al. (2020). Also, the ratcheting behavior, i.e., the consistent accumulation of plastic slip under stress-driven cyclic loading conditions, is necessary to consider (Farooq et al. 2020). Then, simulations for lifetime predictions may be set up (McDowell and Dunne 2010; Schäfer et al. 2019), often based on kinematic hardening models (Gillner and Münstermann 2017), also considering thermal activation (Xie et al. 2004), atmospheric conditions (Arnaudov et al. 2020) or crack initiation (Anahid et al. 2011) and crack growth (Dunne et al. 2007).

To be of practical use, the involved material parameters on the microscopic scale need to be identified, based on experiments which require less effort than component-scale testing. Dedicated approaches include micro-tensile experiments (Gianola and Eberl 2009), ultrasonic testing (Huntington 1947) or high-energy x-ray diffraction (Dawson et al. 2018). Also, nano-indentation (Liu et al. 2020; Chakraborty and Eisenlohr 2017; Engels et al. 2019) or milled micropillars (Cruzado et al. 2015) may be used for calibrating the material parameters for the single crystal model, also taking into account the strain pole-figures (Wielewski et al. (2017).

Alternatively, the material parameters of the single crystal may be identified by comparing polycrystalline (macroscopic) experiments to simulations on representative volume elements (RVEs) (Shenoy et al. 2008), for instance based on static experiments (Herrera-Solaz et al. 2014; Kim et al. 2017) or in relation to hysteresis data (Cruzado et al. 2017). Such inverse approaches were also put forward for open-cell metal foams (Bleistein et al. 2020) and in the context of noisy thermal measurements (Sawaf et al. 1995).

For identifying the CP parameters from single-crystal measurements, a number of rather classical strategies, like the simplex method (Chakraborty and Eisenlohr 2017) or the Nelder-Mead algorithm (Engels et al. 2019), were reported to be effective. Another prevalent method to calibrate crystal plasticity parameters is the use of genetic algorithms (Prithivirajan and Sangid 2018; Kapoor et al. 2021; Bandyopadhyay et al. 2020; Guery et al. 2016; Pagan et al. 2017). Although these methods are quite powerful, they can be limited when only polycrystalline experiments are at hand and evaluating the quality of a single parameter set involves significant computational cost. Indeed, in general, a full-field simulation needs to be conducted for the latter scenario.

To overcome this intrinsic difficulty, inverse optimization techniques which build up a surrogate model (Zhou et al. 2006; Sedighiani et al. 2020) of the objective function turn out to be useful. Such a surrogate model serves as an approximation of the true objective function, but is designed in such a way that classical optimization techniques (Nocedal and Wright 1999) apply.

Surrogate modeling coupled to an evolutionary algorithm was shown to be effective in shape optimization (Zhou et al. 2006) and for the optimum design of components (Hsiao et al., (2020), see Forrester and Keane (2009) for an overview in the context of aerospace design.

The Bayesian approach to optimization (Mockus 1989) augments surrogate models by accounting for uncertainty during the optimization process. Indeed, suppose that the objective function has already been evaluated at a finite number of points. Then, values of the objective function are known exactly at these points. Apart from these points, the function is unknown, and it is necessary to balance exploration, i.e., inspecting yet undiscovered areas, and exploitation, i.e., seeking to improve the objective function in the vicinity of the currently best spot. In the Gaussian process approach, Bayesian optimization builds up a surrogate model by furnishing each point in the feasible set by a mean value and a standard deviation. The mean value may be thought of as an estimate of the objective function, and the standard deviation quantifies the uncertainty of this objective value at this specific location. From the knowledge of these parameters, Bayesian optimization builds up an objective function which automatically adjusts a trade-off between exploration and exploitation. The Bayesian approach to optimization was reported to be very successful in material design (Snoek et al. 2012; Klein et al. 2017), see (Frazier and Wang 2016) for a recent overview.

In this work, we propose using Bayesian optimization with Gaussian processes for calibrating single crystal parameters inversely based on polycrystalline experiments. We improve upon related research (Schäfer et al. 2019) by assessing the capabilities of our proposed framework by comparing it to a number of extremely powerful methods, including a genetic algorithm and modern derivative-free optimization schemes. This work is structured as follows. We recall the used small-strain crystal plasticity models in Sect. 2.1 and expose Gaussian-process based Bayesian optimization in Sect. 2.2. We investigate the performance of the proposed approach in Sect. 3, carefully studying the optimal algorithmic parameters, comparing to state-of-the-art genetic algorithms (Schäfer et al. 2019) as well as powerful derivative-free optimization methods (Rios and Sahinidis 2013; Huyer and Neumaier 1999; 2008) and demonstrating the applicability of the method to large-scale polycrystalline microstructures.

2 Background on modeling and optimization

2.1 Considered crystal plasticity models

With the fatigue behavior of polycrystalline materials in mind, we will use a material model at small-strains, closely following Schäfer et al. (2019). We assume the total strain tensor \(\varepsilon\) to be decomposed additively

into an elastic and a plastic contribution, denoted by \(\varepsilon _e\) and \(\varepsilon _p\), respectively. We refer to Simo-Hughes (Simo and Hughes 2006, Chap.1) for details. The Cauchy stress tensor \(\sigma\), which is symmetric by conservation of angular momentum (Haupt 2013), is assumed to be linear in the elastic strain, i.e., Hooke’s law

involving the fourth-order stiffness tensor \({\mathbb {C}}\), is assumed to hold. For elasto-viscoplasticity, the evolution of the plastic strain \(\varepsilon _p\) is typically governed by a flow rule of the form

where z denotes a vector of additional internal variables, see Simo-Hughes (2006, Chap. 2).

In the framework of crystal plasticity it is assumed that plastic deformation, i.e., \({\dot{\varepsilon }}_p\not = 0\), is caused by the movement of dislocations on the corresponding crystallographic slip systems. More precisely, a slip system \(\alpha\), characterized by the two orthogonal vectors \(m^\alpha\) and \(n^\alpha\) encoding the slip direction and slip plane normal, respectively, is activated when the resolved shear stress

reaches a critical value \(\tau _c\). Here, \(M^\alpha\) denotes the symmetrized Schmid tensor of the slip system \(\alpha\)

Following (Bishop 1953; Hutchinson 1976), the flow rule (2.3) is formulated as the superposition of crystallographic slips on all the considered slip systems \(N_S\)

where \({\dot{\gamma }}^\alpha\) denotes the plastic slip rate for the \(\alpha\)-th system. To determine the amount of plastic slip on a slip system \(\alpha\), the theory of elasto-viscoplasticity assumes the plastic slip rate \({\dot{\gamma }}^\alpha\) to be a function of the resolved shear stress. The flow rule is designed in such a way that it captures both the Bauschinger effect and ratcheting behavior, which are necessary for describing the cyclic behavior of metals accurately. The Bauschinger effect concerns a decreased resistance to plastic deformation also when a change of loading directions (e.g., from tension to compression) occurs. Ratcheting refers to consistent accumulation of plastic slip under stress-driven cyclic loading conditions. Please note that the loading levels which lead to ratcheting under repeated loading will not induce a plastic flow of the material under static loading conditions, in general. For ratcheting and strain-driven loading, the mean stress will decrease for increasing number of cycles, see Farooq et al. (2020) and Cruzado et al. (2020) for recent discussions of ratcheting and the Bauschinger effect, respectively.

In this work, the flow rule proposed by Hutchingson (1976)

is used. The symbol \(\tau ^\alpha _c\) denotes the critical resolved shear stress and c refers to the stress exponent. To capture both the Bauschinger effect and ratcheting behavior, the original flow rule is augmented by the backstress \({\mathcal {X}}^\alpha _b\), as proposed by Cailletaud (1992). Alternative approaches for modeling these effects are discussed by Harder (2001) with special focus on the coupling of the backstress evolution on different slip systems and the existence of the backstress tensor. Please note that the vector z of internal variables in equation (2.3) collects the different backstresses \({\mathcal {X}}^\alpha _b\).

To close the model, the flow rule needs to be complemented by an evolution equation for the backstress \({\mathcal {X}}^\alpha _b\). The Armstrong-Frederick (AF) model (Frederick and Armstrong 2007) is a nonlinear extension of the model by Prager (1949) and involves a recall term. The model describes the evolution of the backstress by the equation

where A and B are material parameters with dimensions MPa and 1, respectively. These parameters represent the direct hardening as formulated in the Prager model and the recovery modulus present in the extended model, respectively. Bari and Hassan (2000) showed that the AF model may overestimate ratcheting encountered in experiments. Furthermore, they attribute this shortcoming to the constant ratcheting associated to the model.

Extending a formulation by Chaboche, which is based on a decomposition of the AF model, Ohno and Wang (1993) proposed a kinematic hardening model that reads, in the context of crystal plasticity,

In the Ohno-Wang (OW) model, the dynamic recovery term is modified by a power law with exponent M, which controls the degree of non-linearity, to improve the prediction of ratcheting and mean stress relaxation.

2.2 Bayesian optimization

Bayesian optimization (BO) is an approach for solving optimization problems where the objective function is expensive to compute and the gradient of the objective function is not available. Such optimization problems occur, for instance, when evaluating the function corresponds to running a (possibly large-scale) simulation, as is common in virtual crash tests (Raponi et al. 2019) or for complex chemical reactions (Häse et al. 2018). Such problems might also be approached by automatic differentiation (Griewank and Walther 2008), which might prove difficult to integrate into an existing simulation code, or by evolutionary algorithms, like particle swarm algorithms (Blum and Merkle 2008). However, the latter are typically limited to small spatial dimensions and inexpensive function evaluations.

In contrast, Bayesian optimization constructs a surrogate model of the optimization problem based on probabilistic ideas, accounting for uncertainty by Bayesian statistics. We restrict to Gaussian process regression as our uncertainty model. For other approaches we refer to Shah et al. (2014) and Kushner (1964).

Suppose that we are concerned with the optimization problem

where \(A\subseteq \mathbb {R}^d\) is a non-empty set in d spatial dimensions whose membership is easily tested (for instance, a box). For (Gaussian process based) Bayesian optimization, we need to specify:

-

1.

A mean function \(m: A \rightarrow \mathbb {R}\).

-

2.

A kernel (or covariance) function \(K: A\times A \rightarrow \mathbb {R}\).

-

3.

An acquisition function \(u_{f^*,\mu ,\sigma }: A \rightarrow \mathbb {R}\), depending on a value \(f^* \in \mathbb {R}\) and two functions

$$\begin{aligned} \mu :A \rightarrow \mathbb {R}\quad \text {and} \quad \sigma :A \rightarrow \mathbb {R}_{\ge 0}, \end{aligned}$$which we will refer to as (the local estimates of) the mean and standard deviations of the function values at each \(x \in A\).

There are specific prerequisites for sensible kernel functions. We refer to Sect. 4 in Williams and Rasmussen (2006) for details, and will discuss our choice below.

For given m, K and u, Bayesian optimization proceeds as follows. Suppose that the objective function f was evaluated at n points \(x_1,\ldots ,x_n\) in A already, i.e., the values \(f(x_1), \ldots , f(x_n)\) are known. In the Bayesian approach, it is assumed that the vector

was drawn at random from a probability distribution. In Gaussian process regression (Mockus 1994), the latter vector is assumed to follow a multivariate normal distribution with mean \(\mu _n \in \mathbb {R}^n\) and \(\Sigma _n \in \mathbb {R}^{n \times n}\) given by

To infer the value f(x) at some new point we assume that the joint distribution of the values of f over \((x_1,\ldots ,x_n,x)\) is also governed by a multivariate normal distribution with mean \(\mu _{n+1}\) and \(\Sigma _{n+1}\) for \(x_{n+1} = x\), as prescribed in equation (2.11). Using Bayes’ rule, the conditional distribution of f(x) is also Gaussian with mean

and variance

where

see Sect. 2 in Williams and Rasmussen (2006). Provided \(\Sigma _n\) is non-degenerate, and as \(K_n(x_i)\) corresponds to the i-th row of \(\Sigma _n\) for \(i=1,\ldots ,n\), we immediately see that

hold, i.e., the function \(\mu\) interpolates the known values \(f(x_i)\), and the corresponding standard deviation \(\sigma (x_i)\) vanishes. Furthermore, \(\mu (x)\) serves as an estimate for the function value f(x) at any potentially newly sampled point \(x \in A\).

In this work, we set the mean function identical to zero, \(m(x) \equiv 0\). As kernel function we consider the anisotropic ”Matern5/2” kernel (Matérn 2013)

in terms of a positive parameter \(\sigma ^2_k\) and an anisotropic distance function \(\delta\)

involving dilation parameters \(\ell _d\) for each of the dimensions \(d=1\ldots D\) of the vector x. Indeed, we cannot justify using the squared exponential kernel (Stein 2012) due to the lack of sufficient smoothness of our objective function f. For an overview of alternative kernel functions, we refer to chapter 4 in Williams and Rasmussen (2006). The dilation parameters of the distance function \(\delta\) may be interpreted as prefactors used for a nondimensionalization of the variables. To determine the dilation parameters and \(\sigma ^2_k\), a maximum likelihood estimation may be used, see chapter 6 in Bishop (2006). For each new observation the Gaussian process regression is updated, i.e., the parameters of the kernel function (2.14) are determined with respect to all previously made observations, continuously improving the model of f.

We restricted to discussing Gaussian processes for our noiseless application of Bayesian optimization and refer to (Williams and Rasmussen 2006; Bishop 2006) for a more general discussion.

As the next step, BO searches for an improved guess for the solution x of the optimization problem (2.10). A possible approach would be to ignore the estimated statistics and to minimize \(\mu\), exploiting the currently estimated objective values. However, this approach disregards the uncertainty. Indeed, it might happen that the optimum \(x^*\) is located in regions of high uncertainty. Thus, it might be better to explore first.

Acquisition functions provide a suitable exploration-exploitation trade-off by combining the currently known means and variances into a single function to be optimized.

Denote by \(f^*_n\) the lowest objective value observed so far

Plugging \(f^*_n\) and the functions \(\mu\) and \(\sigma\) above into the acquisition function gives rise to the surrogate optimization problem

In contrast to the original optimization problem (2.10), the acquisition function is cheap to evaluate and gradient information is available. Also, the acquisition function provides a trade-off between exploration, i.e., decreasing the uncertainty, and exploitation, i.e., searching in the vicinity of sites with small objective values. Thus, the next point to investigate is selected by maximizing the acquisition function

Recall that confidence intervals of a normally distributed random variable with mean \(\mu\) and standard deviation \(\sigma\) have the form

where \(\xi\) is a parameter which determines the probability that a measurement lies in this confidence interval. For instance, a two-sided confidence interval with \(95\%\) probability is obtained for \(\xi \approx 1.96\).

For the lower confidence-bound acquisition-function (\(u_{LCB}\)) (Cox and John 1992), the lower bound of the confidence interval is chosen as the proxy for the objective function f, i.e.,

is considered with \(\mu (x)\) and \(\sigma (x)\) given by Equations (2.12) and (2.13), respectively. This acquisition function tries to improve the currently known least lower bound on f.

The quantity \(\xi\) may be chosen as a tuneable parameter. Indeed, for small \(\xi\), the acquisition function tends to select points with low mean \(\mu (x)\). In contrast, high values of \(\xi\) encourage the algorithm to choose points where the variance, i.e., the uncertainty, is high. We restrict to a fixed value for \(\xi\) and refer to Srinivas et al. (2010) for an adaptive choice of \(\xi\).

An alternative strategy, proposed by Mockus et al. (1978) and made popular by Jones et al. (1998), considers the improvement

where \(\langle \cdot \rangle _+ = \max (0,\cdot )\) denotes the Macaulay bracket. If \(f\ge f^*_n\), there will be no improvement. For lower values of f, the improvement \({\mathcal {I}}(f)\) increases linearly. Taking the expectation of the improvement (2.17) w.r.t. the normal distribution determined from \(\mu (x)\) and \(\sigma (x)\) gives rise to the expected improvement \(u_{EI}(x)\), which may be expressed analytically in the form

where \(\Delta _n(x) = f^*_n - \mu (x)\) and \(\phi\) as well as \(\Phi\) denote the standard normal density and distribution function with mean \(\mu (x)\) and standard deviation \(\sigma (x)\), see (Jones et al. 1998; Lizotte 2008) proposed a shift of equation (2.18)

by a parameter \(\xi\). This enables controlling the trade-off between exploitation and exploration and is similar to the parameter used in \(u_{LCB}\), see equation (2.16).

The discussed acquisition functions are inexpensive to evaluate, and gradient data is available. Thus, in addition to zeroth order optimization methods, for instance Monte Carlo methods (Wilson et al. 2018) or DIRECT (Brochu et al. 2010), gradient-based solvers like BFGS (Frazier and Wang 2016; Wang et al. (2018; Picheny et al. (2016) may be used.

Still, finding the global optimum of the acquisition function may be non-trivial. Indeed, even if the original function f was convex, the surrogate problem need not be concave. For instance, the expected improvement acquisition function (2.18) has local maxima at all previously explored points \(x_1,\ldots ,x_n\).

Our workflow for maximizing the acquisition function works as follows. First, we sample the acquisition function \(u_{f^*_n,\mu ,\sigma }\) at 300 points. As our domain of interest is a box, we rely upon the first 300 points of the corresponding Sobol sequence (Sobol 1967). Then, we select those ten points with the highest acquisition function values, and run BFGS with the selected point as initial point. Finally, we select the maximum value obtained during those BFGS runs.

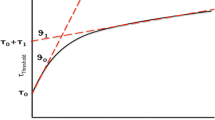

The employed Bayesian optimization workflow is summarized in Fig. 1. Please note that we rely upon a preliminary Latin-hypercube sampling (McKay et al. 2000) to sample the first ten points within the domain of interest A.

3 Computational investigations

3.1 Setup

We wish to identify material parameters for the quenched and tempered high-strength steel 50CrMo4, as investigated by Schäfer et al. (2019). The material features a martensitic microstructure and a hardness of 39 HRC. To determine the crystal plasticity parameters of this material, macroscopic strain-driven low-cycle fatigue (LCF) experiments at different strain amplitudes \(\varepsilon _a\) were performed, see Schäfer et al. (2019) for details on the experimental procedure.

The constitutive material model described in Sect. 2.1 was implemented into a user defined material subroutine (UMAT) within the commercial finite element solver ABAQUS (Dassault Systèmes 2018). To thoroughly test the optimization framework, we restrict to the simple microstructure shown in Fig. 2, consisting of \(8\times 8\times 8\) grains, each discretized by \(2^3\) elements. The different colors in Fig. 2 represent distinct, randomly sampled, orientations. Periodic boundary conditions were used, following the procedure proposed by Smit et al. (1998). The microscopic material parameters we wish to identify are the following.

-

1.

The critical resolved shear stress \(\tau _c\) (2.7), which we assume to be identical for all slip systems, i.e., \(\tau _c^\alpha = \tau _c\) holds for all \(\alpha\).

-

2.

The parameters of the Armstrong-Frederick or the Ohno-Wang kinematic hardening model A and B, see formulas (2.8) and (2.9).

The remaining model parameters are taken from the literature according to Schäfer et al. (2019), see Table 1. Please note that we restrict to slip systems in the lath-plane, as these are primarily activated according to Michiuchi et al. (2009). We collect the three parameters we wish to determine into a single three-component vector

which we would like to identify.

Following Herrera-Solaz et al. (2014) and Schäfer et al. (2019), we consider the objective function

quantifying the difference between a stress-strain hysteresis of low-cycle fatigue (LCF) experiments and the corresponding macroscopic stress-strain hysteresis of a micromechanical simulation. Here, S denotes the number of time steps, \(\sigma _{y}^\text {exp}\) refers to the experimentally measured stress in loading direction and \(\sigma _{y}^\text {sim}\) stands for the homogenized stress in loading direction.

Following the experiments, we prescribe the macroscopic loading for the simulations to follow a triangular waveform in y-direction, see Fig. 2. In total, we simulate two cycles, where the each cycle lasts four seconds, and the second cycle serves as our simulation output. We use time increments of 0.01 seconds for computing the homogenized stress response, i.e., \(S=400\) is used in this work, and we will discuss the rationale behind this choice below. Please note that we disregard strain-rate dependency of the considered material due to the cyclic stable behavior, see Schäfer et al. (2019). In this work, we wish to minimize the mean of the error for H different hystereses, i.e., we consider

Here, the term \(f^h(x)\) corresponds to equation (3.2) and a fixed strain amplitude. In this article, we use the experimental data for the strain amplitudes \(\varepsilon _a = 0.35\%\), \(\varepsilon _a = 0.60\%\) and \(\varepsilon _a = 0.90\%\), i.e., \(H=3\). The corresponding hystereses at half of the lifetime are shown in Fig. 3.

Polycrystalline stress-strain hystereses of the martensitic 50CrMo4 steel at half of the lifetime used for the inverse optimization problem obtained by Schäfer et al. (2019)

For a start, we study the necessary time-step size \(\triangle t\). Indeed, exceedingly small time steps increase the computational cost, whereas too large time steps distort the computational results. We consider a very small time step of \(\triangle t=0.0001\) s as our reference, and investigate the relative deviation of the error function (3.3) for time steps of increasing size, see Table 2. All simulations were carried out for the Armstrong-Frederick kinematic hardening model with the material parameters found by Schäfer et al. (2019). Based on these results, we select the time step \(\triangle t = 0.01\) s, as we consider the associated deviation of \(1.26\%\) in the error function negligible.

For the parameter identification in a Bayesian optimization framework, diagrammatically represented in Fig. 1, we consider a two-stage process. First, we start with running BO on a large box in parameter space, to obtain a rough estimate for the parameters. Based on these results, a second optimization with tighter boundaries is carried out for fine-tuning the parameters. Both steps are described in further detail in the following subsections.

We use the GPy implementation (GPy 2012) as a Gaussian-process regressor for the method described in Sect. 2.2. For the acquisition functions and the associated optimization we used an implementation by the Bosch Center for Artificial Intelligence (BCAI) based on Python 3.7, numpy (van der Walt et al. 2011) and scipy (Virtanen et al. 2020).

We use the maximum number of function evaluations as a stopping criterion within the BO framework. We set this value to 160, involving ten random initialization points and 150 BO steps, unless otherwise specified. For the evolutionary algorithms, included as a benchmark, we use the commercial software optiSLang (Dynardo, optiSLang, Version: 3.3.5. [Online]. Available: https://www.dynardo.de/software/optislang.html.).

3.2 Parameter identification with a reasonably large search space

For this setting, the search space is given by the parameter bounds listed in Table 3. We initialize Bayesian optimization with ten points from a Latin-Hypercube sampling. The ”Matern5/2” kernel (2.14) is chosen as a kernel function. The lower confidence-bound acquisition-function \(u_{LCB}\) (2.16) is employed with an exploration margin of \(\xi =2.0\). Thus, over-exploitation and getting stuck in a local maximum are avoided. The rationale for choosing this specific acquisition function and exploration margin, is discussed in Sect. 3.3.

First, we investigate the parameter optimization for the AF model and investigate the smallest error, i.e., the smallest value of f,

versus function evaluations N. Fig. 4a shows this quantity for a single BO run using a maximum number of function evaluations set to 400. Objective values above 80.0 MPa were cut off, as these are obtained during the first ten function evaluations in the Latin-Hypercube sampling.

Within this single run, BO reaches a minimum value of 48.80 MPa after 171 function evaluations. However, a comparable error of 49.9 MPa is reached after 81 evaluations.

BO reaches a strictly positive objective value. This indicates that the material model described in Sect. 2.1 may only approximate the experimentally determined stress-strain hystereses with a non-vanishing error. This effect may be systematic or result from stochastic fluctuations certainly present for the different experimentally determined hystereses.

The Bayesian optimization framework relies upon randomness both for the initialization and the optimization of the acquisition function. To evaluate the influence of this randomness, we restarted Bayesian optimization ten times. Figure 4b shows the mean of ten BO runs in terms of the best error encountered versus number of function evaluations. Additionally, we computed the \(95\%\) confidence interval (\(95\%\) CI) using an unbiased population formula for the standard deviation and Student’s t-distribution (Student 1908). The bounds of the confidence interval are indicated by shaded areas centered at the mean value.

Please note that the number of evaluations used by BO is limited to 160, including those used for the initialization. BO reaches a mean error of 47.83 MPa after the allowed 160 function evaluations with a \(95\%\) confidence interval of [46.40 MPa, 48.74 MPa]. A comparable mean error of 48.78 MPa and a \(95\%\) confidence interval [47.10, MPa, 48.76 MPa] is reached after 121 function evaluations, corresponding to 111 BO steps excluding evaluations used for initialization.

Thus, we observe that repeating the BO process leads, after a sufficient number of iterations, to rather similar results as for a single run. In addition to the AF model, we investigate the Ohno-Wang model in this work to demonstrate the general robustness and reliability of Bayesian optimization in this setting. The result for one BO run with a maximum number of 400 evaluations is shown in Fig. 5a.

Qualitatively, similar conclusions may be drawn as for the AF model. To address the issue of randomness we use ten BO runs with different initialization and evaluate the results, see Fig. 5b. The observed behavior parallels the optimization for the AF model, where the mean error improves significantly within the first 100 function evaluations and stagnates afterwards. An important difference to the AF model are the final mean and the confidence bounds. For all considered optimization runs the mean best error is 43.94 MPa, which is lower than the mean reached using AF. The \(95\%\) confidence interval is computed as [42.71 MPa, 45.17 MPa]. The broader \(95\%\) confidence interval for the OW model compared to the one obtained for the AF-model optimization indicates a higher dispersion of BO for the OW model, i.e., a higher influence of the initial sampling.

To gain insight into the shape of the energy landscape for the considered black-box function, we compute the difference between two consecutive minimum values of \(f^*\) at the j-th function evaluation

as well as the Euclidean distance between a parameter set \(x_i\) (see equation (3.1)) and the previous vector \(x_{i-1}\). For proper dimensioning, we normalize each distance parameter-wise by the parameters \(x_j^*\), for which a minimum objective value was found, see Table 4, via

Here, D denotes the number of dimensions of the input vector (3.1), i.e., \(D=3\) for this work. Let us denote by

the averaged objective values and mean distances over R total optimization runs at the j-th function evaluation. First, we consider the Armstrong-Frederick kinematic hardening model, see Fig. 6a. The first ten function evaluations, corresponding to sampling of the given parameter space, allow the algorithm to find a promising starting point. Similar to Fig. 4b, for the first ten steps, the mean change in best error (encountered so far) is very high. After these initial steps, BO reduces the mean parameter distance while navigating towards a smaller error, i.e., the already gained knowledge about f in the vicinity of an encountered (local) minimum is exploited and the uncertainty associated to this region is reduced in the process. Thereafter, it becomes more attractive to sample points with a higher degree of uncertainty, encoded by a higher variance. This exploration phase is indicated by the mean parameter distance oscillating around a fixed value at about 50 -112 function evaluations. The error is reduced frequently by small margins, up to about 112 function evaluations. After that, both the mean parameter distance and the mean difference in best error decrease further, as no further improvement of the objective value is made, see also Fig. 4b.

After about 120 function evaluations, corresponding to 110 BO steps, the exploitation tendency of the considered acquisition function is apparent. Despite decreasing the mean parameter distance,i.e., exploiting the already gained knowledge about the function, the best error is not decreased significantly. Indeed, the used acquisition function \(u_{LCB}\) comes with an intrinsic trade-off between exploration and exploitation by sampling the point where the negative mean minus the variance (multiplied by \(\xi\)) is largest.

For the Ohno-Wang kinematic hardening model, the considered metrics are shown in Fig. 6b. Sampling the parameter space reduces the encountered best error significantly, as we have seen for the Armstrong-Frederick model. Compared to the AF model, shown in 6a, the mean relative parameter distance is smaller. This indicates a higher degree of smoothness in the objective function.

After the initialization phase, the error is reduced, exploiting the already gained knowledge of the objective function f. The mean parameter distance decreases, i.e., neighboring points are being evaluated. After 50 function evaluations, the improvement of the best error gets less pronounced, resulting in the plateau apparent in Fig. 5b. After about 80 evaluations, a step with large mean parameter distance occurs, resulting in a subsequent decrease of the best error.

Interpreted in terms of the energy landscape of the objective f, BO investigates a neighborhood with promising points first. After exploiting this area (up to about 64 iterations), the exploitation-exploration trade-off favors reducing the uncertainty. This results in a large mean parameter distance at about 80 function evaluations, allowing the algorithm to find a new area where the objective f may be decreased further.

For both single optimization runs shown in Fig. 4a and Fig. 5a, the resulting stress-strain hystereses are shown in Fig. 7 besides their experimental counterparts. The results for, both, the Armstrong-Frederick and Ohno-Wang model, show good agreement with the experiments at medium and high strain amplitudes, i.e., \(\varepsilon =0.6\%\) and \(\varepsilon =0.9\%\). These observations may be quantified when normalizing the root of the mean squared distance between experiment and simulation, i.e., equation (3.2), by the root of the mean squared experimental values

For the Armstrong-Frederick model, the relative difference is \(4.29\%\) and \(6.81\%\) for \(\varepsilon =0.6\%\) and \(\varepsilon =0.9\%\), respectively. We obtain similar values for the Ohno-Wang model, namely \(3.56\%\) and \(6.48\%\). For the smallest strain amplitude considered, i.e., \(\varepsilon =0.35\%\), the relative error is larger, with \(22.60\%\) and \(20.41\%\) for the Armstrong-Frederick and Ohno-Wang model, respectively. These errors may be reduced either by considering a more complex microstructure or by working with more advanced models. For the work at hand, we focus on the optimization method used for the parameter identification.

3.3 On the choice of acquisition function

In this section, we elaborate on our choice of acquisition function and exploration margin. For the large search-space problem, we compare two different exploration margins for each of the considered acquisition functions \(u_{EI}\) and \(u_{LCB}\). For these four combinations, the mean best error of ten BO runs is shown in Fig. 8a. Except for \(u_{LCB}\) with \(\xi =1.0\), all combinations lead to a comparable minimum value.

For BO with \(u_{LCB}\) and \(\xi =1.0\), after reaching an error of 53.53 MPa at 53 function evaluations, the mean gradually reduces to its final value of 52.27 MPa. This behavior indicates that the algorithm gets stuck in a local minimum and is not able to leave it. Indeed, a two-sided confidence interval of width \(2\sigma\) contains only \(68.27\%\) of all realizations, on average. In particular, more than \(30\%\) of potentially relevant improvements are inaccessible. Thus, the driving force for exploration, encoded by \(\xi\), is too small for this setup.

To study the remaining combinations of acquisition functions and exploration margins in more detail, the results are restricted to the interval from 60 to 160 function evaluations in Fig. 8b.

\(u_{EI}\) with exploration margin \(\xi =0.1\) reaches a mean stationary value of \(f(x)=48.66\) MPa after 106 function evaluations, and does not lead to a subsequent reduction afterwards.

After reaching a similar mean error compared to \(u_{LCB}\) with \(\xi =1.0\), namely 53.50 MPa using 54 function evaluations, \(u_{EI}\) with an exploration margin of \(\xi =0.05\) reduces the error further. This is achieved between 70 and 90 as well as between 118 and 125 function evaluations, where the mean error reduces from 53.50 MPa to 48.80 MPa. The final mean error is reached at 48.29 MPa after 145 function evaluations. Additionally, we look at the maximum best error (i.e., the worst case) for all ten optimization runs versus the number of function evaluations, shown in Fig. 9a. As for the behavior of the mean best error, shown in Fig. 8, for \(u_{LCB}\) with \(\xi =1.0\), the maximum best error does not decrease to a comparable level. The other three considered cases behave similarly. To examine these cases in more detail, we investigate at the last 100 evaluations in Fig. 9b. BO using \(u_{LCB}\) with an exploration margin of two reaches its minimum objective value of 49.45 MPa after 119 function evaluations for the considered case. The worst case for expected improvement \(u_{EI}\) and \(\xi =0.1\), a stationary value of 51.36 MPa, is reached after 106 function evaluations. The reached value is not improved using more function evaluations. For an exploration margin of 0.05, a stationary value of 49.31 MPa is reached for the worst case considered. This is slightly smaller than the error obtained using \(u_{LCB}\) and \(\xi =2.0\), but requires 26 additional evaluations, which is why we chose \(u_{LCB}\) with \(\xi =2.0\) as our acquisition function and exploration margin.

3.4 Fine-tuning material parameters with small parameter space

In Sect. 3.2, we were concerned with optimizing parameters when little is known about the optimum. We may work on a smaller parameter space if additional information is known, for instance based on expert knowledge or a previous optimization run for a similar problem. For determining the crystal plasticity parameters of the single crystal, we will use the bounds of Table 5, which we chose to be tighter than the previously considered bounds. Again, we choose our acquisition function to be \(u_{LCB}\) with an exploration margin of \(\xi =2.0\), as conclusions very similar to those in Sect. 3.3 may be drawn in the fine-tuning case, as well. The BO workflow is initialized by ten points obtained from a Latin-Hypercube sampling. Then, BO is run for a fixed set of 150 function evaluations, summing up to a total of 160. The results of ten optimization runs are shown in Fig. 10a and b for the Armstrong-Frederick and Ohno-Wang model, respectively.

BO reaches a mean minimum error-value of 46.72 MPa for the AF model. The \(95\%\) confidence interval at end of optimization is given by [45.66 MPa, 47.78 MPa], which is tighter than for the results of the large search-space problem. After 80 evaluations, BO reaches a comparable mean error of 46.92 MPa with a \(95\%\) confidence interval of [45.76 MPa, 48.08 MPa].

For the optimization of the OW kinematic hardening model, Fig. 10b shows the mean and the \(95\%\) confidence interval of the smallest error versus function evaluations. The mean minimum-value of 41.69 MPa is reached after 136 evaluations and a comparable mean error of 41.695 MPa is reached after 73 evaluations. Furthermore, each of the optimization runs arrives at almost the same error, emphasized by the narrow \(95\%\) confidence intervals, [41.6851 MPa, 41.6854 MPa] and [41.52 MPa, 42.17 MPa] for 136 and 73 function evaluations, respectively. As we did for the large search-space problem, we look at, both, the mean difference between two consecutive best encountered values of f, and, the mean normed parameter distance (see equations (3.7) and (3.8)). As normalization, we use those parameters for which the minimum function value was found, see Table 6. These metrics are shown for the AF model in Fig. 11a. BO systematically reduces the step size, with the main change made between 0 and 60 function evaluations. This corresponds to the observations made in Fig. 10a, where after about 60 function evaluations there is no substantial change in the mean best error encountered as well as for the \(95\%\) confidence interval .

Fig. 11a also shows the trade-off between exploration and exploitation. This is indicated by peaks in parameter distance after about 80 function evaluations without decreasing f.

The mean distance between parameters is smaller within the more restrained boundaries of Table 5. This explains why a comparable or even better solution is achieved in shorter time than when optimizing with the (larger) bounds given in Table 3. For the fine-tuning of the OW model, see Fig. 11b, similar observations can be made. In contrast to optimizing the AF model, the mean change in best error, shown in Fig. 10b, is almost zero after 80 function evaluations. As for the large-space problem, the mean parameter distance is smaller than for the case of optimizing the Armstrong-Frederick model. However, for the case at hand, this difference is less pronounced.

3.5 Comparison to the state-of-the-art

In this section, we compare the proposed Bayesian approach to alternative optimization algorithms which demonstrated their power for similar tasks, in particular inversely identifying material parameters. In addition to the evolutionary approach used by Schäfer et al. (2019), we investigate the Multilevel Coordinate Search (MCS, Huyer and Neumaier 1999) and Stable Noisy Optimization by Branch and FIT (SNOBFIT, Huyer and Neumaier 2008). The latter two derivative-free optimization methods turned out to be particularly powerful for low-dimensional problems in the expressive review article of Rios and Sahinidis (2013).

The workflow used by Schäfer et al. (2019) proceeds as follows. First, a Latin-Hypercube-Sampling, consisting of 200 points in the proposed parameter space, is performed. Subsequently, an evolutionary algorithm is run on the parameter space, initialized using the most promising results from the sampling step. MCS and SNOBFIT do not require an additional initialization, and we use the recommended default parameters (Huyer and Neumaier 2008; Huyer and Neumaier 1999) for both algorithms.

We compare the smallest error encountered versus function evaluations for a single optimization run of the AF model in the large search-space setting, see Fig. 12, where we constrain the maximum number of function evaluations to 400. For a start, we observe that all algorithms reach a non-zero function value, in agreement to previous observations. The minimum error obtained by the evolutionary approach of 48.20 MPa is reached after 332 function evaluations. A comparable value of 49.15 MPa is encountered after 270 evaluations. Please note that the sampling provides a suitable initialization, and, for the first 100 function evaluations, there is a steady decrease in the objective value f. MCS reaches a similar error of 48.69 MPa after 400 evaluations, with an error of 49.16 MPa reached after evaluating the cost function 237 times. Among all considered optimization algorithms, SNOBFIT reaches the lowest error value of 43.72 MPa after 201 function evaluations.

All considered algorithms, except for MCS, rely to some degree on a random initialization (Huyer and Neumaier 2008; Brochu et al. 2010). Therefore, we wish to asses the influence of randomness on the overall optimization results. In Fig. 12b, the mean value of ten optimization runs is shown. Additionally, we visualize the computed \(95\%\) confidence interval via shaded areas, centered at the mean. For the SNOBFIT algorithm and these multiple runs, we set the maximum number of function evaluations to 160, as for the proposed Bayesian strategy. As MCS is a deterministic algorithm, in all of the following we will only show the results of a single optimization run.

After 100 evaluations, the evolutionary approach reaches a mean minimum function value of 56.31 MPa with a \(95\%\) confidence interval of [52.73 MPa, 59.90 MPa], and, after 160 evaluations arrives at a mean and \(95\%\) confidence interval of 53.42 MPa and [50.61 MPa, 56.23 MPa], respectively. The smallest mean value of 48.71 MPa is reached after evaluating the cost function 394 times, with a comparable value of 49.97 MPa reached after 376 evaluations. Using the SNOBFIT algorithm results in a smaller mean error of 52.66 MPa at 100 function evaluations, but a larger \(95\%\) confidence interval of [47.14 MPa, 58.19 MPa]. The mean best error after 160 function evaluations is lower for SNOBFIT than for BO and the evolutionary algorithm, namely 47.47 MPa while the \(95\%\) confidence interval of [44.15 MPa, 50.78 MPa], obtained by using SNOBFIT, is larger than for the other two methods.

As our next step, we compare BO to the evolutionary approach, MCS and SNOBFIT for optimizing the unknown parameters of the OW kinematic hardening model in the large-space setting. In Fig. 13a, we plot the best error vs. iterations for each algorithm and a single optimization run. Bayesian optimization, the evolutionary approach and SNOBFIT reach similar error values of 44.21 MPa, 45.74 MPa and 43.72 MPa, respectively. Comparable error values are obtained by BO, SNOBFIT and the evolutionary framework after evaluating the cost function about 120, 290 and 340 times, respectively. Using Multilevel Coordinate Search leads to a minimum best error of 48.69 MPa, which is reached after 392 function evaluations.

To investigate the influence of the random initialization on optimizing the OW model, we use the mean of ten optimization runs and visualize the results, with the corresponding \(95\%\) confidence interval as shaded areas, in Fig. 13b for BO, SNOBFIT and the evolutionary approach.

Combining Latin-Hypercube sampling and the evolutionary algorithm produces, after 400 function evaluations, a mean error of 51.10 MPa, and a \(95\%\) confidence interval of [47.76 MPa, 54.40 MPa]. After evaluating the function 160 times, the mean best error using the evolutionary approach is 55.30 MPa with \(95\%\) confidence interval [53.19 MPa, 57.40 MPa]. For SNOBFIT, the mean best error of 49.77 MPa after 160 function evaluations lies between the results of BO and the evolutionary approach, whereas the \(95\%\) confidence interval of [44.73 MPa, 54.81 MPa] is larger than for the two other methods.

In Fig. 14, we compare the considered algorithms for the refined bounds given in Table 5, for both kinematic hardening models. For optimizing the parameters of the AF model, SNOBFIT provides the lowest mean error of 44.16 MPa with [42.99 MPa, 45.34 MPa] as a \(95\%\) confidence interval after 160 function evaluations. We observe comparable values after letting SNOBFIT evaluate the function 98 times, e.g., 44.53 MPa for the mean error with a \(95\%\) confidence interval of [43.32 MPa, 45.74 MPa]. Using an evolutionary algorithm to optimize the AF parameters leads to a mean minimum error of 47.29 MPa, which is close to the mean value reached by BO. The \(95\%\) confidence interval at 160 function calls of the evolutionary algorithm computes to [45.47 MPa and 49.10 MPa], which is larger than for BO or SNOBFIT. To reach similar mean error values of 47.50 MPa and 46.85 MPa, the evolutionary algorithm and BO need 153 and 87 function evaluations, respectively. Among the considered algorithms, MCS reaches the lowest best error already in the first 13 iterations with 50.807 MPa. This error level is reached within the first function evaluations. Yet, MCS falls short of reducing the error significantly for subsequent function evaluations. Indeed, after evaluating the function 160 times, the error is 48.70 MPa.

Next, we compare the mean best error encountered when optimizing the parameters for the Ohno-Wang kinematic hardening model using the evolutionary approach, BO or SNOBFIT, see Fig. 14b, together with best error over iterations for the MCS method. Similar conclusions for the behavior of SNOBFIT may be drawn as for the BO approach, see Sec. 3.4. The mean best error and \(95\%\) confidence interval after 160 function evaluations are 43.10 MPa and [42.60 MPa, 43.60 MPa], respectively. Using the evolutionary algorithm produces a mean minimum error of 49.31 MPa after 133 function evaluations with a \(95\%\) confidence interval of [48.78 MPa, 49.84 MPa]. Almost the same mean best error of 50.71 MPa with a \(95\%\) confidence interval of [48.79 MPa, 52.64 MPa] is reached after 13 function evaluations and not improving up to 109 evaluations. For MCS, the error is, similar to optimizing the AF model, the lowest in the first 10 optimization steps. After evaluating the cost function 160 times, the error reaches a similar value as reached by BO with 42.29 MPa.

Let us summarize the findings of this section for each algorithm individually. The evolutionary algorithm consistently required the highest number of function evaluations among the considered algorithms to reach a specific error level. For the OW model and both the large and the small search space, the evolutionary algorithm lacked significantly behind the other algorithms. With the exception of the large search space and the AF model, MCS provided a similar error value as BO and the evolutionary algorithm. MCS showed inferior performance for the OW model and the large search space. In the case of fine tuning, MCS turned out to be worst for the Armstrong-Frederick model, but second best for Ohno-Wang. Still, there are practical benefits of the MCS method. First and foremost, it is a deterministic algorithm, dispensing with the influence of randomness. Secondly, in the fine-tuning setting MCS provided very good results already for the first few iterations. Thus, when the number of evaluations is small and prescribed beforehand, MCS is the method of choice.

SNOBFIT is good for the fine-tuning case, in particular for the AF model. In this case, SNOBFIT produces a similar dispersion as BO. However, for the large search space, the values produced by SNOBFIT are characterized by a rather large dispersion. Directly comparing SNOBFIT and BO, the former consistently requires more function evaluations to reach the same error level.

The proposed Bayesian optimization framework produces a mean which is comparable to SNOBFIT in the setting of the large search space. However, BO is both consistently faster and characterized by a smaller dispersion. Only for fine-tuning AF, BO is a little worse than SNOBFIT in terms of the reached best error. For fine-tuning the more complex Ohno-Wang model, BO is fastest and leads to the best error.

To conclude, the presented BO framework provides a strongly competitive solution, independent of the problem. Thus, it turns out to be the algorithm of choice for industrial applications, in particular parameter identification for an unknown material.

3.6 Industrial-scale polycrystalline microstructure

Previously, we determined the optimum parameters for a simplified volume element, see Fig. 3, which was selected with extensive studies of the proposed Bayesian optimization approach in mind. In this section, we investigate a more complex microstructure to demonstrate the capabilities of the approach. For this we use a synthetic microstructure, generated by the method discussed in Kuhn et al. (2020), consisting of 100 grains (with equal volume). Furthermore, each grain is randomly assigned a crystalline orientation, s.t. the overall orientation is isotropic, see Fig. 16a, where the coloring indicates the corresponding orientation. We furnish this volume element with periodic boundary conditions, discretize it by \(64^3\) voxels and use the FFT-based solver FeelMath (Fraunhofer ITWM 2020; Wicht et al. 2020a; Wicht et al. 2020b) to speed up computations (Rovinelli et al. 2020). Both, the Armstrong-Frederick and the Ohno-Wang kinematic hardening model will be considered.

Following (Sedighiani et al. 2020), we define the bounds of the feasible parameter space in terms of the previously found optima, with a range of \(1.5\times x^*\) and \(0.5\times x^*\), see Tables 7 and 8. We retain our choice of acquisition function and exploration margin. To keep the computational cost reasonable, we restrict the maximum number of iterations to 50. The best error versus number of evaluations is shown in Fig. 15a for the Armstrong-Fredrick model. Guided by a Latin-Hypercube-Sampling, the first ten function evaluations result in an error of 44.10 MPa. This error is further reduced to 43.68 MPa after 20 additional function evaluations. The minimum error of 41.20 MPa is reached after evaluating the function 33 times. The obtained error is smaller than in Sect. 3.4. This may be a result of the increased complexity of the microstructure, which is closer to the experimental setup encompassing a large number of grains. Furthermore, the obtained parameter values, shown in Table 9 lie outside of the bounds considered in the previous sections, see Tables 3 and 5.

Similar conclusions may be drawn for the Ohno-Wang kinematic hardening model, where the smallest error versus number of function evaluations is shown in Fig 15b. After ten function evaluations following a Latin-Hypercube-Sampling, the smallest error is 62.05 MPa. After taking one BO step, this error is reduced to 41.35 MPa. The final minimum error of 38.21 MPa is reached after 27 function evaluations, i.e., after 17 steps proposed by Bayesian optimization.

To ensure that the smaller error for the complex microstructure is not a consequence of the different parameter bounds, we return to the simplified microstructure, see Fig. 3, and run Bayesian optimization on the constrained parameter spaces, see Tables 7 and 8. For the Armstrong-Frederick model, BO reaches a mean error of 44.69 MPa an a [44.69 MPa, 44.70 MPa] \(95\%\) confidence interval after 50 function evaluations. After the same number of evaluations, the resulting mean error and \(95\%\) confidence interval are 41.78 MPa and [41.59 MPa, 41.97 MPa], respectively. Thus, even for the constrained bounds, the error corresponding to the more complex microstructure shown in Fig. 16a improves upon the error obtained for the simplified structure. Concerning the computational cost, the simulation with a time step of \(\triangle t=0.01\) s and 16 CPUs takes roughly 40 minutes for the largest strain amplitude of \(\varepsilon _a=0.9\%\). Thus, for the chosen maximum number of 50 function evaluations, the total Bayesian parameter identification takes 33 hours. The minimum error of 38.21 MPa is found after a computation time of 18 hours in total for the Ohno-Wang kinematic hardening model. As for the Armstrong-Frederick model a total computation time of 22 hours is needed to find a solution with error 41.20 MPa.

Using a more complex synthetic microstructure, the error is further reduced, at the expense of an increased computational effort. Still, the Bayesian-optimization approach limits the required total time to less than a day. Using a more complex representation of the microstructure also permits us to gain deeper insights into the deformation behavior of the microstructure, for instance in terms of the accumulated plastic slip, a mesoscopic indicator for the plastic deformation. Furthermore, the obtained optimum parameter set may enter further microstructure simulations.

For \(\varepsilon _a=0.9\%\) and \(t=8.0\) s, the distribution of accumulated plastic slip, see Wulfinghoff et al. (2013),

is shown in Fig. 16b and c for the Armstrong-Frederick and Ohno-Wang kinematic hardening model, respectively. In both cases, the accumulated plastic slip is concentrated in specific grains.

As we used the same geometric microstructure for each of the hardening models, we observe that, independent of the employed kinematic hardening model, the orientation of each grain plays a crucial role in determining the deformation behavior. Apparently, grains with higher accumulated plastic slip \({\dot{\gamma }}\) are oriented in such a way that the applied macroscopic load has maximum effect, thus resulting in a higher amount of plastic deformation. Moreover, the distribution of accumulated plastic slip provides some insight into the advantages of the OW model over the AF model in producing smaller errors which is not apparent from the resulting hystereses shown in Fig. 17. For the Ohno-Wang kinematic hardening model, there are more regions with high accumulated plastic slip \({\dot{\gamma }}\) in Fig. 16c than for the Armstrong-Frederick model in Fig. 16b. This more pronounced plasticity seems to match the experimental behavior better than the results computed using the Armstrong-Frederick kinematic hardening model. The relative error defined in equation (3.9) is considerably lower for the small strain amplitude \(\varepsilon =0.35\%\) for both kinematic hardening models with \(11.65\%\) and \(8.65\%\) for the Armstrong-Frederick and Ohno-Wang model, respectively. The relative errors for the medium strain amplitude are increased to \(11.20\%\) for the Armstrong-Frederick and \(11.08\%\) for the Ohno-Wang model, whereas the error for \(\varepsilon =0.9\%\) is also reduced to \(4.59\%\) and \(5.16\%\) for the respective models. As the small strain amplitude \(\varepsilon =0.35\%\) is most interesting for fatigue applications, the benefits of using the more complex structure, see Fig. 16a,instead of the simplified microstructure, see Fig 3, become apparent.

4 Conclusion

This work was dedicated to identifying constitutive parameters for a small strain crystal plasticity constitutive law incorporating a kinematic hardening model to capture the behavior under cyclic loading conditions, a topic which received attention recently from, both, a scientific and applied perspective (Sedighiani et al. 2020; Shahmardani et al. 2020; Shenoy et al. 2008; Prithivirajan and Sangid 2018; Guery et al. 2016; Hochhalter et al. 2020; Kapoor et al. 2021; Pagan et al. 2017; Bandyopadhyay et al. 2020; Rovinelli et al. 2018; Bandyopadhyay et al. 2019).

We investigated a Bayesian optimization approach for the rapid and flexible calibration of the single-crystal constitutive parameters entering micromechanical simulations. The methodology is data-driven and compares the predicted stress-strain hystereses to given polycrystalline experimental data. More precisely, we proposed using Bayesian optimization with Gaussian processes to build up a surrogate model of the optimization problem at hand and employed the upper confidence bound using an exploration margin of two for selecting the next iterate. Our numerical investigations demonstrated that the proposed optimization framework reliably and efficiently determines suitable material parameters for our purposes. For instance, these moduli may enter a simulation predicting the initiation of fatigue cracks, see Schäfer et al. (2019). We investigated both a large and a comparatively small space of admissible parameters, simulating whether prior knowledge on the optimum is available or not. The proposed framework is able to handle the challenges of the large search space, and is sped up for the smaller admissible set.

By investigating both the changes in parameter vector and in the objective value, we could gain an intuitive understanding for the selection strategy of Bayesian optimization, providing a suitable trade-off between exploitation and exploration. Furthermore, we could get an insight into the general energy landscape and the effects of using different acquisition functions.

We compared the proposed Bayesian optimization framework to a representative (Schäfer et al. 2019) of the powerful class of genetic algorithms (Kapoor et al. 2021; Bandyopadhyay et al. 2020; Rovinelli et al. 2018) as well as two derivative-free optimization schemes recommended in the review article (Rios and Sahinidis 2013) for low-dimensional problems. The computational findings of Sect. 3.5 demonstrated the capabilities of the Bayesian optimization framework. Compared to the investigated evolutionary algorithm, BO turned out to be consistently faster, and featured a smaller dispersion. BO outperforms MCS mainly in the case of the large search space, whereas, for fine tuning, BO produces a smaller minimum achieved error for all cases, but requires more function evaluations to reach a comparable error level. Compared to SNOBFIT, BO produces a considerably smaller dispersion when considering a large search-space. BO requires fewer function evaluations, and provides similar or better results in case of fine-tuning.

We observed that, for fitting computed stress-strain hystereses to their experimental counterparts accurately, using more complex microstructures is necessary. In particular, the hysteresis at a low strain amplitude, the one most relevant to fatigue applications, using a volume element with 100 complex grains led to more accurate results than the simplified volume element with 64 cubic grains.

To further improve the fitting quality, it appears wise to investigate whether increasing the complexity of the underlying material model or tweaking the parameters used for microstructure generation has a higher effects. Especially in the context of industrial applications and the digital twin paradigm (Tuegel et al. 2011), accurate computational representations of components and their microstructure are needed. Further research might be invested into optimizing the microstructure descriptors, which are used as input for synthetic microstructure generation (Quey and Renversade 2018; Bourne et al. 2020; Kuhn et al. 2020). In particular, it may be possible to determine a set of minimal, thus most efficient, characteristics a microstructure has to possess in order to produce the desired macroscopic material behavior.

Complementing research dedicated to microstructure representations, the proposed optimization scheme could be improved. Despite the power and flexibility we experienced in the applied context, decreasing the number of necessary simulation runs (via fewer function calls) would be very much appreciated, in particular combined with a clever stopping criterion. Furthermore, research could be devoted to dedicated transfer strategies, i.e., building upon previous experience with similar microstructures, material models or experimental data (Golovin et al. 2017).

Last but not least, it appears worthwhile to extend the Bayesian optimization framework to uncertainty quantification (Bandyopadhyay et al. 2019).

References

Anahid M, Samal MK, Ghosh S (2011) Dwell fatigue crack nucleation model based on crystal plasticity finite element simulations of polycrystalline titanium alloys. J Mech Phys Solids 59(10):2157–2176

Arnaudov N, Kolyshkin A, Weihe S (2020) Micromechanical modeling of fatigue crack initiation in hydrogen atmosphere. Mech Mater 149:103557

Bandyopadhyay R, Prithivirajan V, Peralta AD, Sangid MD (2020) Microstructure-sensitive critical plastic strain energy density criterion for fatigue life prediction across various loading regimes. Proc Royal Soc A 476(2236):20190766

Bandyopadhyay R, Prithivirajan V, Sangid MD (2019) Uncertainty quantification in the mechanical response of crystal plasticity simulations. JOM 71(8):2612–2624

Bari S, Hassan T (2000) Anatomy of coupled constitutive models for ratcheting simulation. Int J Plast 16(3–4):381–409

Bishop JFW (1953) VI. A theoretical examination of the plastic deformation of crystals by glide. London, Edinburgh, Dublin Philos Magazine J Sci 44(348):51–64

Bishop CM (2006) Pattern recognition and machine learning. Springer, Berlin

Bleistein T, Diebels S, Jung A (2020) Parameter identification for open cell aluminium foams using inverse calculation. Comput Math Appl 79(9):2644–2654

Blum C, Merkle D (2008) Swarm intelligence: introduction and applications. Springer, Berlin

Bourne DP, Kok PJJ, Roper SM, Spanjer WDT (2020) Laguerre tessellations and polycrystalline microstructures: a fast algorithm for generating grains of given volumes. Philos Magazine 100(21):2677–2707

Brochu E, Cora VM, de Freitas N (2010) A Tutorial on Bayesian Optimization of Expensive Cost Functions‚ with Application to Active User Modeling and Hierarchical Reinforcement Learning. Preprint at https://arxiv.org/abs/1012.2599

Cailletaud G (1992) A micromechanical approach to inelastic behaviour of metals. Int J Plast 8(1):55–73

Chakraborty A, Eisenlohr P (2017) Evaluation of an inverse methodology for estimating constitutive parameters in face-centered cubic materials from single crystal indentations. Euro J Mech-A/Solids 66:114–124

Cox DD, John S (1992) A statistical method for global optimization. In: 1992 IEEE International Conference on Systems, Man, and Cybernetics, pp 1241–1246

Cruzado A, Gan B, Jiménez M, Barba D, Ostolaza K, Linaza A, Molina-Aldareguia JM, LLorca J, Segurado J (2015) Multiscale modeling of the mechanical behavior of IN718 superalloy based on micropillar compression and computational homogenization. Acta Materialia 98:242–253

Cruzado A, LLorca J, Segurado J (2017) Modeling cyclic deformation of inconel 718 superalloy by means of crystal plasticity and computational homogenization. Int J Solids Struct 122:148–161

Cruzado A, LLorca J, Segurado J (2020) Computational Micromechanics Modeling of Polycrystalline Superalloys: Application to Inconel 718. In: Integrated Computational Materials Engineering (ICME), pp 127–163

Dassault Systèmes, “ABAQUS.” Version: 2018

Dawson PR, Boyce DE, Park J-S, Wielewski E, Miller MP (2018) Determining the strengths of HCP slip systems using harmonic analyses of lattice strain distributions. Acta Materialia 144:92–106

Diehl M, An D, Shanthraj P, Zaefferer S, Roters F, Raabe D (2017) Crystal plasticity study on stress and strain partitioning in a measured 3D dual phase steel microstructure. Phys Mesomech 20(3):311–323

Dunne FPE, Wilkinson AJ, Allen R (2007) Experimental and computational studies of low cycle fatigue crack nucleation in a polycrystal. Int J Plast 23(2):273–295

Dynardo, optiSLang, Version: 3.3.5. [Online]. Available: https://www.dynardo.de/software/optislang.html

Engels JK, Vajragupta N, Hartmaier A (2019) Parameterization of a non-local crystal plasticity model for tempered lath martensite using nanoindentation and inverse method. Front Mater 6:247

Farooq H, Cailletaud G, Forest S, Ryckelynck D (2020) Crystal plasticity modeling of the cyclic behavior of polycrystalline aggregates under non-symmetric uniaxial loading: Global and local analyses. Int J Plast 126:102619

Forrester AIJ, Keane AJ (2009) Recent advances in surrogate-based optimization. Prog Aerosp Sci 45(1–3):50–79

Fraunhofer ITWM, FeelMath. https://www.itwm.fraunhofer.de/de/abteilungen/sms/produkte-und-leistungen/feelmath.html. Accessed 23 Oct 2020

Frazier PI, Wang J (2016) Bayesian Optimization for Materials Design. In: Information Science for Materials Discovery and Design, pp 45–75

Frederick CO, Armstrong PJ (2007) A mathematical representation of the multiaxial Bauschinger effect. Mater High Temp 24(1):1–26

GPy (2012) GPy: A Gaussian process framework in python. http://github.com/SheffieldML/GPy Accessed 23 Oct 2020

Gianola DS, Eberl C (2009) Micro-and nanoscale tensile testing of materials. JOM 61(3):24

Gillner K, Münstermann S (2017) Numerically predicted high cycle fatigue properties through representative volume elements of the microstructure. Int J Fatigue 105:219–234

Golovin D, Solnik B, Moitra S, Kochanski G, Karro J, Sculley D (2017) Google Vizier: A Service for Black-Box Optimization. In: Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 1487–1495

Griewank A, Walther A (2008) Evaluating Derivatives: Principles and Techniques of Algorithmic Differentiation. Philadelphia: SIAM, second ed

Guery A, Hild F, Latourte F, Roux S (2016) Identification of crystal plasticity parameters using DIC measurements and weighted FEMU. Mech Mater 100:55–71

Harder J (2001) FEM-simulation of the hardening behavior of FCC single crystals. Acta Mechanica 150(3–4):197–217

Haupt P (2013) Continuum mechanics and theory of materials. Springer, New York

Herrera-Solaz V, LLorca J, Dogan E, Karaman I, Segurado J (2014) An inverse optimization strategy to determine single crystal mechanical behavior from polycrystal tests: Application to AZ31 Mg alloy. Int J Plast 57:1–15

Hochhalter J, Bomarito G, Yeratapally S, Leser P, Ruggles T, Warner J, Leser W (2020) Non-deterministic Calibration of Crystal Plasticity Model Parameters. In: Integrated Computational Materials Engineering (ICME), pp 165–198

Hsiao JC, Shivam K, Chou CL, Kam TY (2020) Shape design optimization of a robot arm using a surrogate-based evolutionary approach. Appl Sci 10(7):2223

Huntington HB (1947) Ultrasonic measurements on single crystals. Phys Rev 72(4):321

Hutchingson JW (1976) Bounds and self-consistent estimates for creep of polycrystallin materials. Proc Royal Soc Lond. A. Math Phys Sci 348(1652):101–127

Huyer W, Neumaier A (1999) Global optimization by multilevel coordinate search. J Global Optim 14(4):331–355

Huyer W, Neumaier A (2008) SNOBFIT-stable noisy optimization by branch and fit. ACM Trans Math Softw (TOMS) 35(2):1–25

Häse F, Roch LM, Kreisbeck C, Aspuru-Guzik A (2018) Phoenics: A Bayesian optimizer for chemistry. ACS Central Sci 4(9):1134–1145

Jones DR, Schonlau M, Welch W (1998) Efficient global optimization of expensive black-box functions. J Global Optim 13(4):455–492

Kapoor K, Ravi P, Noraas R, Park J-S, Venkatesh V, Sangid MD (2021) Modeling Ti-6Al-4V using crystal plasticity, calibrated with multi-scale experiments, to understand the effect of the orientation and morphology of the \(\alpha\) and \(\beta\) phases on time dependent cyclic loading. J Mech Phys Solids 146:104192

Kasemer M, Falkinger G, Roters F (2020) A numerical study of the influence of crystal plasticity modeling parameters on the plastic anisotropy of rolled aluminum sheet. Model Simul Mater Sci Eng 28(8):085005

Kim JH, Lee M-G, Kang J-H, Oh C-S, Barlat F (2017) Crystal plasticity finite element analysis of ferritic stainless steel for sheet formability prediction. Int J Plast 93:26–45

Klein A, Falkner S, Bartels S, Hennig P, Hutter F (2017) Fast bayesian optimization of machine learning hyperparameters on large datasets. In: Artificial Intelligence and Statistics, pp 528–536

Kuhn J, Schneider M, Sonnweber-Ribic P, Böhlke T (2020) Fast methods for computing centroidal Laguerre tessellations for prescribed volume fractions with applications to microstructure generation of polycrystalline materials. Comput Methods Appl Mech Eng 369:113175

Kushner HJ (1964) A new method of locating the maximum point of an arbitrary multipeak curve in the presence of noise. J Basic Eng 86:97–106

Lian J, Liu W, Zhang W, Shen F, Münstermann S (2019) Crystal plasticity modelling of flow behavior under various strain rates. In: AIP Conference Proceedings, vol 2113, p 180015

Liu W, Lian J, Aravas N, Münstermann S (2020) A strategy for synthetic microstructure generation and crystal plasticity parameter calibration of fine-grain-structured dual-phase steel. Int J Plast 126:102614

Lizotte DJ (2008) Practical Bayesian Optimization. PhD thesis, University of Alberta

Matérn B (2013) Spatial variation, vol 36. Springer, New York

McDowell DL (1996) Basic issues in the mechanics of high cycle metal fatigue. Int J Fract 80(2–3):103

McDowell DL, Dunne FPE (2010) Microstructure-sensitive computational modeling of fatigue crack formation. Int J Fatigue 32(9):1521–1542

McKay MD, Beckman RJ, Conover WJ (2000) A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 42(1):55–61

Michiuchi M, Nambu S, Ishimoto Y, Inoue J, Koseki T (2009) Relationship between local deformation behavior and crystallographic features of as-quenched lath martensite during uniaxial tensile deformation. Acta Materialia 57(18):5283–5291

Mockus J (1989) Bayesian approach to global optimization. Kluwer, Dordrecht

Mockus J (1994) Application of Bayesian approach to numerical methods of global and stochastic optimization. J Global Optim 4(4):347–365

Mockus J, Tiesis V, Zilinskas A (1978) The application of Bayesian methods for seeking the extremum. In: Dixon LCW, Szegö GP (eds) Towards Global Optimisation 2. North Holland, pp 117–129

Mughrabi H, Herz K, Stark X (1981) Cyclic deformation and fatigue behaviour of \(\alpha\)-iron mono-and polycrystals. Int J Fract 17(2):193–220

Nocedal J, Wright S (1999) Numerical optimization. Springer, Berlin

Ohno N, Wang J-D (1993) Kinematic hardening rules with critical state of dynamic recovery, part I: formulation and basic features for ratchetting behavior. Int J Plast 9(3):375–390

Pagan DC, Shade PA, Barton NR, Park J-S, Kenesei P, Menasche DB, Bernier JV (2017) Modeling slip system strength evolution in Ti-7Al informed by in-situ grain stress measurements. Acta Materialia 128:406–417

Picheny V, Gramacy RB, Wild S, Le Digabel S (2016) Bayesian optimization under mixed constraints with a slack-variable augmented Lagrangian. In: Advances in Neural Information Processing Systems, pp 1435–1443

Prager W (1949) Recent developments in the mathematical theory of plasticity. J Appl Phys 20(3):235–241

Prithivirajan V, Sangid MD (2018) The role of defects and critical pore size analysis in the fatigue response of additively manufactured IN718 via crystal plasticity. Mater Des 150:139–153

Quey R, Renversade L (2018) Optimal polyhedral description of 3D polycrystals: method and application to statistical and synchrotron X-ray diffraction data. Comput Meth Appl Mech Engnrg 330:308–333

Raponi E, Bujny M, Olhofer M, Aulig N, Boria S, Duddeck F (2019) Kriging-assisted topology optimization of crash structures. Comput Methods Appl Mech Eng 348:730–752

Rios LM, Sahinidis NV (2013) Derivative-free optimization: a review of algorithms and comparison of software implementations. J Global Optim 56(3):1247–1293

Roters F, Eisenlohr P, Hantcherli L, Tjahjanto DD, Bieler TR, Raabe D (2010) Overview of constitutive laws, kinematics, homogenization and multiscale methods in crystal plasticity finite-element modeling: Theory, experiments, applications. Acta Materialia 58(4):1152–1211

Rovinelli A, Proudhon H, Lebensohn RA, Sangid MD (2020) Assessing the reliability of fast Fourier transform-based crystal plasticity simulations of a polycrystalline material near a crack tip. Int J Solids Struct 184:153–166

Rovinelli A, Sangid MD, Proudhon H, Guilhem Y, Lebensohn RA, Ludwig W (2018) Predicting the 3D fatigue crack growth rate of small cracks using multimodal data via Bayesian networks: In-situ experiments and crystal plasticity simulations. J Mech Phys Solids 115:208–229

Sangid MD (2013) The physics of fatigue crack initiation. Int J Fatigue 57:58–72

Sawaf B, Ozisik MN, Jarny Y (1995) An inverse analysis to estimate linearly temperature dependent thermal conductivity components and heat capacity of an orthotropic medium. Int J Heat Mass Transf 38(16):3005–3010

Schäfer BJ, Song X, Sonnweber-Ribic P, ul Hassan H, Hartmaier A (2019) Micromechanical modelling of the cyclic deformation behavior of martensitic SAE 4150–A Comparison of Different Kinematic Hardening Models. Metals 9(3):368

Schäfer BJ, Sonnweber-Ribic P, ul Hassan H, Hartmaier A (2019) Micromechanical modeling of fatigue crack nucleation around non-metallic inclusions in martensitic high-strength steels. Metals 9(12):1258

Sedighiani K, Diehl M, Traka K, Roters F, Sietsma J, Raabe D (2020) An efficient and robust approach to determine material parameters of crystal plasticity constitutive laws from macro-scale stress–strain curves. Int J Plast 102779

Shah A, Wilson A, Ghahramani Z (2014) Student-t processes as alternatives to Gaussian processes. In: Artificial intelligence and statistics, pp 877–885

Shahmardani M, Vajragupta N, Hartmaier A (2020) Robust optimization scheme for inverse method for crystal plasticity model parametrization. Materials 13(3):735

Shenoy M, Tjiptowidjojo Y, McDowell D (2008) Microstructure-sensitive modeling of polycrystalline IN 100. Int J Plast 24(10):1694–1730

Simo JC, Hughes TJR (2006) Computational inelasticity, vol 7. Springer, New York

Smit RJM, Brekelmans WAM, Meijer HEH (1998) Prediction of the mechanical behavior of nonlinear heterogeneous systems by multi-level finite element modeling. Comput Methods Appl Mech Eng 155(1–2):181–192

Snoek J, Larochelle H, Adams RP (2012) Practical Bayesian optimization of machine learning algorithms. In: Advances in Neural Information Processing Systems, pp 1435–1443