Abstract

This study aims to profile the scientific retractions published from 2010 to 2019 in journals indexed in the Web of Science database by researchers at the top 20 World Class Universities in the Times Higher Education (THE) World University Rankings 2020. Descriptive statistics, Pearson's correlation coefficient, and simple linear regression were used to analyze the data. Of the 330 retractions analyzed, Harvard University had the highest number, and the main reason for retraction was related to data and results. We conclude that universities with a better ranking position tend to have a lower retraction rate.

Introduction

Unethical research undermines confidence in researchers, universities, journals, and science itself. In this sense, the peer review process, along with the responsible and transparent correction of articles, aims to guarantee the quality of the available knowledge (Fang & Casadevall, 2011; Fennell, 2019; Lei & Zhang, 2018). However, even when research is submitted to peer review, this process may fail (Van Leeuwen & Luwel, 2014), and research containing honest errors, as well as research involving scientific dishonesty, does get published. For example, in May 2020, the British journal The Lancet published an article associating the use of chloroquine and hydroxychloroquine with increased mortality in patients diagnosed with Covid-19, which led to the interruption of trials of these drugs worldwide. A few days later, the journal published a retraction stating that the accuracy of the primary data sources could not be guaranteed, and the withdrawal of the article had repercussions worldwide (Boseley & Davey, 2020). Incorrect publications such as the chloroquine study have negative impacts not only on the scientific community but also on society (Berlinck, 2011; Dobránszki & Silva, 2019; Elliott et al., 2013; Fang & Casadevall, 2011; Resnik & Dinse, 2013) and on the authors themselves, whose careers can be jeopardized (Mongeon & Larivière, 2016). When a mistake or misconduct is identified, a retraction of the article must be published (Sox & Rennie, 2006), providing a mechanism for correcting the literature, alerting the scientific community, and demonstrating to society that the scientific community corrects its mistakes (Browman, 2019; Fang & Casadevall, 2011; Vuong et al., 2020).

Some authors lay the blame on the global publishing industry (Fanelli et al., 2015; Lei & Zhang, 2018), claiming that developing, publishing, and evaluating research should be limited to a few qualified professionals rather than the vast scientific workforce that currently exists (Hu et al., 2019). While the honesty and integrity of researchers are threatened by the “publish or perish” atmosphere created by the pressure to publish (Fanelli et al., 2015; Hu et al., 2019; Lacetera & Zirulia, 2011; Lei & Zhang, 2018), the Committee on Publication Ethics (COPE) has taken important steps toward making ethical practices part of the editorial culture, encouraging researchers and publishers to report errors in order to safeguard the reliability of journals (Wager et al., 2010). In addition, COPE recommends that retraction notices should: avoid defamatory language; be published free of charge and immediately in all versions of the journal; be clearly identified as retractions, including the title and authors of the retracted article; and state the reason for the article’s withdrawal, which can minimize harmful effects and further dissemination of the error (COPE, 2020).

In recent years, studies on retractions have been conducted on publications in areas such as clinical and health sciences (Brainard, 2018; Fang et al., 2012; Fennell, 2019; Gasparyan et al., 2014; Nair et al., 2020; Steen et al., 2013; Van Leeuwen, 2019), information science (Ajiferuke & Adekannbi, 2020), and engineering (Rubbo et al., 2019). Other studies have correlated scientific retractions with the impact factor of journals (Fang et al., 2012; Fang & Casadevall, 2011; Resnik, 2015), assessed the probability of retraction and the time elapsed between publication and retraction (Tang et al., 2020), examined post-retraction citations (Rubbo, Pilatti, et al., 2019), and discussed the impact of retractions on the academic lives of authors and co-authors (Mongeon & Larivière, 2016). In addition, studies have identified factors that affect the scientific integrity of researchers with retracted articles (Fanelli et al., 2015), analyzed retractions in the Chinese scientific community (Lei & Zhang, 2018; Tang, 2019), proposed correcting indicators such as the impact factor and H-index in view of the growing number of retractions and the need for greater transparency in science (Dobránszki & Silva, 2019), and developed a taxonomy of the distribution of errors in retraction notices (Andersen & Wray, 2019).

Based on these studies, the current situation regarding retractions has at least four relevant characteristics. First, the retraction rate has increased since the first published retraction in the 1970s (Ajiferuke & Adekannbi, 2020; Brainard, 2018; Fang et al., 2012; Grieneisen & Zhang, 2012; He, 2013; Lei & Zhang, 2018; Resnik, 2015; Tang et al., 2020; Vuong et al., 2020). This growth is linked to the increase in the number of journals that retract articles, and not necessarily to an increase in scientific misconduct (Brainard, 2018; Fanelli et al., 2015). Analyses have sought to determine whether misconduct has increased or whether more cases are being identified thanks to improved detection tools (Grieneisen & Zhang, 2012; Steen et al., 2013).

Studies have also shown that the number of retractions has been decreasing (Brainard, 2018; Van Leeuwen, 2019). However, this reduction has been attributed to the fact that many erroneous articles have not yet been discovered (Lacetera & Zirulia, 2011; Lei & Zhang, 2018; Van Leeuwen, 2019) and that not all articles with ethical problems will be retracted (Cokol et al., 2007). The fraudulent articles discovered to date probably correspond to a small fraction of the scientific production with ethical problems, since almost 2% of the scientists surveyed in a previous study admitted to having fabricated or falsified data (Fanelli, 2009).

The second characteristic of retractions in the current context is that most of the articles withdrawn for fraud originate from countries with a long research tradition, such as the United States, Germany, Japan, and China (Fang et al., 2012; He, 2013; Hu et al., 2019; Tang et al., 2020; Van Leeuwen, 2019), and are published in journals with a high impact factor (Cokol, 2007; Fang et al., 2012; He, 2013; Steen, 2011; Tang et al., 2020). On the other hand, plagiarism and duplicate publications generally come from countries with a shorter research tradition and are generally associated with low-impact journals (Fang et al., 2012) or reflect idiosyncratic factors (Brainard, 2018). Such findings may reflect the greater scrutiny given to articles in high-impact journals and the uncertainties commonly associated with cutting-edge, innovative research (Cokol et al., 2007; Fang & Casadevall, 2011). While Brainard’s (2018) research shows that researchers from countries or institutions with well-developed policies to address research misconduct tend to have fewer retractions, the number of journals that have established such policies remains low.

Although retractions mostly come from countries with a research tradition and from high-impact journals, they occur in all areas of knowledge, from medicine to engineering and the social sciences (Grieneisen & Zhang, 2012; Lei & Zhang, 2018; Rubbo et al., 2019). However, studies of retractions in the Web of Science database (WoS) by Grieneisen and Zhang (2012), Fanelli et al. (2015), Fang et al. (2012), Tang et al. (2020), and Vuong et al. (2020) have shown that the percentages of retractions in the areas of chemistry, biomedical sciences, and life sciences are high.

The third characteristic refers to the reasons for retraction identified in the studies, which can be classified as either misconduct (fabrication and falsification of data, duplicate publication, or plagiarism) or unintentional, honest errors (Fang et al., 2012; Grieneisen & Zhang, 2012; Tang et al., 2020; Van Leeuwen, 2019). Several types of misconduct have been identified, including self-plagiarism (Broome, 2004; Russo, 2014), data concealment, flawed data collection procedures, inadequate data retention and storage, incorrect authorship (Thomas & Nelson, 2002), salami slicing, bias and conflicts of interest, intentionally erroneous use of statistical methods (Mojon-Azzi & Mojon, 2004), publication of images without permission (Sharma & Shingh, 2011), inclusion of authors who did not contribute to the research or whose participation was limited to providing funds, materials, or equipment (Amorim, 2011), and fake peer review, which has become one of the main reasons for retraction (Vuong et al., 2020). According to COPE, all articles involving scientific misconduct must be retracted (Wager et al., 2010).

A limiting factor in identifying the reason for retraction is that withdrawal notices are often either very vague, making it difficult to distinguish between misconduct and error, or do not mention the reason at all, thus generating ambiguity (Almeida et al., 2016; Bosch et al., 2012; Van Leeuwen, 2019; Van Noorden, 2011). This leads to an underestimation of the percentage of fraudulent articles (Fang et al., 2012). Retraction notices must describe the reason for withdrawal, indicate those responsible for the misconduct, if any, and identify any other factors that led to the retraction of the article (Silva & Dobránszki, 2017).

The fourth characteristic refers to ‘prolific retractors’, that is, authors with several retracted articles, who are generally highly productive researchers (Brainard, 2018; Fanelli et al., 2015; Grieneisen & Zhang, 2012; Tang et al., 2020). Brainard’s (2018) research has shown that a few authors are responsible for a disproportionate number of retractions: only 500 of the more than 30,000 authors named in the Retraction Watch database account for about a quarter of the 10,500 retractions analyzed. Approximately 100 authors have 13 or more retractions each, suggesting that these withdrawals result from deliberate misconduct rather than honest mistakes (Brainard, 2018). Similarly, Grieneisen and Zhang’s (2012) results show that, of the 15 researchers with the highest number of withdrawn articles, nine had more than 20 retractions each, and 13 researchers were responsible for 391 (54%) of the 725 retractions due to scientific misconduct. The main reoffenders are collectively responsible for 52% of the world’s retractions due to alleged research misconduct (Grieneisen & Zhang, 2012). Tang et al. (2020), who analyzed 2,087 retractions in the WoS from 1978 to 2013, also listed the top ten reoffenders and showed that together these authors were responsible for 226 of the articles identified, or approximately 11% of the sample. Nine of the ten reoffenders are researchers from the countries with the highest number of retractions (Japan, Germany, and the US), and all ten are men.

Despite these observations, publications from authors linked to elite universities or World Class Universities (WCUs) are less prone to retraction, particularly in relation to those due to falsification, fabrication, or plagiarism (Tang et al., 2020). WCUs are considered leaders in a range of criteria, including: international research and cited works; high incidence of researchers who are references in their fields; capacity to drive innovative ideas; and strong ability to recruit talent and attract financial resources or donations (Altbach & Salmi, 2011; Cheng et al., 2014; Deem et al., 2008; Lee, 2013). Notably, several countries have begun to modify their research funding policies (Li, 2012) to focus on the development of “global universities” (Deem, 2008; Doğan & Al, 2019). In addition to ensuring WCU status, the better a university’s position in the global rankings, the greater the visibility of the institution (Altbach & Hazelkorn, 2017; Lee, 2013) and the greater the public and private funding (Marginson, 2017). According to Altbach and Salmi (2011), WCUs are directly linked to international university rankings.

Global rankings are evaluative systems that have brought about important changes in universities in recent decades (Altbach & Hazelkorn, 2017; Morris, 2011). Among the global rankings currently published, the best known worldwide is the Times Higher Education World University Rankings (THE-WU) (Pilatti & Cechin, 2018), originally developed through a partnership between Times Higher Education and Thomson Reuters. It evaluates indicators of teaching, research, citations, international profile, and industry income, and is one of the most comprehensive rankings in terms of assessment (Moed, 2017).

Given the impact of retractions on the scientific community, and consequently on WCUs, the present study aims to outline the profile of the scientific retractions published from 2010 to 2019 in journals indexed in the WoS by researchers from the top 20 WCUs in the THE 2020 global ranking.

The in-depth examination of scientific retractions by researchers linked to WCUs was motivated by two reasons. First, although previous studies provide valuable insights and evidence regarding retractions, none has examined in detail the retractions published by researchers linked to WCUs. Second, of the 20 selected WCUs, 14 are North American, and the US has the highest rate of publication and retraction globally (He, 2013), with similar shares of the world’s published and retracted articles, 32.3% and 29.8%, respectively (Tang et al., 2020).

Materials and methods

The Times Higher Education World University Ranking 2020 assessed a total of 1,400 universities in 92 countries. The indicators analyzed in the assessment are presented in Table 1. The ranking is available online free of charge on the THE website under the ‘Rankings’ tab by selecting ‘World University Rankings 2020’. All universities and countries assessed by the ranking were considered, but only the top 20 ranked universities were included in the present study.

To define our sample of scientific retractions published in the Web of Science database (WoS) from 2010 to 2019, we first searched for documents containing any of the following words, combined with the Boolean operator ‘OR’: retracted, retraction, withdrawal, and redress. The following document types were then selected: retracted publication, retraction, and correction. Next, each university was selected in the ‘organization’ category to identify the retractions affiliated with it. This process was repeated individually for each of the 20 selected universities. The search was carried out in May 2020.

After the search, the retractions published by each university were counted, disregarding duplicate documents, documents that could not be found, and documents outside the defined time interval. Retractions with authors from two or more of the selected universities were counted as one retraction for each university involved. Subsequently, the number of articles published in the WoS between 2010 and 2019 by each university was identified in order to calculate the proportion of retractions relative to published articles.
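The counting rules described above (discarding records that were not found, fall outside 2010 to 2019, or were returned by more than one per-university search, and counting a shared retraction once for each university involved) can be sketched in Python. This is a minimal illustration, not the authors' procedure, which used a spreadsheet; the `RetractionRecord` structure, its fields, the use of a unique identifier such as a DOI for de-duplication, and the toy records are all hypothetical assumptions, not study data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetractionRecord:
    """Hypothetical record exported from a per-university WoS search."""
    record_id: str                # assumed unique key, e.g. a DOI
    year: int                     # year the retraction notice was published
    universities: frozenset[str]  # selected universities among the affiliations
    found: bool = True            # False if the document could not be retrieved


def count_retractions(records, start=2010, end=2019):
    """Count retractions per university after the screening steps in the text."""
    seen = set()
    counts = {}
    for rec in records:
        # Skip documents that were not found or fall outside the study period.
        if not rec.found or not start <= rec.year <= end:
            continue
        # Skip duplicates (the same notice returned by more than one search).
        if rec.record_id in seen:
            continue
        seen.add(rec.record_id)
        # A notice shared by several selected universities counts once for each.
        for uni in rec.universities:
            counts[uni] = counts.get(uni, 0) + 1
    return counts


def retraction_rate(n_retractions, n_publications):
    """Retractions as a percentage of the articles published in the same period."""
    return 100 * n_retractions / n_publications


# Illustrative toy records (hypothetical, not taken from the study).
records = [
    RetractionRecord("doi-a", 2012, frozenset({"Harvard"})),
    RetractionRecord("doi-a", 2012, frozenset({"Harvard"})),          # duplicate
    RetractionRecord("doi-b", 2009, frozenset({"Duke"})),             # out of period
    RetractionRecord("doi-c", 2015, frozenset({"Duke", "Harvard"})),  # shared
    RetractionRecord("doi-d", 2018, frozenset({"Duke"}), found=False),
]
print(count_retractions(records))  # {'Harvard': 2, 'Duke': 1}
```

Keying de-duplication on a single identifier keeps the "count once per university" rule separate from the "drop repeated search hits" rule, which the text treats as distinct steps.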

Considering the selected retractions, it was possible to analyze the particularities of the journals in terms of impact factor, number of retractions, language of publication, and whether the journal is a member of COPE. The research also verified whether the person responsible for the retraction (authors, editor, or both) had been identified, and the time period between the article's publication and retraction. The reasons for retraction were categorized according to COPE (2009) guidelines, as shown in Table 2.

For the analysis of each variable, descriptive statistics (mean, frequency, and/or percentage) were calculated in a spreadsheet. In addition, Pearson’s correlation coefficient (for parametric data) was calculated between THE ranking and the article retraction rate. A significance level of 0.05 (p < 0.05) was adopted. For the magnitude of the correlation, we considered: weak between 0.1 and 0.3; moderate between 0.4 and 0.6; and strong between 0.7 and 0.9.
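As an illustration of the correlation step, the sketch below computes Pearson’s r for two equal-length samples and labels its magnitude using the bands stated above. It is a hypothetical Python sketch, not the spreadsheet actually used; values that fall in the gaps between the stated bands (for example, 0.35) are reported as unclassified, because the text does not assign them a category. In this study, `x` would hold the THE ranking positions and `y` the retraction rates from Table 4.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length (at least 2 values)")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def magnitude(r):
    """Label |r| with the bands given in the Methods; gaps stay unclassified."""
    a = abs(r)
    if 0.1 <= a <= 0.3:
        return "weak"
    if 0.4 <= a <= 0.6:
        return "moderate"
    if 0.7 <= a <= 0.9:
        return "strong"
    return "unclassified"


print(magnitude(0.54))  # moderate
```

Using the absolute value means the label describes strength only; the sign of r carries the direction of the relationship.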

In addition, a simple linear regression was used to assess whether THE ranking predicts the retraction rate. Because correlation alone has no predictive power, a regression model was chosen for this purpose.
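The regression step can be sketched with ordinary least squares: the slope and intercept minimize the sum of squared residuals, and the coefficient of determination R² gives the share of the variance in the retraction rate explained by ranking position. This is an illustrative Python sketch assuming a single predictor; the toy data are not study data. For simple linear regression, R² equals the square of Pearson’s r between the two variables.

```python
def simple_linear_regression(x, y):
    """Ordinary least squares fit y = intercept + slope * x; returns (slope, intercept, R^2)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    # R^2 = 1 - (residual sum of squares / total sum of squares).
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - my) ** 2 for b in y)
    return slope, intercept, 1 - ss_res / ss_tot


# Toy example (hypothetical values, not Table 4 data).
slope, intercept, r2 = simple_linear_regression([1, 2, 3, 4], [1, 3, 2, 4])
print(round(slope, 2), round(intercept, 2), round(r2, 2))  # 0.8 0.5 0.64
```

In the toy example r = 0.8, so R² = 0.64, which illustrates the R² = r² identity for a single predictor.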

Results

Characterization of universities

The 20 best universities as classified by THE 2020 were selected and are listed in Table 3, together with information about the country, year of foundation, and type of institution (public/private).

After the selection of universities, the process to identify retractions began, which provided a total of 397 retractions. Of these, nine did not occur within the established period, 53 were duplicates, and five were not found. Therefore, a total of 330 retractions were analyzed (period between 2010 and 2019).

Number of retractions, language of publication/retraction, COPE affiliation

The 330 retractions were published between 2010 and 2019 and refer to research published between 1997 and 2019 in journals with JCR impact factors between 0 and 70.67. The retracted articles were published predominantly in English (96.67% of the 330 retractions) and in journals affiliated with COPE (79.39%), as shown in Figs. 1 and 2.

During the evaluated period, 1.52% of the articles had up to three retractions and 8.18% of the articles had up to two retractions. The 20 best ranked WCUs had an average (µ) of 16.50 ± 12.90 retractions during the analyzed period.

Number of articles published, country of origin, number of retractions by university, and percentage of retractions by university

Together, the analyzed universities published a total of 1,535,266 articles, of which 330 were retracted, for a retraction rate of 0.021%. Table 4 shows the retraction rate relative to the total number of publications.
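The aggregate figures reported in the Results are simple ratios of the stated counts. The short Python check below, an illustrative addition that uses only values given in the text, reproduces the number of analyzed retractions after screening, the overall retraction rate, and the mean numbers of retractions per university and per year.

```python
# Screening counts reported in the Results section.
retrieved = 397
outside_period, duplicates, not_found = 9, 53, 5
analyzed = retrieved - outside_period - duplicates - not_found

publications = 1_535_266  # articles published by the 20 universities, 2010 to 2019
rate_pct = 100 * analyzed / publications

mean_per_university = analyzed / 20  # 20 universities
mean_per_year = analyzed / 10        # 10 years of retraction notices

print(analyzed, f"{rate_pct:.3f}%", mean_per_university, mean_per_year)
# 330 0.021% 16.5 33.0
```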

Duke University showed the highest retraction rate (0.056%), followed by Cornell University (0.037%); these two universities occupy the last two positions among the selected WCUs (20th and 19th, respectively).

As for the country of origin, 72.12% were from the US, 17.58% from the United Kingdom, 7.88% from Canada, and 2.42% from Switzerland.

Considering the absolute distribution of retractions per university from 2010 to 2019, that is, without normalizing by each university’s total number of publications indexed in the WoS, Harvard University had the largest share of retraction records among the institutions evaluated (17.87%). Duke University occupied the second position (10.30%), while the California Institute of Technology had the lowest share (0.61%).

Journal in which the retraction was published

Figure 3 shows the distribution of retractions per journal in the period from 2010 to 2019. Nature (5.15%) published the most retractions during the study period, followed by the Journal of Clinical Investigation (3.33%). The evaluated journals presented an average of 1.58 ± 1.65 retractions in the period evaluated.

Reason for retraction, time between the year of publication and retraction, and person responsible

Figure 4 shows the distribution of retractions by reason, while Fig. 5a–c shows the year of publication of the article, the year of retraction, and the time between publication and retraction. The main reason for retracting an article was data results (66.4%), a category that includes falsification, fabrication, and unreliable results.

On average, the evaluated universities published 16.5 ± 15.13 articles per year that were later retracted, representing 0.02 ± 0.01% of their publications. Most of the retracted articles were published in 2011 (14.55%) (Fig. 5a).

An average of 33 ± 14.50 retractions was published per year from 2010 to 2019, with the highest concentration in 2019 (16.67%) (Fig. 5b). Retractions occurred on average 3.20 ± 3.11 years after initial publication, most frequently after a shorter interval of two years (7.07%) (Fig. 5c).

Relationship between impact factor and retractions

Figure 6 shows the relationship between the journal impact factor (based on the 2018 and 2019 Journal Citation Reports, JCR) and retractions. Journals with an impact factor between 3 and 3.99 accounted for 16.36% (2019) and 13.94% (2018) of retractions, and journals with an impact factor between 10 and 19.99 accounted for 14.85% (2019) and 13.03% (2018).

Person responsible for the retraction

Figure 7 shows who was responsible for the retractions. In 43.64% of the retractions, the authors were identified as responsible.

Correlation and regression

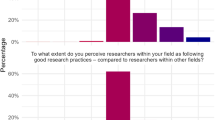

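Pearson's correlation coefficient showed a significant (p = 0.011), moderate, positive correlation (r = 0.54) between THE ranking position and the retraction rate of articles published by the analyzed universities. Because position 1 is the best in the ranking, a positive r means that universities in lower positions tended to have higher retraction rates. Figure 8 shows the linear regression of the retraction rate (%) on THE ranking.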

University rank explained 30.51% of the variability in the article retraction rate (the dependent variable). Thus, the better a university’s position in the ranking, the lower its retraction rate is likely to be.
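As a rough check that the reported correlation is significant at the 0.05 level, the t statistic for Pearson’s r can be computed as t = r√(n − 2) / √(1 − r²), with n − 2 degrees of freedom. The sketch below is an illustrative addition that assumes n = 20 universities and a two-tailed test, and applies the formula to the reported r = 0.54; the critical value of about 2.101 for 18 degrees of freedom comes from standard t tables.

```python
import math


def t_statistic(r, n):
    """t statistic for testing H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


T_CRITICAL_DF18 = 2.101  # two-tailed, alpha = 0.05, 18 degrees of freedom

t = t_statistic(0.54, 20)
print(round(t, 3), t > T_CRITICAL_DF18)  # 2.722 True
```

Since t exceeds the critical value, the correlation is significant at p < 0.05, in line with the result reported above.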

Discussion

This study provides a profile of the retractions of scientific articles indexed in the Web of Science database from the 20 best WCUs in the THE 2020 ranking. Harvard University published the most scientific articles and had the most retractions. However, when the retraction rate is considered, the better a university’s rank, the lower its retraction rate appears to be. The country with the highest incidence of retractions was the US (72.12%), which is also the country with the largest number of universities considered world-class (14). The journal with the most retracted publications was Nature. Most articles were written in English (96.67%) and published in COPE member journals (79.39%). The most common reason for retraction was data results (66.4%). Retraction took an average of 3.2 years, and authors were most often identified as responsible for the retraction (43.64%).

On average, the studied institutions retracted 0.021% of their published work. The institution with the lowest incidence was the California Institute of Technology (0.006%), and the institution with the highest was Duke University (0.056%). This average retraction rate is high compared with that reported by Almeida et al. (2016), who obtained an average of 0.005% for retractions in the SciELO and LILACS databases, and with rates reported for specific research areas: 0.008% for engineering (Rubbo, Helmann, et al., 2019); 0.014% for biology and medicine; 0.014% for multidisciplinary studies; 0.006% for other sciences; 0.002% for social sciences; and 0.001% for arts and humanities (Lu et al., 2013).

The predominant reason for retraction was data results (66.4%) followed by unethical research (20.0%). Corroborating the results of this study, Brainard (2018), who evaluated retractions between 1997 and 2015, pointed out that most retractions involved scientific fraud (fabrication, falsification, and plagiarism), in addition to false peer review. The study by Ajiferuke and Adekannbi (2020) pointed out that articles in the library and information sciences are withdrawn mainly due to plagiarism, duplication, non-reproducible results, and errors in data. Within anesthesiology, Nair et al. (2020) identified that the most common motive for withdrawals was fraud (49.4%), followed by lack of appropriate ethical approval (28%). Fang et al. (2012), when analyzing 2,047 retractions indexed in PubMed in biomedicine and life sciences, identified the following main reasons for retraction: 43.4% for fraud or suspected fraud; 21.3% for errors; 14.2% for redundant research; and 9.8% for plagiarism. Madlock-Brown and Eichmann (2015) identified three main reasons for retraction: 31% error; 17% redundant publication; and 16% plagiarism. Resnik and Dinse (2013), when investigating 119 retractions published by the U.S. Office of Research Integrity between the years 1992 and 2011, identified that retractions were motivated by falsification (44.5%); fabrication and falsification (40.3%); only plagiarism (5.9%); only fabrication (4.2%); and all three types of unethical research (5%). Wager and Williams (2011) analyzed 312 retractions from the Medline database, and identified the following main reasons for retraction: 28% for error; 17% for redundant publication; 16% for plagiarism; and 11% for irreproducible results. Almeida et al. (2016) and Van Noorden (2011) identified in their studies that most retractions are due to plagiarism.

In China, Lei and Zhang (2018) analyzed bibliometric information from 834 WoS retractions, showing that plagiarism, fraud, and false peer review explained three quarters of the published retractions. Most of these retractions corresponded to deliberate fraud, as demonstrated by retractions from reoffending authors. In the study by Van Leeuwen (2019), intentional misconduct was the reason for retraction in almost 55% of the cases analyzed, with regular errors accounting for 10% and negligence by the authors (issues of reproducibility, ethics, and authorship) implicated in about 11% of the retractions.

It should be noted that only 3.3% of the 330 analyzed retractions did not provide a reason. The ambiguous and evasive wording of some retraction notices can obscure the reasons (Brainard, 2018; Fang et al., 2012; Van Leeuwen & Luwel, 2014), probably because of embarrassment on the part of the authors or editors (Van Leeuwen & Luwel, 2014). The publication of a retraction is detrimental both to the authors’ academic careers (Van Noorden, 2011; Yadav et al., 2016; Zhang & Grieneisen, 2013) and to the journal, directly affecting its perceived reputation and reliability (Williams & Wager, 2013). However, a lack of clarity about the reason for retraction benefits neither the public perception of science nor the academic community itself (Van Leeuwen & Luwel, 2014).

In the present analysis, the average time between publication and retraction was 3.2 years. Dal-Ré and Ayuso (2019) found longer average times of 4.6 and 5.1 years, although their results were not statistically significant, while Rubbo et al. (2019) identified a shorter period of two years. According to Foo (2011), the time elapsed between the publication and withdrawal of an article has decreased since 2000.

With regard to journals, most publish in English (96.67%) and are members of COPE (79.39%), which is consistent with the results of Rubbo et al. (2019), who found that 94% of journals publish predominantly in English and 84.87% are affiliated with COPE. In addition, 76.55% of the journals had only one retraction and 11% had two. These results are similar to those reported by Steen (2011) and Rubbo et al. (2019), who found that approximately 68% of journals had only one retraction and 32% had two or more.

Regarding the impact factor, it was not possible to conclude that journals with a higher impact factor had more retractions. However, among the journals analyzed, Nature, with an impact factor of 42.778 (the fourth highest among the journals analyzed), had the highest number of retractions (5.15%), followed by the Journal of Clinical Investigation, with an impact factor of 11.864 and 3.33% of retractions. In a previous study, Rubbo et al. (2019) found that journals with a higher impact factor had a higher incidence of retraction, whereas Furman et al. (2012) and Lei and Zhang (2018) argue that journals with a lower impact factor are more likely to retract articles.

Resnik (2015) analyzed the retraction policies of 147 leading journals classified by impact factor and concluded that only 65% of the journals had retraction policies. Among these, 94% adhered to the policy that allows editors to withdraw articles without the authors’ consent.

Both editors and reviewers are responsible for preventing the publication of articles involving misconduct (Carafoli, 2015; Fox, 1994; Mojon-Azzi & Mojon, 2004; Stroebe et al., 2012). However, the editor is responsible for the final decision on the retraction of an article (Atwater, 2014; Wager et al., 2010; Williams & Wager, 2013). Retraction may also be initiated by the author(s) or jointly by authors and editors, and an article may be retracted even when one or all of the authors refuse to agree (Wager et al., 2010). The term ‘heroic act’ refers to retractions requested by the authors themselves (Alberts et al., 2015), which in the present study accounted for the largest share of the analyzed retractions (43.64%). These results are consistent with the work by Vuong (2020), who identified an increase in the number of author-initiated retractions between 2017 and 2018, suggesting growing concern for the reputation of science and a commitment to protecting the quality of the scientific literature. On the other hand, Vuong (2020), examining 2,046 articles retracted between 1975 and 2019 in Retraction Watch, showed that 15% of retractions were initiated by the authors and 53% did not specify who was responsible for the withdrawal. A smaller proportion of unspecified cases was found in the present study, in which only 25% did not identify the person responsible for the retraction, while Rubbo et al. (2019) showed that 64.29% of retractions were initiated by the editors, 17.23% did not identify the person responsible, 12.18% were requested by the authors, and 6.3% by both authors and editors.

Strengthening ethical conduct and establishing more severe punishments is a challenge for the scientific community, as it is a complex, self-regulating environment in which various actors interact in a range of roles, including as competitors, researchers, and reviewers (Lacetera & Zirulia, 2011). In this environment, retraction is seen as a paradox: for research groups, the consequences can include funding restrictions and dismissals; for authors, their names and reputations can be compromised; and journals can lose high-quality research and indexing in prominent databases (Hu et al., 2019). Thus, the stigma associated with retraction inhibits efforts to protect the integrity of the scientific literature (Brainard, 2018; Grieneisen & Zhang, 2012; Vuong, 2020). Rather than being treated as a means of disqualifying research, retractions should be seen as an academic tool for correction, improving the legitimacy of research indicators that take into account retractions and citations of retracted articles (Dobránszki & Silva, 2019).

To help reduce the number of fraudulent studies, the US National Academy of Sciences has issued five reports on scientific misconduct over the past 28 years. All reports presented the same recommendations, including: mandatory training in good research practices; improvements in the science reward system and stricter penalties; protection of whistleblowers (Kornfeld, 2018; Kornfeld & Titus, 2017); education in integrity and scientific ethics (Mumford et al., 2008); improved training in logic, probability, and statistics; uniform and transparent guidelines for retraction notices; changes in the peer review system and severe sanctions (Brainard, 2018; Fanelli et al., 2015; Fang et al., 2012; Lei & Zhang, 2018; Tang, 2019); and easing of the competitive pressure among scientists (Lacetera & Zirulia, 2011). Nevertheless, the academy remains without an effective action plan to address the serious problems of “fabrication (lying), falsification (cheating), and plagiarism (stealing) that continue to plague science” (Kornfeld, 2018, p. 1103).

Researchers and editors worldwide are being encouraged to establish regulations and structures to deal with cases of misconduct (Andersen & Wray, 2019; Fanelli et al., 2015; Resnik et al., 2015), because erroneous research results can cause real damage, including leading other researchers into unproductive lines of investigation and distributing resources unfairly (Dobránszki & Silva, 2019; Fang & Casadevall, 2011). However, joint efforts are still needed to improve these mechanisms and to raise researchers’ awareness of misconduct (Brainard, 2018; Grieneisen & Zhang, 2012).

Honesty and integrity in research are associated with sociological and psychological factors, such as specific policies and cultural and socioeconomic factors; peer influence; pressure to publish; and early-career reputation, with younger scientists more often implicated in retractions (Fanelli et al., 2015). On the other hand, Lacetera and Zirulia (2011) argue that researchers with a better reputation are more likely to publish a fraudulent article but less likely to be caught. Another explanation may lie in the fact that researchers from world-class universities are highly concerned about their own reputation and that of their institutions (Fanelli et al., 2015; Lacetera & Zirulia, 2011).

The results herein suggest that universities with a better position in the THE ranking have a lower rate of retraction of scientific articles. This finding corroborates Tang et al. (2020), who also analyzed WoS data and showed that publications by authors from the most prominent universities in the world are less prone to retraction, particularly for falsification, fabrication, or plagiarism. They found that only about one in five retracted articles (22.6%) involved at least one author from a top 100 ranked university, and that the chances of an article with at least one such author being retracted are 23% lower than for an article without one. This suggests that elite global universities tend to be associated with fewer retractions. Moreover, the time between publication and retraction for articles involving researchers from WCUs is generally short (Tang et al., 2020), possibly because research by leading scientists is widely read and therefore receives greater scrutiny. In the present study, however, which evaluated only WCUs, the average time to retraction was 3.2 years, a period that can be considered long given the potential negative impacts of scientific errors.
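The “23% lower” figure compares two retraction rates, not two shares of the retracted set, so it cannot be derived from the 22.6% share alone; it also requires the number of articles published with and without a top-ranked author. The sketch below assumes the comparison is a risk ratio (Tang et al. may instead have reported an odds ratio from a regression model, although for a rare event such as retraction the two are numerically close). The function name `risk_ratio` and all counts are hypothetical, chosen only so that the arithmetic reproduces a 23% reduction.

```python
def risk_ratio(retracted_a: int, total_a: int, retracted_b: int, total_b: int) -> float:
    """Retraction risk in group A divided by retraction risk in group B."""
    return (retracted_a / total_a) / (retracted_b / total_b)


# Hypothetical counts for illustration only (not data from Tang et al., 2020):
# group A = articles with at least one author from a top-100 university,
# group B = articles without such an author.
rr = risk_ratio(retracted_a=77, total_a=100_000, retracted_b=100, total_b=100_000)
percent_lower = (1 - rr) * 100  # relative reduction in retraction risk for group A

print(f"risk ratio = {rr:.2f}; retraction is {percent_lower:.0f}% less likely in group A")
```

A ratio below 1 means group A is retracted less often per published article; the reduction is read as one minus that ratio.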

Considering that WCUs are universities that excel in research and have a flexible and effective financing system, attracting investors and receiving donations from graduates and private institutions (Altbach, 2004), retractions can have an even more harmful impact on them. Although inconvenient for WCUs, this relationship between global rankings and retractions could be a good reason to hold universities responsible for research fraud, by clearly disclosing the findings and penalizing those responsible (Hu et al., 2019; Tang et al., 2020; Xin, 2009). Rankings, which encourage global competitiveness by attracting talent and funding and by stimulating excellence and productivity in research and citations, may also play a significant role in academia by promoting specific programs or actions that monitor scientific misconduct in universities (Shin, 2013).

The present study has some limitations. First, only research indexed in the Web of Science database was analyzed. Second, only the top 20 universities in the THE ranking were included. Third, the time between publication and retraction was measured in years, disregarding months. Fourth, the reasons for retraction were restricted to those indicated in the COPE guidelines. Finally, we did not examine whether universities and journals employ any measures to prevent unethical behavior in research. One avenue for future research is to examine the link between prolific authors and WCUs.
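The third limitation can be illustrated with a minimal sketch. It assumes, hypothetically, that measuring the lag “in years” means subtracting the publication year from the retraction year; the helper names and dates are invented for illustration. Under that rule, an article retracted about one month after publication and another retracted almost two years after publication both receive a lag of one year.

```python
from datetime import date


def lag_in_whole_years(published: date, retracted: date) -> int:
    """Lag as retraction year minus publication year (months ignored; assumed method)."""
    return retracted.year - published.year


def lag_in_months(published: date, retracted: date) -> int:
    """Lag in whole calendar months (days within each month ignored)."""
    return (retracted.year - published.year) * 12 + (retracted.month - published.month)


# Hypothetical (publication, retraction) dates, for illustration only.
examples = [
    (date(2015, 12, 1), date(2016, 1, 15)),   # about one month apart
    (date(2015, 1, 10), date(2016, 12, 20)),  # almost two years apart
]

for published, retracted in examples:
    years = lag_in_whole_years(published, retracted)
    months = lag_in_months(published, retracted)
    print(f"{published} -> {retracted}: {years} year(s) by calendar year, "
          f"{months / 12:.2f} years by month")
```

An average lag such as the 3.2 years reported here could therefore shift somewhat if it were recomputed with month-level dates.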

Conclusion

The results show that a better position in the THE ranking correlates with a lower rate of retraction; Duke University (20th position), for example, had the highest retraction percentage (0.056%). In absolute terms, however, Harvard University accounted for the largest share of the retractions among the institutions evaluated (17.88%), while the California Institute of Technology accounted for the smallest (0.61%). The journal that published the most retractions was Nature. Most of the retracted articles were written in English (96.67%) and published in journals that are members of COPE (79.39%). Finally, the main reason for retraction was data results, which accounted for 66.4% of the retractions.
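The Pearson correlation and simple linear regression named in the abstract can be sketched in a few lines. This is an illustration under stated assumptions, not the authors’ analysis: the retraction rate is assumed to be retractions as a percentage of a university’s WoS publications in the period, the THE position is treated as the independent variable, and the five universities and their counts are hypothetical. Because a lower position number means a better ranking, a positive r and a positive slope correspond to the pattern described above, in which better-ranked universities have lower retraction rates.

```python
import math


def retraction_rate(retractions: int, publications: int) -> float:
    """Retractions as a percentage of publications (assumed definition)."""
    return 100 * retractions / publications


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson's correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simple_linear_regression(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = intercept + slope * x; returns (slope, intercept)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return slope, my - slope * mx


# Hypothetical universities: (THE position, retractions, WoS publications). Not the study's data.
sample = [(1, 10, 50_000), (2, 12, 48_000), (3, 15, 45_000), (4, 14, 40_000), (5, 20, 38_000)]
positions = [float(p) for p, _, _ in sample]
rates = [retraction_rate(r, n) for _, r, n in sample]

r = pearson_r(positions, rates)
slope, intercept = simple_linear_regression(positions, rates)
print(f"Pearson r = {r:.3f}; rate (%) = {intercept:.4f} + {slope:.4f} * position")
```

With the study’s data, each of the 20 universities would contribute one (position, rate) pair, and the slope would estimate the change in retraction rate per step down the ranking.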

The study established a relationship between the standing of WCUs, which are strongly invested in high-impact research that requires strategic and increasingly competitive funding, and ethical conduct in research, which is currently monitored through scientific retractions. This relationship is pertinent because WCU status is achieved not only through research productivity but also through the quality of research and its contributions to society.

Data availability

The authors declare that all data from this research are available.

References

Ajiferuke, I., & Adekannbi, J. O. (2020). Correction and retraction practices in library and information science journals. Journal of Librarianship and Information Science, 52(1), 169–183.

Alberts, B., Cicerone, R. J., Fienberg, S. E., Kamb, A., McNutt, M., Nerem, R. M., & Zuber, M. T. (2015). Self-correction in science at work. Science, 348(6242), 1420–1422.

Altbach, P. (2004). The costs and benefits of world-class universities. Academe, 90(1), 20–23.

Altbach, P. G., & Hazelkorn, E. (2017). Pursuing rankings in the age of massification: For most—forget about it. International Higher Education, 89, 8–10.

Altbach, P. G., & Salmi, J. (Eds.). (2011). The road to academic excellence: The making of world-class research universities.

Amorim, L. (2011). Abaixo a má conduta na ciência [Down with misconduct in science]. Ciência Hoje. http://www.cienciahoje.org.br/noticia/v/ler/id/1647/n/abaixo_a_ma_conduta_na_ciencia

Andersen, L. E., & Wray, K. B. (2019). Detecting errors that result in retractions. Social Studies of Science, 49(6), 942–954.

Atwater, L. E., Mumford, M. D., Schriesheim, C. A., & Yammarino, F. J. (2014). Retraction of leadership articles: Causes and prevention. The Leadership Quarterly, 25(6), 1174–1180.

Bechhoefer, J. (2007). Plagiarism: Text-matching program offers an answer. Nature, 449(7163), 658–658.

Berlinck, R. G. (2011). The academic plagiarism and its punishments - A review. Revista Brasileira de Farmacognosia, 21(3), 365–372.

Bosch, X., Hernandez, C., Pericas, J. M., Doti, P., & Marušić, A. (2012). Misconduct policies in high impact biomedical journals. PLoS ONE, 7(12), e51928.

Boseley, S., & Davey, M. (2020, June 4). Covid-19: Lancet retracts paper that halted hydroxychloroquine trials. The Guardian. https://www.theguardian.com/world/2020/jun/04/covid-19-lancet-retracts-paper-that-halted-hydroxychloroquine-trials

Brainard, J. (2018). Rethinking retractions. Science, 362(6413), 390–393. https://doi.org/10.1126/science.362.6413.390

Broome, M. E. (2004). Self-plagiarism: Oxymoron, fair use, or scientific misconduct? Nursing Outlook, 52(6), 273–274.

Browman, H. (2019). Retraction Guidelines 2019 Update. COPE European Seminar 2019, Leiden, Netherlands. https://publicationethics.org/files/Retraction%20Guidelines%20presentation_Browman.pdf.

Carafoli, E. (2015). Scientific misconduct: The dark side of science. Rendiconti Lincei, 26(3), 369–382.

Cheng, Y., Wang, Q., & Liu, N. C. (2014). How world-class universities affect global higher education. In Y. Cheng, Q. Wang, & N. C. Liu (Eds.), How world-class universities affect global higher education (pp. 1–10). Springer: Rotterdam.

Cokol, M., Iossifov, I., Rodriguez-Esteban, R., & Rzhetsky, A. (2007). How many scientific papers should be retracted? EMBO reports, 8(5), 422–423.

COPE (Committee on Publication Ethics). (2020). Retraction Guidelines. https://publicationethics.org/retraction-guidelines

Dal-Ré, R., & Ayuso, C. (2019). Reasons for and time to retraction of genetics articles published between 1970 and 2018. Journal of Medical Genetics, 56(11), 734–740.

de Almeida, R. M. V. R., Rocha, K. A., Catelani, F., Fontes-Pereira, A. J., & Vasconcelos, S. M. R. (2016). Plagiarism allegations account for most retractions in major Latin American/Caribbean databases. Science and Engineering Ethics, 22(5), 1447–1456.

Deem, R., Mok, K. H., & Lucas, L. (2008). Transforming higher education in whose image? Exploring the concept of the ‘world-class’ university in Europe and Asia. Higher Education Policy, 21(1), 83–97.

Dobránszki, J., & da Silva, J. A. T. (2019). Corrective factors for author-and journal-based metrics impacted by citations to accommodate for retractions. Scientometrics, 121(1), 387–398.

Doğan, G., & Al, U. (2019). Is it possible to rank universities using fewer indicators? A study on five international university rankings. Aslib Journal of Information Management, 71(1), 18–37.

Elliott, T. L., Marquis, L. M., & Neal, C. S. (2013). Business ethics perspectives: Faculty plagiarism and fraud. Journal of Business Ethics, 112(1), 91–99.

Fanelli, D. (2009). How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS ONE, 4(5), e5738.

Fanelli, D., Costas, R., & Larivière, V. (2015). Misconduct policies, academic culture and career stage, not gender or pressures to publish, affect scientific integrity. PLoS ONE, 10(6), e0127556.

Fang, F. C., & Casadevall, A. (2011). Retracted science and the retraction index. Infection and Immunity, 79(10), 3855–3859.

Fang, F. C., Steen, R. G., & Casadevall, A. (2012). Misconduct accounts for the majority of retracted scientific publications. Proceedings of the National Academy of Sciences, 109(42), 17028–17033.

Fang, F. C., Bennett, J. W., & Casadevall, A. (2013). Males are overrepresented among life science researchers committing scientific misconduct. mBio, 4(1), e00640-12.

Fennell, C. (2019). Retractions, a publisher’s perspective: Daily realities & future challenges. COPE European Seminar 2019, Leiden, Netherlands. https://publicationethics.org/files/CFennell_COPE_Leiden.pdf

Foo, J. Y. A. (2011). A retrospective analysis of the trend of retracted publications in the field of biomedical and life sciences. Science and Engineering Ethics, 17(3), 459–468.

Fox, M. F. (1994). Scientific misconduct and editorial and peer review processes. The Journal of Higher Education, 65(3), 298–309.

Furman, J. L., Jensen, K., & Murray, F. (2012). Governing knowledge in the scientific community: Exploring the role of retractions in biomedicine. Research Policy, 41(2), 276–290.

Gasparyan, A. Y., Ayvazyan, L., Akazhanov, N. A., & Kitas, G. D. (2014). Self-correction in biomedical publications and the scientific impact. Croatian Medical Journal, 55(1), 61.

Grieneisen, M. L., & Zhang, M. (2012). A comprehensive survey of retracted articles from the scholarly literature. PLoS ONE, 7(10), e44118.

He, T. (2013). Retraction of global scientific publications from 2001 to 2010. Scientometrics, 96(2), 555–561.

Hu, G., Yang, Y., & Tang, L. (2019). Retraction and research integrity education in China. Science and Engineering Ethics, 25(1), 325–326.

Kornfeld, D. S. (2018). It’s time for action on research misconduct. Academic Medicine, 93(8), 1103.

Kornfeld, D. S., & Titus, S. L. (2017). More research won’t crack misconduct. Nature, 548(7665), 31–31.

Lacetera, N., & Zirulia, L. (2011). The economics of scientific misconduct. The Journal of Law, Economics, & Organization, 27(3), 568–603.

Lee, J. (2013). Creating world-class universities: Implications for developing countries. Prospects, 43(2), 233–249.

Lei, L., & Zhang, Y. (2018). Lack of improvement in scientific integrity: An analysis of WoS retractions by Chinese researchers (1997–2016). Science and Engineering Ethics, 24(5), 1409–1420.

Li, J. (2012). World-class higher education and the emerging Chinese model of the university. Prospects, 42(3), 319–339.

Lu, S. F., Jin, G. Z., Uzzi, B., & Jones, B. (2013). The retraction penalty: Evidence from the Web of Science. Scientific Reports, 3, 3146.

Madlock-Brown, C., & Eichmann, D. (2015). The (lack of) impact of retraction on citation networks. Science and Engineering Ethics, 21(1), 127–137.

Marginson, S. (2017). Do rankings drive better performance? International Higher Education, 89, 6–8.

Moed, H. F. (2017). A critical comparative analysis of five world university rankings. Scientometrics, 110(2), 967–990.

Mojon-Azzi, S. M., & Mojon, D. S. (2004). Scientific misconduct: From salami slicing to data fabrication. Ophthalmic Research, 36(1), 1–3.

Mongeon, P., & Larivière, V. (2016). Costly collaborations: The impact of scientific fraud on co-authors’ careers. Journal of the Association for Information Science and Technology, 67(3), 535–542.

Morris, H. (2011). Rankings and the reshaping of higher education: the battle for world class excellence. Studies in Higher Education, 36(6), 741–742.

Mumford, M. D., Connelly, S., Brown, R. P., Murphy, S. T., Hill, J. H., Antes, A. L., & Devenport, L. D. (2008). A sensemaking approach to ethics training for scientists: Preliminary evidence of training effectiveness. Ethics & Behavior, 18(4), 315–339.

Nair, S., Yean, C., Yoo, J., Leff, J., Delphin, E., & Adams, D. C. (2020). Reasons for article retraction in anesthesiology: A comprehensive analysis. Canadian Journal of Anesthesia/Journal canadien d’anesthésie, 67(1), 57–63.

Pilatti, L. A., & Cechin, M. R. (2018). Perfil das universidades brasileiras de e com potencial de classe mundial [Profile of Brazilian world-class universities and universities with world-class potential]. Avaliação, 23(1), 75–103.

Resnik, D. B., & Dinse, G. E. (2013). Scientific retractions and corrections related to misconduct findings. Journal of Medical Ethics, 39, 46–50.

Resnik, D. B., Wager, E., & Kissling, G. E. (2015). Retraction policies of top scientific journals ranked by impact factor. Journal of the Medical Library Association, 103(3), 136.

Rubbo, P., Helmann, C. L., Bilynkievycz dos Santos, C., & Pilatti, L. A. (2019a). Retractions in the engineering field: A study on the Web of Science database. Ethics & Behavior, 29(2), 141–155.

Rubbo, P., Pilatti, L. A., & Picinin, C. T. (2019b). Citation of retracted articles in engineering: A study of the Web of Science database. Ethics & Behavior, 29(8), 661–679.

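Russo, M. (2014). Ética e integridade na ciência: da responsabilidade do cientista a responsabilidade coletiva [Ethics and integrity in science: From the scientist’s responsibility to collective responsibility]. Estudos Avançados, 28(80), 189–198.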

Sharma, B. B., & Singh, V. (2011). Ethics in writing: Learning to stay away from plagiarism and scientific misconduct. Lung India, 28(2), 148–150.

Shin, J. C. (2013). The World-Class University: Concept and Policy Initiatives. In J. Shin & B. Kehm (Eds.), Institutionalization of World-Class University in Global Competition (pp. 17–32). Springer: Dordrecht.

Silva, J. A. T., & Dobránszki, J. (2017). Notices and policies for retractions, expressions of concern, errata and corrigenda: Their importance, content, and context. Science and Engineering Ethics, 23(2), 521–554.

Sox, H. C., & Rennie, D. (2006). Research misconduct, retraction, and cleansing the medical literature: lessons from the Poehlman case. Annals of Internal Medicine, 144(8), 609–613.

Steen, R. G. (2011). Retractions in the scientific literature: Is the incidence of research fraud increasing? Journal of Medical Ethics, 37, 249–253.

Steen, R. G., Casadevall, A., & Fang, F. C. (2013). Why has the number of scientific retractions increased? PLoS ONE, 8(7), e68397.

Stroebe, W., Postmes, T., & Spears, R. (2012). Scientific misconduct and the myth of self-correction in science. Perspectives on Psychological Science, 7(6), 670–688.

Tang, L. (2019). Five ways China must cultivate research integrity. Nature, 575, 589–591.

Tang, L., Hu, G., Sui, Y., Yang, Y., & Cao, C. (2020). Retraction: The “Other Face” of research collaboration? Science and Engineering Ethics, 26, 1681–1708.

Thomas, J. R., Nelson, J. K., & Silverman, S. J. (2002). Métodos de Pesquisa em Atividade Física [Research methods in physical activity]. Artmed Editora.

Van Leeuwen, T. (2019). Analysis of retracted papers: Initiators and reasons for retraction. COPE European Seminar 2019, Leiden, Netherlands.

Van Leeuwen, T. N., & Luwel, M. (2014). An in-depth analysis of papers retracted in the Web of Science. In E. Noyons (Ed.), “Context Counts: Pathways to Master Big and Little Data”. Proceedings of the science and technology indicators conference 2014 Leiden (pp. 337–344). Universiteit Leiden.

Van Noorden, R. (2011). Science publishing: The trouble with retractions. Nature, 478(7367), 26–28.

Vuong, Q. H. (2020). The limitations of retraction notices and the heroic acts of authors who correct the scholarly record: An analysis of retractions of papers published from 1975 to 2019. Learned Publishing, 33, 119–130.

Vuong, Q. H., La, V. P., Hồ, M. T., Vuong, T. T., & Ho, M. T. (2020). Characteristics of retracted articles based on retraction data from online sources through February 2019. Science Editing, 7(1), 34–44.

Wager, E., & Williams, P. (2011). Why and how do journals retract articles? An analysis of Medline retractions 1988–2008. Journal of Medical Ethics, 37(9), 567–570.

Wager, E., Barbour, V., Yentis, S., & Kleinert, S. (2010). Retractions: Guidance from the committee on publication ethics (COPE). International Journal of Polymer Analysis and Characterization, 15(1), 2–6.

Williams, P., & Wager, E. (2013). Exploring why and how journal editors retract articles: Findings from a qualitative study. Science and Engineering Ethics, 19(1), 1–11.

Xin, H. (2009). Retractions put spotlight on China’s part-time professor system. Science, 323(5919), 1280–1281.

Yadav, S., Rawal, G., & Baxi, M. (2016). Plagiarism-a serious scientific misconduct. International Journal of Health Science and Research, 6(2), 364–366.

Zhang, M., & Grieneisen, M. L. (2013). The impact of misconduct on the published medical and non-medical literature, and the news media. Scientometrics, 96(2), 573–587.

Funding

Not applicable.

Ethics declarations

Conflicts of interest

The authors declare that they have no competing interests.

About this article

Cite this article

Lievore, C., Rubbo, P., dos Santos, C.B. et al. Research ethics: a profile of retractions from world class universities. Scientometrics 126, 6871–6889 (2021). https://doi.org/10.1007/s11192-021-03987-y

DOI: https://doi.org/10.1007/s11192-021-03987-y