Abstract

In recent times, composite indicators have gained astounding popularity in a wide variety of research areas. Their adoption by global institutions has further captured the attention of the media and policymakers around the globe, and their number of applications has surged ever since. This increase in their popularity has solicited a plethora of methodological contributions in response to the substantial criticism surrounding their underlying framework. In this paper, we put composite indicators under the spotlight, examining the wide variety of methodological approaches in existence. In this way, we offer a more recent outlook on the advances made in this field over the past years. Despite the large sequence of steps required in the construction of composite indicators, we focus particularly on two of them, namely weighting and aggregation. We find that these are where the paramount criticism appears and where a promising future lies. Finally, we review the last step of the robustness analysis that follows their construction, to which less attention has been paid despite its importance. Overall, this study aims to provide both academics and practitioners in the field of composite indices with a synopsis of the choices available alongside their recent advances.

Similar content being viewed by others

1 Introduction

In the past decades, we have witnessed an enormous upsurge in available information, the extent and use of which are characterised by the founder of the World Economic Forum as the ‘Fourth Industrial Revolution’ (Schwab 2016, para. 2). While Schwab focuses on the use and future impact of these data—ranging from policy and business analysis to artificial intelligence—one of the key underlying points is that this enormous and exponential increase in available information hides another issue: the need for its interpretation and consolidation. Indeed, an ever-increasing variety of information, broadly speaking in the form of indicators, increases the difficulty involved in interpreting a complex system. To illustrate this, consider for example a phenomenon like well-being. In principle, it is a very complex concept that is particularly difficult to capture with only a single indicator (Decancq and Lugo 2013; Decancq and Schokkaert 2016; Patrizii et al. 2017). Hence, one should enlarge the range of indicators to encompass all the necessary information on a matter that is generally multidimensional in nature (Greco et al. 2016). However, in such a case, it would be very difficult for the public to understand ‘well-being’ by, say, identifying common trends among several individual indices. They would understand a complex concept more easily in the form of a sole number that encompasses this plethora of indicators (Saltelli 2007). Reasonably, this argument may raise more questions than it might answer. For instance, how would this number be produced? Which aspects of a concept would it encompass? How would they be aggregated into the form of a simple interpretation for the public and so on? This issue, and the questions that it raises, introduce the concept of ‘composite indicators’.

Defining ‘composite’ (sometimes also encountered as ‘synthetic’) indicators should be a straightforward task given their widespread use nowadays. Even though it appears that there is no single official definition to explain this concept, the literature provides a wide variety of definitions. According to the European Commission’s first state-of-the-art report (Saisana and Tarantola 2002, p. 5), composite indicators are ‘[…] based on sub-indicators that have no common meaningful unit of measurement and there is no obvious way of weighting these sub-indicators’. Freudenberg (2003, p. 5) identifies composite indicators as ‘synthetic indices of multiple individual indicators’. Another potential definition provided by the OECD’s first handbook for constructing composite indicators (Nardo et al. 2005, p. 8) is that a composite indicator ‘[…] is formed when individual indicators are compiled into a single index, on the basis of an underlying model of the multi-dimensional concept that is being measured’. This list of definitions could continue indefinitely. By pooling them together, a common pattern emerges and relates to the central idea of the landmark work of Rosen (1991). Essentially, a composite indicator might reflect a ‘complex system’ that consists of numerous ‘components’, making it easier to understand in full rather than reducing it back to its ‘spare parts’. Although this ‘complexity’, from a biologist’s viewpoint, refers to the causal impact that organisations exert on the system as a whole, the intended meaning here is astonishingly appropriate for the aim of composite indicators. After all, Rosen asserts that this ‘complexity’ is a universal and interdisciplinary feature.

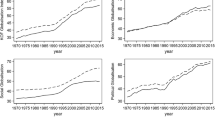

Despite their vague definition, composite indicators have gained astounding popularity in all areas of research. From social aspects to governance and the environment, the number of their applications is constantly growing at a rapid pace (Bandura 2005, 2008, 2011). For instance, Bandura (2011) identifies over 400 official composite indices that rank or assess a country according to some economic, political, social, or environmental measures. In a complementary report by the United Nations’ Development Programme, Yang (2014) documents over 100 composite measures of human progress. While these inventories are far from being exhaustive—compared with the actual number of applications in existence—they give us a good understanding of the popularity of composite indicators. Moreover, a search for ‘composite indicators’ in SCOPUS, conducted in January 2017, shows this trend (see Fig. 1). The increase over the past 20 years is exponential, and the number of yearly publications shows no sign of a decline. Moreover, their widespread adoption by global institutions (e.g. the OECD, World Bank, EU, etc.) has gradually captured the attention of the media and policymakers around the globe (Saltelli 2007), while their simplicity has further strengthened the case for their adoption in several practices.

Nevertheless, composite indicators have not always been so popular, and there was a time when considerable criticism surrounded their use (Sharpe 2004). In fact, according to the author, their very existence was responsible for the creation of two camps in the literature: aggregators versus non-aggregators. In brief,Footnote 1 the first group supports the construction of synthetic indices to describe an overall complex phenomenon, while the latter opposes it, claiming that the final product is statistically meaningless. While it seems idealistic to assume that this debate will ever be resolved (Saisana et al. 2005), it quickly drew the attention of policymakers and the public. Sharpe (2004) describes the example of the Human Development Index (HDI), which has received a vast amount of criticism since its creation due to the arbitrariness of its methodological framework (Ray 2008). However, it is the most well-known composite index to date. Moreover, it led the 1998 Nobel Prize-winning economist A. K. Sen, once one of the main critics of aggregators, to change his position due to the attention that the HDI attracted and the debate that it fostered afterwards (Saltelli 2007). He characterised it as a ‘success’ that would not have happened in the case of non-aggregation (Sharpe 2004, p. 11). Seemingly, this might be considered as the first win for the camp of aggregators. Nevertheless, the truth is that we are still far from settling the disputes and the criticism concerning the stages of the construction process (Saltelli 2007).

This is natural, as there are many stages in the construction process of a composite index and criticism could grow simultaneously regarding each of them (Booysen 2002). Moreover, if the procedure followed is not clear and reasonably justified to everyone, there is considerable room for manipulation of the outcome (Grupp and Mogee 2004; Grupp and Schubert 2010). Working towards a solution to this problem, the OECD (2008, p. 15) identifies a ten-step process, namely a ‘checklist’. Its aim is to establish a common guideline as a basis for the development of composite indices and to enhance the transparency and the soundness of the process. Undeniably, this checklist aids the developer in gaining a better understanding of the benefits and drawbacks of each choice and overall in achieving the kind of coherency required in the steps of constructing a composite index. In practice, though, this hardly reduces the criticism that an index might receive. This is because, even if one does indeed achieve perfect coherency (from choosing the theoretical framework to developing the final composite index), there might still be certain drawbacks in the methodological framework itself.

The purpose of this study is to review the literature with respect to the methodological framework used to construct a composite index. While the existing literature contains a number of reviews of composite indicators, the vast majority particularly focuses on covering the applications for a specific discipline. To be more precise, several reviews of composite indicators’ applications exist in the fields of sustainability (Bohringer and Jochem 2007; Singh et al. 2009, 2012; Pissourios 2013; Huang et al. 2015), the environment (Juwana et al. 2012; Wirehn et al. 2015), innovation (Grupp and Mogee 2004; Grupp and Schubert 2010), and tourism (Mendola and Volo 2017). However, the concept of composite indicators is interdisciplinary in nature, and it is applied to practically every area of research (Saisana and Tarantola 2002). Since the latest reviews on the methodological framework of composite indices were published a decade ago (Booysen 2002; Saisana and Tarantola 2002; Freudenberg 2003; Sharpe 2004; Nardo et al. 2005; OECD 2008) and a great number of new publications have appeared since then (see Fig. 1), we re-examine the literature focusing on the methodological framework of composite indicators and more specifically on the weighting, aggregation, and robustness steps. These steps are the focus of the paramount criticism as well as the recent development. In the following, Sect. 2 describes the weighting schemes found in the literature and Sect. 3 covers the step of aggregation. Section 4 provides an overview of the methods used for robustness checks following the construction of an index, and Sect. 5 contains a discussion and concluding remarks.

2 On the Weighting of Composite Indicators

The meaning of weighting in the construction of composite indicators is twofold (OECD 2008, pp. 31–33). First, it refers to the ‘explicit importance’ that is attributed to every criterion in a composite index. More specifically, a weight may be considered as a kind of coefficient that is attached to a criterion, exhibiting its importance relative to the rest of the criteria. Second, it relates to the implicit importance of the attributes, as this is shown by the ‘trade-off’ between the pairs of criteria in an aggregation process. A more detailed description of the latter and the difference between these two meanings is presented in Sect. 3, in which we describe the stage of aggregation and explain the distinction between ‘compensatory’ and ‘non-compensatory’ approaches.

Undeniably, the selection of weights might have a significant effect on the units ranked. For instance, Saisana et al. (2005) show that, in the case of the Technology Achievement Index, changing the weights of certain indicators seems to affect several of the units evaluated, especially those that are ranked in middle positions.Footnote 2 Grupp and Mogee (2004) and Grupp and Schubert (2010, p. 69) present two further cases of science and technology indicators, for which the country rankings could significantly change or otherwise be ‘manipulated’ in the case of different weighting schemes. This is a huge challenge in the construction of a composite indicator, often referred to as the ‘index problem’ (Rawls 1971). Basically, even if we reach an agreement about the indicators that are to be used, the question that follows—and the most ‘pernicious’ one (Freudenberg 2003)—is how a weighting scheme might be achieved. Although far from reaching a consensus (Cox et al. 1992), the literature tries to solve this puzzle in several ways. Before we venture further to analyse the weighting approaches in existence, we should first note that no weighting system is above criticism. Each approach has its benefits and drawbacks, and there is no ultimate case of a clear winner or a kind of ‘one-size-fits-all’ solution. On the contrary, it is up to the index developer to choose a weighting system that is best fitted to the purpose of the construction, as disclosed in the theoretical framework (see OECD 2008, p. 22).

2.1 No or Equal Weights

As simple as it sounds, the first option is not to distribute any weights to the indicators, otherwise called an ‘attributes-based weighting system’ (see e.g. Slottje 1991, pp. 686–688). This system may have two consequences. First, the overall score (index) could simply be the non-weighted arithmetic average of the normalised indicators (Booysen 2002; Singh et al. 2009; Karagiannis 2017). A common problem that appears here, though, is that of ‘double counting’Footnote 3 (Freudenberg 2003; OECD 2008). Of course, this issue might partially be moderated by averaging the collinear indicators as well prior to their aggregation into a composite (Kao et al. 2008). The second alternative in the absence of weights is that the composite index is equal to the sum of the individual rankings that each unit obtains in each of the sub-indicators (e.g. see the Information and Communication Technologies Index in Saisana and Tarantola 2002, p. 9). By relying solely on aggregating rankings, this approach fails to achieve the purpose of vastly improving the statistical information, as it does not benefit from the absolute level of information of the indicators (Saisana and Tarantola 2002).

Equal weighting is the most common scheme appearing in the development of composite indicators (Bandura 2008; OECD 2008). It is important to note here that the difference between distributing equal weights and not distributing weights at all (e.g. the ‘non-weighted arithmetic average’ discussed above) is that equal weighting schemes could be applied hierarchically. More specifically, if the indicators are grouped into a higher order (e.g. a dimension) and the weighting is distributed equally dimension-wise, then it does not necessarily mean that the individual indicators will have equal weights (OECD 2008). For instance, ISTAT (2015) provides the ‘BES’, a broad data set of 134 socio-economic indicators for the 20 Italian regions. These are unevenly grouped into 12 dimensions. If equal weights are applied to the highest hierarchy level (e.g. dimensions) a priori, then the sub-indicators are not weighted equally due to the different number of indices in each dimension. In general, there are various justifications for most applications choosing equal weights a priori. These include: (1) simplicity of construction, (2) a lack of theoretical structure to justify a differential weighting scheme, (3) no agreement between decision makers, (4) inadequate statistical and/or empirical knowledge, and, finally, (5) alleged objectivity (see Freudenberg 2003; OECD 2008; Maggino and Ruviglioni 2009; Decancq and Lugo 2013). Nevertheless, it is often found that equal weighting is not adequately justified (Greco et al. 2017). For instance, choosing equal weights due to the ‘simplicity of the construction’,Footnote 4 instead of an alternative scheme that is based on a proper theoretical and methodological framework, bears a huge oversimplification cost, especially in certain aggregation schemes (Paruolo et al. 2013). Furthermore, we could argue that, conceptually, equal weights miss the point of differentiating between essential and less important indicators by treating them all equally. In any case, the co-operation of experts and the public in an open debate might resolve the majority of the aforementioned justifications (Freudenberg 2003). Finally, considering equal weights as an ‘objective’ technique (relative to the ‘subjective’ exercise of a developer who sets the weights arbitrarily) is far from being undisputable. Quoting Chowdhury and Squire (2006, p. 762), setting weights to be equal ‘[seems] obviously convenient, but also universally considered to be wrong’. Ray (2008, p. 5) and Mikulić et al. (2015) claim that equal weighting is not only wrong—as it does not convey the realistic image—but also an equally ‘subjective judgement’ to other arbitrary weighting schemes in existence. This last argument prepares the scene for the consideration of a plurality of weighting systems, mainly related to the representation of the preferences of a ‘plurality of individuals’ (see e.g. Greco et al. 2017).

2.2 Plurality of Weighting Systems

Understandably, the decision maker could choose from a range of weighting schemes, depending on the structure and quality of the data or her beliefs. More specifically, in the first case, higher weighting could be assigned to indicators with broader coverage (as opposed to those with multiple cases of treated missing data) or those taken from more trustworthy sources, as a way to account for the quality of the indicators (Freudenberg 2003). However, an issue here is that this could result in a ‘biased selection’ in favour of proxies that are not able to identify and capture properly the information desired to measure (see e.g. Custance and Hillier 1998, pp. 284–285; OECD 2008, p. 32). Moreover, indicators should be chosen carefully a priori and according to a conceptual and quality framework (OECD 2008). Otherwise, a ‘garbage in–garbage out’ outcome may be produced (Funtowicz and Ravetz 1990), which in this case is that of a composite indicator reflecting ‘insincere’ dimensions in relation to those desired (Munda 2005a). When the weighting scheme is chosen by the developer of an index, naturally this means that it is conceived as ‘subjective’, since it relies purely on the developer’s perceptions (Booysen 2002). There are several participatory approaches in the literature to make this subjective exercise as transparent as possible. These involve a single or several stakeholders deciding on the weighting scheme to be chosen. Stakeholders could be expert analysts, policymakers, or even citizens to whom policies are addressed. From a social viewpoint, the combination of all of them in an open debate could be an ideal approach theoretically (Munda 2005b, 2007),Footnote 5 but it is only viable if a well-defined framework for a national policy exists (OECD 2008). Indeed, if one could imagine a framework on which policies will be based, enlarging the set of decision makers to include all the participants’ preferences is probably the desired outcome (Munda 2005a). However, if the objective is not well defined or the number of indicators is very large and it is probably impossible to reach a consensus about their importance, this procedure could result in an endless debate and disagreement between the participants (Saisana and Tarantola 2002). Moreover, if the objective involves an international comparison, in which no doubt the problem is significantly enlarged, common ground is even harder to achieve or simply ‘inconsistent outcomes’ may be produced (OECD 2008, p. 32). For instance, one country’s most important objective could be different from another country’s (e.g. economy vs environment). In general, participatory methods are seen as a conventional way for transparent and subjective judgements, and they could be effective and of great use when they fulfil the aforementioned requirements. However, since these techniques may yield alternative weighting schemes (Saisana et al. 2005),Footnote 6 one should carefully choose the most suitable according to their properties, of which we provide a brief overview in the following subsections.

2.2.1 Budget Allocation Process

In the budget allocation process (BAP), a set of chosen decision makers (e.g. a panel of experts) is given ‘n’ points to distribute to the indicators, or groups of indicators (e.g. dimensions), and then an average of the experts’ choices is used (Jesinghaus 1997).Footnote 7 Two prerequisites are the careful selection of the group of experts and the total number of indicators that will be evaluated. A rule of thumb is to have fewer than 10 indicators so that the approach is optimally executed cognitively. Otherwise, problems of inconsistency could be introduced (Saisana and Tarantola 2002). The BAP is used for estimating the weights in one of the Economic Freedom Indices (Gwartney et al. 1996) and by the European Commission (JRC) for the creation of the ‘e-Business Readiness Index’ (Pennoni et al. 2005) and the ‘Internal Market Index’ (Tarantola et al. 2004). Moreover, several studies in the literature use this method; for the most recent see, for example, Hermans et al. (2008), Couralet et al. (2011), Zhou et al. (2012), and Dur and Yigitcanlar (2015). A specific issue with the BAP arises during the process of indicator comparison. Decision makers might be led to ‘circular thinking’ (see e.g. Saisana et al. 2005, p. 314), the probability of which increases with the number of indicators to be evaluated. Circular thinking is both moderated and verifiable in the analytic hierarchy process (AHP), which is discussed in the following subsection.

2.2.2 Analytic Hierarchy Process

Originally introduced by Saaty in the 1970s (Saaty 1977, 1980), the AHP translates a complex problem into a hierarchy consisting of three levels: the ultimate goal, the criteria, and the alternatives (Ishizaka and Nemery 2013, pp. 13–14). Experts have to assign the importance of each criterion relative to the others. More specifically, pairwise comparisons among criteria are carried out by the decision makers. These are expressed on an ordinal scale with nine levels, ranging from ‘equally important’ to ‘much more important’, representing how many times more important one criterion is than another one.Footnote 8 The weights elicited with the AHP are less prone to errors of judgement, as discussed in the previous subsection. This happens because, in addition to setting the weights relatively, a consistency measure is introduced (namely the ‘inconsistency ratio’), assessing the cognitive intuition of decision makers in the pairwise comparison setting (OECD 2008). Despite its popularity as a technique to elicit weights (Singh et al. 2007; Hermans et al. 2008), it still suffers from the same problem as the BAP (Saisana and Tarantola 2002). That is, on the occasion that the number of indicators is very large, it exerts cognitive stress on decision makers, which in the AHP is amplified due to the pairwise comparisons required (Ishizaka 2012).

2.2.3 Conjoint Analysis

Conjoint analysis (CA) is commonly encountered in consumer research and marketing (Green et al. 2001; OECD 2008; Wind and Green 2013), but applications in the field of composite indices follow suit, mainly in the case of quality-of-life indicators (Ülengin et al. 2001, 2002; Malkina-Pykh and Pykh 2008). CA is a disaggregation method. It could be seen as the exact opposite of the AHP, as it moves from the overall priority to determining the weight of the criteria. More specifically, the model first seeks the preferences of individuals (e.g. experts or the public) regarding a set of alternatives (e.g. countries, firms, or products) and then decomposes them according to the individual indicators. Theoretically, the indicators’ weights are obtained via the calculation of the marginal rates of substitution of the overall probability function.Footnote 9 In practice we can derive the importance of a criterion by dividing the range of importance of that criterion in the respondent’s opinion by the total sum of ranges of all the criteria (Maggino and Ruviglioni 2009). While it might seem easier to obtain a preference estimation of the ultimate objective first and then search for the importance of its determinants (in contrast to the AHP), CA carries alternative limitations. Its major drawbacks are its overall complexity, the requirement of a large sample, and an overall pre-specified utility function, which is very difficult to estimate (OECD 2008; Wind and Green 2013).

What one might derive from the above section is that participatory techniques are helpful tools overall. They make the subjectivity behind the process of weighting the indicators controllable and, most importantly, transparent. In fact, this whole act of gathering a panel consisting of experts, policymakers, or even citizens, who will mutually decide on the importance of the factors at stake, is a natural and desired behaviour in a society (Munda 2005a). Nevertheless, it is rather difficult to apply in contexts in which the phenomena to be measured are not well defined and/or the number of underlying indicators is very large. These approaches then stop being consistent, and they ultimately become unmanageable and ineffective. What is more, in the case in which the participatory audience does not clearly understand a framework (e.g. to evaluate the importance of an indicator/phenomenon or what it actually represents), these methods would lead to biased results (OECD 2008).

2.3 Data-Driven Weights

In the aftermath of participatory approaches, this ‘subjectivity’ behind the arbitrariness in decision makers’ weight selection is dismissed by other statistical methods that claim to be more ‘objective’.Footnote 10 This property is increasingly claimed to be desirable in the choice of weights (Ray 2008), thus stirring up interest in approaches like correlation analysis or regression analysis, principal component analysis (PCA) or factor analysis (FA), and data envelopment analysis (DEA) models and their variations. These so-called ‘data-driven techniques’ (Decancq and Lugo 2013, p. 19), as their name suggests, emerge from the data themselves under a specific mathematical function. Therefore, it is often argued that they potentially do not suffer from the aforementioned problems of ‘manipulation’ of the results (Grupp and Mogee 2004, p. 1382) and the subjective, direct weighting exercise of various decision makers (Ray 2008, p. 9). However, these approaches bear a different kind of criticism, deeply rooted in the core of their philosophy. More specifically, Decancq and Lugo (2013, p. 9) distinguish these techniques from the aforementioned ones based on the ‘is–ought’ distinction that is found in the work of a notable philosopher of the eighteenth century, David Hume. In the authors’ words: ‘it is impossible to derive a statement about values from a statement about facts’ (p. 9). In other words, they claim that one should be very cautious in deriving the importance of a concept (e.g. indicator/dimension) based on what the data ‘consider’ to be a fact, as this appears to be the ‘is’ that we observe but not the ‘ought’ that we are seeking. After all, statistical relationships between indicators—for example in the form of correlation—do not always represent the actual influence between them (Saisana and Tarantola 2002). This appears to be one side of the criticism that these approaches receive, and it is related to the philosophical aspect underlying their use. Further criticism appearing in the literature is focused on their specific properties, which we will examine individually in the following subsections.

2.3.1 Correlation Analysis

Correlation analysis is mostly used in the first steps of the construction process to examine the structure and the dynamics of the indicators in the data set (Booysen 2002; OECD 2008). For instance, it might determine a very strong correlation between two sub-indicators within a dimension, which, depending on the school of thought (e.g. see Saisana et al. 2005, p. 314), may then be moderated by accounting for it in the weighting step (OECD 2008; Maggino and Ruviglioni 2009). Nevertheless, this approach might still serve as a tool to obtain objective weights (Ray 2008). According to the author, there are two ways in which weights might be elicited using correlation analysis. The first is based on a simple correlation matrix, with the indicator weights being proportional to the sum of the absolute values of that row or column, respectively. In the second method, known as ‘capacity of information’ (Hellwig 1969; Ray 1989), first the developer chooses a distinctive variable in the data set that, according to the author, plays the role of an endogenous criterion. Then the developer computes the correlation of each indicator with that distinctive variable. These correlation coefficients are used to determine the weights of the indicators, with those having the highest correlation accordingly gaining the highest weights. More specifically, an indicator’s weight is given by the ratio of the squared correlation coefficient of that indicator with the distinctive variable to the sum of the squared correlation coefficients of the rest of the indicators with that variable (Ray 2008). One issue with both the aforementioned uses is that the correlation could be statistically insignificant. Moreover, even if statistical significance applies, it does not imply causality but rather shows a similar or opposite co-movement between indicators (Freudenberg 2003; OECD 2008).

2.3.2 Multiple Linear Regression Analysis

Multiple linear regression analysis is another approach through which weights can be elicited. By moving beyond simple statistical correlation, the decision maker is able to explore the causal link between the sub-indicators and a chosen output indicator. However, this raises two concerns that the developer must bear in mind. First, these models assume strict linearity, which is hardly the norm with composite indices (Saisana et al. 2005). Second, if there was an objective and effective output measure for the sub-indicators to be regressed on, there would not be a need for a composite index in the first place (Saisana and Tarantola 2002). With respect to the latter, according to the authors, an indicator that is generally assumed to capture the wider phenomenon to be studied might be used. For instance, in the National Innovative Capacity Index (Porter and Stern 2001), the dependent variable used in the regression analysis is the log of patents. The authors argue that this is a broadly accepted variable in the literature, as it sufficiently captures the levels of innovation in a country. In the absence of such a specific indicator, the gross national product per capita could serve as a more generalised variable (Ray 2008), as it is often linked to most socio-economic aspects that a composite index might be aiming to measure. However, that would dismiss the whole momentum that composite indicators have gained by refraining from following the common approach of solely economic output (Costanza et al. 2009; Stiglitz et al. 2009; Decancq and Schokkaert 2016; Patrizii et al. 2017). Finally, in the case in which a developer has multiple such output variables, canonical correlation analysis could be used, which is a generalisation of the previous case (see e.g. Saisana and Tarantola 2002, p. 53).

2.3.3 Principal Component Analysis and Factor Analysis

Principal component analysis (PCA; Pearson 1901) and factor analysis (FA; Spearman 1904) are statistical approaches with the aim of reductionism. More specifically, the core of their philosophy is to capture the highest variance possible in the original variables (standardised for this purpose) with as few components as possible (Ram 1982). In PCA the original data may be described by a series of equations, as many as the number of indicators. These equations essentially represent linear transformations of the original data, constructed in such a way that the maximum variance of the original variables is explained with the first equation, the second-highest variance (which is not explained by the first equation) is explained by the second equation, and so on. In FA the outcome is rather similar, but the idea is somewhat different. Here the original data supposedly depend on underlying common and specific factors, which can possibly explain the variance in the original data set. FA is slightly more complex than PCA in the sense that it involves an additional step, in which a choice has to be made by the developer (e.g. the choice of an extraction method). Finally, for both PCA and FA, certain choices must be made by the decision maker; hence, subjectivity is introduced to a certain degree. These choices involve the number of components/factors to be retained or the rotation method to be used. Nonetheless, several criteria or rules of thumb exist in the literature for each of the two approaches to facilitate the proper choice (e.g. see OECD 2008, pp. 66–67 and p. 70).

In general, there are several applications using FA or PCA to elicit the weights for the indicators, especially in the context of well-being and poverty.Footnote 11 One of the first applications is that of Ram (1982), using PCA in the case of a physical quality-of-life indicator, followed by Noorbakhsh (1996), who uses PCA to weigh the components of HDI. Naturally, further applications follow suit both in the literature (Klasen 2000; McGillivray 2005; Dreher 2006) and in official indicators provided by large organisations (e.g. the Internal Market Index, Science and Technology Indicator, and Business Climate Indicator, see Saisana and Tarantola 2002; the Environmental Degradation Index, see Bandura 2008). The standard procedure in using PCA as a weight elicitation technique is to use the factor loadings of the first component to serve as weights for the indicators (Greyling and Tregenna 2016). However, sometimes the first component alone is not adequate to explain a large portion of the variance of the indicators; thus, more components are needed. Nicoletti et al. (2000) develop indicators of product market regulation, illustrating how these can be accomplished using FA. The authors use PCA as the extraction method and rotate the components with the varimax technique, in this way minimising the number of indicators with high loadings on each component. By considering the factor loadings of all the retained factors (see Nicoletti et al. 2000, pp. 19–22), this allows the preservation of the largest proportion of the variation in the original data set.

This method is frequently used in composite indicators produced by large organisations (e.g. the Business Climate Indicator, Relative Intensity of Regional Problems in the Community, and General Indicator of Science and Technology, see Saisana and Tarantola 2002) and can be found in several studies in the literature (Mariano and Murasawa 2003; Gupta 2008; Hermans et al. 2008; Ediger and Berk 2011; Salvati and Carlucci 2014; Riedler et al. 2015; Li et al. 2016; Tapia et al. 2017). However, according to Saisana and Tarantola (2002), the use of these approaches is not feasible in certain cases, due to either negative weights assigned (e.g. the Environmental Sustainability Index) or a very low correlation among the indicators (e.g. synthetic environmental indices). Finally, PCA can be used for cases in which the elicitation of weights is not the main goal. For instance, Ogwang and Abdou (2003) review the use of these models in selecting the ‘principal variables’. More specifically, PCA/FA could be used to select a single or a subset of variables to include in the construction of a composite index that can explain the variation of the overall data set adequately. Thus, they could serve as an aiding tool, enabling the developer to gain a better understanding of the dimensionality in the considered phenomenon or the structure of the indicators accordingly.

Understandably, these approaches might seem popular (e.g. with respect to their use in the literature) and convenient (e.g. with respect to the objectivity and transparency in their process). Nevertheless, it is important to note a few issues relating to their use at this point. First, property-wise, the use of PCA/FA involves the assumptions of having continuous indicators and a linear relationship among them. In the case in which these assumptions do not hold, the use of non-linear PCA (or otherwise categorical PCA; CATPCA) is suggested (see e.g. Greyling and Tregenna 2016, p. 893). Second, the nature and philosophy of these approaches rely on the statistical properties of the data, which can be seen as both an advantage and a drawback. For instance, this reductionism could be proven to be very useful in some cases in which problems of ‘double counting’ exist. On the other hand, if there is no correlation between the indicators or the variation of a variable is very small, these techniques might even fail to work.Footnote 12 Furthermore, the weights that are assigned endogenously by PCA/FA do not necessarily correspond to the actual linkages among the indicators, particularly statistical ones (Saisana and Tarantola 2002). Therefore, one should be cautious about how to interpret these weights and especially about the extent to which one might use these methods, as the truth is that they do not necessarily reflect a sound theoretical framework (De Muro et al. 2011). Additionally, a general issue with both these approaches is that they are sensitive to the construction of the data. More specifically, if, in an evaluation exercise using PCA/FA, several units are added or subtracted afterwards (especially outliers), this may significantly change the weights that are used to construct the overall index (Nicoletti et al. 2000). However, this issue is addressed with robust variations of PCA (e.g. see Ruymgaart 1981; Li and Chen 1985; Hubert et al. 2005). Finally, with the obtained weights being inconsistent over time and space, the comparison might eventually prove to be very difficult (De Muro et al. 2011, p. 6).

2.3.4 Data Envelopment Analysis (DEA)

Originally developed by Charnes et al. (1978), DEA uses mathematical programming to measure the relative performance of several units (e.g. businesses, institutions, countries, etc.), and hence to evaluate them, based on a so-called ‘efficiency’ score (see Cooper et al. 2000). This score is obtained by a ratio (the weighted sum of outputs to the weighted sum of inputs) that is computed for every unit under a minimisation/maximisation function set by the developer. From this linear programming formulation, a set of weights (one for each unit) is endogenously determined in such a way as to maximise their ‘efficiency’ under some given constraints (Hermans et al. 2008). According to Mahlberg and Obersteiner (2001, in Despotis 2005a, p. 970), the first authors to propose the use of DEA in the HDI context, this approach constitutes a more realistic application, because each country is ‘benchmarked against best practice countries’. In the context of composite indicators, the classic DEA formulation is adjusted, as usually all the indicators are treated as outputs, thereby considering no inputs (see Hermans et al. 2008). Therefore, the denominator of the abovementioned ratio—that is, the weighted inputs of the units—comprises a dummy variable equal to one, whereas the nominator—that is, the weighted outputs—comprises a weighted sum of the indicators that forms the overall composite index (Yang et al. 2017). In this field, this model is mostly referred to as the classic ‘benefit-of-the-doubt’ approach (Cherchye 2001; Cherchye et al. 2004, 2007), originally introduced by Melyn and Moesen (1991) in a context of macroeconomic evaluation.

Due to the desirable properties of the endogenously calculated differential weighting, applications in the literature follow suit (e.g. Takamura and Tone 2003; Despotis 2005a; Murias et al. 2006; Zhou et al. 2007; Cherchye et al. 2008; Hermans et al. 2008; Antonio and Martin 2012; Gaaloul and Khalfallah 2014; Martin et al. 2017). Indeed, the differential weighting scheme between units (e.g. countries) is potentially a desirable property for policymakers, because each unit chooses its own weights in such a way as to maximise its performance.Footnote 13 Thus, any potential conflicts, for example the chosen weights not favouring any unit, are in fact dismissed (Yang et al. 2017). This is a key reason for the huge success of this approach (Cherchye et al. 2007, 2008). To understand this argument better, one may consider the following example of two countries. Let us imagine that these countries have different policy goals for different areas (e.g. economy vs environment); thus, each spends its resources accordingly. Potentially, they could perform better in different areas precisely for that particular reason. Therefore, in a weighting exercise, each country would choose to weigh significantly higher those exact dimensions on which it performs better to reflect that effect. However, this argument is criticised for the following reasons. First, on a theoretical basis, this approach dismisses one of the three basic requirements in social choice theory, which acts as a response to Arrow’s theorem (Arrow 1963): ‘neutrality’. In brief, neutrality states that ‘all alternatives (e.g. countries) must be treated equally’ (OECD 2008, p. 105).Footnote 14 Second, if we indeed accept that each unit could declare its own preferences in the weighting process, for example according to the different policies that they follow (Cherchye et al. 2007, 2008)—thus entirely dismissing the ‘neutrality’ principle—another problem that arises in the process is related to the calculation of these weights. More specifically, consider an example of a DEA approach, in which the desired output is the maximisation of the value of the composite index from each unit’s perspective. Executing this technique with the basic constraints (e.g. see Despotis 2005a, or Cherchye et al. 2007) will probably result in all the weighting capacity being assigned to the indicator with the highest value (e.g. see Hermans et al. 2008, pp. 1340–1341). Furthermore, since these DEA models are output-maximised, holding the unitary input constant, it often occurs that, in the absence of further constraints, after the maximisation/minimisation process, a multiplicity of equilibria is introduced (Fusco 2015, p. 622). Meanwhile, the majority of the units evaluated will be deemed to be efficient (e.g. they are assigned a value equal to ‘1’)Footnote 15 (Zhou et al. 2007; Decancq and Lugo 2013; Yang et al. 2017).

A simple solution to this problem is for more constraints to be placed by the decision maker, controlling, for instance, the lower and upper bounds of the weights of each indicator or group of indicators (e.g. dimensions).Footnote 16 For instance, Hermans et al. (2008) ask a panel of experts to assign weights to several indicators, using their opinions as binding constraints on the weights to be chosen by the DEA model. In the absence of information on such restrictions, the classic BoD model could be transformed into a ‘pessimistic’ one (Zhou et al. 2007; Rogge 2012). More specifically, while the classic BoD model finds the most favourable weights for each unit, the ‘pessimistic’ BoD model finds the least favourable weights. They are afterwards combined (either by a weighted or by a non-weighted average) to form a single, final index score (Zhou et al. 2007). There are several other methods in the literatureFootnote 17 that deal with the issue of adjusting the discrimination in BoD models, the most popular being the super-efficiency (Andersen and Petersen 1993), cross-efficiency (Sexton et al. 1986; Doyle and Green 1994; Green et al. 1996), PCA-DEA (Adler and Yazhemsky 2010), and DEA entropy (Nissi and Sarra 2016) models.

Another issue with most BoD models regards the differential weighting inherent in the process. The beneficial weights obtained by the model prove to be a challenge when comparability among the units is at stake. More specifically, each unit has a different set of weights, making it difficult to compare them by simply looking at the overall score. For this reason, a number of techniques exist in the literature that arrive at a common weighting scheme (e.g. see, among others, Despotis 2005a; Hatefi and Torabi 2010; Kao 2010; Morais and Camanho 2011; Sun et al. 2013). Of course, this rather decreases the desirability of this method—that of favourable weights in the eyes of policymakers—based on which this approach gained such momentum in the first place (Decancq and Lugo 2013).

Finally, we will discuss some recent developments in this area regarding the function or type of aggregation. More specifically, with respect to the aggregation function, while the classic BoD model is often specified as a weighted sum, recent studies present multiplicative forms merely to account for the issue of complete compensation, as it is introduced in the basic model of the weighted sum (e.g. see Blancas et al. 2013; Giambona and Vassallo 2014; Tofallis 2014; van Puyenbroeck and Rogge 2017). With respect to the type of aggregation, Rogge (2017), based on an earlier work of Färe and Zelenyuk (2003),Footnote 18 puts forward the idea of aggregating individual composite indicators into groups of composite indices. According to the author, one could be interested in analysing the performance of a cluster of individual units (e.g. groups of countries) rather than simply examining the units themselves. After the individual units’ performance is determined through classic BoD, a second aggregation takes place, again through BoD, but this time the indicators are the scores of countries, obtained in the previous step, and the weights reflect the shares of units in the aggregate form.

3 On the Aggregation of Composite Indicators

Weighting the indicators naturally leads to the final step in forming a composite index: ‘aggregation’. According to the latest handbook on constructing composite indices, aggregation methods may be divided into three distinctive categories: linear, geometric, and multi-criteria (see OECD 2008, p. 31, Table 4). However, this division might send a somewhat misleading message, since all these methods are included in the multi-criteria decision analysis framework.Footnote 19 Another distinctive categorisation of the aggregation methods in the literature would be that of choosing between ‘compensatory’ and ‘non-compensatory’ approaches (Munda 2005b). As we highlighted at the beginning of the previous section, the interpretation of the weights could be twofold: ‘trade-offs’ or ‘importance coefficients’.Footnote 20 The choice of the proper annotation, though, essentially boils down to the choice of the proper aggregation method (Munda 2005a, p. 118; OECD 2008, p. 33). Quoting the latter: ‘To ensure that weights remain a measure of importance, other aggregation methods should be used, in particular methods that do not allow compensability’. In other words, ‘compensability’ is inseparably connected with the term ‘trade-off’ (and vice versa), and, as a result, its very definition is presented as such (Bouyssou 1986). According to the author (p. 151): ‘A preference relation is non-compensatory if no trade-offs occur and is compensatory otherwise. The definition of compensation therefore boils down to that of a trade-off’. Consequently, according to the latter categorisation of aggregation approaches (i.e. that of ‘compensatory’ and ‘non-compensatory’), the linearFootnote 21 and geometricFootnote 22 aggregation schemes lie within the ‘compensatory’ aggregation scheme, while the ‘non-compensatory’ aggregation scheme contains other multi-criteria approaches, considering preferential relationships from the pairwise comparisons of the indicators (e.g. see OECD 2008, pp. 112–113). Similar to the issue of a non-existent perfect weighting scheme, there is no such thing as a ‘perfect aggregation’ scheme (Arrow 1963; Arrow and Raynaud 1986). Each approach is mostly fit for a different purpose and involves some benefits and drawbacks accordingly. In the following two subsections, we provide a brief overview of this situation by analysing the two aggregation settings and their properties, respectively.

3.1 Compensatory Aggregation

Among the compensatory aggregation approaches, the linear one is the most commonly used in composite indicators (Saisana and Tarantola 2002; Freudenberg 2003; OECD 2008; Bandura 2008, 2011). Two general issues must be considered in this additive utility-based approach. The first is that it assumes ‘preferential independence’ among indicators (OECD 2008, p. 103; Fusco 2015, p. 621), something that is conceptually considered as a very strong assumption to make (Ting 1971). Second, there is a chasm between the two perceptions of weights, translated into importance measures and trade-offs. More specifically, if one sets the weights by considering them as importance measures for the indicators, one will soon find that this is far from actually happening in this aggregation setting, and this situation is the norm rather than the exception (Anderson and Zalinski 1988; Munda and Nardo 2005; Billaut et al. 2010; Rowley et al. 2012; Paruolo et al. 2013). Quoting the latter (p. 611): ‘This gives rise to a paradox, of weights being perceived by users as reflecting the importance of a variable, where this perception can be grossly off the mark’. This happens because the weights in this setting should be perceived as trade-offs between pairs of indicators and therefore assigned as such from the very beginning. Decancq and Lugo (2013) stress this point by showing how weights in this setting express the marginal rates of substitution among pairs of indicators. Understandably, this trade-off implies constant compensability between indicators and dimensions; thus, a unit could compensate for the loss in one dimension with a gain in another (OECD 2008; Munda and Nardo 2009). This, however, is far from desirable in certain cases. For instance, Munda (2012, p. 338) considers an example of a hypothetical sustainability index, in which economic growth could compensate for a loss in the environmental dimension in the case of a compensatory approach. Of course, this argument could easily be extended to applications in other socio-economic areas,Footnote 23 albeit with the following point: constant compensation is always assumed in linear aggregation at the rate of substitution among pairs of indicators (e.g. wa/wb) (Decancq and Lugo 2013, p. 17). That is something that should be taken into consideration at the very beginning of the construction stage, the theoretical framework (OECD 2008).

One partial solution to that issue could be to use geometric aggregation instead. This approach is adopted when the developer of an index prefers only ‘some’ degree of compensability (OECD 2008, p. 32). While linear aggregation assumes constant trade-offs for all cases, geometric aggregation offers inferior compensability for indices with lower values (diminishing returns) (van Puyenbroeck and Rogge 2017). This makes it far more appealing in a benchmarking exercise in which, for instance, regions with lower scores in a given dimension will not be able to compensate fully in other dimensions (Greco et al. 2017). Moreover, the same regions could be even more motivated to increase their lower scores, as the marginal increase in these indicators will be much higher in contrast to regions that already achieve high scores (Munda and Nardo 2005). Therefore, under these circumstances, a switch from linear to geometric aggregation could even be considered both appealing and more realistic. One such case is that of probably the most well-known composite index to date, the Human Development Index (HDI). Having received paramount criticism (Desai 1991; Sagar and Najam 1998; Chowdhury and Squire 2006; Ray 2008; Davies 2009), the developers of the HDI switched the aggregation function from linear to geometric in 2010, addressing one of their main methodological criticisms. More specifically, in their yearly report (UNDP 2010, p. 216), they state the following: ‘It thus addresses one of the most serious criticisms of the linear aggregation formula, which allowed for perfect substitution across dimensions’. There is no doubt that, compared with the linear type of aggregation, geometric is the solid first step towards a solution to the issue of an index’s compensability. In fact, it is argued that, under such circumstances, it provides more meaningful results (see e.g. Ebert and Welsch 2004). However, this still appears to be only a partial solution or a ‘trade-off’ between compensatory and non-compensatory techniques (Zhou et al. 2010, p. 171). Therefore, if complete ‘inelasticity’ of compensation, or the meaning of weights to be interpreted solely as ‘importance coefficients’, is the actual objective of a composite index, a non-compensatory approach is ideal and strongly suggested to be reconsidered (Paruolo et al. 2013, p. 632).

3.2 Non-compensatory Aggregation

Non-compensatory aggregation techniques (Vansnick 1990; Vincke 1992; Roy 1996) are mainly based on ELECTRE methods (see e.g. Figueira et al. 2013, 2016) and PROMETHEE methods (Brans and Vincke 1985; Brans and De Smet 2016). Given the weights for each criterion (interpreted as ‘importance coefficients’ in this exercise) and some other preference parameters (e.g. indifference, preference, and veto thresholds), the mathematical aggregation is divided into the following steps: (1) ‘pair-wise comparison of units according to the whole set of indicators’ and (2) ‘ranking of units in a partial, or complete pre-order’ (Munda and Nardo 2009, p. 1516). The first step creates the ‘outranking matrix’Footnote 24 (Roy and Vincke 1984), which essentially discloses the pairwise comparisons of the alternatives (e.g. countries) for each criterion (Munda and Nardo 2009). Moving to the second step (i.e. the exploitation procedure of the outranking matrix), an approach must be selected regarding the proper aggregation. The exploitation procedures can mainly be divided into the Condorcet- and the Borda-type approach (Munda and Nardo 2003). These two are radically differentFootnote 25 and as such yield different results (Fishburn 1973). Moulin (1988) argues that the Borda-type approach is ideal when just one alternative should be chosen. Otherwise, the Condorcet-type approach is the most ‘consistent’ and thus the most preferable for ranking the considered alternatives (Munda and Nardo 2003, p. 10). A big issue with the Condorcet approach, though, is that of the presence of cycles,Footnote 26 the probability of which increases with both the number of criteria and the number of alternatives to be evaluated (Fishburn 1973). A large amount of work has been carried out with the aim of providing solutions to this issue (Kemeny 1959; Young and Levenglick 1978; Young 1988). A ‘satisfying’ one is for the ranking of alternatives to be obtained according to the maximum likelihood principle,Footnote 27 which essentially chooses as the final ranking the one with the ‘maximum pair-wise support’ (Munda 2012, p. 345). While this approach enjoys ‘remarkable properties’ (Saari and Merlin 2000, p. 404), one drawback is that it is computationally costly, making it unmanageable when the number of alternatives increases considerably (Munda 2012). Nevertheless, the C–K–Y–L approach is of great use for the concept of a non-compensatory aggregation scheme, and it could be used as a solid alternative solution to the common practice of linear aggregation schemes. Munda (2012) applies this approach to the case of the Environmental Sustainability Index (ESI), produced by Yale University and Columbia University in collaboration with the World Economic Forum and the European Commission (Joint Research Centre). According to the author, there are noticeable differences in the rankings between the two approaches (linear and non-compensatory), mostly apparent in the countries ranked among the middle positions and less apparent among those ranked first or last.

Despite its desirable properties, judging from the number of applications existing in this literature, the non-compensatory multi-criteria approach (NCMCA) is not met hugely popular. This could be attributed to the simplicity of construction of other methods (e.g. linear or geometric aggregation) or the issue of being computationally costly to calculate. Furthermore, NCMCA approaches are so far used to provide the developer with a ranking of the units evaluated; thus, one can only follow the rankings through time (Saltelli et al. 2005, p. 364), swapping the absolute level of information in possession with an ordinal scale. Despite these drawbacks, Paruolo et al. (2013, p. 631) urge developers to reflect on the cost of oversimplification that other techniques bear (e.g. linear), and, whenever possible, to use NCMC approaches, in which the weights exhibit the actual importance of the criteria. Otherwise, the authors suggest that the developers of an index should inform the audience to which the index is targeted that, in the other settings (e.g. linear or geometric aggregation), weights express the relative importance of the indicators (trade-offs) and not the nominal ones that were originally assigned.

3.3 Mixed Strategies

Owing to the unresolved issues of choosing a weighting and an aggregation approach, several methodologies appear in the literature, dealing with these steps in different manners. These methodologies are hybrid in the sense that they do not particularly fit into one category or the other both weighting- and aggregation-wise. This is because they use a combination of different approaches to solving the aforementioned issues. These are discussed further below.

3.3.1 Mazziotta–Pareto Index (MPI)

The Mazziotta–Pareto Index (MPI), originally introduced in 2007 (Mazziotta and Pareto 2007), aims to produce a composite index that penalises substitutability among the indicators, as this is introduced in the case of linear aggregation. More specifically, in linear aggregation a unit that performs very well in one indicator can offset a poor performance in another, proportionally to the ratio of their weights. In the MPI this is addressed by adding (subtracting) a component to (from) a non-weighted arithmetic mean (depending on the direction of the index), designed in such a way as to penalise this unbalance between the indicators (De Muro et al. 2011). This component, usually referred to as a ‘penalty’, is equal to a multiplication term of the unit’s standard deviation and the coefficient of variation among its indicators. Essentially, what the authors aim for is a simplistic methodology calculation-wise that favours not only a high-performing unit on average (as in the linear aggregation) but also a consistent one throughout all the indicators. Due to the desirability of simplicity, the MPI’s use of the arithmetic mean still bears the cost of compensability regarding aggregation. Nevertheless, one could argue that it is fairly adjusted to account for the unbalance among the indicators with its ‘penalty’ component. A newer variant of the index allows for the ‘absolute assessment’ of the units over time (Mazziotta and Pareto 2016, p. 989). To achieve this, the authors change the normalisation method from a modified z-score to a rescaling of the original variables according to two policy ‘goalposts’. These are a minimum and a maximum value that accordingly represent the potential range to be covered by each indicator in a certain period. In this way the normalised indicators exhibit absolute changes over time instead of the relative changes that are captured by the standardisation approach used in their previous model. As an illustrative application, the authors measure the well-being of the OECD countries in 2011 and 2014.

3.3.2 Penalty for a Bottleneck

Working towards the creation of the Global Entrepreneurship and Development Index, Ács et al. (2014) present a novel methodology in the field of composite indices, known as the ‘penalty for a bottleneck’. Although different from the MPI methodologically, their approach is conceptually in line with penalising the unbalances when producing the overall index. This penalisation is achieved by ‘correcting’ the sub-indicators prior to the aggregation stage. More specifically, a component of an exponential function adjusts all the sub-indicators according to the overall weakest-performing indicator (minimum value) of that unit (otherwise described as a ‘bottleneck’). After the unbalance-adjusted indicators have been computed, a non-weighted arithmetic mean is used to construct the final index. In this way the complete compensability, as introduced in the linear aggregation setting, is significantly reduced. However, an issue raised here by the authors is that the amount of the ‘penalty’ adjustment is in fact unknown, as it depends on each data set and on the presence or otherwise of any outliers in an indicator’s value. This is something that, as they state, also implies that the solution is not always optimal. Despite the original development of this approach towards the measurement of national innovation and entrepreneurship at the country level, the authors claim that this methodology can be extended to the evaluation of any unit and for any discipline beyond innovation.

3.3.3 Mean–Min Function

The mean–min function, developed by Tarabusi and Guarini (2013), is another approach working towards the penalisation of the unbalances in the construction of a composite index. What the authors aim to achieve is an intermediate but controllable case between the zero penalisation of the arithmetic mean and the maximum penalisation of the min function.Footnote 28 To achieve this, they start with the non-weighted arithmetic average—as in the case of the MPI—from which they subtract a penalty component. This comprises the difference between the arithmetic average and the min function, interacted with two variables, 0 ≤ α ≤ 1 and β ≥ 0, to control the amount of penalisation intended by the developer. For α = 0, the equation is reduced back to the arithmetic average, while, for α = 1 (and β = 0), it is reduced back to the min function. Therefore, ‘β’ can be seen as a coefficient that determines the compensability between the arithmetic mean and the min function. One issue that is potentially encountered here, though, is that of the subjectivity, or even ignorance, behind the control of penalisation. In other words, what should the values of ‘α’ and ‘β’ be to determine the proper penalisation intended? The authors suggest that, in the case of standardised variables, a reasonable value could be that of α = β = 1, as this introduces progressive compensability.

3.3.4 ZD Model

The ZD model is developed by Yang et al. (2017) for an ongoing project of the Taiwan Institute of Economic Research. The core idea behind it is inspired by the well-known Z-score, in which the mean stands as a reference point, with values lower (higher) than it exhibiting worse (better) performance. Similarly, a virtual unit (e.g. country, region, or firm) is constructed in such a way as to perform equally to the average of each indicator to be used as such a reference point. The evaluation of the units is attained by a DEA-like model and thus presented in the form of an ‘efficiency’ score. More specifically, this score is obtained by minimising the sum of the differences between the units that are above average and those that are below average. In this way a common set of weights is achieved for all the units, which exhibits the smallest total difference between the relative performance of the unit evaluated and that of the average. The limitations of this approach are the same as those appearing in the rest of the DEA-like models in the literature, as described in Sect. 2.3.4.

3.3.5 Directional Benefit-of-the-Doubt (BoD)

Directional BoD, introduced by Fusco (2015), is another approach using a DEA-like model for the construction of composite indicators. According to the author, one of the main drawbacks of the classic BoD model (see Sect. 2.3.4) is that it still assumes complete compensability among the indicators. This is attributed to the nature of the linear aggregation setting. To overcome this issue, Fusco (2015) suggests including a ‘directional penalty’ in the classic BoD model by using the directional distance function introduced by Chambers et al. (1998). To obtain the direction, ‘g’, the slope of the first principal component is used. The output’s (viz. the overall index) distance to the frontier is then evaluated, and the directional BoD estimator is obtained by solving a simple linear problem. According to the author, there is one limitation to this approach regarding the methodological framework. The overall index scores obtained with this approach are sensitive to outliers, as both the DEA and the PCA approach that are used suffer from this drawback. To moderate this issue, robust frontier and PCA techniques could be used instead (see Fusco 2015, p. 629).

4 On the Robustness of Composite Indicators

Composite indicators involve a long sequence of steps that need to be followed meticulously. There is no doubt that ‘incompatible’ or ‘naive’ choices (i.e. without knowing the actual consequences) in the steps of weighting and aggregation may result in a ‘meaningless’ synthetic measure. However, in such a case, the developer is inevitably compelled to draw wrong conclusions from it. This is one of the indicators’ main drawbacks and needs extreme caution (Saisana and Tarantola 2002), especially when indices are used in policy practices (Saltelli 2007). One example of such a case is presented by Billaut et al. (2010). The authors examine the ‘Shanghai Ranking’, a composite index used to rank the best 500 universities in the world. They claim that, despite the paramount criticism that this index receives in the literature (regarding both its theoretical and its methodological framework), it attracts such interest in the academic and policymaking communities that policies are designed on behalf of the latter, heavily influenced by the ranking of the index. However, if the construction of an index fully neglects the aggregation techniques’ properties, it ‘vitiates’ the whole purpose of evaluation and eventually shows a distorted picture of reality (Billaut et al. 2010, p. 260). Indeed, a misspecified aggregate measure may radically alter the results, and drawing conclusions from it is inadvisable in policy practices (Saltelli 2007; OECD 2008).

Regardless of the composite’s objective (e.g. serving as a tool for policymakers or otherwise), these aggregate measures ought to be tested for their robustness as a whole (OECD 2008). This will act as a ‘quality assurance’ tool that illustrates how sensitive the index is to changes in the steps followed to construct it and will highly reduce the possibilities to convey a misleading message (Saisana et al. 2005). Despite its importance, robustness analysis is often found to be completely missing for the vast majority of the composite indices (OECD 2008), while some only partially use it (Freudenberg 2003; Dobbie and Dail 2013). To understand its importance better, we will analyse this concept further in the subsequent sections, covering all its potential forms.

4.1 Traditional Techniques: Uncertainty and Sensitivity Analyses

Robustness analysis is usually accomplished through ‘uncertainty analysis’, ‘sensitivity analysis’, or their ‘synergistic use’ (Saisana et al. 2005, p. 308). These are characterised as the ‘traditional techniques’ (Permanyer 2011, p. 308). Putting it simply, uncertainty analysis (UA) refers to the changes that are observed in the final outcome (viz. the composite index value) from a potentially different choice made in the ‘inputs’ (viz. the stages to construct the composite index). On the other hand, sensitivity analysis (SA) measures how much variance of the overall output is attributed to those uncertainties (Saisana et al. 2005). It is often seen that these two are treated separately, with UA being the most frequent kind of robustness used (Freudenberg 2003; Dobbie and Dail 2013). However, both are needed to give the developer, and the audience to which the index is referred, a better understanding.Footnote 29 By solely applying uncertainty analysis, the developer may observe how the performance of a unit (e.g. ranking) deviates with changes in the steps of the construction phase. This is usually illustrated in a scatter plot, with the vertical axis exhibiting the country performance (e.g. ranking) and the horizontal axis exhibiting the input source of uncertainty being tested for (e.g. alternative weighting or aggregation scheme) (OECD 2008). To gain a better understanding, however, it is also important to identify the portion of this variation in the rankings that is attributed to that particular change. For instance, is it the weighting scheme that mainly changes the rankings, is it the aggregation scheme that affects them, or is it a combination of these changes in the inputs (interactions) that has a greater effect on the final output? These questions are answered via the use of sensitivity analysis, and they are generally expressed in terms of sensitivity measures for each input tested. More specifically, they show by how much the variance would decrease in the index if that uncertainty input were removed (OECD 2008). Understandably, with the use of both, a composite index might convey a more robust picture (Saltelli et al. 2005), and it can even be proven useful in dissolving some of the criticisms surrounding composite indicators (e.g. see Saisana et al. 2005, for an example using the Environmental Sustainability Index). Having discussed the concept of robustness analysis through the use of uncertainty and sensitivity analyses, we will now briefly discuss how these are applied after the construction of a composite index.Footnote 30

The first step in uncertainty analysis is to choose which input factors will be tested (Saisana et al. 2005). These are essentially the choices made in each step (e.g. selection of the indicators, imputation of missing data, normalisation, and weighting and aggregation schemes) where applicable. Ideally, one should address all sources of uncertainty (OECD 2008). These inputs are translated into scalar factors, which, in a Monte Carlo simulation environment, are randomly chosen in each iteration. Then the following outputs are captured and monitored accordingly: (1) the overall index value; (2) the difference in the values of the composite index between two units of interest (e.g. countries or regions); and (3) the average shift in the rank of each unit.

Unlike UA, sensitivity is applied to only two of the above-mentioned outputs, which are relevant to the evaluation of the quality of the composite. These are (2) and (3) as mentioned in the previous paragraph (Saisana et al. 2005). According to the authors, variance-based techniques are more appropriate due to the non-linear nature of composite indices. For each input factor being tested, a sensitivity index is computed, showing the proportion of the overall variance of the composite that is explained, ceteris paribus, by changes in this output. These sensitivity indices are calculated for all the input factors via a decomposition formula (see Saisana et al. 2005, p. 311). To obtain an even better understanding, it is also important to identify the interactions between the considered inputs (e.g. how a change in an input factor interacts with a change in another). For this exercise, total sensitivity indices are produced. According to the authors, the most commonly used method is the one by Sobol (1993), in a computationally improved form given by Saltelli (2002).

4.2 Stochastic Multi-criteria Acceptability Analysis

Stochastic multi-attribute acceptability analysis (SMAA; Lahdelma et al. 1998; Lahdelma and Salminen 2001) has become popular in multiple criteria decision analysis for dealing with the issue of uncertainty in the data or the preferences required by the decision maker during the evaluation process (e.g. see Tervonen and Figueira 2008). SMAA has recently been introduced in the field of composite indicators as a technique to deal with uncertainties in the construction process. More specifically, Doumpos et al. (2016) use this approach to create a composite index that evaluates the overall financial strength of 1200 cross-country banks in different weighting scenarios.Footnote 31 SMAA can prove to be a great tool in the hands of indices’ developers, and it can extend beyond its use as an uncertainty tool. For instance, Greco et al. (2017) propose SMAA to deal with the issue of weighting in composite indicators by taking into consideration the whole set of potential weight vectors. In this way it is possible to consider a population in which preferences (represented by each vector of weights) are distributed according to a considered probability. In a complementary interpretation, the plurality of weight vectors can be imagined as a representative of the preferences of a plurality of selves, of which each individual can be imagined to be composed (see e.g. Elster 1987). On the basis of these premises, SMAA is applied to the ‘whole space’ of weight vectors for the considered dimensions, obtaining a probabilistic ranking. Essentially, this output illustrates the probability that each considered entity (a country, a region, a city, etc.) attains the first, the second and so on position, as well as the probability that each entity is preferred to another one. Moreover, Greco et al. (2017) introduce a specific SMAA-based class of multidimensional concentration and polarisation indices (the latter extending the EGR index) (Esteban and Ray 1994; Esteban et al. 2007), measuring the concentration and the polarisation of the probability of a given entity being ranked in a given position or better/worse (e.g. the concentration and the polarisation to be ranked in the third or a better/worse position).

The use of SMAA as a tool that extends beyond its standard practice (e.g. dealing with uncertainty) is a significant first step towards a conceptual issue in the construction of composite indicators: representative weights. More specifically, constructing a composite index using a single set of weights automatically implies that they are representative of the whole population (Greco et al. 2017). Quoting the authors (p. 3): ‘[…] the usual approach considering a single vector of weights levels out all the individuals, collapsing them to an abstract and unrealistic set of ‘representative agents”’. Now one can imagine a cross-country comparison using a single set of weights that act as a representative set for all the countries involved. Understandably, it is a rather difficult assumption to make, given Arrow’s theorem (Arrow 1950). Decancq and Lugo (2013, p. 10) describe this fundamental problem with a simple example of a theoretical well-being index. According to the authors, the literature is well documented with respect to the variation of personal opinions on what a ‘good life’ is. Therefore, following the same reasoning, how can a developer assume that a set of weights acts as a representative of all this variation? Quoting the authors (p. 10): ‘Whose value judgements on the “good life” are reflected in the weights?’ This is a classic example of a conflictual situation in public policy, arising due to the existence of a plurality of social actors (see e.g. Munda 2016). This issue of the representative agent (see e.g. Hartley and Hartley 2002) has long been criticised in the economics literature, one of the most well-known criticisms being made by Kirman (1992). According to Decancq et al. (2013), inevitably there are many individuals who are ‘worse off’ when a policymaker chooses a single set of weights. On the one hand, SMAA extends above and beyond the issue of representativeness by providing the developer of an index with the option to include all possible viewpoints. However, for every viewpoint taken into account, a different ranking is produced; thus, a choice has to be made afterwards regarding how to deal with these outcomes. Usually, the mode ranking is chosen, obtained by the ranking acceptability indices (see e.g. Greco et al. 2017). Moreover, in its current form, SMAA can only provide the developer with a ranking of the units evaluated. Thus, it still suffers from the same issue as other non-compensatory techniques: swapping the available information in possession with an ordinal scale in the form of a ranking.

4.3 Other Approaches

Several other approaches appear in the literature, with which the robustness of composite indices may be evaluated or which may simply provide more robust rankings. An example of the latter is given by Cherchye et al. (2008), presenting a new approach according to which several units may be ranked ‘robustly’ (i.e. rankings are not reversed for a wide set of weighting vectors or aggregation schemes). To achieve this, they propose a generalised version of the Lorenz dominance criterion, which leaves to the user the choice of how ‘weak’ or ‘strong’ the dominance relationship will be for the ranking to be considered robust. This approach can be implemented via linear programming, an illustrative application of which is given with the well-known HDI. In regard to the robustness evaluation, Foster et al. (2012) present another approach,Footnote 32 in which several other weight vectors are considered to monitor the existence of rank reversals. In essence, by changing the weights among the indicators, this approach measures how well the units’ rankings are preserved (e.g. in terms of percentage). In an illustrative application, the authors examine three well-known composite indices, namely the HDI, the Index of Economic Freedom, and the Environmental Performance Index. Similar to Foster et al. (2012), Permanyer (2011) suggests considering the whole space of weight vectors, though the objective is slightly different this time. The author proposes to find three sets of weights according to which: (1) a unit, say ‘α’, is not ranked below another unit, say ‘β’; (2) units ‘α’ and ‘β’ are equally ranked; and (3) ‘β’ dominates ‘α’. Essentially, the original intended weight vector set by the developer can fairly be considered to be ‘robust’ the further it is from the second subset (viz. the set of weights according to which ‘α’ is equal to unit ‘β’), because the closer to it that it is, the more possible it is for a rank reversal to happen. This intuitive approach is further extended to multiple examples and specifications, details of which can be found in Permanyer (2011, pp. 312–316). An illustrative example is provided using the well-known HDI, the Gender-related Development Index, and the Human Poverty Index.