Abstract

We present the focal flow sensor, an unactuated, monocular camera that simultaneously exploits defocus and differential motion to measure a depth map and a 3D scene velocity field. It does this using an optical-flow-like, per-pixel linear constraint that relates image derivatives to depth and velocity. We derive this constraint, prove its invariance to scene texture, and prove that it is exactly satisfied only when the sensor’s blur kernels are Gaussian. We analyze the inherent sensitivity of the focal flow cue, and we build and test a prototype. Experiments show that the sensor produces useful depth and velocity information for a broader set of aperture configurations than the Gaussian model covers, including a simple lens with a pillbox aperture.

References

Alexander, E., Guo, Q., Koppal, S., Gortler, S., & Zickler, T. (2016). Focal flow: Measuring distance and velocity with defocus and differential motion. In European conference on computer vision (pp. 667–682). Berlin: Springer.

Bracewell, R. N. (1956). Strip integration in radio astronomy. Australian Journal of Physics, 9(2), 198–217.

Chakrabarti, A., & Zickler, T. (2012). Depth and deblurring from a spectrally-varying depth-of-field. In European conference on computer vision (ECCV).

Dana, K. J., Van-Ginneken, B., Nayar, S. K., & Koenderink, J. J. (1999). Reflectance and texture of real-world surfaces. ACM Transactions on Graphics (TOG), 18(1), 1–34. http://www.cs.columbia.edu/CAVE/software/curet/html/about.php.

Duhamel, P. E. J., Perez-Arancibia, C. O., Barrows, G. L., & Wood, R. J. (2013). Biologically inspired optical-flow sensing for altitude control of flapping-wing microrobots. IEEE/ASME Transactions on Mechatronics, 18(2), 556–568.

Farid, H., & Simoncelli, E. P. (1998). Range estimation by optical differentiation. Journal of the Optical Society of America A, 15(7), 1777–1786.

Favaro, P., Burger, M., & Soatto, S. (2004). Scene and motion reconstruction from defocused and motion-blurred images via anisotropic diffusion. In European conference on computer vision (ECCV).

Fisher, S. D. (1999). Complex variables. North Chelmsford, MA: Courier Corporation.

Floreano, D., Zufferey, J. C., Srinivasan, M. V., & Ellington, C. (2009). Flying insects and robots. New York: Springer.

Grossmann, P. (1987). Depth from focus. Pattern Recognition Letters, 5(1), 63–69.

Horn, B. K., & Schunck, B. G. (1981). Determining optical flow. In 1981 Technical Symposium East. International Society for Optics and Photonics.

Horn, B. K., Fang, Y., & Masaki, I. (2007). Time to contact relative to a planar surface. In Intelligent vehicles symposium (IV).

Horn, B. K., Fang, Y., & Masaki, I. (2009). Hierarchical framework for direct gradient-based time-to-contact estimation. In Intelligent vehicles symposium (IV).

Humbert, J. S., Hyslop, A., & Chinn, M. (2007). Experimental validation of wide-field integration methods for autonomous navigation. In Intelligent robots and systems (IROS).

Koppal, S. J., Gkioulekas, I., Zickler, T., & Barrows, G. L. (2011). Wide-angle micro sensors for vision on a tight budget. In Computer vision and pattern recognition (CVPR).

Lee, D. N. (1976). A theory of visual control of braking based on information about time-to-collision. Perception, 5, 437–459.

Levin, A. (2010). Analyzing depth from coded aperture sets. In European conference on computer vision (ECCV).

Levin, A., Fergus, R., Durand, F., & Freeman, W. T. (2007). Image and depth from a conventional camera with a coded aperture. In ACM transactions on graphics (TOG).

Lin, H. Y., & Chang, C. H. (2006). Depth from motion and defocus blur. Optical Engineering, 45(12), 127201.

Myles, Z., & da Vitoria Lobo, N. (1998). Recovering affine motion and defocus blur simultaneously. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20(6), 652–658.

Ng, R. (2005). Fourier slice photography. In ACM transactions on graphics (TOG).

Paramanand, C., & Rajagopalan, A. N. (2012). Depth from motion and optical blur with an unscented Kalman filter. IEEE Transactions on Image Processing, 21(5), 2798–2811.

Pentland, A. P. (1987). A new sense for depth of field. IEEE Transactions on Pattern Analysis and Machine Intelligence, 9(4), 523–531.

Raghavendra, C. S., Sivalingam, K. M., & Znati, T. (2006). Wireless sensor networks. New York: Springer.

Rajagopalan, A., & Chaudhuri, S. (1997). Optimal selection of camera parameters for recovery of depth from defocused images. In Computer vision and pattern recognition (CVPR).

Rajagopalan, A., Chaudhuri, S., & Mudenagudi, U. (2004). Depth estimation and image restoration using defocused stereo pairs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 26(11), 1521–1525.

Rudin, W. (1991). Functional analysis. New York: McGraw-Hill.

Schechner, Y. Y., & Kiryati, N. (2000). Depth from defocus vs. stereo: How different really are they? International Journal of Computer Vision, 39(2), 141–162.

Seitz, S. M., & Baker, S. (2009). Filter flow. In International conference on computer vision (ICCV).

Sellent, A., & Favaro, P. (2014). Coded aperture flow. In German conference on pattern recognition (GCPR).

Subbarao, M. (1988). Parallel depth recovery by changing camera parameters. In International conference on computer vision (ICCV).

Subbarao, M., & Surya, G. (1994). Depth from defocus: A spatial domain approach. International Journal of Computer Vision, 13(3), 271–294.

Tai, Y. W., & Brown, M. S. (2009). Single image defocus map estimation using local contrast prior. In International conference on image processing (ICIP).

Tang, H., Cohen, S., Price, B., Schiller, S., & Kutulakos, K. N. (2017). Depth from defocus in the wild. In Computer vision and pattern recognition (CVPR).

Tao, M., Hadap, S., Malik, J., & Ramamoorthi, R. (2013). Depth from combining defocus and correspondence using light-field cameras. In International conference on computer vision (ICCV).

Veeraraghavan, A., Raskar, R., Agrawal, A., Mohan, A., & Tumblin, J. (2007). Dappled photography: Mask enhanced cameras for heterodyned light fields and coded aperture refocusing. In ACM transactions on graphics (TOG).

Watanabe, M., & Nayar, S. K. (1998). Rational filters for passive depth from defocus. International Journal of Computer Vision, 27(3), 203–225.

Yasuma, F., Mitsunaga, T., Iso, D., & Nayar, S. K. (2010). Generalized assorted pixel camera: Postcapture control of resolution, dynamic range, and spectrum. IEEE Transactions on Image Processing, 19(9), 2241–2253.

Zhou, C., Lin, S., & Nayar, S. (2009). Coded aperture pairs for depth from defocus. In International conference on computer vision (ICCV).

Zhou, C., Lin, S., & Nayar, S. K. (2011). Coded aperture pairs for depth from defocus and defocus deblurring. International Journal of Computer Vision, 93(1), 53–72.

Acknowledgements

We would like to thank J. Zachary Gaslowitz and Ioannis Gkioulekas for helpful discussion. This work was supported by a gift from Texas Instruments Inc. and by the National Science Foundation under awards No. IIS-1212928 and 1514154 and Graduate Research Fellowship No. DGE1144152 to E.A.

Additional information

Communicated by Edwin Hancock, Richard Wilson, Will Smith, Adrian Bors, Nick Pears.

Appendices

Appendix A: Introduction to Distributions and Complex Analysis

The theory of distributions extends analysis beyond the classical notion of a function. Much of its usefulness comes from extending results from the study of differential equations to discontinuous objects whose derivatives do not exist in the traditional sense but can nonetheless be abstracted in consistent and powerful ways. While standard functions and measures can be treated as distributions, so can more exotic objects like the Dirac delta “function”, which is the distributional derivative of the discontinuous step function, as well as its derivatives in turn.

In this context, a distribution is defined as a continuous linear functional that maps some set of well-behaved test functions to the real numbers. Unlike functions, distributions do not have values at given points in a domain, though imagining that they do can be a useful way to visualize their effect. Any locally-integrable function P induces a distribution \(\tilde{P}\) that maps a suitable test function f (more detail below) to the reals through integration:

\[ \tilde{P}(f) = \int_{\mathbb{R}^n} P(\mathbf{x})\, f(\mathbf{x})\, d\mathbf{x}, \]

while the Dirac delta maps each test function to its value at the origin:

\[ \delta(f) = f(\mathbf{0}). \]

Distributions in this sense should not be confused with probability or frequency distributions, distributions as defined in differential geometry, or any of the many other scientific uses of the term.

Many operations require more care when applied to distributions than to functions. While distributions can be added together and multiplied by real numbers or by infinitely differentiable functions, the product of two distributions, for example, is not well-defined in general. One of the most useful operations on a distribution is taking its derivative. This operation is defined by moving the derivative onto the test function with a sign change, \(\tilde{P}'(f) = -\tilde{P}(f')\), which lets every distribution be treated as infinitely differentiable while keeping many of the properties of classical derivatives. In particular, we can meaningfully use objects like the \(n\)th derivative of the Dirac delta:

\[ \delta^{(n)}(f) = (-1)^n f^{(n)}(0). \]

In describing the properties of distributions, it is useful to classify them by the sets of test functions that they handle gracefully. There are many choices of f that could lead Eq. (75) to violate our definition of a distribution, such as any complex-valued function. Typically, test functions are drawn from the space \(D(\mathbb {R}^n)\) of infinitely-differentiable, real-valued, compactly-supported functions. A distribution must linearly map any member of this set to a real number. The space of distributions, denoted \(D'(\mathbb {R}^n)\), is the dual space of \(D(\mathbb {R}^n)\).

By considering larger sets of test functions, one can define smaller sets of distributions that still linearly map all allowed test functions to the reals. Two such classes are used in this paper. The first is the set of tempered distributions, denoted \(S'(\mathbb {R}^n)\). A test function for a tempered distribution need not be compactly supported; it can be any rapidly-decreasing smooth function. The space of these test functions is called Schwartz space, \(S(\mathbb {R}^n)\), and notably includes Gaussians and their derivatives. Because it is integrable and bounded, the most general form of our aperture filter induces a tempered distribution, and since tempered distributions are closed under differentiation, \(\tilde{\kappa }, \tilde{\kappa }_x, \tilde{\kappa }_y \in S'(\mathbb {R}^2)\).

A useful subset of the tempered distributions is the set of distributions with compact support, denoted \(\mathcal {E}'(\mathbb {R}^n)\). Such a distribution maps to zero any test function whose support is disjoint from a certain compact region, called the support of the distribution. The Dirac delta is a classic example: every test function with \(f(0)=0\) is mapped to zero, so \(\text {supp}(\delta )=\{0\}\). We require the distributions induced by our post-processing operation and by the texture to have compact support: \(\tilde{m}, \tilde{P} \in \mathcal {E}'(\mathbb {R}^2)\).

All of this is relevant because we want to rigorously specify what m and \(\kappa \) can be, without requiring them to be differentiable functions. Specifically, we want to know when the quantity \(M[I] = m *k *P\) is well-defined. The convolution theorem, which states that convolution can be performed by multiplication of Fourier transforms, holds for:

1. Two \(L^1\) functions, producing another \(L^1\) function.
2. A tempered distribution and a compactly-supported distribution, producing a tempered distribution.
3. A rapidly decreasing function and a tempered distribution, producing another tempered distribution.

The first of these describes the traditional use of the theorem. The second is the reason we require P to be compactly supported for general (tempered) \(\kappa \) in the theorem. The third lets us drop this compactness assumption on P (which is bounded and locally integrable, so \(\tilde{P}\) is tempered) in the corollary, once \(m *\kappa \) is specified to be a rapidly-decreasing function.
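The first case is the familiar one. As a finite-dimensional stand-in (an illustration only, not the distributional statement used in the proof), the sketch below checks its discrete analogue: circular convolution of two sequences equals the inverse discrete Fourier transform of the product of their transforms. The sequences and the naive \(O(n^2)\) DFT are arbitrary choices made for clarity.

```python
import cmath


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def circular_convolve(a, b):
    """Direct circular convolution: (a * b)[t] = sum_s a[s] b[(t - s) mod n]."""
    n = len(a)
    return [sum(a[s] * b[(t - s) % n] for s in range(n)) for t in range(n)]


a = [1.0, 2.0, 0.0, -1.0, 0.5, 0.0, 0.0, 3.0]
b = [0.25, 0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0]  # a small blur kernel

direct = circular_convolve(a, b)
via_fourier = [z.real for z in idft([A * B for A, B in zip(dft(a), dft(b))])]

max_error = max(abs(d - v) for d, v in zip(direct, via_fourier))
print(max_error)  # floating-point round-off only
```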

We also use Schwartz’s Paley–Wiener theorem, which states that the Fourier transform of a compactly-supported distribution on \(\mathbb{R}^n\) extends to an entire function. This is a powerful result in complex analysis, for which we suggest (Fisher 1999) as a reference. Complex analysis extends analysis to functions of complex variables, creating alternate versions of familiar ideas from calculus on the reals. Several of these appear in our proof, particularly in Claims 3 and 4.

Perhaps the most important of these concepts is the complex derivative. This is defined, just as on the reals, as the limit of the difference quotient, but it will exist in far fewer cases. Take, for example, the function \(\mathfrak {R}(z)\), which returns the real part of its complex input z. Using the standard metaphor of \(\mathbb {R}^2\) for \(\mathbb {C}^1\), we could imagine this function as having perfectly well-defined partial derivatives: 1 along the real axis, 0 along the imaginary axis. However, because the derivative is a single limit, which must match from all directions of approach in order to exist, the function \(\mathfrak {R}(z)\) is in fact nowhere complex differentiable.
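The direction dependence of the difference quotient can be seen numerically. The sketch below evaluates it for \(\mathfrak {R}(z)\) with a small step taken along the real axis and along the imaginary axis. For contrast, it does the same for the holomorphic function \(z^2\), whose quotients agree on \(2z\) from both directions. The sample point and step size are arbitrary.

```python
def real_part(z: complex) -> float:
    """The function R(z): the real part of z."""
    return z.real


def square(z: complex) -> complex:
    """A holomorphic function, for comparison."""
    return z * z


def difference_quotient(func, z: complex, h: complex) -> complex:
    """(func(z + h) - func(z)) / h for a small complex step h."""
    return (func(z + h) - func(z)) / h


z0 = 0.3 + 0.7j
step = 1e-6

along_real = difference_quotient(real_part, z0, step)       # approach along the real axis
along_imag = difference_quotient(real_part, z0, step * 1j)  # approach along the imaginary axis
sq_real = difference_quotient(square, z0, step)
sq_imag = difference_quotient(square, z0, step * 1j)

print(along_real, along_imag)  # about 1 versus 0: no single limit exists
print(sq_real, sq_imag)        # both about 2 * z0 = 0.6 + 1.4j
```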

As a result of this restrictive definition, differentiable functions are much rarer in complex analysis, and they have a number of remarkable properties. For example, functions that are complex differentiable throughout an open neighborhood, called analytic or holomorphic functions, are automatically infinitely differentiable there.

For complex functions that are holomorphic except at isolated points, three kinds of singularities can occur: removable singularities, poles, and essential singularities. A removable singularity is like a patchable hole in the function. The function is not defined at the point, but it can be extended to a function that is holomorphic there. A pole is a point at which the function goes to complex infinity (a quantity with infinite magnitude and indeterminate phase), but where the product of the function and some polynomial is holomorphic. Anything more serious, like an oscillating discontinuity or a non-pole infinity, is called an essential singularity.

A holomorphic function with no singularities at any point other than infinity is called an entire function, and such functions are very special. They include polynomials, the exponential, sine and cosine, and their sums, products, compositions, derivatives, and integrals. By Liouville’s theorem, any entire function whose magnitude is bounded must be constant, so any non-constant entire function must have a singularity at infinity. If this singularity is essential, the function is transcendental (e.g. \(\sin \) and \(\cos \)). If it is a pole, the function is a polynomial. We use this restriction, along with Schwartz’s Paley–Wiener theorem, to prove Claim 4.
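A concrete instance connects these ideas to the pillbox aperture mentioned in the abstract. A 1D box function supported on \([-1, 1]\) is compactly supported, so by Schwartz’s Paley–Wiener theorem its Fourier transform \(\int_{-1}^{1} e^{-i\omega x}\,dx = 2\sin(\omega)/\omega\) should extend to an entire function of complex \(\omega\). The apparent singularity at \(\omega = 0\) is removable, with value 2 there. As Liouville’s theorem requires of a non-constant entire function, the transform is unbounded: it grows like \(2\sinh(y)/y\) along the imaginary axis. The sketch below checks the closed form against direct numerical integration at a few real and complex points. The box, the sample points, and the integrator are illustrative choices, and this is not the argument used for Claim 4.

```python
import cmath


def box_fourier_numeric(w: complex, n: int = 20_000) -> complex:
    """Midpoint-rule evaluation of the integral of exp(-i w x) over [-1, 1]."""
    h = 2.0 / n
    return h * sum(cmath.exp(-1j * w * (-1.0 + (k + 0.5) * h)) for k in range(n))


def box_fourier_closed_form(w: complex) -> complex:
    """2 sin(w) / w, with the removable singularity at w = 0 filled in by its limit, 2."""
    if w == 0:
        return 2.0
    return 2.0 * cmath.sin(w) / w


for w in (0.0, 3.0, 1.5 + 0.8j, 4.0j):
    numeric = box_fourier_numeric(w)
    closed = box_fourier_closed_form(w)
    print(w, abs(numeric - closed))  # differences are tiny at every point

# Unbounded along the imaginary axis, as Liouville's theorem requires:
# |F(10i)| = 2 sinh(10) / 10, roughly 2202.6.
print(abs(box_fourier_closed_form(10j)))
```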

Appendix B: Alternate Derivations of the Focal Flow Constraint

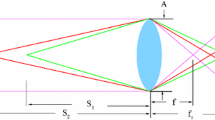

Putting aside the question of uniqueness, the correctness of the focal flow constraint (17) is easily verified by setting \(I=k*P\) with Gaussian k and taking the relevant derivatives. Here we give two alternative derivations that may offer additional intuition. The first is based on a truncated Taylor expansion, mirroring a common derivation of linearized optical flow. The second is based on sinusoidal textures, as illustrated in Fig. 1 and analyzed in Sect. 4 for inherent sensitivity.

B.1 From Taylor Expansion

Following the well-known Taylor series derivation of differential optical flow, we consider the difference in intensity at a pixel between a pair of images taken a time step \(\varDelta t\) apart. We exploit the fact that the brightness of the underlying sharp texture does not change, but we must also correct for the change in blur between the two images.

To do so, we assume Gaussian blur kernels k,

and define a reblurring filter b that takes narrow Gaussians to wider Gaussians under spatial convolution:

This reblurring filter takes the form

The unchanging texture brightness constraint states that for an all-in-focus pinhole image P,

with features moving from (x, y) to \((x+\varDelta x,y+\varDelta y)\) on the image. We are free to convolve both sides of this constraint with a Gaussian, for example:

Then, for images blurred with different Gaussian kernels, where we set the sign of \(\varDelta t\) without loss of generality so that \(\sigma (t+\varDelta t) > \sigma (t)\), we can express this modification of the unchanging texture brightness constraint in terms of blurred images I:

where the \(\frac{Z+\varDelta Z}{Z}\) term accounts for the change in magnification between images. Taking the Taylor expansion of either side and dropping terms above first order, we have the approximation

Subtracting the I(x, y, t) term from each side, dividing by \(\varDelta t\), and noting that

our approximate constraint becomes

In the absence of blur, \(v=0\) and this is identical to optical flow. In the limit as \(\varDelta t\) approaches zero, and under the separation of \((\dot{x},\dot{y})\) into translation and magnification terms, this produces the focal flow constraint (17).
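The reblurring filter b exists because Gaussian variances add under convolution. A Gaussian of standard deviation \(\sigma_1\) convolved with a Gaussian of standard deviation \(\sqrt{\sigma_2^2 - \sigma_1^2}\) is a Gaussian of standard deviation \(\sigma_2\). This works only for \(\sigma_2 > \sigma_1\), which is consistent with choosing the sign of \(\varDelta t\) so that \(\sigma (t+\varDelta t) > \sigma (t)\). The sketch below verifies the identity numerically in 1D on a sampled grid. We assume b has this standard Gaussian form; the 2D version and normalization used in the paper are not reproduced here.

```python
import math


def gaussian(x: float, sigma: float) -> float:
    """Unit-area 1D Gaussian with standard deviation sigma."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) / (math.sqrt(2.0 * math.pi) * sigma)


sigma_narrow, sigma_wide = 1.0, 1.5
# Assumed reblurring filter: a Gaussian whose variance is the difference of the two variances.
sigma_b = math.sqrt(sigma_wide ** 2 - sigma_narrow ** 2)

dx = 0.01
grid = [i * dx for i in range(-1000, 1001)]  # samples on [-10, 10]


def reblurred(x: float) -> float:
    """Riemann-sum approximation of (b * k_narrow)(x) over the grid."""
    return dx * sum(gaussian(s, sigma_b) * gaussian(x - s, sigma_narrow) for s in grid)


test_points = (-3.0, -1.0, 0.0, 0.5, 2.0)
errors = [abs(reblurred(x) - gaussian(x, sigma_wide)) for x in test_points]
print(max(errors))  # very small: b * k_narrow reproduces k_wide
```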

B.2 From Sinusoidal Textures

For general sinusoidal texture

a pinhole camera will record the image

Under Gaussian blur as in Eq. (78), frequency and phase will not change but amplitude will:

The derivatives of this image are as follows:

so that

By the linearity of convolution and differentiation, Eq. (101) holds for all sum-of-sinusoid textures, so that the focal flow constraint applies to any texture with a Fourier transform.
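The key step in this derivation can be checked numerically for a single sinusoid. A unit-area Gaussian of standard deviation \(\sigma\) scales the amplitude of a sinusoid with frequency \((\omega_x, \omega_y)\) by \(\exp(-\sigma^2(\omega_x^2+\omega_y^2)/2)\). Hence \(\partial I/\partial\sigma = -\sigma(\omega_x^2+\omega_y^2)\,I = \sigma\nabla^2 I\), the heat-equation relation behind the blur-dependent term. The sketch below compares finite-difference estimates of both sides. It models only the change in blur, not the translation and magnification terms of constraint (17), and all parameter values are arbitrary.

```python
import math

# Illustrative texture parameters (arbitrary, not values from the paper).
A, wx, wy, phi = 0.8, 1.3, -0.7, -0.5


def blurred_sinusoid(x: float, y: float, sigma: float) -> float:
    """Gaussian blur of A*cos(wx*x + wy*y + phi): same frequency and phase,
    amplitude scaled by exp(-sigma^2 (wx^2 + wy^2) / 2)."""
    amplitude = A * math.exp(-0.5 * sigma ** 2 * (wx ** 2 + wy ** 2))
    return amplitude * math.cos(wx * x + wy * y + phi)


def d_dsigma(x: float, y: float, sigma: float, h: float = 1e-5) -> float:
    """Central finite difference in the blur parameter sigma."""
    return (blurred_sinusoid(x, y, sigma + h) - blurred_sinusoid(x, y, sigma - h)) / (2 * h)


def laplacian(x: float, y: float, sigma: float, h: float = 1e-3) -> float:
    """Five-point finite-difference Laplacian in image coordinates."""
    center = blurred_sinusoid(x, y, sigma)
    return (blurred_sinusoid(x + h, y, sigma) + blurred_sinusoid(x - h, y, sigma)
            + blurred_sinusoid(x, y + h, sigma) + blurred_sinusoid(x, y - h, sigma)
            - 4 * center) / (h * h)


x0, y0, sigma0 = 0.2, -1.1, 0.9
lhs = d_dsigma(x0, y0, sigma0)
rhs = sigma0 * laplacian(x0, y0, sigma0)
print(lhs, rhs)  # agree to finite-difference accuracy: dI/dsigma = sigma * Laplacian(I)
```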

Appendix C: List of Parts

| No. | Component | Source | Part number | Quantity | Description |
|---|---|---|---|---|---|
| 1 | Camera | Point Grey | GS3-U3-23S6M-C | 1 | High speed, monochrome, powered by USB |
| 2 | Lens | Thorlabs | LA1509-A | 1 | Plano-convex, \(\varnothing 1''\), \(f=100\) mm, AR coated (350–700 nm) |
| 2 | Apodizing filter (optional) | Thorlabs | NDYR20B | 1 | Reflective, \(\varnothing 25\) mm, ND, OD: 0.04–2 |
| 3 | Lens tube | Thorlabs | SM1 family | Flexible | SM1 thread, \(\varnothing 1''\); SM1V15 recommended for adjustable \(\mu _s\) |
| 4 | Lens tube mounts | Thorlabs | SM1TC\(\,+\,\)TR075 | 2 | |
| 5 | Aperture diaphragm | Thorlabs | SM2D25D or SM1D12D | 1 | SM1/SM2 thread, \(\varnothing 2''\) or \(\varnothing 1''\); removed when using the apodizing filter |
| 6 | Calibration diaphragm | Thorlabs | SM2D25D | 1 | SM2 thread, \(\varnothing 2''\), connected with SM1A2 and SM2A6 |
| 7 | Pitch and yaw platform | Thorlabs | PY003 | 3 | |
| 8 | Rotation platform | Thorlabs | PR01+PR01A | 2 | |
| 9 | Translation stage | Thorlabs | LNR50S | 1 | Controlled and powered by No. 12 |
| 10 | X–Y translation stage | Thorlabs & EO | 2\(\times \)PT1\(\,+\,\)PT101\(\,+\,\)PT102\(\,+\,\)EO56666 | 2 | |
| 11 | Wide plate holder | Thorlabs | FP02 | 1 | |
| 12 | Stepper motor controller | Thorlabs | BSC201 | 1 | Powered by 110 V, connected to PC via USB |
| 13 | Laser | Thorlabs | CPS532 | 1 | Mounted with AD11F, SM1D12SZ, CP02, NE20A-A, SM1D12D |

Appendix D: Detailed Experimental Results

Performance Versus Noise

To counteract sensor noise, several shots can be averaged to create an input image (inset) for the measurement algorithm (zoom in to see the difference in noise level). Comparing measured depth to ground truth (solid black line) shows that, as expected, measurement accuracy improves with shot count. Unless otherwise noted, all results in the paper use 10-shot averages.
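The benefit of averaging follows a simple rule under an idealized noise model. If each shot carries independent, zero-mean noise with standard deviation \(s\), the average of \(N\) shots has standard deviation \(s/\sqrt{N}\), so a 10-shot average retains roughly 32% of the single-shot noise. The simulation below illustrates this for one pixel. The noise level and intensity are hypothetical, and real sensors also exhibit fixed-pattern noise, which is identical across shots and is not reduced by averaging.

```python
import random
import statistics

random.seed(0)
noise_sigma = 0.05      # hypothetical per-shot noise standard deviation
true_intensity = 0.5    # hypothetical noise-free pixel value
trials = 20_000


def averaged_pixel(num_shots: int) -> float:
    """Mean of num_shots independent noisy readings of the same pixel."""
    readings = [true_intensity + random.gauss(0.0, noise_sigma) for _ in range(num_shots)]
    return sum(readings) / num_shots


results = {}
for n in (1, 10):
    samples = [averaged_pixel(n) for _ in range(trials)]
    measured = statistics.stdev(samples)
    predicted = noise_sigma / n ** 0.5
    results[n] = (measured, predicted)
    print(f"{n:2d} shots: measured std {measured:.4f}, predicted {predicted:.4f}")
```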

Working Range Versus Aperture

We show working range (defined as the range of depths for which depth error is below \(1\%\) of \(\mu _f\)) versus focal depth \(\mu _f\) for four apertures, over two scene textures. We also show a sample point spread function for each aperture, at the same scale as the input image.
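Given sampled depths and the corresponding measurements, the working range can be computed directly from this definition. The helper below is hypothetical (not the authors' evaluation code). It assumes the working range is the contiguous interval of sampled depths around \(\mu _f\) whose absolute depth error stays below \(1\%\) of \(\mu _f\). The quadratic error model in the usage example is a toy, not measured data.

```python
def working_range(true_depths, measured_depths, focal_depth, tolerance=0.01):
    """Hypothetical helper: (near, far) limits of the contiguous run of sampled
    depths around focal_depth whose absolute error is below tolerance * focal_depth.
    true_depths must be sorted in increasing order. Returns None if even the
    sample nearest focal_depth fails the test."""
    threshold = tolerance * focal_depth
    within = [abs(m - z) < threshold for z, m in zip(true_depths, measured_depths)]
    # Start from the sampled depth nearest the focal depth and grow outward.
    center = min(range(len(true_depths)), key=lambda i: abs(true_depths[i] - focal_depth))
    if not within[center]:
        return None
    lo = hi = center
    while lo > 0 and within[lo - 1]:
        lo -= 1
    while hi < len(within) - 1 and within[hi + 1]:
        hi += 1
    return true_depths[lo], true_depths[hi]


# Toy example (not measured data): depths in mm, error growing quadratically away from focus.
focal = 500
depths = list(range(400, 601, 10))
measured = [z + 0.001 * (z - focal) ** 2 for z in depths]
print(working_range(depths, measured, focal))  # (430, 570)
```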

Performance for Varying Apertures and Textures

Distance measurements versus ground truth (black lines) for a variety of focal distances and aperture configurations. Each row is a different aperture configuration. The left column shows results for lower-frequency scene textures and the middle column for higher-frequency scene textures. The right-most column shows corresponding sample point spread functions at a variety of depths. The measurement algorithm is quite robust to deviations from the idealized Gaussian blur model. From top to bottom, the aperture configurations are: (I) diaphragm \(\varnothing 4.5\) mm, no filter; (II) diaphragm open, with apodizing filter; (III) diaphragm \(\varnothing 8.5\) mm, no filter; (IV) diaphragm \(\varnothing 25.4\) mm, no filter.

About this article

Cite this article

Alexander, E., Guo, Q., Koppal, S. et al. Focal Flow: Velocity and Depth from Differential Defocus Through Motion. Int J Comput Vis 126, 1062–1083 (2018). https://doi.org/10.1007/s11263-017-1051-5

DOI: https://doi.org/10.1007/s11263-017-1051-5