Abstract

Acoustic modeling using mixtures of multivariate Gaussians is the prevalent approach for many speech processing problems. Many speech processing systems must compute likelihoods against a large set of Gaussians, and this is the computationally dominant phase of Large Vocabulary Continuous Speech Recognition (LVCSR) systems. We express the likelihood computation as a multiplication of matrices representing augmented feature vectors and Gaussian parameters. The computational gain of this approach over traditional methods comes from exploiting the structure of these matrices and from an efficient implementation of their multiplication. In particular, we explore direct low-rank approximation of the Gaussian parameter matrix and indirect derivation of low-rank factors of the Gaussian parameter matrix by optimum approximation of the likelihood matrix. We show that both methods lead to similar speedups, but the latter has far less impact on recognition accuracy. Experiments on the 1,138-word vocabulary RM1 task and the 6,224-word vocabulary TIMIT task using the Sphinx 3.7 system show that, for a typical case, the matrix multiplication based approach leads to an overall speedup of 46 % on the RM1 task and 115 % on the TIMIT task. Our low-rank approximation methods provide a way of trading off recognition accuracy for a further increase in computational performance, extending the overall speedups to 61 % for RM1 and 119 % for TIMIT, for an increase in word error rate (WER) from 3.2 to 3.5 % for RM1 and no increase in WER for TIMIT. We also express the pairwise Euclidean distance computation phase of Dynamic Time Warping (DTW) in terms of matrix multiplication, which saves approximately \(\frac{1}{3}\) of the computational operations. In our experiments using an efficient implementation of matrix multiplication, this leads to a speedup of 5.6 in computing the pairwise Euclidean distances and an overall speedup of up to 3.25 for DTW.

References

1. Agaram, K., Keckler, S.W., Burger, D. (2001). A characterization of speech recognition on modern computer systems. In: IEEE international workshop on workload characterization, 2001 (pp. 45–53).

2. Krishna, R., Mahlke, S., Austin, T. (2002). Insights into the memory demands of speech recognition algorithms. In: Proceedings of the 2nd annual workshop on memory performance issues.

3. Lai, C., Lu, S.-L., Zhao, Q. (2002). Performance analysis of speech recognition software. In: Proceedings of the 5th workshop on computer architecture evaluation using commercial workloads.

4. Povey, D., Kingsbury, B., Mangu, L., Saon, G., Soltau, H., Zweig, G. (2005). fMPE: Discriminatively trained features for speech recognition. In: IEEE international conference on acoustics, speech, and signal processing, 2005 (Vol. 1, pp. 961–964).

5. Chan, A., Ravishankar, M., Rudnicky, A., Sherwani, J. (2004). Four-layer categorization scheme of fast GMM computation techniques in large vocabulary continuous speech recognition systems. In: Proceedings of international conference on spoken language processing, Interspeech 2004.

6. Bocchieri, E. (1993). Vector quantization for the efficient computation of continuous density likelihoods. In: IEEE international conference on acoustics, speech, and signal processing, 1993 (Vol. 2, pp. 692–695).

7. Lee, A., Kawahara, T., Shikano, K. (2001). Gaussian mixture selection using context-independent HMM. In: IEEE international conference on acoustics, speech, and signal processing, 2001 (Vol. 1, pp. 69–72).

8. Ravishankar, M., Bisiani, R., Thayer, E. (1997). Sub-vector clustering to improve memory and speed performance of acoustic likelihood computation. In: Proceedings of European conference on speech communication and technology, Eurospeech 1997.

9. Pellom, B.L., Sarikaya, R., Hansen, J.H.L. (2001). Fast likelihood computation techniques in nearest-neighbor based search for continuous speech recognition. IEEE Signal Processing Letters, 8(8), 221–224.

10. Saraclar, M., Riley, M., Bocchieri, E., Goffin, V. (2002). Towards automatic closed captioning: low latency real time broadcast news transcription. In: Proceedings of international conference on spoken language processing, Interspeech 2002.

11. Cardinal, P., Dumouchel, P., Boulianne, G., Comeau, M. (2008). GPU accelerated acoustics likelihood computations. In: Proceedings of international conference on spoken language processing, Interspeech 2008.

12. Dixon, P.R., Caseiro, D.A., Oonishi, T., Furui, S. (2007). The Titech large vocabulary WFST speech recognition system. In: IEEE workshop on automatic speech recognition & understanding, 2007 (pp. 443–448).

13. Dixon, P.R., Oonishi, T., Furui, S. (2009). Harnessing graphics processors for the fast computation of acoustic likelihoods in speech recognition. Computer Speech & Language, 23(4), 510–526.

14. Chong, J., Gonina, E., Yi, Y., Keutzer, K. (2009). A fully data parallel WFST-based large vocabulary continuous speech recognition on a graphics processing unit. In: Proceedings of international conference on spoken language processing, Interspeech 2009.

15. Dixon, P.R., Oonishi, T., Furui, S. (2009). Fast acoustic computations using graphics processors. In: IEEE international conference on acoustics, speech and signal processing, 2009 (pp. 4321–4324).

16. Cai, J., Bouselmi, G., Fohr, D., Laprie, Y. (2008). Dynamic Gaussian selection technique for speeding up HMM-based continuous speech recognition. In: IEEE international conference on acoustics, speech and signal processing, 2008 (pp. 4461–4464).

17. Morales, N., Gu, L., Gao, Y. (2008). Fast Gaussian likelihood computation by maximum probability increase estimation for continuous speech recognition. In: IEEE international conference on acoustics, speech and signal processing, 2008 (pp. 4453–4456).

18. Hennessy, J.L., & Patterson, D.A. (2003). Computer architecture: A quantitative approach. San Francisco: Morgan Kaufmann Publishers Inc.

19. You, K., Lee, Y., Sung, W. (2007). Mobile CPU based optimization of fast likelihood computation for continuous speech recognition. In: IEEE international conference on acoustics, speech and signal processing, 2007 (Vol. 4, pp. IV–985–IV–988).

20. Choi, Y., You, K., Choi, J., Sung, W. (2010). A real-time FPGA-based 20000-word speech recognizer with optimized DRAM access. IEEE Transactions on Circuits and Systems I: Regular Papers, 57(8), 2119–2131.

21. Dongarra, J.J., Croz, J.D., Hammarling, S., Duff, I.S. (1990). A set of level 3 basic linear algebra subprograms. ACM Transactions on Mathematical Software, 16(1), 1–17.

22. Duda, R.O., Hart, P.E., Stork, D.G. (2000). Pattern classification. Wiley-Interscience Publication.

23. Paliwal, K.K. (1992). Dimensionality reduction of the enhanced feature set for the HMM-based speech recognizer. Digital Signal Processing, 2, 157–173.

24. Eckart, C., & Young, G. (1936). The approximation of one matrix by another of lower rank. Psychometrika, 1(3), 211–218.

25. Lee, D.D., & Seung, H.S. (2000). Algorithms for non-negative matrix factorization. In: Neural Information Processing Systems, NIPS 2000 (pp. 556–562).

26. Huang, X., Acero, A., Hon, H.-W. (2001). Spoken language processing: A guide to theory, algorithm, and system development (1st ed.). Upper Saddle River: Prentice Hall PTR.

27. Rabiner, L., & Juang, B.-H. (1993). Fundamentals of speech recognition. Englewood Cliffs: Prentice-Hall.

Additional information

The major part of this work was done while the first author was at the Indian Institute of Science.

Appendices

Appendix A: Efficient Euclidean Distance Matrix Computation in Dynamic Time Warping (DTW) Using Matrix Multiplication

Computing pairwise distances in Euclidean space between two sets of vectors is a general problem in pattern classification and is often a key computation affecting the performance of speech recognition systems. The matrix multiplication based formulation presented in Section 2 for the computation of Gaussian likelihoods in LVCSR can be applied to compute pairwise Euclidean distances between the test and template utterances in the Dynamic Time Warping (DTW) algorithm. We show that it saves approximately \(\frac{1}{3}\) of the computational operations without using any low-rank matrix approximations.

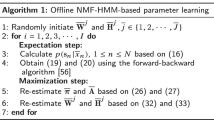

A.1 Dynamic Time Warping (DTW) Algorithm

Dynamic Time Warping (DTW) is a technique for optimally aligning variable length sequences of symbols based on some distance measure between the symbols [27]. In speech processing applications, each symbol is a feature vector, and we have two speech utterances (called the template and test utterances), which are variable length sequences of feature vectors. Typically, a feature vector is obtained by feature extraction algorithms [27] for every 10 ms of speech. The Euclidean distance measure is widely applied to the feature vectors thus obtained. DTW in recursive form is given in Algorithm 1.

For efficiency, DTW can be implemented without recursion. Non-recursive DTW can be seen as two distinct phases: (1) computing the Euclidean distance matrix, and (2) computing the accumulated distance matrix and extracting the least cost path. In the next subsection we show how to reduce the computational operations in the first phase, i.e., the Euclidean distance matrix computation.
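The two phases can be sketched in plain Python as follows. This is a minimal illustration, not the implementation measured in this paper: the step pattern (each cell reached from its three neighbouring cells with unit weight) is an assumed textbook choice that may differ from Algorithm 1, the names `distance_matrix` and `dtw_cost` are hypothetical, and backtracking of the least cost path is omitted.

```python
import math

def distance_matrix(template, test):
    """Phase 1: Euclidean distance between every template/test vector pair."""
    return [[math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y))) for y in test]
            for x in template]

def dtw_cost(template, test):
    """Phase 2: accumulate the distances with dynamic programming.

    Assumed step pattern: cell (i, j) is reached from (i-1, j), (i, j-1)
    or (i-1, j-1), all with unit weight.
    """
    dist = distance_matrix(template, test)
    m, n = len(template), len(test)
    inf = float("inf")
    # acc[i][j] = cost of the best alignment of template[:i] with test[:j]
    acc = [[inf] * (n + 1) for _ in range(m + 1)]
    acc[0][0] = 0.0
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            best_prev = min(acc[i - 1][j], acc[i][j - 1], acc[i - 1][j - 1])
            acc[i][j] = dist[i - 1][j - 1] + best_prev
    return acc[m][n]

# Toy utterances: the test sequence repeats the first template vector once.
template = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
test = [[0.0, 0.0], [0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
print(dtw_cost(template, test))
```

Phase 1 touches every one of the m × n pairs independently of the alignment, which is why it can be replaced by a single matrix computation without changing phase 2.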

A.2 Efficient Euclidean Matrix Computation Using Matrix Multiplication

The distance computation problem can be stated as follows: given a set \(X\) of \(m\) feature vectors (of dimension \(d\)) denoted by \(\mathbf{x}_i\), \(1 \le i \le m\), from the template utterance and a set \(Y\) of \(n\) feature vectors denoted by \(\mathbf{y}_j\), \(1 \le j \le n\), from the test utterance, we compute the Euclidean distance for all pairs \((\mathbf{x}_i, \mathbf{y}_j)\) of feature vectors. For a single pair, computing the distance amounts to a dot product between augmented vectors obtained from the feature vectors. So the entire distance computation problem is a matrix-matrix multiplication between matrices obtained from the feature vectors.

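The squared Euclidean distance between two vectors \(\mathbf{x}\) and \(\mathbf{y}\) of dimension \(d\) can be written as:

\[ D(\mathbf{x}, \mathbf{y}) = \sum_{i=1}^{d} (x_i - y_i)^2 \]

The total number of arithmetic operations (additions and multiplications) for computing one such distance is \(3d\): one subtraction, one multiplication and one accumulation per dimension. So the total number of operations for computing the distances for all pairs \((\mathbf{x}_i, \mathbf{y}_j)\) forming the distance matrix \(\mathbf{D}\) is

\[ 3mnd \]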

If we expand the quadratic term \((x_i - y_i)^2\), we can see that the squared Euclidean distance is a sum of three terms: (1) \(\sum_{i=1}^{d} x_i^2\), (2) \(\sum_{i=1}^{d} y_i^2\) and (3) \(-2\) times the dot product of vector \(\mathbf{x}\) with vector \(\mathbf{y}\). Now let the sequence of feature vectors in the template utterance, \(\mathbf{x}_1\) to \(\mathbf{x}_m\), form \(\mathbf{X}\), an \(m \times d\) matrix in which each row is a feature vector, and similarly let \(\mathbf{Y}\) be a \(d \times n\) matrix whose columns are the feature vectors of the test utterance. Then the matrix of squared Euclidean distances can be expressed as:

\[ \mathbf{D} = \mathbf{X^2} + \mathbf{Y^2} - 2\,\mathbf{X}\mathbf{Y} \]

where

- \(\mathbf{X^2}\) is a rank-1 matrix of size \(m \times n\) where \({\mathbf{X^2}}_{ij} = \mathbf{x}_i^\top \mathbf{x}_i\).
- \(\mathbf{Y^2}\) is a rank-1 matrix of size \(m \times n\) where \({\mathbf{Y^2}}_{ij} = \mathbf{y}_j^\top \mathbf{y}_j\).
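As a rough illustration of this decomposition, the sketch below compares a pair-by-pair baseline with the matrix-multiplication form. It uses NumPy rather than the GotoBLAS2 setup used in our experiments, and the function names and random test data are hypothetical. The two rank-1 matrices are never stored explicitly; broadcasting a column of row norms and a row of column norms has the same effect.

```python
import numpy as np

def pairwise_sq_dists_loop(X, Y):
    """Baseline: one squared distance per pair. X is (m, d), Y is (d, n)."""
    m, n = X.shape[0], Y.shape[1]
    D = np.empty((m, n))
    for i in range(m):
        for j in range(n):
            diff = X[i, :] - Y[:, j]
            D[i, j] = diff @ diff
    return D

def pairwise_sq_dists_matmul(X, Y):
    """D = X2 + Y2 - 2 X Y, with the rank-1 terms formed by broadcasting."""
    x_sq = np.sum(X * X, axis=1)[:, None]   # (m, 1): x_i^T x_i
    y_sq = np.sum(Y * Y, axis=0)[None, :]   # (1, n): y_j^T y_j
    return x_sq + y_sq - 2.0 * (X @ Y)      # the matrix product dominates the cost

rng = np.random.default_rng(0)
X = rng.standard_normal((5, 39))   # 5 template vectors as rows
Y = rng.standard_normal((39, 7))   # 7 test vectors as columns
D_loop = pairwise_sq_dists_loop(X, Y)
D_matmul = pairwise_sq_dists_matmul(X, Y)
print(np.allclose(D_loop, D_matmul))
```

Because the three terms are rounded separately, entries that should be exactly zero can come out as tiny negative numbers, so clamping with `np.maximum(D, 0.0)` before taking a square root is a sensible precaution.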

Computing \(\mathbf{X}\mathbf{Y}\) takes \(2mnd\) arithmetic operations (a matrix multiplication). Multiplying \(\mathbf{X}\mathbf{Y}\) by the scalar \(-2\) adds \(mn\) multiplications. \(\mathbf{X^2}\) requires computing \(\mathbf{x}_i^\top \mathbf{x}_i\) for \(1 \le i \le m\); each product takes \(2d\) operations and there are \(m\) vectors in \(\mathbf{X}\), for a total of \(2md\) operations. Similarly, computing \(\mathbf{Y^2}\) takes \(2nd\) operations. So the total number of arithmetic operations for computing \(\mathbf{D}\) is

\[ 2mnd + mn + 2md + 2nd \tag{12} \]

compared to the original \(3mnd\) operations. Thus the condition for saving arithmetic operations is

\[ 2mnd + mn + 2md + 2nd < 3mnd \]

that is

\[ d\,(mn - 2m - 2n) > mn \]

which makes sense if

\[ mn > 2(m + n) \]

which is true for all but very small values of \(m\) and \(n\). Putting \(m = n\) suggests \(m > 4\).

The number of operations saved is

\[ mnd - mn - 2md - 2nd \]

With \(3mnd\) being the original number of operations, the fraction \(f\) of the original operations saved is

\[ f = \frac{mnd - mn - 2md - 2nd}{3mnd} = \frac{1}{3} - \frac{1}{3d} - \frac{2}{3n} - \frac{2}{3m} \]

\(f\) approaches \(\frac{1}{3}\) as \(m\), \(n\) and \(d\) increase. In our context, typical values of \(m\) and \(n\) range from a few hundred to a few thousand, and \(d\) is typically 39. Thus the fraction of computations saved is \(\sim \frac{1}{3}\), that is, \(\sim mnd\) operations.

Note that in Eq. 12, \(mn\) multiplications are required for computing \(-2(\mathbf{X}\mathbf{Y})\). If we compute \((-2\mathbf{X})\mathbf{Y}\) instead, we incur \(md\) multiplications; similarly, computing \(\mathbf{X}(-2\mathbf{Y})\) incurs \(nd\) multiplications. Performing the same analysis with \(md\) in place of \(mn\) in Eq. 12, we can derive

\[ f = \frac{mnd - 3md - 2nd}{3mnd} = \frac{1}{3} - \frac{1}{n} - \frac{2}{3m} \]

Thus the fraction of total operations saved depends only on \(m\) and \(n\). The price to be paid is the induced error in the precision of the \(-2\mathbf{X}\mathbf{Y}\) matrix due to the different order of evaluation.
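The operation counts can be checked numerically with a small helper. This is only a sketch of the arithmetic in this subsection: the function name `fraction_saved` and its `scale_cost` parameter are hypothetical, and the counts are taken as a baseline of 3mnd operations against 2mnd + 2md + 2nd plus the cost of applying the factor −2.

```python
def fraction_saved(m, n, d, scale_cost=None):
    """Fraction of the baseline 3*m*n*d operations saved.

    scale_cost is the number of multiplications spent on the factor -2:
    m*n when the product XY is scaled (the default), m*d when X is scaled first.
    """
    if scale_cost is None:
        scale_cost = m * n
    baseline = 3 * m * n * d
    matmul_based = 2 * m * n * d + scale_cost + 2 * m * d + 2 * n * d
    return (baseline - matmul_based) / baseline

m = n = 1000   # utterance lengths in the range discussed in the text
d = 39         # feature dimension
print(fraction_saved(m, n, d))                    # scaling XY
print(fraction_saved(m, n, d, scale_cost=m * d))  # scaling X before multiplying
print(fraction_saved(4, 4, d))                    # m = n = 4: no saving yet
```

For m = n = 1000 and d = 39 both variants come out slightly below one third, and for m = n = 4 the value is negative, in line with the condition m > 4.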

A.3 Performance Results

Table 10 shows the impact of matrix-multiplication based Euclidean distance matrix computation for DTW on an Intel Xeon E5440 CPU @ 2.83 GHz. For efficient matrix multiplication we used the GotoBLAS2 v1.13 library, and only a single core was used for the computation. Baseline:Total indicates the total running time of the non-recursive baseline DTW algorithm compiled with the -O2 optimization of gcc 4.2. Matrix:Total indicates the total running time of the non-recursive DTW algorithm in which the Euclidean distances are computed using the matrix-multiplication approach. For our experiments, we generate the test and template utterances randomly. Each utterance is a sequence of 39-dimensional vectors. Size shows the length (in number of 39-dimensional vectors) of the template and test utterances. Without loss of generality, we have taken identical lengths for the test and template utterances. We can see that the matrix multiplication based approach to distance computation brings substantial speedups for the overall DTW computation. As we increase the size of the utterances the speedup decreases, because more time is spent in the dynamic programming based distance accumulation phase.

In Table 11, we measure the time for only the Euclidean distance computation phase. Baseline:DistCalc shows the time spent in distance computation in the baseline version and Matrix:DistCalc shows the time spent in distance computation using matrix multiplication. We get a speedup of 5.6 even as we scale the problem size. This agrees with our theoretical analysis that, irrespective of the size of the template and test utterances, we save a constant fraction \(\frac{1}{3}\) of the arithmetic operations. However, in practice the speedup achieved is much larger because the BLAS implementation also improves cache memory utilization.

Appendix B: Comparison with Principal Component Analysis (PCA)

In this section, we compare our method of deriving low-rank factors of the Gaussian parameters by optimum low-rank approximation of G (henceforth the svd-augmented method) with PCA, another feature space rank reduction method, as described in [23].

B.1 Computational Performance Comparison

We assume that both methods, PCA and svd-augmented, are implemented in the matrix-matrix multiplication manner described in this paper. Let \(\mathbf{X}\) be a feature vector matrix of size \(m \times d\) whose rows are the individual feature vectors, and let there be \(n\) Gaussians. The baseline number of arithmetic operations using the matrix multiplication approach is \(4mnd\), because we require two matrix multiplications of size \((m \times d) \times (d \times n)\) to compute the likelihood matrix or, equivalently, one \((m \times 2d) \times (2d \times n)\) multiplication.
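The single \((m \times 2d) \times (2d \times n)\) multiplication can be sketched for diagonal-covariance Gaussians as follows. The exact definitions of \(\mathbf{S}\) and \(\mathbf{M}\) are given in the main text, not in this appendix, so the choices below are assumptions made for illustration: \(\mathbf{S}\) holds \(-\frac{1}{2\sigma^2}\), \(\mathbf{M}\) holds \(\frac{\mu}{\sigma^2}\), and the per-Gaussian constant is added after the product. Mixture weights and the summation over mixture components are left out, and all function names are hypothetical.

```python
import numpy as np

def loglik_direct(X, mu, var):
    """Reference: diagonal-covariance Gaussian log-likelihoods, one pair at a time."""
    m, n = X.shape[0], mu.shape[0]
    L = np.empty((m, n))
    for i in range(m):
        for j in range(n):
            L[i, j] = -0.5 * np.sum(np.log(2 * np.pi * var[j])
                                    + (X[i] - mu[j]) ** 2 / var[j])
    return L

def loglik_matmul(X, mu, var):
    """Same values from one (m x 2d) by (2d x n) product plus a per-Gaussian constant."""
    S = (-0.5 / var).T                    # (d, n): weights for the squared features
    M = (mu / var).T                      # (d, n): weights for the raw features
    const = -0.5 * np.sum(np.log(2 * np.pi * var) + mu ** 2 / var, axis=1)  # (n,)
    augmented = np.hstack([X * X, X])     # (m, 2d): [X^2 | X]
    params = np.vstack([S, M])            # (2d, n): S stacked on M
    return augmented @ params + const

rng = np.random.default_rng(0)
X = rng.standard_normal((6, 39))            # 6 frames of 39-dimensional features
mu = rng.standard_normal((10, 39))          # 10 Gaussian means
var = rng.uniform(0.5, 2.0, size=(10, 39))  # diagonal variances
L_direct = loglik_direct(X, mu, var)
L_matmul = loglik_matmul(X, mu, var)
print(np.allclose(L_direct, L_matmul))
```

The parameter matrix depends only on the model, so it can be built once and reused for every block of frames.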

When the rank gets reduced to d − k, the computation will accordingly reduce to 4mn(d − k).

We analyze the algorithms in terms of overhead and savings. The ideal savings correspond to the fraction of the rank reduced, i.e., \(4mnk\). But to reduce the features to the lower rank we have to apply some transformation, and this is the overhead. It is also assumed that \(n \gg m\); otherwise the overhead may become greater than the savings. The point at which the overhead dominates the savings is analyzed in Section 2.2.

The computational overhead of PCA comes from transforming \(\mathbf{X}\) by a factor of size \(d \times (d-k)\), where \(0 < k \le d\). The remaining computation then becomes an \((m \times (2d-2k)) \times ((2d-2k) \times n)\) multiplication, which takes \(4mn(d-k)\) operations.

Since the svd-augmented method operates on augmented feature vectors \(\left[ {\bf X^2} | {\bf X} \right]\) of size \(m \times 2d\), we have to multiply them by a factor of size \(2d \times (2d-2k)\) to bring them to the form \(m \times (2d-2k)\). From then on it is the same \((m \times (2d-2k)) \times ((2d-2k) \times n)\) multiplication.

The overhead in the case of PCA is \((m \times d) \times (d \times (d-k))\), that is \(2md(d-k)\) operations. In the case of the svd-augmented method, the overhead is \((m \times 2d) \times (2d \times (2d-2k))\), that is \(8md(d-k)\) operations.

So the svd-augmented method incurs four times the overhead of PCA. However, since the computational saving is \(4mnk\), this overhead is a small fraction of it, given \(n \gg m, d\).
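To get a feel for the magnitudes, the counts above can be evaluated for concrete sizes. The helper name `op_counts` and the sizes used (100 frames, 50,000 Gaussians, 39 dimensions, 10 dimensions dropped) are hypothetical and chosen only for illustration; they are not taken from our experiments.

```python
def op_counts(m, n, d, k):
    """Arithmetic-operation counts from the analysis above."""
    savings = 4 * m * n * k                 # ideal saving from dropping k dimensions
    pca_overhead = 2 * m * d * (d - k)      # (m x d) times (d x (d - k))
    svd_overhead = 8 * m * d * (d - k)      # (m x 2d) times (2d x (2d - 2k))
    return savings, pca_overhead, svd_overhead

# Hypothetical sizes: 100 frames, 50,000 Gaussians, 39 dimensions, 10 dropped.
savings, pca_overhead, svd_overhead = op_counts(m=100, n=50_000, d=39, k=10)
print(svd_overhead / pca_overhead)   # ratio of the two overheads
print(pca_overhead / savings)        # PCA overhead relative to the saving
print(svd_overhead / savings)        # svd-augmented overhead relative to the saving
```

With these counts the PCA overhead relative to the saving is \(d(d-k)/(2nk)\), so both overheads shrink in relative terms as the number of Gaussians \(n\) grows.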

There is one minor point still left. In the svd-augmented method, before the matrix multiplication we have to compute the element-wise square of \(\mathbf{X}\), that is \(\mathbf{X^2}\), which takes \(md\) operations. In the case of PCA, we first reduce the rank of \(\mathbf{X}\), so the squares are computed on the reduced matrix of size \(m \times (d-k)\), which takes \(m(d-k)\) operations.

So, the original baseline computation:

\[ 4mnd + md \]

Computation after rank reduction of \(k\) for PCA:

\[ 4mn(d-k) + 2md(d-k) + m(d-k) \]

Computation after rank reduction of \(2k\) for svd-augmented:

\[ 4mn(d-k) + 8md(d-k) + md \]

B.1.1 Discussion

One point here is that we have implicitly assumed that a rank reduction of \(k\) in the feature space is equivalent to a rank reduction of \(2k\) in the augmented feature space of dimension \(2d\). Strictly speaking, we cannot compare PCA with the svd-augmented method, because the latter operates on the augmented feature space. For an ideal comparison with the svd-augmented method, PCA would have to be carried out on the augmented features, but then we could not use existing training algorithms, because that would mean estimating means for vectors of type \(\left[ {\bf X^2} | {\bf X} \right]\), which would change the model and the recognizer completely. So, to apply PCA in the augmented feature space, we would need new training algorithms that train coefficients having some equivalence to multivariate Gaussian modeling of the feature space. We have not explored this direction.

B.2 Recognition Performance Comparison with a Version of PCA

The svd-augmented method is an unsupervised method similar to PCA. PCA retains the directions in which the variance of the training data is maximum. Let \(\mathbf{P}\), of size \(d \times (d-k)\), denote the matrix that transforms the feature space into the lower dimensional one as described above. For the transformed features \(\mathbf{X}\mathbf{P}\), the means become \(\mathbf{M}\mathbf{P}\) and the covariances become \({\bf P}^{\bf T}\boldsymbol{\Sigma}{\bf P}\). These covariance matrices are in general full, so we need to estimate the diagonal covariances once again.

If we project the reduced rank features back onto the \(d\)-dimensional space, the resulting features \(\mathbf{X}\mathbf{P}\mathbf{P}^{\bf T}\) give the minimum sum of squared errors (Frobenius norm) with respect to \(\mathbf{X}\). Now we can compare this version of PCA with the svd-augmented method, which minimizes the sum of squared errors in the likelihood matrix \(\left[ {\bf X}^{\bf 2} | {\bf X} \right] \cdot \begin{bmatrix} {\bf S} \\ {\bf M} \end{bmatrix}\) for a given Gaussian parameter model \(\begin{bmatrix} {\bf S} \\ {\bf M} \end{bmatrix}\). Table 12 compares WER against effective rank. We can observe that as the rank reduces, the svd-augmented method performs better, while the WER of the PCA method increases sharply. We think this happens because, as we lower the rank, more and more of the useful information-carrying dimensions are discarded in the PCA method. In contrast, since the svd-augmented method approximates the likelihoods, it retains information from the higher dimensions, which is encoded in its augmented feature transform matrix of size \(2d \times (2d-2k)\). Intuitively, the svd-augmented method captures more information at the cost of increased overhead.

B.3 Summary

- The PCA method requires storing different factors for different ranks separately, whereas with the svd-augmented method we can select the top-k rows (columns) of the factors to obtain the optimum factors for rank k. This is an elegant way of efficiently implementing an LVCSR system in which computation can be traded off against accuracy dynamically.
- The svd-augmented method approximates the baseline model, while PCA retrains the models for the lower rank.
- The svd-augmented method operates on the augmented feature space, while PCA transforms the original feature space.
- The svd-augmented method works better than PCA as the effective rank reduces because it captures the highest variances in the likelihoods, and errors in likelihoods have a direct impact on word error rate in LVCSR systems. However, the svd-augmented method incurs four times the computational overhead of PCA (Section B.1), which is still small because the overhead of PCA itself is small when the number of Gaussians in the model is large.
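The first point, selecting the leading rows and columns of stored factors to obtain any lower rank, can be illustrated with a truncated singular value decomposition. The sketch below is an assumption-laden stand-in: it factors a random matrix `W` of the same shape as a \(2d \times n\) parameter matrix, which corresponds to a direct low-rank approximation in the sense of Eckart and Young, not to the likelihood-optimal derivation of the svd-augmented method. All names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((78, 500))   # stand-in for a (2d x n) parameter matrix

# Factor once; singular values are returned in decreasing order.
U, s, Vt = np.linalg.svd(W, full_matrices=False)

def factors(rank):
    """Rank-`rank` factors obtained by slicing the stored full factors."""
    A = U[:, :rank] * s[:rank]   # (2d, rank): leading columns, scaled
    B = Vt[:rank, :]             # (rank, n): leading rows
    return A, B

def rel_error(rank):
    """Relative Frobenius error of the rank-`rank` approximation of W."""
    A, B = factors(rank)
    return np.linalg.norm(W - A @ B) / np.linalg.norm(W)

F = rng.standard_normal((10, 78))    # stand-in for augmented features (m x 2d)
A, B = factors(40)
approx = (F @ A) @ B                 # reduce the features first, then multiply

for rank in (78, 60, 40, 20):
    print(rank, rel_error(rank))
```

Only one set of factors is stored, and the rank can be changed at run time by changing the slice. A random matrix has a flat spectrum, so the errors printed here are much larger than one would expect for a structured parameter matrix.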

Cite this article

Gajjar, M.R., Sreenivas, T.V. & Govindarajan, R. Fast Likelihood Computation in Speech Recognition using Matrices. J Sign Process Syst 70, 219–234 (2013). https://doi.org/10.1007/s11265-012-0704-4

DOI: https://doi.org/10.1007/s11265-012-0704-4