Abstract

Based on the texture features of pulverized coal combustion flame images in the rotary kiln oxidized pellet sintering process, a combustion working condition recognition method using the generalized learning vector quantization (GLVQ) neural network is proposed. Firstly, the digital flame image is analyzed to extract texture features, such as energy, entropy and inertia, based on the grey-level co-occurrence matrix (GLCM), which provide qualitative information on the changes in the visual appearance of the flame. Then the kernel principal component analysis (KPCA) method is adopted to reduce the dimensionality of the high-dimensional input vector, which greatly reduces the GLVQ target dimension and network scale. Finally, the GLVQ neural network is trained by using the normalized texture feature data. The test results show that the proposed KPCA-GLVQ classifier performs well in both training speed and correct recognition rate, and that it meets the requirement for real-time combustion working condition recognition in the rotary kiln process.

1 Introduction

The rotary kiln oxidized pellet sintering process involves mass transfer, heat transfer and complex chemical reactions. The control of the modern pelletizing process is very complex: it involves a large number of physical parameters such as temperature, pressure, speed and flow rate, and many other factors including physical changes, chemical reactions and the distribution of gas in the solid material layers[1]. For a long time, the control of the rotary kiln pelletizing process has been only semi-automatic, relying on the operators’ experience in watching the fire. Because the control performance is susceptible to the operators’ subjective factors, it is difficult to ensure stable and qualified product quality over the long term. Recognizing the pulverized coal combustion working conditions in the rotary kiln by digital image processing is therefore important for stabilizing the sintering process and improving the combustion efficiency.

Inspired by the operating practice of watching the fire, and by the close relationship between the rotary kiln combustion working conditions[1] and the flame images, many scholars have studied working condition recognition and temperature detection by analyzing flame images in the rotary kiln sintering process. Li et al.[2] proposed a neural network control system for the rotary kiln based on flame image features, which adopted a visual inspection system to identify the burning flame in the kiln, including flame image acquisition, preprocessing, segmentation, feature extraction and recognition. Szatvanyi et al.[3] studied combustion-related variables and the prediction of product quality based on digital RGB flame images and multivariate image analysis (MIA). Li et al.[4] proposed a secondary simulation method based on pattern clustering and image processing. Jiang et al.[5] proposed an improved sintering state identification method that integrated image preprocessing, image segmentation and feature extraction with support vector machine (SVM) theory. The simulation results showed that the multi-level SVM recognition rate was 93.89%, while the back propagation (BP) neural network recognition rate was 65%. Zhang et al.[6] proposed a flame image description method based on the Fourier transform and used an artificial neural network and the SVM to realize flame image recognition. Sun et al.[7] proposed a machine identification method for the burning zone status based on the fusion of flame image features and key process data. Yuan et al.[8] proposed an improved ART-2 clustering decomposition algorithm to identify the rotary kiln working conditions by using information fusion technology. Sun et al.[9] proposed a fuzzy c-means (FCM) image segmentation method based on the image gray values and texture coarseness.

Based on the texture features of the pulverized coal combustion flame images in the rotary kiln oxidized pellet sintering process, this paper proposes a working condition recognition method that combines kernel principal component analysis with generalized learning vector quantization (KPCA-GLVQ). Experiments show that the method achieves good results in both execution speed and recognition accuracy.

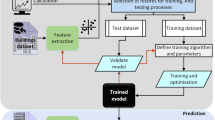

2 Recognition strategy

The block diagram of the rotary kiln combustion condition recognition system is shown in Fig. 1. Firstly, the 14 texture parameters of the combustion flame image based on the grey-level co-occurrence matrix (GLCM) are calculated to describe the visual features of the flame image. Then in order to reduce the target dimension and the size of the GLVQ neural network, the KPCA method is used to realize the dimension reduction of the high dimensional input vector composed of the normalized texture features data. Finally, the GLVQ network is used to recognize the rotary kiln combustion working conditions.

3 Texture features extraction

3.1 Grey-level co-occurrence matrix

The statistical characteristics of the combustion flame image texture variables of the rotary kiln can reflect the working conditions of the pulverized coal combustion. The grey-level co-occurrence matrix (GLCM) is an important method for analyzing image texture features, based on estimating the second-order joint conditional probability density function of the image[10, 11]. Fig. 2 is a GLCM schematic diagram, where i and j denote the gray scales of the corresponding pixels.

The GLCM describes the joint occurrence probability P(i, j, δ, θ) of two pixels: the pixel (x, y) with gray scale i in the image f(x, y), and the pixel (x + Δx, y + Δy) with gray scale j, located at direction θ and distance δ from it. The mathematical formula is

$$P(i,j,\delta,\theta) = \{ [(x,y),(x + \Delta x,y + \Delta y)]\;|\;f(x,y) = i,\;f(x + \Delta x,y + \Delta y) = j;\;x = 0,1, \cdots ,{N_x} - 1;\;y = 0,1, \cdots ,{N_y} - 1\} \tag{1}$$

where i, j = 0, 1, ⋯, L − 1, x and y are the coordinates of the image pixel, L is the number of image gray levels, and \({N_x}\) and \({N_y}\) represent the numbers of columns and rows of the image. Haralick et al.[12] proposed 14 GLCM-based texture parameters (\(f_1\)–\(f_{14}\)): angular second moment (ASM), contrast, correlation, sum of squares (SS), sum average (SA), inverse difference moment (IDM), entropy, sum variance (SV), sum entropy (SE), difference entropy (DE), difference variance (DV), maximum correlation coefficient (MCC) and information measures of correlation (IOC), whose calculation equations are shown in Table 1.
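
As an illustration of the counting behind the GLCM, the following is a minimal Python sketch under stated assumptions, not the implementation used in the paper. It builds a normalized, non-symmetric co-occurrence matrix for a single offset (Δx, Δy) and evaluates four of the Haralick-type features in their commonly used textbook forms (the formulas of Table 1 are not reproduced here, and the natural logarithm in the entropy is an assumption). The function names `glcm` and `texture_features` and the 4 × 4 toy image are hypothetical.

```python
import numpy as np

def glcm(image, dx, dy, levels):
    """Normalized co-occurrence matrix P(i, j) for the pixel offset (dx, dy)."""
    img = np.asarray(image)
    rows, cols = img.shape
    counts = np.zeros((levels, levels), dtype=float)
    for y in range(rows):
        for x in range(cols):
            x2, y2 = x + dx, y + dy
            # Count the pair only if the neighbour lies inside the image.
            if 0 <= x2 < cols and 0 <= y2 < rows:
                counts[img[y, x], img[y2, x2]] += 1
    total = counts.sum()
    return counts / total if total > 0 else counts

def texture_features(p):
    """A few Haralick-type features (standard forms, assumed here)."""
    i, j = np.indices(p.shape)
    nonzero = p[p > 0]
    return {
        "asm": float(np.sum(p ** 2)),                      # energy
        "contrast": float(np.sum((i - j) ** 2 * p)),       # inertia
        "entropy": float(-np.sum(nonzero * np.log(nonzero))),
        "idm": float(np.sum(p / (1.0 + (i - j) ** 2))),    # homogeneity
    }

# Toy image with 4 grey levels; offset (1, 0) means distance 1, direction 0 degrees.
toy = np.array([[0, 0, 1, 1],
                [0, 0, 1, 1],
                [0, 2, 2, 2],
                [2, 2, 3, 3]])
p = glcm(toy, dx=1, dy=0, levels=4)
features = texture_features(p)
print(features)
```

In practice the matrix is often accumulated over several directions and made symmetric before the features are computed; that choice is not specified in the text above and is left out of the sketch.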

This paper compares four methods (GLCM_Features 1, GLCM_Features 2, GLCM_Features 3 and GLCM_Features 4) for calculating the GLCM-based texture parameters of the combustion flame images. The experimental results on time complexity are shown in Fig. 3, from which it can be seen that the GLCM_Features 4 method has the shortest computation time. The combustion flame image texture parameters can be used to reflect the rotary kiln sintering working conditions, which are divided into complete combustion (represented by 1) and incomplete combustion (represented by 0). The paper uses the formulas in Table 1 and the GLCM_Features 4 method to calculate the texture features of 100 combustion flame images. The results are shown in Table 2.

3.2 Dimension reduction of flame image texture features based on KPCA

The kernel principal component analysis (KPCA) method is used to reduce the dimensionality of the high-dimensional input vector formed by the combustion flame image texture features[13, 14]. Its basic principle is described as follows[15, 16].

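Given a sample set \({x_i}\) (i = 1, 2, ⋯, M) with \({x_i} \in {{\bf{R}}^N}\), the nonlinear mapping into the feature space F is given as

$$\varphi :{{\bf{R}}^N} \to F,\quad x \mapsto \varphi (x). \tag{2}$$

So sample \({x_i}\) is mapped to \(\varphi ({x_i})\). Then the covariance matrix of the new sample space is calculated according to

$$R = {1 \over M}\sum\limits_{i = 1}^M {\varphi ({x_i})\varphi {{({x_i})}^{\rm{T}}}} . \tag{3}$$

The eigenvalue decomposition is carried out according to

$$\lambda Q = RQ \tag{4}$$

where λ (λ > 0) is an eigenvalue of R, and Q is the corresponding eigenvector. Multiplying both sides of (4) by \(\varphi ({x_i})\), we obtain

$$\lambda (\varphi ({x_i}) \cdot Q) = (\varphi ({x_i}) \cdot RQ),\quad i = 1,2, \cdots ,M. \tag{5}$$

Moreover, coefficients \({\alpha _i}\) (i = 1, 2, ⋯, M) exist such that the following equation holds.

$$Q = \sum\limits_{i = 1}^M {{\alpha _i}\varphi ({x_i})} . \tag{6}$$

Combining the above two equations gives

$$\lambda \sum\limits_{j = 1}^M {{\alpha _j}(\varphi ({x_i}) \cdot \varphi ({x_j}))} = {1 \over M}\sum\limits_{j = 1}^M {{\alpha _j}\sum\limits_{k = 1}^M {(\varphi ({x_i}) \cdot \varphi ({x_k}))(\varphi ({x_k}) \cdot \varphi ({x_j}))} } \tag{7}$$

and the matrix K (M × M) is defined as

$${K_{ij}} = (\varphi ({x_i}) \cdot \varphi ({x_j})). \tag{8}$$

Set α as the corresponding eigenvector of the kernel matrix K. Then

$$M\lambda \alpha = K\alpha \tag{9}$$

where \(\alpha = {({\alpha _1},{\alpha _2}, \cdots ,{\alpha _M})^{\rm{T}}}\).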

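Assume that the eigenvalues obtained from (9) are \({\lambda _1} \geqslant {\lambda _2} \geqslant \cdots \geqslant {\lambda _p} \geqslant \cdots \geqslant {\lambda _M}\), where \({\lambda _p}\) is the last non-zero eigenvalue, and that the eigenvector corresponding to \({\lambda _k}\) (k = 1, 2, ⋯, p) is \({\alpha ^k} = {(\alpha _1^k, \cdots ,\alpha _M^k)^{\rm{T}}}\). Then the eigenvectors \({Q^k}\) in F are normalized according to

$$({Q^k} \cdot {Q^k}) = 1,\quad k = 1,2, \cdots ,p. \tag{10}$$

Putting \({Q^k} = \mathop \sum \nolimits_{i = 1}^M \,\alpha _i^k\varphi ({x_i})\) and \({K_{ij}} = (\varphi ({x_i}) \cdot \varphi ({x_j}))\) into (10) leads to

$$M{\lambda _k}({\alpha ^k} \cdot {\alpha ^k}) = 1. \tag{11}$$

The principal component of a new sample x is obtained by projecting its image \(\varphi (x)\) in F onto \({Q^k}\), which is described by

$$({Q^k} \cdot \varphi (x)) = \sum\limits_{i = 1}^M {\alpha _i^k(\varphi ({x_i}) \cdot \varphi (x))} . \tag{12}$$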

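For the sake of simplicity, the derivation above assumes that the mapped samples are centered in F. In practice, \(\hat K = K - {I_M}K - K{I_M} + {I_M}K{I_M}\) is substituted for the kernel matrix of all mapped samples, where \({({I_M})_{ij}} = {1 \over M}\). The paper adopts the Gaussian function as the KPCA kernel function, which is described as

$$K(x,y) = \exp \left( { - {{||x - y|{|^2}} \over {2{\sigma ^2}}}} \right) \tag{13}$$

where σ is the kernel width parameter.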

Based on the above, the procedure of the KPCA algorithm is as follows:

1) Calculate the kernel matrix \({\hat K}\).

2) Calculate the eigenvalues and eigenvectors of the kernel matrix \({\hat K}\).

3) Sort the eigenvalues in descending order, \({\lambda_1}\geqslant{\lambda_2}\geqslant \cdots \geqslant {\lambda _M}\), and calculate the cumulative contribution ratio \(\phi (p)\) by (14) to decide the number p of principal components to extract.

$$\phi \left(p \right) = {{\sum\limits_{i = 1}^p {{\lambda _i}} } \over {\sum\limits_{i = 1}^M {{\lambda _i}} }}. \tag{14}$$

4) Normalize the eigenvectors corresponding to the p (1 ≤ p ≤ M) largest eigenvalues according to (11).

5) Calculate the new principal components by (12).
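
The five steps above can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions rather than the authors' implementation: the Gaussian kernel is parameterized as exp(−‖x − y‖²/(2σ²)), the kernel width `sigma`, the 85% threshold argument and the function names are hypothetical, and the input is synthetic random data standing in for 14 normalized texture features. The principal components are computed for the training samples themselves.

```python
import numpy as np

def gaussian_kernel_matrix(X, sigma):
    """K_ij = exp(-||x_i - x_j||^2 / (2 sigma^2)) (assumed parameterization)."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * sigma ** 2))

def kpca(X, sigma=1.0, threshold=0.85):
    """Return the training-sample scores and the cumulative contribution ratios."""
    M = X.shape[0]
    K = gaussian_kernel_matrix(X, sigma)
    I_M = np.full((M, M), 1.0 / M)
    # Step 1: centered kernel matrix.
    K_hat = K - I_M @ K - K @ I_M + I_M @ K @ I_M
    # Step 2: eigen-decomposition (K_hat is symmetric).
    eigvals, eigvecs = np.linalg.eigh(K_hat)
    # Step 3: sort in descending order and accumulate the contribution ratio.
    order = np.argsort(eigvals)[::-1]
    eigvals = np.clip(eigvals[order], 0.0, None)
    eigvecs = eigvecs[:, order]
    ratio = np.cumsum(eigvals) / np.sum(eigvals)
    p = min(int(np.searchsorted(ratio, threshold)) + 1, M)
    # Step 4: rescale unit eigenvectors so that (eigenvalue of K_hat) * (a . a) = 1.
    alphas = eigvecs[:, :p] / np.sqrt(eigvals[:p])
    # Step 5: project the (centered) training samples onto the p components.
    scores = K_hat @ alphas
    return scores, ratio[:p]

# Synthetic stand-in for 30 samples of 14 normalized texture features.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(30, 14))
scores, ratio = kpca(X_demo, sigma=4.0)
print(scores.shape, ratio[-1])
```

The eigenvalues returned by `np.linalg.eigh` are those of the centered kernel matrix, so they play the role of Mλ in the normalization step. Projecting a new sample would additionally require centering its kernel vector against the training set, which is omitted here.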

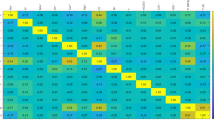

Kernel principal component analysis is carried out on the GLCM-based texture parameters of the flame images, and the results are given in Table 3. It can be seen that the cumulative contribution ratio of the first 5 principal components already exceeds 85%. Thus, the principal components obtained by applying KPCA to the original variables are used as the input variables of the GLVQ neural network model, which not only preserves the feature information of the original variables but also reduces the scale of the GLVQ neural network.

4 Working condition recognition

4.1 Training of LVQ neural network

The learning vector quantization (LVQ) neural network is a self-organizing neural network model with a supervised learning strategy proposed by Kohonen[17, 18]. The LVQ neural network is composed of three layers of neurons, namely the input layer, the hidden layer (competitive layer) and the output layer. The network structure is shown in Fig. 4.

In the training procedure, \({x^p}\) is the p-th training vector, \({T^p}\) is the category to which \({x^p}\) belongs, \({C_j}\) is the category to which the j-th output neuron belongs, and the cluster number is n. The training steps of the competitive layer weights are described as follows:

Step 1. Initialize the competitive layer weight vectors \(W = \{ {w_1},{w_2}, \cdots ,{w_n}\} \), the learning rate η ∈ [0, 1], the iteration counter n, and the total number of iterations N.

Step 2. Execute 1) and 2) for each vector \({x^p}\) in the training set.

1) Calculate the distance between the sample \({x^p}\) and each cluster center, and take the cluster center k with the minimum distance as the winning neuron, i.e., \(\Vert{w_k} - {x^p}\Vert < \Vert{w_j} - {x^p}\Vert,\,(j = 1,2,\, \ldots ,n;\,j \ne k)\).

2) Revise the weights of the winning neuron by the following equation, where a right classification means that the category of the winning neuron equals \({T^p}\).

$$W_{ij}^{{\rm{new}}} = \begin{cases} W_{ij}^{{\rm{old}}} + \eta ({x_i} - W_{ij}^{{\rm{old}}}), & {\rm{right\ classification}} \\ W_{ij}^{{\rm{old}}} - \eta ({x_i} - W_{ij}^{{\rm{old}}}), & {\rm{false\ classification}}. \end{cases} \tag{15}$$

Step 3. Update the learning rate η by

$$\eta = {\eta _0}\left({1 - {n \over N}} \right). \tag{16}$$

Step 4. Check the termination condition. If n is smaller than N, return to Step 2; otherwise terminate the training procedure.
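
Steps 1 to 4 can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' code: the function names, the initial prototypes and the six toy samples are hypothetical, and the learning rate is recomputed at the start of each pass, which gives the same schedule as updating it in Step 3.

```python
import numpy as np

def train_lvq(X, T, W_init, C, eta0=0.1, N=100):
    """LVQ training: X samples, T their labels, W_init prototypes, C prototype labels."""
    W = np.asarray(W_init, dtype=float).copy()
    for n in range(N):
        eta = eta0 * (1.0 - n / N)              # linearly decaying learning rate
        for x, t in zip(X, T):
            # Winning neuron: prototype closest to the sample.
            k = int(np.argmin(np.linalg.norm(W - x, axis=1)))
            # Attract on a right classification, repel on a false one.
            sign = 1.0 if C[k] == t else -1.0
            W[k] += sign * eta * (x - W[k])
    return W

def predict_lvq(X, W, C):
    """Label each sample with the category of its nearest prototype."""
    d = np.linalg.norm(X[:, None, :] - W[None, :, :], axis=2)
    return C[np.argmin(d, axis=1)]

# Toy two-class data (hypothetical): class 0 near (0, 0), class 1 near (1, 1).
X_toy = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
                  [1.0, 1.0], [0.9, 1.0], [1.0, 0.9]])
T_toy = np.array([0, 0, 0, 1, 1, 1])
C_toy = np.array([0, 1])
W_trained = train_lvq(X_toy, T_toy, [[0.4, 0.4], [0.6, 0.6]], C_toy)
print(predict_lvq(X_toy, W_trained, C_toy))
```

Only the winning prototype moves at each presentation, which is the behaviour that the GLVQ network of the next subsection is designed to generalize.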

4.2 GLVQ neural network

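The LVQ algorithm has two drawbacks: 1) some neurons are underutilized; 2) the information between the input samples and the competition units is wasted[19]. So, Pal et al.[20] proposed a generalized learning vector quantization (GLVQ) network. For a given input vector, the GLVQ algorithm updates the weights of all neurons in the competitive layer. Given n samples in a p-dimensional feature space, namely \(X = \{ {X_1},{X_2}, \cdots ,{X_n}\} \), and letting i denote the index of the best-matching (winning) neuron for an input X, the loss function \({L_x}\) is defined as

$${L_x} = L(X;{W_1}, \cdots ,{W_c}) = \sum\limits_{r = 1}^c {{g_r}||X - {W_r}|{|^2}} \tag{17}$$

$${g_r} = \begin{cases} 1, & r = i \\ {1 \over {\sum\limits_{j = 1}^c {||X - {W_j}|{|^2}} }}, & {\rm{otherwise}} \end{cases} \tag{18}$$

where c is the number of categories.

The purpose of the GLVQ learning algorithm is to find the c cluster centers \({W_r}\) such that the set \(W = \{ {W_r}\} \) minimizes the expected value Γ(W) of the loss function \({L_x}\), which is calculated by

$$\Gamma (W) = \int {\sum\limits_{r = 1}^c {{g_r}||X - {W_r}|{|^2}f(X){\rm{d}}X} } \tag{19}$$

where f(X) is the probability density function of X. The gradient descent algorithm can be utilized to solve this optimization problem.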
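
The gradient-descent step can be checked numerically. The sketch below assumes the loss form of Pal et al.: the squared distance to the winning center plus the sum of the other squared distances divided by D, where D is the sum of all squared distances. It compares finite-difference gradients of that loss with the closed-form update coefficients, (D² − D + ‖X − W_i‖²)/D² for the winner and ‖X − W_i‖²/D² for the other centers. The function names and the numerical values are hypothetical.

```python
import numpy as np

def glvq_loss(x, W):
    """Assumed GLVQ loss for one sample: winner weight 1, all others 1/D."""
    d2 = np.sum((x - W) ** 2, axis=1)
    i = int(np.argmin(d2))
    D = d2.sum()
    g = np.full(len(W), 1.0 / D)
    g[i] = 1.0
    return float(np.sum(g * d2))

def glvq_direction(x, W):
    """Closed-form descent direction, equal to -0.5 * dL/dW for each center."""
    d2 = np.sum((x - W) ** 2, axis=1)
    i = int(np.argmin(d2))
    D = d2.sum()
    coef = np.full(len(W), d2[i] / D ** 2)       # non-winning centers
    coef[i] = (D ** 2 - D + d2[i]) / D ** 2      # winning center
    return coef[:, None] * (x - W)

def numerical_gradient(x, W, h=1e-6):
    """Central finite differences of the loss with respect to every weight."""
    grad = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        W_plus, W_minus = W.copy(), W.copy()
        W_plus[idx] += h
        W_minus[idx] -= h
        grad[idx] = (glvq_loss(x, W_plus) - glvq_loss(x, W_minus)) / (2.0 * h)
    return grad

x_chk = np.array([0.2, 0.1])
W_chk = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.5]])
max_gap = np.max(np.abs(-0.5 * numerical_gradient(x_chk, W_chk)
                        - glvq_direction(x_chk, W_chk)))
print(max_gap)
```

The factor 2 from differentiating the squared norm is absorbed into the learning step, which is why the comparison uses −0.5 times the gradient.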

The procedure of the GLVQ learning algorithm can be summarized as follows:

1) Give a set of unlabeled data \(X = \{ {X_1},{X_2}, \cdots ,{X_n}\} \subset {{\bf{R}}^p}\), the class number c, the iteration number T and the allowable error ε > 0.

2) Initialize \({W_0} = \{ {W_{10}},{W_{20}}, \cdots ,{W_{c0}}\} \) and the initial learning step \({\alpha _0}\).

3) For t = 1, 2, ⋯, T, calculate \({\alpha _t} = {\alpha _0}(1 - {t \over T})\). For k = 1, 2, ⋯, n, find the winning neuron i satisfying the following expression.

$$||{X_k} - {W_i}(t)|| = \mathop {\min }\limits_{1 \leqslant j \leqslant c} \{ ||{X_k} - {W_j}(t)||\} . \tag{20}$$

Then the c weight vectors \(\{ {W_r}(t + 1)\} \) are updated as follows. For the winning neuron (r = i),

$${W_i}(t + 1) = {W_i}(t) + {\alpha _t}[{X_k} - {W_i}(t)] \cdot {{{D^2} - D + ||{X_k} - {W_i}(t)|{|^2}} \over {{D^2}}}. \tag{21}$$

Otherwise (r ≠ i),

$${W_r}(t + 1) = {W_r}(t) + {\alpha _t}[{X_k} - {W_r}(t)] \cdot {{||{X_k} - {W_i}(t)|{|^2}} \over {{D^2}}} \tag{22}$$

where \(D = \sum\nolimits_{r = 1}^c {||{X_k} - {W_r}(t)|{|^2}} \).

4) Calculate

$${E_t} = ||W(t + 1) - W(t)|{|_1} = \sum\limits_{r = 1}^c {||{W_r}(t + 1) - {W_r}(t)|{|_1}} = \sum\limits_{r = 1}^c {\sum\limits_{k = 1}^p {\left| {{w_{rk}}(t + 1) - {w_{rk}}(t)} \right|} } . \tag{23}$$

5) If \({E_t} \leqslant \varepsilon \), terminate the procedure. Otherwise, continue with the next iteration t.

6) Compute the partition \(U = {[{u_{ik}}]_{c \times n}}\) of the data set X with respect to the c cluster centers, where, for 1 ≤ i ≤ c and 1 ≤ k ≤ n,

$${u_{ik}} = \begin{cases} 1, & ||{X_k} - {W_i}|| \leqslant ||{X_k} - {W_j}||,\;1 \leqslant j \leqslant c,\;j \ne i \\ 0, & {\rm{otherwise}}. \end{cases} \tag{24}$$
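
A compact sketch of this procedure is given below, as an illustration under stated assumptions rather than the authors' implementation. The winner is scaled by (D² − D + ‖X_k − W_i‖²)/D², and each non-winning center W_r moves toward X_k scaled by ‖X_k − W_i‖²/D², which is the form obtained by differentiating the loss of Pal et al. The function names, the toy data, the initial centers and the reduced iteration number are hypothetical.

```python
import numpy as np

def train_glvq(X, W0, alpha0=0.5, T=5000, eps=1e-3):
    """Unsupervised GLVQ: every center is updated for every presented sample."""
    W = np.asarray(W0, dtype=float).copy()
    for t in range(1, T + 1):
        alpha_t = alpha0 * (1.0 - t / T)
        W_prev = W.copy()
        for x in X:
            d2 = np.sum((x - W) ** 2, axis=1)
            i = int(np.argmin(d2))               # winning center
            D = d2.sum()
            if D == 0.0:                         # all centers coincide with x
                continue
            coef = np.full(len(W), d2[i] / D ** 2)       # non-winning centers
            coef[i] = (D ** 2 - D + d2[i]) / D ** 2      # winning center
            W += alpha_t * coef[:, None] * (x - W)
        # Termination test on the L1 change of all weights over this pass.
        if np.abs(W - W_prev).sum() <= eps:
            break
    return W

def partition(X, W):
    """Hard c x n partition matrix: u_ik = 1 if center i is nearest to sample k."""
    d = np.linalg.norm(X[:, None, :] - W[None, :, :], axis=2)
    U = np.zeros((len(W), len(X)), dtype=int)
    U[np.argmin(d, axis=1), np.arange(len(X))] = 1
    return U

# Toy data (hypothetical): two well-separated groups of three samples each.
X_glvq = np.array([[0.0, 0.0], [0.2, 0.0], [0.0, 0.2],
                   [5.0, 5.0], [5.2, 5.0], [5.0, 5.2]])
W_glvq = train_glvq(X_glvq, [[1.0, 1.0], [4.0, 4.0]], T=200)
U_glvq = partition(X_glvq, W_glvq)
print(U_glvq)
```

Since the winning distance is at most D/c, the winner coefficient is negative whenever D < 1 − 1/c, so the behaviour of these updates depends on the scale of the input features. The toy data above are spread widely enough that D stays well above 1.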

4.3 Recognition of rotary kiln combustion working conditions

The paper selected 100 combustion flame images with clearly identified working conditions from the rotary kiln production process as sample images. Sixty randomly selected images served as training samples (42 complete combustion images and 18 incomplete combustion images). The remaining 40 images served as test samples (24 complete combustion images and 16 incomplete combustion images).

The main parameters of the GLVQ classifier are set as follows. The input layer has 5 neurons, corresponding to the 5 nonlinear principal components of the texture feature variables obtained by the KPCA method. The output layer has 2 neurons representing the 2 combustion working conditions: complete combustion, denoted by 1, and incomplete combustion, denoted by 0. The maximum iteration number is 5000, the allowable error is 0.001 and the initial learning step is \({\alpha _0} = 0.5\). The LVQ classifier is used for comparison with the proposed KPCA-GLVQ classifier. The classification results are shown in Table 4.

As seen from Table 4, the correct recognition rates of the KPCA-GLVQ classifier reach 95.83% and 93.75% for the two working conditions, the latter being 12.5 percentage points above that of the LVQ classifier. The overall correct recognition rate of the KPCA-GLVQ method reaches 95%, which indicates that the KPCA-GLVQ classification method achieves better results in execution speed and recognition accuracy.
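
As a consistency check, the reported rates agree with the test set sizes if the first rate refers to the 24 complete combustion images and the second to the 16 incomplete combustion images. The counts of correctly recognized images (23, 15 and 13) are inferred from the percentages and are not stated in the text.

$$\frac{23}{24} \approx 95.83\%, \qquad \frac{15}{16} = 93.75\%, \qquad \frac{23 + 15}{24 + 16} = \frac{38}{40} = 95\%, \qquad 93.75\% - 12.5\% = 81.25\% = \frac{13}{16}.$$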

5 Conclusions

A combustion working condition recognition method based on the GLVQ neural network is proposed based on the pulverized coal combustion flame image texture features of the rotary kiln oxide pellets sintering process. The test results show that the proposed KPCA-GLVQ classifier has an excellent performance for training speed and correct recognition ratio.

In the future, the proposed KPCA-GLVQ recognition method of rotary kiln combustion working conditions will be merged into real-time optimized process control. In addition, design of a better classification method is an important task that merits future study.

References

[1] J. S. Wang, Y. Zhang, F. W. Cong, W. Wang. The design of 2 Mt/a capacity grate-kiln oxidized pellet process for Angang pelletizing plant. Sintering and Pelletizing, vol. 30, no. 4, pp. 9–13, 2005. (in Chinese)

[2] S. T. Li, Y. N. Wang, C. F. Zhang. Neural network control system for rotary kiln based on features of combustion flame. Acta Automatica Sinica, vol. 28, no. 4, pp. 591–595, 2002. (in Chinese)

[3] G. Szatvanyi, C. Duchesne, G. Bartolacci. Multivariate image analysis of flames for product quality and combustion control in rotary kilns. Industrial & Engineering Chemistry Research, vol. 45, no. 13, pp. 4706–4715, 2006.

[4] J. Li, H. L. Zhang, X. T. Chen, D. F. Liu, Z. Zou. Secondary simulation based on pattern cluster and image processing and application in rotary kiln temperature inspection. The Chinese Journal of Nonferrous Metals, vol. 17, no. 7, pp. 1182–1197, 2007. (in Chinese)

[5] H. Y. Jiang, X. J. Zhou, T. Y. Chai. Recognition based on multilevel SVM for different sintering states in rotary kiln. Journal of Northeastern University, vol. 30, no. 1, pp. 54–57, 2009. (in Chinese)

[6] H. L. Zhang, Z. Zou, J. Li, X. T. Chen. Flame image recognition of alumina rotary kiln by artificial neural network and support vector machine methods. Journal of Central South University of Technology, vol. 15, no. 1, pp. 39–43, 2008. (in Chinese)

[7] P. Sun, T. Y. Chai, X. J. Zhou, H. Yue. Flame image recognition system for alumina rotary kiln burning zone. Journal of Chemical Industry and Engineering, vol. 59, no. 7, pp. 1839–1842, 2008. (in Chinese)

[8] Z. G. Yuan, Z. Y. Liu, R. Pei, H. L. Yu. The recognition of working condition of cement rotary kiln based on information fusion. In Proceedings of IEEE International Conference on Automation and Logistics, IEEE, New York, USA, pp. 1324–1329, 2009.

[9] P. Sun, X. J. Zhou, T. Y. Chai. FCM segmentation for flame image of rotary kiln based on texture coarseness. Journal of System Simulation, vol. 20, no. 16, pp. 4438–4441, 2008. (in Chinese)

[10] E. S. Gadelmawla. A vision system for surface roughness characterization using the gray level co-occurrence matrix. NDT & E International, vol. 37, no. 7, pp. 577–588, 2004.

[11] J. F. Vargas, M. A. Ferrer, C. M. Travieso, J. B. Alonso. Offline signature verification based on grey level information using texture features. Pattern Recognition, vol. 44, no. 2, pp. 375–385, 2011.

[12] R. Haralick, K. Shanmugam, I. Dinstein. Textural features for image classification. IEEE Transactions on Systems, Man, and Cybernetics, vol. 3, no. 6, pp. 610–621, 1973.

[13] H. J. Yin. Nonlinear dimensionality reduction and data visualization: a review. International Journal of Automation and Computing, vol. 4, no. 3, pp. 294–303, 2007.

[14] M. L. Wang, N. Li, S. Y. Li. Model-based predictive control for spatially-distributed systems using dimensional reduction models. International Journal of Automation and Computing, vol. 8, no. 1, pp. 1–7, 2011.

[15] L. J. Cao, K. S. Chua, W. K. Chong, H. P. Lee, Q. M. Gu. A comparison of PCA, KPCA and ICA for dimensionality reduction in support vector machine. Neurocomputing, vol. 55, no. 1–2, pp. 321–336, 2003.

[16] J. M. Lee, C. Yoo, S. W. Choi, P. A. Vanrolleghem, I. B. Lee. Nonlinear process monitoring using kernel principal component analysis. Chemical Engineering Science, vol. 59, no. 1, pp. 223–234, 2004.

[17] B. Hammer, T. Villmann. Generalized relevance learning vector quantization. Neural Networks, vol. 15, no. 8–9, pp. 1059–1068, 2002.

[18] S. S. Zhou, W. W. Wang, L. H. Zhou. A new technique for generalized learning vector quantization algorithm. Image and Vision Computing, vol. 24, no. 7, pp. 649–655, 2006.

[19] W. Liu, B. X. Cui. The remote sensing image classification based on generalized learning vector quantization algorithm. Journal of Electronics & Information Technology, vol. 28, no. 7, pp. 1201–1203, 2006. (in Chinese)

[20] N. R. Pal, J. C. Bezdek, E. C. K. Tsao. Generalized clustering networks and Kohonen’s self-organizing schemes. IEEE Transactions on Neural Networks, vol. 4, no. 4, pp. 549–557, 1993.

Additional information

This work was supported by China Postdoctoral Science Foundation (No. 20110491510), Program for Liaoning Excellent Talents in University (No. LJQ2011027), Anshan Science and Technology Project (No. 2011MS11) and Special Research Foundation of University of Science and Technology of Liaoning (No. 2011zx10)

Cite this article

Wang, JS., Ren, XD. GLCM Based Extraction of Flame Image Texture Features and KPCA-GLVQ Recognition Method for Rotary Kiln Combustion Working Conditions. Int. J. Autom. Comput. 11, 72–77 (2014). https://doi.org/10.1007/s11633-014-0767-8
