Abstract

In this paper, we assess the bibliometric parameters of 37 Dutch professors in clinical cardiology. These are the Hirsch index (h-index) based on all papers, the h-index based on first-authored papers, the number of papers, the number of citations and the number of citations per paper. A top 10 was compiled for each of the five parameters. In theory, the same 10 professors might appear in each of these top 10s. Alternatively, each of the 37 professors under assessment could appear one or more times. In practice, we found 22 of these 37 professors in the five top 10s. Thus, there is no golden parameter. In addition, there is too much inhomogeneity in citation characteristics, even within a relatively homogeneous group of clinical cardiologists. Therefore, citation analysis should be applied with great care in science policy. This is even more important when different fields of medicine are compared within university medical centres. It may be possible to develop better parameters in the future, but the present ones are simply not good enough. We also observed a remarkable explosion of publications per author which, paradoxical as it may sound, can probably not be interpreted as an increase in the productivity of scientists, but rather as the effect of an increase in the number of co-authors and the strategic effect of networks.


Introduction

In April 2009, we published a bibliometric analysis of the work of 28 Dutch professors in clinical cardiology [1]. We scored the number of papers, excluding abstracts and letters, and analysed their citations as well. In addition, we determined the Hirsch index (h-index) [2]. Hirsch proposed the h-index as an alternative parameter for measuring the scientific output of an individual [2]. Hirsch stated: ‘I propose the index h, defined as the number of papers with citation number higher or equal to h, as a useful index to characterise the scientific output of a researcher.’ The h-index is a very simple and useful, albeit imperfect, parameter. When an author has an h-index of 50, it means that ‘he’ has published 50 papers that have each been cited 50 times or more, while none of ‘his’ remaining papers has been cited more than 50 times. Therefore, the effect of a very large number of citations to a single paper (e.g. to a clinical trial in which the scientist was just ‘one of the 100 authors’) is mitigated, whereas authors who publish many papers in low-impact journals (e.g. for educational reasons) are not penalised, as they would be in a system based on citations per paper.
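Hirsch's definition can be made concrete with a short computation. The Python sketch below is a minimal illustration, not the procedure behind Table 1 (whose data come from the Web of Science): it ranks citation counts from most to least cited and returns the largest rank h at which the paper at that rank still has at least h citations. The citation counts in the example are hypothetical.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each (Hirsch's definition)."""
    ranked = sorted(citations, reverse=True)  # most cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the paper at this rank still has at least `rank` citations
        else:
            break  # counts only fall and ranks only rise, so no later paper can qualify
    return h


# Hypothetical record: five papers with these citation counts.
print(h_index([10, 8, 5, 4, 3]))  # → 4
```

A single highly cited paper changes little: replacing the 10 by 1,000 in the example still gives h = 4, which is the mitigating effect described above.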

At that time, with 1 June 2008 as the date of assessment, we observed a huge variability in h-index. The highest h-index was 95, for Dr. P.W. Serruys; the lowest was 17. Obviously, and very differently from financial indices, the h-index can only increase with time. It may therefore favour the ‘scientific establishment’, to which professors in clinical cardiology may be considered to belong. Hirsch himself [2] suggested that this may be solved by dividing the h-index by ‘scientific age’, which could be defined as the time since publication of a first paper. Such a correction may be a disadvantage for anyone taking an alternative path in his/her career. It may also be a disadvantage for young medical students who are successful early in their career and then proceed with intensive internships with little scientific output for a substantial number of years. Finally, it is a drawback for those who have invested considerable time over many years in raising a family, as was pointed out by Spaan [3]. When we nevertheless applied this correction, we again found Dr. Serruys in pole position with 2.97 (h-index 95 divided by 32; his first paper was published in 1977). Now, another professor received the red lantern, with a score of 0.74.
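The ‘scientific age’ correction can be sketched in a few lines of Python. The counting convention is an assumption inferred from the figures reported in this paper: Dr. Serruys' h-index of 95 divided by 32 (first paper 1977, assessment 2008) and Dr. Verheugt's 54 divided by 34 (first paper 1977, assessment 2010) both correspond to counting the first publication year and the assessment year inclusively.

```python
def scientific_age(first_year, assessment_year):
    """Years since the first paper, counting both end years (assumed convention)."""
    return assessment_year - first_year + 1


def h_per_year(h, first_year, assessment_year):
    """h-index normalised to scientific age (h/year)."""
    return h / scientific_age(first_year, assessment_year)


# Dr. Serruys at the 2008 assessment: h-index 95, first paper in 1977.
print(round(h_per_year(95, 1977, 2008), 2))  # → 2.97
```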

In 2009, we chose to publish these data with the names of these professors in alphabetical order, thereby toning down the impression of a quality ranking. We have been criticised for not publishing our data anonymously. Although we have sympathy for that point of view, we feel that the readability of our paper at that time [1], and again now, would suffer from anonymous data. Moreover, the data are available to anyone with access to the Web of Science of Thomson Reuters, which is normally the case in academic institutions. Another reason is that we have emphasised that it is far from certain that even a relatively homogeneous group of scientists such as professors in clinical cardiology can be assessed with similar methods. To assess this, it is unavoidable that the subfields are uncovered, and this requires linkage to individuals. As long as the methods have not been validated by adequate (and published) research, we feel that citation analysis should be discouraged in complex organisations such as university medical centres and universities. This position has been at the heart of a clear-cut disagreement with the (previous) management of our own university medical centre (Academic Medical Center, Amsterdam), including its research council [4, 5].

Whether citation analysis can be used as a parameter of quality or not was extensively discussed in our previous paper [1], and here, we restrict ourselves to referring to the specialised literature [6–14].

Present analysis (2010)

Table 1 shows all new numerical data as assessed on 31 December 2010 from the Web of Science of Thomson Reuters. This time, all documents were included apart from meeting abstracts, corrections and book reviews. Therefore, in contrast to the previous analysis, the present one also includes letters (in general, a very minor subset of the total output). All parameters are self-explanatory, but for the sake of clarity, we will use the work of one of the eight more recently appointed professors in clinical cardiology, Dr. Suryapranata, to explain the calculation of the h-index.

Figure 1 shows his 193 papers ranked from most cited to uncited. The first paper was cited 979 times, the second 779 times and the third 439 times. A total of 24 papers were cited 100 times or more. Of his 193 papers, 26 remained uncited. In the inset of Fig. 1, both axes are restricted to 100: 100 papers along the abscissa and 100 citations along the ordinate. Thus, the 24 most cited papers are missing from the inset, which effectively shows 76 papers. Paper number 46 was cited 47 times and paper 47 was cited 45 times. Therefore, the h-index, indicated by the thick lines in the inset, is 46.

Table 1 shows the names of the 37 professors in clinical cardiology in alphabetical order, followed by their affiliation, in the left two columns. Column 3 gives the year of publication of the first paper; some of these years were incorrect in our previous report [1] and have been amended. Next, to the right, there are two framed boxes. In the left-hand box, all publications by each author between 1971 and 2010 are considered, and the citations of each paper were counted from its year of publication up to 2010. Thus, a paper published by ‘Arnold AER’ in 1988 was followed from 1988 to 2010. In the right-hand box, the papers under consideration were restricted to the years 2005–2009, with a citation window from 2005 to 2010. The rationale behind the right-hand box is to compensate younger authors for the fact that they have had less time to build an oeuvre than older authors. The rationale behind a citation window of 2005–2010 vs. a publication window of 2005–2009 is that a paper published in January 2009 had the chance to be cited in 2009, whereas a paper published in December 2009 did not; extending the citation window to 2010 gives papers from late 2009 some time to be cited. Within each framed box, we have assessed the h-index based on all papers, the h-index of all first-authored papers, the total number of papers, the total number of citations and the citations per paper.
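The restriction used in the right-hand box of Table 1 can be sketched as follows: keep only papers published in 2005–2009 and count only citations received up to and including 2010. The `Paper` record, its per-year citation dictionary and the example papers are hypothetical; this is an illustration of the windowing logic, not of how the Web of Science stores or reports its data.

```python
from dataclasses import dataclass, field


@dataclass
class Paper:
    pub_year: int
    # Hypothetical per-year citation counts: citing year -> citations received that year.
    cites_by_year: dict = field(default_factory=dict)


def h_index(citations):
    ranked = sorted(citations, reverse=True)
    # In a descending list, `count >= rank` holds only for a leading run of papers,
    # so counting the papers that satisfy it gives h.
    return sum(1 for rank, count in enumerate(ranked, start=1) if count >= rank)


def citations_in_window(paper, first_year, last_year):
    """Citations received by `paper` in the years first_year..last_year inclusive."""
    return sum(n for year, n in paper.cites_by_year.items() if first_year <= year <= last_year)


def windowed_h_index(papers, pub_from=2005, pub_to=2009, cite_to=2010):
    """h-index of papers published in pub_from..pub_to, citations counted up to cite_to."""
    counts = [citations_in_window(p, pub_from, cite_to)
              for p in papers if pub_from <= p.pub_year <= pub_to]
    return h_index(counts)


papers = [
    Paper(1998, {1999: 40, 2006: 5}),          # published before 2005: excluded
    Paper(2005, {2005: 1, 2006: 3, 2010: 2}),  # 6 citations in the window
    Paper(2007, {2008: 2, 2011: 9}),           # 2011 lies outside the window: 2
    Paper(2009, {2010: 4}),                    # 4
]
print(windowed_h_index(papers))  # → 2
```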

Fig. 1 All of Dr. H. Suryapranata's papers. All 193 papers were ranked from most cited to uncited. The inset shows nos. 25–100 of the 100 most cited papers (nos. 1–24 are missing because they were cited 100 times or more; see ordinate). Paper 46 was cited 47 times; paper 47 was cited 45 times. Therefore, the h-index is 46, indicated by the two solid lines

At the right of Table 1, there is a column headed ‘editing comment’. The first ‘y’ or ‘n’ indicates whether the author's work was checked using fewer than his/her full set of initials. The ‘y’ or ‘n’ after the slash indicates whether or not we had to edit the results by hand. This is necessary when another author has exactly the same family name and initials. Finally, the arithmetic means are given at the bottom of the columns. For the h-indices, there are two averages: ‘Average 1’ is the simple average of the 37 values, and ‘Average 2’ is the square root of the average of the squared indices, which seems to us the appropriate way to determine it. At this stage, we present all data without further comment, except that we wish to underscore that, although we are analysing the work of 37 individuals, these sets of papers may overlap substantially, in particular when the authors work at the same university medical centre. The degree of overlap also differs. From these data, it is not possible to calculate the values for each centre, let alone for all centres.
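The two averages at the bottom of Table 1 can be computed as below; the h-indices in the example are hypothetical. ‘Average 2’ is the root mean square, which is never smaller than the arithmetic mean and moves further above it as the values spread out.

```python
import math


def average_1(values):
    """'Average 1': the simple arithmetic mean."""
    return sum(values) / len(values)


def average_2(values):
    """'Average 2': square root of the mean of the squared values (root mean square)."""
    return math.sqrt(sum(v * v for v in values) / len(values))


h_indices = [20, 40, 60]  # hypothetical h-indices
print(average_1(h_indices), round(average_2(h_indices), 2))  # → 40.0 43.2
```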

Finally, we note that there are now alternatives to the Web of Science of Thomson Reuters, such as Scopus, Science Direct or Google Scholar. These databases have different indexing systems. It is therefore important that all groups and/or individuals under assessment are evaluated using the same database.

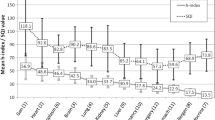

h-index (all papers)

The h-index is a tool that can easily be explained and determined by individuals interested in their research performance. As could be expected, many alternatives have since been formulated [15]. Table 2 shows the top 10 authors based on the h-index of all papers, ranked by the h-index divided by ‘scientific age’ (h/year). At the top of the listing is Dr. Bax from the LUMC with 3.28 h/year and an h-index of 59. Number 2 is Dr. Serruys with 3.15 h/year and an h-index of 107. Four authors from the LUMC and three from the Erasmus Center dominate this list. The averages at the bottom of the table (and of all further tables) are those of all 37 professors, not just of the top 10. It remains undecided whether all 37 professors have an a priori equal chance of scoring the same h/year, because there is heterogeneity in citation frequencies even within clinical cardiology [14]. This means that subfields such as atherosclerosis, arrhythmias and congenital heart disease in fact require their own reference standards.

When one compares the right-hand with the left-hand box, it is surprising that the average h/year is 3.03 for all 37 cardiology professors during 2005–2009, vs. 1.58 during ‘all years’. Our observations are therefore not consistent with Hirsch's expectation [2] that the h-index increases linearly over time. At the individual level, nine of the 10 professors who were leading over ‘all years’ were also leading over the period 2005–2009 (compare the left-hand with the right-hand box).

Figure 2 shows the h-index and h/year of the work of Dr. Verheugt. The steady increase of the h-index over the years is obvious. The same applies to the gradual increase of h/year, confirming that normalisation to ‘scientific age’ cannot completely compensate for the advantage of ‘older’ scientists. Figure 3 shows a comparison of the 28 professors who could be assessed both during 1971–2008 [1] and during 1971–2010, the two assessment dates being 2.5 years apart. Thus, the ‘scientifically oldest’ author, Dr. Simoons, was assessed over 37.5 years in the previous analysis [1] and over 40 years in the present one, whereas Dr. De Winter was assessed over 14.5 years in the previous analysis [1] and over 17 years in the present one. Despite this small increase in time frame, the h/year increased from 1.43 ± 0.10 to 1.65 ± 0.12 (mean ± s.e.m.). The paired difference was 0.23 ± 0.04 (p < 0.0005). It follows that, as also shown in Fig. 2 for an individual, normalisation of the h-index is an improvement, but does not eliminate the disadvantage of younger scientists.
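The paired comparison behind Fig. 3 can be sketched as below, assuming a paired t test (the text reports the mean paired difference ± s.e.m. and a p-value but does not name the test). The four pairs of h/year values are hypothetical and serve only to show the computation.

```python
import math
from statistics import mean, stdev


def paired_summary(before, after):
    """Mean paired difference (after - before), its s.e.m. and the paired t statistic."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    d_mean = mean(diffs)
    d_sem = stdev(diffs) / math.sqrt(n)  # sample SD (n - 1 denominator) divided by sqrt(n)
    return d_mean, d_sem, d_mean / d_sem  # t has n - 1 degrees of freedom


# Hypothetical h/year values for four professors at the 2008 and 2010 assessments.
h2008 = [1.0, 1.5, 2.0, 1.2]
h2010 = [1.2, 1.8, 2.1, 1.5]
d, sem, t = paired_summary(h2008, h2010)
print(f"difference {d:.3f} ± {sem:.3f} (s.e.m.), t = {t:.2f}")
```

With SciPy installed, `scipy.stats.ttest_rel(h2010, h2008)` should return the same t statistic together with a two-sided p-value.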

Fig. 2 All of F.W.A. Verheugt's papers. The h-index increased from 0 in 1977 to 54 in 2010. Correction for ‘scientific age’ was done by dividing the h-index in each year along the abscissa by the difference between that year and 1976. Thus, the h-index was 54 in 2010 and h/year was 54/34 = 1.59. Although this correction normalises the h-index substantially, h/year clearly still increases over the years

Fig. 3 The h/year of 28 professors in clinical cardiology at the time of our previous report (1 June 2008) [1] along the abscissa and at the time of the present report (31 December 2010) along the ordinate. The dashed line indicates the line of identity. Average values 1.43 ± 0.10 (2008) and 1.65 ± 0.12 (2010) (mean ± s.e.m.). Distance to the line of identity 0.23 ± 0.04 (p < 0.0005)

h-index based on first-authored papers

An alternative way to overcome the disadvantage of younger scientists is to limit the assessment to first-authored papers. This also makes it clear whether or not senior scientists remain active themselves, other than by steering younger scientists or providing them with facilities. For senior (last) authorship, it is not possible to distinguish between authorship based on a relevant intellectual contribution and ‘honorary authorship’. Moreover, this can only be done entirely by hand. Such an approach will not easily be accepted by the scientific establishment, because it can be expected to defend its position in the same way as elsewhere in society. Still, history has taught us that scientists are in general not at their peak when they are over 45 or so. It will, for example, come as a surprise to many that younger reviewers for scientific journals produce better reports than older ones, despite the difference in experience [16].

Another major advantage of focusing on first authorships is that in such a system each paper is counted only once. This may help to prevent the blurring that results from comparing the scientific output of investigators whose papers overlap substantially.
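Restricting the h-index to first-authored papers amounts to filtering on the first name in each author list before ranking. In this sketch the paper records, the author strings and the exact-match rule are hypothetical simplifications; in practice, homonyms with the same family name and initials still have to be resolved by hand, as noted for Table 1.

```python
def h_index(citations):
    ranked = sorted(citations, reverse=True)
    # Descending counts against rising ranks: the condition holds for a leading run only.
    return sum(1 for rank, count in enumerate(ranked, start=1) if count >= rank)


def first_author_h_index(papers, author):
    """h-index over papers whose first listed author equals `author` (exact string match)."""
    return h_index([p["citations"] for p in papers
                    if p["authors"] and p["authors"][0] == author])


# Hypothetical publication records.
papers = [
    {"authors": ["Smith A", "Jones B"], "citations": 30},
    {"authors": ["Jones B", "Smith A"], "citations": 50},
    {"authors": ["Smith A"], "citations": 2},
    {"authors": ["Smith A", "Lee C", "Jones B"], "citations": 10},
]
print(first_author_h_index(papers, "Smith A"))    # → 2
print(h_index([p["citations"] for p in papers]))  # → 3
```

Because every paper has exactly one first author, each paper contributes to at most one person's first-author h-index, which is the ‘counted only once’ property described above.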

Table 3 shows the performance of the 37 professors in terms of first authorships. In pole position is Dr. Serruys with 1.15 h/year and also, by far, the highest all-time first-author h-index (39). Five authors (Drs Von Birgelen, Van Belle, De Winter, Van Gelder and Zijlstra) appear in this list who were not in the top 10 based on the h-index of all papers. Drs Serruys, Bax, Van Veldhuisen, De Feyter and Wilde are in both top 10 lists. For the whole group, the average was 0.46 h/year, which returns ‘relevant productivity’ to a human scale.

When the focus was on the 2005–2009 period (right-hand framed box), only five authors, Drs. Serruys, Bax, Van Veldhuisen, Van Gelder and Wilde, remained in the top 10. The others in that top 10 were Drs. Voors, Heymans, Verheugt, Van den Berg, Van der Wall, Mulder and Waltenberger (ex aequo; not included in Table 3). The fact that both ‘older’ and ‘younger’ scientists could appear on such a list underscores its dynamic characteristics.

Although the average h/year based on all papers increased from 1.58 to 3.03 when ‘all years’ were compared with ‘2005–2009’ (Table 2), it decreased from 0.46 to 0.36 between the same periods when based on first-authored papers only. Such numbers may give the scientific arena back a human scale in a period of galloping inflation. We have indicated previously that not much has changed since Lotka formulated his famous law [17] on the basis of publications from 1907–1916. This law states that the number of authors publishing n papers is proportional to 1/n². Thus, of all authors, about 60% publish only one first-authored paper in their career, 15% publish two and fewer than 1% publish exactly eight. This situation was virtually unchanged in 1996 [18]. The enormous increase in the number of papers per author probably results almost entirely from the recruitment of more scientists and from the strategic organisation of networks.
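The percentages quoted for Lotka's law follow from its idealised inverse-square form, in which the share of authors with exactly n papers is C/n², with C = 6/π² ≈ 0.61 so that the shares over all n sum to 1. Treating the exponent as exactly 2 is the common idealisation of Lotka's empirical fit, assumed here for illustration.

```python
import math

# Normalising constant: the sum of 1/n**2 over n >= 1 equals pi**2 / 6.
C = 6 / math.pi ** 2


def lotka_share(n):
    """Fraction of authors with exactly n papers under the inverse-square form of Lotka's law."""
    return C / n ** 2


for n in (1, 2, 8):
    print(n, f"{lotka_share(n):.1%}")
# 1 60.8%
# 2 15.2%
# 8 0.9%
```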

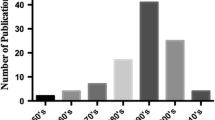

Total number of papers

Table 4 shows the ranking of the professors in clinical cardiology based on the total number of papers. This list is dominated by the professors of the LUMC, all four of whom are in the top 6. When the period was restricted to the publication years 2005–2009, eight of the 10 authors remained at the top of this listing focusing on ‘productivity’. The inflation of ‘productivity’ (see previous section) is underscored by the fact that all 10 professors more or less doubled their average number of papers per year. This is also reflected in the averages for all 37 professors at the bottom of Table 4: 11.0 over all years (including 2005–2009), but 21.3 for 2005–2009. Dr. Bax remained at the top with a miraculous 110.8 papers per year. In the top year 2009 he published 138 papers, which amounts to one paper every 1.9 working days. One may wonder where this will end.
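The ‘one paper every 1.9 working days’ figure can be reproduced by assuming roughly 260 working days per year (52 weeks of five days, ignoring holidays); the text does not state which number of working days was used, so this is an assumption.

```python
# Assumption: 52 weeks x 5 weekdays, holidays ignored.
WORKING_DAYS_PER_YEAR = 52 * 5


def working_days_per_paper(papers_in_year, working_days=WORKING_DAYS_PER_YEAR):
    """Average number of working days between papers in a given year."""
    return working_days / papers_in_year


print(round(working_days_per_paper(138), 1))  # → 1.9
```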

Total number of citations

Table 5 shows the top 10 based on the total number of citations. In this listing, professors from the Erasmus Center occupy positions 1, 3 and 5. The position of the professors of the LUMC is also prominent. Drs Verheugt and Suryapranata from UMCN appear in this top 10. Eight of these 10 professors also appear in the top 10 when the period is restricted to papers published between 2005 and 2009. It is remarkable that, for all 37 professors, the average number of citations obtained per year was the same whether their whole publication period was assessed or it was restricted to 2005–2009. In both cases, there were just over 300 citations per year.

Citations per paper

Table 6 shows the top 10 based on the number of citations obtained per paper. Here, there is no correction for ‘scientific age’. The reason is simple. The number of citations a paper receives per year increases during the first years after publication, reaches a zenith and then wanes again. Thus, if one calculates the cumulative citations per year for a paper, this average also increases, reaches a zenith and then declines again. This creates a hybrid scenario, even for the different papers of the same author.
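The argument against a per-year correction of citations per paper can be illustrated with a hypothetical citation history of a single paper: its yearly citations rise, peak and wane, and its cumulative citations divided by its age do the same, peaking somewhat later. Papers of different ages therefore sit at different points on such curves. The numbers are invented for illustration.

```python
from itertools import accumulate

# Hypothetical citations received by one paper in years 1, 2, ... after publication.
yearly = [2, 8, 12, 10, 6, 3, 2, 1, 1, 0]

cumulative = list(accumulate(yearly))
# Cumulative citations divided by the paper's age in years.
per_year = [total / age for age, total in enumerate(cumulative, start=1)]

print([round(x, 1) for x in per_year])
# [2.0, 5.0, 7.3, 8.0, 7.6, 6.8, 6.1, 5.5, 5.0, 4.5]
```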

Table 6 shows that this listing is headed by Dr. Arnold, one of the few professors not primarily affiliated with one of the eight university medical centres. His score of 77.45 citations per paper stems from the time that he was working at the Erasmus Center. This parameter remained high primarily because he published relatively few papers in recent years. This becomes clear in the right-hand box, where his citations per paper have fallen to 13.83 and his rank is 19 instead of 1. The same phenomenon is seen for the recently appointed Drs Suryapranata and De Boer, whose citations per paper decreased by two thirds. However, these numbers should be considered against the background that, for all 37 professors, the average number of citations per paper was 30.09 for the whole period and only 15.67 for the period 2005–2009 (as cited between 2005 and 2010). The explanation is simple: papers published between 2005 and 2009 had less time to accumulate citations than papers published over all years. Therefore, for this parameter, the absolute numbers have little significance; the ranking is more important. In this listing, the second rank of Dr. Simoons with 62.02 citations per paper is striking. His high ranking was consolidated when the ranking was based on the 2005–2009 period, which means that he has succeeded in focusing on work that was frequently cited. It is quite surprising that none of the professors affiliated with the LUMC appear in this top 10, either for all years or for the 2005–2009 period. In the 2005–2009 period, the high positions of Dr. Peters (AMC) and the recently appointed Dr. Heymans (MUMC) are striking.

Final remarks

When the five parameters in this brief analysis were each used to compile a top 10, it would in theory have been possible for all five top 10s to consist of the same 10 individuals. Alternatively, all 37 professors under assessment could have appeared one or more times. Instead, we found 22 of the 37 professors in at least one of the five top 10s. This underscores that there is no golden parameter. Two individuals, Dr. Serruys and the recently retired Dr. De Feyter, appeared in all five top 10s.
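Counting how many different professors appear across several top lists, and who appears in all of them, is a set union and a set intersection. The sketch uses hypothetical labels and shortened top-3 lists; with five top-10 lists drawn from 37 professors, the union can range from 10 (identical lists) to 37.

```python
def distinct_in_top_lists(top_lists):
    """Number of different people appearing in at least one of the ranked lists."""
    return len(set().union(*top_lists))


def appear_in_all(top_lists):
    """People present in every one of the ranked lists."""
    return set(top_lists[0]).intersection(*top_lists[1:])


# Hypothetical top-3 lists for three parameters.
example_lists = [
    ["A", "B", "C"],
    ["A", "C", "D"],
    ["A", "B", "E"],
]
print(distinct_in_top_lists(example_lists), appear_in_all(example_lists))  # → 5 {'A'}
```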

Quality assessment of scientific output remains a heavily disputed issue. At this stage, we recommend great caution until better tools have been developed. The methods are currently not good enough and too dynamic [14, 19] to arrive at criteria that are acceptable and fair for all involved.

We wish to state here that any future assessment system should (1) work with publicly available data and be (2) understandable and (3) reproducible for the scientists under assessment. The pivotal issue remains (4) a reference standard that is fair and objective. We can illustrate this with a very simple example drawn from the specialty of one of us (AAMW) and of a close colleague, Dr. Mulder, at the Academic Medical Center. The h-index applies not only to individuals, but also to journals and even to research topics. Thus, when we select the six leading journals in cardiovascular science and define a publication period of 2000–2009 in the Web of Science of Thomson Reuters, we can automatically generate an h-index for topics. Selecting ‘Brugada Syndrome’ and ‘Marfan Syndrome’ would represent appropriate topics for part of the work of both scientists. The result is an h-index of 59 for ‘Brugada Syndrome’ and an h-index of 29 for ‘Marfan Syndrome’. When such large differences exist within what on the surface seems to be a relatively homogeneous field such as ‘clinical cardiology’, little imagination is needed to see how such differences could work out for scientists active in fields such as ‘robotics’ and ‘atherosclerosis’ in one and the same university medical centre. If we cannot find a solution to these problems, the combination of fundamental and clinical sciences in university medical centres may eventually end badly when ‘science managers’ misinterpret or deny these issues.

Comparing citation frequencies of scientists in different (sub)fields may be regarded as assessing the skills of fishermen. In doing so, it is important to know (1) how many fishing rods are involved (money, co-workers, network), (2) how eager the fish are to bite (the number of references in the citing papers) and (3) how many fish are in the pond (number of scientists in the same (sub)field). In particular, this last point has not been addressed by the specialists in bibliometrics.

As in our previous analysis [1], the variability in h-index between individual professors in clinical cardiology was substantial (Tables 1, 2, 3). At this stage we repeat our hesitation to interpret the observed differences as differences in scientific quality.

Limitation

We would certainly not publish an analysis of the scientific output of younger scientists at the beginning of their careers without more research. In this case, we considered the information to be of sufficient interest; the fact that these individuals have, in a sense, completed their careers by reaching the top of the academic ranks played a role as well. The large interindividual differences observed for these 37 professors in clinical cardiology should be an important warning for those who wish to compare scientists of different disciplines. To mention one pitfall, the citation frequency of clinical cardiology papers is about 40% higher than that of basic cardiology-oriented papers [14]. Within these categories, the differences between subfields are probably even larger [14]. Ignoring such pivotal information may create a situation in academic hospitals where the quality of research in small fields is severely underestimated. It would be very counterproductive if high-quality research were misjudged through the use of poor-quality indicators. This is a danger for smaller groups in smaller fields: excellent work can attract citations from all other scientists active in the field and still score low because the number of scientists in the field is small.

References

1. Opthof T, Wilde AAM. The Hirsch-index: a simple, new tool for the assessment of scientific output of individual scientists. The case of Dutch professors in clinical cardiology. Neth Heart J. 2009;17:145–54.

2. Hirsch JE. An index to quantify an individual's scientific research output. Proc Natl Acad Sci USA. 2005;102:16569–72.

3. Spaan JAE. Arrogance and ignorance in assessing scientific quality in a multidisciplinary academic medical centre. Neth Heart J. 2010;18:319–22.

4. Van Kammen J, Van Lier R, Gunning-Schepers L. Assessing scientific quality in a multidisciplinary academic medical centre. Neth Heart J. 2009;17:500.

5. Opthof T, Wilde AAM. Comment: assessment of scientific quality is complicated. Neth Heart J. 2009;17:501–2.

6. Cole S. Citations and the evaluation of individual scientists. Trends Biochem Sci. 1989;14:9–13.

7. MacRoberts MH, MacRoberts BR. Citation analysis and the science policy arena. Trends Biochem Sci. 1989;14:8–12.

8. Seglen PO. From bad to worse: evaluation by Journal Impact. Trends Biochem Sci. 1989;14:326–7.

9. MacRoberts MH, MacRoberts BR. Problems of citation analysis. Scientometrics. 1996;36:435–44.

10. Opthof T. Sense and nonsense about the impact factor. Cardiovasc Res. 1997;33:1–7.

11. Adler R, Ewing J, Taylor P. Citation Statistics: A report from the International Mathematical Union (IMU) in cooperation with the International Council of Industrial and Applied Mathematics (ICIAM) and Institute of Mathematical Statistics (IMS). Statistical Science. 2009;24(1):1–14.

12. Leydesdorff L. Caveats for the use of citation indicators in research and journal evaluations. JASIS. 2008;59:278–87.

13. MacRoberts MH, MacRoberts BR. Problems of citation analysis: a study of uncited and seldom-cited influences. JASIST. 2010;61:1–12.

14. Opthof T. Differences in citation numbers between and within sets of clinical and basic papers in cardiovascular research: why citation indicators fail. Med Biol Eng Comput. 2011;49 (in press).

15. Bornmann L, Mutz R, Daniel HD. Are there better indices for evaluation purposes than the h index? A comparison of nine different variants of the h index using data from biomedicine. JASIS. 2008;59:830–7.

16. Callaham M, McCulloch C. Longitudinal trends in the performance of scientific peer reviewers. Ann Emerg Med. 2011;57:141–8.

17. Lotka AJ. The frequency distribution of scientific productivity. J Washington Acad Sci. 1926;16:317–23.

18. Opthof T, Coronel R. Productivity in science: more and more? Cardiovasc Res. 2002;56:175–7.

19. Leydesdorff L, Bornmann L, Mutz R, et al. Normalizing the measurement of citation performance: principles for comparing sets of documents. JASIST. 2011. doi:10.1002/asi21534

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.


About this article

Cite this article

Opthof, T., Wilde, A.A.M. Bibliometric data in clinical cardiology revisited. The case of 37 Dutch professors. Neth Heart J 19, 246–255 (2011). https://doi.org/10.1007/s12471-011-0128-y
