Abstract

In recent years, unmanned aerial vehicle (UAV) systems have become very attractive for various commercial, industrial, public, scientific, and military operations. Potential tasks include pipeline inspection, dam surveillance, photogrammetric survey, infrastructure maintenance, inspection of flooded areas, fire fighting, terrain monitoring, volcano observation, and any application that requires land reconnaissance with cameras or other sensors. Fully and effectively exploiting the flying capabilities of a UAV helicopter requires a well-trained pilot; moreover, the flight range of a piloted helicopter is limited to the line of sight or to the pilot’s ability to detect and follow the orientation of the helicopter. These issues are even more important considering that the vehicle will carry and automatically operate a camera used for photogrammetric survey. All this has motivated research on and design of autonomous guidance systems that can both stabilize the helicopter and guide it precisely along a reference path. The constant growth of research programs and technological progress in navigation systems, reflected in ever better-performing global positioning systems integrated with inertial navigation sensors, has allowed a strong reduction in cost and payload size, making the design of low-cost UAV platforms more feasible and attractive. In this paper, we present the results of a flight simulation system developed for tuning the vehicle’s servos, on which our autonomous guidance system, as well as the module for photogrammetric image acquisition and synchronization, will be based. Building a simulated environment makes it possible to evaluate in advance the main issues of a complex control system, to avoid damage to fragile and expensive instruments such as those mounted on a model helicopter, and to test methods for synchronizing the camera with flight parameters.

Introduction

The term UAV denotes any aircraft that flies with no pilot on board and can be controlled remotely. Within this category, helicopters play an interesting role, as they are suited to many applications in which fixed-wing aircraft face operational difficulties (Eisenbeiss 2006). Indeed, such craft offer more flexible maneuvering, as they allow vertical takeoff and landing, hovering, and sideways flight. On a standard model helicopter, these flying capabilities require a well-trained pilot to be fully and effectively exploited (Hing and Oh 2009); moreover, the flight range of a piloted helicopter is limited to the line of sight or to the pilot’s ability to detect and follow the orientation of the helicopter. These issues have motivated research on and design of autonomous guidance systems (Jerzy and Ignacy 2000) that can both stabilize the helicopter and guide it precisely along a reference path. This research has produced a substantial body of literature on the development of fully or partially autonomous helicopters (Conway 1995; Everaerts et al. 2004; Schwarz and El-Sheimy 2004).

The goal of this paper is to present the results of the simulation software implemented to evaluate in advance the issues related to the development of an autonomous guidance and control system for our low-cost model helicopter, whose main components have already been described in Guarnieri et al. (2006). This simulation software will support the development of autonomous navigation and of a semiautomatic procedure for synchronizing image acquisition by the mounted cameras.

System overview

The primary goal of our model helicopter is to provide the user with a top view of the territory without resorting to more expensive classical aerial photogrammetry. The system is designed to collect oriented images for mapping and land monitoring purposes over areas that are difficult to survey with existing ground-based mobile mapping systems. From this viewpoint, our system can be regarded as a lightweight and low-cost mapping tool complementary to existing Mobile Mapping Systems (MMS). According to project specifications, the model helicopter will be used to survey areas of limited extent such as open mines, river segments, and cultivated fields. End uses will include not only the detection of changes in terrain morphology but also the identification of illegal uses of land resources. A further example of application is the mapping of small channels of particular hydrologic importance located along the Venice lagoon, which cannot be accurately mapped through classical aerial photogrammetry because of the flight height and the resulting image scale.

Several kinds of UAV helicopters have been developed so far for photogrammetric data acquisition and terrain or object modeling. For example, the system developed by Nagai et al. (2004) integrates a laser scanner and digital cameras with data from global positioning systems integrated with inertial navigation sensors to construct digital surface models. This system uses a Subaru helicopter with a payload of 100 kg and a main rotor diameter of 4.8 m. According to its range (3 km) and altitude (2,000), the helicopter can be classified as a mini or close-range UAV. Sik et al. (2004) present an alternative mini-UAV helicopter, which was used as a photographic system for surveying ancient towers and temple sites. The helicopter is meant to replace high camera tripods and ladder trucks, which are uneconomical in cost and time. The helicopter, a Hirobo & Eagle 90, has a main rotor diameter of 1.8 m and a payload capability of 8.5 kg. It can carry different camera systems, such as miniature (35 mm), medium (6 × 4.5 cm), and panorama (6 × 12 cm) format cameras as well as video cameras. A gimbal was designed as a buffer that absorbs noise as well as vibrations. A small video camera is also installed on board and connected to the ground station to transmit images to a monitor in real time.

Our proposed mapping system differs from existing UAV helicopters mainly in the adopted imaging system and the maximum flying height. We planned to employ a model helicopter equipped with GPS, orientation sensors, two color digital cameras working in continuous mode, synchronization devices, a data transfer unit, and batteries as the power source. In order to keep the system as compact and lightweight as possible, digital images and positioning data will be stored on board on memory cards. Figure 1 shows a close-up view of our model helicopter, while the related technical specifications are presented in Table 1.

All the sensors are mounted on a customized platform fixed below the helicopter fuselage between the landing skids. The imaging system is based on a pair of lightweight color digital cameras (Panasonic DMC-FX3) that will be placed on the platform and tilted so as to provide an image overlap of 70% between the right and left cameras. The base length will be established according to this requirement and the field of view (FOV) of the cameras. The position and attitude of the model helicopter will be provided by a MEMS-based inertial measurement unit (IMU) with integrated GPS and static pressure sensor, the MTi-G from Xsens Technologies (Fig. 2). This measurement unit has an onboard Attitude and Heading Reference System (AHRS) and a navigation processor that runs a real-time Xsens Kalman filter providing drift-free GPS positions, 3D orientation data, and 3D earth-magnetic data. The main specifications of the MTi-G are reported in Table 2.

Different approaches have been evaluated for the helicopter control system: we found that the best solution, in terms of complexity, cost, and development time, was to mount the control system on board. In this way, there is no need to maintain a bidirectional data link between ground station and helicopter for the whole flight session. However, we planned to use a radio link in order to pilot the helicopter manually during takeoff and landing. Given the size and weight constraints on the guidance system components, we adopted a 520-MHz X-Scale Mini processor from RLC Enterprises, Inc. (Fig. 3). This unit can be programmed in C++ through a direct interface with the Microsoft Visual Studio suite, allowing us to implement not only the code needed for helicopter control but also the code for direct geo-referencing of the acquired digital images.

Simulation environment

In order to evaluate in advance the main issues related to the development of an autonomous guidance system for our unmanned model helicopter, we decided to build a simulation tool with Simulink®, a Matlab® programming environment based on block diagram algebra. The main goal of this approach was to define and tune the set of servos needed for helicopter control, avoiding any damage to the system components that a trial-and-error approach in a real environment could cause. In order to reliably simulate the dynamics of our small-size helicopter, we took into account the effects of the following components: main rotor, tail rotor, aerodynamic drag (wind effect) generated by the fuselage, and horizontal and vertical fins. To this aim, we considered four main servos: the collective pitch control, the cyclic stick, the collective stick, and the throttle. The whole block scheme implemented in Simulink® is summarized in Fig. 4. Here, the first block models the helicopter servos, which are input into the second block, the dynamic model. In turn, this block outputs translational acceleration and angular velocity, related to the body frame, and position and attitude in the inertial frame, which are then fed into the measurement sensor block. Here, noise is added in order to better simulate the real behavior of the GPS, IMU, and earth-magnetic sensors. The output of this block represents the (noisy) state of the system, which is evaluated by an Extended Kalman Filter (fourth block). In the next step, the output of the filter is compared with a reference trajectory in order to determine the new values of the servos. This trajectory, which serves as the reference for the feedback loop required in every control system, has been generated using position and attitude information derived from the technical specifications of the helicopter and of the digital cameras (field of view). Each block of Fig. 4 is described in the following subsections.

The dynamic model

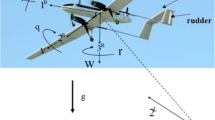

The Raptor 90 has been modeled as a 6-DOF rigid body (three rotations and three translations), whose state is described by the following quantities, as proposed by Eck (2001) (see Fig. 5):

Center of mass position

where: x, y, and z are, respectively, the position (m) on the x-, y-, and z-axes.

Attitude

where: ϕ = roll, θ = pitch, and ψ = yaw are the Euler angles (rad) about the x-, y-, and z-axes, respectively.

Velocity

where: u, v, and w are, respectively, the velocity (m·s−1) along the x-, y-, and z-axes.

Angular velocity

where: p, q, and r are, respectively, the angular velocity (rad·s−1) around the x-, y-, and z-axes.

With regard to the dynamic and kinematic equations, two different reference systems have been considered: the inertial frame (a) and the body frame (b) (Fig. 6).

Basically, the analytical model of the helicopter can be summarized in the following equation system:

Where A = inertial reference frame, B = body reference frame, C = center of mass

- M: the rotational helicopter dynamics, obtained from I = mass moment of inertia (kg·m2) and w = angular velocity (Eq. 4).

- F: the translational helicopter dynamics, obtained from m = mass of the helicopter, w = angular velocity (Eq. 4), and n = velocity (Eq. 3).

- q: the derivative of the body frame (aircraft) attitude with respect to the inertial frame, obtained from Y = angular velocity matrix, q = attitude, and w = angular velocity.

- p: the derivative of the body frame (aircraft) position with respect to the inertial frame, obtained from R = rotation matrix, q = attitude, and n = velocity (Eq. 3).
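For orientation, an equation system of this kind is commonly written in the Newton-Euler form below. This is a sketch consistent with the symbols listed above, not a transcription of the authors' Eq. 5: bold letters denote the vector quantities (to keep the attitude q and position p apart from the scalar rates p, q, and r), and M and F are assumed to be the total couple and the total force acting on the helicopter, expressed in the body frame.

```latex
\begin{aligned}
\mathbf{I}\,\dot{\mathbf{w}} + \mathbf{w} \times (\mathbf{I}\,\mathbf{w}) &= \mathbf{M} \\
m\,(\dot{\mathbf{n}} + \mathbf{w} \times \mathbf{n}) &= \mathbf{F} \\
\dot{\mathbf{q}} &= \mathbf{Y}(\mathbf{q})\,\mathbf{w} \\
\dot{\mathbf{p}} &= \mathbf{R}(\mathbf{q})\,\mathbf{n}
\end{aligned}
```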

Such measurements, derived from angular and translational velocity computed in the inertial frame, are related to the body frame through the rotation matrices Y(q) and R(q). Besides the dynamic and kinematic equations, a complete analytical model of the helicopter requires knowledge of the forces and couples acting on it. After an extensive review of the recent literature on UAV helicopters, we decided to take into account the following sources of forces and couples:

- Gravity force;

- Main rotor;

- Tail rotor;

- Fuselage;

- Horizontal fin;

- Vertical fin.

For brevity’s sake, we only highlight here that the fuselage has been considered as a flat plate subjected to dynamic pressure along the three axes of the body frame. The values of the three corresponding equivalent surfaces are reported in Table 3, along with the other values needed for the simulation. We also took into account wind gust effects (aerodynamic drag), which act on three helicopter components: the fuselage, the tail plane, and the rudder unit. In this case, we did not use any of the existing models already implemented in Simulink®; rather, we employed three small Slider Gain blocks, which allow the user to introduce wind gusts into the simulation directly by moving three cursors with the mouse.
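The flat-plate treatment of the fuselage can be sketched as follows. This is an illustration, not the authors' Simulink® block: it assumes the usual dynamic-pressure drag law, F = -½ ρ S |v| v on each body axis with a unit drag coefficient, and uses placeholder equivalent areas (the actual values are those of Table 3). The names `fuselage_drag`, `AIR_DENSITY`, and `example_areas` are hypothetical.

```python
AIR_DENSITY = 1.225  # kg/m^3, sea-level standard air (assumed value)


def fuselage_drag(rel_velocity, areas, rho=AIR_DENSITY):
    """Drag force (N) on a flat-plate fuselage, one component per body axis.

    rel_velocity: (u, v, w) air-relative velocity in the body frame, m/s,
                  i.e. helicopter velocity minus wind velocity.
    areas:        (Sx, Sy, Sz) equivalent flat-plate areas, m^2.
    Each component opposes the relative motion along its own axis.
    """
    return tuple(-0.5 * rho * s * abs(v) * v
                 for v, s in zip(rel_velocity, areas))


# Placeholder areas for illustration only, NOT the values of Table 3.
example_areas = (0.10, 0.22, 0.15)

# Hovering helicopter hit by a 5 m/s gust blowing along +x:
# the air-relative velocity is (0 - 5, 0, 0), so the gust pushes along +x.
force = fuselage_drag((-5.0, 0.0, 0.0), example_areas)
print(force)
```

Moving one of the three sliders in the simulation corresponds, in this sketch, to changing one component of the wind velocity that is subtracted from the helicopter velocity.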

The helicopter dynamic model block outputs four different measurements, which are used by the subsequent block for sensor simulation: translational acceleration and angular velocity in the body frame, and position and attitude in the inertial frame.
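The rigid-body core of such a dynamic model can be sketched in a few lines. The code below is an illustration under stated assumptions, not the authors' Simulink® implementation: it assumes a diagonal inertia tensor, ZYX (yaw-pitch-roll) Euler angles, and a total force and couple already expressed in the body frame. All function names and the numeric values in the example are hypothetical.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def body_to_inertial(att, vec):
    """Rotate a body-frame vector into the inertial frame (ZYX Euler angles)."""
    phi, theta, psi = att
    cf, sf = math.cos(phi), math.sin(phi)
    ct, st = math.cos(theta), math.sin(theta)
    cp, sp = math.cos(psi), math.sin(psi)
    rows = ((ct * cp, sf * st * cp - cf * sp, cf * st * cp + sf * sp),
            (ct * sp, sf * st * sp + cf * cp, cf * st * sp - sf * cp),
            (-st, sf * ct, cf * ct))
    return tuple(sum(r[i] * vec[i] for i in range(3)) for r in rows)


def euler_rates(att, omega):
    """Map body angular rates (p, q, r) to Euler angle rates (singular at pitch = ±90°)."""
    phi, theta, _ = att
    p, q, r = omega
    tan_t, cos_t = math.tan(theta), math.cos(theta)
    return (p + math.sin(phi) * tan_t * q + math.cos(phi) * tan_t * r,
            math.cos(phi) * q - math.sin(phi) * r,
            (math.sin(phi) * q + math.cos(phi) * r) / cos_t)


def state_derivative(att, vel, omega, force, moment, mass, inertia):
    """Time derivatives of position, attitude, velocity, and angular velocity.

    inertia is (Ixx, Iyy, Izz); a diagonal inertia tensor is assumed.
    """
    i_omega = tuple(i * w for i, w in zip(inertia, omega))
    gyro = cross(omega, i_omega)
    omega_dot = tuple((m - g) / i for m, g, i in zip(moment, gyro, inertia))
    coriolis = cross(omega, vel)
    vel_dot = tuple(f / mass - c for f, c in zip(force, coriolis))
    return body_to_inertial(att, vel), euler_rates(att, omega), vel_dot, omega_dot


# Level flight at 1 m/s along the body x-axis while yawing at 0.5 rad/s,
# with no net force or couple (all values are placeholders).
pos_dot, att_dot, vel_dot, omega_dot = state_derivative(
    att=(0.0, 0.0, 0.0), vel=(1.0, 0.0, 0.0), omega=(0.0, 0.0, 0.5),
    force=(0.0, 0.0, 0.0), moment=(0.0, 0.0, 0.0),
    mass=5.0, inertia=(0.2, 0.3, 0.4))
print(pos_dot, att_dot, vel_dot, omega_dot)
```

Integrating these derivatives over time (for example with a fixed-step solver) yields the position and attitude that the sensor block then corrupts with noise.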

Simulation of measurement sensors

The four outputs returned by the helicopter dynamic model, which depend on the input servos, were used to simulate the operation of the three measurement sensors embedded in the MTi-G unit: the IMU platform, the GPS receiver, and the three-axis magnetometer.

With regard to the first sensor, the input parameters are the translational acceleration and the angular velocity in the body frame, as derived from the solution of the first two equations in (5). To better simulate the behavior of a real attitude sensor, we added uniformly distributed noise, whose amplitude was calculated as the product of the noise density and the square root of the sensor bandwidth. The corresponding values were taken from the IMU specifications reported in Table 4. Moreover, the update rates were simulated using Zero-Order Hold blocks, i.e., Simulink® components that hold the signal for a set amount of time. In this case, the update rate was set to 200 Hz.
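The noise amplitude rule described above can be sketched as follows. The code is only an illustration: it assumes that the uniform noise is symmetric around zero, with the computed amplitude as its half-width, which the text does not state explicitly. The function names and the numeric values in the example are hypothetical placeholders, not the entries of Table 4.

```python
import math
import random


def noise_amplitude(noise_density, bandwidth_hz):
    """Amplitude = noise density (unit/sqrt(Hz)) times sqrt(bandwidth in Hz)."""
    return noise_density * math.sqrt(bandwidth_hz)


def add_uniform_noise(value, amplitude, rng=random):
    """Add noise drawn uniformly from [-amplitude, +amplitude] (assumed symmetric)."""
    return value + rng.uniform(-amplitude, amplitude)


# Placeholder accelerometer figures: 0.002 m/s^2/sqrt(Hz) over a 30 Hz bandwidth.
amp = noise_amplitude(0.002, 30.0)
rng = random.Random(42)  # seeded so that the run is repeatable
noisy = add_uniform_noise(9.81, amp, rng)
print(amp, noisy)
```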

A similar approach was adopted for the GPS receiver and the magnetometer. The GPS sub-block takes as input the inertial position output by the dynamic model block. Uniformly distributed noise is then added to this measurement to simulate a real operational flight. The noise amplitude was set to 10 cm. Again, a Zero-Order Hold block was used to simulate an update rate of 4 Hz. For the magnetometer, we added 2° of uniformly distributed noise to the orientation measurement returned by the dynamic model. The magnetometer output was then discretized in time with a Zero-Order Hold block with an update rate of 120 Hz. Technical specifications for the GPS receiver and the earth-field magnetic sensor are reported in Tables 5 and 6, respectively.
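The effect of a zero-order hold on a faster simulation signal can be sketched as follows. This is a simplified stand-in for the Simulink® Zero-Order Hold block, not a reimplementation of it: it assumes integer rates in Hz and a simulation rate at least as high as the sensor rate, and the function name is hypothetical.

```python
def zero_order_hold(samples, sim_rate_hz, sensor_rate_hz):
    """Hold each sensor reading until the next sensor update.

    samples:        signal sampled at sim_rate_hz (one value per simulation step).
    sim_rate_hz:    integer simulation rate.
    sensor_rate_hz: integer sensor update rate, not above sim_rate_hz.
    Returns a list of the same length in which the value only changes
    when a new sensor update has occurred.
    """
    if sensor_rate_hz > sim_rate_hz:
        raise ValueError("sensor rate must not exceed simulation rate")
    held, last_tick, latest = [], -1, None
    for i, x in enumerate(samples):
        # Index of the most recent sensor update at simulation step i.
        tick = (i * sensor_rate_hz) // sim_rate_hz
        if tick != last_tick:
            latest, last_tick = x, tick
        held.append(latest)
    return held


# A ramp simulated at 8 Hz and read by a 4 Hz sensor:
# every second value is a repeat of the previous reading.
print(zero_order_hold(list(range(8)), 8, 4))
```

With a 4 Hz GPS and a much faster simulation step, most simulation steps therefore see a repeated position, which is one reason the filter has to rely on the higher-rate inertial data between GPS updates.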

The EKF simulation block

In order to properly combine the data obtained by the positioning and orientation sensors integrated in the MTi-G unit, an Extended Kalman Filter (EKF) was employed. The filter takes as input the following parameters:

- Translational acceleration, derived in the body frame from the IMU accelerometers (Gelb 1974);

- Angular velocity, as measured in the body frame by the IMU gyros;

- Inertial position provided by the GPS receiver;

- Attitude measurements provided by the magnetometer.

Obviously, all these data are considered noisy as mentioned in the previous subsection.

In our filter implementation, the state equation is described as follows:

where s = state of the system, f = nonlinear state/time equation, k = time step, u = control function parameters, w = noise (white random noise with Gaussian distribution), while the measurement equation is:

where z = measure, s = state of the system, h = nonlinear state/measure equation, k = time step, v = noise (white random noise with Gaussian distribution).
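In a common discrete-time form with additive noise, the state and measurement equations described above read as follows. This is a sketch built from the symbol definitions given in the text; the additive-noise structure and the time indexing are assumptions, and the authors' exact formulation may differ.

```latex
\begin{aligned}
s_{k+1} &= f(s_k, u_k) + w_k \\
z_k &= h(s_k) + v_k
\end{aligned}
```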

The state vector at time step k (s_k) comprises four variables related to the inertial frame: position, orientation, translational velocity, and angular velocity.

For brevity’s sake, we report here just the equations we used for the translational dynamics (Eq. 8), the rotational dynamics (Eq. 9), and the state measurements (Eqs. 10–13).

where R denotes the rotation matrix converting attitude and position data from the body frame to the inertial frame.

where vel = velocity, k = time step, B = body frame, I = moment of inertia

where GPS = position from GPS measure, k = time step, I = moment of inertia

where mag = attitude values at ϕ = roll, θ = pitch, and ψ = yaw, k = time step
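The predict and update steps of an extended Kalman filter can be illustrated with a scalar state. This is a deliberately reduced sketch, not the filter described in this section, whose state collects position, orientation, and both velocities: with a single state variable the Jacobians and covariances become plain numbers. The function `ekf_step`, its argument names, and the example model are hypothetical.

```python
def ekf_step(s, P, u, z, f, f_jac, h, h_jac, Q, R):
    """One EKF cycle for a scalar state.

    s, P:     previous state estimate and its variance.
    u, z:     control input and new measurement.
    f, f_jac: state transition f(s, u) and its derivative with respect to s.
    h, h_jac: measurement function h(s) and its derivative with respect to s.
    Q, R:     process and measurement noise variances.
    """
    # Predict: propagate the estimate and linearize around the old state.
    s_pred = f(s, u)
    F = f_jac(s, u)
    P_pred = F * P * F + Q

    # Update: linearize the measurement around the predicted state.
    H = h_jac(s_pred)
    K = P_pred * H / (H * P_pred * H + R)  # Kalman gain
    s_new = s_pred + K * (z - h(s_pred))
    P_new = (1.0 - K * H) * P_pred
    return s_new, P_new


# Toy example: the state stays constant and the sensor measures its square.
s_est, P_est = ekf_step(
    s=1.0, P=1.0, u=0.0, z=2.0,
    f=lambda s, u: s, f_jac=lambda s, u: 1.0,
    h=lambda s: s * s, h_jac=lambda s: 2.0 * s,
    Q=0.0, R=1.0)
print(s_est, P_est)
```

In the vector case the products above become matrix products and the division becomes a matrix inverse; the structure of the two steps is unchanged.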

The reference trajectory

In the previous subsections, we presented the set of Simulink® blocks used to model different aspects of our helicopter flight (dynamics, state measurements, and Kalman filtering). All the related information was then employed to obtain the helicopter guidance control through step-by-step comparison with a reference trajectory. To properly design this flight path, we developed an algorithm in Matlab® based on the following assumptions:

- the mapping area should have a rectangular shape;

- the vertex coordinates of the rectangle are defined in the inertial frame;

- the trajectory should be generated automatically once the four vertices of the rectangle are known;

- the trajectory has to end at the helicopter starting position;

- digital images have to be captured in hovering mode, i.e., while the helicopter is kept stationary;

- stop points should be chosen so as to ensure sufficient side and along-path image overlap, according to user requirements;

- the trajectory should be designed taking into account the use of a pair of digital cameras acquiring simultaneously, their field of view, and their tilt with respect to the vertical.

In order to meet all these requirements, our trajectory generating algorithm requires a set of input parameters describing the size of the mapping area, the helicopter’s geometry, and the digital camera pair mounting. Here, we recall just the most important ones: helicopter starting position, operating height (20 m), stop time (10 s), average translational velocity (0.5 m/s), vertex coordinates of the rectangular area, along-path overlap (2 m), side overlap (3 m), and FOV.
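A trajectory generator of this kind can be sketched as a back-and-forth scan of the rectangle. The code below is an illustration, not the authors' Matlab® algorithm, and it simplifies several points: it assumes an axis-aligned rectangle, scan lines parallel to the y-axis, and the ground footprint of a single nadir-looking camera instead of the tilted camera pair. The spacing between stops is taken as the footprint minus the required overlap. All function names and the FOV values in the example are hypothetical.

```python
import math


def footprint(height, fov_deg):
    """Ground coverage (m) of a nadir-looking camera with the given field of view."""
    return 2.0 * height * math.tan(math.radians(fov_deg) / 2.0)


def stops_1d(lo, hi, step):
    """Evenly spaced stops covering [lo, hi] with a spacing no larger than step."""
    n = max(1, math.ceil((hi - lo) / step))
    return [lo + (hi - lo) * i / n for i in range(n + 1)]


def reference_trajectory(start, x_min, x_max, y_min, y_max, height,
                         fov_along_deg, fov_side_deg,
                         along_overlap, side_overlap):
    """Return the list of (x, y, z) stop points, starting and ending at start."""
    along_step = footprint(height, fov_along_deg) - along_overlap
    side_step = footprint(height, fov_side_deg) - side_overlap
    if along_step <= 0 or side_step <= 0:
        raise ValueError("overlap is larger than the image footprint")

    waypoints = [(start[0], start[1], height)]
    for i, x in enumerate(stops_1d(x_min, x_max, side_step)):
        ys = stops_1d(y_min, y_max, along_step)
        if i % 2 == 1:
            ys.reverse()  # fly every second scan line in the opposite direction
        waypoints.extend((x, y, height) for y in ys)
    waypoints.append((start[0], start[1], height))  # return to the start
    return waypoints


# 60 m x 100 m area at 20 m height, with 2 m along-path and 3 m side overlap;
# the 90 degree FOV values are placeholders, not camera specifications.
path = reference_trajectory((0.0, 0.0), 0.0, 60.0, 0.0, 100.0, 20.0,
                            90.0, 90.0, 2.0, 3.0)
for point in path:
    print(point)
```

Stop time and average translational velocity would then be used to attach a time stamp to each stop point and to the transfer segments between them.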

Testing and results

For the test, we set an average velocity of 0.5 m/s. Although this is not a very high speed compared with the typical performance of acrobatic helicopters, it is well suited to automatically controlled flight. The designed trajectory has been used as a reference for the control loop (see Fig. 4), which we implemented as a MIMO system generating the four servo inputs needed to control the flight path according to the values of the six variables describing the helicopter’s position and attitude in 3D space.

Figure 7 shows the helicopter position on the reference trajectory along the x-axis: horizontal steps correspond to the red points in Fig. 8 (stop positions), while vertical steps denote helicopter motion in between. As shown in Fig. 9, the range of the yaw angle computed for the same trajectory lies between −90° and +90°. These wide angle variations are needed to scan the whole area displayed in Fig. 8. Both measurements are computed in the inertial frame.

Figure 10 displays in green the GPS position, computed along the x-axis and returned by the measuring sensor block, over the first 100 s of simulation. This noisy measurement is compared with the values of the reference trajectory (blue lines) and with those representing the current state as output by the helicopter dynamic model (noiseless). Then, in Fig. 11, the output of the EKF for the roll angle is shown overlaid on similar data. This figure therefore summarizes the whole block scheme developed for the autonomous control of our model helicopter. The blue line represents the reference trajectory; the red curve is the current UAV state (for the roll angle), which is indirectly “seen” by the control system through the noisy measurements output by the MTi-G unit, shown in green. The latter are fed into the Kalman filter in order to obtain a better tracking (light blue line) of the reference trajectory.

Finally, Figs. 12 and 13 show an example of the trajectory tracked in Simulink® by the control system, in terms of the inertial position along the x-axis and of the yaw angle, for the first 400 s of simulation. The time shift of both curves with respect to the theoretical ones is a typical side effect of control systems based on filtered measurements. Here, both curves (in red) are delayed by 1 s. The results show that during a scan line (Fig. 8), our model helicopter would shift correctly along the y-axis, but the yaw angle tends to produce a translational component along the x-axis as well. However, in this case, the major displacements occur during motion between stop points: here, the control loop correctly moves the helicopter onto the reference trajectory, so that the image capture position is very close to the reference one.

In order to evaluate the performance of the implemented control system, we computed the root mean square (RMS) error for all six variables defining the helicopter position and attitude, that is:
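For a generic variable x, the RMS error is conventionally defined as below. The formula is given as a sketch, assuming that the error at each of the N simulation steps is the difference between the tracked value and the corresponding value of the reference trajectory; the symbols N and x^ref are introduced here for illustration.

```latex
\mathrm{RMS}_x = \sqrt{\frac{1}{N} \sum_{k=1}^{N} \left( x_k - x_k^{\mathrm{ref}} \right)^2}
```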

From this computation, we achieved the following results:

An analysis of the accuracies in Table 7 shows that the position and attitude error magnitudes are not acceptable for fully automatic external orientation of the images; nevertheless, the navigation data are useful for the initial orientation of the photogrammetric block and for autonomous navigation. The user can rely on a set of stereographic images that are externally oriented with an RMS error which can be estimated from Table 7 to be ≈0.7 m at a flying height of 20 m above ground. The image block is, therefore, “initialized”: the single images are in the correct order and positioned with an error of known magnitude. This error can subsequently be corrected using standard photogrammetric procedures (tie points, control points) for robust block adjustment and image orientation.

Conclusions

In this paper, we have presented the results of a control system developed in Matlab® Simulink®, aimed at simulating the behavior of an autonomous guidance system to be applied to a model helicopter. This small-size UAV is a low-cost MMS designed for the collection of directly geo-referenced color digital images while flying at low altitude over areas of limited extent. The photogrammetric processing effort will be assisted by an initial image orientation using navigation data. After photogrammetric processing, such images will be used to produce digital elevation models, orthophotos, and other kinds of GIS data that can be used for several purposes related to land management. Given its capability of surveying areas of limited access, the proposed model helicopter can be regarded as a mapping tool complementary to existing and well-proven ground-based mobile mapping systems such as backpack mapping (Guarnieri et al. 2008) or mobile mapping vans.

References

Conway A (1995) Autonomous control of an unstable model helicopter using carrier phase GPS only. Dissertation, Department of Electrical Engineering, Stanford University

Eck C (2001) Navigation algorithms with applications to unmanned helicopters. Dissertation, Swiss Federal Institute of Technology Zurich

Eisenbeiss H (2006) Applications of photogrammetric processing using an autonomous model helicopter. Int Arch Photogramm Remote Sens Spat Inf Sci 185:51–56

Everaerts J, Lewyckyi N, Fransaer D (2004) Pegasus: design of a stratospheric long endurance UAV system for remote sensing. Int Arch Photogramm Remote Sens Spat Inf Sci XXXV

Gelb A (1974) Applied optimal estimation. MIT, Boston

Guarnieri A, Vettore A, Pirotti F (2006) Project for an autonomous model helicopter navigation system. Proc. of ISPRS Commission I Symposium, “From Sensors to imagery”, Marne La Vallee, Paris, 4–6 July

Guarnieri A, Vettore A, Pirotti F, Coppa U (2008) Backpack mobile mapping system. Int Arch Photogramm Remote Sens Spat Inf Sci XXXVI-5/C55

Hing JT, Oh PY (2009) Development of an unmanned aerial vehicle piloting system with integrated motion cueing for training and pilot evaluation. Journal of Intelligent and Robotic Systems. doi:10.1007/s10846-008-9252-3

Jerzy ZS, Ignacy D (2000) 3D Local trajectory planner for UAV. Journal of Intelligent and Robotic Systems. doi:10.1023/A:1008108910932

Nagai M, Shibasaki R, Manandhar D, Zhao H (2004) Development of digital surface and feature extraction by integrating laser scanner and CCD sensor with IMU. Int Arch Photogramm Remote Sens Spat Inf Sci XXXV

Schwarz K, El-Sheimy N (2004) Mobile mapping systems—state of the art and future trends. Int Arch Photogramm Remote Sens XXXV

Sik JH, Chool LJ, Sik KM, Joon KI, Kyum KV (2004) Construction of national cultural heritage management system using a helicopter photographic surveying system. Int Arch Photogramm Remote Sens Spat Inf Sci XXXV

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Coppa, U., Guarnieri, A., Pirotti, F. et al. Accuracy enhancement of unmanned helicopter positioning with low-cost system. Appl Geomat 1, 85–95 (2009). https://doi.org/10.1007/s12518-009-0009-x

DOI: https://doi.org/10.1007/s12518-009-0009-x