Abstract

Agricultural UAV-based remote sensing tools to facilitate decision-making for increasing productivity in developing countries were developed and tested. Specifically, a high-quality multispectral sensor and sophisticated-yet-user-friendly data processing techniques (software) under an open-access policy were implemented. The multispectral sensor, IMAGRI-CIP, is a low-cost adaptable multi-sensor array that acquires high-quality, high-SNR images from a UAV platform, which are used to estimate vegetation indexes such as NDVI. Also, a set of software tools that includes wavelet-based image alignment, image stitching, and crop classification has been implemented and made available to the remote sensing community. A validation field experiment carried out at the International Potato Center facilities (Lima, Peru) to test the developed tools is reported. A thorough comparison study with a widely used commercial agricultural camera showed that IMAGRI-CIP provides highly correlated NDVI values (R² ≥ 0.8). Additionally, an application field experiment was conducted in Kilosa, Tanzania, to test the tools in smallholder farm settings featuring highly heterogeneous crop plots. Results showed high accuracy (> 82%) in identifying 13 different crops grown either as mono-crops or as mixed crops.

Introduction

Remote sensing techniques play an essential role in agricultural applications including crop and soil monitoring, natural resource management, irrigation and fertilization methods, and non-invasive plant disease detection (Moran et al. 1997; Chavez et al. 2012). Temporal and spatial variability in agricultural areas can be assessed through multispectral aerial images as spectral properties are associated with physiological responses to crop management and environmental conditions. Depending on the area to be covered and the desired spatial resolution, images can be acquired from several remote sensing platforms such as satellites, airplanes, and drones or unmanned aerial vehicles (UAV) (Toth and Jóźków 2016). UAV-based remote sensing is very promising in agricultural applications, particularly for crop health surveillance and yield predictions. Projected investments for drones in agriculture in 2016–2020 are $5.9 bn (The Economist ). However, smallholder farmers in developing countries might not benefit from these developments. A recent analysis of drones for agriculture in developing countries (CTA 2016) cited low-cost instrumentation, open-access software, capacity building, and appropriate regulatory frameworks as requirements for widespread use of this promising technology.

Although smallholder farming contributes about 70% of food globally (Wolfenson 2013), the diversity of its cropping systems is not accurately captured by national crop statistics. Indeed, crop statistics are important tools for planning, policy-making, and timely intervention to address food insecurity. A data gathering system that can generate sufficiently accurate crop statistics right from the farm, rather than from the markets, has the potential to contribute to improving crop production and to inform decision makers so that they can act in case of anticipated food shortages or crop surpluses.

The potential of satellite remote sensing for gathering crop data has been demonstrated (Moran et al. 1997; Dadhwal et al. 2002). For example, cropland GIS data layers were generated using 30-m resolution Landsat imagery in the USA (Hanuschak et al. 2004), high-resolution images were used to improve statistics for sweet potato growing areas in Uganda (Zorogastua et al. 2007), and cropland areas were estimated with 95% accuracy by unsupervised classification of 30-m resolution satellite data in China (Wu et al. 2014). Nowadays, satellite products have different spatial resolutions, i.e., kilometers, hundreds of meters, or tens of meters. Most of these coarse-resolution products are open-access but have limited applications for smallholder farming settings. Although advanced satellite systems have an unprecedented spatial resolution of a few meters, and even sub-meter, associated costs are still prohibitive for widespread applications in agriculture and development. Recently, several satellite products have been made accessible, such as Landsat and the Sentinels. The European Space Agency (ESA)’s Sentinel-2 mission provides an open-access data product with measurements in the visible (VIS) and near-infrared (NIR) wavelengths, specifically designed for vegetation studies. With 10-m spatial resolution (for VIS and NIR spectral bands), 12-bit radiometric resolution, a five-day revisit time, and a swath ≈ 290 km wide, this product is excellent for monitoring crops at a large scale in an efficient manner. Besides spatial resolution, satellite systems have other limitations directly related to their flight height. The large distance between the satellite and the ground means that the signal reaching the satellite’s sensor is affected by water vapor, ozone, aerosols, clouds, etc., and this signal attenuation could significantly affect data quality. The effect of most of these atmospheric constituents can be sufficiently corrected by atmospheric models such as FLAASH (Fast Line-of-sight Atmospheric Analysis of Spectral Hypercubes) (Cooley et al. 2002), SMAC (Simplified Method for Atmospheric Corrections) (Rahman and Dedieu 1994), and SNAP (Sentinel Application Platform) (SNAP ).

Smallholder farmers often grow different types of crops on small plots creating a highly heterogeneous mosaic of vegetation, which complicates the crop discrimination through space-borne data due to their still limited spatial resolution. Also, satellite imagery analysis can be adversely affected by landscape factors such as elevation and soil type; for example, terraced crops versus those planted on a steep incline (Craig and Atkinson 2013). Thus, the emergence of remote sensing systems based on UAVs brought with them an immense potential for agriculture, particularly in the smallholder farming context. The low flying heights result in extremely high spatial resolutions (in the order of a few centimeters) depending on the optical properties of the on-board sensor (e.g., camera). This extremely detailed UAV-based data can deal with the complex heterogeneity of smallholder farming systems. Additionally, UAV-based systems provide a solution to the cloud cover effect, since UAVs fly at very low heights < 500 m. Also, the air column between the UAV and the ground is very thin, resulting in negligible atmospheric effects on the acquired data. Nonetheless, the major shortcoming of the UAV-based remote sensing systems is the limited spatial coverage resulting from short flight times and low flying height, which ultimately limit the field of view.

UAV-based agricultural remote sensing includes several hardware and software components, and our work intended to reduce overall costs by providing open-source tools and methods freely available to the scientific community. Regarding the hardware, we developed a low-cost multispectral camera that enables the estimation of a specific vegetation index. Commercial multispectral cameras are expensive and oriented to multiple applications, as they currently include 3 to 5 spectral bands. Once the images are acquired, pre- and post-processing of data are needed. Several software packages on the market can perform all those tasks; however, they are less accessible for professionals serving smallholder farmers due to their high cost. We addressed this problem by developing two pre-processing algorithms (image alignment and image stitching) and one post-processing algorithm (crop determination through classification). The hardware and software described in this paper were designed and developed in response to the needs expressed by an African community of practice composed of potential developers, application scientists, farmer cooperatives, and policy makers (Chapter 12 in James 2018). The characteristics listed as desirable for adopting UAV-based remote sensing platforms (hardware and software) included the following: low cost, flexibility (i.e., adaptable to different conditions and needs), adaptable and repairable by local professionals, capable of discriminating mixed crops, and user-friendliness. In sum, this work presents three main innovations. First, to our knowledge, there is no scientific report where technology developers incorporate specific demands of potential stakeholders to design and implement a UAV-based agricultural remote sensing system which includes hardware, software, and signal acquisition methodologies. Second, we propose a workflow or automated processing chain for UAV imagery based on open-source tools. Finally, within our workflow, we present a novel method for multispectral image alignment and stitching based on wavelet transform, specifically oriented to agricultural applications.

In this work we describe the main components in a UAV-based remote sensing system oriented to agricultural applications. Both commercial and our open-source solutions are characterized to help final users to make a sound selection, considering their needs and budget. We summarize the results of an assessment of our developed technology through a temporal NDVI analysis of potato crops against a commercial camera. Then, an application of this technology to smallholding cropping areas is described. Finally, we discuss the cost reduction of using open-access tools, the viability of using hybrid commercial/open-source options, and their respective pros and cons, as well as the implications of the use of this technology by professionals serving farmer communities.

UAV-based technology and methods

Building a UAV-based remote sensing system

A UAV-based remote sensing system has two main components: (1) the platform or vehicle that provides support for a given payload and the stability needed for the data acquisition, and (2) the sensors, for data acquisition from a given target. Several options can be found in the market ranging from specialized ready-to-use systems—usually associated with high prices—to do-it-yourself (DIY) solutions that ultimately require the final user to have technical knowledge in several areas such as electronics, mechanics, and software usage. Hence, although this section mainly aims to show the implementation of a low-cost multispectral camera, we briefly mention the commercial options for the UAV platforms.

There are several well-known UAV platforms in the market, such as Mikrokopter (http://www.mikrokopter.de), DJI (http://www.dji.com), and Parrot (http://www.parrot.com). As a descriptive example, our field applications in smallholder farming were conducted with the multicopter Okto-XL (Mikrokopter, HiSystems GmbH, Germany), an 8-rotor UAV that includes an Inertial Measurement Unit (IMU), a GPS, eight motor controllers, and flight and navigation control boards. The payload capacity is 1.5 kg and its flight duration ranged from 15 to 18 min. The flight control board handles digital signals to control other devices such as servo motors or cameras. Mikrokopter UAVs come with specialized software to set flight plans through GPS positions (waypoints) as well as to receive telemetry data that show UAV position, speed, altitude, and battery status, among other important variables for the pilot. The price of this system is ≈ USD $4,500 without considering shipping fees. A significant advantage of commercial solutions is the reduced implementation time-frame. Furthermore, the final user does not need any technical or specialized background to handle the platforms, which makes them appealing for remote sensing applications.

There are also several affordable navigation systems that allow entrepreneurs and scientists to build a UAV platform. The most widely used are Ardupilot (http://www.ardupilot.org) and PixHawk (http://www.pixhawk.org). A navigation system is an electronic device in charge of rotor control that provides stability to the mechanical structure during the flight, which helps to keep the sensor (camera) within a fixed line-of-sight. It also allows the user to remotely control the UAV from the ground. The average cost is ≈ USD $500. Mechanical parts and rotors are not included, and the additional cost to build a DIY 8-rotor UAV ranges between ≈ USD $400 and USD $750.

It is important to mention that the essential component of a UAV-based system for agricultural applications is the sensor or multispectral camera. The most common commercial options include RedEdgeMX from Micasense (http://www.micasense.com), ADC-micro from Tetracam Inc. (http://www.tetracam.com), and Sequoia+ from Parrot (http://www.parrot.com), with prices ranging from ≈ USD $3,000 to USD $5,500. The application cited in this manuscript was conducted with the ADC-micro model, a 3-band Agricultural Digital Camera (ADC) manufactured by Tetracam (the term TTC is used to refer to this device). It is a 90-g RG-NIR (red, green, and near-infrared) camera, specifically designed for operation aboard UAVs due to its small dimensions (75 mm × 59 mm × 33 mm). Its sensor provides 3.2-megapixel (2048 × 1536 pixels) images that are stored along with meta-data such as GPS coordinates and attitude information (pitch, roll, and yaw).

The Integrated Multi-spectral Agricultural (IMAGRI-CIP) camera system that we developed was designed to acquire red and NIR images with a high signal-to-noise ratio (SNR) and thus obtain a reliable normalized difference vegetation index (NDVI) estimation. IMAGRI-CIP implementation followed the multiple camera approach given by Yang (2012). Thus, the system is composed of a pcDuino1 embedded computer, two identical monochrome cameras (Chameleon, Point Grey, Canada), two lenses (Edmund Optics, Barrington, NJ), and two filters (Andover Corporation, Salem, NH) (see Fig. 1). The pcDuino1 is a 1-GHz ARM Cortex A8 processor-based system with the Lubuntu Linux (12.04) operating system. It has two USB 2.0 ports which are used to handle the two cameras. This mini PC platform was chosen due to its light weight (< 200 g), convenient for UAV applications. Each monochrome camera is based on the Sony ICX445 CCD image sensor whose quantum efficiencies are 64% at 525 nm, 16% at 650 nm, and 5% at 850 nm (ICX455, Technical Application Note). The fixed distance between the cameras' optical axes is 45 mm. Both cameras are configured with zero gain and 4-pixel binning to improve the signal-to-noise ratio of the images (SNR = 49 dB). The binning generates a reduced spatial resolution image of 640 × 480 pixels. Moreover, due to the pcDuino1’s computational limitations, the images are acquired with a radiometric resolution of 8 bits per pixel. The two bandpass interference filters have a diameter of 25 mm with center wavelengths of 650 nm (red) and 850 nm (NIR), and their Full-Width at Half-Maximum (FWHM) values are 80 nm and 100 nm, respectively. The lenses have a focal length of 8.5 mm and an aperture range from f/8 to f/16. C-language software was implemented to control camera parameters such as shutter time, gain, image resolution, and frame rate, and to capture images from both cameras. The overall system is mounted in a 3-D-printed modular plastic case (i.e., each part can be removed for maintenance or replacement) with a fixed distance between the cameras. The CCD cameras together with mount adapters, filters, and lenses were calibrated following the methodology to correct lens distortion described by Zhang (2000). Depending on users’ needs, filters can be exchanged to register images in different sectors of the VIS-NIR region of the electromagnetic spectrum. Also, in this study, we adopted the auto-exposure mode for both cameras, where the exposure value (EV) is set to EV = 4 based on previous experiments at ground level. Since most of the optical and camera parameters are fixed (such as the focal length and gain), this mode estimates the best integration time values while avoiding saturation. Our tests, including the NIR and red interference filters, provided an adequate number of counts for the 8-bit dynamic range. It is noteworthy that the approximate cost of the IMAGRI-CIP system is USD $1,200.

Integrated Multi-spectral Agricultural (IMAGRI-CIP) Camera system. (a) Monochrome cameras with interference filters in the red and NIR spectral bands. The white circuit board next to the cameras is the pcDuino1 embedded system utilized for image acquisition and storage. (b) The 3-D printed case which supports the cameras, pcDuino1, and the battery. For image acquisition, it is assembled to the UAV platform with a nadir viewing geometry

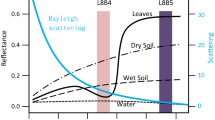

A major aspect in remote sensing applications is the identification of those regions of the light spectrum that provide quantitative and qualitative information about key features of the earth or material surfaces under analysis. This is achieved by measuring the spectral response from the desired target using a spectrometer. Such information is crucial to design a camera with a few bands centered at designated wavelength regions. In vegetation studies, the spectral regions centered at green (\(\sim \)560 nm), red (\(\sim \)665 nm), and near-infrared (\(\sim \)842 nm) wavelengths are the most informative bands used to calculate vegetation indexes such as NDVI (Rouse et al. 1973). Widely used commercial spectrometers are USB2000+ from Ocean Optics (http://www.oceanoptics.com), SensLine from Avantes (http://www.avantes.com), and Glacier spectrometer from Edmund Optics (http://www.edmundoptics.com), with prices that range from ≈ USD $2,000 to USD $4,000.

For IMAGRI-CIP implementation, we selected interference filter wavelengths based on spectral studies carried out with the USB2000+ Ocean Optics spectrometer. For this, we developed an additional tool called SpectraCIP (see Fig. 2), which aims to facilitate spectra acquisition through user-friendly software under an open-access policy. SpectraCIP is a C-language-based graphical user interface (GUI) software. Through it, the user is able to control several acquisition parameters such as exposure time, the number of samples to average, and binning, among others. Also, it can acquire reference measurements (using a Lambertian surface) to ultimately calculate the vegetation reflectance. The software has been tested with the USB2000+ model for visible and NIR input light. This software is available to the agricultural remote sensing community and can be downloaded from Loayza et al. (2017).
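As a rough illustration of the reflectance calculation mentioned above, the following Python sketch divides a target spectrum by the reference spectrum measured over the Lambertian surface. It is not taken from SpectraCIP: the function name `reflectance`, the optional dark-count subtraction, and the `ref_reflectance` factor are assumptions made for this example.

```python
import numpy as np

def reflectance(sample, reference, dark=None, ref_reflectance=1.0):
    """Estimate reflectance per wavelength from raw spectrometer counts.

    sample, reference: counts over the target and over the reference panel.
    dark: optional dark counts (assumed here, not described in the paper).
    ref_reflectance: assumed known reflectance of the reference panel.
    """
    sample = np.asarray(sample, dtype=float)
    reference = np.asarray(reference, dtype=float)
    dark = np.zeros_like(sample) if dark is None else np.asarray(dark, dtype=float)

    numerator = sample - dark
    denominator = reference - dark

    # Leave NaN where the reference signal is not above the dark level.
    out = np.full(numerator.shape, np.nan)
    valid = denominator > 0
    out[valid] = ref_reflectance * numerator[valid] / denominator[valid]
    return out
```

For instance, `reflectance([50, 110], [100, 210], dark=[0, 10])` evaluates to 0.5 at both wavelengths.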

UAV multispectral imagery pre-processing

Images acquired with a multispectral camera, either TTC or IMAGRI-CIP, require pre-processing and our study focuses on two procedures. First, the multispectral images captured by the IMAGRI-CIP have to be aligned since the camera registers two independent red and NIR images. Second, when two or more scenes are registered, they need to be stitched together to generate a large-area mosaic. The stitching process was applied to both TTC and the aligned IMAGRI-CIP imageries.

Wavelet-based Multispectral Image Alignment

The IMAGRI-CIP camera system provides multispectral images acquired by two different sensors. One may argue that due to the fixed location of such sensors, the alignment could be restricted to a geometrical transformation governed by the distance between the cameras. However, during in-flight acquisition, the final scenes are also affected by spatial translation. Specifically, the cameras retrieve data sequentially, i.e., each camera captures one image at a time with a delay of < 1 s, and the UAV motion during this delay generates a spatial shift in the scene. To cope with it, we developed an automatic image alignment protocol for NIR and red images based on the wavelet transform.

The use of wavelets in image registration is supported by their time-frequency characteristics and multiresolution capability, which enable decomposing an image into lower resolution images, enhancing its features without losing information (Stone et al. 1999; Le Moigne et al. 2002, 2011). It is well-known that the Discrete Wavelet Transform (DWT) can enhance such features in three specific orientations: vertical, horizontal, and a mixed ±45° orientation. Notwithstanding, DWT faces two main problems: (1) the lack of shift-invariance and (2) the lack of directionality. These problems have been solved with the Dual-Tree Complex Wavelet Transform (DT-CWT), which offers six different orientations: ±15°, ±45°, and ±75° (Chauhan et al. 2012). For further information and implementation details, the reader is referred to Selesnick et al. (2005). The procedure for NIR and red image alignment can be summarized in the following steps (also depicted in Fig. 3):

1. Perform six-orientation feature enhancement for both red and NIR images using DT-CWT. As a result, we have six pairs of sub-images (1/4 the size of the original image).

2. For each pair, the red sub-image is rotated by \(\theta_{j}\) with 0.1° steps in the clockwise direction in the range [−1°, 1°], where j ∈ [1,20] and j = 1 yields \(\theta_{1} = -1^{\circ}\). This step follows the specifications reported in Wang et al. (2010).

3. Perform correlation between each pair and store the location of the peak value as the translation vector \(T_{i} = (x_{i}, y_{i})^{T}\), where i ∈ [1,6] identifies a pair.

4. The rotation angle \(\hat {\theta }\) and the translation vector \(\hat {T} = (\hat {x},\hat {y})^{T}\) are estimated by solving:

$$ \{\hat{T},\hat{\theta}\} = \underset{x,y,\theta_{j}}{\text{argmax}}\left\{\text{Corr}\left(I_{red}^{\theta=\theta_{j}},I_{NIR}^{\theta = 0}\right)\right\} \tag{1} $$

5. The red image is transformed using \(\{\hat {T},\hat {\theta }\}\) and superimposed over the NIR image to generate a multispectral aligned image.

Schematic overview of the Wavelet-based Multispectral Image Alignment (WMIA-CIP) software. We use the DT-CWT to enhance six-directional features in the input red and NIR images. A rotation transform given by the angle \(\theta_{j}\) is applied to the red image and its correlation with the corresponding NIR image is computed. Then, six translation vectors \(\hat {T}_{i}\) (with i ∈ [1,6]) are estimated for a given \(\theta_{j}\). This process iterates 20 times for each pair, and the values of \(\{\hat {T}_{i} , \theta _{j} \}\) that yield the maximum value of \(\text {Corr}(I_{red}^{\theta =\theta _{j}},I_{NIR}^{\theta = 0})\) are selected as the transform parameters for final alignment

In the first step, we use the DT-CWT to enhance six-directional features in the input red and NIR images. In the second step, a rotation transform given by the angle \(\theta_{j}\) is applied to the red image and its normalized correlation with the corresponding NIR image is computed. Then, six translation vectors \(\hat {T}_{i}\) (with i ∈ [1,6]) are estimated for a given \(\theta_{j}\). This process iterates 20 times for each pair, and the pair \(\{\hat {T}_{i} , \theta _{j} \}\) that yields the maximum value of \(\text {Corr}(I_{red}^{\theta =\theta _{j}},I_{NIR}^{\theta = 0})\) is selected as the transform parameters for final alignment.
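The search over rotation and translation described above can be sketched in a few lines of Python. This is not the WMIA-CIP implementation, and it makes several simplifying assumptions: a gradient-magnitude image stands in for the six DT-CWT sub-bands (so there is a single feature pair instead of six), rotation uses nearest-neighbour sampling with an arbitrary sign convention, translation is treated as a circular shift found with an FFT-based normalized correlation, and the angle grid has 21 samples that include both end points. All function names (`edges`, `rotate_nn`, `best_shift`, `align`) are hypothetical.

```python
import numpy as np

def edges(img):
    """Gradient magnitude, a simple stand-in for DT-CWT feature enhancement."""
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    return np.hypot(gx, gy)

def rotate_nn(img, angle_deg):
    """Rotate about the image centre using nearest-neighbour sampling."""
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: locate the source pixel of every output pixel.
    xs = np.cos(t) * (xx - cx) + np.sin(t) * (yy - cy) + cx
    ys = -np.sin(t) * (xx - cx) + np.cos(t) * (yy - cy) + cy
    xi = np.clip(np.rint(xs).astype(int), 0, w - 1)
    yi = np.clip(np.rint(ys).astype(int), 0, h - 1)
    return img[yi, xi]

def best_shift(ref, mov):
    """Peak of the normalized circular cross-correlation and its location.

    Returns (peak, dy, dx) such that np.roll(mov, (dy, dx), axis=(0, 1))
    best matches ref.
    """
    a = ref - ref.mean()
    b = mov - mov.mean()
    corr = np.fft.ifft2(np.fft.fft2(a) * np.conj(np.fft.fft2(b))).real
    corr /= np.linalg.norm(a) * np.linalg.norm(b) + 1e-12
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    peak = corr[dy, dx]
    h, w = corr.shape
    # Map shifts larger than half the image size to negative offsets.
    dy = dy - h if dy > h // 2 else dy
    dx = dx - w if dx > w // 2 else dx
    return peak, int(dy), int(dx)

def align(red, nir, angles=None):
    """Search rotation angles, keep the (angle, shift) with the highest peak."""
    if angles is None:
        angles = np.linspace(-1.0, 1.0, 21)  # 0.1 degree steps in [-1, 1]
    angles = sorted(angles, key=abs)          # ties favour the smallest rotation
    f_nir = edges(nir)
    best = (-np.inf, 0.0, 0, 0)
    for theta in angles:
        peak, dy, dx = best_shift(f_nir, edges(rotate_nn(red, theta)))
        if peak > best[0]:
            best = (peak, float(theta), dy, dx)
    _, theta, dy, dx = best
    aligned = np.roll(rotate_nn(red, theta), (dy, dx), axis=(0, 1))
    return aligned, theta, (dy, dx)
```

Calling `align(red, nir)` on two arrays of equal shape returns the transformed red image together with the selected angle and shift. A real implementation would crop or pad instead of wrapping pixels around the image border, and would use interpolation when rotating.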

Figure 4 shows three examples to demonstrate the application of the WMIA-CIP. Here, we have images acquired with the IMAGRI-CIP system in a UAV flying at 25-m (cassava) and 60-m (sweet potato) altitude in April 2015 at CIP facilities. Images a, b, and c show NIR, red, and aligned images for cassava crops, respectively. Similarly, images d, e, and f show NIR, red, and aligned images for sweet potatoes. The software Wavelet-based Multispectral Image Alignment (WMIA-CIP) can be downloaded from Palacios et al. (2019).

Application examples of the proposed wavelet-based algorithm over IMAGRI-CIP images. Images a, b, and c show NIR, red, and aligned images for cassava crops, respectively. Similarly, images d, e, and f show NIR, red, and aligned images for sweet potatoes. Aerial images were acquired at CIP facilities flying at altitudes of 25 m for cassava and 60 m for sweet potatoes

Image Stitching for Aerial Multi-spectral images

Acquired and subsequently aligned images are usually stitched together to generate a large mosaic of the study area. For this purpose, an open-source software named Image Stitching for Aerial Multi-spectral images (ISAM-CIP) was developed by our team. ISAM-CIP is a C-language software based on OpenCV libraries whose main objective is to stitch high-resolution imagery seamlessly. The main procedures within the software include the following:

1. Finding features: Corners, intersections, or manually placed square white panels (also known as ground control points (GCP)) in the overlapping regions of the images can help to link images. We selected the patent-free ORB algorithm for feature detection. The reader is referred to Rublee et al. (2011) for further information.

2. Matching features: Matched features are used to connect image pairs by estimating a homography. The output of this process is a transformation matrix (translation and rotation) per pair.

3. Estimation of camera parameters: This step applies a three-dimensional correction based on camera parameters estimated from every image used in the mosaic. The algorithm is called Bundle Adjustment (Triggs et al. 1999; Lourakis and Argyros 2009). Small variations in the UAV angle with respect to the horizon can be estimated here.

4. Image transformation and stitching: The updated transformation matrices are applied to each of the images to produce the complete mosaic. The algorithm detects the overlapping region and generates a customized mask that measures the contrast/brightness level of each image and equalizes the pixel values to get a corrected illumination.
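To make the feature-matching step more concrete, the sketch below estimates a homography from already matched point pairs using the direct linear transform (DLT) in plain NumPy. It is an illustration only and is not extracted from ISAM-CIP, which relies on OpenCV; the function names `estimate_homography` and `apply_homography` are hypothetical, the matches are assumed to be outlier-free, and no coordinate normalization or robust estimation (e.g., RANSAC) is included.

```python
import numpy as np

def estimate_homography(src, dst):
    """Estimate the 3x3 homography H mapping src points to dst points (DLT).

    src, dst: arrays of shape (N, 2) with N >= 4 matched (x, y) coordinates.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each match contributes two linear equations in the entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (x, y) points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]
```

With features detected by ORB and matched between two overlapping images, the matched pixel coordinates would play the role of `src` and `dst`; the resulting matrix is the per-pair transformation that later steps refine.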

The ISAM-CIP user interface is presented in Fig. 5, and an example of a stitched image is shown in Fig. 6. This mosaic was generated using 16 multispectral images acquired with the commercial agricultural ADC-micro Tetracam (TTC) camera over avocados at the National Institute for Agricultural Innovation (INIA) - Huaral, Peru facilities in April 2016. The resulting image has a total area of 375 m × 180 m acquired with six ≈ 8 min UAV flights at an altitude of ≈ 120 m. ISAM-CIP can be downloaded from Loayza et al. (2017a).

Multispectral mosaic generated using 16 individual multispectral images acquired with TTC camera at the National Institute for Agricultural Innovation (INIA) - Huaral, Peru facilities in April 2016. The output image has an area of 375 m × 180 m registered with a UAV flying for ≈ 8 min at an altitude of ≈ 120 m

These pre-processing steps can also be performed by commercial solutions. For example, PIX4Dmapper from PIX4D (http://www.pix4d.com) and Agisoft Metashape from Agisoft (http://www.agisoft.ca) provide the needed tools to align and stitch UAV-based images. In most cases, the data processing is carried out in a “cloud,” i.e., the data must be sent to their headquarters to be processed, and the final product is delivered to the user. Their cost is ≈USD $ 3,500 for a yearly single-user license.

UAV multispectral imagery post-processing

Classification approach for cropping area estimation

Estimation of cropping areas in highly diverse small farms is a challenging task. To this end, we used UAV-based, very high-resolution images that can provide rich information about spatial characteristics with granular data. Notwithstanding, its spectral resolution is usually low as a result of the limited payload capability (i.e., additional weight from extra optical sensors) of small and medium-size UAVs.

We propose a Maximum Likelihood Classification (MLC) approach based on additional texture analysis to increase the efficacy of the feature vector, as demonstrated for a similar application in Laliberte and Rango (2009). Thus, the first task was to determine the most suitable texture measure as well as the optimal image analysis scale, i.e., the kernel size (Pinto et al. 2016; Ge et al. 2006). We tested several statistical operations (i.e., mean, median, standard deviation, variance, and entropy, among others) calculated from all the spectral bands using moving windows of given scales, i.e., kernels of 3 by 3, 5 by 5, and 7 by 7 pixels. The optimal scale allows us to identify and map the boundaries of different objects in the image (Ferro and Warner 2002). Our experimental analysis demonstrated that, with a kernel size of 3 by 3 pixels, the mean and standard deviation computed over the red band for RGB images and over the NIR band for multispectral images are convenient settings for classifying the highly heterogeneous crop plots.
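The two ingredients of this approach, moving-window texture measures and a maximum likelihood decision rule, can be sketched as follows. This is a minimal illustration and not the classification code used in this study: it assumes Gaussian class distributions with equal priors, reflect padding at the image borders, and a small regularization term on the covariance; the names `texture_features`, `fit_mlc`, and `predict_mlc` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def texture_features(band, k=3):
    """Moving-window mean and standard deviation with a k-by-k kernel."""
    pad = k // 2
    padded = np.pad(np.asarray(band, dtype=float), pad, mode="reflect")
    windows = sliding_window_view(padded, (k, k))
    return windows.mean(axis=(-2, -1)), windows.std(axis=(-2, -1))

def fit_mlc(X, y):
    """Fit one Gaussian (mean, covariance) per class from training samples.

    X: (n_samples, n_features) feature vectors, y: (n_samples,) class labels.
    """
    model = {}
    for c in np.unique(y):
        Xc = X[y == c]
        cov = np.atleast_2d(np.cov(Xc, rowvar=False))
        cov = cov + 1e-6 * np.eye(X.shape[1])  # keeps the covariance invertible
        model[c] = (Xc.mean(axis=0), np.linalg.inv(cov), np.linalg.slogdet(cov)[1])
    return model

def predict_mlc(model, X):
    """Assign each sample to the class with the highest Gaussian log-likelihood."""
    classes = list(model)
    scores = []
    for c in classes:
        mu, inv_cov, log_det = model[c]
        d = X - mu
        mahalanobis = np.einsum("ij,jk,ik->i", d, inv_cov, d)
        # Log-likelihood up to a constant shared by all classes (equal priors).
        scores.append(-0.5 * (log_det + mahalanobis))
    return np.asarray(classes)[np.argmax(scores, axis=0)]
```

In use, the per-pixel feature vector would stack the spectral bands with the texture layers (for example, the 3 by 3 mean and standard deviation of the NIR band), and the model would be fitted on pixels sampled from labeled training polygons.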

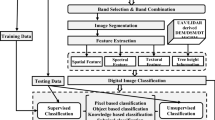

Workflow for UAV imagery

Our workflow for UAV imagery is depicted in Fig. 7. It has three main stages: acquisition, pre-processing, and post-processing. Input data can be acquired with our IMAGRI-CIP system or with a commercial TTC camera. As previously indicated, IMAGRI-CIP yields two independent images, so an image alignment procedure should be performed with WMIA-CIP. Then, ISAM-CIP uses the multispectral images to generate an image mosaic. Finally, post-processing involves NDVI calculation or classification for cropping area estimation. Blocks in yellow represent the steps carried out with the hardware and software developed in this project. Given the flexibility of IMAGRI-CIP to exchange filters and acquire images in different spectral bands, the user might use SpectraCIP to determine the appropriate central wavelength of a new filter.

Workflow for UAV-based imagery. The acquisition stage makes use of a camera system such as IMAGRI-CIP to obtain multispectral images. Pre-processing procedures involve the alignment of multi-sensor images and the stitching of several small-area multispectral images. Finally, post-processing aims at calculating the NDVI over the resulting mosaic or performing crop classification. Blocks in yellow have been implemented in this research and are publicly available for the remote sensing community

Normalized difference vegetation index (NDVI) for potato fields at CIP facilities acquired with IMAGRI-CIP and Tetracam cameras in 2015. Almost simultaneous data collection was carried out with the Mikrokopter UAV at a height of 70 m for five sampling days during August and September 2015. IMAGRI-CIP images were aligned, stitched, and geo-referenced. Similarly, Tetracam images were geo-referenced. Five grids (in colors) were randomly selected for further analysis

Validation of the developed technology

As a demonstration of the feasibility of the developed technology, we conducted an NDVI temporal analysis over potato fields in Lima, Peru. To assess our results, we conducted an identical analysis using images from an ADC-micro Tetracam (TTC) commercial camera as workflow inputs. This experiment was conducted at the International Potato Center (CIP) in Lima, Peru (12.1° S, 77.0° W), from August to September 2015 over potato fields with dimensions of ≈ 70 m × 50 m. Planting was carried out on July 14, 2015. Drought stress was induced on September 1st, a condition that usually causes variations in the NDVI. We used 50 cm × 50 cm square white panels as ground control points (GCP) that were distributed along the border and in the center of the study area. Calibration of the IMAGRI-CIP camera was conducted in an indoor laboratory using the OpenCV calibration tool (see http://www.opencv.org for further details).

During the period August–September 2015, image acquisition was performed five times, and two sets of flight paths were designed with the Mikrokopter UAV, one for each camera. First, we used the TTC camera, and a ground measurement of a reference (Teflon calibration plate) was acquired to get the NDVI values through the Tetracam software PixelWrench2. After that, we assembled the TTC camera to the UAV, and a single image was acquired at a height of 70 m since it could cover the entire area of interest. Second, we assembled the IMAGRI-CIP camera system to the UAV, and four images were acquired to cover the whole crop area at a height of 70 m. A Lambertian surface (Spectralon) was used to do the spectral calibration before and after each flight.

IMAGRI-CIP images were pre-processed for alignment using the WMIA-CIP software and stitched with the ISAM-CIP software (see Section 6). Then, both TTC and IMAGRI-CIP images were geo-referenced using QGIS and the GCP coordinates. Finally, NDVI values were calculated with the formula NDVI = (NIR − RED)/(NIR + RED).
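The NDVI formula above can be sketched in a few lines of Python. This is only an illustration, not the code used in the workflow: the function names `ndvi` and `ndvi_image` and the sample values are hypothetical, and the two bands are assumed to be already aligned and expressed in the same units.

```python
def ndvi(nir, red):
    """Return NDVI = (NIR - RED) / (NIR + RED) for one pixel.

    Returns None when NIR + RED is zero, where the index is undefined.
    """
    total = nir + red
    if total == 0:
        return None
    return (nir - red) / total


def ndvi_image(nir_band, red_band):
    """Apply ndvi() pixel by pixel to two co-registered bands (lists of rows)."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical 2 x 2 band values, for illustration only (not measured data).
nir = [[0.50, 0.40], [0.30, 0.0]]
red = [[0.10, 0.10], [0.30, 0.0]]
print(ndvi_image(nir, red))
```

Pixels where both bands are zero are returned as None so that they can be excluded from later statistics instead of raising a division error.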

Figure 8 shows NDVI values per pixel for crop blocks of ≈ 40 m × 40 m acquired with both IMAGRI-CIP (first row) and TTC (second row) cameras. Five grids were randomly selected (shown in colors) to conduct the comparative assessment between the two camera systems. For each grid, four sub-grid columns containing NDVI values were analyzed. Their mean and standard deviations were used to compare the multispectral camera performance.

As an example, results from the statistical analysis for the red grid for August 17, 2015, are shown in Table 1. Every column or sub-grid was identified with a number from 1 to 4 (located in Fig. 8 from left to right within a grid), and their mean (μ) and standard deviation (σ) were calculated. Each sub-grid contained ≈ 75 pixels.
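The per-sub-grid statistics described above could be computed along the following lines. This is a minimal sketch under stated assumptions: the helper `subgrid_stats` is hypothetical, the grid is split into four equal vertical strips, the population standard deviation is used (the text does not say which estimator was applied), and the sample values are invented for illustration.

```python
from statistics import mean, pstdev


def subgrid_stats(grid, n_columns=4):
    """Split a grid (list of rows) into vertical strips; return (mean, std) per strip."""
    width = len(grid[0])
    if width % n_columns != 0:
        raise ValueError("grid width must be divisible by n_columns")
    strip = width // n_columns
    stats = []
    for k in range(n_columns):
        # Collect every value that falls inside strip k, across all rows.
        values = [v for row in grid for v in row[k * strip:(k + 1) * strip]]
        stats.append((mean(values), pstdev(values)))
    return stats


# Hypothetical 2 x 8 NDVI grid, for illustration only (not measured data).
grid = [
    [0.70, 0.72, 0.60, 0.62, 0.50, 0.52, 0.40, 0.42],
    [0.74, 0.76, 0.64, 0.66, 0.54, 0.56, 0.44, 0.46],
]
for number, (mu, sigma) in enumerate(subgrid_stats(grid), start=1):
    print(f"sub-grid {number}: mean={mu:.3f}, std={sigma:.3f}")
```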

In addition, we conducted a temporal analysis of the mean and standard deviation of NDVI values per grid (see Fig. 9). Here, the solid red line and red shaded region represent the mean and standard deviation of NDVI estimates using the TTC camera. Similarly, the solid blue line and blue shaded region are associated with the mean and standard deviation of the NDVI estimates using the IMAGRI camera system. NDVI variations as a function of the day of the year (DOY) evidenced phenological changes in the crop. In all cases, we can observe an NDVI peak value around DOY = 250. After this point, recorded NDVI decrements were associated with the onset of senescence and with the drought stress induced from September 1 (DOY = 244).
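For readers who want to map the calendar dates of the experiment to the DOY axis, the conversion can be done with the Python standard library. The helper name `day_of_year` is hypothetical.

```python
from datetime import date


def day_of_year(d):
    """Return the day of the year (1 to 366) for a datetime.date."""
    return d.timetuple().tm_yday


print(day_of_year(date(2015, 7, 14)))  # planting date -> 195
print(day_of_year(date(2015, 9, 1)))   # onset of induced drought stress -> 244
```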

NDVI values from IMAGRI-CIP and Tetracam images. Mean NDVI and standard deviations are depicted for the following: a red grid, b green grid, c yellow grid, d blue grid, and e orange grid, respectively. The solid red line and red shaded region represent the mean and standard deviation of NDVI estimations using the Tetracam camera. Similarly, the solid blue line and blue shaded regions depict the mean and standard deviation of NDVI estimations using the IMAGRI camera system. At DOY = 244, induced drought stress starts. As a result, we can observe a reduction of NDVI value after DOY = 250 for all the cases

Finally, we calculated the time-dependent (5 measurements throughout the season) correlation of the mean NDVI estimations using both TTC and IMAGRI-CIP for each colored grid. Table 2 reports these results. All correlation values are greater than 0.8, which indicates that the IMAGRI-CIP camera system performs comparably to the commercial camera. Further analysis is needed to determine how the remaining differences between the two systems affect more complex NDVI-based studies such as agricultural stress identification, irrigation water-efficiency, and prediction of phenological stages, among others.
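A correlation of this kind can be sketched as follows, assuming the Pearson coefficient is meant (the text does not name the estimator). The function `pearson_r` and the two five-point series are hypothetical placeholders, not the values reported in Table 2.

```python
from math import sqrt


def pearson_r(x, y):
    """Return the Pearson correlation coefficient between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


# Hypothetical mean NDVI per sampling day for one grid (illustration only).
ttc_mean = [0.55, 0.62, 0.70, 0.74, 0.60]
imagri_mean = [0.50, 0.60, 0.66, 0.72, 0.58]
print(round(pearson_r(ttc_mean, imagri_mean), 3))
```

With only five sampling days per grid, the coefficient is sensitive to a single outlying date, which is one reason a longer assessment period would be informative.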

Example of technology application in the smallholder farming context

The purpose of this “proof-of-concept” project was to determine whether the technology could generate information that would ultimately benefit smallholder farmers. For this, we used a translational research approach that linked technology development, application by scientists, and understanding by potential users. As a result of these interactions (further described in the Discussion Section), we identified that smallholder farmers require the following: (1) an accurate spatial indicator of crop status that allows them to determine management strategies, and (2) crop statistics in terms of crop area quantification for the several cultivated species. In the previous section, we described the response to the first requirement, where a two-month NDVI analysis of controlled plots demonstrated that our camera system provides NDVI values similar to those of a commercial camera. In this Section, we focus on crop area determination in a smallholder scenario and test our classification algorithms.

This field work was conducted in Kilosa, Tanzania (6.86° S, 36.98° E, in a plot of ≈ 1.5 ha), and was supported by the Tanzania National Bureau of Statistics (NBS), the Sugarcane Research Institute (SRI - Kibaha), the Sokoine University of Agriculture (SUA), and the Kilosa district office. We used the commercial Mikrokopter UAV with RGB and TTC cameras in seven independent ≈ 15 min flights, which were carried out on April 29, 2016. The RGB camera is a Canon EOS 100D with 10.1 Megapixel (effective pixels). Each flight path was designed to cover the total study area, and the selected height was 80 m for the RGB camera and 65 m for the TTC camera. A total of 40 and 45 images were acquired with the RGB and TTC cameras, respectively. Then, the images were geo-referenced with QGIS software and stitched with the ISAM-CIP software. The resulting RGB mosaic is shown in Fig. 10, whereas the TTC false-color mosaic, composed of the NIR, red and green bands, is shown in Fig. 11.

Supervised classification for both RGB and TTC mosaics was performed using the Maximum Likelihood (ML) method, including statistical textures as a complement to the original bands to create more robust feature vectors. Thus, the mean and standard deviation were calculated for the red and NIR bands from the RGB and TTC mosaics, respectively, using a kernel size of 3 × 3 pixels. The following classes were discriminated: mango, banana, grassland, cowpeas, sunflower, sweet potato, maize 1 (around 2 weeks after planting), maize 2 (about 1 month after planting), paddy rice, rice 1 (around 2 weeks after planting), rice 2 (approximately 1 month after planting), bare soil and road. Training sites were used for classification with the Maximum Likelihood method and validation sites for testing its accuracy. Finally, to quantify the improvement gained from textures, classifications using only spectral bands and classifications that also included statistical textures were compared.
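The statistical textures mentioned above (local mean and standard deviation in a 3 × 3 window) can be illustrated with the sketch below. It is not the implementation used for the mosaics: the function `texture_features` is hypothetical, the population standard deviation is assumed, and border pixels are simply left undefined.

```python
from statistics import mean, pstdev


def texture_features(band, size=3):
    """Return (local_mean, local_std) images computed over a size x size window.

    Border pixels, where the window does not fit, are left as None.
    """
    half = size // 2
    rows, cols = len(band), len(band[0])
    local_mean = [[None] * cols for _ in range(rows)]
    local_std = [[None] * cols for _ in range(rows)]
    for i in range(half, rows - half):
        for j in range(half, cols - half):
            # Gather the size x size neighbourhood centred on pixel (i, j).
            window = [
                band[a][b]
                for a in range(i - half, i + half + 1)
                for b in range(j - half, j + half + 1)
            ]
            local_mean[i][j] = mean(window)
            local_std[i][j] = pstdev(window)
    return local_mean, local_std


# Hypothetical 3 x 3 band of digital numbers, for illustration only.
band = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
mean_img, std_img = texture_features(band)
print(mean_img[1][1], round(std_img[1][1], 3))
```

A per-pixel feature vector for the classifier would then combine the original band values with the local mean and standard deviation at the same position.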

The results of the classification of the RGB mosaic are depicted in Fig. 12, where the 13 different land cover classes were identified. Unclassified pixels are shown as white pixels and account for less than 10% of the total image. Similarly, results for the classification of the TTC mosaic are shown in Fig. 13, where unclassified pixels were less than 2% of the total number of input pixels. Moreover, an error matrix that includes accuracy and the kappa coefficient was calculated for both RGB and TTC classification procedures (see Table 3). The complete confusion matrix is provided as supplementary information.
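Overall accuracy and the kappa coefficient are both derived from the confusion matrix. The sketch below uses the standard definition of Cohen's kappa; the function name `accuracy_and_kappa` and the two-class matrix are hypothetical and do not reproduce the values in Table 3.

```python
def accuracy_and_kappa(matrix):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    matrix[i][j] is the number of validation pixels of reference class i
    that the classifier assigned to class j.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    observed = sum(matrix[i][i] for i in range(n)) / total
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical two-class confusion matrix, for illustration only.
example = [[45, 5], [10, 40]]
overall, kappa = accuracy_and_kappa(example)
print(f"overall accuracy = {overall:.2f}, kappa = {kappa:.2f}")
```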

Discussions

Translational research in the smallholder farming context

This research aimed at contributing to the development of a low-cost UAV-based agricultural remote sensing information system to survey crops in sampling areas of smallholder farming. The work was performed in East Africa in a multi-stage fashion. The first stage was an inception workshop carried out in October 2014 in Nairobi, Kenya, which engaged stakeholders from several African countries such as Tanzania, Uganda, and Kenya, East African organizations, and six international agricultural research centers with headquarters in Africa. It was an occasion for developers to present state-of-the-art drone-based remote sensing tools for agricultural applications. The workshop facilitated the development of the collaborative networks needed for fieldwork testing and identified the key features stakeholders wanted in a low-cost UAV-based system.

The second stage focused on the development of the UAV-based technology. It was conducted in collaboration with universities and national agricultural institutions in Kenya, Tanzania, Uganda, and Rwanda, which facilitated field-testing and promoted innovation and capacity building on open-access software and low-cost hardware development in the region.

In the third stage, another workshop was held in June 2016, where CIP’s team reported back to the stakeholders participating in the community of practice. They had the opportunity to monitor the work progress, assess the products, and provide feedback (James 2018).

Multispectral camera system assessment

Results of the comparison between the multispectral images acquired by the TTC and IMAGRI camera systems for NDVI assessment under controlled experimental conditions in Peru indicated that the contrast and clarity of the images are comparable, as shown in Fig. 8. Correlation coefficients greater than 0.8 (see Fig. 9 and Table 2) confirmed that the IMAGRI system could estimate NDVI values that approximate those obtained by the commercial TTC camera. IMAGRI-CIP comprises two independent cameras (NIR and red), each with its own exposure time setting, and each camera acquires an image of a reference surface (Spectralon) on the ground. The exposure time is set as the time needed for the camera to reach 80% of the maximum DN (255 for 8 bits), which increases the signal-to-noise ratio. On the other hand, the TTC camera has only one optical sensor, which also determines the integration time of the red and green images, since only the NIR image is computed with a high dynamic range. For this reason, the TTC camera sets its optimal exposure time based on the NIR input, which might saturate the sensor faster. It is therefore reasonable that the NDVI estimates of the two multispectral systems are not necessarily the same. Conceptually, IMAGRI-CIP provides data with a higher signal-to-noise ratio and might improve the measurement of NDVI values.
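The 80% exposure criterion can be made concrete with a short calculation. For an 8-bit sensor the largest digital number is 255, so the target reading over the reference surface is about 204. The sketch below additionally assumes that the DN grows linearly with exposure time and that the test reading is not saturated; this linear model, the function names, and the sample numbers are assumptions made for illustration and are not taken from the IMAGRI-CIP procedure.

```python
def target_dn(bit_depth=8, fraction=0.8):
    """Return the DN that corresponds to a fraction of the sensor maximum."""
    max_dn = 2 ** bit_depth - 1  # 255 for an 8-bit sensor
    return fraction * max_dn


def scaled_exposure(current_exposure_ms, observed_dn, bit_depth=8, fraction=0.8):
    """Estimate the exposure time that would bring the reference to the target DN.

    Assumes a linear sensor response and an unsaturated reading (hypothetical model).
    """
    if observed_dn <= 0:
        raise ValueError("observed_dn must be positive")
    return current_exposure_ms * target_dn(bit_depth, fraction) / observed_dn


# Hypothetical test shot: 2.0 ms exposure gives a DN of 102 over the reference panel.
print(target_dn())                 # target DN for 8 bits
print(scaled_exposure(2.0, 102))   # suggested exposure in ms
```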

Moreover, an advantage of the IMAGRI system is its capacity to adapt to and measure different regions of the light spectrum. In this context, the innovative SpectraCIP software helps to analyze spectral signatures of the vegetation canopy at ground level. With this information, vegetation can be studied under different types of abiotic and biotic stress conditions, making it possible to identify specific spectral regions responding to particular problems affecting the plants. The user can select the required optical filters for an IMAGRI-type camera system and register the information needed for building different vegetation indexes, e.g., the Photochemical Reflectance Index (PRI), which makes use of reflectance values at 531 nm and 570 nm (Alonso et al. 2017). However, the IMAGRI-based NDVI results reported here should, of course, be considered preliminary, and firm conclusions regarding the differences with the commercial TTC camera require further analysis that may include longer assessment periods. It is noteworthy that in Candiago et al. (2015), a similar study using TETRACAM cameras was performed, and the authors indicated that the lack of ground radiometric measures precluded the conversion of digital number (DN) to reflectance values.
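As an illustration of how a second index could be built from two filter bands, the sketch below uses a commonly cited definition of the PRI, (R531 − R570)/(R531 + R570). The sign convention varies in the literature, so this form is an assumption, and the function name `pri` and the reflectance values are hypothetical.

```python
def pri(r531, r570):
    """Return PRI = (R531 - R570) / (R531 + R570) from two reflectance values.

    Returns None when the denominator is zero, where the index is undefined.
    """
    total = r531 + r570
    if total == 0:
        return None
    return (r531 - r570) / total


# Hypothetical canopy reflectances at 531 nm and 570 nm (illustration only).
print(pri(0.04, 0.06))
```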

Evaluation of image stitching software in comparison with commercial solutions

Image stitching by our open-source software ISAM-CIP, based on identified features in the scenes, was quite satisfactory. Feature detection is achieved using the free-license Oriented FAST and Rotated BRIEF (ORB) algorithm. However, the literature describes two more effective methods for this task, with a lower computational cost and a higher rate of feature detection: the Scale Invariant Feature Transform (SIFT) and the Speeded-Up Robust Features (SURF) (Rublee et al. 2011). One well-known stitching software based on SIFT is AutoStitch (Brown and Lowe 2007), which is robust to scale and orientation variations as well as to different illumination conditions. Its demo version (freely available) allows the user to get a mosaic from three-band images with excellent seamless results. Its full use, however, is subject to a contract. In comparison, an advantage of ISAM-CIP is that it is open-access and tailored for agricultural applications rather than for general purposes. Thus, users are able to select the best spectral band for the stitching process, e.g., by using only NIR images to determine features in the scene, as the NIR band provides significant texture information from crops.

UAV versus satellite debate

UAV-based remote sensing systems can provide very high-resolution images that can be used for precise analysis and identification of affected crop regions in the scene. The work presented in Stratoulias et al. (2017), also conducted in African countries, points out that limitations of Sentinel-2 imagery are determined by its coarse spatial resolution, with a ground sampling distance (GSD) of 10 m. Image segmentation and spatial filtering of trees, as required by that study, could not be confidently quantified. Furthermore, it is also stated that the typical climatic conditions of the tropical savanna caused frequent cloud coverage, which precluded the acquisition of high-quality data. Thus, UAVs provide a reliable alternative when it comes to gathering information in a faster and inexpensive way. However, the main drawbacks of UAVs are the required logistics and expertise. While a satellite can independently cover the site of interest once or twice per day acquiring images, a group of at least two people should usually travel by car to the site. A driver and a UAV pilot with some technical expertise (for battery charging and calibration settings) should participate in a campaign that can take several hours or even days. Also, rainy weather can hamper an entire image acquisition campaign. Indeed, satellites can provide a large amount of data that can be collected quickly, covering vast areas. For example, Landsat 8 and Sentinel-2 can provide useful data for agricultural applications, and current studies (Stratoulias et al. 2017; Lebourgeois et al. 2017; Mansaray et al. 2017) are focused on obtaining useful information for decision-making. High-resolution images registered with UAV platforms can be used for a “micro” analysis where high spatial and temporal resolution is needed. Merging these two approaches, i.e., integrating very high-resolution UAV data with open-software satellite-data processors to increase the effectiveness of classification and decision-making, seems to be the way forward.

Future of commercial solutions

Commercial remote sensing technologies with agriculture applications are becoming more robust. A plethora of new companies that use UAVs as platforms provide users with several technological options. For example, multispectral cameras such as the TETRACAM ADC Snap or the MICASENSE RedEdge-M are lightweight designs intended to be flown on UAVs. In addition, the RedEdge-M is built to capture 5-band images and is one of the most used cameras nowadays because it allows several vegetation indexes to be estimated; its price is ≈ USD $4,900 without shipping fees. In the image pre- and post-processing domain, there are also numerous solutions such as Pix4D and Agisoft. Pix4D is fully final-user oriented and provides the option to upload acquired images to its “cloud” for further processing. Its use requires a yearly or monthly subscription with an average price of USD $3,500 per year. Certainly, the costs of these technologies are still high and out of reach for applications intended to support professionals advising smallholder farmers. Moreover, most commercial hardware is designed to be used with the company’s software, which is not always included in the package and often must be purchased separately. The options presented here do not intend to compete with commercial technologies in the market but aim at providing low-cost specific solutions to final users who are interested in a do-it-yourself approach where they can replicate and improve what has been developed.

As a final remark, efforts made by companies and scientists to make technology accessible in terms of cost will be hampered if the capacity to assemble drones, repair hardware, collect data, and interpret the data for decision-making is left behind. Building those capacities on site was one of our most significant accomplishments.

Conclusions

The open-source tools developed in this research are freely available to researchers and advisers serving smallholder farmers for the analysis of vegetation parameters such as NDVI or cropping area. These tools, of course, have commercial counterparts with higher prices, and the work presented here focused not only on reducing those costs but also on giving the scientific and agricultural communities the “know-how” and the skills to improve their capabilities.

The open-software tools developed during this research (SpectraCIP, ISAM-CIP, and WMIA-CIP) can be freely downloaded from our website. We encourage scientists and other users to test the software and provide us with feedback, which will be useful to improve the tools. Open-hardware information for replication can also be provided by direct communication with the corresponding author. Available information includes blueprints for 3-D printed plastic platforms and selection criteria for the camera and optical systems. In addition, the dataset, i.e., > 500 multispectral images acquired in the Kilosa, Tanzania experiment, can be freely downloaded from Loayza et al. (2017b).

UAV-based technology is a new frontier in the agricultural sector and brings the ability to acquire data with unprecedented precision. A lot of work is, however, required to make the technology more accessible to users to allow them to gather accurate data without incurring higher costs. Training and advocacy are needed to make UAV-based remote sensing a regular tool for gathering agricultural data.

References

Alonso L, Van Wittenberghe S, Amorós-López J, Vila-Francés J, Gómez-Chova L, Moreno J (2017) Remote Sensing 9(8). https://doi.org/10.3390/rs9080770. https://www.mdpi.com/2072-4292/9/8/770

Brown M, Lowe DG (2007) . Int J Comput Vis 74(1):59. https://doi.org/10.1007/s11263-006-0002-3

Candiago S, Remondino F, De Giglio M, Dubbini M, Gattelli M (2015) . Remote Sens 7:4026. https://doi.org/10.3390/rs70404026

Chauhan RPS, Dwivedi R, Negi S (2012) . IJAIS Int J Appl Inf Syst 4:40. https://doi.org/10.5120/ijais12-450662

Chavez P, Yarleque C, Loayza H, Mares V, Hancco P, Priou S, Marquez P, Posadas A, Zorogastua P, Flexas J, Quiroz R (2012) . Precis Agric 13:236

Cooley T, Anderson G, Felde G, Hoke M, Ratkowski A, Chetwynd J, Gardner J, Adler-Golden S, Matthew M, Berk A, Bernstein L, Acharya P, Miller D, Lewis P (2002) . IGARSS IEEE Int Geosci Remote Sens Symp 3:1414. https://doi.org/10.1109/IGARSS.2002.1026134

Craig M, Atkinson D (2013) A literature review of crop area estimation. http://www.fao.org/fileadmin/templates/ess/documents/meetings_and_workshops/GS_SAC_2013/Improving_methods_for_crops_estimates/Crop_Area_Estimation_Lit_review.pdf

Dadhwal V, Singh R, Dutta S, Parihar J (2002) . Tropic Ecol 43(1):107

Ferro CJS, Warner TA (2002) . ASPRS American Society for Photogrammetry and Remote Sensing 68:51

Ge S, Carruthers R, Gong P, Herrera A (2006) . Environ Monit Assess 114:65. https://doi.org/10.1007/s10661-006-1071-z

Hanuschak G, Delincé J, Unit A (2004) In: Proceedings of the 3rd World Conference on Agricultural and Environmental Statistical Application. Cancun, Mexico, pp 2–4

James H (2018) Ethical tensions from new technology: the case of agricultural biotechnology (CABI 2018)

Laliberte AS, Rango A (2009) . IEEE Trans Geosci Remote Sens 47:761. https://doi.org/10.1109/TGRS.2008.2009355

Le Moigne J, Cambell W, Cromp R (2002) . IEEE Trans Geosci Remote Sens 40:1849. https://doi.org/10.1109/TGRS.2002.802501

Le Moigne J, Zavorin L, Stone H (2011) Image registration for remote sensing, vol 40. Cambridge University Press, Cambridge

Lebourgeois V, Dupuy S, Vintrou E, Ameline M, Butler S, Begue A (2017) Remote Sensing 9. https://doi.org/10.3390/rs9030259

Loayza H, Cucho-Padin G, Balcazar M (2017) SpectraCIP. https://doi.org/10.21223/P3/2OWKHR

Loayza H, Cucho-Padin G, Palacios S (2017a) ISAM: image stitching for aerial images. https://doi.org/10.21223/P3/6X6HNC

Loayza H, Silva L, Palacios S, Balcazar M, Cheruiyot E, Quiroz R (2017b) Dataset for: Low-cost UAV-based agricultural remote sensing platform (UAV-ARSP) for surveying crop statistics in sampling areas. https://doi.org/10.21223/P3/J2QZCH

Lourakis M, Argyros A (2009) . ACM Trans Math Softw 36:1. https://doi.org/10.1145/1486525.1486527

Mansaray L, Huang W, Zhang D, Huang J, Li J (2017) Remote Sensing 9. https://doi.org/10.3390/rs9030257

Moran M, Inoue Y, Barnes E (1997) . Remote Sens Environ 61:319. https://doi.org/10.1016/S0034-4257(97)00045-X

Palacios S, Loayza H, Quiroz R (2019) Dataset for: Wavelet-based Multispectral Image Aligment (WMIA-CIP). https://doi.org/10.21223/9ANIYM

Pinto LS, Ray A, Reddy MU, Perumal P, Aishwarya P (2016) Proceedings of the IEEE international conference on recent trends in electronics, Information & Communication Technology (RTEICT), pp 825–828. https://doi.org/10.1109/RTEICT.2016.7807942

Rahman H, Dedieu G (1994) . Int J Remote Sens 15:123. https://doi.org/10.1080/01431169408954055

Rouse J, Haas R, Deering D, Schell J (1973) In: NASA/GSFC, Final Report, pp 1–137

Rublee E, Rabaud V, Konolige K, Bradski G (2011) International Conference on Computer Vision (ICCV 2011). pp 2564–2571. https://doi.org/10.1109/ICCV.2011.6126544

Selesnick H, Baraniuk R, Kingsbury N (2005) . IEEE Signal Proc Mag 22:123. https://doi.org/10.1109/MSP.2005.1550194

Sentinel application platform. https://step.esa.int/main/toolboxes/snap/. Accessed October 23, 2019

Stone H, Le Moigne M, McGuire J (1999) . IEEE Trans Pattern Anal Mach Intell 21:1074. https://doi.org/10.1109/34.799911

Stratoulias D, Tolpekin V, De By R, Zurita-Milla R, Retsios V, Bijker W, Hasan M, Vermote E (2017) Remote Sensing 9. https://doi.org/10.3390/rs9101048

Technology quarterly: Taking flight - Civilian drones. The Economist (2017). https://www.economist.com/technology-quarterly/2017-06-08/civilian-drones

Technical centre for agricultural and rural cooperation. Drones for agriculture, Wageningen, The Netherlands, 2016, ICT Update (82) CTA (2016). https://hdl.handle.net/10568/89779

Toth C, Jóźków G (2016) . ISPRS J Photogramm Remote Sens 115:22. https://doi.org/10.1016/j.isprsjprs.2015.10.004. http://www.sciencedirect.com/science/article/pii/S0924271615002270. Theme issue ’State-of-the-art in photogrammetry, remote sensing and spatial information science’

Triggs B, McLauchlan P, Hartley R, Fitzgibbon A (1999) Proceedings of the International Workshop on Vision Algorithms: theory and practice, pp 298–372. https://doi.org/10.1007/3-540-44480-7_21

Wang X, Yang W, Wheaton A, Cooley N, Moran B (2010) . Comput Electron Agric 8:230. https://doi.org/10.1016/j.compag.2010.08.004

Wolfenson K (2013) Coping with the food and agriculture challenge: smallholders’ agenda. Food and Agriculture Organization of the United Nations, Rome, Italy. http://www.fao.org/family-farming-2014/resources/publication-detail/en/item/224468/icode/

Wu B, Meng J, Li Q, Yan N, Du X, Zhang M (2014) . Int J Digit Earth 7:113. https://doi.org/10.1080/17538947.2013.821185

Yang C (2012) . Comput Electron Agric 88:13. https://doi.org/10.1016/j.compag.2012.07.003

Zhang Z (2000) . IEEE Trans Pattern Anal Mach Intell 22:1330. https://doi.org/10.1109/34.888718

Zorogastua P, Quiroz R, Potts M, Namanda S, Mares V, Claessens L (2007) Utilization of high-resolution satellite images to improve statistics for the sweetpotato cultivated area of Kumi District, Uganda. Working Paper No. 2007-5 for Natural Resources Management Division CIP

Acknowledgments

We thank our colleagues Elijah Cheruyot and Arnold Bett from the University of Nairobi and Luis Silva from the International Potato Center, who provided insight and expertise that greatly assisted the research. We would also like to show our gratitude to Dr. Corinne Valdivia (University of Missouri) for leading the translational research component of the Project and for establishing the community of practice that made the work possible. The authors are indebted to Dr. Victor Mares for his critical comments and contributions to the refinement of the manuscript.

Funding

This research conducted by the International Potato Center was supported by The Bill and Melinda Gates Foundation Project OPP1070785.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cucho-Padin, G., Loayza, H., Palacios, S. et al. Development of low-cost remote sensing tools and methods for supporting smallholder agriculture. Appl Geomat 12, 247–263 (2020). https://doi.org/10.1007/s12518-019-00292-5

DOI: https://doi.org/10.1007/s12518-019-00292-5