Abstract

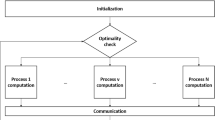

This paper describes a general purpose method for solving convex optimization problems in a distributed computing environment. In particular, if the problem data includes a large linear operator or matrix \(A\), the method allows for handling each sub-block of \(A\) on a separate machine. The approach works as follows. First, we define a canonical problem form called graph form, in which we have two sets of variables related by a linear operator \(A\), such that the objective function is separable across these two sets of variables. Many types of problems are easily expressed in graph form, including cone programs and a wide variety of regularized loss minimization problems from statistics, like logistic regression, the support vector machine, and the lasso. Next, we describe graph projection splitting, a form of Douglas–Rachford splitting or the alternating direction method of multipliers, to solve graph form problems serially. Finally, we derive a distributed block splitting algorithm based on graph projection splitting. In a statistical or machine learning context, this allows for training models exactly with a huge number of both training examples and features, such that each processor handles only a subset of both. To the best of our knowledge, this is the only general purpose method with this property. We present several numerical experiments in both the serial and distributed settings.

References

[1] Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

[2] Luenberger, D.G.: Optimization by Vector Space Methods. Wiley, New York (1969)

[3] Nesterov, Y., Nemirovskii, A.: Interior-Point Polynomial Methods in Convex Programming. SIAM, Philadelphia (1994)

[4] Ben-Tal, A., Nemirovski, A.: Lectures on Modern Convex Optimization: Analysis, Algorithms, and Engineering Applications. SIAM, Philadelphia (2001)

[5] Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

[6] Wen, Z., Goldfarb, D., Yin, W.: Alternating direction augmented Lagrangian methods for semidefinite programming. Math. Program. Comput. 2(3), 203–230 (2010)

[7] Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 58(1), 267–288 (1996)

[8] Intensity Modulated Radiation Therapy Collaborative Working Group: Intensity-modulated radiotherapy: current status and issues of interest. Int. J. Radiat. Oncol. Biol. Phys. 51(4), 880–914 (2001)

[9] Webb, S.: Intensity-Modulated Radiation Therapy. Taylor & Francis (2001)

[10] Moreau, J.J.: Fonctions convexes duales et points proximaux dans un espace Hilbertien. Rep. Paris Acad. Sci. Ser. A 255, 2897–2899 (1962)

[11] Parikh, N., Boyd, S.: Proximal algorithms. Found. Trends Optim. 1(3), 1–108 (2013). To appear

[12] Eckstein, J., Ferris, M.C.: Operator-splitting methods for monotone affine variational inequalities, with a parallel application to optimal control. INFORMS J. Comput. 10(2), 218–235 (1998)

[13] Yang, J., Zhang, Y.: Alternating direction algorithms for \(\ell_1\)-problems in compressive sensing. SIAM J. Sci. Comput. 33(1), 250–278 (2011)

[14] Yuan, M., Lin, Y.: Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B (Statistical Methodology) 68(1), 49–67 (2006)

[15] Ohlsson, H., Ljung, L., Boyd, S.: Segmentation of ARX-models using sum-of-norms regularization. Automatica 46(6), 1107–1111 (2010)

[16] Agarwal, A., Chapelle, O., Dudik, M., Langford, J.: A reliable effective terascale linear learning system. arXiv:1110.4198 (2011)

[17] Grant, M., Boyd, S.: CVX: Matlab software for disciplined convex programming (2008). http://cvxr.com/cvx

[18] Sturm, J.: Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones. Optim. Methods Softw. 11(1–4), 625–653 (1999)

[19] Toh, K.C., Todd, M.J., Tütüncü, R.H.: SDPT3: a MATLAB software package for semidefinite programming, version 1.3. Optim. Methods Softw. 11(1–4), 545–581 (1999)

[20] CVX Research, Inc.: CVX: Matlab software for disciplined convex programming, version 2.0 beta. http://cvxr.com/cvx/examples (2012). Example library

[21] Whaley, R.C., Dongarra, J.J.: Automatically tuned linear algebra software. In: Proceedings of the 1998 ACM/IEEE Conference on Supercomputing (CDROM), pp. 1–27 (1998)

[22] Vanderbei, R.J.: Symmetric quasi-definite matrices. SIAM J. Optim. 5(1), 100–113 (1995)

[23] Saunders, M.A.: Cholesky-based methods for sparse least squares: the benefits of regularization. In: Adams, L., Nazareth, J.L. (eds.) Linear and Nonlinear Conjugate Gradient-Related Methods, pp. 92–100. SIAM, Philadelphia (1996)

[24] Davis, T.A.: Algorithm 8xx: a concise sparse Cholesky factorization package. ACM Trans. Math. Softw. 31(4), 587–591 (2005)

[25] Amestoy, P., Davis, T.A., Duff, I.S.: An approximate minimum degree ordering algorithm. SIAM J. Matrix Anal. Appl. 17(4), 886–905 (1996)

[26] Amestoy, P., Davis, T.A., Duff, I.S.: Algorithm 837: AMD, an approximate minimum degree ordering algorithm. ACM Trans. Math. Softw. 30(3), 381–388 (2004)

[27] Davis, T.A.: Direct Methods for Sparse Linear Systems. SIAM, Philadelphia (2006)

[28] Benzi, M., Meyer, C.D., Tuma, M.: A sparse approximate inverse preconditioner for the conjugate gradient method. SIAM J. Sci. Comput. 17(5), 1135–1149 (1996)

[29] Benzi, M.: Preconditioning techniques for large linear systems: a survey. J. Comput. Phys. 182(2), 418–477 (2002)

[30] Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. Johns Hopkins University Press, Baltimore (1996)

[31] Wilkinson, J.H.: Rounding Errors in Algebraic Processes. Dover (1963)

[32] Moler, C.B.: Iterative refinement in floating point. J. ACM 14(2), 316–321 (1967)

Acknowledgments

We thank Eric Chu for many helpful discussions, and for help with the IMRT example (including providing code for data generation and plotting results). Michael Grant provided advice on integrating a new cone solver into CVX. Alex Teichman and Daniel Selsam gave helpful comments on an early draft. We also thank the anonymous referees and Kim-Chuan Toh for much helpful feedback.

Additional information

N. Parikh was supported by a National Science Foundation Graduate Research Fellowship under Grant No. DGE-0645962.

Appendices

Appendix A: Implementing graph projections

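Evaluating the projection \(\Pi _A(c,d)\) involves solving the problem

\[
\begin{array}{ll}
\text{minimize} & \tfrac{1}{2}\|x - c\|_2^2 + \tfrac{1}{2}\|y - d\|_2^2 \\
\text{subject to} & y = Ax,
\end{array}
\]

with variables \(x \in \mathbf{R}^n\) and \(y \in \mathbf{R}^m\).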

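This can be reduced to solving the KKT system

\[
\begin{bmatrix} I & 0 & A^T \\ 0 & I & -I \\ A & -I & 0 \end{bmatrix}
\begin{bmatrix} x \\ y \\ \lambda \end{bmatrix}
=
\begin{bmatrix} c \\ d \\ 0 \end{bmatrix},
\]

where \(\lambda \) is the dual variable corresponding to the constraint \(y = Ax\). The second block row gives \(\lambda = y - d\); substituting this into the first block row and simplifying gives the system

\[
\begin{bmatrix} I & A^T \\ A & -I \end{bmatrix}
\begin{bmatrix} x \\ y \end{bmatrix}
=
\begin{bmatrix} c + A^T d \\ 0 \end{bmatrix}. \tag{10}
\]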

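In fact, writing the righthand side as a linear function of \((c, d)\) shows that solving the system simply involves applying a linear operator, as in (6):

\[
\begin{bmatrix} x \\ y \end{bmatrix}
=
\begin{bmatrix} I & A^T \\ A & -I \end{bmatrix}^{-1}
\begin{bmatrix} I & A^T \\ 0 & 0 \end{bmatrix}
\begin{bmatrix} c \\ d \end{bmatrix}.
\]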

The coefficient matrix of (10), which we refer to as \(K\), is quasidefinite [22] because its (1,1) block is diagonal positive definite and its (2,2) block is diagonal negative definite. Thus, evaluating a graph projection such as \(\Pi _{ij}\) involves solving a symmetric quasidefinite linear system. There are many approaches to this problem; we mention a few below.
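As a quick sanity check of the reduction, the sketch below (Python with NumPy; the helper name `graph_project_kkt` is ours, not from the paper) forms \(K\) for a small dense \(A\), solves (10) with a general dense solver that ignores the quasidefinite structure, and confirms that the result lies on the graph of \(A\). It also counts the signs of the eigenvalues of \(K\): by congruence with \(\mathrm{diag}(I, -(I + AA^T))\), a quasidefinite \(K\) of this form has exactly \(n\) positive and \(m\) negative eigenvalues.

```python
import numpy as np

def graph_project_kkt(A, c, d):
    """Project (c, d) onto {(x, y) : y = A x} by solving the reduced system (10).

    Illustrative helper: uses a general dense solver on K = [[I, A^T], [A, -I]].
    """
    m, n = A.shape
    K = np.block([[np.eye(n), A.T],
                  [A, -np.eye(m)]])
    rhs = np.concatenate([c + A.T @ d, np.zeros(m)])
    sol = np.linalg.solve(K, rhs)
    return sol[:n], sol[n:], K

rng = np.random.default_rng(0)
m, n = 3, 5
A = rng.standard_normal((m, n))
c, d = rng.standard_normal(n), rng.standard_normal(m)
x, y, K = graph_project_kkt(A, c, d)

print(np.allclose(y, A @ x))              # True: the point lies on the graph of A
eig = np.linalg.eigvalsh(K)
print((eig > 0).sum(), (eig < 0).sum())   # 5 3: n positive, m negative eigenvalues
```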

A.1 Factor-solve methods

Block elimination. By eliminating \(x\) from (10) and then solving for \(y\), we can solve (10) using the two steps

\[
y := (I + AA^T)^{-1}(Ac + AA^T d), \qquad x := c + A^T(d - y). \tag{11}
\]

If instead we eliminate \(y\) and then solve for \(x\), we obtain the steps

\[
x := (I + A^T A)^{-1}(c + A^T d), \qquad y := Ax. \tag{12}
\]

The main distinction between these two approaches is that the first involves solving a linear system with coefficient matrix \(I + AA^T \in \mathbf{R}^{m \times m}\) while the second involves the coefficient matrix \(I + A^T A \in \mathbf{R}^{n \times n}\).
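A minimal NumPy sketch of the two elimination variants follows. The function names are ours, and `np.linalg.solve` stands in for whatever factorization one would actually use. Variant (11) solves with the \(m \times m\) matrix \(I + AA^T\); variant (12) solves with the \(n \times n\) matrix \(I + A^T A\). Both return the same projection, which is easy to confirm numerically:

```python
import numpy as np

def project_eliminate_x(A, c, d):
    """Steps (11): solve with the m-by-m matrix I + A A^T, then recover x."""
    m = A.shape[0]
    y = np.linalg.solve(np.eye(m) + A @ A.T, A @ c + A @ (A.T @ d))
    x = c + A.T @ (d - y)
    return x, y

def project_eliminate_y(A, c, d):
    """Steps (12): solve with the n-by-n matrix I + A^T A, then set y = A x."""
    n = A.shape[1]
    x = np.linalg.solve(np.eye(n) + A.T @ A, c + A.T @ d)
    y = A @ x
    return x, y

rng = np.random.default_rng(1)
A = rng.standard_normal((4, 7))
c, d = rng.standard_normal(7), rng.standard_normal(4)
x1, y1 = project_eliminate_x(A, c, d)
x2, y2 = project_eliminate_y(A, c, d)
print(np.allclose(x1, x2) and np.allclose(y1, y2))   # True
```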

To carry out the \(y\)-update in (11), we can compute the Cholesky factorization \(I + AA^T = LL^T\) and then do the steps

\[
w := L^{-1}(Ac + AA^T d), \qquad y := L^{-T} w, \tag{13}
\]

where \(w\) is a temporary variable. The first step is computed via forward substitution, and the second is computed via back substitution. Carrying out the \(x\)-update in (12) can be done in an analogous manner.
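The two steps of (13), \(w := L^{-1}(Ac + AA^T d)\) followed by \(y := L^{-T} w\), can be sketched with explicit forward and back substitution. Only `np.linalg.cholesky` is a library call here; `forward_sub`, `back_sub`, and `y_update_cholesky` are illustrative helpers of ours, written as plain loops for readability rather than speed.

```python
import numpy as np

def forward_sub(L, b):
    """Solve L w = b for lower triangular L, one row at a time."""
    w = np.zeros(len(b))
    for i in range(len(b)):
        w[i] = (b[i] - L[i, :i] @ w[:i]) / L[i, i]
    return w

def back_sub(U, w):
    """Solve U y = w for upper triangular U, from the last row up."""
    y = np.zeros(len(w))
    for i in range(len(w) - 1, -1, -1):
        y[i] = (w[i] - U[i, i + 1:] @ y[i + 1:]) / U[i, i]
    return y

def y_update_cholesky(A, c, d):
    """y-update of (11) carried out as in (13), with I + A A^T = L L^T."""
    m = A.shape[0]
    L = np.linalg.cholesky(np.eye(m) + A @ A.T)   # lower triangular factor
    w = forward_sub(L, A @ c + A @ (A.T @ d))      # w := L^{-1}(A c + A A^T d)
    return back_sub(L.T, w)                        # y := L^{-T} w
```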

When \(A\) is dense, we would choose to solve whichever of (11) and (12) involves solving a smaller linear system, i.e., we would prefer (11) when \(A\) is fat (\(m < n\)) and (12) when \(A\) is skinny (\(m > n\)). In particular, if \(A\) is fat, then computing the Cholesky factorization of \(I + AA^T\) costs \(O(nm^2)\) flops and the backsolve (13) costs \(O(mn)\) flops; if \(A\) is skinny, then computing the factorization of \(I + A^T A\) costs \(O(mn^2)\) flops and the backsolve costs \(O(mn)\) flops. In summary, if \(A\) is dense, then the factorization costs \(O(\max \{m,n\} \min \{m,n\}^2)\) flops and the backsolve costs \(O(mn)\) flops.
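The dense cost model above can be turned into a tiny helper that picks the variant and reports rough flop counts. This is a sketch of ours: constants and lower-order terms are dropped, so the numbers are orders of magnitude only. Note that the ratio of factorization cost to backsolve cost is \(\min\{m,n\}\).

```python
def dense_projection_costs(m, n):
    """Choose between (11) and (12) for a dense m-by-n A; return rough flop counts.

    Orders of magnitude only: constants and lower-order terms are dropped.
    """
    variant = "(11)" if m < n else "(12)"      # factor the smaller matrix
    factor_flops = max(m, n) * min(m, n) ** 2   # forming and factoring I + AA^T or I + A^T A
    solve_flops = m * n                         # matrix-vector products dominate the backsolve
    return variant, factor_flops, solve_flops

print(dense_projection_costs(1_000, 100_000))
# ('(11)', 100000000000, 100000000)
```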

If \(A\) is sparse, the question of which of (11) and (12) is preferable is more subtle, and requires considering the structure or entries of the matrices involved.

\(\mathbf{LDL}^{T}\) factorization. The quasidefinite system (10) can also be solved directly using a permuted \(\hbox {LDL}^\mathrm{T}\) factorization [22–24]. Given any permutation matrix \(P\), we compute the factorization

\[
PKP^T = LDL^T,
\]

where \(L\) is unit lower triangular and \(D\) is diagonal. The factorization exists and is unique because \(K\) is quasidefinite.

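Explicitly, this approach would involve the following steps. Suppose for now that \(P\) is given. We then form \(PKP^T\) and compute its \(\hbox {LDL}^\mathrm{T}\) factorization. In order to solve the system (10), we compute

\[
\begin{bmatrix} x \\ y \end{bmatrix} := P^T L^{-T} D^{-1} L^{-1} P \begin{bmatrix} c + A^T d \\ 0 \end{bmatrix}, \tag{14}
\]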

where applying \(L^{-1}\) involves forward substitution and applying \(L^{-T}\) involves backward substitution, as before; applying the other matrices is straightforward.
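To make the update (14) concrete, here is a small dense sketch. `ldl_nopivot` is our own unpivoted right-looking \(\hbox {LDL}^\mathrm{T}\) routine (no particular library is implied), which is adequate here only because a quasidefinite \(K\) has this factorization for every symmetric permutation. The permutation \(P\) is represented as an index array `perm`, so that \(PKP^T\) is `K[np.ix_(perm, perm)]` and \(Pv\) is `v[perm]`. For brevity, `np.linalg.solve` stands in for the forward and back substitutions.

```python
import numpy as np

def ldl_nopivot(S):
    """Unpivoted LDL^T: S = L diag(D) L^T with L unit lower triangular."""
    S = np.array(S, dtype=float)   # working copy, overwritten by Schur complements
    N = S.shape[0]
    L, D = np.eye(N), np.zeros(N)
    for k in range(N):
        D[k] = S[k, k]
        L[k + 1:, k] = S[k + 1:, k] / D[k]
        S[k + 1:, k + 1:] -= np.outer(L[k + 1:, k], S[k, k + 1:])
    return L, D

def project_ldl(A, c, d, perm):
    """Evaluate (14): [x; y] = P^T L^{-T} D^{-1} L^{-1} P [c + A^T d; 0]."""
    m, n = A.shape
    K = np.block([[np.eye(n), A.T], [A, -np.eye(m)]])
    L, D = ldl_nopivot(K[np.ix_(perm, perm)])   # P K P^T = L D L^T
    rhs = np.concatenate([c + A.T @ d, np.zeros(m)])
    z = np.linalg.solve(L, rhs[perm])           # forward substitution with L
    z = np.linalg.solve(L.T, z / D)             # scale by D^{-1}, back substitution with L^T
    sol = np.empty_like(z)
    sol[perm] = z                               # apply P^T
    return sol[:n], sol[n:]
```

In a real implementation \(P\), \(L\), and \(D\) would be computed once and reused, which is the point of the factorization caching discussed below.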

Many algorithms are available for choosing the permutation matrix; see, e.g., [25–27]. These algorithms attempt to choose \(P\) to promote sparsity in \(L\), i.e., to make the number of nonzero entries in \(L\) as small as possible. When \(A\) is sparse, \(L\) generally has at least as many nonzero entries as the lower triangular part of \(PKP^T\), and the choice of \(P\) determines which entries \(L_{ij}\) become nonzero even though the corresponding entries of \(PKP^T\) are zero (so-called fill-in).
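The effect of the ordering shows up clearly on a deliberately extreme example of ours: let \(A\) be a single dense row, so that \(y\) is coupled to every entry of \(x\). Using the same kind of illustrative unpivoted `ldl_nopivot` helper, eliminating \(x\) first produces no fill-in, while eliminating \(y\) first fills in the whole trailing block, which is the dense factor of \(I + A^T A\).

```python
import numpy as np

def ldl_nopivot(S):
    """Unpivoted LDL^T: S = L diag(D) L^T with L unit lower triangular."""
    S = np.array(S, dtype=float)
    N = S.shape[0]
    L, D = np.eye(N), np.zeros(N)
    for k in range(N):
        D[k] = S[k, k]
        L[k + 1:, k] = S[k + 1:, k] / D[k]
        S[k + 1:, k + 1:] -= np.outer(L[k + 1:, k], S[k, k + 1:])
    return L, D

def strict_lower_nnz(L, tol=1e-12):
    """Count nonzeros strictly below the diagonal."""
    return int((np.abs(np.tril(L, -1)) > tol).sum())

n = 6
A = np.ones((1, n))                          # one dense row: y couples to every x_j
K = np.block([[np.eye(n), A.T], [A, -np.eye(1)]])

x_first = np.arange(n + 1)                   # P = I: eliminate x, then y
y_first = np.r_[n, np.arange(n)]             # eliminate y first
for perm in (x_first, y_first):
    L, _ = ldl_nopivot(K[np.ix_(perm, perm)])
    print(strict_lower_nnz(L))
# prints 6, then 21 (6 entries from L21 = -A^T plus 15 from the dense factor of I + A^T A)
```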

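This method actually generalizes the two block elimination algorithms given earlier. In block form, the \(\hbox {LDL}^\mathrm{T}\) factorization can be written

\[
PKP^T =
\begin{bmatrix} L_{11} & 0 \\ L_{21} & L_{22} \end{bmatrix}
\begin{bmatrix} D_1 & 0 \\ 0 & D_2 \end{bmatrix}
\begin{bmatrix} L_{11}^T & L_{21}^T \\ 0 & L_{22}^T \end{bmatrix}
=
\begin{bmatrix} L_{11} D_1 L_{11}^T & L_{11} D_1 L_{21}^T \\ L_{21} D_1 L_{11}^T & L_{21} D_1 L_{21}^T + L_{22} D_2 L_{22}^T \end{bmatrix},
\]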

where \(D_i\) are diagonal and \(L_{11}\) and \(L_{22}\) are unit lower triangular.

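We now equate \(PKP^T\) with the \(\hbox {LDL}^\mathrm{T}\) factorization when \(P\) is the identity, i.e.,

\[
\begin{bmatrix} I & A^T \\ A & -I \end{bmatrix}
=
\begin{bmatrix} L_{11} D_1 L_{11}^T & L_{11} D_1 L_{21}^T \\ L_{21} D_1 L_{11}^T & L_{21} D_1 L_{21}^T + L_{22} D_2 L_{22}^T \end{bmatrix}.
\]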

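Since \(I = L_{11} D_1 L_{11}^T\) and the \(\hbox {LDL}^\mathrm{T}\) factorization is unique, both \(L_{11}\) and \(D_1\) are the identity. Thus this equation simplifies to

\[
\begin{bmatrix} I & A^T \\ A & -I \end{bmatrix}
=
\begin{bmatrix} I & L_{21}^T \\ L_{21} & L_{21} L_{21}^T + L_{22} D_2 L_{22}^T \end{bmatrix}.
\]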

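It follows that \(L_{21} = A\), which gives

\[
\begin{bmatrix} I & A^T \\ A & -I \end{bmatrix}
=
\begin{bmatrix} I & A^T \\ A & AA^T + L_{22} D_2 L_{22}^T \end{bmatrix}.
\]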

Finally, rearranging \(-I = AA^T + L_{22} D_2 L_{22}^T\) gives that

\[
L_{22} D_2 L_{22}^T = -(I + AA^T).
\]

In other words, \(L_{22} D_2 L_{22}^T\) is the negative of the \(\hbox {LDL}^\mathrm{T}\) factorization of \(I + AA^T\).

Now suppose

\[
P = \begin{bmatrix} 0 & I_m \\ I_n & 0 \end{bmatrix}.
\]

Then \(P\!K\!P^T\) is given by

\[
PKP^T = \begin{bmatrix} -I & A \\ A^T & I \end{bmatrix}.
\]

Equating this with the block \(\hbox {LDL}^\mathrm{T}\) factorization above, we find that \(L_{11} = I, D_1 = -I\), and \(L_{21} = -A^T\). The \((2,2)\) block then gives that

\[
I = L_{21} D_1 L_{21}^T + L_{22} D_2 L_{22}^T = -A^T A + L_{22} D_2 L_{22}^T,
\]

i.e., \(L_{22}D_2 L_{22}^T\) is the \(\hbox {LDL}^\mathrm{T}\) factorization of \(I + A^T A\).

In other words, for specific choices of \(P\), applying the \(\hbox {LDL}^\mathrm{T}\) method to \(PKP^T\) directly reduces to the simple elimination methods above (assuming we solve those linear systems using an \(\hbox {LDL}^\mathrm{T}\) factorization). If \(A\) is dense, then there is no reason not to use (11) or (12) directly. If \(A\) is sparse, however, it may be beneficial to use the more general method described here.
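The block argument can be checked numerically. This sketch (NumPy; `ldl_nopivot` is our own unpivoted \(\hbox {LDL}^\mathrm{T}\) routine, repeated so that the example is self-contained) factors \(K\) for \(P = I\) and for the block swap, then compares \(L_{21}\) and the trailing blocks with \(A\), \(-(I + AA^T)\), \(-A^T\), and \(I + A^T A\), respectively.

```python
import numpy as np

def ldl_nopivot(S):
    """Unpivoted LDL^T: S = L diag(D) L^T with L unit lower triangular."""
    S = np.array(S, dtype=float)
    N = S.shape[0]
    L, D = np.eye(N), np.zeros(N)
    for k in range(N):
        D[k] = S[k, k]
        L[k + 1:, k] = S[k + 1:, k] / D[k]
        S[k + 1:, k + 1:] -= np.outer(L[k + 1:, k], S[k, k + 1:])
    return L, D

rng = np.random.default_rng(0)
m, n = 3, 4
A = rng.standard_normal((m, n))
K = np.block([[np.eye(n), A.T], [A, -np.eye(m)]])

# P = I: L21 = A and L22 D2 L22^T = -(I + A A^T).
L, D = ldl_nopivot(K)
L22, D2 = L[n:, n:], D[n:]
print(np.allclose(L[n:, :n], A),
      np.allclose(L22 @ np.diag(D2) @ L22.T, -(np.eye(m) + A @ A.T)))

# P swaps the x and y blocks: L21 = -A^T and L22 D2 L22^T = I + A^T A.
perm = np.r_[np.arange(n, n + m), np.arange(n)]
L, D = ldl_nopivot(K[np.ix_(perm, perm)])
L22, D2 = L[m:, m:], D[m:]
print(np.allclose(L[m:, :m], -A.T),
      np.allclose(L22 @ np.diag(D2) @ L22.T, np.eye(n) + A.T @ A))
# prints "True True" twice
```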

Factorization caching. Since we need to carry out a graph projection in each iteration of the splitting algorithm, we actually need to solve the linear system (10) many times, with the same coefficient matrix \(K\) but different righthand sides. In this case, the factorization of \(K\) can be computed once, and then only forward and back solves need to be carried out for each righthand side. Explicitly, if we factor \(K\) directly using an \(\hbox {LDL}^\mathrm{T}\) factorization, then we would first compute and cache \(P, L\), and \(D\). In each subsequent iteration, we could simply carry out the update (14).

Of course, this observation also applies to computing the factorizations of \(I + AA^T\) or \(I + A^T A\) if the steps (11) or (12) are used instead. In these cases, we would first compute and cache the Cholesky factor \(L\), and then each iteration would only involve a backsolve.
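A minimal sketch of factorization caching for the dense elimination variants, assuming NumPy; `CachedGraphProjector` and `tri_solve` are hypothetical names of ours. The Cholesky factor is computed once in the constructor, and each later call performs only matrix-vector products and two triangular substitutions costing \(O(N^2)\) flops each (written as plain Python loops for clarity rather than speed).

```python
import numpy as np

def tri_solve(T, b, lower):
    """Forward (lower=True) or back (lower=False) substitution in O(N^2) flops."""
    N = len(b)
    z = np.zeros(N)
    for i in (range(N) if lower else range(N - 1, -1, -1)):
        s = T[i, :i] @ z[:i] if lower else T[i, i + 1:] @ z[i + 1:]
        z[i] = (b[i] - s) / T[i, i]
    return z

class CachedGraphProjector:
    """Graph projection onto {(x, y) : y = A x} that factors once and reuses L.

    Caches the Cholesky factor of I + A A^T when A is fat (steps (11))
    or of I + A^T A otherwise (steps (12)).
    """

    def __init__(self, A):
        A = np.asarray(A, dtype=float)
        self.A = A
        m, n = A.shape
        self.fat = m < n
        G = np.eye(m) + A @ A.T if self.fat else np.eye(n) + A.T @ A
        self.L = np.linalg.cholesky(G)            # the expensive step, done once

    def _solve(self, b):
        # Only a forward and a back substitution with the cached factor.
        return tri_solve(self.L.T, tri_solve(self.L, b, lower=True), lower=False)

    def __call__(self, c, d):
        A = self.A
        if self.fat:                              # steps (11)
            y = self._solve(A @ c + A @ (A.T @ d))
            x = c + A.T @ (d - y)
        else:                                     # steps (12)
            x = self._solve(c + A.T @ d)
            y = A @ x
        return x, y

rng = np.random.default_rng(0)
project = CachedGraphProjector(rng.standard_normal((20, 50)))
for _ in range(3):                                # e.g. three splitting iterations
    x, y = project(rng.standard_normal(50), rng.standard_normal(20))
```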

This can lead to a large improvement in performance. As mentioned earlier, if \(A\) is dense, the factorization step costs \(O(\max \{m,n\} \min \{m,n\}^2)\) flops and the backsolve costs \(O(mn)\) flops. Thus, after the first iteration, factorization caching reduces the cost of each graph projection by a factor of \(O(\min \{m,n\})\).

If \(A\) is sparse, a similar argument applies, but the ratio between the factorization cost and the backsolve cost is typically smaller. Thus, we still obtain a benefit from factorization caching, but it is not as pronounced as in the dense case. The precise savings obtained depend on the structure of \(A\).

A.2 Other methods

Iterative methods. It is also possible to solve either the reduced (positive definite) systems or the quasidefinite KKT system using iterative rather than direct methods, e.g., via conjugate gradient or LSQR.

A standard trick to improve performance is to initialize the iterative method at the solution \((x^{k-1/2}, y^{k-1/2})\) obtained in the previous iteration. This is called a warm start. The previous iterate often gives a good enough approximation to result in far fewer iterations (of the iterative method used to compute the update \((x^{k+1/2}, y^{k+1/2})\)) than if the iterative method were started at zero or some other default initialization. This is especially the case when the splitting algorithm has almost converged, in which case the updates will not change significantly from their previous values.
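To illustrate warm starting, the sketch below uses a textbook conjugate gradient routine of our own (not a library call) on the positive definite system with matrix \(I + A^T A\) from (12). It solves one projection, perturbs \((c, d)\) slightly to mimic the next splitting iteration near convergence, and compares the iteration counts when starting from zero and when starting from the previous solution. The problem sizes and the perturbation size are arbitrary choices for the example.

```python
import numpy as np

def conjugate_gradient(G, b, x0, tol=1e-10, max_iter=1000):
    """Textbook CG for symmetric positive definite G, started from x0.

    Stops when ||b - G x|| <= tol * ||b||; returns (x, iterations used).
    """
    x = np.array(x0, dtype=float)
    r = b - G @ x
    p = r.copy()
    rs = r @ r
    for k in range(max_iter):
        if np.sqrt(rs) <= tol * np.linalg.norm(b):
            return x, k
        Gp = G @ p
        alpha = rs / (p @ Gp)
        x += alpha * p
        r -= alpha * Gp
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x, max_iter

# Two consecutive projections whose inputs (c, d) differ only slightly.
rng = np.random.default_rng(0)
A = rng.standard_normal((80, 50))
G = np.eye(50) + A.T @ A
c, d = rng.standard_normal(50), rng.standard_normal(80)
x_prev, _ = conjugate_gradient(G, c + A.T @ d, np.zeros(50))

c_new = c + 1e-8 * rng.standard_normal(50)
d_new = d + 1e-8 * rng.standard_normal(80)
b_new = c_new + A.T @ d_new
_, cold_iters = conjugate_gradient(G, b_new, np.zeros(50))
_, warm_iters = conjugate_gradient(G, b_new, x_prev)   # warm start
print(cold_iters, warm_iters)
```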

See [1, §4.3] for further comments on the use of iterative solvers and on various techniques for improving speed considerably over naive implementations.

Hybrid methods. More generally, there are methods that combine elements of direct and iterative algorithms. For example, a direct method is often used to obtain a preconditioner for an iterative method like conjugate gradient. The term preconditioning refers to replacing the system \(Ax = b\) with the system \(M^{-1}Ax = M^{-1}b\), where \(M\) is an approximation to \(A\) (symmetric positive definite, if \(A\) is) chosen so that \(M^{-1}A\) is well conditioned and systems \(Mx = b\) are easy to solve. The difficulty is in balancing these two requirements, minimizing the condition number of \(M^{-1}A\) and keeping \(Mx = b\) easy to solve, so there is a wide variety of choices for \(M\) that are useful in different situations.

For instance, in the incomplete Cholesky factorization, we compute a lower triangular matrix \(H\) with tractable sparsity structure such that \(H\) is close to the true Cholesky factor \(L\), i.e., \(HH^T \approx A\). A common choice is to require the sparsity pattern of \(H\) to be the same as that of the lower triangular part of \(A\). Once \(H\) has been computed, the preconditioner \(M = HH^T\) is used in, say, a preconditioned conjugate gradient method. See [28, 29] and [30, §10.3] for additional details and references.
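A small dense sketch of zero-fill incomplete Cholesky (often called IC(0)) feeding a preconditioned conjugate gradient method. Here `G` plays the role of the system matrix called \(A\) in the paragraph above; `ichol0` and `pcg` are our own illustrative routines, and the 2-D Laplacian test matrix is just a convenient symmetric positive definite M-matrix, a class for which IC(0) is known to exist. For a matrix whose lower triangle is completely dense, IC(0) drops nothing and returns the exact Cholesky factor.

```python
import numpy as np

def ichol0(G):
    """Zero-fill incomplete Cholesky: lower triangular H with H H^T ~ G.

    H may be nonzero only where tril(G) is nonzero; fill-in outside that
    pattern is dropped.
    """
    H = np.tril(G).astype(float)
    keep = H != 0
    N = H.shape[0]
    for k in range(N):
        H[k, k] = np.sqrt(H[k, k])
        H[k + 1:, k] /= H[k, k]
        for j in range(k + 1, N):
            # Right-looking update of column j, restricted to the pattern.
            H[j:, j] -= np.where(keep[j:, j], H[j:, k] * H[j, k], 0.0)
    return H

def pcg(G, b, H, tol=1e-10, max_iter=500):
    """Preconditioned CG with M = H H^T; returns (x, iterations used)."""
    def apply_Minv(r):
        # np.linalg.solve stands in for forward and back substitution with H.
        return np.linalg.solve(H.T, np.linalg.solve(H, r))

    x = np.zeros_like(b)
    r = b.copy()
    z = apply_Minv(r)
    p = z.copy()
    rz = r @ z
    for k in range(max_iter):
        if np.linalg.norm(r) <= tol * np.linalg.norm(b):
            return x, k
        Gp = G @ p
        alpha = rz / (p @ Gp)
        x += alpha * p
        r -= alpha * Gp
        z = apply_Minv(r)
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter

# Example: 2-D Laplacian on a 5-by-5 grid.
T = 2 * np.eye(5) - np.eye(5, k=1) - np.eye(5, k=-1)
G = np.kron(np.eye(5), T) + np.kron(T, np.eye(5))
b = np.ones(25)
x, iters = pcg(G, b, ichol0(G))
```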

Alternatively, we may use a direct method, but then carry out a few iterations of iterative refinement to reduce inaccuracies introduced by floating point computations; see, e.g., [30, §3.5.3] and [31, 32].
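A minimal sketch of iterative refinement, assuming NumPy. To make the effect visible, we deliberately factor in single precision (`float32`) and compute residuals in double precision; this mixed-precision setup is our illustrative choice, and the same loop applies when the factor is inaccurate for other reasons. Each refinement step reuses the cached factor, so it costs only a residual computation and two triangular solves.

```python
import numpy as np

def solve_with_refinement(G, b, steps=3):
    """Direct solve with a single-precision Cholesky factor, then iterative refinement.

    Residuals are computed in double precision; each correction reuses the
    cached low-precision factor. Returns x and the residual-norm history.
    """
    L32 = np.linalg.cholesky(G.astype(np.float32))

    def low_precision_solve(r):
        w = np.linalg.solve(L32, r.astype(np.float32))
        return np.linalg.solve(L32.T, w).astype(np.float64)

    x = low_precision_solve(b)
    history = [np.linalg.norm(b - G @ x)]
    for _ in range(steps):
        r = b - G @ x                     # residual in float64
        x = x + low_precision_solve(r)    # correction from the cached factor
        history.append(np.linalg.norm(b - G @ x))
    return x, history

rng = np.random.default_rng(0)
A = rng.standard_normal((30, 10))
G = np.eye(10) + A.T @ A
b = rng.standard_normal(10)
x, history = solve_with_refinement(G, b)
print(history)   # residual norms shrink after the first refinement step
```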

Appendix B: Derivations

Here, we discuss some details of the derivations needed to obtain the simplified block splitting algorithm. It is easy to see that applying graph projection splitting to (8) gives the original block splitting algorithm. The operators \(\mathbf{avg}\) and \(\mathbf{exch}\) are obtained as projections onto the sets \(\{ (x, \{x_i\}_{i=1}^M) \mid x = x_i,\ i = 1, \dots , M\}\) and \(\{ (y, \{y_j\}_{j=1}^N) \mid y = \sum _{j=1}^N y_j \}\), respectively.
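Both projections have simple closed forms, which follow from a short Lagrangian calculation of our own (it is not spelled out here). Projecting onto the consensus set replaces every block by the average of \(x\) and the \(x_i\). Projecting onto the exchange set shifts \(y\) and each \(y_j\) by a common correction \(\nu = (y - \sum_j y_j)/(N + 1)\), with opposite signs. A NumPy sketch, with function names chosen to mirror the operators:

```python
import numpy as np

def avg(x, xs):
    """Project (x, {x_i}) onto {x = x_1 = ... = x_M}: every block becomes the mean."""
    mean = (x + sum(xs)) / (len(xs) + 1)
    return mean, [mean.copy() for _ in xs]

def exch(y, ys):
    """Project (y, {y_j}) onto {y = sum_j y_j}."""
    nu = (y - sum(ys)) / (len(ys) + 1)    # common correction from the constraint
    return y - nu, [yj + nu for yj in ys]

rng = np.random.default_rng(0)
y, ys = exch(rng.standard_normal(3), [rng.standard_normal(3) for _ in range(4)])
print(np.allclose(y, sum(ys)))   # True: the projected point satisfies y = sum_j y_j
```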

To obtain the final algorithm, we eliminate \(\tilde{y}_{ij}^{k+1}\) and \(x_{ij}^{k+1}\) and simplify some steps.

Simplifying y variables. Substituting for \(y_{ij}^{k+1}\) in the \(\tilde{y}_{ij}^{k+1}\) update gives that

and similarly,

Since \(\tilde{y}_i^{k+1} = -\tilde{y}_{ij}^{k+1}\) (for all \(j\), for fixed \(i\)) after the first iteration, we eliminate the \(\tilde{y}_{ij}^{k+1}\) updates and replace \(\tilde{y}_{ij}^{k+1}\) with \(-\tilde{y}_i^{k+1}\) in the graph projection and exchange steps. The exchange update for \(y_i^{k+1}\) simplifies as follows:

i.e., there is no longer any dependence on the dual variables.

Simplifying x variables. To lighten notation, we use \(x_{ij}\) for \(x_{ij}^{k+1/2}, x_{ij}^+\) for \(x_{ij}^{k+1}, \tilde{x}_{ij}\) for \(\tilde{x}_{ij}^k\), and \(\tilde{x}_{ij}^+\) for \(\tilde{x}_{ij}^{k+1}\). Adding together the dual updates for \(\tilde{x}_{ij}^+\) (across \(i\) for fixed \(j\)) and \(\tilde{x}_j^+\), we get

Since \(x_{ij}^+ = x_j^+\) after the averaging step, this becomes

Plugging

into the righthand side shows that \(\tilde{x}_j^+ + \sum _{i=1}^M \tilde{x}_{ij}^+ = 0\). Thus the averaging step simplifies to

Since \(x_{ij}^{k+1} = x_j^{k+1}\) (for all \(i\), for fixed \(j\)), we can eliminate \(x_{ij}^{k+1}\), replacing it with \(x_j^{k+1}\) throughout. Combined with the steps above, this gives the simplified block splitting algorithm given earlier in the paper.

Cite this article

Parikh, N., Boyd, S. Block splitting for distributed optimization. Math. Prog. Comp. 6, 77–102 (2014). https://doi.org/10.1007/s12532-013-0061-8

DOI: https://doi.org/10.1007/s12532-013-0061-8

Keywords

- Distributed optimization

- Alternating direction method of multipliers

- Operator splitting

- Proximal operators

- Cone programming

- Machine learning