Abstract

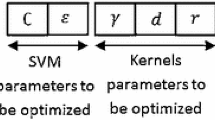

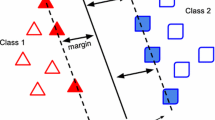

Support vector machine (SVM) is considered one of the most powerful learning algorithms and is used in a wide range of real-world applications. The efficiency and performance of the SVM algorithm depend mainly on the kernel type and its parameters. Furthermore, the feature subset used to train the SVM model is another important factor with a major influence on its classification accuracy. Feature subset selection is a very important step in machine learning, especially when dealing with high-dimensional data sets. Most previous studies have handled these two factors separately. In this paper, we propose a hybrid approach based on the Grasshopper optimisation algorithm (GOA), a recent algorithm inspired by the biological behavior of grasshopper swarms. The goal of the proposed approach is to optimize the parameters of the SVM model and locate the best feature subset simultaneously. Eighteen low- and high-dimensional benchmark data sets are used to evaluate the accuracy of the proposed approach. For verification, the proposed approach is compared with seven well-regarded algorithms. It is also compared with grid search, the most popular technique for tuning SVM parameters. The experimental results show that the proposed approach outperforms all of the other techniques on most of the data sets in terms of classification accuracy, while minimizing the number of selected features.
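The central idea, searching SVM parameters and a feature subset in one run, can be illustrated with a small sketch. This is not the authors' implementation. It assumes each grasshopper is a vector in [0, 1]^d whose first two entries are rescaled to C and the RBF gamma (the ranges are arbitrary assumptions) and whose remaining entries are thresholded at 0.5 to select features. The weighted fitness (alpha = 0.99), the helper names `decode`, `make_fitness`, `goa` and the stand-in `toy_error` are hypothetical. The social-force function s(r) = f·e^(-r/l) - e^(-r) with f = 0.5, l = 1.5 and the linearly shrinking coefficient c follow the general form of GOA (Saremi et al. 2017), with distance normalisation simplified.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def social_force(r: float, f: float = 0.5, l: float = 1.5) -> float:
    """GOA social interaction: negative (repulsion) at short range, positive (attraction) further out."""
    return f * math.exp(-r / l) - math.exp(-r)


def decode(pos: Sequence[float], c_range=(0.01, 1000.0), g_range=(1e-4, 10.0),
           threshold: float = 0.5) -> Tuple[float, float, List[bool]]:
    """Hypothetical encoding: pos[0], pos[1] -> C, gamma; the rest -> feature mask."""
    C = c_range[0] + pos[0] * (c_range[1] - c_range[0])
    gamma = g_range[0] + pos[1] * (g_range[1] - g_range[0])
    mask = [p > threshold for p in pos[2:]]
    return C, gamma, mask


def make_fitness(error_fn: Callable[[float, float, List[bool]], float],
                 alpha: float = 0.99) -> Callable[[Vector], float]:
    """Assumed objective to minimise: alpha*error + (1 - alpha)*fraction of features kept."""
    def fitness(pos: Vector) -> float:
        C, gamma, mask = decode(pos)
        if not any(mask):
            return 1.0  # an empty feature subset cannot train a classifier: worst score
        return alpha * error_fn(C, gamma, mask) + (1 - alpha) * sum(mask) / len(mask)
    return fitness


def goa(fitness: Callable[[Vector], float], dim: int, n_agents: int = 20,
        max_iter: int = 50, c_max: float = 1.0, c_min: float = 1e-5,
        seed: int = 0) -> Tuple[Vector, float]:
    """Simplified GOA minimising `fitness` over [0, 1]^dim; returns (best position, best score)."""
    rng = random.Random(seed)
    lb, ub = 0.0, 1.0
    X = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_agents)]
    scores = [fitness(x) for x in X]
    best = min(range(n_agents), key=scores.__getitem__)
    target, target_score = X[best][:], scores[best]

    for it in range(1, max_iter + 1):
        # Comfort-zone coefficient decreases linearly from c_max toward c_min.
        c = c_max - it * (c_max - c_min) / max_iter
        new_X = []
        for i in range(n_agents):
            social = [0.0] * dim
            for j in range(n_agents):
                if j == i:
                    continue
                d_ij = math.dist(X[i], X[j]) + 1e-12  # avoid division by zero
                s = social_force(d_ij)
                for d in range(dim):
                    unit = (X[j][d] - X[i][d]) / d_ij  # unit vector from agent i to agent j
                    social[d] += c * (ub - lb) / 2 * s * unit
            # Position relative to the best solution so far (the target), clipped to the bounds.
            new_X.append([min(ub, max(lb, c * social[d] + target[d])) for d in range(dim)])
        X = new_X
        for x in X:
            score = fitness(x)
            if score < target_score:
                target, target_score = x[:], score
    return target, target_score


def toy_error(C: float, gamma: float, mask: List[bool]) -> float:
    """Hypothetical stand-in for a cross-validated SVM error: features 0 and 1 are 'useful'."""
    return 0.5 - 0.2 * mask[0] - 0.2 * mask[1] + 0.01 * abs(math.log10(C) - 1)


best, score = goa(make_fitness(toy_error), dim=2 + 5, n_agents=10, max_iter=20, seed=1)
C, gamma, mask = decode(best)
```

In a real experiment, `error_fn` would train an SVM (for example with LIBSVM) on the columns selected by `mask`, using the decoded C and gamma, and return the cross-validation error rate. Because every candidate carries both the parameters and the mask, one fitness evaluation scores the combination, which is what distinguishes a simultaneous search from tuning parameters by grid search on a fixed feature set.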

Acknowledgements

The authors would like to thank Dr. Simon Andrews from Babraham Institute, Cambridge, UK for thoroughly proofreading this paper.

Ethics declarations

Compliance with Ethical Standards

All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008 (5).

Conflict of interests

The authors declare that they have no conflict of interest.

Informed Consent

All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008 (5).

Human and Animal Rights

This article does not contain any studies with human or animal subjects performed by any of the authors.

About this article

Cite this article

Aljarah, I., Al-Zoubi, A.M., Faris, H. et al. Simultaneous Feature Selection and Support Vector Machine Optimization Using the Grasshopper Optimization Algorithm. Cogn Comput 10, 478–495 (2018). https://doi.org/10.1007/s12559-017-9542-9

DOI: https://doi.org/10.1007/s12559-017-9542-9