Abstract

In risk theory with application to insurance, the identification of the relevant distributions for both the counting and the claim size processes from given observations is of major importance. In some situations left-truncated distributions can be used to model, not only the single claim severity, but also the inter-arrival times between two consecutive claims. We show that left-truncated Weibull distributions are particularly relevant, especially for the claim severity distribution. For that, we first demonstrate how the parameters can be estimated consistently from the data, and then show how a Kolmogorov-Smirnov goodness-of-fit test can be set up using modified critical values. These critical values are universal to all left-truncated Weibull distributions, independent of the actual Weibull parameters. To illustrate our findings we analyse three applications using real insurance data, one from a Swiss excess of loss treaty over automobile insurance, another from an American private passenger automobile insurance and a third from earthquake inter-arrival times in California.


Notes

In our study the left-truncation parameter \(x_L>0\) is known and not to be confused with the unknown location parameter \(x_0\) of the 3-parameter Weibull distribution. Here, we only consider 2-parameter Weibull distributions.

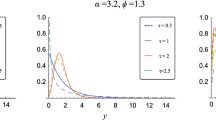

Due to a scaling property of the Weibull distribution, first noted for complete Weibull samples by [32], the modified critical values do not depend on the values of the parameters \(\alpha\) and \(\beta\). Our results show that, although the CVs depend predominantly on the sample size, there is also a slight dependence on the truncation value. This dependence leads us to two sets of critical values: one giving an anti-conservative and one giving a conservative test. Using the conservative instead of the anti-conservative CVs leads to a difference in the error of the first kind of less than 2%.
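The Monte Carlo computation behind such critical values can be sketched as follows. This is a minimal Python illustration under our own assumptions, not the authors' code: samples are drawn from the reference case \((\alpha ^0,\beta ^0)=(1,1)\), for which \(\eta =x_L\) and \(X_i=x_L+y_i\) with standard exponential \(y_i\); \(\hat{\beta }\) is found by bisection on \(h(\beta )=0\) from Eq. (7); \(\hat{\alpha }\) is taken from \(\hat{\alpha }^{\hat{\beta }}=\frac{1}{n}\sum _{i}(X_i^{\hat{\beta }}-x_L^{\hat{\beta }})\), which we assume to be the form of Eq. (5); and the KS distance is measured against \(1-\exp \{-[(x/\hat{\alpha })^{\hat{\beta }}-(x_L/\hat{\alpha })^{\hat{\beta }}]\}\), which we assume to be the form of Eq. (2). The function names, the 5% level and the 500 replications are hypothetical choices.

```python
import math
import random
from typing import List, Sequence

def fit_beta(logs: Sequence[float]) -> float:
    """Solve h(beta) = 0 (Eq. (7)) by bisection; logs[i] = log(X_i / x_L) > 0."""
    def h(b: float) -> float:
        num = sum(math.exp(b * l) * l for l in logs)   # sum of zeta^b * log(zeta)
        den = sum(math.expm1(b * l) for l in logs)     # sum of (zeta^b - 1)
        return 1.0 / b - num / den + sum(logs) / len(logs)
    lo, hi = 1e-3, 1.0
    if h(lo) <= 0.0:
        raise ValueError("no root above 1e-3: Eq. (8) is probably violated")
    while h(hi) > 0.0:                  # h is decreasing and negative for large beta
        lo, hi = hi, 2.0 * hi
    while hi - lo > 1e-8 * hi:
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if h(mid) > 0.0 else (lo, mid)
    return 0.5 * (lo + hi)

def ks_distance(x: Sequence[float], x_l: float) -> float:
    """KS distance between the sample and the fitted left-truncated Weibull cdf."""
    logs = sorted(math.log(v / x_l) for v in x)        # sorted like the order statistics
    n = len(logs)
    beta = fit_beta(logs)
    c = sum(math.expm1(beta * l) for l in logs) / n    # (alpha_hat / x_L)^beta_hat
    d = 0.0
    for i, l in enumerate(logs, start=1):
        f = -math.expm1(-math.expm1(beta * l) / c)     # fitted cdf at the i-th order statistic
        d = max(d, i / n - f, f - (i - 1) / n)
    return d

def critical_value(n: int, eta: float, level: float = 0.05,
                   reps: int = 500, seed: int = 1) -> float:
    """Monte Carlo (1 - level) quantile of the KS distance for sample size n and truncation eta."""
    rng = random.Random(seed)
    x_l = eta                           # for (alpha0, beta0) = (1, 1) we have eta = x_L
    dists: List[float] = []
    while len(dists) < reps:
        x = [x_l + rng.expovariate(1.0) for _ in range(n)]   # X = x_L + y when alpha0 = beta0 = 1
        try:
            dists.append(ks_distance(x, x_l))
        except ValueError:              # MLE does not exist for this sample: skip it
            continue
    dists.sort()
    return dists[math.ceil((1.0 - level) * reps) - 1]

cv_20 = critical_value(n=20, eta=0.5)
```

Samples for which Eq. (8) fails are simply skipped here, which is only one possible convention. In this sketch a sample of size \(n\) would be rejected at the 5% level if its KS distance exceeded `critical_value(n, eta)` for the relevant \(\eta\); the construction of the two sets of conservative and anti-conservative CVs is not reproduced.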

Statements 1 and 3 actually follow from statement 2 for the MLE, but we have kept them in the theorem for consistency with Lehmann & Casella [20].

From this point onwards we will drop the index \(n\) and use \(\hat{\alpha }\) and \(\hat{\beta }\).

In the table, the theoretical truncated percentage is estimated as \(\approx 90\%\) using the relation \(\rho =1-e^{-\eta }\), assuming that the untruncated data set is Weibull distributed. Since we do not have the complete data set and the sample size is small, this value should be considered a very rough approximation.
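As a quick illustration of this relation: \(\rho =1-e^{-\eta }\) with \(\eta =(x_L/\alpha )^{\beta }\) is simply the untruncated Weibull cdf evaluated at \(x_L\), i.e. the share of the population that falls below the truncation point. A minimal Python sketch; the function names and the parameter values in the last line are hypothetical and not taken from the table.

```python
import math

def truncated_fraction(x_l: float, alpha: float, beta: float) -> float:
    """rho = 1 - exp(-eta): share of an untruncated Weibull(alpha, beta) population below x_L."""
    eta = (x_l / alpha) ** beta      # truncation parameter eta = (x_L / alpha)^beta
    return -math.expm1(-eta)         # 1 - exp(-eta) without cancellation for small eta

def eta_from_fraction(rho: float) -> float:
    """Inverse relation eta = -log(1 - rho)."""
    return -math.log1p(-rho)

# Hypothetical values, not taken from the paper's table
rho = truncated_fraction(x_l=2.5, alpha=1.0, beta=0.9)
```

For instance, \(\rho \approx 90\%\) corresponds to \(\eta =\ln 10\approx 2.30\).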

Note that \(\xi =\hat{\beta }/\beta ^0\) is the unique solution of Eq. (22), which is equivalent to the original MLE equation, Eq. (6). For this unique solution to exist, Lemma 1 requires inequality Eq. (8) to hold. In our notation, using Eq. (20), this means \(2 \cdot \left( \frac{1}{n} \sum _{i=1}^{n} \log {(1+\eta ^{-1}y_i )} \right) ^{2} > \frac{1}{n} \sum _{i=1}^{n} \log ^{2}{(1+\eta ^{-1}y_i )}\), which depends only on the sample size \(n\), the parameter \(\eta\) and a sample of standard exponential random variables \(y_1,...,y_n\).
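This existence condition is easy to check on a sample. Writing \(m_1\) and \(m_2\) for the first two sample moments of \(\log (1+\eta ^{-1}y_i)\), the inequality \(2m_1^2>m_2\) is equivalent to \(m_2-m_1^2<m_1^2\), i.e. the (1/n-normalised) sample coefficient of variation of these logarithms must be below 1. A small Python sketch, with a hypothetical function name:

```python
import math
from typing import Sequence

def lemma1_condition(y: Sequence[float], eta: float) -> bool:
    """Check 2 * m1**2 > m2, where m1, m2 are the first two sample moments of log(1 + y_i/eta)."""
    logs = [math.log1p(yi / eta) for yi in y]
    n = len(logs)
    m1 = sum(logs) / n
    m2 = sum(v * v for v in logs) / n
    return 2.0 * m1 * m1 > m2   # equivalently m2 - m1**2 < m1**2 (coefficient of variation < 1)
```

A sample in which one value dominates all others, such as logarithms of roughly 0.001, 0.001 and 10, violates the condition.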

References

Afonso LB, Cardoso RM, Egídio dos Reis AD (2013) Dividend problems in the dual risk model. Insur Math Econ 53(3):906–918

Aho M, Bain LJ, Engelhardt M (1985) Goodness-of-fit tests for the Weibull distribution with unknown parameters and heavy censoring. J Stat Comput Simul 21(3–4):213–225

Balakrishnan N, Kateri M (2008) On the maximum likelihood estimation of parameters of Weibull distribution based on complete and censored data. Stat Probab Lett 78(17):2971–2975

Bar-Lev SK (1984) Large sample properties of the MLE and MCLE for the natural parameter of a truncated exponential family. Ann Inst Stat Math 36(2):217–222

Barndorff-Nielsen O (1978) Information and exponential families in statistical theory. Wiley, New York

Bergel AI, Egídio dos Reis AD (2013) On a Sparre-Andersen risk model with PH(\(n\)) interclaim times. Tech. rep., CEMAPRE Working paper, http://cemapre.iseg.ulisboa.pt/archive/preprints/617

Buchanan R, Priest C (2006) Deductible, encyclopedia of actuarial science, 1st edn. Wiley, New York

Chandra M, Singpurwalla ND, Stephens MA (1981) Kolmogorov statistics for tests of fit for the extreme-value and Weibull distributions. J Am Stat Assoc 76(375):729–731

David FN, Johnson NL (1948) The probability integral transformation when parameters are estimated from the sample. Biometrika 35(1–2):182–190

Deemer WL, Votaw DF (1955) Estimation of parameters of truncated or censored exponential distributions. Ann Math Stat 26(3):498–504

Dickson DCM (2005) Insurance risk and ruin. Cambridge University Press, New York

Embrechts P, Klüppelberg C, Mikosch T (1997) Modelling extremal events for insurance and finance, vol 33. Springer, Berlin

Farnum NR, Booth P (1997) Uniqueness of maximum likelihood estimators of the 2-parameter Weibull distribution. IEEE Trans Reliab 46(4):523–525

Freedman D, Diaconis P (1981) On the histogram as a density estimator: L2 theory. Z Wahrscheinlichkeit 57(4):453–476

Frees EW (2009) Regression modelling with actuarial and financial applications (Wisconsin School of Business). http://instruction.bus.wisc.edu/jfrees/jfreesbooks/Regression

Ji L, Zhang C (2012) Analysis of the multiple roots of the Lundberg fundamental equation in the PH(n) risk model. Appl Stoch Model Bus Ind 28(1):73–90

Kendall MG, Stuart A (1979) The advanced theory of statistics, vol 2. C. Griffin, London

Klugman SA, Panjer HH, Willmot GE (2012) Loss models: from data to decisions, 4th edn. Wiley, New York

Klüppelberg C, Villasenor JA (1993) Estimation of distribution tails: a semiparametric approach. Blätter der DGVFM 21(2):213–235

Lehmann EL, Casella G (1998) Theory of point estimation. Springer, New York

Lilliefors H (1969) On the Kolmogorov-Smirnov test for the exponential distribution with mean unknown. J Am Stat Assoc 64(325):387–389

Littell RC, McClave JT, Offen WW (1979) Goodness-of-fit tests for the 2 parameter Weibull distribution. Commun Stat Part B Simul Comput 8(3):257–269

Miller LH (1956) Table of percentage points of Kolmogorov statistics. J Am Stat Assoc 51(273):111–121

Mosteller F (1946) On some useful inefficient statistics. Ann Math Stat 17(4):377–408

National Geophysical Data Center (2015) The significant earthquake database. http://www.ngdc.noaa.gov/nndc/struts/form?t=101650&s=1&d=1

Parsons FG, Wirsching PH (1982) A Kolmogorov-Smirnov goodness-of-fit test for the 2-parameter Weibull distribution when the parameters are estimated from the data. Microelect Reliab 22(2):163–167

Rinne H (2009) The Weibull distribution: a handbook. CRC Press, Boca Raton

Rodríguez-Martínez EV, Cardoso RMR, Egídio dos Reis AD (2015) Some advances on the Erlang(n) dual risk model. ASTIN Bull 45(1):127–150

Shorack GR, Wellner JA (2009) Empirical processes with applications to statistics. SIAM, Philadelphia

Smith RL (1985) Maximum-likelihood estimation in a class of nonregular cases. Biometrika 72(1):67–90

Stroock L (2011) Stroock special bulletin: reinsurance implications of the Japanese earthquake. http://www.stroock.com/SiteFiles/Pub1091

Thoman DR, Bain LJ, Antle CE (1969) Inferences on the parameters of the Weibull distribution. Technometrics 11(3):445–460

Weibull W (1951) A statistical distribution function of wide applicability. J Appl Mech Trans Asme 18(3):293–297

Wingo DR (1989) The left-truncated Weibull distribution: theory and computation. Statist Papers 30:39–48

Woodruff BW, Moore AH, Dunne EJ, Cortes R (1983) A modified Kolmogorov-Smirnov test for Weibull distributions with unknown location and scale parameters. IEEE Trans Reliab 32(2):209–213

Acknowledgments

The authors are grateful to Ross Frick for useful comments and discussions. The 4th author gratefully acknowledges financial support from FCT - Fundação para a Ciência e a Tecnologia (Project reference PEst-OE/EGE/UI0491/2013).


Appendices

Appendix 1: Proofs

1.1 Proof of Lemma 1

1.

Starting from the Lemma 1 precondition \(X_i>x_L>0\) for all \(i=1,2,...,n\), we see from Eq. (7) with the new variables \(\zeta _i \equiv X_i/x_L >1\) that the first derivative of \(h(\cdot )\) with respect to \(\beta\) is given by

$$\begin{aligned}&h'(\beta ) \nonumber \\&= - \frac{ \left[ \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) \right] ^2 + \beta ^2 \sum _{i=1}^{n} (\zeta _i^{\beta } \log ^2{\zeta _i}) \cdot \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1 ) - \beta ^2 \left( \sum _{i=1}^{n} \zeta _i^{\beta } \log {\zeta _i} \right) ^2 }{ \beta ^2 \left[ \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) \right] ^2 }\,. \end{aligned}$$(12)

To prove that \(h(\cdot )\) is monotonically decreasing, we need to show that \(h'(\beta )<0\) for \(\beta >0\). Since the denominator of Eq. (12) is positive, this amounts to showing that its numerator is positive. We prove this statement by mathematical induction over \(n\):

$$\begin{aligned} A(n): \left[ \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) \right] ^2 + \beta ^2 \sum _{i=1}^{n} (\zeta _i^{\beta } \log ^2{\zeta _i}) \cdot \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1 ) - \beta ^2 \left( \sum _{i=1}^{n} \zeta _i^{\beta } \log {\zeta _i} \right) ^2 > 0 \end{aligned}$$(13)

For the base case \(A(1)\) we have to show that, for any \(\zeta _1\,>\,1\) and \(\beta >0\),

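$$\begin{aligned} A(1): ( \zeta _1^{\beta } - 1)^2 + \beta ^2 ( \zeta _1^{\beta } \log ^2{\zeta _1} ) \cdot ( \zeta _1^{\beta } - 1 ) - \beta ^2 ( \zeta _1^{\beta } \log {\zeta _1} )^2 > 0 \end{aligned}$$(14)

The two terms containing \(\zeta _1^{2\beta }\log ^2{\zeta _1}\) cancel, so Eq. (14) is equivalent to \(( \zeta _1^{\beta } - 1)^2 > \beta ^2 \zeta _1^{\beta } \log ^2{\zeta _1}\). Dividing by \(\zeta _1^{\beta }>0\) leads to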

$$\begin{aligned} e^{\beta \log {\zeta _1}} - 2 + e^{-\beta \log {\zeta _1}} &> (\beta \log {\zeta _1})^2 \nonumber \\ 2\,\left( \cosh \left[ y\right] -1 \right)&> y^2 \nonumber \\ \sum _{k=2}^{\infty } \frac{y^{2k}}{(2k)!}&> 0 \end{aligned}$$(15)

where \(y= \beta \log {\zeta _1}>0\) and the last line follows from the Taylor series \(2\left( \cosh y -1 \right) = y^2 + 2\sum _{k=2}^{\infty } y^{2k}/(2k)!\). This verifies the base case \(A(1)\). Next we carry out the inductive step \(A(n) \rightarrow A(n+1)\). \(A(n+1)\) reads as

$$\begin{aligned}&\left[ \sum _{i=1}^{n+1} ( \zeta _i^{\beta } - 1) \right] ^2 + \beta ^2 \left\{ \sum _{i=1}^{n+1} \zeta _i^{\beta } \log ^2{\zeta _i} \cdot \sum _{i=1}^{n+1} ( \zeta _i^{\beta } - 1 ) - \left( \sum _{i=1}^{n+1} \zeta _i^{\beta } \log {\zeta _i} \right) ^2 \right\} \nonumber \\&= \left[ \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) + ( \zeta _{n+1}^{\beta } - 1) \right] ^2 + \nonumber \\&+ \beta ^2 \left\{ \left[ \sum _{i=1}^{n} \zeta _i^{\beta } \log ^2{\zeta _i} + \zeta _{n+1}^{\beta } \log ^2{\zeta _{n+1}} \right] \cdot \left[ \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1 ) + ( \zeta _{n+1}^{\beta } - 1 ) \right] \right. \nonumber \\& \left. - \left( \sum _{i=1}^{n} \zeta _i^{\beta } \log {\zeta _i} + \zeta _{n+1}^{\beta } \log {\zeta _{n+1} } \right) ^2 \right\} \nonumber \\&= \left[ \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) \right] ^2 + 2 \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) \cdot ( \zeta _{n+1}^{\beta } - 1) + ( \zeta _{n+1}^{\beta } - 1)^2 \nonumber \\&+ \beta ^2 \left\{ \sum _{i=1}^{n} ( \zeta _i^{\beta } \log ^2{\zeta _i} ) \cdot \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) + \sum _{i=1}^{n} ( \zeta _i^{\beta } \log ^2{\zeta _i} ) \cdot ( \zeta _{n+1}^{\beta } -1 ) \right. \nonumber \\& + \left. ( \zeta _{n+1}^{\beta } \log ^2{\zeta _{n+1}} ) \cdot \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) + ( \zeta _{n+1}^{\beta } \log ^2{\zeta _{n+1}} ) \cdot ( \zeta _{n+1}^{\beta } -1 ) \right. \nonumber \\& - \left. \left( \sum _{i=1}^{n} \zeta _i^{\beta } \log {\zeta _i} \right) ^2 - 2 \sum _{i=1}^{n} (\zeta _i^{\beta } \log {\zeta _i} ) \cdot ( \zeta _{n+1}^{\beta } \log {\zeta _{n+1}} ) - ( \zeta _{n+1}^{\beta } \log {\zeta _{n+1}} )^2 \right\} \nonumber \\&> 2 \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) \cdot ( \zeta _{n+1}^{\beta } - 1) \nonumber \\&+ \beta ^2 \left\{ \sum _{i=1}^{n} ( \zeta _i^{\beta } \log ^2{\zeta _i} ) \cdot ( \zeta _{n+1}^{\beta } -1 ) + ( \zeta _{n+1}^{\beta } \log ^2{\zeta _{n+1}} ) \sum _{i=1}^{n} ( \zeta _i^{\beta } - 1) \right. 
\nonumber \\&\quad - \left. 2 \sum _{i=1}^{n} (\zeta _i^{\beta } \log {\zeta _i} ) \cdot ( \zeta _{n+1}^{\beta } \log {\zeta _{n+1}} ) \right\} \ge 0 \end{aligned}$$(16)

where we have used the base case \(A(1)\), Eq. (14), and the induction assumption \(A(n)\), Eq. (13), to drop two groups of positive terms. It therefore remains to show that the expression following the strict inequality in Eq. (16) is non-negative, which we again prove by mathematical induction. In terms of the new variables \(z_i\,\equiv \,\zeta _i^{\beta }>1\), for which \(\beta \log {\zeta _i}=\log {z_i}\), the induction statement \(B(n)\) reads:

$$\begin{aligned} B(n){:}\, &2 \sum _{i=1}^{n} (z_i - 1) \cdot ( z_{n+1} - 1) + \sum _{i=1}^{n} ( z_i \log ^2{z_i} ) \cdot ( z_{n+1} -1 ) \nonumber \\&+ \sum _{i=1}^{n} ( z_i - 1) \cdot ( z_{n+1} \log ^2{z_{n+1}} ) - 2 \sum _{i=1}^{n} (z_i \log {z_i} ) \cdot ( z_{n+1} \log {z_{n+1}} ) \ge 0\,. \end{aligned}$$(17)

The base case \(B(1)\) is given by Proposition 1 below. The inductive step \(B(n) \rightarrow B(n+1)\) requires only simple algebraic manipulations. We write \(B(n+1)\) as:

$$\begin{aligned}&2 \sum _{i=1}^{n+1} (z_i - 1) \cdot ( z_{n+2} - 1) + \sum _{i=1}^{n+1} ( z_i \log ^2{z_i} ) \cdot ( z_{n+2} -1 ) \nonumber \\&\qquad+ \sum _{i=1}^{n+1} ( z_i - 1) \cdot ( z_{n+2} \log ^2{z_{n+2}} ) - 2 \sum _{i=1}^{n+1} (z_i \log {z_i} ) \cdot ( z_{n+2} \log {z_{n+2}} ) \nonumber \\&\quad= \left\{ 2 \sum _{i=1}^{n} (z_i - 1) \cdot ( z_{n+2} - 1) + \sum _{i=1}^{n} ( z_i \log ^2{z_i} ) \cdot ( z_{n+2} -1 ) \right. \nonumber \\& \left. \qquad+ \sum _{i=1}^{n} ( z_i - 1) \cdot ( z_{n+2} \log ^2{z_{n+2}} ) - 2\sum _{i=1}^{n} (z_i \log {z_i} ) \cdot ( z_{n+2} \log {z_{n+2}} ) \right\} \nonumber \\&\qquad+ \Bigg \{ 2 (z_{n+1} - 1) \cdot ( z_{n+2} - 1) + ( z_{n+1} \log ^2{z_{n+1}} ) \cdot ( z_{n+2} -1 ) \nonumber \\& \qquad+ ( z_{n+1} - 1) \cdot ( z_{n+2} \log ^2{z_{n+2}} ) - 2 (z_{n+1} \log {z_{n+1}} ) \cdot ( z_{n+2} \log {z_{n+2}} ) \Bigg \} \nonumber \\&\quad \ge 0 \end{aligned}$$(18)

Here, the expression in the first curly brackets is non-negative by the induction assumption \(B(n)\), Eq. (17), with an arbitrary number \(z_{n+2}>1\) playing the role of \(z_{n+1}\). Likewise, the expression in the second curly brackets is non-negative by the base case \(B(1)\), Eq. (19), with two arbitrary numbers \(z_{n+1},z_{n+2}>1\) playing the roles of \(z_1,z_2\). Hence \(B(n)\) holds for all \(n\); by Eq. (16) so does \(A(n)\), which proves that \(h(\cdot )\) is strictly decreasing.

2.

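Let \(X_{(n)} = \max {\{x_L, X_1,..., X_n \}}\) denote the largest order statistic. Factoring \((X_{(n)}/x_L)^{\beta }\) out of the numerator and the denominator of the middle term of Eq. (7) and letting \(\beta \rightarrow +\infty\), we obtain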

$$\begin{aligned} \lim _{\beta \rightarrow +\infty } h(\beta )&= \lim _{\beta \rightarrow +\infty } \left( \frac{1}{\beta } - \frac{ \left( \frac{X_{(n)}}{x_L}\right) ^{\beta } \sum _{i=1}^{n} \left( \frac{X_i}{X_{(n)}}\right) ^{\beta } \log {\frac{X_i}{x_L}} }{ \left( \frac{X_{(n)}}{x_L}\right) ^{\beta } \sum _{i=1}^{n} \left[ \left( \frac{X_i}{X_{(n)}}\right) ^{\beta } - \left( \frac{x_L}{X_{(n)}}\right) ^{\beta } \right] } + \frac{1}{n} \sum _{i=1}^{n} \log {\frac{X_i}{x_L}} \right) \\&= 0 - \frac{ 1 \cdot \log { \frac{X_{(n)}}{x_L} }}{1-0} + \frac{1}{n} \sum _{i=1}^{n} \log {\frac{X_i}{x_L}} \\&= -\log { \frac{X_{(n)}}{x_L} } + \frac{1}{n} \sum _{i=1}^{n} \log {\frac{X_i}{x_L}} < 0 \end{aligned}$$

The limit is negative because the geometric mean of the numbers \(X_i/x_L\), which are not all equal, is strictly smaller than the largest of them.

3.

Rewrite Eq. (7) as

$$\begin{aligned} h(\beta )&= \frac{ \sum _{i=1}^{n} \left[ \left( \frac{X_i}{x_L}\right) ^{\beta } - 1 \right] - \beta \cdot \sum _{i=1}^{n} \left( \frac{X_i}{x_L}\right) ^{\beta } \log {\frac{X_i}{x_L}} }{ \beta \cdot \sum _{i=1}^{n} \left[ \left( \frac{X_i}{x_L}\right) ^{\beta } - 1 \right] } + \frac{1}{n} \sum _{i=1}^{n} \log {\frac{X_i}{x_L}} \end{aligned}$$

and, since numerator and denominator of the first term both vanish to second order in \(\beta\), apply L'Hospital's rule twice for \(\beta \rightarrow 0+\) to obtain

$$\begin{aligned} \lim _{\beta \rightarrow 0+} h(\beta ) = \frac{2\left( \frac{1}{n}\sum _{i=1}^{n} \log {\frac{X_i}{x_L}} \right) ^{2} - \frac{1}{n} \sum _{i=1}^{n} \log ^{2}{\frac{X_i}{x_L} } }{\frac{2}{n} \sum _{i=1}^{n} \log {\frac{X_i}{x_L} }} \end{aligned}$$

Since the denominator is positive, this limit is positive exactly when inequality Eq. (8) holds, and the proof is finished.

\(\square\)
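Lemma 1 suggests a simple and robust way to compute the MLE: since \(h(\cdot )\) is strictly decreasing, positive as \(\beta \rightarrow 0+\) when Eq. (8) holds, and negative as \(\beta \rightarrow +\infty\), its unique root can be bracketed and found by bisection. The Python sketch below does this; it is our illustration, not the authors' implementation. It assumes that Eq. (5) has the form \(\hat{\alpha }^{\hat{\beta }}=\frac{1}{n}\sum _{i=1}^{n}(X_i^{\hat{\beta }}-x_L^{\hat{\beta }})\), which is what setting the \(\alpha\)-derivative of the left-truncated Weibull log-likelihood to zero gives, and it simulates data via \(X_i=x_L(1+\eta ^{-1}y_i)^{1/\beta }\) with standard exponential \(y_i\). The function names and the true values in the usage part are hypothetical.

```python
import math
import random
from typing import Sequence, Tuple

def h(beta: float, logs: Sequence[float]) -> float:
    """h(beta) of Eq. (7), with logs[i] = log(X_i / x_L) > 0."""
    num = sum(math.exp(beta * l) * l for l in logs)   # sum of zeta_i^beta * log(zeta_i)
    den = sum(math.expm1(beta * l) for l in logs)     # sum of (zeta_i^beta - 1)
    return 1.0 / beta - num / den + sum(logs) / len(logs)

def fit_left_truncated_weibull(x: Sequence[float], x_l: float,
                               rel_tol: float = 1e-10) -> Tuple[float, float]:
    """Return (alpha_hat, beta_hat) for a sample with all x_i > x_l."""
    logs = [math.log(xi / x_l) for xi in x]
    lo, hi = 1e-3, 1.0
    if h(lo, logs) <= 0.0:
        raise ValueError("h(1e-3) <= 0: Eq. (8) is violated or beta_hat < 1e-3")
    while h(hi, logs) > 0.0:          # h < 0 for large beta (Lemma 1, part 2)
        lo, hi = hi, 2.0 * hi
    while hi - lo > rel_tol * hi:      # bisection; valid because h is strictly decreasing
        mid = 0.5 * (lo + hi)
        if h(mid, logs) > 0.0:
            lo = mid
        else:
            hi = mid
    beta = 0.5 * (lo + hi)
    # Assumed form of Eq. (5): alpha^beta = (1/n) * sum(X_i^beta - x_L^beta)
    mean_term = sum(math.expm1(beta * l) for l in logs) / len(logs)
    return x_l * mean_term ** (1.0 / beta), beta

# Usage on simulated data with hypothetical true values alpha0 = 2, beta0 = 1.5, x_L = 1
rng = random.Random(12345)
alpha0, beta0, x_l = 2.0, 1.5, 1.0
eta = (x_l / alpha0) ** beta0
sample = [x_l * (1.0 + rng.expovariate(1.0) / eta) ** (1.0 / beta0) for _ in range(5000)]
alpha_hat, beta_hat = fit_left_truncated_weibull(sample, x_l)
```

Using `math.expm1` for \(\zeta _i^{\beta }-1\) avoids cancellation for small \(\beta\). For very large values of \(\beta \log (X_{(n)}/x_L)\), `math.exp` overflows; one would then factor out \((X_{(n)}/x_L)^{\beta }\) as in part 2 of the proof.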

Proposition 1

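For two real numbers \(z_1,z_2\ge 1\) we have

$$\begin{aligned} 2 (z_1 - 1) \cdot ( z_2 - 1) + ( z_1 \log ^2{z_1} ) \cdot ( z_2 -1 ) + ( z_1 - 1) \cdot ( z_2 \log ^2{z_2} ) - 2 (z_1 \log {z_1} ) \cdot ( z_2 \log {z_2} ) \ge 0\,. \end{aligned}$$(19)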

Proof

We start from the following inequality

By the monotonicity of the Riemann integral we have

which is the desired inequality, Eq. (19). \(\square\)
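The inequalities used in this proof can also be sanity-checked numerically; this is of course no substitute for the proof. The Python sketch below evaluates the left-hand side of Eq. (19), i.e. the base case \(B(1)\) of Eq. (17), on a grid in \([1,50]^2\), and the expression \(A(n)\) of Eq. (13), normalised by \(\left[ \sum _i(\zeta _i^{\beta }-1)\right] ^2\), for randomly drawn \(\zeta _i>1\) and \(\beta >0\). The grid, the sampling ranges, the seed and the function names are arbitrary choices of ours.

```python
import math
import random
from typing import Sequence

def b1(z1: float, z2: float) -> float:
    """Left-hand side of Eq. (19), i.e. B(1) from Eq. (17), for z1, z2 >= 1."""
    l1, l2 = math.log(z1), math.log(z2)
    return (2.0 * (z1 - 1.0) * (z2 - 1.0)
            + z1 * l1 * l1 * (z2 - 1.0)
            + (z1 - 1.0) * z2 * l2 * l2
            - 2.0 * z1 * l1 * z2 * l2)

def a_n_normalised(beta: float, zetas: Sequence[float]) -> float:
    """A(n) of Eq. (13) divided by [sum(zeta_i^beta - 1)]^2; positive by Lemma 1."""
    s0 = sum(math.expm1(beta * math.log(z)) for z in zetas)   # sum of (zeta^beta - 1)
    s1 = sum(z ** beta * math.log(z) for z in zetas)
    s2 = sum(z ** beta * math.log(z) ** 2 for z in zetas)
    return (s0 * s0 + beta * beta * (s2 * s0 - s1 * s1)) / (s0 * s0)

# Eq. (19) on the grid z1, z2 in {1.0, 1.5, ..., 50.0}
grid = [1.0 + 0.5 * k for k in range(99)]
min_b1 = min(b1(z1, z2) for z1 in grid for z2 in grid)

# A(n) for 2000 random cases with 1 <= n <= 30, beta in [0.1, 5], zeta_i = 1 + Exp(1)
rng = random.Random(7)
min_a = float("inf")
for _ in range(2000):
    beta = rng.uniform(0.1, 5.0)
    zetas = [1.0 + rng.expovariate(1.0) for _ in range(rng.randint(1, 30))]
    min_a = min(min_a, a_n_normalised(beta, zetas))
```

Both expressions suffer from rounding when all arguments are close to 1, so the minima are best compared against a small negative tolerance rather than against exactly zero.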

1.2 Proof of Theorem 1: checking the assumptions of [20]

For the proof we apply Theorem 5.1 of Sect. 6.5 (p. 463) in [20]. Thus we only need to check conditions (A0)–(A2) of Sect. 6.3 and assumptions (A)–(D) of Sect. 6.5. As in this reference, we define the general parameter vector \(\varvec{\theta }\equiv (\alpha ,\beta )\) and the true parameter vector of the distribution \(\varvec{\theta }^0 \equiv (\alpha ^0,\beta ^0)\). The individual checks are as follows.

1.3 Conditions

(A0): This condition requires that the distributions of the observations are distinct, i.e. that different parameter vectors \(\varvec{\theta } \ne \varvec{\theta }'\) yield different pdfs. This is readily checked because for \(\varvec{\theta }=(\alpha ,\beta ) \ne \varvec{\theta }'=(\alpha ',\beta ')\) we have \(f(X|\alpha ,\beta ,x_L) \ne f(X|\alpha ',\beta ',x_L)\) almost everywhere in \(X\).

(A1): This condition requires that all distributions have a common support, which holds since \(X\in (x_L,\infty )\) for every \(\varvec{\theta }\).

(A2): This condition requires that the observations \(X_1,...,X_n\) are i.i.d. with probability density \(f(X|\varvec{\theta }^0,x_L)\), which holds by our assumption.

1.4 Assumptions

(A): There exists an open subset \(\omega\) of the parameter space \(\Omega\) containing the true parameter point \(\varvec{\theta }^0\) such that, for almost all \(X\), the pdf \(f(X|\varvec{\theta },x_L)\) admits all third derivatives \((\partial ^3 / \partial \theta _j \partial \theta _k \partial \theta _l )f(X|\varvec{\theta },x_L)\) for all \(\varvec{\theta }\) in \(\omega\). This assumption is readily checked because the pdf of the left-truncated Weibull distribution is infinitely differentiable with respect to its parameters \(0<\theta _j<\infty\), \(j=1,2\), as mentioned in the first section.

(B): This assumption requires that the first and second logarithmic derivatives of \(f\) satisfy

$$\begin{aligned} \mathbb {E}_{\varvec{\theta }} \left[ \frac{\partial }{\partial \theta _j} \log {f(X|\varvec{\theta },x_L)} \right] = 0\, {\rm for}\, j=1,2 \end{aligned}$$

and

$$\begin{aligned} I_{jk}(\varvec{\theta })&= \mathbb {E}_{\varvec{\theta }} \left[ \frac{\partial }{\partial \theta _j} \log {f(X|\varvec{\theta },x_L)} \cdot \frac{\partial }{\partial \theta _k} \log {f(X|\varvec{\theta },x_L)} \right] \\&= - \mathbb {E}_{\theta } \left[ \frac{\partial ^2}{\partial \theta _j \partial \theta _k} \log {f(X|\varvec{\theta },x_L)} \right] {\rm for}\, j,k=1,2 \end{aligned}$$

Here, \(\mathbb {E}_{\theta } \left[ \cdot \right]\) denotes the expectation with respect to the absolutely continuous probability measure \(f(X|\varvec{\theta },x_L)\, dX\). These two conditions are readily verified: the left-truncated Weibull density is in \(C^{\infty }((x_L,\infty )\times (0,\infty ) \times (0,\infty ))\) and its support does not depend on \(\varvec{\theta }\), so integration and differentiation can be interchanged. Since the integral of \(f\) over \((x_L,\infty )\) equals 1 for all \(\varvec{\theta }\) by normalisation, all its derivatives with respect to the parameters vanish; the first derivative yields the first identity, and differentiating once more yields the second.

(C): All \(I_{jk}(\varvec{\theta })\) defined in Assumption (B) are finite, and the \(2\times 2\) matrix \(I(\varvec{\theta })\) is positive definite for all \(\varvec{\theta }\) in \(\omega\). The relevant integrals can be computed explicitly and are finite because, as \(x\rightarrow \infty\), \(f(x|\alpha , \beta , x_L) =O( \exp { [-(x/\alpha )^{\beta '}]})\) for \(\alpha >0\) and any \(\beta ' \in (0,\beta )\). Hence the matrix \(I_{jk}\) is well defined and, being a covariance matrix, positive semi-definite by construction. It is positive definite because no non-trivial linear combination of the two score components vanishes with probability 1; this follows from the affine linear independence, with probability 1, of the functions of \(X\) entering the scores, which can be seen immediately by explicit computation.

(D): The absolute values of all third derivatives \(\left| (\partial ^3 / \partial \theta _j \partial \theta _k \partial \theta _l )\log {f(X|\varvec{\theta },x_L)}\right|\) can be bounded by integrable functions whose expectations \(\mathbb {E}_{\theta } \left[ \cdot \right]\) can be computed and are finite. This is again a consequence of the tail behaviour of the left-truncated Weibull distributions: as \(x\rightarrow \infty\) we have \(f(x|\alpha , \beta , x_L) =O( \exp { [-(x/\alpha )^{\beta '}]})\) for \(\alpha >0\) and any \(\beta ' \in (0,\beta )\).

Appendix 2: Truncated Weibull random variates and their representation by exponential variates

Let \(u_i \in (0,1)\) denote a standard uniform random variate. Then, by inverting the cdf in Eq. (2), we obtain a left-truncated Weibull distributed random variable \(X_i\)

where \(y_i\) is a standard exponential random variate and \(\eta \equiv \left( {x_L}/{\alpha } \right) ^{\beta }\).
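For illustration, assume that Eq. (2) is the usual left-truncated Weibull cdf \(F(x)=1-\exp \{-[(x/\alpha )^{\beta }-(x_L/\alpha )^{\beta }]\}\) for \(x>x_L\). Setting \(F(X_i)=u_i\) and writing \(y_i=-\log (1-u_i)\), which is standard exponential, gives \(X_i=\alpha (\eta +y_i)^{1/\beta }=x_L(1+\eta ^{-1}y_i)^{1/\beta }\), consistent with the expression \(\log (1+\eta ^{-1}y_i)\) that appears in the footnote on Eq. (22). A minimal Python sketch of this inverse-transform sampler; the function names, seed and parameter values are hypothetical.

```python
import math
import random
from typing import List

def sample_left_truncated_weibull(n: int, alpha: float, beta: float, x_l: float,
                                  rng: random.Random) -> List[float]:
    """Inverse-transform draws X = x_L * (1 + y / eta) ** (1 / beta) with y ~ Exp(1)."""
    eta = (x_l / alpha) ** beta
    out = []
    for _ in range(n):
        u = rng.random()                 # standard uniform on [0, 1)
        y = -math.log1p(-u)              # y = -log(1 - u) is standard exponential
        out.append(x_l * (1.0 + y / eta) ** (1.0 / beta))
    return out

def truncated_cdf(x: float, alpha: float, beta: float, x_l: float) -> float:
    """Assumed form of Eq. (2): F(x) = 1 - exp(-[(x/alpha)^beta - (x_L/alpha)^beta]), x > x_L."""
    return -math.expm1(-((x / alpha) ** beta - (x_l / alpha) ** beta))

xs = sample_left_truncated_weibull(10_000, alpha=1.0, beta=0.8, x_l=0.5, rng=random.Random(2015))
```

Using \(y_i=-\log u_i\) instead would work equally well, since \(1-u_i\) is also standard uniform.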

Appendix 3: Universality of pivotal functions

As in [32], we wish to demonstrate that, for a given sample size \(n\) and truncation parameter \(\eta >0\), the distributions of the pivotal functions \((\alpha ^0 / \hat{\alpha })^{\hat{\beta }}\) and \(\hat{\beta }/\beta ^0\) do not depend on the choice of \((\alpha ^0,\beta ^0)\), and that they coincide with the distributions of \((1/\hat{\alpha }_{(1,1)})^{\hat{\beta }_{(1,1)}}\) and \(\hat{\beta }_{(1,1)}\), respectively, for the same \(n\) and \(\eta >0\), see Eq. (11). We choose \((\alpha ^0,\beta ^0) = (1,1)\) because it is the simplest case: the corresponding untruncated Weibull distribution is the standard exponential distribution. Universality of the KS distance can be demonstrated by establishing the equality in distribution of the pivotal functions, Eq. (11). For this purpose we show that the MLE equations, Eqs. (5) and (6), can be written in terms of the pivotal functions \(\hat{\beta }/\beta ^0\) and \((\hat{\alpha }/\alpha ^0)^{\hat{\beta }}\), the truncation parameter \(\eta =(x_L/\alpha ^0)^{\beta ^0}\) and \(n\). Here \((\hat{\alpha },\hat{\beta })\) denote the solutions of the MLE equations and \((\alpha ^0,\beta ^0)\) the true parameter values.

Using Eq. (20), the MLE equation for \(\hat{\alpha }\), Eq. (5), can be rewritten as

where the \(y_i\) are standard exponential random variates. Note that Eq. (21) is just the inverse of the first pivotal function \((\alpha ^0 / \hat{\alpha })^{\hat{\beta }}\). If we can demonstrate that \(\hat{\beta }/\beta ^0\) is distributed as \(\hat{\beta }_{(1,1)}\) (for fixed \(n\) and \(\eta\)), then we can conclude that \((\alpha ^0 / \hat{\alpha })^{\hat{\beta }}\) is distributed as \(1/(\hat{\alpha }_{(1,1)})^{\hat{\beta }_{(1,1)}}\), because the right-hand side of Eq. (21) depends only on \(n\), \(\eta\), the sample of standard exponential random variables \(y_1,...,y_n\) and \(\hat{\beta }_{(1,1)}\). This establishes the first line of Eq. (11).

Let us now show that \(\hat{\beta }/\beta ^0\) is distributed as \(\hat{\beta }_{(1,1)}\). Using Eq. (20) and some algebraic manipulations, we can rewrite the MLE equation for \(\hat{\beta }\), Eq. (6), in terms of the new random variable \(\xi \equiv \hat{\beta }/\beta ^0\):

Solving Eq. (22) for the unknown \(\xi\), which is actually the second pivotal function, we see that \(\xi =\xi (y_1,...y_n|\eta ,n)\) is a uniquely defined random variable that depends only on the sample of standard exponential random variates \(y_1,...,y_n\), the sample size \(n\) and the parameter \(\eta\), but not on the original parameters \((\alpha ^0,\beta ^0)\). Next we consider the special case of Weibull distributions with \((\alpha ^0,\beta ^0)=(1,1)\) and conclude that the unique solution of Eq. (22), \(\xi =\hat{\beta }_{(1,1)}=\xi (y_1,...y_n|\eta ,n)\), depends only on the sample size \(n\), the parameter \(\eta\) and the same sample of standard exponential random variables \(y_1,...,y_n\). This proves our claim, i.e. the second line of Eq. (11).
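The universality argument can be illustrated numerically: draw one sample of standard exponential variates \(y_i\), build data sets for two different parameter pairs \((\alpha ^0,\beta ^0)\) that share the same \(\eta\), fit each by maximum likelihood, and compare the two pivotal values. The Python sketch below does this under stated assumptions: it takes \(X_i=x_L(1+\eta ^{-1}y_i)^{1/\beta ^0}\) for Eq. (20) and \(\hat{\alpha }^{\hat{\beta }}=\frac{1}{n}\sum _i(X_i^{\hat{\beta }}-x_L^{\hat{\beta }})\) for Eq. (5), solves \(h(\beta )=0\) of Eq. (7) by bisection (justified by Lemma 1, assuming Eq. (8) holds), and uses hypothetical helper names, seed and parameter values.

```python
import math
import random
from typing import Sequence, Tuple

def profile_root(logs: Sequence[float], rel_tol: float = 1e-13) -> float:
    """Root of g(b) = 1/b - sum(e^{b l} l) / sum(e^{b l} - 1) + mean(l) by bisection.

    With l_i = log(X_i / x_L) this is h(beta) of Eq. (7); Eq. (8) is assumed to hold.
    """
    def g(b: float) -> float:
        num = sum(math.exp(b * l) * l for l in logs)
        den = sum(math.expm1(b * l) for l in logs)
        return 1.0 / b - num / den + sum(logs) / len(logs)
    lo, hi = 1e-3, 1.0
    while g(hi) > 0.0:
        lo, hi = hi, 2.0 * hi
    while hi - lo > rel_tol * hi:
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if g(mid) > 0.0 else (lo, mid)
    return 0.5 * (lo + hi)

def pivots(y: Sequence[float], alpha0: float, beta0: float, x_l: float) -> Tuple[float, float]:
    """Return (beta_hat / beta0, (alpha_hat / alpha0) ** beta_hat) for data generated from y."""
    eta = (x_l / alpha0) ** beta0
    x = [x_l * (1.0 + yi / eta) ** (1.0 / beta0) for yi in y]   # assumed form of Eq. (20)
    logs = [math.log(xi / x_l) for xi in x]
    beta_hat = profile_root(logs)
    # Assumed form of Eq. (5): alpha_hat^beta_hat = (1/n) * sum(X_i^beta_hat - x_L^beta_hat)
    alpha_pow = x_l ** beta_hat * sum(math.expm1(beta_hat * l) for l in logs) / len(logs)
    return beta_hat / beta0, alpha_pow / alpha0 ** beta_hat

rng = random.Random(11)
y = [rng.expovariate(1.0) for _ in range(200)]   # one shared sample of Exp(1) variates
eta = 0.5
p_std = pivots(y, alpha0=1.0, beta0=1.0, x_l=eta)                         # x_L = eta when alpha0 = beta0 = 1
p_other = pivots(y, alpha0=2.0, beta0=3.0, x_l=2.0 * eta ** (1.0 / 3.0))  # same eta = (x_L / 2)^3
```

The two pairs of pivotal values agree up to rounding because dividing all \(\log (X_i/x_L)\) by \(\beta ^0\) multiplies the root of \(h\) by \(\beta ^0\), which is the mechanism behind Eq. (22).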

Appendix 4: Computing the Fisher information matrix

The covariance matrix \(Z(\alpha ,\beta )^{-1}\) in Theorem 1 is derived from the logarithm of the pdf \(f(\cdot )\) in Eq. (3). One readily obtains the second derivatives needed to calculate the Fisher information matrix \(Z(\alpha ,\beta )\), writing as usual \(\eta =(x_L/\alpha )^{\beta }\),

To evaluate all relevant expectations \(\mathbb {E} \left( \cdot \right)\) in the definition of the Fisher information matrix, the following integrals are needed

where we have used the functions

In the limit \(\eta \longrightarrow 0+\) we recover the covariance matrix of the untruncated Weibull distribution as given by [27] (Eq. 11.17)

Note that in our representation of \(Z(\alpha ,\beta )^{-1}\) the factor \(1/n\) is displayed separately. To estimate the errors of the MLEs \(\hat{\alpha }\) and \(\hat{\beta }\), one may evaluate this expression at the estimated parameters instead of the unknown "true" parameters and use it in conjunction with Theorem 1, statements 2 and 3.
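The closed-form expectations above can be cross-checked numerically by integrating the outer product of the score vector instead. The Python sketch below is our own check, not the authors' method. It assumes that the pdf of Eq. (3) is \(f(x)=\frac{\beta }{\alpha }(x/\alpha )^{\beta -1}\exp \{-[(x/\alpha )^{\beta }-\eta ]\}\) for \(x>x_L\), from which the scores \(\partial _\alpha \log f=\frac{\beta }{\alpha }(y-1)\) and \(\partial _\beta \log f=\frac{1}{\beta }[1+\log t-t\log t+\eta \log \eta ]\) follow, with \(t=(x/\alpha )^{\beta }=\eta +y\) and \(y\) standard exponential. The expectation is computed by a midpoint rule in \(u=e^{-y}\). For \(\eta \rightarrow 0+\) the inverse should approach the classical untruncated values \(n\,\mathrm {Var}(\hat{\beta })\approx 6\beta ^2/\pi ^2\), \(n\,\mathrm {Var}(\hat{\alpha })\approx [1+6(1-\gamma )^2/\pi ^2]\,\alpha ^2/\beta ^2\) and \(n\,\mathrm {Cov}(\hat{\alpha },\hat{\beta })\approx 6(1-\gamma )\alpha /\pi ^2\), where \(\gamma\) is the Euler-Mascheroni constant. The function names and the number of nodes are hypothetical choices.

```python
import math
from typing import List

Matrix = List[List[float]]

def fisher_per_obs(alpha: float, beta: float, eta: float, n_nodes: int = 200_000) -> Matrix:
    """Per-observation Fisher information of the left-truncated Weibull in (alpha, beta).

    Uses (X/alpha)^beta = eta + y with y ~ Exp(1) and a midpoint rule in u = exp(-y).
    """
    c = eta * math.log(eta) if eta > 0.0 else 0.0
    i_aa = i_ab = i_bb = 0.0
    for k in range(n_nodes):
        y = -math.log((k + 0.5) / n_nodes)           # quantile of Exp(1) at the midpoint
        t = eta + y                                  # t = (X/alpha)^beta
        lt = math.log(t)
        s_a = (beta / alpha) * (y - 1.0)             # d log f / d alpha
        s_b = (1.0 + lt - t * lt + c) / beta         # d log f / d beta
        i_aa += s_a * s_a
        i_ab += s_a * s_b
        i_bb += s_b * s_b
    return [[i_aa / n_nodes, i_ab / n_nodes], [i_ab / n_nodes, i_bb / n_nodes]]

def inverse_2x2(m: Matrix) -> Matrix:
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]

# Nearly untruncated check (eta = 1e-12); cov approximates n * Cov(alpha_hat, beta_hat)
alpha, beta = 2.0, 1.5
cov = inverse_2x2(fisher_per_obs(alpha, beta, eta=1e-12))
```

For approximate standard errors in the spirit of the remark above, one would evaluate `fisher_per_obs` at the estimated parameters and the corresponding \(\eta\), invert, divide by \(n\) and take square roots of the diagonal.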


About this article

Cite this article

Kreer, M., Kızılersü, A., Thomas, A.W. et al. Goodness-of-fit tests and applications for left-truncated Weibull distributions to non-life insurance. Eur. Actuar. J. 5, 139–163 (2015). https://doi.org/10.1007/s13385-015-0105-8


DOI: https://doi.org/10.1007/s13385-015-0105-8
