Abstract

Motion blur and defocus blur are common causes of image degradation. Blind restoration of such images requires accurate identification of the point spread function of these blurs. This paper considers the identification of joint blur parameters in barcode images using logarithmic power spectrum analysis. First, the Radon transform is used to identify the motion blur angle. Then the motion blur length and defocus blur radius of the joint blurred image are estimated with a generalized regression neural network (GRNN). The input of the GRNN is the sum of the amplitudes of the normalized logarithmic power spectrum along the vertical direction for motion blur and along concentric circles for defocus blur. The scheme is tested on multiple barcode images with varying joint blur parameters. We also analyze the effect of joint blur when one blur has the same, a greater, or a lesser extent than the other. The simulation results show the high precision of the proposed method and reveal that the dominance of one blur over the other has little effect on the parameter estimation approach.

1 Introduction

Barcodes are a widely used system for encoding machine-readable information on commercial products and services [1]. Compared with 1D barcodes, 2D barcodes offer higher density, capacity, and reliability, and have therefore been adopted increasingly in recent years. For example, a reader of a magazine or book can reach the related web page simply by capturing an image of a printed QR code (a 2D barcode) that encodes its URL. Besides URLs, 2D barcodes can also represent visual tags in an augmented real-world environment [2], and personal profiles are also commonly converted to 2D barcodes. Whereas 1D barcodes are traditionally read with laser scanners, 2D barcode symbologies need an imaging device for scanning. Detecting barcodes in images taken by a digital camera is particularly challenging because of degradations such as geometric distortion, noise, and blurring introduced during image acquisition. Image blurring in particular frequently affects the performance of a barcode identification system. Blur may arise from diverse sources such as atmospheric turbulence, a defocused lens, optical aberrations, and spatial and temporal sensor integration. Two common types of blur are motion blur and defocus blur. Motion blur is caused by relative motion between the camera and the object during image capture, while defocus blur is caused by inaccurate focal length adjustment at the time of image acquisition. Blurring degrades sharp image features such as edges, which is especially harmful for barcode images, where the encoded information is easily lost. Image restoration techniques in the literature can be classified as blind deconvolution, where the blur kernel is not known, and non-blind deconvolution, where the blur kernel is known [3]. The first and foremost step in any blind image restoration technique is blur estimation.
Various techniques presented over the years attempt to estimate the point spread function (PSF) of the blur simultaneously with the image [4, 5]. However, a number of efficient methods [6–9] suggest that blind deconvolution is handled better by estimating the PSF separately and then applying non-blind deconvolution as a subsequent step. The work presented in this paper falls in the latter category, where PSF parameters are estimated before image deconvolution.

Bhaskar et al. [10] used line spread function (LSF) information to estimate defocus blur and applied the power spectrum equalization (PSE) filter for image restoration. However, this method works only over a narrow range of frequencies. Shiqian et al. [11] analyzed the LSF to locate blurred edges in the spatial domain and used this information for defocus parameter estimation, but in the presence of noise it is difficult to locate edges exactly. Sang et al. [12] proposed a digital auto-focusing system that applies block-based edge categorization to determine the defocus blur extent. This method fails to restore high-frequency details because it works only for low and medium frequencies. A few methods work in the frequency domain. Vivirito et al. [13] applied an extended discrete cosine transform (DCT) of Bayer patterns to extract edge details and used this information to find the amount of defocus blur. Gokstop [14] computed image depth for defocus blur estimation; however, this method requires two images of the same scene from different angles to estimate depth. Moghadam [15] presented an iterative algorithm that uses the optical transfer function (OTF) to estimate the blur parameter. Although this method is noise independent, it requires manual adjustment of some parameters. Other methods [16–19] use wavelet coefficients as features to train and test a radial basis function (RBF) or cellular neural network for parameter estimation.

Cannon [20] proposed a technique that identifies motion blur parameters from the power spectra of many sub-images obtained by dividing the blurred image into blocks. Fabian and Malah [21] built on Cannon’s method: they first applied spectral subtraction to reduce high-level noise and then transformed the improved spectral magnitude function to the cepstral domain to identify the blur parameters. Chang et al. [22] proposed a method based on the bispectrum of the blurred image, in which the blur parameters are obtained from the central slice of the bispectrum. Rekleitis [23] suggested a method to estimate the optical flow map of a blurred image using only information from the motion blur; he applied steerable filters to estimate the motion blur angle and the 1D cepstrum to find the blur length. Yitzhaky and Kopeika [24] used the autocorrelation function of the derivative image, based on the observation that image characteristics along the direction of motion blur differ from those in other directions. Lokhande et al. [25] estimated motion blur parameters from periodic patterns in the frequency domain, identifying the blur direction with the Hough transform and the blur length by collapsing the 2D spectrum into a 1D spectrum. Aizenberg et al. [26] presented a neural-network-based approach that identifies the blur type, estimates the blur parameters, and performs image restoration. Dash et al. [27] estimated the motion blur parameters using a Gabor filter for the blur direction and a radial basis function neural network for the blur length, with sums of Fourier coefficients as features. Dobes et al. [28] presented a fast method for finding the motion blur length and direction. It computes the power spectrum of the image gradient in the frequency domain, filtered with a band-pass Butterworth filter to suppress noise.
The blur orientation is found using the Radon transform, and the distance between neighbouring stripes in the power spectrum is used to estimate the blur length. Fang et al. [29] proposed another method that uses Hann windowing and histogram equalization as pre-processing steps. Dash et al. [30] modeled blur length detection as a multiclass classification problem and solved it with a support vector machine. Although a large amount of work has been reported, no method is completely accurate, and researchers remain active in this field, seeking robust blur parameter estimation methods that improve restoration performance.

In real environments, acquired images are more commonly degraded by a combination of motion and defocus blur than by either blur alone. Nevertheless, joint blur identification has received little attention in the literature. Wu et al. [31] proposed a method to estimate the defocus blur parameter in joint blur under the assumption that the motion blur PSF is known. In this method a reduced update Kalman filter is applied for blurred image restoration, and the best defocus parameter is selected on the basis of maximum entropy. Chen et al. [32] presented a spread-function-based scheme that considers the fundamental characteristics of linear motion and out-of-focus blur based on geometric optics to restore joint blurred images without application-dependent parameter selection. Zhou et al. [33] analyzed cepstrum information for blur parameter estimation. Liu et al. [34] addressed blur parameter identification using the Radon transform and a back-propagation neural network.

This work deals with the identification of combined blur parameters. Throughout the paper, the term blur refers to the joint blur in which defocus and motion blur coexist. The rest of the paper is structured as follows. Section 2 describes the image degradation model. Section 3 briefly discusses the GRNN model. Section 4 presents the overall methodology. Section 5 presents the simulation results of parameter estimation. Finally, Sect. 6 discusses conclusions and future work.

2 Image degradation model

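The image degradation process in the spatial domain can be modeled by the following convolution process [3]

\[ g(x,y) = f(x,y) * h(x,y) + \eta(x,y) \quad (1) \]

where \( g\left( {x,y} \right) \) is the degraded image, \( f\left( {x,y} \right) \) is the uncorrupted original image, \( h\left( {x,y} \right) \) is the point spread function that caused the degradation, \( \eta \left( {x,y} \right) \) is the additive noise and * is the convolution operator. Since convolution in the spatial domain (x, y) is equivalent to multiplication in the frequency domain (u, v), Eq. (1) can be written as

\[ G(u,v) = F(u,v)\,H(u,v) + N(u,v) \quad (2) \]

where \( G \), \( F \), \( H \) and \( N \) are the Fourier transforms of \( g \), \( f \), \( h \) and \( \eta \) respectively.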

When the scene to be recorded translates relative to the camera at a constant velocity \( v_{\text{relative}} \) at an angle of θ radians with the horizontal axis during the exposure interval \( [0, t_{\text{exposure}}] \), the distortion is one dimensional. Defining the length of motion as \( L = v_{\text{relative}} \times t_{\text{exposure}} \), the point spread function (PSF) for uniform motion blur is described as [23, 24]

\[ h_{m}(x,y) = \begin{cases} \dfrac{1}{L}, & \sqrt{x^{2} + y^{2}} \le \dfrac{L}{2} \ \text{and} \ x\sin\theta = y\cos\theta \\ 0, & \text{otherwise} \end{cases} \quad (3) \]

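The frequency response of a PSF is called the optical transfer function (OTF). The frequency response of \( h_{m} \) is a sinc function given by

\[ H_{m}(u,v) = \frac{\sin\left( \pi L\left( u\cos\theta + v\sin\theta \right) \right)}{\pi L\left( u\cos\theta + v\sin\theta \right)} \quad (4) \]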

Figure 1a and b show an example of motion blur PSF and corresponding OTF with specified parameters.
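As a rough illustration of the uniform linear motion blur PSF, the sketch below rasterises a line segment of length L at angle θ onto a small pixel grid and normalises it to unit sum. The function name `motion_psf`, the nearest-pixel rasterisation and the oversampling factor are assumptions made for this example and are not taken from the paper.

```python
import math

def motion_psf(length, theta_deg):
    """Approximate uniform linear motion blur kernel (rows = y, columns = x)."""
    size = int(math.ceil(length)) | 1          # odd size so a centre pixel exists
    c = size // 2
    theta = math.radians(theta_deg)
    kernel = [[0.0] * size for _ in range(size)]
    samples = 10 * size                        # oversample the line segment
    for i in range(samples):
        # Midpoint sampling of t over (-length/2, +length/2).
        t = ((i + 0.5) / samples - 0.5) * length
        x = int(math.floor(c + t * math.cos(theta) + 0.5))
        y = int(math.floor(c - t * math.sin(theta) + 0.5))  # rows grow downwards
        if 0 <= x < size and 0 <= y < size:
            kernel[y][x] += 1.0
    total = sum(map(sum, kernel))
    return [[v / total for v in row] for row in kernel]

# Horizontal blur of length 5: the middle row holds five equal weights of 1/L.
k = motion_psf(5, 0)
```

For even lengths or oblique angles the rasterised weights are only approximately uniform, so this should be read as a visual aid rather than an exact discretisation of the continuous PSF.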

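In most cases, the out-of-focus blur caused by a system with a circular aperture can be modeled as a uniform disk with radius R given by [10, 11]

\[ h_{d}(x,y) = \begin{cases} \dfrac{1}{\pi R^{2}}, & \sqrt{x^{2} + y^{2}} \le R \\ 0, & \text{otherwise} \end{cases} \quad (5) \]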

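The frequency response of Eq. (5) is given by Eq. (6), which is based on a Bessel function of the first kind [12]

\[ H_{d}(u,v) = \frac{J_{1}\left( 2\pi R\sqrt{u^{2} + v^{2}} \right)}{\pi R\sqrt{u^{2} + v^{2}}} \quad (6) \]

where \( J_{1} \) is the first-order Bessel function of the first kind and R is the radius of the uniform disk. Figure 2a and b show an example of the PSF and corresponding OTF of defocus blur with a specified radius.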
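The uniform-disk model can be sketched in a few lines. The helper name `defocus_psf` and the hard pixel-centre inclusion test are assumptions of this example; practical implementations often anti-alias the disk boundary.

```python
import math

def defocus_psf(radius):
    """Uniform disk kernel of the given radius (pixels), normalised to unit sum."""
    r = int(math.ceil(radius))
    size = 2 * r + 1
    kernel = [[1.0 if (x - r) ** 2 + (y - r) ** 2 <= radius ** 2 else 0.0
               for x in range(size)]
              for y in range(size)]
    total = sum(map(sum, kernel))              # discrete counterpart of pi * R^2
    return [[v / total for v in row] for row in kernel]

disk = defocus_psf(3)                          # 7 x 7 kernel
```

Dividing by the pixel count rather than by πR² keeps the discrete kernel at unit sum, so the blur preserves mean image brightness.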

In the case where both out-of-focus blur and motion blur are simultaneously present in the same image, the blur model is [31]

\[ g(x,y) = f(x,y) * h_{d}(x,y) * h_{m}(x,y) + \eta(x,y) \quad (7) \]

Since convolution is commutative and associative, the joint blur PSF can be obtained as the convolution of the two blur functions as

\[ h(x,y) = h_{d}(x,y) * h_{m}(x,y) \quad (8) \]

where \( h_{d}(x,y) \) and \( h_{m}(x,y) \) are the point spread functions for defocus and motion blur respectively and * is the convolution operator. Figure 3a and b show an example of the PSF and corresponding OTF of combined blur with specified parameters. This paper treats the blur caused by both defocus and camera motion while ignoring the noise term in the model. Estimating the PSF of the joint blur therefore amounts to estimating three parameters: angle (θ), length (L) and radius (R).
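To make the joint PSF concrete, the following sketch convolves two toy kernels and checks that the order of convolution does not matter. The function name `convolve2d_full` and the tiny hand-written kernels are illustrative assumptions, not values used in the experiments.

```python
def convolve2d_full(a, b):
    """Full 2D discrete convolution of two kernels given as lists of rows."""
    ah, aw = len(a), len(a[0])
    bh, bw = len(b), len(b[0])
    out = [[0.0] * (aw + bw - 1) for _ in range(ah + bh - 1)]
    for i in range(ah):
        for j in range(aw):
            if a[i][j] == 0.0:
                continue                        # skip empty taps
            for k in range(bh):
                for l in range(bw):
                    out[i + k][j + l] += a[i][j] * b[k][l]
    return out

# Toy kernels: horizontal motion blur (L = 3, theta = 0) and a small disk (R = 1).
h_m = [[1 / 3, 1 / 3, 1 / 3]]
h_d = [[0, 1 / 5, 0],
       [1 / 5, 1 / 5, 1 / 5],
       [0, 1 / 5, 0]]

h_joint = convolve2d_full(h_d, h_m)            # joint PSF, 3 rows by 5 columns
h_swapped = convolve2d_full(h_m, h_d)          # same result in the other order
```

Because both input kernels sum to one, the joint kernel also sums to one.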

3 Generalized regression neural network (GRNN) model

A generalized regression neural network (GRNN) is a dynamic neural network architecture that can solve any function approximation problem if adequate data is available [35, 36]. Training of this type of network does not depend on an iterative procedure, unlike back-propagation networks. The main aim of a GRNN is to estimate a linear or nonlinear regression surface on independent variables. The network calculates the most probable value of an output given only the training vectors. It has also been shown that the prediction error approaches zero as the training set becomes large, with only mild restrictions on the function. GRNN has been reported to give better prediction accuracy than the back-propagation network or the radial basis function neural network (RBFNN) [37, 38]. For an input vector F, the output Y of the GRNN is [35]

\[ Y(F) = \frac{\sum\nolimits_{k = 1}^{n} Y_{k} \exp\left( - \dfrac{D_{k}}{2\sigma^{2}} \right)}{\sum\nolimits_{k = 1}^{n} \exp\left( - \dfrac{D_{k}}{2\sigma^{2}} \right)} \quad (9) \]

where n is the number of sample observations, \( Y_{k} \) is the target associated with the training vector \( X_{k} \), σ is the spread parameter and \( D_{k} \) is the squared distance between the input vector F and the training vector \( X_{k} \), defined as

\[ D_{k} = \left( F - X_{k} \right)^{T}\left( F - X_{k} \right) \quad (10) \]

The smoothing factor σ determines the spread of the region covered by each neuron. To fit the data very closely, the value of the spread parameter should be smaller than the average distance between the input vectors. A variety of smoothing factors, and of methods for choosing them, should therefore be tested empirically to find the optimum smoothing factor for a GRNN model [39]. A schematic diagram of the GRNN model for the blur identification problem is shown in Fig. 4. The GRNN consists of an input layer, a hidden layer (pattern layer), a summation layer, and an output layer. The number of neurons in the input layer equals the number of independent features in the dataset. Each unit in the pattern layer represents a training pattern. The summation layer contains two processing units, a summation unit and a single division unit. Each node in the pattern layer is connected to both nodes of the summation layer: the link from node k of the pattern layer to the summation unit carries the weight \( Y_{k} \), and the link to the division unit carries the weight one. The summation unit therefore adds the pattern-layer outputs weighted by the targets \( Y_{k} \), whereas the division unit adds the unweighted pattern-layer outputs. The output unit calculates the quotient of the two outputs of the summation layer to give the estimated value.
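The GRNN output is a kernel-weighted average of the training targets, which can be sketched directly from the layer description above. The function name `grnn_predict` and the toy one-dimensional data are assumptions for illustration; the experiments in this paper use MATLAB's newgrnn instead.

```python
import math

def grnn_predict(x, train_x, train_y, sigma):
    """GRNN estimate for one input vector x, given training pairs and spread sigma."""
    num = 0.0   # summation unit: pattern outputs weighted by the targets Y_k
    den = 0.0   # division unit: unweighted sum of pattern outputs
    for xk, yk in zip(train_x, train_y):
        d2 = sum((a - b) ** 2 for a, b in zip(x, xk))    # squared distance D_k
        w = math.exp(-d2 / (2.0 * sigma ** 2))           # pattern-layer activation
        num += yk * w
        den += w
    return num / den                                      # output unit: quotient

# Toy data: three 1D training vectors with targets 0, 10 and 20.
train_x = [[0.0], [1.0], [2.0]]
train_y = [0.0, 10.0, 20.0]
at_sample = grnn_predict([1.0], train_x, train_y, sigma=0.1)   # close to 10
between = grnn_predict([0.5], train_x, train_y, sigma=0.1)     # close to 5
```

With a small σ the prediction follows the nearest training targets closely; a very large σ pulls every prediction towards the mean of all targets, which is why σ has to be tuned empirically.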

4 Methodology

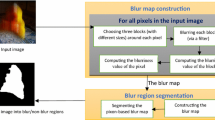

Blurring reduces significant image features such as boundaries, shapes, regions and objects, which complicates image analysis in the spatial domain. Motion blur and defocus blur appear differently in the frequency domain, and blur identification can be carried out readily using these patterns. In the frequency response of a motion blurred image, dominant parallel lines with near-zero values appear orthogonal to the motion orientation [20, 21]. Defocus blur produces circular zero-crossing patterns [10, 11], and when both blurs coexist, the combined effect of both becomes visible. The steps of the algorithm for joint blur parameter identification are detailed in Fig. 5. There are five major steps: image preprocessing, motion blur angle estimation, image rotation in the case of a non-horizontal motion blur angle, motion blur length estimation and defocus blur radius estimation.

4.1 Preprocessing

Blur parameter identification requires a number of preprocessing steps. First, the color image obtained by the digital camera is converted into an 8-bit grayscale image, either by averaging the color channels or by weighting the RGB components according to the luminance perception of the human eye. The periodic transitions from one boundary of the image to the opposite one frequently introduce high frequencies, which show up as visible vertical and horizontal lines in the power spectrum of the image. Because these lines may distract from or even superpose the stripes caused by the blur, they have to be removed by applying a windowing function prior to the frequency transformation. The Hann window gives a good trade-off between forming a smooth transition towards the image borders and retaining enough image information in the power spectrum. A 2D Hann window of size N × M is defined as the product of two 1D Hann windows as [29]

\[ w(n,m) = \frac{1}{4}\left( 1 - \cos\frac{2\pi n}{N - 1} \right)\left( 1 - \cos\frac{2\pi m}{M - 1} \right), \quad 0 \le n \le N - 1,\ 0 \le m \le M - 1 \quad (11) \]
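A separable 2D Hann window can be built as the outer product of two 1D windows, as sketched below. The symmetric form with N − 1 in the denominator and the helper names are assumptions of this example; a periodic variant with N in the denominator is also in common use.

```python
import math

def hann_1d(n_points):
    """Symmetric 1D Hann window that falls to zero at both ends."""
    if n_points == 1:
        return [1.0]
    return [0.5 * (1.0 - math.cos(2.0 * math.pi * n / (n_points - 1)))
            for n in range(n_points)]

def hann_2d(rows, cols):
    """2D Hann window as the outer product of two 1D windows."""
    w_rows, w_cols = hann_1d(rows), hann_1d(cols)
    return [[a * b for b in w_cols] for a in w_rows]

def apply_window(image, window):
    """Multiply an image (list of rows) element-wise by a window of equal size."""
    return [[p * w for p, w in zip(img_row, win_row)]
            for img_row, win_row in zip(image, window)]

window = hann_2d(5, 5)
tapered = apply_window([[1.0] * 5 for _ in range(5)], window)
```

The window equals one at the image centre and tapers to zero at the borders, which suppresses the artificial boundary discontinuities that would otherwise appear as horizontal and vertical lines in the spectrum.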

After windowing, the image is transferred into the frequency domain by a fast Fourier transform. The power spectrum is calculated to facilitate the identification of particular features of the Fourier spectrum. However, because the coefficients of the Fourier spectrum decrease rapidly from its centre to its borders, local differences can be hard to identify. Taking the logarithm of the power spectrum helps to balance this fast drop-off. To obtain a centred version of the spectrum, its quadrants are swapped diagonally. Because the most informative features lie around the centre of the spectrum, a centred portion of size 128 × 128 is cropped for further processing (Fig. 6).
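The spectrum computation can be sketched with a naive discrete Fourier transform, which is adequate for small demonstration images; a real pipeline would use an FFT library. The `1 +` inside the logarithm (to avoid log 0), the peak normalisation and all function names are assumptions of this example.

```python
import cmath
import math

def dft_1d(seq):
    """Naive O(n^2) discrete Fourier transform, fine for small demos."""
    n = len(seq)
    return [sum(seq[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def dft_2d(image):
    rows = [dft_1d(r) for r in image]                 # transform every row
    cols = [dft_1d(list(c)) for c in zip(*rows)]      # then every column
    return [list(r) for r in zip(*cols)]              # back to row-major order

def log_power_spectrum(image):
    """Centred log power spectrum log(1 + |F|^2), scaled so its peak is 1."""
    spec = dft_2d(image)
    h, w = len(spec), len(spec[0])
    lps = [[math.log1p(abs(v) ** 2) for v in row] for row in spec]
    # Swap quadrants diagonally so the zero frequency sits at (h // 2, w // 2).
    shifted = [[lps[(y - h // 2) % h][(x - w // 2) % w] for x in range(w)]
               for y in range(h)]
    peak = max(map(max, shifted)) or 1.0
    return [[v / peak for v in row] for row in shifted]

flat = [[1.0] * 8 for _ in range(8)]
spectrum = log_power_spectrum(flat)    # all energy ends up at the centre
```

For a constant image all energy is at zero frequency, so after the quadrant swap the only significant value sits at the centre of the array.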

Fig. 6 a Original image containing QR code [42], b defocus blurred image with R = 20, c motion blurred image with L = 10 and θ = 0°, d joint blurred image with R = 20, L = 10 and θ = 0°, e–h log power spectra of images (a–d) respectively, i–l log power spectra after Hann windowing of images (a–d) respectively

4.2 Blur angle estimation using enhanced Radon transform

The Radon transform [40] maps a two-dimensional image containing lines into a domain of possible line parameters \( \left( {\theta , \rho } \right) \), where θ is the angle between the x-axis and the perpendicular from the origin to the given line, and ρ is the length of that perpendicular. Each line in the image gives a peak positioned at the corresponding line parameters. The transform computes the projections of an image matrix along specified directions. A projection of a two-dimensional function f(x, y) is a set of line integrals; the Radon function computes the line integrals from multiple sources along parallel paths, or beams, in a certain direction. An arbitrary point in the projection, expressed as the ray-sum along the line \( x\, cos \theta + y\, sin \theta = \rho \), is given by [41]

\[ R(\rho ,\theta ) = \int_{ - \infty }^{\infty } \int_{ - \infty }^{\infty } f(x,y)\,\delta \left( x\cos \theta + y\sin \theta - \rho \right)\,dx\,dy \quad (12) \]

where δ(.) is the delta function. The advantage of the Radon transform over other line fitting algorithms, such as the Hough transform and robust regression, is that the edge pixels of the lines do not need to be specified. The frequency response of a motion blurred image shows dominant parallel lines orthogonal to the motion orientation. To find the direction of these lines, let R be the Radon transform of the spectrum; the position of the high spots along the θ axis of R then gives the motion direction. Figure 7b shows the result of applying the Radon transform to the logarithmic power spectrum (LGPS) of the blurred image shown in Fig. 7a. The peak in the Radon transform corresponds to the motion blur angle. To reduce the computation time and improve the results, we first project the spectrum with a step of 5° and estimate the line orientation. Then, near that orientation, we project the spectrum again with a step of 1° to find the final orientation.
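The coarse-to-fine angle search can be sketched with a simple discrete projection. This is a simplified stand-in under stated assumptions: the scoring rule (largest value of each projection), the linear interpolation between ρ bins, and the names `projection` and `estimate_angle` are choices made for this example, and the input is a synthetic line image rather than a real log power spectrum. Image rows are taken as the y axis pointing downwards.

```python
import math

def projection(image, theta_deg):
    """Sum pixel values along the lines x*cos(theta) + y*sin(theta) = rho."""
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = math.radians(theta_deg)
    ct, st = math.cos(t), math.sin(t)
    offset = int(math.ceil(math.hypot(cx, cy))) + 1   # shifts rho to be positive
    bins = [0.0] * (2 * offset + 2)
    for row in range(h):
        for col in range(w):
            v = image[row][col]
            if v == 0.0:
                continue
            rho = (col - cx) * ct + (row - cy) * st + offset
            lo = int(math.floor(rho))
            frac = rho - lo
            bins[lo] += v * (1.0 - frac)      # split the value linearly between
            bins[lo + 1] += v * frac          # the two nearest rho bins
    return bins

def estimate_angle(image, coarse_step=5, fine_step=1):
    """Coarse search over [0, 180), then a fine search around the best angle."""
    def score(theta):
        return max(projection(image, theta))
    coarse = max(range(0, 180, coarse_step), key=score)
    fine = [(coarse + d) % 180
            for d in range(-coarse_step + 1, coarse_step, fine_step)]
    return max(fine, key=score)

# Synthetic 21 x 21 test images, each containing a single bright line.
vertical = [[1.0 if col == 18 else 0.0 for col in range(21)] for _ in range(21)]
horizontal = [[1.0 if row == 15 else 0.0 for _ in range(21)] for row in range(21)]
angle_v = estimate_angle(vertical)      # normal of a vertical line: 0 degrees
angle_h = estimate_angle(horizontal)    # normal of a horizontal line: 90 degrees
```

The two-stage search evaluates 36 coarse angles plus 9 fine ones instead of 180 projections. On a real spectrum the plain maximum would likely need a mean subtraction or a circular mask first, because diagonal rays cross more pixels of a square image than axis-aligned ones.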

Fig. 7 a Fourier spectrum of Fig. 1a blurred with motion length 10 pixels and motion orientation 45°, b Radon transform of (a)

4.3 Blur length estimation

Motion blur length estimation exploits the blur patterns that motion blur produces in the spectrum of the joint blurred image. The equally spaced parallel dark stripes in the LGPS contain the motion blur length information: the distance between two dark stripes decreases as the motion blur length (L) increases. One could therefore estimate the motion blur length by measuring the distance between two dark stripes, but accurate estimation of this spacing is difficult. We address this problem by summing the frequency amplitudes along a certain direction and then using the GRNN to learn the relationship between the summed amplitudes and the motion blur length. For example, consider an image degraded by uniform horizontal motion blur (i.e. an angle of 0°) with length L and defocus blur with radius R. Due to the motion blur, vertical parallel dark stripes appear in the spectrum, so we add the amplitudes vertically and use the resulting vector as the feature vector for the GRNN. For other motion blur orientations, the spectrum is first rotated by the angle estimated with the enhanced Radon transform, and the amplitudes are then summed in the vertical direction.
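The vertical summation that produces the motion blur feature vector can be sketched as follows. The function name and the per-vector min-max scaling are assumptions of this example; the paper normalises the whole training and testing feature set to [0, 1] rather than each vector separately.

```python
def column_sum_feature(spectrum):
    """Sum a 2D spectrum down each column and scale the result to [0, 1]."""
    sums = [sum(col) for col in zip(*spectrum)]
    lo, hi = min(sums), max(sums)
    span = (hi - lo) or 1.0                 # guard against a constant spectrum
    return [(s - lo) / span for s in sums]

# Toy spectrum with alternating bright and dark vertical stripes.
stripes = [[1, 0, 1, 0],
           [1, 0, 1, 0]]
feature = column_sum_feature(stripes)       # -> [1.0, 0.0, 1.0, 0.0]
```

Dark vertical stripes show up as minima in this vector, so their spacing, and hence the blur length, is encoded in the feature without having to locate the stripes explicitly.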

4.4 Blur radius estimation

In the spectrum of an image containing joint blur, alternating light and dark concentric circular stripes appear due to the defocus blur. The distance between two dark circular stripes decreases as the defocus blur radius (R) increases. One could therefore estimate the defocus blur parameter by measuring the spacing between adjacent dark circular stripes, but accurate estimation of this spacing is difficult. As in the identification of the uniform linear motion blur length, the identification of the defocus blur radius makes use of the GRNN. The sum of the amplitudes over each concentric circle is taken as the input feature vector and R as the output of the GRNN.
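The concentric-circle summation can be sketched by binning every pixel of a centred spectrum according to its rounded distance from the centre. The one-pixel ring width, the centre convention (h // 2, w // 2) and the function name are assumptions made for this example.

```python
import math

def radial_sum_feature(spectrum, n_rings=None):
    """Sum a centred 2D spectrum over concentric one-pixel-wide rings."""
    h, w = len(spectrum), len(spectrum[0])
    cy, cx = h // 2, w // 2                  # zero frequency after centring
    if n_rings is None:
        n_rings = min(cy, cx) + 1            # largest ring fully inside the array
    sums = [0.0] * n_rings
    for y in range(h):
        for x in range(w):
            r = int(round(math.hypot(x - cx, y - cy)))
            if r < n_rings:
                sums[r] += spectrum[y][x]
    return sums

ones = [[1.0] * 5 for _ in range(5)]
rings = radial_sum_feature(ones)             # -> [1.0, 8.0, 12.0]
```

For an all-ones input each entry is simply the number of pixels in that ring; dividing by these counts would turn the sums into ring averages if a scale-independent feature is preferred.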

5 Simulation results

The performance of the proposed technique has been evaluated using numerous 2D barcode images. The barcode image database used for the simulation is the Brno Institute of Technology QR code image database [42]. Numerous 2D barcode images from the database were synthetically degraded by joint blur with varying parameters. We have also analyzed the effect of joint blur on the parameter identification approach by considering three situations:

a. The blur extents of motion and defocus blur are the same in the joint blur \( (L = R) \).

b. The motion blur extent dominates the defocus blur extent in the joint blur \( (L > R) \).

c. The defocus blur extent dominates the motion blur extent in the joint blur \( (L < R) \).

We selected the GRNN for blur parameter identification owing to its excellent prediction ability. The sum-of-amplitudes feature vectors discussed in Sects. 4.3 and 4.4 are used as inputs to the GRNN. The whole training and testing feature set is normalized into the range [0, 1]. The GRNN was implemented using the function newgrnn available in the MATLAB neural network toolbox. The only parameter to be determined is the spread parameter σ. Because there is no a priori scheme for selecting it, we compared the performance over a variety of values. To evaluate the performance, two statistical measures between the estimated output and the target have been used, the mean absolute error (MAE) and the root mean square error (RMSE), which are widely accepted indicators of the effectiveness of a model. They are computed using Eqs. (13) and (14) respectively.

\[ \text{MAE} = \frac{1}{N}\sum\limits_{i = 1}^{N} \left| T_{i} - Y_{i} \right| \quad (13) \]

\[ \text{RMSE} = \sqrt{\frac{1}{N}\sum\limits_{i = 1}^{N} \left( T_{i} - Y_{i} \right)^{2}} \quad (14) \]

where T is the target vector, Y is the predicted output and N is the number of samples. RMSE and MAE quantify the residual errors and give an overall idea of the deviation between the target and predicted values. In the results, we report the best-case and worst-case blur parameter tolerances. These values are absolute errors (i.e. the difference between the real values and the estimated values of the angle and length). We have also plotted the regression results for each blur length and radius. These plots show the original data points along with the line of best fit through the points. The equation of the line is also given.
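The two error measures are straightforward to compute; the sketch below uses made-up target and prediction values purely for illustration.

```python
import math

def mae(target, predicted):
    """Mean absolute error between two equally long sequences."""
    return sum(abs(t - y) for t, y in zip(target, predicted)) / len(target)

def rmse(target, predicted):
    """Root mean square error between two equally long sequences."""
    return math.sqrt(sum((t - y) ** 2 for t, y in zip(target, predicted)) / len(target))

# Hypothetical blur lengths (pixels): true values and estimates.
true_lengths = [1.0, 2.0, 3.0]
estimates = [1.0, 2.0, 5.0]
err_mae = mae(true_lengths, estimates)      # 2 / 3
err_rmse = rmse(true_lengths, estimates)    # sqrt(4 / 3)
```

RMSE penalises the single large error more heavily than MAE does, which is why the two measures are usually reported together.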

5.1 Blur angle estimation

To carry out the motion blur angle estimation experiment, we applied the enhanced Radon transform method to a barcode image degraded at different orientations, in steps of 5° over the range 0° ≤ θ < 180°, with fixed L and R. We selected L and R as 20 pixels for the first situation of joint blur. For the other two situations, where one blur extent is higher than the other, we considered L = 20, R = 15 and L = 20, R = 25 respectively. Table 1 presents the summary of results. In this table, the column named “angle tolerance” gives the absolute value of the errors (i.e. the difference between the real and estimated values of the angles). The low values of the mean absolute error and root mean square error show the high accuracy of the method. The results in the table also show that the prediction accuracy is slightly better when the motion blur extent exceeds the defocus blur extent than in the other situations of joint blur. Figure 8 plots the absolute errors between the true and estimated blur angles for all three situations of joint blur.

5.2 Blur length estimation

To carry out an extensive experiment, we applied the proposed method to 100 barcode images that were synthetically degraded by keeping the blur orientation fixed at 0° and varying L and R in the range 1–20 (i.e., 1 ≤ L ≤ 20 and 1 ≤ R ≤ 20 pixels) for each of the three considered cases of joint blur separately. In total, 2,000 blurred images were created. Of these degraded images, 1,000 were used to train the GRNN and all were used to test the model. The best spread parameter for fitting was found to be 2. Table 2 and the regression plots in Fig. 9 present the summary of results. The results show the robustness of the proposed method and also reveal that changing the ratio of blur extents has a negligible effect on performance.

5.3 Blur radius estimation

To validate the proposed method, we applied the blur radius estimation scheme to 100 barcode images that were synthetically degraded by keeping the blur orientation fixed at 0° and varying L and R in the range 1–20 (i.e., 1 ≤ L ≤ 20 and 1 ≤ R ≤ 20 pixels) for each of the three considered cases of joint blur separately. In total, 2,000 blurred images were created. Of these degraded images, 1,000 were used to train the GRNN and all were used to test the model. The best spread parameter for fitting was found to be 1. Table 3 and the plots in Fig. 10 present the summary of results. The table and the regression plots indicate that the proposed scheme gives very accurate results. Although the blur radius prediction is slightly better when R < L, the general conclusion is that different ratios of L and R do not affect performance much.

6 Conclusion

In this paper, we have proposed an efficient method that identifies the blur parameters when defocus and motion blur coexist in barcode images. The method exploits the appearance of blur patterns in the frequency spectrum. The enhanced Radon transform is used to estimate the blur orientation. To estimate the blur length and radius, we use a generalized regression neural network with directional and radial sums of spectrum amplitudes as input features. The results show that the proposed scheme for joint blur parameter identification is very accurate. Analysis of the results also shows that different ratios of blur extents do not alter the performance significantly. In future, this work can be extended to the identification of blur parameters in images that are also corrupted by noise.

References

ISO/IEC 18004:2000 (2000) Information technology—automatic identification and data capture techniques-bar code symbology-QR code

Parikh TS, Lazowska ED (2006) Designing an architecture for delivering mobile information services to the rural developing world. In: ACM international conference on world wide web, pp. 123–130

Tiwari S, Shukla VP, Biradar SR, Singh AK (2013) Texture features based blur classification in barcode images. Int J Inf Eng Electron Bus 5:34–41

Kundur D, Hatzinakos D (1996) Blind image deconvolution. IEEE Signal Process Mag 13(3):43–64

Schulz TJ (1993) Multiframe blind deconvolution of astronomical images. JOSA 10(5):1064–1073

Gennery D (1973) Determination of optical transfer function by inspection of frequency domain plot. JOSA 63:1571–1577

Hummel R, Zucker K, Zucker S (1987) Debluring gaussian blur. CVGIP 38:66–80

Lane R, Bates R (1987) Automatic multidimensional deconvolution. JOSA 4(1):180–188

Tekalp A, Kaufman H, Wood J (1986) Identification of image and blur parameters for the restoration of non causal blurs. IEEE Trans Acoust Speech Signal Process 34(4):963–972

Bhaskar R, Hite J, Pitts DE (1994) An iterative frequency-domain technique to reduce image degradation caused by lens defocus and linear motion blur. Int Conf Geosci Remote Sens 4:2522–2524

Shiqian W, Weisi L, Lijun J, Wei X, Lihao C (2005) An objective out-of-focus blur measurement. In: Fifth international conference on information, communications and signal processing, pp. 334–338

Sang KK, Sang RP, Joon KP (1998) Simultaneous out-of-focus blur estimation and restoration for digital auto focusing system. IEEE Trans Consum Electron 44:1071–1075

Vivirito P, Battiato S, Curti S, Cascia ML, Pirrone R (2002) Restoration of out of focus images based on circle of confusion estimate. In: Proceedings of SPIE 47th annual meeting, vol 4790, pp. 408–416

Gokstop M (1994) Computing depth from out-of-focus blurring a local frequency representation. In: Proceeding of international conference of pattern recognition, vol 1, pp. 153–158

Moghadam ME (2008) A robust noise independent method to estimate out of focus blurs. In: IEEE international conference on acoustics, speech and signal processing, pp. 1273–1276

Jiang Y (2005) Defocused image restoration using RBF network and kalman filter. IEEE Int Conf Syst Man Cybernet 3:2507–2511

Su L (2008) Defocused image restoration using RBF network and iterative wiener filter in wavelet domain. CISP’08 congress on image and signal processing, vol 3, pp. 311–315

Jongsu L, Fathi AS, Sangseob S (2010) Defocus blur estimation using a cellular neural network. CNNA 1(4):3–5

Chen H-C, Yen J-C, Chen H-C (2012) Restoration of out of focus images using neural network. In: Information security and intelligence control (ISIC), pp. 226–229

Cannon M (1976) Blind deconvolution of spatially invariant image blurs with phase. IEEE Trans Acoust Speech Signal Process 24:58–63

Fabian R, Malah D (1991) Robust identification of motion and out-of-focus blur parameters from blurred and noisy images. Graph Models Image Process 53(5):403–412

Chang M, Tekalp AM, Erdem TA (1991) Blur identification using the bispectrum. IEEE Trans Signal Process 39(10):2323–2325

Rekleitis IM (1995) Visual motion estimation based on motion blur interpretation. MSc thesis, School of Computer Science, McGill University, Montreal, QC, Canada

Yitzhaky Y, Kopeika NS (1997) Identification of blur parameters from motion blurred images. Graph Models Image Process 59:310–320

Lokhande R, Arya KV, Gupta P (2006) Identification of blur parameters and restoration of motion blurred images. In: Proceedings of ACM symposium on applied computing, pp. 301–305

Aizenberg I, Paliy DV, Zurada JM, Astola JT (2008) Blur identification by multilayer neural network based on multivalued neurons. IEEE Trans Neural Netw 19(5):883–898

Dash R, Sa PK, Majhi B (2009) RBFN based motion blur parameter estimation. In: IEEE international conference on advanced computer control, pp. 327–331

Dobes M, Machala L, Frst M (2010) Blurred image restoration: a fast method of finding the motion length and angle. Digit Signal Process 20(6):1677–1686

Fang X, Wu H, Wu Z, Bin L (2011) An improved method for robust blur estimation. Inf Technol J 10:1709–1716

Dash R, Sa PK, Majhi B (2012) Blur parameter identification using support vector machine. ACEEE Int J Control Syst Instrum 3(2):54–57

Wu Q, Wang X, Guo P (2006) Joint blurred image restoration with partially known information. In: International conference on machine learning and cybernetics, pp. 3853–3858

Chen C-H, Chien T, Yang W-C, Wen C-Y (2008) Restoration of linear motion and out-of-focus blurred images in surveillance systems. In: IEEE international conference on intelligence and security informatics, pp. 239–241

Zhou Q, Yan G, Wang W (2007) Parameter estimation for blur image combining defocus and motion blur using cestrum analysis. J Shanghai Jiao Tong Univ 12(6):700–706

Liu Z, Peng Z (2011) Parameters identification for blur image combining motion and defocus blurs using BP neural network. In: 4th international congress on image and signal processing (CISP), vol 2, pp. 798–802

Specht DF (1991) A general regression neural network. IEEE Trans Neural Netw 2(6):568–576

Chartier S, Boukadoum M, Amiri M (2009) BAM learning of nonlinearly separable tasks by using an asymmetrical output function and reinforcement learning. IEEE Trans Neural Netw 20(8):1281–1292

Tomandl D, Schober A (2001) A modified general regression neural network (MGRNN) with new, efficient training algorithms as a robust ‘black box’-tool for data analysis. Neural Netw 14(8):1023–1034

Li Q, Meng Q, Cai J, Yoshino H, Mochida A (2009) Predicting hourly cooling load in the building: a comparison of support vector machine and different artificial neural networks. Energy Convers Manage 50(1):90–96

Li CF, Bovik AC, Wu X (2011) Blind image quality assessment using a general regression neural network. IEEE Trans Neural Netw 22(5):793–799

Tiwari S, Shukla VP, Biradar SR, Singh AK (2012) Certain investigations on motion blur detection and estimation. In: Proceedings of international conference on signal, image and video processing, IIT Patna, pp. 108–114

Tiwari S, Shukla VP, Biradar SR, Singh AK (2013) Review of motion blur estimation techniques. J Image Graph 1(4):176–184

Szentandrási I, Dubská M, Herout A (2012) Fast detection and recognition of qr codes in high-resolution images. Graph@FIT, Brno Institute of Technology

Acknowledgments

We gratefully acknowledge the Faculty of Engineering and Technology, Mody Institute of Technology & Science University, Laxmangarh, for providing the facilities to carry out this research work.

Conflict of interest

The authors declare that there is no conflict of interests regarding the publication of this article.

Cite this article

Tiwari, S., Shukla, V.P., Biradar, S.R. et al. Blur parameters identification for simultaneous defocus and motion blur. CSIT 2, 11–22 (2014). https://doi.org/10.1007/s40012-014-0039-3
