Abstract

While exam-style questions are a fundamental educational tool serving a variety of purposes, manual construction of questions is a complex process that requires training, experience, and resources. This, in turn, hinders and slows down the use of educational activities (e.g. providing practice questions) and new advances (e.g. adaptive testing) that require a large pool of questions. To reduce the expenses associated with manual construction of questions and to satisfy the need for a continuous supply of new questions, automatic question generation (AQG) techniques were introduced. This review extends a previous review that covers the AQG literature published up to late 2014. It includes 93 papers that were published between 2015 and early 2019 and tackle the automatic generation of questions for educational purposes. The aims of this review are to: provide an overview of the AQG community and its activities, summarise the current trends and advances in AQG, highlight the changes that the area has undergone in recent years, and suggest areas for improvement and future opportunities for AQG. Similar to what was found previously, there is little focus in the current literature on generating questions of controlled difficulty, enriching question forms and structures, automating template construction, improving presentation, and generating feedback. Our findings also suggest the need to further improve experimental reporting, harmonise evaluation metrics, and investigate other evaluation methods that are more feasible.

Introduction

Exam-style questions are a fundamental educational tool serving a variety of purposes. In addition to their role as an assessment instrument, questions have the potential to influence student learning. According to Thalheimer (2003), some of the benefits of using questions are: 1) offering the opportunity to practice retrieving information from memory; 2) providing learners with feedback about their misconceptions; 3) focusing learners’ attention on the important learning material; 4) reinforcing learning by repeating core concepts; and 5) motivating learners to engage in learning activities (e.g. reading and discussing). Despite these benefits, manual question construction is a challenging task that requires training, experience, and resources. Several published analyses of real exam questions (mostly multiple choice questions (MCQs)) (Hansen and Dexter 1997; Tarrant et al. 2006; Hingorjo and Jaleel 2012; Rush et al. 2016) demonstrate their poor quality, which Tarrant et al. (2006) attributed to a lack of training in assessment development. This challenge is augmented further by the need to replace assessment questions consistently to ensure their validity, since their value will decrease or be lost after a few rounds of usage (due to being shared between test takers), as well as the rise of e-learning technologies, such as massive open online courses (MOOCs) and adaptive learning, which require a larger pool of questions.

Automatic question generation (AQG) techniques emerged as a solution to the challenges facing test developers in constructing a large number of good quality questions. AQG is concerned with the construction of algorithms for producing questions from knowledge sources, which can be either structured (e.g. knowledge bases (KBs)) or unstructured (e.g. text). As Alsubait (2015) discussed, research on AQG goes back to the 1970s. Nowadays, AQG is gaining further importance with the rise of MOOCs and other e-learning technologies (Qayyum and Zawacki-Richter 2018; Gaebel et al. 2014; Goldbach and Hamza-Lup 2017).

In what follows, we outline some potential benefits that one might expect from successful automatic generation of questions. AQG can reduce the cost (in terms of both money and effort) of question construction which, in turn, enables educators to spend more time on other important instructional activities. In addition to resource saving, having a large number of good-quality questions enables the enrichment of the teaching process with additional activities such as adaptive testing (Vie et al. 2017), which aims to adapt learning to student knowledge and needs, as well as drill and practice exercises (Lim et al. 2012). Finally, being able to automatically control question characteristics, such as question difficulty and cognitive level, can inform the construction of good quality tests with particular requirements.

Although the focus of this review is education, the applications of question generation (QG) are not limited to education and assessment. Questions are also generated for other purposes, such as validation of knowledge bases, development of conversational agents, and development of question answering or machine reading comprehension systems, where questions are used for training and testing.

This review extends a previous systematic review on AQG (Alsubait 2015), which covers the literature up to the end of 2014. Given the large amount of research that has been published since Alsubait’s review was conducted (93 papers over a four year period compared to 81 papers over the preceding 45-year period), an extension of Alsubait’s review is reasonable at this stage. To capture the recent developments in the field, we review the literature on AQG from 2015 to early 2019. We take Alsubait’s review as a starting point and extend the methodology in a number of ways (e.g. additional review questions and exclusion criteria), as will be described in the sections titled “Review Objective” and “Review Method”. The contribution of this review is in providing researchers interested in the field with the following:

1. a comprehensive summary of the recent AQG approaches;
2. an analysis of the state of the field focusing on differences between the pre- and post-2014 periods;
3. a summary of challenges and future directions; and
4. an extensive reference to the relevant literature.

Summary of Previous Reviews

There have been six published reviews on the AQG literature. The reviews reported by Le et al. (2014), Kaur and Bathla (2015), Alsubait (2015), and Rakangor and Ghodasara (2015) cover the literature that has been published up to late 2014, while those reported by Ch and Saha (2018) and Papasalouros and Chatzigiannakou (2018) cover the literature that has been published up to late 2018. Out of these, the most comprehensive review is Alsubait’s, which includes 81 papers (65 distinct studies) that were identified using a systematic procedure. The other reviews were selective and only cover a small subset of the AQG literature. Of interest, due to it being a systematic review and due to the overlap in timing with our review, is the review developed by Ch and Saha (2018). However, their review is not as rigorous as ours, as theirs only focuses on automatic generation of MCQs using text as input. In addition, essential details about the review procedure, such as the search queries used for each electronic database and the resultant number of papers, are not reported, and several related studies found in other reviews on AQG are not included.

Findings of Alsubait’s Review

In this section, we concentrate on summarising the main results of Alsubait’s systematic review, due to its being the only comprehensive review. We do so by elaborating on interesting trends and speculating about the reasons for those trends, as well as highlighting limitations observed in the AQG literature.

Alsubait characterised AQG studies along the following dimensions: 1) purpose of generating questions, 2) domain, 3) knowledge sources, 4) generation method, 5) question type, 6) response format, and 7) evaluation.

The results of the review and the most prevalent categories within each dimension are summarised in Table 1. As can be seen in Table 1, generating questions for a specific domain is more prevalent than generating domain-unspecific questions. The most investigated domain is language learning (20 studies), followed by mathematics and medicine (four studies each). Note that, for these three domains, there are large standardised tests developed by professional organisations (e.g. Test of English as a Foreign Language (TOEFL), International English Language Testing System (IELTS) and Test of English for International Communication (TOEIC) for language, Scholastic Aptitude Test (SAT) for mathematics and board examinations for medicine). These tests require a continuous supply of new questions. We believe that this is one reason for the interest in generating questions for these domains. We also attribute the interest in the language learning domain to the ease of generating language questions, relative to questions belonging to other domains. Generating language questions is easier than generating other types of questions for two reasons: 1) the ease of adopting text from a variety of publicly available resources (e.g. a large number of general or specialised textual resources can be used for reading comprehension (RC)) and 2) the availability of natural language processing (NLP) tools for shallow understanding of text (e.g. part of speech (POS) tagging) with an acceptable performance, which is often sufficient for generating language questions. To illustrate, in Chen et al. (2006), the distractors accompanying grammar questions are generated by changing the verb form of the key (e.g. “write”, “written”, and “wrote” are distractors while “writing” is the key). Another plausible reason for interest in questions on medicine is the availability of NLP tools (e.g. named entity recognisers and co-reference resolvers) for processing medical text. 
There are also publicly available knowledge bases, such as UMLS (Bodenreider 2004) and SNOMED-CT (Donnelly 2006), that are utilised in different tasks such as text annotation and distractor generation. The other investigated domains are analytical reasoning, geometry, history, logic, programming, relational databases, and science (one study each).
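The verb-form strategy attributed above to Chen et al. (2006) can be illustrated with a minimal Python sketch. The inflection table `VERB_FORMS`, the function `verb_form_distractors`, and the example stem are hypothetical and introduced here only for illustration; they are not taken from the original system, which may well have relied on a morphological analyser rather than a hand-written table.

```python
# Hypothetical, hand-written inflection table (an assumption for illustration;
# a real system would probably use a morphological analyser or a lexicon).
VERB_FORMS = {
    "write": ["write", "wrote", "written", "writing"],
}


def verb_form_distractors(key, lemma, n=3):
    """Return up to n distractors: other inflected forms of the same verb."""
    forms = VERB_FORMS[lemma]
    if key not in forms:
        raise ValueError(f"{key!r} is not a known form of {lemma!r}")
    # Every inflected form other than the key is a candidate distractor.
    candidates = [form for form in forms if form != key]
    return candidates[:n]


# Illustrative stem (not from the source); the key is "writing".
stem = "She is ____ a letter to her friend."
options = verb_form_distractors(key="writing", lemma="write")
print(stem, options)
```

Because the distractors are simply the remaining inflections of the key's lemma, this strategy needs only shallow linguistic resources, which is consistent with the observation that language questions are comparatively easy to generate.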

With regard to knowledge sources, the most commonly used source for question generation is text (Table 1). A similar trend was also found by Rakangor and Ghodasara (2015). Note that 19 text-based approaches, out of the 38 text-based approaches identified by Alsubait (2015), tackle the generation of questions for the language learning domain, both free response (FR) and multiple choice (MC). Out of the remaining 19 studies, only five focus on generating MCQs. To do so, they incorporate additional inputs such as WordNet (Miller et al. 1990), thesaurus, or textual corpora. By and large, the challenge in the case of MCQs is distractor generation. Despite using text for generating language questions, where distractors can be generated using simple strategies such as selecting words having a particular POS or other syntactic properties, text often does not incorporate distractors, so external, structured knowledge sources are needed to find what is true and what is similar. On the other hand, eight ontology-based approaches are centred on generating MCQs and only three focus on FR questions.

Simple factual wh-questions (i.e. where the answers are short facts that are explicitly mentioned in the input) and gap-fill questions (also known as fill-in-the-blank or cloze questions) are the most generated types of questions with the majority of them, 17 and 15 respectively, being generated from text. The prevalence of these questions is expected because they are common in language learning assessment. In addition, these two types require relatively little effort to construct, especially when they are not accompanied by distractors. In gap-fill questions, there are no concerns about the linguistic aspects (e.g. grammaticality) because the stem is constructed by only removing a word or a phrase from a segment of text. The stem of a wh-question is constructed by removing the answer from the sentence, selecting an appropriate wh-word, and rearranging words to form a question. Other types of questions such as mathematical word problems, Jeopardy-style questions, and medical case-based questions (CBQs) require more effort in choosing the stem content and verbalisation. Another related observation we made is that the types of questions generated from ontologies are more varied than the types of questions generated from text.
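To make the gap-fill construction concrete, the following Python sketch builds a stem by removing a chosen word or phrase from a sentence. The function name `make_gap_fill`, the blank marker, and the example sentence are assumptions made for illustration and do not correspond to any particular reviewed system; selecting which word to remove is a separate problem that the sketch does not address.

```python
import re


def make_gap_fill(sentence, answer, blank="_____"):
    """Build a gap-fill stem by blanking the first whole-word match of answer."""
    # \b anchors keep the match from landing inside a longer word.
    pattern = r"\b" + re.escape(answer) + r"\b"
    stem, n_subs = re.subn(pattern, blank, sentence, count=1)
    if n_subs == 0:
        raise ValueError(f"{answer!r} does not occur in the sentence")
    return {"stem": stem, "key": answer}


# Illustrative input sentence (not from the source).
question = make_gap_fill("Paris is the capital of France.", "Paris")
print(question["stem"])
```

Since the remaining text is left untouched, the stem inherits the grammaticality of the source sentence, which is why this question type raises few linguistic concerns.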

Limitations observed by Alsubait (2015) include the limited research on controlling the difficulty of generated questions and on generating informative feedback. Existing difficulty models are either not validated or only applicable to a specific type of question (Alsubait 2015). Regarding feedback (i.e. an explanation for the correctness/incorrectness of the answer), only three studies generate feedback along with the questions. Even then, the feedback is used to motivate students to try again or to provide extra reading material without explaining why the selected answer is correct/incorrect. Ungrammaticality is another notable problem with auto-generated questions, especially in approaches that apply syntactic transformations of sentences (Alsubait 2015). For example, 36.7% and 39.5% of questions generated in the work of Heilman and Smith (2009) were rated by reviewers as ungrammatical and nonsensical, respectively. Another limitation related to approaches to generating questions from ontologies is the use of experimental ontologies for evaluation, neglecting the value of using existing, probably large, ontologies. Various issues can arise if existing ontologies are used, which in turn provide further opportunities to enhance the quality of generated questions and the ontologies used for generation.

Review Objective

The goal of this review is to provide a comprehensive view of the AQG field since 2015. Following and extending the schema presented by Alsubait (2015) (Table 1), we have structured our review around the following four objectives and their related questions. Questions marked with an asterisk “*” are those proposed by Alsubait (2015). Questions under the first three objectives (except question 5 under OBJ3) are used to guide data extraction. The others are analytical questions to be answered based on extracted results.

- OBJ1: Providing an overview of the AQG community and its activities
  1. What is the rate of publication?*
  2. What types of papers are published in the area?
  3. Where is research published?
  4. Who are the active research groups in the field?*
- OBJ2: Summarising current QG approaches
  1. What is the purpose of QG?*
  2. What method is applied?*
  3. What tasks related to question generation are considered?
  4. What type of input is used?*
  5. Is it designed for a specific domain? For which domain?*
  6. What type of questions are generated?* (i.e., question format and answer format)
  7. What is the language of the questions?
  8. Does it generate feedback?*
  9. Is difficulty of questions controlled?*
  10. Does it consider verbalisation (i.e. presentation improvements)?
- OBJ3: Identifying the gold-standard performance in AQG
  1. Are there any available sources or standard datasets for performance comparison?
  2. What types of evaluation are applied to QG approaches?*
  3. What properties of questions are evaluated, and what metrics are used for their measurement?
  4. How does the generation approach perform?
  5. What is the gold-standard performance?
- OBJ4: Tracking the evolution of AQG since Alsubait’s review
  1. Has there been any progress on feedback generation?
  2. Has there been progress on generating questions with controlled difficulty?
  3. Has there been progress on enhancing the naturalness of questions (i.e. verbalisation)?

One of our motivations for pursuing these objectives is to provide members of the AQG community with a reference to facilitate decisions such as what resources to use, whom to compare to, and where to publish. As we mentioned in the Summary of Previous Reviews, Alsubait (2015) highlighted a number of concerns related to the quality of generated questions, difficulty models, and the evaluation of questions. We were motivated to know whether these concerns have been addressed. Furthermore, while reviewing some of the AQG literature, we made some observations about the simplicity of generated questions and about the reporting being insufficient and heterogeneous. We want to know whether these issues are universal across the AQG literature.

Review Method

We followed the systematic review procedure described by Kitchenham and Charters (2007) and Boland et al. (2013).

Inclusion and Exclusion Criteria

We included studies that tackle the generation of questions for educational purposes (e.g. tutoring systems, assessment, and self-assessment) without any restriction on domains or question types. We adopted the exclusion criteria used in Alsubait (2015) (1 to 5) and added additional exclusion criteria (6 to 13). A paper is excluded if:

1. it is not in English
2. it presents work in progress only and does not provide a sufficient description of how the questions are generated
3. it presents a QG approach that is based mainly on a template and questions are generated by substituting template slots with numerals or with a set of randomly predefined values
4. it focuses on question answering rather than question generation
5. it presents an automatic mechanism to deliver assessments, rather than generating assessment questions
6. it presents an automatic mechanism to assemble exams or to adaptively select questions from a question bank
7. it presents an approach for predicting the difficulty of human-authored questions
8. it presents a QG approach for purposes other than those related to education (e.g. training of question answering systems, dialogue systems)
9. it does not include an evaluation of the generated questions
10. it is an extension of a paper published before 2015 and no changes were made to the question generation approach
11. it is a secondary study (i.e. literature review)
12. it is not peer-reviewed (e.g. theses, presentations and technical reports)
13. its full text is not available (through the University of Manchester Library website, Google or Google Scholar).

Search Strategy

Data Sources

Six data sources were used, five of which were electronic databases (ERIC, ACM, IEEE, INSPEC and Science Direct), which were determined by Alsubait (2015) to have good coverage of the AQG literature. We also searched the International Journal of Artificial Intelligence in Education (AIED) and the proceedings of the International Conference on Artificial Intelligence in Education for 2015, 2017, and 2018 due to their AQG publication record.

We obtained additional papers by examining the reference lists of, and the citations to, AQG papers we reviewed (known as “snowballing”). The citations to a paper were identified by searching for the paper using Google Scholar, then clicking on the “cited by” option that appears under the name of the paper. We performed this for every paper on AQG, regardless of whether we had decided to include it, to ensure that we captured all the relevant papers. That is to say, even if a paper was excluded because it met some of the exclusion criteria (1-3 and 8-13), it is still possible that it refers to, or is referred to by, relevant papers.

We used the reviews reported by Ch and Saha (2018) and Papasalouros and Chatzigiannakou (2018) as a “sanity check” to evaluate the comprehensiveness of our search strategy. We exported all the literature published between 2015 and 2018 included in the work of Ch and Saha (2018) and Papasalouros and Chatzigiannakou (2018) and checked whether they were included in our results (both search results and snowballing results).

Search Queries

We used the keywords “question” and “generation” to search for relevant papers. Actual search queries used for each of the databases are provided in the Appendix under “Search Queries”. We decided on these queries after experimenting with different combinations of keywords and operators provided by each database and looking at the ratio between relevant and irrelevant results in the first few pages (sorted by relevance). To ensure that recall was not compromised, we checked whether relevant results returned using different versions of each search query were still captured by the selected version.

Screening

The search results were exported to comma-separated values (CSV) files. Two reviewers then looked independently at the titles and abstracts to decide on inclusion or exclusion. The reviewers skimmed the paper if they were not able to make a decision based on the title and abstract. Note that, at this phase, it was not possible to assess whether all papers had satisfied the exclusion criteria 2, 3, 8, 9, and 10. Because of this, the final decision was made after reading the full text as described next.

To judge whether a paper’s purpose was related to education, we considered the title, abstract, introduction, and conclusion sections. Papers that mentioned many potential purposes for generating questions, but did not state which one was the focus, were excluded. If the paper mentioned only educational applications of QG, we assumed that its purpose was related to education, even without a clear purpose statement. Similarly, if the paper mentioned only one application, we assumed that was its focus.

Concerning evaluation, papers that evaluated the usability of a system that had a QG functionality, without evaluating the quality of generated questions, were excluded. In addition, in cases where we found multiple papers by the same author(s) reporting the same generation approach, even if some did not cover evaluation, all of the papers were included but counted as one study in our analyses.

Lastly, because the final decision on inclusion/exclusion sometimes changed after reading the full paper, agreement between the two reviewers was checked after the full paper had been read and the final decision had been made. However, a check was also made to ensure that the inclusion/exclusion criteria were interpreted in the same way. Cases of disagreement were resolved through discussion.

Data Extraction

Guided by the questions presented in the “Review Objective” section, we designed a specific data extraction form. Two reviewers independently extracted data related to the included studies. As mentioned above, different papers that related to the same study were represented as one entry. Agreement for data extraction was checked and cases of disagreement were discussed to reach a consensus.

Papers that had at least one shared author were grouped together if one of the following criteria was met:

- they reported on different evaluations of the same generation approach;
- they reported on applying the same generation approach to different sources or domains;
- one of the papers introduced an additional feature of the generation approach, such as difficulty prediction or generating distractors, without changing the initial generation procedure.

The extracted data were analysed using code written in R Markdown.

Quality Assessment

Since one of the main objectives of this review is to identify the gold standard performance, we were interested in the quality of the evaluation approaches. To assess this, we used the criteria presented in Table 2 which were selected from existing checklists (Downs and Black 1998; Reisch et al. 1989; Critical Appraisal Skills Programme 2018), with some criteria being adapted to fit specific aspects of research on AQG. The quality assessment was conducted after reading a paper and filling in the data extraction form.

In what follows, we describe the individual criteria (Q1-Q9 presented in Table 2) that we considered when deciding if a study satisfied said criteria. Three responses are used when scoring the criteria: “yes”, “no” and “not specified”. The “not specified” response is used when either there is no information present to support the criteria, or when there is not enough information present to distinguish between a “yes” or “no” response.

Q1-Q4 are concerned with the quality of reporting on participant information, Q5-Q7 are concerned with the quality of reporting on the question samples, and Q8 and Q9 describe the evaluative measures used to assess the outcomes of the studies.

- Q1: When a study reports the exact number of participants (e.g. experts, students, employees, etc.) used in the study, Q1 scores a “yes”. Otherwise, it scores a “no”. For example, the passage “20 students were recruited to participate in an exam…” would result in a “yes”, whereas “a group of students were recruited to participate in an exam…” would result in a “no”.

- Q2: Q2 requires the reporting of demographic characteristics supporting the suitability of the participants for the task. Depending on the category of participant, relevant demographic information is required to score a “yes”. Studies that do not specify relevant information score a “no”. By way of example, in studies relying on expert reviews, those that include information on teaching experience or the proficiency level of reviewers would receive a “yes”, while in studies relying on mock exams, those that include information about grade level or proficiency level of test takers would also receive a “yes”. Studies reporting that the evaluation was conducted by reviewers, instructors, students, or co-workers without providing any additional information about the suitability of the participants for the task would be considered neglectful of Q2 and score a “no”.

- Q3: For a study to score “yes” for Q3, it must provide specific information on how participants were selected/recruited; otherwise, it receives a score of “no”. This includes information on whether the participants were paid for their work or were volunteers. For example, the passage “7th grade biology students were recruited from a local school.” would receive a score of “no” because it is not clear whether or not they were paid for their work. However, a study that reports “Student volunteers were recruited from a local school…” or “Employees from company X were employed for n hours to take part in our study… they were rewarded for their services with Amazon vouchers worth $n” would receive a “yes”.

- Q4: To score “yes” for Q4, two conditions must be met: the study must 1) score “yes” for both Q2 and Q3 and 2) only use participants that are suitable for the task at hand. Studies that fail to meet the first condition score “not specified” while those that fail to meet the second condition score “no”. Regarding the suitability of participants, we consider, as an example, native Chinese speakers suitable for evaluating the correctness and plausibility of options generated for Chinese gap-fill questions. As another example, we consider Amazon Mechanical Turk (AMT) co-workers unsuitable for evaluating the difficulty of domain-specific questions (e.g. mathematical questions).

- Q5: When a study reports the exact number of questions used in the experimentation or evaluation stage, Q5 receives a score of “yes”; otherwise, it receives a score of “no”. To demonstrate, consider the following examples. A study reporting “25 of the 100 generated questions were used in our evaluation…” would receive a score of “yes”. However, if a study made a claim such as “Around half of the generated questions were used…”, it would receive a score of “no”.

- Q6: Q6a requires that the sampling strategy be not only reported (e.g. random, proportionate stratification, disproportionate stratification, etc.) but also justified to receive a “yes”; otherwise, it receives a score of “no”. To demonstrate, a study that only reports “We sampled 20 questions from each template …” would receive a score of “no”, since it provides no justification as to why the stratified sampling procedure was used. However, if it were to add “We sampled 20 questions from each template to ensure template balance in discussions about the quality of generated questions…”, then this would be considered a suitable justification and would warrant a score of “yes”. Similarly, Q6b requires that the sample size be both reported and justified.

- Q7: Our decision regarding Q7 takes into account the following: 1) responses to Q6a (i.e. a study can only score “yes” if the score to Q6a is “yes”; otherwise, the score would be “not specified”) and 2) representativeness of the population. Using random sampling is, in most cases, sufficient to score “yes” for Q7. However, if multiple types of questions are generated (e.g. different templates or different difficulty levels), stratified sampling is more appropriate in cases in which the distribution of questions is skewed.

- Q8: Q8 considers whether the authors provide a description, a definition, or a mathematical formula for the evaluation measures they used, as well as a description of the coding system (if applicable). If so, then the study receives a score of “yes” for Q8; otherwise, it receives a score of “no”.

- Q9: Q9 is concerned with whether questions were evaluated by multiple reviewers and whether measures of the agreement (e.g., Cohen’s kappa or percentage of agreement) were reported. For example, studies reporting information similar to “all questions were double-rated and inter-rater agreement was computed…” receive a score of “yes”, whereas studies reporting information similar to “Each question was rated by one reviewer…” receive a score of “no”.
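The dependent criteria (Q4 and Q7) follow a small decision rule that can be sketched in Python. The function names and the boolean judgement arguments are hypothetical; the judgements themselves (whether participants are suitable, whether a sample is representative) remain manual decisions by the reviewers. The “no” branch for Q7 is our reading of the description rather than something it states explicitly.

```python
YES, NO, NOT_SPECIFIED = "yes", "no", "not specified"


def score_q4(q2, q3, participants_suitable):
    """Q4: suitability of participants, conditional on Q2 and Q3."""
    # Without both demographic (Q2) and recruitment (Q3) information,
    # suitability cannot be judged.
    if q2 != YES or q3 != YES:
        return NOT_SPECIFIED
    return YES if participants_suitable else NO


def score_q7(q6a, sample_representative):
    """Q7: representativeness of the question sample, conditional on Q6a."""
    # A study can only score "yes" when the sampling strategy (Q6a) is
    # reported and justified.
    if q6a != YES:
        return NOT_SPECIFIED
    # Assumption: a reported but unrepresentative sample scores "no".
    return YES if sample_representative else NO


print(score_q4(YES, NO, participants_suitable=True))
print(score_q7(YES, sample_representative=True))
```

Encoding the rule this way makes the dependency explicit: a “not specified” for Q4 or Q7 reflects missing reporting upstream, not a negative judgement about the study.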

To assess inter-rater reliability, the quality assessment was performed independently by two reviewers (the first and second authors), both proficient in the field of AQG, on an exploratory random sample of 27 studies. The percentage of agreement and Cohen’s kappa were used to measure inter-rater reliability for Q1-Q9. The percentage of agreement ranged from 73% to 100%, while Cohen’s kappa was above 0.72 for Q1-Q5, demonstrating “substantial to almost perfect agreement”, and equal to 0.42 for Q9.
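For readers unfamiliar with the two agreement measures, the following Python sketch computes the percentage of agreement and Cohen’s kappa, $\kappa = (p_o - p_e) / (1 - p_e)$, where $p_o$ is the observed agreement and $p_e$ is the agreement expected by chance from each rater's label frequencies. The ratings in the example are invented for illustration and are not the review's data; the analysis reported here was carried out in R, so this is not the authors' code.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Proportion of items on which the two raters gave the same label."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one nominal label per item."""
    n = len(rater_a)
    p_o = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: sum over labels of the product of marginal proportions.
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical label throughout; kappa is undefined,
        # and returning 1.0 here is a convention chosen for this sketch.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Invented ratings for four studies on a single criterion.
reviewer_1 = ["yes", "yes", "no", "no"]
reviewer_2 = ["yes", "no", "no", "no"]
print(percent_agreement(reviewer_1, reviewer_2))  # 0.75
print(cohens_kappa(reviewer_1, reviewer_2))       # 0.5
```

The example shows why both measures are reported: the raters agree on 75% of items, but half of that agreement would be expected by chance given their label frequencies, so kappa is only 0.5.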

Results and Discussion

Search and Screening Results

Searching the databases and AIED resulted in 2,012 papers, of which we checked 974. The difference is due to ACM, which returned 1,265 results; we checked only the first 200 of these (sorted by relevance) because we found that subsequent results became irrelevant. Out of the search results, 122 papers were considered relevant after looking at their titles and abstracts. After removing duplicates, 89 papers remained. This set was further reduced to 36 papers after reading the full text of the papers. Checking related work sections and the reference lists identified 169 further papers (after removing duplicates). After we read their full texts, we found 46 to satisfy our inclusion criteria. Among those 46, 15 were captured by the initial search. Tracking citations using Google Scholar provided 204 papers (after removing duplicates). After reading their full text, 49 were found to satisfy our inclusion criteria. Among those 49, 14 were captured by the initial search. The search results are outlined in Table 3. The final number of included papers was 93 (72 studies after grouping papers as described before). In total, the database search identified 36 papers while the other sources identified 57. Although the number of papers identified through other sources was large, many of them were variants of papers already included in the review.

The most common reasons for excluding papers on AQG were that the purpose of the generation was not related to education or there was no evaluation. Details of papers that were excluded after reading their full text are in the Appendix under “Excluded Studies”.

Data Extraction Results

In this section, we provide our results and outline commonalities and differences with Alsubait’s results (highlighted in the “Findings of Alsubait’s Review” section). The results are presented in the same order as our research questions. The main characteristics of the reviewed literature can be found in the Appendix under “Summary of Included Studies”.

Rate of Publication

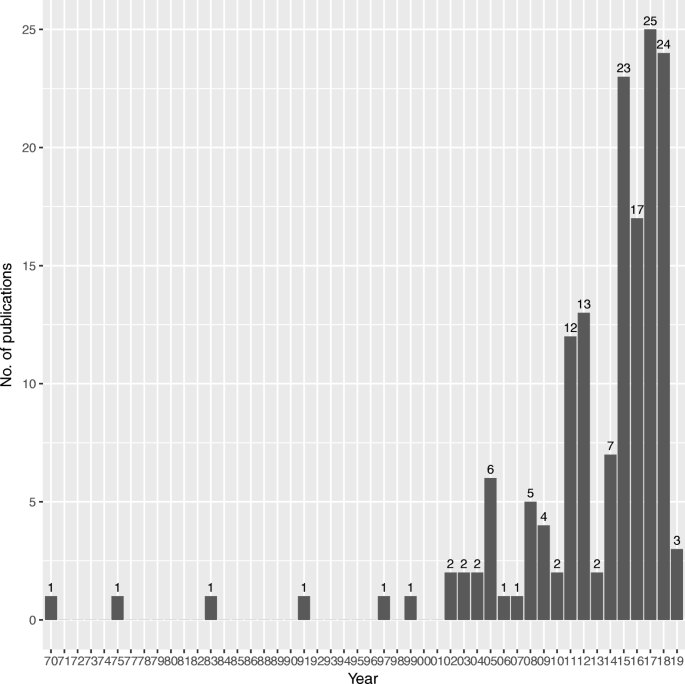

The distribution of publications by year is presented in Fig. 1. Putting this together with the results reported by Alsubait (2015), we notice a strong increase in publication starting from 2011. We also note that there were three workshops on QG (footnote 8) in 2008, 2009, and 2010, respectively, with one being accompanied by a shared task (Rus et al. 2012). We speculate that the increase starting from 2011 is because workshops on QG have drawn researchers’ attention to the field, although the participation rate in the shared task was low (only five groups participated). The increase also coincides with the rise of MOOCs and the launch of major MOOC providers (Udacity, Udemy, Coursera and edX, which all started up in 2012 (Baturay 2015)), which provides another reason for the increasing interest in AQG. This interest was further boosted from 2015. In addition to the above speculations, it is important to mention that QG is closely related to other areas such as NLP and the Semantic Web. The greater maturity of these areas, and the well-performing methods and tools they provide, have had an effect on the quantity and quality of research in QG. Note that these results are only related to question generation studies that focus on educational purposes and that there is a large volume of studies investigating question generation for other applications as mentioned in the “Search and Screening Results” section.

Types of Papers and Publication Venues

Of the papers published in the period covered by this review, conference papers constitute the majority (44 papers), followed by journal articles (32 papers) and workshop papers (17 papers). This is similar to the results of Alsubait (2015) with 34 conference papers, 22 journal papers, 13 workshop papers, and 12 other types of papers, including books or book chapters as well as technical reports and theses. In the Appendix, under “Publication Venues”, we list journals, conferences, and workshops that published at least two of the papers included in either of the reviews.

Research Groups

Overall, 358 researchers are working in the area (168 identified in Alsubait’s review and 205 identified in this review with 15 researchers in common). The majority of researchers have only one publication. In Appendix “Active Research Groups”, we present the 13 active groups defined as having more than two publications in the period of both reviews. Of the 174 papers identified in both reviews, 64 were published by these groups. This shows that, besides the increased activities in the study of AQG, the community is also growing.

Purpose of Question Generation

Similar to the results of Alsubait’s review (Table 1), the main purpose of generating questions is to use them as assessment instruments (Table 4). Questions are also generated for other purposes, such as to be employed in tutoring or self-assisted learning systems. Generated questions are still used in experimental settings and only Zavala and Mendoza (2018) have reported their use in a class setting, in which the generator is used to generate quizzes for several courses and to generate assignments for students.

Generation Methods

Methods of generating questions have been classified in the literature (Yao et al. 2012) as follows: 1) syntax-based, 2) semantic-based, and 3) template-based. Syntax-based approaches operate on the syntax of the input (e.g. syntactic tree of text) to generate questions. Semantic-based approaches operate on a deeper level (e.g. is-a or other semantic relations). Template-based approaches use templates consisting of fixed text and some placeholders that are populated from the input. Alsubait (2015) extended this classification to include two more categories: 4) rule-based and 5) schema-based. The main characteristic of rule-based approaches, as defined by Alsubait (2015), is the use of rule-based knowledge sources to generate questions that assess understanding of the important rules of the domain. As this definition implies that these methods require a deep understanding (beyond syntactic understanding), we believe that this category falls under the semantic-based category. However, we define the rule-based approach differently, as will be seen below. Regarding the fifth category, according to Alsubait (2015), schemas are similar to templates but are more abstract. They provide a grouping of templates that represent variants of the same problem. We regard this distinction between template and schema as unclear. Therefore, we restrict our classification to the template-based category regardless of how abstract the templates are.

In what follows, we extend and re-organise the classification proposed by Yao et al. (2012) and extended by Alsubait (2015). This is due to our belief that there are two relevant dimensions that are not captured by the existing classification of different generation approaches: 1) the level of understanding of the input required by the generation approach and 2) the procedure for transforming the input into questions. We describe our new classification, characterise each category and give examples of features that we have used to place a method within these categories. Note that these categories are not mutually exclusive.

Level of understanding

Syntactic: Syntax-based approaches leverage syntactic features of the input, such as POS or parse-tree dependency relations, to guide question generation. These approaches do not require understanding of the semantics of the input in use (i.e. entities and their meaning). For example, approaches that select distractors based on their POS are classified as syntax-based.

Semantic: Semantic-based approaches require a deeper understanding of the input, beyond lexical and syntactic understanding. The information that these approaches use is not necessarily explicit in the input (i.e. it may require reasoning to be extracted). In most cases, this requires the use of additional knowledge sources (e.g. taxonomies, ontologies, or other such sources). As an example, approaches that use either contextual similarity or feature-based similarity to select distractors are classified as being semantic-based.

Procedure of transformation

Template: Questions are generated with the use of templates. Templates define the surface structure of the questions using fixed text and placeholders that are substituted with values to generate questions. Templates also specify the features (syntactic, semantic, or both) of the entities that can replace the placeholders.

Rule: Questions are generated with the use of rules. Rules often accompany approaches using text as input. Typically, approaches utilising rules annotate sentences with syntactic and/or semantic information. They then use these annotations to match the input to a pattern specified in the rules. These rules specify how to select a suitable question type (e.g. selecting suitable wh-words) and how to manipulate the input to construct questions (e.g. converting sentences into questions).

Statistical methods: This is where question transformation is learned from training data. For example, in Gao et al. (2018), question generation has been dealt with as a sequence-to-sequence prediction problem in which, given a segment of text (usually a sentence), the question generator forms a sequence of text representing a question (using the probabilities of co-occurrence that are learned from the training data). Training data has also been used in Kumar et al. (2015b) for predicting which word(s) in the input sentence is/are to be replaced by a gap (in gap-fill questions).
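The template procedure described above can be illustrated with a minimal sketch. It is not taken from any of the reviewed systems: the `Template` class, the `ENTITY_TYPES` table, and the example template are hypothetical, and a real generator would draw entity types from a knowledge source rather than a hand-written dictionary.

```python
from dataclasses import dataclass


@dataclass
class Template:
    text: str         # fixed text with named placeholders
    slot_types: dict  # placeholder name -> semantic type required of its value


# Hypothetical toy knowledge: entity -> semantic type.
ENTITY_TYPES = {"Paris": "city", "France": "country", "Seine": "river"}


def fill(template, values):
    """Return a question if every value has the type its slot requires, else None."""
    for slot, required in template.slot_types.items():
        if ENTITY_TYPES.get(values.get(slot)) != required:
            return None
    return template.text.format(**values)


capital_q = Template(
    text="Which city is the capital of {country}?",
    slot_types={"country": "country"},
)

print(fill(capital_q, {"country": "France"}))  # Which city is the capital of France?
print(fill(capital_q, {"country": "Seine"}))   # None (a river cannot fill the slot)
```

The type check stands in for the "features of the entities" that a template specifies; the fixed text is what makes template output differ from the surface structure of the input.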

Regarding the level of understanding, 60 papers rely on semantic information and only ten rely solely on syntactic information. All except three of the ten syntactic approaches (Das and Majumder 2017; Kaur and Singh 2017; Kusuma and Alhamri 2018) tackle the generation of language questions. In addition, templates are more popular than rules and statistical methods, with 27 papers reporting the use of templates, compared to 16 and nine for rules and statistical methods, respectively. Each of these three approaches has its advantages and disadvantages. In terms of cost, all three approaches are considered expensive. Templates and rules require manual construction, while learning from data often requires a large amount of annotated data, which is unavailable in many specific domains. Additionally, questions generated by rules and statistical methods are very similar to the input (e.g. sentences used for generation), while templates allow the generation of questions that differ from the surface structure of the input, for example in their choice of words. However, questions generated from templates are limited in terms of their linguistic diversity. Note that some of the papers were classified as not having a method of transforming the input into questions because they only focused on distractor generation or gap-fill questions for which the stem is the same input statement with a word or a phrase being removed. Readers interested in studies that belong to a specific approach are referred to the “Summary of Included Studies” in the Appendix.

Generation Tasks

Tasks involved in question generation are explained below. We grouped the tasks into the stages of preprocessing, question construction, and post-processing. For each task, we provide a brief description, mention its role in the generation process, and summarise different approaches that have been applied in the literature. The “Summary of Included Studies” in the Appendix shows which tasks have been tackled in each study.

Preprocessing

Two types of preprocessing are involved: 1) standard preprocessing and 2) QG-specific preprocessing. Standard preprocessing is common to various NLP tasks and is used to prepare the input for upcoming tasks; it involves segmentation, sentence splitting, tokenisation, POS tagging, and coreference resolution. In some cases, it also involves named entity recognition (NER) and relation extraction (RE). The aim of QG-specific preprocessing is to make or select inputs that are more suitable for generating questions. In the reviewed literature, three types of QG-specific preprocessing are employed:

Sentence simplification: This is employed in some text-based approaches (Liu et al. 2017; Majumder and Saha 2015; Patra and Saha 2018b). Complex sentences, usually sentences with appositions or sentences joined with conjunctions, are converted into simple sentences to ease upcoming tasks. For example, Patra and Saha (2018b) reported that Wikipedia sentences are long and contain multiple objects; simplifying these sentences facilitates triple extraction (the triples are used later for generating questions). This task was carried out by using sentence simplification rules (Liu et al. 2017) and relying on parse-tree dependencies (Majumder and Saha 2015; Patra and Saha 2018b).

Sentence classification: In this task, sentences are classified into categories, which is, according to Mazidi and Tarau (2016a) and Mazidi and Tarau (2016b), key to determining the type of question to be asked about the sentence. This classification was carried out by analysing POS and dependency labels, as in Mazidi and Tarau (2016a) and Mazidi and Tarau (2016b), or by using a machine learning (ML) model and a set of rules, as in Basuki and Kusuma (2018). For example, in Mazidi and Tarau (2016a, 2016b), the pattern “S-V-acomp” is an adjectival complement that describes the subject and is therefore matched to the question template “Indicate properties or characteristics of S?”.

Content selection: As the number of questions in examinations is limited, the goal of this task is to determine important content, such as sentences, parts of sentences, or concepts, about which to generate questions. In the reviewed literature, the majority approach is to generate all possible questions and leave the task of selecting important questions to exam designers. However, in some settings such as self-assessment and self-learning environments, in which questions are generated “on the fly”, leaving the selection to exam designers is not feasible.

Content selection was of interest for those approaches that utilise text more than for those that utilise structured knowledge sources. Several characterisations of important sentences and approaches for their selection have been proposed in the reviewed literature which we summarise in the following paragraphs.

Huang and He (2016) defined three characteristics for selecting sentences that are important for reading assessment and proposed metrics for their measurement: keyness (containing the key meaning of the text), completeness (spreading over different paragraphs to ensure that test-takers grasp the text fully), and independence (covering different aspects of text content). Olney et al. (2017) selected sentences that: 1) are well connected to the discourse (same as completeness) and 2) contain specific discourse relations. Other researchers have focused on selecting topically important sentences. To that end, Kumar et al. (2015b) selected sentences that contain concepts and topics from an educational textbook, while Kumar et al. (2015a) and Majumder and Saha (2015) used topic modelling to identify topics and then rank sentences based on topic distribution. Park et al. (2018) took another approach by projecting the input document and sentences within it into the same n-dimensional vector space and then selecting sentences that are similar to the document, assuming that such sentences best express the topic or the essence of the document. Other approaches selected sentences by checking the occurrence of, or measuring the similarity to, a reference set of patterns under the assumption that these sentences convey similar information to sentences used to extract patterns (Majumder and Saha 2015; Das and Majumder 2017). Others (Shah et al. 2017; Zhang and Takuma 2015) filtered out sentences that are insufficient on their own to make valid questions, such as sentences starting with discourse connectives (e.g. thus, also, so) as in Majumder and Saha (2015).
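Two of the selection ideas above, ranking sentences by their similarity to the whole document and filtering out sentences that open with a discourse connective, can be sketched as follows. This is an illustrative assumption rather than any reviewed implementation: raw term counts stand in for the vector space used by Park et al. (2018), whose representation is not detailed here, and the connective list contains only the examples named in the text.

```python
import math
import re
from collections import Counter

# Only the connectives given as examples in the text; a real list would be longer.
DISCOURSE_CONNECTIVES = {"thus", "also", "so"}


def tokens(text):
    """Lowercase alphabetic tokens of a text."""
    return re.findall(r"[a-z]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def select_sentences(sentences, k=1):
    """Drop sentences that open with a connective, then keep the k most document-like."""
    doc_vec = Counter(t for s in sentences for t in tokens(s))
    candidates = [
        s for s in sentences
        if tokens(s) and tokens(s)[0] not in DISCOURSE_CONNECTIVES
    ]
    ranked = sorted(
        candidates,
        key=lambda s: cosine(Counter(tokens(s)), doc_vec),
        reverse=True,
    )
    return ranked[:k]
```

With learned sentence and document embeddings in place of the counts, the same ranking step would apply unchanged; only `tokens` and the vector construction would differ.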

Still other approaches to content selection are more specific and are informed by the type of question to be generated. For example, the purpose of the study reported in Susanti et al. (2015) is to generate “closest-in-meaning vocabulary questions” (footnote 9), which involve selecting a text snippet from the Internet that contains the target word, while making sure that the word has the same sense in both the input and retrieved sentences. To this end, the retrieved text was scored on the basis of metrics such as the number of query words that appear in the text.

With regard to content selection from structured knowledge bases, only one study focuses on this task. Rocha and Zucker (2018) used DBpedia to generate questions along with external ontologies; the ontologies describe educational standards according to which DBpedia content was selected for use in question generation.

Question Construction

This is the main task and involves different processes based on the type of questions to be generated and their response format. Note that some studies only focus on generating partial questions (only stem or distractors). The processes involved in question construction are as follows:

Stem and correct answer generation: These two processes are often carried out together, using templates, rules, or statistical methods, as mentioned in the “Generation Methods” Section. Subprocesses involved are:

transforming assertive sentences into interrogative ones (when the input is text);

determination of question type (i.e. selecting suitable wh-word or template); and

selection of gap position (relevant to gap-fill questions).

Incorrect options (i.e. distractor) generation: Distractor generation is a very important task in MCQ generation since distractors influence question quality. Several strategies have been used to generate distractors. Among these are selection of distractors based on word frequency (i.e. the number of times distractors appear in a corpus is similar to that of the key) (Jiang and Lee 2017), POS (Soonklang and Muangon 2017; Susanti et al. 2015; Satria and Tokunaga 2017a, 2017b; Jiang and Lee 2017), or co-occurrence with the key (Jiang and Lee 2017). A dominant approach is the selection of distractors based on their similarity to the key, using different notions of similarity, such as syntax-based similarity (i.e. similar POS, similar letters) (Kumar et al. 2015b; Satria and Tokunaga 2017a, 2017b; Jiang and Lee 2017), feature-based similarity (Wita et al. 2018; Majumder and Saha 2015; Patra and Saha 2018a, 2018b; Alsubait et al. 2016; Leo et al. 2019), or contextual similarity (Afzal 2015; Kumar et al. 2015a, 2015b; Yaneva et al. 2018; Shah et al. 2017; Jiang and Lee 2017). Some studies (Lopetegui et al. 2015; Faizan and Lohmann 2018; Faizan et al. 2017; Kwankajornkiet et al. 2016; Susanti et al. 2015) selected distractors that are declared in a KB to be siblings of the key, which also implies some notion of similarity (siblings are assumed to be similar). Another approach that relies on structured knowledge sources is described in Seyler et al. (2017). The authors used query relaxation, whereby queries used to generate question keys are relaxed to provide distractors that share some of the key’s features. Faizan and Lohmann (2018), Faizan et al. (2017), and Stasaski and Hearst (2017) adopted a similar approach for selecting distractors. Others, including Liang et al. (2017, 2018) and Liu et al. (2018), used ML models to rank distractors based on a combination of the previous features.

Again, some distractor selection approaches are tailored to specific types of questions. For example, for pronoun reference questions generated in Satria and Tokunaga (2017a, 2017b), words selected as distractors do not belong to the same coreference chain as the key, as this would make them correct answers. Another example of a question-type-specific approach for distractor selection is related to gap-fill questions. Kumar et al. (2015b) ensured that distractors fit into the question sentence by calculating the probability of their occurring in the question.

Feedback generation: Feedback provides an explanation of the correctness or incorrectness of responses to questions, usually in reaction to user selection. As feedback generation is one of the main interests of this review, we elaborate more fully on this in the “Feedback Generation” section.

Controlling difficulty: This task focuses on determining how easy or difficult a question will be. We elaborate more on this in the section titled “Difficulty”.
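As an illustration of two of the construction processes listed above, the sketch below builds a gap-fill stem by removing the key from a sentence and selects distractors that are siblings of the key in a small taxonomy. The `PARENT` table and the function names are hypothetical, and the sibling strategy is only one of the distractor strategies surveyed; a real system would query a KB and apply further filtering.

```python
import re

# Hypothetical toy taxonomy: concept -> parent. Siblings share a parent.
PARENT = {
    "mitochondrion": "organelle",
    "ribosome": "organelle",
    "nucleus": "organelle",
    "glucose": "sugar",
}


def gap_fill_stem(sentence, key):
    """Replace the first whole-word occurrence of the key with a gap."""
    return re.sub(rf"\b{re.escape(key)}\b", "_____", sentence, count=1)


def sibling_distractors(key, n=3):
    """Return up to n concepts that share the key's parent, excluding the key."""
    parent = PARENT.get(key)
    if parent is None:
        return []
    return sorted(c for c, p in PARENT.items() if p == parent and c != key)[:n]


sentence = "The mitochondrion produces most of the cell's ATP."
print(gap_fill_stem(sentence, "mitochondrion"))  # The _____ produces most of the cell's ATP.
print(sibling_distractors("mitochondrion"))      # ['nucleus', 'ribosome']
```

Because siblings share a parent, they share the key's semantic type, which is the notion of similarity that the sibling-based studies rely on.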

Post-processing

The goal of post-processing is to improve the output questions. This is usually achieved via two processes:

Verbalisation: This task is concerned with producing the final surface structure of the question. There is more on this in the section titled “Verbalisation”.

Question ranking (also referred to as question selection or question filtering): Several generators employed an “over-generate and rank” approach whereby a large number of questions are generated, and then ranked or filtered in a subsequent phase. The ranking goal is to prioritise good quality questions. The ranking is achieved by the use of statistical models as in Blšták (2018), Kwankajornkiet et al. (2016), Liu et al. (2017), and Niraula and Rus (2015).

Input

In this section, we summarise our observations on which input formats are most popular in the literature published after 2014. One question we had in mind is whether structured sources (i.e. whereby knowledge is organised in a way that facilitates automatic retrieval and processing) are gaining more popularity. We were also interested in the association between the input being used and the domain or question types. Specifically, are some inputs more common in specific domains? And are some inputs more suitable for specific types of questions?

As in the findings of Alsubait (Table 1), text is still the most popular type of input with 42 studies using it. Ontologies and resource description framework (RDF) knowledge bases come second, with eight and six studies, respectively, using these. Note that these three input formats are shared between our review and Alsubait’s review. Another input used by more than one study is question stems and keys, which feature in five studies that focus on generating distractors. See the Appendix “Summary of Included Studies” for types of inputs used in each study.

The majority of studies reporting the use of text as the main input are centred around generating questions for language learning (18 studies) or generating simple factual questions (16 studies). Other domains investigated are medicine, history, and sport (one study each). On the other hand, among studies utilising Semantic Web technologies, only one tackles the generation of language questions and nine tackle the generation of domain-unspecific questions. Questions for biology, medicine, biomedicine, and programming have also been generated using Semantic Web technologies. Additional domains investigated in Alsubait’s review are mathematics, science, and databases (for studies using the Semantic Web). Combining both results, we see a greater variety of domains in semantic-based approaches.

Free-response questions are more prevalent among studies using text, with 21 studies focusing on this question type, 18 on multiple-choice, three on both free-response and multiple-choice questions, and one on verbal response questions. Some studies employ additional resources such as WordNet (Kwankajornkiet et al. 2016; Kumar et al. 2015a) or DBpedia (Faizan and Lohmann 2018; Faizan et al. 2017; Tamura et al. 2015) to generate distractors. By contrast, MCQs are more prevalent in studies using Semantic Web technologies, with ten studies focusing on the generation of multiple-choice questions and four studies focusing on free-response questions. This result is similar to those obtained by Alsubait (Table 1) with free-response being more popular for generation from text and multiple-choice more popular from structured sources. We have discussed why this is the case in the “Findings of Alsubait’s Review” Section.

Domain, Question Types and Language

As Alsubait found previously (“Findings of Alsubait’s Review” section), language learning is the most frequently investigated domain. Questions generated for language learning target reading comprehension skills, as well as knowledge of vocabulary and grammar. Research is ongoing concerning the domains of science (biology and physics), history, medicine, mathematics, computer science, and geometry, but only a small number of papers have been published on these domains. In the current review, no study has investigated the generation of logic and analytical reasoning questions, which were present in the studies included in Alsubait’s review. Sport is the only new domain investigated in the reviewed literature. Table 5 shows the number of papers in each domain and the types of questions generated for these domains (for more details, see the Appendix, “Summary of Included Studies”). As Table 5 illustrates, gap-fill and wh-questions are again the most popular. The reader is referred to the section “Findings of Alsubait’s Review” for our discussion of reasons for the popularity of the language domain and the aforementioned question types.

With regard to the response format of questions, both free- and selected-response questions (i.e. MC and T/F questions) are of interest. In all, 35 studies focus on generating selected-response questions, 32 on generating free-response questions, and four studies on both. These numbers are similar to the results reported in Alsubait (2015), which were 33 and 32 papers on generation of free- and selected-response questions respectively (Table 1). However, which format is more suitable for assessment is debatable. Although some studies that advocate the use of free-response argue that these questions can test a higher cognitive level (footnote 10), most automatically generated free-response questions are simple factual questions for which the answers are short facts explicitly mentioned in the input. Thus, we believe that it is useful to generate distractors, leaving to exam designers the choice of whether to use the free-response or the multiple-choice version of the question.

Concerning language, the majority of studies focus on generating questions in English (59 studies). Questions in Chinese (5 studies), Japanese (3 studies), Indonesian (2 studies), as well as Punjabi and Thai (1 study each) have also been generated. To ascertain which languages have been investigated before, we skimmed the papers identified in Alsubait (2015) and found three studies on generating questions in languages other than English: French in Fairon (1999), Tagalog in Montenegro et al. (2012), and Chinese, in addition to English, in Wang et al. (2012). This reflects an increasing interest in generating questions in other languages, which possibly accompanies interest in NLP research in these languages. Note that there may be studies on other languages or more studies on the languages we have identified that we were not able to capture, because we excluded studies written in languages other than English.

Feedback Generation

Feedback generation concerns the provision of information regarding the response to a question. Feedback is important in reinforcing the benefits of questions especially in electronic environments in which interaction between instructors and students is limited. In addition to informing test takers of the correctness of their responses, feedback plays a role in correcting test takers’ errors and misconceptions and in guiding them to the knowledge they must acquire, possibly with reference to additional materials.

This aspect of questions has been neglected in both early and recent AQG literature. Among the literature that we reviewed, only one study, Leo et al. (2019), has generated feedback alongside the generated questions. They generate feedback as a verbalisation of the axioms used to select options. In the case of distractors, axioms used to generate both key and distractors are included in the feedback.

We found another study (Das and Majumder 2017) that has incorporated a procedure for generating hints using syntactic features, such as the number of words in the key, the first two letters of a one-word key, or the second word of a two-word key.
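The three syntactic hint features mentioned above are simple enough to sketch directly. The function below is a hypothetical illustration: the wording of each hint, and the choice to return all applicable hints together, are assumptions and are not taken from Das and Majumder (2017).

```python
def hints_for_key(key):
    """Build hints from surface features of the answer key."""
    words = key.split()
    hints = [f"The answer has {len(words)} word(s)."]
    if len(words) == 1:
        # First two letters of a one-word key.
        hints.append(f"The answer starts with '{words[0][:2]}'.")
    elif len(words) == 2:
        # Second word of a two-word key.
        hints.append(f"The second word of the answer is '{words[1]}'.")
    return hints


print(hints_for_key("photosynthesis"))
# ['The answer has 1 word(s).', "The answer starts with 'ph'."]
print(hints_for_key("New Delhi"))
# ['The answer has 2 word(s).', "The second word of the answer is 'Delhi'."]
```

Hints of this kind need no understanding of the key's meaning, which is why the approach is classed as syntactic.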

Difficulty

Difficulty is a fundamental property of questions that is approximated using different statistical measures, one of which is percentage correct (i.e. the percentage of examinees who answered a question correctly) (footnote 11). Lack of control over difficulty poses issues such as generating questions of inappropriate difficulty (inappropriately easy or difficult questions). Also, searching for a question with a specific difficulty among a huge number of generated questions is likely to be tedious for exam designers.
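Percentage correct, as defined above, can be computed from a list of scored responses. The sketch below is a minimal illustration; the function name and the boolean response encoding are assumptions.

```python
def percentage_correct(responses):
    """Percentage of examinees who answered a question correctly.

    responses: iterable of booleans, True if the examinee answered correctly.
    """
    responses = list(responses)
    if not responses:
        raise ValueError("percentage correct is undefined without responses")
    return 100.0 * sum(responses) / len(responses)


# Three of four examinees answered correctly.
print(percentage_correct([True, True, False, True]))  # 75.0
```

A higher value indicates an easier question, so the measure runs in the opposite direction to difficulty.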

We structure this section around three aspects of difficulty models: 1) their generality, 2) features underlying them, and 3) evaluation of their performance.

Despite the growth in AQG, only 14 studies have dealt with difficulty. Eight of these studies focus on the difficulty of questions belonging to a particular domain, such as mathematical word problems (Wang and Su 2016; Khodeir et al. 2018), geometry questions (Singhal et al. 2016), vocabulary questions (Susanti et al. 2017a), reading comprehension questions (Gao et al. 2018), DFA problems (Shenoy et al. 2016), code-tracing questions (Thomas et al. 2019), and medical case-based questions (Leo et al. 2019; Kurdi et al. 2019). The remaining six focus on controlling the difficulty of non-domain-specific questions (Lin et al. 2015; Alsubait et al. 2016; Kurdi et al. 2017; Faizan and Lohmann 2018; Faizan et al. 2017; Seyler et al. 2017; Vinu and Kumar 2015a, 2017a; Vinu et al. 2016; Vinu and Kumar 2017b, 2015b).

Table 6 shows the different features proposed for controlling question difficulty in the aforementioned studies. In seven studies, RDF knowledge bases or OWL ontologies were used to derive the proposed features. We observe that only a few studies account for the contribution of both stem and options to difficulty.

Difficulty control was validated by checking agreement between predicted difficulty and expert prediction in Vinu and Kumar (2015b), Alsubait et al. (2016), Seyler et al. (2017), Khodeir et al. (2018), and Leo et al. (2019), by checking agreement between predicted difficulty and student performance in Alsubait et al. (2016), Susanti et al. (2017a), Lin et al. (2015), Wang and Su (2016), Leo et al. (2019), and Thomas et al. (2019), by employing automatic solvers in Gao et al. (2018), or by asking experts to complete a survey after using the tool (Singhal et al. 2016). Expert reviews and mock exams are equally represented (seven studies each). We observe that the question samples used were small, with the majority of samples containing fewer than 100 questions (Table 7).

In addition to controlling difficulty, in one study (Kusuma and Alhamri 2018), the authors claim to generate questions targeting a specific Bloom level. However, no evaluation of whether generated questions are indeed at a particular Bloom level was conducted.

Verbalisation

We define verbalisation as any process carried out to improve the surface structure of questions (grammaticality and fluency) or to provide variations of questions (i.e. paraphrasing). The former is important since linguistic issues may affect the quality of generated questions. For example, grammatical inconsistency between the stem and incorrect options enables test takers to select the correct option with no mastery of the required knowledge. On the other hand, grammatical inconsistency between the stem and the correct option can confuse test takers who have the required knowledge and would have been likely to select the key otherwise. Providing different phrasing for the question text is also of importance, playing a role in keeping test takers engaged. It also plays a role in challenging test takers and ensuring that they have mastered the required knowledge, especially in the language learning domain. To illustrate, consider questions for reading comprehension assessment; if the questions match the text with a very slight variation, test takers are likely to be able to answer these questions by matching the surface structure without really grasping the meaning of the text.

From the literature identified in this review, only ten studies apply additional processes for verbalisation. Given that the majority of the literature focuses on gap-fill question generation, this result is expected. Aspects of verbalisation that have been considered are pronoun substitutions (i.e. replacing pronouns by their antecedents) (Huang and He 2016), selection of a suitable auxiliary verb (Mazidi and Nielsen 2015), determiner selection (Zhang and VanLehn 2016), and representation of semantic entities (Vinu and Kumar 2015b; Seyler et al. 2017) (see below for more on this). Other verbalisation processes that are mostly specific to some question types are the following: selection of singular personal pronouns (Faizan and Lohmann 2018; Faizan et al. 2017), which is relevant for Jeopardy questions; selection of adjectives for predicates (Vinu and Kumar 2017a), which is relevant for aggregation questions; and ordering sentences and reference resolution (Huang and He 2016), which is relevant for word problems.

For approaches utilising structured knowledge sources, semantic entities, which are usually represented following some convention such as using camel case (e.g. anExampleOfCamelCase) or using underscore as a word separator, need to be represented in a natural form. Basic processing, which includes word segmentation, adaptation of camel case, underscores, spaces, and punctuation, and conversion of the segmented phrase into a suitable morphological form (e.g. “has pet” to “having pet”), has been reported in Vinu and Kumar (2015b). Seyler et al. (2017) used Wikipedia to verbalise entities, an entity-annotated corpus to verbalise predicates, and WordNet to verbalise semantic types. The surface form of Wikipedia links was used as verbalisation for entities. The annotated corpus was used to collect all sentences that contain mentions of entities in a triple, combined with some heuristic for filtering and scoring sentences. Phrases between the two entities were used as verbalisation of predicates. Finally, as types correspond to WordNet synsets, the authors used a lexicon that comes with WordNet for verbalising semantic types.
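The word segmentation step described above can be illustrated with a minimal Python sketch. It is not the procedure of Vinu and Kumar (2015b); the function name segment_identifier and the exact splitting rules are assumptions made for illustration, and the morphological conversion (e.g. “has pet” to “having pet”) is deliberately left out.

```python
import re


def segment_identifier(name: str) -> str:
    """Split a camel-case or underscore-separated identifier into lowercase words.

    Hypothetical helper for illustration only; real ontologies may need
    extra rules (acronyms, digits, language-specific morphology).
    """
    # Treat underscores and hyphens as word separators.
    spaced = re.sub(r"[_\-]+", " ", name)
    # Insert a space at each lowercase/digit-to-uppercase boundary (camel case).
    spaced = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", " ", spaced)
    # Collapse repeated whitespace and lowercase the result.
    return " ".join(spaced.split()).lower()


print(segment_identifier("anExampleOfCamelCase"))  # an example of camel case
print(segment_identifier("has_pet"))               # has pet
```

A segmented phrase such as “has pet” would still need to be put into a suitable morphological form before it can be inserted into a question stem.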

Only two studies (Huang and He 2016; Ai et al. 2015) have considered paraphrasing. Ai et al. (2015) employed a manually created library that includes different ways to express particular semantic relations for this purpose. For instance, “wife had a kid from husband” is expressed as “from husband, wife had a kid”. The latter is randomly chosen from among the ways to express the marriage relation as defined in the library. The other study that tackles paraphrasing is Huang and He (2016) in which words were replaced with synonyms.

Evaluation

In this section, we report on standard datasets and evaluation practices that are currently used in the field (considering how QG approaches are evaluated and what aspects of questions such evaluation focuses on). We also report on issues hindering comparison of the performance of different approaches and identification of the best-performing methods. Note that our focus is on the results of evaluating the whole generation approach, as indicated by the quality of generated questions, and not on the results of evaluating a specific component of the approach (e.g. sentence selection or classification of question types). We also do not report on evaluations related to the usability of question generators (e.g. evaluating ease of use) or efficiency (i.e. time taken to generate questions). For approaches using ontologies as the main input, we consider whether they use existing ontologies or experimental ones (i.e. created for the purpose of QG), since Alsubait (2015) has concerns related to using experimental ontologies in evaluations (see “Findings of Alsubait’s Review” section). We also reflect on further issues in the design and implementation of evaluation procedures and how they can be improved.

Standard Datasets

In what follows, we outline publicly available question corpora, providing details about their content, as well as how they were developed and used in the context of QG. These corpora are grouped on the basis of the initial purpose for which they were developed. Following this, we discuss the advantages and limitations of using such datasets and call attention to some aspects to consider when developing similar datasets.

The identified corpora are developed for the following three purposes:

Machine reading comprehension

The Stanford Question Answering Dataset (SQuAD, footnote 12; Rajpurkar et al. 2016) consists of 150K questions about Wikipedia articles developed by AMT co-workers. Of those, 100K questions are accompanied by paragraph-answer pairs from the same articles and 50K questions have no answer in the article. This dataset was used by Kumar et al. (2018) and Wang et al. (2018) to perform a comparison among variants of the generation approach they developed and between their approach and an approach from the literature. The comparison was based on the metrics BLEU-4, METEOR, and ROUGE-L which capture the similarity between generated questions and the SQuAD questions that serve as ground truth questions (there is more information on these metrics in the next section). That is, questions were generated using the 100K paragraph-answer pairs as input. Then, the generated questions were compared with the human-authored questions that are based on the same paragraph-answer pairs.

NewsQA (footnote 13) is another crowd-sourced dataset of about 120K question-answer pairs about CNN articles. The dataset consists of wh-questions and is used in the same way as SQuAD.
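To make the similarity-based comparison used with these reading comprehension datasets more concrete, the following is a minimal sketch of a ROUGE-L style score between a generated question and a human-authored reference. It is a simplification under stated assumptions: tokens are obtained by lowercasing and splitting on whitespace, a balanced F-measure is used, and the two example questions are invented for illustration rather than taken from SQuAD or NewsQA. Published results typically rely on established evaluation toolkits rather than a hand-written function like this one.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """Balanced F-measure over the longest common subsequence (simplified ROUGE-L)."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)  # share of generated tokens that are matched
    recall = lcs / len(ref)      # share of reference tokens that are matched
    return 2 * precision * recall / (precision + recall)


# Invented example pair (not from any dataset).
generated = "what is the capital of france"
reference = "which city is the capital of france"
print(round(rouge_l_f1(generated, reference), 3))  # 0.769
```

Here the longest common subsequence is “is the capital of france” (5 tokens), giving a precision of 5/6, a recall of 5/7, and an F-measure of 10/13. A generated question that is valid but phrased differently from the reference would still score low, which is the limitation discussed later in this section.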

Training question-answering (QA) systems

The 30M factoid question-answer corpus (Serban et al. 2016) is a corpus of questions automatically generated from Freebase (footnote 14). Freebase triples (of the form: subject, relationship, object) were used to generate questions where the correct answer is the object of the triple. For example, the question: “What continent is bayuvi dupki in?” is generated from the triple (bayuvi dupki, contained by, europe). The triples and the questions generated from them are provided in the dataset. A sample of the questions was evaluated by 63 AMT co-workers, each of whom evaluated 44-75 examples; each question was evaluated by 3-5 co-workers. The questions were also evaluated by automatic evaluation metrics. Song and Zhao (2016a) performed a qualitative analysis comparing the grammaticality and naturalness of questions generated by their approach and questions from this corpus (although the comparison is not clear).
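The relation between a triple and its question-answer pair can be shown with a small data-structure sketch. The template lookup below is a hypothetical stand-in used only to illustrate the input and output format; it is not the generation method used to build the corpus, and the names Triple, TEMPLATES, and triple_to_qa are assumptions.

```python
from typing import NamedTuple, Optional, Tuple


class Triple(NamedTuple):
    subject: str
    relationship: str
    obj: str  # the object of the triple serves as the correct answer


# Hypothetical hand-written templates keyed by relationship (not from the corpus).
TEMPLATES = {
    "contained by": "What continent is {subject} in?",
}


def triple_to_qa(triple: Triple) -> Optional[Tuple[str, str]]:
    """Return a (question, answer) pair, or None if no template is known."""
    template = TEMPLATES.get(triple.relationship)
    if template is None:
        return None
    return template.format(subject=triple.subject), triple.obj


print(triple_to_qa(Triple("bayuvi dupki", "contained by", "europe")))
# ('What continent is bayuvi dupki in?', 'europe')
```

A single fixed template per relationship is clearly too rigid in practice (the example template only suits objects that are continents), which is one reason the verbalisation issues discussed earlier matter for knowledge-base approaches.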

SciQ (footnote 15; Welbl et al. 2017) is a corpus of 13.7K science MCQs on biology, chemistry, earth science, and physics. The questions target a broad cohort, ranging from elementary to college introductory level. The corpus was created by AMT co-workers at a cost of $10,415 and its development relied on a two-stage procedure. First, 175 co-workers were shown paragraphs and asked to generate questions for a payment of $0.30 per question. Second, another crowd-sourcing task in which co-workers validate the questions developed and provide them with distractors was conducted. A list of six distractors was provided by an ML-model. The co-workers were asked to select two distractors from the list and to provide at least one additional distractor for a payment of $0.20. For evaluation, a third crowd-sourcing task was created. The co-workers were provided with 100 question pairs, each pair consisting of an original science exam question and a crowd-sourced question in a random order. They were instructed to select the question likelier to be the real exam question. The science exam questions were identified in 55% of the cases. This corpus was used by Liang et al. (2018) to develop and test a model for ranking distractors. All keys and distractors in the dataset were fed to the model to rank. The authors assessed whether ranked distractors were among the original distractors provided with the questions.

Question generation

The question generation shared task challenge (QGSTEC) dataset (footnote 16; Rus et al. 2012) was created for the QG shared task. The shared task contains two challenges: question generation from individual sentences and question generation from a paragraph. The dataset contains 90 sentences and 65 paragraphs collected from Wikipedia, OpenLearn (footnote 17), and Yahoo! Answers, with 180 and 390 questions generated from the sentences and paragraphs, respectively. A detailed description of the dataset, along with the results achieved by the participants, is given in Rus et al. (2012). Blšták and Rozinajová (2017, 2018) used this dataset to generate questions and compare their performance on correctness to the performance of the systems participating in the shared task.

The Medical CBQ corpus (Leo et al. 2019) is a corpus of 435 case-based, auto-generated questions that follow four templates (“What is the most likely diagnosis?”, “What is the drug of choice?”, “What is the most likely clinical finding?”, and “What is the differential diagnosis?”). The questions are accompanied by experts’ ratings of appropriateness and difficulty, as well as by actual student performance. The data was used to evaluate an ontology-based approach for generating case-based questions and predicting their difficulty.

MCQL is a corpus of about 7.1K MCQs crawled from the web, with an average of 2.91 distractors per question. The domains of the questions are biology, physics, and chemistry, and they target Cambridge O-level and college-level. The dataset was used in Liang et al. (2018) to develop and evaluate an ML-model for ranking distractors.

Several datasets were used for assessing the ability of question generators to generate similar questions (see Table 8 for an overview). Note that the majority of these datasets were developed for purposes other than education and, as such, the educational value of the questions has not been validated. Therefore, while use of these datasets supports the claim of being able to generate human-like questions, it does not indicate that the generated questions are good or educationally useful. Additionally, restricting the evaluation of generation approaches to the criterion of being able to generate questions that are similar to those in the datasets does not capture their ability to generate other good quality questions that differ in surface structure and semantics.

Some of these datasets were used to develop and evaluate ML-models for ranking distractors. However, being written by humans does not necessarily mean that these distractors are good. This is, in fact, supported by many studies on the quality of distractors in real exam questions (Sarin et al. 1998; Tarrant et al. 2009; Ware and Vik 2009). If these datasets were to be used for similar purposes, distractors would need to be filtered based on their functionality (i.e. being picked by test takers as answers to questions).
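The kind of functionality-based filtering suggested above can be sketched as follows, assuming that test-taker responses are available for each question. The function name functional_distractors, the response data, and the 5% selection threshold are assumptions made for illustration; the appropriate cut-off depends on the assessment context.

```python
from collections import Counter


def functional_distractors(responses, key, options, min_share=0.05):
    """Return the distractors picked by at least `min_share` of test takers.

    `responses` is a list of the options chosen by test takers, `key` is the
    correct option, and `options` lists all options of the question.
    The 5% default is an illustrative assumption, not a fixed standard.
    """
    total = len(responses)
    if total == 0:
        return []
    counts = Counter(responses)
    return [
        opt for opt in options
        if opt != key and counts[opt] / total >= min_share
    ]


# Invented response data: 100 test takers, key is "A".
responses = ["A"] * 60 + ["B"] * 30 + ["C"] * 9 + ["D"] * 1
print(functional_distractors(responses, key="A", options=["A", "B", "C", "D"]))
# ['B', 'C']
```

In this invented example, option D is selected by only 1% of test takers and would be excluded before the question is used to train or evaluate a distractor-ranking model.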

We also observe that these datasets have been used in a small number of studies (1-2). This is partially due to the fact that many of them are relatively new. In addition, the design space for question generation is large (i.e. different inputs, question types, and domains). Therefore, each of these datasets is only relevant for a small set of question generators.

Types of Evaluation

The most common evaluation approach is expert-based evaluation (n = 21), in which experts are presented with a sample of generated questions to review. Given that expert review is also a standard procedure for selecting questions for real exams, expert rating is believed to be a good proxy for quality. However, it is important to note that expert review only provides initial evidence for the quality of questions. The questions also need to be administered to a sample of students to obtain further evidence of their quality (empirical difficulty, discrimination, and reliability), as we will see later. Before this, invalid questions must be filtered out, and expert review is also utilised for this purpose: questions indicated by experts to be invalid (e.g. ambiguous, guessable, or not requiring domain knowledge) are removed. Having an appropriate question set is important for keeping the participants involved in question evaluation motivated and interested in solving the questions.

One of our observations on expert-based evaluation is that only in a few studies were experts required to answer the questions as part of the review. We believe this is an important step to incorporate since answering a question encourages engagement and triggers deeper thinking about what is required to answer. In addition, expert performance on questions is another indicator of question quality and difficulty. Questions answered incorrectly by experts can be ambiguous or very difficult.

Another observation on expert-based evaluation is the ambiguity of instructions provided to experts. For example, in an evaluation of reading comprehension questions (Mostow et al. 2017), the authors reported different interpretations of the instructions for rating the overall question quality, whereby one expert pointed out that it is not clear whether reading the preceding text is required in order to rate the question as being of good quality. Researchers have also measured question acceptability, as well as other aspects of questions, using scales with a large number of categories (up to a 9-point scale) without a clear description of each category. Zhang (2015) found that reviewers perceive scales differently and that not all categories of a scale are used by all reviewers. We believe that these two issues are reasons for low inter-rater agreement between experts. To improve the accuracy of the data obtained through expert review, researchers must precisely specify the criteria by which to evaluate questions. In addition, a pilot test needs to be conducted with experts to provide an opportunity for validating the instructions and ensuring that instructions and questions are easily understood and interpreted as intended by different respondents.
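Inter-rater agreement of the kind referred to above is often quantified with a chance-corrected statistic. The sketch below computes Cohen's kappa for two raters; the choice of this statistic and the example ratings are assumptions made for illustration, since the reviewed studies differ in the agreement measures they report.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters who rated the same items on a nominal scale."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("Both raters must rate the same non-empty set of items.")
    n = len(ratings_a)
    # Observed agreement: share of items given the same category by both raters.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Expected agreement by chance, from each rater's category frequencies.
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one and the same category throughout
    return (observed - expected) / (1 - expected)


# Invented ratings of four generated questions by two experts.
expert_1 = ["good", "good", "bad", "bad"]
expert_2 = ["good", "bad", "bad", "bad"]
print(cohens_kappa(expert_1, expert_2))  # 0.5
```

In this invented example, the experts agree on three of four questions (observed agreement 0.75), but half of that agreement is expected by chance, so kappa is 0.5. With many-category scales and unclear category descriptions, observed agreement tends to drop further, which is consistent with the concern raised above.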