Abstract

Fuzzy methods are widely used in the study of trustworthiness. Motivated by this, the paper studies the fuzzy trustworthiness system and its probability presentation theory based on the bounded product implication and the Larsen square implication. First, we convert a group of single-input single-output data into fuzzy inference rules, generate a fuzzy relation by selecting an appropriate fuzzy implication operator, and then compute the joint probability density function of a two-dimensional random variable from this fuzzy relation. Two specific probability density functions are obtained by taking the fuzzy implication to be the bounded product implication or the Larsen square implication. Second, we study the marginal distributions and numerical characteristics of these two probability distributions and show that they have the same mathematical expectation and nearly the same variance and covariance. Finally, we study the center-of-gravity fuzzy trustworthiness systems based on these two probability distributions and give sufficient conditions for these systems to be universal approximators.


1 Introduction

Fuzziness, which exists widely in real life and in various systems, is a kind of uncertainty that is entirely different from randomness [1]. Fuzzy methods have a wide range of applications in the study of trustworthiness, for example: (1) in fuzzy systems, the input, the output and their cross-linking state can be fuzzy; (2) human behavior also exhibits various kinds of fuzziness; (3) each piece of software is unique, and the trustworthiness behavior of software is fuzzy in nature; (4) in control systems, we may have fuzzy control, design trustworthiness, intelligent control, etc.; (5) in expert system research, it is necessary to consider the consistency, completeness, independence and redundancy of knowledge bases, but it is also important to consider fuzzy expert systems and the spread of non-trustworthiness factors. Therefore, it is significant to study the fuzzy trustworthiness system and its probability presentation theory, which will benefit production and everyday practice.

The construction of fuzzy trustworthiness systems and their universal approximation are active topics in fuzzy system and fuzzy control theory. The most familiar construction of a fuzzy trustworthiness system is divided into four steps [2]: (1) fuzzification of the input variables; (2) construction of the inference relation; (3) fuzzy inference; (4) defuzzification of the output set.

In the construction of fuzzy trustworthiness systems, most of the literature so far uses the singleton fuzzifier for the input variable, and the fuzzy inference is carried out by the CRI method [3, 4] or the triple I method [5]. The fuzzy implication operator must be determined before the fuzzy inference relation is constructed and fuzzy reasoning is performed, and its choice has a large impact on the fuzzy trustworthiness system. References [6–9] point out that the CRI method and the triple I method have an effect on the fuzzy trustworthiness system only when conjunction-type fuzzy implication operators, such as the Mamdani implication and the Larsen implication, are used. The common defuzzification methods are: (a) the center-average defuzzification method, (b) the center-of-gravity defuzzification method, and (c) the maximum defuzzification method. So far, researchers have constructed fuzzy systems by using the center-average defuzzification method [10–14] and the maximum defuzzification method [15–17]. Because some complex integrals are involved, the specific expression of the fuzzy trustworthiness system constructed by the center-of-gravity method has not yet been given. Obtaining this specific expression is one motivation of our paper.

As is known, the fuzzy trustworthiness system constructed by the center-of-gravity method can be approximately reduced to some form of interpolation [18], and it has a probabilistic meaning: it is the best approximation of the system in the least-squares sense [19]. However, how to determine the probability density function corresponding to a given fuzzy implication operator has not been resolved, and this is the other motivation of our paper.

To obtain the probability density function corresponding to a fuzzy implication operator, we start from the input-output data and, by restricting the fuzzy inference relation, obtain the probability density functions corresponding to the bounded product implication and the Larsen square implication. We find that different fuzzy implication operators yield different probability density functions, but the corresponding random variables have the same mathematical expectation and almost the same variance and covariance. Further, we derive the corresponding center-of-gravity fuzzy trustworthiness systems from these two probability distributions and give sufficient conditions for their universal approximation.

This article is organized as follows: Sect. 2 gives preliminaries; Sect. 3 derives the probability distributions and marginal distributions of the bounded product implication and the Larsen square implication; Sect. 4 discusses the numerical characteristics of these two distributions; Sect. 5 gives the center-of-gravity fuzzy trustworthiness systems of these two probability distributions and the sufficient conditions for their universal approximation; Sect. 6 is an overview of the application analysis of fuzzy trustworthiness systems; and Sect. 7 concludes.

2 Preliminary

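Suppose \(\{(x_{i},y_{i})\}_{1\le i \le n}\) is a group of input-output data with

$$\begin{aligned} a=x_{1}<x_{2}<\cdots <x_{n}=b,\quad c=y_{1}<y_{2}<\cdots <y_{n}=d \end{aligned}$$

or

$$\begin{aligned} a=x_{1}<x_{2}<\cdots <x_{n}=b,\quad c=y_{1}>y_{2}>\cdots >y_{n}=d. \end{aligned}$$

We construct two-phase triangular waves from these data, namely

$$\begin{aligned} A_{1}(x)={\left\{ \begin{array}{ll} \displaystyle \frac{x_{2}-x}{x_{2}-x_{1}},\quad x\in [x_{1},x_{2}]\\ 0, \quad other \end{array}\right. },\qquad A_{n}(x)={\left\{ \begin{array}{ll} \displaystyle \frac{x-x_{n-1}}{x_{n}-x_{n-1}},\quad x\in [x_{n-1},x_{n}]\\ 0, \quad other \end{array}\right. } \end{aligned}$$

and, for \(i=2,3,\ldots ,n-1\),

$$\begin{aligned} A_{i}(x)={\left\{ \begin{array}{ll} \displaystyle \frac{x-x_{i-1}}{x_{i}-x_{i-1}},\quad x\in [x_{i-1},x_{i}]\\ \displaystyle \frac{x_{i+1}-x}{x_{i+1}-x_{i}},\quad x\in [x_{i},x_{i+1}]\\ 0, \quad other \end{array}\right. } \end{aligned}$$

with \(B_{i}(y)\) defined in the same way from the nodes \(y_{i}\).

Then \(x_{i}\) and \(y_{i}\) are the peak points of \(A_{i}\) and \(B_{i}\), namely \(A_{i}(x_{i})=1,B_{i}(y_{i})=1\ (i=1,2,\ldots ,n)\), and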

- when \(x\in [x_{i},x_{i+1}]\), \(A_{i}(x)+A_{i+1}(x)=1,\quad A_{j}(x)=0\ (j \ne i,i+1),\)

- when \(y\in [y_{i},y_{i+1}]\), \(B_{i}(y)+B_{i+1}(y)=1,\quad B_{j}(y)=0\ (j \ne i,i+1).\)
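The partition-of-unity property of the triangular waves can be checked with a minimal sketch. The node values and the helper name `triangular_memberships` are hypothetical and serve only as an illustration; the sketch assumes strictly increasing nodes.

```python
def triangular_memberships(nodes):
    """Return mu(i, x): the i-th (0-based) triangular membership built on `nodes`.

    Assumption: `nodes` is strictly increasing.
    """
    n = len(nodes)

    def mu(i, x):
        peak = nodes[i]
        if x == peak:
            return 1.0
        if i > 0 and nodes[i - 1] <= x < peak:       # rising edge
            return (x - nodes[i - 1]) / (peak - nodes[i - 1])
        if i < n - 1 and peak < x <= nodes[i + 1]:   # falling edge
            return (nodes[i + 1] - x) / (nodes[i + 1] - peak)
        return 0.0

    return mu


xs = [0.0, 1.0, 2.5, 4.0]      # hypothetical nodes x_1 < ... < x_n
A = triangular_memberships(xs)

x = 1.6                         # a point inside [x_2, x_3]
values = [A(i, x) for i in range(len(xs))]
total = sum(values)             # only the two neighbouring waves contribute
print(values, total)
```

Only the two waves whose peaks bracket `x` are nonzero, and they sum to one, which is what makes the cell-by-cell computations in the later sections possible.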

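Then we have the fuzzy inference rules

$$\begin{aligned} \text {If } x \text { is } A_{i}, \text { then } y \text { is } B_{i},\quad i=1,2,\ldots ,n. \end{aligned}$$(1)

Let \(\theta \) be the fuzzy implication operator; then from (1) we get the fuzzy relation \(R(x,y)=\displaystyle \mathop {\vee }\nolimits _{i=1}^{n}\theta (A_{i}(x),B_{i}(y)).\)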

Let \(q(x,y)={\left\{ \begin{array}{ll} R(x,y),\quad (x,y)\in X\times Y\\ 0, \quad other \end{array}\right. }\) and \(H(2,n,\theta ,\vee ) \triangleq \int ^{+\infty }_{-\infty }\int ^{+\infty }_{-\infty }q(x,y)dxdy.\) When \(X=[a,b],Y=[c,d]\), we have

$$\begin{aligned} H(2,n,\theta ,\vee )=\int ^{b}_{a}\int ^{d}_{c}R(x,y)dydx. \end{aligned}$$(2)

We call \(H(2,n,\theta ,\vee )\) the H function with parameters \(2,n,\theta ,\vee \): “2” indicates that q(x, y) is a binary function; n is the number of inference rules; \(\theta \) is the fuzzy implication operator; and \(\vee = \)“max”.

If \(H(2,n,\theta ,\vee )>0\), let

$$\begin{aligned} f(x,y)=\displaystyle \frac{q(x,y)}{H(2,n,\theta ,\vee )}. \end{aligned}$$(3)

Clearly, (a) \(f(x,y)\ge 0\); (b) \(\int ^{+\infty }_{-\infty }\int ^{+\infty }_{-\infty }f(x,y)dxdy=1\). So f(x, y) can be regarded as the joint probability density function of a random vector \((\xi ,\eta )\) [19].

Suppose R(x, y) is the fuzzy relation determined by the inference rules (1), and \(A^{*}\) is a fuzzy singleton of the input variable, namely \(A^{*}(x^{\prime })={\left\{ \begin{array}{ll} 1,\quad x^{\prime }=x\\ 0,\quad x^{\prime }\ne x \end{array}\right. }\). Let \(B^{*}=A^{*}\circ R\), namely \(B^{*}(y)=\displaystyle \mathop {\vee }_{x^{\prime }\in X}(A^{*}(x^{\prime })\wedge R(x^{\prime },y))=R(x,y)\). Then

$$\begin{aligned} \overline{S}(x)=\displaystyle \frac{\int ^{d}_{c}yB^{*}(y)dy}{\int ^{d}_{c}B^{*}(y)dy}=\frac{\int ^{d}_{c}yR(x,y)dy}{\int ^{d}_{c}R(x,y)dy} \end{aligned}$$

is a center-of-gravity method fuzzy trustworthiness system [18].

3 The Probability Distribution of Several Single-Input and Single-Output Fuzzy Trustworthiness System

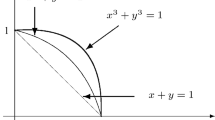

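Suppose \(\{(x_{i},y_{i})\}_{1\le i\le n}\) satisfies:

$$\begin{aligned} a=x_{1}<x_{2}<\cdots <x_{n}=b,\quad c=y_{1}<y_{2}<\cdots <y_{n}=d. \end{aligned}$$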

Establishing the fuzzy relation according to the fuzzy inference rules (1) is one of the four steps of constructing a fuzzy trustworthiness system. With the traditional method, the fuzzy relation is \(R(x,y)=\displaystyle \mathop {\vee }\nolimits _{i=1}^{n}\theta (A_{i}(x),B_{i}(y))\). Since the fuzzy implication is a conjunction-type implication, we have the following result:

if \(x\in [x_{i},x_{i+1}]\), then \(R(x,y)=\theta (A_{i}(x),B_{i}(y))\vee \theta (A_{i+1}(x),B_{i+1}(y))\).

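Because the data are monotonic, we adjust the fuzzy inference relation: when \(x\in [x_{i},x_{i+1}]\), let

$$\begin{aligned} R(x,y)={\left\{ \begin{array}{ll} \theta (A_{i}(x),B_{i}(y))\vee \theta (A_{i+1}(x),B_{i+1}(y)),\quad y\in [y_{i},y_{i+1}]\\ 0, \quad other \end{array}\right. } \end{aligned}$$(5)

We consider the inference relation determined by (5) and obtain the following probability results: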

Theorem 1

If \(\theta (a,b)=T_{m}(a,b)=(a+b-1)\vee 0\), let \(I_{i}=[x_{i},x_{i+1}]\), \(J_{i}=[y_{i},y_{i+1}]\), \(H=\displaystyle \frac{1}{3}\displaystyle \mathop {\sum }\nolimits _{i=1}^{n-1}(x_{i+1}-x_{i})(y_{i+1}-y_{i})\), \(D_{i}=\{(x,y)\in I_{i}\times J_{i} | y\le A_{i+1}(x)y_{i}+A_{i}(x)y_{i+1}\}\) and \(E_{i}=I_{i}\times J_{i}-D_{i}\). Then

(1) The probability density function f(x, y) determined by formula (3) (called the bounded product distribution) is

$$\begin{aligned} f(x,y)={\left\{ \begin{array}{ll} \displaystyle \frac{1}{H}(A_{i}(x)+B_{i}(y)-1),\quad (x,y)\in D_{i}\\ \displaystyle \frac{1}{H}(A_{i+1}(x)+B_{i+1}(y)-1),\quad (x,y)\in E_{i}\\ 0, \quad other \end{array}\right. } \end{aligned}$$(6)

(2) The marginal density functions of f(x, y) are

$$\begin{aligned} f_{\xi }(x)=\displaystyle \frac{1}{2H}(y_{i+1}-y_{i})(1-2A_{i}(x)A_{i+1}(x)),\quad x\in [x_{i},x_{i+1}]\ (i=1,2,\ldots ,n-1), \end{aligned}$$(7)

$$\begin{aligned} f_{\eta }(y)=\displaystyle \frac{1}{2H}(x_{i+1}-x_{i})(1-2B_{i}(y)B_{i+1}(y)),\quad y\in [y_{i},y_{i+1}]\ (i=1,2,\ldots ,n-1). \end{aligned}$$(8)

Proof

(1) Because

then

If \(A_{i}(x)+B_{i}(y)\ge 1\), then \(A_{i+1}(x)+B_{i+1}(y)\le 1\) and \(B_{i}(y)\ge 1-A_{i}(x)=A_{i+1}(x)\), namely \(\displaystyle \frac{y_{i+1}-y}{y_{i+1}-y_{i}}\ge A_{i+1}(x)\). Then \(y\le A_{i+1}(x)y_{i}+A_{i}(x)y_{i+1}\triangleq y^{*}_{i}\). So

Let \(A_{i+1}(x)=\displaystyle \frac{x-x_{i}}{x_{i+1}-x_{i}}=t\), then \(A_{i}(x)=1-t, dx=(x_{i+1}-x_{i})dt\). So

Then \(H(2,n,\theta ,\vee )=\displaystyle \frac{1}{3}\sum \nolimits _{i=1}^{n-1}(x_{i+1}-x_{i})(y_{i+1}-y_{i})\triangleq H.\) And we have,

(2)

Because \(y^{*}_{i}-y_{i}=(y_{i+1}-y_{i})A_{i}(x),\ y_{i+1}-y_{i}^{*}=(y_{i+1}-y_{i})A_{i+1}(x)\), we substitute \(B_{i+1}(y)=\displaystyle \frac{y-y_{i}}{y_{i+1}-y_{i}}=t\) and get

The others can be proved similarly.\(\square \)
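The constant \(\frac{1}{3}\) in H and the factor \(\frac{1}{2}(1-2A_{i}(x)A_{i+1}(x))\) in the marginal density can be checked numerically. The following is a minimal sketch, not part of the original proof: it works on the unit cell with \(t=A_{i+1}(x)\) and \(s=B_{i+1}(y)\), uses a plain midpoint rule, and all function names are hypothetical.

```python
def bounded_product(a, b):
    """Bounded product implication T_m(a, b) = (a + b - 1) v 0."""
    return max(a + b - 1.0, 0.0)


def cell_relation(t, s):
    """Relation on one cell, with t = A_{i+1}(x) and s = B_{i+1}(y)."""
    return max(bounded_product(1.0 - t, 1.0 - s), bounded_product(t, s))


def unit_cell_integral(m=200):
    """Midpoint-rule estimate of the integral of cell_relation over [0,1]^2."""
    h = 1.0 / m
    total = 0.0
    for j in range(m):
        t = (j + 0.5) * h
        for k in range(m):
            total += cell_relation(t, (k + 0.5) * h)
    return total * h * h


def x_marginal_factor(t, m=2000):
    """Midpoint-rule estimate of the integral over s of cell_relation(t, s)."""
    h = 1.0 / m
    return sum(cell_relation(t, (k + 0.5) * h) for k in range(m)) * h


constant = unit_cell_integral()                   # should be close to 1/3
t = 0.3                                           # hypothetical value of A_{i+1}(x)
numeric = x_marginal_factor(t)
closed_form = 0.5 * (1.0 - 2.0 * t * (1.0 - t))   # factor appearing in (7)
print(constant, numeric, closed_form)
```

Multiplying the unit-cell integral by \((x_{i+1}-x_{i})(y_{i+1}-y_{i})\) and summing over the cells gives the normalizing constant of the bounded product distribution.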

Theorem 2

If \(\theta (a,b)=a^{2}b\), then

(1) The probability density function f(x, y) determined by formula (3) (called the Larsen square distribution) is

$$\begin{aligned} f(x,y)={\left\{ \begin{array}{ll} \displaystyle \frac{1}{C}A^{2}_{i}(x)B_{i}(y),\quad \exists i,(x,y)\in F_{i}\\ \displaystyle \frac{1}{C}A^{2}_{i+1}(x)B_{i+1}(y),\quad \exists i,(x,y)\in G_{i}\\ 0, \quad other \end{array}\right. } \end{aligned}$$(9)

(2) The marginal density functions of f(x, y) are: for \(x\in [x_{i},x_{i+1})\ (i=1,2,\ldots ,n-1)\),

$$\begin{aligned} f_{\xi }(x)= & {} \displaystyle \frac{1}{2C}(y_{i+1}-y_{i})\displaystyle \frac{(1-A_{i}(x)A_{i+1}(x))(1-3A_{i}(x)A_{i+1}(x))}{1-2A_{i}(x)A_{i+1}(x)}, \end{aligned}$$(10)

and for \(y\in [y_{i},y_{i+1}]\),

$$\begin{aligned} f_{\eta }(y)= & {} \displaystyle \frac{1}{3C}(x_{i+1}-x_{i})\left( 1-\displaystyle \frac{B_{i}(y)B_{i+1}(y)}{1+2\sqrt{B_{i}(y)B_{i+1}(y)}}\right) , \end{aligned}$$(11)

where \(F_{i}=\left\{ (x,y)\in I_{i}\times J_{i}|y\le \frac{A^{2}_{i+1}(x)}{A^{2}_{i}(x)+A^{2}_{i+1}(x)} y_{i}+ \frac{A^{2}_{i}(x)}{A^{2}_{i}(x)+A^{2}_{i+1}(x)}y_{i+1}\right\} \), \(G_{i}=(I_{i}\times J_{i})-F_{i}\), \(K=\frac{1}{2}-\frac{1}{16}\pi \), and \(C=K\displaystyle \mathop {\sum }\nolimits _{i=1}^{n-1}(x_{i+1}-x_{i})(y_{i+1}-y_{i}).\)

Proof

(1) \(H(2,n,\theta ,\vee )=\int ^{b}_{a}\int ^{d}_{c}p(x,y)dydx\), here when \(x\in [x_{i},x_{i+1}]\), we have \(p(x,y)=A^{2}_{i}(x)B_{i}(y)\vee A^{2}_{i+1}(x)B_{i+1}(y).\)

Then \(H(2,n,\theta ,\vee )=\displaystyle \mathop {\sum }_{i=1}^{n-1}\int ^{x_{i+1}}_{x_{i}}\int ^{y_{i+1}}_{y_{i}}(A^{2}_{i}(x)B_{i}(y)\vee A^{2}_{i+1}(x)B_{i+1}(y))dydx\).

For \(x\in [x_{i},x_{i+1}]\), when \(A^{2}_{i}(x)B_{i}(y)\ge A^{2}_{i+1}(x)B_{i+1}(y)=A^{2}_{i+1}(x)(1-B_{i}(y))\), then

so

Then \( {\varDelta }^{*}=\int ^{y_{i+1}}_{y_{i}}(A^{2}_{i}(x)B_{i}(y)\vee A^{2}_{i+1}(x)B_{i+1}(y))dy=\int _{y_{i}}^{\widetilde{y}_{i}}A^{2}_{i}(x)B_{i}(y)dy+\int ^{y_{i+1}}_{\widetilde{y}_{i}}A^{2}_{i+1}(x)B_{i+1}(y)dy=A^{2}_{i}(x)\int _{y_{i}}^{\widetilde{y}_{i}}B_{i}(y)dy+A^{2}_{i+1}(x)\int ^{y_{i+1}}_{\widetilde{y}_{i}}B_{i+1}(y)dy.\)

And

So

And

So \(\int ^{y_{i+1}}_{\widetilde{y}_{i}}B_{i+1}(y)dy=\displaystyle \frac{A^{2}_{i+1}(x)(2A^{2}_{i}(x)+A^{2}_{i+1}(x))}{2(A^{2}_{i}(x)+A^{2}_{i+1}(x))^{2}}(y_{i+1}-y_{i})\).

Then

so

Let \(A_{i+1}(x)=\displaystyle \frac{x-x_{i}}{x_{i+1}-x_{i}}=t\), then \(dx=(x_{i+1}-x_{i})dt, A_{i}(x)=1-t.\) Then

Easy to calculate

Then \(H(2,n,\theta ,\vee )=K\displaystyle \mathop {\sum }_{i=1}^{n-1}(x_{i+1}-x_{i})(y_{i+1}-y_{i})\triangleq C.\) So,

here \(F_{i}=\left\{ (x,y)\in I_{i}\times J_{i}|y\le \displaystyle \frac{A_{i+1}^{2}(x)}{A_{i}^{2}(x)+A_{i+1}^{2}(x)}y_{i}+\displaystyle \frac{A_{i}^{2}(x)}{A_{i}^{2}(x)+A_{i+1}^{2}(x)}y_{i+1}\right\} ,\) \(G_{i}=(I_{i}\times J_{i})-F_{i}.\)

(2)

where \(\widetilde{y}_{i}=\displaystyle \frac{A^{2}_{i+1}(x)y_{i}+A^{2}_{i}(x)y_{i+1}}{A^{2}_{i}(x)+A^{2}_{i+1}(x)}.\) Let \(B_{i+1}(y)=\displaystyle \frac{y-y_{i}}{y_{i+1}-y_{i}}=t,\) then \(dy=(y_{i+1}-y_{i})dt, B_{i}(y)=1-t; \) when \( y=y_{i}, t=0;\) when \( y=y_{i+1}, t=1;\) when \( y=\widetilde{y}_{i}, t=\displaystyle \frac{A^{2}_{i}(x)}{A^{2}_{i}(x)+A^{2}_{i+1}(x)}\triangleq A^{*}_{i}(x).\)

Let \(A^{*}_{i+1}(x)=\displaystyle \frac{A^{2}_{i+1}(x)}{A^{2}_{i}(x)+A^{2}_{i+1}(x)}\), then \(A^{*}_{i}(x)+A^{*}_{i+1}(x)=1\).

Because \((x,y){\in } F_{i}\Leftrightarrow y \le \displaystyle \frac{A^{2}_{i+1}(x)y_{i}+A^{2}_{i}(x)y_{i+1}}{A^{2}_{i}(x)+A^{2}_{i+1}(x)}\Leftrightarrow B_{i+1}(y)\le \displaystyle \frac{A^{2}_{i}(x)}{A^{2}_{i}(x)+A^{2}_{i+1}(x)}\Leftrightarrow \displaystyle \frac{A^{2}_{i+1}(x)}{A^{2}_{i}(x)}\le \displaystyle \frac{B_{i}(y)}{B_{i+1}(y)}\Leftrightarrow \displaystyle \frac{A_{i+1}(x)}{1-A_{i+1}(x)} \le \displaystyle \frac{\sqrt{B_{i}(y)}}{\sqrt{B_{i+1}(y)}}\Leftrightarrow A_{i+1}(x)\le \displaystyle \frac{\sqrt{B_{i}(y)}}{\sqrt{B_{i}(y)}+\sqrt{B_{i+1}(y)}}\Leftrightarrow x\le \displaystyle \frac{\sqrt{B_{i}(y)}}{\sqrt{B_{i}(y)}+\sqrt{B_{i+1}(y)}}x_{i+1}+\displaystyle \frac{\sqrt{B_{i+1}(y)}}{\sqrt{B_{i}(y)}+\sqrt{B_{i+1}(y)}}x_{i}\triangleq \widetilde{x}_{i}.\)

Let \(\widetilde{B}_{i}(y)=\displaystyle \frac{\sqrt{B_{i}(y)}}{\sqrt{B_{i}(y)}+\sqrt{B_{i+1}(y)}}\), then

Let \(A_{i+1}(x)=t\), then \(\widetilde{x}_{i}-x_{i}=\widetilde{B}_{i}(y)(x_{i+1}-x_{i}).\) So

\(\square \)
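The constant \(K=\frac{1}{2}-\frac{\pi }{16}\) and the per-cell factors of the two marginal densities of the Larsen square distribution can be checked in the same way. This is a minimal numerical sketch on the unit cell, with \(t=A_{i+1}(x)\), \(s=B_{i+1}(y)\), a plain midpoint rule, and hypothetical function names; the closed forms used are \(\frac{(1-p)(1-3p)}{2(1-2p)}\) with \(p=A_{i}A_{i+1}\) and \(\frac{1}{3}\left( 1-\frac{q}{1+2\sqrt{q}}\right) \) with \(q=B_{i}B_{i+1}\).

```python
import math


def larsen_square(a, b):
    """Larsen square implication theta(a, b) = a^2 * b."""
    return a * a * b


def cell_relation(t, s):
    """Relation on one cell, with t = A_{i+1}(x) and s = B_{i+1}(y)."""
    return max(larsen_square(1.0 - t, 1.0 - s), larsen_square(t, s))


def integrate(f, m):
    """Midpoint rule on [0, 1] with m sub-intervals."""
    h = 1.0 / m
    return sum(f((k + 0.5) * h) for k in range(m)) * h


def x_factor(t):
    """Closed-form integral over s, written with p = A_i * A_{i+1}."""
    p = t * (1.0 - t)
    return (1.0 - p) * (1.0 - 3.0 * p) / (2.0 * (1.0 - 2.0 * p))


def y_factor(s):
    """Closed-form integral over t, written with q = B_i * B_{i+1}."""
    q = s * (1.0 - s)
    return (1.0 - q / (1.0 + 2.0 * math.sqrt(q))) / 3.0


K_closed = 0.5 - math.pi / 16.0
K_numeric = integrate(lambda t: integrate(lambda s: cell_relation(t, s), 200), 200)

point = 0.3   # hypothetical membership value used for the spot check
x_numeric = integrate(lambda s: cell_relation(point, s), 4000)
y_numeric = integrate(lambda t: cell_relation(t, point), 4000)
print(K_closed, K_numeric, x_numeric, x_factor(point), y_numeric, y_factor(point))
```

Note that the denominator in the x-direction factor is \(1-2p\); integrating that factor over \(t\in [0,1]\) is what produces K.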

Notes 1: In fact, Theorem 1 and Theorem 2 establish a method for constructing a probability distribution from a known data structure.

4 The Numerical Characteristic of Two Distributions

This section studies the numerical characteristics of the two distributions described in Sect. 3, including the mathematical expectation, variance and covariance. We have the following results.

Theorem 3

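Suppose \((\xi ,\eta )\) obeys the bounded product distribution. Then, when n is sufficiently large and \({\varDelta }_{n}\) is sufficiently small,

$$\begin{aligned}&(1)\quad E(\xi )=\displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{x}_{i},\quad E(\eta )=\displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{y}_{i};\\&(2)\quad D(\xi )\approx \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}x_{i}x_{i+1}-\left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{x}_{i}\right) ^{2},\quad D(\eta )\approx \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}y_{i}y_{i+1}-\left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{y}_{i}\right) ^{2};\\&(3)\quad \mathrm {Cov}(\xi ,\eta )\approx \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{z}_{i}-\left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{x}_{i}\right) \left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{y}_{i}\right) , \end{aligned}$$

where

$$\begin{aligned} \omega _{i}=\displaystyle \frac{(x_{i+1}-x_{i})(y_{i+1}-y_{i})}{\sum \nolimits _{j=1}^{n-1}(x_{j+1}-x_{j})(y_{j+1}-y_{j})},\quad \overline{x}_{i}=\frac{1}{2}(x_{i}+x_{i+1}),\quad \overline{y}_{i}=\frac{1}{2}(y_{i}+y_{i+1}),\quad \overline{z}_{i}=\frac{1}{2}(x_{i}y_{i}+x_{i+1}y_{i+1}). \end{aligned}$$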

Proof

(1) \(E(\xi )=\displaystyle \frac{1}{2H} \mathop {\sum }\nolimits _{i=1}^{n-1}(y_{i+1}-y_{i})\int ^{x_{i+1}}_{x_{i}}x(1-2A_{i}(x)A_{i+1}(x))dx\)

Then

Similarly,

(2) \(E(\xi ^{2})=\displaystyle \frac{1}{2H}\displaystyle \mathop {\sum }\nolimits _{i=1}^{n-1}(y_{i+1}-y_{i})\int ^{x_{i+1}}_{x_{i}}x^{2}(1-2A_{i}(x)A_{i+1}(x))dx\)

Then

Let \(\displaystyle {\varDelta }_{n}=\displaystyle \mathop {\max }_{1\le i\le n-1}(x_{i+1}-x_{i})^{2}.\) Then

When n is sufficiently large, and \({\varDelta }_{n}\) is sufficiently small,

Then

In a similar way, \(E(\eta ^{2})=\displaystyle \mathop {\sum }\nolimits _{i=1}^{n-1}\omega _{i}y_{i}y_{i+1}+\frac{7}{20}\displaystyle \mathop {\sum }\nolimits _{i=1}^{n-1}\omega _{i}(y_{i+1}-y_{i})^{2}\approx \displaystyle \mathop {\sum }\nolimits _{i=1}^{n-1}\omega _{i}y_{i}y_{i+1}.\)

Then \(D(\eta )\approx \displaystyle \mathop {\sum }\nolimits _{i=1}^{n-1}\omega _{i}y_{i}y_{i+1}-\left( \mathop {\sum }\nolimits _{i=1}^{n-1}\omega _{i}\overline{y}_{i}\right) ^{2}.\)

Then

Let \(A_{i}(x)=\frac{x_{i+1}-x}{x_{i+1}-x_{i}}=t.\) Then \(dx=-(x_{i+1}-x_{i})dt.\) Then

Then \(\mathrm {Cov}(\xi ,\eta )=E(\xi \eta )-E(\xi )E(\eta )\thickapprox \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{z}_{i}-\left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{x}_{i}\right) \left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{y}_{i}\right) .\) \(\square \)
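The first two moments of \(\xi \) under the bounded product distribution can be checked numerically against the weighted sums used in the proof. The sketch below is an illustration under stated assumptions: the data `xs`, `ys` are hypothetical, the weights are taken as \(\omega _{i}=(x_{i+1}-x_{i})(y_{i+1}-y_{i})/\sum _{j}(x_{j+1}-x_{j})(y_{j+1}-y_{j})\), the marginal density is \(\frac{1}{2H}(y_{i+1}-y_{i})(1-2A_{i}(x)A_{i+1}(x))\) with \(H=\frac{1}{3}\sum _{j}(x_{j+1}-x_{j})(y_{j+1}-y_{j})\), and integration uses a midpoint rule.

```python
def bounded_product_moments(xs, ys, m=2000):
    """Return (E[xi], E[xi^2], omega) for the x-marginal of the bounded product
    distribution; xs and ys are strictly increasing node lists of equal length."""
    cells = [(xs[i + 1] - xs[i]) * (ys[i + 1] - ys[i]) for i in range(len(xs) - 1)]
    H = sum(cells) / 3.0                       # normalising constant
    omega = [c / sum(cells) for c in cells]    # cell weights
    e1 = e2 = 0.0
    for i in range(len(xs) - 1):
        dx, dy = xs[i + 1] - xs[i], ys[i + 1] - ys[i]
        for k in range(m):
            t = (k + 0.5) / m                  # t = A_{i+1}(x), A_i(x) = 1 - t
            x = xs[i] + t * dx
            density = dy * (1.0 - 2.0 * t * (1.0 - t)) / (2.0 * H)
            e1 += x * density * dx / m
            e2 += x * x * density * dx / m
    return e1, e2, omega


xs = [0.0, 1.0, 2.5, 4.0]   # hypothetical input nodes
ys = [1.0, 2.0, 2.5, 5.0]   # hypothetical output nodes
e1, e2, omega = bounded_product_moments(xs, ys)

cells = range(len(xs) - 1)
mean_formula = sum(omega[i] * 0.5 * (xs[i] + xs[i + 1]) for i in cells)
leading_term = sum(omega[i] * xs[i] * xs[i + 1] for i in cells)
spread = sum(omega[i] * (xs[i + 1] - xs[i]) ** 2 for i in cells)
remainder_coeff = (e2 - leading_term) / spread   # coefficient of the dropped term
print(e1, mean_formula, remainder_coeff)
```

The expectation matches the weighted midpoint sum exactly (up to quadrature error), while the second moment differs from \(\sum \omega _{i}x_{i}x_{i+1}\) by a multiple of \(\sum \omega _{i}(x_{i+1}-x_{i})^{2}\); the script reports that multiple, which is why the variance formula is only approximate and improves as the mesh is refined.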

Theorem 4

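Suppose \((\xi ,\eta )\) obeys the Larsen square distribution. Then, when n is sufficiently large and \({\varDelta }_{n}\) is sufficiently small,

$$\begin{aligned}&(1)\quad E(\xi )=\displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{x}_{i},\quad E(\eta )=\displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{y}_{i};\\&(2)\quad D(\xi )\approx \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}x_{i}x_{i+1}-\left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{x}_{i}\right) ^{2},\quad D(\eta )\approx \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}y_{i}y_{i+1}-\left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{y}_{i}\right) ^{2};\\&(3)\quad \mathrm {Cov}(\xi ,\eta )\approx \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{z}_{i}-\left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{x}_{i}\right) \left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{y}_{i}\right) , \end{aligned}$$

where \(\omega _{i}=\displaystyle \frac{(x_{i+1}-x_{i})(y_{i+1}-y_{i})}{\sum \nolimits _{j=1}^{n-1}(x_{j+1}-x_{j})(y_{j+1}-y_{j})}\), \(\displaystyle \overline{x}_{i}=\frac{1}{2}(x_{i}+x_{i+1}),\overline{y}_{i}=\frac{1}{2}(y_{i}+y_{i+1}),\overline{z}_{i}=\frac{1}{2}(x_{i}y_{i}+x_{i+1}y_{i+1}).\)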

Proof

(2) Let \(K=\displaystyle \frac{1}{2}-\frac{1}{16}\pi \), then

So, \(D(\xi )=E(\xi ^{2})-(E(\xi ))^{2}\approx \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}x_{i}x_{i+1}-\left( \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{x}_{i}\right) ^{2}\).

So \(D(\eta )=E(\eta ^{2})-(E(\eta ))^{2}\thickapprox \displaystyle \mathop {\sum }_{i=1}^{n-1}\omega _{i}y_{i}y_{i+1}-\left( \mathop {\sum }_{i=1}^{n-1}\omega _{i}\overline{y}_{i}\right) ^{2}\).

Then

Then

So \(\displaystyle \mathrm {Cov} (\xi ,\eta )\approx \mathop {\sum }\nolimits _{i=1}^{n-1}\omega _{i}\overline{z}_{i}-\left( \mathop {\sum }\nolimits _{i=1}^{n-1}\omega _{i}\overline{x}_{i}\right) \left( \mathop {\sum }\nolimits _{i=1}^{n-1}\omega _{i}\overline{y}_{i}\right) .\) \(\square \)

Notes 2: (1) From Theorem 3 and Theorem 4 we can see that the bounded product distribution and the Larsen square distribution have the same mathematical expectation and nearly the same variance and covariance.

(2) The above results are obtained under the assumption \(a=x_{1}<x_{2}<\ldots <x_{n}=b,c=y_{1}<y_{2}<\ldots <y_{n}=d\). A similar conclusion holds when \(a=x_{1}<x_{2}<\ldots <x_{n}=b,c=y_{1}>y_{2}>\ldots >y_{n}=d\).

5 Center-of-Gravity Fuzzy Trustworthiness System

Let f(x, y) be the probability density function obtained in our study, we can know that from the discussion above,

so we have the following theorem.

Theorem 5

If \(\theta \) is the bounded product implication, then \(\overline{S}_{T}(x)=A^{*}_{i}(x)y_{i}+A^{*}_{i+1}(x)y_{i+1}\), where

and \(\overline{S}_{T}(x_{i})=\displaystyle \frac{2}{3}y_{i}+\frac{1}{3}y_{i+1},\overline{S}_{T}(x_{i+1})=\displaystyle \frac{1}{3}y_{i}+\frac{2}{3}y_{i+1}\).

Proof

From the theorem above we can know that:

and

so

and

And because

we have

\(\square \)

Next, we study the fuzzy trustworthiness system induced by the Larsen square distribution.

Theorem 6

\(\displaystyle \overline{S}_{L^{2}}(x)=\frac{\int ^{+\infty }_{-\infty }yf(x,y)dy}{\int ^{+\infty }_{-\infty }f(x,y)dy}=C^{*}_{i}(x)y_{i}+C^{*}_{i+1}(x)y_{i+1}\), where

\(C^{*}_{i}(x)+C^{*}_{i+1}(x)\equiv 1\) and \(\displaystyle \overline{S}_{L^{2}}(x_{i})=\frac{2}{3}y_{i}+\frac{1}{3}y_{i+1},\overline{S}_{L^{2}}(x_{i+1})=\frac{1}{3}y_{i}+\frac{2}{3}y_{i+1}\).

Proof

Then

So, \(C^{*}_{i}(x)+C^{*}_{i+1}(x)=1\). And

thus

So \(\overline{S}(x)=C^{*}_{i}(x)y_{i}+C^{*}_{i+1}(x)y_{i+1}\), \(\displaystyle C^{*}_{i+1}(x_{i+1})=\frac{2}{3};C^{*}_{i}(x_{i+1})=1-\frac{2}{3}=\frac{1}{3};C^{*}_{i+1}(x_{i})=\frac{1}{3};C^{*}_{i}(x_{i})=1-\frac{1}{3}=\frac{2}{3}\), so \(\overline{S}(x_{i})=\frac{2}{3}y_{i}+\frac{1}{3}y_{i+1},\overline{S}(x_{i+1})=\frac{1}{3}y_{i}+\frac{2}{3}y_{i+1}\). \(\square \)
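The endpoint values stated for both center-of-gravity systems can be reproduced numerically. The following minimal sketch evaluates the ratio \(\int yf(x,y)dy/\int f(x,y)dy\) on a single cell by a midpoint rule; the function `cog_on_cell` and the cell coordinates are hypothetical, and the cell index is passed explicitly because a node \(x_{i}\) belongs to two neighbouring cells.

```python
def bounded_product(a, b):
    """Bounded product implication (a + b - 1) v 0."""
    return max(a + b - 1.0, 0.0)


def larsen_square(a, b):
    """Larsen square implication a^2 * b."""
    return a * a * b


def cog_on_cell(theta, x_lo, x_hi, y_lo, y_hi, x, m=4000):
    """Centre-of-gravity output for x in [x_lo, x_hi], integrating over [y_lo, y_hi]."""
    t = (x - x_lo) / (x_hi - x_lo)          # t = A_{i+1}(x), so A_i(x) = 1 - t
    num = den = 0.0
    for k in range(m):
        s = (k + 0.5) / m                    # s = B_{i+1}(y), so B_i(y) = 1 - s
        y = y_lo + s * (y_hi - y_lo)
        r = max(theta(1.0 - t, 1.0 - s), theta(t, s))
        num += y * r
        den += r
    return num / den


# Hypothetical cell: x_i = 0, x_{i+1} = 1, y_i = 1, y_{i+1} = 4.
results = {}
for theta in (bounded_product, larsen_square):
    left = cog_on_cell(theta, 0.0, 1.0, 1.0, 4.0, 0.0)    # expect (2/3)*1 + (1/3)*4
    middle = cog_on_cell(theta, 0.0, 1.0, 1.0, 4.0, 0.5)
    right = cog_on_cell(theta, 0.0, 1.0, 1.0, 4.0, 1.0)   # expect (1/3)*1 + (2/3)*4
    results[theta.__name__] = (left, middle, right)
    print(theta.__name__, left, middle, right)
```

At the cell endpoints both systems return the same convex combinations of \(y_{i}\) and \(y_{i+1}\), so neither system interpolates the data exactly at the nodes; they differ only in the interior of the cell.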

We now study the universal approximation property of the fuzzy trustworthiness systems \(\overline{S}_{T}(x)\) and \(\overline{S}_{L^{2}}(x)\). Suppose s(x) is a known system and \(s(x_{i})=y_{i}\). Let \(h=\displaystyle \mathop {\max }_{1\le i\le n-1}{\varDelta } x_{i}, \Vert s\Vert _{\infty }=\displaystyle \mathop {\max }_{x\in [a,b]}|s(x)|.\) Suppose \(F_{1}(x)=A_{i}(x)y_{i}+A_{i+1}(x)y_{i+1}\ (x\in [x_{i},x_{i+1}]).\) From reference [11], we know that \(\displaystyle \Vert s-F_{1}\Vert _{\infty }\le \frac{1}{8}\Vert s^{''}\Vert _{\infty }h^{2}\). So we have

Theorem 7

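When \(\overline{S}(x)\in \{\overline{S}_{T}(x),\overline{S}_{L^{2}}(x)\}\),

$$\begin{aligned} \Vert \overline{S}-F_{1}\Vert _{\infty }\le \frac{1}{3}\Vert s^{'}\Vert _{\infty }h. \end{aligned}$$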

Proof

(1) When \(\overline{S}(x)=\overline{S}_{T}(x)\),

Then

Then

Then

(2)

then

Then \(\overline{S}_{L^{2}}(x)-F_{1}(x)=(C^{*}_{i+1}(x)-A_{i+1}(x))(y_{i+1}-y_{i}).\) So

And

Because of \(\displaystyle \left| \frac{[A^{2}_{i}(x)-A^{2}_{i+1}(x)]}{3[A^{2}_{i}(x)+A^{2}_{i+1}(x)]}\right| \le 1, \,\displaystyle 1\ge 1-\frac{A^{2}_{i}(x)A^{2}_{i+1}(x)}{A^{4}_{i}(x)+A^{2}_{i}(x)A^{2}_{i+1}(x)+A^{4}_{i+1}(x)}-3A_{i}(x)A_{i+1}(x)\ge 1-\frac{1}{3}-\frac{3}{4}=\frac{5}{12}>0.\)

Thus \(\displaystyle |C^{*}_{i+1}(x)-A_{i+1}(x)|\le \frac{1}{3}.\) So

Then \(\displaystyle \Vert \overline{S}_{L^{2}}(x)-F_{1}(x)\Vert _{\infty }\le \frac{1}{3}\Vert s^{'}\Vert _{\infty }h.\) \(\square \)

Theorem 8

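When \(\overline{S}(x)\in \{\overline{S}_{T}(x),\overline{S}_{L^{2}}(x)\}\),

$$\begin{aligned} \Vert s-\overline{S}\Vert _{\infty }\le \frac{1}{8}\Vert s^{''}\Vert _{\infty }h^{2}+\frac{1}{3}\Vert s^{'}\Vert _{\infty }h. \end{aligned}$$

Proof

By the triangle inequality, \(\Vert s-\overline{S}\Vert _{\infty }\le \Vert s-F_{1}\Vert _{\infty }+\Vert F_{1}-\overline{S}\Vert _{\infty }\); the first term is bounded by \(\frac{1}{8}\Vert s^{''}\Vert _{\infty }h^{2}\) [11] and the second by Theorem 7. \(\square \)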

Notes 3 (1) The systems \(\overline{S}_{T}(x)\) and \(\overline{S}_{L^{2}}(x)\) were obtained under the assumption that s(x) is a monotonic function. For a non-monotonic function, we can divide X into several intervals such that s(x) is monotonic on each of them. Because \(\overline{S}_{T}(x)\) and \(\overline{S}_{L^{2}}(x)\) can approximate s(x) on each monotonic interval, they can approximate s(x) on the whole of X. For example, let \(\displaystyle s(x)=\sin x, X=[-\pi ,\pi ], X_{1}=[-\pi ,-\frac{\pi }{2}], X_{2}=(-\frac{\pi }{2},\frac{\pi }{2}),X_{3}=[\frac{\pi }{2},\pi ]\). Then s(x) is monotonic on \(X_{1},X_{2},X_{3}\). If \(\varepsilon =0.1\), then from Theorem 8 we know that \(n=17\) in \(X_{1}\) and \(X_{3}\), \(n=24\) in \(X_{2}\). So we let \(n=17\times 2+33=67\), and then \(\overline{S}_{L^{2}}(x)\) approximates s(x) with error not more than 0.1.

(2) From Theorem 8 we know that \(\overline{S}_{T}(x)\) and \(\overline{S}_{L^{2}}(x)\) approximate s with first-order accuracy and share the same upper bound on the error. \(\square \)

Example 1

Let \(s(x)=\sin x,x\in [-3,3]\). Then \(\Vert s''\Vert _{\infty }=\Vert s'\Vert _{\infty }=1\).

If \(\varepsilon =0.1,\) from \(\displaystyle \frac{1}{8}\Vert s''\Vert _{\infty }h^{2}+\frac{1}{3}\Vert s'\Vert _{\infty }h<0.1\) we find that \(n=24\).

For \(\varepsilon =0.1\), Figs. 1 and 2 show the simulation and the error curves of \(\overline{S}_{T}(x)\) and \(\overline{S}_{L^{2}}(x)\) with respect to s.
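The node count in Example 1 can be reproduced from the inequality \(\frac{1}{8}\Vert s''\Vert _{\infty }h^{2}+\frac{1}{3}\Vert s'\Vert _{\infty }h<\varepsilon \). The sketch below assumes equally spaced nodes, which the example does not state explicitly; the function names are hypothetical.

```python
import math


def max_step(eps, d1, d2):
    """Positive root h of d2*h^2/8 + d1*h/3 = eps (d1, d2: sup norms of s', s'')."""
    a, b, c = d2 / 8.0, d1 / 3.0, -eps
    return (-b + math.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)


def nodes_needed(left, right, eps, d1, d2):
    """Smallest number of equally spaced nodes whose step is strictly below max_step."""
    h_max = max_step(eps, d1, d2)
    intervals = math.floor((right - left) / h_max) + 1   # guarantees h < h_max
    return intervals + 1                                   # nodes = intervals + 1


n = nodes_needed(-3.0, 3.0, eps=0.1, d1=1.0, d2=1.0)     # s(x) = sin x on [-3, 3]
h = 6.0 / (n - 1)
bound = h * h / 8.0 + h / 3.0
print(n, h, bound)
```

With \(\Vert s'\Vert _{\infty }=\Vert s''\Vert _{\infty }=1\) on \([-3,3]\), this gives \(n=24\) nodes, in agreement with the example; one node fewer would push the bound just above 0.1.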

6 Application Analysis

The fuzzy trustworthiness theory and systems in this paper have a wide range of applications. They provide a new line of thought for theoretical and applied studies in fields such as fuzzy logic and neural networks [20], factor neural networks [21–25], and fuzzy expert systems [26]. The fuzzy trustworthiness system, together with its theory, can be used for the quantitative description and measurement of trustworthy software [27–31], which would be a new direction for extending research on software trustworthiness metrics models. From the viewpoint of trustworthiness theory and fuzzy systems, network attack and defence [31–34], the engineering system automatic design process [35, 36], the design of fuzzy controllers [37], etc., can also be explored, providing a new research method and direction for these fields.

7 Conclusion

This paper has studied the bounded product implication and the Larsen square implication and obtained two specific probability density functions. Although different fuzzy implication operators give different expressions for the probability density function, the resulting probability distributions have the same mathematical expectation and nearly the same variance and covariance. We also derived the center-of-gravity fuzzy trustworthiness systems of these two probability distributions and gave sufficient conditions for their universal approximation. As for applications, the fuzzy trustworthiness system can be applied to software trustworthiness metrics, network attack and defence, the engineering system automatic design process, the design of fuzzy controllers, etc.

References

Kai-yuan C, Chuan-yuan W, Ming-lian Z (1993) Basic concepts in fuzzy reliability theories. Acta Aeronaut et Astronaut Sin 14(7):388–398

Wang LX (1997) A course in fuzzy systems and fuzzy control. Prentice-Hall, Inc., Upper Saddle River

Zadeh LA (1975) The concept of a linguistic variable and its application to approximate reasoning I. Inf Sci 8(3):199–251

Zadeh LA (1975) The concept of a linguistic variable and its application to approximate reasoning II. Inf Sci 8(4):301–359

Wang GJ (1999) The triple I method with total inference rules of fuzzy reasoning. Sci China Ser E 29(1):43–53

Li H-X, You F, Peng J (2004) Fuzzy controllers based on some fuzzy implication operators and their response functions. Prog Nat Sci 14(1):15–20

Li H, Peng J, Wang J (2005) Fuzzy systems and their response functions based on commonly used fuzzy implication operators. Control Theory Appl 22(3):341–347

Li H, Peng J, Wang J (2005) Fuzzy systems based on triple I algorithm and their response ability. J Syst Sci Math Sci 25(5):578–590

Hou J, You F, Li HX (2005) Some fuzzy controllers based on the triple I algorithm and their response ability. Prog Nat Sci 15(1):29–37

Wang LX, Mendel JM (1992) Fuzzy basis functions, universal approximation and orthogonal least squares learning. IEEE Trans Neural Netw 3(5):807–814

Zeng XJ, Singh MG (1994) Approximation theory of fuzzy systems-SISO case. IEEE Trans Fuzzy Syst 2(2):162–176

Zeng XJ, Singh MG (1995) Approximation theory of fuzzy systems-MIMO case. IEEE Trans Fuzzy Syst 3(2):219–235

Ying H (1994) Sufficient conditions on general fuzzy systems as function approximators. Automatica 30(3):521–525

Zeng K, Zhang N-Y, Xu W (2000) A comparative study on sufficient conditions for Takagi–Sugeno fuzzy systems as universal approximators. IEEE Trans Fuzzy Syst 8(6):773–780

Li YM, Shi ZK, Li ZH (2002) Approximation theory of fuzzy systems based upon genuine many-valued implication-SISO case. Fuzzy Sets Syst 130(2):147–157

Li YM, Shi ZK, Li ZH (2002) Approximation theory of fuzzy systems based upon genuine many-valued implication-MISO case. Fuzzy Sets Syst 130(2):159–174

Li D, Shi Z, Li Y (2008) Sufficient and necessary conditions for Boolean fuzzy systems as universal approximators. Inf Sci 178(2):414–424

Li H (1998) Interpolation mechanism of fuzzy control. Sci China Ser E 28(3):312–320

Li H (2006) Probability representation of fuzzy systems. Sci China Ser E 36(4):373–397

Liu Z, Liu Y (1996) Fuzzy logic and neural networks-a research to the basic theory. BUAA (Beihang University) Press

Liu Z, Liu Y (1992) The theory of factor neural networks and its realizing strategy. Normal University Press, Beijing

Liu Z, Liu Y (1994) The theory of factor neural networks and its applications. Guizhou Science and Technology Publishing House

Liu Z (2010) Advances in factor neural network theory and its applications. In: 4th international conference of fuzzy information and engineering, Shomal University, Amol. 14–15 Oct 2010

Liu Z (1994) Method of factor neural networks to knowledge process. Fuzzy Syst Math

Liu Y, Liu Z (1994) A Method of factor neural networks. Fuzzy Syst Math

Liu Y, Liu Z (1995) Principle and design for fuzzy expert system, BUAA (Beihang University) Press

Tang Y, Liu Z (2010) Progress in software trustworthiness metrics models. Comput Eng Appl 46(27):12–16

Wang Y, Liu Z (2006) Risk assessment model for network security based on PRA. Comput Eng 32(1):40–42

Liu X, Liu Z, Yu D (2007) Research on method of security management metrics based on hierarchy protection. Control Manag 45:39–40

Liu X, Liu Z, Yu D (2007) Method of security management metrics based on AHP model. Microcomput Inf 160:33–34

Wang X, Chen Y, Liu Z (2008) Component-based software developing method for group companies management information system. Wuhan Univ J Nat Sci 13(1):37–44

Tao Y, Xia Y, Liu Z (2008) Research on intelligent simulation model of network defense[A]. In: Computational intelligence and industrial application PACIIA

Tao Y, Liu Z, Zhang Z, Wang P, Guo C (2010) Research on network attack situation niching model based on FNN theory. Chin High Technol Lett 20(7):680–684

Xu P, Hou Z, Liu Z (2011) Driving and control of torque for direct-wheel-driven electric vehicle with motors in serial. Expert Syst Appl 38(1):80–86

Liu Z, Liu Y (1987) Application of the fuzzy set theory in the engineering system automatic design process. Int Fuzzy Syst Knowl Eng 2:388–396

Liu Z, Liu Y (1987) Some possible Application of the fuzzy set theory in the engineering system automatic design process. In: Proceedings of SICE’87 in Hiroshima

Liu Z (1989) The fuzzy inference of programmable fuzzy controller. In: Paper of 19th international symposium on ML

Acknowledgments

This research was supported by National Natural Science Foundation of China (Major Research Plan, No.90818025).


Cite this article

Zhong, YB., Liu, ZL. & Yuan, XH. A Fuzzy Trustworthiness System with Probability Presentation Based on Center-of-gravity Method. Ann. Data. Sci. 2, 335–362 (2015). https://doi.org/10.1007/s40745-015-0062-8


DOI: https://doi.org/10.1007/s40745-015-0062-8